Significance

The ability to learn highly skilled movements may depend on the dopamine-related plasticity occurring in motor cortex, because the density of dopamine receptors—the reward sensor—increases in this area from rodents to primates. We hypothesized that primary motor (M1) and somatosensory (S1) neurons would encode rewards during operant conditioned motor behaviors. Rhesus monkeys were implanted with cortical multielectrode implants and trained to perform arm-reaching tasks with different reward schedules. Consistent with our hypothesis, M1 and S1 neurons represented reward anticipation and delivery and a mismatch between the quantities of anticipated and actual rewards. These same neurons also represented arm movement parameters. We suggest that this multiplexing of motor and reinforcement information by cortical neurons underlies motor learning.

Keywords: reward, motor cortex, multichannel recording, primate, prediction error

Abstract

Rewards are known to influence neural activity associated with both motor preparation and execution. This influence can be exerted directly upon the primary motor (M1) and somatosensory (S1) cortical areas via the projections from reward-sensitive dopaminergic neurons of the midbrain ventral tegmental areas. However, the neurophysiological manifestations of reward-related signals in M1 and S1 are not well understood. In particular, it is unclear how the neurons in these cortical areas multiplex their traditional functions related to the control of spatial and temporal characteristics of movements with the representation of rewards. To clarify this issue, we trained rhesus monkeys to perform a center-out task in which arm movement direction, reward timing, and magnitude were manipulated independently. Activity of several hundred cortical neurons was simultaneously recorded using chronically implanted microelectrode arrays. Many neurons (9–27%) in both M1 and S1 exhibited activity related to reward anticipation. Additionally, neurons in these areas responded to a mismatch between the reward amount given to the monkeys and the amount they expected: A lower-than-expected reward caused a transient increase in firing rate in 60–80% of the total neuronal sample, whereas a larger-than-expected reward resulted in a decreased firing rate in 20–35% of the neurons. Moreover, responses of M1 and S1 neurons to reward omission depended on the direction of movements that led to those rewards. These observations suggest that sensorimotor cortical neurons corepresent rewards and movement-related activity, presumably to enable reward-based learning.

Reward-based learning is a fundamental mechanism that allows living organisms to survive in an ever-changing environment. This type of learning facilitates behaviors and skills that maximize reward (1). At the level of motor cortex, synaptic modifications and formation of new synaptic connections that accompany learning have been reported (2). The reinforcement component of these plastic mechanisms is partly mediated by dopamine (DA), a key neurotransmitter associated with reward (3–5). DA inputs to cortical areas, including primary motor (M1) and somatosensory (S1) areas, arise from the midbrain (6–8). Recent studies demonstrating the presence of a higher concentration of DA receptors in the forelimb over the hind-limb representation in rodent motor cortex (9), and impairment of motor skill acquisition resulting from blocking these DA receptors in the forelimb representation (10), point to the role of DA receptors in learning skilled forelimb movements. In agreement with this line of reasoning, animals with a rich repertoire of skilled movements, such as monkeys and humans, show a disproportionate increase in the density of DA receptors in the cortical motor areas: M1, premotor, and supplementary motor cortex (6, 11, 12).

Although evidence points to the involvement of M1 and S1 circuitry in reward-related learning and plasticity (1, 13, 14), it is still not well understood how M1 and S1 neurons process both reward and motor information. A longstanding tradition in neurophysiological investigations of M1 and S1 was to attribute purely motor and sensory functions to these areas. Accordingly, the majority of previous studies have focused on the relationship of M1 and S1 spiking activity with such characteristics as movement onset (15), movement direction (16, 17), limb kinematics (18, 19), muscle force (20–22), temporal properties of movements (23, 24), and progression of motor skill learning (25). Fewer studies have examined nontraditional representations in M1 and S1, such as spiking activity associated with rewards (26–31). Representation of reward in M1 was suggested by several studies that, in addition to motor-related neural activity, examined neuronal discharges in these areas that represented motivation, reward anticipation, and reward uncertainty (30–33). Although these studies have confirmed that M1 neurons multiplex representation of reward with the representation of motor parameters, a number of issues need further investigation. In particular, it is unclear whether reward-related neuronal modulations in M1 (31, 33) represent rewards per se, their anticipation, and/or the mismatch between the actual and expected rewards. Furthermore, the relationship of these reward-associated signals with the processing of motor information is poorly understood. Understanding these interactions will help elucidate mechanisms underlying reward-based learning (34).

To this end, we have developed an experimental paradigm in which reward and characteristics of movements were manipulated independently: (i) Movements and reward were separated by two different delays, (ii) multiple movement directions were examined for their impact on reward, and (iii) unexpected variability in reward amounts was introduced to produce a mismatch with the animal’s expectation of reward. Neuronal ensemble activity was recorded chronically in rhesus monkeys using multielectrode arrays implanted in both M1 and S1. These experiments revealed a widespread representation of reward anticipation in M1 and S1 neuronal populations. Additionally, many M1 and S1 neurons responded to unexpected changes in reward magnitude [i.e., represented reward prediction error (RPE)]. Moreover, neuronal responses to RPEs were directionally tuned; they varied according to the direction of the movement planned by the animal. Taken together, these results indicate that predictive encoding of reward is robust in both M1 and S1 and that reward-related signals interact with neuronal directional tuning in sensorimotor cortex. As such, reward-related neuronal modulations in the monkey primary sensorimotor cortices could contribute to motor learning (35).

Results

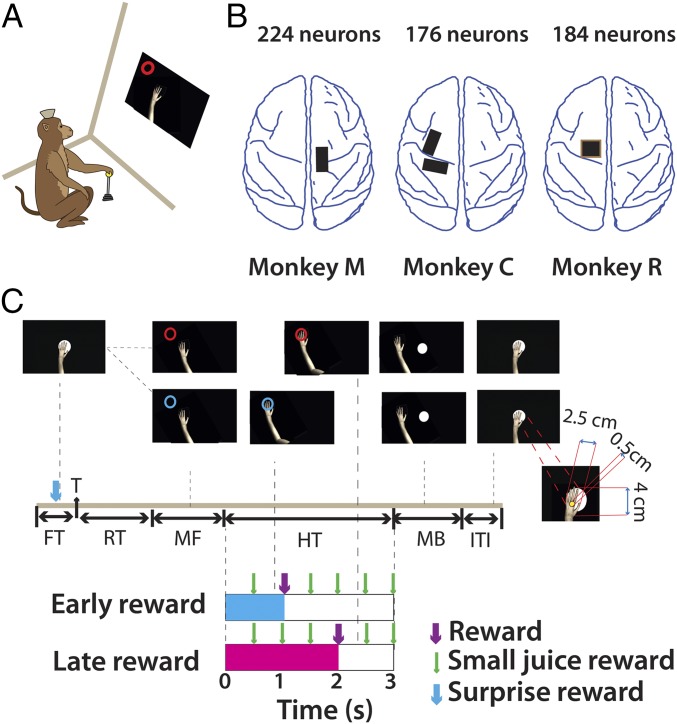

Our central goal was to test whether M1 and S1, at both the single-cell and the ensemble level, can mediate reward-based learning. More specifically, we sought to measure cortical neuronal activity that encoded reward anticipation, unexpected changes in reward presentation, and modulation of reward-associated signals by arm movements. For this purpose, we used a task in which animals moved a realistic image of a monkey arm (avatar arm) on a computer screen using a hand-held joystick. In any given trial, the avatar hand had to be moved and placed over a circular, colored target and held over it for 3 s. A passive observation version of the task (Fig. 1C) was also implemented. In this version, a computer controlled the movement of the virtual arm while the monkey, whose limbs were restrained from moving, was rewarded for simply observing the task displayed on the computer screen. The color of the target informed the monkeys about the time when a juice reward would be delivered.

Fig. 1.

Experimental setup and schematic of the paradigm. (A) Rhesus monkeys were trained to perform a center-out task using a custom-made hand-held joystick. Moving the joystick displaced an avatar monkey arm on the computer monitor. (B) Neural data were obtained from M1 neurons in three monkeys (monkeys M, C, and R) and S1 neurons in one monkey (monkey M) using chronically implanted arrays (black and brown rectangles). (C) Experiment schematic: Each trial began when monkeys positioned the arm within the center target. Following fixation (FT), a circular target (T), red or blue, appeared at a distance of 9 cm from the center in one of eight target locations. Monkeys were trained to react quickly (RT) and acquire the target by moving the avatar arm (MF, movement forward). After reaching they held the arm at the target location for 3 s. A juice reward (R) was delivered either after 1 s (early reward) or after 2 s (late reward) into the hold time. Target color (blue vs. red) cued the monkey regarding reward time (early, cyan vs. late, magenta). Small juice rewards were given every 500 ms during the 3-s hold period (HT, hold time). At the end of the hold period, monkeys returned the arm to the center (MB, movement back). The next trial began after a brief intertrial interval (ITI). (Inset) The avatar monkey arm relative to a target-size disk. Note the cursor (0.5-cm yellow circle) has to be completely within the target to count as a successful “reach.” In Exp. 2, a surprise reward (SR) was given to the monkey before the target appeared in 10% of all trials.

In early-reward trials, cued by a blue target, reward was delivered 1 s after target acquisition, whereas in late-reward trials, cued by a red target, reward was delivered after 2 s (Fig. 1C). In the joystick control task, after receiving the juice reward (0.66 mL, 500-ms pulse) monkeys continued to maintain the avatar arm at the target location for the remaining hold period. Holding still at the target location was encouraged by small juice rewards (50 ms, 0.03 mL) that were delivered periodically (every 500 ms) during the entire 3-s hold period. Changes in position during the hold time precluded the remaining rewards. All of the monkeys learned the requirement to hold the virtual arm still. In a majority of the trials (>90% in monkeys M and R and 80–85% in monkey C) the monkeys did not move their hands. The SD of joystick position during the hold time was 0.014 cm in monkey M, 0.06 cm in monkey C, and 0.025 cm in monkey R.

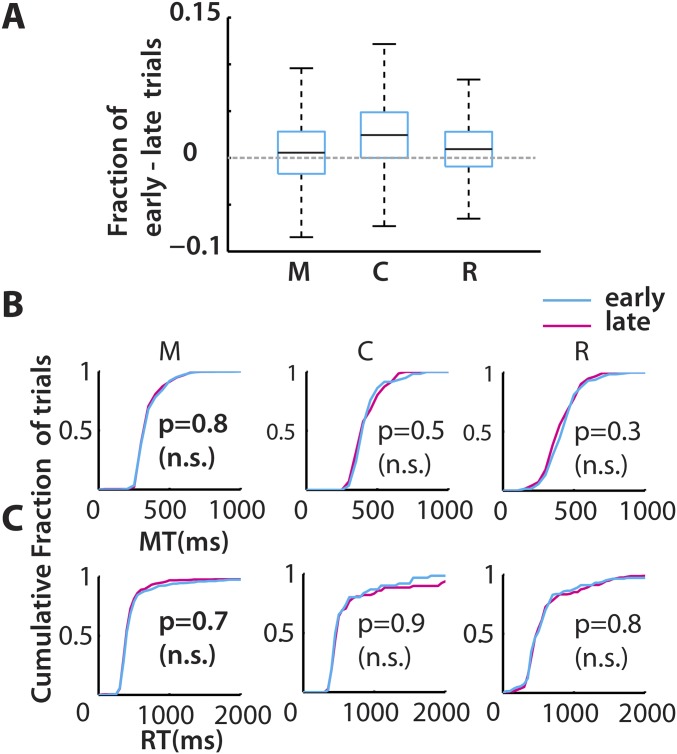

Because the total hold time period (3 s) was the same irrespective of the early or late reward delivery, the reward rate was maintained irrespective of trial sequence. We expected that this homogeneity of the reward rate would result in similar movement patterns for both trial types even though the rewards were given at different times after the movement was completed. Indeed, the reaction times (RT; Fig. S1C) and movement times (MT; Fig. S1B), which are proxies for motivation levels, were similar for both trial types. The early- (blue trace in cumulative histograms) and late- (red trace) reward trials were not significantly different [P > 0.05, Kolmogorov–Smirnov (KS) test, n = 3 monkeys]. The same held true in a majority of behavioral sessions (24/25 sessions in monkey M, 12/13 in monkey C, and 7/7 in monkey R). It was found that monkeys only weakly favored early reward over the late reward as inferred by a small difference between the numbers of attempted early-reward trials and late-reward trials (Fig. S1A, monkey M: 0.6%, monkey C: 2.4%, and monkey R: 1%). In summary, although monkeys had a slight tendency to initiate early-reward trials more often, there were no significant differences in the movement parameters.
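The comparison of RT and MT distributions rests on the two-sample KS statistic, the largest vertical gap between two empirical cumulative distributions. The following is a minimal sketch of that statistic only, using hypothetical reaction times rather than data from this study; the function name `ks_statistic` is illustrative, and the P value would in practice come from a library routine such as `scipy.stats.ks_2samp`.

```python
from bisect import bisect_right


def ks_statistic(sample_a, sample_b):
    """Two-sample Kolmogorov-Smirnov statistic: the largest vertical gap
    between the two empirical cumulative distribution functions."""
    a, b = sorted(sample_a), sorted(sample_b)
    if not a or not b:
        raise ValueError("both samples must be non-empty")
    d = 0.0
    for x in a + b:  # the gap can only change at observed values
        cdf_a = bisect_right(a, x) / len(a)
        cdf_b = bisect_right(b, x) / len(b)
        d = max(d, abs(cdf_a - cdf_b))
    return d


# Hypothetical reaction times in seconds (illustrative, not data from the study).
rt_early = [0.31, 0.28, 0.35, 0.30, 0.33, 0.29]
rt_late = [0.30, 0.29, 0.36, 0.31, 0.32, 0.28]
d_stat = ks_statistic(rt_early, rt_late)
print(f"KS statistic D = {d_stat:.3f}")
```

A small D, as in this toy example, corresponds to nearly overlapping cumulative histograms of the kind shown for the early- and late-reward trials.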

Fig. S1.

Effects of reward time on behavior. (A) All three monkeys (M, C, and R) attempted more early-reward trials than late-reward trials. Box plots represent pooled data from across sessions (monkey C, 14 sessions; M, 18 sessions; and R, 7 sessions). (B and C) The MT (B) and the RT (C) are shown as cumulative distributions for all of the trials in a typical session, color-coded by time of reward: early (cyan) and late (magenta). The RT and MT were not significantly different (n.s.) between early- and late-reward conditions (KS test: P > 0.05).

Neuronal Modulations During Different Task Epochs.

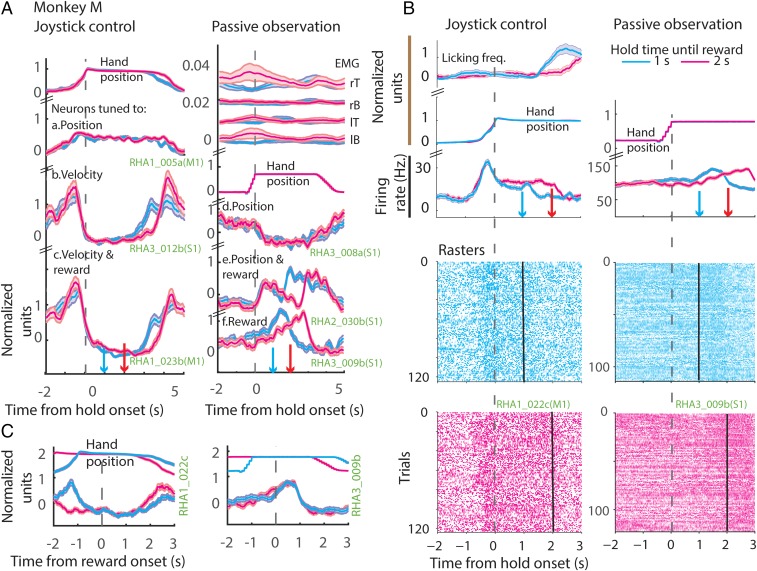

A total of 485 M1 neurons were recorded in the three monkeys (monkey M: n = 125, monkey C: n = 176, monkey R: n = 184), along with 96 S1 neurons from monkey M. Several representative neurons with different kinds of neuronal responses elicited during the task are illustrated in Fig. 2A. The data are separated into joystick control sessions (Fig. 2A, Left) and passive observation (Fig. 2A, Right), and early- (cyan) and late- (magenta) reward conditions. The associated arm position (joystick control session), the virtual arm position (passive observation session), and the electromyograms (EMGs) from biceps and triceps (passive observation session) are displayed above the perievent time histograms (PETHs). Some neurons were modulated by the position of the arm, that is, neuronal firing increased (e.g., RHA1_005a) or decreased (e.g., RHA3_008a) when the arm moved away from the center. Some neurons were modulated by the arm movement velocity: The neuronal firing increased as a function of the tangential velocity (e.g., RHA3_012b). Some neurons were modulated by both arm position and reward: Neuronal firing increased as the arm moved away from the center, decreased until the end of the reward delivery epoch, increased right after it, and finally decreased again when the arm moved back to the center (e.g., RHA2_030b). Some arm velocity-related neurons also maintained spiking activity at a level higher than baseline until the end of the reward delivery epoch (e.g., RHA1_023b; see also RHA1_022c in Fig. 2B). Some neurons did not respond to position or velocity but increased their spiking activity until the end of the reward delivery epoch (e.g., RHA3_009b). Note that the neurons in a column, with different response properties, were all recorded simultaneously during the same session.

Fig. 2.

Neuronal modulation during task epochs. (A) Example neurons from a joystick control session (Left) and passive observation session (Right) from Exp. 1. (Left) The changes in the mean (z-scored) arm position (at the top), followed by binned (50 ms), z-scored firing rate (PETH) of example neurons, all aligned to onset of hold time. (Right) A passive observation session; the EMG activations are displayed at the top. EMGs were measured from left (lT) and right triceps (rT), and left (lB) and right biceps (rB). Then, the virtual arm position is plotted. Neurons tuned to position, velocity, reward, or a combination of those are displayed next. Bands around the mean represent ±1 SE. Data are color-coded based on the time of reward: early (blue) and late (red). Time of reward is indicated in the upper panels by color-coded downward-pointing arrows. Note that the arm is stationary during the hold period. Weak muscle activations were seen around the time of movements in the passive observation session. (B) Example neurons plotted in the same format as in A; however, the y axis depicts the absolute firing rate modulation. The lower panels show spike raster plots. (C) PETH of the example neurons shown in B aligned to the time of reward onset. y axis depicts the normalized firing rate modulation.
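As a rough illustration of how a binned, z-scored PETH of this kind can be computed, the sketch below counts spikes in 50-ms bins around an alignment event, averages across trials, and z-scores the result across bins. The function names, the millisecond units, and the choice to z-score the trial-averaged rate across bins are assumptions made for illustration; the exact normalization used for the figure is not specified here.

```python
from statistics import mean, pstdev


def peth(spike_times_ms, event_times_ms, t_start_ms, t_stop_ms, bin_ms=50):
    """Trial-averaged firing rate (spikes/s) in fixed-width bins around an event.

    spike_times_ms: one list of spike times per trial (ms, integers).
    event_times_ms: alignment event time for each trial (ms, integers).
    """
    n_bins = (t_stop_ms - t_start_ms) // bin_ms
    counts = [0] * n_bins
    for spikes, event in zip(spike_times_ms, event_times_ms):
        for t in spikes:
            idx = (t - event - t_start_ms) // bin_ms
            if 0 <= idx < n_bins:
                counts[idx] += 1
    n_trials = len(event_times_ms)
    # Convert counts per bin to spikes per second, averaged over trials.
    return [c * 1000 / (n_trials * bin_ms) for c in counts]


def zscore(rates):
    """Z-score a rate vector across bins (population SD); flat input maps to zeros."""
    mu, sd = mean(rates), pstdev(rates)
    return [0.0 for _ in rates] if sd == 0 else [(r - mu) / sd for r in rates]


# Hypothetical spikes from two trials, aligned to events at 1,000 and 5,000 ms.
spikes = [[960, 1010, 1020, 1120], [5030, 5110, 5140]]
events = [1000, 5000]
rates = peth(spikes, events, t_start_ms=-50, t_stop_ms=150)
z_rates = zscore(rates)
print(rates, z_rates)
```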

Fig. 2 B and C show two example M1 and S1 neurons whose spiking activity distinguished early from late reward. PETHs shown in Fig. 2B are aligned on hold onset and those in Fig. 2C are aligned on reward onset. The data are separated into joystick control sessions (Left) and passive observation (Right) and early- (cyan) and late- (magenta) reward conditions. The associated arm/hand position and licking frequency (joystick control session) and the virtual arm position (passive observation session) are displayed above the PETHs as in Fig. 2A. Spike rasters are also included in Fig. 2B. Although the arm position was stable (RHA1_023b in Fig. 2A and RHA1_022c in Fig. 2B), and the EMGs (seen in Fig. 2A) did not show any significant muscle contractions around the time of reward, the M1 and S1 neurons exhibited differences in firing rate before reward onset (P < 0.05, two-sided t test).

Reward-Related Modulation in the M1/S1 Neural Population.

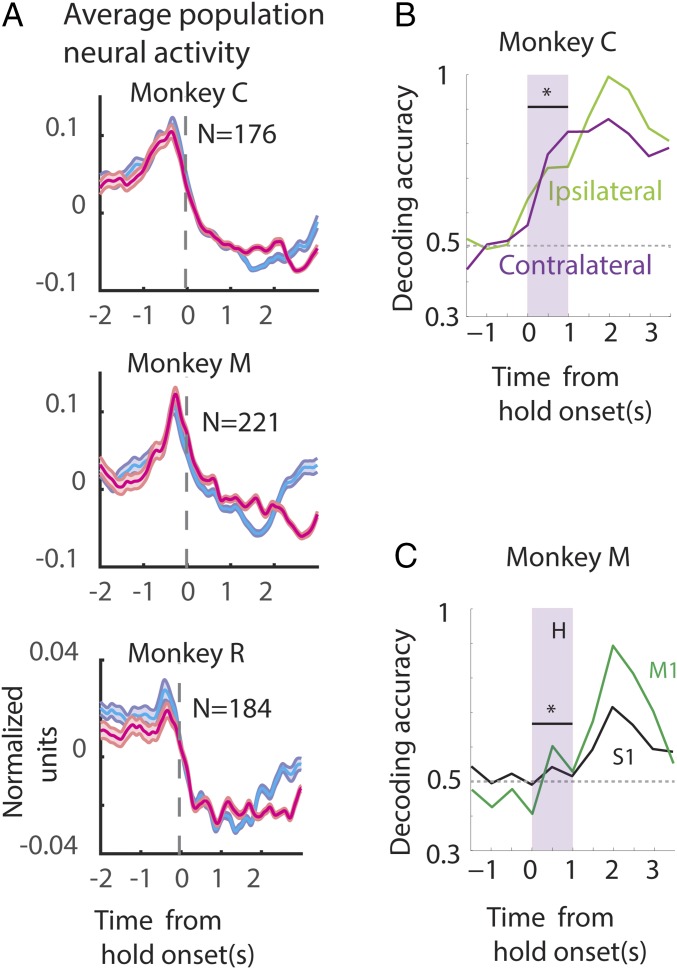

The population average PETHs for monkeys M, C, and R during joystick control are displayed in Fig. 3A and during passive observation conditions for monkeys M and C in Fig. S2A. Population PETHs, centered on hold onset, for the early- (cyan) and late-reward trials (magenta) show that the neuronal ensemble average firing rate is very high during movement (t < 0), except in monkey M for passive observation. The average spiking activity decays during the hold time up to the time of reward. Early-trial neural activity can be distinguished from late-trial activity, especially in monkey C (both joystick control and passive observation) and monkey M (joystick control). In all of the monkeys the average PETHs were not very different for the early and late trials before the onset of the hold period.

Fig. 3.

Representation of reward by the neuronal population. (A) Population firing rate: The firing rate of each neuron was computed, z-scored, and averaged to plot the population PETH for each monkey for a joystick control session. The associated confidence bands are also shown (±1 SE). (B and C) Neuronal ensemble-based classifier analysis. Proportion of correct classification of trials, denoted by decoding accuracy, according to reward time (1 or 2 s) is shown on the y axis. The time aligned to hold onset is shown along the x axis. The classifier was trained using all of the contralateral M1 neurons (purple) or the ipsilateral M1 neurons (green). Note that the classification was significantly better (P < 0.05, bootstrapped ROC) than chance even before reward was delivered (at 0–1 s of hold period, shaded gray box) when using ipsilateral or contralateral M1 neurons (B) or S1 neurons (C).

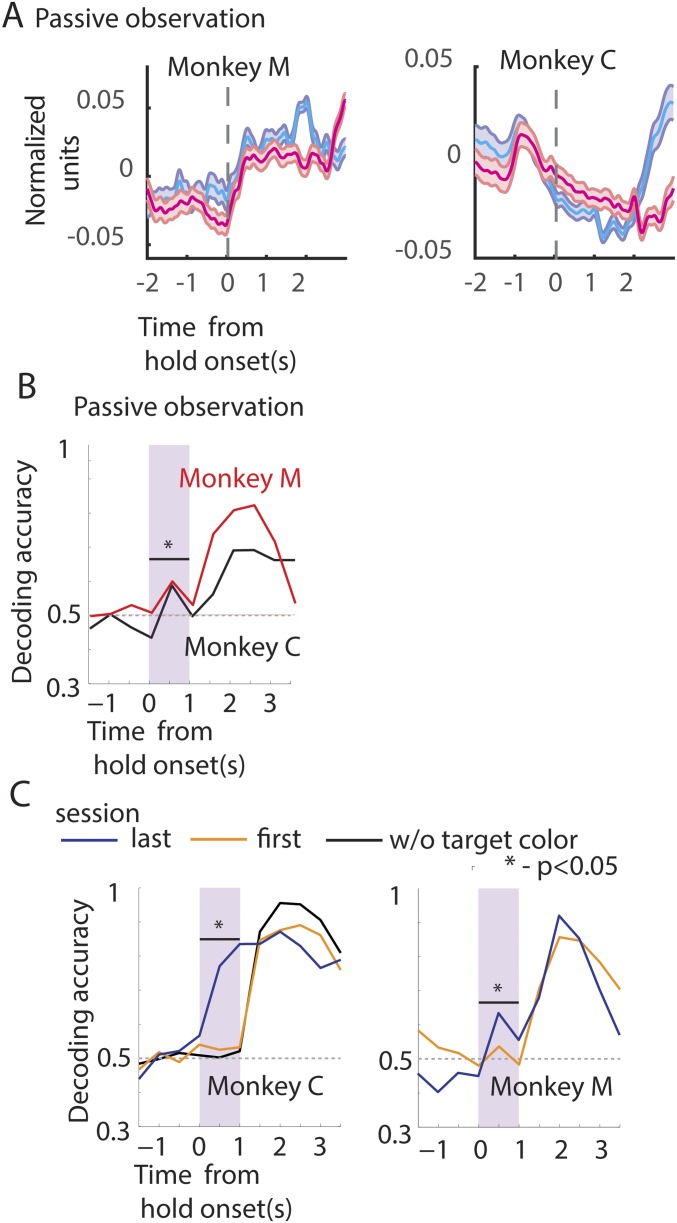

Fig. S2.

Representation of reward by the neuronal population. (A) Population firing rate during passive observation session for monkeys M and C. Plot format adapted from Fig. 3. (B and C) Neuronal ensemble-based classifier analysis. Proportion of correct classification of trials into early- and late-reward trials, denoted by decoding accuracy. Format adapted from Fig. 3B. (B) The classifier was trained using all of the neurons from monkey M (red) and monkey C (black) for passive observation sessions. (C) Early in training (session 1) classification of reward time was not significant (orange trace); however, later in training (after eight or nine sessions) robust levels of classification were achieved (blue trace). When the target color information was removed, the proportion of correct classifications dropped to chance levels during the hold time (black trace).

Simply averaging the spiking activity across the neural population may conceal some differences. Thus, to examine whether the neuronal ensemble can distinguish early- from late-reward trials before the early reward (0–1 s of the hold period), we used a machine learning algorithm, the support vector machine (SVM). We moved a 500-ms-wide window along the trial time axis and trained a separate SVM classifier at each window position to decode the trial as early vs. late reward. Different window durations, step sizes, and algorithms were also tested (Materials and Methods). To compare the representation by different cortical areas of the early- vs. late-reward conditions, the classifier was also trained on different pools of neurons (ipsilateral and contralateral M1, S1, etc.). Irrespective of whether the neurons were selected from the ipsilateral or the contralateral M1, the classifier could clearly dissociate early- from late-reward trials in the initial 1-s hold period preceding any of the rewards [shaded window in Fig. 3B, monkey C: contralateral M1, receiver operating characteristic (ROC): 0.77 ± 0.11; ipsilateral M1, ROC: 0.72 ± 0.09; P < 0.05, ROC post-SVM]. S1 neurons carried less information to separate early- from late-reward trials, as indicated by decoder performance that was lower than that obtained with ensembles of M1 neurons (Fig. 3C, monkey M: M1, ROC: 0.6 ± 0.08; S1, ROC: 0.54 ± 0.04; ROC post-SVM).
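The moving-window scheme described above can be sketched as follows. This is not the SVM analysis used in the study: to keep the example dependency-free, a nearest-centroid classifier with leave-one-out cross-validation stands in for the SVM, and the function names, the 250-ms step, and the toy spike trains are all assumptions made for illustration.

```python
def window_counts(trial, start_ms, width_ms=500):
    """Spike count of each neuron inside [start_ms, start_ms + width_ms)."""
    return [sum(start_ms <= t < start_ms + width_ms for t in neuron)
            for neuron in trial]


def nearest_centroid_loo(features, labels):
    """Leave-one-out accuracy of a nearest-centroid classifier
    (a simple stand-in for the SVM; needs >= 2 trials per class)."""
    classes = sorted(set(labels))
    if min(labels.count(c) for c in classes) < 2:
        raise ValueError("each class needs at least two trials")
    correct = 0
    for i, (x, y) in enumerate(zip(features, labels)):
        dist = {}
        for c in classes:
            # Class centroid computed without the held-out trial i.
            rows = [f for j, (f, lab) in enumerate(zip(features, labels))
                    if lab == c and j != i]
            centroid = [sum(col) / len(rows) for col in zip(*rows)]
            dist[c] = sum((a - b) ** 2 for a, b in zip(x, centroid))
        correct += min(dist, key=dist.get) == y
    return correct / len(labels)


def sliding_window_accuracy(trials, labels, t_start_ms, t_stop_ms,
                            width_ms=500, step_ms=100):
    """Train and test a separate classifier at each window position."""
    out = []
    for start in range(t_start_ms, t_stop_ms - width_ms + 1, step_ms):
        feats = [window_counts(trial, start, width_ms) for trial in trials]
        out.append((start, nearest_centroid_loo(feats, labels)))
    return out


# Toy data: trials[i][n] holds spike times (ms from hold onset) of neuron n.
# Neuron 0 fires faster on "early" trials; neuron 1 is uninformative.
trials, labels = [], []
for k in range(3):
    trials.append([[t + k for t in range(0, 1000, 50)],
                   [t + k for t in range(0, 1000, 200)]])
    labels.append("early")
    trials.append([[t + k for t in range(0, 1000, 200)],
                   [t + k for t in range(0, 1000, 200)]])
    labels.append("late")

accuracy_by_window = sliding_window_accuracy(trials, labels, 0, 1000,
                                             width_ms=500, step_ms=250)
print(accuracy_by_window)
```

Replacing `nearest_centroid_loo` with a cross-validated SVM would bring the sketch closer to the analysis described in the text; the windowing logic would stay the same.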

As an additional test, we examined passive observation sessions where the animals did not produce any movements of their arms. Even in the passive observation task the SVM classifier could distinguish between early- and late-reward trials, based on the neuronal population activity recorded during the initial hold time period (0–1 s; Fig. S2B, monkey C: ROC: 0.58 ± 0.06; monkey M: 0.62 ± 0.07). These classification results suggest that reward time classification was not due to differences in arm movement patterns between the early- and late-reward conditions.

The association between the target color and neural representation of the expected time of reward (early vs. late) was reinforced with practice. If the difference in M1/S1 neural activity between early- and late-reward trials reflected this association, then predictions of reward timing based on neural ensemble activity should improve with practice. Consistent with this hypothesis, during the first session we observed that M1 neural ensemble activity, produced during the initial hold time period (0–1 s), did not clearly distinguish between early- vs. late-reward trials (Fig. S2C, orange trace; monkey C: ROC: 0.51 ± 0.1 and monkey M: 0.54 ± 0.07). However, after a few sessions of training (joystick control sessions: monkey C, nine sessions and monkey M, eight sessions), M1 neural ensemble activity could distinguish early- from late-reward trials (Fig. S2C, blue trace; monkey C: ROC: 0.77 ± 0.11 and monkey M: 0.62 ± 0.08). As a control, the target color information was withheld in the last session, by changing it to a neutral color (white). Immediately, the classifier performance dropped to chance levels during the initial hold time (0–1 s) (Fig. S2C, black trace for monkey C; ROC: 0.5 ± 0.1).

In summary, the neuronal population-based classifier analysis could distinguish the early- from late-reward trials before early reward. The improvement in classifier output with training suggests a learned association between the target color and the time of reward, which may be strengthened over time.

Modulation in Neuronal Activity Due to a Mismatch from Expected Reward Magnitude.

We next asked whether M1/S1 neurons responded to unexpected changes in reward magnitude. To test this, in a small fraction (20%) of randomly interspersed trials reward was withheld (NR, no-reward trials). In these trials, the animals received a lower-than-expected amount of juice, referred to as a negative RPE (34, 36). In another subset of trials (20%), the animals received more juice than expected (0.9 mL, 700-ms pulse; DR, double-reward trials), referred to as a positive RPE. In the remaining 60% of the trials the expected amount of reward was delivered (0.46 mL, 350-ms pulse; SR, single-reward trials).

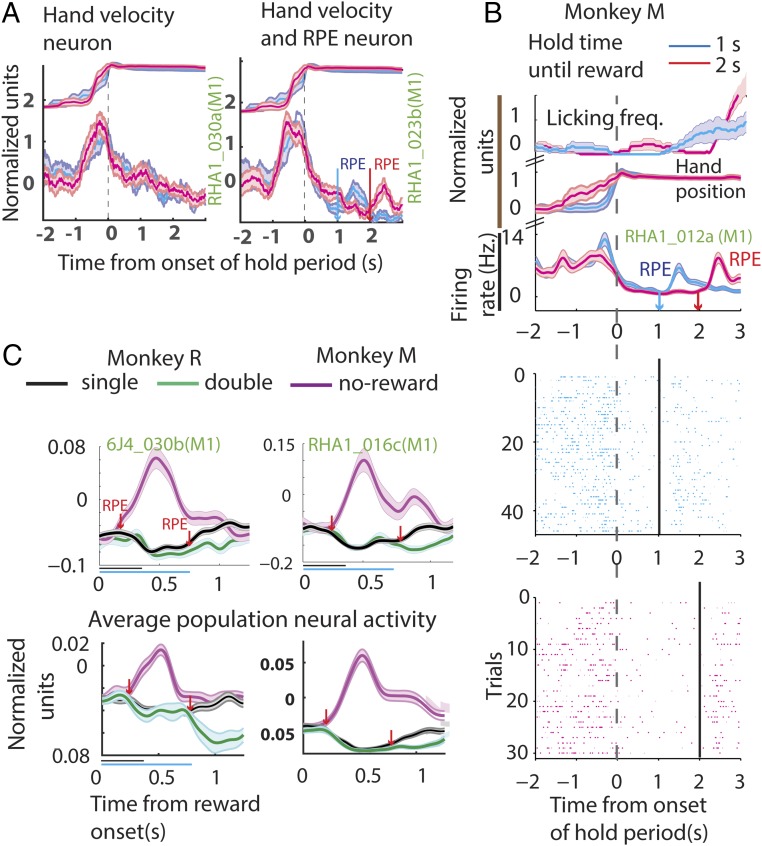

When reward was withheld (negative RPE), a brief period of increased neuronal firing activity was observed in the PETHs of some neurons (RPE in Fig. 4A, Right), whereas others, recorded during the same session, did not show this increase in spiking activity (Fig. 4A, Left). Fig. 4B shows the PETH of another example neuron (RHA_012a), along with the associated raster plot and licking frequency (see SI Materials and Methods for more information on licking frequency estimation). This M1 neuron displayed a significant increase in spiking activity when reward was omitted (cyan and magenta RPEs). More example neurons from monkeys M and R are displayed in Fig. 4C, Top and Fig. S3. These neurons exhibited a significant increase in spiking activity in response to reward omission (two-sided t test: P < 0.05). Such an increase in neuronal activity was recorded in many M1 and S1 neurons (monkey M, 113/221 neurons and monkey R, 38/189 neurons; two-sided t test: P < 0.05), shortly after the potential reward time [monkey M: 143 ± 5.6 ms (SD), n = 113 cells and monkey R: 165 ± 5.2 ms (SD), n = 38 cells]. As shown in Fig. 4C, Bottom (magenta trace), the average activity of the M1/S1 neuronal population also captured this transient neuronal excitation.

Fig. 4.

Neuronal modulation due to mismatch in the expected reward magnitude. (A) Plots showing hand movement trace and the neural activity of example neurons from the same session when a reward is omitted—a no-reward trial. The plot format is the same as that of Fig. 2A. Note the transient increase in spiking activity, indicative of RPE, after the potential reward onset time for the neuron in the right but not in the left panel. (B) Another example neuron, along with the absolute firing rate, licking frequency, and spike raster during a no-reward trial. The plot format is adapted from Fig. 2B. (C) (Top) Example neuron from monkey M (Right) and monkey R (Left). The normalized neural activity is plotted with respect to time of reward for single-reward trials (black), double-reward trials (green), and no-reward trials (magenta). (Bottom) The average population neural activity. Bands around the mean represent ±1 SE. The horizontal lines below the abscissa span the duration of the single reward (black, 350 ms) and the double reward (green, 700 ms). Note that when reward is withheld a transient increase in neural activity is observed, whereas when more than the expected reward is delivered a reduction in activity is witnessed. The arrows (downward-pointing, red) indicate when the neural activity was significantly different from the regular reward condition.

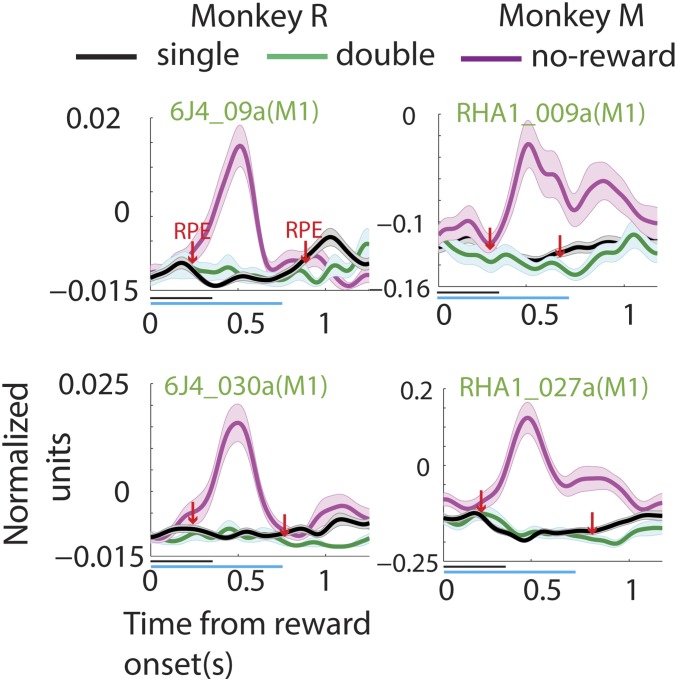

Fig. S3.

Neuronal modulation due to mismatch in the expected reward magnitude. Panels show example neurons from monkey M (Right) and monkey R (Left). The normalized neural activity is plotted with respect to time of reward for single-reward trials (black), double-reward trials (green), and no-reward trials (magenta). Bands around the mean represent ±1 SE. The plot format is adapted from Fig. 4C.

When the reward magnitude was doubled (positive RPE), a significant reduction in spiking activity was observed. Neurons in Fig. 4C, Top and Fig. S3 display this reduction in spiking activity (green trace; two-sided t test: P < 0.05) when the juice delivery epoch extended beyond the regular period of 350 ms. This effect, which was significant in many neurons (monkey M, 55/221 cells and monkey R, 30/189 cells), could also be captured by the average population activity of M1/S1 neuronal ensembles (Fig. 4C, Bottom, green trace).

In summary, many M1 and S1 neurons responded to positive and negative RPEs with opposing changes in firing rate: an increase to signal a reward withdrawal and a reduction for a reward increase.

M1 and S1 Neurons Multiplex Target Direction, Arm Velocity, and Reward Information.

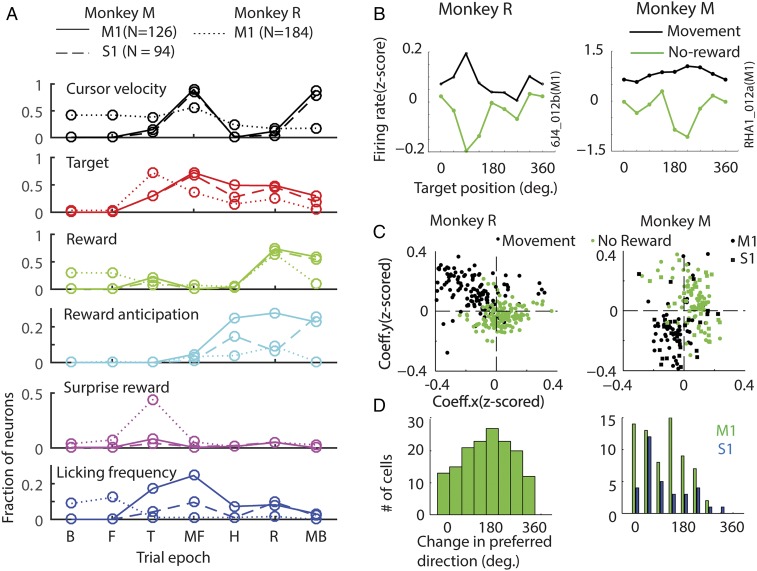

In a center-out arm movement task, M1/S1 neurons are tuned to changes in the location of the target, direction of movement, and arm velocity, among other parameters. To assess the relative strength of the reward-related modulation in the same M1/S1 neuronal spiking activity, a multiple linear regression analysis was carried out by including reward magnitude, along with cursor velocity and target location, as regressors. This analysis was carried out independently in seven different, 400-ms-wide trial epochs [Fig. 5A: baseline (B), fixation (F), target (T), movement forward (MF), hold (H), reward (R), and movement back (MB)]. Single-reward and no-reward trials were included in one model; double- and single-reward trials were part of another model.
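For a single neuron and a single epoch, a multiple linear regression of this form can be sketched as an ordinary least-squares fit of firing rate on cursor velocity, target x and y position, and reward magnitude. The example below is a minimal, dependency-free illustration on noiseless synthetic data; the function names, the coefficient values, and the coding of reward as 0 or 1 are assumptions, and the significance testing (P < 0.05) applied in the analysis is omitted.

```python
import math


def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("regressors are collinear")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_ols(regressors, rate):
    """Least-squares coefficients [intercept, b1, b2, ...] via the normal equations."""
    X = [[1.0] + list(row) for row in regressors]
    k = len(X[0])
    xtx = [[sum(r[i] * r[j] for r in X) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(X, rate)) for i in range(k)]
    return solve(xtx, xty)


# Synthetic trials: [cursor velocity, target x, target y, reward (0 or 1)].
true_beta = [5.0, 0.2, 0.1, -0.05, -0.15]  # hypothetical values
regressors, rate = [], []
for i in range(16):
    angle = 2 * math.pi * (i % 8) / 8  # eight targets at 9 cm
    row = [10.0 + 3 * (i % 5), 9 * math.cos(angle), 9 * math.sin(angle),
           float(i % 3 != 0)]
    regressors.append(row)
    rate.append(true_beta[0] + sum(b * x for b, x in zip(true_beta[1:], row)))

beta_hat = fit_ols(regressors, rate)
print([round(b, 3) for b in beta_hat])
```

Repeating such a fit for every neuron and every epoch, and counting the neurons with a significant coefficient for a given regressor, would yield fractions of the kind plotted per epoch.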

Fig. 5.

Reward-related neural activity is multiplexed along with other task relevant parameters. (A) Epoch-wise multiple regression analysis. Each panel shows fraction of neurons whose variability in spiking activity could be explained by changes in cursor velocity (panel 1), target position (panel 2), reward (panel 3, single reward vs. no reward), reward anticipation (panel 4), surprise reward (panel 5), and licking frequency (panel 6), using a multiple regression model (P < 0.05), in different 400-ms-wide epochs during a trial: B, before fixation; F, fixation; T, target onset; MF, moving (forward) to target; H, hold period; R, reward delivery; and MB, moving back to center. (B) Spatial tuning of reward-related neural activity. Normalized firing rate of example cells is plotted for eight different arm movement directions. Average ± SEM (smaller than the markers) of the neural activity is shown for different epochs: during movement (300–700 ms after target onset, black) and after the expected time of reward (200–600 ms, green) for the no-reward trials. (C) The directional tuning of all of the neurons for the movement and reward epochs (in no-reward trials) was assessed using multiple regression. The directional tuning of all of the neurons is captured by the scatter plot. The model assessed neuronal firing rate with respect to different target positions along the x axis and y axis. By plotting the respective (x and y) coefficients (Coeff.x and Coeff.y, respectively) in 2D space, each neuron’s preferred direction (angle subtended by the neuron with the abscissa) and tuning depth (radial distance of the neuron from the center) were determined. Tuning during the movement (black dots) and after the expected time of reward (200–600 ms, green) for the no-reward trials is shown. (D) The histograms show the number of neurons that underwent a change in spatial tuning from the preferred direction. Directional tuning of many neurons changed by 180 ± 45°.
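The geometric reading of the x and y regression coefficients described in panel C can be written out directly: the preferred direction is the angle of the point (Coeff.x, Coeff.y) relative to the abscissa, and the tuning depth is its distance from the origin. The sketch below uses hypothetical coefficient values, and the function names are illustrative.

```python
import math


def tuning_from_coefficients(coeff_x, coeff_y):
    """Preferred direction (degrees, 0 to 360) and tuning depth
    from the x and y regression coefficients of one neuron."""
    angle = math.degrees(math.atan2(coeff_y, coeff_x)) % 360.0
    depth = math.hypot(coeff_x, coeff_y)
    return angle, depth


def direction_change(angle_a, angle_b):
    """Smallest absolute difference between two directions, in degrees (0 to 180)."""
    diff = abs(angle_a - angle_b) % 360.0
    return 360.0 - diff if diff > 180.0 else diff


# Hypothetical coefficients for one neuron in the movement and reward epochs.
move_angle, move_depth = tuning_from_coefficients(0.3, 0.4)
reward_angle, reward_depth = tuning_from_coefficients(-0.3, -0.4)
shift = direction_change(move_angle, reward_angle)
print(f"movement: {move_angle:.1f} deg, reward: {reward_angle:.1f} deg, "
      f"shift: {shift:.1f} deg")
```

In this toy case the coefficients flip sign between epochs, so the preferred direction shifts by about 180°, the kind of reversal summarized in the histograms of panel D.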

As expected, variability in firing activity in many M1/S1 neurons could be accounted for (P < 0.05) by changes in cursor velocity (monkey M: M1, 88%; β = 0.18 ± 0.006. S1: 84%; β = 0.17 ± 0.008. Monkey R: M1, 59%; β = 0.08 ± 0.0053) and target location (Fig. 5A; monkey M: M1, 73%; β = 0.067 ± 0.016. S1: 68%; β = 0.06 ± 0.019. Monkey R: M1, 71%; β = −0.15 ± 0.008), especially during the movement phases (MF and MB in “cursor velocity” and “target” panels in Fig. 5A). However, reward magnitude significantly affected M1 neural activity in the reward epoch (R in “reward” panel of Fig. 5A): A sizeable population of M1 and S1 neurons robustly responded to a negative RPE (Fig. 5A; monkey M: M1: 82.6%; β = −0.15 ± 0.0069. S1: 61%; β = −0.13 ± 0.01. Monkey R: M1, 63.4%; β = −0.08 ± 0.0058).

During reward delivery, when the flow of juice began to diminish, monkeys licked at the juice spout. A potential concern was whether the licking movements were represented in the neuronal modulations observed during the reward epoch. Although neural recordings were performed in the forearm areas of M1 (monkeys M and R) and S1 (monkey M), licking movements could be potentially represented by the M1/S1 neurons. In fact, a small percentage of neurons (<5%) showed spiking activity that corresponded to licking frequency. Licking frequency was determined using a custom methodology and added as a regressor in subsequent analysis. We observed that some neurons (“licking frequency” panel in Fig. 5A; monkey M: M1 = 13%, S1 = 7.5%; monkey R: 17%) were weakly tuned to changes in the frequency of licking (M1: β = 0.0032 ± 0.0032; S1: β = 0.01 ± 0.002; monkey R: 0.05 ± 0.007). More importantly, changes in reward magnitude continued to account for most of the variability in M1/S1 neuronal spiking activity, as they did before introducing licking frequency as an additional regressor (monkey M: M1, 82.6%; β = −0.15 ± 0.0069. S1: 61%; β = −0.13 ± 0.01. Monkey R: M1, 63.4%; β = −0.08 ± 0.0058).

To isolate effects related to reward anticipation, the multiple regression analysis was performed by including reward anticipation as an additional regressor, along with other confounding variables, such as cursor velocity, target location, licking frequency, reward magnitude, and so on. Because reward anticipatory activity is expected to increase as the time of reward approaches (e.g., RHA3_009b in Fig. 2 A and B), the “time elapsed after target appearance” was included as the regressor that represents anticipation. By running this modified regression model we obtained the fraction of neurons significantly modulating (P < 0.05) their neural activity in accordance with the time elapsed after target onset. The fraction was represented as a function of time. We observed that, as the time of reward approached, a greater fraction of cells reflected anticipation. For example, in monkey M the number of M1 anticipation-related neurons increased as the time of reward approached (Fig. 5A, “reward anticipation”): 0% around target appearance, 4% during movement, 25% during the hold time, and 27% around the time of reward. S1 neurons had a similar trend with the exception of a noticeable dip around the time of reward: 0% around target appearance, 1% during movement, 14% during the hold time, 9% around the time of reward, and 25% toward the end of hold time. In monkey R the effect of reward anticipation was weaker but nevertheless a significant population of M1 neurons showed the same trend: 0% around target appearance, 3% during movement, 4% during the hold time, and 9% around the time of reward. These results confirmed that M1/S1 spiking activity reflected the time elapsed from target onset. Notably, we did not see any significant changes in the representation of the other regressors (velocity, target location, etc.), which suggests that the time-dependent changes are specific to reward anticipation and not related to any movements.

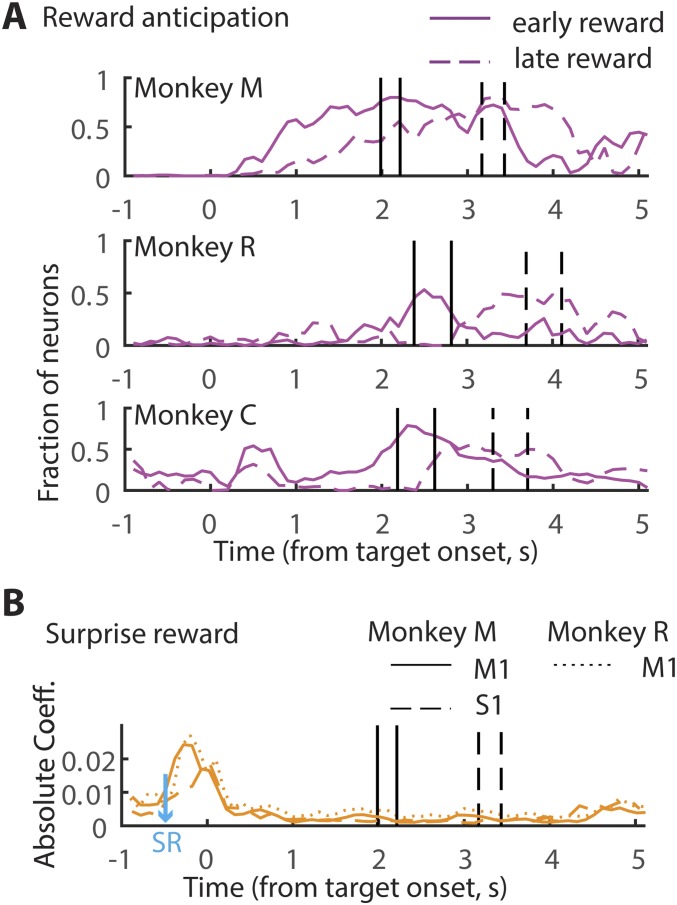

To better visualize the increase in reward anticipatory activity, trials were separated into early- and late-reward trials, and the regression analysis was performed in every time bin leading up to the reward. More specifically, we performed an independent regression on every 100-ms time bin, using the average of 100 ms of data. In this analysis we also included all of the previously used regressors: instantaneous velocity, target position, reward outcome, time after target appeared, surprise reward, and licking frequency. Trials for this analysis were aligned to target onset, instead of to each trial-relevant epoch. Because the reward time was staggered, ±2 SD of the distribution was determined for both early- and late-reward trials and indicated in the plots (black vertical lines, Fig. S4A). This continuous regression model showed that, in monkey M, as expected from the previous analysis, progressively more M1/S1 neurons significantly modulated their firing rate as the time of reward approached (Fig. S4A). Importantly, the time course of this increase in the number of M1/S1 cells tuned to reward plateaued around the time reward was delivered. As a result, the time course was faster for the early-reward condition compared with the late-reward condition. A similar, albeit weaker, trend can be seen for monkey R. Because monkey C did not participate in Exp. 2, and because licking movements were not monitored for monkey C, the regression model for this monkey only included target position, velocity, and reward anticipation. However, a similar trend could also be seen for reward anticipatory activity in monkey C despite the use of a reduced model. Interestingly, in monkey C some neurons were observed to be modulated right after target appearance, which may be indicative of the prospective reward. In all, these results reinforce the notion that M1/S1 neurons also modulate their firing rate as a function of reward anticipation.
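The per-bin bookkeeping behind this continuous regression can be sketched as follows. This is a minimal illustration, not the analysis code used in the study: it assumes the signal is sampled at 100 Hz (so that 10 samples make one 100-ms bin) and that a separate regression has already produced one P value per neuron and bin. The function names `bin_average` and `fraction_significant` and the example values are hypothetical.

```python
def bin_average(samples, samples_per_bin=10):
    """Average consecutive samples into non-overlapping bins.

    With 100-Hz data (an assumption), 10 samples correspond to one 100-ms bin.
    Trailing samples that do not fill a whole bin are dropped.
    """
    n_bins = len(samples) // samples_per_bin
    return [
        sum(samples[b * samples_per_bin:(b + 1) * samples_per_bin]) / samples_per_bin
        for b in range(n_bins)
    ]


def fraction_significant(p_values, alpha=0.05):
    """Fraction of neurons with P < alpha in each time bin.

    p_values[n][b] is the P value of the anticipation regressor for
    neuron n in bin b, obtained from an independent regression per bin.
    """
    n_neurons = len(p_values)
    n_bins = len(p_values[0])
    return [
        sum(1 for row in p_values if row[b] < alpha) / n_neurons
        for b in range(n_bins)
    ]


# Hypothetical example: 4 neurons, 2 time bins.
example_p = [[0.20, 0.01], [0.50, 0.04], [0.90, 0.30], [0.03, 0.001]]
fractions = fraction_significant(example_p)
```

Plotting `fractions` against bin time, separately for early- and late-reward trials, would give curves of the kind described for Fig. S4A.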

Fig. S4.

Continuous regression analysis. (A) Each panel shows the fraction of neurons that significantly explained changes in reward anticipation in a monkey. Regression was performed every 100 ms. The data were separated into early- (solid line) and late- (dashed line) reward trials. The vertical lines demarcate the mean ±1 SE time of reward. (B) The strength of the regression coefficient associated with surprise reward (SR) is displayed. The surprise reward was delivered 500 ms before target onset (blue arrow).

Having examined the impact of reward omission (negative RPE) on spiking activity in M1 and S1, a similar multiple linear regression analysis was used to study the influence of an unexpected increase in reward (positive RPE). Several neurons significantly modulated their firing rate (P < 0.05) in response to positive RPE, although the effects were weaker than for negative RPE (monkey M: M1, 45.5%, β = −0.05 ± 0.0037; S1, 22%, β = −0.05 ± 0.005; monkey R: M1, 35.5%, β = −0.037 ± 0.0043). We anticipated that a more salient positive RPE would be generated if the monkeys were rewarded when they least expected it. To test this, in a small subset (10%) of randomly chosen trials animals received juice (surprise reward in Fig. 1C) while they waited for the trial to begin. Although some neurons (∼10%) in monkey M modulated their firing rate to the unexpected reward, a much larger fraction of neurons responded in monkey R (“surprise reward” panel in Fig. 5A; M1, 35%; β = −0.04 ± 0.003). To assess the strength of the neural activity elicited by surprise reward we examined the regression coefficients generated by the regression model (continuous regression was performed on every 100 ms, as mentioned previously). By doing so, we observed that in monkey M, despite the fact that only 10% of the neurons significantly responded to the surprise reward, these cells showed a clear increase in firing modulation strength (Fig. S4B). Both M1 (β = 0.023 ± 0.002) and S1 (β = 0.016 ± 0.003) neurons showed an increase in activity around the time of the surprise reward. In monkey R, a similar trend was observed, with the regression coefficient increasing to reflect surprise reward (β = 0.025 ± 0.003).

In summary, our results suggest that, in addition to target location and cursor movement velocity, RPE, reward anticipation, and, to a small extent, licking movements contributed to the spiking activity of the M1 and S1 neurons. M1/S1 neurons also modulated their firing rate as a result of both negative and positive RPEs. The effects of negative RPEs on M1/S1 neurons seemed to be stronger and more widespread than those of positive RPEs.

Reward-Related Modulations in the Spatial Tuning of Sensorimotor Neurons.

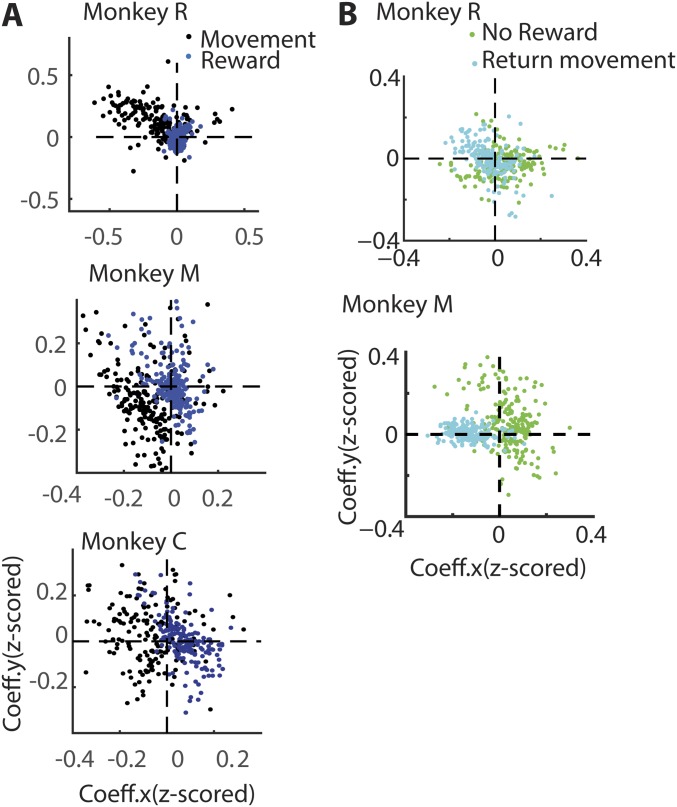

It is well known that the firing rate of M1/S1 neurons varies in a consistent way according to arm movement direction (black trace in Fig. 5B), a property known as neuronal directional tuning. Thus, as expected, the normalized firing rate of the neuron 6J4_012b from monkey R shows clear directional tuning (Fig. 5B, Left, black trace, P < 0.05, bootstrap): a higher firing rate for the target located at 90°, therefore referred to as the “preferred” direction, and a lower firing rate for the target at 270°, the “nonpreferred” direction. An example neuron from monkey M (Fig. 5B, Right, RHA1_012b) is also directionally tuned (P < 0.05, bootstrap). To examine directional tuning in the neuronal population, a multiple regression model (Materials and Methods) was fitted to every neuron’s spiking activity when the arm was moving to the target (MF, movement forward epoch in Fig. 5A). The model assessed neuronal firing rate with respect to different target positions along the x axis and y axis. By plotting the respective (x and y) coefficients in 2D space, each neuron’s preferred direction (angle subtended by the neuron with the abscissa) and tuning depth (radial distance of the neuron from the center) were determined. The black dots in Fig. 5C and Fig. S5 represent the directional tuning of individual neurons. These graphs indicate that many neurons were directionally tuned (monkey M: 206/220; monkey R: 167/198 neurons P < 0.05, bootstrap; monkey M: quadrant 3; monkey R: quadrant 2).
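The step from regression coefficients to a preferred direction and tuning depth can be written out as a short sketch. It assumes only what the text states: the x and y coefficients are treated as a point in 2D, the preferred direction is the angle from the abscissa, and the tuning depth is the radial distance from the origin. The function names and the example coefficients are hypothetical.

```python
import math


def tuning_from_coefficients(beta_x, beta_y):
    """Return (preferred direction in degrees, tuning depth).

    The preferred direction is the counterclockwise angle from the
    abscissa, wrapped to [0, 360); the tuning depth is the distance
    of the point (beta_x, beta_y) from the origin.
    """
    angle_deg = math.degrees(math.atan2(beta_y, beta_x)) % 360.0
    depth = math.hypot(beta_x, beta_y)
    return angle_deg, depth


def quadrant(angle_deg):
    """Quadrant (1 to 4) that contains an angle given in degrees."""
    return int(angle_deg // 90) % 4 + 1


# Hypothetical coefficients for one neuron.
pd_deg, depth = tuning_from_coefficients(-0.3, 0.4)
```

For these example values the point lies in quadrant 2 with a tuning depth of 0.5; the quadrant labels reported for each monkey summarize where such points cluster in the scatter plots.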

Fig. S5.

(A and B) Directional tuning of each neuron is captured by the scatter plots. The format is retained from Fig. 5C. (A) Comparison of directional tuning during the movement (black dots) with tuning during the last 400 ms of hold time epoch before reward delivery commenced (blue dots). (B) Comparison of directional tuning during the time of potential reward in no-reward trials (green dots) and tuning during the return movement epoch (cyan dots).

We then asked whether the same neurons exhibited directional tuning around the time of reward. For that, a multiple regression model was fitted to every neuron’s average spiking activity in the last 400 ms before reward delivery began. The directional tuning of every neuron was plotted in Fig. S5A (blue dots). This analysis revealed that the directional tuning, calculated at the time of reward onset, was correlated with the tuning during the movement epoch in monkey M (R = 0.7; P < 0.001; n = 220). The correlation was weaker in monkey C (R = 0.27; P < 0.001; n = 176) and monkey R (R = 0.18; P = 0.002; n = 184). These results suggest that M1/S1 neurons were weakly tuned to the target location at the time of reward onset. This result is consistent with the observations from Fig. 5A (target panel) that show neural activity modulated by target location while the target was visible (until the end of the hold epoch).

We then measured directional tuning when the reward was omitted. As shown in Fig. 5B (green trace), both illustrated neurons showed strong directional tuning (P < 0.05, bootstrap). However, in both cases these neurons’ spiking activity was suppressed in the preferred direction (90° in 6J4_012b and 180° in RHA1_012b). To further examine directional tuning in the same M1/S1 neuronal population, a multiple regression model was fitted to every neuron’s average spiking activity during the first 400 ms of the potential reward epoch in these no-reward trials. Fig. 5C shows the preferred direction and tuning depth for each M1 and S1 neuron (green dots and squares) during this epoch. These graphs indicate that many neurons underwent a significant change in their directional tuning, from the movement to the reward epochs (monkey M: quadrant 3–1; monkey R: quadrant 2–4). To assess whether these changes in M1 neuronal preferred direction were significant, a nested regression model was designed and tested (Materials and Methods). This analysis revealed that many neurons in monkey R (M1: n = 154/198, P < 0.05, bootstrap nested regression) and in monkey M (M1: n = 68/126; S1: n = 33/94, P < 0.05, bootstrap nested regression) underwent a significant change in preferred direction during this transition, from movement to reward epochs. Indeed, a fraction of these neurons (monkey R, 48%; monkey M: M1, 46%; S1, 30%) exhibited a change in directional tuning on the order of 180 ± 45° (Fig. 5D). These results indicated that, when reward was omitted, a large fraction of M1/S1 neurons exhibited suppression in firing activity in their preferred direction.
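The 180 ± 45° criterion can be made concrete with a small sketch. It assumes that the change in preferred direction is measured as the smallest absolute angle between the two directions, so that a shift counts as a reversal when it is at least 135°. This reading of the criterion, the function names, and the example angles are assumptions for illustration.

```python
def angular_shift(pd_before_deg, pd_after_deg):
    """Smallest absolute angle (0 to 180 degrees) between two directions."""
    diff = abs(pd_after_deg - pd_before_deg) % 360.0
    return 360.0 - diff if diff > 180.0 else diff


def is_reversal(pd_before_deg, pd_after_deg, tolerance_deg=45.0):
    """True when the shift falls within 180 +/- tolerance degrees."""
    return angular_shift(pd_before_deg, pd_after_deg) >= 180.0 - tolerance_deg


# Hypothetical preferred directions (movement epoch, no-reward epoch).
pairs = [(90.0, 270.0), (200.0, 30.0), (90.0, 180.0), (350.0, 10.0)]
fraction_reversed = sum(is_reversal(a, b) for a, b in pairs) / len(pairs)
```

Because the shift is folded into the range 0 to 180°, a change of 190° is treated the same as a change of 170°, which matches a symmetric ±45° window around 180°.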

Next, we tested whether these changes in directional tuning could be influenced by the return arm movement produced by the animals. The return arm movement was offset by at least 1 s from the beginning of the reward delivery epoch in late-reward conditions and by 2 s in early-reward conditions. Further, during the postreward hold time, small rewards kept the monkeys motivated to hold the hand at the target. Moreover, the animals delayed the return movement (300–500 ms) following reward omission. The task design and the behavior would therefore minimize the influence of return arm movement on the neural activity at the time of reward delivery. However, we also ran a multiple regression analysis to compare neuronal directional tuning around the time of return movement with that around the time of the potential reward, in a no-reward trial (Fig. S5B). The directional tuning during the return movement (cyan dots in Fig. S5B) was not correlated with the tuning observed during the no-reward epoch (green dots; replicated from Fig. 5B) in monkey M (R = −0.05; P = 0.28; n = 220) but negatively correlated in monkey R (R = −0.35; P < 0.001; n = 184). The return movement had no influence on the neural tuning at the time of reward for one monkey, whereas for the second monkey a negative relationship between the neuronal activity during these two epochs was found, which by itself may not account for the firing rate modulations around the time of reward.

SI Materials and Methods

Animals and Implants.

Monkey M (12 kg, male) was chronically implanted with four 96-channel arrays, in bilateral arm and leg areas of M1 and S1 cortex (92). Monkey C (6.5 kg, female) was implanted with eight 96-channel arrays in bilateral M1, S1, PMd, supplementary motor area, and posterior parietal cortex (92). Monkey R (9 kg, male) was implanted with four 320-channel arrays, one each in ipsilateral and contralateral M1 and S1. In addition, monkey R was implanted with six 32-channel deep electrode arrays in bilateral putamen. In this study we have recorded from 224 neurons in monkey M from right hemisphere M1 and S1, 176 from left hemisphere M1 and S1 in monkey C, and 184 neurons from left hemisphere M1 of monkey R.

Behavioral Task.

The task was a modified version of the center-out task. A circular ring (4 cm in diameter) appeared in the center of the screen. Monkeys had to hold the avatar arm within the center target (“fixation period”) for a random hold period uniformly drawn from the interval 500–1,500 ms. Following that, the center target disappeared and a colored (red/blue) circular target ring (4 cm in diameter) appeared in the periphery, in one of eight evenly spaced peripheral locations, π/4 rad (45°) apart, on a circle of radius 9 cm. Monkeys then had to move the arm to place a virtual cursor (0.5 cm in diameter), located at the base of the middle finger (Fig. 1C, Inset), completely within the target (93). They then had to hold that position for 3 s. During the hold period, fruit juice reinforcements (small reward; 50-ms pulse; 0.03 mL juice) were delivered every 500 ms to encourage the monkeys to hold within the target circle (Fig. 1C). The reward (large reward, 500-ms pulse; 0.66 mL juice) was delivered after 1 s or 2 s. The target color indicated the time of reward (blue, early or red, late). The two types of trials were randomly intermixed. After the 3-s hold period, the peripheral target disappeared and the central target reappeared. Monkeys had to move the arm back to the center to initiate the next trial after an intertrial interval of 1,000 ms. During passive observation, monkeys observed preprogrammed reach trajectories on the screen. The avatar arm moved along the ideal trajectories between the center and peripheral target starting ∼500 ms after target onset. Small rewards were precluded; only the large reward was delivered at the appropriate time. Monkeys were monitored using a custom-made eye tracker (94) to ensure that they were looking at the screen. The monkeys’ arms were restrained from moving. In Exp. 2 the task contingencies remained the same as in Exp. 1. In 60% of trials that were randomly chosen, called single-reward trials, a 350-ms pulse (0.46 mL juice) gated the reward. In 20% of trials that were randomly chosen, reward was omitted (no reward). In another 20%, the reward was doubled (double reward), that is, the reward was gated by a 700-ms pulse (0.9 mL juice). Due to the design, single- and double-reward trials were compared after 350 ms, when the disparity was first apparent, whereas single- and no-reward trials were compared right after reward onset. Further, in a small subset of randomly chosen trials (10%) a surprise reward (gated by a 350-ms pulse) was delivered during the fixation period.
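The task geometry and the Exp. 2 reward schedule can be summarized in a brief sketch. The target layout, hold interval, pulse durations, and the 60/20/20 split come from the description above. The equal probability of early and late trials, the independent 10% draw for the surprise reward, and all function and field names are assumptions made for illustration; this is not the task-control software.

```python
import math
import random


def target_positions(n_targets=8, radius_cm=9.0):
    """Centers of evenly spaced peripheral targets on a circle (cm)."""
    step = 2.0 * math.pi / n_targets  # pi/4 rad (45 degrees) for 8 targets
    return [
        (radius_cm * math.cos(k * step), radius_cm * math.sin(k * step))
        for k in range(n_targets)
    ]


def draw_trial(rng):
    """Draw the parameters of one hypothetical Exp. 2 trial."""
    outcome = rng.choices(["single", "none", "double"], weights=[60, 20, 20])[0]
    return {
        "fixation_hold_ms": rng.uniform(500.0, 1500.0),
        "target_index": rng.randrange(8),
        # Blue cue: reward after 1 s; red cue: reward after 2 s.
        # Equal probability of the two cues is an assumption.
        "cue": rng.choice(["blue_early", "red_late"]),
        "outcome": outcome,
        "reward_pulse_ms": {"single": 350, "none": 0, "double": 700}[outcome],
        # Treating the surprise reward as an independent 10% draw is an assumption.
        "surprise_reward": rng.random() < 0.10,
    }


positions = target_positions()
trial = draw_trial(random.Random(1))
```

Passing a seeded `random.Random` instance keeps a simulated session reproducible.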

Experiment Setup.

Monkeys moved a custom-made spring-loaded joystick (94) using either their left (monkeys M and C) or their right (monkeys C and R) arms in a given session. A computer monitor (26 cm × 30 cm) placed ∼50 cm in front of the monkey displayed the virtual avatar arm and the reach target. The virtual environment was created using Motionbuilder software (Autodesk, Inc.) (95). All three monkeys were well-trained to perform center-out reaching movements using the joystick-controlled avatar arm to target positions. Because the position of the invisible cursor was deterministic, it could be learned with training. The accuracy of reaching movements using the virtual interface was similar to cursor-control conditions. A custom-made software suite, called BMI, controlled task flow.

A typical session lasted 45–60 min. In all, monkey C performed 36 sessions, monkey M performed 39, and monkey R performed 7 sessions. Monkey M was started on the left-hand joystick control sessions of Exp. 1 (18 sessions), followed by passive observation (13 sessions) and eight sessions of Exp. 2. In all of the sessions, neural data were recorded from the right hemisphere M1 and S1. Monkey C was started on passive observation (13 sessions), followed by 14 left-hand-controlled joystick sessions and 9 right-hand-controlled joystick sessions. Target color was not available for five (four left-hand and one right-hand control) of the sessions. In all of the sessions, neural data were recorded from the left hemisphere M1 and S1. Monkey R performed seven sessions of Exp. 2 using the right hand, while neural recordings were obtained from the left hemisphere M1 neurons (Fig. 1B). For arm position, an analog voltage readout of the joystick, which was indicative of the arm position along the x and y dimensions, was routed via Plexon’s analog channels, where it was digitized at 1 kHz and subsequently read into BMI at 100 Hz. All of the analyses were performed on the 100-Hz data.

EMG Setup.

Four channels of a Bagnoli-8 system (Delsys) were used to record the muscle activity of left and right biceps and triceps during some passive observation sessions in monkey M. Wired DE-2.1 single differential surface electrodes were placed on each muscle, parallel to the muscle fibers. A reference electrode was fixed at the left elbow bone (capitulum). The gain of the main amplifier was set to a factor of 1,000 for all channels. EMGs were band-pass-filtered (20–450 Hz) and recorded (simultaneously with kinematics) in the Plexon data acquisition system.

Neural Data Acquisition.

Neural activity was recorded using a 128-channel multichannel acquisition processor (Plexon Inc.) (71). Raw voltage waveforms were amplified and digitized at 40 kHz. Plexon’s online Sort Client (Plexon Inc.) was used to identify spikes using a manually supervised, template-matching procedure. Plexon time stamps were synchronized with BMI to enable perievent neural data analyses. All of the analyses were performed in MATLAB (The MathWorks, Inc.).

PETH of Neuronal Activity.

The recorded action potential events (raster plots in Figs. 2 and 4) were counted in bins of 10-ms widths. The histogram data were smoothed using a moving Gaussian kernel (width = 50 ms) for illustration purposes. To visualize the absolute firing rate, in some cases (Figs. 2B and 4B) the neuronal modulations were not z-scored. To visualize differences in firing rate modulations across neuron types and to combine activity across neurons the average modulation profile for each neuron (Figs. 2 A and D and 4 A and C) was normalized by subtracting the mean bin count and dividing by the SD of the cell’s bin count. The average activity across trials was then determined and aligned to the onset of the hold period to obtain PETHs. With this normalization, PETHs express the event-related modulations as a fraction of the overall modulations, or, statistically, the z-score.
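The PETH steps described above (10-ms binning, Gaussian smoothing, z-scoring) can be sketched as follows. The sketch works in milliseconds and makes two labeled assumptions: the 50-ms kernel width is treated as the SD of the Gaussian, and the z-score uses the population SD of the bin counts. Function names and example spike times are hypothetical.

```python
import math


def bin_spikes(spike_times_ms, t_start_ms, t_stop_ms, bin_ms=10.0):
    """Count spikes in consecutive bins of width bin_ms."""
    n_bins = int(round((t_stop_ms - t_start_ms) / bin_ms))
    counts = [0] * n_bins
    for t in spike_times_ms:
        idx = int((t - t_start_ms) // bin_ms)
        if 0 <= idx < n_bins:
            counts[idx] += 1
    return counts


def gaussian_smooth(values, sd_bins):
    """Smooth with a Gaussian kernel truncated at 3 SD.

    Weights are renormalized at the edges so that a constant input
    stays constant. Treating 50 ms as the kernel SD is an assumption.
    """
    half = int(math.ceil(3 * sd_bins))
    offsets = range(-half, half + 1)
    kernel = [math.exp(-0.5 * (k / sd_bins) ** 2) for k in offsets]
    smoothed = []
    for i in range(len(values)):
        num = 0.0
        den = 0.0
        for k, w in zip(offsets, kernel):
            j = i + k
            if 0 <= j < len(values):
                num += w * values[j]
                den += w
        smoothed.append(num / den)
    return smoothed


def zscore(values):
    """Subtract the mean bin count and divide by the (population) SD."""
    mean = sum(values) / len(values)
    sd = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    return [(v - mean) / sd if sd > 0 else 0.0 for v in values]


# Hypothetical spike times (ms) within a 100-ms window.
counts = bin_spikes([5.0, 12.0, 18.0, 95.0], 0.0, 100.0)
smoothed = gaussian_smooth(counts, sd_bins=5.0)  # 50 ms / 10-ms bins
normalized = zscore(counts)
```

Averaging the normalized traces across trials, aligned to the event of interest, would then yield the PETH.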

Image Detection-Based Determination of Licking Frequency.

Following reward delivery, monkeys typically began licking at the juice spout when the juice flow had reduced. Around this time, the possibility that any observed neural modulation resulted from licking behavior, instead of reward expectation, constitutes a potential cause for concern. To address this issue, we carefully monitored licking behavior using a custom image detection methodology. Previously, electric, optic, or force sensors have been used to detect tongue protrusion and retraction (96). However, previous methods have registered “false negatives” due to the inability to detect the partial and lateral movements of the tongue, which often occur without contacting or interrupting the sensor (reviewed in ref. 96). Using our approach the false positive (<0.002) and false negative (<0.025) rates of lick detection were minimal. Furthermore, our method has the potential to detect lateral/partial movements of the tongue. One caveat at present is the subjective bias in labeling tongue movements, which can be remedied by using unsupervised image classification algorithms.

Discussion

In the present study we manipulated reward expectation using explicit instruction of reward timing by color cues. This experimental setting allowed us to study the representation of temporal intervals related to rewards by M1 and S1 neurons, as well as the representation of the reward, and the integration of this information with the traditional M1 and S1 neuronal firing modulations, such as encoding of movement direction and velocity. We observed that (i) 9–27% of M1/S1 neurons exhibited anticipatory neuronal activity related to reward timing, which was distinct enough to differentiate reward times that were 1 s apart, (ii) M1/S1 neurons modulated their neural activity as a function of errors in reward expectation, and (iii) M1/S1 neurons corepresented reward and motor parameters.

Reward-Related Activity in Sensorimotor Neurons.

Modulation in neural activity was observed in many M1/S1 neurons around the time of reward, which we have interpreted as representing both expectation of reward and mismatch in reward expectation. These findings are consistent with reward anticipatory modulations previously seen in premotor cortical neurons (30, 32) and in motor cortical neurons (33) (SI Discussion for more information). However, given that many of these M1/S1 neurons are involved in planning of arm movements, a potential concern is that the monkeys could be moving their arms, loosening their grip on the joystick, or performing other unmonitored hand movements, which could be misinterpreted as reward-related modulation. However, in our task design any arm movement produced during the hold time would preclude the animal from receiving the intended reward. To comply with this constraint, all three monkeys learned to hold their arms steady. This was confirmed by inspection of the joystick position, during the 3-s hold time, which revealed that the arms of the monkeys remained stable on most trials during the hold time. Despite a steady position, one could still attribute the observed M1/S1 neural firing modulation to a change in arm or body posture, which led to no change in the arm end point. However, because the monkeys were operating a spring-loaded joystick, adjusting their arm/body posture while keeping the joystick stationary would be extremely demanding and logistically quite an unfeasible task for the animals. Notwithstanding these mitigating factors, we have conducted passive observation sessions, where monkeys did not operate the joystick at all but simply watched the task being performed on the screen. In these sessions, the monkey’s hands were well-restrained to the sides of the chair, while EMGs were recorded to control for any inadvertent muscle contractions. 
A slight increase in EMG was recorded from the (long head of) triceps and the biceps as the virtual arm moved; however, no EMG modulations were observed around the time of reward. Under these conditions of complete absence of overt arm movements, clear reward-related M1/S1 neuronal firing modulations occurred in several neurons (e.g., RHA2_030b and RHA3_009b in Fig. 2). Furthermore, examination of neuronal firing rate modulations demonstrated that the spiking activity of some neurons represented arm position and velocity, but not reward-related activity, whereas other simultaneously recorded neurons displayed reward-related activity. If the reward-related activity was influenced by arm movements, this finding would be unlikely. Furthermore, a few M1/S1 neurons, recorded simultaneously during the same session, displayed only reward-related activity.

Finally, we identified licking-related neurons, and the firing rate modulations in these neurons followed the changes in licking frequency. To determine the changes in neural activity that were correlated with licking movements, we used a generalized linear model analysis (37–39). From this analysis we learned that spiking activity of only a small percentage of neurons was related to licking frequency (<15%), whereas a much higher number of our recorded M1/S1 neurons represented arm movements (>80%) and changes in reward (>50%). These findings are consistent with the fact that our multielectrode arrays were originally implanted in the so-called forearm representation of M1/S1. Based on all these controls, we propose that our results are consistent with the interpretation that the observed M1/S1 neuronal firing rate modulations described here were related to reward expectation, rather than emerging as the result of some unmonitored animal movement.

RPE Signals in M1/S1.

Following “catch” trials when a reward was withheld, a transient increase in neural activity was observed in many M1 and S1 neurons. This observation is consistent with a recent study (31) that reported an increase in firing in M1 and dorsal premotor (pMd) neurons when reward was not delivered in one monkey. We have replicated those findings in our study and further extended them by demonstrating that M1 and S1 neurons reduced their firing rate for a higher-than-expected reward. Altogether, these results support the hypothesis that M1 and S1 encode bidirectional RPEs. Our findings are also consistent with those obtained in an imaging study (40) and a recent electrocorticography study (41) in which RPE-related activity was detected in sensorimotor brain regions.

Neuronal responses to both positive and negative RPEs, indicated by distinct bidirectional changes in firing rates, were initially reported for midbrain DA neurons (34, 36, 42). Subsequently, bidirectional RPE signals have been observed in many other brain areas (43–47). The widespread nature of RPE signals may suggest a common general mechanism involved in learning from outcomes. Our results suggest that this mechanism may also exist in the monkey primary motor and sensory cortical areas.

Although the role of DA in reward processing is rather complex and still a subject of debate (48, 49), one line of research has implicated the phasic DA response, typically seen in midbrain DA neurons, as the mediator of RPEs (36, 50–52). Typically, reinforcement-based learning models have used prediction errors to account for behavioral plasticity (53–55). Recent studies that looked at plasticity in the motor system, particularly regarding the role of reward in motor adaptation (13, 56) and facilitation of movement sequences (57), have suggested that M1 neurons may play a crucial role in this process. The fact that we observed here that M1 and S1 neurons exhibit bidirectional prediction error signals reinforces this hypothesis significantly.

Interestingly, a significant fraction of M1/S1 neurons, at the time of reward omission, exhibited directional tuning that was distinct from the one displayed by them during the arm movement epoch. Changes to M1 neuronal directional tuning, and hence to the cell’s preferred direction, have been observed previously, as a function of task context (58–60) or even over the duration of a trial (61, 62). During a typical trial, no tuning could be detected before the target appeared; strong neuronal tuning emerged only after the target appeared and remained throughout the period the target was acquired, until the end of the hold period. However, when reward was withheld, many M1/S1 neurons changed their preferred directions by 180 ± 45°. We speculate that these changes are related to reward omission and may serve to facilitate reward-based learning. However, it remains to be tested whether these reward-related modulations affect future M1/S1 neural activity.

Distributed Encoding in M1/S1 Neurons.

Based on studies carried out in the last couple of decades, M1 neuronal activity has been correlated with several kinetic (20–22, 63, 64) and kinematic parameters (16, 17, 19). Even though many of these movement parameters are correlated with one another, in general one line of thinking in the field purports that cortical motor neurons are capable of multiplexing information related to different parameters (65–68), including reward (65, 69, 70). Simultaneous recording from several M1/S1 neurons in our study has shown that many of these neurons are associated with some aspect of movement—position, velocity, and so on—whereas a subset of the neurons also seem to be receiving reward-related signals. This latter group of M1/S1 neurons are perhaps the ones that displayed both movement and reward-related activity. These findings are consistent with those from recent studies that have examined neurons in M1 and pMd responding to arm position, acceleration, and reward anticipation (31).

In conclusion, we observed that M1/S1 cortical neurons simultaneously represent sensorimotor and reward-related modulations in spiking activity. According to this view, M1 and S1 ensembles can be considered as part of a distributed network that underlies reward-based learning.

SI Discussion

Reward-related anticipatory neuronal activity, which we and others (72, 73) have observed, is quite similar to the neuronal “climbing activity” previously reported in many studies and, depending on the nature of the experiment, attributed to different factors, such as encoding of interval timing for self-initiated movements (74), motor preparatory signals (75, 76), anticipation of behaviorally relevant sensory stimuli (77), sensorimotor transformation (78), orientation of selective spatial attention (79, 80), maintenance of working memory (81, 82), and decision making (70, 83, 84). Overall, these studies indicate that anticipatory activity is characteristic of many brain regions. Our findings show that the primate primary sensorimotor areas are not exceptions to this rule. Here, we have shown that it is possible to observe reward-related timing activity in M1/S1 by introducing two reward times to a task. Further studies, however, will be needed to define the precision of these neuronally derived timing signals.

Reward timing and anticipatory signals in M1 and S1 are consistent with two plausible theories previously discussed in the literature. The first possibility is that these visually cued timing signals, observed here in M1/S1 neurons, could play a role in reinforcement learning occurring in these neural circuits (85). In addition to neurons in the contralateral M1 and S1, we also observed robust anticipatory firing in the ipsilateral M1 neurons. This lends credence to a second possibility, that M1 and S1 cortical ensembles may be part of a widespread brain circuit, formed by multiple interconnected cortical and subcortical structures, that define altogether a “neural timing system” capable of anticipating salient events, such as reward delivery (86). In support of this possibility, reward-related timing signals were previously observed in the orbitofrontal cortex (87, 88), and striatum (89, 90). However, direct experimental evidence in favor of the widespread timing network has not been obtained. One would have to simultaneously record from multiple brain areas (91, 92) that are implicated in event timing to gain a better understanding of the role played by this brain timing network.

Materials and Methods

All studies were conducted with approved protocols from the Duke University Institutional Animal Care and Use Committee and were in accordance with NIH guidelines for the use of laboratory animals. Three adult rhesus macaque monkeys (Macaca mulatta) participated in this study. More information regarding the animals and the implants is available in SI Materials and Methods.

Behavioral Task.

The task was a modified version of the center-out task. A circular ring appeared in the center of the screen. When monkeys held the avatar arm within the center target, a colored (red/blue) circular target ring appeared in the periphery, in one of eight evenly spaced peripheral locations. Monkeys had to then move the arm to place it on the target and hold it there for 3 s. A juice reward was delivered after 1 s (early reward) or after 2 s (late reward). The target color cued the time of reward (blue, early and red, late). In Exp. 2, in 20% of trials reward was omitted (no reward). In another 20% the reward was doubled (double reward). Further, in a small subset of randomly chosen trials (10%) a surprise reward (gated by a 350-ms pulse) was delivered during the fixation period. More information on the task design can be found in SI Materials and Methods.

Neural activity was recorded using a 128-channel multichannel acquisition processor (Plexon Inc.) (71). All of the analyses were performed in MATLAB (The MathWorks, Inc.).

PETH of Neuronal Activity.

The recorded action potential events were counted in bins of 10 ms, smoothed using a moving Gaussian kernel (width = 50 ms) for illustration purposes. Details regarding normalization procedures can be found in SI Materials and Methods.

Classifying Trials Based on Early vs. Late Reward.

To investigate the strength of information about the time of reward provided by a single neuron, or by the entire population, a decoding analysis was performed based on a linear discriminant analysis algorithm or an SVM algorithm, using the MATLAB statistics toolbox (see SI Materials and Methods for details).

Multiple Linear Regression.

To model every neuron’s changes in firing rate as a function of task contingencies (Fig. 5A), a multiple regression analysis was carried out using general linear models (GLMs; see SI Materials and Methods for details).

Spatial Tuning.

The normalized mean firing rate was determined during the movement epoch, the hold time epoch, the reward epoch, and the return movement epoch. The z-scored firing rate in each epoch was fit for each neuron with a GLM (see SI Materials and Methods for details).

Decoding Analysis.

To investigate the strength of information about the time of reward provided by the entire population, a population-decoding analysis was performed based on an SVM algorithm using the MATLAB statistics toolbox with 10-fold cross-validation. For the decoding of time of reward, trials were classified as early vs. late reward (Fig. 3 B and C and Fig. S2 B and C). Each trial was represented as one point in the n-dimensional feature space spanned by the firing rates of n neurons or n principal components during this trial. Typically, classifier performance did not improve significantly after 10–15 principal components. Each unit with at least 100 trials for each condition was included in this analysis. This dataset was then split into 10 partitions, where nine partitions were used as a training set and one partition was used as a test set. This was repeated for all 10 partitions, so that each trial was part of the test set exactly once. By systematically varying the classifier threshold and bootstrapping (n = 1,000), the area under the ROC curve and its 95% confidence bounds were determined. If the lower bound exceeded the chance level of prediction (0.5 for binary classification), then the classifier output (as measured by “proportion correct”) was considered “significant.” The time course was obtained by performing this analysis in a 200-ms window, advanced in steps of 200 ms. The procedures were repeated with several different window sizes (100–1,000 ms) and steps (25–250 ms). The entire procedure of selecting trials, training, and testing the model was repeated several times to account for differences in selecting the data.

The analysis was also repeated with a nonparametric classifier (k-nearest neighbor algorithm, k-NN) that did not make any presumptions regarding the underlying data distributions. Optimal decoding was achieved with 30 < N (number of neighbors) < 40. Compared with SVM, the k-NN classifier’s outcome was more variable across iterations, but with a large number of iterations (n > 1,000) convergence was achieved. The results remained the same irrespective of the classifier used.
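The cross-validation and bootstrapped ROC steps described above can be sketched as follows. This is an illustrative sketch under stated assumptions rather than the authors' pipeline: to stay dependency-free it substitutes a ridge-regularized Fisher linear discriminant for the SVM, resamples the cross-validated trial scores for the bootstrap, and uses synthetic firing rates. All function names are hypothetical.

```python
import numpy as np

def auc(scores, labels):
    """Area under the ROC curve via the rank-sum (Mann-Whitney) identity."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    ranks = np.empty(len(scores))
    ranks[scores.argsort()] = np.arange(1, len(scores) + 1)
    n_pos, n_neg = labels.sum(), (~labels).sum()
    return (ranks[labels].sum() - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)

def lda_fit(X, y, ridge=1e-3):
    """Fisher discriminant (stand-in for the SVM; an assumption of this sketch)."""
    mu0, mu1 = X[y == 0].mean(0), X[y == 1].mean(0)
    Xc = np.vstack([X[y == 0] - mu0, X[y == 1] - mu1])
    cov = Xc.T @ Xc / (len(X) - 2) + ridge * np.eye(X.shape[1])
    w = np.linalg.solve(cov, mu1 - mu0)
    return w, -w @ (mu0 + mu1) / 2

def cross_val_scores(X, y, n_folds=10, seed=0):
    """10-fold cross-validation: every trial is in the test set exactly once."""
    rng = np.random.default_rng(seed)
    scores = np.empty(len(y))
    for test_idx in np.array_split(rng.permutation(len(y)), n_folds):
        train = np.ones(len(y), dtype=bool)
        train[test_idx] = False
        w, b = lda_fit(X[train], y[train])
        scores[test_idx] = X[test_idx] @ w + b
    return scores

def bootstrap_auc(scores, y, n_boot=1000, seed=0):
    """Point estimate of the AUC and a percentile 95% confidence interval."""
    rng = np.random.default_rng(seed)
    stats = []
    while len(stats) < n_boot:
        idx = rng.integers(0, len(y), len(y))
        if 0 < y[idx].sum() < len(y):          # both classes must be present
            stats.append(auc(scores[idx], y[idx]))
    return auc(scores, y), np.percentile(stats, [2.5, 97.5])

# Synthetic example: 100 early (0) and 100 late (1) trials, 12 features
rng = np.random.default_rng(1)
y = np.repeat([0, 1], 100)
X = rng.normal(size=(200, 12)) + 0.4 * y[:, None]
scores = cross_val_scores(X, y)
a, (lo, hi) = bootstrap_auc(scores, y)
significant = lo > 0.5                          # lower bound above chance
```

To obtain a time course, the same procedure would be run once per analysis window, with `X` holding the firing rates (or principal-component scores) from that window.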

Multiple Regression Analysis.

To model every neuron’s changes in firing rate as a function of task contingencies, a multiple regression analysis was carried out with the following GLM:

β1 to β6 are the slope parameter estimates, β0 is the constant, and ε is the residual. Each model was tested one after another. In each case, independent versions of the model were fit in six different, 400-ms-long task epochs (Fig. 5A): baseline epoch began 400 ms before fixation target appeared; Fixation, 0–400 ms of fixation; Target, 0–400 ms after target appeared; Movement (forward, MF), 400 ms before hold onset; Hold, 100–500 ms after hold onset; Reward, 100–500 ms after reward onset; Movement (back), 400 ms before reaching center. In the GLM analysis in Fig. S4A, independent GLMs were fit and tested for every 100-ms epoch. The mean-removed, average firing rate of the neuron in the epoch was y. All of the predictors were mean-centered and normalized. Average values of cursor velocity and licking frequency were determined in each epoch of a trial. Target positions ranged from 0 to 7, corresponding to 0–2π, in steps of π/4. Reward parameters took binary values (0, no reward and 1, reward; or 0, reward and 1, double reward). Note that no-reward and double-reward trials were not part of the same model. We also tested a GLM that included all three reward types in the design, and results were similar. Predictor space was examined for multicollinearity issues. A forward stepwise variable selection procedure was used to monitor for cocontributions among predictors. The use of the multiple regression technique was validated by testing assumptions of randomness of residuals, constancy of variance, and normality of error terms. If the model fit the changes in spiking activity of a neuron in an epoch well (P < 0.05), then the neuron and its slope parameters were considered. Statistical significance of regression coefficients was determined using a t test with P < 0.05 as the criterion. All tests performed were two-sided. Separate GLMs were fit for data from S1 and M1, for the individual monkeys, and for early- and late-reward trials.
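The per-epoch fitting procedure can be illustrated with a short ordinary-least-squares sketch. It is not the authors' model: only three hypothetical predictors (cursor velocity, licking frequency, and a binary reward term) stand in for the six slope terms, the data are synthetic, and the two-sided P values use a normal approximation to the t distribution, which is reasonable only when the number of trials is large.

```python
import math
import numpy as np

def fit_glm(y, predictors):
    """OLS fit of y = b0 + sum_k b_k * x_k + e for one neuron in one epoch."""
    y = np.asarray(y, dtype=float)
    y = y - y.mean()                                  # mean-removed firing rate
    Z = np.asarray(predictors, dtype=float)
    Z = (Z - Z.mean(0)) / Z.std(0, ddof=1)            # mean-centered, normalized
    A = np.column_stack([np.ones(len(y)), Z])         # intercept + predictors

    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ beta
    dof = len(y) - A.shape[1]
    sigma2 = resid @ resid / dof                      # residual variance
    se = np.sqrt(sigma2 * np.diag(np.linalg.inv(A.T @ A)))
    t = beta / se

    # Two-sided P values; normal approximation to the t distribution (assumption)
    p = np.array([math.erfc(abs(ti) / math.sqrt(2)) for ti in t])
    return beta, t, p

# Synthetic trials: the rate depends on velocity and reward, not on licking
rng = np.random.default_rng(0)
n = 300
velocity = rng.normal(size=n)
licking = rng.normal(size=n)
reward = rng.integers(0, 2, size=n)                   # 0 = no reward, 1 = reward
rate = 5 + 2.0 * velocity + 1.5 * reward + rng.normal(size=n)
beta, t, p = fit_glm(rate, np.column_stack([velocity, licking, reward]))
```

In this sketch a predictor that is constant within an epoch would produce a division by zero during normalization, so such predictors would need to be dropped before fitting.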

Spatial Tuning.

The normalized mean firing rate was determined in every trial for three pairs of epochs: the “movement” epoch (200–600 ms after stimulus; Fig. 5 B and C and Fig. S5A) and the hold time epoch (400–100 ms before reward onset; Fig. S5A); the movement epoch and the “reward” epoch (200–600 ms after reward onset; Fig. 5C); and the reward epoch and the “return movement” epoch (0–400 ms; Fig. S5B). The z-scored firing rate in each epoch was fit for each neuron with the following GLM:

z = β0 + β1·Px + β2·Py + ε,

where Px and Py are the positions of the targets in 2D space in each trial. Coefficients β1 and β2 are the slope parameter estimates, β0 is the constant, and ε is the residual. z is the z-scored firing rate in an epoch. Separate GLMs were designed for each epoch.

After fitting the model, the residuals were resampled with replacement (bootstrapping, n = 10,000). Resampled residuals were added to z_est (the estimate of z using the best-fit β1, β2, and β0) to obtain a new z. Regression was carried out on the newly determined z values to determine a new set of parameters (β1, β2, β0). This procedure was repeated 10,000 times to obtain as many bootstrapped estimates of each parameter. The plots show the mean of the 10,000 estimates of β1 and β2 for each neuron. A parameter estimate was considered significantly different from 0 if the 5th–95th percentile range of its distribution did not include 0.
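The residual-resampling procedure above can be sketched in a few lines. The function name, the unit-radius target coordinates, and the synthetic neuron are assumptions made for illustration; the example uses fewer bootstrap replicates than the 10,000 reported, only to keep it fast.

```python
import numpy as np

def bootstrap_tuning(z, px, py, n_boot=10_000, seed=0):
    """Residual bootstrap for z = b0 + b1*Px + b2*Py + e (one neuron, one epoch)."""
    rng = np.random.default_rng(seed)
    z = np.asarray(z, dtype=float)
    A = np.column_stack([np.ones(len(z)), px, py])
    pinv = np.linalg.pinv(A)                 # least-squares solver, reused below

    beta = pinv @ z                          # best-fit b0, b1, b2
    z_est = A @ beta
    resid = z - z_est

    # Resample residuals with replacement: one row per bootstrap replicate
    idx = rng.integers(0, len(z), size=(n_boot, len(z)))
    z_new = z_est + resid[idx]               # new z values, shape (n_boot, n_trials)
    boot = z_new @ pinv.T                    # refit parameters, shape (n_boot, 3)

    lo, hi = np.percentile(boot, [5, 95], axis=0)
    significant = (lo > 0) | (hi < 0)        # 5th-95th percentile range excludes 0
    return boot.mean(0), lo, hi, significant

# Synthetic neuron tuned along x; eight targets on a unit circle (assumption)
rng = np.random.default_rng(2)
angles = rng.integers(0, 8, size=240) * np.pi / 4
px, py = np.cos(angles), np.sin(angles)
z = 0.8 * px - 0.1 * py + rng.normal(scale=0.5, size=240)
mean_beta, lo, hi, sig = bootstrap_tuning(z, px, py, n_boot=2000)
```

Precomputing the pseudoinverse lets all replicates be refit with a single matrix product, because the design matrix is the same in every replicate and only z changes.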

To determine whether the spatial tuning in the reward epoch was significantly different from that of the movement epoch, a nested regression approach was used. Two independent GLMs were combined in this way:

Here, E2 is a dummy variable that is set to 1 for the no-reward epoch and 0 for the movement epoch. The bootstrap approach described above was used. The change in spatial tuning was considered significant if either β3 or β4 was significantly different from 0.
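One form of the combined model that is consistent with this description is sketched below. It assumes that the dummy variable enters only through interactions with the target positions; whether the original model also included a separate offset term for E2 is not stated here, so this should be read as an illustration rather than the exact equation used.

```latex
z = \beta_0 + \beta_1 P_x + \beta_2 P_y + E_2\,(\beta_3 P_x + \beta_4 P_y) + \varepsilon
```

With E2 = 0 this reduces to the movement-epoch model, z = β0 + β1·Px + β2·Py + ε. With E2 = 1 the slopes become β1 + β3 and β2 + β4, so β3 and β4 measure how much the spatial tuning changes between the two epochs.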

Acknowledgments

We thank G. Lehew and J. Meloy for the design and production of the multielectrode arrays used in this study; D. Dimitrov and M. Krucoff for conducting implantation surgeries; Z. Li for expertise with the BMI Software; T. Phillips for animal care and logistical matters; T. Jones, L. Oliveira, and S. Halkiotis for administrative assistance; and S. Halkiotis for manuscript review. This work was supported by National Institute of Neurological Disorders and Stroke, NIH Award R01NS073952 (to M.A.L.N.). The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1703668114/-/DCSupplemental.

References

- 1. Wolpert DM, Diedrichsen J, Flanagan JR. Principles of sensorimotor learning. Nat Rev Neurosci. 2011;12:739–751. doi: 10.1038/nrn3112.

- 2. Guo L, et al. Dynamic rewiring of neural circuits in the motor cortex in mouse models of Parkinson’s disease. Nat Neurosci. 2015;18:1299–1309. doi: 10.1038/nn.4082.

- 3. Wise RA. Intracranial self-stimulation: Mapping against the lateral boundaries of the dopaminergic cells of the substantia nigra. Brain Res. 1981;213:190–194. doi: 10.1016/0006-8993(81)91260-9.

- 4. Wise RA. Dopamine, learning and motivation. Nat Rev Neurosci. 2004;5:483–494. doi: 10.1038/nrn1406.

- 5. Fibiger HC, LePiane FG, Jakubovic A, Phillips AG. The role of dopamine in intracranial self-stimulation of the ventral tegmental area. J Neurosci. 1987;7:3888–3896. doi: 10.1523/JNEUROSCI.07-12-03888.1987.

- 6. Berger B, Gaspar P, Verney C. Dopaminergic innervation of the cerebral cortex: Unexpected differences between rodents and primates. Trends Neurosci. 1991;14:21–27. doi: 10.1016/0166-2236(91)90179-x.

- 7. Williams SM, Goldman-Rakic PS. Characterization of the dopaminergic innervation of the primate frontal cortex using a dopamine-specific antibody. Cereb Cortex. 1993;3:199–222. doi: 10.1093/cercor/3.3.199.

- 8. Seamans JK, Yang CR. The principal features and mechanisms of dopamine modulation in the prefrontal cortex. Prog Neurobiol. 2004;74:1–58. doi: 10.1016/j.pneurobio.2004.05.006.

- 9. Hosp JA, Nolan HE, Luft AR. Topography and collateralization of dopaminergic projections to primary motor cortex in rats. Exp Brain Res. 2015;233:1365–1375. doi: 10.1007/s00221-015-4211-2.

- 10. Molina-Luna K, et al. Dopamine in motor cortex is necessary for skill learning and synaptic plasticity. PLoS One. 2009;4:e7082. doi: 10.1371/journal.pone.0007082.

- 11. Lewis DA, Campbell MJ, Foote SL, Goldstein M, Morrison JH. The distribution of tyrosine hydroxylase-immunoreactive fibers in primate neocortex is widespread but regionally specific. J Neurosci. 1987;7:279–290. doi: 10.1523/JNEUROSCI.07-01-00279.1987.

- 12. Gaspar P, Berger B, Febvret A, Vigny A, Henry JP. Catecholamine innervation of the human cerebral cortex as revealed by comparative immunohistochemistry of tyrosine hydroxylase and dopamine-beta-hydroxylase. J Comp Neurol. 1989;279:249–271. doi: 10.1002/cne.902790208.

- 13. Galea JM, Mallia E, Rothwell J, Diedrichsen J. The dissociable effects of punishment and reward on motor learning. Nat Neurosci. 2015;18:597–602. doi: 10.1038/nn.3956.

- 14. Krakauer JW, Latash ML, Zatsiorsky VM. Progress in motor control. Learning. 2009;629:597–618.

- 15. Laubach M, Wessberg J, Nicolelis MA. Cortical ensemble activity increasingly predicts behaviour outcomes during learning of a motor task. Nature. 2000;405:567–571. doi: 10.1038/35014604.

- 16. Georgopoulos AP, Schwartz AB, Kettner RE. Neuronal population coding of movement direction. Science. 1986;233:1416–1419. doi: 10.1126/science.3749885.

- 17. Mahan MY, Georgopoulos AP. Motor directional tuning across brain areas: Directional resonance and the role of inhibition for directional accuracy. Front Neural Circuits. 2013;7:92. doi: 10.3389/fncir.2013.00092.

- 18. Lebedev MA, et al. Cortical ensemble adaptation to represent velocity of an artificial actuator controlled by a brain-machine interface. J Neurosci. 2005;25:4681–4693. doi: 10.1523/JNEUROSCI.4088-04.2005.

- 19. Moran DW, Schwartz AB. Motor cortical representation of speed and direction during reaching. J Neurophysiol. 1999;82:2676–2692. doi: 10.1152/jn.1999.82.5.2676.

- 20. Kakei S, Hoffman DS, Strick PL. Muscle and movement representations in the primary motor cortex. Science. 1999;285:2136–2139. doi: 10.1126/science.285.5436.2136.

- 21. Sergio LE, Hamel-Pâquet C, Kalaska JF. Motor cortex neural correlates of output kinematics and kinetics during isometric-force and arm-reaching tasks. J Neurophysiol. 2005;94:2353–2378. doi: 10.1152/jn.00989.2004.

- 22. Addou T, Krouchev NI, Kalaska JF. Motor cortex single-neuron and population contributions to compensation for multiple dynamic force fields. J Neurophysiol. 2015;113:487–508. doi: 10.1152/jn.00094.2014.

- 23. Lebedev MA, O’Doherty JE, Nicolelis MAL. Decoding of temporal intervals from cortical ensemble activity. J Neurophysiol. 2008;99:166–186. doi: 10.1152/jn.00734.2007.

- 24. Roux S, Coulmance M, Riehle A. Context-related representation of timing processes in monkey motor cortex. Eur J Neurosci. 2003;18:1011–1016. doi: 10.1046/j.1460-9568.2003.02792.x.

- 25. Costa RM, Cohen D, Nicolelis MAL. Differential corticostriatal plasticity during fast and slow motor skill learning in mice. Curr Biol. 2004;14:1124–1134. doi: 10.1016/j.cub.2004.06.053.

- 26. Arsenault JT, Rima S, Stemmann H, Vanduffel W. Role of the primate ventral tegmental area in reinforcement and motivation. Curr Biol. 2014;24:1347–1353. doi: 10.1016/j.cub.2014.04.044.