Abstract

Background

Despite the benefits of influenza vaccination, each year more than half of adults in the United States do not receive it.

Objective

To evaluate the association between an active choice intervention in the electronic health record (EHR) and changes in influenza vaccination rates.

Design

Observational study.

Patients

Adults eligible for influenza vaccination with a clinic visit at one of three internal medicine practices at the University of Pennsylvania Health System between September 2010 and March 2013.

Intervention

The EHR confirmed patient eligibility during the clinic visit and, upon accessing the patient chart, prompted the physician and their medical assistant to actively choose to “accept” or “cancel” an order for the influenza vaccine.

Main Measures

Change in influenza vaccination order rates at the intervention practice compared with two control practices during the 2012–2013 flu season, relative to trends in the prior two flu seasons, adjusted for time trends and patient and clinic visit characteristics.

Key Results

The sample (n = 45,926 patients) was 62.9% female, 35.9% white, and 54.4% black, with a mean age of 50.2 years. Trends were similar between practices during the 2 years in the pre-intervention period. Vaccination rates increased in both groups in the post-intervention year, but the intervention practice using active choice had a significantly greater increase than the control practices (adjusted difference-in-difference: 6.6 percentage points; 95% CI, 5.1–8.1; P < 0.001), representing a 37.3% relative increase compared to the pre-intervention period. More than 99.9% (9938/9941) of orders placed during the study period resulted in vaccination.

Conclusions

Active choice through the EHR was associated with a significant increase in influenza vaccination rates.

KEY WORDS: active choice, choice architecture, nudge, physician behavior, behavioral economics, electronic health record, influenza vaccination

INTRODUCTION

Influenza is a significant cause of illness, hospitalization, and mortality in the United States.1 , 2 Vaccination has been shown to be an effective method for reducing the burden of disease.3 , 4 Despite these benefits, however, each year more than 50% of adults in the United States do not receive the influenza vaccine, and this rate has not improved in nearly a decade.5 , 6 New strategies may therefore be needed to address this gap.

The growing adoption of the electronic health record (EHR) may provide new opportunities for developing scalable methods to increase the use of preventive services.7 While clinical decision support through the EHR has been shown to improve clinician performance on process measures, there is less evidence of its impact on patient outcomes.8 – 11

Insights from behavioral economics could offer new approaches to design choices within the EHR to improve clinician and patient decisions.12 – 15 For example, our prior work found that using “active choice,” a method that requires clinicians to accept or decline an order for colonoscopy and mammography, significantly increased order rates for these screening tests.16 In this study, we evaluated the impact of an active choice intervention using the EHR to increase influenza vaccination rates. Rather than the standard approach of relying on clinicians to recognize the need for vaccination and opt into placing an order, the EHR confirmed patient eligibility during the clinic visit and used an alert to ask the physician and their medical assistant to actively choose to “accept” or “cancel” an order for influenza vaccination.

METHODS

This study was approved by the University of Pennsylvania institutional review board. Informed consent was waived because it was infeasible given the retrospective study design and the minimal risk posed to patients.

Study Sample

The sample comprised patients with a clinic visit during influenza season (September 1 to March 31) at one of three similar internal medicine practices at the University of Pennsylvania Health System between September 2010 and March 2013. All three sites were academic teaching practices located within 0.3 miles of one another in Philadelphia, Pennsylvania. To ensure that we evaluated patients who were due for the influenza vaccine, we excluded patients who met either of the following criteria during the current flu season: 1) the EHR noted that the patient had already received the influenza vaccine at the practice or elsewhere (based on health maintenance data from EPIC, the outpatient EHR); or 2) a health insurance claim for the influenza vaccine was identified.
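The two exclusion rules amount to a simple filter over patients with a flu-season visit. This is a minimal sketch: the `PatientSeason` record and its field names are hypothetical stand-ins for the EPIC health maintenance data and billing claims, and the sketch assumes both flags have already been derived for the current flu season.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PatientSeason:
    """One patient with a clinic visit in a given flu season (hypothetical schema)."""
    patient_id: str
    ehr_vaccine_recorded: bool  # health maintenance data show vaccination this season
    vaccine_claim_found: bool   # insurance claim for influenza vaccine this season


def is_due(p: PatientSeason) -> bool:
    """A patient stays in the sample only if neither exclusion applies."""
    return not (p.ehr_vaccine_recorded or p.vaccine_claim_found)


def study_sample(patients: List[PatientSeason]) -> List[PatientSeason]:
    """Apply both exclusion rules to the visit population."""
    return [p for p in patients if is_due(p)]


cohort = [
    PatientSeason("A", ehr_vaccine_recorded=False, vaccine_claim_found=False),
    PatientSeason("B", ehr_vaccine_recorded=True, vaccine_claim_found=False),
    PatientSeason("C", ehr_vaccine_recorded=False, vaccine_claim_found=True),
]
eligible_ids = [p.patient_id for p in study_sample(cohort)]  # only "A" remains
```

Either source of evidence (EHR documentation or a claim) is sufficient to exclude a patient, which is why the rule is an OR of the two flags.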

Intervention

Prior to the intervention, providers at all three sites had to manually check whether a patient was due for the vaccine and then place an order for it. On February 15, 2012, one of the clinics changed its EHR settings to add a best practice alert in EPIC. The alert confirmed the patient’s eligibility for the vaccine during the clinic visit and, when a provider opened that patient’s record, prompted the provider to actively choose to “accept” or “cancel” an order for the influenza vaccine. The alert was delivered to medical assistants at patient check-in; they could pend an order for the physician to review and potentially sign. Regardless of the medical assistant’s action, physicians also received the alert when first opening the patient’s chart and could either place their own order or approve the order pended by the medical assistant. Because the alert was delivered independently to the physician and the medical assistant, in some cases both entered an order; the physician would then sign one of the orders and cancel the other.
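The alert flow described above can be modeled as a small decision function for a single visit. This is a hypothetical sketch, not EPIC's best practice alert configuration: `Choice`, `VisitOutcome`, and `active_choice_visit` are invented names, and the sketch ignores manual ordering for patients who do not trigger the alert.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Choice(Enum):
    ACCEPT = "accept"
    CANCEL = "cancel"


@dataclass
class VisitOutcome:
    alert_fired: bool
    signed_orders: int         # orders the physician ultimately signs (0 or 1)
    cancelled_duplicates: int  # duplicate orders cancelled by the physician


def active_choice_visit(eligible: bool,
                        ma_choice: Optional[Choice] = None,
                        md_choice: Optional[Choice] = None,
                        md_signs_pended: bool = False) -> VisitOutcome:
    """Hypothetical model of the active choice alert for one visit.

    The medical assistant (MA) sees the alert at check-in and may pend an order;
    the physician (MD) sees it independently when first opening the chart.
    """
    if not eligible:
        # No alert fires; manual (opt-in) ordering is not modeled here.
        return VisitOutcome(alert_fired=False, signed_orders=0, cancelled_duplicates=0)

    pended = ma_choice is Choice.ACCEPT     # MA pended an order for review
    own_order = md_choice is Choice.ACCEPT  # MD entered an order directly

    if own_order and pended:
        # Both entered orders: the physician signs one and cancels the other.
        return VisitOutcome(True, 1, 1)
    if own_order or (pended and md_signs_pended):
        return VisitOutcome(True, 1, 0)
    return VisitOutcome(True, 0, 0)


both = active_choice_visit(True, Choice.ACCEPT, Choice.ACCEPT)
approved = active_choice_visit(True, Choice.ACCEPT, None, md_signs_pended=True)
declined = active_choice_visit(True, Choice.CANCEL, Choice.CANCEL)
```

The key design point of active choice is that an eligible visit always produces an explicit accept or cancel decision, rather than relying on the clinician to remember to opt in.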

Main Outcome Measures

The primary outcome measure was the percentage of patients eligible for the influenza vaccine who had an order for it placed on the day of the clinic visit.
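The primary outcome can be computed as a patient-level proportion. This sketch assumes, since the text does not specify it, that a patient with several visits in one season counts as ordered if an order was placed on any of those visit dates; the dictionary inputs are hypothetical.

```python
from datetime import date
from typing import Dict, List, Set


def order_rate(visits: Dict[str, List[date]],
               orders: Dict[str, Set[date]]) -> float:
    """Percent of eligible patients with a vaccine order dated on a visit day.

    Assumption: a patient with several visits in a season counts as ordered
    if an order was placed on any of those visit dates.
    """
    if not visits:
        raise ValueError("no eligible patients")
    ordered = sum(
        1 for pid, days in visits.items()
        if any(d in orders.get(pid, set()) for d in days)
    )
    return 100.0 * ordered / len(visits)


visits = {
    "A": [date(2012, 10, 1)],
    "B": [date(2012, 11, 5), date(2013, 1, 7)],
    "C": [date(2012, 12, 3)],
    "D": [date(2013, 2, 4)],
}
orders = {
    "A": {date(2012, 10, 1)},   # same-day order: counts
    "B": {date(2013, 1, 7)},    # order on second visit: counts
    "C": {date(2012, 12, 4)},   # next-day order: does not count
}
rate = order_rate(visits, orders)  # 2 of 4 patients
```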

Data

Clarity, an EPIC reporting database, was used to obtain data on patient demographics and comorbidities; clinic visits, including visit type and whether the provider was the patient’s primary care physician; and influenza vaccine orders. Health insurance claims were obtained from the billing system at the University of Pennsylvania Health System. Data on Medicare or Medicaid insurance were missing for some patients during the pre-intervention period because the health system changed the method by which it captured these data. These patients were coded as having other insurance.
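Two data-preparation rules appear in the paper: missing Medicare or Medicaid data were coded as other insurance (stated here), and covariates were taken from each patient's first visit within each year (Table 1 footnote). A minimal sketch of both, assuming a hypothetical `Visit` record; treating any unrecognized payer value as other/self-insured is also an assumption.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List, Optional, Tuple

KNOWN_INSURANCE = {"Private", "Medicare", "Medicaid"}


@dataclass
class Visit:
    patient_id: str
    season: str                 # e.g. "2012-13" (hypothetical label)
    visit_date: date
    insurance: Optional[str]    # None when the payer field is missing


def code_insurance(raw: Optional[str]) -> str:
    """Missing or unrecognized payer values fall into other/self-insured."""
    return raw if raw in KNOWN_INSURANCE else "Other/self-insured"


def first_visits(visits: List[Visit]) -> Dict[Tuple[str, str], Visit]:
    """Keep each patient's earliest visit within each season."""
    first: Dict[Tuple[str, str], Visit] = {}
    for v in visits:
        key = (v.patient_id, v.season)
        if key not in first or v.visit_date < first[key].visit_date:
            first[key] = v
    return first


vs = [
    Visit("A", "2012-13", date(2012, 11, 2), "Medicare"),
    Visit("A", "2012-13", date(2012, 9, 20), None),
    Visit("B", "2012-13", date(2013, 1, 5), "Private"),
]
fv = first_visits(vs)
a_payer = code_insurance(fv[("A", "2012-13")].insurance)
```

Note that because covariates come from the first visit, patient A is coded as other/self-insured even though a later visit records Medicare; this is one way the missing-data issue can propagate into Table 1.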

Statistical Analysis

We used a multiple time series research design,17 , 18 also known as difference-in-differences, to compare within-practice pre- and post-intervention outcomes between the intervention practice and the two control practices. While some opportunity for residual confounding remains, this approach reduces potential bias from unmeasured variables arising from three sources.18 – 20 First, a difference between groups that is stable over time cannot be mistaken for an effect of the intervention, because practice site fixed effects are used to compare each practice with itself before and after the intervention. Second, changes that affect both groups similarly over time, such as technological improvements or pay-for-performance initiatives, cannot be mistaken for an effect, because the regression models use monthly time fixed effects. Third, if the patient mix is changing differently among practices, and if these changes are accurately reflected in the measured risk factors, this cannot be mistaken for an effect of the intervention, because the regression models adjust for these measured risk factors.
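In its simplest two-group, two-period form, the design compares the pre-to-post change at the intervention practice (I) with the same change at the control practices (C), where $\bar{Y}$ denotes the vaccination order rate. A stable gap between practices cancels within each bracket, and a shock common to both groups cancels between the brackets. The notation below is ours, and the study's regression replaces these four means with practice and monthly time fixed effects plus patient-level covariates.

```latex
\widehat{\mathrm{DiD}}
  = \bigl(\bar{Y}_{I,\mathrm{post}} - \bar{Y}_{I,\mathrm{pre}}\bigr)
  - \bigl(\bar{Y}_{C,\mathrm{post}} - \bar{Y}_{C,\mathrm{pre}}\bigr)
```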

Similar to prior work,13 , 16 , 21 a multivariable logistic regression model was fit to the binary outcome measure (vaccination ordered), using the patient as the unit of analysis and adjusting for demographics (age, gender, race/ethnicity), comorbidities (using the Charlson Comorbidity Index, which predicts 10-year mortality),22 insurance type, whether the visit was with the primary care provider, and visit type (new, return, reassign provider, other). The model compared the post-intervention influenza season (September 2012 to March 2013) with the influenza seasons in the prior 2 years, adjusting for calendar month, year, and practice site fixed effects. Standard errors were adjusted to account for clustering by patient.23 , 24 To estimate the mean effect of the intervention in the post-intervention period, we exponentiated the mean of the monthly interaction-term log odds ratios.13 , 16 , 21 , 25 , 26 To obtain the adjusted difference in the percentage of patients with a vaccination order, along with 95% confidence intervals, we used a bootstrap procedure that resampled patients.27 , 28 For all measures, a test of controls was conducted using monthly data from the pre-intervention period to test the null hypothesis of parallel trends between the intervention and control practices. Two-sided hypothesis tests used a significance level of 0.05; analyses were conducted using SAS version 9.4 software (SAS Institute Inc., Cary, NC).
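Two of the estimation steps above can be sketched in a few lines: averaging the monthly interaction log odds ratios before exponentiating (which equals the geometric mean of the monthly odds ratios), and a bootstrap that resamples whole patients. This is an illustration, not the authors' SAS code; the percentile interval and the toy `statistic` are assumptions, and in the study the statistic would involve refitting the adjusted model on each resample.

```python
import math
import random
from statistics import mean
from typing import Callable, List, Sequence, Tuple


def mean_effect_or(monthly_log_ors: Sequence[float]) -> float:
    """Exponentiate the mean of the monthly interaction-term log odds ratios.

    Equivalent to the geometric mean of the monthly odds ratios.
    """
    return math.exp(mean(monthly_log_ors))


def patient_bootstrap_ci(patient_ids: List[str],
                         statistic: Callable[[List[str]], float],
                         n_boot: int = 1000,
                         alpha: float = 0.05,
                         seed: int = 0) -> Tuple[float, float]:
    """Percentile bootstrap CI that resamples patients with replacement.

    `statistic` receives a resampled list of patient IDs (duplicates allowed),
    so all records belonging to a sampled patient travel together.
    """
    rng = random.Random(seed)
    n = len(patient_ids)
    stats = sorted(
        statistic([rng.choice(patient_ids) for _ in range(n)])
        for _ in range(n_boot)
    )
    k = int(round(alpha / 2 * n_boot))  # draws trimmed from each tail
    return stats[k], stats[n_boot - 1 - k]


# Toy usage: 200 hypothetical patients, 25% with an order.
ordered = {f"p{i}": 1 if i % 4 == 0 else 0 for i in range(200)}


def share_ordered(ids: List[str]) -> float:
    return mean(ordered[i] for i in ids)


lo, hi = patient_bootstrap_ci(list(ordered), share_ordered, n_boot=500)
```

Resampling patients rather than visits respects the same clustering that motivates the patient-clustered standard errors.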

RESULTS

The sample comprised 45,926 patients with a mean age of 50.2 years, of which 62.9% were women, 35.9% were white, and 54.4% were black (Table 1). More than 99.9% (9938/9941) of vaccination orders placed during the study period resulted in the patient receiving the vaccination.

Table 1.

Sample Characteristics

| Characteristic | Intervention practice: Pre year 2 (Sept-10 to Mar-11) | Intervention practice: Pre year 1 (Sept-11 to Mar-12) | Intervention practice: Post year (Sept-12 to Mar-13) | Control practices*: Pre year 2 (Sept-10 to Mar-11) | Control practices*: Pre year 1 (Sept-11 to Mar-12) | Control practices*: Post year (Sept-12 to Mar-13) |
|---|---|---|---|---|---|---|
| Patients, n | 6541 | 5750 | 4958 | 10,478 | 9638 | 8561 |
| Clinic visits, n | 10,439 | 9187 | 6834 | 15,723 | 14,162 | 11,579 |
| Age†, mean years (SD) | 49.9 (16.8) | 49.2 (16.8) | 49.6 (16.9) | 50.3 (16.5) | 50.5 (16.4) | 50.9 (16.5) |
| Female gender, % | 66.7% | 66.7% | 66.0% | 61.4% | 60.8% | 59.8% |
| Race/ethnicity, % | | | | | | |
| Non-Hispanic white | 21.1% | 21.8% | 21.7% | 45.2% | 45.3% | 42.9% |
| Non-Hispanic black | 72.3% | 70.2% | 70.2% | 44.1% | 43.6% | 45.5% |
| Hispanic | 1.1% | 1.4% | 1.6% | 1.4% | 1.7% | 1.4% |
| Other | 5.5% | 6.6% | 6.5% | 9.2% | 9.4% | 10.2% |
| Insurance type†, % | | | | | | |
| Private | 53.4% | 56.4% | 54.2% | 55.9% | 62.2% | 60.9% |
| Medicare‡ | 0.4% | 17.4% | 24.7% | 0.4% | 16.5% | 23.0% |
| Medicaid‡ | 8.1% | 15.9% | 19.0% | 5.2% | 10.7% | 13.4% |
| Other/self-insured | 38.1% | 10.3% | 2.1% | 38.5% | 10.7% | 2.7% |
| Charlson score†, median (IQR) | 0 (0, 1) | 0 (0, 1) | 0 (0, 1) | 0 (0, 1) | 0 (0, 1) | 0 (0, 1) |
| Clinic visit type†, % | | | | | | |
| New patient | 10.5% | 12.6% | 11.5% | 19.2% | 18.8% | 20.0% |
| Return patient | 67.6% | 68.0% | 69.0% | 67.1% | 63.9% | 63.8% |
| Reassign patient provider | 13.4% | 6.7% | 6.7% | 4.7% | 4.3% | 4.0% |
| Other visit type | 8.5% | 12.6% | 12.9% | 9.0% | 13.1% | 12.2% |
| Clinic visit PCP status†, % | | | | | | |
| Yes with PCP | 58.9% | 67.4% | 66.0% | 52.6% | 61.9% | 62.8% |
| No | 29.2% | 31.0% | 32.8% | 23.7% | 35.9% | 36.4% |
| PCP not assigned | 11.9% | 1.6% | 0.9% | 23.6% | 2.2% | 0.8% |

Abbreviations: Pre, pre-intervention; Post, post-intervention; PCP, primary care physician; IQR, interquartile range

*Control practices represent two similar internal medicine clinics located less than 0.3 miles from the intervention internal medicine clinic.

†Age, insurance type, Charlson comorbidity score, clinic visit type, and clinic visit PCP status are based on the first visit for each patient within each year.

‡Medicare and Medicaid data in pre-intervention year 1 were often not available, and were classified as other/self-insured.

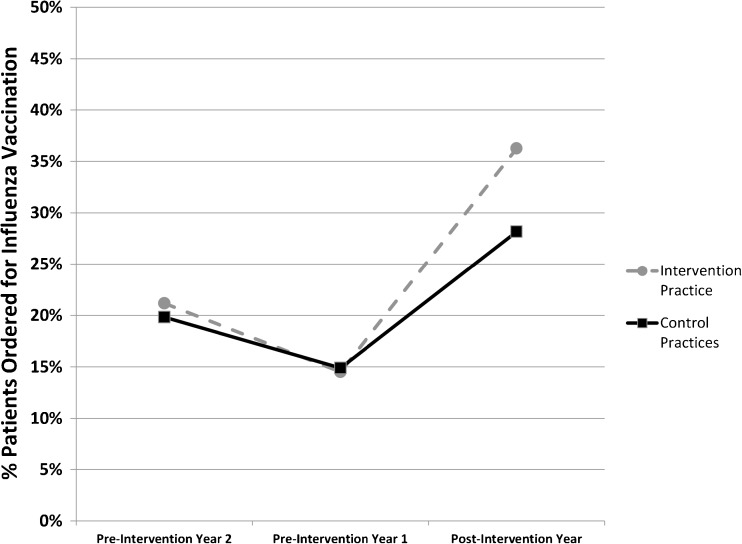

From pre-intervention year 2 to pre-intervention year 1, the vaccination order rate in the control practices declined from 19.8% to 14.9% (Fig. 1). During the same period, the vaccination order rate in the intervention practice also declined, from 21.2% to 14.5%. A test of controls for the pre-intervention period could not reject the null hypothesis of parallel trends between the intervention and control groups (odds ratio [OR]: 0.98, 95% confidence interval [CI]: 0.87–1.10, P = 0.70).
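As a consistency check on the test of controls, the standard error and P value can be back-calculated from the reported OR and CI. This sketch assumes a Wald interval that is symmetric on the log-odds scale, which the paper does not state; because the inputs are rounded, the recovered P is close to, but not exactly, the reported 0.70.

```python
import math
from statistics import NormalDist


def wald_from_or_ci(or_point: float, ci_low: float, ci_high: float,
                    level: float = 0.95):
    """Recover SE(log OR), z, and two-sided P from an OR and its CI.

    Assumes a Wald interval that is symmetric on the log-odds scale.
    """
    z_crit = NormalDist().inv_cdf(1 - (1 - level) / 2)  # about 1.96
    se = (math.log(ci_high) - math.log(ci_low)) / (2 * z_crit)
    z = math.log(or_point) / se
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return se, z, p


# Test of controls reported in the text: OR 0.98 (95% CI, 0.87-1.10)
se, z, p = wald_from_or_ci(0.98, 0.87, 1.10)
print(f"SE={se:.3f}, z={z:.2f}, P={p:.2f}")
```

A CI that spans 1 and a P well above 0.05 both reflect the same conclusion: the pre-intervention trends are not detectably different.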

Figure 1.

Influenza vaccination order rates for the intervention and control practices before and after active choice implementation. Percentage of patients with an order placed for the influenza vaccination at the intervention practice (gray dashed line) and the two control practices (black solid line) for the following influenza seasons: pre-intervention year 2 (Sept 2010 to March 2011), pre-intervention year 1 (Sept 2011 to March 2012), and post-intervention year (Sept 2012 to March 2013).

In the post-intervention year, the vaccination order rate increased to 28.2% in the control practices and 36.3% in the intervention practice. In the adjusted difference-in-difference model, compared to the control group over time, the intervention practice had a significant increase in vaccination order rates (6.6 percentage points; 95% CI, 5.1–8.1; P < 0.001), representing a 37.3% relative increase compared to the pre-intervention period (Fig. 1).

DISCUSSION

Our findings indicate that the active choice intervention in this study was associated with a significant increase in influenza vaccination rates when compared to a control group over time. This suggests that choice architecture within the EHR could be used more broadly as a scalable approach for optimizing medical decision-making and improving influenza vaccination rates.

Seasonal trends in influenza vaccination rates may be related to the severity of influenza activity in the respective years. Based on data from the Centers for Disease Control and Prevention, influenza activity was low in the pre-intervention years in our study, with the 2011–2012 season having lower activity than the 2010–2011 season, potentially explaining the decline in vaccination rates.29 Compared to the pre-intervention years, we observed increased vaccination rates for both the control and intervention practices during the 2012–2013 influenza season. This may reflect a response to increased influenza activity during the 2012–2013 season.29 A strength of our study is the difference-in-differences design, which limits the extent to which external factors that affect all clinics similarly can bias the estimated impact of the intervention.17 – 20

Our findings expand our understanding of how choice architecture within the EHR can be used to increase influenza vaccination rates, and they suggest several ways in which this approach may affect physician and patient behavior. First, in prior work, we also found that an active choice intervention led to higher rates of ordering colonoscopy and mammography testing for eligible patients (∼12 percentage-point increase).16 However, while colonoscopy completion rates increased by 3.5 percentage points, mammography completion rates were unchanged. In the present study, we found that nearly all orders resulted in actual vaccination. This may be because the ordering clinician has more control over the administration of the service, since the vaccine can be given during the office visit. Interventions that occur at the time of order entry may therefore be more impactful for services that can be completed or coordinated within the same clinic visit.

Second, a recent systematic review evaluating 57 clinical trials focused on influenza vaccination found that many interventions were focused on reminding either patients or physicians about the importance of vaccination.30 However, many of the patient-focused interventions were more labor-intensive, such as mailing letters to patients or having a pharmacist or nurse call them. Provider-focused interventions included financial incentives, physical reminders through posters in the clinic or postcards in the mail, and regular education sessions. The EHR is potentially a more scalable and automated approach, reducing complexity and administrative burden. For example, Fiks and colleagues conducted a randomized trial of EHR-based reminders in 20 pediatric practices and found that the intervention led to higher rates of influenza vaccination among patients with asthma.31 However, there is growing evidence that too many EHR-based reminders can cause alert fatigue, potentially reducing the impact of these interventions over time.32 – 35 Best practice alerts such as those used in this study not only act as reminders, but have the added feature of allowing the provider to quickly place an order. A study by Ledwich and colleagues found that best practice alerts in two rheumatology clinics led to higher rates of influenza and pneumococcal vaccinations.36 However, this study did not have a control group for comparison. Our study leveraged best practice alerts and compared changes across two control practices over time.

Third, while active choice was used in our study, there is evidence that other forms of choice architecture, such as changing default options, can achieve significant increases in influenza vaccination rates of similar magnitude. Dexter and colleagues randomly assigned inpatient physician teams to receive a reminder about patient eligibility for influenza vaccination or to have a standing order placed (opt-out process).37 Physician reminders led to a 30% vaccination rate, while the opt-out process led to a higher vaccination rate of 42%. In another study, Chapman and colleagues randomly assigned university employees to either an opt-in process in which vaccination appointments needed to be scheduled, or an opt-out process in which appointments for vaccination were already scheduled but could be changed.38 The authors found similar differences in vaccination rates, with 33% vaccination in the opt-in group compared to 45% vaccination in the opt-out group. In prior work, we also found that changing from an opt-in to an opt-out process for generic substitution can increase generic prescription rates.13 , 14 Therefore, it may be important to consider various approaches to changing choice architecture and how they affect behavior. In some settings, changing default options is not possible, and other forms of interventions may be more appropriate. For example, two randomized controlled trials have shown that social comparison feedback to physicians can reduce unnecessary antibiotic prescribing.15 , 39 , 40

This study is subject to limitations. First, any observational study is susceptible to unmeasured confounders. However, by comparing outcomes over time between the intervention and control practices, potential bias from unmeasured confounding is reduced. Second, these findings are from a small number of practices within a single health system, which may limit generalizability to other settings. Third, we were unable to assess relative differences in effects between physicians (who could place and sign orders) and medical assistants (who could pend orders for the physician to review and sign). Fourth, the intervention began shortly before the end of pre-intervention year 1, which may conservatively bias our results towards the null. Fifth, we did not evaluate alert fatigue, and longer-term evaluations are needed to evaluate sustainability of the effect of the intervention over time.

In conclusion, compared to a control group over time, the active choice intervention in the EHR was associated with a significant increase in influenza vaccination rates. Changing the manner in which choices are offered and displayed in the EHR may be an effective, scalable approach that could be used to increase the rates of influenza vaccination and other preventive services more broadly.

Acknowledgements

This study was funded by a grant from the Leonard Davis Institute of Health Economics at the University of Pennsylvania to Dr. Patel and a grant from the National Institute on Aging (P30AG034546) through the LDI Center for Health Incentives and Behavioral Economics to Dr. Volpp. Dr. Patel had full access to all the data in the study and takes responsibility for the integrity of the data and the accuracy of the data analysis.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Compliance with Ethical Standards

Conflict of Interest

Dr. Patel is a principal at Catalyst Health, a behavior change and technology consulting firm. Dr. Volpp is a principal at VAL Health, a behavioral economics consulting firm. Dr. Volpp has also received consulting income from CVS Caremark and research funding from Humana, CVS Caremark, Discovery (South Africa), Hawaii Medical Services Association, and Merck, none of which is related to the work described in this manuscript. All other authors declare no conflict of interest.

References

1. Thompson WW, Shay DK, Weintraub E, Brammer L, Bridges CB, Cox NJ, Fukuda K. Influenza-associated hospitalizations in the United States. JAMA. 2004;292(11):1333–40. doi: 10.1001/jama.292.11.1333.

2. Disease Burden of Influenza. Available online at: http://www.cdc.gov/flu/about/disease/burden.htm. Accessed September 15, 2016.

3. Bridges CB, Thompson WW, Meltzer MI, Reeve GR, Talamonti WJ, Cox NJ, Lilac HA, Hall H, Klimov A, Fukuda K. Effectiveness and cost-benefit of influenza vaccination of healthy working adults: a randomized controlled trial. JAMA. 2000;284(13):1655–63. doi: 10.1001/jama.284.13.1655.

4. Nichol KL, Wuorenma J, von Sternberg T. Benefits of influenza vaccination for low-, intermediate-, and high-risk senior citizens. Arch Intern Med. 1998;158(16):1769–76. doi: 10.1001/archinte.158.16.1769.

5. FluVaxView. Centers for Disease Control and Prevention. Available online at: http://www.cdc.gov/flu/fluvaxview/. Accessed September 15, 2016.

6. Fewer Than Half of Americans Report Having Gotten A Flu Vaccine this Season. Centers for Disease Control and Prevention. Available online at: http://www.cdc.gov/flu/news/half-of-americans-received-flu-vaccine.htm. Accessed September 15, 2016.

7. Use and Characteristics of Electronic Health Record Systems Among Office-based Physician Practices: United States, 2001–2013. National Center for Health Statistics. Centers for Disease Control and Prevention. Available online at: http://www.cdc.gov/nchs/products/databriefs/db236.htm. Accessed September 15, 2016.

8. Hunt DL, Haynes RB, Hanna SE, Smith K. Effects of computer-based clinical decision support systems on physician performance and patient outcomes: a systematic review. JAMA. 1998;280(15):1339–46. doi: 10.1001/jama.280.15.1339.

9. Garg AX, Adhikari NK, McDonald H, Rosas-Arellano MP, Devereaux PJ, Beyene J, Sam J, Haynes RB. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review. JAMA. 2005;293(10):1223–38. doi: 10.1001/jama.293.10.1223.

10. Souza NM, Sebaldt RJ, Mackay JA, Prorok JC, Weise-Kelly L, Navarro T, Wilczynski NL, Haynes RB, CCDSS Systematic Review Team. Computerized clinical decision support systems for primary preventive care: a decision-maker-researcher partnership systematic review of effects on process of care and patient outcomes. Implement Sci. 2011;6:87. doi: 10.1186/1748-5908-6-87.

11. Bright TJ, Wong A, Dhurjati R, Bristow E, Bastian L, Coeytaux RR, Samsa G, Hasselblad V, Williams JW, Musty MD, Wing L, Kendrick AS, Sanders GD, Lobach D. Effect of clinical decision-support systems: a systematic review. Ann Intern Med. 2012;157(1):29–43. doi: 10.7326/0003-4819-157-1-201207030-00450.

12. Patel MS, Volpp KG. Leveraging insights from behavioral economics to increase the value of health-care service provision. J Gen Intern Med. 2012;27:1544–7. doi: 10.1007/s11606-012-2050-4.

13. Patel MS, Day S, Small DS, Howell JT, Lautenbach GL, Nierman EH, Volpp KG. Using default options within the electronic health record to increase the prescribing of generic-equivalent medications: a quasi-experimental study. Ann Intern Med. 2014;161:S44–52. doi: 10.7326/M13-3001.

14. Patel MS, Day SC, Halpern SD, Hanson CW, Martinez JR, Honeywell S, Volpp KG. Change in generic medication prescription rates after health system-wide redesign of default options within the electronic health record. JAMA Intern Med. 2016;176(8):847–8. doi: 10.1001/jamainternmed.2016.1691.

15. Meeker D, Linder JA, Fox CR, Friedberg MW, Persell SD, Goldstein NJ, Knight TK, Hay JW, Doctor JN. Effect of behavioral interventions on inappropriate antibiotic prescribing among primary care practices: a randomized clinical trial. JAMA. 2016;315(9):562–70. doi: 10.1001/jama.2016.0275.

16. Patel MS, Volpp KG, Small DS, Wynn C, Zhu J, Yang L, Honeywell S, Day SC. Using active choice within the electronic health record to increase physician ordering and patient completion of high-value cancer screening tests. Healthcare J Deliv Sci Innov. 2016;4:340–5. doi: 10.1016/j.hjdsi.2016.04.005.

17. Campbell DT, Stanley JC. Experimental and Quasi-Experimental Designs for Research. Dallas, TX: Houghton Mifflin Co; 1963.

18. Dimick JB, Ryan AM. Methods for evaluating changes in health care policy: the difference-in-differences approach. JAMA. 2014;312(22):2401–2. doi: 10.1001/jama.2014.16153.

19. Rosenbaum PR. Stability in the absence of treatment. J Am Stat Assoc. 2001;96:210–9. doi: 10.1198/016214501750333072.

20. Shadish WR, Cook TD, Campbell DT. Experimental and Quasi-Experimental Designs for Generalized Causal Inference. Boston, MA: Houghton-Mifflin; 2002.

21. Patel MS, Patel N, Small DS, Rosin R, Rohrbach JI, Stromberg N, Hanson CW, Asch DA. Change in length of stay and readmissions among hospitalized medical patients after inpatient medicine service adoption of mobile secure text messaging. J Gen Intern Med. 2016;31(8):863–70. doi: 10.1007/s11606-016-3673-7.

22. Charlson ME, Pompei P, Ales KL, MacKenzie CR. A new method of classifying prognostic comorbidity in longitudinal studies: development and validation. J Chronic Dis. 1987;40(5):373–83. doi: 10.1016/0021-9681(87)90171-8.

23. Rogers WH. Regression standard errors in clustered samples. Stata Tech Bull Rep. 1993;3:88–94.

24. Williams RL. A note on robust variance estimation for cluster-correlated data. Biometrics. 2000;56:645–6. doi: 10.1111/j.0006-341X.2000.00645.x.

25. Newman SC. Biostatistical Methods in Epidemiology. New York: Wiley; 2001. p. 136.

26. Agresti A. An Introduction to Categorical Data Analysis. New York: Wiley; 2007. pp. 108–9.

27. Efron B, Tibshirani RJ. An Introduction to the Bootstrap. New York, NY: Chapman & Hall; 1993.

28. Davison AC, Hinkley DV. Bootstrap Methods and Their Application. Cambridge, England: Cambridge University Press; 1997.

29. Centers for Disease Control and Prevention. Past Flu Seasons. Available online at: https://www.cdc.gov/flu/pastseasons/index.htm. Accessed January 15, 2017.

30. Thomas RE, Lorenzetti DL. Interventions to increase influenza vaccination rates of those 60 years and older in the community. Cochrane Database Syst Rev. 2014;7:CD005188. doi: 10.1002/14651858.CD005188.pub3.

31. Fiks AG, Hunter KF, Localio AR, Grundmeier RW, Bryant-Stephens T, Luberti AA, et al. Impact of electronic health record-based alerts on influenza vaccination for children with asthma. Pediatrics. 2009;124(1):159–69. doi: 10.1542/peds.2008-2823.

32. Black AD, Car J, Pagliari C, Anandan C, Cresswell K, Bokun T, et al. The impact of eHealth on the quality and safety of health care: a systematic overview. PLoS Med. 2011;8:e1000387. doi: 10.1371/journal.pmed.1000387.

33. van der Sijs H, Aarts J, Vulto A, Berg M. Overriding of drug safety alerts in computerized physician order entry. J Am Med Inform Assoc. 2006;13:138–47. doi: 10.1197/jamia.M1809.

34. Avery AJ, Savelyich BS, Sheikh A, Cantrill J, Morris CJ, Fernando B, et al. Identifying and establishing consensus on the most important safety features of GP computer systems: e-Delphi study. Inform Prim Care. 2005;13:3–12. doi: 10.14236/jhi.v13i1.575.

35. Phansalkar S, van der Sijs H, Tucker AD, Desai AA, Bell DS, Teich JM, Middleton B, Bates DW. Drug–drug interactions that should be non-interruptive in order to reduce alert fatigue in electronic health records. J Am Med Inform Assoc. 2013;20(3):489–93. doi: 10.1136/amiajnl-2012-001089.

36. Ledwich LJ, Harrington TM, Ayoub WT, Sartorius JA, Newman ED. Improving influenza and pneumococcal vaccination in rheumatology patients taking immunosuppressants using an electronic health record best practice alert. Arthritis Rheum. 2009;61(11):1505–10. doi: 10.1002/art.24873.

37. Dexter PR, Perkins SM, Maharry KS, Jones K, McDonald CJ. Inpatient computer-based standing orders vs physician reminders to increase influenza and pneumococcal vaccination rates: a randomized trial. JAMA. 2004;292(19):2366–71. doi: 10.1001/jama.292.19.2366.

38. Chapman GB, Li M, Colby H, Yoon H. Opting in vs opting out of influenza vaccination. JAMA. 2010;304(1):43–4. doi: 10.1001/jama.2010.892.

39. Gerber JS, Prasad PA, Fiks AG, Localio AR, Grundmeier RW, Bell LM, Wasserman RC, Keren R, Zaoutis TE. Effect of an outpatient antimicrobial stewardship intervention on broad-spectrum antibiotic prescribing by primary care pediatricians: a randomized trial. JAMA. 2013;309(22):2345–52. doi: 10.1001/jama.2013.6287.

40. Navathe AS, Emanuel EJ. Physician peer comparisons as a nonfinancial strategy to improve the value of care. JAMA. 2016;316(17):1759–60. doi: 10.1001/jama.2016.13739.