Abstract

Background

The vision of transforming health systems into learning health systems (LHSs) that rapidly and continuously transform knowledge into improved health outcomes at lower cost is generating increased interest in government agencies, health organizations, and health research communities. While existing initiatives demonstrate that different approaches can succeed in making the LHS vision a reality, they are too varied in their goals, focus, and scale to be reproduced without undue effort. Indeed, the structures necessary to effectively design and implement LHSs on a larger scale are lacking. In this paper, we propose the use of architectural frameworks to develop LHSs that adhere to a recognized vision while being adapted to their specific organizational context. Architectural frameworks are high-level descriptions of an organization as a system; they capture the structure of its main components at varied levels, the interrelationships among these components, and the principles that guide their evolution. Because these frameworks support the analysis of LHSs and allow their outcomes to be simulated, they act as pre-implementation decision-support tools that identify potential barriers and enablers of system development. They thus increase the chances of successful LHS deployment.

Discussion

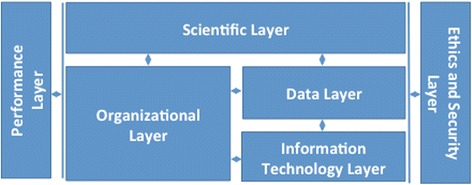

We present an architectural framework for LHSs that incorporates five dimensions (goals, scientific, social, technical, and ethical) commonly found in the LHS literature. The proposed architectural framework comprises six decision layers that model these dimensions. The performance layer models goals, the scientific layer models the scientific dimension, the organizational layer models the social dimension, the data layer and information technology layer model the technical dimension, and the ethics and security layer models the ethical dimension. We describe the types of decisions that must be made within each layer and identify methods to support decision-making.

Conclusion

In this paper, we outline a high-level architectural framework grounded in conceptual and empirical LHS literature. Applying this architectural framework can guide the development and implementation of new LHSs and the evolution of existing ones, as it allows for clear and critical understanding of the types of decisions that underlie LHS operations. Further research is required to assess and refine its generalizability and methods.

Keywords: Learning health system, Architectural framework, Pre-implementation, Decision-support tools

Background

Since the early 2000s, increased attention has focused on understanding, designing, and implementing learning health systems (LHSs) as a means to improve the quality, responsiveness, efficiency, and effectiveness of healthcare delivery. While various definitions of the LHS exist in the literature, the most authoritative source is the Institute of Medicine (IOM), which envisions “the development of a continuously learning health system in which science, informatics, incentives, and culture are aligned for continuous improvement and innovation, with best practices seamlessly embedded in the delivery process and new knowledge captured as an integral by-product of the delivery experience” [1]. The current operationalization of various LHS initiatives also demonstrates the important role that patient data plays in supporting continuous learning, improving decision-making [2–5], informing new research directions [6], and creating more efficient, effective, and safe systems [7].

A LHS strives to accelerate the generation and uptake of knowledge to support the provision of quality, cost-effective healthcare that improves patient outcomes [1, 3, 8, 9]. A LHS can be understood as a rapid-learning organizational system that quickly adapts to new clinical and research information about personalized treatments that are best for each patient and then supports the effective delivery of these treatments [10]. As such, LHSs incorporate continuous learning at the system, organizational, departmental, and individual levels, in cycles or loops moving from data to knowledge and then from knowledge to practice and back again. In single learning loops, information and feedback circulate to support the evaluation, management, and improvement of patient care [11, 12]. Dynamic LHS models are based on double-loop learning, whereby long-held assumptions about system-level values, norms, and policies are also challenged by questioning existing processes and procedures [13, 14]. However, in order to achieve truly continuous learning, it is necessary to arrive at triple-loop learning, wherein people understand the process by which they learn, and thus learn how to learn [15].

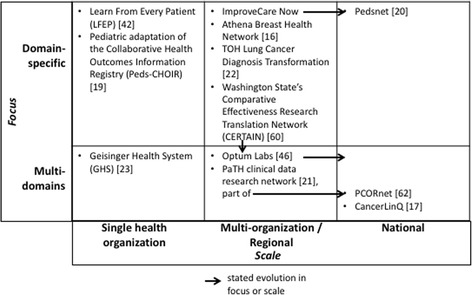

The IOM position paper and seminal literature primarily address national, all-encompassing LHSs. However, several recently proposed or adopted LHSs [16–21] show that these initiatives may vary in focus from domain specific (i.e., specific to a particular disease or concern such as lung cancer [22]) to multi-domain (i.e., spanning multiple diseases, for example, idiopathic pulmonary fibrosis, atrial fibrillation, and obesity [21]). They may also vary in scale, from a single healthcare organization, to multiple sites (often within a specific region or catchment area), to the national level. In Fig. 1, we categorize a sample of LHS initiatives to illustrate this variety. A given LHS may evolve, or aim to evolve, from domain specific to multi-domain, or from a local or regional scale to a national one.

Fig. 1.

Sample of current LHS initiatives categorized by focus and scale [16, 17, 19–23, 42, 46, 60, 62]

While the initiatives summarized in Fig. 1 reflect the IOM’s high-level LHS vision and goals, their respective designs are actually predicated on very specific sets of assumptions, needs, purposes, core elements, and decisions. This highlights the fact that there are multiple ways in which the IOM vision for LHSs can be implemented. However, their specificity renders them unsuitable for reproduction in other contexts [2]. Indeed, simply using the elements of existing LHSs to guide the development and deployment of a new LHS hinders thinking about alternative choices that may better achieve the goals of the people involved in designing and implementing it. These include physicians, administrators, information technology specialists, and patients, broadly referred to as stakeholders in the remainder of the article.

Higher level operational frameworks are emerging from the deployment of LHSs and the lessons learned therein [23]. Nevertheless, they do not yet provide support for the numerous decisions to be made when developing and implementing a LHS, for example, which measures should be used to assess the achievement of a LHS’s goals, or which governance model is most appropriate for a given LHS. Moreover, despite existing contributions towards the articulation of LHS architectures [24, 25], a standardized approach incorporating all LHS components has yet to be created. To address this gap, we propose a comprehensive LHS architectural framework based on the well-recognized Federal Enterprise Architecture Framework [26]. The proposed LHS architectural framework allows the definition of the structures necessary for implementing a LHS in a manner that promotes rapid and systematic integration of evidence-based knowledge [27]. Such tools provide pre-implementation decision-support that helps to identify potential barriers and thereby increase the chances of successful implementation [28]. They also address the need to balance standardization and adaptation to local contexts, a classic challenge in implementation science.

Our approach

A well-recognized approach for describing the structure and behavior of complex systems such as LHSs starts with creating their architectural framework. Such frameworks provide a holistic view of an organization as a system; they thus capture the structure of its main components at varied levels (including organizational and technical), the interrelationships among these components, and the principles that guide their evolution [28–30]. An architectural framework is not a detailed system design but rather a high-level blueprint of the system’s essential characteristics. Once an architectural framework has been defined for a particular type of system (such as LHSs), it can be used to guide the detailed design of specific systems (such as individual LHSs, each with its own context and purpose) through the development of models relevant to each component of the architectural framework. For example, business process models are commonly used to represent the core activities of an organization.

Using an architectural framework could guide people in a given health system context (for example, a group of physicians and nurses specialized in lung cancer care) to design, develop, and implement a LHS that both adheres to the larger LHS vision as defined by the IOM [1] and is adapted to their specific context. By using the framework, stakeholders can identify the various decisions that need to be made at each layer of a LHS, as well as the impacts of these decisions on the overall system. This would help to ensure that the resulting LHS initiative is context specific and fully addresses its purposes, needs, and goals. An architectural framework also supports the evolution of a LHS over time by offering a consistent framework within which stakeholders review the alignment of goals and objectives, the adaptation of the system to its changing environment, and the adjustment of processes and functions [12]. Using a consistent architectural framework addresses a LHS’s need to orchestrate human, organizational, procedural, data, and information technology components [22].

A number of architectural frameworks exist outside the healthcare domain, each reflecting the context in which it was developed and the purpose for which it was created [29]. The most commonly used are the Zachman framework for information systems [25], the Open Group Architecture Framework for enterprise information technology [31], and the Federal Enterprise Architecture Framework [26]. The Zachman framework serves as an organizational ontology, describing an organization’s levels through basic questions such as “why,” “who,” and “how.” The Open Group Architecture Framework for enterprise information technology, as its name implies, captures an organization’s information technology components. The Federal Enterprise Architecture Framework captures an organization’s or system’s human and technical components. It is the most complete of the three, combining the characteristics of the other two frameworks and enabling the alignment of multi-stakeholder goals within an organization’s structure and technical systems [32]. The Federal Enterprise Architecture Framework thus provides an ideal basis for LHS architectures situated in multi-professional health systems, such as hospitals or health maintenance organizations.

The Federal Enterprise Architecture Framework has been used to provide a standardized way to capture US government agencies’ IT and organizational resources so as to enable resource sharing and prevent duplication. Numerous organizations outside of government settings have since applied it for the same purpose [32]. It has thus been widely used and assessed through case studies and conceptual analysis [32, 33]. An investigation of its use within the US government has shown that its broad scope of application, combined with the obligation for agencies of varied nature to comply strictly with its guidelines, has created difficulties for its users and limited its potential benefits [33]. These limitations can, however, be addressed by ensuring that an architectural framework is understood and used as a means to inform, guide, and constrain decisions, rather than as an end in itself [33]. Accordingly, our approach to LHSs’ architectures addresses this challenge by promoting the use of the framework as a flexible, context-specific guide rather than as a prescriptive standard.

The Federal Enterprise Architecture Framework includes the architectural framework itself, named the Consolidated Reference Model, and an accompanying methodology. We focus here on the Consolidated Reference Model, which deconstructs an organization in terms of six decision layers: strategy, business, data, applications, infrastructure, and security. It recommends particular tools and artifacts within each layer that enable designers to develop more detailed plans, such as process models within the business layer. It also provides recommendations for ensuring that each layer’s models are developed in harmony with the other layers. A data model, for example, should capture the information needed to measure the achievement of goals identified in the performance layer. We propose here to adapt the Consolidated Reference Model such that it captures LHS dimensions identified in the literature. This results in a high-level framework that is both grounded in practice and able to address the challenge of implementing new LHSs across different contexts, foci, and scales. It also guides the evolution of existing LHSs.
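As a minimal sketch of this cross-layer alignment rule, the Python fragment below assumes that each performance-layer measure lists the data elements it is computed from, and that the data layer can be summarized as the set of elements it captures. The class, function, measure, and element names are hypothetical illustrations, not part of the Consolidated Reference Model.

```python
from dataclasses import dataclass, field


@dataclass
class Measure:
    """A performance-layer measure and the data elements needed to compute it."""
    name: str
    required_elements: set[str] = field(default_factory=set)


def unsupported_measures(measures: list[Measure], captured: set[str]) -> dict[str, set[str]]:
    """Map each measure to the required data elements missing from the data layer.

    Measures whose required elements are all captured are left out of the result.
    """
    gaps: dict[str, set[str]] = {}
    for measure in measures:
        missing = measure.required_elements - captured
        if missing:
            gaps[measure.name] = missing
    return gaps


# Hypothetical measures and data elements, for illustration only.
measures = [
    Measure("readmission_rate", {"patient_id", "admission_date", "discharge_date"}),
    Measure("cost_per_episode", {"episode_id", "billed_amount"}),
]
captured = {"patient_id", "admission_date", "discharge_date", "episode_id"}

print(unsupported_measures(measures, captured))
# {'cost_per_episode': {'billed_amount'}}
```

An empty result would indicate that, at least at this coarse level, every stated measure is backed by data the LHS intends to collect.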

Discussion

Here, we identify the five dimensions common to LHSs in the extant literature and then discuss the proposed architectural framework that captures these dimensions as an adaptation of the Consolidated Reference Model.

Dimensions of learning health systems

Seminal literature [7, 12, 34, 35] and existing LHS initiatives [16, 19, 20, 36] reveal five key dimensions that capture the nature of a LHS. Core elements within each dimension point to decisions that must be made as LHSs are developed and implemented.

The first dimension captures the goals pursued by a LHS. These vary from better decision support at the point of care to continuous quality improvement. The next three dimensions, which form the core of a well-functioning LHS [36], derive from the Collaborative Chronic Care Network (C3N) platform [37, 38]: the social dimension focuses on building a community; the technical dimension addresses data integration; and the scientific dimension enables learning, innovation, and discovery [37]. The fifth dimension, ethics, is critical for ensuring that a LHS pursues its learning and innovation activities in a manner that protects patients’ rights and privacy [39].

Goals dimension

The overarching goal driving the LHS vision is to provide cost-effective, safe, and high-quality care, leading to the improvement of patient health and other outcomes [1, 7]. The IOM has called for 90% of clinical decisions to be supported by timely and up-to-date clinical information and to reflect the best available evidence by 2020 [2]. To achieve this objective, LHSs should create virtuous circles of continuous learning and improvement through data sharing and the generation of evidence-based knowledge [6, 40]. Since LHSs aim to increase the speed with which research is translated into improved patient care, they are sometimes referred to as “rapid-learning health systems” [9]. Accordingly, a LHS should be able to accelerate all elements of the knowledge generation and adoption process, including the introduction of new drugs, comparative effectiveness research, discovery and implementation of best practices, and patient and physician decision support for shared decision-making [10].

Existing LHS initiatives tend to focus on some of these factors. For example, some LHSs concentrate on patient and physician decision support (e.g., Peds-CHOIR [19]), while others focus on conducting studies that enable improved pathways, practices, and guidelines over time (e.g., PEDSnet [20]). A LHS may also pursue several goals at once: the Athena Breast Health Network initiative, for example, seeks automated identification of at-risk patients, standardization of pathology reporting and recommendation practices, and improvement of care practices [16]. As far as we are aware, no existing LHS aims to achieve all identified LHS goals. While this does not diminish the important and tangible benefits that existing LHSs provide, it does highlight the need to allow each LHS to pursue its own set of goals in line with its particular focus, scale, and evolution.

Social dimension

The networks of people and institutions that constitute a LHS must be considered as an integral component of that system, not just as passive users of its digital infrastructure [3]. An appropriate culture of transparency, collaboration and teamwork, innovation, and continuous learning must exist [15, 41, 42]. Elements necessary to generate such a culture include governance and leadership principles, appropriate decision-making processes, alignment of stakeholder goals (patients, clinicians, administrators, researchers), and requisite expertise (including clinical and analytic) [6, 37]. The resulting social dimension can take different forms, such as a matrix organization with distributed responsibilities and multi-site teams (Athena Breast Health Network [16]); a community of learning engaged in various activities like monthly teleconferences and learning sessions (PEDSnet [20]); or a centralized service model wherein one stakeholder is responsible for data analysis, quality improvement and assurance, and care coordination services (CancerLinQ [17]).

Technical dimension

The digital infrastructure supporting a LHS is critical to empowering the social dimension by driving innovation across the healthcare ecosystem. It is, therefore, essential for the successful fulfillment of LHS goals [3]. At the heart of this infrastructure lies reliable and analyzable health data [6]. Current LHSs show that data can originate from various sources, including electronic health records (EHR), patient entries, and associated healthcare information systems; registries using pre-specified and system-specific data fields filled by physicians; or surveys given to consenting patients participating in a research project. Although these sources can be integrated, existing domain-specific LHSs tend to use focused data repositories (e.g., LFPE [42], Peds-CHOIR [19]), while multi-domain LHSs are more likely to use full-EHR entries (e.g., CancerLinQ [17], PaTH [21]). While an infrastructure could be created for a specific source and type of data, an extensible architectural framework should make use of recognized interoperable standards for defining, exchanging, and synchronizing healthcare data [43].

Another element within the technical dimension is data lifecycle management. Following the chain of evidence from data collection through data transformation to consumption can be challenging, given the diversity of stakeholders involved in each step [44]. The ways in which data objects and the end-to-end data lifecycle are handled will likewise vary according to the selected technical architecture. Seminal LHS literature promotes a distributed approach to data management to ensure the infrastructure’s resilience [7]. From this perspective, data management can be distributed at different points during its lifecycle. In a federated system, for example, data repositories remain under the control and at the location of the institution producing the data, although data queries can originate from any participating institution, which then handles its own analysis [40, 45]. Alternatively, data repositories may be distributed but the results of data queries centralized (e.g., PaTH [21]). A fully centralized approach to data management may be preferred to better handle real-time requests (e.g., PEDSnet [38]) or advanced analytics (e.g., Optum Labs [46]).
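The federated pattern described above can be sketched in Python under simplifying assumptions: each hypothetical `Site` keeps its records in memory, evaluates a query locally, and releases only an aggregate count, while the querying institution combines the per-site results itself. The class, function, and field names and the sample records are invented for illustration; an operational network would likely also need authentication, disclosure controls, and harmonized data definitions.

```python
from typing import Any, Callable

# Hypothetical record layout: one patient record as a plain dictionary.
Record = dict[str, Any]


class Site:
    """A participating institution whose patient records stay on site."""

    def __init__(self, name: str, records: list[Record]) -> None:
        self.name = name
        self._records = records  # never returned to callers

    def count(self, predicate: Callable[[Record], bool]) -> int:
        """Run the query locally and release only an aggregate count."""
        return sum(1 for record in self._records if predicate(record))


def federated_count(sites: list[Site], predicate: Callable[[Record], bool]) -> dict[str, int]:
    """Send the same query to every site and collect the per-site counts."""
    return {site.name: site.count(predicate) for site in sites}


# Hypothetical sites and records, for illustration only.
site_a = Site("site_a", [
    {"age": 70, "diagnosis": "asthma"},
    {"age": 40, "diagnosis": "asthma"},
    {"age": 81, "diagnosis": "copd"},
])
site_b = Site("site_b", [
    {"age": 66, "diagnosis": "asthma"},
    {"age": 90, "diagnosis": "asthma"},
])


def older_asthma_patient(record: Record) -> bool:
    """Example query: patients aged 65 or over with an asthma diagnosis."""
    return record["age"] >= 65 and record["diagnosis"] == "asthma"


per_site = federated_count([site_a, site_b], older_asthma_patient)
print(per_site, sum(per_site.values()))
# {'site_a': 1, 'site_b': 2} 3
```

Here the combination step runs wherever `federated_count` is called; in the centralized-results variant, the same per-site counts would instead be sent to a single coordinating node.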

Scientific dimension

While learning may happen within any of the abovementioned LHS dimensions, it is the scientific dimension that most fosters learning by focusing on discovering and testing innovations for improved health outcomes [37]. This dimension brings together the social and technical dimensions of a LHS into a continuous learning circle that moves from data aggregation and analysis to interpretation and practice change, which in turn generates new data that can be integrated within the learning system [11]. Such a learning circle ideally integrates both data collected at the point of care and the results of various types of studies, such as comparative effectiveness research, methodological research, and behavioral and policy research (Optum Labs [46]). However, existing initiatives show that improvements in clinical care can also come from operational-level learning, for example, learning how to significantly reduce delays in patient diagnostic processes using lean process methods [47]. Knowledge can likewise be disseminated at different speeds and to various stakeholders, depending on the LHS’s goals, to provide real-time decision support for patients and care providers or to support long-term changes in care pathways.

Ethical dimension

The fifth LHS dimension identified in the literature is perhaps the most challenging: the need for a moral framework to guide all learning activities within a LHS. Developing such a framework confronts the distinction between clinical research and clinical practice in terms of ethics, since the use of identifiable patient data for continuous learning within a LHS, which may involve several health providers, is neither a recognized form of clinical research nor a routine use for clinical practice such as physician-patient encounters. Integrating patient data collected at the point of care with population-based research data is thus difficult to accomplish given existing ethical guidelines regarding patient privacy and data security. There has been preliminary work in this area, including the proposition of an ethical framework to guide LHS learning activities [39] and an exploratory study identifying the ethical issues faced by healthcare organizations wanting to transition to a LHS [9]. However, more research is needed to reach consensus regarding which learning activities require oversight and how to determine the extent of such oversight.

To date, few LHSs explicitly address the ethical dimension. One example that does is the Geisinger Health System, which, as part of Geisinger’s transformation into a LHS, developed institutional guidelines for navigating the differences and overlap between quality improvement and research [23]. These guidelines aim to ensure that the oversight regimen emphasizes the optimization of learning anywhere along this continuum. Another example is the CancerLinQ initiative [17], which has created guiding principles that promote the ethical management and use of data through data stewardship and protection (including secure de-identification of patient data), as well as transparency and accountability to patients, providers, and eligible stakeholders.

Components of a LHS architectural framework

We present here our proposed architectural framework that captures the five LHS dimensions discussed above. It is illustrated in Fig. 2.

Fig. 2.

LHS architectural framework

Each decision layer in the proposed framework, except for the scientific layer, has been adapted from the Federal Enterprise Architecture Framework’s Consolidated Reference Model [33]. Table 1 provides a summary while the content and methods of each layer are described in more detail below.

Table 1.

Overview of decision layers in the proposed architectural framework

| Decision layer | Role in the Consolidated Reference Model | Role in the LHS architectural framework | Relevant LHS dimension |
|---|---|---|---|
| Performance | Prescribes priority and strategic goals, and measures to track goal achievement. | Prescribes goals taken from the IOM Strategic Map | Goals |
| Scientific | N/A | Develops new transferable knowledge | Scientific |
| Organizational | Provides taxonomy with hierarchical description of the Federal Government in terms of sectors, business functions, and services. | Provides organizational taxonomy of a health system and its organizational units as well as its external stakeholders | Social |
| Data | Provides four domain taxonomies relating to mission, enterprise, guidance, and resource data. | Captures data sources for clinical and point-of-care data, and specifies data standards and lifecycle management procedures | Technical |
| Information technology | Categorizes applications and their components at three levels (systems, application components, and interfaces); categorizes information technology infrastructure components (platform, network, facility). | Brings together applications and infrastructure components given their varying importance across LHSs | Technical |
| Ethics and security | Defines security controls and measurements related to, e.g., regulatory conditions, risks, and compliance. | Adds ethical dimension related to privacy and security of patient data in line with existing legislative frameworks | Ethical |

N/A not applicable
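The layer-to-dimension mapping in Table 1 can also be expressed as a small lookup, which makes it straightforward to check which dimensions a partial description of a LHS leaves unaddressed. In this Python sketch the mapping is taken directly from Table 1; the function name and the example selection of layers are hypothetical.

```python
# Layer-to-dimension mapping taken from Table 1.
LAYER_TO_DIMENSION = {
    "performance": "goals",
    "scientific": "scientific",
    "organizational": "social",
    "data": "technical",
    "information technology": "technical",
    "ethics and security": "ethical",
}

# The five LHS dimensions identified in the literature.
DIMENSIONS = {"goals", "social", "technical", "scientific", "ethical"}


def uncovered_dimensions(described_layers: set[str]) -> set[str]:
    """Return the LHS dimensions that none of the described layers address."""
    unknown = described_layers - set(LAYER_TO_DIMENSION)
    if unknown:
        raise ValueError(f"unknown decision layers: {sorted(unknown)}")
    covered = {LAYER_TO_DIMENSION[layer] for layer in described_layers}
    return DIMENSIONS - covered


# Hypothetical partial description covering only three layers.
print(sorted(uncovered_dimensions({"performance", "data", "information technology"})))
# ['ethical', 'scientific', 'social']
```

Because two layers map to the technical dimension, describing both the data and information technology layers still covers only one dimension.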

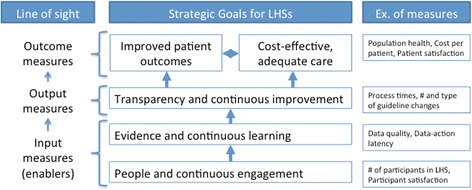

The performance layer

The performance layer identifies the goals pursued by a LHS, as well as measures to track the achievement of these goals. According to the IOM [1], LHSs have two strategic goals: better outcomes and lower costs. These goals are to be achieved through three components: people and continuous engagement, evidence and continuous learning, and transparency and continuous improvement. Figure 3 shows how the IOM’s strategic goals and components can serve as high-level goal categories for LHSs. It also shows how goals within each category can be related and measured to achieve the “line of sight” principle by which outcome measures are derived from output and input measures. Creating an architectural framework for a given LHS requires its stakeholders to state the specific goals that they wish to pursue within each category. Given the importance of patient engagement for LHS success, however, the component related to “people and continuous engagement” should always include goals related to engaging patients alongside physicians and other stakeholders. A LHS’s stakeholders should also agree upon the measures that will be used to assess the achievement of those goals.

Fig. 3.

Categories of goals and possible measures in the performance layer

A LHS that meets the IOM vision needs to emphasize social value, in particular contributing to a healthier population through better patient health outcomes, alongside business value such as lower costs. The well-known balanced scorecard is one method that can be used to capture the goals and related measures of a LHS [48]. This method helps to identify the goals that are most important to an organization’s performance and then enables the organization to monitor their achievement and their impact on one another through a set of measures. It can also support double- and triple-loop learning. For example, if measures related to continuous learning and continuous improvement are satisfactory, but patient outcomes and cost measures deteriorate, the hypothesized causal relationships among these goals, the goals themselves, and even the structure of the learning process may need to be questioned.
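The double-loop check described in this example can be sketched in Python. The sketch assumes that each scorecard measure has a previous value, a current value, and a target; it treats "satisfactory" as meeting the target and uses hypothetical category keys standing in for the goal categories of Fig. 3. It is an illustration of the reasoning, not part of the balanced scorecard method itself, and all measure names and values are invented.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """Two successive values of one scorecard measure, plus its target."""
    name: str
    category: str  # hypothetical keys: "outcomes", "costs", "learning", "improvement"
    previous: float
    current: float
    target: float
    higher_is_better: bool = True

    def meets_target(self) -> bool:
        if self.higher_is_better:
            return self.current >= self.target
        return self.current <= self.target

    def deteriorated(self) -> bool:
        if self.higher_is_better:
            return self.current < self.previous
        return self.current > self.previous


ENABLING = {"learning", "improvement"}  # continuous learning / continuous improvement
STRATEGIC = {"outcomes", "costs"}       # better outcomes / lower costs


def needs_double_loop_review(readings: list[Reading]) -> bool:
    """Flag the case where enabling measures look satisfactory yet strategic ones worsen.

    This pattern suggests questioning the assumed causal links between goals,
    not only tuning the processes that feed them.
    """
    enabling = [r for r in readings if r.category in ENABLING]
    strategic = [r for r in readings if r.category in STRATEGIC]
    enablers_ok = bool(enabling) and all(r.meets_target() for r in enabling)
    return enablers_ok and any(r.deteriorated() for r in strategic)


# Hypothetical measures and values, for illustration only.
readings = [
    Reading("guidelines updated from LHS evidence (%)", "learning", 60, 75, 70),
    Reading("days from referral to diagnosis", "improvement", 20, 14, 15, higher_is_better=False),
    Reading("30-day readmission rate (%)", "outcomes", 12, 14, 10, higher_is_better=False),
    Reading("cost per care episode", "costs", 5000, 5200, 4800, higher_is_better=False),
]
print(needs_double_loop_review(readings))
# True
```

A flag like this cannot say which assumption is wrong; it only marks the point at which stakeholders would revisit the goal structure rather than the processes alone.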

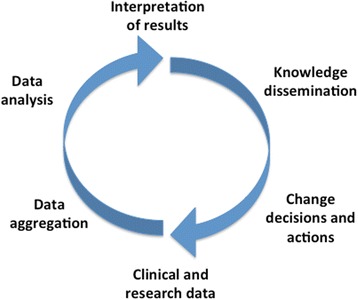

The scientific layer

The scientific layer identifies the learning activities that will be undertaken in a given LHS, such as quality improvement or comparative effectiveness research. For each learning activity, a learning cycle needs to be defined that indicates which data should be collected, how they are to be analyzed, how data analysis will generate knowledge, what changes will be effected by this knowledge, and how those changes will be disseminated and implemented [11, 42] (see Fig. 4). A learning cycle for comparative effectiveness research may thus differ from one seeking to improve best practices [49]. Given the dynamic and holistic nature of learning cycles, methods from systems theory (such as system dynamics) can be used to model them in a formal manner [50]. This would serve to clarify where and how learning would happen in each activity. For example, learning from clinical data and systematic reviews may inform guidelines and pathways, while learning from patient management may inform process improvements. Given that different stakeholders and data may be required for each learning cycle, the scientific layer should drive decisions about organizational and data models.
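Before any formal system dynamics modeling, a learning cycle specification can be written down as structured data. The Python sketch below assumes one record per learning activity, with one free-text field per stage named in the text, plus the data sources and stakeholders the activity needs. It flags unspecified stages and gathers the requirements that, as the text notes, should drive the organizational and data layers. All class, function, and field names and example values are hypothetical.

```python
from dataclasses import dataclass

# Stages of a learning cycle, in the order listed in the text.
STAGES = ("data_collection", "analysis", "knowledge_generation",
          "practice_change", "dissemination")


@dataclass
class LearningCycle:
    """Specification of one learning activity's cycle in the scientific layer."""
    activity: str
    data_collection: str = ""
    analysis: str = ""
    knowledge_generation: str = ""
    practice_change: str = ""
    dissemination: str = ""
    data_sources: frozenset[str] = frozenset()
    stakeholders: frozenset[str] = frozenset()

    def missing_stages(self) -> list[str]:
        """Stages that have not yet been specified for this activity."""
        return [stage for stage in STAGES if not getattr(self, stage).strip()]


def layer_requirements(cycles: list[LearningCycle]) -> tuple[set[str], set[str]]:
    """Collect the data sources and stakeholders required across all cycles,
    as inputs to the data and organizational layers."""
    data_sources: set[str] = set()
    stakeholders: set[str] = set()
    for cycle in cycles:
        data_sources |= cycle.data_sources
        stakeholders |= cycle.stakeholders
    return data_sources, stakeholders


# Hypothetical cycles, for illustration only.
cer = LearningCycle(
    "comparative effectiveness research",
    data_collection="EHR extracts for two treatment options",
    analysis="comparison of outcomes between options",
    knowledge_generation="estimate of relative effectiveness",
    practice_change="revised treatment guideline",
    dissemination="guideline update and point-of-care alert",
    data_sources=frozenset({"EHR"}),
    stakeholders=frozenset({"researchers", "clinicians"}),
)
qi = LearningCycle(
    "diagnostic delay reduction",
    data_collection="timestamps along the diagnostic pathway",
    analysis="process mapping of delays",
    knowledge_generation="identified bottlenecks",
    practice_change="redesigned referral process",
    data_sources=frozenset({"scheduling system"}),
    stakeholders=frozenset({"clinicians", "administrators", "patients"}),
)

print(qi.missing_stages())  # ['dissemination']
print(layer_requirements([cer, qi]))
```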

Fig. 4.

Generic learning cycle within the scientific layer

The organizational layer

This layer of the architectural framework captures the chosen governance model and associated responsibilities. The methods used to formalize governance vary according to need. For example, a matrix structure may be described using well-known structured systems modeling notations, while communities of practice—groups of people engaging in collective learning about a domain of interest—may be better described using network models designed to highlight the relationships among individuals and organizations. Decision-making processes should also be identified and linked to the organizational structure. Given that knowledge-intensive processes, such as decision-making, require much more flexibility than routine ones, novel engineering methods may be needed to capture them [51]. Whichever method is adopted, it should explicitly capture how the LHS fosters patient engagement and where patients fit into the organizational structure and its decision-making processes. Moreover, the organizational layer should be aligned with the performance layer through, for example, the use of teamwork-linked performance measures and more general population-based health improvement measures.

The data layer

The data layer provides a common way to describe and share data across organizational boundaries. Two types of data may be used in a LHS: scientific (research) data and patient data collected at the point of care and at the population level. Since one cannot assume that relevant data are readily available, this layer identifies the LHS’s data sources. Where more than one data source is leveraged, this layer also needs to address interoperability issues [35], namely, the chosen recognized standards allowing for syntactic interoperability (the ability of systems to exchange data), such as Health Level Seven (HL7) [52], and the controlled vocabularies and ontologies allowing for semantic interoperability (the ability to automatically interpret exchanged information), such as SNOMED (Systematized Nomenclature of Medicine) and RxNorm (a terminology that contains all medications available on the US market) [45, 53]. Data lifecycle management procedures and processes, including how data quality will be ensured [54], should also be defined in this layer. As such, the methods defined in this layer are strongly related to the framework’s ethics and security layer.
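To illustrate only the semantic step (HL7 messaging, which handles syntactic exchange, is not modeled), the Python sketch below assumes that each site maintains a table mapping its local codes to shared concept identifiers. The site names, local codes, and `CONCEPT:` identifiers are placeholders invented for this example, not real SNOMED or RxNorm codes.

```python
# Hypothetical local-to-shared code mappings maintained for each site.
# The shared identifiers are placeholders, not real SNOMED or RxNorm codes.
SITE_MAPPINGS: dict[str, dict[str, str]] = {
    "site_a": {"ASTH": "CONCEPT:asthma", "DM2": "CONCEPT:type2_diabetes"},
    "site_b": {"A-101": "CONCEPT:asthma", "E-202": "CONCEPT:type2_diabetes"},
}


def to_shared_concepts(site: str, local_codes: list[str]) -> tuple[list[str], list[str]]:
    """Translate a site's local codes into shared concepts.

    Returns the mapped concepts and the local codes that have no mapping,
    so that gaps can be reviewed rather than silently dropped.
    """
    mapping = SITE_MAPPINGS[site]
    mapped = [mapping[code] for code in local_codes if code in mapping]
    unmapped = [code for code in local_codes if code not in mapping]
    return mapped, unmapped


# The same condition recorded differently at two sites resolves to one concept.
print(to_shared_concepts("site_a", ["ASTH", "XYZ"]))  # (['CONCEPT:asthma'], ['XYZ'])
print(to_shared_concepts("site_b", ["A-101"]))        # (['CONCEPT:asthma'], [])
```

Returning unmapped codes explicitly is a design choice that connects to the data quality procedures this layer is meant to define.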

The information technology layer

The information technology layer enables a standardized manner of categorizing information and communication technology assets, whether software, hardware, or network related. Since the boundaries of a LHS may not align with organizational boundaries, the purpose of this layer is to address only those components that are used to store, analyze, and transform input data, and to disseminate results to LHS stakeholders. This layer should also capture how these assets are related, for example, through a centralized or decentralized model [55]. Given the likely presence of existing health information systems in institutions wanting to launch or transform themselves into a LHS, this layer needs to specify how the information technology supporting a LHS interfaces with an institution’s existing technology assets. Decisions made within this layer need to interface with other layers; a computer-supported tailored feedback tool, for example, must be related to a learning cycle in the scientific layer focused on clinical audit and feedback [56].

The ethics and security layer

This layer captures the ethical and privacy dimensions of health data collection and use as they relate to security controls and measures. It should therefore, at a minimum, encompass existing security and privacy legislative frameworks, such as the Health Insurance Portability and Accountability Act of 1996 in the USA [57], or the federal Personal Information Protection and Electronic Documents Act and Ontario’s Personal Health Information Protection Act in Canada [58, 59]. As new frameworks encompassing guidelines for both clinical research and clinical practice [39] gain recognition, they should also be integrated. The choices made within the ethics and security layer should in turn guide the development of scientific, data, and information technology procedures.
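As an illustration of how a choice made in this layer can shape data procedures, the Python sketch below removes a hypothetical list of direct identifiers from a record and replaces the patient identifier with a keyed-hash (HMAC-SHA256) pseudonym before the record is loaded into a shared repository. This is a toy example under stated assumptions: the identifier list and field names are hypothetical, and dropping direct identifiers while pseudonymizing IDs is not by itself sufficient to meet de-identification requirements under legislation such as HIPAA or PHIPA, since remaining fields such as dates or postal codes may still allow re-identification.

```python
import hashlib
import hmac

# Hypothetical set of direct identifiers; a real list would be derived from
# the applicable legislation and institutional policy.
DIRECT_IDENTIFIERS = {"name", "address", "phone", "health_card_number"}


def pseudonymize(patient_id: str, secret_key: bytes) -> str:
    """Replace a patient ID with a keyed hash (HMAC-SHA256, hex-encoded).

    Under one key, the same ID always yields the same pseudonym, so records
    can still be linked; without the key, pseudonyms cannot be recomputed.
    """
    return hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def deidentify(record: dict, secret_key: bytes) -> dict:
    """Return a copy without direct identifiers and with a pseudonymous ID."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["patient_id"] = pseudonymize(str(record["patient_id"]), secret_key)
    return cleaned
```

A keyed hash is used rather than a plain hash so that someone who knows a patient’s original identifier but not the key cannot recompute the pseudonym, while records belonging to the same patient still share one pseudonym.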

Illustrating the use of the LHS architectural framework

While the architectural framework described here captures all five LHS dimensions through its decision layers, a given LHS may focus on only some of these layers. Taking contrasting examples from among those summarized in Fig. 1, we briefly describe two very different yet successful LHS initiatives in terms of the proposed framework’s decision layers (see Table 2). A full application of the architectural framework would entail more comprehensive descriptions and the development of models within each layer. For now, Table 2 highlights the framework’s ability to capture very different initiatives in a common manner, which facilitates an understanding of the choices made by each governance team.

Table 2.

Illustration of the application of the proposed LHS architectural framework

| Layer | Learn From Every Patient initiative [42] | PaTH clinical data research network initiative [21] |
|---|---|---|
| Performance | Goal: continuous quality improvement in clinical care for children with cerebral palsy. Measures: changes in healthcare utilization rates and related costs | Goal: informatics-supported infrastructure for cohort identification and data sharing for idiopathic pulmonary fibrosis, atrial fibrillation, and obesity. Measures: not discussed |
| Scientific | 12-month study of one cohort, within a series of learning projects for continuous quality improvement | Comparative effectiveness randomized trials with specific research questions informed by clinical data research experts and vetted by the informatics group |
| Organizational | Program team includes key clinical stakeholders and clinical and information technology teams | Steering Committee includes representatives from each site; three advisory committees (including patients); four working groups (research questions, information technology, methodology, regulations) |
| Data | Source: data fields and questions added to the institution’s EHR. Quality: ensured by a database manager | Source: complete set of longitudinal data about target populations taken from site EHRs. Standards: standardized descriptions of data elements using established standards and vocabularies; HL7 used by all participating health systems. Quality: manual and automated monitoring by data engineers |
| Information technology | Existing EHRs and related infrastructure | Source data loaded into a centrally maintained data warehouse, queryable through an analytics interface |
| Ethics and security | No review board authorization required (all data collected are appropriate for standard clinical care) | Not directly discussed; data transformation process includes de-identification before loading into the warehouse |
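The common description that Table 2 provides can also be expressed as a simple data structure. The Python sketch below encodes the six decision layers and the LHS dimension each one models, as stated in the framework description, and flags the layers that an initiative has not yet documented. The layer keys, the placeholder text, and the function names are hypothetical choices made for illustration; they are not part of the framework itself.

```python
from enum import Enum
from typing import Dict, List


class Dimension(Enum):
    """The five LHS dimensions the framework is built around."""
    GOALS = "goals"
    SCIENTIFIC = "scientific"
    SOCIAL = "social"
    TECHNICAL = "technical"
    ETHICAL = "ethical"


# Each decision layer mapped to the dimension it models.
LAYERS: Dict[str, Dimension] = {
    "performance": Dimension.GOALS,
    "scientific": Dimension.SCIENTIFIC,
    "organizational": Dimension.SOCIAL,
    "data": Dimension.TECHNICAL,
    "information technology": Dimension.TECHNICAL,
    "ethics and security": Dimension.ETHICAL,
}

NOT_DOCUMENTED = "not yet documented"


def _check_layers(initiative: Dict[str, str]) -> None:
    """Reject keys that are not framework layers."""
    unknown = set(initiative) - set(LAYERS)
    if unknown:
        raise ValueError(f"not a framework layer: {sorted(unknown)}")


def describe(initiative: Dict[str, str]) -> Dict[str, str]:
    """Return one entry per layer, in framework order, flagging gaps."""
    _check_layers(initiative)
    return {layer: initiative.get(layer, NOT_DOCUMENTED) for layer in LAYERS}


def dimensions_covered(initiative: Dict[str, str]) -> List[Dimension]:
    """Dimensions modelled by at least one documented layer, in enum order."""
    _check_layers(initiative)
    covered = {LAYERS[layer] for layer in initiative}
    return [dim for dim in Dimension if dim in covered]
```

For instance, a smaller-scale initiative that starts with only the organizational and scientific layers would be reported as covering the scientific and social dimensions, with the remaining four layers flagged as not yet documented.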

A methodology to guide an LHS’s implementation is needed to accompany the proposed LHS architectural framework. While a detailed discussion of such a methodology is beyond the scope of this paper, its general procedures might be drawn from the Collaborative Planning Methodology that accompanies the Consolidated Reference Model [33]. This methodology is a five-step iterative process divided into two phases: "organize and plan" and "implement and measure." The first phase focuses on developing consensus among all stakeholders about the needs, purpose, governance system, and content of the LHS architectural framework. Whereas the framework focuses on the system, the accompanying methodology focuses on guiding discussions among the people involved in designing and implementing an LHS. It should thus ensure active stakeholder engagement throughout the design and implementation process. A number of reports on emerging and existing LHSs emphasize this point, for example, by stressing the importance of identifying the people and teams that should be involved [23, 42] and of mobilizing a healthcare community around an LHS initiative [16, 22, 60]. This point matters because individual and team learning are needed before institutional or system-level learning can occur in the form of improved processes, clinical guidelines, and other outcomes. Finally, the methodology does not assume a single, complete implementation with no planned future improvements; rather, it accounts for progressive, iterative deployment and improvement as needed.

A smaller-scale initiative may start by making decisions and developing models within the organizational and scientific layers, emphasizing the involvement and buy-in of key individuals and teams in one learning project that uses existing data and information technology assets. As the benefits of this first project become apparent, the governance team can use that momentum to identify other learning cycles, articulate goals and measures in the performance layer, and address additional data storage and analysis needs. In this approach, the LHS architectural framework serves to build learning capacity within the governance team at the individual and group levels, which is key to implementation sustainability [61]. The stakeholders of a larger-scale initiative may have the resources and organizational support needed to address all layers of the LHS architectural framework in its first iteration. Used in this manner, the framework should ensure that alignment and learning happen across silos by supporting communication among clinical and administrative stakeholders as well as information technology specialists.

Conclusion

In this paper, we present an LHS architectural framework that captures common LHS dimensions identified in the literature and is inspired by the Federal Enterprise Architecture Framework’s Consolidated Reference Model [33]. We propose this framework as a high-level yet practical means to guide the development, implementation, and evolution of LHSs. While exemplars show that existing approaches can successfully make the LHS vision a reality, their variability, and the design implications that this variability entails, mean that many of these approaches cannot be easily replicated. The LHS architectural framework discussed here unifies the core components of LHSs while facilitating a better understanding of variations among systems and of the types of decisions that these systems support. By enabling the analysis of existing LHS initiatives in a consistent manner, the framework supports their reproduction, adaptation, and scaling. By supporting decision-making, the framework can support LHS implementation in the following ways:

Performance layer: helps to identify, relate, and measure context-specific goals that can achieve the LHS vision.

Scientific layer: guides the definition of learning cycles that are specific to desired learning outcomes while addressing common elements, such as how data are collected and analyzed, or how results are implemented.

Organizational layer: helps to capture a governance model and associated responsibilities.

Data layer: supports the description of data used in the scientific layer, as well as processes to ensure their quality.

Information technology layer: supports the categorization of the information technology assets used to store, analyze, and transform data in the data layer.

Ethics and security layer: facilitates the capture of the ethical and privacy ramifications of health data collection and use as they relate to security controls and privacy legislative measures.

Future research is required to assess the generalizability of the proposed framework and to further refine its methods. Nevertheless, given the ineluctable emergence of new LHS initiatives, the guidance offered by LHS-specific architectural frameworks, such as the one presented in this paper, is critical for supporting repeatable and successful LHS implementation across varied scales and foci.

Acknowledgements

We thank Elena Goubanova for her detailed description of the Ottawa Hospital Cancer Lung Diagnosis Transformation.

The authors would like to thank the reviewers for the comments that helped to improve the paper.

Funding

This research did not receive any funding.

Availability of data and materials

Not applicable.

Authors’ contributions

LL and LJ searched and analyzed the literature on Learning Health Systems. LL and WM developed the architectural framework for Learning Health Systems. MFKF and AG worked on the proof of concept implementation of the architectural framework. All authors made substantial contributions to the intellectual content and editing of the manuscript; they all read and approved the final manuscript.

Competing interests

The authors declare that they have no competing interests.

Consent for publication

Not applicable.

Ethics approval and consent to participate

Not applicable.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Lysanne Lessard, Email: Lessard@telfer.uottawa.ca.

Wojtek Michalowski, Email: Wojtek@telfer.uottawa.ca.

Michael Fung-Kee-Fung, Email: MFUNG@toh.ca.

Lori Jones, Email: lori.jones@uottawa.ca.

Agnes Grudniewicz, Email: Agnes.Grudniewicz@telfer.uottawa.ca.

References

- 1. Institute of Medicine. Roundtable on value & science-driven health care. Institute of Medicine. 2006. http://nationalacademies.org/hmd/~/media/Files/Activity%20Files/Quality/VSRT/Core%20Documents/Making%20a%20Difference.pdf. Accessed 28 Aug 2016.

- 2. Friedman C, Rigby M. Conceptualising and creating a global learning health system. Int J Med Inform. 2012;82(4):e63–71. doi: 10.1016/j.ijmedinf.2012.05.010.

- 3. Friedman C, Rubin J, Brown J, Buntin M, Corn M, Etheredge L, et al. Toward a science of learning systems: a research agenda for the high-functioning Learning Health System. J Am Med Inform Assoc. 2015;22(1):43–50. doi: 10.1136/amiajnl-2014-002977.

- 4. Delaney BC, Curcin V, Andreasson A, Arvanitis TN, Bastiaens H, Corrigan D, et al. Translational medicine and patient safety in Europe: TRANSFoRm—architecture for the learning health system in Europe. Biomed Res Int. 2015;961526. doi: 10.1155/2015/961526.

- 5. Lambin P, Zindler J, Vanneste B, van de Voorde L, Jacobs M, Eekers D, et al. Modern clinical research: how rapid learning health care and cohort multiple randomised clinical trials complement traditional evidence based medicine. Acta Oncol. 2015;54(9):1289–300. doi: 10.3109/0284186X.2015.1062136.

- 6. Abernethy AP. Demonstrating the learning health system through practical use cases. Pediatrics. 2014;134(1):171–2. doi: 10.1542/peds.2014-1182.

- 7. Friedman CP, Wong AK, Blumenthal D. Achieving a nationwide learning health system. Sci Transl Med. 2010;2(57):1–3. doi: 10.1126/scitranslmed.3001456.

- 8. Abernethy AP. “Learning health care” for patients and populations. Med J Aust. 2011;194(11):564. doi: 10.5694/j.1326-5377.2011.tb03103.x.

- 9. Morain SR, Kass NE. Ethics issues arising in the transition to learning health care systems: results from interviews with leaders from 25 health systems. eGems. 2016;4(2):1212. doi: 10.13063/2327-9214.1212.

- 10. Etheredge L. Rapid learning: a breakthrough agenda. Health Aff. 2014;33(7):1155–62. doi: 10.1377/hlthaff.2014.0043.

- 11. Flynn AJ, Patton J, Platt J. Tell it like it seems: challenges identifying potential requirements of a learning health system. HICSS. 2015;2015:3158–67.

- 12. Grossman C, Goolsby WA, Olsen L, McGinnis JM, editors. Engineering a learning healthcare system: a look at the future. Washington, DC: National Academies Press; 2011.

- 13. Jaaron AAM, Backhouse CJ. Operationalising “double-loop” learning in service organisations: a systems approach for creating knowledge. Syst Pract Action Res. 2016.

- 14. Ratnapalan S, Uleryk E. Organizational learning in health care organizations. Systems. 2014;2:24–33. doi: 10.3390/systems2010024.

- 15. Sheaff R, Pilgrim D. Can learning organizations survive in the newer NHS? Implement Sci. 2006;1(27). doi: 10.1186/1748-5908-1-27.

- 16. Elson SL, Hiatt RA, Anton-Culver H, Howell LP, Naeim A, Parker BA, et al. The Athena Breast Health Network: developing a rapid learning system in breast cancer prevention, screening, treatment, and care. Breast Cancer Res Treat. 2013;140(2):417–25. doi: 10.1007/s10549-013-2612-0.

- 17. Schilsky RL, Michels DL, Kearbey AH, Yu PP, Hudis CA. Building a rapid learning health care system for oncology: the regulatory framework of CancerLinQ. J Clin Oncol. 2014;32(22):2373–9. doi: 10.1200/JCO.2014.56.2124.

- 18. Forrest CB, Crandall WV, Bailey LC, Zhang P, Joffe MM, Colletti RB, et al. Effectiveness of anti-TNFa for Crohn Disease: research in a pediatric learning health system. Pediatrics. 2014;134(1):37–44.

- 19. Bhandari R, Feinstein AB, Huestis S, Krane E, Dunn A, Cohen L, et al. Pediatric-Collaborative Health Outcomes Information Registry (Peds-CHOIR): a learning health system to guide pediatric pain research and treatment. Pain. 2016;157(9):2033–44. doi: 10.1097/j.pain.0000000000000609.

- 20. Forrest C, Margolis P, Bailey L, Marsolo K, Del Beccaro M, Finkelstein J, et al. PEDSnet: a national pediatric learning health system. J Am Med Inform Assoc. 2014;21(4):602–6. doi: 10.1136/amiajnl-2014-002743.

- 21. Amin W, Tsui F, Borromeo C, Chuang CH, Espino JU, Ford D, et al. PaTH: towards a learning health system in the Mid-Atlantic region. J Am Med Inform Assoc. 2014;21(4):633–6. doi: 10.1136/amiajnl-2014-002759.

- 22. Fung-Kee-Fung M, Maziak DE, Pantarotto JR, Smylie J, Taylor L, Timlin T, et al. Lung cancer diagnosis transformation: aligning the people, processes, and technology sides of the learning system. J Clin Oncol. 2016;34(Suppl 7S):50. doi: 10.1200/jco.2016.34.7_suppl.50.

- 23. Psek WA, Stametz RA, Bailey-Davis LD, Davis D, Darer J, Faucett WA, et al. Operationalizing the learning health care system in an integrated delivery system. eGems. 2015;3(1):1122. doi: 10.13063/2327-9214.1122.

- 24. Estrin D, Sim I. Open mHealth architecture: an engine for health care innovation. Science. 2010;330:759–60. doi: 10.1126/science.1196187.

- 25. Sowa JF, Zachman JA. Extending and formalizing the framework for information systems architecture. IBM Syst J. 1992;31(3):590–616. doi: 10.1147/sj.313.0590.

- 26. U.S. White House. Federal Enterprise Architecture Framework II. 2013. https://obamawhitehouse.archives.gov/sites/default/files/omb/assets/egov_docs/fea_v2.pdf. Accessed 7 Nov 2016.

- 27. Foy R, Sales A, Wensing M, Aarons GA, Flottorp S, Kent B, et al. Implementation science: a reappraisal of our journal mission and scope. Implement Sci. 2015;10(51). doi: 10.1186/s13012-015-0240-2.

- 28. Jonkers H, Lankhorst MM, ter Doest HWL, Arbab F, Bosma H, Wieringa RJ. Enterprise architecture: management tool and blueprint for the organisation. Inf Syst Front. 2006;8(2):63–6. doi: 10.1007/s10796-006-7970-2.

- 29. Chen D, Doumeingts G, Vernadat F. Architectures for enterprise integration and interoperability: past, present and future. Comput Ind. 2008;59(7):647–59. doi: 10.1016/j.compind.2007.12.016.

- 30. ISO/IEC/IEEE. Systems and software engineering: recommended practice for architectural description of software-intensive systems. ISO/IEC/IEEE: 2011. https://www.iso.org/obp/ui/#iso:std:iso-iec-ieee:42010:ed-1:v1:en. Accessed 7 Nov 2016.

- 31. The Open Group. TOGAF® version 9.1. Zaltbommel: Van Haren; 2011. https://www.opengroup.org/togaf/. Accessed 7 Nov 2016.

- 32. Saha P. A synergistic assessment of the Federal Enterprise Architecture Framework against GERAM (ISO15704:2000). In: Saha P, editor. Handbook of Enterprise Systems Architecture in Practice. Hershey: IGI Global; 2007. pp. 1–17.

- 33. Gaver S. Why doesn’t the Federal Enterprise Architecture Work? An examination why the Federal Enterprise Architecture Program has not delivered the expected results and what can be done about it; Part I: The Problem. http://www.ech-bpm.ch/sites/default/files/articles/why_doesnt_the_federal_enterprise_architecture_work.pdf. Accessed 5 Apr 2017.

- 34. Olsen L, Aisner D, McGinnis JM, editors. The learning healthcare system: workshop summary (IOM roundtable on evidence-based medicine). Washington, D.C.: The National Academies Press; 2007.

- 35. Grossman C, Powers B, McGinnis JM, editors. Digital infrastructure for the learning health system: the foundation for continuous improvement in health and health care; workshop series summary. Washington, D.C.: The National Academies Press; 2011.

- 36. Mandl KD, Kohane IS, McFadden D, Weber GM, Natter M, Mandel J, et al. Scalable Collaborative Infrastructure for a Learning Healthcare System (SCILHS): architecture. J Am Med Inform Assoc. 2014;21(4):615–20. doi: 10.1136/amiajnl-2014-002727.

- 37. Margolis PA, Peterson LE, Seid M. Collaborative Chronic Care Networks (C3Ns) to transform chronic illness care. Pediatrics. 2013;131(Suppl 4):S219–23. doi: 10.1542/peds.2012-3786J.

- 38. Forrest CB, Margolis PA, Seid M, Colletti RB. PEDSnet: how a prototype pediatric learning health system is being expanded into a national network. Health Aff. 2014;33(7):1171–7. doi: 10.1377/hlthaff.2014.0127.

- 39. Faden RR, Kass NE, Goodman SN, Pronovost P, Tunis S, Beauchamp TL. An ethics framework for a learning health care system: a departure from traditional research ethics and clinical ethics. Ethical Oversight of Learning Health Care Systems, Hastings Center Report Special Report. 2013;43(1):S16–S28.

- 40. Bernstein JA, Friedman CP, Jacobson P, Rubin JC. Ensuring public health’s future in a national-scale learning health system. Am J Prev Med. 2015;48(4):480–7. doi: 10.1016/j.amepre.2014.11.013.

- 41. Olsen L, Saunders R, McGinnis JM, editors. Patients charting the course: citizen engagement and the learning health system; workshop summary. Washington, D.C.: National Academies Press; 2011.

- 42. Lowes L, Noritz G, Newmeyer A, Embi P, Yin H, Smoyer W, et al. ‘Learn from every patient’: implementation and early results of a learning health system. Dev Med Child Neurol. 2016. doi: 10.1111/dmcn.13227.

- 43. Grannis S. Innovative approaches to information asymmetry. In: Grossman C, Powers B, McGinnis JM, editors. Digital infrastructure for the learning health system: The foundation for continuous improvement in health and health care; workshop series summary. Washington, DC: The National Academies Press; 2011. pp. 119–24.

- 44. Ainsworth J, Buchan I. Combining health data uses to ignite health system learning. Methods Inf Med. 2015;54:479–788. doi: 10.3414/ME15-01-0064.

- 45. Maro JC, Platt R, Holmes JH, Strom BL, Hennessy S, Lazarus R, et al. Design of a national distributed health data network. Ann Intern Med. 2009;151:341–4. doi: 10.7326/0003-4819-151-5-200909010-00139.

- 46. Wallace P, Shah N, Dennen T, Bleicher PA, Crown WH. Optum Labs: building a novel node in the learning health care system. Health Aff. 2014;33(7):1187–94. doi: 10.1377/hlthaff.2014.0038.

- 47. Fung-Kee-Fung M, Boushey RP, Morash C, Watters J, Morash R, Mackey M, et al. Use of a community of practice (CoP) platform as a model in regional quality improvements in cancer surgery: the Ottawa model. J Clin Oncol. 2012;30(Suppl 34):68. doi: 10.1200/jco.2012.30.34_suppl.68.

- 48. Kaplan RS, Norton DP. Using the balanced scorecard as a strategic management system. In: Kaplan RS, Norton DP, editors. Focus your organisation on strategy with the balanced scorecard. Cambridge, MA: Harvard Business School Publishing; 2005. pp. 35–48.

- 49. Friedman CP, Omollo K, Rubin J, Flynn AJ, Platt J, Markel DS. Learning Health Sciences and Learning Health Systems. In: 3rd Symposium on Healthcare Engineering & Patient Safety. Ann Arbor, Michigan. https://cheps.engin.umich.edu/wp-content/uploads/sites/118/2015/11/2015_09_24-CHEPS-Poster-Learning-Health-Sciences-and-Systems-v2.pdf. Accessed 2 Nov 2016.

- 50. Monk T. Operational research as implementation science: definitions, challenges and research priorities. Implement Sci. 2016;11(81). doi: 10.1186/s13012-016-0444-0.

- 51. Di Ciccio C, Marrella A, Russo A. Knowledge-intensive processes: characteristics, requirements and analysis of contemporary approaches. J Data Semant. 2014;4(1):29–57. doi: 10.1007/s13740-014-0038-4.

- 52. Health Level Seven® International. Introduction to HL7 Standards. 2016. http://www.hl7.org/implement/standards/index.cfm?ref=nav. Accessed 7 Nov 2016.

- 53. Bennett C. Utilizing RxNorm to support practical computing applications: capturing medication history in live electronic health records. J Biomed Inform. 2012;45:634–41. doi: 10.1016/j.jbi.2012.02.011.

- 54. Reiner D, Press G, Lenaghan M, Barta D, Urmston R, editors. Information lifecycle management: the EMC perspective. 20th International Conference on Data Engineering; 2004. doi: 10.1109/ICDE.2004.1320052.

- 55. McCarthy DB, Propp K, Cohen A, Sabharwal R, Schachter AA, Rein AL. Learning from health information exchange technical architecture and implementation in Seven Beacon Communities. eGems. 2014;2(1):1060. doi: 10.13063/2327-9214.1060.

- 56. Landis-Lewis Z, Brehaut JC, Hochheiser H, Douglas GP, Jacobson RS. Computer-supported feedback message tailoring: theory-informed adaptation of clinical audit and feedback for learning and behavior change. Implement Sci. 2015;10(12). doi: 10.1186/s13012-014-0203-z.

- 57. U.S. Department of Health & Human Services. Health information privacy: summary of the HIPAA security rule. Office for Civil Rights. n.d. https://www.hhs.gov/hipaa/for-professionals/security/laws-regulations/. Accessed 7 Nov 2016.

- 58. Government of Canada. Justice laws website: Personal Information Protection and Electronic Documents Act (S.C. 2000, c. 5). Government of Canada. 2016. http://laws-lois.justice.gc.ca/eng/acts/P-8.6/. Accessed 7 Nov 2016.

- 59. Ontario Ministry of Health and Long-Term Care. Legislation: Personal Health Information Protection Act, 2004. Queen’s Printer for Ontario: 2016. http://www.health.gov.on.ca/en/common/ministry/publications/reports/phipa/phipa_mn.aspx. Accessed 7 Nov 2016.

- 60. Devine E, Alfonso-Cristancho R, Devlin A, Edwards T, Farrokhi E, Kessler L, et al. A model for incorporating patient and stakeholder voices in a learning health care network: Washington State’s Comparative Effectiveness Research Translation Network. J Clin Epidemiol. 2013;66(Suppl 1):S122–9. doi: 10.1016/j.jclinepi.2013.04.007.

- 61. Kislov R, Waterman H, Harvey G, Boaden R. Rethinking capacity building for knowledge mobilisation: developing multilevel capabilities in healthcare organisations. Implement Sci. 2014;9(166). doi: 10.1186/s13012-014-0166-0.

- 62. Selby JV, Beal AC, Frank L. The Patient-Centered Outcomes Research Institute (PCORI) national priorities for research and initial research agenda. JAMA. 2012;307(15):1583–4.
