Abstract

In this article, we take issue with the claim by Sunstein and others that online discussion takes place in echo chambers, and suggest that the dynamics of online debates could be more aptly described by the logic of ‘trench warfare’, in which opinions are reinforced through contradiction as well as confirmation. We use a unique online survey and an experimental approach to investigate and test echo chamber and trench warfare dynamics in online debates. The results show that people do indeed claim to discuss with those who hold opposite views from themselves. Furthermore, our survey experiments suggest that both confirming and contradicting arguments have similar effects on attitude reinforcement. Together, this indicates that both echo chamber and trench warfare dynamics – a situation where attitudes are reinforced through both confirmation and disconfirmation biases – characterize online debates. However, we also find that two-sided neutral arguments have weaker effects on reinforcement than one-sided confirming and contradicting arguments, suggesting that online debates could contribute to collective learning and qualification of arguments.

Keywords: Online debates, echo chambers, trench warfare, motivated reasoning, confirmation bias, disconfirmation bias

The emergence of the Internet and Web 2.0 technologies has given rise to both optimistic and pessimistic visions of the future of public debate (Dahlberg, 2007; Dahlgren, 2005; Freelon, 2010; Hirzalla et al., 2011). Among the best-known metaphors capturing the pessimistic vision is Sunstein’s (2001, 2007) view of online debates as echo chambers. He argues that since network communities transcend physical and geographical limitations, new opportunities arise for maintaining contact primarily (or solely) with marginal communities of like-minded people. The Internet also makes it possible to avoid everything (and everyone) deemed to be of no interest. Such subjective selection processes are accentuated by algorithms that expose people to content based on their previous preferences (Bücher, 2012). According to several key observers, such features foster not a democratized and enlightened debate, but rather group mentality, polarization, and extremism.

Echo chambers are characterized by people in online debates selectively avoiding opposing arguments and therefore facing little resistance. This is assumed to reinforce and ultimately polarize political views. However, both the structural and the psychological premises of the claim that Web 2.0 will increase the likelihood of echo chambers have been criticized (Brundidge, 2010). The Internet not only provides the opportunity to discuss issues with like-minded people; it also increases the possibility of doing so with people who hold considerably different points of view. The Habermasian ideal is that debaters will change their minds according to the quality of the arguments presented in the debate. By contrast, the literature on motivated reasoning has shown that people’s prior attitudes strongly bias how they process arguments, and that this bias is reinforced not only through selective exposure but also through selective judgment processes (e.g. Taber et al., 2009; Taber and Lodge, 2006). Therefore, when people are presented with opposing arguments in online debates, these arguments may not lead debaters to question and alter their initial opinion, but may instead strengthen their belief in it. We call this trench warfare dynamics.

In this article, we use a twofold strategy to investigate the notion of echo chambers and trench warfare. First, we use a survey comprising more than 5000 respondents and investigate to what extent online debates are characterized by echo chambers: do people only talk to like-minded people online? Second, based on the same survey, we use an experimental approach to study more closely the impact of contradiction within online debates. We ask, what is the effect of contradicting, non-contradicting, and balanced arguments in an online debate setting? Are opinions changed in terms of reinforcement or modification?

Thus, the article provides a two-step analysis, focusing first on exposure to contradicting and confirming arguments, and second on the effects of contradiction compared with confirmation. In sum, the contribution of this article is to relate online debates and the notion of echo chambers to theories of attitude formation and change, and to do so using nationally representative survey experiments, whereas previous studies of confirmation and disconfirmation biases have traditionally relied on laboratory experiments.

Echo chambers

The concept of echo chambers rests on the premise of selective exposure: people have a tendency to favour information that reinforces their preexisting views. Information or media messages that challenge people’s beliefs typically create dissonance, which is unpleasant and something most people want to avoid (Festinger, 1957; Hart et al., 2009). The result of selective exposure is a reinforcement of individuals’ own beliefs.

In the heyday of broadcasting, the tendency toward selective exposure and the reinforcement of individuals’ own beliefs were somewhat counterbalanced because almost everybody watched the main news channels. Today, channel multiplication has significantly increased people’s choices. The digital revolution has multiplied the number of media platforms, offering audiences many forms of media use (Prior, 2007).1 When confronted with choice, it has been argued, people will avoid opinions they disagree with. The result might be a segmented public sphere consisting of groups of like-minded people who echo or confirm one another, so-called echo chambers (e.g. Jamieson and Cappella, 2008).

The echo chamber thesis posits a situation where people are less exposed to opposing perspectives and moderating impulses. This is considered to be particularly true for those who are interested in politics.2 In a public debate characterized by a series of echo chambers, shared forums for the open exchange of opinions are replaced by a closed group mentality. The concept has been used to characterize right-wing American media, talk radio and Fox News in particular (Jamieson and Cappella, 2008). It was also adopted by Sunstein (2001) and others, who used it to characterize online debate.3 They feared that the increased control given to consumers by the new media would lead people to avoid exposure to political diversity. In socializing with like-minded people on the Internet, users receive confirmation and reinforcement of their own opinions. In the literature on attitude formation, this is described as confirmation bias or attitude congruency bias (e.g. Taber et al., 2009: 139). People tend to evaluate arguments that support their views as strong and compelling. The outcome is more segmented political orientations and a fragmented public debate.

Social media appear to add fuel to the fire. In Republic.com 2.0, Sunstein (2007) argues that the development of Web 2.0, with the growth of the blogosphere, has aggravated the problem of echo chambers. The blogs constitute online subcommunities where like-minded people steer each other in the direction of even more extreme opinions without being confronted by counterarguments. According to Sunstein (2007), a great deal is at stake: ‘The system of free expression must do far more than avoid censorship; it must ensure that people are exposed to competing perspectives’ (p. xi). The opinions formed in echo chambers are polarized and extreme, or are at least becoming so. Hence, echo chamber dynamics entail that people discuss with like-minded people and are exposed to supporting arguments that confirm and reinforce their existing opinions.

Trench warfare dynamics

Theoretically, the structural features of the Internet and social media enable echo chambers. However, there are problems with both the psychological premise of the selective exposure thesis and the structural argument that the Internet promotes increased selectivity (Brundidge, 2010). Although studies have found that people to some extent seek supportive messages, it has been more difficult to show that people avoid contradictory ones (Brundidge, 2010; Festinger, 1957). With regard to the structural argument, the architecture of the Internet enables Web pages to be linked, which creates convergence rather than fragmentation (Jenkins, 2006; Van Dijk, 2006). Indeed, Garrett (2009) argues that the Internet has a far from perfect ability to remove ‘unwanted’ perspectives from people’s exposure to political information.

Some studies find that people seek to reinforce their views on the Internet. In the US context, experimental studies show that Republicans tend to choose news stories from Fox News, regardless of what the news story is about (Iyengar and Hahn, 2009), and selective exposure appears to be higher among strong partisans, for example, those who strongly identify with the Republican Party (Mutz, 2006). Nevertheless, there is little research suggesting that people avoid political diversity on the Internet. Indeed, studies show that politically diverse arenas do exist online (e.g. Stromer-Galley, 2002; Stromer-Galley and Muhlberger, 2009), and people who consume online news and participate in online discussions are more likely to exhibit what is called network heterogeneity, although the effects are small (Brundidge, 2010). Moreover, ideological segregation on the Internet is low in absolute terms; there are few online news consumers with homogeneous news diets (Gentzkow and Shapiro, 2011: 1799), at least in the US context.

These studies show that the Internet not only provides people with the opportunity to discuss issues with like-minded users but also offers increased possibilities of doing so with people who hold considerably different points of view. The Habermasian perspective on such debates is that debaters will change their minds according to the quality of arguments presented in the debate. However, the vast literature on motivated reasoning has shown that we should not expect people to approach political arguments neutrally (Taber et al., 2009: 137). Prior attitudes strongly bias how people process arguments, and this bias is reinforced not only through selective exposure but also through selective judgment processes (e.g. Lebo and Cassino, 2007; Lodge and Taber, 2000; Taber et al., 2009; Taber and Lodge, 2006). For example, studies show that evaluations of arguments on issues like capital punishment (Edwards and Smith, 1996) and gun control (Taber and Lodge, 2006) are biased by people’s prior opinions on the issues. This is referred to as ‘disconfirmation bias’. People spend time and cognitive resources degrading and counterarguing attitudinally contrasting arguments (Taber et al., 2009). Hence, when people are presented with arguments opposing their initial beliefs in online debates, these arguments may lead to a stronger belief in the opinion they already hold.

Although confirmation bias is a well-established finding, Taber et al. (2009: 139) argue that our understanding of disconfirmation bias is still somewhat limited. In this article, we contribute to this developing literature by focusing on online debates. We argue that the architecture of the Internet creates a particularly good environment for reinforcement through contradiction. The spiral of silence theory put forward by Noelle-Neumann (1974, 1984) maintains that people who perceive their opinions to be in the minority do not speak up because they fear social isolation. The theory is based on the assumption that mass media have a narrow agenda that excludes rival viewpoints, which may therefore create the impression of homogeneity in opinions. Several scholars have criticized the spiral of silence theory for not taking small-group interaction into account (e.g. Salmon and Kline, 1985), and the introduction of the Internet has arguably increased opportunities for small-group interaction – and this is of course the basis for the echo chamber thesis. This also means that social isolation is less of a threat. Hence, the Internet has made it possible for users to engage in debate with people who have different views and to simultaneously belong to an online environment that supports their values and opinions.4 The result of this situation can be trench warfare dynamics: people will interact and engage in debate with others who hold opposing political views, but this will only serve to strengthen their initial beliefs. Hence, the notion of trench warfare does not refute echo chamber dynamics, but differs from the concept of echo chambers in highlighting the interaction between individuals who hold different basic values and opinions.

Research questions and expectations

As noted in the introduction, in this article we investigate two interrelated research questions. First, to what extent are online debates characterized by echo chambers? Do people discuss only with like-minded others online? Based on the echo chamber thesis, we should expect people to discuss mostly with like-minded others. However, much of the empirical research reviewed above suggests that people might also discuss with those who hold opposing views.

Second, we study the effects of echo chamber and trench warfare dynamics in online debates. More specifically, we ask: what is the effect of confirming, contradicting, and balanced two-sided arguments in online debates? Based on the echo chamber perspective and studies of confirmation bias and attitude congruency bias, we can expect that people who are exposed to one-sided confirming arguments will have their beliefs reinforced. Based on studies of disconfirmation bias, we suggest that one-sided contradicting arguments will reinforce existing opinions as well. Hence, our first hypothesis (Hypothesis 1) is that exposure to both one-sided confirming and one-sided contradicting arguments in online debates will reinforce existing views and beliefs.

However, although one-sided strong arguments might lead to reinforcement, the effects of two-sided arguments on reinforcement are less clear. Taber et al. (2009) find that two-sided arguments have the same effects as one-sided arguments. However, the two-sided arguments they use are not neutral or balanced: they strongly favour one position but mention a moderating element. We are therefore interested in what happens to debaters who encounter neutral two-sided arguments. Such debaters are neither simply confirmed nor simply contradicted; they meet an argument that partly agrees with them but also says that this is not the whole picture. This might lead to modification, or at least to less reinforcement than one-sided arguments produce. Our second hypothesis (Hypothesis 2) is therefore that exposure to neutral two-sided arguments, that is, arguments that present two sides of an issue without reaching a conclusion, will reinforce existing views and beliefs to a lesser extent than one-sided arguments.

Taber et al.’s (2009) findings suggest that motivated biases vary with the strength of prior attitudes: if a belief is strong, the motivation to defend it is also strong. Hence, our third hypothesis (Hypothesis 3) is that people at the extremes will be more affected by one-sided confirming and contradicting arguments than people with moderate opinions.

Case, data, measures, and experimental design

We investigate our research questions using data from Norway. In Norway, Internet access and use are comparatively very high, and Facebook in particular is widely used (Enjolras et al., 2013). According to Taylor Nelson Sofres (TNS) Gallup, 31% of the population in Norway was on Facebook at least once a week in 2008, while in 2013, the proportion who used Facebook daily was 67% (88% for people under 30).5 Most voters use Facebook, and about 20% of the population used Twitter in 2012 (Enjolras et al., 2013). Our data come from a web survey carried out in 2014 comprising 5677 respondents representative of the Internet population. The sample was drawn from TNS Gallup’s web panel of 62,000 individuals. In addition to the questions analysed here, the survey included a broad range of questions on social media usage, civic engagement, and the public sphere.

Measuring the nature of online debates

To explore whether ‘echo chamber’ is a fitting characterization of online debates, we use the following survey questions: ‘How often do you discuss politics with … people who have the same basic values as yourself?’ and ‘… people who have very different basic values from your own?’ (very often, often, sometimes, never). In addition, we use the question ‘When you debate online, how often do you experience … being contradicted by someone in complete disagreement?’ (often, seldom, never). This question was posed only to those participating in online debates, either on social media or on other Internet sites such as online newspapers. The respondents were also asked how often debate participation led them to ‘become more confident of your own opinion after a debate’, ‘learn something you did not previously know’, or ‘change your opinion after a debate’.

Experimental design

The experimental design draws on earlier experiments related to motivated processing (e.g. Taber et al., 2009), but the approach is adapted to the online situation and the nature of online debates, as well as the possibilities and limits set by the survey experimental method (Mutz, 2011).

The first group of respondents was exposed to an argument strongly in favour of expanding gender equality. The second group was exposed to an argument strongly stating that gender equality had gone too far, and the third group was exposed to a mixed argument (see Table 1).

Table 1.

Stimuli: two one-sided arguments and one neutral two-sided argument on gender equality.

| Argument | Text |
|---|---|
| More gender equality | We are still far from gender equality in Norway today. Men dominate corporate positions of power, there are still big differences in pay between men and women, women take the main responsibility in the family, and women have poorer work opportunities than men. We must continue to strive for more gender equality. |
| Gender equality gone too far | Gender equality has gone too far in Norway. There are, after all, natural differences between what men and women are suited for and interested in. Gender quotas also lead to many talented and hardworking men being bypassed in the name of gender equality. |
| Neutral two-sided argument | We do not have gender equality in Norway, but in some areas, gender equality has gone too far. To some extent, there are differences between what men and women are suited for and interested in. And affirmative action comes at the expense of many talented men. Meanwhile, it is increasingly men who earn the most, and it is men who dominate business. In some areas, we need more equality; in other areas, there is more than enough. |

These arguments can be characterized as long arguments (Taber et al., 2009: 143). In the survey, the arguments were presented in the style of a typical online debate; we used the style of the Norwegian newspaper Dagbladet (see Figure 1).6

Figure 1.

Stimuli were presented in the format of an online debate.

As a follow-up to the stimuli, respondents were asked to what extent they agreed with the statement and whether they thought it was a good argument (regardless of whether they agreed). In addition, they were asked whether they had learned something from the argument.

Dependent variables

We investigate the effects of the treatment through respondents’ self-reported perceptions of the arguments as well as attitude change. The first dependent variable was a follow-up question asked after reading the argument: ‘Does this argument influence your opinion on gender equality?’ (Yes, I have become more certain of my initial opinion; Yes, I have become less certain of my initial opinion; No, I am not influenced in any direction).

The second dependent variable is attitude change. A general question on gender equality was asked early in the survey: ‘On a scale from 0 to 10, where 0 indicates that gender equality should continue and 10 indicates that gender equality has gone too far, where would you place yourself?’ Several questions on the use of Facebook and Twitter were asked between the general gender equality question and the experiment. Immediately after the experiment and the follow-up questions (see above), we repeated the general gender equality question.

To analyse attitude change, we identify two main groups: reinforced and modified. The reinforced group comprises respondents who are either stable or have moved in a polarized direction, that is, toward the extremes on the scale. The modified group comprises respondents who had their opinion modified, that is, moved toward the centre of the scale (including the centre), as well as respondents who ‘changed sides’, that is, moved from one side of the centre to the other side.
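The coding of attitude change described above can be made explicit in a few lines. The following Python sketch is our own illustration, not the authors’ code; it assumes integer scores on the 0–10 scale, treats 5 as the centre, and uses hypothetical function names. Respondents whose first answer was 5 are excluded, since they are analysed separately.

```python
CENTRE = 5  # midpoint of the 0-10 gender equality scale (assumption: integer scores)


def side(score: int) -> int:
    """Return -1 for 0-4, 0 for the centre (5), and +1 for 6-10."""
    return (score > CENTRE) - (score < CENTRE)


def classify_change(pre: int, post: int) -> str:
    """Label a pre/post pair as 'stable', 'polarized', 'modified' or 'changing sides'."""
    if pre == CENTRE:
        raise ValueError("respondents starting at the centre are analysed separately")
    if post == pre:
        return "stable"
    if side(post) == -side(pre):
        return "changing sides"  # crossed from one side of the centre to the other
    if abs(post - CENTRE) > abs(pre - CENTRE):
        return "polarized"  # moved further toward the end of the scale
    return "modified"  # moved toward the centre, including onto 5 itself


def outcome(pre: int, post: int) -> str:
    """Collapse the four labels into the two main groups: reinforced or modified."""
    label = classify_change(pre, post)
    return "reinforced" if label in ("stable", "polarized") else "modified"


print(classify_change(3, 5), outcome(3, 1))  # modified reinforced
```

Checking for a change of side before checking distance matters: a move from 4 to 6 keeps the same distance from the centre but is coded as changing sides, not as stable or polarized.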

We will analyse the effect of the stimuli by combining information about which argument respondents were exposed to with information on attitudes toward gender equality. Based on the stimuli and the general question about gender equality, we identify three main experimental groups:

Confirmed: 0–4 on the scale and exposed to the ‘more gender equality’ argument, or 6–10 on the scale and exposed to the ‘gender equality gone too far’ argument;

Contradicted: 0–4 on the scale and exposed to the ‘gender equality gone too far’ argument, or 6–10 on the scale and exposed to the ‘more gender equality’ argument;

Mixed: exposed to the two-sided neutral argument.

Respondents who placed themselves at the centre of the first question on gender equality cannot be coded as receiving a confirming or contradicting argument, and we treat them independently of the three main groups.
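The assignment to experimental groups follows mechanically from the initial score and the stimulus received. A minimal sketch, assuming the stimulus labels below (hypothetical; the survey’s internal codes are not reported). The centre case is checked first, so that centre respondents exposed to the mixed argument are also kept apart, as in Table 5.

```python
# Hypothetical labels for the three stimuli in Table 1.
MORE = "more gender equality"
TOO_FAR = "gender equality gone too far"
MIXED = "neutral two-sided"

CENTRE = 5  # midpoint of the 0-10 scale


def experimental_group(pre: int, stimulus: str) -> str:
    """Assign a respondent to 'confirmed', 'contradicted', 'mixed' or 'centre'.

    pre: answer to the general gender equality question before the experiment
    (0 = gender equality should continue, 10 = gender equality has gone too far).
    """
    if not 0 <= pre <= 10:
        raise ValueError("pre-treatment score must be on the 0-10 scale")
    if stimulus not in (MORE, TOO_FAR, MIXED):
        raise ValueError(f"unknown stimulus: {stimulus!r}")
    if pre == CENTRE:
        return "centre"  # treated separately from the three main groups
    if stimulus == MIXED:
        return "mixed"
    # 0-4 leans toward more gender equality; 6-10 toward 'gone too far'.
    agrees = (pre < CENTRE and stimulus == MORE) or (pre > CENTRE and stimulus == TOO_FAR)
    return "confirmed" if agrees else "contradicted"


print(experimental_group(3, TOO_FAR))  # contradicted
```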

Another possibility would be to use the extent to which respondents agree with the statement in combination with the stimuli, as has been done in earlier studies (e.g. Taber et al., 2009). However, our approach enables us to compare the effects of one-sided confirming and contradicting arguments with the effects of two-sided neutral arguments.

A challenge in formulating the general gender equality question is that people might believe that gender equality has gone too far for different reasons. First, some may believe that gender equality has gone so far that women are no longer allowed to be women in the traditional sense (i.e. they are not ‘allowed’ to be stay-at-home mums, etc.). Second, some may believe that gender equality has come at the expense of men’s opportunities. Hence, we tried to include both aspects in the stimuli. Still, some individuals who oppose further gender equality because they support the traditional role of women might be put off by arguments about men as victims, and vice versa.

Empirical analysis

The empirical analysis is divided into two main parts, which correspond to the two research questions formulated initially. In the first part, we investigate the nature of online debates. In the second, we study echo chamber and trench warfare effects.

The nature of online debates: Results

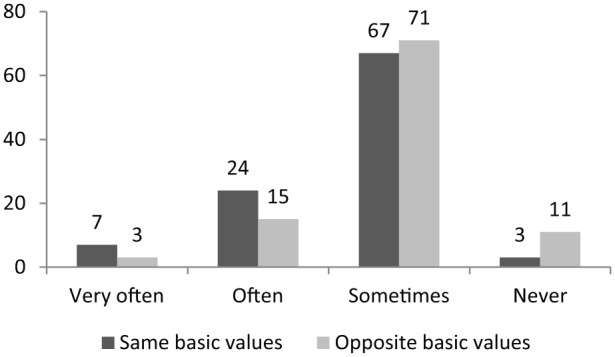

The idea of separation is at the core of the echo chamber metaphor: people are not exposed to opposing arguments and opinions. However, our data show that although people tend to debate with those who have the same basic values as themselves more often than with individuals who have very different values, the differences are not great. Since we are only interested in those who debate online, respondents who answered ‘never’ to both questions were excluded from the analysis presented in Figure 2. The data also show that those who often discuss with like-minded people also tend to discuss with those who have different basic values (correlation = .4). In all, 53% of those who discussed very often with like-minded people said that they very often or often discussed with people who have opposing values as well.

Figure 2.

The nature of online debates I: Discussing with people who have the same basic values, and people who have the opposite basic values, 2014.

Respondents who answered ‘never’ to both questions were excluded from the analysis. As a result, approximately 40% of the sample, constituting the online debaters, are included in the analysis (N = 2,215).

Consistent with this finding, online debaters also report being challenged and exposed to contradictory points of view quite frequently (Table 2).

Table 2.

The nature of online debates II: Contradiction, opinion reinforcement, opinion change, and learning, 2014.

| | Often (%) | Seldom (%) | Never (%) | N |
|---|---|---|---|---|
| Contradicted by someone in complete disagreement | 31 | 45 | 24 | 2,190 |
| More confident of own opinion | 45 | 34 | 21 | 2,186 |
| Change opinion | 6 | 63 | 30 | 2,183 |
| Learn something | 30 | 49 | 21 | 2,187 |

Only those who engage in online debates are included in the table. Weights used.

About one-third (31%) of those who debate politics or societal issues online report that they are often contradicted by someone who is in complete disagreement with them, while 24% say they are never contradicted in online debates. Moreover, 30% of online debaters report often learning something new, while 21% say this never happens.

Still, although a relatively large proportion of debaters are contradicted by someone in complete disagreement, the data indicate that debates frequently serve to reinforce opinions rather than change them. Almost half (45%) of the respondents taking part in online debates say that they often become more confident of their own opinions after a debate. Only a small proportion (6%) say that debates often cause them to change their minds, whereas almost one in three (30%) say that this never happens. Table 3 shows the relationship between being contradicted in online debates and attitude reinforcement. The results indicate a link between being contradicted and the strengthening of opinions.

Table 3.

Signs of trench warfare. The proportions of debaters who are often contradicted, who become more confident of their own opinion, learn something new, and change their opinion after a debate, 2014.

| Contradicted by someone in complete disagreement: | Often (%) | Seldom (%) | Never (%) |
|---|---|---|---|
| Often more confident of own opinion | 71 | 46 | 10 |
| Often change opinion | 9 | 6 | 1 |
| Often learn something | 44 | 33 | 5 |
| N (min.) | 683 | 983 | 509 |

The differences between the groups are statistically significant (p < .01).
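The ‘often’ shares in Table 2 should be roughly recoverable from Table 3 by pooling the three contradiction groups. The Python sketch below does this arithmetic, weighting each column by its N; because those Ns are minima and the survey weights are not applied, it is only an approximate illustration, and the function name is our own.

```python
# Column Ns are the 'N (min.)' row of Table 3, so the pooled shares are approximate.
n_by_contradiction = {"often": 683, "seldom": 983, "never": 509}

# Percentages answering 'often' for each outcome, by how often respondents are contradicted.
table3 = {
    "more confident": {"often": 71, "seldom": 46, "never": 10},
    "change opinion": {"often": 9, "seldom": 6, "never": 1},
    "learn something": {"often": 44, "seldom": 33, "never": 5},
}


def pooled_share(row: dict[str, float], ns: dict[str, int]) -> float:
    """N-weighted average of the column percentages in one row."""
    total = sum(ns.values())
    return sum(row[k] * ns[k] for k in ns) / total


for outcome, row in table3.items():
    print(f"{outcome}: {pooled_share(row, n_by_contradiction):.1f}%")
# more confident: 45.4%
# change opinion: 5.8%
# learn something: 29.9%
```

These pooled values lie close to the 45%, 6%, and 30% reported for the full group of online debaters in Table 2.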

As many as 71% of those who are ‘often’ contradicted say they often become more certain of their own opinions after a debate. By contrast, only 10% of debaters who are never contradicted say they often become more convinced after a debate. Rather than functioning as echo chambers, where one’s conviction becomes stronger in the absence of opposing views, online debates appear to give many people an opportunity to hone their arguments against other debaters. However, through this process of being contradicted, they may end up with a stronger belief in their own opinion. In the next section, we investigate such effects using the experiment described above.

Effects: Confirmation and disconfirmation biases

The section above indicates that people do discuss with those who have different core values, and they are exposed to arguments that contradict their own beliefs and opinions. Thus far, we have therefore not been able to identify a tendency toward echo chambers, in the sense of a separated sphere of public debate. However, as we have stated in our hypotheses, we expect that polarization of views may result not only from confirmation bias but also from disconfirmation bias. Hence, in this section, we study the effects of confirmation bias and disconfirmation bias in online debates. More specifically, we test whether confirming, contradicting and two-sided neutral arguments have effects on attitude reinforcement.

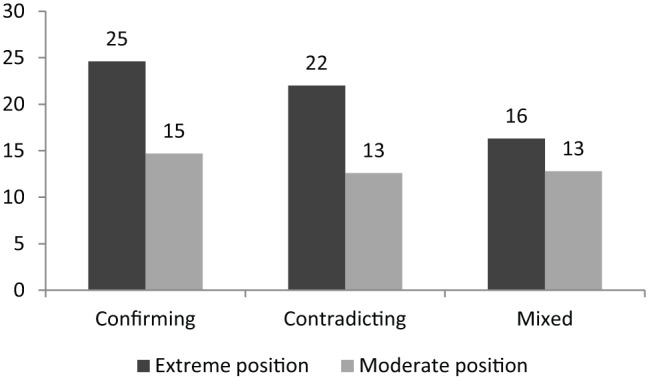

We use two different types of dependent variables: self-reported reinforcement and attitude change (see above for details). We first concentrate on self-reported reinforcement. Figure 3 shows the proportion who said that they became more confident of their own opinion after reading the argument. The effects are analysed by the strength of respondents’ initial position on gender equality, with the two poles of the scale collapsed into ‘extreme’ and ‘moderate’ positions.7

Figure 3.

Confirmation and disconfirmation biases in online debates I. Proportion more convinced of initial opinion after being exposed to confirming, contradicting, and neutral two-sided arguments.

Q: Does this argument influence your opinion on gender equality?

‘Extreme’ = 0, 1, 9, and 10. ‘Moderate’ = 2, 3, 4, 6, 7, and 8.
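The ‘extreme’ and ‘moderate’ categories correspond to folding the 0–10 scale around its midpoint and measuring each score’s distance from 5. A small sketch of that mapping (our illustration; the function name is hypothetical):

```python
def attitude_strength(score: int) -> str:
    """Map a 0-10 score to 'extreme', 'moderate' or 'centre' by folding the scale at 5."""
    if not 0 <= score <= 10:
        raise ValueError("score must be on the 0-10 scale")
    distance = abs(score - 5)  # 0 at the centre, 5 at either end
    if distance == 0:
        return "centre"
    return "extreme" if distance >= 4 else "moderate"


print([attitude_strength(s) for s in range(11)])
```

Folding treats a 1 and a 9 identically, which is what allows confirmation and disconfirmation effects to be compared across both sides of the gender equality debate.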

The results (Figure 3) support the hypothesis that both confirmation bias and disconfirmation bias affect attitude reinforcement through online debates (Hypothesis 1). The results also support the expectation that neutral two-sided arguments have weaker effects on reinforcement than one-sided arguments (Hypothesis 2). However, this difference in effect is contingent on attitude strength. People at the extremes are more affected by confirming and contradicting arguments than by neutral two-sided arguments. We comment on these results in more detail below.

Confirming and contradicting arguments have similar effects on self-reported opinion reinforcement, whereas the weaker effect of the mixed argument is found only in the ‘extreme’ group. Among respondents with extreme positions, 25% report that they became more confident of their initial opinion after being exposed to the confirming argument, as do 22% of those exposed to the contradicting argument. Respondents with extreme attitudes who were exposed to the neutral mixed argument were significantly less likely to become more convinced of their initial position (p < .01). This difference is not found among respondents with moderate attitudes. The different effects of contradicting arguments on the one hand and the two-sided neutral argument on the other also indicate that contradicting arguments contribute to reinforcing beliefs.

As expected, attitude strength seems to be an important moderator for confirmation and disconfirmation biases. We expected reinforcement to be greater for the individuals with the most polarized beliefs (Hypothesis 3), and the results clearly support this expectation. The effect of confirming and contradicting arguments is strongest in the group with the strongest attitudes (extreme), and the difference is significant (p < .01). This difference in effect between moderates and extremes is not significant for the experiment group exposed to the neutral mixed argument.

The results from Figure 3 are also reflected in the logistic regression presented in Table 4. The difference between the contradicted and mixed groups is not significant in the full sample, but it is significant when only respondents holding an extreme position are included.

Table 4.

Test of confirmation and disconfirmation biases in online debates. Effect of confirmed and contradicted compared to mixed argument. Logistic regression: b coefficients and standard error (SE).

| | All respondents: b | All respondents: SE | Extreme position: b | Extreme position: SE |
|---|---|---|---|---|
| Constant | −1.822 | .069 | −1.635 | .115 |
| Mixed (ref.) | | | | |
| Confirmed | .311** | .095 | .513** | .151 |
| Contradicted | .138 | .097 | .369* | .156 |

Dependent variable: 1 = more certain of initial opinion.

The reference category is the group that was exposed to the mixed argument.

‘Extreme’ = 0, 1, 9, and 10. ‘Moderate’ = 2, 3, 4, 6, 7, and 8.

** p < .01; * p < .05.
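Because Table 4 reports logit coefficients, the proportions behind Figure 3 can be recovered by applying the inverse logit to the constant plus each group’s coefficient, and the significance stars follow from Wald z-statistics (b/SE). The sketch below is our own illustration using only the Python standard library, applied to the extreme-position model; under these assumptions it gives predicted proportions of about 16% (mixed), 25% (confirmed), and 22% (contradicted), in line with the percentages reported above.

```python
import math


def inv_logit(x: float) -> float:
    """Convert a log-odds value into a probability."""
    return 1.0 / (1.0 + math.exp(-x))


def wald_p(b: float, se: float) -> float:
    """Two-sided p-value for z = b / se under a standard normal distribution."""
    z = abs(b / se)
    return math.erfc(z / math.sqrt(2.0))


# Coefficients and standard errors copied from Table 4 (extreme-position model).
constant = -1.635
coefs = {"confirmed": (0.513, 0.151), "contradicted": (0.369, 0.156)}

print(f"mixed (ref.): p_hat={inv_logit(constant):.3f}")
for name, (b, se) in coefs.items():
    print(f"{name}: p_hat={inv_logit(constant + b):.3f}, z={b / se:.2f}, p={wald_p(b, se):.3f}")
```

Applying the same calculation to the full-sample ‘Contradicted’ row (.138/.097, z of roughly 1.4) shows why that coefficient carries no star.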

The second dependent variable we study is attitude change. We measured general attitudes toward gender equality both before and after exposure to treatment. As described above, we distinguish between two groups: reinforced and modified. The reinforced group comprises those with stable and polarizing attitudes on the scale. The modified group comprises those who moved toward a less polarized position, or changed from one side of the scale to the other (see above for details).

In Table 5, we present the effects of the different stimuli on attitude change. We also include attitude strength in this analysis, distinguishing between respondents with an initial extreme versus moderate position. Note that, given how we coded the material, ‘moderate’ respondents have greater scope than ‘extreme’ respondents both to become polarized and to change sides: those located at the far ends of the scale can move little further toward the extremes and are farther from the opposite side. Hence, differences between moderates and extremes in polarization and side-changing should not be overemphasized.
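The coding of attitude change can be expressed as a small classification rule. The detailed rules are described in an earlier section not reproduced here, so the sketch below rests on stated assumptions: the scale runs from 0 to 10 with 5 as the neutral midpoint; ‘stable’ means an identical score before and after; ‘polarized’ means staying on the same side and moving farther from 5; ‘changing sides’ means crossing the midpoint; and any other move toward 5 (including landing on 5) counts as ‘modified’. The extreme/moderate cut-offs follow the table notes. All names are hypothetical.

```python
from enum import Enum

MIDPOINT = 5  # assumption: neutral midpoint of the 0-10 attitude scale


class Outcome(Enum):
    STABLE = "stable"
    POLARIZED = "polarized"
    MODIFIED = "modified"
    CHANGING_SIDES = "changing sides"


def strength(score: int) -> str:
    """Group an initial position using the cut-offs in the table notes."""
    if score in (0, 1, 9, 10):
        return "extreme"
    if score in (2, 3, 4, 6, 7, 8):
        return "moderate"
    if score == MIDPOINT:
        return "center"
    raise ValueError(f"score out of range 0-10: {score}")


def side(score: int) -> int:
    """-1 below the midpoint, +1 above it, 0 at the midpoint."""
    return (score > MIDPOINT) - (score < MIDPOINT)


def classify(before: int, after: int) -> Outcome:
    """Hypothetical coding of a non-center respondent's attitude change."""
    if side(before) == 0:
        raise ValueError("center respondents are coded separately")
    if after == before:
        return Outcome.STABLE
    if side(after) == -side(before):
        return Outcome.CHANGING_SIDES
    if side(after) == side(before) and abs(after - MIDPOINT) > abs(before - MIDPOINT):
        return Outcome.POLARIZED
    # Remaining case: moved toward the midpoint, including landing exactly on it.
    return Outcome.MODIFIED


def is_reinforced(outcome: Outcome) -> bool:
    """Reinforced = stable or polarized; everything else counts as modified."""
    return outcome in (Outcome.STABLE, Outcome.POLARIZED)
```

Under these rules, a respondent who starts at 10 can never be coded as polarized, which illustrates why differences in polarization between moderates and extremes should be read with caution.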

Table 5.

Confirmation and disconfirmation biases in online debates II. The effect of stimuli on attitude change: proportion of respondents with reinforced (stable and polarized) and modified opinions.

| | | Reinforced | | | Modified | | | |
|---|---|---|---|---|---|---|---|---|
| | | Stable | Polarized | Sum | Modified | Changing sides | Sum | N (100%) |
| ‘Extreme’ | Confirmed | 47.5 | 5.4 | 52.9 | 38.8 | 8.4 | 47.2 | 560 |
| | Contradicted | 47.0 | 5.9 | 52.9 | 36.8 | 10.3 | 47.1 | 526 |
| | Mixed | 42.4 | 3.4 | 45.8 | 43.6 | 10.6 | 54.2 | 559 |
| ‘Moderate’ | Confirmed | 25.5 | 28.0 | 53.5 | 31.7 | 14.8 | 46.5 | 1,072 |
| | Contradicted | 23.8 | 27.5 | 51.3 | 28.7 | 20.1 | 48.8 | 1,082 |
| | Mixed | 23.0 | 23.8 | 46.8 | 35.8 | 17.3 | 53.1 | 1,189 |
| ‘Center’ | More | 33.2 | 66.8 | | n.a. | n.a. | n.a. | 223 |
| | Less | 34.3 | 65.7 | | n.a. | n.a. | n.a. | 248 |
| | Mixed | 37.3 | 62.7 | | n.a. | n.a. | n.a. | 217 |

‘Extreme’ = 0, 1, 9, and 10. ‘Moderate’ = 2, 3, 4, 6, 7, and 8.

Again, the results show indications of both confirmation and disconfirmation biases. Confirming and contradicting arguments have similar effects on reinforcement, whereas the neutral mixed argument has weaker effects. While around 53% of the confirmed and contradicted experiment groups are reinforced, this is true for 46% of the mixed experiment group. Hence, a greater proportion of the group exposed to a two-sided argument had their attitudes modified. The effect is modest but significant (p < .01). Respondents who had their initial opinion confirmed were more likely to reinforce it, while those exposed to a mixed argument were more likely to modify it. The logistic regression presented in Table 6 confirms that, compared with mixed arguments, confirming and contradicting arguments have similar and significant effects on reinforcement.
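The overall reinforcement shares can be approximately reproduced from Table 5 by weighting each group's reinforced share by its N and pooling the ‘extreme’ and ‘moderate’ rows. The sketch below does this arithmetic. It starts from rounded percentages, so the results are approximations, and pooling across the two attitude-strength groups is our assumption about how overall figures of this kind can be obtained. `TABLE5` and `pooled_share` are hypothetical names.

```python
from __future__ import annotations

# Reinforced share (%) and N (100%) per stimulus, transcribed from Table 5.
# Each list holds the 'Extreme' row followed by the 'Moderate' row.
TABLE5 = {
    "Confirmed": [(52.9, 560), (53.5, 1072)],
    "Contradicted": [(52.9, 526), (51.3, 1082)],
    "Mixed": [(45.8, 559), (46.8, 1189)],
}


def pooled_share(rows: list[tuple[float, int]]) -> float:
    """N-weighted share of reinforced respondents across attitude-strength groups."""
    reinforced = sum(pct / 100 * n for pct, n in rows)  # approximate counts
    total = sum(n for _, n in rows)
    return reinforced / total


if __name__ == "__main__":
    for stimulus, rows in TABLE5.items():
        print(f"{stimulus}: {pooled_share(rows):.1%} reinforced")
```

This gives roughly 53.3% for the confirmed group, 51.8% for the contradicted group, and 46.5% for the mixed group.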

Table 6.

Test of the effect of confirmed and contradicting compared to mixed arguments. Logistic regression: b coefficients and standard error (SE).

Dependent variable: 1 = opinion reinforced; 0 = opinion modified.

The reference category is the group that was exposed to the mixed argument.

**p < .01; *p < .05.

Whereas the effect on self-reported reinforcement depended on the extremity of respondents’ initial position (Figure 3), the effect on attitude change does not. It is, however, worth noting that ‘moderate’ respondents who were exposed to contradicting stimuli were more likely to ‘change sides’ (20%) than ‘moderate’ respondents who were exposed to a confirming argument (15%). This difference is significant (p < .01). This indicates that, although contradicting arguments have as strong a reinforcement effect as confirming arguments, they have a stronger modifying effect for people with moderate views.
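The side-changing comparison among ‘moderate’ respondents can be checked approximately with a standard pooled two-proportion z-test, using the percentages and group sizes in Table 5. This is a sketch under assumptions: the counts are reconstructed from rounded percentages, and the authors do not state which test they used (for a 2×2 table, the square of this z statistic equals the Pearson chi-square statistic without continuity correction). The function name is hypothetical.

```python
from __future__ import annotations

import math


def two_proportion_z_test(p1: float, n1: int, p2: float, n2: int) -> tuple[float, float]:
    """Pooled two-proportion z-test; returns (z, two-sided p-value)."""
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)  # common proportion under H0
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # 'Moderate' respondents changing sides (Table 5): contradicted vs confirmed.
    z, p = two_proportion_z_test(0.201, 1082, 0.148, 1072)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

With these inputs the test gives z of about 3.2 and a two-sided p of about .001, consistent with the reported p < .01.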

Conclusion

In the digital age, the ability to eschew certain information and maintain contact with primarily like-minded people has increased considerably. Hence, online debates have often been referred to as echo chambers, in which the participants are like-minded people who affirm each other’s opinions and encounter little or no opposition.

In this article, we have shown that this is not an accurate description of online debate. Most online debaters say they discuss with people who have different basic values from themselves almost as often as they discuss with people with the same basic values. In addition, they report that they encounter resistance and learn something new when they engage in debates. However, this does not mean that they change their opinions. Debaters who say they are often contradicted also claim to emerge from online debates stronger in their beliefs.

These findings are supported by the results from the survey experiment. We find clear indications of both confirmation bias and disconfirmation bias, which means that discussing with both opponents and supporters may lead to a reinforcement of the original opinion. Confirming and contradicting arguments affect attitude reinforcement in similar ways, on both the self-reported reinforcement measure and the attitude change measure used in the study. One-sided confirming and contradicting arguments had stronger effects on reinforcement than two-sided neutral arguments. Attitude strength also matters: effects are stronger for individuals with strong attitudes than for those with moderate attitudes, although this interaction effect is most consistent in the analysis based on self-reported reinforcement.

Together, our results indicate that if a single metaphor is to be applied to online debating, trench warfare is a more fitting description than echo chambers. People are frequently met with opposing arguments, but the result is reinforcement of their original opinions and beliefs. However, the logic of confirmation bias, which is central to the echo chamber thesis, is also central in the notion of trench warfare. The Internet provides the opportunity to interact with like-minded people and those with opposite views at the same time. Interaction with like-minded people enables debaters to stay strong in their encounter with opposing arguments. With the Internet, they do not have to fear the social isolation emphasized in the spiral of silence theory (Noelle-Neumann, 1974, 1984). Subgroups with beliefs that conflict with mainstream opinions are more visible on the Internet, and it is therefore easier for people who share their convictions to find and link up with them.

If the normal situation in online debate is that one is exposed to both echo chamber and trench warfare effects, two sets of reinforcement mechanisms may be operating at the same time, which could lead to polarization. The echo chamber argument states that a lack of external confrontation will lead to internal closure and increasing polarization of political opinions. The trench warfare argument that we have developed in this article posits the opposite dynamic, but with a similar result: confrontation strengthens belief in one’s own opinions and therefore leads to polarization.

However, our results also indicate that there are limits to online trench warfare. A majority reported that they did not change their opinion in any direction, and the analysis of attitude change also found that modification was common. And although short-term effects show reinforcement, the long-term effects of being confronted with opposing views might nevertheless be learning (cf. Dewey, 1991 [1927]) and even modification. Although very few respondents reported being less certain of their own opinion after being confronted with opposing arguments, the results on attitude change indicate that two-sided mixed arguments and, to a lesser extent, contradicting arguments have stronger modifying effects than confirming arguments.

Our analysis of the nature of online debates is limited in that a broad population survey captures marginal phenomena only to a limited extent. We must assume that the dynamics of the most extreme groups are not captured by this study. The strength of the analysis, however, is that it offers an overall picture of online debate based on representative survey data. The survey method also has limitations related to how respondents perceive question alternatives, and respondents’ recollection of what they do can differ from what they actually do. Moreover, trench warfare dynamics might be contingent on the context of the debate, with regard to both topic and platform. We chose a heavily debated topic with familiar arguments; less salient topics might yield different results. Trench warfare dynamics most likely also differ between debates initiated by a public broadcaster and debates on social network sites. Hence, we emphasize the need to investigate different aspects of trench warfare dynamics using a wide range of data and methods.

What of a public sphere that is dominated by trench warfare? Is it in any way ‘better’ than one dominated by echo chambers? The answer to this question will depend on which normative perspective one adopts toward the public sphere. In the Habermasian vision, where consensus is the goal, trench warfare is by definition an impediment to the healthy functioning of public debate. Dewey (1991 [1927]), on the other hand, would claim that the public sphere is an arena for collective learning and for the qualification of arguments. Hence, whether or not people change their opinions after a debate is not the main criterion for evaluation. From this perspective, trench warfare presents less of a problem than echo chambers. In order to identify and solve the problems of modern democracies, it is necessary that different opinions are expressed and exchanged, despite continuing differences in points of view.

Acknowledgments

Previous versions of the article were presented at the conference Democracy as Idea and Practice in Oslo, the 1st Gothenburg-Barcelona Workshop on Experimental Political Science in Gothenburg, and at a workshop at the Department of Media and Communication, University of Oslo. We thank all participants, as well as two anonymous reviewers, for helpful comments and suggestions.

The potential of new media technology to fragment political communication was already discussed in the pioneering work of Pool (1990). He claimed that the mass media revolution would be reversed, but offered no imagined futures, just ways to think about them (Pool, 1990: 8).

The reinforcement thesis claims that those who are uninterested in politics are less exposed to information about politics in all circumstances. See Arceneaux et al. (2013) for an interesting perspective on this difference.

In his influential book Republic.com, Sunstein (2001) described this development as cyberbalkanization. The term was first used by Van Alstyne and Brynjolfsson (1996), who claimed that new opportunities to socialize with like-minded people on the Internet have more likely caused us to move toward a dystopian cyber-Balkans than toward a utopian ‘global village’. The term was later taken up by, among others, Putnam (2000).

See Zerback and Fawzi (2016) for a spiral of silence perspective on online debates.

In all, 20% of the respondents were, however, exposed to the arguments in pure text format.

‘Extreme’ = 0, 1, 9, and 10. ‘Moderate’ = 2, 3, 4, 6, 7, and 8.

Footnotes

Declaration of conflicting interests: The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The author(s) disclosed receipt of the following financial support for the research, authorship and/or publication of this article: The data collection was financed by the Centre for Research on Civil Society and Voluntary Sector, Oslo, Norway.

References

- Arceneaux K, Johnson M, Cryderman J. (2013) Communication, persuasion, and the conditioning value of selective exposure: Like minds may unite and divide but they mostly tune out. Political Communication 30(2): 213–231.

- Brundidge J. (2010) Encountering ‘difference’ in the contemporary public sphere: The contribution of the Internet to the heterogeneity of political discussion networks. Journal of Communication 60: 680–700.

- Bücher T. (2012) Want to be on the top? Algorithmic power and the threat of invisibility on Facebook. New Media & Society 14(7): 1164–1180.

- Dahlberg L. (2007) Rethinking the fragmentation of the cyberpublic: From consensus to contestation. New Media & Society 9(5): 827–847.

- Dahlgren P. (2005) The Internet, public spheres, and political communication: Dispersion and deliberation. Political Communication 22(2): 147–162.

- Dewey J. (1991 [1927]) The Public and its Problems. New York: H. Holt.

- Edwards K, Smith EE. (1996) A disconfirmation bias in the evaluation of arguments. Journal of Personality and Social Psychology 71: 5–24.

- Enjolras B, Karlsen R, Steen-Johnsen K, Wollebæk D. (2013) Liker, liker ikke. Sosiale medier, samfunnsengasjement og offentlighet. Oslo: Cappelen Damm.

- Festinger L. (1957) A Theory of Cognitive Dissonance. Evanston, IL: Row, Peterson & Co.

- Freelon DG. (2010) Analyzing online political discussion using three models of democratic communication. New Media & Society 12(7): 1172–1190.

- Garrett RK. (2009) Echo chambers online? Politically motivated selective exposure among Internet news users. Journal of Computer-Mediated Communication 14: 265–285.

- Gentzkow M, Shapiro JM. (2011) Ideological segregation online and offline. Quarterly Journal of Economics 126: 1799–1839.

- Hart W, Albarracín D, Eagly AH, et al. (2009) Feeling validated versus being correct: A meta-analysis of selective exposure to information. Psychological Bulletin 135(4): 555–588.

- Hirzalla F, van Zoonen L, de Ridder J. (2011) Internet use and political participation: Reflections on the mobilization/normalization controversy. Information Society 27(1): 1–15.

- Iyengar S, Hahn KS. (2009) Red media, blue media: Evidence of ideological selectivity in media use. Journal of Communication 59: 19–39.

- Jamieson KH, Cappella JN. (2008) Echo Chamber. Oxford: Oxford University Press.

- Jenkins H. (2006) Convergence Culture: Where Old and New Media Collide. New York: New York University Press.

- Lebo MJ, Cassino D. (2007) The aggregated consequences of motivated reasoning and the dynamics of partisan presidential approval. Political Psychology 28(6): 719–746.

- Lodge M, Taber CS. (2000) Three steps toward a theory of motivated political reasoning. In: Lupia A, McCubbins M, Popkin S. (eds) Elements of Reason: Cognition, Choice, and the Bounds of Rationality. New York: Cambridge University Press, pp. 183–213.

- Mutz DC. (2006) Hearing the Other Side: Deliberative Versus Participatory Democracy. New York: Cambridge University Press.

- Mutz DC. (2011) Population-Based Survey Experiments. Princeton, NJ: Princeton University Press.

- Noelle-Neumann E. (1974) The spiral of silence: A theory of public opinion. Journal of Communication 24: 43–51.

- Noelle-Neumann E. (1984) The Spiral of Silence: Public Opinion – Our Social Skin. Chicago, IL: University of Chicago Press.

- Pool IS. (1990) Technologies without Boundaries. Cambridge, MA: Harvard University Press.

- Prior M. (2007) Post-Broadcast Democracy: How Media Choice Increases Inequality in Political Involvement and Polarizes Elections. New York: Cambridge University Press.

- Putnam R. (2000) Bowling Alone. The Collapse and Revival of American Community. New York: Simon & Schuster.

- Salmon CT, Kline FG. (1985) The spiral of silence ten years later: An examination and evaluation. In: Sanders KR, Kaid LL, Nimmo D. (eds) Political Communication Yearbook 1984. Carbondale, IL: Southern Illinois University Press, pp. 3–30.

- Stromer-Galley J. (2002) New voices in the public sphere: A comparative analysis of interpersonal and online political talk. Javnost: The Public 9: 23–42.

- Stromer-Galley J, Muhlberger P. (2009) Agreement and disagreement in group deliberation: Effects on deliberation satisfaction, future engagement, and decision legitimacy. Political Communication 26(2): 173–192.

- Sunstein C. (2001) Republic.com. Princeton, NJ; Oxford: Princeton University Press.

- Sunstein C. (2007) Republic.com 2.0. Princeton, NJ; Oxford: Princeton University Press.

- Taber CS, Lodge M. (2006) Motivated skepticism in the evaluation of political beliefs. American Journal of Political Science 50(3): 755–769.

- Taber CS, Cann D, Kucsova S. (2009) The motivated processing of political arguments. Political Behavior 32: 137–155.

- Van Alstyne M, Brynjolfsson E. (1996) Electronic communities: Global village or cyberbalkans? In: Proceedings of the 17th international conference on information systems, Cleveland, OH, 15 December.

- Van Dijk JAGM. (2006) The Network Society: Social Aspects of New Media, 2nd edn. London: SAGE.

- Zerback T, Fawzi N. (2016) Can online exemplars trigger a spiral of silence? Examining the effects of exemplar opinions on perceptions of public opinion and speaking out. New Media & Society. Published online before print. DOI: 10.1177/1461444815625942