Abstract

BACKGROUND

Computerized surveys present many advantages over paper surveys. However, school-based adolescent research questionnaires still mainly rely on pen-and-paper surveys as access to computers in schools is often not practical. Tablet-assisted self-interviews (TASIs) present a possible solution, but their use is largely untested. This paper presents a method for, and our experiences with, implementing a TASI in a school setting.

METHODS

A TASI was administered to 3907 middle and high school students from 79 schools. The survey assessed use of tobacco products and exposure to tobacco marketing. To assess in-depth tobacco use behaviors, the TASI employed extensive skip patterns to reduce the number of not-applicable questions that non-tobacco users received. Pictures were added to help respondents identify the tobacco products they were being queried about.

RESULTS

Students were receptive to the tablets and required no instructions in their use. None of the tablets were lost, stolen, or broken. Item non-response (unanswered questions) was a pre-administration concern; however, 92% of participants answered 96% or more of the questions.

CONCLUSIONS

This method was feasible and successful among a diverse population of students and schools. It generated a unique dataset of in-depth tobacco use behaviors that would not have been possible through a pencil-and-paper survey.

Keywords: Survey Administration, Tobacco Use, Adolescent Health, School-based research

School-based, self-administered surveys with youth have traditionally been conducted with paper-and-pencil instruments (PAPI).1-4 Computer-assisted self-interviews (CASIs) are becoming a commonly used mode of questionnaire administration and provide many advantages over PAPI, including the ability to use skip patterns, insert photos, and eliminate data entry. Some researchers have tested or utilized CASIs in schools,2,5-8 but their use is not widespread because it relies on a school’s computer lab, which is often not accessible to researchers or has unreliable equipment.2 Computerized tablets may provide a way to administer a survey with the benefits of a CASI, but they have been underutilized in large-scale school-based studies and their feasibility remains unknown. Additionally, tablets may provide students with more privacy when completing surveys in a classroom setting and yield data that are less influenced by social desirability bias.6,9 In this paper, we aim to fill this gap.

The Texas Adolescent Tobacco Marketing and Surveillance Study (TATAMS) is a rapid response surveillance system designed to track tobacco and electronic cigarette use behaviors in a cohort of 3907 6th, 8th, and 10th grade students in the 5 counties that surround the 4 largest cities in Texas: Austin, Houston, San Antonio, and Dallas/Ft. Worth. The study focuses on cigarettes, little filtered cigars, cigars and cigarillos, smokeless tobacco, hookah, and electronic cigarettes. The survey was designed to capture in-depth use of these products individually, including ever use, past 30-day use, frequency of use, brand preference (excluding hookah), flavor preference, age of initiation, plans to quit, and dependence (excluding hookah). The survey also assessed cognitive and affective factors associated with these behaviors, and student exposure to tobacco marketing in a variety of venues. The final survey was 80 pages long with 407 questions, which would hardly be feasible in paper format. Since the prevalence of past 30-day use of these products in this age group ranges from 16% for electronic cigarettes among high school students to 1.6% for cigar products among middle school students, many questions would be “not applicable” for most of our participants.10 Our solution was to create a tablet-assisted self-interview (TASI) that would automatically advance students past “not applicable” questions. In addition, a TASI would allow for the inclusion of full-color photos of tobacco products that may be unfamiliar to youth. This was particularly important for e-cigarettes, as they are relatively new to the market and are available in a variety of shapes, colors, and sizes. Additionally, since the use of tobacco products is illegal in our study population, the survey assesses sensitive behaviors, making it prone to social desirability bias. Because tablets have small screens and answers are hidden from view once a participant advances to the next screen, they may give students a greater sense of privacy, which could reduce this source of bias.6,9

Item non-response (INR), defined as a question that is left unanswered by a participant who completed a survey, may be driven by confusion about the question or how to answer it appropriately, respondent carelessness, or privacy concerns.11 In school-based student surveys there may be another factor. In the classroom, students are not typically given the option to decline to participate in an assignment. Therefore, despite being given the option, those students who do not want to participate in the survey may feel that they cannot decline.12 They may decide that their best recourse is to skip questions. One advantage of a PAPI is that field staff can quickly review students’ surveys in the school and ask them to complete unanswered questions. Since this field staff review of surveys was not possible with our methodology, we were concerned that we might have a high level of item non-response.

In this paper, we share (1) the methods used to administer the TASI in schools, (2) the concerns we had before attempting TASI, (3) the relative advantages and disadvantages of using TASI versus PAPI, based on our experiences with both methods, and (4) a brief analysis of item non-response in the survey data.

METHODS

Participants

In the 2014-2015 academic year, 3907 6th, 8th, and 10th grade students were recruited across 79 schools in the 5 counties that surround the 4 largest cities in Texas: Austin, Houston, San Antonio, and Dallas/Ft. Worth.

Instruments

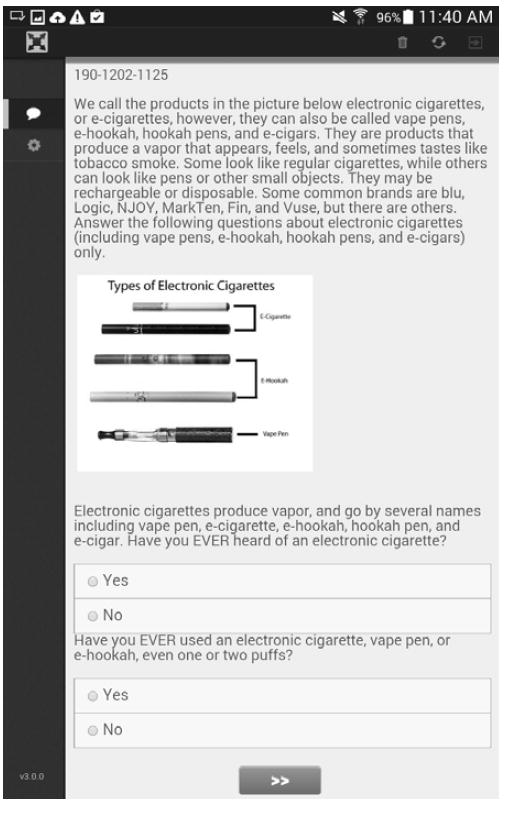

The survey was a 407-item instrument assessing students’ experiences with tobacco and nicotine products. Full-color photos (Figure 1) that had been cognitively tested in a pilot phase, and shown to aid in identification of the products, were included. In addition to behavioral measures of tobacco use, the survey also assessed students’ self-reported exposure to tobacco marketing as well as social, cognitive, and affective factors thought to be related to these behaviors. Items were adapted from similar youth tobacco use surveys.3,13

Figure 1. Screenshot of the First Page of the Electronic Cigarette Section of the Tablet-assisted Self-Interview (TASI).

Note. The unique master ID, 190-1202-1125, is located in the upper left corner.

Procedure

Tablet information

We used 123 Samsung Galaxy Tab 4 and 77 Samsung Galaxy Tab 3 Lite tablets, all with 7-inch screens. Preparing each tablet for first use in the field took one hour, but many could be set up simultaneously. Preparation involved encrypting each tablet with an 8-digit key and an 8-digit password. Encrypting “scrambles” the data stored on the device, and the data can only be “unscrambled” when the encryption key is entered. The encryption key had to be entered each time a tablet was turned on, and the password had to be reentered after 30 minutes of non-use. A 30-minute time-out period was chosen because at the schools it would sometimes take that long to set up all the tablets prior to an administration. Tablet settings were also modified to preserve battery life, including turning off the volume, and setting the date and time to connect with our office’s wireless internet. Each tablet was labeled with a unique tablet ID number and our contact information in case it became lost.

Survey software and programming

The survey was programmed by ICF International in the application Askiaface version 3.0. The survey operated offline, which is critical for data collection in the absence of an active Internet connection. The survey software created a master ID for each student’s survey; the ID was based on the tablet number and the date and time the survey started. The master ID appeared on each page of the survey and was recorded with the survey data. The survey was programmed with skip patterns to automatically advance students past “not applicable” questions and to auto-fill the relevant text for questions based on a previous answer. For instance, if a respondent said they read People magazine, then follow-up questions about what tobacco advertisements they saw in that magazine would specifically reference People. All questions were formatted so that no horizontal scrolling was needed to view the entire page, regardless of whether the tablet was in portrait or landscape orientation. One survey page with questions required vertical scrolling to view all answer choices. For open-ended questions, the survey was optimized so that the correct keyboard, either numerical or alphabetical, was displayed. The 179 non-matrix formatted items were programmed with soft validations, which are prompts that appear if the student does not enter an answer and ask them to provide one. The students still had the option of skipping the question. Due to time constraints in the programming schedule, soft validations were not added to the 228 items contained in matrices.
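The survey logic described above can be illustrated with a minimal Python sketch. This is not the Askiaface program used in the study; every name in it (`Question`, `administer`, `make_master_id`, `scripted`) is hypothetical. The master ID layout (tablet number, then month-day, then hour-minute) is an assumption inferred from the example ID in Figure 1, and the question wording is invented for illustration.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Callable, Optional


def make_master_id(tablet_id: int, started: datetime) -> str:
    """Combine tablet number, start date (MMDD), and start time (HHMM).

    The exact layout is an assumption, not the software's documented format.
    """
    return f"{tablet_id}-{started:%m%d}-{started:%H%M}"


@dataclass
class Question:
    key: str
    text: str  # may contain {placeholders} filled from earlier answers
    applies: Optional[Callable[[dict], bool]] = None  # None means "always ask"
    soft_validation: bool = True  # prompt once if the item is left blank


def administer(questions, get_answer, tablet_id, started):
    """Walk the questionnaire; get_answer(prompt) returns a string or None."""
    answers, shown = {}, []
    for q in questions:
        # Skip pattern: silently pass over "not applicable" questions.
        if q.applies is not None and not q.applies(answers):
            continue
        # Auto-fill: insert earlier answers into the question text.
        prompt = q.text.format_map(answers)
        shown.append(q.key)
        response = get_answer(prompt)
        # Soft validation: ask once more, but still allow a skip.
        if response is None and q.soft_validation:
            response = get_answer("Please provide an answer. " + prompt)
        if response is not None:
            answers[q.key] = response
    return {
        "master_id": make_master_id(tablet_id, started),
        "shown": shown,
        "answers": answers,
    }


# Hypothetical items; the real instrument had 407 questions.
QUESTIONS = [
    Question("ever_ecig", "Have you ever used an electronic cigarette?"),
    Question("ecig_days", "On how many of the past 30 days did you use one?",
             applies=lambda a: a.get("ever_ecig") == "yes"),
    Question("magazine", "Which magazine do you read most often?"),
    Question("ads", "Did you see tobacco advertisements in {magazine}?",
             applies=lambda a: "magazine" in a),
]


def scripted(responses):
    """Stand-in for a student: returns the next canned response per prompt."""
    pending = iter(responses)
    return lambda prompt: next(pending)


# A non-user who first leaves the last item blank, then answers when prompted.
record = administer(QUESTIONS, scripted(["no", "People", None, "yes"]),
                    tablet_id=190, started=datetime(2014, 12, 2, 11, 25))
```

In this sketch the respondent who reports never using an electronic cigarette is never shown the 30-day follow-up, and the list of displayed questions is stored with the record so that item non-response can later be computed against the questions each student actually received.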

Survey administration protocol

Study staff arrived at the schools 30 minutes before administration to set up the tablets. Surveys were administered in groups ranging from 8 to 69 students, and typically were conducted during health or PE class. The average administration period was 45 minutes. Tablets were handed out to students, background was provided on the study, and the assent form was read aloud. After students assented, they took the survey independently, and unless problems arose, such as accidentally exiting the survey application, no instructions on using the tablets were given. Participants received a hard-copy $10 e-gift code after completing the survey.

The tablets were collected from the students when they finished. Survey data were uploaded daily from the tablets via a wireless internet connection at our offices. Surveys were automatically deleted from the tablet when uploaded.

Data Analysis

Student level demographic data, including sex, grade level, age, and race/ethnicity (Hispanic, non-Hispanic White/Other, and non-Hispanic Black) were collected on the survey. School-level socio-economic status (SES) for the public and charter schools in the sample was gathered from the Texas Education Agency and is based on the percent of students receiving free or reduced lunch at that school. We do not have a comparable measure for the private schools. Descriptive statistics included percentages for categorical outcomes, and means and standard deviations for continuous outcomes. T-tests were used to assess differences for continuous outcomes. Analyses were conducted using SAS 9.4 (SAS Institute, Cary NC).

Item non-response was assessed in 2 ways. The first was assessing individual students’ level of item non-response, or conversely their completion rate. Student completion rate was measured as the ratio of the number of questions each student answered to the number of questions that student received. The second was calculating the completion rate by question. Question-level completion rate is the ratio of the number of students who received and answered the question to the number of students who received the question; its complement is the question-level item non-response.
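As an illustration only (the study's analyses were run in SAS, and this is not that code), the two completion rates can be sketched in Python. The data layout is an assumption: each student maps to the questions they were shown, with `None` marking a displayed but unanswered question, and questions passed over by a skip pattern simply absent. The function names and toy responses are hypothetical.

```python
from statistics import mean, median


def student_completion_rates(responses):
    """Answered / received, per student (students shown nothing are omitted)."""
    rates = {}
    for student, items in responses.items():
        if items:
            answered = sum(1 for value in items.values() if value is not None)
            rates[student] = answered / len(items)
    return rates


def question_completion_rates(responses):
    """Answered / received, per question, counting only students shown it."""
    received, answered = {}, {}
    for items in responses.values():
        for question, value in items.items():
            received[question] = received.get(question, 0) + 1
            if value is not None:
                answered[question] = answered.get(question, 0) + 1
    return {q: answered.get(q, 0) / n for q, n in received.items()}


# Toy data: s1 was skipped past q2; s2 and s3 each left one shown item blank.
toy = {
    "s1": {"q1": "no", "q3": "yes"},
    "s2": {"q1": "yes", "q2": None, "q3": "no"},
    "s3": {"q1": "yes", "q2": "5", "q3": None},
}

by_student = student_completion_rates(toy)
by_question = question_completion_rates(toy)

median_student_rate = median(by_student.values())
mean_question_rate = mean(by_question.values())
# Item non-response is the complement of completion.
question_inr = {q: 1 - rate for q, rate in by_question.items()}
```

Because the denominator counts only questions a student received, a student who is skipped past most of the survey is not penalized for questions that were never displayed.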

RESULTS

Sample Demographics

Of the 3,907 students who completed the survey, 43.9% (N = 1715) were male, 45.6% (N = 1784) were non-Hispanic White/other, 38.3% (N = 1498) were Hispanic, and 16.0% (N = 625) were non-Hispanic Black. The average age of respondents was 13.4 years (SD = 1.6) and ranged from 10 to 18. The sample included 28.7% (N = 1122) 6th graders, 33.8% (N = 1322) 8th graders, and 37.4% 10th graders (N = 1463). Eighty-three percent (N = 3260) of the sample attended a public non-charter school, 7.4% (N = 290) attended a charter school, and 9.1% (N = 357) attended a private school. The mean SES of the public and charter schools was 53% (SD = 27) who received free or reduced lunch, and ranged from 0% to 97% by school.

Field Experiences with Tablets

The project manager debriefed with the field staff after each day of data collection to assess how the tablets were operating in the field. The experiences were overwhelmingly positive. Students were receptive to the tablets and required no instructions on how to use them, except when they needed assistance because their survey went blank due to inactivity or because they accidentally exited the survey application. To our knowledge, students never purposefully exited the survey application; however, roughly one out of thirty students would accidentally exit the survey, and field staff were always able to get them back into the survey quickly. Accidental exits occurred when students inadvertently hit the home key on the device. No students turned off the device during administration.

Between the 2 tablet types, staff noted that the Samsung Galaxy Tab 4 touch screens were more responsive than those of the Samsung Galaxy Tab 3 Lite. Students occasionally had a hard time getting the latter to register their answers. No tablets went missing or were stolen. Rarely, students would drop tablets, but no screens were broken, and only 2 of the 200 tablets have minor cosmetic dents. All tablets were still fully operational after data collection was complete. Our data collection team experienced no difficulties in keeping the tablets charged, and the tablets easily held a charge all day for multiple survey administrations; however, staff members were diligent in charging them after each day of use. The tablets weighed one pound each, making transportation a minor inconvenience, especially when surveying large groups at a time. We had a difficult time finding adequate equipment, such as suitcases, to transport the tablets that did not quickly wear out due to the weight. Additional planning was needed to make sure that staff members were available who could carry the weight of the tablets.

No privacy concerns arose during classroom administration, during storage of the surveys on the tablets before they could be uploaded, or while uploading the surveys to the server. Students worked independently. Field staff noted it was difficult to read the students’ tablet screens as they walked around the classroom during administration. No schools or school districts that participated in or were approached for this study expressed any concern about potential data breaches from using tablets.

Item Nonresponse Outcomes

Of the 407 questions on the survey, the median number of questions received by students was 137, with a minimum of 117 and maximum of 337. Over half of students (58.1%) answered all questions that were displayed to them, and 92% of students answered 96% or more of displayed questions. The median completion rate for the whole sample was 100% and for students who received 200 or more questions the median completion rate was 98.2%. The mean completion rate by question was 96.9%. There was a statistically significant difference in completion between matrix-formatted and non-matrix formatted questions (matrix-formatted = 94.5%, non-matrix formatted = 99.5%, p < .001).

DISCUSSION

The method utilized by TATAMS to administer a TASI was successful with a diverse population of students, including those of different ages, races/ethnicities, school types (public vs. private vs. charter), and SES. We defined success as administering our survey with (1) no lost, stolen, or broken tablets, (2) a minimal amount of item non-response, and (3) ease of tablet operation by students. To our knowledge, TATAMS is only the second study to employ tablets in a large-scale, school-based survey, and the first one based in the United States. The New Zealand Youth Health and Wellness Survey began using laptops in 2001 and switched to tablets in 2007 and 2012 to administer surveys to roughly 9,000 students at each time point,14 but its investigators did not report in any depth on their administration methods.

The average question on our survey was left unanswered by only 3% of participants. We found no studies assessing INR in a TASI with a similar population against which to compare our results. However, Denscombe15 did assess INR in a PAPI versus a CASI, using a school-administered 34-item survey with 15-16 year olds. The average INR by question was 4% in the PAPI and 2% in the computer-based survey. That computer-based survey did not have soft validations. Despite having a much longer instrument (our students received a median of 137 items), we had a comparable level of INR.

Our study shows that a low level of INR can be achieved with a TASI. However, INR is dependent on many factors, such as survey length, question sensitivity, question type, and quality of the survey instrument. It should be noted that our survey went through an expert review and cognitive interviewing process prior to administration, which strengthened our survey instrument. Additionally, the presence of the soft validations on the non-matrix formatted items may have contributed to the low item non-response. These questions did have a statistically significantly lower item non-response than the matrix-formatted questions, which did not have soft validations.

Before using a TASI, we had concerns that ultimately proved to be non-problematic. These concerns were that (1) survey administrators would not be able to see whether students were skipping questions, resulting in an unacceptable level of INR; (2) tablets would break or go missing; (3) keeping tablets charged would be time-consuming; and (4) the screens would be too small. Another major concern was that non-tobacco users would finish the survey more quickly than users because they received fewer questions, which could allow students or school staff to identify who was a tobacco user. However, the survey administration staff never saw any evidence of this in the field. Finally, some issues were prevented by not having the tablets connected to the internet, including the concerns that students would exit the survey and use other applications online, and that wireless internet connections would be unreliable or unavailable within the schools.

Our research team has experience administering school-based “Optical Mark Recognition” paper-and-pencil survey instruments (PAPIs) to over 29,000 schoolchildren across Texas.16,17 Based on these experiences, Table 1 summarizes the pros and cons of PAPIs versus TASIs. Compared to PAPIs, TASIs allow for skip patterns18 and eliminate data entry and prescanning survey cleaning. Students may also feel they have more privacy completing a TASI because (1) it is easier to hide the screen than a paper, and (2) as a student advances through the survey, their answers to questions are no longer displayed.6 Anecdotally, students appeared to prefer the experience of taking the survey on the tablet. We have witnessed that students find the bubbling process on “Optical Mark Recognition” PAPIs fatiguing, which leads to (1) improper bubbling, (2) staff having to review each survey and fill in incomplete bubbling, and (3) students losing focus in the survey and possibly not completing it or dropping out. With the TASI, we received very few complaints or questions about the survey length, and no one dropped out of the survey during administration. However, it is important to note that students received a $10 incentive for this study and not for our previous PAPIs. PAPIs do have a few benefits over tablets: (1) survey administration staff can monitor students’ completion status and ask them to complete unanswered questions, (2) the number of students who can be surveyed at one time is not limited by the number of tablets available, and (3) PAPIs are more lightweight than tablets, making transportation easier and shipping less expensive. PAPIs also cost less to implement; however, it is important to consider that the dataset generated from a PAPI may be less robust than what is possible when using a TASI. Additionally, as 73% of teens have access to a smartphone,19 one way to reduce cost may be to develop a TASI administration protocol that allows students to complete surveys on their own devices, so that the researcher would only need to provide tablets for those who do not have smartphones.

Table 1. The Advantages and Disadvantages of Using a Tablet-assisted Self-interview (TASI) versus a Paper-and-pencil Instrument (PAPI)

| Area of Concern | Tablet-assisted Self-interview | Paper-and-pencil Instrument | Notes |
|---|---|---|---|
| Skip Patterns | X | | Not advised on paper surveys, but can be automated on tablet surveys. |
| Student Instructions | X | | Students required no instruction on tablet use. They require constant reminders to bubble on an “Optical Mark Recognition” (OMR) PAPI. |
| Student Survey Progress / Item non-response | | X | With paper surveys, it is easy to see if students are progressing. Paper surveys can quickly be flipped through to check for item non-response. |
| Survey Cleaning | X | | OMR surveys must be manually reviewed, question by question, for stray marks and incomplete bubbling. |
| Data Entry | X | | Paper surveys require hand entry or scanning. |
| Survey Supply | | X | Number of participants you can survey at a time is limited by the number of tablets. |
| Participant Privacy | X | | Tablets allow for participants to hide answers during administration. |
| Audio | X | | Audio computer-assisted self-administered interviews can read the survey aloud to the participants on tablets. |
| Cost | | X | While paper surveys cost less to implement, there is a trade-off in the amount, and possibly quality, of data that can be collected. |

Although using tablets to collect surveys has many advantages, it is also important to consider how using tablets can influence how students answer questions. This is especially important if you want to compare the survey data from TASIs to paper-based surveys, or if you are switching administration modes in an existing longitudinal or serial cross-sectional study. Two ways tablets may affect students’ responses are that (1) students may prefer completing the survey on a tablet, which could make them more willing to invest the energy to provide accurate answers,7,20 and (2) students may feel that tablets allow for more privacy.6 Modes of survey administration that increase a participant’s sense of privacy have consistently been shown to increase accurate self-reporting of illicit or embarrassing behavior.9 This is typically theorized to occur because social desirability bias is reduced when privacy is increased, and therefore more honest answers are provided. The classroom is not an ideal private setting for a survey, as a student is surrounded by peers and authority figures. Although an increase in reporting of an illicit behavior is typically viewed as more accurate, the issue is more complicated. In a classroom, the effects of social desirability bias are complex and may depend on who the student believes will view their answers. A student’s fear that a teacher will see their answers would almost always result in underreporting of the illicit behavior; however, if the student is mainly concerned that other students will see their answers, the direction of the bias will depend on the individual student’s assumption about how desirable their peers consider the behavior.8

We know of no studies that have compared paper- versus tablet-based surveys with respect to the reporting of risk behaviors. Several studies to date have assessed the association between mode of administration (PAPI versus computer-administered surveys) and students’ reporting of sensitive behaviors. Eaton et al2 summarize these studies. In general, there was either no association or the associations found were not consistent in direction. However, we do not view a computer-based survey and a TASI as comparable in privacy. In fact, several researchers have discussed the extra measures needed to ensure students’ privacy when taking a computer-based survey, such as placing barriers between computers.2 It is our untested assumption that tablets, especially those with a small 7-inch screen, provide an increased sense of privacy over both paper- and computer-based surveys and that TASIs will therefore be less influenced by social desirability bias than these other administration methods. Research is needed to further assess this.

Although TASIs are a novel approach to school data collection, computer-assisted surveys have been used since the 1960s, and additional technologies and advancements are available.18 Audio computer-assisted self-administered interviews (ACASI) read the survey aloud to participants and are of value when surveying individuals with lower reading abilities or non-native speakers. Surveys can also have an embedded dictionary allowing students to click on a word to access a definition. This would be especially important on surveys on sensitive topics, where students may feel uncomfortable asking questions of survey administrators. Also, bilingual students may be aided by a survey in which they can switch between 2 languages while taking it. Lastly, with computer-based surveys, the researcher has the ability to embed video into the survey.

Limitations

Students were given a $10 e-gift code for taking the survey. This is not typical for school-based surveys and some of the success we had with the tablets may be attributable to the goodwill generated by the incentive. We found that students worked more quietly and independently than we have experienced with our PAPIs. This may be an effect of the tablet, but could also be because the current survey was on a particularly sensitive topic.

IMPLICATIONS FOR SCHOOL HEALTH

Health policies, intervention programs, and classroom curriculums should ideally be developed, implemented, and evaluated based on peer-reviewed scientific evidence. The data used to generate this evidence base often come from self-administered questionnaires, which are often administered in schools. TASIs may present a way to conduct this research more efficiently and, we hope, to provide stronger, more valid, and more reliable data. Through the resourceful use of skip patterns, a TASI presents a way to efficiently query students about low-prevalence behaviors. In the context of this study, we were able to question students not just on their tobacco product use, but also to ask in-depth follow-up questions specific to each product they reported using, which would not have been possible on a paper survey. This should allow for a greater understanding of what causes students to start and continue tobacco product use, which could be used to inform tobacco prevention policies and programs in schools. Data collected through TASIs may also be less biased by social desirability, which could then lead to a stronger evidence base upon which to create policies and programs.

School-based studies are typically the most convenient way to recruit a large, representative sample of grade-school aged participants. However, many school districts report being overwhelmed by research requests to survey their students. Therefore, it is important for those studies that are granted access to be well-designed, valid, and capture as much data as possible, in an efficient manner. Using tablet-based surveys can be a means to achieve this.

Acknowledgments

This work was supported by the National Cancer Institute of the National Institutes of Health (NIH) and the Center for Tobacco Products of the Food and Drug Administration (FDA) of the United States Department of Health and Human Services [grant number P50 CA180906-01]. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health or the Food and Drug Administration.

Footnotes

Human Subjects Approval Statement

The University of Texas Health Science Center’s Institutional Review Board approved this study (reference number HSC-SPH-13-0377). For participating schools, district and principal approval, and where appropriate, school institutional review board approval was obtained.

Contributor Information

Joanne Delk, Michael & Susan Dell Center for Healthy Living, The University of Texas Health Science Center at Houston-UTHealth, School of Public Health, Austin Campus, Austin, TX 78701, joanne.e.delk@uth.tmc.edu.

Melissa Blythe Harrell, Human Genetics and Environmental Sciences at The University of Texas Health Science Center at Houston-UTHealth, School of Public Health, Austin Campus, Austin, TX 78701, melissa.b.harrell@uth.tmc.edu.

Tala H.I. Fakhouri, ICF International, Division of Public Health and Survey Research, Rockville, MD, fakhouri.tala@gmail.com.

Katelyn A. Muir, ICF International, Division of Public Health and Survey Research, Burlington, VT, katelyn.muir@gmail.com.

Cheryl L. Perry, Health Promotion and Behavioral Sciences at The University of Texas School of Public Health-UTHealth, School of Public Health, Austin Campus, Austin, TX 78701, cheryl.l.perry@uth.tmc.edu.

References

- 1. Brener ND, Kann L, Kinchen SA, Grunbaum J, Whalen L, Eaton D, et al. Methodology of the youth risk behavior surveillance system. MMWR Recomm Rep. 2004;53(RR-12):1–13.

- 2. Eaton DK, Brener ND, Kann L, Denniston MM, McManus T, Kyle TM, et al. Comparison of paper-and-pencil versus web administration of the Youth Risk Behavior Survey (YRBS): risk behavior prevalence estimates. Eval Rev. 2010;34(2):137–153. doi: 10.1177/0193841X10362491.

- 3. US Centers for Disease Control and Prevention. National Youth Tobacco Survey. 2015. Available at: http://www.cdc.gov/tobacco/data_statistics/surveys/nyts/index.htm. Accessed October 29, 2015.

- 4. Monitoring the Future. Purpose and Design. Available at: http://www.monitoringthefuture.org/purpose.html#Administration. Accessed October 29, 2015.

- 5. Beebe TJ, Harrison PA, Mcrae JA, Anderson RE, Fulkerson JA. An evaluation of computer-assisted self-interviews in a school setting. Public Opin Q. 1998;62(4):623–632.

- 6. Denny SJ, Milfont TL, Utter J, Robinson EM, Ameratunga SN, Merry SN, et al. Hand-held internet tablets for school-based data collection. BMC Res Notes. 2008;1(1):1. doi: 10.1186/1756-0500-1-52.

- 7. Vereecken C. Paper pencil versus pc administered querying of a study on health behaviour in school-aged children. Arch Public Health. 2001;59(1):43–61.

- 8. Vereecken CA, Maes L. Comparison of a computer-administered and paper-and-pencil-administered questionnaire on health and lifestyle behaviors. J Adolesc Health. 2006;38(4):426–432. doi: 10.1016/j.jadohealth.2004.10.010.

- 9. Tourangeau R, Smith TW. Asking sensitive questions: the impact of data collection mode, question format, and question context. Public Opin Q. 1996;60(2):275–304.

- 10. Singh T, Arrazola RA, Corey CG, Husten CG, Neff LJ, Homa DM, et al. Tobacco use among middle and high school students - United States, 2011-2015. MMWR Morb Mortal Wkly Rep. 2016;65(14):361–367. doi: 10.15585/mmwr.mm6514a1.

- 11. Wolfe EW, Converse PD, Airen O, Bodenhorn N. Unit and item nonresponses and ancillary information in web-and paper-based questionnaires administered to school counselors. Meas Eval Couns Dev. 2009;42(2):92–103.

- 12. Denscombe M, Aubrook L. “It’s just another piece of schoolwork”: the ethics of questionnaire research on pupils in schools. Br Educ Res J. 1992;18(2):113–131.

- 13. United States General Services Administration. PATH/Baseline-Youth Extended Interview. Available at: http://www.reginfo.gov/public/do/PRAViewIC?ref_nbr=201309-0925-003&icID=203761. Accessed October 29, 2015.

- 14. Clark T, Fleming T, Bullen P, Crengle S, Denny S, Dyson B, et al. Health and well being of secondary school students in New Zealand: trends between 2001, 2007 and 2012. J Paediatr Child Health. 2013;49(11):925–934. doi: 10.1111/jpc.12427.

- 15. Denscombe M. Item non response rates: a comparison of online and paper questionnaires. Int J Soc Res Methodol. 2009;12(4):281–291.

- 16. Hoelscher DM, Springer AE, Ranjit N, Perry CL, Evans AE, Stigler M, et al. Reductions in child obesity among disadvantaged school children with community involvement: the Travis County CATCH Trial. Obesity (Silver Spring). 2010;18(S1):S36–S44. doi: 10.1038/oby.2009.430.

- 17. Springer AE, Kelder SH, Byrd-Williams CE, Pasch KE, Ranjit N, Delk JE, et al. Promoting energy-balance behaviors among ethnically diverse adolescents: overview and baseline findings of the Central Texas CATCH Middle School Project. Health Educ Behav. 2013;40(5):559–570. doi: 10.1177/1090198112459516.

- 18. Baker RP. Computer Assisted Survey Information Collection. New York, NY: John Wiley & Sons; 1998.

- 19. Pew Research Center. Teens, Social Media & Technology Overview 2015. Available at: http://www.pewinternet.org/files/2015/04/PI_TeensandTech_Update2015_0409151.pdf. Accessed November 23, 2015.

- 20. Cannell CF, Miller PV, Oksenberg L. Research on interviewing techniques. Sociol Methodol. 1981;12(4):389–437.