Abstract

Super-resolution microscopy provides direct insight into fundamental biological processes occurring at length scales smaller than light’s diffraction limit. The analysis of data at such scales has brought statistical and machine learning methods into the mainstream. Here we provide a survey of data analysis methods starting from an overview of basic statistical techniques underlying the analysis of super-resolution and, more broadly, imaging data. We subsequently break down the analysis of super-resolution data into four problems: the localization problem, the counting problem, the linking problem, and what we’ve termed the interpretation problem.

Graphical abstract

1. A GLANCE AT SUPER-RESOLUTION

Processes fundamental to life, including DNA transcription, RNA translation, protein folding, and assembly of proteins into larger complexes, occur at length scales smaller than the diffraction limit of light used to probe them (<200 nm).

For this reason, up until a decade ago, these processes were largely inaccessible to conventional microscopy methods. Key technical achievements by way of experiments, from structured illumination methods1,2 to manipulations of fluorophore photophysics,3–5 have peered into this previously impenetrable scale with several techniques now providing detailed in vivo 3D images as shown in Figure 1.6

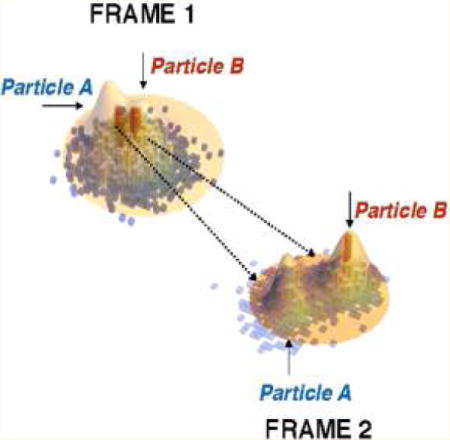

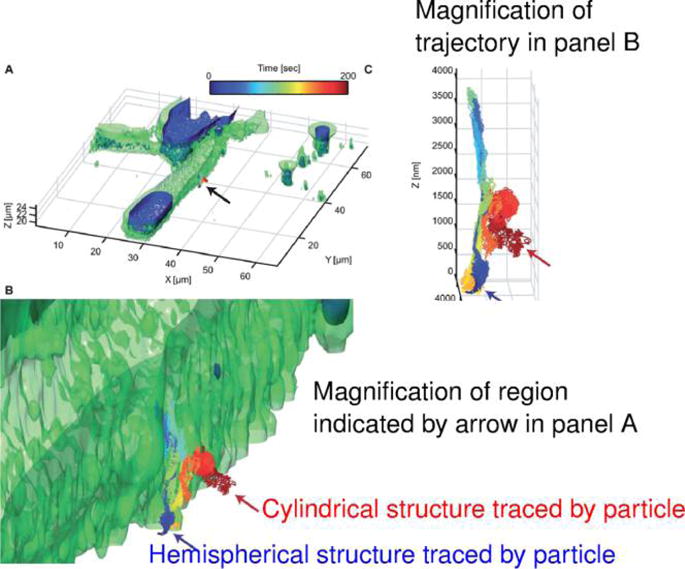

Figure 1.

Recent optical microscopy methods like lattice light sheet resolve structures within cells.6 In addition to imaging an entire structure, single particle tracking is also possible with this technology providing simultaneous information on both the “environment” and “dynamics” of tagged biomolecules. Reproduced with permission from ref 6. Copyright 2014, American Association for the Advancement of Science.

On the experimental front, many technical challenges remain including the following: high density labeling; poor time resolution at the expense of high spatial resolution; challenges with fluorophore activation and complex photophysics; overexpression of select proteins altering cell homeostasis; and high light intensity, some ~104 times higher than that under which cells have evolved for methods such as photoactivated localization microscopy.7 Despite these challenges, experiments have begun to resolve the spatiotemporal dynamics and organization of cellular components within their native environment, revealing, for instance, the intricacy of yeast DNA transfer from mother to daughter cell8 and the stochastic assembly of chemoreceptors on E. coli’s surface.9 What is more, recent advances in optics have mitigated the spatial-temporal resolution trade-off providing greater in vivo resolution in 3D.6,10–18 Advances continue to accrue, with the latest techniques reaching spatial resolutions of ~1 nm and temporal resolutions on the order of μs.19

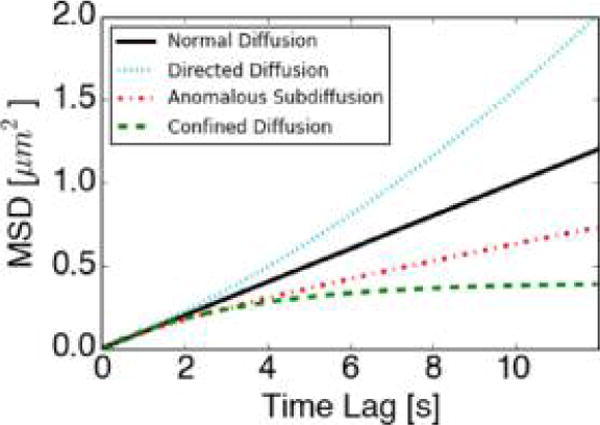

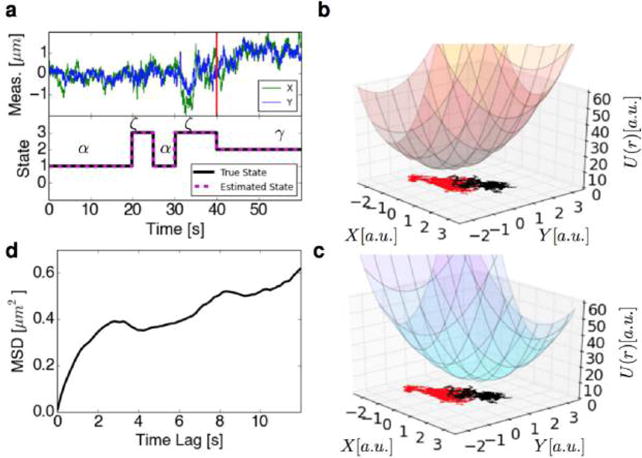

Ten years have passed since the inception of super-resolution microscopy and the variety of data collected has presented new modeling challenges.20 Initial data analysis methods, such as mean square displacement analyses, were directly motivated from the analysis of bulk ensemble data largely inspired by Occam’s razor. Thus, such methods did not explicitly take advantage of the richness of single molecule data sets such as their temporal ordering or even their intrinsic heterogeneity.

A large fraction of this review is devoted to later “data-driven” efforts, deeply inspired from the fields of machine learning and inference, and increasingly available through an array of open-source software,21–25 to turn the thousand-word picture provided by super-resolution methods into a quantitative narrative.

Here, we first review the basic physics of super-resolution methods (section 2) and tools of data-driven modeling (section 3). Subsequent sections tackle specific challenges present along the way: the localization problem (section 4), the counting problem (section 5), the linking problem (section 6), and what we’ve termed the interpretation problem (section 7).

2. BEATING THE DIFFRACTION LIMIT: AN INTRODUCTION

2.1. Why Fluorescence Microscopy?

Upon excitation of a sample within a specific wavelength range (the absorption spectrum), a fluorophore emits light at a longer wavelength (the emission spectrum). The excitation wave-length may be filtered away leaving behind only the emission from the fluorescent components. In this way, fluorescence brings improved contrast to microscopy.

The first fluorescence microscopes, developed by the Carl Zeiss company and others in the early 20th century, relied either on the autofluorescence of various tissues or chemical dyes and stains such as fluorescein.26 An important milestone in increasing the ability to fluorescently label a given biological structure was achieved by Coons et al. in the 1940s, who demonstrated that antibodies, raised to bind a specific antigen with high specificity, could be attached to fluorescent dyes, thus realizing a method to fluorescently label any antigen of interest.27 The subsequent discovery of the green fluorescent protein,28 together with advances in molecular biology techniques, then allowed the expression of proteins directly fused to fluorescent markers by the end of the 20th century.29

At the same time, the detection of the signal from single fluorophores (rather than larger labeled structures) was achieved by progressive improvements in instrumentation.30,31 This powerful combination of new advanced optical techniques with fluorescent protein tags, which could be detected in live cells at the single molecule level, set the stage for a new era of measurements in cell biology and biophysics.18

2.2. Point Spread Functions and the Diffraction Limit

Although labeling techniques have greatly improved over the last century, fundamental physical reasons have limited the resolution achievable by optical microscopy. Historically, this resolution has been defined as the ability to distinguish two close objects.

As early as 1834, Airy derived the profile of the diffraction pattern, or point spread function (PSF), of a point source of radiation imaged through a telescope, now known as the Airy disk.32 He established that “the image of a star will not be a point but a bright circle surrounded by a series of bright rings. The angular diameter of these will depend on nothing but the aperture of the telescope, and will be [sic] inversely as the aperture”.32 More precisely, for a telescope of aperture a imaging at a wavelength λ, the intensity I at an angle θ from the optical axis, relative to the intensity I0 at the center, is given by

| (1) |

where x = (2π/λ)a sin θ and J1 is the first order Bessel function of the first kind. Rings appear at the maxima x = x1, x2, … of I(x). In the limit of small angles (i.e., θ ≈ sin θ), these maxima correspond to θi = λxi/(2πa). Thus, the angular diameters of the rings are indeed inversely proportional to the aperture a (Figure 2).

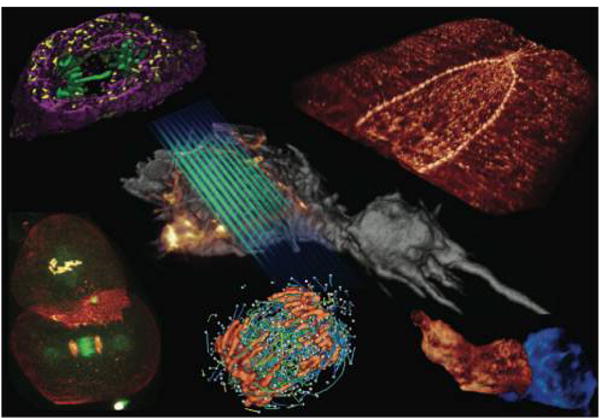

Figure 2.

Point emitter generates an Airy spot (a) with an intensity profile (b) given by eq 1. The wavenumber k, used in (b), is 2π/λ. The intensity profile and diffraction spots were plotted using a simple Python script.

A few decades later, Abbe showed that a similar result held for optical microscopy: a point source imaged at a wavelength λ through a microscope objective of numerical aperture NA, defined as the product of the index of refraction of the medium between the objective and the sample, n, and the sine of the half angular aperture of the objective, θ, yields a spot of size d ≈ λ/2NA in the transverse direction and 2λ/NA2 in the axial direction33 (Figure 3).

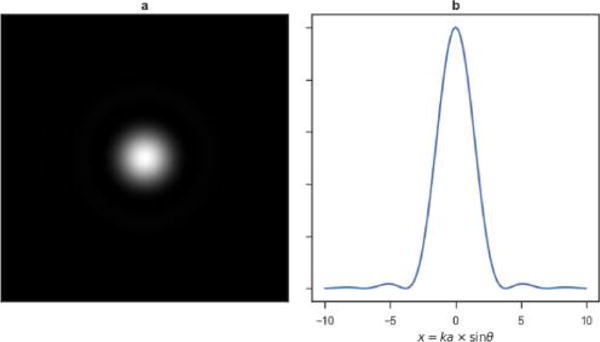

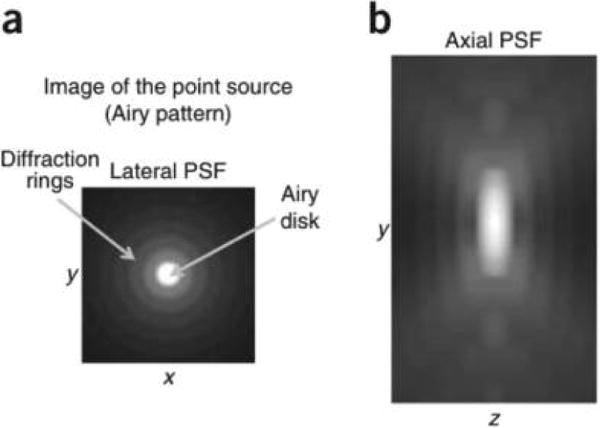

Figure 3.

Microscope seen as a telescope. (a) A microscope’s resolution is determined by the numerical aperture NA of its objective, which is defined as the product of the index of refraction of the medium between the objective and the sample, n, and the sine of the half angular aperture, θ. (b) A telescope’s angular resolution is determined by its (physical) aperture, D. The intensity profile and diffraction spots were plotted using a simple Python script.

Whether in astronomy or microscopy, it is the finite extent of the image of a point source that limits our ability to separate two objects nearby. In 1879, Rayleigh suggested a rule, now called the Rayleigh criterion, whereas two diffraction spots could be considered as resolved if their centers were further apart than the center of a spot is from its first zero in intensity34 (Figure 4). He emphasized that this rule was simply suggested as an approximation “in view of the necessary uncertainty as to what exactly is meant by resolution”, though this rule still remains in use today.35 In fact, it is generally agreed in astronomy that spots up to ~20% closer are resolvable.35

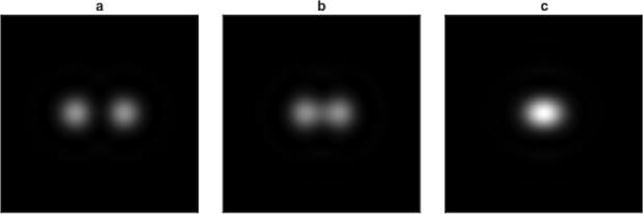

Figure 4.

Fully resolved (a), barely resolved (b), and nonresolved (c) Airy diffraction spots. The intensity profile and diffraction spots were plotted using a simple Python script.

Nowadays, super-resolution imaging continues to leverage ideas and tools from astronomy, both on the experimental36 and analysis side.37

Even though the Rayleigh criterion may not be strictly accurate, the resolution of a microscope is certainly inversely correlated with the size of the diffraction spot. As this spot has a size of d = λ/2NA in the transverse direction and d = 2λ/NA2 in the axial direction, improvements to the resolution are achieved by working at a shorter wavelength or larger numerical aperture.

The room for improvement from changes in the wavelength is limited by the spectrum of visible light, λ = 400 to 700 nm. Electron microscopy achieves a much higher, near-atomic resolution by operating at a pm-scale wavelength, but this comes at the cost of invasive sample preparations, radiation damage to the sample, and low contrast.38

The numerical aperture NA = n sin θ has also reached its practical limits: now, oil immersion objectives (n ≈ 1.5) with half angular apertures of more than 60° achieve NA ≈ 1.4. Few (easy to work with) liquids have higher indices of refraction. Taking these improvements together, the smallest spot size that can be achieved is thus around 150 nm in the transverse direction and 400 nm in the axial direction (Figure 5).

Figure 5.

Projections of an example 3D PSF on (a) the x−y plane and (b) the y−z plane.39 Reproduced with permission from ref 39. Copyright 2011, Nature Publishing Group.

2.3. Beyond the Diffraction Limit

Objects may be distinguished from one another at a subdiffraction scale by using a combination of methods including structured illumination, stochastic fluorophore activation, and basic data processing.

As an example of the latter, if we approximate the imaging system as a linear system, i.e., where the measured image can be obtained by applying a linear operator (e.g., convolution by the PSF) to the original sample (e.g., the emitter’s original intensity distribution), it is in principle possible to mathematically invert (“deconvolve”) the imaging operator to reconstruct a higher resolution image, by solving a system of linear equations. Unfortunately, theoretical results indicate that the performance of such an approach is strongly limited by noise.40,41 Nonetheless, in the context of microscopy, this idea was first implemented by Agard et al.42 and may achieve a 2-fold improvement.43

Furthermore, Rayleigh’s criterion does not limit the ability to determine to very high accuracy the position of a single point emitter. For example, the center of a single spot can be estimated to a precision length smaller than the size of the spot itself by fitting the emission pattern to a known PSF, or an approximation of it, such as a Gaussian. The central limit theorem then suggests that the accuracy of such a calculation should be proportional to the inverse square root of the number of observed photons.

By determining the approximate position of emitters over a time series of fluorescence images, where the low density of fluorescent markers ensured their spatial separation, Morrison et al. tracked the diffusion of individual low-density receptors on cell membranes, with a resolution of ~25 nm, well below the diffraction limit.44,45

Even as early as in 1995, Betzig suggested that such a localization strategy may be applicable in more densely labeled samples as well, provided that “unique optical characteristics” could be imparted on individual fluorophores.46 Such “unique characteristics” would allow distinguishing the signals arising from each of the fluorophores; thus, the fluorophores underlying each diffraction spot could then be localized with subdiffraction accuracy.46

Betzig’s original suggestion was to discriminate certain molecules that would exhibit a random spread in their zero phonon absorption line width.46 However, it was instead the serendipitous discovery of a photoconvertible fluorescent protein, that is, a fluorescent protein whose emission spectrum can be modified by a light-induced chemical modification,47 as well as the development of optically switchable constructs based on organic dyes,48 that provided the critical advance toward the realization of this proposal in biological samples.

Briefly, the light-induced conversion of probes to a fluorescent state at a slow enough rate ensures that only a few probes are emitting at any given time even if the sample itself is densely labeled, thus generating the sparsity needed for localization in dense environments.3–5 Both labeling approaches were shown to be amenable to this technique: the approach based on fluorescent proteins was named (fluorescence) photoactivated localization microscopy ((F)PALM);4,5 and the approach based on organic dyes, stochastic optical reconstruction microscopy (STORM)3).

While this review will primarily focus on techniques that rely on the stochasticity of photoconversion to temporally separate the emission of different fluorophores, it is also possible to exploit another physical phenomenon to enforce this separation. Specifically, as early as in 1994, Hell et al. noted that while the diffraction limit imposes a lower bound on the size of excitation spots, it is possible to decrease the size of this spot by “deexcitation” (stimulated emission depletion, STED) of the fluorophores located on its edges.49 Specifically, this deexcitation is carried out by alternatively exciting fluorophores within a small region of the sample and immediately illuminating a doughnut-shaped area around this region with a depletion laser, bringing the fluorophores back to their ground state. The intensity profile of this second region is also diffraction limited; however, given enough time, only the fluorophores close to the exact center of the doughnut (where the deexcitation intensity is zero) stay active. Measuring the fluorescence of these remaining fluorophores thus realizes a point spread function that is effectively smaller than the diffraction limit.

A similar approach, relying on the readout of fluorescence along thin stripes rather than small spots, was also developed, under the name of saturated structured-illumination microscopy (SSIM).2 This method relies on the observation that high spatial frequencies in the fluorophore distribution can be “brought back” to a lower frequency under illumination by a similarly high frequency pattern (i.e., by observing the beats between the two patterns).50 Using linear optics (structured illumination microscopy, SIM), the illumination pattern itself is diffraction-limited, and thus the resolution improvement of SIM is limited to a factor of 2 over diffraction-limited microscopy; however, the nonlinearity offered by the saturation method described above allows the generation of higher-frequency patterns and thus further gains in resolution.2

Ultimately, the fundamental basis for any of these techniques is to note that the diffraction limit was derived under certain “standard”, but not absolute, hypotheses: that all fluorophore positions must be recovered from a single image and that the signal captured depends linearly on the excitation. Attacking the first condition, by spreading the information across multiple frames, is the approach taken by stochastic photoconversion. STED and SSIM, additionally, also violate the second condition, by operating in a nonlinear regime.

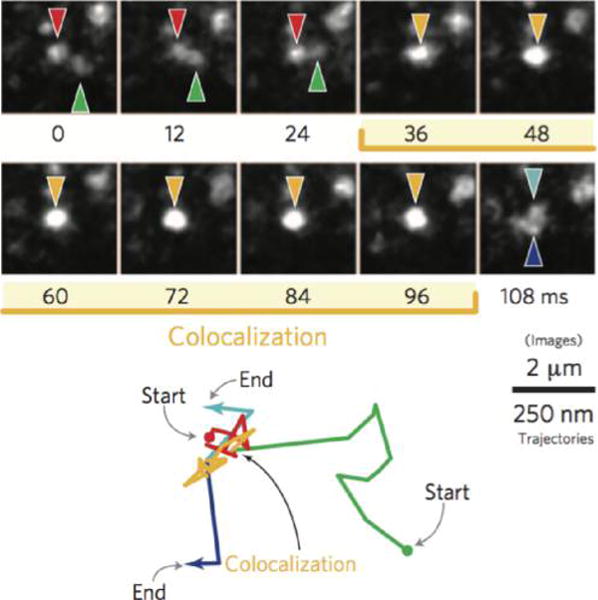

The large improvement in resolution afforded by structured illumination and stochastic activation of fluorophores, together termed super-resolution microscopy, immediately opened the door to a large number of discoveries. As early as in 2007, Shroff et al. demonstrated the ability of two-color super-resolution to resolve the relative positions of pairs of proteins assembled in adhesion complexes, the attachment points between the cytoskeleton of migrating cells and their substrates, which otherwise seem entirely colocalized in diffraction limited microscopy (Figure 6).51

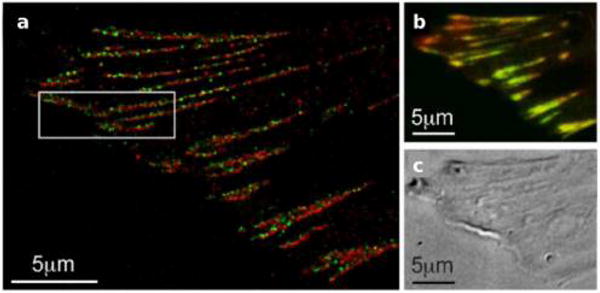

Figure 6.

Two-color PALM can be used to address fundamental questions on molecular organization. (a) Adhesion proteins paxilin (green) and zyxin (red) are shown by two-color PALM to adopt different spatial distributions even though they appear colocalized in (b) diffraction limited microscopy. (c) A differential interference contrast image of the image shown in (b).51 Reproduced with permission from Hari Shroff, Catherine G. Galbraith, James A. Galbraith, Helen White, Jennifer Gillette, Scott Olenych, Michael W. Davidson, and Eric Betzig. “Dual-color superresolution imaging of genetically expressed probes within individual adhesion complexes.” Proceedings of the National Academy of Sciences 104, no. 51 (Dec 18, 2007): 20308−20313. Copyright (2007), National Academy of Sciences, U. S. A.

3. DATA-DRIVEN MODELING: KEY CONCEPTS

In the previous section, we discussed the basic physics underlying super-resolution microscopy. Here, we frame the foundational statistical ideas necessary for a quantitative treatment of super-resolution microscopy data that we will use in later portions of this review without exhaustively reviewing all tools or aiming at mathematical rigor.

While the methods and tools presented here are often established in statistics, examples drawn from optical microscopy will be used to motivate the ensuing discussion. We begin with a high-level description of two model building approaches: forward and inverse methods. Forward model-building approaches, whose first step is to posit physical models motivated by observation and first-principles,52 are broadly used in physics and chemistry. By contrast to forward modeling, inverse modeling approaches or “inference” use the data to learn the model under some assumptions.

The list below summarizes the essence of the data-driven, inverse, methods.

Propose model(s): Models or hypotheses are proposed. This includes proposing models for the system of interest such as whether motion of a particle is “directed” or “diffusive” and models for the measurement noise–such as photon statistics–treated in greater detail in section 3.1.1, which affects static53–55 and dynamic22,25,56–58 quantities throughout optical microscopy. Models proposed can be parametric or nonparametric models (as defined in section 3.1.2).

Inference/Data-mining: After a model form is selected, model parameters are inferred, or estimated, from the data. We review both frequentist and Bayesian inference methods (sections 3.2.1 and 3.2.2) and refer the reader to a recent review for information theoretic inference schemes.59

Reject or select model(s): After a model has been parametrized, we compare the model’s prediction(s) and consistency with existing or new data using hypothesis testing. Often the model’s consistency with the data is used to compare candidate models, a topic more broadly referred to as the model selection problem, discussed in section 3.3.

3.1. Deciding How to Propose Models

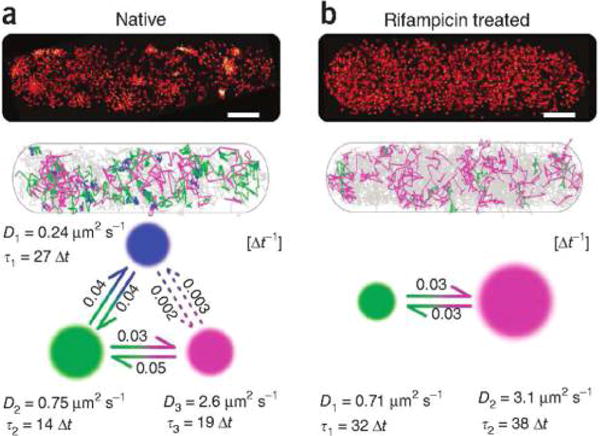

Models proposed often come along with assumptions about the system and its noise. These assumptions may be only locally valid. That is, while simple models of normal, confined, or directed diffusion12,60 may be valid over a small region of space or time, these models may not hold over the entire data set. In this case, locally valid models may be stitched or otherwise combined to describe the heterogeneity inherent to live cells.61–66 Indeed, systematic strategies for combining simpler models are an area of active research in statistics and machine learning67–69 that we will discuss in greater depth in section 7.3).

As a practical example, combining simple models together is important as is evident from single particle trajectories whose dynamics may be affected by molecular crowding,70–74 binding,63,75–79 or active transport.80–84 While an anomalous diffusion model may appear more appropriate for such in vivo dynamics, normal diffusion models are often still warranted for such apparently anomalous diffusive processes since, for instance, temporal and spatial resolution now experimentally resolvable can measure short diffusive trajectory segments before events typically leading to anomalous diffusion become manifest.61,63,65,66,85–88

Once models are obtained and parametrized, models provide a mathematical framework in which to address quantitative questions and test basic model hypotheses.

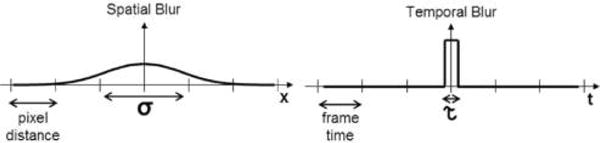

3.1.1. Signal versus Noise

Fluorescence microscopy data sets are intrinsically noisy. For example, the sample may exhibit background autofluorescence, the camera may introduce shot noise, experimental apparatus may drift during data acquisition, and molecules of interest may move faster than the acquisition rate.

The importance of accounting for measurement noise inherent to optical microscopy has been highlighted in the recent literature.24,88–93 For instance, it is now becoming more widely appreciated that neglecting measurement noise in single particle tracking (SPT) can be mistakenly interpreted as a signature of anomalous diffusion.22

In particular, inverse approaches, where models are directly drawn from the data, strongly rely on criteria to distinguish signal from noise. These approaches are particularly sensitive to noise models,61,86,94–96 and for this reason, it is often best to simultaneously infer the model for the system of interest together with the noise model in a self-consistent fashion. The hidden Markov model that we describe in greater depth in section 3.4.1 is one such example.

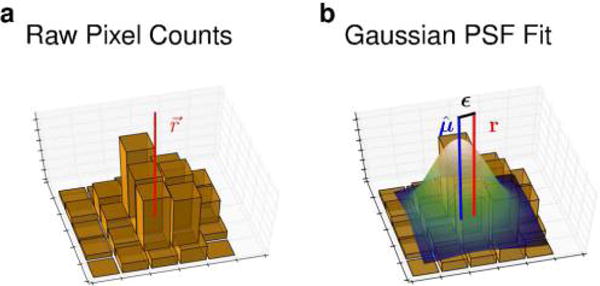

For now, we begin with a motivating example of noise encountered in attempting to localize a point emitter whose true spatial location, r, is assumed to be fixed (Figure 7). An estimate of the true spatial location r may be obtained, for example, as the center of a Gaussian PSF fitted to the 2D photon count histogram (a standard approach in super-resolution microscopy53–55).

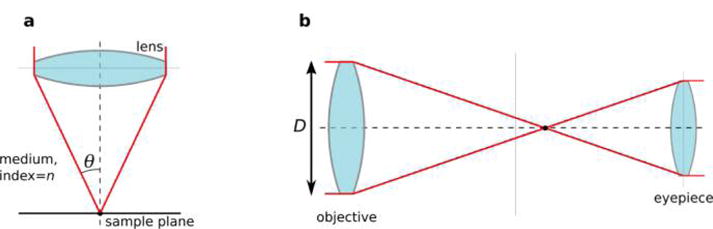

Figure 7.

Measurement error affects particle localization. (a) 2D histograms contain photon numbers detected (by a CCD camera, say) along the x and y coordinates with the true molecular position (r) indicated by the point at which the solid vertical red line intersects with the x,y-plane. (b) In order to localize a static point emitter, the histogram may be fit to a PSF (e.g., a Gaussian or an Airy function) illustrated by a color surface contour. The estimate of the emitter’s position, μ, shown by a solid vertical blue line, is approximated from the PSF. The difference between μ and r is the localization error ε. Factors such as photon count, exposure time, pixel size and background autofluorescence all contribute to ε.

A careful description of the various methods for extracting from the data (section 4) will highlight how finite photon counts, camera pixelation, camera-type specific noise, and background autofluorescence all contribute to the noise,54,56 and cause the estimated position to differ from the true molecular position by an error ε, that is,

| (2) |

The error ε, illustrated in Figure 7, is typically assumed, in static imaging applications, to be a random variable of mean zero.53,54

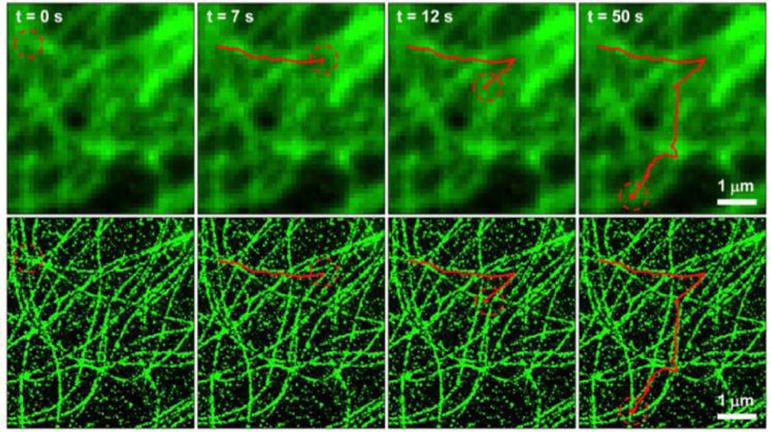

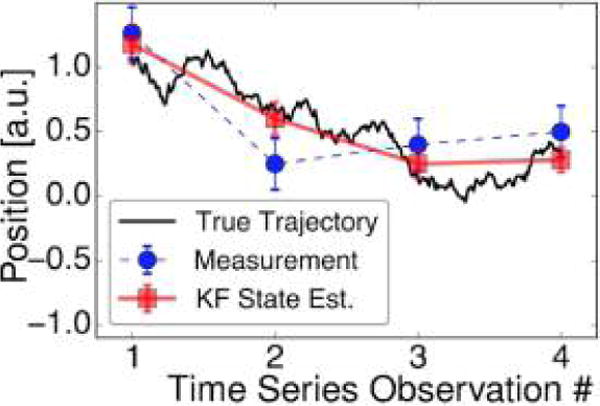

Beyond the type of noise that we have surveyed here that arises in inferring models from static structures, we briefly mention noise sources that arise from molecular motion during imaging such as “motion blur” or “dynamic [measurement] error”22,56 discussed in greater depth in sections 6.5 and 7 and illustrated in Figure 8.

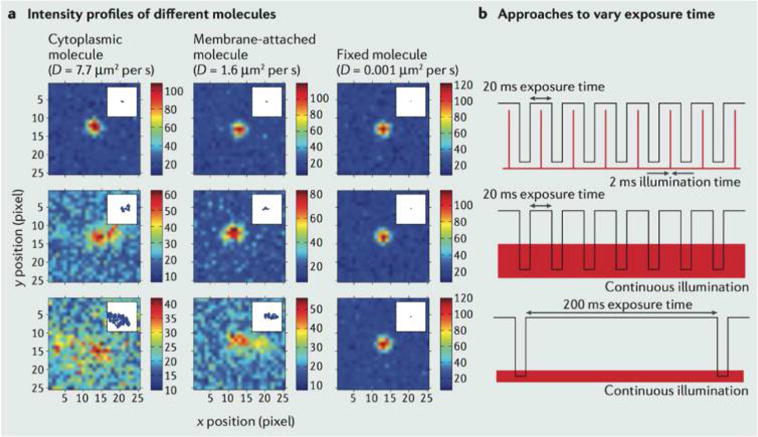

Figure 8.

Motion and optics affect measurement noise. The simulated images illustrate how thermal motion, inherent to nanoscale measurements, affect the intensity profile of three molecules with different (given) diffusion coefficients D (describing the diffusion of cytoplasmic, membrane- attached and fixed/immobile molecules) shown in the columns of panel (a). (a) The estimated point spread function (PSF) measured for different molecules under alternate illumination strategies (shown in the rows). The color bar displays the number of photons collected. (b) The illumination strategies are stroboscopic (top), continuous illumination with short camera exposure time (middle), continuous illumination with long camera exposure time (bottom). The two-state signals shown denote the state of the shutter (open/closed) and laser (on/off). The localization precision (accuracy and uncertainty) is affected by how accurately the PSF can be inferred from digital images produced by tagged molecules. The plots from panel (a) demonstrate how some molecular and sampling parameters affect measurement noise statistics when the PSF is used to infer the molecular position. “Smearing” of the PSF, induced by motion blur when a simulated molecule spans an area covered by different pixels in a single frame, introduces a new source of position uncertainty (in addition to the localization noise described in Figure 7). Reproduced with permission from ref 97. Copyright 2013, Nature Publishing Group.

Motion blur presents important theoretical challenges: (i) it complicates the task of localizing particles, whose localization may no longer be adequately modeled using temporally uncorrelated Gaussian noise22,25,54–56,58 (section 4)); (ii) it complicates linking localized point emitters across frames into a single particle trajectory (section 6); and (iii) it fundamentally alters the interpretation of kinetic data12,15,22,25,58,86,95,98–100 (section 7). As a more concrete example of the latter, the point emitter’s motion within a single image frame statistically couples measurement noise to the molecule’s thermal fluctuations,22,25,58 which is a coupling often ignored.54,55,60

Faster data acquisition may not necessarily reduce dynamical noise since, as is described in the stochastic process time series literature,101,102 improved temporal resolution comes hand-in-hand with increased measurement noise (section 6.5). As a result, recent literature has begun addressing the problem of disentangling measurement noise from thermal noise in high frequency (above 10 kHz) single molecule measurements.103–105

Noise is unavoidable and the mathematical frameworks we present in subsequent sections present principled strategies for incorporating knowledge of noise statistics into the process of model inference.

3.1.2. Parametric vs Nonparametric Modeling Frameworks

Once a model for both the system of interest and its associated noise are selected, the next step is to infer all model parameters. The main feature distinguishing parametric from nonparametric models is the number of parameters or, more precisely, how this number depends on the amount of data available.

Parametric methods have a fixed number of parameters, independent of the amount of data.106 In other words, a parametric model’s functional form, M, is prespecified and its parameters, θ = {θ1, θ2, ⋯, θK}, are K numbers to be determined from the data, D = {D1, D2, ⋯, DN}. Thus, rigid assumptions on the nature of the model are made before data is even considered. Despite this apparent shortcoming, parametric models also present important advantages: they are easily interpretable and parameters can be estimated “efficiently”, where efficiency is measured in terms of an estimator’s parameter variance relative to the Cramér-Rao lower bound (CRLB).54,107

As an example of a parametric model, we assume that we are modeling the data as independent identically distributed (iid) draws from a 1D Gaussian density indexed by its parameters θ ≡ (μ, σ). The model, captured by the conditional probability of the data given the model, is

| (3) |

Having just selected a parametric family for p(Di|θ) we may now use the data to provide an estimate for θ denoted by .

The model above makes a number of implicit assumptions. For example, it assumes a priori that there are no statistical dependencies between measurements; noise is time-independent, the density of the random variables are unimodal, the mean of the random variables equals the mode of the distribution, etc. These a priori specifications highlight the type of rigid structure that is often imposed by a parametric model.

By contrast, the phrase “nonparametric model” implies that a model is not initially characterized by a finite, fixed number of parameters.106,108 Nonparametric methods are advantageous as they provide the flexibility to account for features arising in the data that were not a priori known. For example, if the process under consideration is characterized by a fixed, albeit multimodal density, where the number of modes is unknown in advance, a nonparametric model, such as a kernel density estimator,109 would reveal the more complex shape of the density characterizing the data generating process as more data becomes available. By contrast, a parametric approach would likely misbehave since the multimodal aspect of the data was not explicitly accounted for in advance.

Although nonparametric approaches are more general, they do not avoid modeling assumptions106,108 and infinite amounts of data do not necessarily “automatically guide one to the correct model”. More concretely, the iid assumption, which can be verified by statistical methods110–112 discussed in section 3.3), may still be incorrectly invoked by a multimodal (mixture) model. In particular, the “identical” aspect of iid is often suspect, as the distribution from which each observation is sampled may change over time due to, for example, drift in the alignment of optical components.

3.2. Inference

Parametric and nonparametric models alike contain parameters that we must learn, or infer, from the data. Here we review two approaches to parameter inference, frequentist or Bayesian, and we refer the reader to Tavakoli et al.59 and Pressé et al.113 for information theoretic approaches.

3.2.1. Frequentist Inference

The term “frequentist” in the statistics literature implies that there exists a fixed parameter θ responsible for generating the observations. The frequentist approach can be summarized as follows: (i) “probabilities” refer to limiting relative frequencies of events and are objective properties and (ii) parameters of the data generating process are fixed (typically unknown) constants.106

Maximum likelihood is a common frequentist approach to parameter estimation and it yields a maximum likelihood estimate (MLE) for an unknown parameter.106,110,114

Briefly, in maximum likelihood estimation, we begin by writing down a likelihood, the probability of the sequence of observations given a model,

conjectured to have given rise to the these observations. For shorthand, we drop the M dependence such that p(D1, D2, …, DN|M, θ) = p(D1, D2, …, DN|θ). We then maximize the data’s likelihood with respect to the parameter vector θ associated with the posited model M.

For example, for iid observations where , the MLE for θ is

| (4) |

where the MLE, , maximizes the log likelihood function l(θ|D). While both the likelihood and its logarithm yield identical MLEs, likelihoods are typically small quantities and logarithms are used to avoid numerical underflow problems.

To make our example specific, suppose we want to estimate the emission rate of a fluorophore, r, from a single count of the number of photons emitted, n, in time interval ΔT. Our model assumes that all photons emitted are collected and that the number of photons emitted per time interval is Poisson distributed. Under these model assumptions, the likelihood of observing n photons given our model is

| (5) |

By maximizing the likelihood, eq 5, with respect to the rate, r, we obtain an estimate for the rate in terms of known quantities, . Any other value of r would decrease the likelihood of observing n photons in the interval ΔT.

3.2.1.1. Time Series Analysis and Likelihoods

Likelihood ideas readily accommodate time series data as they respect the natural time ordering of observation. This feature of likelihood methods is especially useful in treating temporally correlated observations that arise in tracking superresolved particles or even counting fluorophores within a region of interest.12,14,15,21,66,85–88,115

For example, consider the simplified case where the data consists of a series of coordinates at different points in time D = {r1, r2, …, rN}. Here, the likelihood over the full joint distribution of the time ordered data, p(D|θ), is given by

| (6) |

While we’ve assumed in writing D = {r1, r2, …, rN} that the data provided positions with no associated uncertainty, we discuss noise in greater depth thoughout this review.

Accurately computing the likelihood and maximizing it with respect to all model parameters, such as all conditional probabilities that appear in eq 6, is difficult because of the large number of conditional probabilities that appear when N is large.110 The Markov assumption for first-order Markov processes, that the probability of sampling ri at time point i only depends on the state of the system in the immediate past (i − 1), is therefore commonly invoked in SPT analysis in order to reduce the number of parameters that must be inferred. From this point on, whenever we refer to Markov processes or the Markov assumption we assume they are first-order. While the validity of the Markov assumption can also be checked,110 there are information theoretic reasons for a priori preferring Markov models, discussed elsewhere.113,116

Under the Markov assumption, eq 6 reduces to the more tractable form

| (7) |

with the Markov process’ conditional density, p(ri |ri−1, θ), often called the “transition density”.

As a more concrete example for which the transition densities take specific functional forms, suppose the model, M, coincides with simple normal diffusion and observations are uniformly spaced Δt time units apart. For this example, the transition density of a time series measured without noise, p(ri |ri−1, θ) is available in closed-form and is a Gaussian density with mean ri−1 and, for standard SPT models, covariance matrix 2DΔt where D is a diagonal matrix of diffusion coefficients.

In more general Markovian time series models, obtaining the transition density often requires solving the Fokker–Planck equation associated with the posited model M for the model’s transition density.117,118 Since the Fokker–Planck equation may lack a closed-form solution, the transition density may therefore not have an analytical form adding to the computational cost of likelihood maximization. While it is true that individual likelihoods are simple, their products can become very complicated and significantly increase the computational burden. In and of itself that should not be a prohibitively expensive computational issue. However, given the size of the computational burden already needed for processes such as linking algorithms, an increase due to the need to solve numerically elaborate likelihoods might well be the proverbial “straw that breaks the camel’s back”.

While some popular Markovian motion models arising in super-resolution trajectory analysis (section 7.1) admit closed-form transition densities,25,86 others do not. For this reason, we mention that approximating a likelihood may introduce biased parameter estimates which, in turn, may affect the outcome of model goodness-of-fit tests (section 3.3) used to check the consistency of the model with the data.

3.2.1.2. Confidence Intervals

The precision of a parameter’s MLE is quantified by the concept of confidence interval (or a confidence set if multiple parameters are estimated). In order to introduce this concept, we briefly return to the example shown in Figure 7 where we suppose that an emitter, fixed in space, does not photobleach. Under this circumstance, we repeatedly image the molecule, and for each of the N independent images measured, a different number of photons are collected due to the inherently stochastic emission properties of the fluorophore, as well as other noise sources.

As a result of these variations, different images produce different empirical PSF fits. These fits yield the following hypothetical estimates of a molecule’s position

For each estimated mean, , we may then compute confidence intervals at the level of 1 − α where α ∈ [0,1] (and where α = 0.05 is typically used, which corresponds to computing the 95% confidence interval).

Confidence intervals are functions of the observed (random) data and the assumed model. Within a frequentist inference framework, the true fixed parameter either does or does not fall within the confidence interval106 (i.e., with probability 1 or 0 since the frequentist parameter is not random), but the width of the confidence interval does give an indication of the “quality” of the measurement. It should be noted that, despite being very useful in accounting for random errors, confidence intervals critically depend on the assumed model and can fail if this model is wrong or if undetected systematic errors exist.

In our example, one could compute the collection of confidence intervals associated with each of the estimates . By definition, if the data was indeed generated from the specified model, then a fraction 1 − α (e.g., 95%) of these confidence intervals will contain the true (unknown but fixed) molecular position, r.

As mentioned earlier, maximum likelihood methods are popular in part because, in the limit of infinite sample size, they asymptotically achieve the CRLB.54,119–121 In the non-asymptotic regime, it is ultimately the shape and breadth of the full likelihood function around its maximum which provides an estimate for the quality of the MLE.

3.2.1.3. Other Frequentist Estimators

Maximum likelihood is not the only type of frequentist method. Among other frequentist methods, we can mention least-squares regression53 which reduce to maximum likelihood under the assumption of Gaussian uncorrelated noise, generalized moment-type methods110 (which match select model moments to the data), and general least-squares (which minimize the distance of a parametrized function from the data).

While typically less efficient (as measured by the CRLB) than likelihood maximization,110 both moment and least-squares methods can be attractive, because computing the exact likelihood can be complicated or intractable in some models since the full joint distribution may not be known or easily computable. Hence, an approach only requiring specification of a few moments or minimizing the distance to a parametric function may be preferred.

3.2.1.4. Fundamental Assumptions of Frequentist Inference

We end this section on frequentist inference with a note on the assumption that the parameter to be inferred is “fixed”. While this may appear as an advantage, heterogeneity inherent to live cells may require the assumption of having a single fixed parameter vector describing all data to be relaxed.

What is more, in live cell super-resolution applications, in vivo conditions can never be exactly reproduced and thus each experiment is never a true iid draw as described in our hypothetical example used to illustrate the confidence interval concept.

This being said, a collection of point parameter MLEs can still be useful in quantifying live cell heterogeneity. For example, once the MLE of an assumed model and data set are obtained, this MLE can be used to verify the model’s consistency with the data. If the model is deemed consistent, the MLE can be used to compute a theoretical CRLB or to simulate new observations in order to compute “bootstrap estimates” of the MLE parameter’s confidence interval.106,122 The simplest indication of heterogeneity would then be suggested by nonoverlapping confidence intervals.

3.2.2. Bayesian Inference

Bayesian methods, now widely used across biophysics,61,65,96,123–127 are defined by the following key properties:106 (i) a probability describes a degree of belief, not a limiting frequency, (ii) distributions for parameters generating the data can be defined even if parameters themselves are fixed constants, and (iii) inferences about parameters are obtained from “posterior probability” distributions.

While frequentist methods yield parameter “point estimates” or MLEs, Bayesian methods return a full distribution over unknown parameters (the posteriors) for the same amount of data. Because the posterior is a joint distribution over all parameters, it can also quantify relationships between parameters. Therefore, while frequentist methods treat the data as a random variable, Bayesian methods treat data in addition to model parameters, as random variables.128

In frequentist reasoning, the likelihood played a central role. By contrast, in Bayesian inference, it is the posterior, p(θ|D, M). That is, the probability of the model parameters having considered the data (and the model’s structure, M). Again, for shorthand, we drop the M dependence of the posterior.

We can construct the posterior using the likelihood as well as the prior over the model, p(θ), by invoking Bayes’ theorem as follows

where p(D) is obtained by normalization from

| (8) |

where p(D, θ) is the joint probability of the model and the data.

For parametric models, the integral in eq 8 represents an integration over the model’s parameters. However, for multiple possible candidate models, we first need to sum over all models and, subsequently, integrate over their respective parameters.

We may even compare models, say Ml for different values of l selected from a broader set of models M by integrating over all parameters assigned to that model

| (9) |

Then marginal posteriors, p(Ml|D), across different l’s can be compared to select between different candidate models irrespective of their precise parameter values.124

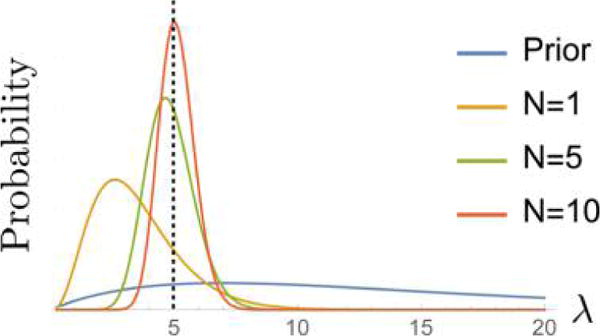

For an increasing number, N, of data points, the likelihood function itself dictates the shape of the posterior distribution. Thus, a proper choice of likelihood function, even in Bayesian inference, is critical (Figure 9).

Figure 9.

Posterior often becomes sharper as more data is used. Here data are sampled from a Poisson distribution with λ = 5 (designated by the dotted line) with D = {2, 8, 5, 3, 5, 2, 5, 10, 6, 4}. The prior (eq 12 with α = 2, β = 1/7) is plotted along with the resulting posterior informed by N = 1, N = 5, and N = 10 data points.

3.2.2.1. Priors

Parameter estimates obtained by maximizing a posterior generally converge to the MLE in the limit of infinite data108 (Figure 9). However, with finite data sets, faced in all data-driven modeling efforts, the prior can cause Bayesian and MLE estimates for parameter values to differ substantially (with Bayesian parameter point estimates coinciding with the maximum of the posterior distribution). This suggests that, for scarce data, a judicious choice of prior is important.

An extreme realization of limited data is found in traditional statistical mechanics where a (canonical) distribution over arbitrarily many degrees of freedom for particles follows from just a single point observation (the average energy) with vanishingly small error.113,129,130 While more data is typically assumed available in biophysics, data may still be quite limited. For example, in single particle tracking, individual tracks may be shortened due to photobleaching or by having particles move in and out of focus.61 Priors can be tailored to address many issues arising from data sparsity thereby conferring an important advantage to Bayesian methods and, in particular, the analysis of super-resolution data. For this reason, we discuss two different prior types: informative and uninformative.128,131

3.2.2.2. Uninformative Priors

The constant, uniform prior, inspired by Laplace’s principle of insufficient reason for mutually exclusive hypotheses, such as coin flips, is the simplest uninformative prior.

For a prior, p(θ), constant over a reasonable range, the likelihood and posterior have precisely the same dependence on model parameters, θ,

| (10) |

As a result, in this simple case, the likelihood can be treated as a model posterior.

A counterintuitive implication of assuming uninformative priors follows from the observation that uniformity of a prior over a model parameter, say θ, implies some structure, and thus knowledge, on the distribution of a related variable, say eθ.128 Conversely, if we assume that the variable eθ is uniform on the [0, 1] interval, the variable θ is concentrated at 1.

This problem is addressed by invoking priors invariant under coordinate transformations, such as the Jeffreys prior,132–134 which is sometimes used in the biophysical literature.135–138

3.2.2.3. Informative Priors

Bayes’ theorem, the recipe by which priors, p(θ), are updated into posteriors, p(θ|D), upon the availability of data, directly motivate another prior form.

Specifically, if we insist that priors and posteriors be of the same “mathematical form”, then the form of the likelihood sets the form of the prior. Such a prior, which when multiplied by its likelihood generates a posterior of the same form as the prior, is called a conjugate prior.

An immediate advantage of using conjugate priors is that, when additional independent data (say D2 beyond D1) are incorporated into a posterior, the new posterior p(θ|D2, D1), obtained by multiplying the old posterior, p(θ|D1), by the likelihood, is again guaranteed to have the same form as old posterior and the prior

| (11) |

Priors, say p(θ|γ), may depend on additional parameters, γ, called hyperparameters distinct from the model parameters θ. These hyperparameters, in turn, can also be distributed according to a hyperprior distribution, p(γ|η), thereby establishing a parameter hierarchy.

As a specific example of a parameter hierarchy, an observable (say the FRET intensity) can be function of the state of a protein, which depends on transition rates to that state (model parameters), which, in turn, depends on prior parameters (hyperparameters) determining how transition rates are assumed to be a priori distributed. We will see examples of such hierarchies in the context of later discussions on nonparametric Bayesian methods (section 3.4).

We illustrate the concept of conjugacy by returning to our earlier single emitter example. The prior conjugate to the Poisson distribution with parameter λ, where λ plays the role of rΔT (eq 5), is the gamma distribution

| (12) |

which contains two hyperparameters, α and β. After a single observation of n1 emission events in time ΔT, the posterior is

| (13) |

while, after N independent measurements, with D = {n1, ⋯nN}, we have

| (14) |

Figure 9 illustrates both how the posterior is dominated by the likelihood provided N is large and how an arbitrary hyperparameter choice becomes less important for large enough N.

Single molecule photobleaching provides yet another illustrative example64 and allows us to introduce the topic of inference of a probability distribution relevant to our later discussion on Bayesian nonparametrics.

Here we consider the probability that a molecule has an inactive fluorophore (one that never turns on), a basic problem facing quantitative super-resolution image analysis.139 Let θ be the probability that a fluorophore is active (and detected). Correspondingly, 1 − θ is the probability that the fluorophore never turns on. The probability that y molecules among a total of n molecules in a complex turn on is then binomially distributed

| (15) |

Over multiple measurements (each over a region of interest with one complex having n total molecules) with outcome yi, we obtain the following likelihood:

| (16) |

One choice for p(θ) is the beta distribution, the conjugate prior to the binomial

| (17) |

whose multivariate generalization is the Dirichlet distribution, which can be further generalized to the infinite-dimensional case (the Dirichlet process) that we will revisit on multiple occasions.

By construction (i.e., by conjugacy), our posterior now takes the form of the beta distribution

| (18) |

Given these data, the estimated mean, , obtained by maximizing this posterior, called the maximum a posteriori estimate, is now

| (19) |

which is, perhaps unsurprisingly, a weighted sum over the data and prior expectation (the first and second terms, respectively, on the right-hand side).

Conjugate priors do have obvious mathematical appeal and yield analytically tractable forms for posteriors (eq 18), but they are restrictive. Numerical methods to sample posteriors, including Gibbs sampling and related Markov chain Monte Carlo (MCMC) methods,140,141 continue to be used64 and developed142 for biophysical problems and have somewhat reduced the historical analytical advantage of conjugate priors. However, the advantage conferred by the tractability of conjugate priors has turned out to be major advantage for more complex inference problems such as those involving Dirichlet processes (section 3.4).

3.2.2.4. Credible Intervals

The analog of a frequentist confidence interval is a Bayesian credible interval. Likewise in higher dimensions, the Bayesian analog of the confidence set is the credible region. A Bayesian 1 − α credible region is any subset of parameter space over which the integral of the posterior distribution probability is equal to 1 − α.143 For a more concrete 1D example, suppose that we compute the posterior over a 1D Gaussian random variable. The probability of the Gaussian’s mean parameter falling between a given lower bound a and upper bound b would be the area of the posterior density over [a, b]. Any interval having a probability of 1 − α is a valid 1 − α credible interval.

3.3. Reject or Select Model Based on Empirical Evidence

3.3.1. Frequentist Goodness-of-Fit Testing

Before physically interpreting models, we should verify whether various model assumptions are consistent with the data. Checking a model’s distribution, and its implied statistical dependencies, against measured data falls under the category of “goodness-of-fit tests”.106,114,144

3.3.1.1. Example of Goodness-of-Fit Testing for iid Random Variables

A classic example of a goodness-of-fit test is the well-known Kolmogorov–Smirnov (KS) test,144 which we describe here. We assume that N iid samples of a scalar random variable X are drawn from an unknown distribution F. The empirical cumulative distribution function (ECDF) is defined as where IA is the indicator function of event A. That is, the ECDF evaluated at v is the fraction of times the random variable was equal to or less than v.

In the classic KS framework, the statistical model assumes a known distribution F0. The KS test checks the null hypothesis, H0: F = F0 against the alternative hypothesis Ha: F ≠ F0. To decide between hypotheses, one computes the “test statistic” for iid samples . If TN is greater than a critical value (determined for any desired test accuracy144) one rejects the null hypothesis and places less confidence in the model. From a frequentist point of view, the test can be understood as follows: if we generate samples of size N from the distribution F0, their ECDF will typically not match the cumulative distribution function (CDF) of F0 exactly but will be at some “distance” (as quantified by the KS test statistic) from it; we then ask, how likely is it that this distance is as large as the one computed for our data set? If such a large distance occurs with a probability less than α, then the null hypothesis is rejected.

As we will discuss in section 3.3.4, more complex models will tend to fit the data better. The KS test does not penalize a model for complexity in any way, it simply checks for consistency of a model’s distribution against the empirically observed data. This should be contrasted with model selection criteria (section 3.3.4) which attempt to balance model complexity and “predictive scores” (such as the likelihood).114

3.3.1.2. Goodness-of-Fit Testing in Time Series Analysis

The basic idea behind the KS goodness-of-fit test has been extended to multivariate random variables and to random variables exhibiting time dependence (i.e., time series).111,112

While many other goodness-of-fit tests, including the chisquare and Cramér-von Mises tests,144 exist, here we briefly illustrate how the ideas behind the KS can be generalized to time series data. We consider the case of scalar time series data D = {r1, r2, … rT }.

If a Markovian model, with prespecified parameter vector θ0, is assumed, we can introduce the Rosenblatt transform145 also known as the “probability integral transform” (PIT)111,112 defined by dx for observation i.

The sequence of Zi’s is now iid, despite the fact that the sequence was derived from a time series exhibiting statistical dependence or possibly even nonstationary behavior.86,111 All of the same tests used for iid variables, such as the KS test above, can be invoked to test the consistency of the model θ0 with the data.

While we have discussed a scalar Markovian time series, the PIT can be extended to multivariate non-Markovian series provided the joint density (i.e., the likelihood) can be accurately computed.111,112

Goodness-of-fit testing readily apply to 3D trajectories of particles. For instance, in recent work on mRNA motion in the cytoplasm of live yeast cells,86 goodness-of-fit testing was used to reject the hypothesis that time series coordinates are statistically independent. This rejection ultimately motivated new spatiotemporally correlated models as well as new models for measurement noise that identified novel kinetic signatures of molecular motor induced transport.86

3.3.2. Bayesian Hypothesis Testing

Previously, we demonstrated how goodness-of-fit testing could be used to verify the consistency of a model’s statistical assumptions against the data. Such an absolute consistency check on a model’s specific parameter values is accomplished by formulating hypotheses or “events” such as, H0: θ = θ0 and H1: θ ≠ θ0. Events can also be something with more of a “model comparison flavor”, such as H0: θ = θ0 and H1: θ = θ1.

Here we consider the case where the model M is fixed and we test H0: θ = θ0 and H1: θ ≠ θ0. In this type of binary testing, the Hi are events such that individual event probabilities sum to one, . The posterior probability of the null, H0, hypothesis is

| (20) |

| (21) |

where the integral in the denominator is an integral over all θ ≠ θ0.

While generalizing the ideas here to test multiple hypotheses is straightforward,114 it is the selection of priors and choice of models that makes Bayesian hypothesis testing technically complicated.106 To be more specific, eq 21 selects between values of the parameters θ of a single model but does not, in itself, provide information on whether the model itself is correct at all (and thus whether this selection is meaningful).

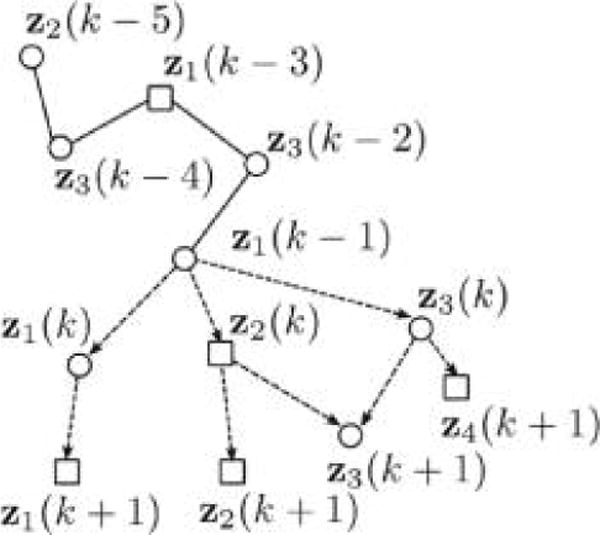

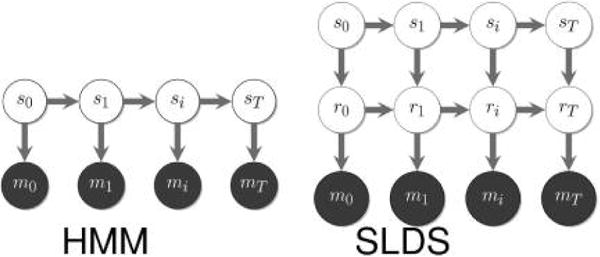

Before discussing model selection in greater depth (section 3.3.4), we now briefly describe an important model, the hidden Markov model (HMM), that motivates modeling approaches used across biophysics146 that we will revisit on multiple occasions.

3.3.3. Hidden Markov Models

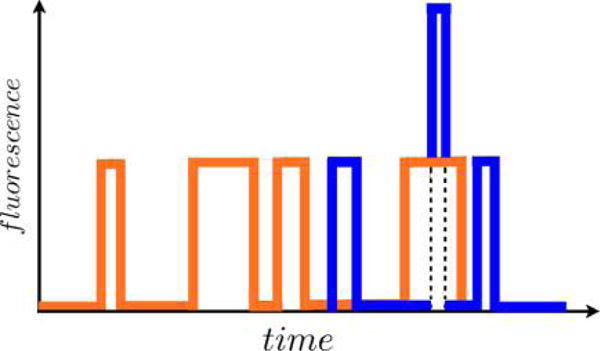

HMMs146–149 have been used so far in a number of problems, chief among them force spectroscopy and single molecule FRET (smFRET) data analysis.149–155

To introduce the HMM, we begin by considering a sequence of observations D = y = {y1, y2, …, yN} and assume that these give us indirect information about the “latent” or hidden states (variables) si at every time point i, that alone describe the system dynamics. Hidden states and observations are then related by the probability of making the observation yi given the state of the system si, p(yi|si) assuming observations are uncorrelated in time.

The HMM then starts with a parametric form for p(yi|si) (the emission probability), the number of states K, and the data D. From this input, the HMM infers the set of parameters, θ, which includes (i) the “emission parameters” that parametrize p(yi|si), (ii) initial state probabilities, and (iii) the transition rates, i.e., transition probabilities p(si|sj) for all (i, j) pairs, that describe the jump process between states assumed Markovian.

In discrete time, the likelihood used to describe the data for an HMM model is

| (22) |

where s = {s1, …, sN} and i is the time index.

In general, we are not interested in knowing the full distribution p(y, s|θ); instead we only care about the marginal likelihood p(y|θ) which describes the observation probability given the model, no matter what state the system occupied at each point in time. In other words, since we do not know what state the system is at any time point, we must sum over the si.

The HMM itself can be represented by the following sampling scheme

| (23) |

That is p(s1) is the distribution from which s1 is sampled. Then for any i > 1, p(si|si−1) is the conditional distribution from which si, conditioned on si−1, is sampled, while its observation yi is sampled from the conditional p(yi|si, θ).

We then maximize the likelihood for this HMM model, eq 22, over each parameter θ. Multiple methods are available to maximize HMM-generated likelihood functions numerically.156 Most commonly, these include the Viterbi algorithm149,157 and forwar–backward algorithms in combination with expectation maximization.147,158

3.3.3.1. Aggregated Markov Models

Aggregated Markov models (AMMs)159–161 are a special case of HMMs where many hidden states have identical output. For instance, for Gaussian p(yi|si), two or more states have identical Gaussian means.

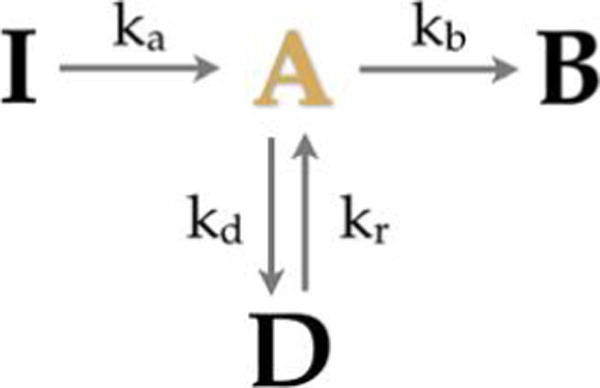

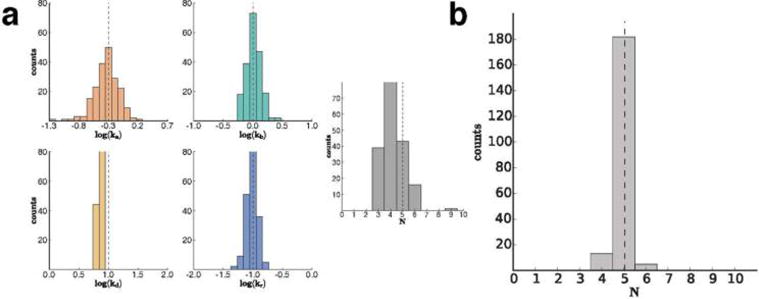

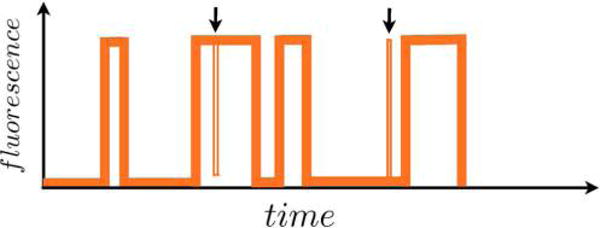

This special category of HMMs was introduced to biophysics in the analysis of ion-channel patch clamp experiments159,162–164 where the number of microscopic channel states exceeded the typically binary output of patch clamp experiments. Since then, AMMs have also been successfully applied to smFRET, where a low FRET state may also arise from different microscopic states (e.g., blinking of fluorophore photophysics or an internal state of the labeled protein)151 and, recently, to address the single molecule counting problem using super-resolution imaging data.21

In AMMs, experimentally indistinguishable states are lumped together into an “aggregate of states” belonging to an “observability class”, say “bright” and “dark” in the case of smFRET.

Limiting ourselves, for simplicity, to just two aggregates, 1 and 2, we may write a rate matrix Q as follows

| (24) |

The submatrices Qij, describe the transitions from aggregate i to aggregate j and the kl element of Qij describes transitions from the microscopic state k in aggregate i to microscopic state l in aggregate j.

AMMs are subsequently treated in much the same as HMMs. That is a likelihood is constructed and maximized with respect to the model parameters. In continuous time and ignoring noise, the likelihood of observing the sequence of aggregates D = {a1, a2, …, aN} is

| (25) |

where the ith element of the column vector, , represents the initial probability of being in state i from the a1 aggregate and

| (26) |

The row vector, 1T, in eq 25, is a mathematical device used to sum over all final microscopic states of the aggregate, aN, observed at the last time point as we do not know in which microscopic state the system finds itself in within the Nth measurement.

Just as with HMMs, the θ parameters include all transitions between microscopic states across all aggregates as well as initial probabilities for each state within each observability class and any emission parameters used. As there are fewer observability classes in AMMs than there are microscopic states, many parameters from AMMs may be unidentifiable165 and thus may need to be prespecified.

AMMs and HMMs can be generalized to include the possibility of missed (unobserved) transitions21,166 – for instance when transitions happen on time scales that approach or exceed the data acquisition frequency–and can readily apply to discrete or continuous time.

One fundamental shortcoming of either HMMs or AMMs is their explicit reliance on a predetermined number of states. This is true of many modeling strategies that predetermine the model “complexity”.

The next section on model selection addresses the challenge of finding the model of the right complexity.

3.3.4. Model Selection

We assume that models under consideration have passed tests, ensuring the interpretive value of the model, and that we are interested in selecting the best (or preferred) predictive or descriptive candidate model among these without excessively overfitting.114

Here we review the basic ideas behind well-established model selection criteria that can be naively described as finding the best model by balancing a “predictive component” (often quantified by a likelihood) and a “complexity penalty” (often quantified by a function of the number of parameters in a model).

In this article, we do not review cross validation type techniques for accessing the predictive ability of models. In cross validation some fraction of the data is kept in reserve in order to see how the prediction performs. Cross validation is used mostly in “man supervised learning” applications, for example “deep learning” of gigantic labeled color image data sets. With such data sets we often have a good idea of what the ground truth is. In super-resolution image analysis the genuine “ground truth” is much harder to come by, except in simulations.

3.3.4.1. Information-Theoretic Model Selection Criteria

While many information theoretic methods exist167–169 and have recently been reviewed elsewhere,59 here we focus on the Akaike Information Criterion (AIC).170–173 The AIC starts from the idea that, while it may not be possible to find the true (hypothetical) model, it may be possible to find one that minimizes the difference in the “information content” between the true (hypothetical) model and the preferred candidate model. The preferred model should provide a good fit to existing data, the training set, and provide predictive power on alternate data sets, the validation set.

While the derivation of the AIC is involved,59,114 the AIC expression itself is simple

| (27) |

where denotes the MLE of a given model.

Minimizing the AIC, which is equivalent to maximizing the likelihood subject to a penalty on parameter numbers, K, generates the preferred model. The AIC itself is an asymptotic result valid in the large data set limit though higher order corrections exist.114 The penalty term, 2K, is derived, not imposed by hand. Also, as we will see, it is a weaker penalty than that of the Bayesian information criterion that we now describe.

As a concrete example, suppose goodness-of-fit testing cannot rule out the possibility that the model itself is an undetermined sum of exponentials obscured by noise. The AIC would then be used to find the number of exponential components required without overfitting the data. Additional examples motivated from the biophysical literature are provided by Tavakoli et al.59

3.3.4.2. Bayesian Information Criterion

While the mathematical form for the Bayesian (or Schwartz) information criterion (BIC)174 used in model selection, that we will see shortly, may appear similar to the AIC, eq 27, it is conceptually very different. It follows from Bayesian logic with no recourse to information theory.59,114,175–177 As before, the derivation itself is involved and discussed in detail in Tavakoli et al.59

Unlike the AIC, the BIC searches for a true model173,178 that exists regardless of the number of data points N available used to find this model. That is, the model itself is assumed to be of fixed, albeit unknown, complexity, by contrast to the AIC which seeks an approximate model and is therefore more willing to grow the model complexity along with the size of the data set, N, available.

Briefly put, the BIC starts from a posterior for a model class with a fixed number of parameters, K, just as we had seen with our exponential example earlier. Following the logic of eq 9, we consider the average posterior that comes from summing over parameter values for each of the K parameters generating a marginal posterior.

If a candidate model contains too many parameters, then many of these parameters (integrated over all values that they can be assigned) will result in a small marginal posterior for that particular parameter number. By contrast, if too few parameters are present in the posterior, the model itself will be insufficiently complex to capture the data, and this will also yield small marginal posteriors.

From this logic follows the BIC

| (28) |

which differs from the AIC, eq 27, in the complexity penalty term (second term on the right-hand side).

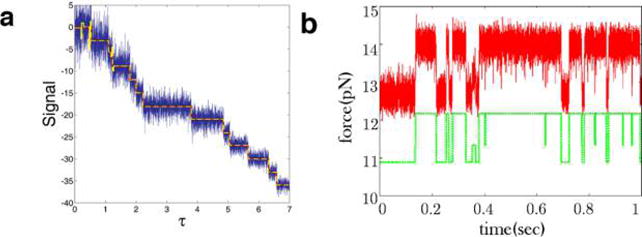

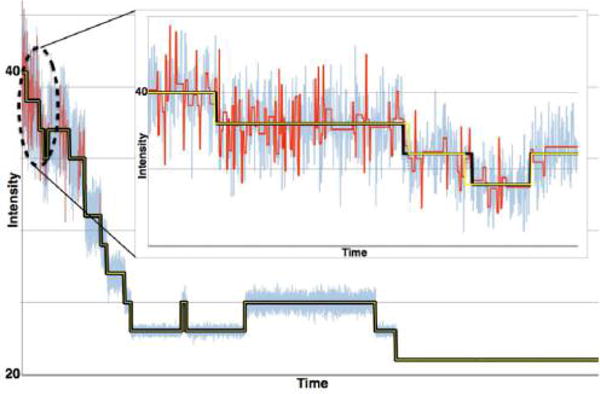

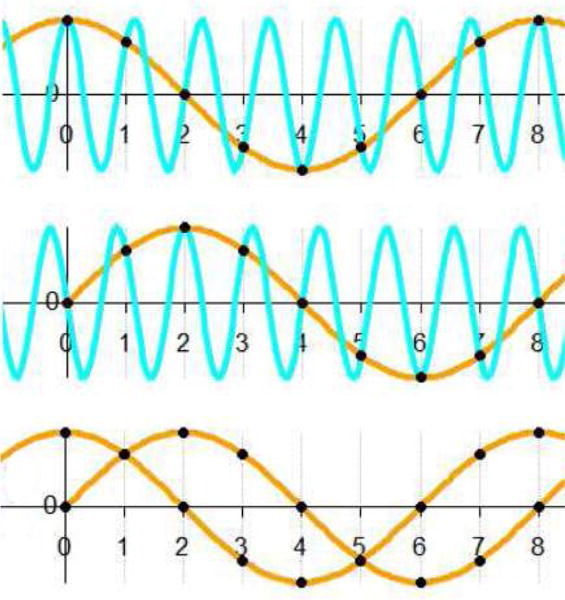

While the logic underlying both AIC and BIC is different, both AIC and BIC are used interchangeably in practice, and their performance is problem-specific59 (Figure 10). For instance, since the AIC tends to overfit small features it may underperform for slightly nonlinear models (which may have fewer parameters) and that may be obscured by noise but may be preferred for highly nonlinear models (with a correspondingly larger number of parameters) as small features may no longer be due to noise.172

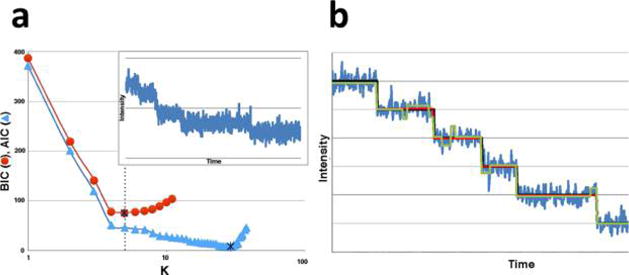

Figure 10.

AIC and BIC are often both applied to step-finding. (a) We generated 1000 data points with a background noise level of standard deviation σb = 20. On top of the background, we arbitrarily added 6 dwells (5 points where the mean of the process suddenly changes) with noise around the signal having a standard deviation of σs = 5 (see inset). At this high noise level, and for this particular application, the BIC outperforms the AIC and the minimum of the BIC is at the theoretical value of 5 (dotted line). This is because the AIC overinterprets noise. All noise is Gaussian and uncorrelated. (b) Here we show another synthetic time trace with hidden steps, generated in the same manner as the data set in (a). The AIC (green) finds a model that overfits the true model (black), while the BIC (red) does not. However, as we increase the number of steps (while keeping the total number of data points fixed), the AIC does eventually outperform the BIC. This is to be expected. The AIC assumes the model could be unbounded in complexity and therefore does not penalize additional steps (model parameters) as much. The BIC, by contrast, assumes that there exists a true model of finite complexity. The data set for this simulation was generated via a Python implementation of the Gillespie algorithm, while both the BIC and AIC were implemented via elementary Python scripts.

3.4. Overview of Bayesian Nonparametrics

Bayesian nonparametrics is a relatively recent (1973) approach to statistical modeling179 that fundamentally integrates the model selection step into the model-building process. In other words, it builds models whose complexity reflect that of the data.179–181 This is especially important to super-resolution and, more broadly, single molecule data analysis, as few model features, such as the number of states in an HMM, are initially known.

As we previously discussed, nonparametric approaches do make parametric assumptions. For instance, a particular nonparametric model may assume that measurements are iid. However, beyond these parametric constraints, nonparametric approaches allow for a possibly infinite number of parameters.

3.4.1. Dirichlet Process

The prior process, the analog of the prior used in parametric Bayesian inference, is of major importance in Bayesian nonparametrics. The most widely used of these processes is the Dirichlet process (DP) prior,179 along with its various representations,67,68,182 such as the “Chinese restaurant” process and the stick-breaking construction.181,183

Just as a prior in parametric statistics samples parameter values, the DP samples probability distributions. Probability density estimation,184 clustering,185 HMMs,183 and Markovian switching linear dynamical systems (SLDS),68 have all been generalized to the nonparametric case by exploiting the DP.

We introduce the DP with a parametric example and begin by considering probabilities of outcomes, π = {π1, π2, …, πK} with Σk πk = 1 and πk ≥ 0 for all k, distributed according to a Dirichlet distribution, π|α ~ Dirichlet (α1, …, αK). In other words

| (29) |

The Dirichlet distribution is conjugate to the multinomial distribution.

To build a posterior from the prior above, we introduce a multinomial likelihood with populations n = {n1, n2, …, nK} distributed over K unique bins

| (30) |

The resulting posterior obtained from the prior, eq 29, and likelihood, eq 30, is now

| (31) |

The multinomial shown above is a starting point for many inference problems. For example, eq 31 may represent the posterior probability of weights in mixture models, such as sums of Gaussians, and is used in clustering problems.184

To be more specific, the finite mixture model can be represented using the following sampling scheme with yi denoting the ith observation

| (32) |

Here si is the (latent or unobservable) cluster integer label, F(·) is a distribution governing the yi random variables assigned to cluster parametrizes F and the prior over the unobservable ’s are governed by the so-called “base distribution” H.184

Equation 32, formalizes the logic that if a model is a sum of Gaussians (i.e., a sum of , with each component indexed si with parameters , we draw an observation by first selecting a mixture component (according to the weight of each component, multinomial(π1, … πK)) and subsequently sampling a value from that particular mixture component (i.e., a Gaussian).

In the absence of prior knowledge on the expected mixture components, we set all αk to α/K and, under these assumptions, we can write down the probability of belonging to cluster (or mixture component) j184

| (33) |

where nj is the number of observations assigned to cluster “j” and .

The DP is the infinite dimensional (K → ∞) generalization of the Dirichlet prior distribution and is used in infinite mixture models.184 In infinite mixture models, the prior probability of occupying a pre-existing mixture components is

| (34) |

whereas the probability of creating a new component is

| (35) |

Before moving forward, some comments are in order. When doing classic finite mixture modeling, we needed to predefine the number of mixture components, K. Each K component has a prior probability given by eq 33; if we change K (and as a result change the yi cluster assignments), one is faced with a model selection problem. In the DP mixture model, the data determines the number of active clusters/states as well as the parameters needed to describe the data in an à la carte fashion within a single Bayesian posterior.

In both finite and infinite mixture models, the quality of the clustering strongly depends on the base distribution, H, since this distribution governs the θ which determines the similarity (or dissimilarity) between the probability distributions, F(·), associated with different clusters.

3.4.1.1. Infinite Mixture Models

Previously, in eq 32, we showed how to sample a priori mixture weights for finite mixture models. For infinite mixture components, we may combine the first two as well as the last two expressions of eq 32 and obtain

| (36) |

where α is the “concentration parameter” quantifying the preference for creating new clusters184 while H is a judiciously selected68,179,186 “base distribution” that plays the same role here as it did for finite mixture models in setting θk. G is the DP’s sample and it is a random distribution that can be represented as follows

| (37) |

where the Kronecker delta, , denotes a mass point for parameter value θk 67,187 and F*G denotes

| (38) |

It can be shown that the mean of the random distribution G is given by and its variance is (H(1–H))/(α + 1).108 Thus, as α increases, G approaches H. Hence the concentration parameter can be thought of as constraining the similarity between G and H.

While we have just described infinite mixture models, we have not yet described how to draw samples from the DP. DP itself is often represented by the “stick-breaking construction” which is easily implemented computationally182

Following this procedure, we find that G ~ DP(α, H).108

The analogy to stick-breaking here is motivated by imagining that we begin with a stick of unit length. We break it at a location, v1, sampled from a beta distribution v1 ~ beta(1, α). We set π1 = v1. The remainder of the stick has length (1–v1). We then assign to π1 a value of θ1 sampled from H and reiterate to get π2, so the πk are all sampled according to the stick-breaking construction. In practice, we terminate the stick breaking process when the remaining stick length falls below a prespecified cutoff. In the statistics literature, the resulting distribution for π is known as the Griffiths–Engen–McClosky (GEM) distribution.188

The DP powerfully generalizes previously finite models, such as the hidden Markov model,67 ubiquitously used across single molecule biophysics189 that we discuss in greater depth in section 7.3.2.

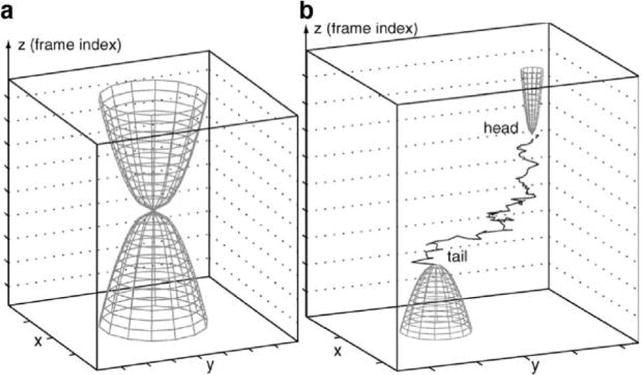

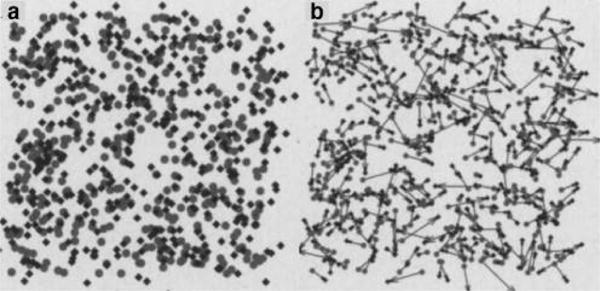

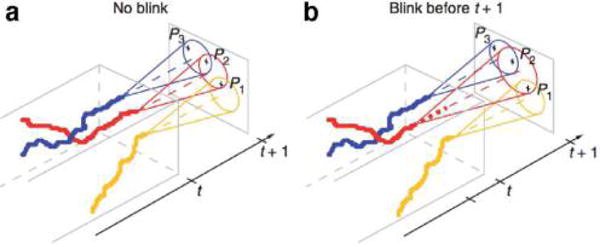

4. THE LOCALIZATION PROBLEM

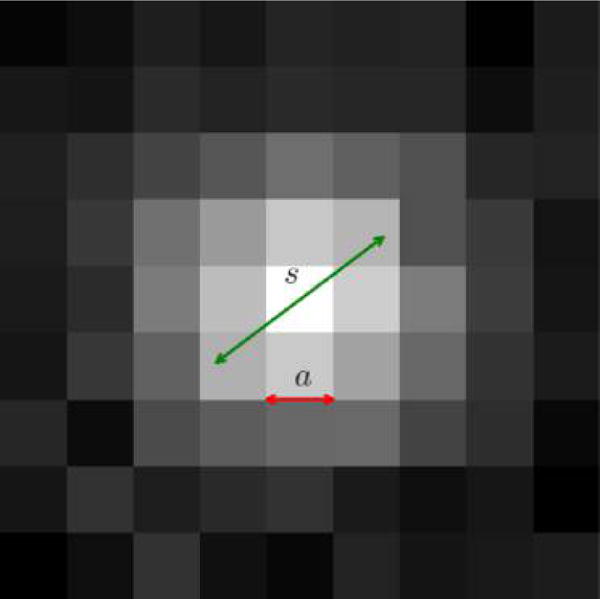

The localization problem is the first step in the analysis of a super-resolution data set and involves finding the position of a fluorescent molecule, x0 = (x0, y0), from an image I. The image itself is thought of as a matrix, whose elements describe individual intensities at each pixel.

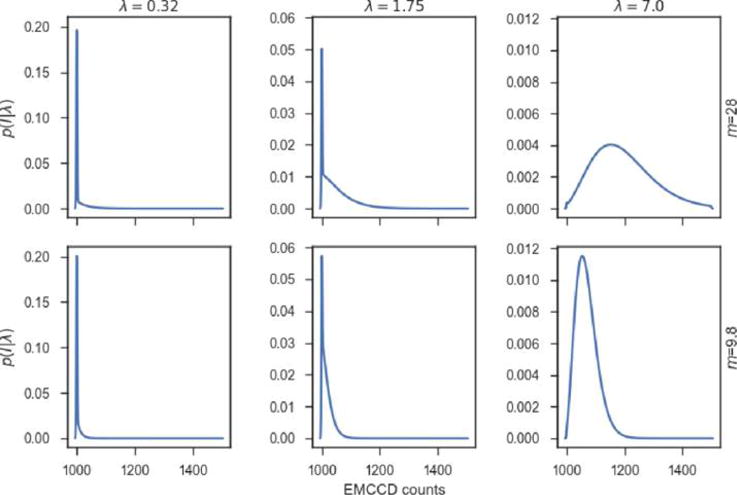

In order to localize a fluorophore, we must have a model describing the expected mean number of photons per frame in pixel x given the fluorophore location at position x0, λ(x; x0). Typically, λ(x; x0) is given by the point spread function of the imaging system. The intensity at each pixel at location x is itself distributed randomly, according to a distribution p(I(x)|λ(x; x0)), due to shot noise and readout noise.

We begin by describing readout noise (section 4.1) and follow with a discussion on identifying “regions of interest” (ROIs) containing fluorophores (section 4.2). Once positively identified, we draw from our discussion on maximum likelihood in order to describe inference frameworks used in localization in section 4.3. While theoretically attractive, maximum likelihood methods may be computationally expensive and require good noise models to outperform simpler approaches. For this reason, we describe performance criteria of localization methods ultimately used to judge whether the computational cost of a method is warranted in section 4.4. Sections 4.5 and 4.6 describe simpler localization strategies, including least-squares fit. Subsequent sections tackle generalizations of the methods discussed thus far: 3D super-resolution in section 4.7, simultaneous fitting of multiple emitters in section 4.8, and deconvolution-style approaches in section 4.9. Finally, we end with a note on drift correction in super-resolution (section 4.10) without which the best localization methods are of limited value.

4.1. Readout Noise in Single Molecule Experiments

Intuitively, one can expect photon shot noise to be partly responsible for reducing the accuracy of localization methods. Indeed, localization must be achieved with few photons per frame as the total photon budget of most fluorophores, meaning the number of photons collected before the fluorophore undergoes irreversible photobleaching, is limited to hundreds or thousands of photons.4,190 While greater brightnesses can be achieved by using quantum dots as fluorescent markers,191 they remain more challenging to deliver into cells and present toxicity concerns.192

Perhaps more unexpectedly, accurate localization also requires a model describing how a fluorophore’s emitted photons are converted into a camera readout. For instance, at a given illumination level, assuming an average number of photons strike the sample per frame per unit area, one may naively expect the camera’s readout at a given pixel, I = I(x), to be a Gaussian random variable identical for all pixels, or at least well approximated by such a description. In fact, as we now discuss, both Gaussian and identical assumptions are violated in practice.

Since few photons hit each camera pixel on any given frame, the Poisson limit theorem states that given the average number of photons λ for this pixel, the distribution of the actual number Np of such photons follows a Poisson distribution (“shot noise”)

| (39) |

where for notational simplicity, we let λ = λ(x; x0).

The total noise of the measurement arises from the convolution of this shot noise by a camera readout noise, that is neither necessarily normally distributed, nor pixel-independent.193 In other words, the readout I at a camera pixel is distributed according to a distribution p(I|Np) that is non-normal and pixel-dependent. As later described, we will use both knowledge of p(Np|λ) and p(I|Np) to address the localization problem.194

4.1.1. Camera Specific Readout

Two technologies, with different readout distributions, are widely used for single molecule imaging:194 the older EMCCD (electron-multiplication charge coupled device), where the electrons produced by a photon hitting a pixel are collected and amplified by chip-wide electronics, and the more recent sCMOS (scientific complementary metal oxide semiconductor), which offer higher sensitivity and read rates, at the cost of pixel-to-pixel noise variation (“fixed pattern noise”), by performing signal amplification at the pixel and column level.194