Abstract

Statistical classification is a critical component of utilizing metabolomics data for examining the molecular determinants of phenotypes. Despite this, a comprehensive and rigorous evaluation of the accuracy of classification techniques for phenotype discrimination given metabolomics data has not been conducted. We conducted such an evaluation using both simulated and real metabolomics datasets, comparing Partial Least Squares-Discriminant Analysis (PLS-DA), Sparse PLS-DA, Random Forests, Support Vector Machines (SVM), Artificial Neural Network, k-Nearest Neighbors (k-NN), and Naïve Bayes classification techniques for discrimination. We evaluated the techniques on simulated data generated to mimic global untargeted metabolomics data by incorporating realistic block-wise correlation and partial correlation structures for mimicking the correlations and metabolite clustering generated by biological processes. Over the simulation studies, covariance structures, means, and effect sizes were stochastically varied to provide consistent estimates of classifier performance over a wide range of possible scenarios. The effects of the presence of non-normal error distributions, the introduction of biological and technical outliers, unbalanced phenotype allocation, missing values due to abundances below a limit of detection, and the effect of prior-significance filtering (dimension reduction) were evaluated via simulation. In each simulation, classifier parameters, such as the number of hidden nodes in a Neural Network, were optimized by cross-validation to minimize the probability of detecting spurious results due to poorly tuned classifiers. Classifier performance was then evaluated using real metabolomics datasets of varying sample medium, sample size, and experimental design. We report that in the most realistic simulation studies that incorporated non-normal error distributions, unbalanced phenotype allocation, outliers, missing values, and dimension reduction, classifier performance (least to greatest error) was ranked as follows: SVM, Random Forest, Naïve Bayes, sPLS-DA, Neural Networks, PLS-DA and k-NN classifiers. When non-normal error distributions were introduced, the performance of PLS-DA and k-NN classifiers deteriorated further relative to the remaining techniques. Over the real datasets, a trend of better performance of SVM and Random Forest classifier performance was observed.

Keywords: metabolomic phenotyping, statistical classification, machine learning, discrimination, partial least squares-discriminant analysis, Random Forests, support vector machines, artificial Neural Networks, Naïve Bayes, k-Nearest Neighbors

1. Introduction

As the reactants, intermediates, and products of metabolic reactions, in vivo metabolite concentrations are reflective of stable hereditary factors such as DNA sequence and epigenetic modifications as well as transient stimuli that elicit metabolic responses over varying time domains. Many diseases—including prevalent human diseases such as diabetes [1], coronary artery disease [2], heart failure [3], and cancer [4]—are either caused by or result in metabolic dysregulation. Consequently, metabolite concentrations quantified from human samples report both constitutive diseases processes such as atherosclerosis [5] and acute disease events such as myocardial infarction [6] and cerebral infarction [7]. While metabolic phenotyping is well suited to inform clinical phenotype prediction, the success of this approach depends on the discriminative power of the statistical classification techniques employed. Consequently, we sought to conduct a thorough and rigorous analysis of classifier techniques for use in metabolomics, with special attention paid to high dimensional data as a common feature of untargeted analyses. In evaluating multiple statistical classification techniques, the optimization of different objective functions will lead to different results. An objective function of maximizing biological knowledge extraction may lead to the choice of simple, interpretable classifiers. In contrast, an objective function of error minimization may lead to the selection of “black box” classification techniques such as classifier ensembles for which conducting biological inference is not straightforward. In conducting our evaluations, we have defined minimizing classification error and cross-entropy loss objective functions, predicated on the assumption that, for metabolite concentrations to inform diagnostic or prognostic predictions, accuracy is more important than model interpretability. In selecting classification techniques to evaluate, we have sought to include classifiers with widespread utilization in metabolomics (e.g., PLS-DA), ensemble methods (e.g., Random Forests), methods that allow nonlinear discrimination functions and are robust given non-normal data (e.g., Support Vector Machines and Neural Networks), and methods with embedded feature selection (e.g., Sparse PLS-DA). In order to evaluate classifier performance, we utilized simulation studies designed to emulate an analysis workflow post analytical detection and quantification of metabolite abundances—that is, we assume method-specific data processing such as peak detection, signal deconvolution, and chromatographic alignment have already been conducted. While we refer to simulated abundances as metabolites for simplicity, our evaluations would generalize to datasets with ion features that have not been grouped or annotated as compounds. In addition to simulation studies, we evaluated classifier performance across three independent clinical datasets in which a principle aim was using metabolomics to facilitate a diagnostic determination.

We briefly introduce the classifier techniques evaluated and provide a high-level introduction to our analytical process in the following paragraphs. Partial least squares-discriminant analysis (PLS-DA) is a ubiquitous classification technique that has been widely utilized in metabolomics studies [8]. The objective of partial least squares (PLS) is to find latent components that maximize the sample covariance between sample phenotype and observed abundance data after applying linear transformations to both [9]. An advantage of PLS approaches is that the latent components are iteratively determined to maximize the remaining phenotype covariance, which facilitates straightforward dimension reduction (by considering a parsimonious set of the components that capture sufficient phenotypic variance) and can mitigate estimability issues arising from the presence of more metabolites then samples (p > n) and from multicollinearity. To generalize PLS regression to classification, a matrix of binary phenotype indicators can be used as dependent variables and a discriminant analysis such as Fisher’s discriminant analysis or nearest centroids can be conducted (hence PLS-DA). Given that metabolomics studies typically have (p ≫ n) that is, far more metabolites quantified than replicates, variable (metabolite) selection is often advisable. This artifact is especially pronounced when considering data with ion features. Sparse PLS-DA can be conceptualized as a modification of PLS-DA that embeds feature (metabolite) selection through regularization. Sparsity is enforced by penalizing the norm of the weights that define the linear transformations that relate the observed abundance data and the latent components [10,11]. Dependent on the penalization parameter, some of the individual metabolite weights may shrink to zero—effectively removing that metabolite from the model. While PLS methods aptly handle the multicollinearity present in metabolomics data due to abundance correlations within metabolic pathways, the latent components are linear combinations of the metabolites and assume metabolite abundances are approximately normally distributed. The need for nonlinear function approximation is warranted given consideration to the nonlinearity of enzyme kinetics (see, for example, [12]). Support vector machines (SVMs) are binary classifiers that seek to find linear hyperplanes that maximize the separation between classes [13]. SVMs can approximate nonlinear decision boundaries between classes by employing a linear or nonlinear mapping of the metabolite data to a higher dimensional space in which a separable or nearly-separable linear hyperplane between classes can be found. The strength of SVM classifications in nonlinear discrimination—of great benefit in metabolomics—stems from the ability of SVMs to approximate arbitrary continuous functions [14] (universal approximation). This desirable property has also been shown for Neural Networks (see, for example, [15] for a proof of universal function approximation for multilayer feedforward networks). Neural networks are so named as early work in this field [16] focused on developing mathematical models that mimic cognition—specifically, recognition via the activation of neurons and propagation of signals. A general feedforward network consists of three types of node layers: an input layer for inputting metabolite abundances, hidden layer(s) conceptualized as neurons that aggregate and process signals, and an output layer that is used for prediction (e.g., predicting phenotype). The final classification technique considered in this analysis was Random Forests. A Random Forest is an ensemble of classification or regression trees that employs bootstrap aggregation (“bagging”) and random subspace constraints to minimize the variance of model sampling [17,18]. Bagging is conducted in this context by constructing a collection of individual trees using repeated sampling with replacement from the original data and aggregating the trees into an ensemble for making predictions. Bagging is a form of model averaging that has been shown to increase accuracy in proportion to the degree to which the underlying model is sensitive to perturbations of training data [19]. Random Subspace constraints stipulate that during the iterative process of tree construction, only a random subset of metabolites will be considered in defining branch splits [20]. Enforcing a random subspace constraint improves the performance of the bagging strategy by reducing the correlation between the individual trees [18]. Naïve Bayes classifiers are derived from an application of Bayes’ Theorem to the multiclass classification problem. Naïve Bayes classifiers estimate the posterior probability of each phenotype label conditioned on observed metabolite abundances, predicated on the “naïve” assumption that the distribution of each metabolite is independent given phenotype [13,18]. The final classification technique considered, k-Nearest Neighbors (k-NN), estimates the posterior probability of each phenotype label for an observation by the empirical distribution of phenotype labels in the neighborhood of training examples most proximal to the observation with respect to a similarity measure [21].

We chose to evaluate the performance of the selected classification techniques using simulated datasets as evaluating performance on a single or small collection of real datasets would exhibit high variance. Evaluating performance on a large number of simulated datasets allows for more precise estimates of relative performance and for directly evaluating the effects of increased noise, increased nonlinearity, and/or departures from approximate normality. A significant hurdle in simulating metabolomics data is that such data is marked by a significant degree of pairwise and higher order partial correlations [22,23]. Metabolites in the same reaction or linked reactions function as substrates, intermediates, and products thus generating complex correlation structures. As a result, simulating metabolomics data necessitates generating multivariate distributions of correlated metabolites. Furthermore, as the enzymes that catalyze biochemical reactions are often subject to regulatory processes such as feedback inhibition [12], complex partial correlation structures must also be simulated. Acknowledging this, we simulated metabolite data in blocks representing biological processes. To ensure diversity in the simulation studies, random correlation matrices were generated for each simulation. Generating random correlation matrices requires specialized methods to ensure that the resulting matrices are positive definite. For this, we employed the method developed by Lewandowski et al. [24] which generates partial correlations using a graph (network) structure known as a C-vine. In addition to simulating realistic covariance structures, to ensure simulation studies mimicked untargeted metabolomics data, biological outliers, technical outliers, and missing values arising from abundances below the limit of detection were simulated. Further details are contained in the methods section.

2. Results

2.1. Simulated Metabolomics Data

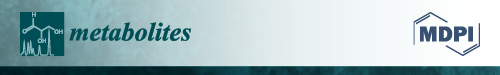

For both the baseline and realistic scenarios, 1000 simulation studies were conducted. The C-vine procedure (Figure 1) for generating random covariance matrices facilitated generating clusters of simulated metabolites to mimic discrete biological processes. An important aspect of this is the generation of partial correlations, as regulatory mechanisms such as feedback inhibition may generate such relationships.

Figure 1.

C-vine graph illustrating partial correlation structure. C-vines were utilized to generate biologically plausible metabolomics data. represents the correlation between metabolites and . represents the partial correlation between metabolites and after conditioning on and .

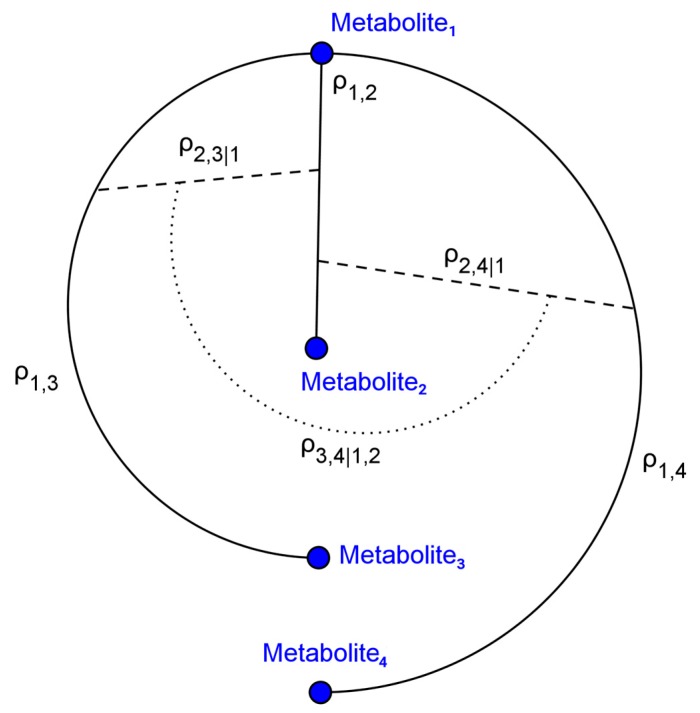

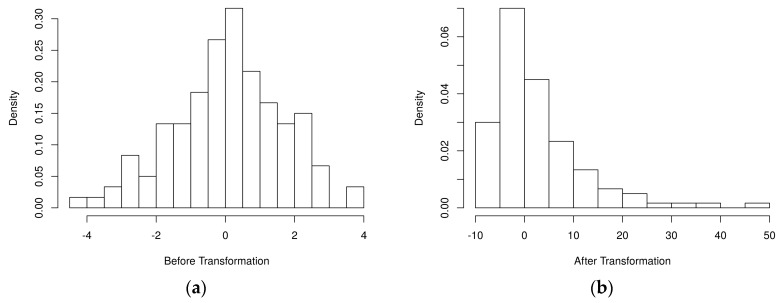

Figure 2 depicts the simulated metabolite abundance data from a randomly selected baseline scenario study both prior-to and post-significance filtering. Blocks of correlated metabolites are visible (column clusters) as expected given the data generation procedure. In the baseline scenarios, classifiers were evaluated on both the prior-to and post-significance filtered datasets such as those shown in Figure 2; in the realistic scenarios, further transformation was conducted. Figure 3 illustrates how abundance data was generated to follow a variety of random non-normal distributions for the realistic scenarios. In addition to introducing non-normal error distributions, in the realistic scenarios, biological and technical outliers were simulated and missing values were added to simulate abundances below a limit of detection.

Figure 2.

Heatmap showing simulated metabolite abundance data from a randomly selected baseline scenario before and after significance filtering. (a) prior to significance filtering: distinct clusters of metabolites can be discriminated as expected given the block-wise generation of correlated metabolites; (b) post-significance filtering.

Figure 3.

Histogram of an example simulated metabolite abundance distributions for each scenario. (a) baseline scenarios: metabolite abundances were simulated from multivariate normal distributions representing discrete biological processes (one metabolite shown); (b) realistic scenarios: metabolite abundances were initially generated as in the baseline scenarios. Then, simulated block-wise outliers were added to simulate biological outliers, metabolite-level outliers were added to simulate technical outliers, random nonlinear transformations were applied block-wise to generate non-normal error distributions, and missing values were added to simulate abundances below a limit of detection.

2.2. Evaluation of Classifier Performance in Simulation Studies

2.2.1. Aggregate Performance

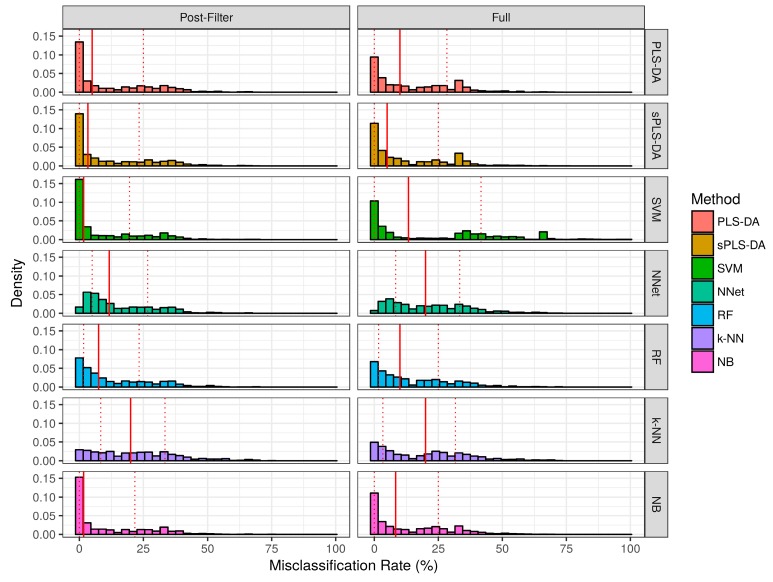

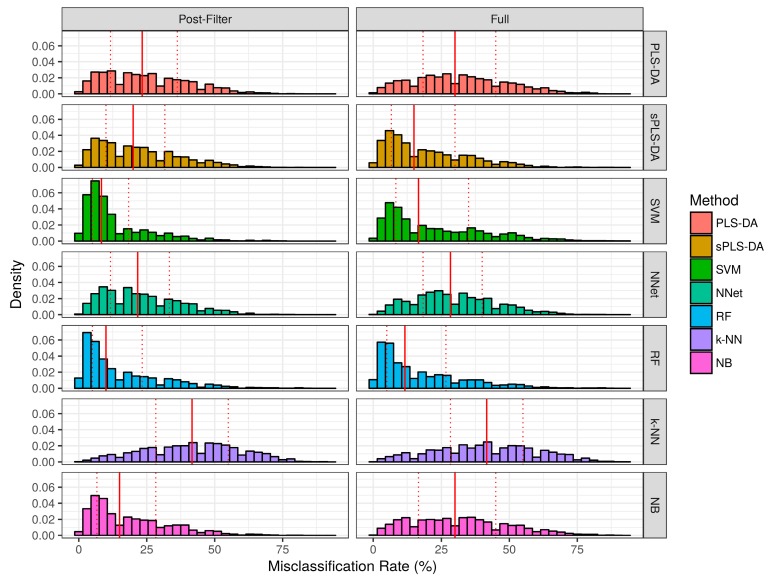

The misclassification rate for each technique over the simulation studies are summarized in Figure 4 and Table 1. Over the baseline scenarios and prior-to significance filtering, sPLS-DA exhibited a lower misclassification rate than the remaining techniques (Median ± Interquartile range: 5.0% ± 25.0%). Naïve bayes classifiers demonstrated the second lowest misclassification rate (Median ± Interquartile range: 8.3% ± 25.0%) in the baseline scenarios prior to significance filtering. Following sPLS-DA and Naïve Bayes, the performance of PLS-DA, Random Forests, and SVM was similar with respect to median misclassification rate. Neural networks and k-NN had higher median misclassifications rate than the other techniques. Prior to significance filtering, the spread of SVM performance was greater than the remaining techniques. The application of significance filtering improved the mean and median misclassification rate for each technique. In the realistic scenarios (prior-to and post-significance filtering), the performance of PLS-DA and k-NN classifiers deteriorated significantly more than the other techniques. Post-significance filtering in the realistic scenarios, the ascending order of median misclassification rates was as follows: SVM, Random Forest, Naïve Bayes, sPLS-DA, Neural Networks, PLS-DA and k-NN classifiers.

Figure 4.

Empirical distribution of the misclassification rate observed in baseline scenario simulation studies. Solid red line represents the median of each distribution, while dashed red lines represent the 1st and 3rd quantiles (25th and 75th percentile).

Table 1.

Misclassification rate (%) observed by technique and by significance filtering status (pre- vs. post-) throughout the 1000 baseline simulation studies and 1000 realistic simulation studies. The lowest median misclassification rate observed over each scenario type is shown in bold face.

| Method | Baseline Pre- | Baseline Post- | Realistic Pre- | Realistic Post- | ||||

|---|---|---|---|---|---|---|---|---|

| Mean ± SD | Median ± IQR | Mean ± SD | Median ± IQR | Mean ± SD | Median ± IQR | Mean ± SD | Median ± IQR | |

| PLS-DA | 15.0 ± 15.5 | 10.0 ± 28.3 | 13.1 ± 15.6 | 5.0 ± 25.0 | 32.2 ± 17.4 | 30.0 ± 26.7 | 25.0 ± 15.7 | 23.3 ± 24.6 |

| sPLS-DA | 13.1 ± 15.3 | 5.0 ± 25.0 | 12.1 ± 15.1 | 3.3 ± 23.3 | 19.7 ± 15.2 | 15.0 ± 23.3 | 22.0 ± 15.0 | 20.0 ± 21.7 |

| SVM | 23.2 ± 24.6 | 13.3 ± 41.7 | 10.4 ± 14.3 | 1.7 ± 19.6 | 22.8 ± 18.3 | 16.7 ± 26.7 | 13.3 ± 12.5 | 8.3 ± 13.3 |

| NNet | 22.0 ± 15.6 | 20.0 ± 25.0 | 15.9 ± 13.8 | 11.7 ± 21.7 | 29.9 ± 15.2 | 28.3 ± 21.7 | 23.3 ± 14.3 | 21.7 ± 21.7 |

| RF | 15.0 ± 14.8 | 10.0 ± 23.3 | 13.5 ± 14.4 | 7.5 ± 21.7 | 17.5 ± 16.0 | 11.7 ± 21.7 | 15.5 ± 15.0 | 10.0 ± 18.3 |

| k-NN | 20.2 ± 17.3 | 20.0 ± 28.3 | 21.9 ± 16.4 | 20.0 ± 25.0 | 41.3 ± 18.8 | 41.7 ± 26.7 | 41.6 ± 17.7 | 41.7 ± 26.7 |

| NB | 14.0 ± 15.3 | 8.3 ± 25.0 | 11.1 ± 14.6 | 1.8 ± 21.7 | 32.1 ± 18.2 | 30.0 ± 28.3 | 19.1 ± 14.8 | 15.0 ± 21.7 |

PLS-DA: Partial Least Squares-Discriminant Analysis; sPLS-DA: Sparse PLS-DA; SVM: Support Vector Machines; NNet: Artificial Neural Network; RF: Random Forest; k-NN: k-Nearest Neighbors; NB: Naïve Bayes

Cross-entropy loss over the simulation studies is summarized in Table 2 and Figure 5. Over the baseline scenarios prior-to significance filtering, SVM and Random Forest classifiers exhibited similar performance (Median ± IQR: 0.55 ± 0.52 and 0.70 ± 0.61, respectively); PLS-DA, sPLS-DA, Naïve Bayes, and Neural Networks were similar and higher than SVM/RF classifiers; k-NN classifiers exhibited the greatest cross-entropy loss. Post-significance filtering in the baseline scenarios, Naïve bayes classifiers exhibited the lowest cross-entropy loss, followed by SVM and Random Forests. As before, PLS-DA, sPLS-DA, and Neural Networks showed similar performance, while k-NN classifiers demonstrated the greatest cross-entropy loss. In the realistic scenarios post-significance filtering, the ascending order of cross-entropy loss was as follows: sPLS-DA, PLS-DA, Neural Networks, Random Forests, SVM, Naïve Bayes, and k-NN classifiers (Table 2).

Table 2.

Cross-entropy loss observed by technique and by significance filtering status (pre- vs. post-) throughout the 1000 baseline simulation studies and 1000 realistic simulation studies. The lowest median cross-entropy loss observed over each scenario type is shown in bold face.

| Method | Baseline Pre- | Baseline Post- | Realistic Pre- | Realistic Post- | ||||

|---|---|---|---|---|---|---|---|---|

| Mean ± SD | Median ± IQR | Mean ± SD | Median ± IQR | Mean ± SD | Median ± IQR | Mean ± SD | Median ± IQR | |

| PLS-DA | 1.18 ± 0.16 | 1.17 ± 0.23 | 1.04 ± 0.18 | 0.99 ± 0.30 | 1.51 ± 0.14 | 1.51 ± 0.19 | 1.50 ± 0.16 | 1.50 ± 0.20 |

| sPLS-DA | 1.03 ± 0.18 | 0.98 ± 0.30 | 1.02 ± 0.19 | 0.96 ± 0.29 | 1.49 ± 0.16 | 1.48 ± 0.20 | 1.50 ± 0.16 | 1.49 ± 0.20 |

| SVM | 0.64 ± 0.45 | 0.70 ± 0.61 | 0.42 ± 0.40 | 0.21 ± 0.56 | 1.95 ± 0.71 | 1.83 ± 0.95 | 1.90 ± 0.61 | 1.81 ± 0.77 |

| NNet | 1.12 ± 0.20 | 1.09 ± 0.32 | 1.03 ± 0.18 | 0.97 ± 0.29 | 1.53 ± 0.17 | 1.54 ± 0.22 | 1.51 ± 0.18 | 1.52 ± 0.22 |

| RF | 0.58 ± 0.36 | 0.55 ± 0.52 | 0.55 ± 0.34 | 0.51 ± 0.50 | 6.61 ± 17.57 | 1.66 ± 0.92 | 6.37 ± 16.38 | 1.65 ± 0.92 |

| k-NN | 54.8 ± 56.4 | 44.9 ± 54.3 | 60.8 ± 52.9 | 50.6 ± 46.1 | 345.0 ± 181.6 | 326.5 ± 257.2 | 343.7 ± 171.9 | 328.9 ± 244.3 |

| NB | 3.12 ± 3.86 | 1.20 ± 5.56 | 0.94 ± 1.27 | 0.11 ± 1.85 | 129.0 ± 113.4 | 94.4 ± 119.5 | 96.9 ± 92.2 | 65.7 ± 94.3 |

PLS-DA: Partial Least Squares-Discriminant Analysis; sPLS-DA: Sparse PLS-DA; SVM: Support Vector Machines; NNet: Artificial Neural Network; RF: Random Forest; k-NN: k-Nearest Neighbors; NB: Naïve Bayes

Figure 5.

Empirical distribution of the misclassification rate observed in the realistic scenario simulation studies. The solid red line represents the median of each distribution, while dashed red lines represent the 1st and 3rd quantiles (25th and 75th percentile).

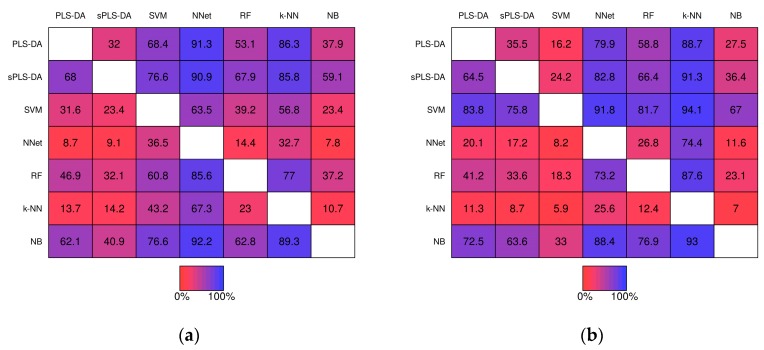

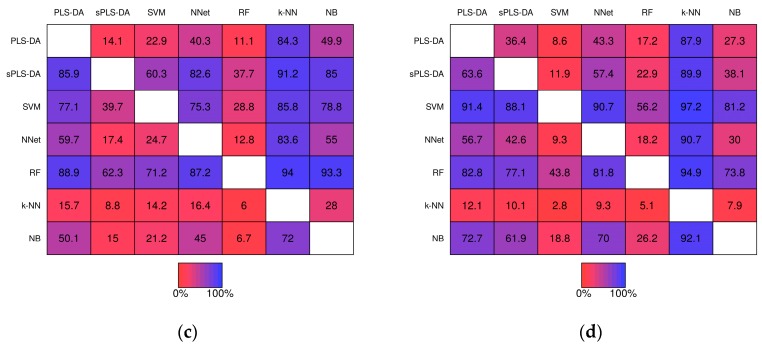

2.2.2. Pairwise Performance Comparisons within Simulation Studies

Pairwise comparisons of misclassification rate within the simulation studies are shown in Figure 6. For example, PLS-DA had a lower misclassification rate than sPLS-DA in 32.0% of the 1000 baseline scenario studies prior-to significance filtering (Figure 6a, row 1, column 2) and in 35.5% of the studies post-significance filtering (Figure 6b row 1, column 2). k-NN classifiers exhibited greater misclassification rate relative to the other techniques in the majority of studies with the exception of Neural Networks given baseline scenarios. In the realistic scenarios PLS-DA, exhibited a higher misclassification rate than each of the other techniques (except k-NN) within the same simulation the majority of the time.

Figure 6.

Matrices showing the proportion of the time a fixed technique performed better than another fixed technique during the same simulation study. Proportions were computed from the 1000 baseline simulation studies prior-to significance filtering (a) and post-significance filtering (b); and from the 1000 realistic simulation studies prior-to significance filtering (c) and post-significance filtering (d).

2.3. Performance over Real Datasets

Performance over the real datasets is shown in Table 3. Over the Adenocarcinoma study data, PLS-DA, Random Forest, and Naïve Bayes observed the lowest misclassification rate (17.9%) on the test dataset prior to significance filtering. Post-significance filtering a PLS-DA classifier demonstrated the lowest misclassification (7.1%) over the test data. With respect to cross-entropy loss, Random Forests demonstrated the lowest cross-entropy loss prior to significance filtering, and an SVM classifier exhibited the lowest cross-entropy loss post-significance filtering. Over the acute myocardial infarction (MI) study data, Random Forest classifiers had the lowest misclassification rate estimated by double cross-validation prior-to and post-significance filtering (22.1% and 7.9%). With respect to cross-entropy loss, Random Forest classifiers demonstrated lowest cross-validation estimated loss, while SVM classifiers demonstrated the lowest loss following significance filtering. Finally, over the NOS1AP variants dataset, an sPLS-DA classifier demonstrated the lowest misclassification rate prior-to significance filtering (2.1%) on the test data. Post-significance filtering, a Random Forest classifier demonstrated lowest misclassification (4.2%) on the test set. Random Forest classifiers demonstrated lowest cross-entropy loss when evaluated on the test dataset prior to and post-significance filtering.

Table 3.

Misclassification rate and cross-entropy loss observed over real datasets. Pre- represents pre-significance filtering while post- represents post-significance filtering. Lowest error is shown in bold face.

| Dataset | Technique | Misclassification (%) | Cross-Entropy Loss | ||

|---|---|---|---|---|---|

| Pre- | Post- | Pre- | Post- | ||

| Adenocarcinoma | PLS-DA | 17.9 | 7.1 | 0.78 | 0.68 |

| sPLS-DA | 32.1 | 14.3 | 0.83 | 0.72 | |

| RF | 17.9 | 14.3 | 0.68 | 0.57 | |

| SVM | 21.4 | 10.7 | 0.78 | 0.53 | |

| NNet | 21.4 | 28.6 | 0.77 | 0.86 | |

| k-NN | 28.6 | 14.3 | 61.2 | 30.8 | |

| NB | 17.9 | 10.7 | 4.85 | 2.56 | |

| Acute MI | PLS-DA | 47.4 | 42.1 | 1.41 | 1.28 |

| sPLS-DA | 47.4 | 15.8 | 1.43 | 1.35 | |

| RF | 22.1 | 7.9 | 1.08 | 0.76 | |

| SVM | 55.3 | 13.2 | 1.89 | 0.65 | |

| NNet | 47.4 | 31.6 | 1.47 | 1.16 | |

| k-NN | 44.7 | 39.5 | 95.3 | 106.4 | |

| NB | 42.1 | 15.8 | 164.0 | 20.3 | |

| NOS1AP | PLS-DA | 22.9 | 6.3 | 1.14 | 0.93 |

| Variants | sPLS-DA | 2.1 | 6.3 | 0.98 | 0.93 |

| RF | 6.3 | 4.2 | 0.27 | 0.21 | |

| SVM | 12.5 | 6.3 | 0.50 | 0.26 | |

| NNet | 16.7 | 6.3 | 1.08 | 0.86 | |

| k-NN | 41.7 | 8.3 | 88.8 | 17.8 | |

| NB | 12.5 | 6.3 | 4.14 | 1.75 | |

3. Discussion

In this report, we have detailed a rigorous and comprehensive evaluation of selected statistical classification techniques for discrimination of phenotype given metabolomic data. This work addresses a concern raised by others [8,25] that PLS-DA predominates classification in metabolomics without regard to potential limitations or misuses. In addition to PLS-DA, many of the classifier techniques included in this analysis have been utilized for achieving a classification or discrimination task in metabolomics (see for example: [26] for sPLS-DA, [27] for Random Forests, [28] for SVM, [29] for Neural Networks). Previous analyses of relative classifier performance such as a comparison of PLS-DA, SVM, and Random Forests detailed in both Gromski et al., [30] and Chen et al., [31] have been conducted over specific datasets. In the first analysis, Random Forests and SVM classifiers were shown to exhibit optimal performance and in the second the performance of Random Forests was shown to be optimal. The current study is novel in the use of simulation studies with stochastically varied parameters in order to evaluate the consistency of performance estimates in conjunction with an evaluation over a sample of real datasets. By stochastically varying parameters in the simulation studies including the number of metabolite clusters that differ between phenotypes, the effect size of differences, the degree of departure from approximate normality, the proportion of missing values, and the proportion of simulated biological and technical outliers, we have ensured that estimates of classifier performance are sufficiently general.

A few key conclusions are supported by the analysis of misclassification rate. First, the performance of PLS-DA, Neural Networks, and k-nearest neighbor classifiers was generally worse than other classification techniques. The deterioration of performance of PLS-DA classifiers with the introduction of realistic metabolomics data artifacts such as non-normal error distributions, outliers, and missing values was especially pronounced. In the scenario that is likely most relevant to metabolomics practitioners (“realistic scenarios” post-significance filtering) the ordering of most accurate to least was SVM, Random Forest, Naïve Bayes, sPLS-DA, Neural Networks, PLS-DA and k-NN classifiers. Over these scenarios, SVM classifiers demonstrated superior performance with respect to pairwise comparisons within the same simulations, while k-NN demonstrated inferior performance relative to other techniques. The lackluster relative performance of k-NN classifiers may be attributed to needing larger sample sizes than available in the simulated and real datasets in this analysis; modified versions have been proposed previously to optimize k-NN for small sample size problems [32]. Another conclusion supported by this work is that regularization of PLS-DA improves accuracy in addition to encouraging a sparser classifier. This is consistent with previous work given a regression as opposed to discrimination problem. Chun and Keles [33] demonstrated that the asymptotic consistency of PLS estimators does not hold for p ≫ n and that in the regression case with p ≫ n, regularization (sPLS) substantially decreased mean square error relative to PLS.

Significant conclusions can also be drawn from the analysis of cross-entropy loss. In the scenario that is likely most relevant to metabolomics practitioners (“realistic scenarios” post-significance filtering), the cross-entropy loss ordering (least to greatest error) was sPLS-DA, PLS-DA, Neural Networks, Random Forests, SVM, Naïve Bayes, and k-NN classifiers. While similar cross-entropy loss performance was observed for sPLS-DA, PLS-DA, Neural Networks, Random Forests, and SVM classifiers over the realistic scenarios post significance filtering, Naïve Bayes and k-NN classifiers performed substantially worse. As relatively high cross-entropy loss corresponds to a relatively low predicted probability of the true phenotype, Naïve Bayes and k-NN classifiers have a demonstrated tendency to make such errors over simulated metabolomics data. The difference in relative performance of the classifier techniques across the two loss functions considered demonstrates the impact loss function choice on the measurement of classifier accuracy. Cross-entropy loss function has the advantage of differential penalization of phenotype predictions based on predicted phenotype probability, while 0–1 loss (yielding the misclassification rate) considers only whether the most likely phenotype label matches the true phenotype. Consequently, it may be a more appropriate measure of accuracy for probabilistic reasoning in clinical applications.

A limitation of this work is that, while we have sought to minimize the effect of algorithm parameters on observed misclassification rate and cross-entropy loss by conducting extensive parameter tuning via cross-validation, the entire parameter space was not evaluated for multiple techniques. For example, while a thorough grid search (with smoothing) was conducted to select the Gaussian kernel bandwidth parameter for the SVM classifiers, the space of kernels not evaluated remains infinite. Additionally, in the current study we have defined measures of prediction error as the objective criteria for measuring classifier performance. However, other criteria such as model interpretability may be important for practitioners. This is especially the case when classifier techniques are used for hypothesis testing or for biological inference. Additionally, throughout this analysis we have evaluated each classifier with identical preprocessing steps prior to model fitting. For example, for dimension reduction we have chosen to employ a uniform univariate significance filtering process irrespective of the classifier technique. However, there exist classifier specific methods for feature selection such as support vector machine-recursive feature Elimination (SVM-RFE) [34] that may optimize the performance of a specific technique.

4. Materials and Methods

4.1. Simulated Metabolomics Data

Evaluation of classifier techniques for metabolomics-based phenotype discrimination requires simulation studies that realistically mimic data captured using analytical methods such as nuclear magnetic resonance or chromatography-coupled mass spectrometry from biological samples (e.g., cell or biofluid extract). While the distribution of metabolite abundances may have platform and/or sample medium specific artifacts, we posit that six features are common to untargeted metabolomics studies: (1) significant correlations and higher-order partial correlations between metabolites within biological processes, (2) a small proportion of differentially abundant metabolites localized specific biological processes, (3) a large number of quantified metabolites relative to sample size—most demonstrating variance orthogonal to phenotype, (4) non-Gaussian error distributions and nonlinear relationships between metabolite abundances and phenotype attributes, (5) metabolite abundance levels below a limit of detection, and (6) presence of biological and technical outliers.

Metabolites within biochemical processes are related by substrate, intermediate, and product relations thus generating complex correlation structures. Consequently, we generated simulated abundance data to follow multivariate distributions with covariance structures that allow for mimicking biological processes. Further, as the enzymes that catalyze biochemical reactions are often subject to regulatory processes such as feedback inhibition [12], we simulated complex partial correlation structures. We represent metabolite abundance data as a matrix of dimension n × p given n samples and p metabolites, sample phenotype labels as a vector y or as a matrix of binary indicators . For each simulation study dataset we generated 40 multivariate blocks of 25 metabolites. Each block, was generated such that followed a multivariate Gaussian distribution, that is: . The covariance matrices were each randomly generated using C-vines for simulating partial correlations between metabolites [24]. The algorithm for generating correlation matrices utilizing C-vines is presented below (Algorithm 1) and was developed by Lewandowski, Kurowicka and Joe [24]:

| Algorithm 1 |

| 1: Initialize

2: For do: 3: 4: For do: 5: Generate 6: End For 7: End For 8: |

Three phenotypes were simulated by supplying different means for a small proportion of simulated metabolite blocks. A reference phenotype had for all . The number of perturbed blocks in the comparator phenotypes was generated to follow a discrete uniform distribution, . The perturbed block means were generated using a hierarchical model with and . A simulated Bernoulli process with was employed to modulate the sign of . The “realistic” scenario data was generated as above with the added data generation step of applying a nonlinear transformation to the empirical cumulative distribution function of the multivariate gaussian blocks to generate randomly-parameterized general gaussian distributions (GGD). The probability distribution function of a GGD with location parameter zero is defined as [35]:

| (1) |

where is the standard Gaussian probability distribution function. In addition to introducing non-normal error distributions, a dirichlet-multinomial hierarchical model was used for simulating unbalanced phenotype distributions, Bernoulli processes were added to simulate biological and technical outliers, and an artificial lower limit of detection was introduced yielding a missing not at random (MNAR) mechanism.

To evaluate the hypothesis that significance filtering prior to classifier construction would have an impact on the relative performances of the techniques evaluated, within each simulation study, we evaluated performance prior-to and post-significance filtering. Significance filtering was conducted by filtering on metabolites with significant pairwise t-tests between groups at a significance level of in the baseline scenarios and pairwise wilcoxon rank-sum tests in the realistic scenarios.

4.2. Classification Techniques

4.2.1. Partial Least Squares-Discriminant Analysis (PLS-DA)

Partial least squares (for linear regression: PLS-R) has the following model formulation [36,37,38]:

| (2) |

In this formulation, and represent Gaussian noise, and are latent component matrices, and and are the loading matrices that relate the latent components to the observed metabolite abundances and the observed response variables . PLS algorithms seek to find weight vectors and such that is maximized [39]. Specifically, the nonlinear iterative partial least squares algorithm (NIPALS) may be used to find and . The algorithm pseudocode is presented as in rosipal [40] below (Algorithm 2). Until convergence, repeat:

| Algorithm 2 |

| 1: w = XT u/(uT u) 2: ‖w‖→1 3: t = Xw 4: c=YT t/(tT t) 5: ‖c‖→1 6: u=Yc. |

Finally, and can be found by ordinary least squares (OLS) regression and and are deflated. In our simulation studies and applications, we consider the first three score vectors, . To utilize PLS for classification is defined as a binary indicator matrix of sample phenotypes and a discriminant analysis is conducted following regression.

4.2.2. Sparse Partial Least Squares-Discriminant Analysis (sPLS-DA)

Lê Cao et al., [11] proposed a regularized version of PLS-DA to encourage sparsity in PLS modeling. In this section, we modify the description found in Lê Cao et al., [41] to maintain consistency. The objective of sPLS remains to find and such that is maximized, but now subject to penalization of the norm of . To proceed, we introduce a result from höskuldsson [39], that and are the vectors that satisfy:

| (3) |

given the singular value decomposition: . Consequently, the regularized optimization problem can be restated as:

| (4) |

where is the number of deflations. The penalization parameter was selected in each simulation study or real data analysis utilizing a grid search strategy (see Section 4.3).

4.2.3. Support Vector Machines (SVM)

Support vector machines (SVM) are binary classifiers that seek to find hyperplanes that maximize the separation between classes. These hyperplanes may be linear in the original space of metabolite abundances (of dimension ) or in a higher dimensional space (of dimension ) that allow for nonlinear boundaries in the original space [18]. A decision hyperplane for binary classification with as phenotype indicators has the following form [13]:

| (5) |

where is an arbitrary real valued function and is a vector of weights. This leads to the following optimization problem for [13,18]:

| (6) |

As the optimization problem in (6) seeks to maximize the margin, , that separates the phenotypes, SVM classifiers are often referred to as maximal margin classifiers. In the case that a hyperplane does not separate the observations by phenotype, then slack terms, , are added allowing for a “soft” margin and yielding the optimization problem:

| (7) |

where is a constant. The optimization problem is then solved by quadratic programming utilizing Lagrange multipliers. Conveniently, this optimization does not require explicit computation of the original data given new basis functions, that is , but rather the inner products [13,18]. Consequently, nonlinear transformations are usually defined in terms of the kernel function determined by the inner product, . In each simulation study or real data analysis, Radial (Gaussian) kernels: , were utilized with selected using a grid search strategy (see Section 4.3). As SVMs are binary classifiers, to employ SVMs for multi-phenotype discrimination, multiple classifiers need to be constructed and aggregated. In our analyses, we employ a “one-against-one” approach [42].

4.2.4. Neural Networks (NNet)

In this analysis, we evaluated feedforward Neural Networks for classification. A feed forward network is a class of directed acyclic graphs loosely inspired by models of cognition in which metabolite abundances are conceptualized as stimuli and phenotype predictions are conceptualized as perceptions [13,21]. Topologically, a feedforward network consists of an input layer (allowing for the transfer of metabolite abundances), one or more hidden layers for processing and aggregation of signals from earlier layers, and an output layer with each phenotype represented by a node. Bias nodes may be incorporated to introduce signal independent of topologically antecedent layers. Given this topological representation, the general formula (output for each phenotype) with implicit bias terms is [21]:

| (8) |

where and are continuous functions applied at output and hidden layer vertices, respectively; represent directed edges between input layer vertices and hidden layer vertices; represent directed edges between hidden layer vertices and output layer vertices; and represent “skip-layer” transfers from input layer vertices directly to output layer vertices. In our analyses, we have utilized “Resilient Backpropagation” (RPROP) for training Neural Network classifiers [43]. In general, backpropagation algorithms iteratively use training observations to compute the output of a network (“forward pass”) followed by computation of the partial derivatives of the error function with respect to network weights (“backward pass”) for updating the weights by gradient descent [21,44]. Resilient backpropagation modifies the weight updating step to adaptively modulate the magnitude of weight updating based on the sign of the partial derivatives [43].

4.2.5. Random Forests (RF)

A Random Forest (RF) classifier can be conceptualized as an ensemble of classification trees each constructed utilizing a bootstrap sample from the original data. The process of constructing individual classification trees proceeds by recursive binary splits (splitting a parent node into two daughter nodes) selected from a restricted subset of random variables (metabolites) and cutpoints [17,18]. Specifically, at each iteration, a set of candidate regions:

| (9) |

is generated following the selection of a set of random variables (metabolites) sampled with replacement from the bootstrapped data. After generating the regions, the empirical phenotype distribution is computed over each region , that is:

| (10) |

for each phenotype . For each region, a phenotype is then ascribed:

and are then chosen to minimze a measure of node impurity—in our case, the misclassification error: . Once and have been selected, the current parent node is split into the daughter nodes satisfying or . When generating an ensemble of individual classification trees, the correlation between individual trees estimated from the bootstrapped samples is reduced by enforcing a random subspaces constraint [20], considering at each binary split only a randomly drawn subset of variables (metabolites). Once an ensemble of trees has been aggregated as a Random Forest, predicted phenotype probabilities can be determined by aggregating individual tree predictions.

4.2.6. Naïve Bayes (NB)

Naïve Bayes classifiers are derived from a straightforward application of Bayes’ theorem to multiclass classification [13,18], that is:

| (11) |

Noting that is independent of , the phenotype label, the posterior distribution of phenotype labels is then proportional to the numerator of Label (11) only. Given the “naïve” assumption that the metabolite abundances are independent the posterior probability is then:

| (12) |

A Gaussian distribution is then assumed for each metabolite conditioned on phenotype, that is , and the Gaussian distribution parameters are estimated via maximum likelihood estimation.

4.2.7. k-Nearest Neighbors (k-NN)

k-Nearest Neighbors classifiers can also be derived from an application of Bayes’ theorem [13]. Given a set of training samples where represents metabolite abundances and represents the sample phenotype, phenotype probabilities for a new sample can be estimated using a neighborhood of the closest training samples with respect to a distance metric such as Euclidean distance. Representing number of samples within with phenotype label as and the total number of samples within as , the posterior probabilities of phenotype label are then:

| (13) |

4.3. Parameter Selection

Each of the classification techniques evaluated in the present study represent families of classifiers whose members are uniquely determined by algorithm parameters. Consequently, we sought to minimize the probability that an observed relative difference in classifier performance was due to sub-optimal parameter selection for one or more techniques. During the course of each simulation study (prior-to and post-significance filtering), parameter selection was conducted by minimizing expected cross-entropy loss estimated by cross-validation and smoothed over a parameter grid using kernel smoothing. For reproducibility, the relevant fixed and cross-validation selected algroithm parameters used in defining the classifiers are shown in Table 4.

Table 4.

Simulation study parameters.

| Technique | Parameter | Type | Value/Search Grid |

|---|---|---|---|

| PLS-DA | Number of components | Optimized | [1, 2, ..., 15] |

| Sparse PLS-DA | Number of components | Optimized | [1, 2, ..., 15] |

| Regularization () | Optimized | [0.1, ..., 0.9] by 0.1 | |

| Random Forest | Ensemble size | Fixed | 1000 |

| Random subspace size | Optimized | [5, ..., p] of length 25 | |

| SVM | Kernel | Fixed | Gaussian |

| Bandwidth () | Optimized | 10^[−5, ..., −1] of length 1000 †; 10^[−2, ..., 0] of length 1000 ‡ |

|

| Neural Network | Number of hidden layers | Optimized | 1 or 2 |

| Number of hidden nodes | Optimized | [15, ..., 100] by 5 | |

| Activation function | Fixed | Logistic | |

| Learning function | Fixed | Resilient Backpropagation | |

| Error function | Fixed | Cross-entropy Loss | |

| k-NN | Number of neighbors | Optimized | [1, 2, ..., 20] |

† Prior-to significance filtering. ‡ Post-significance filtering.

4.4. Evaluation of Classifier Performance

Classifier performance was evaluated by computation of the empirical risk (error) associated with two different loss functions [44]. Defining a phenotype prediction from a classifier, a 0–1 loss function is: , with associated empirical risk (the misclassification rate): . Cross-entropy loss is defined as: with empirical error: . While the misclassification rate measures the frequency of a classifier incorrectly classifying observations, the empirical cross-entropy error measures the average amount of extra information required to represent the true phenotypes with the predicted phenotypes. Consequently, the empirical cross-entropy error provides a measure of how well the predicted phenotypes “match” the true phenotypes. The distinction between these loss functions can be observed with the following case. Given a binary classification task, a misclassified observation with a predicted phenotype probability of 49% incurs less cross-entropy loss than a predicted phenotype probability of 0.1%. Given a 0–1 loss function, the computed loss would be the same for a misclassified observation with a predicted phenotype probability of 49% as a predicted phenotype probability of 0.1%.

4.5. Clinical Datasets

In addition to evaluation of classifier performance via simulation studies, classifier performance was evaluated over two clinical datasets. In the first, DeFilippis et al., [6] employed an untargeted approach for determining a plasma signature that differentiates between thrombotic myocardial infarction (MI), non-thrombotic MI, and stable coronary artery disease (CAD). Thrombotic MI is characterized by atherosclerotic plaque rupture/disruption that leads to the formation of a thrombus and the obstruction of a coronary artery [45] while non-thrombotic MI occur secondary to other causes such as blood supply demand mismatch during tachyarrhythmias, coronary artery spasm or low blood oxygen levels. Plasma samples from 23 subjects presenting with acute MI and 15 subjects with stable coronary artery disease undergoing cardiac catheterization were analyzed. Of the 23 acute MI subjects, 11 were adjudicated to be thrombotic MI and 12 were adjudicated to be non-thrombotic MI utilizing a strict criteria. 1,032 metabolites were detected and quantified by gas chromatography mass spectrometry (GC-MS with electron ionization), and ultra performance liquid chromatography mass spectrometry (UPLC-MS with electrospray ionization) in both positive and negative ion modes. Given the limited sample size, we employed a cross-validation approach to measuring classifier performance. In the second dataset, Fahrman et al. [46] sought to determine plasma or serum based biomarkers that could be used to detect adenocarcinoma lung cancer with better specificity than existing methods such as low-dose computed tomography. The researchers developed two case-control cohorts for the purpose of discovering and validating biomarkers of adenocarcinoma lung cancer. Untargeted gas chromatography time-of-flight mass spectrometry with electron ionization was used to determine metabolic abundances in both the discovery and validation cohorts. In our analysis of classifier performance, we utilized the plasma sample metabolite abundances from the second cohort and employed a train-test approach. In the second cohort, abundances of 413 metabolites were reported. In the final dataset, Zhang [47] conducted a metabolomics analysis of serum from healthy subjects with different NOS1AP (Nitric Oxide Synthase 1 Adaptor Protein) rs12742393 polymorphisms. In this serurm from AA, AC, CC genotypes were examined by GC-TOF-MS and UPLC-QTOF-MS. Error was quantified over the adenocarcinoma dataset and the NOS1AP dataset using withheld test sets of 1/3 of the total observations. Over the acute MI dataset, error was estimated using repeated double cross-validation [48].

4.6. Statistical Software

The simulation studies and analyses over real datasets were conducted in the R environment [49] and made use of functions from the following packages: clusterGeneration [50], class [51], randomForest [52] , e1071 [53], neuralnet [54], caret [55], cvTools [56], dplyr [57], and tidyr [58].

5. Conclusions

The analysis reported supports a few conclusions regarding classifier accuracy for application in untargeted metabolomics. In the most realistic simulation studies that incorporated non-normal error distributions, unbalanced phenotype allocation, outliers, missing values, and dimension reduction, classifier performance (least to greatest error) was ranked as follows: SVM, Random Forest, Naïve Bayes, sPLS-DA, Neural Networks, PLS-DA and k-NN classifiers. When non-normal error distributions were introduced, the performance of PLS-DA and k-NN classifiers deteriorated further relative to the remaining techniques. Over the real datasets, a trend of better performance of SVM and Random Forest classifier performance was observed. Finally, this work demonstrates that relative classifier performance is not invariant given choice of loss function.

Acknowledgments

This work was supported in part by a grant from the American Heart Association (11CRP7300003) and the National Institute of General Medical Sciences (GM103492). Shesh N. Rai was supported by the Wendell Cherry Chair in Clinical Trial Research and generous support from the James Graham Brown Cancer Center. This work was conducted in part using the resources of the University of Louisville’s research computing group and the Cardinal Research Cluster. The authors thank Samantha M. Carlisle for her review and insights.

Supplementary Materials

R scripts for conducting the simulation studies are publicly available via GitHub (http://github.com/trainorp/MetabClass).

Author Contributions

Andrew P. DeFilippis and Shesh N. Rai conceived and designed the experiments; Patrick J. Trainor performed the experiments and analyzed the data; Patrick J. Trainor wrote the paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1.Del Prato S., Marchetti P., Bonadonna R.C. Phasic insulin release and metabolic regulation in type 2 diabetes. Diabetes. 2002;51(Suppl. 1):S109–S116. doi: 10.2337/diabetes.51.2007.S109. [DOI] [PubMed] [Google Scholar]

- 2.Freeman M.W. Principles of Molecular Medicine. Humana Press; New York, NY, USA: 2006. Lipid metabolism and coronary artery disease; pp. 130–137. [Google Scholar]

- 3.Ashrafian H., Frenneaux M.P., Opie L.H. Metabolic mechanisms in heart failure. Circulation. 2007;116:434–448. doi: 10.1161/CIRCULATIONAHA.107.702795. [DOI] [PubMed] [Google Scholar]

- 4.Cairns R.A., Harris I.S., Mak T.W. Regulation of cancer cell metabolism. Nat. Rev. Cancer. 2011;11:85–95. doi: 10.1038/nrc2981. [DOI] [PubMed] [Google Scholar]

- 5.Chen X., Liu L., Palacios G., Gao J., Zhang N., Li G., Lu J., Song T., Zhang Y., Lv H. Plasma metabolomics reveals biomarkers of the atherosclerosis. J. Sep. Sci. 2010;33:2776–2783. doi: 10.1002/jssc.201000395. [DOI] [PubMed] [Google Scholar]

- 6.DeFilippis A.P., Trainor P.J., Hill B.G., Amraotkar A.R., Rai S.N., Hirsch G.A., Rouchka E.C., Bhatnagar A. Identification of a plasma metabolomic signature of thrombotic myocardial infarction that is distinct from non-thrombotic myocardial infarction and stable coronary artery disease. PLoS ONE. 2017;12:e0175591. doi: 10.1371/journal.pone.0175591. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Jung J.Y., Lee H.S., Kang D.G., Kim N.S., Cha M.H., Bang O.S., Ryu D.H., Hwang G.S. 1H-NMR-based metabolomics study of cerebral infarction. Stroke. 2011;42:1282–1288. doi: 10.1161/STROKEAHA.110.598789. [DOI] [PubMed] [Google Scholar]

- 8.Gromski P.S., Muhamadali H., Ellis D.I., Xu Y., Correa E., Turner M.L., Goodacre R. A tutorial review: Metabolomics and partial least squares-discriminant analysis—A marriage of convenience or a shotgun wedding. Anal. Chim. Acta. 2015;879:10–23. doi: 10.1016/j.aca.2015.02.012. [DOI] [PubMed] [Google Scholar]

- 9.Frank I.E., Friedman J.H. A statistical view of some chemometrics regression tools. Technometrics. 1993;35:109. doi: 10.1080/00401706.1993.10485033. [DOI] [Google Scholar]

- 10.Lê Cao K.-A., Martin P.G.P., Robert-Granié C., Besse P. Sparse canonical methods for biological data integration: Application to a cross-platform study. BMC Bioinform. 2009;10:34. doi: 10.1186/1471-2105-10-34. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Lê Cao K.-A., Rossouw D., Robert-Granié C., Besse P. A sparse PLS for variable selection when integrating omics data. Stat. Appl. Genet. Mol. Biol. 2008;7 doi: 10.2202/1544-6115.1390. [DOI] [PubMed] [Google Scholar]

- 12.Voet D., Voet J.G., Pratt C.W. Fundamentals of Biochemistry: Life at the Molecular Level. 4th ed. Wiley; Hoboken, NJ, USA: 2013. [Google Scholar]

- 13.Bishop C.M. Pattern Recognition and Machine Learning. Springer; New York, NY, USA: 2006. [Google Scholar]

- 14.Hammer B., Gersmann K. A note on the universal approximation capability of support vector machines. Neural Processing Lett. 2003;17:43–53. doi: 10.1023/A:1022936519097. [DOI] [Google Scholar]

- 15.Hornik K. Approximation capabilities of multilayer feedforward networks. Neural Netw. 1991;4:251–257. doi: 10.1016/0893-6080(91)90009-T. [DOI] [Google Scholar]

- 16.McCulloch W.S., Pitts W. A logical calculus of the ideas immanent in nervous activity. Bull. Math. Biophys. 1943;5:115–133. doi: 10.1007/BF02478259. [DOI] [PubMed] [Google Scholar]

- 17.Breiman L. Random forests. Mach. Learn. 2001;45:5–32. doi: 10.1023/A:1010933404324. [DOI] [Google Scholar]

- 18.Hastie T., Tibshirani R., Friedman J.H. The Elements of Statistical Learning: Data Mining, Inference, and Prediction. 2nd ed. Springer; New York, NY, USA: 2009. [Google Scholar]

- 19.Breiman L. Bagging predictors. Mach. Learn. 1996;24:123–140. doi: 10.1007/BF00058655. [DOI] [Google Scholar]

- 20.Tin Kam H. The random subspace method for constructing decision forests. IEEE Trans. Pattern Anal. Mach. Intell. 1998;20:832–844. doi: 10.1109/34.709601. [DOI] [Google Scholar]

- 21.Ripley B.D. Pattern Recognition and Neural Networks. Cambridge University Press; Cambridge, UK: 1996. [Google Scholar]

- 22.Camacho D., de la Fuente A., Mendes P. The origin of correlations in metabolomics data. Metabolomics. 2005;1:53–63. doi: 10.1007/s11306-005-1107-3. [DOI] [Google Scholar]

- 23.Steuer R. Review: On the analysis and interpretation of correlations in metabolomic data. Brief. Bioinform. 2006;7:151–158. doi: 10.1093/bib/bbl009. [DOI] [PubMed] [Google Scholar]

- 24.Lewandowski D., Kurowicka D., Joe H. Generating random correlation matrices based on vines and extended onion method. J. Multivar. Anal. 2009;100:1989–2001. doi: 10.1016/j.jmva.2009.04.008. [DOI] [Google Scholar]

- 25.Brereton R.G., Lloyd G.R. Partial least squares discriminant analysis: Taking the magic away. J. Chemom. 2014;28:213–225. doi: 10.1002/cem.2609. [DOI] [Google Scholar]

- 26.Jiang M., Wang C., Zhang Y., Feng Y., Wang Y., Zhu Y. Sparse partial-least-squares discriminant analysis for different geographical origins of salvia miltiorrhizaby 1H-NMR-based metabolomics. Phytochem. Anal. 2014;25:50–58. doi: 10.1002/pca.2461. [DOI] [PubMed] [Google Scholar]

- 27.Gao R., Cheng J., Fan C., Shi X., Cao Y., Sun B., Ding H., Hu C., Dong F., Yan X. Serum metabolomics to identify the liver disease-specific biomarkers for the progression of hepatitis to hepatocellular carcinoma. Sci. Rep. 2015;5:18175. doi: 10.1038/srep18175. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Guan W., Zhou M., Hampton C.Y., Benigno B.B., Walker L.D., Gray A., McDonald J.F., Fernández F.M. Ovarian cancer detection from metabolomic liquid chromatography/mass spectrometry data by support vector machines. BMC Bioinform. 2009;10:259. doi: 10.1186/1471-2105-10-259. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Brougham D.F., Ivanova G., Gottschalk M., Collins D.M., Eustace A.J., O’Connor R., Havel J. Artificial neural networks for classification in metabolomic studies of whole cells using 1H nuclear magnetic resonance. J. Biomed. Biotechnol. 2011;2011 doi: 10.1155/2011/158094. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Gromski P.S., Xu Y., Correa E., Ellis D.I., Turner M.L., Goodacre R. A comparative investigation of modern feature selection and classification approaches for the analysis of mass spectrometry data. Anal. Chim. Acta. 2014;829 doi: 10.1016/j.aca.2014.03.039. [DOI] [PubMed] [Google Scholar]

- 31.Chen T., Cao Y., Zhang Y., Liu J., Bao Y., Wang C., Jia W., Zhao A. Random forest in clinical metabolomics for phenotypic discrimination and biomarker selection. Evid.-Based Complement. Altern. Med. 2013;2013 doi: 10.1155/2013/298183. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Parthasarathy G., Chatterji B.N. A class of new knn methods for low sample problems. IEEE Trans. Syst. Man Cybern. 1990;20:715–718. doi: 10.1109/21.57285. [DOI] [Google Scholar]

- 33.Chun H., Keleş S. Sparse partial least squares regression for simultaneous dimension reduction and variable selection. J. R. Stat. Soc. Ser. B. 2010;72:3–25. doi: 10.1111/j.1467-9868.2009.00723.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Guyon I., Weston J., Barnhill S., Vapnik V. Gene selection for cancer classification using support vector machines. Mach. Learn. 2002;46:389–422. doi: 10.1023/A:1012487302797. [DOI] [Google Scholar]

- 35.Nadarajah S. A generalized normal distribution. J. Appl. Stat. 2005;32:685–694. doi: 10.1080/02664760500079464. [DOI] [Google Scholar]

- 36.Rosipal R., Trejo L.J. Kernel partial least squares regression in reproducing kernel hilbert space. J. Mach. Learn. Res. 2001;2:97–123. [Google Scholar]

- 37.Boulesteix A.-L. PLS dimension reduction for classification with microarray data. Stat. Appl. Genet. Mol. Biol. 2004;3:1–30. doi: 10.2202/1544-6115.1075. [DOI] [PubMed] [Google Scholar]

- 38.Boulesteix A.L., Strimmer K. Partial least squares: A versatile tool for the analysis of high-dimensional genomic data. Brief. Bioinform. 2006;8:32–44. doi: 10.1093/bib/bbl016. [DOI] [PubMed] [Google Scholar]

- 39.Höskuldsson A. Pls regression methods. J. Chemom. 1988;2:211–228. doi: 10.1002/cem.1180020306. [DOI] [Google Scholar]

- 40.Rosipal R. Chemoinformatics and Advanced Machine Learning Perspectives: Complex Computational Methods and Collaborative Technqiues. IGI Global; Hershey, PA, USA: 2011. Nonlinear partial least squares: An overview; pp. 169–189. [Google Scholar]

- 41.Lê Cao K.-A., Boitard S., Besse P. Sparse pls discriminant analysis: Biologically relevant feature selection and graphical displays for multiclass problems. BMC Bioinform. 2011;12:253. doi: 10.1186/1471-2105-12-253. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Chih-Wei H., Chih-Jen L. A comparison of methods for multiclass support vector machines. IEEE Trans. Neural Netw. 2002;13:415–425. doi: 10.1109/72.991427. [DOI] [PubMed] [Google Scholar]

- 43.Riedmiller R., Braun H. A direct adaptive method for faster backpropagation learning: The rprop algorithm; Proceedings of the IEEE International Conference on Neural Networks; San Francisco, CA, USA. 28 March–1 April 1993. [Google Scholar]

- 44.Vapnik V.N. Statistical Learning Theory. Wiley; New York, NY, USA: 1998. [Google Scholar]

- 45.Thygesen K., Alpert J.S., Jaffe A.S., Simoons M.L., Chaitman B.R., White H.D., Writing Group on the Joint ESC/ACCF/AHA/WHF Task Force for the Universal Definition of Myocardial Infarction. Thygesen K., Alpert J.S., White H.D., et al. Third universal definition of myocardial infarction. J. Am. Coll. Cardiol. 2012;60:1581–1598. doi: 10.1016/j.jacc.2012.08.001. [DOI] [PubMed] [Google Scholar]

- 46.Fahrmann J.F., Kim K., DeFelice B.C., Taylor S.L., Gandara D.R., Yoneda K.Y., Cooke D.T., Fiehn O., Kelly K., Miyamoto S. Investigation of metabolomic blood biomarkers for detection of adenocarcinoma lung cancer. Cancer Epidemiol. Biomark. Prev. 2015;24:1716–1723. doi: 10.1158/1055-9965.EPI-15-0427. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Yinan Z. Metabolomic Study on a Schizophrenia and Type 2 Diabetes Susceptibility Gene nos1ap-rs12742393. [(accessed on 20 June 2017)];2017 Available online: http://www.metabolomicsworkbench.org/data/DRCCMetadata.php?Mode=Project&ProjectID=PR000416.

- 48.Filzmoser P., Liebmann B., Varmuza K. Repeated double cross validation. J. Chemom. 2009;23:160–171. doi: 10.1002/cem.1225. [DOI] [Google Scholar]

- 49.R Development Core Team . R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing; Vienna, Austria: 2016. [Google Scholar]

- 50.Qiu W., Joe H. [(accessed on 20 June 2017)];Clustergeneration: Random Cluster Generation (with Specified Degree of Separation) 2015 version 1.3. Available online: https://cran.r-project.org/web/packages/clusterGeneration/index.html.

- 51.Venables W.N., Ripley B.D., Venables W.N. Modern Applied Statistics with s. 4th ed. Springer; New York, NY, USA: 2002. [Google Scholar]

- 52.Liaw A., Wiener M. Classification and regression by randomforest. R News. 2002;2:18–22. [Google Scholar]

- 53.Meyer D., Dimitriadou E., Hornik K., Weingessel A., Leisch F. E1071: Misc Functions of the Department of Statistics, Probability Theory Group. Version 1.6. [(accessed on 20 June 2017)];2016 Available online: https://cran.r-project.org/web/packages/e1071/index.html.

- 54.Fritsch S., Guenther F. Neuralnet: Training of Neural Networks. Version 1.33. [(accessed on 20 June 2017)];2016 Available online: https://cran.r-project.org/web/packages/neuralnet/index.html.

- 55.Khun M. Caret: Classification and Regression Training. Version 6.76. [(accessed on 20 June 2017)];2017 Available online: https://cran.r-project.org/web/packages/caret/index.html.

- 56.Alfons A. Cvtools: Cross-Validation Tools for Regression Models. Version 0.3.2. [(accessed on 20 June 2017)];2016 Available online: https://cran.r-project.org/web/packages/cvTools/index.html.

- 57.Wickham H., Francois R. Dplyr: A Grammar of Data Manipulation. Version 0.6.0. [(accessed on 20 June 2017)];2016 Available online: https://cran.r-project.org/web/packages/dplyr/index.html.

- 58.Wickham H. Tidyr: Easily Tidy Data with ‘Spread()’ and ‘Gather()’ Functions. Version 0.6.0. [(accessed 20 June 2017)];2016 Available online: https://cran.r-project.org/web/packages/tidyr/index.html.