Abstract

Facial expression of emotion is a foundational aspect of social interaction and nonverbal communication. In this study, we use a computer-animated 3D facial tool to investigate how dynamic properties of a smile are perceived. We created smile animations where we systematically manipulated the smile’s angle, extent, dental show, and dynamic symmetry. Then we asked a diverse sample of 802 participants to rate the smiles in terms of their effectiveness, genuineness, pleasantness, and perceived emotional intent. We define a “successful smile” as one that is rated effective, genuine, and pleasant in the colloquial sense of these words. We found that a successful smile can be expressed via a variety of different spatiotemporal trajectories, involving an intricate balance of mouth angle, smile extent, and dental show combined with dynamic symmetry. These findings have broad applications in a variety of areas, such as facial reanimation surgery, rehabilitation, computer graphics, and psychology.

Introduction

The ability to express emotional intent via facial expression is a foundational aspect of social interaction and nonverbal communication. Since Paul Ekman’s pioneering work [1–3], much effort has been devoted to the study of the emotional processing of facial expressions. Research has revealed that a variety of different emotional cues can be perceived within 100 to 200 ms after encountering a face [4, 5]. Furthermore, studies have shown that evaluations of facial emotions can have immensely important societal outcomes, e.g., recognizing an angry face to avoid a threat or recognizing a trustworthy face to determine a good leader [6]. The smile is the most well-studied facial expression, given that smiles are used frequently during interpersonal interactions [7]. Previous research suggests that an inability to effectively smile increases one’s risk for depression [8], which highlights the smile’s important role in mental health.

Unfortunately, tens of thousands of individuals each year suffer from trauma, cerebrovascular accidents (strokes), neurologic conditions, cancers, and infections that rob them of the ability to express emotions through facial movement [9]. The psychological and social consequences are significant. Individuals with partial facial paralysis are often misinterpreted, have trouble communicating, become isolated, and report anxiety, depression, and decreased self-esteem [8, 10, 11]. One option for such individuals is known as facial reanimation, which consists of surgery and rehabilitation aimed at restoring facial movement and expression. Despite the prevalence of facial reanimation procedures, clinicians lack rigorous quantitative definitions of what constitutes a socio-emotionally effective facial expression [12–14]. The key question is this: what spatial and temporal characteristics are most pertinent for displaying emotions in a real-world (dynamic) setting?

While much has been discovered about the psychology of facial expression and the perception of emotions, less is known about the impact of dynamic elements, e.g., the rate of mouth movement, left-right asymmetries, etc. This is largely because the vast majority of facial expression studies have been conducted on static images of actors expressing different facial emotions, thereby ignoring the temporal component associated with these expressions. In real-world applications, the dynamics of a facial expression can drastically influence facial perception [5, 15, 16]. Successfully treating patients who have facial movement disorders fundamentally requires an adequate understanding of both the spatial and temporal properties of effective emotional expression. However, the temporal components of facial emotional perception are less frequently studied, because systematically manipulating the timing of emotional expressions is a very difficult task—even for the best-trained actor.

Synthetic models of human facial expressions [16–18] offer exciting possibilities for the study of spatial and temporal aspects of dynamic expressions of emotion. Past research has revealed that spatial and temporal characteristics of dynamic facial expressions can be useful for distinguishing between different types of smiles [19, 20]. Furthermore, some studies have shown that the dynamics of facial expressions can have important, real-world economic and social outcomes [21, 22], and other studies have examined the role of symmetry (or asymmetry) in dynamic facial expressions [23, 24]. The general consensus is that dynamic aspects of facial expressions can have noteworthy affects on the perception of the expression, and more work is needed to understand how subtle spatiotemporal changes of facial expressions alter their intended meaning.

In this work, we leverage recent advances in computer animation and statistical learning to explore the spatiotemporal properties associated with a successful smile. Specifically, we develop a 3D facial tool capable of creating dynamic facial expressions, which allows us to isolate and manipulate individual features of lip motion during a smile. The resulting tool is able to control the timing of a smile to a greater degree than is possible with trained actors, and allows us to manipulate clinically relevant features of smiles. Using this tool, we investigate which combinations of spatial (i.e., mouth angle, smile extent, and dental show) and temporal (i.e., delay asymmetry) parameters produce smiles judged to be “successful” (i.e., effective, genuine, and pleasant) by a large sample of fairgoers (802 participants).

For this study, we focused on analyzing only the effect of mouth motion, given that (i) smiling impairment due to restricted mouth motion has been specifically shown to increase depressive symptoms in patients with facial neuromuscular disorders [8], and (ii) existing surgical interventions have shown particular success in rehabilitating corresponding muscles after trauma [9]. Although orbicularis oculi contraction is important in Duchenne smiles [7], to date, techniques are limited in restoring periocular movement. Multiple approaches focus on restoring mouth movement, so we seek to understand the effects of targeted manipulation of the mouth on the perception of smiling expressions. Past studies have found that the lower-half of the face (particularly the mouth shape) is the most salient factor for determining the intended meaning of a smile [25]. Thus, with this model, we expect the study participants to perceive differences in the emotions of the expressions and, as a result, provide meaningful information for clinical translation.

Materials and methods

Computer-animated facial tool

Using an interpolative blend shape approach [26], we developed a computer-animated, realistic 3D facial tool capable of expressing a variety of emotions. Similar to other recent anatomically motivated face simulation systems such as FACSgen [17] and FACSgen 2.0 [18], our model follows linear motion interpolation between anatomically valid static face poses. The modeling process was closely monitored and rechecked by a board-certified facial reconstructive surgeon (coauthor Lyford-Pike) in order to ensure a high degree of anatomical accuracy of the resulting mouth animation. Importantly, our model allows us to manipulate the character’s mouth independently of other muscle groups. This allows us to focus our study directly on mouth motion, which is both one of the most important aspects for visually identifying emotion [25] and the element of face movement most easily manipulatable through surgical intervention. The resulting face generation model supports variations in the extent, dental show, position, angle, timing, and asymmetry of mouth motion.

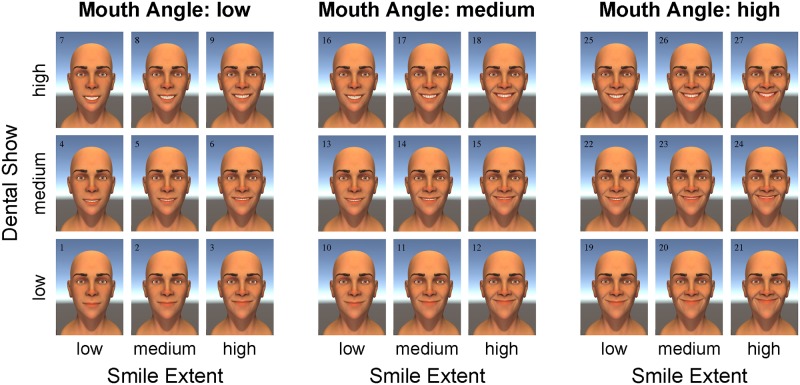

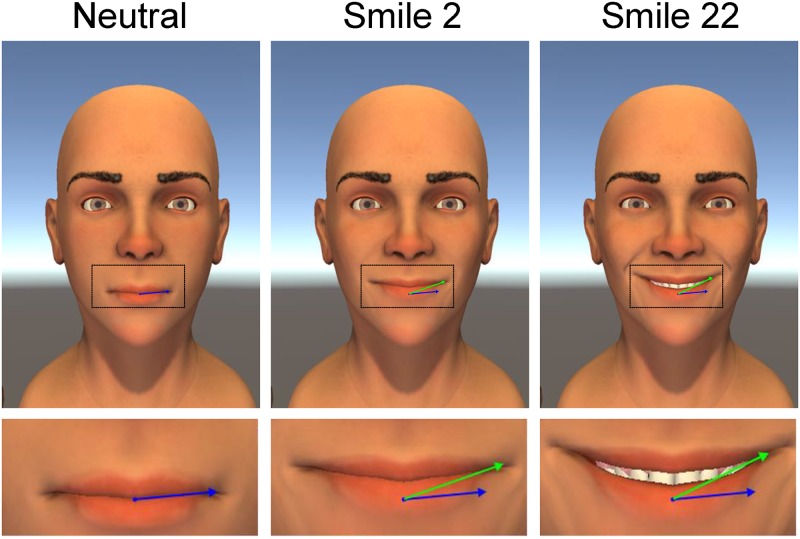

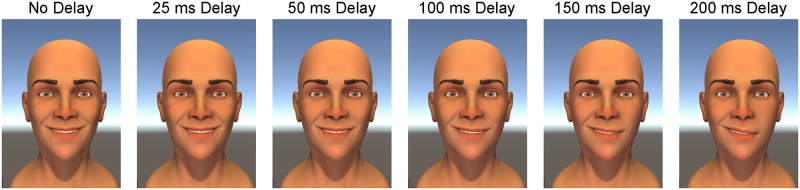

With this tool, we created 250 ms animations of smile-like expressions, systematically manipulating spatial and temporal properties. We focus on 27 stimuli (see Fig 1) that were created by taking a systematic sweep of three blend shapes. The three blend shapes were designed to manipulate three parameters: (i) the mouth angle, (ii) the smile extent, and (iii) the amount of dental show (see Fig 2). Before collecting data, we designated smile 22 (high mouth angle, low smile extent, and medium dental show) as a prototypical smile for the investigation of timing asymmetries. To create spatiotemporal asymmetries in the smiling expressions, we manipulated the timing delay of the left side of the facial expression for smile 22. In addition to the symmetric versions of smile 22 previously described, we created five other versions of smile 22 with different delay asymmetries (see Fig 3). The delay asymmetries were created by delaying the start of the smile expression on the left side of the face.

Fig 1. The 27 different smiling faces.

The 27 smiles represent all possible combinations of the three spatial factors (mouth angle, smile extent, dental show) at three different levels (low, medium, high). The numbers 1–27 have been included post hoc for labelling purposes and were not present in the animations.

Fig 2. Definitions of the spatial parameters used in the study.

Mouth angle is the angle between the green and blue lines. Smile extent is the length of the green line. Dental show is the distance between the lower and upper lips.

Fig 3. Smile 22 with various amounts of timing (delay) asymmetry.

All animations started with the same (symmetric) neutral expression and ended with the same (symmetric) smiling expression, so the asymmetries were only visible for a few frames of the 250 ms animation.

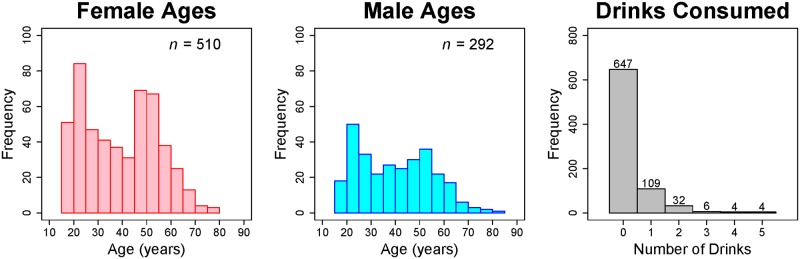

Study participants

We use data collected from a diverse sample of study participants over the course of three days at the 2015 Minnesota State Fair in the University of Minnesota’s Driven to Discover building. Note that using fairgoers as the “general public” should provide a more representative sample compared to the WEIRD sample that is commonly used in behavioral research [27]. Participants ranged in age from 18 to 82, and there was a bimodal age distribution for both the female and male participants with peaks at about 20 and 50 years of age, see Fig 4. Participants were excluded from our analyses if they (i) had consumed six or more alcoholic drinks that day, and/or (ii) did not complete the entire survey. Our final sample included 802 participants (510 females and 292 males) who met the inclusion criteria for our study.

Fig 4. Histograms of demographic variables.

Age distributions for female (left) and male (middle) participants, as well as the alcohol consumption numbers (right).

Procedure

Our study protocol (including our informed consent procedure) was approved by the Institutional Review Board at the University of Minnesota. During the 2015 Minnesota State Fair, we asked laypersons who entered, and/or walked by, the Driven to Discover building to participate in our “Smile Study”. As compensation for participating in our study, participants were entered in a drawing to win an iPad. Upon verbally consenting to participate in our study, a volunteer explained to each individual that we were interested in how people perceived facial expressions of emotion. After the basic introduction, each participant was handed an iPad with a custom-built app, which contained a welcome screen, an information/consent screen, and an instructions screen (see Fig 5). After the instructions screen, participants provided some basic demographic information: age, gender, zip code, and number of alcoholic drinks they had consumed that day. Then each participant was shown 15 randomly sampled animations, followed by five still pictures of facial expressions of emotion. Note that the animations were randomly sampled from a larger population of facial expressions, but in this paper we only analyze the data corresponding to the 27 animations in Fig 1.

Fig 5. Screenshots from smile study iPad app.

The welcome screen, consent screen, and instructions screen that were shown to participants at the onset of our study.

For each stimulus, participants were asked to (i) “Rate the overall effectiveness as a smile” using a 5-point Likert scale: Very Bad, Bad, Neutral, Good, and Very Good, (ii) “Tap one or more [words] that best describe the face” using a list of seven emotions: Anger, Contempt, Disgust, Fear, Happiness, Sadness, and Surprise, (iii) “Indicate how much the expression is” Fake (low end) versus Genuine (high end) using a continuous slider bar, and (iv) “Indicate how much the expression is” Creepy (low end) versus Pleasant (high end) using a continuous slider bar. Throughout the remainder of the paper, we refer to the ratings as (i) Effective ratings, (ii) Emotion ratings, (iii) Genuine ratings, and (iv) Pleasant ratings, respectively. Participants were instructed to interpret the words Effective, Fake, Genuine, Creepy, and Pleasant in a colloquial sense of these words. This was done to avoid biasing the participants’ opinions, so that the ratings could be interpreted in a colloquial—instead of a clinical—sense of these words. Participants were allowed to quit the study at any point.

Data analysis

Overview

For the Genuine (Pleasant) ratings, the endpoints of the continuous slider bar were numerically coded as 0 = Fake (0 = Creepy) and 1 = Genuine (1 = Pleasant), so a score of 0.5 corresponds to a “neutral” rating—and scores above 0.5 correspond to above neutral ratings. Similarly, Effective ratings were numerically coded as 0 = Very Bad, 0.25 = Bad, 0.5 = Neutral, 0.75 = Good, and 1 = Very Good, so scores above 0.5 correspond to above neutral ratings. We define a “successful smile” as one that is rated above neutral in overall effectiveness, genuineness, and pleasantness. To determine the spatial and temporal properties related to a successful smile, we use a nonparametric mixed-effects regression approach [28–32]. More specifically, we used a mixed-effects extension of smoothing spline analysis of variance (SSANOVA) [33, 34]. The models are fit using the “bigsplines” package [35] in the R software environment [36]. Inferences are made using the Bayesian interpretation of a smoothing spline [37, 38].

Symmetric smiles

To determine how spatial (mouth angle, smile extent, and dental show) properties affect the perception of a smile, we use a nonparametric mixed-effects model of the form

| (1) |

where yij is the rating that the i-th participant assigned to the j-th stimulus, ηA(⋅) is the unknown main effect function for age with ai ∈ {18, …, 82} denoting the age of the i-th participant, ηG(⋅) is the unknown main effect function for gender with gi ∈ {F, M} denoting the gender of the i-th participant, ηD(⋅) is the unknown main effect function for drinking with di ∈ {0, 1, 2, 3, 4, 5} denoting the number of alcoholic drinks consumed by the i-th participant, and ηS(⋅) is the unknown smile effect function with sij = (sij1, sij2, sij3)′ denoting a 3 × 1 vector containing the known spatial properties of the j-th stimulus displayed to the i-th participant such that sij1 ∈ {low, medium, high} denotes the mouth angle, sij2 ∈ {low, medium, high} denotes the smile extent, and sij3 ∈ {low, medium, high} denotes the amount of dental show. The unknown parameter bi is a random baseline term for the i-th participant, which allows each participant to have a unique intercept term in the model. The bi terms are assumed to be independent and identically distributed (iid) Gaussian variables with mean zero and unknown variance θ2. Finally, the ϵij terms are unknown error terms, which are assumed to be (i) iid Gaussian variables with mean zero and unknown variance σ2, and (ii) independent from the bi effects.

Using the SSANOVA model, the smile effect function ηS can be decomposed such as

| (2) |

where η0 is an unknown constant, η1(⋅), η2(⋅), and η3(⋅) denote the main effect functions for the three spatial parameters (angle, extent, and dental show, respectively), η12(⋅) denotes the angle-extent interaction effect function, η13(⋅) denotes the angle-dental show interaction effect function, η23(⋅) denotes the extent-dental show interaction effect function, and η123(⋅) denotes the three-way interaction effect function. The model in Eq (2) includes all possible two-way and three-way interactions between the spatial parameters, but we could consider simpler models that remove some (or all) of the interaction effects. The simplest model has the form

| (3) |

which only contains the additive effects of the three spatial parameters. To determine which effects should be included in the model, we fit the nine possible models (see Table 1), and we used the AIC [39] and BIC [40] to choose the model that provides the best fit relative to the model complexity.

Table 1. Models for smile effect function ηS.

| # | ηS |

|---|---|

| 1. | η0 + η1 + η2 + η3 + η12 + η13 + η23 + η123 |

| 2. | η0 + η1 + η2 + η3 + η12 + η13 + η23 |

| 3. | η0 + η1 + η2 + η3 + η12 + η13 |

| 4. | η0 + η1 + η2 + η3 + η12 + η23 |

| 5. | η0 + η1 + η2 + η3 + η13 + η23 |

| 6. | η0 + η1 + η2 + η3 + η12 |

| 7. | η0 + η1 + η2 + η3 + η13 |

| 8. | η0 + η1 + η2 + η3 + η23 |

| 9. | η0 + η1 + η2 + η3 |

Note: 1 = angle, 2 = extent, 3 = dental show.

We fit the nine models in Table 1 using three different response variables: smile effectiveness, smile genuineness, and smile pleasantness. For each of the fit models, we used a cubic smoothing spline for the age and drinking marginal effects, a nominal smoothing spline (i.e., shrinkage estimator) for the gender effect, and an ordinal smoothing spline for the angle, extent, and dental show marginal effects [33]. The interaction effects are formed by taking a tensor product of the marginal smoothing spline reproducing kernels [34]. The models are fit using the two-step procedure described in [29], which uses a REML algorithm [41] to estimate the unknown variance component θ2 (step 1) followed by a generalized cross validation (GCV) routine [42] to estimate the unknown smoothing parameters (step 2).

Asymmetric smiles

To examine how timing asymmetry influences the interpretation of smile expressions, we analyzed six variations of smile 22 using a model of the form

| (4) |

where ηT(⋅) is the unknown main effect function for timing (delay) asymmetry with tij ∈ {0, 25, 50, 100, 150, 200} denoting the delay time (in ms) for the j-th stimulus displayed to the i-th participant, and the other terms can be interpreted as previously described. We fit the above model to the same three response variables (effective, genuine, and pleasant) using the same two-stage estimation procedure [29]. We used a cubic smoothing spline for the timing asymmetry effect function, and the three covariates were modeled as previously described, i.e., using a cubic smoothing spline for age and drinking, and a nominal smoothing spline for gender.

Results

Spatial properties

The AIC and BIC values for the fit models are given in Table 2. Note that both the AIC and BIC select Model 1 (from Table 1) as the optimal model for each of the three response variables. This implies that the perception of a smile involves a three-way interaction between the mouth angle, the smile extent, and the amount of dental show displayed in the expression. To quantify the model fit, we calculated the model coefficient of multiple determination (i.e., R-squared) without (R2) and with () the estimated random effect in the prediction. We define R2 (or ) as the squared correlation between the response variable and the fitted values without (or with) the random effects included. In the leftmost columns of Table 3, we display the R-squared values from the optimal model, along with the estimated variance components. Table 3 reveals that the fixed-effects terms in the model explain about 10% of the variation in the response variables (i.e., ), whereas the model explains about 40% of the variation in the response variables with the random effects included (i.e., ).

Table 2. Information criteria for the fit models.

| Effective | Genuine | Pleasant | ||||

|---|---|---|---|---|---|---|

| AIC | BIC | AIC | BIC | AIC | BIC | |

| 1. | 66.46 | 187.82 | 565.80 | 681.27 | 15.81 | 138.40 |

| 2. | 131.40 | 236.67 | 603.65 | 714.47 | 72.20 | 173.13 |

| 3. | 147.61 | 240.53 | 632.02 | 721.55 | 83.48 | 173.18 |

| 4. | 154.31 | 240.31 | 648.83 | 730.57 | 84.52 | 170.05 |

| 5. | 275.07 | 360.14 | 634.23 | 718.81 | 190.41 | 268.78 |

| 6. | 184.12 | 259.47 | 696.93 | 779.78 | 114.31 | 192.81 |

| 7. | 306.35 | 378.94 | 679.18 | 763.13 | 209.51 | 278.62 |

| 8. | 305.46 | 371.27 | 685.56 | 752.07 | 217.78 | 283.36 |

| 9. | 264.43 | 318.44 | 724.89 | 804.83 | 217.55 | 277.12 |

Note: A constant (i.e., 900) was added to each score.

Table 3. Model fit information for symmetric and asymmetric smiles.

| Symmetric Smiles | Asymmetric Smiles | |||||

|---|---|---|---|---|---|---|

| Effective | Genuine | Pleasant | Effective | Genuine | Pleasant | |

| R2 | 0.0981 | 0.0718 | 0.1247 | 0.0399 | 0.0404 | 0.0660 |

| 0.4664 | 0.3911 | 0.3902 | 0.6346 | 0.6177 | 0.6914 | |

| σ2 | 0.0413 | 0.0507 | 0.0405 | 0.0374 | 0.0456 | 0.0349 |

| θ2 | 0.0124 | 0.0098 | 0.0076 | 0.0094 | 0.0102 | 0.0130 |

| ρ | 0.2314 | 0.1626 | 0.1589 | 0.2006 | 0.1830 | 0.2717 |

Note 1: Symmetric Smiles results are those for Model 1.

Note 2: ρ = intra-class correlation coefficient: θ2/(θ2 + σ2).

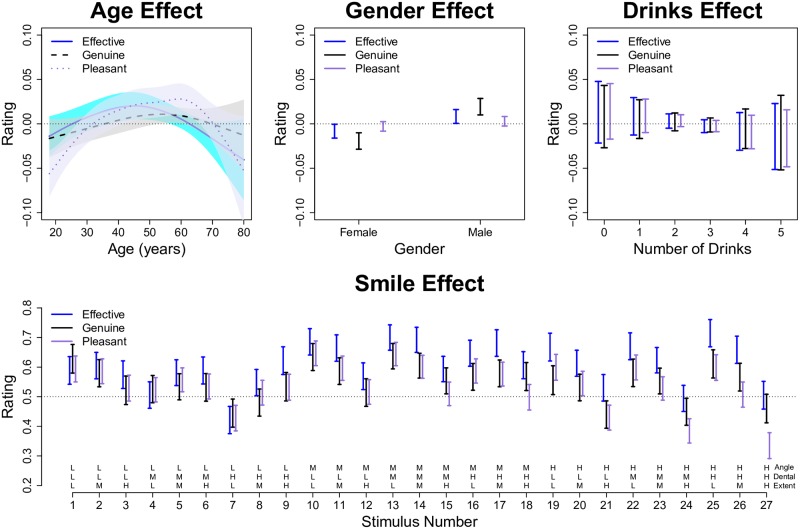

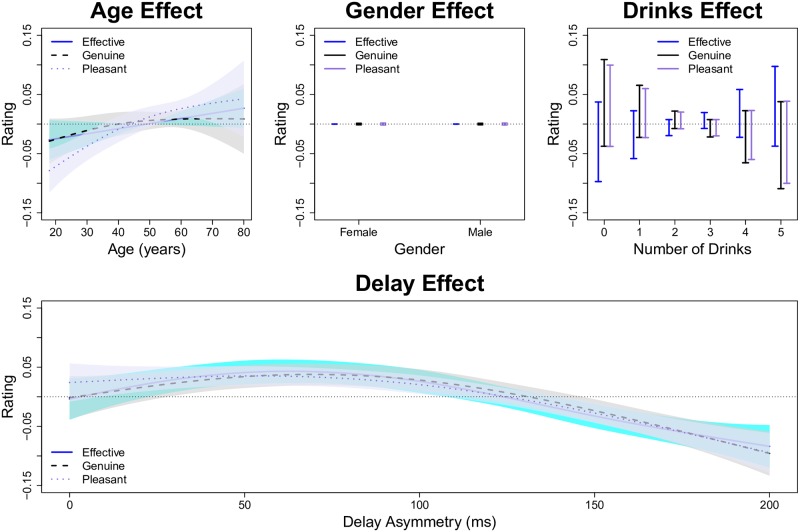

We plot the SSANOVA model predictions (i.e., estimated effect functions) for the optimal model in Fig 6. The main effect functions for age (Fig 6, top left) reveal that there is quadratic trend such that younger and older participants give slightly lower ratings; however, the confidence intervals on the age effect functions are rather wide and the trend is not significantly different from zero for two of the three response variables. Similarly, we see little to no effect of gender (Fig 6, top middle) or the number of alcoholic drinks (Fig 6, top right). In contrast, the smile effect function (Fig 6, bottom) reveals that (i) aside from the extreme smiles with high angle and high extent, the ratings of the three response variables are rather similar within each smile, and (ii) there are significant differences between the ratings across the 27 smiles.

Fig 6. Predictions for SSANOVA model of symmetric smiles.

The top row plots the estimated main effect functions for the three covariates: age, gender, and drinking. The bottom row plots the estimated smile effect function predictions for each of the 27 smile animations depicted in Fig 1. Within each subplot, the shaded regions or bars denote 90% Bayesian confidence intervals.

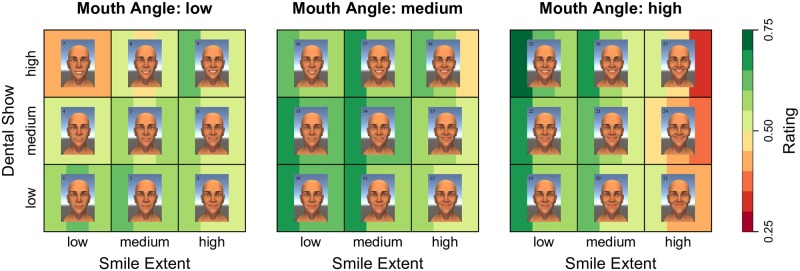

To obtain a better understanding of the three-way interaction effect, Fig 7 plots the smile effect as a function of three factors (angle, extent, dental show). This plot reveals that there is a sweet spot of parameters (particularly mouth angle and smile extent) that results in the most successful smiles. The highest rated smiles were those with low to medium extents in combination with medium to high angles. Using the parameter definitions in Fig 2, successful smiles have mouth angles of about 13–17° and smile extents of about 55–62% the interpupillary distance (IPD). However, as is evident from Figs 6 and 7, the best smiles represent a diverse collection of different combinations of facial parameters. This reveals that, although there is an optimal window of parameters, there is not a single path to a successful smile.

Fig 7. Visualization of the smile effect.

A heat-map plotting the three-way interaction between the smile parameters. The three vertical bars behind each face denote the predicted score for the three response variables: effective, genuine, and pleasant (respectively). Greener colors correspond to better (i.e., higher rated) smiles, and redder colors correspond to worse (i.e., lower rated) smiles.

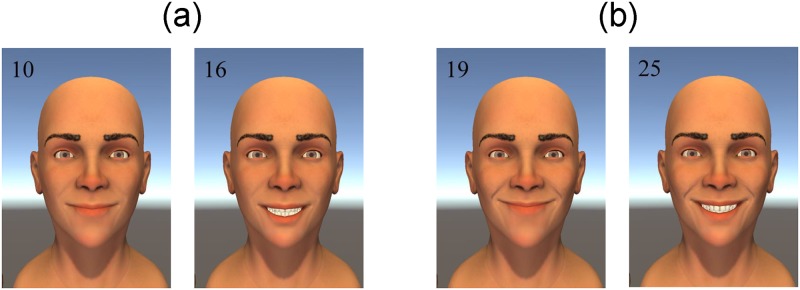

Fig 7 also reveals that there are particular combinations of the smile parameters that result in unsuccessful smiles. One interesting finding was how low the ratings were for smiles with extreme angles. Another interesting finding is that the effect of dental show on the smile ratings differs depending on the angle-extent combination of the smile. For smiles that have smaller angle-extent values, displaying low or medium dental show is better than displaying high dental show. In contrast, for smiles with medium to large angle-extent combinations, displaying high dental show is better. This point is illustrated in Fig 8. However, for smiles with angle-extent combinations that are too large (i.e., smiles 21, 24, 27), increasing dental show decreases smile quality.

Fig 8. Dental show effect at different levels of angle-extent.

(a) Two smiles with smaller angle-extent combinations. (b) Two smiles with larger angle-extent combinations. Increasing dental show makes the smile worse (i.e., less successful) for (a) and better (i.e., more successful) for (b).

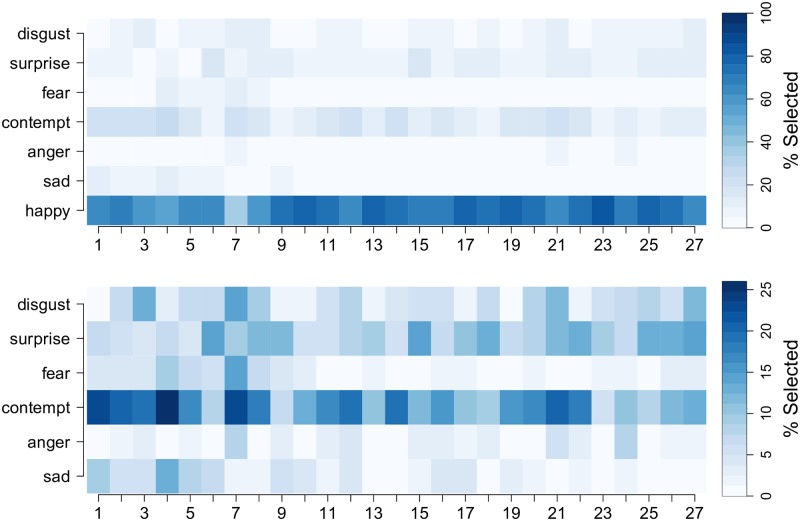

To understand which emotions were perceived from each smile, Fig 9 plots the percentage of participants who selected each of the seven emotions for each of the 27 smiles. From the top subplot of Fig 9, it is evident that the emotion “Happy” was selected most often—which was expected. To better visualize which non-happy emotions were perceived from the smiles, the bottom subplot of Fig 9 shows the percentage of participants who selected the six non-happy emotions. This plot reveals that (i) “Contempt” is the most frequently perceived non-happy emotion, (ii) participants tended to perceive “Contempt” from a variety of different types of smiles, and (iii) smiles with a combination of low angle and low extent showed the largest percentages of “Contempt” ratings.

Fig 9. Perceived emotions for the 27 smiling faces.

The percentage of subjects who selected the given emotion (rows) for each smile (columns). The top subplot depicts the results for all seven emotions, whereas the bottom subplot provides a more detailed look at the non-happy emotions that were perceived from each expression.

Temporal properties

The fit statistics for the SSANOVA models fit to the asymmetric smiles are given in the rightmost columns of Table 3. Note that the models explained about 4% of the data variation at the aggregate level and about 60% of the variation at the individual level. The SSANOVA model predictions (i.e., effect functions) are plotted in Fig 10. In this case, we see that there is a trend such that older participants provide higher ratings (Fig 10, top left); however, the confidence intervals are wide, and the trend is insignificant for two of the three predictors. Similar to the previous model, there is no significant gender effect (Fig 10, top middle) or drinking effect (Fig 10, top right). The interesting result from this model is plotted in the bottom portion of Fig 10. We find that having a slight asymmetry (25–100 ms) increases the smile ratings by a significant amount compared to having a perfectly symmetric smile. However, a delay asymmetry of 125 ms or more resulted in reduced smile ratings, which decreased almost linearly with the delay asymmetry in the range of 100–200 ms. At the largest delay asymmetry (200 ms), the expected smile ratings were about 0.09 units below the symmetric smile ratings.

Fig 10. Predictions for SSANOVA model of asymmetric smiles.

The top row plots the estimated main effect functions for the three covariates: age, gender, and drinking. The bottom row plots the estimated timing asymmetry effect function. Within each subplot, the shaded regions or bars denote 90% Bayesian confidence intervals.

Discussion

Our results shed new light on how people perceive dynamic smile expressions. Using an anatomically-realistic 3D facial tool, we determined which spatial (angle, extent, dental show) and temporal (delay asymmetry) smile parameters were judged to be successful by a large sample of participants. Most importantly, our results allow us to both dispel and confirm commonly held beliefs in the surgical community, which are currently guiding medical practice. Our result regarding the optimal window (or sweet spot) of smile extent contradicts the principle that “more is always better” with respect to smile extent. Consequently, using absolute smile extent (or excursion) as a primary outcome measure—as is currently done in practice [14, 43]—is inappropriate. Instead, clinicians should use both mouth angle and smile extent as outcome measures because an effective smile requires a balance of both.

Among medical professionals, there is a debate about the importance of showing teeth during smiling, with some believing it to be of paramount importance while others trivialize its role. Our finding that dental show significantly influences the perception of a smile clarifies this debate. Specifically, the degree of dental show can have negative or positive effects: increasing dental show can decrease smile quality (for low angle-extent smiles), increase smile quality (for high angle-extent smiles), or have little influence on smile quality (for medium angle-extent smiles). Thus, the interaction between dental show and the angle-extent parameters confirms the idea that individuals with limited facial movement should be encouraged to form closed-mouth smiles (see Fig 8). Our results reveal that forming open-mouth smiles with small angles/extents can produce unintended perceptions of the expression, e.g., contempt or fear instead of happiness.

Our finding that small timing asymmetries can increase smile quality may seem counter-intuitive, in light of past research revealing that people tend to prefer symmetric faces [44, 45]. However, this result is consistent with principles of smile design in which dynamic symmetry (i.e., being very similar but not identical) “allows for a more vital, dynamic, unique and natural smile” compared to static symmetry (i.e., mirror image), see [46, pg. 230]. Furthermore, this finding is consistent with some research which has found that slightly asymmetric faces are preferred over perfectly symmetric faces [47–49]. Our results suggest that this preference relates to the perception of the genuineness and pleasantness of the smile expression, such that slight timing asymmetries are viewed as more genuine/pleasant (see Fig 10).

Our discovery of the threshold at which delay asymmetries become detrimental to smile quality (i.e., 125 ms) provides a helpful benchmark for clinicians and therapists. This finding compliments past research which has found that emotional cues can be perceived within 100–200 ms of encountering an image of a face [4, 5]. The smile is successful long as the left-right smile onset symmetry remains within 125 ms. Beyond 125 ms, delay asymmetries have a noteworthy negative effect such that a 200 ms delay asymmetry results in an expected 0.09 unit decrease in smile ratings. Note that this decrease is a medium effect size with respect to psychological standards [50]: defining , we have that for effectiveness, for genuine, and for pleasant. Furthermore, the difference between the ratings with a 75 ms versus a 200 ms delay is a medium-large effect size by typical psychological standards: defining , we have that for effectiveness, for genuine, and for pleasant. It is interesting to note that modifying only the dynamic symmetry can have such a noticeable effect on how the smile is perceived.

In summary, our findings complement the literature on the dynamics of facial expressions of emotion [15, 25]. Similar to past studies [16–18], we have found that computer generated models of facial expressions can be a useful tool for systematically studying how people perceive facial expressions of emotion. Our results agree with past literature that has found dynamic (spatiotemporal) aspects of facial expressions to be important to their perception [19–24]. In particular, we found that a successful smile consists of (i) an optimal window of mouth angle and smile extent, (ii) the correct amount of dental show for the given angle-extent combination, and (iii) dynamic symmetry such that the left and right sides of the mouth are temporally synced within 125 ms. Consequently, our results extend the literature by providing spatiotemporal benchmarks of a successful smile with respect to clinically meaningful parameters.

Conclusion

Our study looked at how dynamic (spatiotemporal) properties of mouth movement relate to perceptions of facial expressions generated by a 3D computer model. We found that a successful smile involves an intricate balance of mouth angle, smile extent, and dental show in combination with dynamic spatiotemporal timing. Future research should encompass more combinations of angle, extent, dental show, and timing parameters, in order to develop a more complete spatiotemporal understanding of how the interplay between these elements affects individuals’ perceptions of the smile trajectory. Also, future studies could consider manipulating additional facial features (e.g., orbicularis oculi contraction) to create a more diverse set of facial expressions. Additionally, 3D cameras could be used to create scans of people smiling to enable the data-driven generation of emotional expressions, replacing the artist-created blend shapes approach used in our study [26, 51, 52]. Such an approach could be useful for fine-tuning the smile stimuli used in this study, which have the limitation of being artist-generated. Furthermore, 3D cameras could be used to study timing asymmetries in a more diverse sample of facial expressions, which would be useful for examining the robustness of our timing asymmetry effect. Note that our results regarding timing asymmetries have the limitation of coming from a single smile expression (i.e., smile 22). Another useful extension of our study would be to examine how a large sample of participants perceive a variety of other facial expressions of emotion, e.g., surprise, anger, fear, or sadness. Finally, the integration of biologic measurements (e.g., eye-tracking or electroencephalography) could provide useful data about the perception of dynamic facial expressions.

Supporting information

Data and R code to reproduce results.

(ZIP)

Smile animations (stimuli) used in paper.

(ZIP)

Acknowledgments

Data were collected at the University of Minnesota’s Driven to Discover Building at the 2015 Minnesota State Fair. The authors thank Moses Adeagbo, Heidi Johng, Alec Nyce, and Rochelle Widmer for aiding in the data collection and development of this study.

Data Availability

All relevant data are within the paper and its Supporting Information files.

Funding Statement

This work was supported by faculty start-up funds from the University of Minnesota (NEH, SJG, SL-P).

References

- 1. Ekman P. A methodological discussion of nonverbal behavior. The Journal of Psychology. 1957;43:141–149. 10.1080/00223980.1957.9713059 [DOI] [Google Scholar]

- 2. Ekman P, Friesen WV. Constants across cultures in the face and emotion. Journal of Personality and Social Psychology. 1971;17:124–129. 10.1037/h0030377 [DOI] [PubMed] [Google Scholar]

- 3. Ekman P, Friesen WV, Ancoli S. Facial signs of emotional experience. Journal of Personality and Social Psychology. 1980;39:1125–1134. 10.1037/h0077722 [DOI] [Google Scholar]

- 4. Adolphs R. Recognizing Emotion From Facial Expressions: Psychological and Neurological Mechanisms. Behavioral and Cognitive Neuroscience Reviews. 2002;1:21–62. 10.1177/1534582302001001003 [DOI] [PubMed] [Google Scholar]

- 5. O’Toole AJ, Roark DR, Abdi H. Recognizing moving faces: A psychological and neural synthesis. TRENDS in Cognitive Sciences. 2002;6:261–266. 10.1016/S1364-6613(02)01908-3 [DOI] [PubMed] [Google Scholar]

- 6. Oosterhof NN, Todorov A. The functional basis of face evaluation. Proceedings of the National Academy of Sciences. 2008;105:11087–11092. 10.1073/pnas.0805664105 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7. Ekman P, Davidson R, Friesen WV. The Duchenne smile: Emotional expression and brain physiology II. Journal of Personality and Social Psychology. 1990;58:342–353. 10.1037/0022-3514.58.2.342 [DOI] [PubMed] [Google Scholar]

- 8. VanSwearingen JM, Cohn JF, Bajaj-Luthra A. Specific impairment of smiling increases the severity of depressive symptoms in patients with facial neuromuscular disorders. Aesthetic Plastic Surgery. 1999;23(6):416–423. 10.1007/s002669900312 [DOI] [PubMed] [Google Scholar]

- 9. Lee LN, Lyford-Pike S, Boahene KD. Traumatic Facial Nerve Injury. Otolaryngologic Clinics of North America. 2013;46:825–839. 10.1016/j.otc.2013.07.001 [DOI] [PubMed] [Google Scholar]

- 10. Starmer H, Lyford-Pike S, Ishii LE, Byrne PA, Boahene KD. Quantifying Labial Strength and Function in Facial Paralysis: Effect of Targeted Lip Injection Augmentation. JAMA Facial Plastic Surgery. 2015;17:274–278. 10.1001/jamafacial.2015.0477 [DOI] [PubMed] [Google Scholar]

- 11. Twerski AJ, Twerski B. Problems and management of social interaction and implications for mental health In: May M, editor. The Facial Nerve. Thieme Medical; 1986. p. 788–779. [Google Scholar]

- 12. Hadlock T. Standard Outcome Measures in Facial Paralysis: Getting on the Same Page. JAMA Facial Plastic Surgery. 2016;18:85–86. 10.1001/jamafacial.2015.2095 [DOI] [PubMed] [Google Scholar]

- 13. Jowett N, Hadlock TA. An Evidence-Based Approach to Facial Reanimation. Facial Plastic Surgery Clinics of North America. 2015;23:313–334. 10.1016/j.fsc.2015.04.005 [DOI] [PubMed] [Google Scholar]

- 14. Niziol R, Henry FP, Leckency JI, Grobbelaar AO. Is there an ideal outcome scoring system for facial reanimation surgery? A review of current methods and suggestions for future publications. Journal of Plastic, Reconstructive & Aesthetic Surgery. 2015;68:447–56. 10.1016/j.bjps.2014.12.015 [DOI] [PubMed] [Google Scholar]

- 15. Krumhuber EG, Kappas A, Manstead ASR. Effects of Dynamic Aspects of Facial Expressions: A Review. Emotion Review. 2013;5(1):41–46. 10.1177/1754073912451349 [DOI] [Google Scholar]

- 16. Wehrle T, Kaiser S, Schmidt S, Scherer KR. Studying the dynamics of emotional expression using synthesized facial muscle movements. Journal of Personality and Social Psychology. 2000;78(1):105–119. 10.1037/0022-3514.78.1.105 [DOI] [PubMed] [Google Scholar]

- 17. Roesch EB, Tamarit L, Reveret L, Grandjean D, Sander D, Scherer KR. FACSGen: A Tool to Synthesize Emotional Facial Expressions Through Systematic Manipulation of Facial Action Units. Journal of Nonverbal Behavior. 2011;35(1):1–16. 10.1007/s10919-010-0095-9 [DOI] [Google Scholar]

- 18. Krumhuber EG, Tamarit L, Roesch EB, Scherer KR. FACSGen 2.0 animation software: generating three-dimensional FACS-valid facial expressions for emotion research. Emotion. 2012;12(2):351–363. 10.1037/a0026632 [DOI] [PubMed] [Google Scholar]

- 19. COHN JF, SCHMIDT KL. The timing of facial motion in posed and spontaneous smiles. International Journal of Wavelets, Multiresolution and Information Processing. 2004;2(2):121–132. 10.1142/S021969130400041X [DOI] [Google Scholar]

- 20. Trutoiu LC, Carter EJ, Pollard N, Cohn JF, Hodgins JK. Spatial and Temporal Linearities in Posed and Spontaneous Smiles. ACM Transactions on Applied Perception. 2014;11(3):12:1–15. 10.1145/2641569 [DOI] [Google Scholar]

- 21. Krumhuber EG, Manstead AS, Cosker D, Marshall D, Rosin PL, Kappas A. Facial Dynamics as Indicators of Trustworthiness and Cooperative Behavior. Emotion. 2007;7(4):730–735. 10.1037/1528-3542.7.4.730 [DOI] [PubMed] [Google Scholar]

- 22. Krumhuber E, Manstead ASR, Cosker D, Marshall D, Rosin PL. Effects of dynamic attributes of smiles in human and synthetic faces: a simulated job interview setting. Journal of Nonverbal Behavior. 2009;33(1):1–15. 10.1007/s10919-008-0056-8 [DOI] [Google Scholar]

- 23. Schmidt KL, VanSwearingen JM, Levenstein RM. Speed, amplitude, and asymmetry of lip movement in voluntary puckering and blowing expressions: implications for facial assessment. Motor Control. 2005;9(3):270–280. 10.1123/mcj.9.3.270 [DOI] [PubMed] [Google Scholar]

- 24. Schmidt KL, Liu Y, Cohn JF. The role of structural facial asymmetry in asymmetry of peak facial expressions. Laterality. 2006;11(6):540–561. 10.1080/13576500600832758 [DOI] [PubMed] [Google Scholar]

- 25. Krumhuber EG, Manstead ASR. Can Duchenne Smiles Be Feigned? New Evidence on Felt and False Smiles. Emotion. 2009;9(6):807–820. 10.1037/a0017844 [DOI] [PubMed] [Google Scholar]

- 26. Pighin F, Hecker J, Lischinski D, Szeliski R, Salesin DH. Synthesizing realistic facial expressions from photographs In: ACM SIGGRAPH 2006 Courses. ACM; 2006. p. 19. [Google Scholar]

- 27. Henrich J, Heine SJ, Norenzayan A. The weirdest people in the world? Behavioral and Brain Sciences. 2010;33:61–135. 10.1017/S0140525X0999152X [DOI] [PubMed] [Google Scholar]

- 28. Gu C, Ma P. Generalized Nonparametric Mixed-Effect Models: Computation and Smoothing Parameter Selection. Journal of Computational and Graphical Statistics. 2005;14:485–504. 10.1198/106186005X47651 [DOI] [Google Scholar]

- 29. Helwig NE. Efficient estimation of variance components in nonparametric mixed-effects models with large samples. Statistics and Computing. 2016;26:1319–1336. 10.1007/s11222-015-9610-5 [DOI] [Google Scholar]

- 30. Wang Y. Mixed effects smoothing spline analysis of variance. Journal of the Royal Statistical Society, Series B. 1998;60:159–174. 10.1111/1467-9868.00115 [DOI] [Google Scholar]

- 31. Wang Y. Smoothing spline models with correlated random errors. Journal of the American Statistical Association. 1998;93:341–348. 10.1080/01621459.1998.10474115 [DOI] [Google Scholar]

- 32. Zhang D, Lin X, Raz J, Sowers M. Semiparametric Stochastic Mixed Models for Longitudinal Data. Journal of the American Statistical Association. 1998;93:710–719. 10.1080/01621459.1998.10473723 [DOI] [Google Scholar]

- 33. Gu C. Smoothing Spline ANOVA Models. 2nd ed New York: Springer-Verlag; 2013. [Google Scholar]

- 34. Helwig NE, Ma P. Fast and stable multiple smoothing parameter selection in smoothing spline analysis of variance models with large samples. Journal of Computational and Graphical Statistics. 2015;24:715–732. 10.1080/10618600.2014.926819 [DOI] [Google Scholar]

- 35. Helwig NE. bigsplines: Smoothing Splines for Large Samples; 2017. Available from: http://CRAN.R-project.org/package=bigsplines. [Google Scholar]

- 36. R Core Team. R: A Language and Environment for Statistical Computing; 2016. Available from: http://www.R-project.org/. [Google Scholar]

- 37. Wahba G. Bayesian “confidence intervals” for the cross-validated smoothing spline. Journal of the Royal Statistical Society, Series B. 1983;45:133–150. [Google Scholar]

- 38. Gu C, Wahba G. Smoothing spline ANOVA with component-wise Bayesian “confidence intervals”. Journal of Computational and Graphical Statistics. 1993;2:97–117. 10.1080/10618600.1993.10474601 [DOI] [Google Scholar]

- 39. Akaike H. A new look at the statistical model identification. IEEE Transactions on Automatic Control. 1974;19:716–723. 10.1109/TAC.1974.1100705 [DOI] [Google Scholar]

- 40. Schwarz GE. Estimating the dimension of a model. Annals of Statistics. 1978;6:461–464. 10.1214/aos/1176344136 [DOI] [Google Scholar]

- 41. Patterson HD, Thompson R. Recovery of Inter-Block Information when Block Sizes are Unequal. Biometrika. 1971;58:545–554. 10.1093/biomet/58.3.545 [DOI] [Google Scholar]

- 42. Craven P, Wahba G. Smoothing noisy data with spline functions: Estimating the correct degree of smoothing by the method of generalized cross-validation. Numerische Mathematik. 1979;31:377–403. 10.1007/BF01404567 [DOI] [Google Scholar]

- 43. Bae YC, Zuker RM, Manktelow RT, Wade S. A comparison of commissure excursion following gracilis muscle transplantation for facial paralysis using a cross-face nerve graft versus the motor nerve to the masseter nerve. Plastic Reconstructive Surgery. 2006;117:2407–2413. 10.1097/01.prs.0000218798.95027.21 [DOI] [PubMed] [Google Scholar]

- 44. Grammer K, Thornhill R. Human (Homo sapiens) facial attractiveness and sexual selection: The role of symmetry and averageness. Journal of Comparative Psychology. 1994;108:233–242. 10.1037/0735-7036.108.3.233 [DOI] [PubMed] [Google Scholar]

- 45. Rhodes G, Proffitt F, Grady JM, Sumich A. Facial symmetry and the perception of beauty. Psychonomic Bulletin & Review. 1998;5:659–669. 10.3758/BF03208842 [DOI] [Google Scholar]

- 46. Bhuvaneswaran M. Principles of smile design. Journal of Conservative Dentistry. 2010;13:225–232. 10.4103/0972-0707.73387 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47. Zaidel DW, Chen AC, German C. She is not a beauty even when she smiles: possible evolutionary basis for a relationship between facial attractiveness and hemispheric specialization. Neuropsychologia. 1995;33:649–655. 10.1016/0028-3932(94)00135-C [DOI] [PubMed] [Google Scholar]

- 48. Zaidel DW, Deblieck C. Attractiveness of natural faces compared to computer constructed perfectly symmetrical faces. International Journal of Neuroscience. 2007;117:423–431. 10.1080/00207450600581928 [DOI] [PubMed] [Google Scholar]

- 49. Zaidel DW, Hessamian M. Asymmetry and Symmetry in the Beauty of Human Faces. Symmetry. 2010;2:136–149. 10.3390/sym2010136 [DOI] [Google Scholar]

- 50. Cohen J. A power primer. Psychological Bulletin. 1992;112:155–159. 10.1037/0033-2909.112.1.155 [DOI] [PubMed] [Google Scholar]

- 51.Lee Y, Terzopoulos D, Waters K. Realistic modeling for facial animation. In: Proceedings of the 22nd annual conference on Computer graphics and interactive techniques. ACM; 1995. p. 55–62.

- 52. Xu F, Chai J, Liu Y, Tong X. Controllable high-fidelity facial performance transfer. ACM Transactions on Graphics (TOG). 2014;33(4):42 10.1145/2601097.2601210 [DOI] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Data and R code to reproduce results.

(ZIP)

Smile animations (stimuli) used in paper.

(ZIP)

Data Availability Statement

All relevant data are within the paper and its Supporting Information files.