Abstract

Previous studies indicate that positive mood broadens the scope of visual attention, which can manifest as heightened distractibility. We used event-related potentials (ERP) to investigate whether music-induced positive mood has comparable effects on selective attention in the auditory domain. Subjects listened to experimenter-selected happy, neutral or sad instrumental music and afterwards participated in a dichotic listening task. Distractor sounds in the unattended channel elicited responses related to early sound encoding (N1/MMN) and bottom-up attention capture (P3a) while target sounds in the attended channel elicited a response related to top-down-controlled processing of task-relevant stimuli (P3b). For the subjects in a happy mood, the N1/MMN responses to the distractor sounds were enlarged while the P3b elicited by the target sounds was diminished. Behaviorally, these subjects tended to show heightened error rates on target trials following the distractor sounds. Thus, the ERP and behavioral results indicate that the subjects in a happy mood allocated their attentional resources more diffusely across the attended and the to-be-ignored channels. Therefore, the current study extends previous research on the effects of mood on visual attention and indicates that even unfamiliar instrumental music can broaden the scope of auditory attention via its effects on mood.

Keywords: music, emotion, attention, mismatch negativity, P3a, P3b

Introduction

Neuroimaging studies indicate that affective and cognitive processes are closely integrated in the brain (Dolan et al., 2002; Pessoa, 2008; Lindquist et al., 2012). Within experimental psychology, it has long been recognized that affective states can influence a range of cognitive processes (Ashby et al., 1999). For instance, behavioral and neuroscientific studies have shown that executive control of attention, an emblematic example of higher-order cognition, is dependent on emotion (Schupp et al., 2006; Mitchell and Phillips, 2007). The vast majority of these studies have examined how involuntary, bottom-up capture of attention and voluntary, top-down maintenance of attentional focus are biased towards emotional stimuli (Schupp et al., 2006). Far fewer studies have investigated how the affective state of the individual is reflected in these attentional functions and their neural correlates.

The idea that positive affective states broaden and negative states constrict the scope of attention has a long tradition in cognitive psychology (Fredrickson, 2001). Empirical support for the first notion comes from behavioral studies indicating that positive mood promotes a global bias in global-local visual processing experiments (Fredrickson and Branigan, 2005), more flexible allocation of attention from one visual stimulus to the next in the attentional blink paradigm (Olivers and Nieuwenhuis, 2006), implicit learning of to-be-ignored text (Biss et al., 2010), and even awareness of contralesional stimuli in patients with visual neglect (Soto et al., 2009; Chen et al., 2012). Although diffuse allocation of attention in positive mood can promote flexible responding, it may also manifest as heightened distractibility. In line with this proposition, Dreisbach and Goschke (2004) found that positive mood increased interference from novel distractors in a visual categorization task. Along the same lines, Rowe et al. (2007) found that after undergoing a positive mood induction subjects were less able to ignore task-irrelevant stimuli adjacent to a central target in the Eriksen Flanker task. Furthermore, a recent study reported that subjects were more susceptible to distraction by deviant sounds during an audio-visual oddball task after a positive mood induction (Pacheco-Unguetti and Parmentier, 2015). Thus, these studies suggest that positive mood may promote a broader focus of attention and more flexible switching at the expense of filtering out irrelevant peripheral information.

Evidence from event-related potential (ERP) and neuroimaging studies also supports the supposed expansion of attentional focus in positive mood. Specifically, induction of positive mood has been reported to augment early cortical ERP responses to peripheral visual stimuli while subjects fixate on a central discrimination task (Moriya and Nittono, 2011; Vanlessen et al., 2013, 2014), suggesting that positive mood increases the amount of processing resources devoted to to-be-ignored visual stimuli. An fMRI study on the effects of mood on the scope of visual encoding took advantage of the relative selectivity of the so-called parahippocampal place area (PPA) to place information such as pictures of houses (Schmitz et al., 2009). Subjects engaged in a face discrimination task in which they were shown compound stimuli composed of a small picture of a face superimposed at the center of a larger picture of a house. Positive mood enhanced the suppression of the PPA response to repeated house images while negative mood induction decreased the PPA response to novel house pictures, suggesting that positive mood broadened and negative mood reduced the scope of visual encoding. Finally, an fMRI study by Trost et al. (2014) found that listening to pleasant (consonant) music improved performance in a concurrent visual target detection task and influenced activity in brain regions associated with attentional control, which the authors interpreted as evidence that music-induced positive emotions may broaden visual attention.

An influential theoretical account for the effects of emotion on cognition posits that affective states promote the adoption of situation-appropriate information processing strategies by signaling whether the current situation is ‘benign’ or ‘problematic’ (Schwarz and Clore, 2003). According to this framework, a negative affective state signals a problematic situation that requires a detail-oriented, narrow focus of attention, whereas a positive affective state indicates the absence of a problem in the environment and, as a consequence, a lowered need for highly focused attention and effort (Mitchell and Phillips, 2007). Echoing this notion, the effects of positive affect on attention have been described in terms of relaxation of inhibitory control (Rowe et al., 2007), flexible switching (Dreisbach and Goschke, 2004) and a state characterized by exploration (Olivers and Nieuwenhuis, 2006). Other authors have argued that cognitive control itself is an inherently emotional process (Inzlicht et al., 2015). Namely, it has been proposed that situations requiring cognitive control always trigger a negative affective state and, furthermore, that this negative state is critical for mobilizing the effort needed for cognitive control (Inzlicht et al., 2015; van Steenbergen, 2015). One prediction that follows from this proposition is that positive mood should counteract the negative affect driving cognitive control (van Steenbergen, 2015) and thereby loosen control over attentional focus (Pacheco-Unguetti and Parmentier, 2015).

If positive mood broadens the scope of attention, it might at first glance seem that sad mood should promote highly focused attention. However, while some studies support this notion (Schmitz et al., 2009), other studies that have contrasted performance in tasks of executive function in sad and neutral moods suggest that sad mood either has little effect on cognitive control (Chepenik et al., 2007) or has effects similar to those of happy mood. Namely, some studies indicate that, similarly to happy mood, sadness also induces more flexible switching of attention in attentional blink experiments (Jefferies et al., 2008) as well as heightened distractibility (Pacheco-Unguetti and Parmentier, 2013). Such results are compatible with the proposal that all non-neutral emotional states induce a cognitive load and thereby deplete resources for control over attention [for a critical discussion, see Mitchell and Phillips (2007)].

The vast majority of the studies reviewed so far have been conducted in the visual domain, while the effects of affective states on auditory selective attention remain largely unexplored. Some of the earliest studies on selective attention were conducted in the auditory domain (Cherry, 1953; Broadbent, 1958) and subsequently a vast body of behavioral, electrophysiological and neuroimaging work on selective auditory attention has accumulated (Fritz et al., 2007). A classic setting for investigating selective auditory attention is the dichotic listening paradigm (Cherry, 1953), in which the participants are presented with two different streams of auditory stimuli simultaneously into the left and right ears and asked to attend to one of the streams while ignoring the other. In the ERP literature, the influence of selective auditory attention in such paradigms has often been quantified by measuring responses to deviant sounds presented among repeating standard sounds in the unattended channel and to target sounds in the attended channel.

In dichotic listening paradigms as well as in conventional oddball paradigms, unattended deviant sounds elicit a negative-polarity fronto-central deflection between 150 and 250 ms after sound onset. The traditional interpretation holds that this deflection is composed of two functionally distinct responses, namely, the N1 (an obligatory response to any above-threshold sound) and the mismatch negativity (MMN), a change-specific response elicited only when a sound violates some regularity established by preceding sounds (Näätänen et al., 2005; however, see May and Tiitinen, 2010). The N1/MMN complex is reduced in amplitude for sounds in the unattended channel in dichotic listening paradigms (Woldorff et al., 1991; Näätänen et al., 1993; Alain and Woods, 1997; Szymanski et al., 1999) and thus provides a useful index of auditory selective attention. Salient changes in unattended sounds may also elicit the P3a, which is a positive-polarity and fronto-centrally maximal response between 200 and 400 ms (Squires et al., 1975; Escera et al., 1998). The P3a has traditionally been considered a marker of involuntary, bottom-up attention capture (Escera et al., 1998). In line with this interpretation, sounds that elicit P3a also consistently deteriorate performance in a concurrent visual or auditory behavioral task (Escera et al., 2000). Finally, task-relevant target stimuli typically elicit the P3b response, which is a positive-polarity deflection with a more parietal maximum and a longer latency relative to that of the P3a. The P3b is generally assumed to reflect effortful, top-down allocation or investment of attentional resources towards task-relevant stimuli (Kok, 2001). In sum, the N1, MMN, P3a and P3b provide a way to investigate whether affective states have effects on top-down and bottom-up auditory attention comparable to those previously demonstrated for visual attention. Surprisingly, no study to date has employed these classic markers of auditory attention to investigate the effects of positive mood on attentional scope.

In the current study, we used a dichotic listening paradigm in which the sounds in the to-be-ignored channel consisted of standard tones and infrequent novel sounds meant to elicit the N1/MMN/P3a complex, while the sounds in the attended channel consisted of repeating non-target sounds and infrequent target tones, the latter of which were meant to elicit the P3b. Behavioral measures of distraction (reaction times and error rates) were obtained by requiring the subjects to press a button each time a target sound was presented. For mood induction, subjects listened to unfamiliar happy, neutral or sad instrumental music, which has been demonstrated to be an effective mood induction method (Västfjäll, 2002). The aforementioned studies on the effects of mood on attention have mostly employed non-musical mood manipulations such as viewing affective pictures (Dreisbach, 2006; Schmid et al., 2014) or films (Fredrickson and Branigan, 2005) and self-generated mood induction such as mental imagery (Vanlessen et al., 2013). Even those studies that have utilized music have tended to pair music listening with additional emotion-inducing tasks such as recalling or imagining emotional life events (Pacheco-Unguetti and Parmentier, 2013) or generating mood-congruent thoughts (Rowe et al., 2007). In the current study, we test whether mood induced by simply listening to unfamiliar instrumental music can affect attentional scope in the auditory domain.

If positive mood broadens the scope of auditory attention at the expense of control over distraction, subjects in a happy mood should display enlarged N1/MMN and P3a responses to the novel sounds in the unattended channel as well as deteriorated behavioral detection of the targets on trials following the novel sounds. Furthermore, since allocating attentional resources across competing stimuli has been reported to reduce the P3b, the P3b to the target sounds could also be predicted to be diminished in positive mood. Finally, the sad mood induction condition allowed us to test whether music-induced sadness augments or decreases responsiveness to to-be-ignored stimuli, both of which have been reported previously in studies using mood induction methods that were not purely musical (Schmitz et al., 2009; Pacheco-Unguetti and Parmentier, 2013).

Materials and methods

Participants

Fifty-seven volunteers with normal hearing and no history of neurological disorders participated in the experiment. The subjects were pseudo-randomly assigned to the sad, neutral or happy musical mood induction condition. Two subjects were excluded from further analyses due to a technical failure during the EEG recording and one due to a very low hit rate in the task. Thus, the final sample consisted of 54 subjects (Sad: N = 18, Neutral: N = 18, Happy: N = 18, mean age = 28, 9 males). The subjects were rewarded with a movie ticket for their participation. The experiment protocol was approved by the Ethical Committee of the University of Jyväskylä.

Procedure

The experimental session began with the attachment of the EEG electrodes and a short practice trial of the task. Thereafter, the subjects underwent a mood induction protocol that lasted for 3 min and consisted of listening to an excerpt of a sad, neutral or happy musical piece. Since our primary aim was to test whether mood induced by music per se is sufficient to influence attention, we minimized the possible confounding effects of autobiographical memories and lyrical content by using unfamiliar instrumental music. The subjects were instructed to concentrate on listening to the music and were informed that they would receive questions about the music afterwards. The role of affect was not explicitly mentioned. After listening to the musical piece, the subjects reported how much they liked the music and how well a set of emotion terms (e.g. sad, happy) described their emotional response to the musical piece on a seven-point scale (1 = not at all, 7 = extremely well). The questions and emotion terms were presented one by one on a computer screen and the subjects gave their answers by a button press. In addition to the piece used in the mood induction, all subjects also rated the other two pieces as a part of another task (to be reported elsewhere).

The mood induction was followed by a dichotic listening experiment. The subjects’ task was to attend to sounds presented to the right ear and detect target tones (piano and trumpet sounds) among them while ignoring sounds presented to the left ear. They were instructed to press one button on a response box when they heard the piano sound and another button when they heard the trumpet sound (the sound-button association was counterbalanced across subjects). The dichotic listening task was divided into two approximately 5-min blocks. Between the blocks, subjects listened to a one-minute reminder excerpt of the musical piece they had heard during the mood induction.

Stimuli

The musical pieces for the Sad, Neutral and Happy mood conditions were 3-min excerpts from Discovery of the Camp (Band of Brothers soundtrack), the first movement of La Mer by Claude Debussy and Midsommarvaka by Hugo Alfvén, respectively. These pieces have been used in previous studies to successfully induce negative, neutral and positive mood states (Krumhansl, 1997; Vuoskoski and Eerola, 2012).

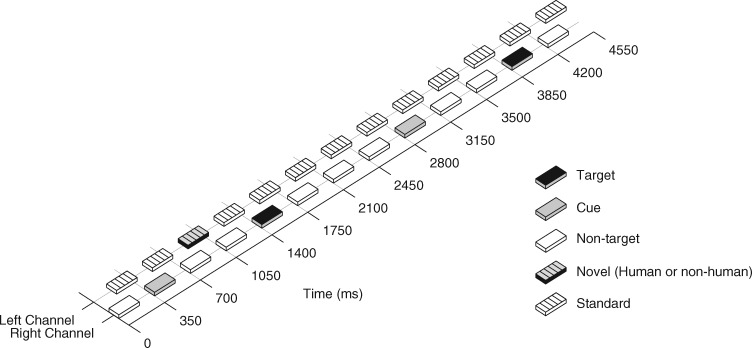

In the dichotic listening task (Figure 1), sounds were presented in an alternating manner to the left and right ears (i.e. each sound presented to one ear was followed by a sound presented to the other ear). The sounds in the attended right channel consisted of Target, Non-target and Cue sounds (approximately 20, 60 and 20%, respectively). The Target sounds were trumpet and piano tones edited from the McGill University Master Samples sound library. The Non-target and Cue sounds were complex tones with two upper harmonic partials that were –3 and –6 dB relative to the fundamental, respectively. Both the Target and Non-target sounds had the F0 of 440 Hz while the Cue sounds had the F0 of 1000 Hz.

Fig. 1.

The dichotic listening paradigm. The sounds alternated between the attended right (Target, Non-target and Cue sounds) and the to-be-ignored left channel (Standard and Novel sounds). The subjects’ task was to respond to the target sounds by a button press.

The sounds in the unattended left channel consisted of Human and Non-human Novel sounds and repeating Standard tones (approximately 5, 5 and 90%, respectively). The Human novel sounds were vocalizations such as laughs and cries modified from the International Affective Digitized Sounds database (Bradley and Lang, 1999). The Non-human novel sounds, in turn, were spectrally rich, artificial sounds. These particular sounds were used since they provide a rich array of varying novel sounds that have been used in previous studies to obtain robust P3a responses (Sorokin et al., 2010). The Standard tones were complex tones with two upper harmonic partials (–3 and –6 dB relative to the fundamental) and had the F0 of 500 Hz.

All sounds were 200 ms in duration including 10-ms rise and fall times. The sounds were presented in alternating manner to the left and right channels with a constant interstimulus interval (ISI) of 175 ms (i.e. the within-channel ISI was 350 ms).
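The complex tones described above can be approximated with a short synthesis sketch. It is illustrative only and not the authors' stimulus-generation code: the sampling rate of 44 100 Hz, the placement of the two upper partials at 2 × F0 and 3 × F0, the linear shape of the rise and fall ramps, and the function name `complex_tone` are all assumptions that the text does not specify.

```python
import math

def complex_tone(f0_hz, duration_s=0.200, ramp_s=0.010, sample_rate=44100,
                 partial_levels_db=(0.0, -3.0, -6.0)):
    """Return peak-normalized samples of a harmonic complex tone.

    Assumptions (not stated in the text): partials sit at 1x, 2x and 3x F0,
    the ramps are linear, and the sampling rate is 44.1 kHz.
    """
    n_samples = int(round(duration_s * sample_rate))
    n_ramp = int(round(ramp_s * sample_rate))
    # Convert levels in dB re the fundamental into linear amplitude factors.
    amplitudes = [10 ** (level / 20.0) for level in partial_levels_db]
    samples = []
    for n in range(n_samples):
        t = n / sample_rate
        value = sum(a * math.sin(2 * math.pi * (k + 1) * f0_hz * t)
                    for k, a in enumerate(amplitudes))
        # Linear rise at onset and linear fall at offset.
        gain = min(1.0, n / n_ramp, (n_samples - 1 - n) / n_ramp)
        samples.append(gain * value)
    peak = max(abs(s) for s in samples)
    return [s / peak for s in samples]

standard = complex_tone(500.0)     # Standard tone, F0 = 500 Hz
non_target = complex_tone(440.0)   # Non-target sound, F0 = 440 Hz
cue = complex_tone(1000.0)         # Cue sound, F0 = 1000 Hz
```

The –3 and –6 dB levels correspond to linear amplitude factors of roughly 0.71 and 0.50 relative to the fundamental.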

The Target sounds in the attended channel were presented pseudo-randomly among the Non-target sounds with the restriction that consecutive Target sounds were separated by at least nine sounds. On half of the Target trials, the third preceding sound was a Novel sound in the unattended channel (Novel-target trials) and on the other half of the Target trials it was a Standard sound (Standard-target trials).

The Cue sound signaled that a Target sound would be presented after five intervening sounds (i.e. Standard, Non-target, Standard, Non-target, Standard or Standard, Non-target, Novel, Non-target, Standard). The Cue sounds were included in the sequence to help the subjects to maintain their attention toward the right channel and to lessen the predictive value of the Novel sounds in the unattended channel as cues for the upcoming presentation of a Target sound.
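The trial definitions above can be expressed as a small classification routine. The sketch below is a hypothetical illustration, assuming the interleaved left/right stream is available as a list of sound labels; the function name `classify_target_trials` and the label strings are not from the original study.

```python
def classify_target_trials(sequence):
    """Label each Target by the sound three positions earlier in the stream.

    `sequence` is the combined, alternating left/right stream of labels.
    Targets without a Novel or Standard sound three positions back are
    marked "Unclassified".
    """
    labels = []
    for i, sound in enumerate(sequence):
        if sound != "Target":
            continue
        third_preceding = sequence[i - 3] if i >= 3 else None
        if third_preceding == "Novel":
            labels.append((i, "Novel-target"))
        elif third_preceding == "Standard":
            labels.append((i, "Standard-target"))
        else:
            labels.append((i, "Unclassified"))
    return labels

# The two cued sequences given in the text (Cue, five intervening sounds, Target).
cued_with_novel = ["Cue", "Standard", "Non-target", "Novel",
                   "Non-target", "Standard", "Target"]
cued_with_standard = ["Cue", "Standard", "Non-target", "Standard",
                      "Non-target", "Standard", "Target"]

print(classify_target_trials(cued_with_novel))
print(classify_target_trials(cued_with_standard))
```

In both cued sequences the Target is the sixth sound after the Cue, so the Novel (or matched Standard) sound in the unattended channel falls exactly three positions before it.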

EEG and autonomic activity data collection and preprocessing

EEG was recorded from 128 active electrodes mounted on a standard BioSemi electrode cap using a BioSemi system (BioSemi B.V., the Netherlands) with a sampling rate of 512 Hz. Two active EEG electrodes were also attached, one to the nose tip for off-line re-referencing and one below the right eye for monitoring eye blinks.

The off-line processing of the EEG was performed using EEGLAB (Delorme and Makeig, 2004). The continuous EEG was filtered (0.5–20 Hz) and epoched from −100 to 400 ms relative to stimulus onset. Thereafter, epochs with large, idiosyncratic artifacts were identified by eye and removed before an independent component analysis (ICA) was performed on the epoched data. Components due to eye blinks and horizontal eye movements were removed and epochs with amplitudes exceeding ±100 μV were discarded. The remaining epochs were averaged separately for each sound type.
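The final two steps of this pipeline, amplitude-based rejection and averaging, can be sketched as follows. This is a simplified single-channel illustration in plain Python rather than the EEGLAB routines actually used; the function name `average_clean_epochs` and the toy data are hypothetical.

```python
def average_clean_epochs(epochs, threshold_uv=100.0):
    """Average the epochs whose absolute amplitude never exceeds the threshold.

    `epochs` is a list of equal-length lists of samples in microvolts for one
    channel and one sound type. Returns (average_waveform, n_epochs_kept).
    """
    clean = [ep for ep in epochs if max(abs(v) for v in ep) <= threshold_uv]
    if not clean:
        raise ValueError("no epochs survived artifact rejection")
    n_samples = len(clean[0])
    average = [sum(ep[i] for ep in clean) / len(clean) for i in range(n_samples)]
    return average, len(clean)

# Toy example: the third epoch exceeds +100 microvolts and is discarded.
toy_epochs = [[1.0, 2.0, 3.0], [3.0, 4.0, 5.0], [0.0, 150.0, 0.0]]
erp, n_kept = average_clean_epochs(toy_epochs)
```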

Electrocardiogram (ECG) and electrodermal activity (EDA) were measured using the BioSemi ActiveTwo system during music listening and a 2-min silent baseline obtained at the beginning of the recording session. EDA was measured with Nihon Kohden electrodes placed on the volar surfaces of the medial phalanges (Dawson et al., 2007) and ECG with BioSemi active electrodes placed on the lower left rib cage and on the distal end of the right collarbone (Stern et al., 2001). Mean values of EDA, heart rate and heart rate variability in low (0.04–0.15 Hz) and high (0.15–0.4 Hz) frequency ranges were computed for the baseline and a 2-min period beginning 30 s after the onset of the music.

Response amplitude quantification and statistical analyses

The N1/MMN and the P3a mean amplitudes were measured from novel-minus-standard difference signals separately for the two novel sound categories over 100–150 and 225–275 ms post-stimulus onset, respectively. The P3b mean amplitude was measured from target-minus-non-target difference signals over 350–400 ms post-stimulus onset. Our preliminary analyses showed that there was no significant amplitude difference between P3b responses on Target trials following novel sounds and those following standard sounds. Therefore, the P3b responses for both types of trials were averaged together.
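The mean-amplitude measure can be illustrated with a minimal sketch. It assumes that an averaged waveform is a list of samples whose first sample corresponds to the start of the epoch; the function names and the treatment of window edges (inclusive on both sides) are assumptions, not details given in the text.

```python
def difference_wave(deviant_erp, control_erp):
    """Sample-wise difference, e.g. novel minus standard or target minus non-target."""
    return [d - c for d, c in zip(deviant_erp, control_erp)]

def mean_amplitude(wave, sfreq_hz, epoch_start_ms, win_start_ms, win_end_ms):
    """Mean of the samples whose latency lies within the window (inclusive)."""
    selected = []
    for i, value in enumerate(wave):
        latency_ms = epoch_start_ms + 1000.0 * i / sfreq_hz
        if win_start_ms <= latency_ms <= win_end_ms:
            selected.append(value)
    if not selected:
        raise ValueError("no samples fall inside the requested window")
    return sum(selected) / len(selected)

# Measurement windows reported in the text (ms after stimulus onset).
WINDOWS_MS = {"N1/MMN": (100, 150), "P3a": (225, 275), "P3b": (350, 400)}

# Toy check: a ramp sampled at 1000 Hz whose value equals its latency in ms.
ramp = [float(i - 100) for i in range(501)]
n1_mmn = mean_amplitude(ramp, 1000.0, -100.0, *WINDOWS_MS["N1/MMN"])
```

With the 512 Hz sampling rate used in the recording, the same call would be made with `sfreq_hz=512.0` and `epoch_start_ms=-100.0`.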

For the statistical analysis of the response mean amplitudes, we used three regions of interest (ROI) of midline channels. Namely, we included a frontal ROI (channels C18, C19, C20 and C21/Fz) and a central ROI (channels A1/Cz, A2, A3 and A4) for the analysis of the MMN and P3a and a posterior ROI (channels A19/Pz, A20, A21 and A22) for the analysis of the P3b.

Because of the rapid presentation rate, we had to ensure that no confounding effects in the time range of the N1/MMN, P3a or P3b could arise simply from acoustic differences between stimulus types in the preceding and following sounds. Therefore, we only analyzed responses to Standard and Non-target sounds that were matched to the Novel sounds and Target tones in this regard. Namely, we only analyzed responses to Standard sounds that were presented in a similar position in the sequence as the Novel sounds relative to the Target sounds (i.e. Non-target–Standard–Non-target–Standard–Target vs Non-target–Novel–Non-target–Standard–Target). Furthermore, we only analyzed responses to those Non-target sounds that were, similarly to the Target tones, preceded by a Standard tone and followed by a Standard tone and a Non-target sound (i.e. Standard–Non-target–Standard–Non-target vs Standard–Target–Standard–Non-target).

The analysis of the reaction times was restricted to correct trials and responses that were given between 200 and 1200 ms after Target onset.

Reaction times and the number of correct responses were analyzed using a 3 × 3 repeated measures ANOVA with Stimulus (Standard, Human novel, Non-human novel) as a within-subject factor and Condition (Sad, Neutral, Happy) as a between-subjects factor.

The N1/MMN and P3a responses elicited by Human and Non-human sounds were analyzed using separate 2 × 2 × 3 repeated measures ANOVAs with ROI (Frontal vs Central) and Stimulus (Human novel and Non-human novel) as within-subject factors and Condition (Sad, Neutral and Happy) as a between-subjects factor. The amplitude of the P3b to target tones at the posterior ROI was analyzed with a one-way ANOVA with Condition (Sad, Neutral and Happy) as a factor. The electrodes used in the amplitude analyses were conventional choices for analyzing MMN, P3a and P3b amplitudes and represented well the peaks of the response scalp distributions across the mood conditions.
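For the simplest of these tests, the one-way between-subjects ANOVA on the P3b, the F statistic can be computed as in the sketch below. It is a textbook implementation written for illustration, not the statistical software used in the study, and the function name `one_way_anova` and the toy data are hypothetical.

```python
def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of independent groups."""
    all_values = [v for g in groups for v in g]
    grand_mean = sum(all_values) / len(all_values)
    means = [sum(g) / len(g) for g in groups]
    # Between-groups and within-groups sums of squares.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, means) for v in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within

# Toy data with three small groups (not the study's amplitudes).
f_toy, df1_toy, df2_toy = one_way_anova([[1.0, 2.0, 3.0],
                                         [2.0, 3.0, 4.0],
                                         [3.0, 4.0, 5.0]])

# Three groups of 18 subjects give the degrees of freedom (2, 51).
_, df1, df2 = one_way_anova([[0.0, 1.0] * 9 for _ in range(3)])
```

Three groups of 18 subjects yield 2 and 51 degrees of freedom, which matches the between-subjects tests reported in the Results.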

Repeated measures ANOVAs were used to compare the self-reported emotional responses with Musical piece (happy, neutral and sad) as a within-subject factor. Univariate ANOVAs were used to compare the music-minus-baseline differences of the physiological measures across the mood conditions.

Post-hoc pair-wise comparisons were corrected using the false discovery rate procedure (Benjamini and Hochberg, 1995).
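The Benjamini-Hochberg step-up procedure can be sketched as follows. This is a generic illustration of the adjustment, assuming a plain list of raw p-values from one family of comparisons; the function name `benjamini_hochberg` and the example values are hypothetical and are not taken from the reported analyses.

```python
def benjamini_hochberg(p_values):
    """Return Benjamini-Hochberg adjusted p-values in the original order."""
    m = len(p_values)
    # Indices of the p-values sorted from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down so that adjusted values stay monotone.
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted

# Hypothetical raw p-values from three pair-wise contrasts.
raw = [0.01, 0.04, 0.03]
adjusted = benjamini_hochberg(raw)
significant = [p <= 0.05 for p in adjusted]
```

Each raw p-value is scaled by the number of comparisons divided by its rank, and a comparison is declared significant when its adjusted value does not exceed the chosen false discovery rate.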

Results

Self-reported emotional responses

Out of the three musical pieces, subjects reported feeling most happiness while listening to the happy musical piece [F(2,88) = 21.59, P < 0.001; pairwise comparisons: happy > neutral, P < 0.01; happy > sad, P < 0.001; neutral > sad, P < 0.01] and most sadness while listening to the sad piece [F(2,88) = 82.12, P < 0.001; pairwise comparisons: sad > neutral, P < 0.001; sad > happy, P < 0.001; happy > neutral, P < 0.001]. There were no significant differences in the liking ratings between the musical pieces [F(2,88) = 2.21, P = 0.116].

Physiological responses

No significant differences between the mood conditions were found for EDA [F(2,42) = 0.01, P = 0.99], heart rate [F(2,42) = 0.10, P = 0.91] or heart rate variability in the high [F(2,42) = 0.27, P = 0.77] or low frequency range [F(2,42) = 0.26, P = 0.77].

Behavioral performance

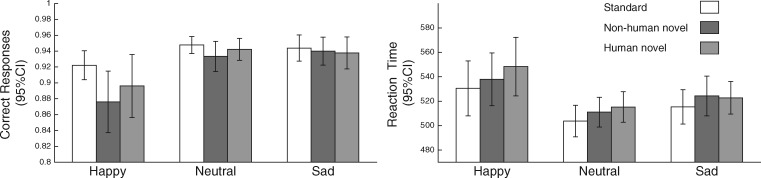

Reaction times were prolonged on trials following novel sounds [Main effect of Stimulus: F(2,102) = 6.393, P < 0.01] (Figure 2). No significant effects of Condition on reaction times were found. The subjects also made more incorrect responses on trials following a novel sound than on trials following standard sounds [Main effect of Stimulus: F(1.76,91.530) = 7.552, P < 0.01] (Figure 2). However, there was also a significant Stimulus × Condition interaction [F(3.59,91.530) = 3.002, P < 0.05], suggesting that the magnitude of this distraction effect differed between the mood conditions. The post hoc pair-wise comparisons indicated that differences in error rates between standard and novel trials stemmed from the performance of the subjects in the Happy condition. Namely, only these subjects showed significantly elevated error rates on novel trials (Non-human novel sounds: P < 0.001, Human novel sounds: P < 0.05) relative to standard trials, whereas no significant differences in error rates between the stimulus types were found for the subjects in the Neutral and Sad conditions (for all contrasts, P > 0.4). Direct comparison between the groups revealed higher error rates in the Happy condition relative to the Sad and Neutral conditions for the Non-human novel sounds (P < 0.01 for both).

Fig. 2.

The percentage of correct responses and reaction times (ms) for the Standard-target and Novel-target trials in the happy, neutral and sad mood induction conditions and the corresponding 95% confidence intervals.

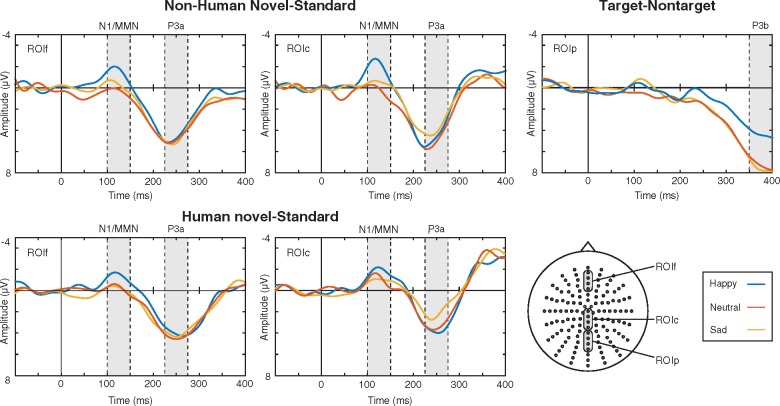

ERP results

The Novel sound-minus-standard sound and Target-minus-non-target sound difference signals and their scalp distributions are displayed in Figures 4 and 3, respectively. The novel sounds in the non-attended channel elicited an N1/MMN-like negativity followed by a prominent P3a at fronto-central electrodes. The targets in the attended channel elicited a P3b response with the typical posterior scalp distribution.

Fig. 4.

Non-human-novel-minus-standard and human-novel-minus-standard difference signals at the frontal and central ROIs (ROIf and ROIc) and Target-minus-non-target difference signals at posterior ROI (ROIp) for the subjects in the Happy, Neutral and Sad conditions.

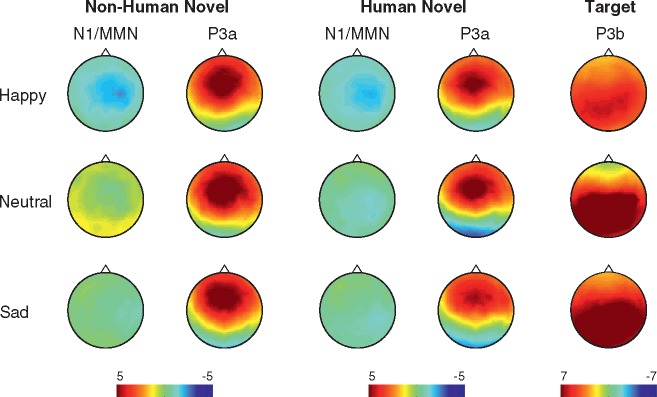

Fig. 3.

The scalp distributions for the N1/MMN, P3a and P3b for the subjects in the happy, neutral and sad mood induction conditions.

For the N1/MMN, there was a significant main effect of Condition [F(2,51) = 4.144, P < 0.05]. The post hoc pair-wise comparisons indicated that the N1/MMN was significantly larger for the subjects in the Happy condition than for those in the Sad (P < 0.05) or Neutral (P < 0.05) conditions. There was also a significant ROI × Stimulus interaction [F(1,51) = 5.883, P < 0.05] stemming from a larger N1/MMN amplitude at the central ROI relative to the frontal ROI for the non-human novel sound (P = 0.001).

No significant differences between the conditions were found for the P3a. There was a significant ROI × Stimulus interaction [F(1,51) = 8.835, P < 0.01]. The post hoc pair-wise comparisons indicated that the P3a elicited by the non-human novel sounds was larger at the frontal ROI than at the central ROI (P < 0.01) and that the non-human novel sounds elicited a larger P3a than the human novel sounds at both the frontal and central ROIs (P < 0.05 and P < 0.01, respectively).

For the P3b, there was a significant main effect of Condition [F(2,51) = 3.293, P < 0.05]. After adjusting for multiple comparisons, the post hoc pair-wise comparisons indicated that the P3b of the subjects in the Happy condition was smaller at a trend level than the P3b of the subjects in the Sad and Neutral Conditions (P = 0.06 for both).

Discussion

The current study indicates that music-induced positive mood broadens the scope of auditory selective attention. Specifically, we found that subjects who had listened to a happy musical piece showed augmented ERP responses to to-be-ignored novel sounds and reduced responses to target sounds. These electrophysiological results were echoed by behavioral data showing that these subjects also displayed elevated error rates on target trials following novel sounds. Thus, our results offer further support for the notion that positive mood broadens attentional scope (Rowe et al., 2007). To the best of our knowledge, this is the first study demonstrating that this effect is reflected in the neurocognitive functions measured by classical ERP indices of selective auditory attention, the N1/MMN, P3a and P3b, and can be achieved even with unfamiliar instrumental music.

Music-induced mood and auditory attention

The N1/MMN elicited by the to-be-ignored novel sounds was attenuated in the Sad and Neutral mood conditions relative to the Happy mood condition. We attribute the diminished N1/MMN to more effective control over resource allocation towards to-be-ignored auditory information. In line with this interpretation, a number of studies have found that ERPs and event-related magnetic fields (ERFs), including the MMN, obtained in dichotic listening paradigms to sounds in the unattended channel are attenuated already at relatively early stages of processing (Woldorff et al., 1991, 1993). These findings have typically been taken as evidence that selective attention prevents the full processing of unattended sensory information (Woldorff et al., 1991; for a more refined model, see Sussman et al., 2003). The enlarged N1/MMN in subjects in the Happy mood condition suggests that positive mood reduces the selectivity of auditory attention and allows more resources to be allocated to the processing of unattended sound streams.

In addition to an enlarged N1/MMN, the subjects in a happy mood showed a reduced P3b for the target sounds relative to the other two subject groups. The P3b amplitude has traditionally been taken as an index of the amount of attentional resources available for stimulus processing. According to this framework, the current results imply that not only did the subjects in the Happy mood condition allocate more resources to the unattended sounds, but they also devoted fewer processing resources to the target sounds in the attended channel.

According to one model of the effects of positive mood on visual spatial attention, positive mood does not cause a reallocation of processing resources from foveal stimuli to peripheral ones but mobilizes additional resources for the processing of both, supposedly by changing the balance between ‘internal’ and ‘external’ attention (Chun et al., 2011) in favor of the latter (Vanlessen et al., 2016). This proposition is supported by studies suggesting that the enhanced processing of peripheral stimuli in positive mood does not come at the expense of processing foveal ones (reviewed in Vanlessen et al., 2016). As mentioned above, the current study, in contrast, found evidence for a reduced allocation of attentional resources to the target stimuli. Thus, the current results suggest that in the auditory domain positive mood can induce a tradeoff between the processing of target and to-be-ignored sounds, at least when the latter include highly distracting novel sounds.

In sum, the ERP results indicate that positive mood promotes more sensitive change detection for unattended sounds but also leads to less effective shielding against distraction from task-irrelevant stimuli as well as reduced allocation of processing resources to task-relevant stimuli. The finding that the behavioral distraction effect was most pronounced in the Happy mood condition is in line with this interpretation. It bears noting that only some positive affective states might broaden attentional scope, while those with high approach motivation may in fact cause more focused attention (Harmon-Jones et al., 2012). Also, some studies have failed to find any effects of positive mood on attention (Bruyneel et al., 2013), suggesting that there are yet-to-be-determined factors that may preclude the broadening effect of positive mood on attentional scope.

The non-human novel sounds elicited larger P3a responses across the mood groups, indicating that they more readily engaged the underlying attentional orienting mechanism. In line with this interpretation, the behavioral distraction effect (i.e. the increased error rates) also appeared stronger for the non-human novel sounds than for the human novel sounds. These were somewhat surprising findings given that a previous study using the same novel sounds in a multi-feature MMN paradigm did not find such a difference between the novel sound types (Sorokin et al., 2010), suggesting that the amplitude difference did not simply result from low-level acoustical differences between the novel sound categories. There are many methodological differences between the current and the previous study, including that in the current study the subjects actively attended the right channel while the previous study used a passive condition in which the subjects watched a silent movie. Neuroimaging studies indicate that attending the right channel engages the left auditory regions (Jäncke et al., 2001). There is evidence that, relative to the right auditory areas, those on the left appear to be less sensitive to non-speech human vocalizations (Belin et al., 2000). Thus, one possibility is that attending to the right ear may be particularly disruptive for the processing of such sounds, thereby making them less attention-catching.

The current results indicate that even instrumental music that is unfamiliar to the listener and selected by the experimenter, and thereby cannot have explicit memory associations to real-life emotional events, can affect attentional scope via its emotional effects. Although the relation of music-induced and ‘genuine’ everyday emotions continues to be debated, functional neuroimaging studies suggest that the neural underpinnings of the two are at least partly the same (Koelsch, 2014). Music-induced positive emotions such as joy and happiness (Mitterschiffthaler et al., 2007; Brattico et al., 2011; Trost et al., 2012) and music-induced pleasure more generally have been consistently associated with the activation of the striatal dopaminergic reward system (Blood and Zatorre, 2001; Brown et al., 2004; Menon and Levitin, 2005; Koelsch et al., 2006; Salimpoor et al., 2011). Since the influence of positive mood on attention has been suggested to be mediated by dopamine (Ashby et al., 1999; Aarts et al., 2011), elevated dopamine release is an obvious candidate neurochemical mediator of the current results. Interestingly, patients with Parkinson’s disease have been found to show less distraction after withdrawing from dopaminergic medication (Cools et al., 2001), and a dopamine antagonist has been reported to improve performance in a spatial working memory task with visual distractors (Mehta et al., 2004). However, the findings that dopamine antagonists seem to increase distraction and augment neural responses to unattended sounds in a somewhat similar manner to the current results (Kähkönen et al., 2002) are at odds with the simplistic suggestion that listening to happy music simply increases dopamine levels in the brain, which in turn facilitates attentional switching at the expense of focus.

One might argue that the happy music affected attention simply because it was more arousing than the neutral and sad music. However, the physiological measures did not reveal differences in physiological arousal levels between the conditions. Furthermore, a previous study directly examining the effects of physiological arousal (manipulated with exercise) found no evidence for broadening of attentional focus (Harmon-Jones et al., 2012). Future studies could test the contribution of arousal by also including low-valence, high-arousal musical material (e.g. scary music).

While the current results are compatible with theories proposing that positive mood broadens the scope of attention, they are inconsistent with the notion that all non-neutral emotional states disrupt cognitive control (Pacheco-Unguetti and Parmentier, 2015). Namely, we found no evidence that sad mood induces heightened distractibility. In line with some earlier studies, sad mood showed no significant effects on either the behavioral measures (Chepenik et al., 2007; Rowe et al., 2007) or the ERP responses. It could be argued, of course, that listening to music cannot induce sadness that is intense enough to influence attentional scope. Although this explanation cannot be completely ruled out, previous studies do indicate that musical mood manipulation tends to be effective in inducing sadness (Vuoskoski and Eerola, 2012; Juslin et al., 2013), even when indexed by indirect measures that should be relatively insensitive to demand characteristics. Importantly, Vuoskoski and Eerola (2012) found that after listening to the same sad musical piece employed in the current study, subjects tended to show similar biases in interpretation and memory as after remembering sad life events, which supposedly cause an affective state closely resembling real-life sadness. Obviously, this is not to say that music-induced sadness is identical to sadness elicited by actual personal losses. One clear difference between real-life and music-induced sadness is that while the former is considered negative and to-be-avoided, the latter is sought after and tends to be mixed with positive emotions (Taruffi and Koelsch, 2014; Peltola and Eerola, 2016). Thereby, the effects of emotions induced by sad music on attention might very well differ from those of ‘genuine’ sadness. Studies directly comparing the effects of sadness induced by music listening and by conditions that elicit emotions more directly linked with real-life sadness might shed light on the issue.

Summary and conclusions

For the first time, the current study provides behavioral and ERP evidence that positive mood induced by unfamiliar instrumental music can broaden the scope of auditory selective attention. This effect manifested both as greater sensitivity to to-be-ignored sounds and as reduced resource allocation to to-be-attended sounds. The results highlight the emotional power of music and the close interplay between affect and cognition.

Funding

This work was financially supported by the Academy of Finland Grant 270220 (Surun Suloisuus).

Conflict of interest. None declared.

References

- Aarts E., van Holstein M., Cools R. (2011). Striatal dopamine and the interface between motivation and cognition. Frontiers in Psychology, 2, 163.

- Alain C., Woods D.L. (1997). Attention modulates auditory pattern memory as indexed by event-related brain potentials. Psychophysiology, 34(5), 534–46.

- Ashby F.G., Isen A.M., Turken A.U. (1999). A neuropsychological theory of positive affect and its influence on cognition. Psychological Review, 106(3), 529–50.

- Belin P., Zatorre R.J., Lafaille P., Ahad P., Pike B. (2000). Voice-selective areas in human auditory cortex. Nature, 403(6767), 309–12.

- Benjamini Y., Hochberg Y. (1995). Controlling the false discovery rate: a practical and powerful approach to multiple testing. Journal of the Royal Statistical Society. Series B (Methodological), 57, 289–300.

- Biss R.K., Hasher L., Thomas R.C. (2010). Positive mood is associated with the implicit use of distraction. Motivation and Emotion, 34(1), 73–7.

- Blood A.J., Zatorre R.J. (2001). Intensely pleasurable responses to music correlate with activity in brain regions implicated in reward and emotion. Proceedings of the National Academy of Sciences of the United States of America, 98(20), 11818–23.

- Bradley M., Lang P. (1999). International Affective Digitized Sounds (IADS): Stimuli, Instruction Manual and Affective Ratings (Tech. Rep. No. B-2). Gainesville, FL: The Center for Research in Psychophysiology, University of Florida.

- Brattico E., Alluri V., Bogert B., et al. (2011). A functional MRI study of happy and sad emotions in music with and without lyrics. Frontiers in Psychology, 2, 308.

- Broadbent D.E. (1958). Perception and Communication. London: Pergamon Press.

- Brown S., Martinez M., Parsons L. (2004). Passive music listening spontaneously engages limbic and paralimbic systems. Neuroreport, 15(13), 2033–7.

- Bruyneel L., van Steenbergen H., Hommel B., Band G.P.H., De Raedt R., Koster E.H.W. (2013). Happy but still focused: failures to find evidence for a mood-induced widening of visual attention. Psychological Research, 77(3), 320–32.

- Chen M.-C., Tsai P.-L., Huang Y.-T., Lin K. (2012). Pleasant music improves visual attention in patients with unilateral neglect after stroke. Brain Injury, 27(1), 75–82.

- Chepenik L.G., Cornew L.A., Farah M.J. (2007). The influence of sad mood on cognition. Emotion, 7(4), 802–11.

- Cherry E.C. (1953). Some experiments on the recognition of speech, with one and with two ears. The Journal of the Acoustical Society of America, 25(5), 975–9.

- Chun M.M., Golomb J.D., Turk-Browne N.B. (2011). A taxonomy of external and internal attention. Annual Review of Psychology, 62, 73–101.

- Cools R., Barker R.A., Sahakian B.J., Robbins T.W. (2001). Enhanced or impaired cognitive function in Parkinson’s disease as a function of dopaminergic medication and task demands. Cerebral Cortex, 11(12), 1136–43.

- Dawson M., Schell A., Filion D. (2007). The electrodermal system. In: Cacioppo J.T., Tassinary L.G., Berntson G.G., editors. Handbook of Psychophysiology, Vol. 2, pp. 200–23, Cambridge: Cambridge University Press.

- Delorme A., Makeig S. (2004). EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. Journal of Neuroscience Methods, 134(1), 9–21.

- Dolan R.J., Friston K.J., Ohman A., et al. (2002). Emotion, cognition, and behavior. Science, 298(5596), 1191–4.

- Dreisbach G. (2006). How positive affect modulates cognitive control: the costs and benefits of reduced maintenance capability. Brain and Cognition, 60(1), 11–9.

- Dreisbach G., Goschke T. (2004). How positive affect modulates cognitive control: reduced perseveration at the cost of increased distractibility. Journal of Experimental Psychology: Learning, Memory, and Cognition, 30(2), 343–53.

- Escera C., Alho K., Schröger E., Winkler I.W. (2000). Involuntary attention and distractibility as evaluated with event-related brain potentials. Audiology and Neurotology, 5(3–4), 151–66.

- Escera C., Alho K., Winkler I., Näätänen R. (1998). Neural mechanisms of involuntary attention to acoustic novelty and change. Journal of Cognitive Neuroscience, 10(5), 590–604.

- Fredrickson B.L. (2001). The role of positive emotions in positive psychology. The broaden-and-build theory of positive emotions. The American Psychologist, 56(3), 218–26.

- Fredrickson B.L., Branigan C. (2005). Positive emotions broaden the scope of attention and thought‐action repertoires. Cognition & Emotion, 19(3), 313–32.

- Fritz J.B., Elhilali M., David S.V., Shamma S.A. (2007). Auditory attention-focusing the searchlight on sound. Current Opinion in Neurobiology, 17(4), 437–55.

- Harmon-Jones E., Gable P.A., Price T.F. (2012). The influence of affective states varying in motivational intensity on cognitive scope. Frontiers in Integrative Neuroscience, 6, 73.

- Inzlicht M., Bartholow B.D., Hirsh J.B. (2015). Emotional foundations of cognitive control. Trends in Cognitive Sciences, 19(3), 126–32.

- Jäncke L., Buchanan T.W., Lutz K., Shah N.J. (2001). Focused and nonfocused attention in verbal and emotional dichotic listening: an FMRI study. Brain and Language, 78(3), 349–63.

- Jefferies L.N., Smilek D., Eich E., Enns J.T. (2008). Emotional valence and arousal interact in attentional control. Psychological Science, 19(3), 290–5.

- Juslin P.N., Harmat L., Eerola T. (2013). What makes music emotionally significant? Exploring the underlying mechanisms. Psychology of Music, 42(4), 599–623.

- Kähkönen S., Ahveninen J., Pekkonen E., et al. (2002). Dopamine modulates involuntary attention shifting and reorienting: an electromagnetic study. Clinical Neurophysiology, 113(12), 1894–902.

- Koelsch S. (2014). Brain correlates of music-evoked emotions. Nature Reviews. Neuroscience, 15(3), 170–80.

- Koelsch S., Fritz T., V Cramon D.Y., Müller K., Friederici A.D. (2006). Investigating emotion with music: an fMRI study. Human Brain Mapping, 27(3), 239–50.

- Kok A. (2001). On the utility of P3 amplitude as a measure of processing capacity. Psychophysiology, 38(3), 557–77.

- Krumhansl C.L. (1997). An exploratory study of musical emotions and psychophysiology. Canadian Journal of Experimental Psychology, 51(4), 336–53.

- Lindquist K.A., Wager T.D., Kober H., Bliss-Moreau E., Barrett L.F. (2012). The brain basis of emotion: a meta-analytic review. Behavioral and Brain Sciences, 35(03), 121–43.

- May P.J.C., Tiitinen H. (2010). Mismatch negativity (MMN), the deviance-elicited auditory deflection, explained. Psychophysiology, 47(1), 66–122.

- Mehta M.A., Manes F.F., Magnolfi G., Sahakian B.J., Robbins T.W. (2004). Impaired set-shifting and dissociable effects on tests of spatial working memory following the dopamine D2 receptor antagonist sulpiride in human volunteers. Psychopharmacology, 176(3–4), 331–42.

- Menon V., Levitin D.J. (2005). The rewards of music listening: response and physiological connectivity of the mesolimbic system. NeuroImage, 28(1), 175–84.

- Mitchell R.L.C., Phillips L.H. (2007). The psychological, neurochemical and functional neuroanatomical mediators of the effects of positive and negative mood on executive functions. Neuropsychologia, 45(4), 617–29.

- Mitterschiffthaler M.T., Fu C.H.Y., Dalton J.A., Andrew C.M., Williams S.C.R. (2007). A functional MRI study of happy and sad affective states induced by classical music. Human Brain Mapping, 28(11), 1150–62.

- Moriya H., Nittono H. (2011). Effect of mood states on the breadth of spatial attentional focus: an event-related potential study. Neuropsychologia, 49(5), 1162–70.

- Näätänen R., Jacobsen T., Winkler I. (2005). Memory-based or afferent processes in mismatch negativity (MMN): a review of the evidence. Psychophysiology, 42(1), 25–32.

- Näätänen R., Paavilainen P., Tiitinen H., Jiang D., Alho K. (1993). Attention and mismatch negativity. Psychophysiology, 30(5), 436–50.

- Olivers C.N.L., Nieuwenhuis S. (2006). The beneficial effects of additional task load, positive affect, and instruction on the attentional blink. Journal of Experimental Psychology. Human Perception and Performance, 32(2), 364–79.

- Pacheco-Unguetti A.P., Parmentier F.B.R. (2013). Sadness increases distraction by auditory deviant stimuli. Emotion, 14(1), 203–13.

- Pacheco-Unguetti A.P., Parmentier F.B.R. (2015). Happiness increases distraction by auditory deviant stimuli. British Journal of Psychology, 107(3), 419–33.

- Peltola H.-R., Eerola T. (2016). Fifty shades of blue: classification of music-evoked sadness. Musicae Scientiae, 20(1), 84–102.

- Pessoa L. (2008). On the relationship between emotion and cognition. Nature Reviews Neuroscience, 9(2), 148–58.

- Rowe G., Hirsh J.B., Anderson A.K. (2007). Positive affect increases the breadth of attentional selection. Proceedings of the National Academy of Sciences of the United States of America, 104(1), 383–8.

- Salimpoor V.N., Benovoy M., Larcher K., Dagher A., Zatorre R.J. (2011). Anatomically distinct dopamine release during anticipation and experience of peak emotion to music. Nature Neuroscience, 14(2), 257–62.

- Schmid Y., Hysek C.M., Simmler L.D., Crockett M.J., Quednow B.B., Liechti M.E. (2014). Differential effects of MDMA and methylphenidate on social cognition. Journal of Psychopharmacology, 28(9), 847–56.

- Schmitz T.W., De Rosa E., Anderson A.K. (2009). Opposing influences of affective state valence on visual cortical encoding. The Journal of Neuroscience, 29(22), 7199–207.

- Schupp H.T., Flaisch T., Stockburger J., Junghöfer M. (2006). Emotion and attention: event-related brain potential studies. Progress in Brain Research, 156, 31–51.

- Schwarz N., Clore G. (2003). Mood as information: 20 years later. Psychological Inquiry, 14(3), 296–303.

- Sorokin A., Alku P., Kujala T. (2010). Change and novelty detection in speech and non-speech sound streams. Brain Research, 1327, 77–90.

- Soto D., Funes M.J., Guzmán-García A., Warbrick T., Rotshtein P., Humphreys G.W. (2009). Pleasant music overcomes the loss of awareness in patients with visual neglect. Proceedings of the National Academy of Sciences of the United States of America, 106(14), 6011–6.

- Squires N.K., Squires K.C., Hillyard S.A. (1975). Two varieties of long-latency positive waves evoked by unpredictable auditory stimuli in man. Electroencephalography and Clinical Neurophysiology, 38(4), 387–401.

- Stern R.M., Ray W.J., Quigley K.S. (2001). Psychophysiological Recording. Oxford: Oxford University Press.

- Sussman E., Winkler I., Wang W. (2003). MMN and attention: competition for deviance detection. Psychophysiology, 40(3), 430–5.

- Szymanski M.D., Yund E.W., Woods D.L. (1999). Phonemes, intensity and attention: differential effects on the mismatch negativity (MMN). The Journal of the Acoustical Society of America, 106(6), 3492.

- Taruffi L., Koelsch S. (2014). The paradox of music-evoked sadness: an online survey. PLoS One, 9(10), e110490.

- Trost W., Ethofer T., Zentner M., Vuilleumier P. (2012). Mapping aesthetic musical emotions in the brain. Cerebral Cortex, 22(12), 2769–83.

- Trost W., Frühholz S., Schön D., et al. (2014). Getting the beat: entrainment of brain activity by musical rhythm and pleasantness. NeuroImage, 103, 55–64.

- Vanlessen N., De Raedt R., Koster E.H., Pourtois G. (2016). Happy heart, smiling eyes: a systematic review of positive mood effects on broadening of visuospatial attention. Neuroscience & Biobehavioral Reviews, 68, 816–37.

- Vanlessen N., Rossi V., De Raedt R., Pourtois G. (2013). Positive emotion broadens attention focus through decreased position-specific spatial encoding in early visual cortex: evidence from ERPs. Cognitive, Affective, & Behavioral Neuroscience, 13(1), 60–79.

- Vanlessen N., Rossi V., De Raedt R., Pourtois G. (2014). Feeling happy enhances early spatial encoding of peripheral information automatically: electrophysiological time-course and neural sources. Cognitive, Affective, & Behavioral Neuroscience, 14(3), 951–69.

- van Steenbergen H. (2015). Affective Modulation of Cognitive Control: A Biobehavioral Perspective. In: Handbook of Biobehavioral Approaches to Self-Regulation (pp. 89–107). New York, NY: Springer.

- Västfjäll D. (2002). Emotion induction through music: a review of the musical mood induction procedure. Musicae Scientiae, 5(1 suppl), 173–211.

- Vuoskoski J.K., Eerola T. (2012). Can sad music really make you sad? Indirect measures of affective states induced by music and autobiographical memories. Psychology of Aesthetics, Creativity, and the Arts, 6(3), 204–13.

- Woldorff M.G., Gallen C.C., Hampson S.A., et al. (1993). Modulation of early sensory processing in human auditory cortex during auditory selective attention. Proceedings of the National Academy of Sciences of the United States of America, 90(18), 8722–6.

- Woldorff M., Hackley S., Hillyard S. (1991). The effects of channel-selective attention on the mismatch negativity wave elicited by deviant tones. Psychophysiology, 28(1), 30–42.