Abstract

Unlike conventional scalar sensors, camera sensors at different positions can capture a variety of views of an object. Based on this intrinsic property, a novel model called full-view coverage was proposed. We study the problem of how to select the minimum number of sensors that guarantees full-view coverage of a given region of interest (ROI). To tackle this problem, we derive the constraint on the sensor positions for full-view neighborhood coverage with the minimum number of nodes around a point. Next, we prove that full-view area coverage can be approximately guaranteed as long as the regular hexagons determined by the virtual grid are seamlessly stitched. We then present two solutions for camera sensor networks under two different deployment strategies. By computing the theoretically optimal length of the virtual grid, we put forward the deployment pattern algorithm (DPA) for deterministic deployment. To reduce redundancy in random deployment, we propose a local neighboring-optimal selection algorithm (LNSA) for achieving full-view coverage. Finally, extensive simulation results show the feasibility of the proposed solutions.

Keywords: full-view area coverage, camera sensor networks, sleep scheduling

1. Introduction

Research on wireless sensor networks has attracted intense interest because such networks can act as a bridge between the physical world and digital information [1]. With improvements in microelectromechanical systems (MEMS) technology, multimedia capability has been added to sensor nodes, which can thus provide more detailed information about the environment [2,3,4]. As a result, researchers have recently started to work on wireless multimedia sensor networks.

Compared with scalar sensors, current multimedia sensors such as camera sensors and ultrasound sensors have an important characteristic, namely directionality. Wireless multimedia sensor networks are also variously known as directional sensor networks (DSNs), visual sensor networks (VSNs) or camera sensor networks (CSNs). Visual coverage is an important quantifiable property of camera sensor networks, describing the visual data collection within the sensing range of the system. Knowledge of the visual coverage guides the deployment of the whole network. Traditional signal-based sensor networks cannot identify differences between similar targets, whereas visual information has an inherent advantage in this respect [5]. Consequently, numerous applications employ camera sensor networks to monitor the environment, such as maritime oil exploration, intruder recognition and tracking, and military and civilian activity monitoring.

Capturing an easily identifiable posture of an intruder in real time is critical for recognition efficiency. For example, a camera can identify a target precisely only if the target is in front of or near the camera, that is, facing straight into the camera. In practical applications, however, the facing direction of intruders is uncertain, which poses a significant challenge for camera sensor networks. To solve this problem, the full-view coverage model has been proposed to guarantee face recognition of intruders from any direction. A target is considered full-view covered if, no matter which direction it faces, there is always a sensor whose sensing range includes the target and whose viewing direction is sufficiently close to the direction the target is facing. Similar to full-view coverage, the k-coverage model overlaps the sensing ranges of sensors to improve the fault tolerance of the network [6]. Compared with the k-coverage model, the full-view coverage model is more directly tied to the object’s facing direction.

Just like the coverage issue in wireless sensor networks, the geometric shape of the monitored target can be seen as a linear region, and then be developed in the two-dimensional plane. Full-view coverage problems can be classified as target-based coverage [7,8,9,10,11,12], barrier-based coverage [13,14], and area-based coverage [15,16], with the complexity of the research gradually increasing. In this paper, we focus on the area-based full-view coverage problem. Informally, if the camera sensor network monitors the ROI from all perspectives, it is guaranteed that every aspect of an object at any position can be captured by the camera nodes in the CSN.

One significant problem in full-view area coverage is how to reduce the complexity caused by the irregular sensing range and the variable viewing direction. The full-view area coverage with a minimum number of sensors (FACM) problem has been proved to be NP-hard [17,18]. Existing works commonly use approximation algorithms to solve it [15,19,20,21]. Another important question is how to derive the critical sensor density needed for full-view coverage in both deterministic and random deployment. Several references [18,22,23,24] give results for the critical density; for mathematical convenience, they assume asymptotic coverage as the total number of cameras approaches infinity. Our main contributions are as follows.

We deduce the necessary and sufficient conditions of the FACM problem for the local point and study the geometric parameters, the maximum full-view neighborhood coverage and the position trajectory of sensors around the local point.

We find that the full-view area coverage can be guaranteed approximately, as long as the regular hexagons decided by the virtual grids are seamlessly stitched.

We propose two solutions for camera sensor networks, one for each of two deployment strategies. By computing the theoretically optimal length of the virtual grid, we present the deployment pattern algorithm (DPA) for FACM in deterministic deployment. To reduce redundancy in random deployment, we devise a local neighboring-optimal selection algorithm (LNSA) that achieves full-view coverage at the grid points.

The paper is organized as follows. Section 2 discusses the literature related to full-view coverage. Section 3 describes the network model, the geometric analysis, and the preliminaries. Section 4 derives the density estimate and the deployment pattern algorithm (DPA) for deterministic deployment. Section 5 proposes the local neighboring-optimal selection algorithm (LNSA) for full-view area coverage in random deployment. Section 6 demonstrates the feasibility of DPA and LNSA with simulation results. Section 7 concludes the paper and discusses future work.

2. Related Work

To improve the quality of service (QoS) of CSNs, researchers have recently dedicated much effort to the coverage issue in sensor networks. Because the extensive body of work based on omnidirectional sensing models does not carry over directly to CSNs, the analysis of coverage models in camera sensor networks has received sustained attention [25,26,27,28,29]. Mavrinac [30] analyzed available coverage models in the context of specific applications for CSNs and derived a generalized geometric model. In view of the intrinsic nature of camera sensors, the binary sector coverage model has been widely adopted in existing works. Guvensan et al. [31] listed the properties of camera sensor nodes and summarized five enhancement principles [5,15,32,33,34,35]. Existing research has focused on two general solutions: adjusting the working directions of the sensor nodes and scheduling the sleep durations of redundant sensors.

The previous works often focus on the coverage enhancement by scheduling the sensor nodes themselves. When researchers started to take the object’s face direction into consideration, the coverage issue in camera sensor networks became more complicated and exciting. Wang and Cao [36] introduced the concept of full-view coverage and derived the conditions for guaranteeing the full-view coverage of a point or a sub-region. Moreover, they obtained the sensor density needed for full-view coverage both in random deployment and triangular lattice-based implementation. Inspired by [36], we study the sufficient and necessary condition of the full-view point coverage under the constraint with a minimum number of camera sensors.

Hu et al. [18] defined a centralized parameter, the equivalent sensing radius (ESR), to evaluate the critical requirement for asymptotic full-view coverage under uniform deployment in heterogeneous CSNs. They investigated the impact of mobility and presented four mobility models. They were also the first to take the heterogeneity of camera sensors into consideration, since camera sensors may come from different manufacturers and have different sensing parameters, or experience different obstruction by the terrain. The ESR of the four mobility models is derived with a statistical method that assumes the number of camera nodes tends toward infinity.

Similar to [18], Wu [37] defined a centralized parameter, the critical sensing area (CSA), to evaluate the critical requirement for asymptotic full-view coverage in heterogeneous networks. They investigated the full-view coverage problem under uniform deployment and Poisson deployment. A statistical method was again adopted to compute the probability that all points in a dense grid meet the conditions of full-view coverage. The work of [18,37] helps in understanding the relationship between full-view coverage quality and the parameters of camera nodes, such as the sensing radius, the number of sensors and the effective angle.

He and co-workers [19] studied how to select the minimum number of camera sensors to guarantee the full-view coverage of a given region. Inspired by the work of [36], they adopted a dimension reduction method to decrease the complexity of the FACM problem. The resulting minimum-number full-view point coverage problem is still NP-hard. They solved it with two approximation algorithms, i.e., the greedy algorithm (GA) and the set-cover-based algorithm (SCA).

Full-view coverage was extended from the 2-D plane to the 3-D surface by Manoufali et al. [38], whose algorithm has high complexity because it takes the effect of realistic sea surface movement into consideration. They also proposed a method to improve target detection and recognition in the presence of poor link quality using cooperative transmission with low power consumption. Similar work was carried out by Xiao [39], who studied the coverage problem in directional sensor networks for 3-D terrains and designed a surface coverage algorithm. The sensing direction of the camera nodes was uniformly set as vertically downward, which helps give the profile map of the area covered by nodes in the ideal case. Although 3-D surface coverage in CSNs is a recently emerging topic, it is not the focus of this paper.

As one branch of full-view coverage, the face-view barrier coverage problem has also been presented in the recent literature [13,40,41,42]. Ma [13] defined the minimum camera barrier coverage problem (MCBCP) for achieving full-view coverage in the barrier field. Their main contribution can be summarized as modeling the weighted directed graph and proposing an optimal algorithm for the MCBCP. In contrast to the barrier coverage described in [13], Yu [40] paid more attention to the intruders' trajectory along the barrier and their head rotation angles. They derived a rigorous probability bound for intruder detection via a feasible deployment pattern, which guarantees that the intruders' faces are always detected in the barrier.

Zhao [41] considered power conservation and addressed the efficient sensor deployment (ESD) problem and the energy-efficient barrier coverage (EEBC) problem. They solved this optimization problem and proposed a scheduling algorithm to prolong the network lifetime. Tao [42] summarized the sensing properties and behaviors of directional sensors in the majority of studies on barrier coverage and classified the existing research results. Barrier coverage is a special case of area coverage in which the covered field has the shape of a fence. The main difference between barrier-based coverage and area-based coverage is the geometric shape of the monitored target, which is determined by the modeling of the specific application; here we focus on full-view area coverage. In this paper, we derive the necessary and sufficient conditions of the FACM problem from a novel perspective, and propose DPA and LNSA for two implementation strategies. We compare our conclusions mainly with [19,36], whose results are most relevant to our work. Table 1 compares our work with the related works.

Table 1.

Comparison between our work and the past related works.

| Reference | Algorithm | Primary Objective | Main Contribution |
|---|---|---|---|
| [36] | FURCA | Full-view area coverage | The safe region and unsafe region |
| [18] | - | Finding critical condition of full-view area coverage | Equivalent sensing radius (ESR) |
| [38] | - | Full-view area coverage | Model the realistic sea surface |
| [19] | DASH | Full-view area coverage | Dimension reduction |
| [37] | - | Finding critical condition of full-view area coverage | Critical sensing area (CSA) |
| This work | DPA/LNSA | Full-view area coverage | Maximum full-view neighborhood coverage |

3. Problem Description and Assumptions

3.1. Network Model

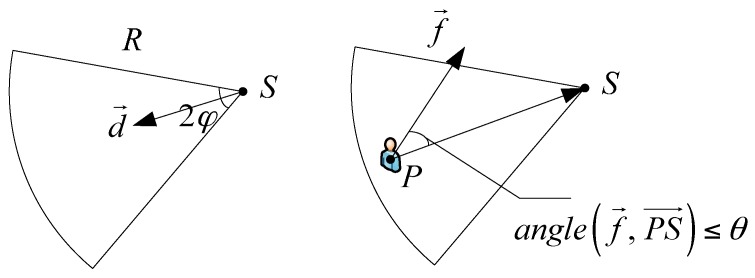

As mentioned in the Introduction, the sector sensing model has been adopted in many studies [13,15,18,19,24,26,36,37,38]. We adopt the same model, described as follows. A set of camera nodes is deployed over an obstacle-free field, and the nodes are assumed to be homogeneous, with the same sensing radius and angle of view. The region sensed by a camera node is represented as a 4-tuple consisting of the position S, the working direction, the sensing radius R, and the angle of view. The intruder is represented as a 2-tuple consisting of the location P and the facial direction.

The sensing capability for detecting the intruder is illustrated in Figure 1. A predefined parameter, called the effective angle, limits recognition: if the angle between the object’s facing direction and the vector from the intruder to the sensor exceeds the effective angle, we assume that the sensor cannot recognize the intruder, even if the location P lies in the field of view Sc.

Figure 1.

The sensing model of the camera node.

The relationship of the sensor position S, the sensor working direction , and the intruder location P can be determined by an Intruder in Sector (IIS) test. We state the intruder passes the IIS test if and only if it meets two conditions as follows:

The first condition is that the intruder location P falls within the field of view Sc sensed by the camera node.

The second condition is that the angle between the object’s facing direction and the camera’s working direction is less than or equal to the effective angle.
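The two conditions can be sketched in code. The following is a minimal illustration, not the authors' implementation. The names `phi` (half of the angle of view) and `theta` (effective angle) are assumed here because the original symbols are not available, directions are passed as 2-D vectors, and the second condition is interpreted as in the description of Figure 1, i.e., using the angle between the facing direction and the vector from the intruder to the sensor.

```python
import math

def angle_between(u, v):
    """Unsigned angle in radians between two non-zero 2-D vectors."""
    dot = u[0] * v[0] + u[1] * v[1]
    cross = u[0] * v[1] - u[1] * v[0]
    return abs(math.atan2(cross, dot))

def passes_iis_test(S, f, R, phi, P, d, theta):
    """Intruder in Sector (IIS) test for one camera node (illustrative sketch).

    S, P  : sensor position and intruder location as (x, y)
    f, d  : working direction and facing direction as 2-D vectors
    R     : sensing radius
    phi   : half of the angle of view (assumed symbol)
    theta : effective angle (assumed symbol)
    """
    SP = (P[0] - S[0], P[1] - S[1])
    dist = math.hypot(SP[0], SP[1])
    # Condition 1a: P must be within sensing range.
    # A co-located intruder has no defined bearing and is treated as not covered.
    if dist == 0 or dist > R:
        return False
    # Condition 1b: P must lie inside the angular span of the field of view.
    if angle_between(f, SP) > phi:
        return False
    # Condition 2 (interpretation): the facing direction must be within
    # theta of the vector pointing from the intruder to the sensor.
    PS = (-SP[0], -SP[1])
    return angle_between(d, PS) <= theta
```

For instance, a camera at the origin looking along the positive x-axis recognizes an intruder at (5, 0) who faces the camera, but not one who faces away.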

To facilitate reading, we provide in Table 2 a glossary of the variables that will be utilized throughout this paper.

Table 2.

Notation used in this paper.

| Symbol | Meaning |
|---|---|
| S | Camera node set, S = {S1, S2, …, Sn}, where Si also represents the position of the i-th camera node |
| P | Location of the intruder |
| R | Sensing radius of the camera node |
| r | Radius of the maximum full-view neighborhood coverage disk |
| r′ | Radius of the trajectory for nodes around P |
| | One-half of camera’s angle of view |
| | Effective angle |
| l | Grid length in the triangle lattice-based deployment |
| λ | Sensor density for achieving full-view area coverage |
| Sc | Camera’s field of view (FoV) |
| D(P, r) | Disk with r as the radius and P as the center |
| Tk | The k-th sector |
| | Working direction of the i-th camera node |
| | Facial direction of the intruder |
| | Start line for dividing C(P, R) into T1, T2, …, Tk |

3.2. Definition and Problem Description

To capture the facial image of the intruder regardless of the facing direction, the concept of full-view coverage has been proposed for CSNs. Building on the definitions of full-view coverage in previous works, we give two principal definitions and state the main full-view coverage problem addressed in the following sections.

Definition 1 (View coverage and full-view point coverage).

If, for an intruder P with a given facial direction in the ROI, there is a camera node S for which the intruder passes the IIS test, we say that the intruder is view covered by node S. The intruder is full-view covered if and only if, for every possible facial direction, there is a node for which the intruder passes the IIS test.
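Definition 1 quantifies over all facing directions, but it can be checked with a finite computation. The sketch below rests on an observation that is not stated in the text: if the test uses the angle between the facing direction and the vector from P to each sensor, then P is full-view covered exactly when the largest angular gap between neighboring covering sensors, seen from P, is at most twice the effective angle. The names `phi` and `theta` and the representation of working directions as angles in radians are assumptions for illustration.

```python
import math

TWO_PI = 2 * math.pi

def covers(S, f_angle, R, phi, P):
    """True if P lies in the sector-shaped field of view of the sensor at S."""
    dx, dy = P[0] - S[0], P[1] - S[1]
    dist = math.hypot(dx, dy)
    if dist == 0 or dist > R:
        return False
    # Smallest absolute difference between the bearing of P and the working direction.
    diff = abs((math.atan2(dy, dx) - f_angle + math.pi) % TWO_PI - math.pi)
    return diff <= phi + 1e-12

def is_full_view_covered(P, sensors, R, phi, theta):
    """sensors: list of ((x, y), working_direction_angle) pairs."""
    # Bearings, seen from P, of the sensors whose field of view contains P.
    bearings = sorted(
        math.atan2(S[1] - P[1], S[0] - P[0]) % TWO_PI
        for S, f_angle in sensors
        if covers(S, f_angle, R, phi, P)
    )
    if not bearings:
        return False
    gaps = [b - a for a, b in zip(bearings, bearings[1:])]
    gaps.append(bearings[0] + TWO_PI - bearings[-1])  # wrap-around gap
    # A facing direction in the middle of a gap is gap/2 away from both neighbors.
    return max(gaps) <= 2 * theta + 1e-9
```

With four sensors placed symmetrically around P and all facing it, the point is full-view covered for an effective angle of 45° but not for 36°.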

View coverage is a more general coverage model that has drawn attention in [21,40]. It is often used under constrained conditions, such as barrier coverage, where intruders pass through a bounded region from the entrance to the exit along continuous paths. It is not the focus of our paper.

Definition 2 (Full-view area coverage problem).

Without loss of generality, the intruder mentioned above can be seen as a point. Each point of the region is also important in the area-based coverage. A region is full-view covered if every point in it is full-view covered.

With the definition mentioned above, the FACM problem can be stated as follows:

Given: n homogeneous camera sensors are deployed in a ROI A.

Problem: Find a set of camera sensors such that the ROI is full-view covered by a minimum number of sensors. The problem can be further elaborated for two situations. Under the deterministic deployment strategy, it amounts to computing the critical density and the positions of sensors for achieving full-view area coverage. Under the random deployment strategy, the work mainly focuses on adjusting the sensors' working directions and scheduling the sleep durations of redundant sensors.

3.3. Preliminaries

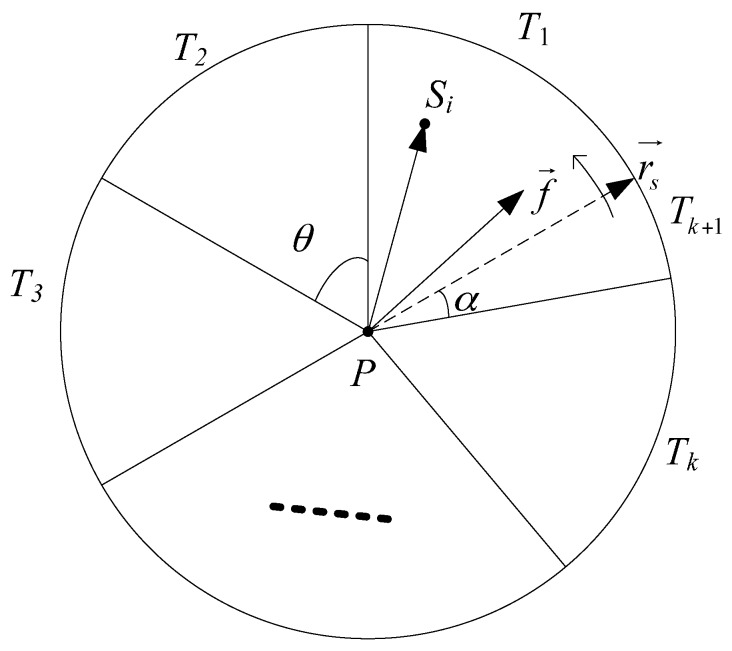

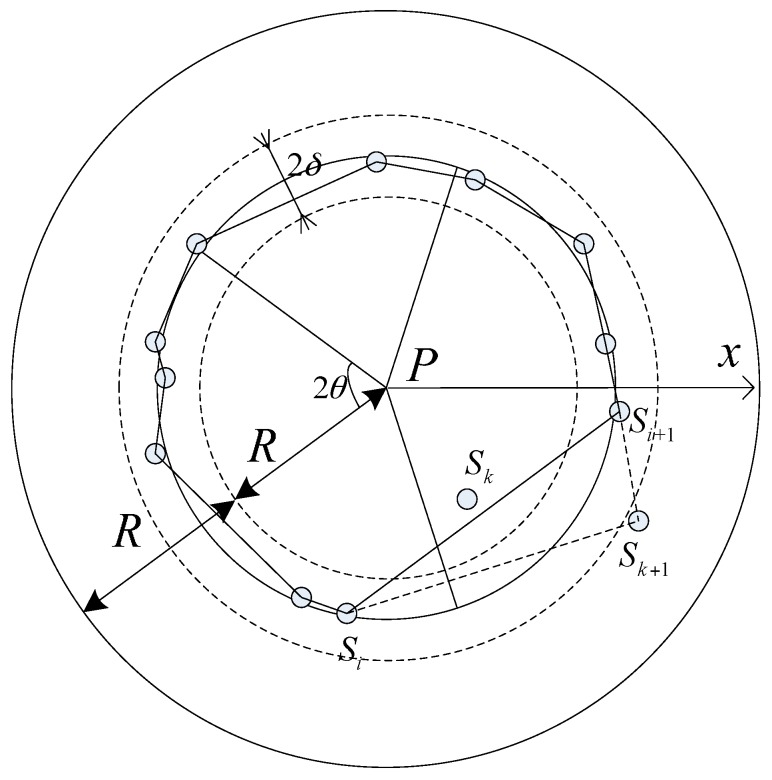

First, we develop the sufficient condition for full-view coverage of an arbitrary point P in the region A. As Figure 2 shows, D(P, R) is a disk centered at P with radius R. Without loss of generality, denote the dashed radius as the start line. Rotate the start line anti-clockwise by a fixed angle to obtain the sectors T1, T2, …, Tk. If , then between T1 and Tk there may be one more sector Tk+1 with the interior angle . In the following explanation of the sufficient condition, we agree on .

Figure 2.

The sufficient condition for full view coverage of an arbitrary point P.

The sufficient condition for a point P to be full-view covered is that, for every j = 1, 2, …, k + 1, at least one node Sj is located in Tj and makes P pass the IIS test. The proof is simple [37]. Whichever way the intruder faces, the facial direction lies within one sector, or within two sectors simultaneously when it falls on a shared boundary. By the sufficient condition, each sector contains at least one node S that covers point P, so the effective-angle condition holds. Tk+1 is treated in the same way as the other sectors. If properly deployed, one node per sector is enough to achieve full-view coverage of a point.

Deploying one node in every such sector of D(P, R) is redundant for achieving full-view coverage of point P. We therefore turn to the sufficient and necessary condition of full-view point coverage with a minimum number of camera nodes. Rotate the start line anti-clockwise with to get sector (shown in Figure 3), . If , then between and there may be one more sector with the interior angle . In the following explanation of sufficient and necessary condition, we agree on .

Figure 3.

The sufficient and necessary condition of full-view point coverage with a minimum number of camera nodes.

The sufficient and necessary condition of full-view point coverage with a minimum number of camera nodes is that at least one camera node lies on the angle bisector of sector Tj for every j = 1, 2, …, k + 1, which can be proved by contradiction.

Given that the nodes S1, S2, …, Sk, Sk+1 lie on the angle bisectors of the sectors and cover P, we analyze the full-view coverage with the different facing direction in the neighboring sector , , . If Sk+1 does not lie on the angle bisector of the sector , must hold. This contradicts the condition for view coverage. Therefore, the node must lie on the angle bisector of the sector. If properly deployed, must be the minimum number of sensors to achieve full-view coverage of a point.
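As a rough numerical companion to this argument, the helper below counts the nodes needed around a single point. It is a sketch under an assumption that is not taken from the text: each node on a bisector serves facing directions within the effective angle on either side, so the nodes must close the full circle with angular gaps of at most twice the effective angle. The symbol `theta` for the effective angle (in radians) is assumed.

```python
import math

def min_nodes_for_point(theta):
    """Smallest number of nodes whose angular gaps around a point can all be <= 2*theta.

    theta is the effective angle in radians (assumed symbol), with 0 < theta <= pi.
    """
    if not 0 < theta <= math.pi:
        raise ValueError("theta must lie in (0, pi]")
    # k gaps of at most 2*theta must add up to 2*pi, so k >= pi / theta.
    # The small tolerance guards against floating-point noise when pi/theta is an integer.
    return max(1, math.ceil(math.pi / theta - 1e-9))
```

For example, an effective angle of 36° gives five nodes and an effective angle of 45° gives four.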

4. Density and Location Estimation for Deterministic Deployment

4.1. Dimension Reduction and Analysis

Deterministic deployment places nodes at planned, predetermined locations. When using deterministic deployment, it is often desirable to find a placement pattern in which the nodes' locations can be found easily. It is also desirable that such a pattern achieves the lowest node density (the number of nodes per unit area) for complete coverage.

In the previous section, we obtained the minimum number of nodes required for full-view point coverage. It is infeasible to verify the full-view coverage of every point in a continuous domain. Hence, we propose a method that relates the coverage of a point to the coverage of its neighborhood. If a point is full-view covered by a set of camera nodes, the natural question is whether the nearby points around it are also full-view covered by the same set.

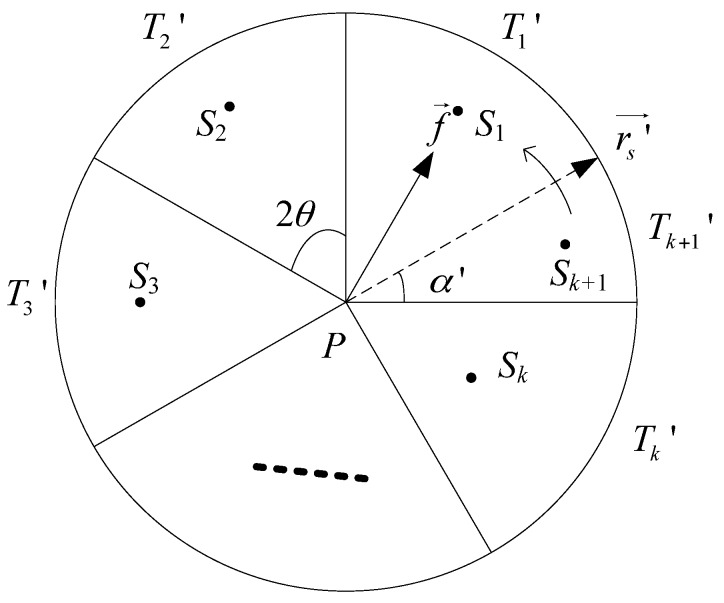

First of all, considering the geometric property of the coverage model, the maximum overlapped coverage region in the sector is approximated by the inscribed circle of the sector. The maximum overlapped coverage area is a disk with P as the center and r as the radius.

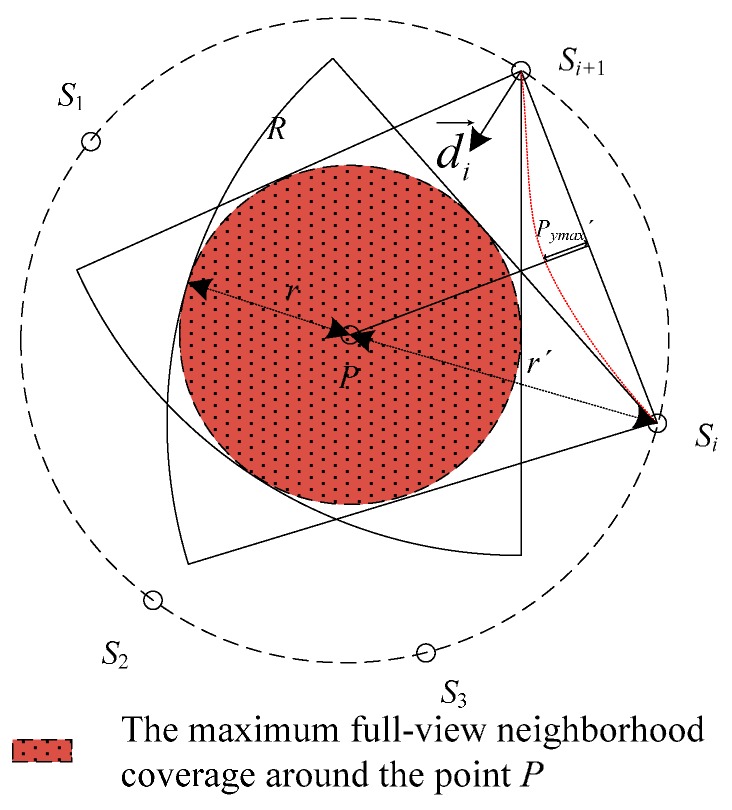

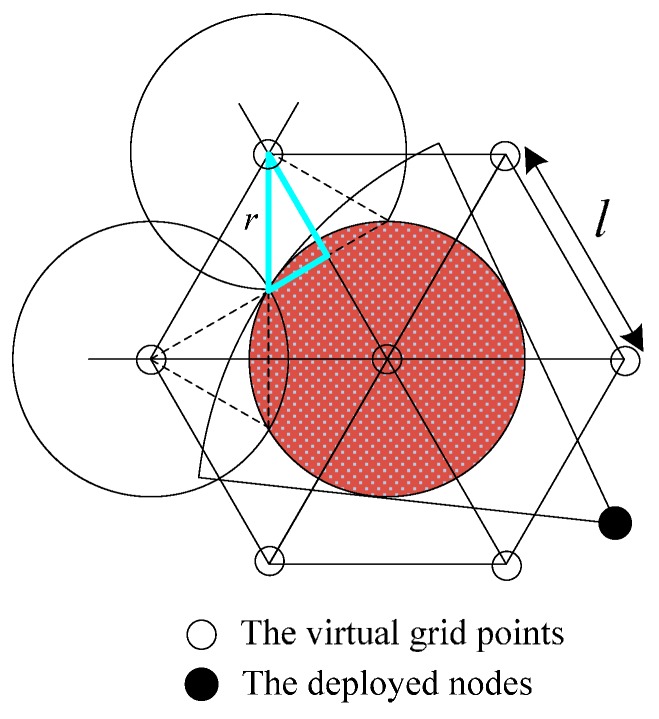

Besides, the trajectory of node positions can also be described as a circle with P as the center and r′ as the radius, where , . The full-view neighborhood coverage and the trajectory of nodes shown in Figure 4 are denoted D(P, r) and C(P, r′) under a specified condition.

Figure 4.

Full-view neighborhood coverage and the trajectory of nodes.

Combined with the necessary and sufficient conditions of full-view point coverage with the minimum number of nodes, we can estimate the optimal nodes' positions under deterministic deployment. By default, the working direction of each node points towards the point P. The first working direction starts from −180°, and each subsequent working direction is rotated clockwise by a fixed angle. If the location P (Px, Py) is known, the position of Sk can be described as in Equation (1):

| (1) |
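The placement rule described above can be illustrated with a short sketch. It is not a reproduction of Equation (1). It assumes that the trajectory radius `r_prime` is supplied by the caller, that `theta` denotes the effective angle in radians, and that the nodes are spaced evenly (a step of 2π/k, which never exceeds 2θ) rather than by a fixed step with a smaller final sector.

```python
import math

def ring_positions(P, r_prime, theta):
    """Place nodes on the circle C(P, r_prime), each facing P (illustrative sketch).

    Returns a list of ((x, y), f) pairs, where f is the working direction in radians.
    The first working direction is -180 degrees and the following ones rotate clockwise.
    """
    k = max(1, math.ceil(math.pi / theta - 1e-9))  # number of nodes (assumed count)
    step = 2 * math.pi / k                         # even spacing, at most 2*theta
    nodes = []
    for j in range(k):
        f = -math.pi - j * step                    # clockwise rotation decreases the angle
        # A node that looks along f towards P sits on the opposite side of P.
        x = P[0] - r_prime * math.cos(f)
        y = P[1] - r_prime * math.sin(f)
        nodes.append(((x, y), f))
    return nodes
```

With P at the origin, `r_prime = 2` and an effective angle of 45°, the sketch returns four nodes, the first at (2, 0) and the second at (0, −2).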

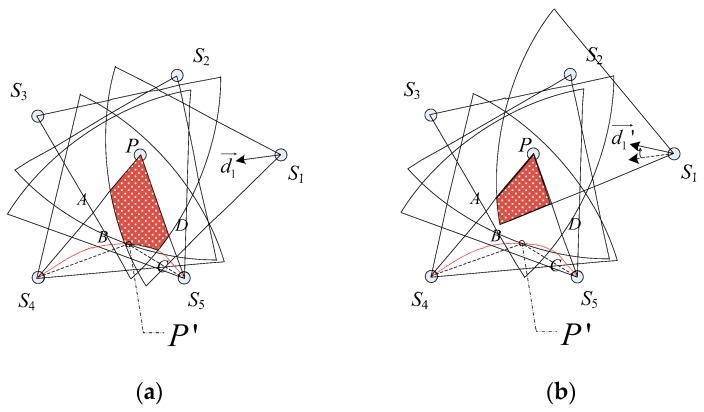

However, whether the disk is full-view covered also depends on the distance between adjacent nodes. To facilitate the analysis and design, we illustrate the constraint for full-view neighborhood coverage with the following example. As shown in Figure 5a, P is full-view covered by S1, S2, S3, S4, S5. By geometric symmetry, we focus on the overlapped coverage region in .

Figure 5.

The example for interpreting the influence of the critical trajectory P′.

Theorem.

With the same group of nodes, the maximum full-view neighborhood coverage around the point can be guaranteed if and only if .

Proof:

If P′ can also be full-view covered by the same set of nodes, P′ must locate in the overlapped coverage region and is less than or equal to the effective angle. We establish the Cartesian coordinates system with S4S5 as the horizontal axis and the perpendicular bisector of S4S5 as the vertical axis to draw the critical trajectory of P′, where the critical condition is . The relationship of and is shown in Equation (2) as follows:

(2)

Taking the derivative of Equation (2), we have the extremum , where . The fact that P′ may intersect with the overlapped coverage region is determined by the working directions and the distance between the adjacent nodes. As shown in Figure 5b, as we rotate the working direction of S1 slightly, the critical trajectory of P′ has no influence on the full-view area coverage. The working directions all orientate towards the point P by default. Then we have the condition that for maximizing the full-view neighborhood coverage around P. We can simplify Equation (2) to Equation (3):

| (3) |

| (4) |

Substituting Equation (4) into Equation (3), we have Equation (5):

| (5) |

For , Equation (5) can be rewritten as (6):

| (6) |

Therefore, we have the constraint condition for achieving maximum full-view neighborhood coverage around P. □

At this point, the relationship between a point and its neighborhood has been established. Based on this, the full-view area coverage problem has been converted into the point coverage problem. Here the point is the center of the full-view covered disk. In the traditional disk model, triangle lattice-based deployment is proved to be optimal regarding sensor density [43]. In the next part, we establish the triangle lattice-based virtual grid points set for solving the full-view point coverage.

4.2. Deployment Pattern Algorithm for Deterministic Implementation

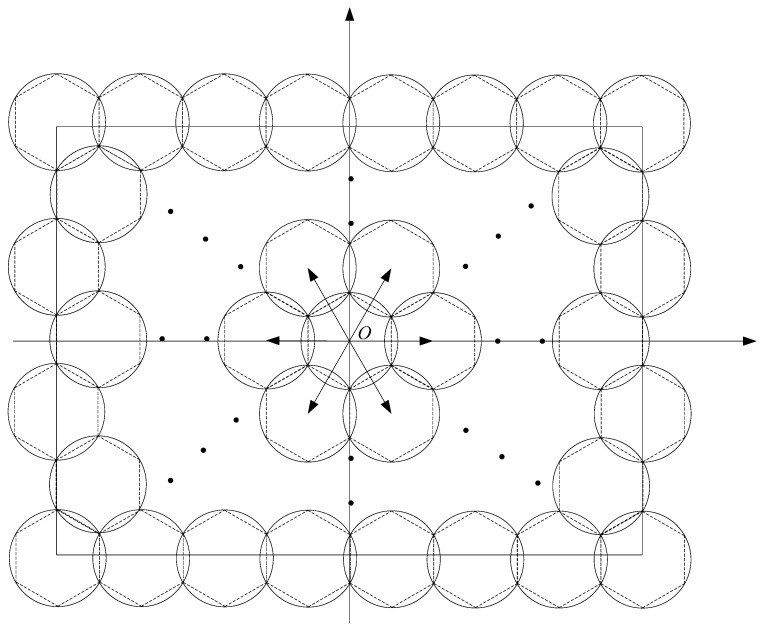

In this section, we construct a deployment pattern algorithm for full-view area coverage based on the triangle lattice, which is shown in Figure 6. The grid length l of the triangle is critical. Given the sensing radius R and the effective angle, we calculate the best l such that every point in ROI is full-view covered.

Figure 6.

The diffusion method for ascertaining the positions of camera nodes.

To avoid the influence of boundary effects, we build the triangle lattice-based virtual grid by spreading from the center of the ROI (as shown in Figure 6), so that the locations of the nodes around the center (x0, y0) of the ROI are calculated first. The deployment may be carried out by a team of robots [44,45].

The position of the triangle lattice-based virtual grid point for the other full-view disk can be represented as , where , , .

As shown in Figure 7, the optimal grid length can be computed by the Pythagorean theorem. Thus, we get the optimal grid length l in Equation (7). Since each virtual grid point requires the minimum number of nodes for full-view point coverage, the node density equals that number times the density of the triangle lattice-based virtual grid points. The sensor density can be computed by Equation (8). Here, is the area of the regular hexagon.

Figure 7.

The triangle lattice-based seamless stitching method.

| (7) |

| (8) |
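The density relation can be illustrated numerically. The sketch below does not reproduce Equations (7) and (8). It takes the grid length `l` and the number of nodes per grid point as inputs, and it relies on a standard fact about triangular lattices: each grid point owns one regular hexagonal cell of area (√3/2)·l². The function names are hypothetical.

```python
import math

def hexagon_area(l):
    """Area of the regular hexagonal cell around one point of a triangular lattice with spacing l."""
    return math.sqrt(3) / 2 * l * l

def sensor_density(l, nodes_per_point):
    """Nodes per unit area when every grid point is served by nodes_per_point nodes."""
    return nodes_per_point / hexagon_area(l)

def estimated_node_count(area, l, nodes_per_point):
    """Rough node count for a region of the given area, ignoring boundary effects."""
    return math.ceil(area * sensor_density(l, nodes_per_point))
```

For a grid length of 2 m and four nodes per grid point, this gives about 1.15 nodes per square meter.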

Deployment Pattern Algorithm: The inputs are the region A and the parameters of the camera nodes. First, we establish the set of virtual grid points (x, y) by spreading from the center of the ROI, using the optimal grid length l. Then we solve the FACM problem by deploying nodes around each virtual grid point in turn. Around a virtual grid point P, the nodes are deployed at a fixed angular interval on the trajectory shown in Figure 4, which ensures the maximum full-view neighborhood coverage. The process is given as pseudocode in Algorithm 1.

| Algorithm 1. The Deployment Pattern Algorithm (DPA) |
|---|
| INPUT: the ROI A; the parameters of camera nodes |
| OUTPUT: the optimal positions of camera nodes S |
| 1: Establish the virtual grid points set (x, y) from the center (x0, y0) of ROI |
| 2: For each point P in (x, y) |
| 3: Deploy nodes around P with every |
| 4: End |
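Algorithm 1 can be sketched in a few lines. The version below is an illustration under stated assumptions, not the authors' implementation: the grid length `l` and the trajectory radius `r_prime` are passed in rather than computed from Equations (4) to (7), `theta` denotes the effective angle in radians, the ROI is an axis-aligned rectangle with its lower-left corner at the origin, the nodes are spaced evenly around each grid point, and only grid points inside the ROI are kept.

```python
import math

def deployment_pattern(width, height, l, r_prime, theta):
    """Return planned node positions for a width x height ROI (illustrative sketch)."""
    x0, y0 = width / 2, height / 2                 # spread from the center of the ROI
    k = max(1, math.ceil(math.pi / theta - 1e-9))  # nodes per grid point (assumed count)
    row_height = math.sqrt(3) / 2 * l              # distance between lattice rows
    n_rows = math.ceil(y0 / row_height)
    n_cols = math.ceil(x0 / l) + 1
    nodes = []
    for j in range(-n_rows, n_rows + 1):
        for i in range(-n_cols, n_cols + 1):
            # Odd rows are shifted by half a grid length to form the triangular lattice.
            px = x0 + (i + 0.5 * (j % 2)) * l
            py = y0 + j * row_height
            if not (0 <= px <= width and 0 <= py <= height):
                continue
            # Deploy k nodes at equal angular intervals on the circle around (px, py).
            for m in range(k):
                a = 2 * math.pi * m / k
                nodes.append((px + r_prime * math.cos(a), py + r_prime * math.sin(a)))
    return nodes
```

For a 10 m × 10 m ROI with `l = 5`, `r_prime = 1` and an effective angle of 45°, the sketch keeps seven grid points and returns 28 node positions. In practice the grid would be extended beyond the ROI boundary, as the simulation setup does.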

5. LNSA for Full-View Area Coverage in Random Deployment

Previous works concentrate on deriving the lower bound of the critical density at which the ROI is full-view covered under random deployment. The critical density is derived on the premise that the number of nodes tends to infinity. Here we make the same assumption, namely that redundant nodes are distributed in the ROI. Our goal is to find a minimum set of camera nodes that guarantees full-view coverage of the ROI. Based on the results of Section 4, a node scheduling algorithm is proposed to set each camera node to sleeping mode or working mode. Because the scheduling process in later rounds of a round-robin schedule is analogous to that of the first round, we focus on the first round in this paper.

As shown in Figure 8, all nodes are initially set to a light sleep mode in which they can only send GPS signals. The algorithm starts once the node position messages have been confirmed and transmitted to the remote console. Nodes randomly deployed near the boundary of the region may be sparser than those in the interior, so the region is less likely to be covered as required near the border. The conventional way to avoid this is to deploy nodes in an expanded region. For ease of analysis, the activation proceeds by diffusion from the center of the ROI. According to Section 4, the ROI can be divided into regular hexagons centered on the virtual grid points, and the scheduling algorithm is run in each hexagon separately.

Figure 8.

The local neighboring nodes in the disk .

As mentioned above, the maximum full-view neighborhood coverage around the point P can be approximated by the disk D(P, r), and the position trajectory of the nodes is the circle C(P, r′). For each regular hexagon, the FACM problem in the corresponding disk can be solved by selecting the camera nodes around the circle C(P, r′).

After the ROI is divided, each regular hexagon Hi should be full-view covered by a minimum number of camera nodes. We schedule the nodes in the disk with Pi as the center and 2R as the radius. The selected nodes are deleted from the node list. A polar coordinate system with Pi as the pole and the ray Px as the polar axis is introduced, because the key parameters here are the angles between adjacent nodes and the distances from Pi to the nodes. In this section, the position of node Si is represented by its polar coordinates.

It is assumed that n nodes S = {S1, S2, …, Sn} exist on the disk. A threshold parameter is introduced to filter out the nodes far from the critical trajectory, and the nodes that satisfy the threshold are retained first. Sorting the retained nodes by increasing polar angle gives the new node set S′c = {Sc1, Sc2, …, Sck}. If the angle between two adjacent nodes, such as Scj and Scj+1, is too large, the regular hexagon Hi may not be full-view covered. The algorithm therefore picks spare nodes from the remaining nodes to join S′c based on the nearest-polar-radius principle: a node whose polar radius is closer to R is more likely to be selected.

The node set S′c around the critical trajectory is complete when no pair of adjacent nodes violates this angular condition. Next, the algorithm chooses nodes from S′c at a fixed angular interval as the awakening nodes. The algorithm is given as pseudocode in Algorithm 2.

| Algorithm 2. The Local Neighbor-optimal Selecting Algorithm (LNSA) |
|---|
| INPUT: the position information of all nodes S = {S1, S2, …, Sn} in . |
| OUTPUT: the selected nodes Sb = {S′b1, S′b2, …, S′bi, …, S′bh}. |
| 1: Divide ROI into many regular hexagons H1, H2, …, Hi, …, Ha with the virtual grid points as the center, build the polar coordinate system in Hi |
| 2: Perform the following steps in each regular hexagon Hi |
| 3: Refine the nodes in Hi with and constitute S′c = {Sc1, Sc2, …, Sck} |
| 4: for i = 1: k |
| 5: If |
| 6: Pick the spare nodes from to join S′c |
| 7: i = i − 1 |
| 8: End |
| 9: End |
| 10: Choose nodes every as the awakening nodes S′bi = {Sb1, Sb2, …, Sbm} in S′c. |
| 11: End |
| 12: Sb = {S′b1, S′b2, …, S′bi, …, S′bh} |
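The selection inside one hexagon can be sketched as follows. This is a simplified reading of Algorithm 2, not the authors' implementation. It assumes that the threshold test is |ρ − r′| ≤ `eps`, that the allowed gap between adjacent nodes is 2θ, that spare nodes are ranked by closeness to `r_prime`, that the awakening nodes are chosen greedily, and that working directions can be adjusted afterwards. The names `lnsa_select`, `eps`, `r_prime` and `theta` are hypothetical.

```python
import math

TWO_PI = 2 * math.pi

def max_gap(angles):
    """Largest gap between neighboring angles on the circle, as (gap, start_angle)."""
    a = sorted(angles)
    return max((hi - lo, lo) for lo, hi in zip(a, a[1:] + [a[0] + TWO_PI]))

def lnsa_select(nodes, r_prime, eps, theta):
    """nodes: (rho, alpha) polar coordinates around one grid point, alpha in [0, 2*pi).

    Returns the awakened nodes, or None if the angular condition cannot be met.
    """
    limit = 2 * theta + 1e-9
    # Step 3: keep the nodes close to the critical trajectory.
    chosen = [n for n in nodes if abs(n[0] - r_prime) <= eps]
    spare = [n for n in nodes if abs(n[0] - r_prime) > eps]
    # Steps 4 to 9: fill oversized gaps with the spare node nearest to the trajectory.
    while True:
        if chosen:
            gap, start = max_gap([alpha for _, alpha in chosen])
            if gap <= limit:
                break
            inside = [n for n in spare if 0 < (n[1] - start) % TWO_PI < gap]
        else:
            inside = list(spare)
        if not inside:
            return None
        best = min(inside, key=lambda n: abs(n[0] - r_prime))
        chosen.append(best)
        spare.remove(best)
    # Step 10: wake nodes greedily, always jumping to the farthest node within the limit.
    chosen.sort(key=lambda n: n[1])
    awake, i = [chosen[0]], 0
    while chosen[0][1] + TWO_PI - awake[-1][1] > limit:
        j = i + 1  # the next node is always reachable because all gaps are within the limit
        while j + 1 < len(chosen) and chosen[j + 1][1] - awake[-1][1] <= limit:
            j += 1
        awake.append(chosen[j])
        i = j
    return awake
```

The greedy pass depends on the starting node, so it yields a small awake set rather than a provably minimum one.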

6. Performance Evaluation

In this section, we perform simulations to determine how many nodes are needed to achieve full-view coverage of the ROI. We consider two cases. In the first case, the problem is how to design a deployment pattern such that the ROI is full-view covered by the minimum number of camera nodes. In the second case, redundant camera nodes are distributed in the ROI, and the problem is how to select the minimum number of nodes that achieves full-view area coverage. To the best of our knowledge, there are few studies on full-view area coverage with a minimum number of nodes, especially on computing the exact positions of camera nodes under deterministic deployment. Therefore, we mainly compare our deployment pattern algorithm (DPA) and node scheduling algorithm (LNSA) with [19,36].

As the authors did not name their algorithms in their papers, we use Acronym Creator (http://acronymcreator.net/) to name the algorithms selected for comparison. The algorithm presented by Wang [36] is named FURCA (for FUll-view Region Coverage Algorithm), and the algorithm presented by He [19] is named DASH (for Dimension reduction And near-optimal Solutions).

6.1. Simulation Configuration

The ROI is a 100 m × 100 m square field. We use two settings for the sensing radius: R = 5 m and R = 15 m. In both settings, we deploy the nodes in an extended region of (100 + 2R) m × (100 + 2R) m to avoid the boundary effect. The angle of view is fixed to be , and the effective angle is also fixed to be respectively.
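The random deployment over the extended region can be sketched as follows. This is an illustrative sketch only: the function name `deploy_random`, the seeded generator and the uniformly random orientation of each camera are assumptions, since the text specifies only the size of the region.

```python
import math
import random

def deploy_random(n, side=100.0, R=15.0, seed=None):
    """Scatter n camera nodes uniformly over the extended region.

    Each node is (x, y, orientation). The ROI is [0, side] x [0, side]; the
    extended region adds a margin of R on every side, so each edge measures
    side + 2R. The uniformly random orientation is an assumption of this sketch.
    """
    rng = random.Random(seed)
    lo, hi = -R, side + R
    return [
        (rng.uniform(lo, hi), rng.uniform(lo, hi), rng.uniform(0.0, 2 * math.pi))
        for _ in range(n)
    ]

# Example: 500 nodes for the R = 15 m setting.
nodes = deploy_random(500, R=15.0, seed=1)
```

Fixing the seed makes a single run repeatable; averaging over several seeds mirrors the repeated runs used in the simulations.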

We first show the relationship between the minimum number of nodes needed for full-view area coverage and the effective angle in the deterministic implementation, for different sensing radii and angles of view.

In the second step of the simulation, we vary the number of nodes from 500 to 4500 for R = 15 m with different angles of view to observe the relationship between the critical density and the percentage of full-view area coverage. The number of selected nodes for achieving full-view area coverage is reported in Section 6.3. We run each configuration ten times and take the mean to smooth out the variation caused by random deployment.

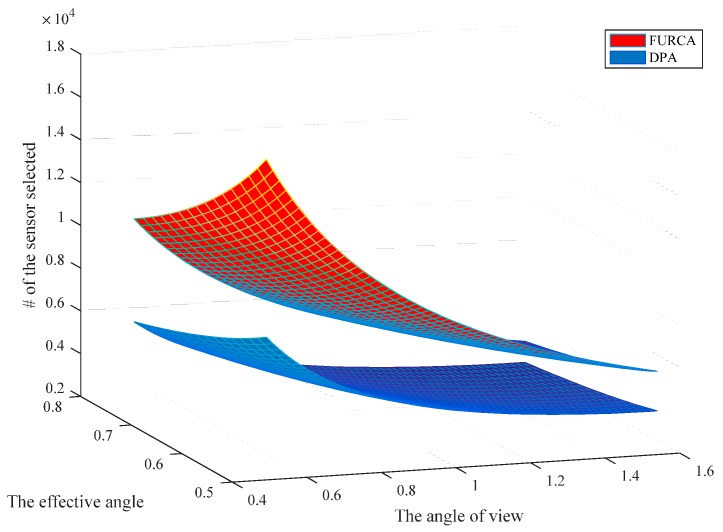

6.2. Simulations Analysis of DPA

We investigate the impact of the parameters on the performance of the two deterministic deployment patterns by varying the angle of view and the effective angle, respectively. The simulation results with R = 5 m are shown in Figure 9, which gives an overview of the minimum number of sensors for achieving full-view area coverage. The number of sensors needed in our pattern is strictly smaller than in the deployment pattern of FURCA. DPA selects fewer nodes than FURCA because we deduce the best grid length l under a more specific condition. FURCA obtains the best grid length l by contradiction, without considering full-view coverage, whereas DPA establishes the internal relation between full-view point coverage and full-view neighborhood coverage.

Figure 9.

A visual comparison of the two deployment patterns.

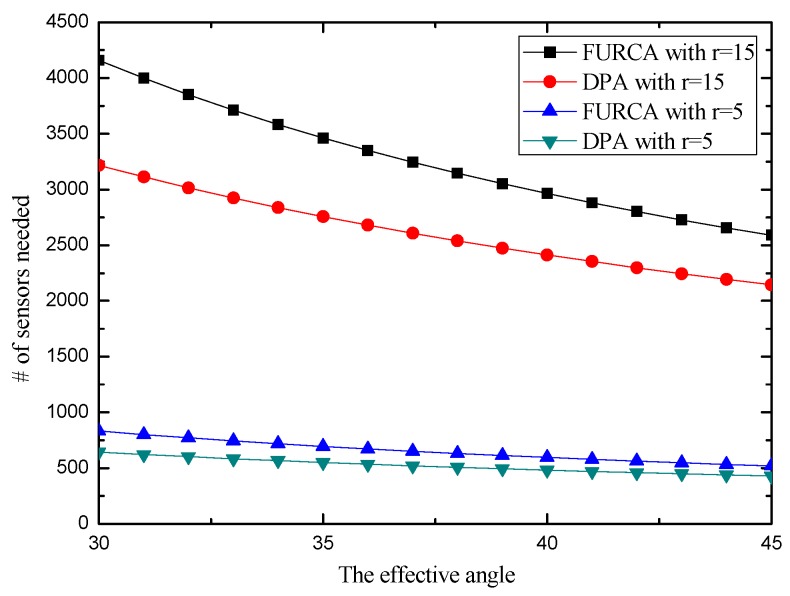

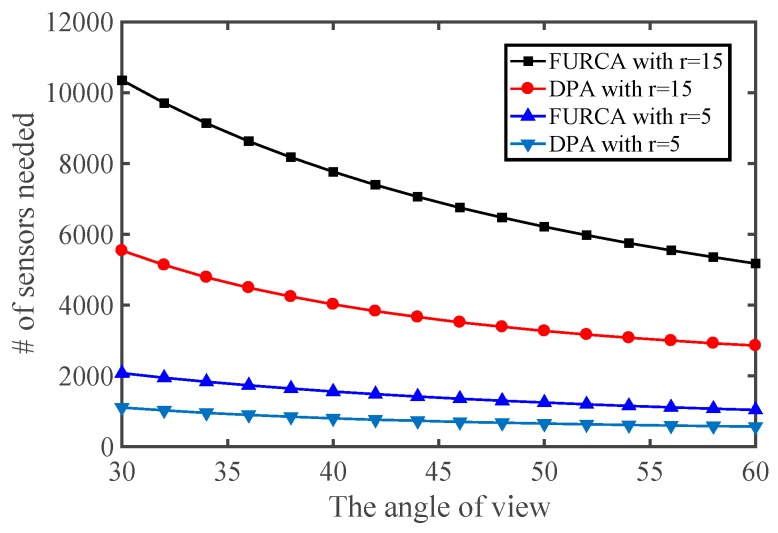

In addition, the number of sensors required decreases non-linearly with both the effective angle and the angle of view, as shown in Figure 10 and Figure 11. This is because the triangle-lattice-based seamless stitching method in the proposed approach takes more targeted actions. However, the number of sensors required may be similar for the two deployment patterns when the sensing radius is small enough, which indicates that the benefit of the proposed approach is limited by the sensing radius.
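To make the role of the grid length concrete, the sketch below generates the points of a triangular lattice with spacing l inside a rectangle. It is only an illustration: the function name `triangular_lattice` is hypothetical, and the optimal value of l and the number of cameras placed around each grid point come from the derivation in the paper and are not reproduced here.

```python
import math

def triangular_lattice(width, height, l):
    """Return the triangular-lattice points with spacing l that lie inside
    the rectangle [0, width] x [0, height].

    Rows are l * sqrt(3) / 2 apart and every other row is shifted by l / 2,
    so each interior point has six neighbours at distance l and its cell
    is a regular hexagon.
    """
    row_h = l * math.sqrt(3) / 2
    points = []
    rows = int(height // row_h) + 1
    for r in range(rows):
        x0 = l / 2 if r % 2 else 0.0
        cols = int((width - x0) // l) + 1
        for c in range(cols):
            points.append((x0 + c * l, r * row_h))
    return points

# Example: compare the number of grid points for two grid lengths.
coarse = triangular_lattice(100.0, 100.0, 10.0)
fine = triangular_lattice(100.0, 100.0, 5.0)
```

Because the number of grid points grows roughly with the inverse square of l, any parameter that lets the grid length increase reduces the number of required sensors non-linearly.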

Figure 10.

The number of sensors required vs. the effective angle.

Figure 11.

The number of sensors required vs. the angle of view.

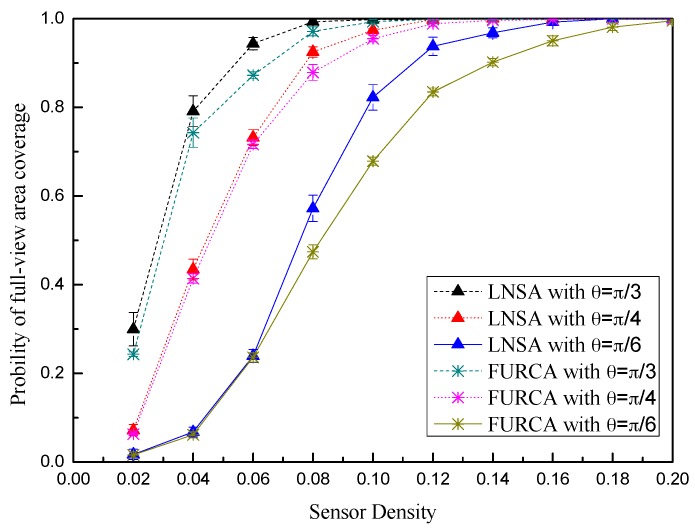

6.3. Performance Evaluation of LNSA

We define the probability of full-view area coverage for the ROI to describe the convergence performance of the scheduling algorithm. We assume that a sufficient number of virtual targets ta (the number of all targets) are located in the ROI, of which ts (the number of targets meeting the conditions) are full-view covered by the deployed sensors. The probability of full-view area coverage can be computed as p = ts/ta. Evidently, p approaches the real probability of full-view area coverage for the ROI as ta tends to infinity. The Intruder in Sector (IIS) test is used to compute the full-view coverage ratio. We perform the IIS test at each virtual grid point and build the binary coverage array .

| (9) |

| (10) |
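The coverage ratio described above (the number of full-view-covered virtual targets divided by the number of all virtual targets) can be estimated with a sketch like the following. The code makes several assumptions: `in_sector` is a simple sector-membership check standing in for the IIS test, `fov` is taken as the full angular width of the camera's field of view, and a point is treated as full-view covered when the largest angular gap between the cameras that cover it, as seen from the point, does not exceed twice the effective angle `theta`. The function names are hypothetical.

```python
import math

def in_sector(sensor, p, R, fov):
    """Sector-membership check: is point p inside the sensing sector of sensor?

    sensor is (x, y, orientation); fov is the full angular width (assumed).
    """
    sx, sy, orient = sensor
    dx, dy = p[0] - sx, p[1] - sy
    if math.hypot(dx, dy) > R:
        return False
    if dx == 0 and dy == 0:
        return True
    # Signed difference between the direction to p and the orientation, in [-pi, pi).
    diff = (math.atan2(dy, dx) - orient + math.pi) % (2 * math.pi) - math.pi
    return abs(diff) <= fov / 2

def full_view_covered(p, sensors, R, fov, theta):
    """True if every facing direction at p is within theta of some covering camera."""
    angles = sorted(
        math.atan2(s[1] - p[1], s[0] - p[0])
        for s in sensors
        if (s[0], s[1]) != (p[0], p[1]) and in_sector(s, p, R, fov)
    )
    if not angles:
        return False
    gaps = [b - a for a, b in zip(angles, angles[1:])]
    gaps.append(angles[0] + 2 * math.pi - angles[-1])  # wrap-around gap
    return max(gaps) <= 2 * theta

def coverage_ratio(points, sensors, R, fov, theta):
    """Build the binary coverage array over the virtual targets and average it."""
    covered = [1 if full_view_covered(p, sensors, R, fov, theta) else 0 for p in points]
    return sum(covered) / len(points)
```

With more virtual targets the ratio becomes a finer estimate of the covered fraction of the ROI, at the cost of one coverage check per target.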

We now investigate the convergence performance of the node scheduling algorithms, namely FURCA and LNSA. The results are shown as error bars in Figure 12. The convergence rate of LNSA is faster than that of FURCA during the middle and later periods. This is because our scheduling algorithm can also adjust the working direction towards the triangle lattice points. With the growth of , the probability of full-view area coverage for the same node density also increases for both scheduling algorithms.

Figure 12.

The probability of full-view area coverage vs. the sensor density.

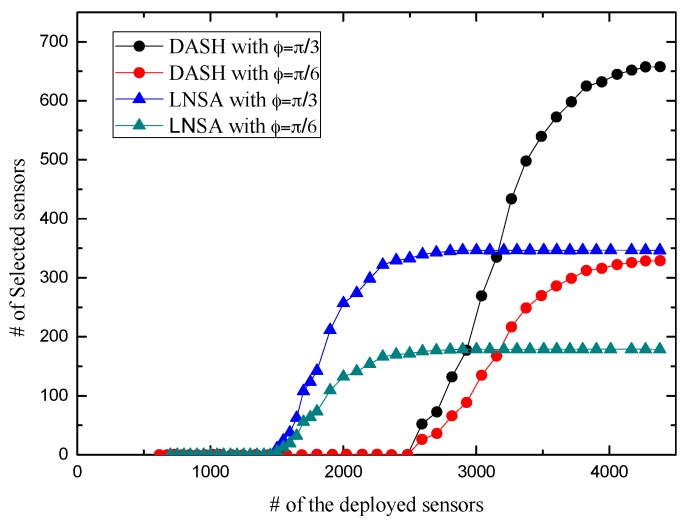

Then we investigate the relationship between the number of selected nodes and the number of deployed sensors. Here the sensing radius is fixed to R = 15 m. The results are shown in Figure 13. The initial part of each curve in Figure 13 stays at zero because the scheduling algorithms start to work only when the node density is high enough to ensure that the whole region is full-view covered. In addition, our scheduling algorithm always starts the activating process earlier. Moreover, the number of sensors selected by our scheduling algorithm is smaller than that of DASH when the activating process finishes. This is because our approach imposes restrictions on the working direction.

Figure 13.

The number of selected sensors vs. the number of deployed sensors.

In the random deployment, FURCA derives the probability that a given region A is full-view covered, which indicates the full-view coverage status of the ROI. It guarantees that the ROI is full-view covered only by increasing the number of nodes, rather than by adjusting the working directions or scheduling the sleeping nodes. Based on the work of FURCA, DASH reduces the dimension of full-view area coverage by means of the full-view ensuring set (FVES). DASH guarantees that the ROI is full-view covered while scheduling the sleeping nodes to prolong the lifetime of the network. Because LNSA both adjusts the working directions and schedules the sleeping nodes, it achieves better simulation results than FURCA and DASH.

7. Conclusions

In this paper, we studied the coverage issue of camera sensor networks. Different from the traditional binary sector coverage model, we are interested in the facing direction of the target in the ROI. A model called full-view area coverage was introduced in this paper. With this model, we proposed an efficient deployment pattern algorithm, DPA, for the deterministic implementation, which achieves full-view area coverage with a minimum number of camera nodes. We also derived a node scheduling algorithm, LNSA, which aims to reduce the redundancy in a random uniform implementation. We compared the performance of our deployment pattern with FURCA in terms of the effective angle. The scheduling algorithms of DASH and LNSA were compared in terms of the convergence performance and the number of selected sensors. Extensive simulation results validate the feasibility of the proposed algorithms. As full-view coverage is an emerging research field, further study may focus on improving the sensing model and on verifying the algorithms in specific applications.

Acknowledgments

This work is sponsored by the National Natural Science Foundation of P.R. China (61572260, 61373138, 61672296), Jiangsu Natural Science Foundation for Excellent Young Scholar (BK20160089), Natural Science Foundation of Jiangsu Province (No. BK20140888), A Project Funded by the Priority Academic Program Development of Jiangsu Higher Education Institutions (PAPD), Jiangsu Collaborative Innovation Center on Atmospheric Environment and Equipment Technology (CICAEET) and Innovation Project for Postgraduate of Jiangsu Province (SJZZ16_0147, SJZZ16_0149, SJZZ16_015, KYLX15_0842).

Author Contributions

Peng-Fei Wu proposed the main ideas of the DPA/LNSA algorithms, while Fu Xiao designed and conducted the simulations. Chao Sha and Hai-ping Huang analyzed the data and results and verified the theory. Ru-Chuan Wang and Nai-Xue Xiong served as advisors to the above authors and gave suggestions on simulations, performance evaluation and writing. The manuscript was written jointly by the six authors. All authors have read and approved the final manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1. Xiong N., Huang X., Cheng H., Wan Z. Energy-efficient algorithm for broadcasting in ad hoc wireless sensor networks. Sensors. 2013;13:4922–4946. doi: 10.3390/s130404922.

- 2. Xiong N., Liu R.W., Liang M., Wu D., Liu Z., Wu H. Effective alternating direction optimization methods for sparsity-constrained blind image deblurring. Sensors. 2017;17:174. doi: 10.3390/s17010174.

- 3. Xiao F., Xie X., Jiang Z., Sun L., Wang R. Utility-aware data transmission scheme for delay tolerant networks. Peer-to-Peer Netw. Appl. 2016;9:936–944. doi: 10.1007/s12083-015-0354-y.

- 4. Xiao F., Jiang Z., Xie X., Sun L., Wang R. An energy-efficient data transmission protocol for mobile crowd sensing. Peer-to-Peer Netw. Appl. 2017;10:510–518. doi: 10.1007/s12083-016-0497-5.

- 5. Ai J., Abouzeid A.A. Coverage by directional sensors in randomly deployed wireless sensor networks. J. Comb. Optim. 2006;11:21–41. doi: 10.1007/s10878-006-5975-x.

- 6. Malek S.M.B., Sadik M.M., Rahman A. On balanced k-coverage in visual sensor networks. J. Netw. Comput. Appl. 2016;72:72–86. doi: 10.1016/j.jnca.2016.06.011.

- 7. Mini S., Udgata S.K., Sabat S.L. Sensor deployment and scheduling for target coverage problem in wireless sensor networks. IEEE Sens. J. 2014;14:636–644. doi: 10.1109/JSEN.2013.2286332.

- 8. Karakaya M., Qi H. Coverage estimation for crowded targets in visual sensor networks. ACM Trans. Sens. Netw. (TOSN) 2012;8:26. doi: 10.1145/2240092.2240100.

- 9. Younis M., Akkaya K. Strategies and techniques for node placement in wireless sensor networks: A survey. Ad Hoc Netw. 2008;6:621–655. doi: 10.1016/j.adhoc.2007.05.003.

- 10. Costa D.G., Silva I., Guedes L.A. Optimal Sensing Redundancy for Multiple Perspectives of Targets in Wireless Visual Sensor Networks; Proceedings of the IEEE 13th International Conference on Industrial Informatics (INDIN); Cambridge, UK. 22–24 July 2015.

- 11. Osais Y.E., St-Hilaire M., Fei R.Y. Directional sensor placement with optimal sensing range, field of view and orientation. Mob. Netw. Appl. 2010;15:216–225. doi: 10.1007/s11036-009-0179-0.

- 12. Cai Y., Lou W., Li M. Target-oriented scheduling in directional sensor networks; Proceedings of the 26th IEEE International Conference on Computer Communications; Barcelona, Spain. 6–12 May 2007; pp. 1550–1558.

- 13. Ma H., Yang M., Li D., Hong Y., Chen W. Minimum camera barrier coverage in wireless camera sensor networks; Proceedings of the 31st Annual IEEE International Conference on Computer Communications (IEEE INFOCOM 2012); Orlando, FL, USA. 25–30 March 2012; pp. 217–225.

- 14. Zhang Y., Sun X., Wang B. Efficient algorithm for k-barrier coverage based on integer linear programming. China Commun. 2016;13:16–23. doi: 10.1109/CC.2016.7489970.

- 15. Aghdasi H.S., Abbaspour M. Energy efficient area coverage by evolutionary camera node scheduling algorithms in visual sensor networks. Soft Comput. 2015;20:1191–1202. doi: 10.1007/s00500-014-1582-4.

- 16. Yang Q., He S., Li J., Chen J., Sun Y. Energy-efficient probabilistic area coverage in wireless sensor networks. IEEE Trans. Veh. Technol. 2015;64:367–377. doi: 10.1109/TVT.2014.2300181.

- 17. Wang Y.-C., Hsu S.-E. Deploying r&d sensors to monitor heterogeneous objects and accomplish temporal coverage. Pervasive Mob. Comput. 2015;21:30–46.

- 18. Hu Y., Wang X., Gan X. Critical sensing range for mobile heterogeneous camera sensor networks; Proceedings of the 33rd Annual IEEE International Conference on Computer Communications (INFOCOM’14); Toronto, ON, Canada. 27 April–2 May 2014; pp. 970–978.

- 19. He S., Shin D.-H., Zhang J., Chen J., Sun Y. Full-view area coverage in camera sensor networks: Dimension reduction and near-optimal solutions. IEEE Trans. Veh. Technol. 2015;65:7448–7461. doi: 10.1109/TVT.2015.2498281.

- 20. Singh A., Rossi A. A genetic algorithm based exact approach for lifetime maximization of directional sensor networks. Ad Hoc Netw. 2013;11:1006–1021. doi: 10.1016/j.adhoc.2012.11.004.

- 21. Newell A., Akkaya K., Yildiz E. Providing multi-perspective event coverage in wireless multimedia sensor networks; Proceedings of the 35th IEEE Conference on Local Computer Networks (LCN); Denver, CO, USA. 10–14 October 2010; pp. 464–471.

- 22. Ng S.C., Mao G., Anderson B.D. Critical density for connectivity in 2d and 3d wireless multi-hop networks. IEEE Trans. Wirel. Commun. 2013;12:1512–1523. doi: 10.1109/TWC.2013.021213.112130.

- 23. Khanjary M., Sabaei M., Meybodi M.R. Critical density for coverage and connectivity in two-dimensional fixed-orientation directional sensor networks using continuum percolation. J. Netw. Comput. Appl. 2015;57:169–181. doi: 10.1016/j.jnca.2015.08.010.

- 24. Wang J., Zhang X. 3d percolation theory-based exposure-path prevention for optimal power-coverage tradeoff in clustered wireless camera sensor networks; Proceedings of the IEEE Global Communications Conference (IEEE Globecom 2014); Austin, TX, USA. 8–12 December 2014; pp. 305–310.

- 25. Almalkawi I.T., Guerrero Zapata M., Al-Karaki J.N., Morillo-Pozo J. Wireless multimedia sensor networks: Current trends and future directions. Sensors. 2010;10:6662–6717. doi: 10.3390/s100706662.

- 26. Wu C.-H., Chung Y.-C. A polygon model for wireless sensor network deployment with directional sensing areas. Sensors. 2009;9:9998–10022. doi: 10.3390/s91209998.

- 27. Costa D.G., Guedes L.A. The coverage problem in video-based wireless sensor networks: A survey. Sensors. 2010;10:8215–8247. doi: 10.3390/s100908215.

- 28. Costa D.G., Silva I., Guedes L.A., Vasques F., Portugal P. Availability issues in wireless visual sensor networks. Sensors. 2014;14:2795–2821. doi: 10.3390/s140202795.

- 29. Li P., Yu X., Xu H., Qian J., Dong L., Nie H. Research on secure localization model based on trust valuation in wireless sensor networks. Secur. Commun. Netw. 2017;2017:6102780. doi: 10.1155/2017/6102780.

- 30. Mavrinac A., Chen X. Modeling coverage in camera networks: A survey. Int. J. Comput. Vis. 2013;101:205–226. doi: 10.1007/s11263-012-0587-7.

- 31. Guvensan M.A., Yavuz A.G. On coverage issues in directional sensor networks: A survey. Ad Hoc Netw. 2011;9:1238–1255. doi: 10.1016/j.adhoc.2011.02.003.

- 32. Cheng W., Li S., Liao X., Changxiang S., Chen H. Maximal coverage scheduling in randomly deployed directional sensor networks; Proceedings of the 2007 International Conference on Parallel Processing Workshops (ICPPW 2007); Xi’an, China. 10–14 September 2007; p. 68.

- 33. Makhoul A., Saadi R., Pham C. Adaptive scheduling of wireless video sensor nodes for surveillance applications; Proceedings of the 4th ACM Workshop on Performance Monitoring and Measurement of Heterogeneous Wireless and Wired Networks; Tenerife, Spain. 26 October 2009; pp. 54–60.

- 34. Gil J.-M., Han Y.-H. A target coverage scheduling scheme based on genetic algorithms in directional sensor networks. Sensors. 2011;11:1888–1906. doi: 10.3390/s110201888.

- 35. Sung T.-W., Yang C.-S. Voronoi-based coverage improvement approach for wireless directional sensor networks. J. Netw. Comput. Appl. 2014;39:202–213. doi: 10.1016/j.jnca.2013.07.003.

- 36. Wang Y., Cao G. On full-view coverage in camera sensor networks; Proceedings of the 30th IEEE International Conference on Computer Communications (IEEE INFOCOM 2011); Shanghai, China. 10–15 April 2011; pp. 1781–1789.

- 37. Wu Y., Wang X. Achieving full view coverage with randomly-deployed heterogeneous camera sensors; Proceedings of the 2012 IEEE 32nd International Conference on Distributed Computing Systems; Macau, China. 18–21 June 2012; pp. 556–565.

- 38. Manoufali M., Kong P.-Y., Jimaa S. Effect of realistic sea surface movements in achieving full-view coverage camera sensor network. Int. J. Commun. Syst. 2016;29:1091–1115. doi: 10.1002/dac.3077.

- 39. Xiao F., Yang X., Yang M., Sun L., Wang R., Yang P. Surface coverage algorithm in directional sensor networks for three-dimensional complex terrains. Tsinghua Sci. Technol. 2016;21:397–406. doi: 10.1109/TST.2016.7536717.

- 40. Yu Z., Yang F., Teng J., Champion A.C., Xuan D. Local face-view barrier coverage in camera sensor networks; Proceedings of the 2015 IEEE Conference on Computer Communications (INFOCOM); Hong Kong, China. 26 April–1 May 2015; pp. 684–692.

- 41. Zhao L., Bai G., Jiang Y., Shen H., Tang Z. Optimal deployment and scheduling with directional sensors for energy-efficient barrier coverage. Int. J. Distrib. Sens. Netw. 2014;2014:596983. doi: 10.1155/2014/596983.

- 42. Tao D., Wu T.-Y. A survey on barrier coverage problem in directional sensor networks. IEEE Sens. J. 2015;15:876–885.

- 43. Kershner R. The number of circles covering a set. Am. J. Math. 1939;61:665–671. doi: 10.2307/2371320.

- 44. Li X., Fletcher G., Nayak A., Stojmenovic I. Placing sensors for area coverage in a complex environment by a team of robots. ACM Trans. Sens. Netw. 2014;11:3. doi: 10.1145/2632149.

- 45. Li F., Luo J., Wang W., He Y. Autonomous deployment for load balancing k-surface coverage in sensor networks. IEEE Trans. Wirel. Commun. 2015;14:279–293. doi: 10.1109/TWC.2014.2341585.