Abstract

Motion tracking based on commercial inertial measurements units (IMUs) has been widely studied in the latter years as it is a cost-effective enabling technology for those applications in which motion tracking based on optical technologies is unsuitable. This measurement method has a high impact in human performance assessment and human-robot interaction. IMU motion tracking systems are indeed self-contained and wearable, allowing for long-lasting tracking of the user motion in situated environments. After a survey on IMU-based human tracking, five techniques for motion reconstruction were selected and compared to reconstruct a human arm motion. IMU based estimation was matched against motion tracking based on the Vicon marker-based motion tracking system considered as ground truth. Results show that all but one of the selected models perform similarly (about 35 mm average position estimation error).

Keywords: kinematics, sensor fusion, motion tracking, inertial measurements units

1. Introduction

In recent years, the development of sensing technologies and sensor signals processing techniques paved the way for the use of wearable sensors to monitor human status and performance. These developments resulted in the need for managing efficiently such networks, as explained by Fortino et al. in [1]. Wearable body sensor networks (BSN) are nowadays used in several applications which include healthcare, ergonomics, sport and entertainment, (see [2] for a review on the argument). A field that has benefited from the research on BSN is motion tracking.

Motion tracking has received the attention and the effort of generations of researchers. There are several techniques that allow for motion reconstruction based on different information sources. One of the biggest challenges in motion tracking is having an accurate estimation with non-invasive sensors and non limited workspace. In the recent years, a new generation of inertial measurement units (IMUs) based on micro-electro-mechanical systems (MEMS) technology has given a new surge to motion tracking research. These devices are cost-effective and can be successfully used for accurate, non-invasive and portable motion tracking. The big interest in these devices is mainly motivated by the fact that they overcome many issues raised by optical systems and mechanical trackers. IMUs indeed do not suffer from occlusions and have theoretically unlimited workspace compared to optical motion tracking systems, and despite the accuracy of mechanical trackers, IMUs are much more affordable and far less intrusive.

Inertial units-based motion tracking has been used for navigation since decades ago. Initially developed for the attitude estimation of aerial vehicles (see [3,4]), it is nowadays used for other unmanned vehicles tracking (see [5,6,7,8]). In recent years, IMUs are often used to track human motion thus becoming an enabling technology for several applications which include localization, human-robot interaction, rehabilitation and ergonomics. This development is also witnessed by the rise of companies that sell IMUs and IMU-based systems e.g., Invensense (Invensense, San Jose, CA, USA), Trivisio (Trivisio, Trier, Germany), Microstrain (Lord Microstrain, Willistone, VT, USA) and XSens (Xsens Technologies B.V., Enschede, The Netherlands) and the amount of start-ups which target IMU-based systems. The products that they sell often include attitude reconstruction, which is provided as output to the user, or even full body motion reconstruction.

IMUs are typically composed of accelerometers and gyroscopes. These signals are used in different manners according to the applications as it will be explained in Section 2 (as an example see [9]). In most cases IMUs are used to reconstruct the pose or at least either the position or the orientation of the body they are attached to. The naive use of IMUs is the integration of the sensors’ signals over time to estimate velocity, position and orientation. Since both accelerometer and gyroscope measurements suffer from time varying biases and noises, this approach leads to a quick drift of the estimation that is unreliable after a few seconds. Therefore, researchers started investigating both algorithmic and hardware solutions to solve the drift issue. In many cases IMUs are equipped with a three axis magnetometer (e.g., [10,11,12,13]), we refer to these sensors as mIMUs. The magnetometer measures the local (earth) magnetic field that is used as an earth-fixed reference for the current estimation of the IMU orientation. Other solutions include exploiting ultrasonic sensors [14], GPS [15], ultra wide bands (UWB) [16], cameras [17], and magnetic field generated by actuated coils [18].

Motivated through the variety of approaches to IMU-based human motion tracking (IHMT), the goal of this article is introducing the reader to IHMT. In the first part (Section 2) this article introduces the reader to IHMT main issues, then it presents a survey of the methods that have been used so far to tackle the IHMT problem. In the second part (Section 3) , the article includes a tutorial section which explains in details five selected methods for upper limb tracking. This part aims at both making concrete some of the main issues presented in the survey and letting the reader familiarize with IHMT methods. These methods are finally compared to each other in Section 4. The latter section concludes the presented work.

2. IMU-Based Human Motion Tracking

2.1. Reviews on Wearable Motion Tracking

In recent decades, emerging technologies allowed for a huge step forward in human motion tracking. Exoskeletons, vision-based systems as well as motion capture based on inertial systems have become commonly used firstly in laboratory settings and nowadays in everyday life. Several reviews described human motion capture under different perspectives with a focus on the application [19] and/or on technical aspects [20]. For example, Patel in [21] proposes a review of wearable sensors for human monitoring in which a great emphasis is laid on applications and on the enabling technology. Their survey moves from sensing technology including motion capture based on inertial sensors to applications, including health monitoring, wellness and safety. Similarly, Shull et al. [22] review wearable sensing systems applied to gait analysis in clinical settings. They group methods according to the sensors that are used, subject populations and measured parameters. In a recent review paper [23], Gravina et al. discuss issues and advantages of body sensor networks, then they focus on their applications to human activity recognition. Wong et al. [24] review applications of wearable sensors to biomechanics. Differently from [21], they focus on the devices and the sensors that are used for motion tracking. Moreover, they explain advantages and disadvantages of the different methods. In the recent review [9] a specific focus is laid on wearable inertial sensors. The authors analyze several medical applications of wearable inertial motion tracking, including gait analysis, stabilometry, instrumented clinical tests, upper body mobility assessment, daily-life activity monitoring and tremor assessment. For all applications, they report the methods proposed to tackle those. Interestingly, the selection of the applications provides a grouping of methods that reflects different complexity levels in using IMU sensors: for example stabilometry requires simpler algorithms and fewer sensors than upper body mobility assessment. Harle et al. [25] provide a technical review of the issues arising and methods used in IMU-based pedestrian localization. Similarly, Yang et al. in [26] target localization, reviewing sensors as well as methods with their respective sources of errors. In [27] the focus is on walking speed estimation. Sabatini in [28] proposes a review of (m)IMU-based tracking systems for 3D attitude estimation focusing on the technical aspects of IHMT methods. In particular, sensor fusion techniques and related issues are explained in details including techniques to estimate and tune filter parameters. In [29] the authors compare six algorithms for the estimation of a smartphone’s attitude. The goal of their analysis was to compare algorithms in order to select the most suited one for pedestrian localization even when magnetometer’s signal is disturbed. They test such algorithms indoor while artificially distorting the magnetic field by means of magnets. Although authors claim that two of the selected methods perform better than the others, reported average orientation errors differ less than half of the error’s standard deviation.

The survey presented in the following subsections has a different scope and target with respect to previously published surveys: both applications and technical aspects are taken into account. Moreover, it explicitly encompasses the evolution of algorithms from the estimate of one rigid body pose to full body tracking. All reported methods are listed and characterized in the Appendix A (see Table A1). The table summarizes relevant information related to application, target, kinematic representation, sensor fusion technique and validation of each method and may help the reader in the following sections.

2.2. Introductory Concepts to IHMT Methods

In the late 1990s, technological advancements made inertial systems a candidate alternative to optical ones for online human motion capture. Moving from the findings in aerial vehicles navigation and accelerometry techniques, researchers started tackling the problem of human motion tracking based on (m)IMUs [30,31]. In this, major issues are: How to represent and constrain human limbs kinematics, how to fuse measurements from multiple sensors to track these with minimal drift, also considering erroneous measurements e.g., due to magnetic disturbances, and how to make the relation between technical sensor and (anatomical) body segment frames through calibration, when several (m)IMUs are involved. Moreover, ways of and measures for assessing and comparing different methods are of major interest for evaluation purposes. In the following, these major aspects and preliminary concepts of IHMT are described in more detail in order to prepare the reader for Section 2.3, which provides the IHMT survey.

2.2.1. Kinematics and Constraints

All reported techniques used for inertial body motion tracking assume that human limbs are rigid bodies. Therefore, from the point of view of kinematics IHMT reduces to determining the attitude and/or the position of these limbs. When more limbs are involved a kinematic chain can be modeled. The first multi-limb models used this kinematic chain in a second step after estimating the attitude of each limb separately (e.g., [10,32]). However, the kinematic chain can be better exploited by providing joint constraints that can be added to the sensor fusion algorithm to make the estimation more consistent with human motion.

Kinematics Representation

While the position of a limb in space is typically represented through a Cartesian frame, several possibilities are proposed in literature to represent its orientation. Euler angles are a common choice since they have an intuitive physical meaning, which is the case of the roll-pitch-yaw representation of a vehicle attitude (e.g., [15,33,34,35]) or the identification of the roll-pitch-yaw angles with the anatomical degrees of freedoms (DoFs) of human limbs (e.g., [36,37] ). The major drawback of such a solution (and of all the three parameters attitude representations) is the existence of singularities that may occur in certain configurations, as in the case of the gimbal lock. This is why many methods use quaternions for the estimation (e.g., [38,39,40,41,42]). Quaternions allow for a computationally efficient and singularity-free attitude representation and are often used for the attitude representation of a single body. However, besides their non-minimal rotation parametrization, they do not provide direct access to anatomical or functional angles. Factorized quaternion algorithms [43,44] decompose attitude quaternions in order to identify physically meaningful rotations (e.g., about the articulations’ axes), thus allowing for a simpler implementation of constraints. Kinematic chains are indeed often parametrized either by means of Euler angles (e.g., [45]) or using the Denavit Hartenberg (DH) [46] convention (e.g., [47,48,49]) to represent the relative joint angles.

Constraints

Kinematic constraints play a fundamental role in the whole estimation process, as they can prevent the relative displacement of the body segments to drift over time. Kinematic constraints are sometimes embedded in the sensor fusion algorithm to provide more consistent solutions (e.g., [45,47,50,51]). In other cases the constraints are applied after the sensor fusion algorithm has provided the attitude estimation (e.g., [39]). Since the estimated quantities are often random variables, applying limits to those variables in a consistent way is a delicate issue. This is shown by Simon in [52], where different approaches to solve the issue are reviewed. In [44] quaternions are used to represent the attitudes of the human arm limbs. Anatomical constraints such as joint angle limits and limitations of the limb motions are implemented by posing the attitude estimation as an optimization problem in which the estimated attitudes have to respect the constraints and at the same time optimize the consistency with the accelerometer measurements. Also in [45,47,48,49,50,53,54], the elbow is constrained to reduced DoFs.

In contrast to the kinematic chain model, free segments models have been proposed in [51,55]. These representations keep some of the anatomical constraints as hard constraints, e.g., the connectivity between successive limbs [55], while others are relaxed (implemented as soft constraints) in order to reduce the effects of errors related to their implementation. For example, the elbow is not a perfect hinge joint as its axis is not fixed when the ulna moves with respect to the humerus [56]. Moreover, localizing the elbow axes is a further source of error [48]. On the one hand, detrimental effects of such model errors may be mitigated by the free segments approach, on the other hand this may lead to unwanted behaviours, such as measuring elbow abduction, which is not physically plausible.

2.2.2. Sensor Fusion Technique

Signals gathered from accelerometers, gyroscopes, magnetometers and other sensors need suitable sensor fusion techniques to derive useful information about the attitudes and poses of the limbs. Note, sensor-to-limb calibration parameters are here assumed known. Calibration methods are addressed below. In most of the sensor fusion methods the unknown variables (e.g., Euler angles) are estimated in discrete settings at successive time steps based on previous time step estimation and current time step measures. Two main approaches are adopted for sensor fusion: complementary filters (CF) and Kalman filters (KF). More complex approaches include particle filters (PF) and optimization-based approaches, which are now suitable for online IHMT.

Complementary filters exploit the different frequency spectra of gyroscope, accelerometer and magnetometer signals. Many of the methods that exploit the CF approach (e.g., [10,30,57,58,59,60]) apply the following steps: The accelerometer signal is used to estimate the acceleration due to gravity in the sensor frame. This and the magnetometer signal are then used to obtain a “low frequency” estimate of the sensor’s attitude. At the same time an estimate of this attitude is dynamically calculated from the gyroscope measurement. These two estimates are then fused in the complementary filter. Some methods assume the body acceleration being negligible, thus modeling the accelerometer signal as a noisy measurement of acceleration due to gravity (e.g., [30,60]). In other methods body acceleration is calculated and removed from the accelerometer signal (e.g., [58]). Acceleration due to gravity and measured local earth magnetic field are then used to estimate the pose of the sensor in an earth-fixed frame (world frame). Some methods (e.g., [10]) simply implement the TRIAD algorithm [61] or TRIAD-like algorithms to reconstruct the attitude with respect to the world frame. Others [30,59,60] use more complex optimization algorithms to find the attitude as best fit of the different measurements.

The most widespread sensor fusion techniques are the Kalman Filters [62]. There are many cases in which a linear KF suffices for sensor fusion (e.g., [32,63,64,65]). In most of the cases, nonlinear equations require a manipulation of the KF. The Extended Kalman Filter (EKF) is the most immediate solution that has been adopted to use the KF approach with nonlinearities (e.g., [38,40,66,67,68,69]. Alternatively, Unscented Kalman Filters (UKF) are used in [47,49,53] as they provide a more accurate estimation of probability density functions (PDF) under nonlinear transformations. The method proposed in [70] uses unscented transformations, but implements constraints through probabilistic graphical models. Particle filters have been used by [39,42] to further improve and generalize the representation of PDFs.

Compared to the EKF, the UKF improves the estimation of the transformed probability density function. Moreover, the UKF keeps a convenient complexity when compared to the PF. Conversely, the PF allows to drop the hypothesis of Gaussian distributed random variables, thus permitting a more accurate PDF estimation. Comparisons between EKF and UKF provided conflicting results [71,72,73]. The most recent work highlighted the performance being highly influenced by the application. They describe the UKF as being more robust to initialization issues whereas the EKF is more computationally efficient [74]. The few comparisons that were found between the PF and the UKF are far from IMU-based motion tracking applications and are not reported.

Recent improvements of computational powers made optimization approaches attractive to IHMT. The methods presented in [44,51,55] show optimization based approaches that allow for both sensor fusion and implementation of constraints. Optimization approaches make it easier to take into account large time windows for the estimate. However, a good compromise has to be found between accuracy and speed of the algorithm [51] to allow for online IHMT. These latter methods are really promising because they showed to be highly flexible to add, remove, loosen or strengthen constraints as well as to find a compromise between accuracy and computational burden.

2.2.3. IHMT Common Issues

There are three main issues that recur in IHMT: how to reduce the estimate’s drift, how to handle magnetic disturbances and calibration issues.

Drift

A very first approach to IHMT was based on inertial navigation systems (INS) strapdown integration of gyroscope measurements which was inherited from the navigation of aerial vehicles. Though adapted to follow the dynamics of a human, this solution cannot be used alone as the estimate quickly drifts. Many methods have their main focus in reducing drifts. One solution is fusing the INS or INS-like estimate with a quasi-static one, as it is done in many CF-based approaches (e.g., [58,75], see Section 2.2.2). Since drift is mainly due to gyroscope bias, a second solution is to include the bias in the estimation and to account for it [50,75,76,77,78]. A third solution exploits constraints from the kinematic chain to avoid a drifting attitude estimate of one limb with respect to the others [41,45,47,49,50,53,58,66,67,77,79]. A further solution is used mainly in lower limbs tracking and exploits contacts of the feet with the ground [38,69,78,80]. When the foot is in contact with the ground its velocity is almost null. This information can be used to reset the speed (zero velocity update, ZUPT) and, when moving on a flat ground, to also reset the height of the foot with respect to the ground. These techniques have highly reduced drifts as demonstrated in many of the aforementioned methods.

Magnetic Disturbances

Many of the aforementioned methods rely on magnetometers. Despite being a valuable aid to have an absolute orientation reference, their signals are easily distorted by the presence of ferromagnetic materials in the vicinity of the sensor. Distortion effects are typically classified as hard and soft iron interferences (e.g., [38,81]), which are related respectively to permanently magnetized objects and to objects that are magnetized only when an external field is applied. Hard iron effects cause an offset of the earth magnetic field whereas soft iron effects cause a distortion. If the magnetic environment does not change, these effects can be corrected through internal sensor calibration, which is out of the scope of this article. However, for dealing with a changing magnetic field, either through a changing environment or translational motion of the sensor in an environment with inhomogeneous magnetic field, several solutions have been proposed. The simplest solution is to establish a policy to decide when the magnetometer signal is reliable. This can be done by thresholding its magnitude (e.g., [82,83]). Another common solution is limiting the contribution of the magnetometer measurement to the heading variable (e.g., [45]) or to two components (e.g., [59]). A more sophisticated solution is model-based estimation of the disturbance; e.g., in [84] the magnetic field direction is estimated simultaneously with the sensor orientation. Another approach is proposed in [85]. Under the assumption that magnetic field is constant for a given period, the authors take the magnetometer measurement at the beginning of the period as a reference. They then use the error with respect to this reference at each time step to update the error state estimate in their Kalman Filter. A survey of methods to handle earth’s magnetic field disturbances is proposed by Ligorio et Sabatini in [86].

Calibration

All IMU based motion reconstruction algorithms require some parameters to be provided.

A subset of these parameters defines the orientations (and sometimes the positions) of the IMU frames with respect to the tracked body segments they are attached to. In most of the cases these parameters are assumed to be known: the IMU frame is supposed to be physically aligned to the body frame (e.g., [50,60,87]). In other cases these parameters are obtained by means of a calibration procedure that is carried out at the beginning of the capturing session (e.g., [47]). Another subset is related to the dimensions of the human body: human limb lengths are typically either measured (e.g., [87]), taken from anthropometric models (e.g., [64]) or calculated by means of calibration procedures (e.g., [64,88]). In contrast to the IMU-to-segment orientations and positions, there is no need for online estimation of human limb lengths as they can safely be assumed constant during tracking.

Several calibration procedures were proposed to obtain IMU and limb parameters when tracking humans. The most typical procedure requires the human to rest in the neutrum-pose (N-pose) that is standing still while leaving the arms vertical alongside the trunk in the sagittal plane (e.g., [39,45,47,89]). Another widespread calibration pose is the T-pose, where the user is standing still keeping the arms horizontal in the sagittal plane [47,64,90,91]. In [45] the user is asked to lean forward to define an earth-fixed reference frame. In [14] the user is required to assume a rest pose before each motion. Besides the static poses, functional calibration methods require the user to perform rotations around different joint axes in order to better align the body segment frames with anatomical axes (e.g., [54,92,93]).

In a clinical setting, it is particularly important to obtain anatomically interpretable joint angles and, hence, to obtain accurate IMU-to-segment orientations. In [48,94] calibration procedures comprising IMU placement protocols, static poses and functional movements are proposed for identifying the knee and elbow flexion/extension axes and the forearm pronation/supination axes, thus improving the estimation of the anatomical joint angles. A simpler calibration procedure based on two static poses (standing and sitting or lying) is proposed and evaluated in [89]. Picerno et al. [37] proposes a specific rig equipped with an IMU for IMU-to-segment orientation calibration based on anatomical landmarks. For this, the rig endpoints have to be manually placed on anatomical landmarks, which is applied to the thigh and the shank.

The effects of errors in the different calibration parameters on the limb orientation estimation errors have been recently investigated in [51] demonstrating a clear dominant role of the sensor-to-segment orientations compared to the positions and limb lengths.

2.2.4. Methods’ Assessment

The assessment of motion tracking methods often relies on ground truth data, where the estimated trajectories are compared to ground truth trajectories using typical metrics, such as the root mean square error, namely RMSE, (e.g., [30,36,50,84,88,95,96,97]) or correlation coefficients (e.g., [49,53,58,87,88,96,98]). Two performance measures are mainly used for the evaluation: the drift and the accuracy of the target reconstruction, either based on the position of a reference point or the orientation of a rigid body.

Drift assessment requires to perform relatively long trials. In [36] the proposed algorithm is evaluated with respect to its drift dependency over time. In [87] the drift of the wrist position estimate is calculated and reported for one circular and one square trajectory. Luinge and Veltink [95] report attitude estimation drifts obtained from a strapdown integration of the measured angular velocities as compared to a sensor fusion algorithm using a KF. In many papers validation trials are longer than a few seconds (e.g., [10,31,38,75,92]) so that it is possible to at least qualitatively assess drift by visual inspection of the RMSE along time. Other works (e.g., [10,32,80]) do not report long assessment trials, making it difficult to evaluate drift.

Accuracy assessment requires ground truth data being at least as accurate as the method is expected to be. Single body attitude/position estimation can exploit very reliable ground truth data, such as those gathered from tilt tables (e.g., [30,43,97]). Since the IMU can be aligned very accurately to these devices, the error introduced through the evaluation device is limited and often much smaller than the estimation error. In many applications related to human motion tracking, the positions of anatomical landmarks are of interest. For this, marker-based optical motion capture (OMC) has become the gold standard (e.g., [39,40,47,48,50,55,66,77,79,91,95,99]). OMC permits to evaluate both attitude and position tracking. In the first case the main source of error resides in the alignment of the coordinate frame calculated from the OMC data with respect to the model estimation frame. The second case requires to estimate the parameters (e.g., the segment lengths) needed to calculate the reference point positions from the IMU data. Since it is not possible to measure these parameters exactly, they represent an additional source of error.

Other types of reference data were also used. One example is the work of Zhu et al. [32], in which the authors constrain the hand to follow a straight line and then check the reconstructed trajectory to be straight. Other mechanical platforms and robots were used in [42,57,75,76,81] as ground truth data.

As an alternative to tilt tables, Picerno et al. used in [100] a method for assessing the orientation estimation accuracy by attaching mIMUs to a rigid plate that is oriented in 12 different ways. They use the RMSE of the reconstructed orientation angles with respect to the known plate’s poses as accuracy metric. Devices from Xsens have also been used as providers of ground truth data. Robert-Lachaine et al. recently published [91] a comparison of MVN [64] and optical motion tracking performance when using such systems either with proprietary kinematic models or when estimating angles derived from ISB (International Society of Biomechanics) recommendations. The MVN suite was also used by Pons et al. in [41] and Taunyazov in [90], whereas the attitude estimate provided by the MTx IMU units was used by Brigante et al. in [68] and by Lee et al. in [44] to validate their methods.

It is worth noting that evaluation results always depend on the assessment method, while a great variety of such methods is currently used. Hence, it is difficult to make a fair comparison between the results reported in different publications. The summary Table A1 takes this into account by reporting the assessment methods along with the results.

2.3. Survey of IHMT Methods

This section provides a survey of IHMT methods categorized by the targeted body parts. This categorization allows following the historical development of IHMT methods. Indeed, starting to work on methods for a specific target (e.g., the upper limbs) and then refining it before moving to other targets represents a pattern found for many teams of researchers. Note, the presentation of the different methods combines solutions introduced in Section 2.2.

2.3.1. Generic Limb Orientation

Limbs pose tracking has been tackled by means of (m)IMUs since the end of the last century. Works of Bachmann [30] and Marin [31] pioneered the field targeting orientation tracking of human limbs and robotic links by using mIMUs for computer graphics applications. The former proposed a quaternion-based attitude tracking method that updates the attitude quaternion by means of the gyroscope measurement and corrects it based on a “low frequency” estimate from the accelerometer and magnetometer measurements. Differently from [30] the second model assumes a time decay of the limbs’ angular velocities. This assumption is suitable for human motion as humans cannot maintain an average non-zero magnitude of their limbs’ accelerations for long time periods. The same group further investigated this matter with different approaches: Marin et al. in [31] moved to using an EKF to fuse a gyroscope-based quaternion attitude estimate with the estimate obtained from the accelerometer and magnetometer signals through an optimization procedure. Yun tackles the problem of limb attitude estimation in a similar way with two variants: In [97] the authors take into account a decay of human limb acceleration, whereas in [43] they adopt a factorized quaternion approach to limit the use of the magnetometer measurements for heading estimation. Both methods replace optimization with the QUEST (QUaternion ESTimator) algorithm [4] to determine the attitude from the accelerometer and magnetometer measurements. Hol et al. propose an alternative approach to IHMT for pose estimation of one limb based on UWB [101]. They develop a sensor composed of a 6-axes IMU and a UWB transmitter, whose pose estimation is the goal of the algorithm. First UWB measurements are modeled considering transmission time as an unknown, whereas gyroscope and accelerometer models are based on the kinematics of the sensor and they include time varying biases. Finally, an EKF is set to estimate the pose of the sensor. Kok et al. in [102] extend this method. They also propose a tightly coupled approach to fuse UWB measurements and IMU measurements to obtain a set of variables which includes poses of human limbs. A novel two-steps method for calibration of UWB is first proposed to obtain positions and time offsets of UWB receivers and transmitter, as well as the parameters of an asymmetric probability distribution, that they use to model measurements of UWB. Obtained UWB measurements are then used to set an optimization problem which includes IMU measurements to estimate the poses of human limbs. In [10] the upper limb posture is estimated using a CF which fuses accelerometer and magnetometer signals based on the TRIAD algorithm to reconstruct the attitude of each limb. Two nonlinear CFs are proposed in [75,76] to fuse accelerometer, magnetometer and gyroscope measurements to obtain an attitude quaternion estimation. The authors define an orientation error and demonstrate by means of a Lyapunov stability analysis that the proposed filters enforce the defined error to converge to zero. The method of Madgwicks et al. [59] is also based on a CF and includes two variations. The former uses inertial signals, while the latter uses also magnetometer measurements. The method with magnetometers is described in Section 2.2.2. It exploits an earth-fixed frame to reconstruct the IMU’s orientation as a quaternion . The relation of its time derivative with the angular velocity allows the authors to use the gyroscope measurements for the CF “high frequency” estimate of . The “low frequency” estimate is obtained from an optimization procedure in which the goal is to align vectors measured in the sensor frame with their known counterparts in the Earth fixed frame. This second part can be adapted depending on the availability of measurements; e.g., acceleration due to gravity in case of an IMU and, in addition, local magnetic field in the case of an mIMU. Finally, the method of To and Mahfouz [42] tries to improve the quaternion attitude estimation by using von Mises-Fisher and Bingham densities in a PF that provides the attitude quaternion based on the IMU signals.

2.3.2. Lower Limbs Tracking

IMU-based lower limbs tracking has been tackled for several purposes. In some cases it has been used for gait analysis [36,37,79,93], in other cases only parts of the lower limbs were targeted, mainly for monitoring purposes in medical settings [94,96] or rehabilitation [44,59]. In [88] a system for tracking shank and thigh orientation in the sagittal plane is presented. The authors use two IMUs (both the accelerometers and the gyroscopes were biaxial) attached to these body segments. They perform direct integration with updates according to the difference between the detected acceleration and the acceleration due to gravity.

The work in [63] aims instead at estimating the knee flexion/extension angle based on IMUs attached to the user’s thigh and shank. They use KFs to estimate the IMUs’ attitudes and model the knee as a hinge joint to obtain the flexion/extension angle assuming the orientations of the sensors with respect to the knee joint to be known. Similarly, in [98] the target is knee angle estimation, and the IMU poses with respect to the knee rotation axis are supposed to be known or determined through a calibration procedure. Favre et al. show an application of a similar approach to knee ligament injury monitoring [92,96]. The same authors further developed their method to overcome calibration issues. In [103] they propose a functional calibration procedure to obtain clinically relevant joint angles. The importance of calibration (see Section 2.2.3) for measurements in clinical settings is further witnessed by the works of Picerno et al. [37] and Cutti et al. [48,94] who developed calibration procedures to map mIMU-based 3D kinematics reconstruction to anatomical landmarks. Knee angle estimation based on two IMUs on thigh and shank is also the target of Seel et al. in [93]. They propose a calibration procedure that allows to obtain the knee joint position and knee flexion/extension axis in the sensors’ frames. Based on this, they propose two magnetometer-free joint angle estimation methods. The first method exploits IMU orientation estimation to obtain the knee angle as orientation difference about the knee axis. The second method exploits directly the hinge joint assumption to obtain the knee angle by integrating the difference of the angular speeds with respect to the knee axis. Finally, drift is removed by an acceleration-based joint angle estimation. They test their method against ground truth from an OMC system by mounting IMUs and optical markers on both human and prosthetic legs.

Lower limbs reconstruction has often been used to aid localization during locomotion. Examples include the methods presented in [65,104]. The first exploits detection of contacts and a lower limbs biomechanical model to correct acceleration and velocity errors. Localization is then obtained by integration of linear velocity. The second implements KFs too estimate limbs orientations from mIMUs signals. KFs estimate IMUs biases and the errors of limbs orientation quaternions, which are then used to correct orientation estimate from INS. The method implements also ZUPT and adaptive weighting of accelerometer and magnetometer signals to mitigate detrimental effects of linear acceleration and magnetic field disturbances. Estimates from left and right legs are finally merged by a KF to obtain pelvis displacement.

Joukov et al. propose to use five IMUs to track locomotion for gait analysis [79]. They use two kinematics models to model the support and the swing leg. The first connects the feet to the ground by means of hinge joints (stance leg), whereas the latter connects the waist to the ground by means of three prismatic and three hinge joints. IMU data are fused in an EKF whose states comprise the joint variables and their time derivative. The method is validate on ten cycles but only knee joint angles are reported.

Zihajehzadeh and Park in [105] propose a method that substitute magnetometer with UWB. They use 7 IMUs attached to feet, shanks, thighs and pelvis as well as 3 UWB tags attached to feet and pelvis to reconstruct lower limbs motion and localize pelvis. Interestingly they exploit the robustness of the estimate of limbs’ inclination to remove yaw estimation drift. Their method moves from a first KF (tilt KF) that estimates inclination of the seven limbs based on accelerometer an gyroscope signals. Then they use UWB for a second KF whose output are feet positions and yaw of feet and pelvis. This output and tilt KF output are finally used to estimate shanks and thighs yaw. They obtained good results (orientation error below 5 and position error below 5 cm) walking, jumping and ascending validation trials.

2.3.3. Upper Limbs Tracking

More than a decade ago, Luinge and Veltink in [95] exploited IMUs to track the orientation of the upper limbs by modelling the accelerometer and gyroscope measurements as a function of attitude, biases and noises and using a KF to estimate orientation errors and biases based on these models. This method was applied in [54] for tracking the relative orientation of the forearm with respect to the upper arm. Other works from these and associated researchers addressed magnetic disturbance handling [84] and extending the method to full body motion tracking [64].

Mihelj [50] used IMUs to track human arm motion in a rehabilitation task. In this task the user’s hand was firmly fixed to a robot and the known hand pose was used to complement the IMU information. mIMUs were also used by Jung et al. in [67] to track the motion of the trunk and the upper limbs. These were modeled as two four DoF serial kinematic chains which were connected to the trunk, while the latter had three rotational joints with respect to the pelvis.

Bleser et al. proposed in [45] a novel method for upper limbs tracking that exploits an egocentric camera and markers to aid mIMU-based estimation. The topic was then further investigated addressing motion tracking algorithms for general kinematic chains [66], investigation of the effects of different model calibration errors and biomechanical model representations on the segment orientation estimation accuracy [51] (studied based on arm motions), simultaneous motion and IMU-to-segment calibration estimation [106] as well as low-cost full body sensor suits [107]. Targeted applications include ergonomics in industrial manufacturing [108] and rehabilitation [109].

Peppoloni proposed in [47] an mIMU-based method for arm tracking, modeling each shoulder and elbow with five DoFs and using an UKF to fuse the mIMU data. In [70], the same group proposed a method where the UKF was replaced by a probabilistic graphical model approach. The method takes into account the constraints provided by the kinematic chain model and implements a message passing approach to estimate the joint angles. Considered applications include ergonomics [110], robot teleoperation [111] and rehabilitation.

Particle filters were used by Zhang et al. [39] to fuse inertial and magnetometer measurements for estimating the elbow flexion/extension angles. The same authors worked previously on an UKF-based method presented in [49].

The upper limbs tracking approach of El Gohary [53,77] exploits IMU measurements fused in an UKF. The method was eventually improved [78] by including IMU biases and ZUPTs to limit drift.

Taunyazov et al. [90] adopted a simpler approach to track the upper limbs. Their method relies on one IMU mounted on the upper arm and a simple mechanical tracker equipped with a potentiometer to measure the elbow’s rotation angle.

Finally, upper arm pose estimation is the goal of the methods analyzed in Section 3, i.e., [32,45,47,58,97] which are all based on mIMUs and exploit different calibration and sensor fusion techniques.

2.3.4. Full Body Motion Tracking

Some of the aforementioned methods were extended to full body motion tracking. Works from professor Veltink’s group led to the development of a commercially available inertial body tracking system based on a body suit with 17 mIMUs [64] (the Xsens MVN system). The motion reconstruction algorithm also benefits from the work of Schepers, Roetenberg and Slycke on the exploitation of disturbed magnetic field signals [99,112].

Vlasic et al. [14] developed a full body suit equipped with 18 IMUs and eight ultrasonic sources. The IMUs were equipped with microphones so that the received signals provided a reference to avoid the drift that would occur when purely integrating accelerometer and gyroscope measurements.

The full body tracking method of Pons-Moll et al. [41] is mainly based on camera images. The limbs poses are inferred from the video information within a set of possible poses. This set is reduced thanks to the orientation cues from IMUs mounted on the body.

A linear CF is proposed in [58] in which the orientation quaternion of each limb is obtained as a linear combination of an estimate based on the gyroscope measurements and one based on the accelerometer and magnetometer measurements.

Miezal et al. [66] exploited an EKF to develop a general framework for motion tracking of arbitrary kinematic chains based on mIMUs.

An interesting probabilistic method has been developed by Kok et al. in collaboration with XSens [55]. Instead of using a recursive filter, joint angles are estimated from IMU measurements using constrained optimization. Here, constraints from the biomechanical model and from assumptions about the average acceleration over time are included into the cost function as both hard and soft constraints. Moreover, errors due to sensor shortcomings and soft tissue artefacts are modelled by incorporating appropriate noise terms. The maximum a posteriori estimate is obtained in an offline process using an infeasible start Gauss Newton method to solve the weighted least squares problem. Recently, Miezal et al. [51] proposed a variation of Kok’s offline method to enable online constrained optimization using a sliding window approach.

Multiple limbs and full body suits have been applied to several fields. In the sports field, for example, Ruffaldi et al. in [113] use IMUs to analyze rowing performance by estimating the rower’s motion based on five mIMUs. Measurements from the rowing simulator hardware (oars and seat) aids the overall estimate. Supej et al. developed a full body suit based on Xsens MTx sensors to track ski performance [114]. In [57] Miller et al. addresses remote robot control through IMU-based motion tracking. YostLabs (YostLabs, Portsmouth, OH, US), formerly YEI technologies, distributes full body IMU-based motion tracking applied to computer graphics and Virtual Reality (PrioVR). The system enables computer game players to control virtual characters through their own motions (see YEI technology, http://www.yeitechnology.com/).

3. Selected Methods

This second part of the article is in the form of a tutorial, and provides more details on five methods which have been selected in order to span the different areas that were identified in Section 2. These methods differ concerning the sensor fusion technique, using either CF, KF, EKF or UKF. They also differ concerning the sensors that are used: all of them exploit IMUs, but magnetometer signals are not always used and one method requires a visual reference for tracking human upper limbs. They also differ regarding the kinematic models: some use Euler angles, some use the DH convention and others use quaternions. Moreover, they differ in how the constraints of the kinematic chain are considered. Finally, they differ in how the parameters of the algorithms are set. In the following these methods are briefly recalled, more details can be found in the cited papers.

The following notation will be used. The i-th accelerometer signal will be , the gyroscope’s will be and the magnetometer’s will be . Vector will denote linear acceleration of a point. The earth magnetic field and the gravity vectors will be respectively and . will specify that the vector is written in the reference system l. will be the size n identity matrix, a n by m null matrix, and T is the sample time. The quaternion will represent the attitude of the i-th body.

3.1. Method 1

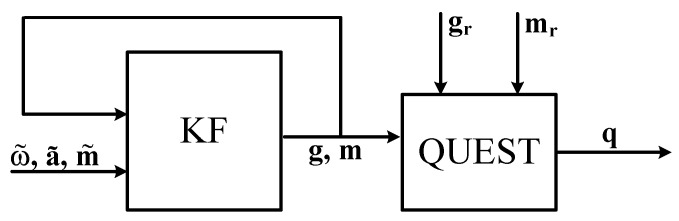

The first method that is described in [32] is suitable for the reconstruction of an arbitrary kinematic chain, given that each link is provided with a nine axis mIMU. Figure 1 shows the block diagram that summarizes this method. Given two consecutive links in the kinematic chain, namely i and , each one provided with a frame , the authors represent the orientation between the two frames by a axis-angle representation with axis and rotation angle , from which the rotation matrix can be obtained.

Figure 1.

Diagram of method 1 for the i-th limb, the diagram represents one temporal slice of the motion reconstruction. Vectors and are the gravity and the magnetic field vectors represented in the i-th limb frame in a reference configuration, e.g., N pose.

The rate of change of and within the same frame can be calculated as

| (1) |

where is the angular velocity of the frame and is the skew-symmetric matrix of vector

| (2) |

It is worth noting that the gyroscope measurement is treated here as a known control input, thus not taking into account the gyroscope measurement noise.

Under the assumption of slow motion (or that the linear acceleration is known) and that the i-th sensor frame is aligned with , then , and are approximately the output of the i-th sensor, i.e.,

| (3) |

The authors hence propose to use a KF for each segment in which the state is

| (4) |

The process model between steps j and is derived from Equation (1):

| (5) |

where is white noise. According to Equation (3) the measurement model is hence

| (6) |

where and is the white measurement noise.

The KF estimation of and feeds the QUEST algorithm to calculate the attitude quaternion . The resulting quaternion is converted to a rotation matrix that feeds the homogeneous matrices

| (7) |

in which is the position of in the frame , are then recursively applied from the chain root up to the desired point to obtain its position in the root frame.

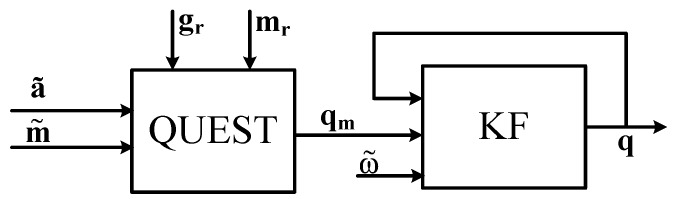

3.2. Method 2

In the method proposed by Yun et al. [97] each limb is supposed to be independent of the others and equipped with a nine axes mIMU sensor. Figure 2 shows the block diagram that summarizes this method. The attitude of i-th limb with respect to the root frame is represented by a quaternion . Under the assumption that the linear acceleration of the human limbs is negligible with respect to the gravity, and that the mIMU axes are aligned with the limb ones, then is initially estimated by means of the QUEST algorithm fed by equally weighted accelerometer and magnetometer signals thus obtaining . To compensate for the dynamic effect of the linear acceleration, the authors estimate the rate of change of based on the link angular velocity also measured by the mIMU:

| (8) |

where is the matrix representation of the quaternion:

| (9) |

Figure 2.

Diagram of method 2 for the i-th limb, the diagram represents one temporal slice of the motion reconstruction. Vectors and are the gravity and the magnetic field vectors represented in the i-th limb frame in a reference configuration, e.g., N pose.

The authors also assume that human limb acceleration is bounded and averages to zero over a certain amount of time, hence they propose to model the angular velocity as exponentially decaying over time:

| (10) |

where is a parameter of the algorithm that determines the time horizon within which averages to zero. These two methods for estimating are fused by means of an EKF in which the state vector is

| (11) |

The process model between steps j and is derived from Equations (8) and (10):

| (12) |

where is white noise in a 7-dimensional space and

| (13) |

The measurement model is the identity as and measurement is . The final estimation of is then used to reconstruct the pose of the links composing the human arm.

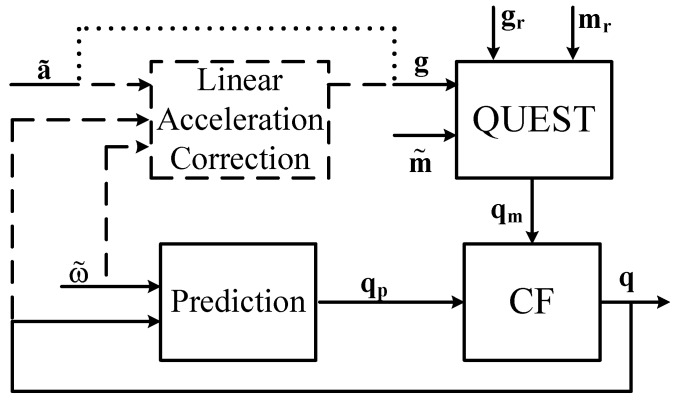

3.3. Method 3

The third method is presented in [58]. The attitude of the i-th limb with respect to the root is represented by the quaternion . They assume to attach a mIMU sensor to each moving limb. The authors propose two versions of their method. In the first version that is called pure the linear acceleration is neglected. In the second version that is called perfect the authors model the human body as a kinematic chain that allows them to calculate the linear acceleration of each frame. Figure 3 summarizes the two versions of the method. The authors assume that the mIMU axes are aligned with the limb frames, thus having , and they suppose to know all the parameters that are required to define the kinematic chain.

Figure 3.

Diagram of method 3 for the i-th limb, the diagram represents one temporal slice of the motion reconstruction. Dashed lines apply to the perfect version only whereas dotted lines to the pure version only. Vectors and are the gravity and the magnetic field vectors represented in the i-th limb frame in a reference configuration, e.g., N pose.

In both versions the authors propose a complementary filter in which the “high frequency” estimation of , namely is obtained from the limb angular velocity as in Equation (8). The “low frequency" estimation of , namely is obtained from and by means of the QUEST algorithm. Given estimation at time step j, the proposed CF computes

| (14) |

where k is a parameter that allows us to tune the filter.

The pure and the perfect filters differ in the that is provided to the algorithm:

| (15) |

The authors associate a hierarchical model tree with the human kinematic chain so that one limb is the root and every other limb i has its parent p. Given the angular velocity of the i-th limb, is

| (16) |

where is the linear acceleration of the i-th limb’s parent, is defined in Equation (2), and is the position of i-th frame origin in the parent frame.

Finally is used to reconstruct each limbs pose according to the defined kinematic chain.

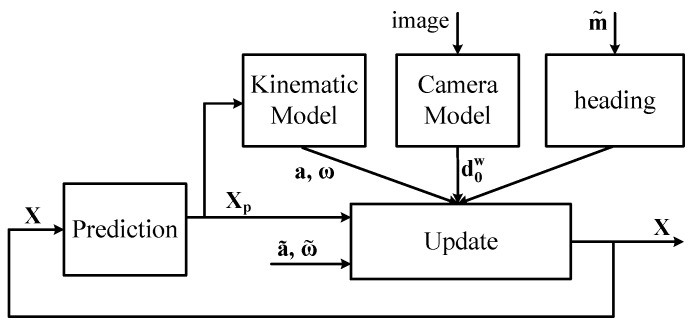

3.4. Method 4

The fourth selected method [45] has two main innovations with respect to the previous ones. First it embeds the kinematic constraint equations in the sensor fusion filter. Second it proposes a visual reference to aid magnetometers under severe magnetic disturbances. This method aims at tracking the upper body (but can be extended to the full body) by means of five mIMUs attached on the chest, the upper arms and the forearms. The mIMU on the chest is also provided with a camera (CmIMU) that tracks the markers placed on the user’s wrists. The authors consider a five degree of freedom (DoF) model for each arm and also model the shoulder motion accounting for the scapulohumeral rhythm [115]. The resulting kinematic chain is rooted in the chest and organized as a hierarchical tree.

The method is based on three loosely coupled EKFs, the first returns the trunk orientation given the mIMU signals, the latter two estimate the shoulder (three DoFs) and elbow (two DoFs) joint angles of each arm based on the mIMU signals, the trunk orientation and the wrist position are obtained from the camera image. Figure 4 summarizes the components of the EKF that estimates the arm motion given the trunk orientation.

Figure 4.

Diagram of the method 4, the diagram represents one temporal slice of the motion reconstruction.

The state of these EKFs is , the process model is linear and it assumes constant angular acceleration between two time steps j and , thus having for the i-th angle

| (17) |

The authors then propose a calibration procedure to relate the state to the available measurements. Assumed that the mIMUs sit on the frames of the limbs, the orientation of each mIMU frame with respect to the related link frame is represented by the rotation matrix that is obtained through this procedure as well as the position of the CmIMU with respect to the shoulder joint center. The other link lengths are gathered from anthropometric tables. Given , the orientation of each mIMU with respect to the root is and the mIMU measurements are

| (18) |

where being defined in Equation (2), is the acceleration of the link hosting the i-th mIMU, and are white noises. The latter measurement equation is only partially used and reduced to the heading direction. A further measurement equation relates the position of the wrist to the wrist position estimated from the camera:

| (19) |

where is the wrist position and is white noise. The measurement equations are then grouped as

| (20) |

that is linearized to obtain the observation matrix

| (21) |

This method directly provides the poses of all limbs.

3.5. Method 5

The last selected method [47] has two main differences with respect to the previous ones. First, it does not rely on the linearization of nonlinear equations, but it exploits the unscented transformation to cope with non-linearities. Second, it proposes refinements in the kinematics of the upper body and exploits a nonlinear sensor fusion algorithm to cope with nonlinear models. The method aims at upper limb motion tracking. Each of the clavicles, upper arm and forearm is provided with a mIMU. Taken the chest as root, a seven DoFs hierarchical kinematic model of each arm was developed according to the Denavit Hartenberg convention.

The sensor fusion technique of this method is an Unscented Kalman Filter in which the state vector is and the process model is the same as Equation (17).

The authors propose a calibration procedure to gather the parameters needed to relate the state X to the measurements. The orientation of each mIMU frame with respect to the related link frame is represented by the rotation matrix , whereas its translation with respect to the parent frame is measured to obtain the homogeneous matrix that fully refer the mIMU frame to its parent’s one. Given that the sth mIMU is attached to the i-th frame whose parent frame is p, the measurements model is:

| (22) |

where is the rotation matrix from the parent frame to the sensor frame, vector, and is the position of sensor frame relative to parent in sensor frame. The measurement equations are then grouped as Equation (20). In this case the function h is not linearized, but it is used for the unscented transformation that provides the measurement estimation based on the state prediction. As for method 4, the state already provides he pose of each limb.

4. Comparison

4.1. Experimental Setup

Selected methods were compared to each other using OMC. Ground truth data were obtained from the Vicon (OMG plc, Oxford, UK) OMC system while tracking a healthy 28 years old male that was equipped with the mIMUs Colibri mIMUs from Trivisio Prototyping GmbH, sampled at 100 Hz), the CmIMU (Firefly MV color camera from PointGrey with diagonal field of view of 140 deg, sampled at 12.5 Hz, hardware synchronized with the mIMUs), and markers on the anatomical landmarks. After holding N-pose and T-pose as calibration procedure, he was asked to perform several movements that involved one functional degree of freedom at a time, namely elbow flexion/extension (), forearm pronation/supination (), shoulder flexion/extension (), shoulder abduction/adduction (), and shoulder internal rotation (). The participant gave his informed consent for inclusion before he participated in the study. The study was conducted in accordance with the Declaration of Helsinki, and the protocol was approved by the Ethics Committee of Scuola Superiore Sant’Anna (Delibera n. 1/2017). The setup of the experiment is shown in Figure 5.

Figure 5.

Experimental setup showing markers that were placed on the anatomical landmarks and on the 9 mIMUs.

The joint motion reconstruction from optical data is based on the following kinematic model: the chest was considered as a steady rigid body. Shoulder was modeled as a spherical joint, so that the humerus has three rotational DoFs with respect to the chest. The forearm was considered to have two DoFs with respect to the humerus, i.e., the flexion-extension and pronation-supination functional DoFs. The Vicon Nexus® software (Oxford Metrics, Oxford, UK) allowed us to use this kinematic model for offline adjustment of the marker positions. The marker on the acromions served to capture the shoulder joint center, that is assumed to be cm under the acromion in the direction.

Each sequence lasted at least 10 s. Wrist position was used to assess the methods. Moreover, each sequence of movements includes a pair of repetitions performed at higher speed to test how methods’ performance varies as the linear acceleration increases.

Captured data include the markers positions in the Vicon reference system , the mIMU signals in the respective sensor reference systems, and the images gathered by the CmIMU in the camera reference system. All the data are synchronized and gathered at Hz. IMU and OMC data were manually synchronized by exploiting the transitions from static postures to motion. This synchronization method may introduce a time misalignment in the data which accounts for up to 3 samples (variations in the data are sufficiently clear to identify onset of motion). This means that the maximum misalignment cannot exceed 30 ms. The dataset used for the comparison is available in Zenodo (https://zenodo.org/) and it can be found through the digital object identifier (DOI) of this paper.

4.1.1. Data Alignment for the Comparison

The comparison of the estimated positions (joints and end effectors) against the OMC based ones requires to represent both in a common reference system. OMC data is available in a frame that is defined during the OMC system calibration. Since the chest frame was the root for the IMU based estimation, all these data were available in . Once decided to represent all the body frames in , the rigid transformation between the global frame and the root IMU frame is sufficient for the comparison. The homogeneous transformation matrix

| (23) |

represents such a transformation, where is the rotation matrix that aligns axes with ones, and is the position vector of the origin. Since in data capturing there was not enough information to calculate , it was decided to estimate it for each method. Let be a n samples set of optical captured positions and the corresponding estimated positions. Let then and be the captured and reconstructed positions in the reference configuration, i.e., the N-pose for the present evaluation. If we consider two new sets of samples, namely X and Y, obtained from and as

| (24) |

then

| (25) |

The rotation matrix is calculated to minimize the reconstruction error, for any method m and a reference r the quality Q can be computed as

| (26) |

Finally, the samples set obtained as

| (27) |

can be compared against X.

This method underestimates the absolute error of each method, but it provides a fair comparison between the methods. The quality of a set of body tracking techniques can then be evaluated against the OMC dataset by comparing the joint and end-effector points with the reference method.

4.1.2. Performance Indices

Aligned mIMU and OMC data are then used to calculate the performance measures that we introduced in Section 2.2.4 and hence compare the algorithms. Given two random variables X and Z each sampled with N samples, the following indices will be used for this purpose:

- Accuracy:

(28) - Correlation:

(29)

4.2. Experimental Results

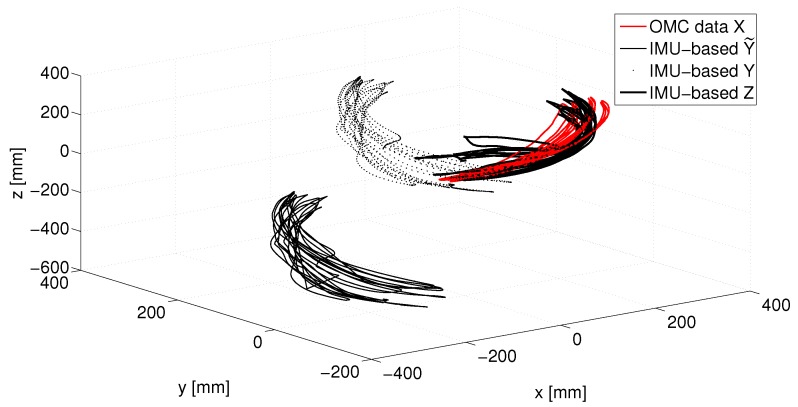

Data gathered from the mIMUs provided the input for the methods reported in Section 3 to reconstruct the arm kinematics. The parameters of each method’s filter were selected to optimize the method performance in terms of stability and accuracy. To enable the comparison of the methods, OMC and mIMU-based position estimation were aligned according to the method reported in Section 4.1.1. Figure 6 shows how the mIMU-based data are first translated to match OMC data in N-pose and then rotated to obtain the best alignment with OMC data.

Figure 6.

Alignment procedure for the mIMU-based data. mIMU-based estimated (Y), translated () and aligned (Z) are reported along with OMC data (Z).

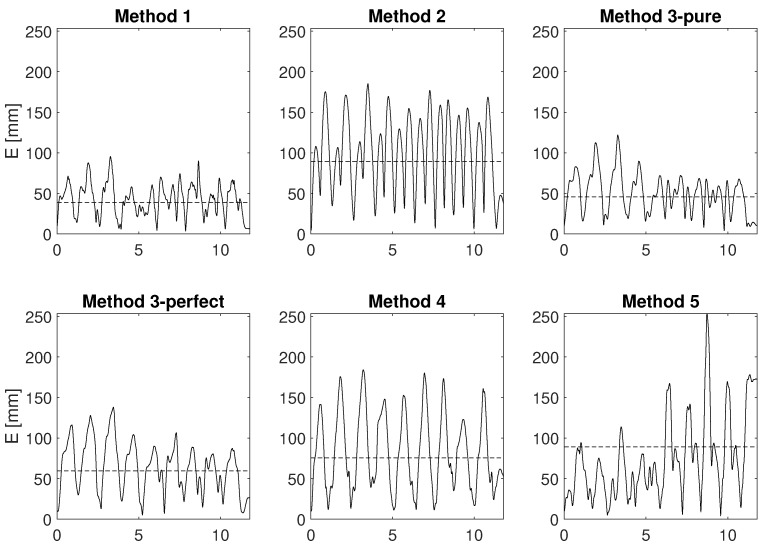

Figure 7 refers to the functional motion and shows how the error E (see Equation (28)) evolves over time.

Figure 7.

Error on the wrist motion reconstruction for the E functional motion trial. On the x-axis the seconds elapsed since the trial beginning are shown. Dotted lines represent average error in the trial.

After these examples we report the values of the error and of the correlation that the different methods scored. For the comparison of the methods three functional movements were selected, namely , , . The first movement allows us to assess how the methods behave when only one joint of the kinematic chain as well as only one mIMU moves. The latter two involve the motion of two mIMUs. With respect to the motion, in the and motions the estimation provided by methods that use the kinematic chain is likely to differ more from the other methods’ estimation. The average of E and C in the three trials are reported in Table 1.

Table 1.

Accuracy and correlation of the methods’ estimation. Error E and correlation C are calculated according to Equation (28) for elbow flexion-extension , shoulder flexion-extension , and shoulder abduction-adduction . For each performance index (i.e. each column), bold values highlight methods that performed better.

| E | S | S | ||||

|---|---|---|---|---|---|---|

| Method | E (mm) | C | E (mm) | C | E (mm) | C |

| 1 | 38.8 | 0.86 | 108.9 | 0.46 | 66.0 | 0.66 |

| 2 | 89.2 | 0.77 | 121.4 | 0.86 | 243.8 | 0.36 |

| 3-pu. | 45.7 | 0.84 | 122.86 | 0.46 | 156.0 | 0.59 |

| 3-pe. | 59.7 | 0.84 | 100.4 | 0.50 | 272.2 | 0.60 |

| 4 | 75.7 | 0.91 | 82.7 | 0.86 | 86.0 | 0.73 |

| 5 | 89.2 | 0.77 | 214.4 | 0.89 | 125.4 | 0.66 |

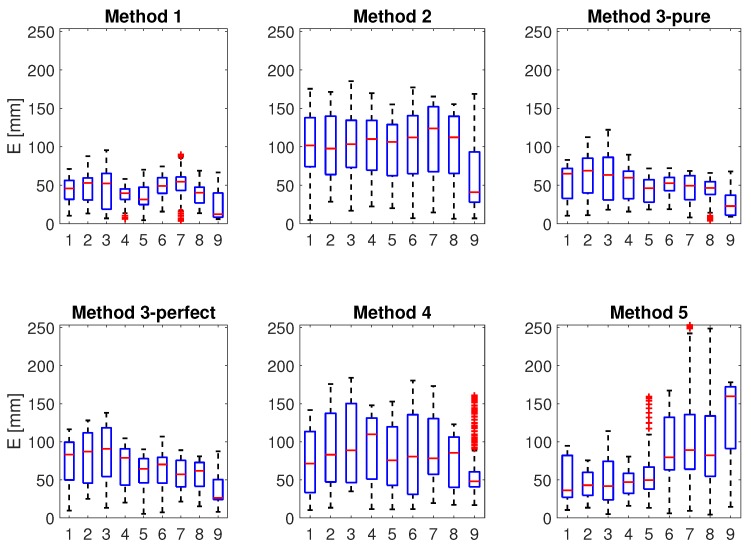

To obtain a more detailed insight of the methods’ performance, the functional motion was further studied. It was divided into cycles: cycles 1–7 were carried out at a slower speed, whereas the latter two were carried out at a higher speed. Figure 8 shows how the error E is distributed within the cycles of the trial, whereas Table 2 reports the average of E and C (see Equation (28)) for the same cycles.

Figure 8.

Error on the wrist motion reconstruction for each period of the E functional motion trial. The boxplots show the median of E along with the 25th and 75th percentiles. Whiskers extend to 1.5 times over the interquartile range.

Table 2.

Accuracy and correlation of the methods’ estimation. Error E and correlation C are calculated according to Equation (28) for each period of the E motion. For each cycle, bold values highlight methods that performed better. The amount of bold values per column allows the reader to have an impression of what methods performed better.

| Method | |||||||

|---|---|---|---|---|---|---|---|

| Cycle | Index | 1 | 2 | 3-Pure | 3-Perfect | 4 | 5 |

| E (mm) | 1 | 43.7 | 102.8 | 53.8 | 73.4 | 72.3 | 51.3 |

| 2 | 49.9 | 100.3 | 64.2 | 79.9 | 90.7 | 44.8 | |

| 3 | 46.6 | 103.4 | 63.1 | 84.0 | 98.3 | 49.4 | |

| 4 | 37.7 | 102.8 | 53.3 | 68.1 | 92.1 | 47.7 | |

| 5 | 35.3 | 97.1 | 44.5 | 57.6 | 79.0 | 59.6 | |

| 6 | 49.4 | 102.8 | 51.3 | 64.1 | 85.7 | 89.6 | |

| 7 | 50.4 | 108.4 | 45.9 | 57.5 | 90.5 | 101.3 | |

| 8 | 38.9 | 100.2 | 44.7 | 56.3 | 76.0 | 97.8 | |

| 9 | 23.6 | 60.9 | 27.8 | 36.5 | 59.0 | 136.9 | |

| C | 1 | 0.94 | 0.73 | 0.96 | 0.92 | 0.94 | 0.93 |

| 2 | 0.94 | 0.77 | 0.91 | 0.87 | 0.92 | 0.85 | |

| 3 | 0.96 | 0.75 | 0.91 | 0.82 | 0.95 | 0.81 | |

| 4 | 0.95 | 0.72 | 0.96 | 0.92 | 0.96 | 0.76 | |

| 5 | 0.95 | 0.78 | 0.93 | 0.89 | 0.94 | 0.87 | |

| 6 | 0.93 | 0.81 | 0.95 | 0.92 | 0.92 | 0.82 | |

| 7 | 0.83 | 0.77 | 0.84 | 0.85 | 0.91 | 0.76 | |

| 8 | 0.88 | 0.74 | 0.86 | 0.89 | 0.95 | 0.71 | |

| 9 | 0.87 | 0.86 | 0.92 | 0.93 | 0.95 | 0.94 | |

4.3. Discussion

The methods can now be compared according to the indices that were proposed in Section 4.1.2. Before comparing the methods, we see from Figure 7 that for all the methods the error varies periodically with time. This time error may be due to residual error of mIMU-OMC data alignment. However, each of the methods may have other source of error related to biomechanical constraints: lack of kinematic constraints (methods 1, 2 and 3 pure) and too rigid constraints (method 3 perfect, 4 and 5) are all suitable candidates for periodic errors in the estimation of a periodic motion.

4.3.1. Accuracy

The first measure that we proposed is accuracy, as obtained via E. Clearly, the lower E the more accurate the method is. Accuracy is a measure of reliability in accurate position estimation, accuracy is needed when an absolute measure of the position is required, for example in the analysis of motion or to provide force rendering when interacting with a Virtual Environment. From Table 1 we see that method 1 is generally the most accurate and method 4 is also comparable. We note that the accuracy gap between methods 1 and 4 is smaller for and movements, being method 4 more accurate for the latter movement. This partially supports the finding that imposing a kinematic chain in the motion estimation improves the estimation when the measurements of mIMUs on different links are affected by the same joint variable. This hypothesis is further supported when methods 1, 2 and 3-pure are compared. Differently from methods 3-perfect, 4 and 5, the first two methods do not take into account the kinematics of the arm. The latter has a lower accuracy in the motion, but has a better accuracy for the motions and (except for method 3-perfect in the motion).

4.3.2. Correlation

Correlation C is the second measure that is considered. Correlation indicates whether the estimated position follows the real pattern of the performed movement. A good correlation of the estimated human motion with the real movement suffices to teleoperate a remote robot. In facts, in this case it is anyhow needed to map the operator motion to the robot kinematics, and what matters is that this map does not vary along time. In other words, even if accuracy is poor, the human who teleoperates the robot can easily adapt motion of his arm to be able to control the robot unless there is a good correlation between the performed motion and the method’s estimate. When correlation is poor, human has to adapt the motion of the arm and eventually perform unnatural motions to control the robot (e.g., activate more DoFs to obtain a simple elbow motion). From the point of view of the correlation, Table 1 shows that the best performing methods are 4 and 5.

4.3.3. Fast Motion

One of the differences between the methods is the use of the kinematics of the human arm, in particular of the linear acceleration of the limbs. A reasonable hypothesis is that methods 3-perfect, 4 and 5 perform better with respect to the others when the motion of the limbs is fast and the speed changes quickly. This condition should make linear acceleration of the limbs play a bigger role. In our case, this role is enhanced by mounting the mIMUs far from the parent joint. However, looking at Figure 8 and at Table 2, there is no significant difference in the variation of the accuracy between slow cycles (1 to 7) and fast cycles (8 and 9). Similarly, Table 2 shows that correlation does not improve between cycles 1–7 and 8–9 for methods 1,2,3-perfect and 5, whereas there is a small improvement for method 4. These results suggest that the linear acceleration of the limbs plays a minor role with respect to gravity, as assumed by several models. However, this aspect should be further investigated with specific motions in which gravity plays a minor role to identify limb’s orientation.

4.3.4. Sources of Error

As a final remark of the discussion, the accuracy and the correlation that were obtained are generally comparable or worse with respect to the literature. However, apart from possible suboptimal tuning of the methods’ parameters, possible sources of error that can explain our results

Knowlegde of human parameters (i.e., arm length). This source of error can be minimized by including human parameters in the estimation e.g., [51]

Body to mIMUs calibration. Although the calibration procedure that we carried out suffices to determine the orientation of the mIMUs, uncertainties in the position of mIMUs with respect to their parent is still subject to assumptions. Also the effects of this source of error can be reduced by a proper calibration and by taking into account the sensor poses in the sensor fusion technique.

Time alignment of OMC data with mIMU data. OMC and mIMU-based data are manually done based on a known motion from a steady condition. However, the effects of misalignment are much smaller than the error we have reported.

Preprocessing of data. Here we tested only the reported algorithm, not considering possible filtering on mIMU data. For example, having a hard magnetic calibration, it would be possible to handle bad data with distorted magnetic field.

5. Conclusions

After introducing the reader to the main issues of IHMT, relevant methods from the literature were reported. Analysis of the literature revealed that several approaches perform similarly for IHMT. However, optimization-based methods seem to have the potential to bring substantial improvements to IHMT. Currently methods and solutions for lower limbs tracking such as ZUPT have not been widely applied to full body tracking yet. These methods are often capable of accurate estimation even during long walk trials. Therefore their combination with their upper limbs counterparts may improve accuracy and reduce drift of full body IHMT also when walking.

Five methods that span the different techniques used for IMU data sensor fusion were presented and analysed in depth, and an evaluation of these methods was proposed based on accuracy and correlation with OMC data. Results showed that method 1 is the best performing for accuracy followed by method 4, which is the best in terms of correlation. We hence advise to use method 1 for attitude estimation and for navigation purposes. Instead we consider 4 the best method for robot teleoperation. Motion speed analysis provided minor results, possibly due to the choice of movements that make gravity play a dominant role in limb attitude estimation.

Appendix A. Summary of Presented Methods

A summary of the methods presented in Section 2 is reported in Appendix A in Table A1. Methods are sorted in chronological order and described according to the following categories:

Ref: reference to the article where the methods described.

Year: publication year.

Body: this category describes which part(s) of the body are targeted by the method Application: some methods are connected by the authors to one ore more applications. Most of the times this link is made explicit in the introduction. This category reports the application(s) extracted from the article. They span from computer graphics to robotics and medical applications.

Target: this category describes the physical quantities that are targeted by the method in relation to the body parts to be tracked. For example, when the body parts to be tracked are upper limbs, arm’s orientation and position are possible targets. The word “attitude” is referred to methods that are not referred to a specific part of the human body but to a generic rigid body.

Focus: Most of the cited articles focuses on the description of the IHMT method. However, some articles focus on the assessment of some methods, or on their application. In these cases fewer or no details about the IHMT method are reported. Calibration and handling of magnetic disturbances are also focuses of some of the articles reported in Section 2.

Sensors: List of the sensors required to apply the method described in the article. Each sensor is preceded by the numbers of units that are used.

Kinematics and Constraints: This category lists the kinematic representation(s) that was (were) chosen in the method and the biomechanical constraints that were used. Orientation is often represented as a quaternion or a rotation matrix which is a function of three independent variables. Exponential maps have also been used to represent orientation. When a kinematic chain is used, position and orientation may be obtained through the joint variables. This is the case when “kinematic chain” is reported without other kinematic representation specifications (e.g., “quaternion orientation”). “Kinematic chain” also indicates that joints are used either to impose constraints to motion or to reconstruct limbs’ pose. This category reports also the use of free segments modelling. Other constraints specifications (e.g., hinge, soft or hard constraints) are specified for some methods. This category is linked to Section 2.2.1.

Parameters: Kinematic chain, constraints and sensor fusion technique require to set and tune some parameters. These parameters are grouped and reported in this category. Lengths (e.g., arm length), orientations (e.g., mIMU’s orientation with respect to the link it is attached to), Kalman Filter parameters (e.g., covariance matrices) are parameters that many models require.

Sensor Fusion Technique: The mathematical method(s) that was (were) used is reported in this category. Kalman Filters, Complementary Filters and Optimization are the most popular possibilities that are reported. However, some methods use other tools such as inertial navigation systems and probabilistic graphical models. This category is linked to Section 2.2.2.

Calibration: When one or more calibration procedures are required to obtain the parameters needed for the method, these are here summarized. Most of the methods include static calibration, whereas other requires more complex procedures which include motion of specific articulations. This category is linked to Section 2.2.3.

Validation: Validation methods are reported in this category, the number of participants and the source of ground truth data is reported.

Measure: Quantitative validation of the methods requires the definition of variables to be assessed. In many cases these variables replicate the target of the method, in other cases they are a subset of the variables need for tracking of the declared targets.

RMSE: Root mean square error of the variables reported as measures for the validation when compared to ground truth data. Angular variables are reported in degrees whereas position are reported in millimetres. Drift are reported in meters per seconds.

Correlation: Correlation of estimated variables with ground truth data as reported in the referenced article.

The Notes column completes the description of each method with a few details specific of the method. Some formulas are worth to be mentioned:

Sens align link refers to the assumption that a sensor’s frame is supposed to be aligned to the frame of the body it is attached to; Sens sitting link is used referring to the situation of a (m)IMU attached to a human limb. In this case the the limb is supposed to be a beam and the formula refers to the assumption that the sensor’s frame origin is a point of such a beam; Bias est refers to models in which sensors’ biases are among the variables that are estimated;

Lin acc noise is referred to methods in which linear acceleration of the sensor is considered to be noise;

Show plot refers to method for which RMSE and/or correlation are not reported as figures in the text on in tables but a plot of such quantities is available.

Appendix A.1. Description of Abbreviations

In Table A1 some abbreviations are used to make the table more compact. These abbreviations are listed according to the previously defined categories and described in Table A2:

Table A1.

Summary of IHMT methods.

| Ref. | Year | Body | Application | Target | Focus | Sensors | Kinematics & Constraints | Parameters | Sensor Fusion Technique | Calibration | Validation | Measure | RMSE | Correlation | Notes |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| [30] | 1999 | attitude | computer graphics | link ori | method | 1 acc, 1 gyro, 1 mag | quat ori | CF gain | CF, GN opt | no | 1, tilt table | roll | 1.0 | - | initial condition study, 120 s valid trial, lin acc negl |

| [31] | 2001 | attitude | computer graphics | link ori | method | 1 acc, 1 gyro, 1 mag | quat ori | CF gain | CF, GN opt | - | 1, tilt table | quat compar | - | - | initial condition study, 25 s valid trial |

| [10] | 2004 | attitude | computer graphics | link ori | method | 1 acc, 1 gyro, 1 mag | rot mat | CF gain | CF | - | 1, robot EE | roll, pitch, yaw | - | 0.7–0.87 | drift, 12s valid trial, omni phantom |

| [32] | 2004 | upper limbs | - |

|