Abstract

This study investigated the relation between linguistic and spatial working memory (WM) resources and language comprehension for signed compared to spoken language. Sign languages are both linguistic and visual-spatial, and therefore provide a unique window on modality-specific versus modality-independent contributions of WM resources to language processing. Deaf users of American Sign Language (ASL), hearing monolingual English speakers, and hearing ASL-English bilinguals completed several spatial and linguistic serial recall tasks. Additionally, their comprehension of spatial and non-spatial information in ASL and spoken English narratives was assessed. Results from the linguistic serial recall tasks revealed that the often-reported advantage for speakers on linguistic short-term memory tasks does not extend to complex WM tasks with a serial recall component. For English, linguistic WM predicted retention of non-spatial information, and both linguistic and spatial WM predicted retention of spatial information. For ASL, spatial WM predicted retention of spatial (but not non-spatial) information, and linguistic WM did not predict retention of either spatial or non-spatial information. Overall, our findings argue against strong assumptions of independent domain-specific subsystems for the storage and processing of linguistic and spatial information and furthermore suggest a less important role for serial encoding in signed than in spoken language comprehension.

Keywords: linguistic working memory, spatial working memory, language comprehension, sign language, serial encoding

1. Introduction

Language comprehension involves actively accessing, maintaining, and processing linguistic information. The impact of linguistic working memory (WM) capacity on spoken language comprehension has been well documented. For instance, WM measures that assess both processing and storage resources (e.g., reading and listening span tasks) have been found to be good predictors of narrative and sentence comprehension abilities (Caplan & Waters, 1999; Daneman & Carpenter, 1980; Daneman & Merikle, 1996; Daneman & Hannon, 2007; King & Just, 1991; Waters & Caplan, 1996). The ability to temporarily store information for further processing is limited in capacity (e.g., Cowan, 2001), and an important theoretical question concerns the domain-specificity of these limited resources (e.g., Baddeley, 2012; Cowan, 2005; Logie, 2011). In particular, there has been a long-standing debate about whether WM capacity is served by separate subsystems for linguistic and visuospatial processing (each with its own limited resource capacity) or by a single, central capacity-limited system (e.g., Barrouillet, Bernardin, & Camos, 2004; Cocchini, Logie, Della Sala, MacPherson, & Baddeley, 2002; Fougnie, Zughni, Godwin, & Marois, 2015; Ricker, Cowan, & Morey, 2010; Saults & Cowan, 2007; Vergauwe, Barrouillet, & Camos, 2010). A consensus is now emerging that WM capacity is likely subject to both domain-general capacity limits and domain-specific resource limitations (for discussion, see Cowan, Saults, & Blume, 2014; Morey, Morey, Van der Heijden, & Holweg, 2013).

An important part of the evidence in favor of the multiple-component approach to WM comes from studies that investigated dissociations of WM resources used to process linguistic and spatial information (e.g., Friedman & Miyake, 2000; Handley, Capon, Copp, & Harper, 2002; Shah & Miyake, 1996). For example, Shah and Miyake (1996), using a spatial span task that taxed both processing and storage components of spatial WM, found that spatial span and reading span did not correlate significantly and that reading span, but not spatial span, was correlated with language comprehension measures. They concluded that there are two separate pools of domain-specific resources that support the processing and maintenance of spatial and linguistic information. This dissociation between the processing of linguistic and spatial information is also emphasized in the dominant model of working memory, initially proposed by Baddeley and Hitch (1974). This model includes two separate subsystems for the storage and processing of linguistic and spatial information, the phonological loop and the visuospatial sketchpad (Baddeley & Logie, 1999; Baddeley, 1986, 2007; Logie, 1995; but see Barrouillet et al., 2007; Cowan, 2005; and Oberauer, 2009, for alternative models without an explicit separation between modality-specific memory representations).

Although general language processing (spoken or written) does not seem to rely on spatial WM resources, there is some evidence for an association between spatial WM mechanisms and the comprehension of spatial language specifically. For example, Pazzaglia and colleagues investigated how concurrent articulatory or spatial tasks affected reading comprehension of spatial and non-spatial texts (De Beni, Pazzaglia, Gyselinck, & Meneghetti, 2005; Pazzaglia & Cornoldi, 1999; Pazzaglia, De Beni, & Meneghetti, 2007). They found that verbal suppression impaired comprehension of both spatial and non-spatial texts, whereas spatial suppression selectively impaired spatial text comprehension. Furthermore, Meneghetti, Gyselinck, Pazzaglia, and De Beni (2009) showed that participants with high mental rotation scores were better able than participants with low mental rotation scores to maintain good spatial text comprehension during a concurrent spatial task (also see Meneghetti, De Beni, Pazzaglia, & Gyselinck, 2011).

Research on the relationship between WM systems for linguistic and spatial information comes predominantly from spoken language. Because sign languages are both linguistic and visual-spatial, they provide a unique avenue for investigating modality-specific vs. modality-independent characterizations of working memory resources. There is currently evidence for strong similarities in the architecture of the WM system for sign and spoken languages, including a phonological loop for the storage and rehearsal of signs (Wilson & Emmorey, 1997, 1998, 2003). Furthermore, neuroimaging studies have shown largely overlapping neural systems for WM processes for sign and speech (Bavelier, Newman, et al., 2008; Buchsbaum et al., 2005; Pa, Wilson, Pickell, Bellugi, & Hickok, 2008; Rönnberg, Rudner, & Ingvar, 2004; Rudner, Fransson, Ingvar, Nyberg, & Rönnberg, 2007; for discussion, see Rudner, Andin, & Rönnberg, 2009).

On the other hand, there is also evidence for modality-specific serial order processing mechanisms and for differential reliance on serial order information in WM tasks for spoken vs. signed language (for discussion, see Bavelier, Newman, et al., 2008; Hirshorn, Fernandez, & Bavelier, 2012; Rudner, Karlsson, Gunnarsson, & Rönnberg, 2013). Many studies have reported larger spans in the spoken than in the signed modality for forward serial recall tasks, including digit, letter, and word span tasks (e.g., Bavelier, Newport, Hall, Supalla, & Boutla, 2006, 2008; Boutla, Supalla, Newport, & Bavelier, 2004; Geraci, Gozzi, Papagno, & Cecchetto, 2008; Hall & Bavelier, 2011; Wilson, Bettger, Niculae, & Klima, 1997; but see also Andin et al., 2013; Wilson & Emmorey, 2006a, 2006b). Importantly, modality differences are typically not found in backward serial recall tasks or in tasks with reduced temporal organization demands, such as free recall (e.g., Bavelier, Newport, et al., 2008; Boutla et al., 2004; Rudner, Davidsson, & Rönnberg, 2010; Rudner & Rönnberg, 2008a). Moreover, some studies have found that signers outperform speakers on spatial serial recall tasks such as the Corsi block test (e.g., Geraci et al., 2008; Romero Lauro et al., 2014; Wilson et al., 1997; but see Logan, Mayberry, & Fletcher, 1996; Marschark et al., 2015).

The purpose of the current study was to investigate the relation between linguistic and spatial working memory resources and language comprehension for signed compared to spoken language. To this end, we administered several types of spatial and linguistic serial recall tasks commonly used in spoken language research to a group of deaf users of American Sign Language (ASL), a group of hearing monolingual English speakers, and a third group of hearing ASL-English bilinguals who participated in both the ASL and spoken English tasks. The tasks included both ‘short-term memory’ tasks (tapping the passive storage of information) and ‘complex working memory’ tasks (requiring the manipulation or transformation of information stored in memory). Specifically, linguistic and spatial short-term memory were assessed with a letter span task (Boutla et al., 2004; Wilson & Emmorey, 1997) and the Corsi block test (Corsi, 1972; Milner, 1971), respectively. Linguistic and spatial working memory were assessed with a listening/sign span task (Daneman & Carpenter, 1980; Turner & Engle, 1989; Wang & Napier, 2013) and a spatial span task (Shah & Miyake, 1996), respectively.

The letter span and language span tasks share a forward serial recall component, and therefore we predicted (in line with previous studies) that we would observe an advantage for spoken English on both span tasks compared to ASL. In contrast, based on previous research on visuospatial advantages in signers, we predicted an advantage for ASL signers (both hearing and deaf) compared to monolingual English speakers on the Corsi block test (Geraci et al., 2008; Romero Lauro et al., 2014; Wilson et al., 1997) and also possibly the spatial span task, because this task involves mental rotation (see Emmorey, Klima, & Hickok, 1998; Emmorey, Kosslyn, & Bellugi, 1993; McKee, 1987).

We also assessed signed and spoken language comprehension using ASL and English narrative comprehension tasks that paralleled the reading comprehension task used by Daneman and Carpenter (1980). Unlike the texts used by Daneman and Carpenter (1980), however, our narratives all described the spatial layouts of environments (e.g., a college campus, a park, or a furniture store). In ASL, such descriptions use signing space to indicate landmark locations, whereas in English they rely on spatial prepositions. Following each narrative, participants answered comprehension questions about either spatial or non-spatial information in the narrative.

Given similarities in the basic architecture of WM and parallels in language processing for spoken and sign languages (for review, see Carreiras, 2010; Emmorey, 2007), we predicted that linguistic working memory would correlate with language comprehension ability for both ASL and English. However, because sign comprehension requires encoding visuospatial material into linguistic representations, we also hypothesized that sign language processing draws on resources that support spatial WM, particularly for spatial language comprehension. We note that Holmer, Heimann, and Rudner (2016) found no correlation between scores on a sign language comprehension test and spatial memory in deaf signing children, but their sign comprehension test did not specifically assess spatial language. It is also possible that spatial WM correlates with the comprehension of spatial language in both the signed and the spoken modality (see Meneghetti et al., 2009). Either of these outcomes would challenge the idea that linguistic processing and visuospatial processing are two fundamentally distinct domains of human cognition. Conversely, if spatial WM capacity is correlated with neither sign language nor spoken language comprehension ability, this result would be consistent with models that propose separate domain-specific resources for linguistic and visuospatial working memory (e.g., Baddeley, 1986, 2007; Cocchini et al., 2002; Logie, 1995).

2. Method

2.1 Participants

Thirty-five deaf ASL signers (32 female, M age = 33.1 years, SD = 10.7) and 35 monolingual English speakers (17 female, M age = 22.5 years, SD = 3.8) participated in the study. In addition, a group of 19 hearing ASL-English bilinguals (12 female, M age = 32.0 years, SD = 9.2) participated. The monolingual English speakers were significantly younger than both the deaf ASL signers (p < .001) and the hearing ASL-English bilinguals (p < .001), who did not differ from the deaf ASL signers in age (p = .88). The monolingual and bilingual English speakers reported normal hearing and normal (or corrected-to-normal) vision. All deaf participants had severe to profound hearing loss (71–90 dB). The deaf signers were either native signers exposed to ASL from birth (N = 23) or early signers exposed to ASL before age eight (N = 12; mean age of ASL exposure = 4.7 years, SD = 2.5 years). Of the hearing bilinguals, 14 were Codas (Children of Deaf Adults) exposed to ASL from birth, and five acquired ASL after age seven. The hearing signers were all proficient in ASL, with high self-reported ASL comprehension ratings (M = 6.4, SD = 1.0, with 7 = ‘like native’) and ASL production ratings (M = 6.2, SD = 1.0). All reported using ASL in their daily lives, and ten worked as interpreters. The mean number of years of education was 16.5 years (SD = 2.9) for the deaf signers, 15.1 years (SD = 1.4) for the monolingual English speakers, and 15.8 years (SD = 2.4) for the hearing ASL-English bilinguals. The deaf signers had significantly more years of education than the English monolingual speakers (p < .01), but neither group differed significantly from the hearing ASL-English bilinguals (ps > .25).

2.2 Materials

2.2.1 Linguistic working memory tasks

2.2.1.1 ASL letter span

To measure signed linguistic short-term memory, we used a version of the WAIS Digit Span task (Wechsler, 1955) adapted for ASL (Boutla et al., 2004), in which sequences of fingerspelled letters (rather than digits) are presented at a rate of one letter per second and must be recalled in order of presentation. Letters were used because previous research has suggested that the phonological (form) similarity of the ASL number signs 1 through 9 complicates the use of digits in linguistic short-term memory tasks (Wilson & Emmorey, 1997). Sequences of phonologically dissimilar letters increased in length from two to nine letters, with two sequences at each length. The fingerspelled letters used in the task were B, F, H, K, L, R, S, V, and X (from Wilson & Emmorey, 2006a). The digital video letter sequences were presented on a computer screen and were all signed by a deaf native female signer. ASL letter span was defined as the highest level at which both letter sequences were correctly recalled. The test was terminated when the participant failed both sequences of a particular length. Participants received partial credit (0.5) for the last passed level if only one of the two sequences at that level had been correctly recalled.
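The letter span scoring rule can be made concrete in a few lines of Python. This is a minimal sketch under one reading of the partial-credit rule, which the text leaves somewhat open: we assume the extra 0.5 is awarded when exactly one of the two sequences was recalled correctly at the level just above the highest fully passed level. The function name `letter_span`, the `(bool, bool)` input format, and the 0.0 floor for a participant who passes no level are hypothetical choices for illustration, not the procedure used to score the study's data.

```python
def letter_span(trials, first_level=2):
    """Score a letter span test with two sequences per level.

    trials: one (bool, bool) pair per level, in order of administration,
    starting at first_level; each bool says whether that sequence was
    recalled correctly in order.
    """
    n_correct = {}
    for offset, (seq_a, seq_b) in enumerate(trials):
        level = first_level + offset
        n_correct[level] = int(seq_a) + int(seq_b)
        if n_correct[level] == 0:
            break  # both sequences failed: the test is terminated here

    passed = [level for level, n in n_correct.items() if n == 2]
    if not passed:
        return 0.0  # assumed floor; the text does not cover this case

    span = float(max(passed))
    # Assumed reading of the partial-credit rule: +0.5 if exactly one of the
    # two sequences at the next level up was recalled correctly.
    if n_correct.get(max(passed) + 1) == 1:
        span += 0.5
    return span


# Levels 2-4 fully passed, one of two correct at level 5, none at level 6.
print(letter_span([(True, True), (True, True), (True, True),
                   (True, False), (False, False)]))
```

Trials listed after the terminating level are ignored, mirroring the rule that testing stops once both sequences at a level are failed.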

2.2.1.2 English letter span

The letters used in the English letter span task were G, R, P, K, M, S, H, Y, and L (from Bavelier et al., 2006). These were selected to be as phonologically dissimilar as the letters used in the ASL letter span task (Bavelier et al., 2006; Wilson & Emmorey, 2006b). An adult native female monolingual English speaker recorded the stimuli. The letter sequences were presented audiovisually on a computer, with sound played through the computer speakers, at a rate of one letter per second. Scoring proceeded in the same way as for the ASL letter span task.

2.2.1.3 Sign span

A sign span task was developed for ASL, modeled after the Daneman and Carpenter (1980) reading span task and Turner and Engle’s (1989) adaptation of that task (see Wang & Napier, 2013, for a similar sign span task developed for Auslan). The task consisted of 60 ASL sentences (simple declaratives), half of which were semantically plausible (e.g., the woman mops the floor) and half semantically implausible (e.g., the calculator was angry). The sentences were presented on a computer and signed by a deaf native female ASL signer. Participants had to (1) quickly decide whether each sentence was semantically plausible or implausible and (2) remember the last sign of each sentence (mostly nouns). The plausibility decision had to be made within the 2,000 ms interval between sentences within a set. To avoid possible effects of articulatory suppression on working memory, participants did not respond manually with a button press. Instead, they indicated their answers by stepping on a red (implausible) or green (plausible) square on a foam cushion under the desk in front of them. The red and green squares were placed on the left and right side of the cushion, respectively, and participants were instructed to return their foot to a neutral position, marked by a yellow square, between responses. In pilot testing, participants found foot responses easier than manual responses.

At the end of each set of sentences, a picture appeared on the screen (for 1,500 ms) to prompt the participant to recall the final signs of the sentences in order. All sentences were 3–5 signs long (mean = 3.8). The mean English frequency of the translations of the final ASL signs was 3.5 (SUBTLEX-US log10 word frequency; Brysbaert & New, 2009). Set size increased from two to six sentences, with three sets at each level. Consistent with the scoring system used by Turner and Engle (1989), span was defined as the highest level at which participants correctly recalled two of the three sets. The task was terminated when the participant failed to correctly recall two of the three sets at a level, but participants still received partial credit (0.5) for that level if they recalled one of the three sets correctly.
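The sign span scoring rule (which the text also applies to the listening span and spatial span tasks) can be written as a short loop. This is a minimal Python sketch, assuming the input is the number of sets (out of three) recalled correctly at each administered level; the function name `complex_span` and the 0.0 floor for failing the very first level are hypothetical choices not specified in the text.

```python
def complex_span(sets_correct, first_level=2, pass_threshold=2):
    """Score a complex span task with three sets per level.

    sets_correct: number of sets (0-3) recalled correctly at each level,
    in order of administration, starting at first_level.
    """
    span = 0.0  # assumed floor if the first level is failed
    for offset, n_correct in enumerate(sets_correct):
        level = first_level + offset
        if n_correct >= pass_threshold:
            span = float(level)  # two or more of three sets correct: level passed
            continue
        if n_correct == 1:
            span += 0.5  # partial credit: one of three sets correct at the failed level
        break  # fewer than two sets correct: the task is terminated
    return span


# Levels 2-4 passed, one of three sets correct at level 5.
print(complex_span([3, 2, 2, 1]))
```

Because the loop stops at the first failed level, the span is always the last passed level, plus 0.5 when exactly one set at the terminating level was correct.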

Two pseudo-randomized versions of the task were created to ensure that no more than two plausible or two implausible sentences appeared consecutively within a set and that each level contained approximately equal numbers of plausible and implausible sentences. The two versions were counterbalanced across participants. Six two-sentence sets were used as practice sets with feedback to the participant.
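The two ordering constraints (no run of more than two plausible or implausible sentences within a set, and roughly balanced levels) can be satisfied by simple rejection sampling. The Python sketch below illustrates the constraints only; it is not the procedure the authors used. The labels "P"/"I", the helper names `runs_ok` and `make_level`, and the reshuffle-until-valid strategy are all hypothetical.

```python
import random


def runs_ok(labels, max_run=2):
    """True if no label repeats more than max_run times in a row."""
    run = 1
    for prev, cur in zip(labels, labels[1:]):
        run = run + 1 if cur == prev else 1
        if run > max_run:
            return False
    return True


def make_level(n_sets, set_size, rng):
    """Hypothetical generator for one span level.

    Builds a balanced pool of plausible ("P") and implausible ("I") labels,
    then reshuffles until every set of set_size items satisfies runs_ok.
    """
    n_items = n_sets * set_size
    pool = ["P"] * (n_items // 2) + ["I"] * (n_items - n_items // 2)
    while True:
        rng.shuffle(pool)
        sets = [pool[i * set_size:(i + 1) * set_size] for i in range(n_sets)]
        if all(runs_ok(s) for s in sets):
            return sets


# One version of the task: three sets at each level of two to six sentences.
rng = random.Random(0)
version = {size: make_level(3, size, rng) for size in range(2, 7)}
```

Three sets at each of the five levels yield 3 × (2 + 3 + 4 + 5 + 6) = 60 sentences, matching the 60 ASL sentences described above. Levels with an odd number of items (e.g., 15 at set size 5) can only be approximately balanced.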

2.2.1.4 Listening span

Parallel to the ASL sign span task, the English listening span task consisted of 30 semantically plausible and 30 semantically implausible sentences (all simple declaratives), presented audiovisually on a computer with sound played through the computer speakers. An adult native female monolingual English speaker recorded the sentences. All sentences were 6–11 words long (mean = 7.8). The mean English log10 word frequency of the final words was 3.3; as in the ASL version of the task, the final words were mostly nouns. Set size increased from two to six sentences, with three sets at each level. Presentation and scoring proceeded in the same way as for the ASL sign span task. Two pseudo-randomized versions of the task were counterbalanced across participants. Six two-sentence sets were used as practice sets with feedback to the participant.

2.2.2 Spatial working memory tasks

2.2.2.1 Corsi block test

The Corsi block test is a visuospatial counterpart to the standard linguistic short-term memory span task (Corsi, 1972; Milner, 1971). The task consists of nine identical blocks (3 × 3 × 3 cm) irregularly positioned on a wooden board (23 × 28 cm). Each block is identified with a number from 1 to 9 that is visible only to the experimenter, who taps sequences at a rate of one block per second. The sequences in this study were selected from Pagulayan, Busch, Medina, Bartok, and Krikorian (2006) and shown on video to ensure a consistent presentation rate. Only the experimenter’s hand, tapping the sequences with the end of a pencil, was visible in the videos. At the end of each sequence, participants reproduced the sequence with their index finger on the Corsi board positioned in front of them.

Each participant began with practice sets of two- and three-block sequences, with four sequences at each level. If the participant made an error on any practice sequence, the experimenter demonstrated the correct sequence on the Corsi board using the eraser end of a pencil. The test sequences increased from four to nine blocks, with five sequences at each level (Pagulayan et al., 2006). Participants who correctly reproduced the first four sequences within a level skipped the fifth sequence and continued with the next level; if the participant missed any of the first four sequences, the fifth sequence was administered. The task was stopped when the participant failed two or more of the five trials at a given level. Corsi block span was defined as the highest level with four correctly reproduced sequences. Participants received partial credit (0.5) for the level at which testing stopped if they correctly reproduced two or three sequences at that level.
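The Corsi administration and scoring rules can be expressed as a small Python sketch. It assumes each administered level is recorded as a list of True/False outcomes: four entries if the first four sequences were all correct (the fifth is skipped), otherwise five. The function names `needs_fifth_trial` and `corsi_span` and the 0.0 floor for failing the first test level are hypothetical, not part of the published procedure.

```python
def needs_fifth_trial(first_four):
    """The fifth sequence is given only if any of the first four was missed."""
    return not all(first_four)


def corsi_span(levels, first_level=4):
    """Score the Corsi block test.

    levels: one list of True/False outcomes per administered level,
    in order, starting at first_level.
    """
    span = 0.0  # assumed floor if the first test level is failed
    for offset, outcomes in enumerate(levels):
        level = first_level + offset
        n_correct = sum(outcomes)
        if n_correct >= 4:
            span = float(level)  # four correct sequences: level passed
            continue
        if n_correct in (2, 3):
            span += 0.5  # partial credit at the level where testing stopped
        break  # two or more failures: testing stops
    return span


# Level 4 perfect (fifth skipped), level 5 passed with 4 of 5,
# level 6 stopped with 3 of 5 correct.
print(corsi_span([[True] * 4,
                  [True, False, True, True, True],
                  [True, False, False, True, True]]))
```

Counting correct sequences rather than failures works because a level administered with five trials is passed exactly when four are correct, i.e., when at most one trial is failed.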

2.2.2.2 Spatial span

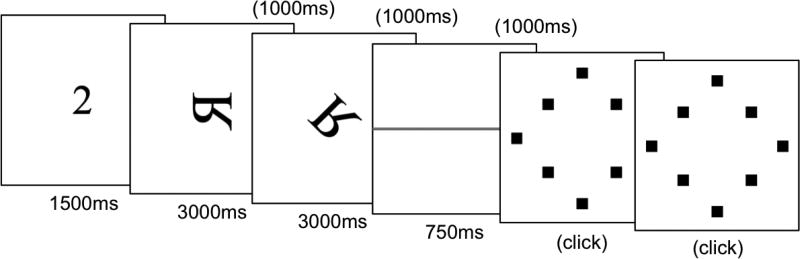

As a measure of spatial working memory capacity, we used the spatial span task developed by Shah and Miyake (1996). On each trial, participants saw a letter on a computer screen, displayed either normally or mirrored and rotated about its center to one of seven orientations in 45-degree increments (the upright orientation was excluded). Each letter was presented for 3,000 ms, and successive letters were separated by 1,000 ms. Participants judged whether each letter was “normal” or “mirrored” using the same foot-response mechanism as in the ASL sign span task (red square on the left for ‘mirrored’ responses, green square on the right for ‘normal’ responses, and the yellow square in between as the neutral start and end position). They also had to remember where the top of the letter was pointing. At the beginning of each trial sequence, a number briefly appeared indicating how many letters the current set contained. Once all the letters in the set had been presented, a horizontal red line on the screen (presented for 750 ms) prompted recall of the orientations of the letters in order of appearance. For the recall phase, a diamond-shaped grid of eight black squares, representing the eight possible orientations of the letters (including upright), was presented on the screen, and participants used the mouse to click on the square corresponding to the direction in which the top of each letter had been pointing. Figure 1 provides a schematic representation of a trial sequence with two letters.

Figure 1.

An example of one trial with a two-letter set in the spatial span task. The number “2” indicates the number of letters in the current set. Each letter is presented in either normal or mirrored form. A screen with a horizontal line signals the location-recall portion of the task, in which participants indicate where the top of each letter was pointing by clicking one of eight boxes on a grid.

Consistent with the language span tasks, participants were presented with a maximum of 15 letter sets (three at each level) ranging from two to six letters. Within a set, the same letter was always used, but letters varied across sets at each level. Five different letters were used in the task (F, J, L, P, and R), yielding 70 possible combinations of letter (5), orientation (7), and presentation (normal or mirrored; 2). The scoring procedure was the same as for the language span tasks. Two pseudo-randomized versions of the task were created that ensured an approximately equal distribution of normal and mirrored letters at each level and that opposing orientations were never presented successively within a set (e.g., “P” rotated 270 degrees followed by “P” rotated 90 degrees). The two versions were counterbalanced across participants. Ten one-letter sets and 15 two-letter sets were used as practice sets with feedback to the participant.
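The stimulus space and the "no successive opposing orientations" constraint can be checked with a few lines of Python. This sketch enumerates the 5 × 7 × 2 = 70 combinations described above and tests whether two orientations differ by 180 degrees. The function names and the `sample_set` helper, which draws a set by rejection sampling without enforcing the normal/mirrored balance, are hypothetical illustrations rather than the authors' randomization procedure.

```python
import random
from itertools import product

LETTERS = ["F", "J", "L", "P", "R"]
ORIENTATIONS = [45 * k for k in range(1, 8)]  # 45..315 degrees; upright (0) excluded
PRESENTATIONS = ["normal", "mirrored"]

# Every letter x orientation x presentation combination.
STIMULI = list(product(LETTERS, ORIENTATIONS, PRESENTATIONS))


def are_opposing(a, b):
    """True if two orientations (in degrees) point in opposite directions."""
    return (a - b) % 360 == 180


def orientation_sequence_ok(orientations):
    """No two successive letters in a set may have opposing orientations."""
    return not any(are_opposing(a, b) for a, b in zip(orientations, orientations[1:]))


def sample_set(letter, size, rng):
    """Hypothetical sampler: redraw orientations until the constraint holds.

    Normal/mirrored presentation is drawn at random here; the balance across
    each level described in the text is not enforced.
    """
    while True:
        orients = [rng.choice(ORIENTATIONS) for _ in range(size)]
        if orientation_sequence_ok(orients):
            return [(letter, o, rng.choice(PRESENTATIONS)) for o in orients]


print(len(STIMULI))                         # 70 combinations
print(orientation_sequence_ok([270, 90]))   # the example from the text is disallowed
```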

2.2.3 Narrative comprehension tasks

2.2.3.1 ASL narrative comprehension

An ASL narrative comprehension task was developed based on the English reading comprehension task used by Daneman and Carpenter (1980). Participants viewed 12 short ASL narratives signed by a deaf female native signer and answered four questions after each narrative. Each narrative described the spatial layout of an environment (e.g., furniture in a room, landmarks in a town) from either a survey perspective (“bird’s eye view”) or a route perspective (i.e., a tour). The ASL descriptions conveyed spatial relationships between landmarks through the placement of signs in signing space. Two of the four questions concerned the spatial locations of landmarks in the narrative (e.g., “What structure is across from the park’s entrance?”), and two concerned non-spatial facts (e.g., “What time does the park open?”). The questions referred to information presented at the beginning, middle, or end of the narrative, balanced across question type. Participants’ responses were videotaped, and there was no time limit for answering the questions. Comprehension was measured as percent correct for the spatial and non-spatial questions.
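With 12 narratives and two questions of each type per narrative, each participant answers 24 spatial and 24 non-spatial questions, and comprehension is the percentage correct within each type. The short Python sketch below shows this computation; the `(question_type, correct)` input format and the function name are hypothetical, and the example responses are invented for illustration, not data from the study.

```python
from collections import Counter


def percent_correct_by_type(responses):
    """responses: iterable of (question_type, correct) pairs, e.g. ("spatial", True)."""
    totals, hits = Counter(), Counter()
    for qtype, correct in responses:
        totals[qtype] += 1
        hits[qtype] += bool(correct)
    return {qtype: 100.0 * hits[qtype] / totals[qtype] for qtype in totals}


# Invented example: 18 of 24 spatial and 20 of 24 non-spatial questions correct.
example = ([("spatial", True)] * 18 + [("spatial", False)] * 6
           + [("non-spatial", True)] * 20 + [("non-spatial", False)] * 4)
print(percent_correct_by_type(example))
```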

2.2.3.2 English narrative comprehension

The 12 spatial narratives and corresponding questions from the ASL comprehension task were translated into English and used as a measure of English language comprehension. An adult female monolingual English speaker recorded the narratives, which were presented audiovisually on a computer through headphones. As with the signers, the speakers’ (vocal) responses were videotaped for later scoring, and comprehension was measured as percent correct for the spatial and non-spatial questions.

Because the ASL and English narratives were translation equivalents, the hearing ASL-English bilinguals were tested with half (six) of the narratives in each language, and narrative language was counterbalanced across participants. The bilinguals responded to questions in ASL for the ASL narratives and in spoken English for the English narratives. The order of ASL and English narratives was also counterbalanced across participants.
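The bilinguals' design crosses two counterbalancing factors (which half of the narratives is presented in ASL, and whether the ASL block comes first), giving four conditions. The Python sketch below enumerates them and rotates participants through them. It is illustrative only: the split into narratives 1–6 and 7–12 and the round-robin assignment are hypothetical, since the text does not say how halves were formed or participants assigned. With 19 bilinguals, a four-way rotation cannot be perfectly balanced.

```python
from itertools import product

NARRATIVES = list(range(1, 13))
# Hypothetical split into two halves of six narratives.
HALVES = (NARRATIVES[:6], NARRATIVES[6:])


def bilingual_conditions():
    """Cross which half is presented in ASL with whether ASL comes first (2 x 2)."""
    conditions = []
    for asl_half, asl_first in product((0, 1), (True, False)):
        blocks = [("ASL", HALVES[asl_half]), ("English", HALVES[1 - asl_half])]
        if not asl_first:
            blocks.reverse()
        conditions.append(blocks)
    return conditions


def condition_for(participant_index):
    """Hypothetical round-robin assignment in order of testing."""
    conditions = bilingual_conditions()
    return conditions[participant_index % len(conditions)]


for block_order in bilingual_conditions():
    print([(language, half[0], half[-1]) for language, half in block_order])
```

Every condition presents all 12 narratives exactly once, and each narrative is presented in ASL in half of the conditions.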

2.3 Procedure

For all tasks, participants received written instructions together with either signed or spoken instructions. All tasks were presented on an iMac desktop computer (2.16 GHz Intel Core 2 Duo, Mac OS X 10.6.8) with a 17-inch (43.2 cm) screen (1680 × 1050 pixel resolution). Psyscope X60 (Cohen, MacWhinney, Flatt, & Provost, 1993) was used to present the ASL and English letter span tasks, the listening span task, the spatial span task, and the Corsi block test; QuickTime Player 7 was used to present the ASL sign span task. Participants’ responses on all tasks were video recorded so that accuracy could be reviewed. The two linguistic memory tasks (sign/listening span and letter span) and the two spatial memory tasks (spatial span and Corsi block test) were administered as paired sets. The order of the spatial and linguistic task pairs, and the order of the two tasks within each pair, varied across participants. For the deaf ASL signers and monolingual English speakers, the narrative task generally followed the memory tasks, although scheduling demands occasionally placed it before or between the memory tasks. To avoid possible carryover of strategies from English to ASL (or vice versa) for the hearing bimodal bilinguals, the spatial memory tasks always separated the linguistic tasks (narrative and memory) in the two languages, and we counterbalanced whether the ASL or the English tasks were presented first. Although most participants completed all tasks on the same day, for practical reasons some completed them across two sessions.

3. Results

Table 1 reports the descriptive statistics for the WM measures and narrative comprehension performance for the deaf ASL signers, monolingual English speakers, and hearing bimodal bilinguals. We first report between-subjects comparisons of the deaf ASL signers and monolingual English speakers on the linguistic STM and WM measures and on the narrative comprehension task. For all analyses, data from the deaf native signers (N = 23) and early signers (N = 12) were combined because the two groups did not differ significantly on any of our measures (all ps > .10). Furthermore, because the deaf signers and monolingual speakers differed significantly in age, age was included as a covariate in the analyses.¹ For the same measures, we separately report within-subjects comparisons of ASL and English for the hearing bimodal bilinguals. Next, we compare the performance of all three groups on the visuospatial STM and WM measures. Finally, we report multiple regression analyses relating scores on the factual and spatial questions in the narrative comprehension task to the WM measures for each language.

Table 1.

Mean spans (and standard deviations) for the linguistic and spatial working memory measures for each group, and mean percent correct (and standard deviations) for the narrative comprehension task.

| Domain | Measure | Deaf ASL signers M (SD) | English speakers M (SD) | Bimodal bilinguals, ASL M (SD) | Bimodal bilinguals, English M (SD) |
|---|---|---|---|---|---|
| Verbal memory | Letter span | 5.0 (0.6) | 5.8 (1.1) | 5.0 (0.5) | 6.1 (1.0) |
| | Language span | 3.2 (1.0) | 3.9 (0.9) | 3.6 (1.0) | 3.3 (1.0) |
| Spatial memory | Corsi block span | 5.4 (1.2) | 5.2 (0.6) | 5.3 (0.7)ᵃ | |
| | Spatial span | 3.0 (1.3) | 3.2 (1.2) | 3.7 (1.3)ᵃ | |
| Narrative comprehension (%) | Factual questions | 77 (12) | 81 (11) | 83 (9) | 81 (13) |
| | Spatial questions | 67 (15) | 69 (14) | 68 (17) | 74 (13) |
| | Total | 72 (12) | 75 (11) | 76 (11) | 77 (12) |

ᵃ The spatial memory tasks are not language-specific; the bilinguals completed each task once, so a single score is reported.

3.1 Group comparisons

3.1.1 Linguistic STM and WM measures

Monolingual English speakers had significantly longer forward letter spans in English than deaf signers had in ASL (F(1,67) = 7.62, p < .01, η2 = .13). After controlling for age, sentence spans did not differ significantly between the deaf signers and the monolingual speakers (F(1,67) = 1.96, p = .17). The within-subjects analyses of the hearing ASL-English bilinguals showed the same pattern: the bilinguals had longer forward letter spans for English than for ASL (t(18) = 4.78, p < .001, d = 1.15), whereas their English and ASL sentence spans did not differ significantly (t(18) = −1.27, p = .22).

3.1.2 Narrative comprehension task

A 2 × 2 ANOVA on the narrative scores with Group (deaf signers, monolingual speakers) as a between-subjects factor, Question type (factual, spatial) as a within-subjects factor, and age as a covariate showed no significant difference between the monolingual English speakers and the deaf ASL signers (F(1,67) = 1.02, p = .32). Both groups scored higher on factual than on spatial questions (F(1,67) = 7.50, p < .01, η2 = .10), and there was no significant Group × Question type interaction (F(1,67) < 1, p = .99).² Similarly, the hearing ASL-English bilinguals’ scores on the ASL and English narratives did not differ significantly (F(1,18) < 1, p = .46). Like the other two groups, the bilinguals scored higher on factual than on spatial questions (F(1,18) = 20.37, p < .001, η2 = .53). The Language modality × Question type interaction did not reach significance (F(1,18) = 3.27, p = .09).
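Assuming the η2 values in this paragraph are partial eta squared (the text does not say so explicitly), each one can be recovered from its F ratio and degrees of freedom as η²p = F·df_effect / (F·df_effect + df_error). The minimal Python sketch below applies this identity to the two Question type effects and reproduces the reported .10 and .53.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared implied by an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


print(round(partial_eta_squared(7.50, 1, 67), 2))   # 0.1  (deaf signers vs. monolinguals)
print(round(partial_eta_squared(20.37, 1, 18), 2))  # 0.53 (bilinguals)
```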

In summary, only the linguistic short-term memory task yielded significant group and modality differences, reflecting larger spans in the spoken than in the signed modality. Importantly, there were no group differences in narrative performance, indicating that the ASL and English narratives were well matched; all groups performed similarly, scoring higher on factual than on spatial questions.

3.1.3 Visuospatial STM and WM measures

For the visuospatial STM and WM measures, we compared the performance of all three participant groups in one-way ANOVAs with Group as a between-subjects factor and age as a covariate. No significant group differences were observed (Spatial span: F(2,85) = 1.97, p = .15; Corsi blocks: F(2,85) = 1.97, p = .15).

3.2 Multiple regression analyses

The R statistical package (R Development Core Team, version 3.2.3) was used to conduct multiple regression analyses modeling the narrative scores on factual and location questions for English and ASL, with scores from the relevant language span task and the spatial span task as predictor variables. Only the scores from the linguistic and spatial complex WM measures (listening/signing span and spatial span) were entered into the models. We excluded the STM measures (letter span and Corsi blocks) because scores on the STM and complex WM measures correlated significantly with each other for both the deaf ASL signers and the monolingual English speakers (.31 < r < .48), suggesting that these measures partly capture the same inter-individual variation in linguistic and spatial working memory. Moreover, several studies have shown that complex linguistic working memory measures are better predictors of language comprehension than short-term memory measures (e.g., Caplan & Waters, 1999; Daneman & Merikle, 1996; Daneman & Hannon, 2007). In addition, including fewer predictors in the regression models increases the statistical power to detect differences between the contributions of linguistic and spatial WM resources to language comprehension in each language. For the English narrative analysis, we pooled the data from the hearing monolingual English speakers and the hearing ASL-English bilinguals; for the ASL narrative analysis, we pooled the data from the deaf ASL signers and the hearing ASL-English bilinguals.³ Because the groups differed in age and years of education, these two variables were also included as predictors. All measures were standardized by converting them to z-scores.
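The regression setup described above (z-scored outcome and predictors, with age, years of education, language span, and spatial span entered together) can be sketched in plain Python as ordinary least squares. This is an illustration under stated assumptions, not the R code used in the study: it solves the normal equations directly, z-scores with the sample standard deviation (n − 1), and the toy scores in the usage example are hypothetical.

```python
from statistics import mean, stdev


def zscore(values):
    """Standardize scores using the sample SD (n - 1 denominator)."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]


def solve(a, b):
    """Solve the linear system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Work on an augmented copy so the caller's lists are left untouched
    aug = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    # Back substitution on the upper-triangular system
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - tail) / aug[r][r]
    return x


def standardized_ols(outcome, predictors):
    """OLS of the z-scored outcome on z-scored predictors plus an intercept.

    `predictors` maps each predictor name to its raw scores (same order as
    `outcome`). Returns the coefficient estimates keyed by predictor name.
    """
    names = list(predictors)
    y = zscore(outcome)
    columns = [zscore(predictors[name]) for name in names]
    # Design matrix rows: [1, z_1, ..., z_k]
    x = [[1.0] + [col[i] for col in columns] for i in range(len(y))]
    k = len(x[0])
    # Normal equations: (X'X) beta = X'y
    xtx = [[sum(row[p] * row[q] for row in x) for q in range(k)] for p in range(k)]
    xty = [sum(row[p] * yi for row, yi in zip(x, y)) for p in range(k)]
    beta = solve(xtx, xty)
    return dict(zip(["(Intercept)"] + names, beta))


# Hypothetical toy scores for ten participants (not data from this study)
scores = standardized_ols(
    outcome=[12, 15, 11, 18, 16, 20, 14, 17, 15, 13],
    predictors={
        "age": [21, 25, 19, 30, 28, 34, 23, 27, 31, 22],
        "education": [14, 16, 13, 18, 16, 20, 15, 14, 17, 16],
        "language_span": [3, 4, 2, 5, 4, 6, 3, 5, 4, 3],
        "spatial_span": [4, 3, 4, 6, 5, 5, 3, 6, 4, 5],
    },
)
for name, estimate in scores.items():
    print(f"{name}: {estimate:.2f}")
```

Because every variable is standardized, each estimate can be read as the expected change in the outcome, in standard deviations, per one-SD change in that predictor with the others held constant. The intercept is then zero up to rounding error.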

3.2.1 English Narratives: Factual Questions

Age (Estimate = 0.41, SE = 0.17, t = 2.49, p < .05) and Listening span scores (Estimate = 0.40, SE = 0.13, t = 3.01, p < .01) contributed significantly to the model of factual questions in English, but years of education (Estimate = −0.27, SE = 0.17, t = −1.64, p = .11) and Spatial span scores (Estimate = 0.16, SE = 0.13, t = 1.24, p = .22) did not. Higher age and higher listening span scores, but not spatial span scores, predicted higher scores on factual questions in the English narratives. The overall model accounted for 21% of the variance in performance on the factual questions (F(4,49) = 4.57, p < .01, adjusted R² = 0.21).
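Because each model has four predictors and 49 residual degrees of freedom, the pooled sample is n = 4 + 49 + 1 = 54, and the overall F statistic and the adjusted R² describe the same fit through F = (R²/k) / ((1 − R²)/(n − k − 1)). A minimal sketch that inverts this relation (the function names are ours, for illustration only):

```python
def r_squared_from_f(f_value: float, df_model: int, df_resid: int) -> float:
    """Unadjusted R^2 implied by an overall regression F test."""
    ratio = f_value * df_model / df_resid  # equals R^2 / (1 - R^2)
    return ratio / (1 + ratio)


def adjusted_r_squared(r2: float, n_obs: int, n_predictors: int) -> float:
    """Adjusted R^2 = 1 - (1 - R^2) * (n - 1) / (n - k - 1)."""
    return 1 - (1 - r2) * (n_obs - 1) / (n_obs - n_predictors - 1)


# English factual-question model: F(4, 49) = 4.57 with k = 4 predictors, n = 54
r2 = r_squared_from_f(4.57, 4, 49)
print(round(r2, 2), round(adjusted_r_squared(r2, 54, 4), 2))  # 0.27 0.21
```

Applied to the two spatial-question models, F(4,49) = 6.31 and F(4,49) = 4.16 give adjusted R² of about .29 and .19, in line with the values reported for those models.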

3.2.2 English Narratives: Spatial Questions

Age (Estimate = 0.42, SE = 0.16, t = 2.67, p < .05), Listening span scores (Estimate = 0.29, SE = 0.12, t = 2.29, p < .05), and Spatial span scores (Estimate = 0.40, SE = 0.143, t = 3.12, p < .01) each contributed significantly to the model of scores on spatial questions, and years of education contributed marginally (Estimate = −0.31, SE = 0.16, t = −1.96, p = .06). Higher age, higher listening span scores, and higher spatial span scores all predicted higher scores on spatial questions in the English narratives. The overall model accounted for 29% of the variance in performance on the location questions (F(4,49) = 6.31, p < .01, adjusted R² = 0.29).

3.2.3 ASL Narratives: Factual Questions

The overall model of factual questions in ASL did not reach significance (F(4,49) < 1, p = .46), and none of the predictor variables in the model approached significance (all ps > .30).

3.2.4 ASL Narratives: Spatial Questions

Spatial span scores (Estimate = 0.40, SE = 0.143, t = 3.12, p < .01), but not Language span scores (Estimate = 0.12, SE = 0.13, t < 1, p = .36), contributed significantly to the model of scores on spatial questions, with higher spatial span scores predicting higher scores on spatial questions in the ASL narratives. Neither age (Estimate = 0.09, SE = 0.14, t < 1, p = .55) nor years of education (Estimate = 0.14, SE = 0.11, t = 1.22, p = .23) contributed significantly to the model. The overall model accounted for 19% of the variance in performance on the spatial questions (F(4,49) = 4.16, p < .01, adjusted R² = 0.19).

In summary, the multiple regression analyses showed that for English, language span scores, but not spatial span scores, predicted the retrieval of factual information, whereas both language span and spatial span scores predicted the retrieval of spatial information. In contrast, for ASL, neither language span nor spatial span scores clearly predicted the retrieval of factual information, and only spatial span scores predicted the retrieval of spatial information in the ASL narratives.⁴

4. Discussion

This is the first study to examine STM and WM spans for speakers and signers in both the linguistic and spatial domains, and the first (to our knowledge) to investigate whether linguistic and/or spatial WM spans are correlated with an objective measure of sign language comprehension (but see Holmer et al., 2016, for results from children). Results from the linguistic STM span measures (ASL and English letter span tasks) revealed the expected advantage for spoken over signed language, replicating several previous studies (e.g., Bavelier et al., 2006; Bavelier, Newport et al., 2008; Boutla et al., 2004). However, this modality advantage did not extend to a complex WM span task with a serial recall component. Only a few previous studies comparing WM in deaf signers and hearing speakers have used complex linguistic WM span tasks (Alamargot, Lambert, Thebault, & Dansac, 2007; Andin et al., 2013; Boutla et al., 2004; Marschark et al., 2016). Alamargot et al. (2007) and Boutla et al. (2004) reported similar WM spans for deaf signers and hearing speakers; however, neither study used span tasks that required serial recall. Instead, both used production spans in which participants freely recalled words or signs and used them in self-generated sentences. In contrast, Marschark et al. (2016) recently reported larger WM spans for hearing signers and non-signers than for deaf signers on two complex WM tasks (reading span and operation span) with written verbal stimuli. However, the poorer performance of the deaf signers could be due, at least in part, to the use of written English materials, which may have increased task demands for the deaf participants. In line with our results, Andin et al. (2013) found no difference between Swedish or British deaf signers and matched hearing non-signers on an operation span task that required serial recall of visually presented digits. Importantly, none of these previous studies involved the serial recall of verbal signed stimuli in a WM task, as tested in the current study. The present results therefore provide further evidence that the often-reported advantage for speakers on linguistic STM tasks that require serial recall (i.e., digit, letter, or word spans) may not extend to complex WM span tasks that require both storage and processing of linguistic stimuli.
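The contrast drawn above between serial recall and free recall can be made concrete with two simple scoring rules. The sketch below is hypothetical: the function names and the proportion-correct rules are ours for illustration, are not the scoring procedures of the cited studies or of the present span tasks, and assume that the presented items are distinct.

```python
def serial_recall_score(presented, recalled):
    """Proportion of items reported in their original serial position."""
    hits = sum(
        1
        for position, item in enumerate(presented)
        if position < len(recalled) and recalled[position] == item
    )
    return hits / len(presented)


def free_recall_score(presented, recalled):
    """Proportion of presented items reported in any order (assumes distinct items)."""
    return len(set(presented) & set(recalled)) / len(presented)


trial = ["K", "R", "F", "M", "Q"]
response = ["K", "F", "R", "M", "Q"]  # same items, two middle items transposed
print(serial_recall_score(trial, response))  # 0.6
print(free_recall_score(trial, response))    # 1.0
```

A single transposition costs two items under serial scoring but nothing under free scoring, which illustrates why span tasks with and without a serial recall requirement need not yield the same group differences.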

Consistent with our results for the deaf ASL signers and hearing English monolinguals, the within-subject comparison of the language span tasks in the hearing bimodal bilinguals revealed no significant difference between WM spans for English and ASL, but a significant difference between STM (letter) spans for the two languages. The latter result replicates previous STM findings with hearing ASL-English bilinguals (Boutla et al., 2004; Hall & Bavelier, 2011). In addition, the finding of equal WM spans for ASL and English replicates the results of Wang (2013), who found no difference between English listening spans and Auslan spans in hearing interpreters (using a very similar WM span task).

Further, Wang and Napier (2013) found that hearing Auslan signers outperformed deaf Auslan signers on the Auslan WM task. These authors suggested that hearing signers may be more likely than deaf signers to use English subvocal rehearsal, which could facilitate serial recall (see Hall & Bavelier, 2011). Rudner et al. (2016) also suggest that hearing signers may make strategic use of their speech-based representations for mnemonic purposes. However, the pattern of findings from our study argues against this interpretation, because the hearing bilinguals showed an advantage for English over ASL on the STM task. It seems unlikely that the bilinguals would use a speech-based rehearsal strategy for the language span task (thus eliminating a potential difference between ASL and English) but not for the letter span task.

Contrary to our expectations, we found no evidence of an advantage for deaf or hearing signers on either the Corsi block test or the spatial span task. For the spatial span task, this finding suggests that possible differences between deaf and hearing readers in encoding letter stimuli (see Rudner et al., 2013) did not influence performance. For the Corsi blocks test, Geraci et al. (2008) previously found better performance for adult deaf signers than for hearing speakers, and Wilson et al. (1997) found that 8- to 11-year-old deaf children also outperformed their hearing peers on this test. In contrast, Logan, Mayberry, and Fletcher (1996), Koo et al. (2008), and Marschark et al. (2015) all reported similar Corsi block spans for deaf and hearing adults. Furthermore, Keehner and Gathercole (2007) found an advantage for hearing signers over non-signers only on an adaptation of the Corsi block test that required 180-degree mental rotation, simulating spatial relations in signed discourse, and not on the standard version (no rotation required). We suggest that these mixed findings regarding sign-based advantages in spatial serial recall tasks may partly reflect the different versions of the Corsi block test used across studies (cf. Busch, Farrell, Lisdahl-Medina, & Krikorian, 2005). For example, the configuration of certain tapping paths may benefit signers over non-signers. Differences in task administration may also contribute to the variation in results (e.g., video vs. live presentation; tapping with the end of a pencil vs. with a finger). Finally, the widely differing signing populations that have been tested (children and adults, hearing as well as deaf signers, and native as well as non-native signers) may further contribute to the mixed results.

The primary goal of this study was to determine the relation between linguistic and spatial WM resources and language processing in different modalities. Specifically, we used multiple regression models to examine the relation between linguistic and spatial WM measures and comprehension accuracy for spatial and non-spatial information in spoken or signed narratives. For English, linguistic WM, but not spatial WM, predicted retention of non-spatial information expressed within a narrative (e.g., descriptive facts about landmarks), and both linguistic and spatial WM predicted retention of spatial information (e.g., the relative location of landmarks within an environment). This result is consistent with the finding of Pazzaglia et al. (2007) that a concurrent verbal task impaired hearing readers' encoding of both spatial and non-spatial texts. It also provides further evidence for an association between spatial WM resources and the comprehension of spatial language, whether the information is presented in written or spoken form.

In contrast, for ASL, spatial WM predicted retention of spatial but not factual (non-spatial) information, and, somewhat surprisingly, linguistic WM did not predict retention of either spatial or non-spatial information. These results are in line with a recent study by Marschark et al. (2015), who found that performance on the Corsi blocks task correlated with receptive sign language scores for deaf signers without cochlear implants, and they suggest that both signers and speakers draw on non-linguistic, spatial WM resources when processing spatial information in narratives. Another recent study from the same group found no significant correlations between deaf and hearing signers' performance on two linguistic complex WM span tasks and their self-rated expressive and receptive sign language abilities (Marschark et al., 2016). The lack of a correlation between linguistic WM span and ASL comprehension suggests that sign language comprehension may rely less on serial order encoding than spoken language comprehension does. That is, serial order mechanisms may play a more limited role in encoding and retrieving information from signed than from spoken narratives.

However, the percentage of variance explained in the analyses of the ASL narrative task was relatively low, so these null results should be interpreted with caution. Although the results are based on a relatively large number of participants (N = 54), the sample included native and early deaf signers as well as hearing signers, which may have introduced additional inter-individual variation in our measures. Even though the groups of signers did not differ significantly on any of the measures, we cannot rule out the possibility that a more homogeneous sample of signers would yield different results.

Overall, these findings challenge strong assumptions of independent domain-specific subsystems for the storage and processing of linguistic and spatial information (Baddeley, 1986, 2007; Logie, 1995; Shah & Miyake, 1996). Rather, the results are more consistent with models that characterize WM as a domain-general pool of resources with modality-independent capacity limits (e.g., Barrouillet et al., 2004; Just & Carpenter, 1992; Saults & Cowan, 2007). Our results are also in line with recent proposals that assign a more important role to the binding of multidimensional features in an episodic buffer during WM tasks (e.g., Baddeley, 2000; Hall & Bavelier, 2010; Rudner & Rönnberg, 2008b). According to this view, whereas speakers rely strongly on the phonological loop for the storage and recall of linguistic information in WM tasks, signers instead rely on integrated multidimensional memory representations in the episodic buffer that include phonological information but also, for instance, semantic and spatial information (e.g., Hirshorn et al., 2012; Rudner et al., 2009, 2010, 2013).

In summary, our findings suggest that linguistic processing and spatial processing do not rely on fundamentally distinct resource pools. Furthermore, we show that differences in language modality affect the encoding of linguistic information in working memory. Signers appear to rely less strongly on serial encoding during language processing than hearing speakers do, and instead engage spatial WM resources to keep linguistic representations active.

Highlights.

Serial recall advantage for speakers is limited to linguistic short-term memory tasks

Signers rely less strongly on serial encoding in language processing than speakers

Speakers and signers engage spatial WM resources when processing spatial language

Signers do not show advantages in visual-spatial WM regardless of hearing status

Acknowledgments

This research was supported by National Institutes of Health grants DC010997 and HD047736 to Karen Emmorey and San Diego State University. We thank our research participants, Tanya Denmark for help with the development of the sign span task, and Allison Bassett and Kristen Secora for help with the study.

Footnotes

1. Because age and years of education correlated significantly with each other within each group (rs > .40), only age was included as a covariate.

2. This pattern of results was not driven by the performance of the early signers; the pattern holds when only native signers are included in the analysis.

3. Analyzing the groups separately yielded a similar pattern, but the multiple regression models for the hearing bimodal bilinguals generally did not reach significance, most likely due to a lack of power (N = 19).

4. The observed null results were not driven by the performance of the early signers and hold when only native signers are included in the analysis.

Portions of this manuscript have been presented at the 11th Theoretical Issues in Sign Language Research Conference, London, UK, July 10–13, 2013.

References

- Alamargot D, Lambert E, Thebault C, Dansac C. Text composition by deaf and hearing middle-school students: The role of working memory. Reading and Writing: An Interdisciplinary Journal. 2007;20(4):333–360.

- Andin J, Orfanidou E, Cardin V, Holmer E, Capek CM, Woll B, Rudner M. Similar digit-based working memory in deaf signers and hearing non-signers despite digit span differences. Frontiers in Psychology. 2013;4:942. doi: 10.3389/fpsyg.2013.00942.

- Baddeley AD. Working memory. Oxford, NY: Clarendon Press; 1986.

- Baddeley AD. The episodic buffer: a new component of working memory? Trends in Cognitive Sciences. 2000;4(11):417–423. doi: 10.1016/s1364-6613(00)01538-2.

- Baddeley AD. Working memory, thought and action. Oxford, UK: Oxford University Press; 2007.

- Baddeley AD. Working memory: Theories, models, and controversies. Annual Review of Psychology. 2012;63:1–29. doi: 10.1146/annurev-psych-120710-100422.

- Baddeley AD, Hitch G. Working memory. The Psychology of Learning and Motivation. 1974;8:47–89.

- Baddeley AD, Logie RH. Working memory: The multiple component model. In: Miyake A, Shah P, editors. Models of working memory: Mechanisms of active maintenance and executive control. New York, NY: Cambridge University Press; 1999. pp. 28–61.

- Barrouillet P, Bernardin S, Camos V. Time constraints and resource sharing in adults' working memory spans. Journal of Experimental Psychology: General. 2004;133(1):83–100. doi: 10.1037/0096-3445.133.1.83.

- Barrouillet P, Vergauwe E, Bernardin S, Portrat S, Camos V. Time and cognitive load in working memory. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2007;33(3):570–585. doi: 10.1037/0278-7393.33.3.570.

- Bavelier D, Newman AJ, Mukherjee M, Hauser P, Kemeny S, Braun A, Boutla M. Encoding, rehearsal, and recall in signers and speakers: Shared network but differential engagement. Cerebral Cortex. 2008;18(10):2263–2274. doi: 10.1093/cercor/bhm248.

- Bavelier D, Newport EL, Hall ML, Supalla T, Boutla M. Persistent difference in short-term memory span between sign and speech: Implications for cross-linguistic comparisons. Psychological Science. 2006;17(12):1090–1092. doi: 10.1111/j.1467-9280.2006.01831.x.

- Bavelier D, Newport EL, Hall ML, Supalla T, Boutla M. Ordered short-term memory differs in signers and speakers: implications for models of short-term memory. Cognition. 2008;107(2):433–459. doi: 10.1016/j.cognition.2007.10.012.

- Boutla M, Supalla T, Newport EL, Bavelier D. Short-term memory span: Insights from sign language. Nature Neuroscience. 2004;7:997–1002. doi: 10.1038/nn1298.

- Brysbaert M, New B. Moving beyond Kučera and Francis: A critical evaluation of current word frequency norms and the introduction of a new and improved word frequency measure for American English. Behavior Research Methods. 2009;41(4):977–990. doi: 10.3758/BRM.41.4.977.

- Buchsbaum B, Pickell B, Love T, Hatrak M, Bellugi U, Hickok G. Neural substrates for verbal working memory in deaf signers: fMRI study and lesion case report. Brain and Language. 2005;95(2):265–272. doi: 10.1016/j.bandl.2005.01.009.

- Busch RM, Farrell K, Lisdahl-Medina K, Krikorian R. Corsi block-tapping task performance as a function of path configuration. Journal of Clinical and Experimental Neuropsychology. 2005;27(1):127–134. doi: 10.1080/138033990513681.

- Caplan D, Waters GS. Verbal working memory and sentence comprehension. Behavioral and Brain Sciences. 1999;22(1):77–126. doi: 10.1017/s0140525x99001788.

- Carreiras M. Sign language processing. Language and Linguistics Compass. 2010;4(7):430–444.

- Cocchini G, Logie RH, Della Sala S, MacPherson SE, Baddeley AD. Concurrent performance of two memory tasks: Evidence for domain-specific working memory systems. Memory and Cognition. 2002;30(7):1086–1095. doi: 10.3758/bf03194326.

- Cohen JD, MacWhinney B, Flatt M, Provost J. PsyScope: a new graphic interactive environment for designing psychology experiments. Behavior Research Methods, Instruments, & Computers. 1993;25(2):257–271.

- Corsi PM. Human memory and the medial temporal region of the brain. Dissertation Abstracts International. 1972;34(2):891B.

- Cowan N. The magical number 4 in short-term memory: A reconsideration of mental storage capacity. Behavioral and Brain Sciences. 2001;24(1):87–114. doi: 10.1017/s0140525x01003922.

- Cowan N. Working memory capacity. New York, NY: Psychology Press; 2005.

- Cowan N, Saults JS, Blume CL. Central and peripheral components of working memory storage. Journal of Experimental Psychology: General. 2014;143(5):1806–1836. doi: 10.1037/a0036814.

- Daneman M, Carpenter P. Individual differences in working memory and reading. Journal of Verbal Learning and Verbal Behavior. 1980;19(4):450–466.

- Daneman M, Hannon B. What do working memory span tasks like reading span really measure? In: Osaka N, Logie RH, D'Esposito M, editors. The cognitive neuroscience of working memory. Oxford, NY: Oxford University Press; 2007. pp. 21–42.

- Daneman M, Merikle PM. Working memory and language comprehension: A meta-analysis. Psychonomic Bulletin & Review. 1996;3(4):422–433. doi: 10.3758/BF03214546.

- De Beni R, Pazzaglia F, Gyselinck V, Meneghetti C. Visuospatial working memory and mental representation of spatial descriptions. European Journal of Cognitive Psychology. 2005;17(1):77–95.

- Emmorey K. The psycholinguistics of signed and spoken languages: how biology affects processing. In: Gaskell G, editor. Oxford handbook of psycholinguistics. Oxford, NY: Oxford University Press; 2007. pp. 703–721.

- Emmorey K, Klima E, Hickok G. Mental rotation within linguistic and nonlinguistic domains in users of American Sign Language. Cognition. 1998;68(3):221–246. doi: 10.1016/s0010-0277(98)00054-7.

- Emmorey K, Kosslyn S, Bellugi U. Visual imagery and visual-spatial language: Enhanced imagery abilities in deaf and hearing ASL signers. Cognition. 1993;46(2):139–181. doi: 10.1016/0010-0277(93)90017-p.

- Fougnie D, Zughni S, Godwin D, Marois R. Working memory storage is intrinsically domain-specific. Journal of Experimental Psychology: General. 2015;144(1):30–47. doi: 10.1037/a0038211.

- Friedman NP, Miyake A. Differential roles for visuospatial and verbal working memory in situation model construction. Journal of Experimental Psychology: General. 2000;129(1):61–83. doi: 10.1037//0096-3445.129.1.61.

- Geraci C, Gozzi M, Papagno C, Cecchetto C. How grammar can cope with limited short-term memory: Simultaneity and seriality in sign languages. Cognition. 2008;106(2):780–804. doi: 10.1016/j.cognition.2007.04.014.

- Hall ML, Bavelier D. Working memory, deafness, and sign language. In: Marschark M, Spencer P, editors. The Oxford handbook of deaf studies, language, and education. Vol. 2. Oxford University Press; 2010. pp. 458–472.

- Hall ML, Bavelier D. Short-term memory stages in sign vs. speech: The source of the serial span discrepancy. Cognition. 2011;120(1):54–66. doi: 10.1016/j.cognition.2011.02.014.

- Handley SJ, Capon A, Copp C, Harper C. Conditional reasoning and the Tower of Hanoi: The role of spatial and verbal working memory. British Journal of Psychology. 2002;93(4):501–518. doi: 10.1348/000712602761381376.

- Hirshorn EA, Fernandez NM, Bavelier D. Routes to short-term memory indexing: Lessons from deaf native users of American Sign Language. Cognitive Neuropsychology. 2012;29(1–2):85–103. doi: 10.1080/02643294.2012.704354.

- Holmer E, Heimann M, Rudner M. Theory of mind and reading comprehension in deaf and hard-of-hearing signing children. Frontiers in Psychology. 2016;7:854. doi: 10.3389/fpsyg.2016.00854.

- Just MA, Carpenter PA. A capacity theory of comprehension: Individual differences in working memory. Psychological Review. 1992;99(1):122–149. doi: 10.1037/0033-295x.99.1.122.

- Keehner M, Gathercole SE. Cognitive adaptations arising from nonnative experience of sign language in hearing adults. Memory and Cognition. 2007;35(4):752–761. doi: 10.3758/bf03193312.

- King J, Just MA. Individual differences in syntactic processing: The role of working memory. Journal of Memory and Language. 1991;30(5):580–602.

- Koo D, Crain K, LaSasso C, Eden GF. Phonological awareness and short-term memory in hearing and deaf individuals of different communication backgrounds. Annals of the New York Academy of Sciences. 2008;1145(1):83–99. doi: 10.1196/annals.1416.025. [DOI] [PubMed] [Google Scholar]

- Logan K, Mayberry M, Fletcher J. The short-term memory of profoundly deaf people for words, signs, and abstract spatial stimuli. Applied Cognitive Psychology. 1996;10(2):105–119. [Google Scholar]

- Logie RH. Visuo-spatial working memory. Hove, UK: Lawrence Erlbaum Associates; 1995. [Google Scholar]

- Logie RH. The functional organization and capacity limits of working memory. Current Directions in Psychological Science. 2011;20(4):240–245. [Google Scholar]

- Marschark M, Spencer L, Durkin A, Borgna G, Convertino C, Machmer E, Trani A. Understanding language, hearing status, and visual-spatial skills. Journal of Deaf Studies and Deaf Education. 2015;20:310–330. doi: 10.1093/deafed/env025. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Marschark M, Sarchet T, Trani A. Effects of hearing status and sign language use on working memory. Journal of Deaf Studies and Deaf Education. 2016;21(2):148–155. doi: 10.1093/deafed/env070. http://doi.org/10.1093/deafed/env070. [DOI] [PMC free article] [PubMed] [Google Scholar]

- McKee D. An analysis of specialized cognitive functions in deaf and hearing signers. University of Pittsburgh; Pittsburgh, PA: 1987. [Google Scholar]

- Meneghetti C, De Beni R, Pazzaglia F, Gyselinck V. The role of visuo-spatial abilities in recall of spatial descriptions: A mediation model. Learning and Individual Differences. 2011;21(6):719–723. [Google Scholar]

- Meneghetti C, Gyselinck V, Pazzaglia F, De Beni R. Individual differences in spatial text processing: High spatial ability can compensate for spatial working memory interference. Learning and Individual Differences. 2009;19(4):577–589. [Google Scholar]

- Milner B. Interhemispheric differences in the localization of psychological processes in man. British Medical Bulletin. 1971;27(3):272–277. doi: 10.1093/oxfordjournals.bmb.a070866. [DOI] [PubMed] [Google Scholar]

- Morey CC, Morey RD, Van der Reijden M, Holweg M. Asymmetric cross-domain interference between two working memory tasks: Implications for models of working memory. Journal of Memory and Language. 2013;69:324–348. http://doi.org/10.1016/j.jml.2013.04.004. [Google Scholar]

- Oberauer K. Design for a working memory. Psychology of Learning and Motivation. 2009;51:45–100. http://doi.org/10.1016/S0079-7421(09)51002-X. [Google Scholar]

- Oberauer K, Farrell S, Jarrold C, Lewandowsky S. What limits working memory capacity? Psychological Bulletin. 2016;142(7):758–799. doi: 10.1037/bul0000046. http://doi.org/10.1037/bul0000046. [DOI] [PubMed] [Google Scholar]

- Pa J, Wilson SM, Pickell H, Bellugi U, Hickok G. Neural organization of linguistic short-term memory is sensory modality-dependent: Evidence from signed and spoken language. Journal of Cognitive Neuroscience. 2008;20(12):2198–2210. doi: 10.1162/jocn.2008.20154. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pagulayan FK, Busch RM, Medina KL, Bartok JA, Krikorian R. Developmental normative data for the Corsi block-tapping task. Journal of Clinical and Experimental Neuropsychology. 2006;28(6):1043–1052. doi: 10.1080/13803390500350977. [DOI] [PubMed] [Google Scholar]

- Pazzaglia F, Cornoldi C. The role of distinct components of visuo-spatial working memory in the processing of texts. Memory. 1999;7(1):19–41. doi: 10.1080/741943715. [DOI] [PubMed] [Google Scholar]

- Pazzaglia F, De Beni R, Meneghetti C. The effects of verbal and spatial interference in the encoding and retrieval of spatial and nonspatial texts. Psychological Research. 2007;71(4):484–494. doi: 10.1007/s00426-006-0045-7. [DOI] [PubMed] [Google Scholar]

- R core development team. R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing; 2011. Retrieved from http://www.r-project.org. [Google Scholar]

- Ricker TJ, Cowan N, Morey CC. Visual working memory is disrupted by covert verbal retrieval. Psychonomic Bulletin & Review. 2010;17(4):516–521. doi: 10.3758/PBR.17.4.516. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Romero Lauro LJ, Crespi M, Papagno C, Cecchetto C. Making sense of an unexpected detrimental effect of sign language use in a visual task. Journal of Deaf Studies and Deaf Education. 2014;19(3):358–365. doi: 10.1093/deafed/enu001. [DOI] [PubMed] [Google Scholar]

- Rönnberg J, Rudner M, Ingvar M. Neural correlates of working memory for sign language. Cognitive Brain Research. 2004;20(2):165–182. doi: 10.1016/j.cogbrainres.2004.03.002. [DOI] [PubMed] [Google Scholar]

- Rudner M, Andin J, Rönnberg J. Working memory, deafness and sign language. Scandinavian Journal of Psychology. 2009;50(5):495–505. doi: 10.1111/j.1467-9450.2009.00744.x. [DOI] [PubMed] [Google Scholar]

- Rudner M, Davidsson L, Rönnberg J. Effects of age on the temporal organization of working memory in deaf signers. Aging, Neuropsychology, and Cognition. 2010;17(3):360–383. doi: 10.1080/13825580903311832. [DOI] [PubMed] [Google Scholar]

- Rudner M, Fransson P, Ingvar M, Nyberg L, Rönnberg J. Neural representation of binding lexical signs and words in the episodic buffer of working memory. Neuropsychologia. 2007;45(10):2258–2276. doi: 10.1016/j.neuropsychologia.2007.02.017.

- Rudner M, Karlsson T, Gunnarsson J, Rönnberg J. Levels of processing and language modality specificity in working memory. Neuropsychologia. 2013;51(4):656–666. doi: 10.1016/j.neuropsychologia.2012.12.011.

- Rudner M, Orfanidou E, Cardin V, Capek C, Woll B, Rönnberg J. Pre-existing semantic representation improves working memory performance in the visual spatial domain. Memory & Cognition. 2016;44:608–620. doi: 10.3758/s13421-016-0585-z.

- Rudner M, Rönnberg J. Explicit processing demands reveal language modality-specific organization of working memory. Journal of Deaf Studies and Deaf Education. 2008a;13(4):466–484. doi: 10.1093/deafed/enn005.

- Rudner M, Rönnberg J. The role of the episodic buffer in working memory for language processing. Cognitive Processing. 2008b;9(1):19–28. doi: 10.1007/s10339-007-0183-x.

- Saults JS, Cowan N. A central capacity limit to the simultaneous storage of visual and auditory arrays in working memory. Journal of Experimental Psychology: General. 2007;136(4):663–684. doi: 10.1037/0096-3445.136.4.663.

- Shah P, Miyake A. The separability of working memory resources for spatial thinking and language processing: An individual differences approach. Journal of Experimental Psychology: General. 1996;125(1):4–27. doi: 10.1037/0096-3445.125.1.4.

- Turner ML, Engle RW. Is working memory capacity task dependent? Journal of Memory and Language. 1989;28(2):127–154.

- Vergauwe E, Barrouillet P, Camos V. Do mental processes share a domain-general resource? Psychological Science. 2010;21(3):384–390. doi: 10.1177/0956797610361340.

- Wang J. Bilingual working memory capacity of professional Auslan/English interpreters. Interpreting. 2013;15(2):139–167.

- Wang J, Napier J. Signed language working memory capacity of signed language interpreters and deaf signers. Journal of Deaf Studies and Deaf Education. 2013;18:271–286. doi: 10.1093/deafed/ens068.

- Waters GS, Caplan D. Processing resource capacity and the comprehension of garden path sentences. Memory & Cognition. 1996;24(3):342–355. doi: 10.3758/bf03213298.

- Wechsler D. Wechsler adult intelligence scale (WAIS). New York, NY: PsychCorp; 1955.

- Wilson M, Bettger J, Niculae I, Klima E. Modality of language shapes working memory. Journal of Deaf Studies and Deaf Education. 1997;2(3):150–160. doi: 10.1093/oxfordjournals.deafed.a014321.

- Wilson M, Emmorey K. A visuospatial "phonological loop" in working memory: Evidence from American Sign Language. Memory & Cognition. 1997;25(3):313–320. doi: 10.3758/bf03211287.

- Wilson M, Emmorey K. A "word length effect" for sign language: Further evidence for the role of language in structuring working memory. Memory & Cognition. 1998;26(3):584–590. doi: 10.3758/bf03201164.

- Wilson M, Emmorey K. The effect of irrelevant visual input on working memory for sign language. Journal of Deaf Studies and Deaf Education. 2003;8(2):97–103. doi: 10.1093/deafed/eng010.

- Wilson M, Emmorey K. Comparing sign language and speech reveals a universal limit on short-term memory capacity. Psychological Science. 2006a;17(8):682–683. doi: 10.1111/j.1467-9280.2006.01766.x.

- Wilson M, Emmorey K. No difference in short-term memory span between sign and speech. Psychological Science. 2006b;17(12):1093–1094. doi: 10.1111/j.1467-9280.2006.01835.x.