Abstract

Laboratory data are critical to analyzing and improving clinical quality. In the setting of residual use of creatine kinase M and B isoenzyme testing for myocardial infarction, we assessed disease outcomes of discordant creatine kinase M and B isoenzyme (+)/troponin I (−) test pairs to address anticipated clinician concerns about a potential loss of case-finding sensitivity following the proposed discontinuation of routine creatine kinase and creatine kinase M and B isoenzyme testing. Time-sequenced interventions were introduced. The main outcome was the percentage of cardiac marker studies performed within guidelines. Nonguideline orders dominated at baseline. Creatine kinase M and B isoenzyme testing in 7496 order sets failed to detect additional myocardial infarctions but was associated with 42 potentially preventable admissions per quarter. Interruptive computerized soft stops improved guideline compliance from 32.3% to 58% (P < .001) in services not receiving peer leader intervention and to >80% (P < .001) with peer leadership that featured dashboard feedback about test order performance. This successful experience was recapitulated in interrupted time series in 2 additional services at facility 1 and then in 2 external hospitals (including a critical access facility). Improvements have been sustained postintervention. Laboratory cost savings at the academic facility were estimated to be ≥US$635 000 per year. National collaborative data indicate that facility 1 improved its order patterns from the fourth to the first quartile compared to peer norms and imply that nonguideline orders persist elsewhere. This example illustrates how pathologists can provide leadership in helping clinicians change laboratory ordering practices. We found that clinicians respond to local laboratory data about their own test performance and that evidence suggesting harm is more compelling to clinicians than evidence of cost savings. 
Our experience indicates that interventions developed at an academic facility can be readily instituted by private practitioners at external facilities. The intervention data also add to existing literature showing that electronic order interruptions are more successful when combined with peer education and dashboard feedback about laboratory order performance. The findings may have implications for the role of the pathology laboratory in the ongoing pivot from quantity-based to value-based health care.

Keywords: creatine kinase MB form, decision support techniques, interrupted time series analysis, myocardial infarction, pathologists, patient safety, quality improvement

Introduction

The clinical laboratory and its data play a critical role in quality improvement, including the detection, discussion, and deterrence of low-value services. “Low-value” services are described as services that fail to improve care, waste resources, and may cause harm.1,2 Persistence of low-value clinical orders can stem from unfamiliarity with best practices such as established clinical guidelines,3 from the complexity of implementing change,3 from perceived patient preferences,4 and from awareness of guideline limitations or of limits to their local applicability.5,6

In seeking to eliminate low-value laboratory services, pathologists should recognize that clinician perceptions of change can be affected by several human cognitive biases in addition to expressed concerns about patient well-being. For example, “optimism bias” assumes that current activities are rational; “confirmation bias” selectively filters out information contrary to preconceived beliefs; and “loss aversion (status quo)” bias defends established routines.7,8 “Commission bias” is the normal tendency to perceive that poor outcomes are more likely to originate from inaction (in this case, not ordering a redundant test) than from action.1 However, even when these normal human biases contribute undesirable momentum to low-value services, clinicians generally articulate resistance to change in the language of patient needs and patient care. Given these visible and less visible barriers to improving clinical value, an established research need is identifying best practices for influencing clinicians to abandon low-value orders.7,9 Despite a current national focus on this need,7,10–13 empirical studies of clinicians abandoning low-value tests are “few and far between.”9

Clinical teams featuring pathologists are positioned to provide timely data and to address literature gaps concerning how best to achieve changes leading to high-value services. The diagnosis of myocardial infarction (MI) is common and clinically important, and it features a defined clinical laboratory order set. Since 2007, the consensus laboratory standard has been sensitive cardiac troponin T or troponin I (cTnI). Compared to previous tests, cTnI tests have superior sensitivity and specificity for detecting myocardial injury.14–18 Creatine kinase M and B isoenzyme (CKMB) testing does have occasional uses and may be needed to diagnose uncommon conditions.19–21 We therefore readily agreed to retain these tests in our laboratory compendium. Nevertheless, the legitimate need to retain the test did not explain why routine CKMB orders persisted well past 2007 in our region and beyond.22–24 We also planned to determine whether there was a need to intervene without eliminating clinician-desired access to CKMB orders. One emergency medicine (EM) department evaluated discrepant cases, found no value in the CKMB, and then successfully removed CKMB testing from the emergency department (ED) menu, with attendant cost savings.23 A community hospital has also shown that a suite of unneeded MI tests, including CKMB and myoglobin, can be eliminated from the routine evaluation of MI, with substantial cost savings.24 However, the individual contributions of education and of changes to computerized provider order entry (CPOE) could not be analyzed in that successful intervention. At about the same time as we undertook our intervention, a successful academic intervention was completed and described at another university; the authors pointed out the unmet need to translate interventions from academic settings to external partners.22

In preliminary discussions, clinician leaders expressed reservations about the possible loss of CKMB and CK from order sets, including missed or delayed diagnoses, with emphasis on unique aspects of regional populations (“our patients are different”) and potential consequences (missed diagnoses, harm to patients, lawsuits).1,5,6,25 We recognized that expressed patient-centered concern about missed diagnoses can mask equally powerful unconscious cognitive biases.26 As part of their quality improvement mission, pathologists can and should evaluate the reality of such concerns. We hypothesized that local data demonstrating that we could safely drop redundant cardiac markers without missing MIs would address the stated patient-centered concerns and would be equally effective in addressing unexpressed human perceptual biases, especially if the change process was driven by teamwork with committed peer leaders who provide “academic detailing” to colleagues.3,27,28 We also planned to gauge the effectiveness of individual steps within a multimodal intervention and then to replicate the successful components externally.

Methods

Data Extraction

Baseline laboratory order data for chest pain/rule-out MI (R/O MI) were evaluated retrospectively from May 2008 through July 2012 at the academic facility and from January 2013 through March 2014 at 2 external facilities. Test order data included inpatient and outpatient settings, EM, and urgent care. Under institutional review board (IRB) approval for quality improvement projects, summary CPOE data were obtained from the laboratory information system (Sunquest version 6.2, Sunquest Information Systems, Tucson, Arizona) using an ad hoc report writer (Crystal Reports XI, SAP, Newtown Square, Pennsylvania) to extract cTnI, CKMB, and CK test orders with results, including the calculated MB index (MBI) (CKMB and MBI results, in all combinations, are hereafter abbreviated collectively as CKMB). Ordering location, clinician, and date/time of testing were also extracted. The data warehouse of the West Virginia University Clinical Translational Science Institute provided service-level data for dashboard reports and data for rural hospitals that participated in a shared data warehouse.

Data Analysis

Microsoft Excel (Version 2010, Redmond, Washington) and JMP (SAS Institute Inc, Cary, North Carolina) were used for data trending, classification, distribution analysis, graphing, and dashboard reports. Creatine kinase–only orders (containing neither cTnI nor CKMB) were omitted as unrelated to the MI diagnosis. Orders were classified as “guideline” (cTnI-only orders) or “nonguideline” (any other included combination). Because diagnostic volume varied, results are presented as the percentage of orders adherent or nonadherent to guidelines. Differences in percentages across time and across groups were tested using χ2 tests of association. A sensitivity analysis used generalized linear mixed effects models (GLIMMs) with linear splines, assuming that guideline orders followed a binomial distribution and were correlated over time within groups, to test whether the probability of a guideline order differed immediately before and after staged intervention components or between the preintervention and postintervention periods. Analyses used SAS/STAT software, version 9.4 of the SAS System for Windows (© 2012 SAS Institute Inc, Cary, North Carolina).
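For readers who wish to reproduce the order classification and the χ2 comparison on their own extracts, the following Python sketch may help. It assumes each order set is represented as a set of illustrative test codes ("cTnI", "CKMB", "CK"; hypothetical, not actual laboratory information system identifiers) and implements a Pearson χ2 test of association for a 2 × 2 table using only the standard library. The counts in the usage lines are hypothetical denominators chosen to mirror the 32.3% to 58% change in the Abstract; they are not study data.

```python
import math
from collections import Counter

# Illustrative test codes (hypothetical); real LIS order codes are not given in the text.
TROPONIN, CKMB, CK = "cTnI", "CKMB", "CK"


def classify_order_set(tests):
    """Classify one order set as 'guideline', 'nonguideline', or None (excluded).

    CK-only order sets (no cTnI and no CKMB/MBI) return None because they were
    omitted as unrelated to the MI diagnosis.
    """
    tests = frozenset(tests)
    if TROPONIN not in tests and CKMB not in tests:
        return None
    # Only a cTnI-only order set counts as a guideline order.
    return "guideline" if tests == {TROPONIN} else "nonguideline"


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square test of association, no continuity correction.

    Layout: rows are periods (or groups), columns are guideline/nonguideline:
        [[a, b],
         [c, d]]
    Returns (statistic, p_value). With 1 degree of freedom,
    P(chi2 > x) = erfc(sqrt(x / 2)).
    """
    n = a + b + c + d
    rows = (a + b, c + d)
    cols = (a + c, b + d)
    observed = ((a, b), (c, d))
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = rows[i] * cols[j] / n
            stat += (observed[i][j] - expected) ** 2 / expected
    return stat, math.erfc(math.sqrt(stat / 2))


# Made-up order sets: guideline, nonguideline, excluded CK-only, nonguideline.
orders = [{TROPONIN}, {TROPONIN, CKMB}, {CK}, {TROPONIN, CKMB, CK}]
counts = Counter(c for c in map(classify_order_set, orders) if c is not None)

# Hypothetical 1000 order sets per period: 323 vs 580 guideline orders.
stat, p = chi_square_2x2(323, 677, 580, 420)
```

For a 2 × 2 table the χ2 statistic has 1 degree of freedom, which is why the complementary error function suffices here and no statistics library is required.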

Discordant Test Sensitivity Data

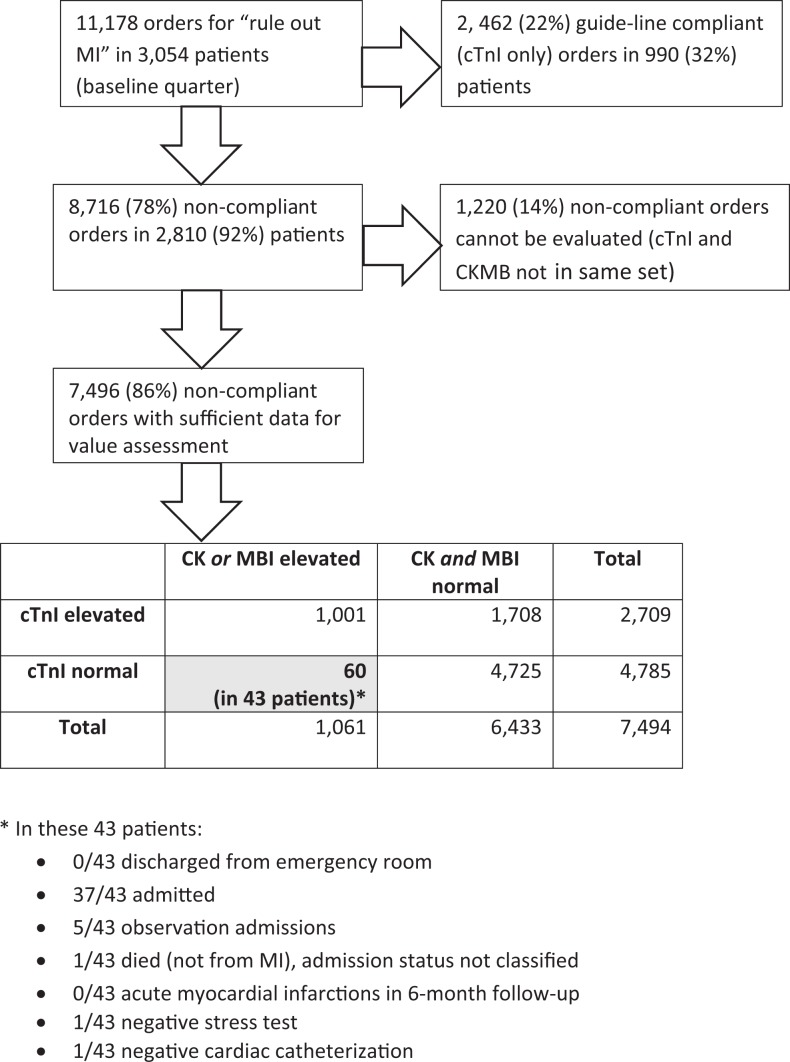

We evaluated the frequency of test discordance for a baseline quarter, seeking any negative cTnI (cTnI[−]) result paired with a positive CKMB (CKMB+) finding. We obtained IRB permission to review medical records containing the CKMB+/cTnI(−) discordant finding in the last baseline quarter, retrieving the following data for summary presentation: (1) whether the patient was admitted; (2) whether MI was diagnosed at the time or over the next 6 months; (3) other occurrences of hospitalization; and (4) subsequent nuclear cardiac stress testing and cardiac catheterization, with results. Figure 1 provides the decision tree (and results).
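The decision logic behind the truth table can be expressed compactly. In this minimal sketch, the `MarkerPair` type and the function names are hypothetical; the counts are those reported in the Results for the last baseline quarter (60 discordant pairs, 0 confirmed MIs, and 37 admitted, 5 observed, and 1 deceased among 43 patients).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MarkerPair:
    """Simultaneous CKMB (or MBI) and cTnI results for one order set (hypothetical type)."""
    ckmb_positive: bool
    ctni_positive: bool


def truth_table_cell(pair):
    """Label the 2x2 truth-table cell for a paired result."""
    ckmb = "+" if pair.ckmb_positive else "(-)"
    ctni = "+" if pair.ctni_positive else "(-)"
    return f"CKMB{ckmb}/cTnI{ctni}"


def could_add_sensitivity(pair):
    """Only CKMB+/cTnI(-) pairs could theoretically find MIs that cTnI misses."""
    return pair.ckmb_positive and not pair.ctni_positive


def positive_predictive_value(confirmed_mi, flagged):
    """PPV of the discordant pattern for MI (index visit or 6-month follow-up)."""
    return confirmed_mi / flagged if flagged else float("nan")


# Counts reported in Results for the last baseline quarter.
discordant_pairs = 60
confirmed_mi = 0
patient_outcomes = {"admitted": 37, "observed": 5, "died": 1}  # 43 patients

ppv = positive_predictive_value(confirmed_mi, discordant_pairs)
# Admitted plus extended observation, among patients who survived.
preventable_admissions = patient_outcomes["admitted"] + patient_outcomes["observed"]
```

Restricting the record review to the single discordant cell keeps the chart-review workload small: only pairs for which CKMB could, in principle, have changed the diagnosis need follow-up.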

Figure 1.

Decision tree and truth table used to evaluate CKMB/MB index (MBI) performance and to convince clinicians to change their ordering patterns for the “rule-out myocardial infarction” diagnosis. CKMB indicates creatine kinase M and B isoenzyme.

Electronic Changes to Laboratory Test Orders

The EM peer leader and hospital administration, including the chief medical information officer (CMIO), initially selected a minimally interruptive “soft stop” that changed a default order set that had included CKMB and CK. With this change, the ordering clinician had to select these tests from a visible checklist, adding an estimated 1 second to enter a nonguideline order. After trial evaluation, the CMIO removed CKMB and CK tests from R/O MI order sets while retaining them in the laboratory catalog as a separate order, which required an estimated 15 to 30 seconds to place.

Intervention including Academic Detailing

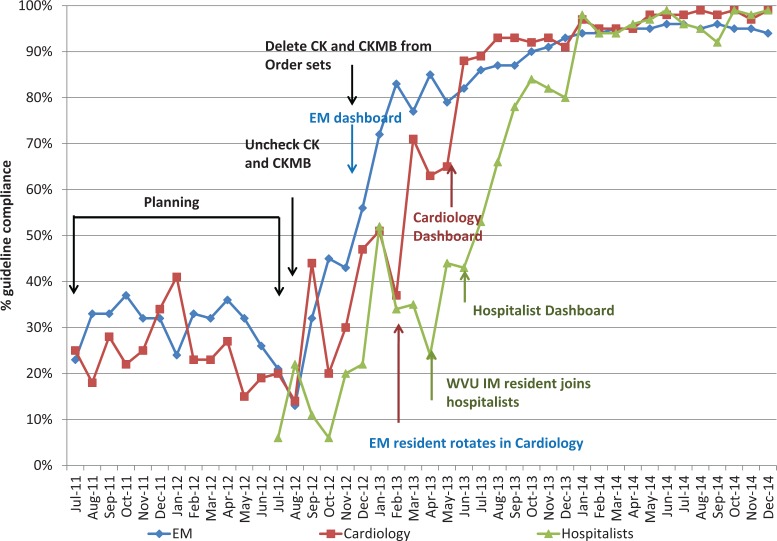

Standards for Quality Improvement Reporting Excellence (SQUIRE version 1.0)29 informed the interrupted time series design. Figure 2 shows the intervention elements and their time sequence, along with the dates of data collection. General education sessions (rounds) presented R/O MI laboratory consensus standards15,30 during the initial planning period and introduced impending changes to order sets. The results of the institutional discordant test pair analysis and baseline unit compliance (Figures 1 and 2) were presented (in interrupted time sequence) to prospective peer leaders to facilitate their recruitment. Peer leader volunteers then used the baseline data and subsequent changes in practice patterns to motivate peers, using graphical dashboards. The dashboard format illustrated in Figure 2 was adapted from previously published methods31 and was amenable to institutional, service-level, or peer-to-peer representation, depending on the judgment of the service peer leader.

Figure 2.

Percent compliance with guidelines for ordering cardiac markers relative to intervention milestones in 3 large specialty groups at the academic hospital (Emergency Medicine, Cardiology, and nonacademic hospitalists, ie, hospitalists with whom no residents rotate). Dates of interventions are color coded, with electronic medical record (EMR) interventions shown in black.

Peer leaders from EM, then Cardiology, and then nonacademic hospitalists (who do not supervise residents) sequentially introduced a service-level review of guidelines, the format for dashboard feedback, and baseline data on institutional and service-level experience. The feasible goal was consistently presented as <100% compliance, acknowledging clinical discretion to order CKMB if needed. Emergency Medicine set a goal of 75% guideline compliance. Subsequent intervention groups, including external hospitals, targeted 75% to 80% compliance.
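As an illustration of the service-level feedback described above, a plain-text dashboard row might be computed as follows. The function names, the text-bar layout, and the quarterly counts are hypothetical; only the 75% goal comes from the text, and the published dashboards were graphical rather than textual.

```python
def compliance_pct(guideline_orders, total_orders):
    """Percentage of order sets that were guideline (cTnI-only) orders."""
    return 100.0 * guideline_orders / total_orders


def dashboard_rows(counts, goal_pct=75.0):
    """Build (service, percent, goal_met) rows, sorted by service name.

    counts maps service name -> (guideline order sets, total order sets).
    Services with no order sets in the period are skipped.
    """
    rows = []
    for service, (guideline, total) in sorted(counts.items()):
        if total == 0:
            continue
        pct = compliance_pct(guideline, total)
        rows.append((service, round(pct, 1), pct >= goal_pct))
    return rows


def render(rows):
    """Plain-text bars: one '#' per 5 percentage points of compliance."""
    return [
        f"{service:<20} {pct:5.1f}% {'#' * int(pct // 5)}{'  goal met' if met else ''}"
        for service, pct, met in rows
    ]


# Hypothetical quarterly counts, for illustration only.
quarter = {
    "Emergency Medicine": (810, 1000),
    "Cardiology": (600, 1000),
    "Hospitalists": (750, 1000),
}
rows = dashboard_rows(quarter)
for line in render(rows):
    print(line)
```

The same rows could be aggregated at the institutional, service, or individual-clinician level, matching the flexibility the peer leaders used when choosing how to present feedback.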

Cost Calculations

We searched for simplified, reproducible models recommended for cost reporting29,32,33 and emphasized to colleagues that the approach favors transparency and replication over economic sophistication. The formula was: (2012 Medicare reimbursement value) × (number of nonguideline orders) × (% reduction goal) = modeled cost opportunity. In 2012, Medicare reimbursements were US$16.25 for CKMB and US$9.17 for CK. For tabular transparency, we assumed a 1:1 correspondence of these tests in the MI setting, although CKMB tests were actually more common than CK tests (61:39 in the modeled quarter). Reimbursement represents one way to evaluate costs; a minimizing and equally transparent alternative substituted the advertised disposable reagent cost for the Medicare reimbursement. This alternative also reflects the ongoing transition of the laboratory from an income center to a cost center. After the intervention, modeled outcomes were replaced with the achieved change in costs, based on the actual decrease in testing.
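The cost formula can be written as a short function. This sketch assumes the formula is applied per redundant test, with the 1:1 CKMB:CK assumption turning the two 2012 Medicare rates into a single mean per-test cost of (US$16.25 + US$9.17)/2 ≈ US$12.71. Applied to the 68 131 preintervention redundant tests in Table 2 with a 100% reduction, this reproduces that table's US$865 945 Medicare figure. The 0.75 reduction goal in the usage lines is illustrative only, and the function names are hypothetical.

```python
# Medicare reimbursements stated in Methods (US$ per test, 2012).
MEDICARE_2012 = {"CKMB": 16.25, "CK": 9.17}


def mean_cost_one_to_one(prices):
    """Per-test cost under the simplifying 1:1 CKMB:CK assumption."""
    return sum(prices.values()) / len(prices)


def modeled_cost_opportunity(per_test_cost, redundant_tests, reduction_goal):
    """(reimbursement) x (count of nonguideline tests) x (fractional reduction goal)."""
    return per_test_cost * redundant_tests * reduction_goal


per_test = mean_cost_one_to_one(MEDICARE_2012)  # (16.25 + 9.17) / 2, about 12.71

# 68 131 redundant tests in the 15-month preintervention period (Table 2).
full_baseline = modeled_cost_opportunity(per_test, 68_131, 1.0)
# Illustrative 75% reduction goal (an assumption, not a reported target).
opportunity = modeled_cost_opportunity(per_test, 68_131, 0.75)
```

Swapping `MEDICARE_2012` for per-test reagent prices gives the reagent variant of the same model without changing the formula.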

External Facilities

The intervention and supporting data, including the internal experience, were presented in sequence (Figure 3) at 2 smaller, rural hospitals located >100 miles from the academic hospital. Participating facilities belonged to a larger hospital system in which facility leaders retained independence over clinical protocols, including quality improvement decisions. Academic facility support to external peer leaders was limited to assistance with altering electronic order sets, an initial presentation including the analysis of discordant test performance data, and access to dashboard feedback for use by the peer leader during the intervention. External peer leaders differed from those at the academic site in 3 ways: they were in private practice, they communicated across disciplines with all clinical staff, and their intervention began with the more interruptive (but still soft) “stop” requiring a separate catalog order for CKMB and CK. All phases of the external interventions (education, alterations to CPOE, and peer feedback supported by dashboard reports) were implemented simultaneously at each external facility, but the 2 external interventions were again staggered in time.

Figure 3.

Percent compliance with guidelines at 3 institutions. The interventions were performed sequentially in the 3 institutions, first at West Virginia University Hospitals, then at Jefferson Medical Center (a Critical Access Hospital), and finally at Berkeley Medical Center, and were analyzed as an interrupted time series.

Internal and External Validity

National data for R/O MI chest pain testing provided external documentation of the change in test order intensity relative to peer tertiary care University Health Consortium (UHC) member organizations. Each UHC facility receives its own performance data compared to national norms for all similar facilities, but not the performance of any other facility. Because UHC membership can vary over time, quartile representations are more useful than numerical rank. These data are summarized in Table 1; lower rank numbers represent more parsimonious use of CKMB order sets. To assess internal validity, we also investigated the relationship between order sets (which could include orders for 1 to 3 tests) and the total number of individual tests. This analysis would have detected any internal trends related to changes in patient visits or case mix, had they existed.

Table 1.

UHC Data Comparing WVU Cardiac Markers Usage per Adjusted Discharge to UHC Peer Institutions.*

| Year and Quarter | WVU Metric | UHC Mean Metric | WVU Rank |
|---|---|---|---|
| 2012 Q4 | 26.35 | 14.6 | 56 of 66 |
| 2013 Q1 | 14.34 | 14.8 | 33 of 65 |
| 2013 Q2 | 8.24 | 13.5 | 26 of 57 |
| 2013 Q3 | 4.36 | 12.29 | 22 of 62 |
| 2013 Q4 | 3.74 | 11.49 | 22 of 65 |
| 2014 Q1 | 3.95 | 10.65 | 17 of 51 |
| 2014 Q2 | 1.94 | 11.43 | 19 of 67 |
| 2014 Q3 | 1.04 | 11.49 | 18 of 71 |
| 2014 Q4 | 0.52 | 9.95 | 9 of 69 |
| 2015 Q1 | 0.48 | 9.36 | 11 of 69 |

Abbreviations: UHC, University Health Consortium; WVU, West Virginia University.

* University Health Consortium data for cardiac marker utilization per adjusted discharge showing our academic facility versus national peer institutions. Initially, the facility was in the last quartile but has improved to first quartile performance.
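The quartile placements in the footnote can be derived from the ranks in Table 1. The convention used here, quartile = ⌈4 × rank / n⌉, is an assumption of this sketch; UHC's own quartile assignment may differ at the boundaries. Under it, the 2012 Q4 rank of 56 of 66 falls in the fourth quartile, and the 2014 Q4 and 2015 Q1 ranks (9 and 11 of 69) fall in the first.

```python
import math

# (quarter, WVU rank, number of ranked UHC peers) from Table 1.
TABLE1_RANKS = [
    ("2012 Q4", 56, 66), ("2013 Q1", 33, 65), ("2013 Q2", 26, 57),
    ("2013 Q3", 22, 62), ("2013 Q4", 22, 65), ("2014 Q1", 17, 51),
    ("2014 Q2", 19, 67), ("2014 Q3", 18, 71), ("2014 Q4", 9, 69),
    ("2015 Q1", 11, 69),
]


def quartile_from_rank(rank, n):
    """Quartile 1 (most parsimonious) to 4 (least) for a rank among n peers.

    Assumed convention: ceil(4 * rank / n).
    """
    if not 1 <= rank <= n:
        raise ValueError("rank must lie between 1 and n")
    return math.ceil(4 * rank / n)


quartiles = {quarter: quartile_from_rank(rank, n) for quarter, rank, n in TABLE1_RANKS}
```

Working with quartiles rather than raw ranks absorbs the quarter-to-quarter changes in UHC membership (51 to 71 ranked peers in Table 1).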

Results

Figure 1 shows that only a minority of the 11 178 order sets in the last baseline quarter were guideline compliant at the academic facility, with no consistent preintervention time trends in compliance. Most (78%) noncompliant order sets contained the minimum laboratory data (simultaneous CKMB and cTnI) needed to evaluate the contribution of CKMB to case-finding sensitivity. Of these, 60 order sets (<1%) showed the CKMB+/cTnI (−) test discordance that might theoretically have added case-finding sensitivity. Record review for the 60 discordant pairs in 43 affected patients revealed no MIs at the time or in the subsequent 6 months (positive predictive value, 0). None of the 43 patients with the discordant pattern were discharged: 37 were fully admitted, 5 were “observed” for an extended period, and 1 died of noncardiac comorbidities, leaving 42 preventable admissions among the 43 affected patients. In addition, 1 of the 42 surviving patients underwent subsequent cardiac catheterization and 1 underwent a nuclear stress test, with no significant disease detected. Record review revealed no reason other than the positive CKMB or MBI for not discharging patients with the discordant pattern; in particular, suggestive EKG changes were absent. Clinical colleagues interpreted the decision tree data as precise evidence for the absence of clinical benefit, with imprecise yet strongly suggestive evidence of increased risk of unneeded admission and preventable costs in the population, as well as of possibly unjustified invasive testing.

Real-world interventions are complicated, and our internal interrupted time series intervention reflects some of these realities. As seen most clearly for the initial intervention service (EM, Figure 2), internal groups showed some improvement in guideline compliance during planning. However, these preintervention improvements were not sustained. In addition, modest cross-service contamination was detected at the academic facility (Figure 2). A rotating EM resident transiently influenced guideline performance of the Cardiology service about 4 weeks before the introduction of academic detailing. The Cardiology peer leader detected the contaminating influence and highlighted it during introductory sessions to motivate change (“We can’t let EM outperform cardiology”). Approximately 1 month before its own intervention, the nonacademic hospitalist service hired a former resident who had participated in an earlier phase of the intervention, creating a preintervention change limited to 1 clinician.

System-wide “unchecking” of CK and CKMB tests appeared less effective than the subsequent, more disruptive requirement for a separate order to obtain redundant tests. However, the CMIO confirmed that many clinicians then developed customized electronic “workarounds” that facilitated ongoing redundant orders. Sustained service-wide increases in guideline compliance were achieved sequentially in 3 services only after the initiation of peer leader academic detailing, compared to either previous within-group performance or group(s) that had not yet undergone intervention (P < .001). This result was robust to the GLIMM sensitivity analysis and to tests of overall within-group differences (each P < .001).
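The GLIMM sensitivity analysis described under Data Analysis rests on a linear spline design around each intervention date. The sketch below builds only the fixed-effect design rows and the binomial (logit) mean; the random effects and the within-group correlation over time would be handled by mixed-model software (the analyses here used SAS/STAT). Including a step indicator at each knot, so that an immediate before/after change is a single coefficient, is an assumption of this sketch, as are the function names and the example knots.

```python
import math


def its_design_row(month, knots):
    """Fixed-effect design row for one month of an interrupted time series.

    Columns: intercept, time, then for each intervention date (knot) a step
    indicator (level change) and a hinge term max(0, month - knot) (slope change).
    """
    row = [1.0, float(month)]
    for knot in knots:
        row.append(1.0 if month >= knot else 0.0)
        row.append(float(max(0, month - knot)))
    return row


def inv_logit(x):
    """Map a linear predictor to a probability."""
    return 1.0 / (1.0 + math.exp(-x))


def guideline_probability(row, coefficients):
    """Binomial-logit mean for one design row (random effects omitted)."""
    return inv_logit(sum(x * b for x, b in zip(row, coefficients)))


# Hypothetical example: months 0..12 with interventions at months 4 and 8.
design = [its_design_row(m, knots=[4, 8]) for m in range(13)]
```

Each hinge coefficient estimates a change in slope after its knot, and each step coefficient estimates the immediate change in log-odds of a guideline order at that intervention.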

External hospitals remained at baseline during the academic hospital intervention (Figure 3) and then sequentially exceeded goals (P < .001 for both within- and between-facility comparisons) following introduction of the time-compressed intervention. Postintervention compliance gains were sustained, with >95% compliance at the academic site and ≥85% compliance at external hospitals. Slight contamination across neighboring counties may have transiently influenced the second external facility (Figure 3). Known and potential episodes of contamination did not alter the statistical conclusions at either the academic or the external facilities.

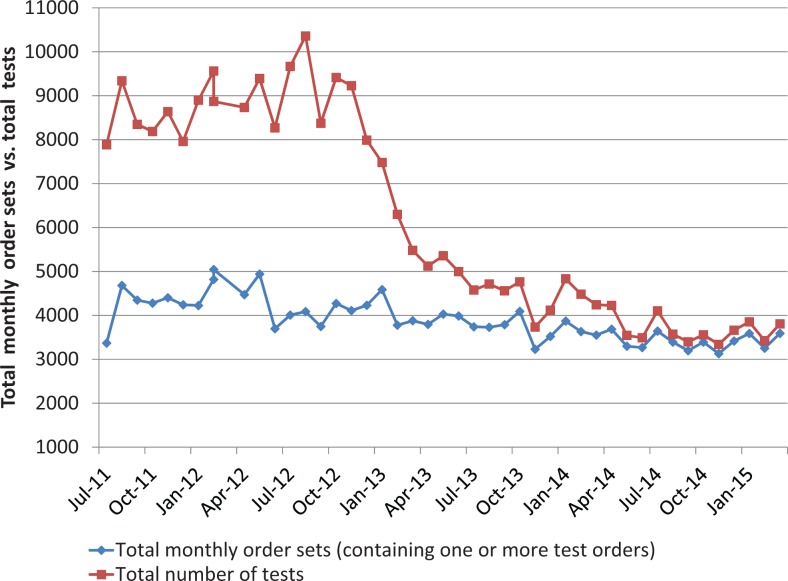

Table 1 (UHC data) documents a case mix–adjusted national trend toward less CK and CKMB testing at academic institutions and further indicates that redundant testing nevertheless persisted nationally during and after our interventions. The change reported here clearly surpassed external trends, with our academic center improving from the fourth to the first quartile. The most parsimonious UHC performers are believed to have removed CKMB testing entirely from their laboratory catalogs, a step that our clinicians did not want to take. The decline in the total number of tests was far larger than any change in total order sets (Figure 4), so the improvement is not a secular trend artifact of “diagnostic intensity.”

Figure 4.

Cardiac markers per month by order sets and tests. Note that the number of tests dropped dramatically while the order sets (containing 1 to 3 orders) remained the same, showing how clinicians adopted the guideline of 1 test (ie, cardiac troponin I [cTnI]) over time, thus reducing the number of tests performed.

The achieved reduction in total summed CKMB and CK tests, from 68 131 during 15 months in 2011 to 2012 to 5627 during 15 months in 2014 to 2015, yields annualized modeled savings of >US$635 000 (Medicare reimbursement model) in perpetuity for the index hospital alone and >US$138 000 per year for the reagent model. This transparent approach may underestimate savings because our cost model assumes a 1:1 correspondence of CKMB and CK tests, whereas the more expensive CKMB test is actually more common. In addition, the model does not capture postintervention increases in the number of patients evaluated for R/O MI, in whom per capita redundant testing remained low or even decreased. However, it overestimates reagent savings because published reagent costs are higher than negotiated purchase costs. Furthermore, annualized and in-perpetuity approaches assume that the unneeded tests would never have been addressed but for this intervention. We therefore present detailed modeled savings for the actual intervention periods (Table 2), so interested readers can reach their own conclusions about savings during and after the intervention. Preventable hospitalization costs, arguably the socially important costs implied by our data, were not modeled; such modeling was neither requested nor feasible with the resources available.

Table 2.

Estimated Savings for the University Hospital (July 2011-March 2015).*,†

| Status of Intervention | Total Order Sets | Total Tests | Total Redundant Tests‡ | Medicare Costs of Redundant Tests§ | Reagent Costs of Redundant Tests¦ |
|---|---|---|---|---|---|
| Preintervention, July 2011-September 2012 (15 months) | 64 341 | 132 472 | 68 131 | US$865 945 | US$376 764 |
| During intervention, September 2012-December 2013 (15 months) | 58 745 | 87 820 | 29 075 | US$369 543 | US$160 785 |
| Postintervention, January 2014-March 2015 (15 months) | 51 887 | 57 514 | 5627 | US$71 519 | US$31 117 |

| Summary | Total Tests Reduced | Total Medicare Savings | Total Reagent Savings |
|---|---|---|---|
| Imputed savings over the entire intervention# | 101 560 | US$1 290 828 | US$561 627 |
| Annual savings based on the last postintervention period¶ | 50 003 | US$635 541 | US$138 259 |

Abbreviations: CK, creatine kinase; CKMB, creatine kinase M and B isoenzyme; cTnI, cardiac troponin I.

*Savings are based on laboratory costs only; we did not estimate savings based on admitting fewer patients or on doing fewer procedures.

†A model of laboratory cost savings from reduction of redundant cardiac markers, looking at the unneeded tests and savings using 2012 Medicare reimbursement rates and advertised reagent costs.

‡For cardiac markers, order sets equal tests performed on a 1:1 basis if only cTnI was ordered. The difference between total tests and total order sets was therefore taken as the number of redundant tests.

§Medicare costs were estimated from the published per-test reimbursement: an order set containing a redundant CK and CKMB received the published 2011 Medicare reimbursement of US$25.42, and we assumed equal numbers of CK and CKMB tests for cost purposes, so each redundant test was costed at US$12.71.

¦Savings include only the published billing or per-test reagent costs (they exclude labor, equipment depreciation, and assigned indirect costs of performing these tests).

#Estimated savings = the sum, over the during-intervention and postintervention periods, of the difference between preintervention modeled costs and that period's modeled costs.

¶Annual savings were calculated from the difference in redundant tests between the preintervention and postintervention periods, converted from 15 months to an annual rate (× 12/15).
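The Medicare columns of Table 2 follow from the footnotes with simple arithmetic. This sketch assumes, per footnote §, a cost of US$25.42/2 = US$12.71 per redundant test and applies footnotes # and ¶; it reproduces the Medicare figures (period costs, US$1 290 828 imputed, and US$635 541 annual) and does not attempt the reagent columns. Variable names are illustrative.

```python
PER_REDUNDANT_TEST = 25.42 / 2  # footnote §: US$25.42 per CK + CKMB pair, split 1:1
MONTHS_PER_PERIOD = 15

# Redundant tests (total tests minus order sets) per 15-month period, Table 2.
redundant = {"pre": 68_131, "during": 29_075, "post": 5_627}

# Modeled Medicare cost of redundant tests in each period.
period_costs = {p: round(n * PER_REDUNDANT_TEST) for p, n in redundant.items()}

# Footnote #: savings in the during and post periods relative to baseline.
tests_reduced = sum(redundant["pre"] - redundant[p] for p in ("during", "post"))
imputed_savings = round(tests_reduced * PER_REDUNDANT_TEST)

# Footnote ¶: baseline-to-post difference scaled from 15 months to 12.
annual_tests_reduced = (redundant["pre"] - redundant["post"]) * 12 / MONTHS_PER_PERIOD
annual_savings = round(annual_tests_reduced * PER_REDUNDANT_TEST)
```

Because every quantity is a product of a published rate and a count, readers can substitute local reimbursement or reagent prices and recompute the table directly.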

Clinicians appeared to accept that redundant testing was very likely associated with preventable hospitalization, although presenters stipulated that some CKMB+/cTnI (−) patients might have been admitted anyway. Questions from direct care providers focused on patient safety (and medical–legal) concerns, temporal regimens for initiating the second cTnI test in a series, methods of peer feedback, and time stresses imposed by CPOE changes. A concern at rural sites, whether the absence of CKMB testing would affect transfers to regional cardiologists at neighboring tertiary care facilities still doing CKMB testing, did not materialize as an operational problem. External site partners were offered the opportunity to recapitulate the truth table process or cost models from their own data but expressed satisfaction with the modeled data from the initial academic site.

Discussion

We propose these hypotheses concerning the critical role of clinical laboratory data and teams that include pathologists in quality improvement interventions. First, pathologists have an essential role in providing decision support, such as identifying, quantifying, and communicating low-value activities. Second, when quality improvement opportunities are detected, there are circumstances in which modeled case-finding sensitivity data can address reasonable clinician concerns about patient safety including belief that “our patients are different,” a common concern in rural areas with poor transportation, low income, and variable access to health care. The same data also overcome inertia associated with unspoken concerns that stem from normal human reluctance to change, including commission and status quo biases. We believe our experience illustrates that local laboratory data can tell a compelling story to motivate collaborative change.

Third, we add detail to the general knowledge that multimodal interventions outperform changes to CPOE alone. We assumed that removal of a low-value order set was necessary to achieve change. Our subsequent findings reinforce knowledge that CPOE changes need to be sufficiently disruptive to capture attention but alone may still be insufficient, underperforming a combination of CPOE changes and peer leadership supported by dashboard feedback. This is consistent with existing behavioral7,8,13 and systems25–28 theory favoring multimodal interventions. Pathology laboratory data are crucial for providing feedback about what kind of CPOE intervention is sufficient. Expressed concerns about added time for soft stops in CPOE fade when the barrier applies to a test that clinicians come to recognize as generally unhelpful.

Fourth, we provide data that interventions started in tertiary care settings can be translated by private practice peer leaders to rural hospitals, including a critical access facility. The more rapid improvement in guideline compliance at the external hospitals was certainly assisted by the deliberate time compression of intervention elements that had been independently tested at the academic center, but that may not be the whole story: staff cohesion and peer leader influence may also be greater at smaller facilities. This finding begins to address a research need identified by Larochelle and colleagues, who provided an earlier description of eliminating redundant MI orders and called for demonstrations of external translation to additional facilities.22 We have begun that process.

Figure 1, depicting diagnostic futility and a near-certain probability of additional risk related to unneeded hospitalization and procedures, may represent a graphical template for the kinds of motivation for change that pathologists can provide. We should be clear that our finding that the CKMB test fails to add value to troponin testing is anticipated by other work.34 It nevertheless responds to the near-universal concern about guidelines that “our patients are different” at a facility or within a region. The laboratory can lead collaborations that measure whether that concern is realistic, and an effort to show whether a nonrecommended test provides some benefit can uncover the still more compelling finding that the test is doing harm. Future work might formally investigate whether peer leadership for targeted laboratory orders can also reduce some of the well-known variability in admissions, including admissions from EDs for chest pain.35

Fifth, we were not surprised to confirm that clinicians and administrators routinely expressed interest in seeing modeled cost implications. In this case, a simplified economic model indicates annual savings of >US$635 000 in 2012, with no additional inputs required for ongoing savings once the intervention was complete. These savings were similar to the billing-cost savings found by Zhang et al,24 and our calculated reagent savings were greater than the reagent savings calculated for an ED intervention by Le et al,23 likely because we used transparently published reagent costs rather than actual but invisibly discounted reagent costs. More fundamentally, we were not surprised to learn that clinicians responded to the cost data with interest but were not particularly impressed by savings alone. Instead, the savings in the setting of improved care with fewer errors were compelling and are more representative of the critical role of pathologists and interdisciplinary colleagues in assessing the performance and value of laboratory tests. In this sense, all cost models greatly underestimate the actual societal savings. An advantage of simplified cost models is their transparency to participants from many kinds of backgrounds; they remove the uncertainty inherent in concealing or ignoring cost implications and avoid the time and investment associated with more rigorous economic models. Clinicians readily understood and accepted the limitations of simplified models and saw no need to improve on them.

Nevertheless, fundamental concerns about the best ways to intervene remain. These are largely unrelated to the simplifications in our cost model but do relate to costs and benefits. More expensive laboratory orders, particularly send-out tests, may follow a different dynamic. When tests are expensive enough to represent significant institutional costs, or significant institutional income depending on the setting, the evaluative role of pathologists becomes more important. Pathologists have a key role in ensuring that both costs and the quality of outcomes are considered; laboratory performance data are the best response to the pressures that surround competing views of costs.

Our approach and findings have strengths that may serve several research and practical implementation needs. The US National Academies of Sciences, Engineering, and Medicine has identified interdisciplinary participation of laboratory clinicians with direct patient care clinicians as important to leadership for quality improvement.36 In addition, trainees at several career stages and from several specialties contributed to a real-world intervention, suggesting that the approach may have positive implications for career development. External validity was demonstrated relative to national secular trends at peer institutions (Table 1), and internal validity testing shows that the change is independent of diagnostic intensity (Figure 4). The “decision tree” data (Figure 1) are a strength that can help recruit intervention partners, including partners in private practice. In this case, these data addressed clinician concerns and also suggested previously overlooked harms of low-value testing at baseline. We hypothesize that this approach is scalable to other facilities and clinical contexts.

Our approach also shares some common limitations of intervention research. We are concerned that the intervention may sound complicated. The intervention itself is simple; any appearance of complexity reflects our effort to show transparently and convincingly what was done and what resulted, an important requirement of quality improvement and time-sequential study designs. Although the sequential internal and then external successes appear promising, time-sequential intervention designs are imperfect and require detailed presentation, because they substitute for randomized assignment in clinical quality interventions and provide inferences rather than proofs.32,33 Despite the detail inherent in peer-reviewed descriptions of methods and data, our data clearly show that facilities can implement this change rapidly, with the time needed to make CPOE changes being the most important temporal barrier.

A limitation related to scalability is that external translation of the intervention was supported by shared data in an accessible data warehouse. External intervention partners often lack a shared data resource, which creates a data gap for future scalability until medical records better accommodate data exchange while protecting patient privacy. To address this gap, we have planned additional demonstrations with hospitals that do not share data, including hospitals of varying sizes and hospitals that normally compete for market share. Another research gap for scalability is how well decision tree and truth table data will influence clinical behavior when the findings are less clear than the perfect futility we demonstrated for redundant CKMB testing. Our data do not fully address whether more complex analyses, such as receiver operating characteristic curves, can also motivate change when needed. Patients with low-risk chest pain presentations experience low rates of adverse cardiac events.37 Other clinical decisions may involve more nuanced trade-offs between the risks and benefits of admission than low-risk chest pain does. Nevertheless, our findings strongly support the case that data-driven approaches to selecting appropriate laboratory orders will become increasingly important in many settings.38
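The decision tree and truth table data referred to above rest on simple counting. A minimal sketch, assuming each order set can be reduced to a CKMB result, a troponin I result, and an adjudicated final MI diagnosis: the number of MIs flagged only by a CKMB (+)/troponin I (−) pair measures the added case-finding value of CKMB, while the remaining discordant pairs are positives that may prompt unneeded workup or admission. The record type, field names, and example rows below are hypothetical and are not study data.

```python
from collections import Counter
from typing import Iterable, NamedTuple


class OrderPair(NamedTuple):
    """One hypothetical CKMB/troponin I order pair with its final diagnosis."""
    ckmb_positive: bool
    troponin_positive: bool
    mi_confirmed: bool  # assumed: an adjudicated final MI diagnosis is available


def truth_table(pairs: Iterable[OrderPair]) -> Counter:
    """Count pairs in each (CKMB, troponin, MI) cell of the truth table."""
    return Counter((p.ckmb_positive, p.troponin_positive, p.mi_confirmed) for p in pairs)


def incremental_mi_yield(pairs: Iterable[OrderPair]) -> int:
    """MIs flagged only by CKMB (CKMB+/troponin-): the added case-finding value of CKMB."""
    return sum(1 for p in pairs
               if p.ckmb_positive and not p.troponin_positive and p.mi_confirmed)


def discordant_without_mi(pairs: Iterable[OrderPair]) -> int:
    """CKMB+/troponin- pairs without MI: positives that can prompt unneeded workup."""
    return sum(1 for p in pairs
               if p.ckmb_positive and not p.troponin_positive and not p.mi_confirmed)


if __name__ == "__main__":
    # Invented example rows for illustration only; not study data.
    pairs = [
        OrderPair(ckmb_positive=True, troponin_positive=True, mi_confirmed=True),
        OrderPair(ckmb_positive=True, troponin_positive=False, mi_confirmed=False),
        OrderPair(ckmb_positive=False, troponin_positive=False, mi_confirmed=False),
    ]
    print(truth_table(pairs))
    print("MIs found only by CKMB:", incremental_mi_yield(pairs))
    print("CKMB+/troponin- pairs without MI:", discordant_without_mi(pairs))
```

An incremental yield of zero alongside a nonzero count of discordant pairs without MI is the "futility plus potential harm" pattern; less clear-cut counts are where more complex analyses would come into play.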

A word about the progress of intervention research is also in order. After we planned and implemented our intervention, the SQUIRE 2.0 update39 and summarized Agency for Healthcare Research and Quality (AHRQ) planning advice40 became available for quality improvement interventions. We commend these updated tools to colleagues planning quality improvement interventions.

In summary, our experience leads to testable hypotheses about the critical role of pathologists in providing data that can motivate change. We propose that clinical leaders and clinical staff more willingly abandon low-value laboratory practice patterns and improve care when specific local laboratory data reveal that current practices are ineffective and/or may increase patient risk. We have shown that an intervention tested in an academic setting, when supported by relevant performance data, can be reliably adapted and led by peer leader clinicians in private practice, including at small rural facilities. We have presented a visual picture of the relative incremental effects of several kinds of CPOE interventions and of the subsequent addition of peer leadership, confirming that soft stops cannot be too soft, that CPOE alone is only a partial answer, and that peer leadership is needed to achieve change. In addition, we provide a successful example of an identified national need: data-driven collaboration between laboratory and other clinical staff to improve quality.36 Leading authorities recognize that clinicians are a widely available yet underutilized resource in quality improvement.8,13 Our approach, which uses laboratory expertise and laboratory data to motivate changes in clinical behavior, merits investigation as a way to engage clinician energy and capability more widely in quality improvement that responds to public health needs.

Acknowledgments

The authors wish to acknowledge editorial and technical support from Dana M. Gray, West Virginia University Department of Pathology.

Authors’ Note: Alan M. Ducatman, Danyel H. Tacker, and Barbara S. Ducatman contributed equally to this work; were members of the study design, analysis, and writing team; and participated in review of health outcomes, peer leadership, and quality improvement implementation. The content is solely the responsibility of the authors and does not necessarily represent official views of the Benedum Foundation or the NIH.

Declaration of Conflicting Interests: The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: The project described was funded by a HOPE pilot grant from the Claude Worthington Benedum Foundation and by the National Institute of General Medical Sciences U54GM104942.

References

- 1. Lin GA, Redberg RF. Addressing overuse of medical services one decision at a time. JAMA Intern Med. 2015;175:1092–1093. doi:10.1001/jamainternmed.2015.1693.

- 2. Zhan C, Miller MR. Excess length of stay, charges, and mortality attributable to medical injuries during hospitalization. JAMA. 2003;290:1868–1874. doi:10.1001/jama.290.14.1868.

- 3. Counts JM, Astles JR, Lipman HB. Assessing physician utilization of laboratory practice guidelines: barriers and opportunities for improvement. Clin Biochem. 2013;46:1554–1560. doi:10.1016/j.clinbiochem.2013.06.004.

- 4. Perkins RB, Jorgensen JR, McCoy ME, Bak SM, Battaglia TA, Freund KM. Adherence to conservative management recommendations for abnormal Pap test results in adolescents. Obstet Gynecol. 2012;119:1157–1163. doi:10.1097/AOG.0b013e31824e9f2f.

- 5. Farias M, Jenkins K, Lock J, et al. Standardized clinical assessment and management plans (SCAMPs) provide a better alternative to clinical practice guidelines. Health Aff (Millwood). 2013;32:911–920. doi:10.1377/hlthaff.2012.0667.

- 6. Pronovost PJ. Enhancing physicians’ use of clinical guidelines. JAMA. 2013;310(23):2501–2502. doi:10.1001/jama.2013.281334.

- 7. Roman BR, Asch DA. Faded promises: the challenge of deadopting low-value care. Ann Intern Med. 2014;161:149–150. doi:10.7326/M14-0212.

- 8. Khullar D, Chokshi DA, Kocher R, et al. Behavioral economics and physician compensation – promise and challenges. N Engl J Med. 2015;372:2281–2283. doi:10.1056/NEJMp1502312.

- 9. Davidoff F. On the undiffusion of established practices. JAMA Intern Med. 2015;175:809–811. doi:10.1001/jamainternmed.2015.0167.

- 10. Schwartz AL, Landon BE, Elshaug AG, Chernew ME, McWilliams JM. Measuring low-value care in Medicare. JAMA Intern Med. 2014;174:1067–1076. doi:10.1001/jamainternmed.2014.1541.

- 11. Colla CH, Morden NE, Sequist TD, Schpero WL, Rosenthal MB. Choosing wisely: prevalence and correlates of low-value health care services in the United States. J Gen Intern Med. 2015;30:221–228. doi:10.1007/s11606-014-3070-z.

- 12. Mickan S, Burls A, Glasziou P. Patterns of ‘leakage’ in the utilisation of clinical guidelines: a systematic review. Postgrad Med J. 2011;87:670–679. doi:10.1136/pgmj.2010.116012.

- 13. Colla CH. Swimming against the current – what might work to reduce low-value care? N Engl J Med. 2014;371:1280–1283. doi:10.1056/NEJMp1404503.

- 14. White H, Thygesen K, Alpert JS, Jaffe A. Universal MI definition update for cardiovascular disease. Curr Cardiol Rep. 2014;16:492. doi:10.1007/s11886-014-0492-5.

- 15. Apple FS, Jesse RL, Newby LK, et al; IFCC Committee on Standardization of Markers of Cardiac Damage, National Academy of Clinical Biochemistry. National Academy of Clinical Biochemistry and IFCC Committee for Standardization of Markers of Cardiac Damage Laboratory Medicine Practice guidelines: analytical issues for biochemical markers of acute coronary syndromes. Clin Chem. 2007;53:547–551. doi:10.1373/clinchem.2006.084715.

- 16. Thygesen K, Alpert JS, White HD, et al; Joint ESC/ACCF/AHA/WHF Task Force for the Redefinition of Myocardial Infarction. Universal definition of myocardial infarction. Circulation. 2007;116:2634–2653. doi:10.1161/CIRCULATIONAHA.107.187397.

- 17. Apple FS, Jesse RL, Newby LK, Wu AH, Christenson RH; National Academy of Clinical Biochemistry; IFCC Committee for Standardization of Markers of Cardiac Damage. National Academy of Clinical Biochemistry and IFCC Committee for Standardization of Markers of Cardiac Damage Laboratory Medicine Practice Guidelines: analytical issues for biochemical markers of acute coronary syndromes. Circulation. 2007;115:e352–e355. doi:10.1161/CIRCULATIONAHA.107.182881.

- 18. Thygesen K, Alpert JS, Jaffe AS, Simoons ML, Chaitman BR, White HD; Task Force for the Universal Definition of Myocardial Infarction. Third universal definition of myocardial infarction. Nat Rev Cardiol. 2012;9:620–633. doi:10.1038/nrcardio.2012.122.

- 19. Alpert JS, Jaffe AS. Interpreting biomarkers during percutaneous coronary intervention: the need to reevaluate our approach. Arch Intern Med. 2012;172:508–509. doi:10.1001/archinternmed.2011.2284.

- 20. Novack V, Pencina M, Cohen DJ, et al. Troponin criteria for myocardial infarction after percutaneous coronary intervention. Arch Intern Med. 2012;172:502–508. doi:10.1001/archinternmed.2011.2275.

- 21. Michielsen EC, Bisschops PG, Janssen MJ. False positive troponin result caused by a true macrotroponin. Clin Chem Lab Med. 2011;49:923–925. doi:10.1515/CCLM.2011.147.

- 22. Larochelle MR, Knight AM, Pantle H, Riedel S, Trost JC. Reducing excess cardiac biomarker testing at an academic medical center. J Gen Intern Med. 2014;29:1468–1474. doi:10.1007/s11606-014-2919-5.

- 23. Le RD, Kosowsky JM, Landman AB, Bixho I, Melanson SEF, Tanasijevic MJ. Clinical and financial impact of removing creatine kinase-MB from the routine testing menu in the emergency setting. Am J Emerg Med. 2015;33:72–75. doi:10.1016/j.ajem.2014.10.017.

- 24. Zhang L, Sill AM, Young I, Ahmed S, Morales M, Kuehl S. Financial impact of a targeted reduction in cardiac enzyme testing at a community hospital. J Community Hosp Intern Med Perspect. 2016;6:32816.

- 25. Wallace E, Lowry J, Smith SM, Fahey T. The epidemiology of malpractice claims in primary care: a systematic review. BMJ Open. 2013;3:e002929. doi:10.1136/bmjopen-2013-002929.

- 26. Sah S, Elias P, Ariely D. Investigation momentum: the relentless pursuit to resolve uncertainty. JAMA Intern Med. 2013;173:932–933. doi:10.1001/jamainternmed.2013.401.

- 27. Shortell SM, Singer SJ. Improving patient safety by taking systems seriously. JAMA. 2008;299:445–447. doi:10.1001/jama.299.4.445.

- 28. Valente TW, Pumpuang P. Identifying opinion leaders to promote behavior change. Health Educ Behav. 2007;34:881–896. doi:10.1177/1090198106297855.

- 29. Davidoff F, Batalden P, Stevens D, Ogrinc G, Mooney S; Standards for quality improvement reporting excellence development group. Publication guidelines for quality improvement studies in health care: evolution of the SQUIRE project. J Gen Intern Med. 2008;23:2125–2130. doi:10.1007/s11606-008-0797-4.

- 30. Thygesen K, Alpert JS, Jaffe AS, et al; Joint ESC/ACCF/AHA/WHF Task Force for Universal Definition of Myocardial Infarction; Authors/Task Force Members Chairpersons; Biomarker Subcommittee; ECG Subcommittee; Imaging Subcommittee; Classification Subcommittee; Intervention Subcommittee; Trials & Registries Subcommittee; ESC Committee for Practice Guidelines (CPG); Document Reviewers. Third universal definition of myocardial infarction. J Am Coll Cardiol. 2012;60:1581–1598. PMID: 22958960.

- 31. Roberts DH, Gilmartin GS, Neeman N, et al. Design and measurement of quality improvement indicators in ambulatory pulmonary care: creating a “culture of quality” in an academic pulmonary division. Chest. 2009;136:1134–1140. doi:10.1378/chest.09-0619.

- 32. Dreyer N, Rubino A, L’Italien GJ, Schneeweiss S. Developing good practice guidance for non-randomized studies of comparative effectiveness: a workshop on quality and transparency. Pharmacoepidemiol Drug Saf. 2009;18:S123–S123.

- 33. Dreyer NA, Schneeweiss S, McNeil BJ, et al. GRACE principles: recognizing high-quality observational studies of comparative effectiveness. Am J Manag Care. 2010;16:467–471.

- 34. Jaruvongvanich V, Rattanadech W, Damkerngsuntorn W, Jaruvongvanich S, Vorasettakarnkij Y. CK-MB activity, any additional benefit to negative troponin in evaluating patients with suspected acute myocardial infarction in the emergency department. J Med Assoc Thai. 2015;98:935–941.

- 35. Sabbatini AK, Nallamothu BK, Kocher KE. Reducing variation in hospital admissions from the emergency department for low-mortality conditions may produce savings. Health Aff (Millwood). 2014;33:1655–1663. doi:10.1377/hlthaff.2013.1318.

- 36. Balogh E, Miller B, Ball J, eds. Improving Diagnosis in Health Care. Washington, DC: National Academies of Sciences, Engineering, and Medicine; The National Academies Press; 2015. doi:10.17226/21794.

- 37. Weinstock MB, Weingart S, Orth F, et al. Risk for clinically relevant adverse cardiac events in patients with chest pain at hospital admission. JAMA Intern Med. 2015;175:1207–1212. doi:10.1001/jamainternmed.2015.1674.

- 38. Berwick DM, Hackbarth AD. Eliminating waste in US health care. JAMA. 2012;307:1513–1516. doi:10.1001/jama.2012.362.

- 39. Ogrinc G, Davies L, Goodman D, Batalden P, Davidoff F, Stevens D. SQUIRE 2.0 (standards for quality improvement reporting excellence): revised publication guidelines from a detailed consensus process. BMJ Qual Saf. 2016;25(12):986–992. doi:10.1136/bmjqs-2015-004411.

- 40. Ganz D. National guideline clearinghouse/expert commentaries: implementing guidelines in your organization: what questions should you be asking?. http://www.guideline.gov/expert/expert-commentary.aspx?id=49423. Published July 13, 2015. Accessed April 24, 2017.