Abstract

Background:

We performed a directed environmental scan to identify and categorize quality indicators unique to critical care that are reported by key stakeholder organizations.

Methods:

We convened a panel of experts (n = 9) to identify key organizations that are focused on quality improvement or critical care, and reviewed their online publications and website content for quality indicators. We identified quality indicators specific to the care of critically ill adult patients and then categorized them according to the Donabedian and the Institute of Medicine frameworks. We also noted the organizations' rationale for selecting these indicators and their reported evidence base.

Results:

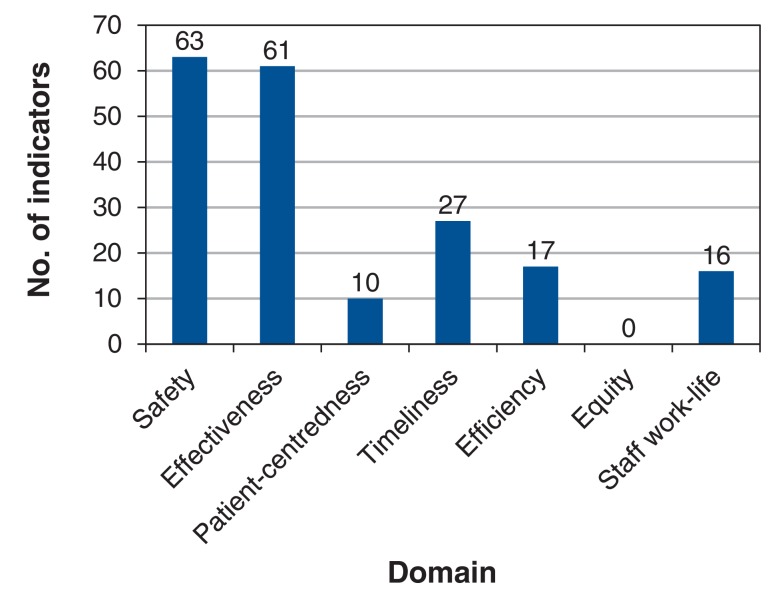

From 28 targeted organizations, we identified 222 quality indicators, 127 of which were unique. Because an indicator could be assigned to more than 1 domain, the 127 unique indicators yielded 194 domain assignments; of these, 63 (32.5%) were for safety and 61 (31.4%) were for effectiveness. The rationale for selecting quality indicators was supported by consensus for 58 (26.1%) of the 222 indicators and by published research evidence for 45 (20.3%); for 119 indicators (53.6%), the rationale was not reported or the reader was referred to other organizations' reports. Of the 127 unique quality indicators, 27 (21.2%) were accompanied by a formal grading of evidence, whereas for 52 (40.9%), no reference to evidence was provided.

Interpretation:

There are many quality indicators related to critical care that are available in the public domain. However, owing to a paucity of rationale for selection, supporting evidence and results of implementation, it is not clear which indicators should be adopted for use.

In the health care sector, quality indicators have been developed to compare actual patient care to best practice. They provide a quantitative tool for health care providers and decision-makers who aim to improve processes and outcomes of patient care.1 Conceptual frameworks may be used to categorize these indicators.2 Two of the most commonly used frameworks are those of Donabedian3 and the Institute of Medicine4 (now called the Health and Medicine Division of the National Academies of Sciences, Engineering, and Medicine). In the Donabedian framework, indicators of health care quality are categorized as related to structure (conditions under which care is provided), process (methods by which health care is provided) or outcome (changes in health status attributable to health care). From the perspective of patient care, the Institute of Medicine identified 6 dimensions: safety, effectiveness, patient-centredness, timeliness, efficiency and equity.

The scientific literature abounds with a bewildering array of candidate quality indicators,5-7 and intensive care societies, quality improvement organizations, and patient advocacy and safety groups have begun to report on quality using some of these indicators. The purpose of this directed environmental scan was to inform decision-making by synthesizing existing recommendations from relevant organizations within the Canadian and international context.

Methods

Search strategy

The study was conducted at the University of Ottawa and the University of Montreal. We convened a panel of experts to identify organizations that have interests in quality of care or intensive care. We invited 10 intensivists with expertise in quality improvement (including the development, implementation and evaluation of quality initiatives), epidemiology and systematic reviews. Selection of panel members was based on 2 criteria: scientific productivity in critical care, and clinical and methodological expertise in literature review. In addition, we sought geographic representativeness among the panel members, and we included investigators from Ontario, Quebec, British Columbia and Alberta. The panel members were involved in study design and are included as authors. Two authors (P.H. and S.V.) were involved in the selection and invitation of panel members.

We identified national and provincial Canadian organizations (n = 15) (e.g., provincial health care quality councils and critical care societies) as well as a convenience sample of major international organizations (n = 13) using the panel of experts' recommendations. We specifically sought information from international intensive care societies and state-wide integrated health care systems that have contributed to the science of developing and implementing quality indicators. This international sample was not meant to be comprehensive; it was selected to benchmark Canadian findings against data from international organizations that operate in similar health care systems.

From August 2012 to January 2013, we reviewed publications and websites (i.e., grey literature) from these organizations (Table 1) to identify quality indicators related to the care of critically ill adults and children (excluding neonates). We searched website content using the keywords "intensive care unit," "critical care" and "quality indicator." If this search strategy identified no quality indicators relevant to this environmental scan, we contacted the organization by email. Organizations without publicly available quality indicators and those with quality indicators that could not be accessed online (n = 12) were contacted by 1 author (S.V.). Emails were sent to the contact address listed on each organization's website. We requested information pertaining to the organizations' quality of care initiatives and how indicators were selected. Scientific publications were not included in this study, as the aim of this scan was to characterize the organizational perspective.

Table 1: Organizations included in environmental scan.

| Category | Organization (website or source) |
|---|---|
| National critical care societies | Canadian Critical Care Society (www.canadiancriticalcare.org) |
| | Society of Critical Care Medicine (www.sccm.org) |
| | European Society of Intensive Care Medicine (www.esicm.org) |
| | Australian and New Zealand Intensive Care Society (www.anzics.com.au) |
| | Intensive Care Society (www.ics.ac.uk) |
| Provincial critical care societies | Alberta Critical Care Clinical Network (now Critical Care Strategy Clinical Network of Alberta) (email communication) |
| | BC Society of Critical Care Medicine (www.bcsccm.ca) |
| | Critical Care Secretariat (Ontario) (www.health.gov.on.ca/en/pro/programs/criticalcare) |
| Provincial health quality councils | BC Patient Safety & Quality Council (http://bcpsqc.ca/clinical-improvement) |
| | Health Quality Council of Alberta (www.hqca.ca) |
| | Saskatchewan Health Quality Council (http://hqc.sk.ca/improve-health-care-quality/measure) |
| | Manitoba Institute for Patient Safety (www.mbips.ca) |
| | Health Quality Ontario (www.hqontario.ca) |
| | New Brunswick Health Council (www.nbhc.ca) |
| National health providers | Health Canada (www.hc-sc.gc.ca/index-eng.php) |
| | National Health Service (www.nhs.uk) |
| Quality improvement and patient safety | Canadian Patient Safety Institute (www.patientsafetyinstitute.ca): Safer Healthcare Now! initiative; Critical Care Vital Signs Monitor project |
| | Canadian Healthcare Association (now HealthCareCAN) (www.healthcarecan.ca) |
| | National Quality Forum (www.qualityforum.org) |
| | Institute for Healthcare Improvement (www.ihi.org) |
| | Agency for Healthcare Research and Quality (www.ahrq.gov): Healthcare Cost and Utilization Project |
| | National Quality Measures Clearinghouse (www.qualitymeasures.ahrq.gov) |
| | Institute of Medicine (now Health and Medicine Division of the National Academies of Sciences, Engineering, and Medicine) (www.nationalacademies.org/HMD) |
| | Canadian Institute for Health Information (www.cihi.ca) |
| | Intensive Care National Audit & Research Centre (www.icnarc.org) |
| Accreditation | Accreditation Canada (www.accreditation.ca) |
| Other | Health Talk Online (www.healthtalkonline.org/Intensive_care) |
| | U.S. Department of Veterans Affairs (www.va.gov) |

Definition of quality indicators

For the purpose of this environmental scan, we defined a quality indicator as any measure proposed by an organization that could be used to monitor or improve the quality of patient care.1 We considered an indicator to have a full operational definition if it included a description, in quantifiable terms, of what to measure and the specific steps needed to measure it consistently.8 Collections of indicators ("bundles") aimed at improving patient care with respect to a single disease (e.g., bundles concerning sepsis treatment or prevention of ventilator-associated pneumonia) were counted as single composite measures.
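
The definition rules above can be expressed as a small record type. This is an illustrative sketch only, not the tool used in the scan: the field names and example wording are hypothetical, and treating a "partial" definition as one that gives only one of the two required elements (what to measure, or how to measure it) is an assumption, because the text does not define partial definitions.

```python
from dataclasses import dataclass, field
from enum import Enum


class DefinitionLevel(Enum):
    FULL = "full"        # both what to measure and how to measure it
    PARTIAL = "partial"  # assumption: only one of the two elements is given
    NONE = "none"        # identified by title only


@dataclass
class QualityIndicator:
    title: str
    what_to_measure: str = ""    # quantifiable description of the target
    measurement_steps: str = ""  # steps needed to measure it consistently
    components: list[str] = field(default_factory=list)  # non-empty for a bundle

    @property
    def is_composite(self) -> bool:
        # A bundle is counted once, as a single composite measure.
        return bool(self.components)

    def definition_level(self) -> DefinitionLevel:
        has_what = bool(self.what_to_measure.strip())
        has_how = bool(self.measurement_steps.strip())
        if has_what and has_how:
            return DefinitionLevel.FULL
        if has_what or has_how:
            return DefinitionLevel.PARTIAL
        return DefinitionLevel.NONE


# Hypothetical example records (wording invented for illustration).
vap_bundle = QualityIndicator(
    title="Ventilator-associated pneumonia bundle",
    components=["Elevation of head of bed", "Daily oral care with chlorhexidine"],
)
occupancy = QualityIndicator(
    title="ICU occupancy",
    what_to_measure="Occupied ICU beds divided by staffed ICU beds",
)
```

Here the bundle counts as one composite measure with no operational definition, and the occupancy record counts as partially defined because it states what to measure but not how.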

Data extraction

We included all quality indicators that focused on the care of critically ill patients and excluded indicators used solely in neonatal populations. To be included, an indicator had to be explicitly associated with critical care by the organization. One reviewer (S.V.) narratively summarized all identified quality indicators, including their descriptive definition, measurement criteria, rationale for selection and evidentiary basis. The evidentiary basis for each indicator was collected as described by the organization. S.V. also identified indicators for which information on early implementation results and potential unintended outcomes was available from targeted organizations. Data were extracted by 1 reviewer owing to resource limitations. Data may be obtained by contacting the corresponding author.

Synthesis of information

Two of the authors (S.V. and A.G.) assessed the redundancy of quality indicators (i.e., indicators that measured the same target) based on reported operational definitions. S.V. and A.G. also agreed on the categorization of each quality indicator according to the Donabedian3 and the Institute of Medicine4 classifications. We extended the Institute of Medicine classification by adding a "staff work-life" domain (i.e., staff turnover, nurse absenteeism and nurse overtime), as used in the Critical Care Vital Signs Monitor project.9 All disagreements were resolved by consensus between S.V. and A.G. We also reviewed, where available, the reporting of evidentiary support, the reporting of potential unintended consequences and evaluation of the implementation of quality indicator programs.
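
Redundancy assessment amounts to grouping records by the target they measure, then counting unique targets and the organizations that endorse each one. In the scan this grouping was a judgment made by 2 reviewers; in the hypothetical sketch below, a hand-written lookup table (`TARGET_OF`) stands in for that judgment, and the organizations and titles are invented.

```python
from collections import defaultdict

# Hypothetical extracted records: (organization, indicator title as published).
RECORDS = [
    ("Org A", "Hand hygiene compliance"),
    ("Org B", "Compliance with hand hygiene"),
    ("Org C", "Hand hygiene compliance rate"),
    ("Org D", "Hand hygiene audit"),
    ("Org A", "Venous thromboembolism prophylaxis"),
    ("Org B", "VTE prophylaxis prescribed"),
]

# Stand-in for the reviewers' judgment of which target each title measures.
TARGET_OF = {
    "Hand hygiene compliance": "hand hygiene",
    "Compliance with hand hygiene": "hand hygiene",
    "Hand hygiene compliance rate": "hand hygiene",
    "Hand hygiene audit": "hand hygiene",
    "Venous thromboembolism prophylaxis": "VTE prophylaxis",
    "VTE prophylaxis prescribed": "VTE prophylaxis",
}


def group_by_target(records, target_of):
    """Collect the distinct organizations behind every record that measures the same target."""
    groups = defaultdict(set)
    for org, title in records:
        groups[target_of[title]].add(org)
    return dict(groups)


groups = group_by_target(RECORDS, TARGET_OF)
unique_count = len(groups)  # 6 records collapse to 2 unique indicators
endorsed_by = {target: len(orgs) for target, orgs in groups.items()}
widely_endorsed = sorted(t for t, n in endorsed_by.items() if n >= 4)
```

The same grouping yields counts of the form "endorsed by 4 or more organizations," which is how the most commonly reported indicators are described in the Results.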

Ethics approval

This study only used data already in the public domain, and therefore ethics approval was not required.

Results

Of the 10 intensivists invited to participate, 1 could not take part because of time constraints. Our expert panel of 9 intensivists identified 28 organizations for inquiry (Table 1). A total of 222 quality indicators were identified from their publications and website content. The organizations that provided the largest number of quality indicators were the Alberta Critical Care Clinical Network (now the Critical Care Strategy Clinical Network of Alberta) (n = 55), the Institute for Healthcare Improvement (IHI) (n = 43) and the Canadian Patient Safety Institute (CPSI) (n = 32). Of the 222 quality indicators, 127 (57.2%) had a full operational definition, 88 (39.6%) had a partial definition, and 7 (3.2%) had no definition (i.e., identification by title only). After review of the definitions and titles of the 222 indicators, 127 were considered unique, of which 9 were composite measures and 3 were specific to pediatric critical care (Appendix 1, available at www.cmajopen.ca/content/5/2/E488/suppl/DC1).

Classification of quality indicators

Table 2 displays the distribution of quality indicators across a 2-dimensional matrix that merges the Donabedian classification (structure, process and outcome) with the Institute of Medicine classification (safety, effectiveness, patient-centredness, timeliness, efficiency and equity), together with the added domain of staff work-life. This typology facilitates the evaluation of domains of quality that require further assessment while underscoring the type of information that should be collected.10 As in other fields of medicine, the greatest number of available indicators were process indicators related to safety and effectiveness. From our review, structure and outcome indicators related to patient-centredness, efficiency and equity were scarce (no more than 2 indicators in any of these cells) or absent among all endorsed indicators for critically ill patients.

Table 2: Donabedian3 and Institute of Medicine4 classification of quality indicators*.

| Institute of Medicine domain | Structure, no. (%) | Process, no. (%) | Outcome, no. (%) | Total, no. (%) |
|---|---|---|---|---|
| Safety | 1 (0.5) | 33 (17.0) | 29 (14.9) | 63 (32.5) |
| Effectiveness | 8 (4.1) | 33 (17.0) | 20 (10.3) | 61 (31.4) |
| Patient-centredness | 0 (0) | 8 (4.1) | 2 (1.0) | 10 (5.2) |
| Timeliness | 3 (1.5) | 19 (9.8) | 5 (2.6) | 27 (13.9) |
| Efficiency | 2 (1.0) | 13 (6.7) | 2 (1.0) | 17 (8.8) |
| Equity | 0 (0) | 0 (0) | 0 (0) | 0 (0) |
| Staff work-life | 8 (4.1) | 4 (2.1) | 4 (2.1) | 16 (8.2) |
| Total | 22 (11.3) | 110 (56.7) | 62 (32.0) | 194 (100) |

*A total of 127 records were considered unique indicators, but an indicator could be assigned to more than 1 domain; percentages are therefore calculated from the 194 domain assignments.
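
Because indicators could fall into more than 1 domain, the percentages in Table 2 use the 194 domain assignments as the denominator, not the 127 unique indicators. The short sketch below recomputes the margins from the transcribed cell counts and lists the empty cells; `percent` is a hypothetical helper that rounds to 1 decimal place.

```python
IOM_DOMAINS = ["Safety", "Effectiveness", "Patient-centredness", "Timeliness",
               "Efficiency", "Equity", "Staff work-life"]
DONABEDIAN = ["Structure", "Process", "Outcome"]

# Cell counts transcribed from Table 2 (rows: IOM domains; columns: Donabedian).
COUNTS = [
    [1, 33, 29],
    [8, 33, 20],
    [0, 8, 2],
    [3, 19, 5],
    [2, 13, 2],
    [0, 0, 0],
    [8, 4, 4],
]


def percent(part: int, whole: int) -> float:
    """Percentage rounded to 1 decimal place, as in the table."""
    return round(100 * part / whole, 1)


grand_total = sum(sum(row) for row in COUNTS)  # domain assignments, not indicators
row_totals = {dom: sum(row) for dom, row in zip(IOM_DOMAINS, COUNTS)}
col_totals = {dim: sum(col) for dim, col in zip(DONABEDIAN, zip(*COUNTS))}
row_percent = {dom: percent(n, grand_total) for dom, n in row_totals.items()}
empty_cells = [
    (dom, dim)
    for dom, row in zip(IOM_DOMAINS, COUNTS)
    for dim, n in zip(DONABEDIAN, row)
    if n == 0
]
```

For comparison, expressing the 63 safety assignments over the 127 unique indicators would give 49.6%, which is why the 32.5% reported throughout should be read as a share of domain assignments.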

Donabedian classification

The most commonly reported structure indicators were use of private rooms for patients with antibiotic-resistant infections, nurse to patient ratio, intensive care unit (ICU) occupancy, intensivist to patient ratio and "closed" ICU structure. Process indicators that were endorsed by 4 or more organizations included compliance with hand hygiene, formal medication reconciliation process at ICU admission, prescription of venous thromboembolism prophylaxis, glycemic control protocols and implementation of rapid-response teams. In addition, 9 bundles of indicators were identified as process indicators, in the following categories: prevention of ventilator-associated pneumonia, central line insertion and maintenance, and sepsis resuscitation and management. These bundles were developed by the IHI, the Agency for Healthcare Research and Quality and the CPSI through the Safer Healthcare Now! initiative. Similarly, outcome indicators reported by 4 or more organizations included rate of catheter-related bloodstream infections, rate of ventilator-associated pneumonia, ICU-acquired Clostridium difficile or methicillin-resistant Staphylococcus aureus infections, ICU length of stay and standardized mortality ratio.

Institute of Medicine classification

The largest numbers of quality indicators were in the domains of safety (n = 63/194 [32.5%]) and effectiveness (n = 61 [31.4%]). Twenty-seven indicators (13.9%) were related to timeliness, 17 (8.8%) were related to efficiency, and 16 (8.2%) were related to staff work-life. Only 10 indicators (5.2%) were related to patient-centredness, and none were related to equity (Figure 1). The most commonly reported safety indicators were compliance with hand hygiene, formal medication reconciliation process at ICU admission, prescription of venous thromboembolism prophylaxis, glycemic control protocols, implementation of a rapid-response team, rate of catheter-related bloodstream infections, rate of ventilator-associated pneumonia, and ICU-acquired C. difficile or methicillin-resistant S. aureus infections. These safety indicators were all endorsed by 4 or more organizations. The most frequently reported effectiveness indicators were venous thromboembolism prophylaxis, glycemic control protocols, rate of catheter-related bloodstream infections, ICU length of stay and standardized mortality ratio.

Figure 1.

Number of quality indicators identified according to Institute of Medicine classification.4

Rationale for selection and supporting evidence

Organizations' rationale for selecting quality indicators was internal consensus methodology for 58 (26.1%) of the 222 identified indicators, a reference to published research for 45 (20.3%) and a reference to another organization's established quality indicators for 40 (18.0%). For 79 indicators (35.6%), no rationale was reported.

Only 5 of the 28 organizations formally evaluated the level of evidence to support their quality indicators (i.e., Grading of Recommendations, Assessment, Development and Evaluations [GRADE],11 Centers for Disease Control and Prevention12 or Agency for Healthcare Research and Quality13 framework), for 28 (12.6%) of the 222 identified indicators. Indicators that were not graded formally or informally were supported by references to literature (n = 77 [34.7%]) or were not supported at all (n = 110 [49.5%]). Of the 127 unique quality indicators, 27 (21.2%) included a formal evaluation of evidence, 6 (4.7%) included an informal evaluation, 42 (33.1%) included a reference to published literature, and 52 (40.9%) had no reference to evidence provided by the organization.
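
The rationale and evidence categories each partition the 222 identified records, so their counts should sum to 222. The sketch below checks this and shows where the abstract's figure of 119 indicators (53.6%) comes from. The number of records graded informally is not reported directly for the 222 records; the code derives it by subtraction, which is an inference from the text rather than a reported result. `percent` is a hypothetical rounding helper.

```python
def percent(part: int, whole: int) -> float:
    """Percentage rounded to 1 decimal place, as reported in the text."""
    return round(100 * part / whole, 1)


TOTAL = 222  # all identified indicator records, before removing redundancy

# Rationale for selection: four mutually exclusive categories.
rationale = {"consensus": 58, "published research": 45,
             "another organization": 40, "not reported": 79}
assert sum(rationale.values()) == TOTAL

# The abstract pools "another organization" and "not reported".
pooled = rationale["another organization"] + rationale["not reported"]

# Evidence support as reported for the 222 records.
evidence = {"formal grading": 28, "literature only": 77, "no support": 110}
# Inferred, not reported: the remaining records were graded informally.
informally_graded = TOTAL - sum(evidence.values())

rationale_pct = {k: percent(v, TOTAL) for k, v in rationale.items()}
```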

The quality indicators with the highest level of supporting evidence are presented in Table 3. Reporting organizations, evidence grading tools and interpretation of each evidence grade are given in Table 4.

Table 3: Quality indicators with highest grades of supporting evidence.

| Area of care; quality indicator | Evidence grade* | Implementation results reported |
|---|---|---|
| Mechanical ventilation | | |
| Ventilator-associated pneumonia bundle | Moderate to high† | Yes‡ |
| Elevation of head of bed | Level 1‡ | |
| Daily sedation vacation and assessment of readiness to extubate | Level 1‡ | |
| Prevention of venous thromboembolism | Level 1‡ | |
| Peptic ulcer disease prophylaxis | Level 1‡ | |
| Daily oral care with chlorhexidine | Level 1‡ | |
| Pneumonia | | |
| Blood cultures performed within 24 hr or before arrival | Evidence synthesis§ | No |
| Antibiotics consistent with guidelines | Evidence synthesis§ | No |
| Invasive procedures | | |
| Ultrasound guidance for central venous catheter insertion | High† | No |
| Central line insertion bundle | Moderate to high† | Yes‡¶ |
| Maximal barrier precautions | 1B¶; evidence synthesis§ | |
| Chlorhexidine skin antisepsis | 1A¶ | |
| Hand hygiene | 1B¶ | |
| Optimal catheter type and site selection | 1A-1B¶ | |
| Central line care bundle | | Yes |
| Daily review of line necessity | 1A¶ | |
| Aseptic lumen access | 1A¶ | |
| Catheter site and tubing care | 1B¶ | |
| Patient-centred care | | |
| Documentation of goals of care | Moderate† | No |
| Sepsis management | | |
| Sepsis management bundle | | |
| Administer low-dose steroids by standard policy | 2C‡** | No |
| Maintain adequate glycemic control | 1B‡ | No |
| Prevent excessive inspiratory plateau pressures | 1C‡** | Yes** |
| Sepsis resuscitation bundle | | |
| Serum lactate levels measured | 1B‡ | No |
| Timing of blood cultures | 1C‡** | No |
| Treat hypotension and/or elevated lactate with fluids | 1B‡ | No |
| Maintain adequate central venous oxygen saturation | 1C-2C‡ | No |
| Antibiotics given by time goal | 1B‡** | No |
| Apply vasopressors for ongoing hypotension | 1C‡ | No |
| Maintain adequate central venous pressure | 1C‡** | Yes** |
| Patients with sepsis: second litre of crystalloid administered by time goal | 1C** | No |
| Blood for culture drawn before antibiotics administered | 1C** | No |
| Glycemic control policies | Moderate to high† | No |
| After initial stabilization for patients with severe sepsis | 1B** | No |
| Validated protocol for insulin dosage adjustments | 2C** | No |
| Prevention of adverse events | | |
| Appropriate transfusion practices | Not graded | Yes‡ |
| Pharmacist on rounds | Moderate to high† | No |
| Medication reconciliation by a pharmacist | Moderate† | Yes‡ |
| Venous thromboembolism prophylaxis | Evidence synthesis§ | Yes¶†† |
| Preventing pressure ulcers | Moderate†; evidence synthesis§ | Yes‡ |
| Simulation training | Moderate to high† | No |
| Training on infusion pumps | Low† | No |
| Infection control | | |
| Isolation of patients with resistant infections | Moderate† | No |
| Hand hygiene improvement | Low† | Yes‡‡ |
| Staffing | | |
| Establishment of rapid-response team | Moderate† | Yes‡¶ |
| Staffing ratios: increasing nurse to patient ratio to prevent death | Moderate† | No |

*See Table 4.

†Agency for Healthcare Research and Quality.

‡Institute for Healthcare Improvement.

§National Quality Measures Clearinghouse.

¶Canadian Patient Safety Institute.

**Society of Critical Care Medicine, European Society of Intensive Care Medicine.

††Australian and New Zealand Intensive Care Society.

‡‡Health Quality Ontario.

Table 4: Reporting organizations, evidence grading tools and interpretation of each evidence grade.

| Organization | Evidence grading tool | Grade | Interpretation |
|---|---|---|---|
| Agency for Healthcare Research and Quality | Evidence-based Practice Centers program13 | High | High confidence that the evidence reflects the true effect. Further research is very unlikely to change our confidence in the estimate of the effect. |
| | | Moderate | Moderate confidence that the evidence reflects the true effect. Further research may change our confidence in the estimate of the effect and may change the estimate. |
| | | Low | Low confidence that the evidence reflects the true effect. Further research is likely to change our confidence in the estimate of the effect and is likely to change the estimate. |
| | | Insufficient | Evidence is either unavailable or does not permit a conclusion |
| Institute for Healthcare Improvement14 | | Level 1 | Evidence obtained from at least 1 properly designed randomized controlled trial |
| National Quality Measures Clearinghouse15 | Evidence synthesis | - | Clinical practice guideline or other peer-reviewed synthesis of the clinical research evidence |
| Canadian Patient Safety Institute | Centers for Disease Control and Prevention framework12 | Category 1A | Strongly recommended for implementation and strongly supported by well-designed experimental, clinical or epidemiologic studies |
| | | Category 1B | Strongly recommended for implementation and supported by some experimental, clinical or epidemiologic studies and a strong theoretical rationale; or an accepted practice (e.g., aseptic technique) supported by limited evidence |
| Society of Critical Care Medicine, European Society of Intensive Care Medicine16 | Grading of Recommendations, Assessment, Development and Evaluation (GRADE)11 | 1A | Strong recommendation, high quality of evidence |
| | | 1B | Strong recommendation, moderate quality of evidence |
| | | 1C | Strong recommendation, low quality of evidence |
| | | 2C | Weak recommendation, low quality of evidence |
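
Table 4 shows that the same label can mean different things under different frameworks: "1A" denotes a recommendation strength and evidence quality under GRADE but an implementation category under the Centers for Disease Control and Prevention framework. The sketch below, a hypothetical helper rather than part of any organization's tooling, decodes a label only when the framework is stated. Combinations not listed in Table 4 (e.g., 2B) are composed from GRADE's two parts, and ranges such as "1C-2C" are not handled.

```python
# Interpretations transcribed (and abbreviated) from Table 4.
GRADE_STRENGTH = {"1": "Strong recommendation", "2": "Weak recommendation"}
GRADE_QUALITY = {"A": "high", "B": "moderate", "C": "low"}
CDC_CATEGORY = {
    "1A": "Strongly recommended; strongly supported by well-designed studies",
    "1B": ("Strongly recommended; supported by some studies and a strong "
           "theoretical rationale, or an accepted practice with limited evidence"),
}


def interpret(label: str, framework: str) -> str:
    """Translate a single grade label; ranges such as '1C-2C' are not handled."""
    label = label.strip().upper()
    if framework == "GRADE":
        strength, quality = label[:1], label[1:]
        return f"{GRADE_STRENGTH[strength]}, {GRADE_QUALITY[quality]} quality of evidence"
    if framework == "CDC":
        return CDC_CATEGORY[label]
    raise ValueError(f"unknown framework: {framework!r}")
```

Requiring the framework as an argument, instead of guessing it from the label, keeps "1A" under the CDC framework from being silently read as a GRADE grade.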

Early results of implementation

Data about the implementation of quality indicators were reported by 4 of the 28 stakeholder organizations: the IHI, the CPSI, the Australian and New Zealand Intensive Care Society and Health Quality Ontario. The implementation data were positive, showing decreases in rates of ventilator-associated pneumonia (IHI ventilator bundle), central line infections (CPSI, IHI and Health Quality Ontario indicators) and "code blue" calls with the establishment of rapid-response teams (IHI and CPSI).

Three organizations (Agency for Healthcare Research and Quality, IHI and CPSI) reported potential or observed unintended consequences of implementing recommended quality indicators. The IHI and the CPSI reported risks of hypoglycemia associated with use of insulin protocols, pulmonary edema associated with fluid resuscitation, self-extubation associated with daily interruptions of sedation, bleeding associated with venous thromboembolism prophylaxis, and C. difficile and hospital-acquired pneumonia associated with implementation of ventilator-associated pneumonia bundles. The incidence of unintended consequences was not reported by any of these 3 organizations.

Interpretation

In this directed environmental scan, we identified 127 unique quality indicators related to critical care. Although there is a variety of safety and effectiveness measures that address processes of care, there are very few measures of patient-centredness and efficiency, and none of equity. Only 127 (57.2%) of the 222 identified quality indicators had a full operational definition, and only 27 (21.2%) of the 127 unique quality indicators were accompanied by a formal grading of supporting evidence. Only 4 organizations reported results of implementation.

This study was a first step to describe the breadth and depth of critical care quality indicators, by examining the grey literature of selected stakeholder organizations. Based on available online information, the organizations that we contacted consider implementation of quality indicators to be a priority. However, rigorous reporting of the rationale for selection, evidentiary basis and evaluation after implementation of these quality indicators was scarce. This may reflect the relative youth of quality improvement as a science. A similar paucity of evidence has been described in other specialized domains of care. For example, in a review of published and grey literature examining quality indicators for trauma care, Stelfox and colleagues17 found several candidate quality indicators but limited assessment of the reliability and validity of the evidence as well as limited implementation data.

Our review showed that some organizations are moving toward the use of an amalgamated selection of quality indicators spanning all domains of quality of critical care to make up a "scorecard" or "dashboard." The Critical Care Vital Signs Monitor project9 and the scorecard developed by Critical Care Services Ontario18 are examples of such quality indicator scorecards. Similarly, the Society of Obstetricians and Gynaecologists of Canada has developed a dashboard of quality indicators in maternal-newborn care by a thorough review of research and a consensus process.19 Such a process could be a consideration for the critical care community, given the large number of quality indicators that we identified and others that are likely to be identified in the future.

Based on the results of this study, we suggest that organizations consider adopting the quality indicators with the highest grade of supporting evidence (Table 3). These include ventilator-associated pneumonia bundles, measures to prevent central-line-associated bloodstream infection, venous thromboembolism prophylaxis, limited components of sepsis resuscitation and management bundles, glycemic control policies, the presence of a pharmacist on rounds and use of simulation exercises for trainees.

Limitations

This study has several limitations. First, this was primarily a Canadian scan together with a convenience sample of international organizations; it was not a comprehensive search of all pertinent organizations. Second, owing to resource limitations, data extraction was done by only 1 reviewer. There was also a delay between data extraction and synthesis (completed in January 2013) and publication; again, resource constraints precluded an updated scan. As a result of these limitations, we may have missed important information related to the development of indicators, the evidence base, syntheses or evidence assessment methods if such process reports were disseminated only through scientific publications. However, as websites and published bulletins represent the main public voice of most societies, we believe that these sources should ideally capture all major scientific information. Finally, an evaluation of the quality indicators themselves was beyond the scope of this review.

Conclusion

Many organizations across the globe have begun to endorse quality indicators, bundles and dashboards, with the aim of improving the care of critically ill patients. This environmental scan revealed a small number of quality indicators with strong supporting evidence that could be considered for adoption into clinical practice. Collaborative efforts among organizations could be aimed at the development of a consensus-based dashboard of quality indicators for critical care. Future research should describe the breadth and depth of published quality indicators in critical care and should measure the results of implementation of quality indicators and unintended consequences to further the evidentiary support of quality indicators.

Supplemental information

For reviewer comments and the original submission of this manuscript, please see www.cmajopen.ca/content/5/2/E488/suppl/DC1

References

1. Mainz J. Defining and classifying clinical indicators for quality improvement. Int J Qual Health Care. 2003;15:523–30. doi: 10.1093/intqhc/mzg081.

2. Stelfox HT, Straus SE. Measuring quality of care: considering measurement frameworks and needs assessment to guide quality indicator development. J Clin Epidemiol. 2013;66:1320–7. doi: 10.1016/j.jclinepi.2013.05.018.

3. Donabedian A. Evaluating the quality of medical care. 1966. Milbank Q. 2005;83:691–729. doi: 10.1111/j.1468-0009.2005.00397.x.

4. Committee on Quality of Health Care in America, Institute of Medicine. Crossing the quality chasm: a new health system for the 21st century. Washington: National Academy of Sciences; 2001.

5. de Vos M, Graafmans W, Keesman E, et al. Quality measurement at intensive care units: which indicators should we use? J Crit Care. 2007;22:267–74. doi: 10.1016/j.jcrc.2007.01.002.

6. Berenholtz SM, Dorman T, Ngo K, et al. Qualitative review of intensive care unit quality indicators. J Crit Care. 2002;17:1–12. doi: 10.1053/jcrc.2002.33035.

7. Rhodes A, Moreno RP, Azoulay E, et al.; Task Force on Safety and Quality of European Society of Intensive Care Medicine (ESICM). Prospectively defined indicators to improve the safety and quality of care for critically ill patients: a report from the Task Force on Safety and Quality of the European Society of Intensive Care Medicine (ESICM). Intensive Care Med. 2012;38:598–605. doi: 10.1007/s00134-011-2462-3.

8. Lloyd R. Quality health care: a guide to developing and using indicators. Burlington (MA): Jones and Bartlett Learning; 2004.

9. Chrusch CA, Martin CM. Quality improvement in critical care: selection and development of quality indicators. Can Respir J.

10. Selecting quality and resource use measures: a decision guide for community quality collaboratives. Part IV. Selecting quality and resource use measures. Rockville (MD): Agency for Healthcare Research and Quality; 2010 [updated 2014; accessed 2014 May 1]. Available: www.ahrq.gov/professionals/quality-patient-safety/quality-resources/tools/perfmeasguide/perfmeaspt4.html.

11. Atkins D, Best D, Briss PA, et al.; GRADE Working Group. Grading quality of evidence and strength of recommendations. BMJ. 2004;328:1490. doi: 10.1136/bmj.328.7454.1490.

12. Guidelines for the prevention of intravascular catheter-related infections, 2011 [updated 2017]. Atlanta: Centers for Disease Control and Prevention; 2011 [accessed 2017 June 15]. Available: https://www.cdc.gov/infectioncontrol/guidelines/bsi/index.html.

13. Owens DK, Lohr KN, Atkins D, et al. AHRQ series paper 5: grading the strength of a body of evidence when comparing medical interventions - Agency for Healthcare Research and Quality and the Effective Health Care Program. J Clin Epidemiol. 2010;63:513–23. doi: 10.1016/j.jclinepi.2009.03.009.

14. How-to guide: prevent ventilator-associated pneumonia. Cambridge (MA): Institute for Healthcare Improvement; 2012 [accessed 2017 May 17]. Available: www.ihi.org/resources/Pages/Tools/HowtoGuidePreventVAP.aspx.

15. National Quality Measures Clearinghouse. Measure summary NQMC-7332. Rockville (MD): Agency for Healthcare Research and Quality; 2012 [accessed 2013 Jan. 17]. Available: https://www.qualitymeasures.ahrq.gov/.

16. Dellinger RP, Levy MM, Carlet JM, et al. Surviving Sepsis Campaign: international guidelines for the management of severe sepsis and septic shock: 2008. Intensive Care Med. 2008;34:17–60. doi: 10.1007/s00134-007-0934-2.

17. Stelfox HT, Straus SE, Nathens A, et al. Evidence for quality indicators to evaluate adult trauma care: a systematic review. Crit Care Med. 2011;39:846–59. doi: 10.1097/CCM.0b013e31820a859a.

18. Critical Care Secretariat. Critical Care Unit Balanced Scorecard Toolkit. Toronto: Critical Care Services Ontario; 2012 [accessed 2014 May 1]. Available: https://www.criticalcareontario.ca/EN/Toolbox/Toolkits/Critical%20Care%20Unit%20Balanced%20Scorecard%20Toolkit%20%282012%29.pdf.

19. Sprague AE, Dunn SI, Fell DB, et al. Measuring quality in maternal-newborn care: developing a clinical dashboard. J Obstet Gynaecol Can. 2013;35:29–38. doi: 10.1016/s1701-2163(15)31045-8.
