Abstract

Objective

To describe and appraise the latest simulation models for direct and indirect ophthalmoscopy as a learning tool in the medical field.

Methods

The present review was conducted using four national and international databases – PubMed, Scielo, Medline and Cochrane. The initial set of articles was screened by title and abstract, followed by full-text analysis. The review comprises articles published in the past fifteen years (2002–2017).

Results

Eighty-three articles concerning simulation models for medical education were found in national and international databases, with only a few describing important aspects of ophthalmoscopy training and current application of simulation in medical education. After secondary analysis, 38 articles were included.

Conclusion

Different ophthalmoscopy simulation models have been described, but very few studies appraise the effectiveness of each individual model. Comparison studies are still required to determine the best approaches for medical education and skill enhancement through simulation models, applied to both medical students and young ophthalmologists in training.

Keywords: direct ophthalmoscopy, indirect ophthalmoscopy, skills, simulator, simulation models

Introduction

The ophthalmoscopy exam is an important medical skill that allows ophthalmologists, neurologists and emergency room physicians to diagnose many sight- and life-threatening conditions. However, the skill has never been fully mastered by the medical community,1,2 mainly due to physicians’ lack of confidence,3,4 interest5 or regular practice.6,7 The result is the loss of a major diagnostic aid that relies on a “small, portable and simple to comprehend” tool.8

Practical skills begin with regular training; thus, simulation models have been implemented for a range of procedures in the medical field. Simulation creates opportunities9,10 and allows repetitive practice without affecting patient care.11 Several models have been adapted to ophthalmoscopy, such as computer simulation,12 mannequins,13 photographs and, most recently, virtual reality.14,15

The purpose of this article is to describe the most common devices used in simulation ophthalmoscopy training and to review the latest results of articles that evaluate each model individually.

Methods

A retrospective, descriptive review of current simulation models applied to ophthalmoscopy examination was conducted, based on the past fifteen years of research (2002–2017). Four national and international databases were consulted (PubMed, Scielo, Medline and Cochrane). An initial screen yielded a total of eighty-three articles, each meeting at least one of the following criteria:

ophthalmoscopy models in ophthalmology training,

simulation models in medical education and

experimental research using simulation models in ophthalmology.

After secondary analysis, only 38 articles met two or three of the aforementioned criteria and were included in this review.

Results and discussion

Teaching ophthalmoscopy may vary from rudimentary techniques to high-technology programs. Skill assessment has always been difficult, and no effective model for evaluating the physician’s or student’s angle of view has yet been developed. Here, we describe two techniques – direct and indirect – along with well-known equipment and the latest evaluation reports.

Direct ophthalmoscopy

Models and devices

The oldest approach to teaching ophthalmoscopy relies on a simple image-quiz model, in which examiners learn normal parameters from real retinal images and then try to apply this knowledge to real patients, completing standardized questionnaires. Although limited, this approach is simple and inexpensive in ophthalmology training.

In 2004, Chung and Watzke16 described a simple model for direct examination with a handheld ophthalmoscope. It consists of a closed plastic chamber containing a 37-mm photograph of a normal retina, which the physician views through an 8-mm hole intended to simulate a mydriatic pupil. Common problems with this device include low photograph quality, intense light reflection and loss of space perception by examiners.

At the end of 2007, Pao et al17 presented a new model called THELMA (The Human Eye Learning Model Assistant), which consists of a Styrofoam mannequin head that uses retinal images in a fashion similar to the aforementioned device. Advantages of this model include better simulation of the physician–patient relationship and a sense of adequate positioning, although intense light reflection was still a problem, especially due to the paper quality of the printed photographs.

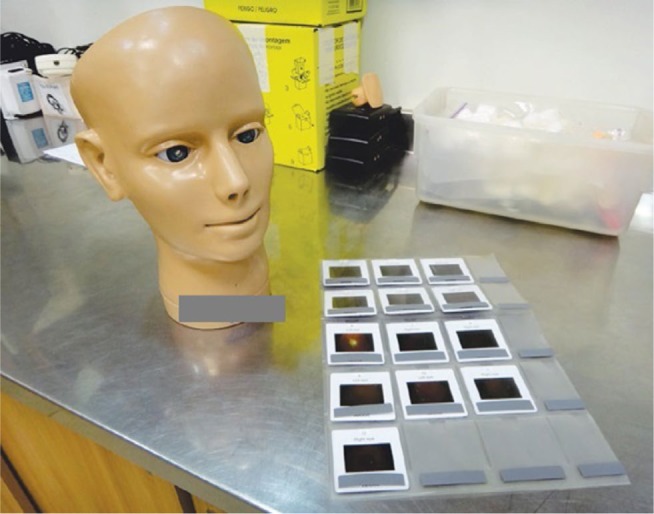

Newer models were created later. The EYE Exam Simulator (developed by Kyoto Kagaku Co., Kyoto, Japan) and the Eye Retinopathy Trainer® (developed by Adam, Rouilly Co., Sittingbourne, UK) are life-size mannequin heads with an adjustable pupil that allows access, through a handheld ophthalmoscope, to a wider, 35-mm, high-quality retina (Figure 1). Due to their higher complexity, young examiners may experience technical problems if no experienced staff are available to aid initial simulation training.18

Figure 1.

The Eye Retinopathy Trainer®, developed by Adam, Rouilly Co.

In 2014, Schulz19 presented a semi-reflective device in which the light beam reflected from the retina is split into different pathways; one beam is redirected to a video camera and displayed on a laptop computer, allowing assessment by an observer. The device was developed so that instructors could see the same field of view as the examiner, improving skill evaluation. Problems with this model include loss of synchrony between image projection and the actual examination.

Borgersen et al20 described the possible use of YouTube video lessons along with traditional theoretical lessons, since instructional videos have been widely used to guide general physical examination and basic medical skills. Problems with this method included a lack of sufficient video lessons, low-quality videos and the absence of long-term comparison studies.

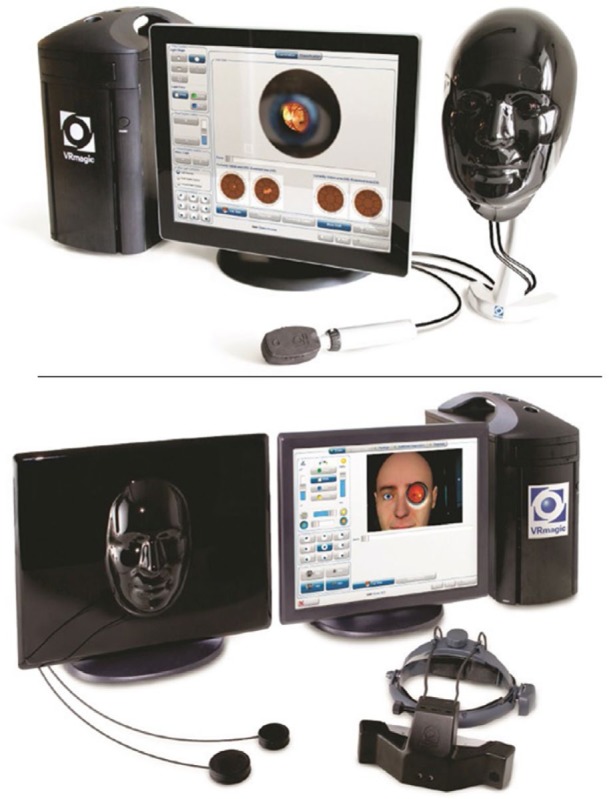

Virtual reality seems to be the latest trend in skill training. The most recent device, designed by the company VRmagic, is the EYEsi Direct Ophthalmoscope Simulator,18,21 which is considered a highly complex and humanized piece of equipment. It consists of a touch screen device connected to an artificial human face model, on which the examiner can perform an exam using the device’s own simulated handheld ophthalmoscope. This device presents unique advantages, such as mapping of visualized retinal regions, the ability to control physiologic and pathologic functions and variants, and immediate feedback with detailed explanations. Problems with this device include its high cost and the need for trained staff.11 Currently, there are no comparative studies regarding this model.

Studies in the literature

Table 1 depicts the latest reports on models and devices described earlier.

Table 1.

Direct ophthalmoscopy

| Reference | Simulation model | Evaluation method | Results |
|---|---|---|---|
| Hoeg et al (2009)22 | Plastic canister | Theoretical lessons to second-year medical students using photographs of normal retina, papilledema, diabetic retinopathy and glaucoma. No test was performed | 75.8% of students reported enhanced quality of learning |
| Swanson et al (2011)23 | Plastic canister | Standardized questionnaire applied before and after the simulation | Right answers improved from 47% to 86% (p=0.0001) |
| McCarthy et al (2012)24 | EYE Exam Simulator | Lessons given to, and comparison between, ophthalmology residents (11) and emergency medicine residents (46). No test was performed | No confidence or skill improvement |
| Larsen et al (2014)25 | EYE Exam Simulator | Blinded instructors evaluated second-year medical students’ ability to adequately describe ophthalmoscopy findings over a four-year period | Confidence and interest improvement during the four-year period |
| Kelly et al (2013)26 | Unspecified direct ophthalmoscopy simulator | First-year medical students (138) were randomized into three groups (simulator, photographs or real exam). Standardized questionnaires were applied | 71% of participants preferred real exam over simulators (skill management). Retinal photographs were associated with higher answer accuracy (p<0.001) than simulator and real-exam groups |
| Androwiki et al (2015)27 | Eye Retinopathy Trainer | Fourth-year medical students (90) were randomized into two groups (simulator vs real exam). Standardized questionnaires and objective structured clinical examinations (OSCE) were applied | Simulation group showed better performance (p<0.00001) in OSCE, although the average questionnaire scores were not different |
| Schulz et al (2015)28 | Semi-reflective device (teaching ophthalmoscope), with image projection during examination | First- and second-year medical students (55) were randomized into two groups (conventional ophthalmoscope vs teaching ophthalmoscope). Standardized questionnaires and two OSCE stations (conventional and teaching ophthalmoscope) were applied | Higher scores in OSCE station 2 (interventional) (p=0.01) and higher levels of confidence (p<0.001) |
| Chen et al (2015)29 | Non-mydriatic automatic fundus camera | Medical students (5) were assessed on their ability to identify crucial retinal structures using a traditional ophthalmoscope technique vs an automatic fundus camera | Better macula visualization in the experimental group, although no statistical difference was seen in optic disk and vasculature identification |
| Milani et al (2013)30 | Photograph match | Fourth-year medical students (134) were randomized into two groups (experimental vs control). The experimental group had their fundus photographed. Participants had 3 days to identify and match each one’s photographs | 84.3% of students using optic nerve photographs showed improvement in direct ophthalmoscopy technique compared to control group (p<0.001) |
| Gilmour and McKivigan (2016)31 | Photograph match | Medical students (33) examined standardized patients and were asked to match the findings to a photographic grid | Only 30% of students matched the photograph correctly, with an average confidence rating of 27.5%. Older students were more likely to match correctly (p=0.023) |
| Byrd et al (2014)32 | Real patient training | Second-year medical students were compared to internal medicine residents. One year later, skills were reassessed and compared with their classmates who did not participate. An assessment quiz was applied | Participants’ scores were 48% higher than those of their classmates and 37% higher than those of IM residents (p<0.001) |

Abbreviation: IM, internal medicine.

Eleven studies involving first- to fourth-year medical students were reviewed. Results showed the following: 1) improved examiner confidence, 2) improved interest in ophthalmoscopy, 3) skill enhancement and 4) better identification of anatomic structures. One study reported no self-confidence or skill improvement, although no evaluation test was applied.24 Furthermore, simulation quality must be adapted to each learner, since excessive realism and complexity can confuse examiners who are learning basic skills, especially medical students.25

Indirect ophthalmoscopy

Models and devices

In 2006, Lewallen33 presented a simple model for indirect ophthalmoscopy training. It consists of a round glass sphere set in a Styrofoam surface. Package inserts from prescription medications are placed inside the sphere and positioned according to its internal diameter. The examiner’s goal is to read the reflected words, in order to understand the basic principles of the indirect ophthalmoscopy exam. This is one of the simplest methods of training, although no comparative studies have been conducted with this model.

In 2009, Lantz34 adapted the device created by Chung and Watzke to an indirect approach. Here, the artificial pupil was designed to measure 9 mm, and the device was originally developed to train pathologists for autopsies. The model is used with a light-attached helmet, and its purpose was to estimate the postmortem period, although there is no reason it could not be adapted to general ophthalmoscopy training.

VRmagic also developed the EYEsi Indirect Ophthalmoscope Simulator,18,35 which presents the same features as the direct simulator described earlier. It also displays functions for light and lens position, although the cases and images do not differ from those included in the direct simulator. Both devices are presented in Figure 2.

Figure 2.

EYEsi Ophthalmoscope Simulator, developed by VRmagic.

Note: Top, direct simulator; bottom, indirect simulator.

Studies in the literature

Table 2 depicts the latest reports on models and devices described earlier.

Table 2.

Indirect ophthalmoscopy

| Reference | Simulation model | Evaluation method | Results |
|---|---|---|---|
| Leitritz et al (2014)36 | EYEsi Indirect Ophthalmoscope Simulator | Medical students (37) were randomized into two groups (control vs simulator). Real patient examination and standardized questionnaires were applied | Simulation group had a higher training score than the conventional group (p<0.003), although no difference was noted in questionnaire scores |
| Chou et al (2016)37 | EYEsi Indirect Ophthalmoscope Simulator | Medical students (25) were compared to ophthalmologists/optometrists (17). Standardized questionnaires and simulated cases were applied | Trained professionals showed higher scores on all simulated cases and a shorter mean duration of examination (p<0.0001), although medical students showed higher scores in questionnaires |

Only two articles describing indirect ophthalmoscopy training were considered. Both studies employed the same simulator and showed higher performance in the group that used the device (p<0.003; p<0.0001).

Conclusion

Simulation is a helpful tool in ophthalmoscopy training, since it can provide a better understanding of skill management. Constant training is a well-known strategy for skill enhancement, although it may initially lead physicians and students to forget protocols developed to guarantee patient comfort and safety.

Although recent models are promising, there is still a lack of studies that verify their actual efficiency and compare them with traditional and rudimentary techniques. However, the preliminary results presented in this review seem to be satisfactory. Further comparison studies are required for better characterization of newer simulation models.

Footnotes

Disclosure

The authors report no conflict of interest in this work.

References

- 1. Wu EH, Fagan MJ, Reinert SE, Diaz JA. Self-confidence in and perceived utility of the physical examination: a comparison of medical students, residents, and faculty internists. J Gen Intern Med. 2007;22(12):1725–1730. doi: 10.1007/s11606-007-0409-8.

- 2. Holmboe ES. Faculty and the observation of trainees’ clinical skills: problems and opportunities. Acad Med. 2004;79(1):16–22. doi: 10.1097/00001888-200401000-00006.

- 3. Gupta RR, Lam WC. Medical students’ self-confidence in performing direct ophthalmoscopy in clinical training. Can J Ophthalmol. 2006;41(2):169–74. doi: 10.1139/I06-004.

- 4. Shuttleworth GN, Marsh GW. How effective is undergraduate and postgraduate teaching in ophthalmology? Eye (Lond). 1997;11(Pt 5):744–750. doi: 10.1038/eye.1997.189.

- 5. Lopes Filho JB, Leite RA, Leite DA, de Castro AR, Andrade LS. Avaliação dos conhecimentos oftalmológicos básicos em estudantes de Medicina da Universidade Federal do Piauí. Rev Bras Oftalmol. 2011;70(1):27–31. Portuguese.

- 6. Morad Y, Barkana Y, Avni I, Kozer E. Fundus anomalies: what the pediatrician’s eye can’t see. Int J Qual Health Care. 2004;16(5):363–365. doi: 10.1093/intqhc/mzh065.

- 7. Lippa LM. Ophthalmology in the medical school curriculum: reestablishing our value and effecting change. Ophthalmology. 2009;116(7):1235–1236. doi: 10.1016/j.ophtha.2009.01.012.

- 8. Sit M, Levin AV. Direct ophthalmoscopy in pediatric emergency care. Pediatr Emerg Care. 2001;17(3):199–204. doi: 10.1097/00006565-200106000-00013.

- 9. Weller JM, Nestel D, Marshall SD, Brooks PM, Conn JJ. Simulation in clinical teaching and learning. Med J Aust. 2012;196(9):594. doi: 10.5694/mja10.11474.

- 10. Benbassat J, Polak BC, Javitt JC. Objectives of teaching direct ophthalmoscopy to medical students. Acta Ophthalmol. 2012;90(6):503–507. doi: 10.1111/j.1755-3768.2011.02221.x.

- 11. Grodin MH, Johnson TM, Acree JL, Glaser BM. Ophthalmic surgical training: a curriculum to enhance surgical simulation. Retina. 2008;28(10):1509–1514. doi: 10.1097/IAE.0b013e31818464ff.

- 12. Labuschagne MJ. The role of simulation training in ophthalmology. Cont Med Educ. 2013;31(4):157–159.

- 13. Xie P, Hu Z, Zhang X, et al. Application of 3-dimensional printing technology to construct an eye model for fundus viewing study. PLoS One. 2014;9(11):e109373. doi: 10.1371/journal.pone.0109373.

- 14. Sikder S, Tuwairqi K, Al-Kahtani E, Myers WG, Banerjee P. Surgical simulators in cataract surgery training. Br J Ophthalmol. 2014;98(2):154–158. doi: 10.1136/bjophthalmol-2013-303700.

- 15. Ting DSW, Sim SSKP, Yau CWL, Rosman M, Aw AT, Yeo IYS. Ophthalmology simulation for undergraduate and postgraduate clinical education. Int J Ophthalmol. 2016;9(6):920–924. doi: 10.18240/ijo.2016.06.22.

- 16. Chung KD, Watzke RC. A simple device for teaching direct ophthalmoscopy to primary care practitioners. Am J Ophthalmol. 2004;138(3):501–502. doi: 10.1016/j.ajo.2004.04.009.

- 17. Pao KY, Uhler TA, Jaeger EA. Creating THELMA – The Human Eye Learning Model Assistant. J Acad Ophthalmol. 2008;1:25–29.

- 18. Ricci LH, Ferraz CA. Simulation models applied to practical learning and skill enhancement in direct and indirect ophthalmoscopy: a review. Arq Bras Oftalmol. 2014;77(5):334–338. doi: 10.5935/0004-2749.20140084.

- 19. Schulz C. A novel device for teaching fundoscopy. Med Educ. 2014;48(5):524–525. doi: 10.1111/medu.12434.

- 20. Borgersen NJ, Henriksen MJ, Konge L, Sørensen TL, Thomsen AS, Subhi Y. Direct ophthalmoscopy on YouTube: analysis of instructional YouTube videos’ content and approach to visualization. Clin Ophthalmol. 2016;10:1535–1541. doi: 10.2147/OPTH.S111648.

- 21. VRmagic Co. Direct Ophthalmoscope Simulator. [Accessed April 20, 2016]. Available from: http://www.vrmagic.com/fileadmin/downloads/simulator_brochures/Eyesi_Direct_Brochure_131029_EN_WEB_DP.pdf.

- 22. Hoeg TB, Sheth BP, Bragg DS, Kivlin JD. Evaluation of a tool to teach medical students direct ophthalmoscopy. WMJ. 2009;108(1):24–26.

- 23. Swanson S, Ku T, Chou C. Assessment of direct ophthalmoscopy teaching using plastic canisters. Med Educ. 2011;45(5):520–521. doi: 10.1111/j.1365-2923.2011.03987.x.

- 24. McCarthy DM, Leonard HR, Vozenilek JA. A new tool for testing and training ophthalmoscopic skills. J Grad Med Educ. 2012;4(1):92–96. doi: 10.4300/JGME-D-11-00052.1.

- 25. Larsen P, Stoddart H, Griess M. Ophthalmoscopy using an eye simulator model. Clin Teach. 2014;11(2):99–103. doi: 10.1111/tct.12064.

- 26. Kelly LP, Garza PS, Bruce BB, Graubart EB, Newman NJ, Biousse V. Teaching ophthalmoscopy to medical students (the TOTeMS study). Am J Ophthalmol. 2013;156(5):1056–1061. doi: 10.1016/j.ajo.2013.06.022.

- 27. Androwiki JE, Scravoni IA, Ricci LH, Fagundes DJ, Ferraz CA. Evaluation of a simulation tool in ophthalmology: application in teaching fundoscopy. Arq Bras Oftalmol. 2015;78(1):36–39. doi: 10.5935/0004-2749.20150010.

- 28. Schulz C, Moore J, Hassan D, Tamsett E, Smith CF. Addressing the ‘forgotten art of fundoscopy’: evaluation of a novel teaching ophthalmoscope. Eye (Lond). 2016;30(3):375–384. doi: 10.1038/eye.2015.238.

- 29. Chen M, Swinney C, Chen M, Bal M, Nakatsuka A. Comparing the utility of the non-mydriatic fundus camera to the direct ophthalmoscope for medical education. Hawaii J Med Public Health. 2015;74(3):93–95.

- 30. Milani BY, Majdi M, Green W, et al. The use of peer optic nerve photographs for teaching direct ophthalmoscopy. Ophthalmology. 2013;120(4):761–765. doi: 10.1016/j.ophtha.2012.09.020.

- 31. Gilmour G, McKivigan J. Evaluating medical students’ proficiency with a handheld ophthalmoscope: a pilot study. Adv Med Educ Pract. 2016;8:33–36. doi: 10.2147/AMEP.S119440.

- 32. Byrd JM, Longmire MR, Syme NP, Murray-Krezan C, Rose L. A pilot study on providing ophthalmic training to medical students while initiating a sustainable eye care effort for the underserved. JAMA Ophthalmol. 2014;132(3):304–309. doi: 10.1001/jamaophthalmol.2013.6671.

- 33. Lewallen S. A simple model for teaching indirect ophthalmoscopy. Br J Ophthalmol. 2006;90(10):1328–1329. doi: 10.1136/bjo.2006.096784.

- 34. Lantz PE. A simple model for teaching postmortem monocular indirect ophthalmoscopy. J Forensic Sci. 2009;54(3):676–677. doi: 10.1111/j.1556-4029.2009.01030.x.

- 35. VRmagic Co. Indirect Ophthalmoscope Simulator. [Accessed April 21, 2016]. Available from: https://www.vrmagic.com/fileadmin/downloads/simulator_brochures/Eyesi_Indirect_Brosch%C3%BCre_160510_EN_WEB.pdf.

- 36. Leitritz MA, Ziemssen F, Suesskind D, et al. Critical evaluation of the usability of augmented reality ophthalmoscopy for the training of inexperienced examiners. Retina. 2014;34(4):785–791. doi: 10.1097/IAE.0b013e3182a2e75d.

- 37. Chou J, Kosowsky T, Payal AR, Gonzalez Gonzalez LA, Daly MK. Construct and face validity of the Eyesi indirect ophthalmoscope simulator. Retina. 2016 Dec 29; doi: 10.1097/IAE.0000000000001438. Epub.