Keywords: electroencephalography, frequency tagging, auditory contrast, sensory discrimination

Abstract

The human auditory system has a remarkable ability to detect rapid changes in fast, continuous acoustic sequences, as best illustrated in speech and music. However, the neural processing of rapid auditory contrasts remains largely unclear, probably because of the lack of methods to objectively dissociate the response components specifically related to the contrast from the other components of the response to a sequence of fast, continuous sounds. To overcome this issue, we tested a novel use of the frequency-tagging approach that allows contrast-specific neural responses to be tracked based on their expected frequencies. The EEG was recorded while participants listened to 40-s sequences of sounds presented at 8 Hz. A tone or interaural time contrast was embedded every fifth sound (AAAAB), such that a response observed in the EEG at exactly 8 Hz/5 (1.6 Hz) or its harmonics should be the signature of contrast processing by neural populations. Contrast-related responses were successfully identified, even for very fine contrasts. Moreover, analysis of the time course of the responses revealed a stable amplitude over repetitions of the AAAAB pattern in the sequence, except for the response to perceptually salient contrasts, which showed a buildup and decay across repetitions of the sounds. Overall, this new combination of frequency tagging with an oddball design provides a valuable complement to the classic transient evoked potentials approach, especially in the context of rapid auditory information. Specifically, we provide objective evidence of the neural processing of contrasts embedded in fast, continuous sound sequences.

NEW & NOTEWORTHY Recent theories suggest that neurodevelopmental auditory disorders such as dyslexia might stem from impaired processing of fast auditory changes, highlighting how critical the encoding of rapid acoustic information is for auditory communication. Here, we present a novel electrophysiological approach to capture, in humans, neural markers of contrasts in fast, continuous tone sequences. Contrast-specific responses were successfully identified, even for very fine contrasts, providing direct insight into the encoding of rapid auditory information.

The human auditory system has a remarkable ability to detect changes even when they are embedded in fast, continuous sequences, as best illustrated in speech and music. For example, understanding speech or enjoying a musical piece requires the ability to process acoustic contrasts occurring at a fast rate, often well within the subsecond range (Bregman 1990). Psychoacoustic investigations of the phenomenon have shown that the rate of the sequence in which the contrast is embedded is a critical parameter influencing contrast processing (Albouy et al. 2016; Freyman and Nelson 1986). In normal listeners, the ability to discriminate between two successive sounds of distinct frequencies is positively correlated with the duration of the sounds and is also enhanced by the presence of a silent gap between them (Albouy et al. 2016; Freyman and Nelson 1986). Specifically, as duration increases, the sensitivity to a frequency contrast between two successive tones increases rapidly over a range of short durations (starting at 5 ms), then more gradually for longer durations (100 ms), reaching an asymptote at ∼200 ms (Freyman and Nelson 1986). This observation has been interpreted as an effect of backward masking, through which the perceptual analysis of a sound, or accumulation of evidence, is interrupted by a subsequent sound presented soon after the first one, thus explaining the poorer discrimination observed with shorter tone durations (Demany and Semal 2005, 2007; Massaro and Idson 1977). Relatedly, difficulties in processing brief, rapidly changing acoustic information have been described in auditory neurodevelopmental disorders such as dyslexia, specific language impairment, and congenital amusia (Albouy et al. 2016; Goswami 2015; Tallal et al. 1993; Tallal 2004), highlighting the importance of processing auditory information that arrives rapidly and sequentially.

To investigate the underlying neural mechanisms, electroencephalographic (EEG) recordings are well suited, as they provide measures of contrast processing with high temporal resolution. Moreover, these measures have the advantage of being independent of behavioral performance, thus avoiding contamination by irrelevant decisional processes that could bias them, especially in impaired individuals. Typically, in these EEG studies, the detection of auditory contrasts is associated with a particular brain response referred to as the mismatch negativity (MMN), identified in the human EEG ∼150–200 ms after contrast onset (for recent reviews, see, e.g., Escera and Malmierca 2014, May and Tiitinen 2010, and Näätänen et al. 2007). More recently, a number of studies have shown that earlier components in the range of the midlatency auditory-evoked potentials (i.e., a series of positive and negative deflections occurring between 10 and 50 ms after stimulus onset) can also be modulated by a contrast in acoustic features, such as a contrast in the frequency of tones (further referred to as “tone contrast” in the current study; see Escera and Malmierca 2014, Kraus et al. 1994, and Nelken 2014; see Grimm and Escera 2012 and Grimm et al. 2016 for review).

However, most of these EEG studies have investigated deviant-related neural responses using relatively long interstimulus intervals (usually longer than 150 ms, between 200 and 500 ms on average, including silent gaps between successive items). In contrast, surprisingly few studies have investigated the neural processing of contrasts embedded in fast, continuous sequences, although this range seems critical for understanding the brain mechanisms underlying the processing of brief, rapidly changing acoustic information and its impairment (e.g., in neurodevelopmental disorders such as dyslexia; see Albouy et al. 2016). Moreover, previous studies measuring the MMN have failed to capture significant deviance responses, especially when the contrast was regular, and thus predictable, and presented at a high rate, as is often the case in speech and music (see Grimm et al. 2016; Sussman et al. 1998).

The current study aimed to investigate the neural processing of contrasts embedded in fast, continuous sound sequences, that is, when the contrast is presented at a rate that appears to be critical for auditory communication in daily life and for its impairment. To this aim, we used an alternative approach that combines an oddball design with a frequency-tagging method (see, e.g., de Heering and Rossion 2015, Liu-Shuang et al. 2014, Norcia et al. 2015, and Rossion 2014 for the first studies using this approach in high-level vision). This approach was proposed as a means to objectively dissociate contrast-specific responses from the other response components elicited by the continuous sequence of inputs, based on the frequency expected from the structure of the sequence, irrespective of the rate at which stimuli are presented. In the current study, the continuous sequence of inputs consisted of pure tones of 125-ms duration repeated for 40 s. Hence, the envelope of acoustic energy was expected to elicit a periodic EEG response at 8 Hz (and harmonics), corresponding to the frequency of tone repetition in the sequence (Fig. 1). Most importantly, a contrasting stimulus was introduced every fifth tone in the sequence according to an AAAAB pattern, such that an EEG response observed exactly at 8 Hz/5 (1.6 Hz) and harmonics would be the signature of contrast detection by neural populations and/or its consequences.
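A short Python sketch can make the frequency bookkeeping behind this design concrete. It is illustrative only and uses just the values stated above (125-ms tones, an AAAAB pattern, 40-s sequences); the variable names are hypothetical. Because both the base and the contrast frequencies complete a whole number of cycles within a 40-s epoch, each falls exactly on one bin of the resulting 0.025-Hz-resolution spectrum.

```python
# Illustrative sketch: expected frequency-tagged responses for an AAAAB sequence.
tone_s = 0.125      # tone duration = onset-to-onset interval (no silent gaps)
pattern_len = 5     # AAAAB: one contrasting B tone every 5th position
epoch_s = 40.0      # sequence (and analysis epoch) length

base_hz = 1.0 / tone_s                 # tone repetition rate (8 Hz)
contrast_hz = base_hz / pattern_len    # rate of the B tones (1.6 Hz)
resolution_hz = 1.0 / epoch_s          # spectral resolution of a 40-s DFT (0.025 Hz)

# Cycles completed within the epoch = index of the DFT bin holding that frequency.
base_bin = epoch_s / tone_s                        # 320.0
contrast_bin = epoch_s / (tone_s * pattern_len)    # 64.0
assert base_bin.is_integer() and contrast_bin.is_integer()

# Every 5th harmonic of the contrast frequency coincides with a base harmonic
# (5 x 1.6 Hz = 8 Hz), so those bins mix contrast and base activity.
contrast_harmonics = [round(contrast_hz * k, 3) for k in range(1, 6)]
print(base_hz, contrast_hz, resolution_hz, int(base_bin), int(contrast_bin))
print(contrast_harmonics)
```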

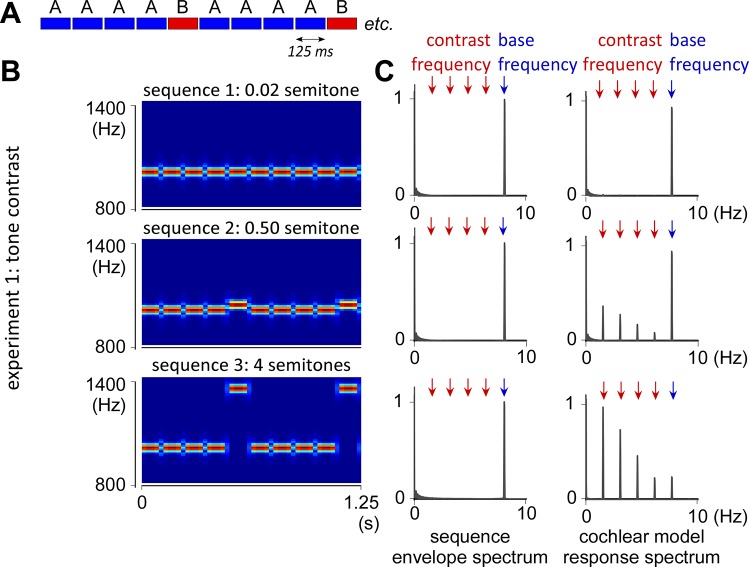

Fig. 1.

AAAAB pattern and stimuli of experiment 1 with tone contrast. A: structure of the AAAAB pattern repeated over the 40-s sequences. B: time-frequency maps of the AAAAB pattern in experiment 1. The tone contrast between A and B tones was 0.02 (undetectable contrast; sequence 1), 0.50 (small detectable contrast; sequence 2), or 4 semitones (large detectable contrast; sequence 3). C, left: frequency spectrum of the sound envelope of the 3 sequences, with a peak at 8 Hz corresponding to the 125-ms repetition rate of the tones (i.e., base frequency). C, right: frequency spectrum of the cochlear response predicted by a cochlear channel model (displayed as normalized relative to the maximum magnitude within each sequence). Note that the cochlear channel model predicts an additional peak at 8 Hz/5 and harmonics, corresponding to the contrast occurrence. Also note that the predicted amplitude of the response at the base frequency was much higher than that at the contrast frequency in sequence 2 (small contrast). Conversely, the predicted amplitude of the base response was much smaller than that of the contrast response in sequence 3 (large contrast).

In two experiments, we tested two different types of auditory contrast: tone contrast and interaural time contrast. In the first experiment, the tone contrast was undetectable, small, or large according to the Western musical scale. At the rate of presentation used here, the large contrast was also expected to induce a perceptual effect of “stream segregation,” in which the A and B tones are perceived as distinct auditory streams (Bregman 1990; van Noorden 1975). Furthermore, because contrast responses observed in this first experiment could be due to effects occurring at a peripheral level, as tones of different frequencies do not activate the same peripheral cochlear afferents, we conducted a second experiment in which a difference in interaural timing was used to induce a contrast in the perceived spatial location of A and B tones. In this second experiment, the peripheral cochlear channels activated by the A and B tones were strictly identical, and the observation of a contrast-specific EEG response would thus demonstrate that the processing of auditory contrast embedded in fast, continuous sequences does not rely solely on peripheral cochlear channeling.

MATERIALS AND METHODS

Participants

Twenty-four healthy participants took part in the study after providing written informed consent: 12 volunteers in experiment 1 (5 males, all right-handed except for 1, mean age 27 ± 3 yr) and 12 volunteers in experiment 2 (6 males, all right-handed, mean age 29 ± 4 yr). All participants were familiar mainly with music produced according to the Western musical scale, but none were professional musicians (none had more than 10 yr of musical training). They had no history of hearing, neurological, or psychiatric disorders and were not taking any drugs at the time of the experiment. The study was approved by the Ethics Committee of the Catholic University of Louvain (Brussels, Belgium).

Experiment 1: Tone Contrast

The stimulus consisted of a pattern of A and B tones arranged as AAAAB (Fig. 1A). This pattern was continuously looped to generate 40-s sequences. A and B were pure tones of 125-ms duration (i.e., 8-Hz presentation rate), with 10-ms rise and fall cosine ramps. Given the structure of the sequence, an EEG response observed at 8 Hz and harmonics should correspond to the neural response elicited by the acoustic energy of the sequence (further referred to as the “base response”), whereas an EEG response observed at 8 Hz/5 and harmonics should correspond to a neural response specifically related to the contrast between A and B sounds (“contrast response”) (de Heering and Rossion 2015; Jonas et al. 2016; Liu-Shuang et al. 2014; Rossion 2014). Note that the term “contrast response” refers to the differential response between A and B tones, which would arise if and only if the responses elicited by the A and B tones differed substantially from each other (see, e.g., Lochy et al. 2016 and Retter and Rossion 2016).

In condition 1 (baseline condition), the contrast between A and B was 0.02 semitone (tone A = 1,000 Hz, tone B = tone A + 0.02 semitone = 1,001.2 Hz). In condition 2, the contrast was 0.50 semitone (tone A = 1,000 Hz, tone B = 1,029.3 Hz). In condition 3, the contrast was 4 semitones (tone A = 1,000 Hz, tone B = 1,259.9 Hz) (Fig. 1B). The three conditions were presented in three separate blocks, whose order was counterbalanced across participants. In each block, the 40-s auditory sequence was repeated eight times (8 trials/condition). The onset of each sequence was self-paced and preceded by a 3-s foreperiod. The experimenter remained in the recording room at all times to monitor compliance with the procedure and instructions throughout the experiment. To ensure that participants focused their attention on the sounds, they were asked to listen carefully and to report at the end of each sequence any irregularity in the duration, pitch, or intensity of the sounds. There was no actual irregularity in any of the sequences; nevertheless, participants reported detecting subtle changes in about half of the trials on average.
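The stimulus construction described above can be sketched in Python with NumPy. This is a hypothetical reimplementation, not the authors' Matlab code: the 48-kHz sampling rate and the raised-cosine shape of the 10-ms ramps are assumptions, and calibration to ∼70 dB SPL is not modeled. B-tone frequencies follow the equal-tempered relation f_B = f_A · 2^(s/12) for a contrast of s semitones, which reproduces the values given above when rounded to 0.1 Hz.

```python
import numpy as np

FS = 48_000  # assumed audio sampling rate (not stated in the text); 125 ms = 6,000 samples


def semitones_above(f_hz, semitones):
    """Equal-tempered frequency `semitones` above f_hz."""
    return f_hz * 2.0 ** (semitones / 12.0)


def ramped_tone(freq_hz, dur_s=0.125, ramp_s=0.010, fs=FS):
    """Pure tone with raised-cosine onset/offset ramps (ramp shape is an assumption)."""
    n = int(round(dur_s * fs))
    n_ramp = int(round(ramp_s * fs))
    t = np.arange(n) / fs
    env = np.ones(n)
    ramp = 0.5 * (1.0 - np.cos(np.pi * np.arange(n_ramp) / n_ramp))  # rises from 0 toward 1
    env[:n_ramp] = ramp
    env[-n_ramp:] = ramp[::-1]
    return env * np.sin(2.0 * np.pi * freq_hz * t)


def aaaab_sequence(f_a_hz, contrast_semitones, seq_s=40.0, fs=FS):
    """Loop the 0.625-s AAAAB pattern to fill seq_s seconds."""
    a = ramped_tone(f_a_hz, fs=fs)
    b = ramped_tone(semitones_above(f_a_hz, contrast_semitones), fs=fs)
    pattern = np.concatenate([a, a, a, a, b])
    n_patterns = int(round(seq_s / 0.625))
    return np.tile(pattern, n_patterns)


# The three conditions of experiment 1 (tone A = 1,000 Hz).
for st in (0.02, 0.50, 4.0):
    print(f"{st:>5} semitones -> tone B = {semitones_above(1000.0, st):.1f} Hz")
seq = aaaab_sequence(1000.0, 4.0)   # 64 patterns, 40 s
```

Generating tones sample-exactly (6,000 samples per tone at the assumed rate) keeps the pattern period at exactly 0.625 s, so the sequence stays locked to the 8-Hz and 1.6-Hz tagging frequencies over all 40 s.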

Based on previous behavioral studies on contrast detection and auditory stream segregation (see, e.g., Bregman 1990 and van Noorden 1975), a contrast of 0.02 semitone embedded in a continuous sequence presented at 8 Hz was expected to be undetectable. Conversely, the 0.50 and 4 semitone contrasts were expected to be detectable, and the sequence with the 4 semitone contrast was additionally expected to induce stream segregation. To confirm this, every participant was asked at the end of each condition whether “there was one or two distinct notes repeated over the sequences,” as an index of discrimination between A and B tones. They were also asked whether they had “a feeling that an additional, high-pitched sound was played on top of the stream of fast repeated low-pitched tone,” as an index of stream segregation between A and B tones. The auditory stimuli were created and presented using Matlab (MathWorks), with binaural presentation through headphones (BeyerDynamic DT 990 PRO) at a comfortable hearing level corresponding to ∼70 dB SPL.

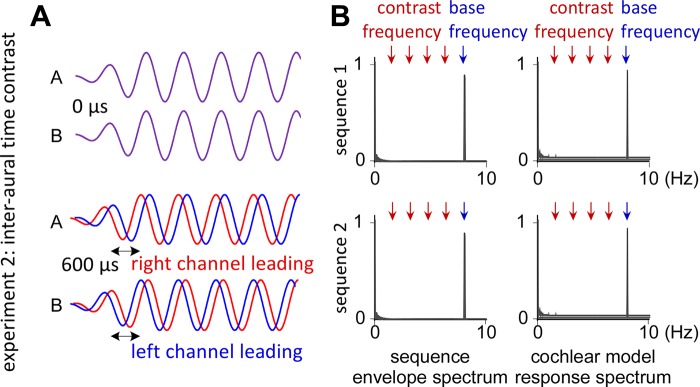

Experiment 2: Interaural Time Contrast

The sequence design and task of experiment 2 were identical to those of experiment 1, except for the fact that the contrast between A and B tones was based on a difference in interaural timing, inducing an illusion of spatial contrast. Because interaural timing contrasts are known to be processed in the central relays of the ascending auditory pathway after the cochlea (see e.g., Yost 2000), experiment 2 allowed for testing whether contrast-related responses embedded in fast, continuous auditory sequences and identified with the frequency-tagging approach could be elicited by mechanisms other than differences in peripheral cochlear channeling.

As in experiment 1, an undetectable 0.02 semitone frequency contrast between A and B tones was used as the baseline condition (tone A = 200 Hz, tone B = tone A + 0.02 semitone = 200.2 Hz). Lower tone frequencies were used in experiment 2 than in experiment 1 because a longer cycle period was required to introduce the interaural time delay. In condition 2, A tones were presented with the left (or right) ear leading the right (or left) ear by 600 µs, whereas B tones were presented with the opposite ear leading by 600 µs (Fig. 2). This interaural timing contrast induced an illusion of spatial source contrast between A and B tones and was also expected to induce a perceptual phenomenon of stream segregation based on these perceived separate spatial sources for A and B tones (segregated auditory streams are usually associated with distinct sound sources; see Bregman 1990 and van Noorden 1975). To confirm this, participants were asked at the end of each condition whether or not they had “a feeling that one sound was coming from one side on top of the stream of fast repeated tones coming from the opposite side.” The side of the perceived location of A and B tones was counterbalanced across participants.
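A minimal sketch of how an interaural time contrast can be imposed on a pure tone, in Python with NumPy. The implementation choices are assumptions rather than the authors' procedure: the 600-µs delay is applied analytically to the carrier phase in the lagging ear (600 µs is not a whole number of samples at the assumed 48-kHz rate), and the onset/offset ramps are kept identical in both ears. The hypothetical helper itd_phase_cycles illustrates one plausible reading of why a longer cycle period was needed: at 200 Hz, 600 µs is 0.12 of a period, whereas at 1,000 Hz it is 0.6 of a period, more than half a cycle, so the ongoing phase cue would no longer indicate unambiguously which ear leads.

```python
import numpy as np

FS = 48_000  # assumed audio sampling rate; 600 µs = 28.8 samples, so the delay is
             # applied to the sine phase rather than by shifting whole samples


def itd_phase_cycles(freq_hz, itd_s):
    """Interaural delay expressed as a fraction of one carrier period."""
    return freq_hz * itd_s


def itd_tone(freq_hz, itd_s, lead="left", dur_s=0.125, ramp_s=0.010, fs=FS):
    """Stereo (n, 2) tone whose carrier in the lagging ear is delayed by itd_s.

    Assumption: only the ongoing fine structure is delayed; the cosine ramps
    are identical in both ears.
    """
    n = int(round(dur_s * fs))
    n_ramp = int(round(ramp_s * fs))
    t = np.arange(n) / fs
    env = np.ones(n)
    ramp = 0.5 * (1.0 - np.cos(np.pi * np.arange(n_ramp) / n_ramp))
    env[:n_ramp] = ramp
    env[-n_ramp:] = ramp[::-1]
    leading = env * np.sin(2.0 * np.pi * freq_hz * t)
    lagging = env * np.sin(2.0 * np.pi * freq_hz * (t - itd_s))
    left, right = (leading, lagging) if lead == "left" else (lagging, leading)
    return np.column_stack([left, right])


# Condition 2 of experiment 2: A tones lead in one ear, B tones in the other.
a = itd_tone(200.0, 600e-6, lead="left")
b = itd_tone(200.0, 600e-6, lead="right")
pattern = np.concatenate([a, a, a, a, b])   # one 0.625-s AAAAB pattern, 2 channels

print(f"{itd_phase_cycles(200.0, 600e-6):.2f} cycle at 200 Hz")
print(f"{itd_phase_cycles(1000.0, 600e-6):.2f} cycle at 1,000 Hz")
```

Swapping the leading ear between A and B tones, rather than delaying only B, keeps both tone types identical in each ear's spectrum, which is the property the experiment relies on.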

Fig. 2.

Stimuli of experiment 2 with interaural time contrast. A: in condition 1, there was no interaural time contrast between A and B tones (top). In condition 2, the A and B tones were presented with an interaural time contrast of 600 µs (bottom), inducing an illusion of 180° azimuth contrast between A and B tones and thus an effect of stream segregation due to the perceived separate spatial sources. B: frequency spectrum of the sound envelope and of the cochlear signals obtained using a cochlear channel model of response (displayed as normalized relative to the maximum magnitude within each sequence). Note that, in both conditions, no peaks emerge at contrast frequencies, because interaural time contrasts cannot be processed by cochlear channels.

Sound Sequence Analysis

The envelope of the different 40-s sound sequences of experiments 1 and 2 was extracted using the Hilbert function implemented in Matlab to obtain a time-varying estimate of the instantaneous amplitude of the sound envelope (Cirelli et al. 2016; Nozaradan et al. 2016a, 2016b, 2016c). The obtained waveforms were then transformed into the frequency domain using a discrete Fourier transform to obtain a frequency spectrum of envelope magnitude, as displayed in Figs. 1 and 2 (for experiments 1 and 2, respectively). Hence, this analysis yielded a model of response corresponding to the envelope of acoustic energy of the sequences.
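The envelope analysis can be sketched in Python, using scipy.signal.hilbert in place of the Matlab hilbert function. The compact AAAAB generator repeats the assumptions of raised-cosine ramps, and the 8-kHz sampling rate is chosen only to keep the example light. Because A and B tones have the same amplitude, the envelope spectrum of even the 4-semitone sequence is expected to show a clear 8-Hz peak but essentially nothing at 1.6 Hz.

```python
import numpy as np
from scipy.signal import hilbert


def aaaab(f_a_hz, semitones, fs, seq_s=40.0, dur_s=0.125, ramp_s=0.010):
    """Compact AAAAB generator (raised-cosine ramps are an assumption)."""
    n, n_r = int(round(dur_s * fs)), int(round(ramp_s * fs))
    t = np.arange(n) / fs
    env = np.ones(n)
    env[:n_r] = 0.5 * (1.0 - np.cos(np.pi * np.arange(n_r) / n_r))
    env[-n_r:] = env[:n_r][::-1]
    a = env * np.sin(2 * np.pi * f_a_hz * t)
    b = env * np.sin(2 * np.pi * f_a_hz * 2 ** (semitones / 12) * t)
    return np.tile(np.concatenate([a, a, a, a, b]), int(round(seq_s / 0.625)))


def envelope_spectrum(x, fs):
    """Hilbert envelope, then single-sided DFT amplitude spectrum (mean removed)."""
    env = np.abs(hilbert(x))
    amp = 2 * np.abs(np.fft.rfft(env - env.mean())) / len(env)
    freqs = np.fft.rfftfreq(len(env), d=1 / fs)
    return freqs, amp


fs = 8000  # assumed; sufficient for the 1,260-Hz B tone
freqs, amp = envelope_spectrum(aaaab(1000.0, 4.0, fs), fs)


def amp_at(f_hz):
    """Amplitude at the bin closest to f_hz."""
    return amp[np.argmin(np.abs(freqs - f_hz))]


print(f"8 Hz: {amp_at(8.0):.4f}   1.6 Hz: {amp_at(1.6):.6f}")
```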

The sequences were also analyzed using a cochlear channel model (Gammatone filter bank modeling the peripheral processing performed by cochlear channels), as implemented in the Auditory Toolbox running on Matlab (Slaney 1998). The obtained bands centered on the carrier frequencies of the A and B tones were then transformed into the frequency domain using a Fourier transform (Figs. 1 and 2). This analysis yielded a cochlear channel model, or prediction, of the responses to the contrasts embedded in the sequences, to which the EEG data could then be compared.
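A comparable cochlear-channel prediction can be sketched with scipy.signal.gammatone (available since SciPy 1.6), which designs an IIR gammatone filter. This is a stand-in for the Auditory Toolbox filter bank, not a reproduction of it: only a single channel centered on tone A (1,000 Hz) is used, its output envelope is taken with a Hilbert transform, and sequences are shortened to 10 s. It therefore illustrates the qualitative pattern (a 1.6-Hz component that grows with the A/B frequency separation) rather than the exact contrast/base ratios reported in the Results.

```python
import numpy as np
from scipy.signal import gammatone, hilbert, lfilter


def aaaab(f_a_hz, semitones, fs, seq_s=40.0, dur_s=0.125, ramp_s=0.010):
    """Compact AAAAB generator (raised-cosine ramps are an assumption)."""
    n, n_r = int(round(dur_s * fs)), int(round(ramp_s * fs))
    t = np.arange(n) / fs
    env = np.ones(n)
    env[:n_r] = 0.5 * (1.0 - np.cos(np.pi * np.arange(n_r) / n_r))
    env[-n_r:] = env[:n_r][::-1]
    a = env * np.sin(2 * np.pi * f_a_hz * t)
    b = env * np.sin(2 * np.pi * f_a_hz * 2 ** (semitones / 12) * t)
    return np.tile(np.concatenate([a, a, a, a, b]), int(round(seq_s / 0.625)))


def channel_envelope_amp(x, fs, cf_hz, target_hz):
    """Amplitude at target_hz of the envelope of one gammatone channel centred on cf_hz."""
    b, a = gammatone(cf_hz, "iir", fs=fs)
    env = np.abs(hilbert(lfilter(b, a, x)))
    amp = 2 * np.abs(np.fft.rfft(env - env.mean())) / len(env)
    freqs = np.fft.rfftfreq(len(env), d=1 / fs)
    return amp[np.argmin(np.abs(freqs - target_hz))]


fs = 8000  # assumed sampling rate for the example
contrast_amp = {}
for st in (0.02, 0.50, 4.0):
    x = aaaab(1000.0, st, fs, seq_s=10.0)
    contrast_amp[st] = channel_envelope_amp(x, fs, 1000.0, 1.6)
    base_amp = channel_envelope_amp(x, fs, 1000.0, 8.0)
    print(f"{st:>5} semitones: 1.6-Hz/8-Hz envelope ratio = {contrast_amp[st] / base_amp:.3f}")
```

In the 1,000-Hz channel, a B tone far from the center frequency is strongly attenuated, so every fifth tone produces a dip in the channel envelope; that periodic dip is what appears at 1.6 Hz.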

EEG Recording

Participants were seated comfortably in a chair with the head resting on a support. They were instructed to relax, avoid any unnecessary head or body movement, and keep their eyes fixated on a point displayed on a computer screen in front of them. The EEG was recorded using 64 Ag-AgCl electrodes placed on the scalp according to the International 10/10 system. Vertical and horizontal eye movements were monitored using four additional electrodes placed on the outer canthus of each eye and on the inferior and superior areas of the left orbit. Electrode impedances were kept below 10 kΩ. The signals were amplified, low-pass filtered at 500 Hz, digitized using a sampling rate of 1,000 Hz, and referenced to an average reference (64-channel high-speed amplifier; Advanced Neuro Technologies).

EEG Analysis

The continuous EEG recordings were filtered using a 0.1-Hz high-pass FFT filter to remove very slow drifts in the recorded signals. Epochs lasting 40 s were obtained by segmenting the recordings from 0 to 40 s relative to the onset of the auditory sequence. Artifacts produced by eye blinks or eye movements were removed using a validated method based on independent component analysis applied to the entire set of EEG data (Jung et al. 2000) using the runica algorithm (Bell and Sejnowski 1995; Makeig 2002), leading to the rejection of one independent component per participant. For each participant and condition, EEG epochs were averaged across trials (i.e., 8 trials averaged per condition and participant). This time domain averaging was used to enhance the signal-to-noise ratio of EEG activity time-locked to the input sequences (and to attenuate the contribution of possible artifacts, e.g., due to heart rate). The resulting average waveforms were then transformed into the frequency domain using a discrete Fourier transform, yielding a frequency spectrum of signal amplitude (µV) ranging from 0 to 500 Hz with a frequency resolution of 0.025 Hz. These EEG processing steps were carried out using Letswave 5 and 6 (http://nocions.webnode.com/) and Matlab (MathWorks).
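The averaging and spectral steps can be sketched in Python with NumPy (preprocessing such as high-pass filtering and ICA is omitted). The synthetic data are illustrative, not from the study: a small 1.6-Hz component phase-locked across 8 trials survives time domain averaging, while the noise is attenuated. With 40-s epochs sampled at 1,000 Hz, the spectrum spans 0 to 500 Hz in 0.025-Hz steps, and 1.6 Hz falls on bin 64.

```python
import numpy as np


def average_then_spectrum(epochs, fs=1000.0):
    """Average epochs across trials in the time domain, then return the
    single-sided DFT amplitude spectrum (same units as the input, e.g. µV).

    epochs: array of shape (n_trials, n_channels, n_samples).
    """
    avg = np.asarray(epochs).mean(axis=0)   # keeps activity phase-locked to the sequence
    n = avg.shape[-1]
    amp = 2.0 * np.abs(np.fft.rfft(avg, axis=-1)) / n
    amp[..., 0] /= 2.0                      # the DC bin is not doubled
    if n % 2 == 0:
        amp[..., -1] /= 2.0                 # nor is the Nyquist bin
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    return freqs, amp


# Synthetic check: 8 trials x 1 channel x 40 s at 1 kHz, a 0.2-µV 1.6-Hz
# component in 1-µV white noise (illustrative values, not real data).
rng = np.random.default_rng(0)
t = np.arange(40_000) / 1000.0
trials = 0.2 * np.sin(2 * np.pi * 1.6 * t) + rng.normal(0.0, 1.0, size=(8, 1, t.size))
freqs, amp = average_then_spectrum(trials)
print(freqs[1], freqs[-1], amp[0, 64])   # resolution, highest frequency, 1.6-Hz bin
```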

Within the obtained frequency spectra, the amplitudes may be expected to correspond to the sum of 1) responses elicited by the stimulus and 2) unrelated residual background noise due, for example, to spontaneous EEG activity, muscle activity, or eye movements. Therefore, to obtain valid estimates of the responses, the contribution of this noise was removed by subtracting, at each bin of the frequency spectra, the average amplitude measured at neighboring frequency bins (2nd to 12th frequency bins relative to each bin) for each participant, condition, and electrode. The validity of this subtraction procedure relies on the assumption that, in the absence of a periodic EEG response, the signal amplitude at a given frequency bin should be similar to the mean signal amplitude of the surrounding frequency bins (Chemin et al. 2014; Mouraux et al. 2011; Nozaradan et al. 2012, 2015, 2016a). The magnitudes of the contrast and base responses were obtained for each condition and participant by averaging the amplitude over a pool of fronto-central electrodes (Fz, FCz, Cz, F1, F2, FC1, and FC2), selected based on the topography of responses to auditory contrasts reported in most previous studies using oddball designs (Näätänen et al. 2007).
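A vectorized sketch of this noise subtraction in Python with NumPy. The text specifies the 2nd to 12th neighboring bins; taking them on both sides of each bin, and averaging only the neighbors that exist near the spectrum edges, are assumptions of this example.

```python
import numpy as np


def subtract_neighbor_noise(amp, lo=2, hi=12):
    """Subtract, at each frequency bin, the mean amplitude of the bins lo..hi
    positions away (assumed: on both sides). Near the edges of the spectrum,
    only the neighbours that exist are averaged."""
    amp = np.asarray(amp, dtype=float)
    n = amp.shape[-1]
    offsets = np.r_[-hi:-lo + 1, lo:hi + 1]            # -12..-2 and 2..12
    pad = [(0, 0)] * (amp.ndim - 1) + [(hi, hi)]
    padded = np.pad(amp, pad, constant_values=np.nan)  # NaN marks missing neighbours
    neighbours = np.stack([padded[..., hi + o: hi + o + n] for o in offsets], axis=-1)
    return amp - np.nanmean(neighbours, axis=-1)


# Flat 1-µV noise floor with a 1.5-µV peak at the 1.6-Hz bin (index 64).
spectrum = np.ones(20_001)
spectrum[64] = 1.5
corrected = subtract_neighbor_noise(spectrum)
print(corrected[64], corrected[1000])   # peak above the local floor, ~0 elsewhere
```

Bins just beside a peak become slightly negative after correction because the peak enters their own neighbor average; this is one reason the response is read only at the exact target bins.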

The magnitudes of the responses were then estimated from these averaged activities by taking the noise-subtracted amplitude measured at the exact frequency of the expected responses (8 Hz for the base response and 8 Hz/5 = 1.6 Hz for the contrast response). Because the sound envelope itself was not a pure sine wave, and because the shape of the EEG response does not necessarily match the shape of the sound envelope, the periodic EEG response elicited by the periodic sound envelope was not expected to be a pure sine wave. Therefore, this periodic activity was expected to appear in the frequency domain as a series of peaks at the expected frequencies and their upper harmonics (Liu-Shuang et al. 2014). To determine the number of harmonics to take into account, one-sample t-tests against zero were computed on the noise-subtracted amplitudes obtained for each participant in each condition; in the absence of a response, the noise-subtracted signal amplitude may be expected to tend toward zero (Mouraux et al. 2011). Harmonics were analyzed until they were no longer significant in either condition (Liu-Shuang et al. 2014). This yielded four harmonics for the contrast response (1.6, 3.2, 4.8, and 6.4 Hz) and two harmonics for the base response (8 and 16 Hz). For each response, these amplitudes were averaged across harmonics for further tests. Averaging amplitudes over harmonics relies on the assumption that these activities are related to the same phenomenon (see, e.g., Appelbaum et al. 2006 and Heinrich et al. 2009; see also Retter and Rossion 2016 for an extensive justification of grouping amplitudes across harmonics to quantify the magnitude of EEG contrast or base responses).
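Pooling over the fronto-central electrodes and averaging over harmonics can be sketched as follows (Python with NumPy). The electrode list and harmonic counts come from the text; the function name, the toy spectrum, and the extra Oz channel are hypothetical and serve only to exercise the function.

```python
import numpy as np

FRONTO_CENTRAL = ["Fz", "FCz", "Cz", "F1", "F2", "FC1", "FC2"]   # pool named in the text


def response_magnitude(freqs, amp_sub, ch_names, f0_hz, n_harmonics):
    """Mean noise-subtracted amplitude over the fronto-central pool and over
    the first n_harmonics harmonics of f0_hz.

    amp_sub: (n_channels, n_freqs) noise-subtracted spectrum of one participant/condition.
    """
    pool = [ch_names.index(ch) for ch in FRONTO_CENTRAL]
    pooled = np.asarray(amp_sub)[pool].mean(axis=0)
    bins = [int(np.argmin(np.abs(freqs - f0_hz * k))) for k in range(1, n_harmonics + 1)]
    return pooled[bins].mean()


freqs = np.arange(20_001) * 0.025           # 0-500 Hz at 0.025-Hz resolution
channels = FRONTO_CENTRAL + ["Oz"]          # toy montage (hypothetical)
amp_sub = np.zeros((len(channels), freqs.size))
amp_sub[:, [64, 128, 192, 256]] = 0.2       # toy contrast harmonics, 1.6-6.4 Hz
amp_sub[:, [320, 640]] = 0.1                # toy base harmonics, 8 and 16 Hz
contrast = response_magnitude(freqs, amp_sub, channels, 1.6, 4)
base = response_magnitude(freqs, amp_sub, channels, 8.0, 2)
```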

Statistical Evaluation

Statistical analyses were performed using SPSS Statistics 21.0 (IBM, Armonk, NY). Significance level was set at P < 0.05. All t-tests were Bonferroni-corrected for multiple comparisons by multiplying the obtained P values by the number of performed comparisons. When relevant, the Greenhouse-Geisser correction was used to correct for violations of sphericity in the performed ANOVAs.

We first examined whether the auditory stimulus elicited EEG responses at the expected frequencies across the conditions of the two experiments. To this aim, a one-sample t-test was used to determine whether the amplitudes of the expected base and contrast responses were significantly different from zero.
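A minimal sketch of the test against zero combined with the Bonferroni correction described above, in Python using scipy.stats.ttest_1samp rather than SPSS. The per-participant values are invented for illustration, and the number of comparisons in a family (here 2) is an assumption. Capping the corrected P at 1 is consistent with the P = 1 entries in Table 1.

```python
import numpy as np
from scipy import stats


def ttest_against_zero(samples, n_comparisons):
    """One-sample t-test of per-participant amplitudes against 0, with a
    Bonferroni correction applied by multiplying P by n_comparisons (capped at 1)."""
    t, p = stats.ttest_1samp(np.asarray(samples, dtype=float), popmean=0.0)
    return float(t), min(float(p) * n_comparisons, 1.0)


# Toy per-participant amplitudes (µV), n = 12; not data from the study.
present = [0.21, 0.18, 0.25, 0.30, 0.12, 0.22, 0.19, 0.27, 0.15, 0.24, 0.20, 0.23]
absent = [-0.01, 0.01, -0.02, 0.02, 0.00, 0.00, -0.01, 0.01, -0.03, 0.03, 0.00, 0.00]
for name, vals in (("present", present), ("absent", absent)):
    t, p_corr = ttest_against_zero(vals, n_comparisons=2)
    print(f"{name}: t = {t:.2f}, corrected P = {p_corr:.4f}")
```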

Furthermore, the base and contrast responses were also compared with each other and across conditions for each experiment. These comparisons were performed using a two-way repeated-measures ANOVA with the factors “response” (two levels: contrast and base response) and “condition” (experiment 1: 3 levels, 0.02, 0.50, and 4 semitones contrast; experiment 2: 2 levels, 0 and 600 μs interaural time contrast). When significant, partial ANOVAs and paired-sample t-tests were used to perform post hoc comparisons.

The obtained EEG responses were also compared with the responses predicted by the cochlear model. The aim of this comparison was to test whether the relative amplitude of the contrast and base responses observed in the EEG could be explained by the peripheral processing of the sequences for tone contrasts. One-sample t-tests were used to compare across participants the ratio between the amplitude measured at 8 Hz/5 and 8 Hz with the corresponding ratio obtained from the cochlear channel model for each condition. Moreover, these ratio values were also compared across conditions using a one-way ANOVA and paired-sample t-tests.
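The comparison with the cochlear model can be sketched in Python with SciPy. The model ratios (0.39 for 0.50 semitone and 4.21 for 4 semitones) are the values reported in the Results; the participant amplitudes are invented for illustration.

```python
import numpy as np
from scipy import stats

# Contrast/base ratios of the cochlear channel model, as reported in the Results.
MODEL_RATIO = {"0.50 semitone": 0.39, "4 semitones": 4.21}


def ratio_vs_model(contrast_amp, base_amp, model_ratio):
    """Per-participant contrast/base amplitude ratio, tested against the model
    ratio with a one-sample t-test."""
    ratios = np.asarray(contrast_amp, dtype=float) / np.asarray(base_amp, dtype=float)
    res = stats.ttest_1samp(ratios, popmean=model_ratio)
    return ratios, float(res.statistic), float(res.pvalue)


# Toy amplitudes (µV) for 12 participants in the 4-semitone condition.
contrast_amp = [0.20, 0.25, 0.18, 0.30, 0.22, 0.19, 0.27, 0.15, 0.24, 0.21, 0.23, 0.26]
base_amp = [0.13, 0.15, 0.12, 0.16, 0.14, 0.12, 0.17, 0.11, 0.15, 0.13, 0.14, 0.16]
ratios, t, p = ratio_vs_model(contrast_amp, base_amp, MODEL_RATIO["4 semitones"])
print(f"mean ratio = {ratios.mean():.2f}, t = {t:.2f}, P = {p:.2g}")
```

One caveat of testing ratios is that they become unstable for a participant whose base amplitude approaches zero; here the base response was reliably above zero in every condition (Table 1).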

In addition to the frequency domain analysis of the 40-s epochs, we also analyzed the time course of the EEG responses within the AAAAB pattern. For each participant and condition, the 40-s epochs were first filtered between 1 and 17 Hz (FFT band-pass filter, 0.1-Hz slope), thus preserving the contrast and base responses and their harmonics while filtering out EEG activity unrelated to these responses. The 40-s epochs were then segmented into a series of 0.625-s chunks, corresponding to the interval between the onsets of successive AAAAB patterns, and the chunks were averaged. Note that this event-related analysis does not exclude the possibility of overlapping oscillatory activity from previous chunks. Instead, it provides a time domain view of the frequency-tagged EEG responses, characterizing their shape and their modulation across conditions at a fine temporal scale, complementary to the frequency domain analysis.
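The band-pass filtering and pattern-locked averaging can be sketched in Python with NumPy. The filter here is an idealized brick-wall FFT filter (the 0.1-Hz transition slope of the original filter is not reproduced), and the 1,000-Hz sampling rate matches the recording described above. A 40-s epoch splits into 64 chunks of 0.625 s.

```python
import numpy as np


def fft_bandpass(x, fs, lo=1.0, hi=17.0):
    """Idealized FFT band-pass: zero every bin outside [lo, hi] Hz."""
    X = np.fft.rfft(x, axis=-1)
    f = np.fft.rfftfreq(x.shape[-1], d=1.0 / fs)
    X[..., (f < lo) | (f > hi)] = 0
    return np.fft.irfft(X, n=x.shape[-1], axis=-1)


def pattern_average(x, fs=1000.0, pattern_s=0.625):
    """Cut the epoch into consecutive AAAAB-length chunks and average them."""
    n_chunk = int(round(pattern_s * fs))
    n_full = x.shape[-1] // n_chunk
    chunks = x[..., :n_full * n_chunk].reshape(*x.shape[:-1], n_full, n_chunk)
    return chunks.mean(axis=-2)


# Toy epoch: a 1.6-Hz response plus 40-Hz activity that the band-pass removes.
fs = 1000.0
t = np.arange(40_000) / fs
x = np.sin(2 * np.pi * 1.6 * t) + 0.5 * np.sin(2 * np.pi * 40.0 * t)
avg_chunk = pattern_average(fft_bandpass(x, fs), fs)   # 625 samples = one AAAAB pattern
```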

Because there was no a priori assumption regarding the latencies at which a difference could arise between these waveforms, a point-by-point comparison of the waveforms over the averaged 0.625-s chunk was performed. To account for multiple comparisons, a cluster-based permutation approach was used (cluster-level statistics, an analysis of continuous data based on inference about clusters and randomization testing; see, e.g., Maris and Oostenveld 2007 and van den Broeke et al. 2015). The technique assumes that true neural activity will tend to generate signal changes over contiguous time points (Groppe et al. 2011). In experiment 1, the waveforms of the three conditions were compared using a one-way repeated-measures ANOVA. In experiment 2, the waveforms of the two conditions were compared using a paired-sample t-test. Clusters of contiguous time points above the critical F- or t-value for a parametric two-sided test were identified, and the magnitude of each cluster was estimated as the sum of the F-values or absolute t-values constituting it. Random permutation testing (1,000 times) of the waveforms of the different epochs (performed independently for each subject) was then used to obtain a null distribution of maximum cluster magnitude. Finally, the proportion of random partitions that resulted in a larger cluster-level statistic than the observed one (i.e., the P value) was calculated. Clusters in the observed data were regarded as significant if their magnitude exceeded the 95th percentile of the permutation distribution of the F- or t-statistic, respectively.
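A compact sketch of a cluster-based permutation test for the two-condition (paired) case of experiment 2, in Python with NumPy and SciPy. It follows the general scheme summarized above but is not the authors' implementation: positive and negative clusters are formed separately, condition labels are swapped within subjects by flipping the sign of each subject's difference wave, and ties with the null distribution count against significance. The three-condition case of experiment 1 would replace the paired t-statistic with a repeated-measures F-statistic; the data below are synthetic.

```python
import numpy as np
from scipy import stats


def _runs(mask):
    """(start, stop) index pairs of contiguous True runs in a 1-D boolean array."""
    runs, start = [], None
    for i, m in enumerate(mask):
        if m and start is None:
            start = i
        elif not m and start is not None:
            runs.append((start, i))
            start = None
    if start is not None:
        runs.append((start, len(mask)))
    return runs


def _cluster_masses(t, thresh):
    """Supra-threshold clusters (positive and negative separately), each
    summarized by the sum of its absolute t-values."""
    out = []
    for sign in (1.0, -1.0):
        for a, b in _runs(sign * t > thresh):
            out.append((a, b, float(np.abs(t[a:b]).sum())))
    return out


def _paired_t(diff):
    n = diff.shape[0]
    return diff.mean(axis=0) / (diff.std(axis=0, ddof=1) / np.sqrt(n))


def paired_cluster_test(cond_a, cond_b, n_perm=1000, alpha=0.05, seed=0):
    """cond_a, cond_b: (n_subjects, n_times). Returns (start, stop, mass, p) per cluster."""
    diff = np.asarray(cond_a, float) - np.asarray(cond_b, float)
    n_sub = diff.shape[0]
    thresh = stats.t.ppf(1 - alpha / 2, df=n_sub - 1)   # two-sided critical t
    observed = _cluster_masses(_paired_t(diff), thresh)
    rng = np.random.default_rng(seed)
    null_max = np.empty(n_perm)
    for i in range(n_perm):
        # Swapping a subject's condition labels flips the sign of its difference wave.
        flips = rng.choice([-1.0, 1.0], size=(n_sub, 1))
        masses = [m for _, _, m in _cluster_masses(_paired_t(diff * flips), thresh)]
        null_max[i] = max(masses, default=0.0)
    return [(a, b, m, float(np.mean(null_max >= m))) for a, b, m in observed]


# Synthetic example: 12 subjects, 625 time points, an effect at samples 300-349.
rng = np.random.default_rng(1)
a = rng.normal(0.0, 1.0, (12, 625))
a[:, 300:350] += 2.0
b = rng.normal(0.0, 1.0, (12, 625))
results = paired_cluster_test(a, b, n_perm=500)
```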

Finally, the time course of the amplitude of the frequency-tagged responses across repetitions of the AAAAB pattern was analyzed over the 40-s sequence, that is, at a longer time scale. A sliding FFT was computed on the averaged 40-s epochs for each condition and participant. A temporal window of 6.25 s (10 repetitions of the AAAAB pattern) moving in steps of 0.625 s (i.e., the duration of one AAAAB pattern) was used as a compromise between temporal resolution, spectral resolution (0.16 Hz for an FFT computed on 6.25-s windows), and signal-to-noise ratio of the responses. This analysis yielded 54 successive amplitude values for the contrast and base responses. As for the unwindowed FFT spectra, the obtained values were corrected by subtracting, at each target frequency (base and contrast responses and their harmonics), the amplitude measured at a neighboring frequency bin. Here, the 3rd upper frequency bin relative to each target bin was used instead of the 2nd-12th bin range used for the 40-s unwindowed FFT spectra, because the frequency resolution of the windowed FFT was coarser, and a 2nd-12th bin range would thus have overlapped with the frequencies of interest. A one-way ANOVA was then used to compare these values over time. Although successive values were not fully independent of each other because of the overlap between successive time windows, the ANOVA tested the null hypothesis that the values would not differ significantly across the sequence. If the ANOVA was significant, the presence of a linear trend was tested. Importantly, the aim of this analysis was not to determine the best-fitting model of the amplitude dynamics across time points, but rather to test whether these amplitudes significantly decreased or increased over the sequence, as an estimate of the buildup or decay of the response.
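The sliding-window analysis and the single-bin noise correction can be sketched in Python with NumPy. Assumptions of this sketch: the window is advanced only while it fits entirely within the epoch, amplitudes are single-sided DFT magnitudes, and the corrected values are averaged across the requested harmonics. The toy epoch contains a 1.6-Hz response whose amplitude grows linearly, so the slope fitted with np.polyfit is positive, a simple stand-in for the linear-trend test.

```python
import numpy as np


def sliding_tagged_amplitude(x, fs=1000.0, win_s=6.25, step_s=0.625,
                             target_hz=(1.6, 3.2, 4.8, 6.4), noise_offset=3):
    """Noise-corrected amplitude of a frequency-tagged response in sliding windows.

    In each window, the amplitude of the bin `noise_offset` bins above every
    target frequency is subtracted from the target-bin amplitude, and the
    corrected values are averaged across targets.
    Returns (window_start_times_s, values).
    """
    x = np.asarray(x, dtype=float)
    n_win, n_step = int(round(win_s * fs)), int(round(step_s * fs))
    resolution = fs / n_win                        # 1 / 6.25 s = 0.16 Hz
    bins = [int(round(f / resolution)) for f in target_hz]
    starts = np.arange(0, x.shape[-1] - n_win + 1, n_step)
    values = []
    for s in starts:
        amp = 2.0 * np.abs(np.fft.rfft(x[..., s:s + n_win], axis=-1)) / n_win
        corrected = [amp[..., k] - amp[..., k + noise_offset] for k in bins]
        values.append(np.mean(corrected, axis=0))
    return starts / fs, np.array(values)


# Toy 40-s averaged epoch: a 1.6-Hz response whose amplitude grows linearly (buildup).
fs = 1000.0
t = np.arange(40_000) / fs
x = (0.1 + 0.005 * t) * np.sin(2 * np.pi * 1.6 * t)
times, vals = sliding_tagged_amplitude(x, fs, target_hz=(1.6,))
slope = np.polyfit(times, vals, 1)[0]   # positive slope ~ buildup across the sequence
```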

RESULTS

Sound Sequence Analysis

In experiment 1, the spectrum of the envelope of acoustic energy consisted of a peak at 8 Hz (and harmonics) for all the different sequences, corresponding to the 125-ms duration of the sequential sounds (Figs. 1 and 2). The cochlear channel model of response also consisted of a peak at 8 Hz (and harmonics) in all conditions. However, an additional peak at 8 Hz/5 (1.6 Hz and harmonics: 3.2, 4.8, and 6.4 Hz) was obtained for the sequences with 0.50 and 4 semitones contrast (conditions 2 and 3). In condition 2, this contrast response was relatively small compared with the base response (contrast/base ratio of 0.39). In condition 3, the contrast response was prominent relative to the base response (contrast/base ratio of 4.21).

As expected, the spectrum obtained from the cochlear channel model for the 0.02 semitone contrast (condition 1) did not exhibit a discernible peak at 8 Hz/5 and harmonics, as such a small tone contrast is not resolved by the cochlear channels. This was also the case in experiment 2, where the cochlear channel models exhibited a peak at 8 Hz but not at 8 Hz/5, as the interaural time contrast is not processed by the cochlear channels (Fig. 2).

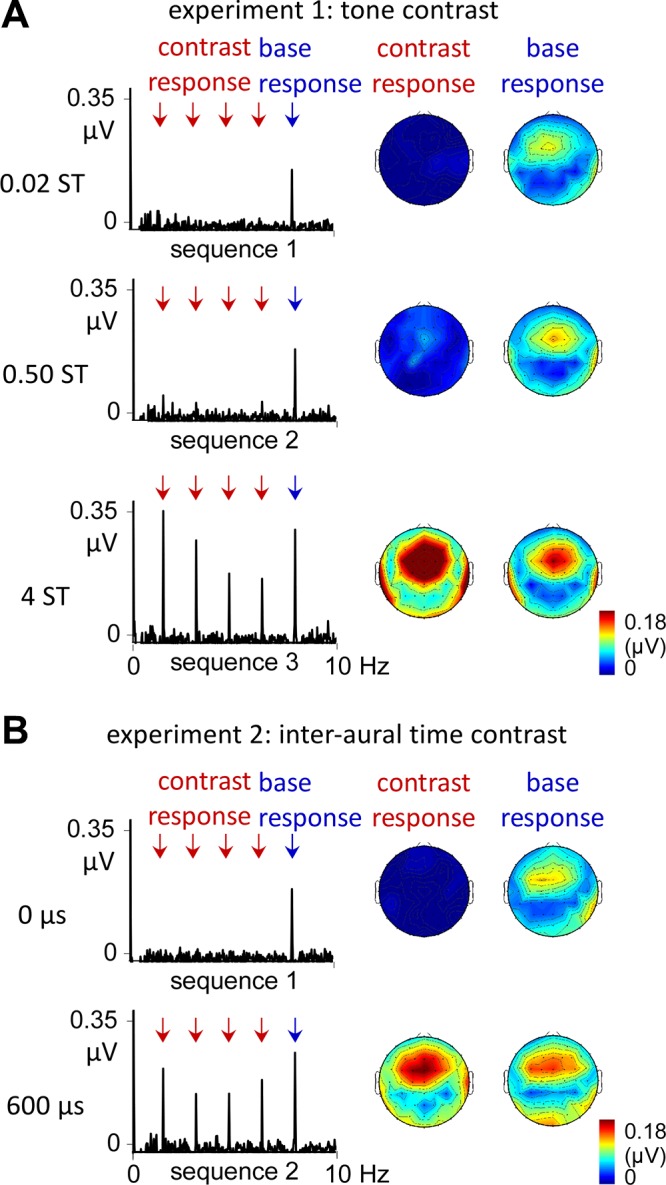

EEG Data

In both experiments, the grand average spectra (Fig. 3) exhibited a clear base response over fronto-central electrodes, indicating successful synchronization to the envelope of acoustic energy of the sequences in all conditions. Moreover, when the contrast was detectable (conditions 2 and 3 in experiment 1 and condition 2 in experiment 2), contrast responses appeared as additional peaks in the EEG spectra. The one-sample t-tests comparing the noise-subtracted amplitude of these responses against zero (Table 1) confirmed these observations. Note, however, the small amplitude of the response to the 0.50 semitone contrast in experiment 1, suggesting a response just above threshold for such a small contrast (10 of 12 participants with amplitudes >0.01 μV in this condition, as opposed to 0 of 12 in condition 1). In both experiments, the contrast responses exhibited fronto-central topographies similar to those of the base response (Fig. 3).

Fig. 3.

Electroencephalographic (EEG) spectra of experiments 1 and 2 and corresponding topographies. A: in experiment 1, a base response was observed with similar amplitude across conditions. Most importantly, a contrast response emerged in condition 2, as predicted by the cochlear channel model. This contrast response was markedly larger in condition 3, although, contrary to the prediction of the cochlear channel model, it was not associated with a relative reduction of the base response. B: a similar response profile was obtained in experiment 2, with a stable base response across conditions and a contrast response emerging in condition 2. The topographical distribution of these responses was fronto-central and similar overall across conditions and experiments.

Table 1.

t-Tests against zero of the noise-subtracted amplitudes for the base and contrast responses

| | Base Response (8 Hz, f1–f2) | Contrast Response (1.6 Hz, f1–f4) |
|---|---|---|
| Experiment 1: frequency contrast | | |
| Condition 1: 0.02 semitone | 0.094 ± 0.058 μV, P = 0.0006 | −0.001 ± 0.007 μV, P = 1 |
| Condition 2: 0.50 semitone | 0.106 ± 0.054 μV, P < 0.0001 | 0.038 ± 0.044 μV, P = 0.036 |
| Condition 3: 4 semitones | 0.143 ± 0.106 μV, P = 0.0021 | 0.223 ± 0.132 μV, P = 0.0003 |
| Experiment 2: interaural time contrast | | |
| Condition 1: 0 μs | 0.103 ± 0.055 μV, P < 0.0001 | 0.001 ± 0.017 μV, P = 1 |
| Condition 2: 600 μs | 0.130 ± 0.074 μV, P < 0.0001 | 0.162 ± 0.075 μV, P < 0.0001 |

Values are means ± SD; n = 12 for each experiment. These values were obtained for each participant from a pool of fronto-central electrodes and averaged across harmonics within the base (f1–f2) and contrast (f1–f4) responses.
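The noise-subtracted amplitudes in Table 1 can be sketched as follows. In frequency-tagging studies the noise floor is commonly estimated from neighbouring frequency bins and subtracted at each target bin; the exact neighbour range is not given in this section, so the 2nd to 5th bins on either side used here are an assumption. The per-harmonic values are then averaged over f1–f4 (contrast) and f1–f2 (base), as described in the table note, before the one-sample t-tests against zero.

```python
import numpy as np

CONTRAST_FREQS = [1.6, 3.2, 4.8, 6.4]   # f1-f4 of the contrast response
BASE_FREQS = [8.0, 16.0]                # f1-f2 of the base response


def noise_subtracted(amps: np.ndarray, k: int, lo: int = 2, hi: int = 5) -> float:
    """Amplitude at bin k minus the mean amplitude of bins k-hi..k-lo and k+lo..k+hi.
    The 2nd-5th neighbour range is an assumption, not taken from the study."""
    neighbours = np.concatenate([amps[k - hi:k - lo + 1], amps[k + lo:k + hi + 1]])
    return float(amps[k] - neighbours.mean())


def tagged_response(freqs: np.ndarray, amps: np.ndarray, targets) -> float:
    """Noise-subtracted amplitude averaged across the target harmonics."""
    ks = [int(np.argmin(np.abs(freqs - f))) for f in targets]
    return float(np.mean([noise_subtracted(amps, k) for k in ks]))


# Toy spectrum: flat 0.1-uV noise floor plus 0.05-uV peaks at the contrast harmonics.
freqs = np.arange(800) * 0.025          # 0.025-Hz resolution of a 40-s epoch
amps = np.full(freqs.shape, 0.1)
for f in CONTRAST_FREQS:
    amps[np.argmin(np.abs(freqs - f))] += 0.05

print(tagged_response(freqs, amps, CONTRAST_FREQS))
print(tagged_response(freqs, amps, BASE_FREQS))
```

On this toy spectrum the contrast response comes out at about 0.05 and the base response at about 0, because the flat noise floor is removed by the neighbour subtraction.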

Experiment 1.

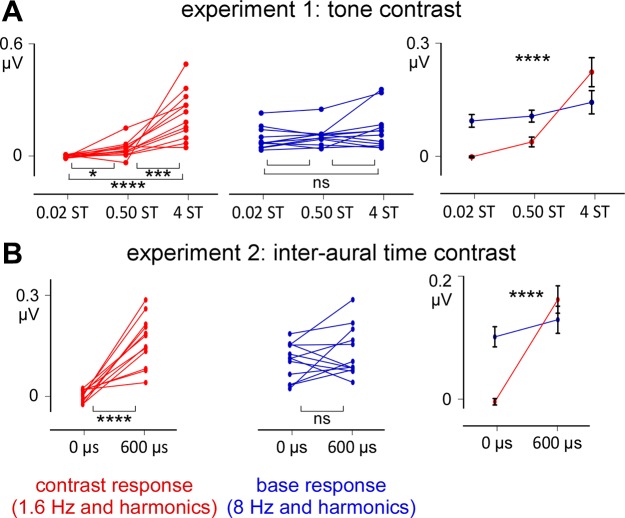

The two-way ANOVA comparing the amplitudes of the base and contrast responses (factor response) across the three conditions (factor condition) (Fig. 4) revealed a significant interaction between the two factors [F(1.587, 17.462) = 20.654, η2 = 0.652, P < 0.0001]. This interaction was explained by the fact that the base response did not differ significantly across the three conditions (conditions 1 and 2, P = 0.748; conditions 2 and 3, P = 0.441; conditions 1 and 3, P = 0.100), whereas the contrast response did (conditions 1 and 2, P = 0.043; conditions 2 and 3, P = 0.003; conditions 1 and 3, P < 0.0001).

Fig. 4.

Noise-subtracted amplitudes across participants for experiments 1 and 2 (fronto-central electrodes). A and B: left and middle graphs show the amplitude measured in each participant (asterisks = pairwise comparisons). Right graphs show the group-level average ± SD. NS, nonsignificant; *P ≤ 0.05; ***P ≤ 0.001; ****P ≤ 0.0001 (ANOVAs).

Experiment 2.

As in experiment 1, the two-way ANOVA comparing the amplitudes of the base and contrast responses across the two conditions showed a significant interaction between the two factors [F(1, 11) = 33.879, η2 = 0.755, P < 0.0001]. This interaction was again explained by the fact that the base response did not differ significantly across conditions (P = 0.253), whereas the contrast response did (P < 0.0001) (Fig. 4).
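Assuming that the η2 values reported for the two-way ANOVAs are partial eta squared, they can be recovered from each F statistic and its degrees of freedom, which offers a quick consistency check of the reported values. The fractional degrees of freedom in experiment 1 (1.587 ≈ 2 × 0.79 and 17.462 ≈ 22 × 0.79) are consistent with a sphericity correction such as Greenhouse–Geisser, although this section does not name the correction.

```python
def partial_eta_squared(f_value: float, df_effect: float, df_error: float) -> float:
    """Partial eta squared from an F statistic and its (possibly corrected) dfs."""
    return f_value * df_effect / (f_value * df_effect + df_error)


# Interaction terms reported above: experiment 1, then experiment 2.
print(round(partial_eta_squared(20.654, 1.587, 17.462), 3))  # 0.652
print(round(partial_eta_squared(33.879, 1, 11), 3))          # 0.755
```

Both values match the reported η2, which supports reading them as partial effect sizes.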

Comparison between EEG responses and the cochlear responses predicted by the cochlear channel model (for experiment 1; tone contrast).

No significant difference was observed between the EEG responses and the responses predicted by the cochlear channel model in condition 1 (0.02-semitone contrast), as neither the EEG nor the cochlear model showed a significant contrast response (contrast-to-base amplitude ratio: 0.01 for the cochlear channel model and −0.05 ± 0.26 for the EEG responses; one-sample t-test, P = 0.387). More importantly, there was also no significant difference in condition 2 (0.50-semitone contrast: 0.39 for the cochlear channel model and 0.46 ± 0.50 for the EEG responses, P = 0.621), suggesting that the emergence of a response to this fine frequency contrast could be explained by peripheral processing of the contrast. This was not the case in condition 3, where the contrast-to-base ratio predicted by the cochlear channel model was much greater than the ratio observed in the EEG (4.20 for the cochlear channel model and 1.73 ± 0.76 for the EEG responses, P < 0.0001), suggesting the involvement of central mechanisms in processing the sequence with the larger frequency contrast.

These ratios were also significantly different across the three conditions [F(20.40, 1.26) = 16.66, η2 = 0.60, P = 0.001], with greater values in condition 3 compared with conditions 1 (post hoc t-test, P = 0.001) and 2 (P = 0.002) and also greater values in condition 2 compared with condition 1 (P = 0.020).
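The comparisons with the cochlear channel model test the per-participant EEG ratios against the single value predicted by the model, i.e., one-sample t-tests with the model value as the null mean. The sketch below computes that statistic with NumPy only. Applied to the rounded summary values quoted in the text, it gives only approximate t values; the study presumably ran the test on raw per-participant ratios, for which `scipy.stats.ttest_1samp(ratios, popmean)` returns both t and P.

```python
import math

import numpy as np


def one_sample_t(values, popmean: float) -> float:
    """t statistic of a one-sample t-test of values against popmean (df = n - 1)."""
    x = np.asarray(values, dtype=float)
    return float((x.mean() - popmean) / (x.std(ddof=1) / math.sqrt(x.size)))


def t_from_summary(mean: float, sd: float, n: int, popmean: float) -> float:
    """Same statistic from summary values; approximate when they are rounded."""
    return (mean - popmean) / (sd / math.sqrt(n))


# EEG contrast-to-base ratios (mean, SD; n = 12) against the cochlear model value.
for label, mean, sd, model in [("condition 1", -0.05, 0.26, 0.01),
                               ("condition 2", 0.46, 0.50, 0.39),
                               ("condition 3", 1.73, 0.76, 4.20)]:
    print(label, round(t_from_summary(mean, sd, 12, model), 2))
```

Only condition 3 yields a large |t| (above 11 with 11 degrees of freedom), in line with the significant difference reported for the 4-semitone contrast.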

Time course of the EEG responses to the AAAAB pattern.

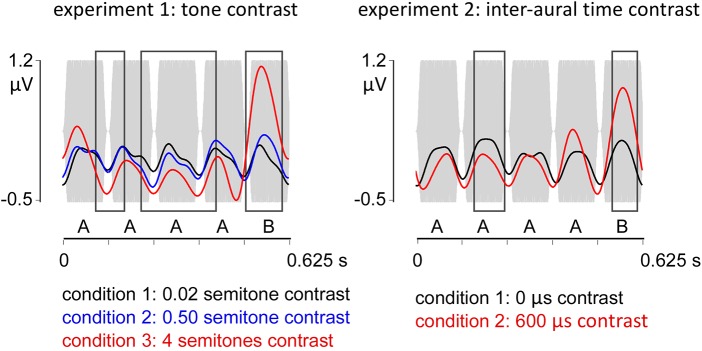

As depicted in Fig. 5, the EEG waveforms in response to the different sequences were characterized in all conditions by a positive deflection peaking at ∼50 ms after the onset of each tone, a latency compatible with middle-latency auditory potentials (Grimm et al. 2016), followed by a negative deflection at ∼100 ms.
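The waveforms in Fig. 5 are time-locked to the 625-ms AAAAB cycle (five 125-ms tones, with the B tone spanning 500–625 ms). A minimal sketch of how such a cycle-locked average can be obtained from a continuous recording, assuming one channel, epochs aligned to sequence onset, and no filtering or artifact rejection (the study's preprocessing is not described in this section):

```python
import numpy as np

TONE_MS = 125               # tone duration (from the text)
PATTERN_MS = 5 * TONE_MS    # one AAAAB cycle = 625 ms; the B tone spans 500-625 ms


def pattern_average(eeg: np.ndarray, fs: float, skip_s: float = 0.0) -> np.ndarray:
    """Cut a continuous single-channel signal into consecutive, non-overlapping
    AAAAB epochs aligned to sequence onset and return their average.
    skip_s optionally discards the start of the sequence (hypothetical option)."""
    n = int(round(PATTERN_MS / 1000 * fs))
    start = int(round(skip_s * fs))
    n_epochs = (len(eeg) - start) // n
    epochs = eeg[start:start + n_epochs * n].reshape(n_epochs, n)
    return epochs.mean(axis=0)


# Toy check: a cycle-locked waveform buried in noise is recovered by averaging.
fs = 1000
rng = np.random.default_rng(0)
template = np.sin(2 * np.pi * np.arange(625) / 125)   # one deflection per tone
eeg = np.tile(template, 64) + rng.normal(0, 1.0, 64 * 625)
avg = pattern_average(eeg, fs)
print(np.corrcoef(avg, template)[0, 1])
```

Averaging 64 cycles of a 40-s sequence reduces uncorrelated noise by a factor of 8, which is why a clear waveform can be extracted from each participant's recording.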

Fig. 5.

Time course of the EEG responses to the AAAAB pattern. Gray bars represent the pattern envelope. In both experiments, each tone elicited a positive deflection peaking at ∼50 ms, followed by a negative deflection at ∼100 ms. A point-by-point comparison across conditions (cluster-based statistics) revealed significant differences during and after the presentation of the B tone (black boxes), compatible with middle- and late-latency auditory potentials triggered by the contrast, respectively.

In experiment 1, the point-by-point comparison of the waveforms across conditions revealed three significant clusters (91–170, 216–422, and 504–601 ms; Fig. 5). Post hoc tests between conditions 1 and 2 revealed one significant cluster (227–278 ms) characterized by more negative amplitude values. Comparison between conditions 2 and 3 revealed three significant clusters with more negative amplitude values, coinciding with the occurrence of the A tones (91–134, 266–371, and 382–428 ms), and one significant cluster with more positive amplitude values (504–594 ms), coinciding with the occurrence of the B tone in the AAAAB pattern. In experiment 2, the point-by-point comparison of the two conditions revealed two significant clusters (157–241 and 537–606 ms; Fig. 5).
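The point-by-point comparisons rely on cluster-based permutation statistics (Maris and Oostenveld 2007). A minimal sketch for a paired two-condition comparison (as in experiment 2 or a post hoc pair) is given below; the cluster-forming threshold (t = 2.2, close to the two-tailed 0.05 critical value for 11 degrees of freedom), the number of permutations, and the sign-flipping scheme are assumptions, and the three-condition test of experiment 1 would need an F-based statistic instead.

```python
import numpy as np


def paired_t(diff: np.ndarray) -> np.ndarray:
    """Point-by-point paired t values; diff has shape (n_participants, n_times)."""
    return diff.mean(axis=0) / (diff.std(axis=0, ddof=1) / np.sqrt(diff.shape[0]))


def runs(mask: np.ndarray):
    """(start, stop) pairs of contiguous True runs, stop exclusive."""
    edges = np.diff(np.concatenate([[0], mask.astype(int), [0]]))
    return list(zip(np.flatnonzero(edges == 1), np.flatnonzero(edges == -1)))


def clusters(t: np.ndarray, thr: float):
    """Supra-threshold clusters (positive and negative separately) with their t-sum."""
    found = []
    for sign in (1, -1):
        for start, stop in runs(sign * t > thr):
            found.append((int(start), int(stop), float(t[start:stop].sum())))
    return found


def cluster_permutation_test(cond_a, cond_b, thr=2.2, n_perm=1000, seed=0):
    """Paired cluster-based permutation test using random sign flips per participant.
    Returns (start, stop, mass, p) for each observed cluster."""
    diff = np.asarray(cond_a, float) - np.asarray(cond_b, float)
    observed = clusters(paired_t(diff), thr)
    rng = np.random.default_rng(seed)
    null_max = np.empty(n_perm)
    for i in range(n_perm):
        signs = rng.choice([-1.0, 1.0], size=(diff.shape[0], 1))
        masses = [abs(m) for _, _, m in clusters(paired_t(diff * signs), thr)]
        null_max[i] = max(masses, default=0.0)   # largest cluster mass per permutation
    return [(s, e, m, (np.sum(null_max >= abs(m)) + 1) / (n_perm + 1))
            for s, e, m in observed]


# Toy data: 12 participants x 625 samples (1 kHz), with a simulated effect at 200-300 ms.
rng = np.random.default_rng(1)
a = rng.normal(0, 0.5, (12, 625))
b = rng.normal(0, 0.5, (12, 625))
a[:, 200:300] += 2.0
for start, stop, mass, p in cluster_permutation_test(a, b, n_perm=500):
    if p < 0.05:
        print(f"cluster {start}-{stop} ms, mass {mass:.1f}, p = {p:.3f}")
```

Comparing each observed cluster with the distribution of the maximum cluster mass across permutations is what controls the family-wise error rate over all time points.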

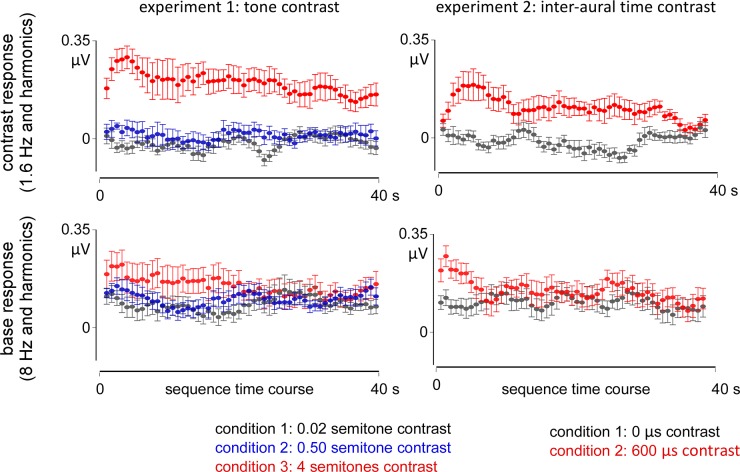

Time course of the EEG response across successive repetitions of the AAAAB pattern.

In experiment 1, the magnitude of the base response was stable over time in conditions 1 and 2 but not in condition 3, where it decreased progressively (Fig. 6). These observations were confirmed by ANOVAs showing no significant difference in amplitude across time points in condition 1 or 2 [F(53, 11) = 1.105, η2 = 0.091, P = 0.289, and F(53, 11) = 1.080, η2 = 0.089, P = 0.329, respectively] but a significant decrease in condition 3 [F(53, 11) = 1.632, η2 = 0.129, P = 0.004; significant linear trend at P < 0.0001, −6% slope]. A similar profile was observed in experiment 2: the amplitude of the base response was stable over time in condition 1 [0-μs interaural time contrast: F(53, 11) = 0.601, η2 = 0.051, P = 0.988] but not in condition 2 [600-μs interaural time contrast: F(53, 11) = 1.897, η2 = 0.147, P = 0.0003; significant linear trend at P < 0.0001, −10% slope].

Fig. 6.

Evolution of the EEG responses across successive AAAAB patterns. Time points were obtained using a sliding FFT (6.25-s time window with 0.625-s steps). For both the contrast (top) and base (bottom) responses, the amplitude remained stable over time, except in the conditions with large contrast (in red, experiments 1 and 2), where the amplitude decreased significantly over the sequence; in the contrast response (top), this decrease was preceded by a transient increase spanning 3–10 s after sequence onset.

For the contrast response, the ANOVAs comparing amplitude over time yielded results overall similar to those for the base response. In experiment 1, no significant differences across time points were obtained in condition 1 [0.02-semitone contrast: F(53, 11) = 1.335, η2 = 0.108, P = 0.062] or condition 2 [0.50-semitone contrast: F(53, 11) = 1.398, η2 = 0.034, P = 0.999], but a significant decrease in amplitude over time was observed in condition 3 [4-semitone contrast: F(53, 11) = 1.874, η2 = 0.145, P = 0.0003; significant linear trend at P < 0.0001, −8%] (Fig. 6). In experiment 2, a significant difference across time points was found in condition 1 [0-μs interaural time contrast: F(53, 11) = 1.622, η2 = 0.128, P = 0.005], but this fluctuation in amplitude did not follow a linear trend over the sequence (P = 0.778). In condition 2, the significant difference across time points [600-μs interaural time contrast: F(53, 11) = 2.091, η2 = 0.159, P < 0.0001] reflected a significant linear decrease over the sequence (P < 0.0001, −15%).

Visual inspection of the time course of the contrast response revealed a similar profile across the two experiments (Fig. 6, red circles). In both experiments, at large contrast, the contrast response increased gradually at the beginning of the sequence, peaking around the fifth time point (i.e., between ~3 and 10 s). This transient buildup was observed only for perceptually salient contrasts. Moreover, it was observed in the contrast responses but not in the base responses, suggesting that the two responses might be supported by distinct neural mechanisms.
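The sliding FFT used for Fig. 6 can be sketched as follows. A 6.25-s window spans exactly 10 AAAAB cycles, so its 0.16-Hz frequency resolution places 1.6 Hz and 8 Hz exactly on bins 10 and 50, and a 0.625-s step advances the window by one cycle. The sampling rate and the plain rectangular window are assumptions. For a 40-s recording this sketch returns 55 windows; the study's ANOVAs (53 numerator degrees of freedom) imply 54 time points, so its handling of the sequence edges may differ.

```python
import numpy as np


def sliding_amplitudes(x: np.ndarray, fs: float, freqs_hz, win_s: float = 6.25,
                       step_s: float = 0.625):
    """Amplitude at freqs_hz in windows of win_s seconds moved in steps of step_s.
    Returns window-centre times (s) and an array of shape (n_windows, len(freqs_hz))."""
    win = int(round(win_s * fs))
    step = int(round(step_s * fs))
    starts = np.arange(0, len(x) - win + 1, step)
    bin_hz = fs / win                          # 0.16 Hz for a 6.25-s window
    bins = [int(round(f / bin_hz)) for f in freqs_hz]
    out = np.empty((len(starts), len(bins)))
    for i, s in enumerate(starts):
        spectrum = np.abs(np.fft.rfft(x[s:s + win])) * 2 / win
        out[i] = spectrum[bins]
    return (starts + win / 2) / fs, out


fs = 1000                                     # sampling rate (assumption)
t = np.arange(int(40 * fs)) / fs
x = np.sin(2 * np.pi * 1.6 * t)               # toy 1.6-Hz "contrast response"
times, amps = sliding_amplitudes(x, fs, [1.6, 3.2, 4.8, 6.4])
print(len(times), amps[:, 0].min(), amps[:, 0].max())
```

Because each time point pools 10 cycles, changes occurring within the first few repetitions of the pattern are smoothed over, which limits how early a buildup or adaptation can be resolved.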

DISCUSSION

In two experiments, contrast-related responses were successfully identified for different types of auditory contrasts embedded in a fast continuous sound sequence, even when the contrast was very fine. With the frequency-tagging approach, these contrast responses were identified at the exact frequency at which the auditory contrast was introduced (8 Hz/5 = 1.6 Hz for the AAAAB pattern) and its harmonics. These responses thus emerged in the EEG spectrum at frequencies distinct from those of the response to the envelope of acoustic energy of the sequence. Comparison with a cochlear channel model revealed that peripheral cochlear channeling could not fully account for the EEG responses to the sequence with large tone contrast, suggesting the involvement of additional central mechanisms in processing the contrast. This was also suggested by the contrast responses obtained in experiment 2, where the contrast consisted of a difference in interaural timing, which cannot be resolved at the level of the cochlear channels.

Time course analysis of the EEG responses to the AAAAB patterns revealed that these responses occurred at latencies corresponding to those of middle-latency auditory potentials (∼50 ms). Finally, analysis of the evolution of the responses across repetitions of the AAAAB pattern revealed a stable amplitude over time, except for the responses to the most perceptually salient contrasts, which showed a buildup followed by a decay across repetitions of the pattern.

Undetectable Contrast

No contrast response was identified for the 0.02-semitone tone contrast. This result corroborates previous evidence that the cochlear channels cannot resolve such a small tone contrast (Moore 2003) and is consistent with the subjective reports of all participants, none of whom could discriminate between A and B tones in this condition. Instead, only the base response to the envelope of acoustic energy of the sequence was observed in the EEG spectrum. This response displayed a fronto-central distribution, as typically found for responses to amplitude-modulated tones, compatible with bilateral activation of Heschl’s gyrus in response to fast sequences of tones (Snyder et al. 2006). Time course analysis within the AAAAB pattern revealed that this base response corresponded to a positive deflection peaking ~50 ms after the onset of each tone in the pattern, which could be related to auditory middle-latency responses originating from Heschl’s gyrus (see e.g., Gutschalk et al. 2005 and Snyder et al. 2006). This positive deflection was followed by a negative peak at ~100 ms, which could correspond to higher-level, late-latency cortical activity (see e.g., Cornella et al. 2015). Future research combining the same design with recording methods of greater spatial resolution (e.g., intracerebral EEG; see e.g., Jonas et al. 2016) or with analysis methods previously used to investigate the neural generators of evoked potentials such as the MMN (e.g., dynamic causal modeling; see e.g., Garrido et al. 2008) should help identify the generators of these components.

Interestingly, the amplitude of these responses did not decrease significantly over repetitions of the tones in the 40-s sequence. This absence of adaptation, even after dozens of seconds of repetitive stimulation at a relatively fast rate, is at odds with the hypothesis that the neural response to a stimulus is reduced by a similar preceding stimulus through a mechanism of adaptation (Bregman 1990; Gutschalk et al. 2005; Hartmann and Johnson 1991). Alternatively, our analysis may have missed adaptation if it was a fast process, already present after one or two repetitions of the sound, because each time point of the sliding analysis pooled 6.25 s of signal.
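To put the 0.02-semitone contrast in perspective, the semitone steps can be converted to frequency differences (a step of n semitones multiplies frequency by 2^(n/12)) and compared with the bandwidth of a single auditory filter. The sketch uses the Glasberg and Moore equivalent rectangular bandwidth (ERB) formula; the reference list cites Slaney's Auditory Toolbox, whose gammatone filterbank uses the same ERB scale, but whether the study's cochlear channel model did so is not stated in this section. The 1-kHz A-tone frequency is hypothetical, and the comparison indicates scale only: discrimination also depends on excitation-pattern slopes (Freyman and Nelson 1986).

```python
def semitones_to_delta_hz(f_ref_hz: float, semitones: float) -> float:
    """Frequency difference between f_ref_hz and a tone `semitones` above it."""
    return f_ref_hz * (2 ** (semitones / 12) - 1)


def erb_hz(f_hz: float) -> float:
    """Equivalent rectangular bandwidth of the auditory filter centred at f_hz
    (Glasberg and Moore formula: 24.7 * (4.37 * f / 1000 + 1))."""
    return 24.7 * (4.37 * f_hz / 1000 + 1)


F_REF = 1000.0  # hypothetical A-tone frequency; the actual value is not given here
for st in (0.02, 0.50, 4.0):
    delta = semitones_to_delta_hz(F_REF, st)
    print(f"{st:>5} semitone(s): {delta:7.2f} Hz = {delta / erb_hz(F_REF):.3f} ERB")
```

At this hypothetical frequency, the 0.02-semitone step is about 1% of one ERB, whereas the 4-semitone step spans roughly two ERBs, in line with only the larger contrasts producing distinct channel outputs.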

Small Detectable Contrast

Unlike the 0.02-semitone condition, the 0.50-semitone condition elicited a small but significant contrast response, consistent with the subjective reports of the participants, all of whom could discriminate between A and B tones in this condition. This result highlights the sensitivity of the frequency-tagging method in capturing the neural processing of contrasts inserted in fast, continuous streams and suggests that the method could be used to probe contrast responses at threshold in individuals with specific auditory expertise or impairment. Indeed, the semitone is considered an experience-induced perceptual boundary in Western listeners, as it is the smallest frequency interval commonly used in Western music (Zarate et al. 2012), so a 0.50-semitone contrast falls below this boundary.

Comparison with the cochlear channel model indicated that channeling processes at the cochlear stage could account for the EEG responses to this fine tone contrast. In other words, the contrast-specific response observed here could be explained by the activity of neuronal populations responding specifically to the frequency band of the B tones, distinct from the tonotopic channel responding to the A tones (Micheyl et al. 2007). Interestingly, the amplitude of these responses did not decrease significantly over the 40-s sequence, again revealing a relatively stable process with no adaptation, even after dozens of repetitions of the tones at a fast rate.

Large Contrast

With the 4-semitone contrast, the contrast response was even larger, indicating that its amplitude increased with the size of the contrast. However, these responses could no longer be explained by the cochlear channel model, suggesting the involvement of additional central mechanisms. For instance, these neural responses might include an effect of bottom-up attention captured by the AAAAB pattern, which the sharper contrast made more perceptually salient than in sequences with finer contrasts between tones. The involvement of central mechanisms, possibly already at the brainstem level, was also suggested by the results of the second experiment, which used an interaural time contrast instead of a tone contrast. Indeed, the contrast responses observed in this second experiment cannot be explained by peripheral channeling, as the A and B tones activated the same tonotopic channels.
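The claim that an interaural time contrast leaves the tonotopic input unchanged can be checked numerically: delaying one ear's signal alters only its phase, so the magnitude spectrum at each ear, and hence the set of frequency channels stimulated, is identical for A and B tones. The sketch below applies a 600-μs delay (from the text) as a linear phase shift in the frequency domain, which allows fractional-sample delays but is circular. The 48-kHz sampling rate, the 480-Hz tone, and this delay method are assumptions, not the study's stimulus-generation procedure.

```python
import numpy as np


def apply_itd(mono: np.ndarray, fs: float, itd_s: float):
    """Return (left, right) with the right channel delayed by itd_s seconds.
    The delay is a linear phase shift in the frequency domain: it supports
    fractional-sample delays but wraps around the end of the buffer."""
    n = len(mono)
    freqs = np.fft.rfftfreq(n, d=1 / fs)
    shifted = np.fft.rfft(mono) * np.exp(-2j * np.pi * freqs * itd_s)
    right = np.fft.irfft(shifted, n=n)
    return mono.copy(), right


fs = 48000                                    # sampling rate (assumption)
t = np.arange(int(0.125 * fs)) / fs           # one 125-ms tone
tone = np.sin(2 * np.pi * 480 * t)            # 480 Hz: 60 whole cycles in 125 ms (assumption)
left, right = apply_itd(tone, fs, 600e-6)     # 600-us interaural time contrast
same_magnitude = np.allclose(np.abs(np.fft.rfft(left)),
                             np.abs(np.fft.rfft(right)), atol=1e-6)
print(same_magnitude)
```

A 600-μs delay corresponds to 28.8 samples at 48 kHz, which is why a fractional delay is convenient here; a whole-sample shift would slightly misrepresent the intended interaural difference.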

Time Course of the Responses

Time-domain analysis revealed a significant modulation of the response to the AAAAB pattern by the contrast, which accounts for the emergence of contrast responses in the frequency-domain analysis. For the fine contrast, these modulations appeared within time windows compatible with late responses triggered by the B tone (i.e., during the presentation of the subsequent A tones). For larger contrasts, however, these modulations also appeared in a time window compatible with middle-latency responses to the B tone (i.e., during the actual presentation of the B tone). To date, deviance-related modulations of middle-latency auditory evoked potentials have been reported for physical feature changes occurring with a low probability, but not for sequences following a fast repeating pattern such as the one used here (see e.g., Grimm et al. 2016 for a review).

We also analyzed the evolution of the responses across repetitions of the AAAAB pattern. For large contrasts, this analysis revealed a transient increase of the contrast response, peaking 3–10 s after the onset of the sequence. This transient increase could reflect regularity processing, which requires a few instances of the stimulus before complex perceptual patterns are formed (see e.g., Barascud et al. 2016). Alternatively, this buildup could be interpreted in light of previous work that observed a progressive increase in the amplitude of event-related potentials over a few presentations of ABA patterns used to explore the perceptual phenomenon of stream segregation between A and B tones (Snyder et al. 2006). In that work, the buildup was interpreted as a temporal window in which successive tones were analyzed and integrated into separate streams, thus underlying the buildup of stream segregation (Snyder et al. 2006). This interpretation fits well with the present results, which show a similar buildup period for the contrast response but not for the base response and, most importantly, only in the conditions that produced stream segregation as reported by all participants (i.e., the large frequency contrast of experiment 1 and the separate spatial source condition of experiment 2). Future research using the same frequency-tagging method but manipulating perceptual segregation into separate streams independently of the contrast, for example, by exploiting the multistability of sequences inducing either stream segregation or integration (see e.g., Bregman 1990), could address this issue more directly.

This transient increase of the contrast response was followed by a progressive decay over the 40-s sequence, possibly reflecting adaptation to the repetition of the contrast over time. Such adaptation has been observed in humans and nonhuman animals as modulations of the sensitivity to the contrast with the increasing predictability of its occurrence, leading to the formation of complex perceptual patterns (Barascud et al. 2016; Bendixen 2014; Chait et al. 2008; Schröger et al. 2014; Simpson et al. 2014; Sussman et al. 1998; Yaron et al. 2012). Most importantly, the fact that this progressive decay was observed only for large contrasts and not for fine contrasts in the current study suggests that the perceptual saliency of the contrast is critical to this adaptation, in addition to parameters such as contrast predictability.

Overall, the two experiments demonstrate that this new combination of frequency tagging with an oddball design can be used to characterize neural responses specific to contrasts in fast, continuous acoustic sequences. Specifically, the approach opens avenues for further research on contrast-specific responses across a wide range of presentation rates, to investigate the processing of auditory information that arrives rapidly and sequentially, as well as the modulation of this processing by endogenous factors such as attention or exogenous factors such as the acoustic spectral properties of the stimuli. Moreover, because it does not require an explicit response from the participant, this method could be particularly valuable for probing these processes in individuals with hearing impairment or neurodevelopmental disorders such as dyslexia, who show difficulties in processing brief, rapidly changing acoustic information (Albouy et al. 2016; Oxenham 2008).

GRANTS

S. Nozaradan was supported by an Australian Research Council grant (DECRA DE160101064), and A. Mouraux was supported by an FRSM 3.4558.12 Convention grant from the Belgian National Fund for Scientific Research.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the authors.

AUTHOR CONTRIBUTIONS

S.N., A.M., and M.C. conceived and designed research; S.N. and M.C. performed experiments; S.N. and M.C. analyzed data; S.N., A.M., and M.C. interpreted results of experiments; S.N. prepared figures; S.N. drafted manuscript; S.N., A.M., and M.C. edited and revised manuscript; S.N., A.M., and M.C. approved final version of manuscript.

REFERENCES

- Albouy P, Cousineau M, Caclin A, Tillmann B, Peretz I. Impaired encoding of rapid pitch information underlies perception and memory deficits in congenital amusia. Sci Rep 6: 18861, 2016. doi: 10.1038/srep18861. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Appelbaum LG, Wade AR, Vildavski VY, Pettet MW, Norcia AM. Cue-invariant networks for figure and background processing in human visual cortex. J Neurosci 26: 11695–11708, 2006. doi: 10.1523/JNEUROSCI.2741-06.2006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Barascud N, Pearce MT, Griffiths TD, Friston KJ, Chait M. Brain responses in humans reveal ideal observer-like sensitivity to complex acoustic patterns. Proc Natl Acad Sci USA 113: E616–E625, 2016. doi: 10.1073/pnas.1508523113. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bell AJ, Sejnowski TJ. An information-maximization approach to blind separation and blind deconvolution. Neural Comput 7: 1129–1159, 1995. doi: 10.1162/neco.1995.7.6.1129. [DOI] [PubMed] [Google Scholar]

- Bendixen A. Predictability effects in auditory scene analysis: a review. Front Neurosci 8: 60, 2014. doi: 10.3389/fnins.2014.00060. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bregman A. Auditory Scene Analysis: The Perceptual Organization of Sound. Cambridge, MA: MIT Press, 1990. [Google Scholar]

- Chait M, Poeppel D, Simon JZ. Auditory temporal edge detection in human auditory cortex. Brain Res 1213: 78–90, 2008. doi: 10.1016/j.brainres.2008.03.050. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chemin B, Mouraux A, Nozaradan S. Body movement selectively shapes the neural representation of musical rhythms. Psychol Sci 25: 2147–2159, 2014. doi: 10.1177/0956797614551161. [DOI] [PubMed] [Google Scholar]

- Cirelli LK, Spinelli C, Nozaradan S, Trainor LJ. Measuring neural entrainment to beat and meter in infants: effects of music background. Front Neurosci 10: 229, 2016. doi: 10.3389/fnins.2016.00229. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cornella M, Bendixen A, Grimm S, Leung S, Schröger E, Escera C. Spatial auditory regularity encoding and prediction: Human middle-latency and long-latency auditory evoked potentials. Brain Res 1626: 21–30, 2015. doi: 10.1016/j.brainres.2015.04.018. [DOI] [PubMed] [Google Scholar]

- de Heering A, Rossion B. Rapid categorization of natural face images in the infant right hemisphere. eLife 4: e06564, 2015. doi: 10.7554/eLife.06564. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Demany L, Semal C. The slow formation of a pitch percept beyond the ending time of a short tone burst. Percept Psychophys 67: 1376–1383, 2005. doi: 10.3758/BF03193642. [DOI] [PubMed] [Google Scholar]

- Demany L, Semal C. In auditory perception of sound sources. In: Springer Handbook of Auditory Research, edited by Fay RR and Popper AN. New York: Springer International, p. 77–113, vol. 29, 2007. [Google Scholar]

- Escera C, Malmierca MS. The auditory novelty system: an attempt to integrate human and animal research. Psychophysiology 51: 111–123, 2014. doi: 10.1111/psyp.12156. [DOI] [PubMed] [Google Scholar]

- Freyman RL, Nelson DA. Frequency discrimination as a function of tonal duration and excitation-pattern slopes in normal and hearing-impaired listeners. J Acoust Soc Am 79: 1034–1044, 1986. doi: 10.1121/1.393375. [DOI] [PubMed] [Google Scholar]

- Garrido MI, Friston KJ, Kiebel SJ, Stephan KE, Baldeweg T, Kilner JM. The functional anatomy of the MMN: a DCM study of the roving paradigm. Neuroimage 42: 936–944, 2008. doi: 10.1016/j.neuroimage.2008.05.018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Goswami U. Sensory theories of developmental dyslexia: three challenges for research. Nat Rev Neurosci 16: 43–54, 2015. doi: 10.1038/nrn3836. [DOI] [PubMed] [Google Scholar]

- Grimm S, Escera C. Auditory deviance detection revisited: evidence for a hierarchical novelty system. Int J Psychophysiol 85: 88–92, 2012. doi: 10.1016/j.ijpsycho.2011.05.012. [DOI] [PubMed] [Google Scholar]

- Grimm S, Escera C, Nelken I. Early indices of deviance detection in humans and animal models. Biol Psychol 116: 23–27, 2016. doi: 10.1016/j.biopsycho.2015.11.017. [DOI] [PubMed] [Google Scholar]

- Groppe DM, Urbach TP, Kutas M. Mass univariate analysis of event-related brain potentials/fields I: a critical tutorial review. Psychophysiology 48: 1711–1725, 2011. doi: 10.1111/j.1469-8986.2011.01273.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gutschalk A, Micheyl C, Melcher JR, Rupp A, Scherg M, Oxenham AJ. Neuromagnetic correlates of streaming in human auditory cortex. J Neurosci 25: 5382–5388, 2005. doi: 10.1523/JNEUROSCI.0347-05.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hartmann WM, Johnson D. Stream segregation and peripheral channeling. Music Percept J 9: 155–183, 1991. doi: 10.2307/40285527. [DOI] [Google Scholar]

- Heinrich SP, Mell D, Bach M. Frequency-domain analysis of fast oddball responses to visual stimuli: a feasibility study. Int J Psychophysiol 73: 287–293, 2009. doi: 10.1016/j.ijpsycho.2009.04.011. [DOI] [PubMed] [Google Scholar]

- Jonas J, Jacques C, Liu-Shuang J, Brissart H, Colnat-Coulbois S, Maillard L, Rossion B. A face-selective ventral occipito-temporal map of the human brain with intracerebral potentials. Proc Natl Acad Sci USA 113: E4088–E4097, 2016. doi: 10.1073/pnas.1522033113. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jung TP, Makeig S, Westerfield M, Townsend J, Courchesne E, Sejnowski TJ. Removal of eye activity artifacts from visual event-related potentials in normal and clinical subjects. Clin Neurophysiol 111: 1745–1758, 2000. doi: 10.1016/S1388-2457(00)00386-2. [DOI] [PubMed] [Google Scholar]

- Kraus N, McGee T, Carrell T, King C, Littman T, Nicol T. Discrimination of speech-like contrasts in the auditory thalamus and cortex. J Acoust Soc Am 96: 2758–2768, 1994. doi: 10.1121/1.411282. [DOI] [PubMed] [Google Scholar]

- Liu-Shuang J, Norcia AM, Rossion B. An objective index of individual face discrimination in the right occipito-temporal cortex by means of fast periodic oddball stimulation. Neuropsychologia 52: 57–72, 2014. doi: 10.1016/j.neuropsychologia.2013.10.022. [DOI] [PubMed] [Google Scholar]

- Lochy A, Van Reybroeck M, Rossion B. Left cortical specialization for visual letter strings predicts rudimentary knowledge of letter-sound association in preschoolers. Proc Natl Acad Sci USA 113: 8544–8549, 2016. doi: 10.1073/pnas.1520366113. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Makeig S. Response: event-related brain dynamics—unifying brain electrophysiology. Trends Neurosci 25: 390, 2002. doi: 10.1016/S0166-2236(02)02198-7. [DOI] [PubMed] [Google Scholar]

- Maris E, Oostenveld R. Nonparametric statistical testing of EEG- and MEG-data. J Neurosci Methods 164: 177–190, 2007. doi: 10.1016/j.jneumeth.2007.03.024. [DOI] [PubMed] [Google Scholar]

- Massaro DW, Idson WL. Backward recognition masking in relative pitch judgments. Percept Mot Skills 45: 87–97, 1977. doi: 10.2466/pms.1977.45.1.87. [DOI] [PubMed] [Google Scholar]

- May PJ, Tiitinen H. Mismatch negativity (MMN), the deviance-elicited auditory deflection, explained. Psychophysiology 47: 66–122, 2010. doi: 10.1111/j.1469-8986.2009.00856.x. [DOI] [PubMed] [Google Scholar]

- Micheyl C, Carlyon RP, Gutschalk A, Melcher JR, Oxenham AJ, Rauschecker JP, Tian B, Courtenay Wilson E. The role of auditory cortex in the formation of auditory streams. Hear Res 229: 116–131, 2007. doi: 10.1016/j.heares.2007.01.007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Moore BC. An Introduction to the Psychology of Hearing (5th ed.). Amsterdam: Academic, 2003. [Google Scholar]

- Mouraux A, Iannetti GD, Colon E, Nozaradan S, Legrain V, Plaghki L. Nociceptive steady-state evoked potentials elicited by rapid periodic thermal stimulation of cutaneous nociceptors. J Neurosci 31: 6079–6087, 2011. doi: 10.1523/JNEUROSCI.3977-10.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Näätänen R, Paavilainen P, Rinne T, Alho K. The mismatch negativity (MMN) in basic research of central auditory processing: a review. Clin Neurophysiol 118: 2544–2590, 2007. doi: 10.1016/j.clinph.2007.04.026. [DOI] [PubMed] [Google Scholar]

- Nelken I. Stimulus-specific adaptation and deviance detection in the auditory system: experiments and models. Biol Cybern 108: 655–663, 2014. doi: 10.1007/s00422-014-0585-7. [DOI] [PubMed] [Google Scholar]

- Norcia AM, Appelbaum LG, Ales JM, Cottereau BR, Rossion B. The steady-state visual evoked potential in vision research: A review. J Vis 15: 4, 2015. doi: 10.1167/15.6.4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nozaradan S, Mouraux A, Jonas J, Colnat-Coulbois S, Rossion B, Maillard L. Intracerebral evidence of rhythm transform in the human auditory cortex. Brain Struct Funct, 2016a [Epub ahead of print]. doi: 10.1007/s00429-016-1348-0. [DOI] [PubMed] [Google Scholar]

- Nozaradan S, Peretz I, Keller PE. Individual differences in rhythmic cortical entrainment correlate with predictive behavior in sensorimotor synchronization. Sci Rep 6: 20612, 2016b. doi: 10.1038/srep20612. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nozaradan S, Peretz I, Mouraux A. Steady-state evoked potentials as an index of multisensory temporal binding. Neuroimage 60: 21–28, 2012. doi: 10.1016/j.neuroimage.2011.11.065. [DOI] [PubMed] [Google Scholar]

- Nozaradan S, Schönwiesner M, Caron-Desrochers L, Lehmann A. Enhanced brainstem and cortical encoding of sound during synchronized movement. Neuroimage 142: 231–240, 2016c. doi: 10.1016/j.neuroimage.2016.07.015. [DOI] [PubMed] [Google Scholar]

- Nozaradan S, Zerouali Y, Peretz I, Mouraux A. Capturing with EEG the neural entrainment and coupling underlying sensorimotor synchronization to the beat. Cereb Cortex 25: 736–747, 2015. doi: 10.1093/cercor/bht261. [DOI] [PubMed] [Google Scholar]

- Oxenham AJ. Pitch perception and auditory stream segregation: implications for hearing loss and cochlear implants. Trends Amplif 12: 316–331, 2008. doi: 10.1177/1084713808325881. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Retter TL, Rossion B. Uncovering the neural magnitude and spatio-temporal dynamics of natural image categorization in a fast visual stream. Neuropsychologia 91: 9–28, 2016. doi: 10.1016/j.neuropsychologia.2016.07.028. [DOI] [PubMed] [Google Scholar]

- Rossion B. Understanding individual face discrimination by means of fast periodic visual stimulation. Exp Brain Res 232: 1599–1621, 2014. doi: 10.1007/s00221-014-3934-9. [DOI] [PubMed] [Google Scholar]

- Schröger E, Bendixen A, Denham SL, Mill RW, Bőhm TM, Winkler I. Predictive regularity representations in violation detection and auditory stream segregation: from conceptual to computational models. Brain Topogr 27: 565–577, 2014. doi: 10.1007/s10548-013-0334-6. [DOI] [PubMed] [Google Scholar]

- Simpson AJ, Harper NS, Reiss JD, McAlpine D. Selective adaptation to “oddball” sounds by the human auditory system. J Neurosci 34: 1963–1969, 2014. doi: 10.1523/JNEUROSCI.4274-13.2013.

- Slaney M. Auditory Toolbox Version 2, Technical Report #1998-010. Palo Alto, CA: Interval Research Corporation, 1998.

- Snyder JS, Alain C, Picton TW. Effects of attention on neuroelectric correlates of auditory stream segregation. J Cogn Neurosci 18: 1–13, 2006. doi: 10.1162/089892906775250021.

- Sussman E, Ritter W, Vaughan HG Jr. Predictability of stimulus deviance and the mismatch negativity. Neuroreport 9: 4167–4170, 1998. doi: 10.1097/00001756-199812210-00031.

- Tallal P. Improving language and literacy is a matter of time. Nat Rev Neurosci 5: 721–728, 2004. doi: 10.1038/nrn1499.

- Tallal P, Miller S, Fitch RH. Neurobiological basis of speech: a case for the preeminence of temporal processing. Ann N Y Acad Sci 682: 27–47, 1993. doi: 10.1111/j.1749-6632.1993.tb22957.x.

- van den Broeke EN, Mouraux A, Groneberg AH, Pfau DB, Treede RD, Klein T. Characterizing pinprick-evoked brain potentials before and after experimentally induced secondary hyperalgesia. J Neurophysiol 114: 2672–2681, 2015.

- van Noorden L. Temporal Coherence in the Perception of Tone Sequences (PhD Thesis). Eindhoven, Netherlands: Technical University Eindhoven, 1975.

- Yaron A, Hershenhoren I, Nelken I. Sensitivity to complex statistical regularities in rat auditory cortex. Neuron 76: 603–615, 2012. doi: 10.1016/j.neuron.2012.08.025.

- Yost WA. Fundamentals of Hearing: An Introduction (4th ed.). New York: Academic, 2000.

- Zarate JM, Ritson CR, Poeppel D. Pitch-interval discrimination and musical expertise: is the semitone a perceptual boundary? J Acoust Soc Am 132: 984–993, 2012. doi: 10.1121/1.4733535.