Abstract

The selection of appropriate outcomes is crucial when designing clinical trials in order to compare the effects of different interventions directly. For the findings to influence policy and practice, the outcomes need to be relevant and important to key stakeholders including patients and the public, health care professionals and others making decisions about health care. It is now widely acknowledged that insufficient attention has been paid to the choice of outcomes measured in clinical trials. Researchers are increasingly addressing this issue through the development and use of a core outcome set, an agreed standardised collection of outcomes which should be measured and reported, as a minimum, in all trials for a specific clinical area.

Accumulating work in this area has identified the need for guidance on the development, implementation, evaluation and updating of core outcome sets. This Handbook, developed by the COMET Initiative, brings together current thinking and methodological research regarding those issues. We recommend a four-step process to develop a core outcome set. The aim is to update the contents of the Handbook as further research is identified.

Electronic supplementary material

The online version of this article (doi:10.1186/s13063-017-1978-4) contains supplementary material, which is available to authorized users.

Keywords: Core outcome set, Clinical trial, COMET Initiative, Patients and the public

Chapter 1: Background

1.1 Outcomes in clinical trials

Clinical trials are research studies undertaken with human beings for the purpose of assessing the safety and effectiveness of interventions, treatments or care procedures. Randomised controlled trials (RCTs) are seen as the ‘gold standard’ in evaluating the effects of treatments [1].

There are three basic components of randomised clinical trials [2]:

At least one test treatment and a comparator treatment

Randomisation of treatment allocation

Outcome measure(s)

It is the third component that is the focus of this Handbook. Broadly, in the context of clinical trials, an outcome is a measurement or observation used to capture and assess the effect of treatment, such as an assessment of side effects (risk) or effectiveness (benefit). When designing a clinical trial, the ‘PICO’ format is often used to formulate a research question. A ‘well-built’ question should include four parts: the patient problem or population (P), the intervention (I), the comparator (C) and the outcomes of interest (O) [3]. In a randomised trial, differences in outcomes between the groups can be inferred to result from the differing interventions. Therefore, the selection, measurement and reporting of important, relevant and appropriate outcomes are critical.

Researchers, clinicians and policy-makers often distinguish between the efficacy and the effectiveness of an intervention. Whereas efficacy trials (also described as explanatory trials) determine whether an intervention can have a beneficial effect in an ideal situation under optimum conditions [4], effectiveness trials (also described as pragmatic trials) measure the degree of beneficial effect in ‘real-world’ clinical settings. In contrast to an efficacy trial, an effectiveness trial will usually be conducted under conditions as close to routine clinical practice as possible [5]. The design of effectiveness trials is, therefore, based on the conditions of, and with consideration of, routine clinical practice and clinical decision-making. Efficacy trials tend to precede effectiveness trials, and although it is helpful to distinguish between the two, in reality they lie on a continuum [1], which often makes it difficult to separate them as distinct phases of research. The focus of this Handbook is effectiveness trials.

Clinical trials usually include multiple outcomes of interest, and the main outcomes are usually those essential for decision-making. Some outcomes will be of more interest than others. The primary outcome is typically the one of greatest therapeutic importance [6] to relevant stakeholders, such as patients and clinicians; it is an integral component of the research question under investigation and is usually the one used in the sample size calculation [7]. Sometimes researchers propose more than one primary outcome if these are thought to be of equal therapeutic importance and relevance to the research question. This can also be useful if it is unclear which single primary outcome will best answer the question. Secondary outcomes evaluate other beneficial or harmful effects of secondary importance, or help to explain additional effects of the intervention [8]. Secondary outcomes may also be exploratory in nature. Harmful effects should always be regarded as important, regardless of whether they are labelled primary or secondary outcomes [7]. In addition to assessing relative therapeutic benefit and safety, decision-makers are usually also interested in the acceptability and cost-effectiveness of the interventions under study.

A variety of different types of outcomes can be measured in trials, and researchers must decide which of these to measure. As well as the importance of an outcome to relevant stakeholders, researchers must consider an array of information, including how responsive the outcome is to the interventions being compared and its appropriateness to the trial; for example, the financial cost and acceptability to patients of measuring it. The decision is made more complex by the numerous types of outcomes that exist, and researchers must decide which type is most appropriate for both the question under investigation and the specific context of the clinical trial. For example, a clinical outcome describes a medical event or events that occur as a result of disease or treatment [9], and relates to a patient’s symptoms, overall mental state or how the patient functions. In contrast, a surrogate endpoint is used as a substitute for a clinical outcome [10]. A biomarker is another type of outcome: a medical sign, typically used in earlier-phase trials, that is used to predict biological processes. Examples of biomarkers range from pulse and blood pressure, through basic chemistries, to more complex laboratory tests of blood and other tissues [11].

In addition to deciding what to measure, Zarin et al. state that a fully specified outcome measure includes information about the following [12]: the domain (e.g. anxiety), that is, what to measure; the specific measurement (e.g. Hamilton Anxiety Rating Scale), that is, how to measure that outcome/domain; the specific metric used to characterise each participant’s results (e.g. change from baseline at a specified time); and the method of aggregation (e.g. a categorical measure such as the proportion of participants with a decrease greater than 50%).
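To make the four elements concrete, the sketch below records them as a single data structure and treats an outcome as fully specified only when all four are present. The class and field names are hypothetical and illustrative; they are not a published schema from Zarin et al. or any trial registry.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OutcomeSpecification:
    """Hypothetical record of the four elements described by Zarin et al.

    Field names are illustrative assumptions, not a published schema.
    """
    domain: str       # what to measure, e.g. anxiety
    measurement: str  # how to measure it, e.g. a named rating scale
    metric: str       # how each participant's result is characterised
    aggregation: str  # how results are summarised across participants

    def is_fully_specified(self) -> bool:
        # Fully specified only if every one of the four elements is non-empty.
        return all(value.strip() for value in
                   (self.domain, self.measurement, self.metric, self.aggregation))


anxiety = OutcomeSpecification(
    domain="anxiety",
    measurement="Hamilton Anxiety Rating Scale",
    metric="change from baseline at specified time",
    aggregation="proportion of participants with a decrease greater than 50%",
)
domain_only = OutcomeSpecification("anxiety", "", "", "")

print(anxiety.is_fully_specified())      # True
print(domain_only.is_fully_specified())  # False
```

A record that names only the domain, as many trial reports do, fails the check, which mirrors the point that agreeing on what to measure is only the first of several decisions.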

Furthermore, outcomes can be measured in different ways. Some clinical outcomes combine several items and are referred to as composite outcomes. Outcomes can be objective, that is, not subject to a large degree of individual interpretation; these are likely to be measured reliably across patients in a study, by different health care providers and over time. Laboratory tests may be considered objective measures in most cases. Outcomes may also be subjective: most clinical outcomes involve some degree of subjectivity, for example a diagnosis or assessment by a health care provider, carer or the patient themselves. A clinician-reported outcome is an assessment determined by an observer with recognised professional training relevant to the measurement being made. In contrast, an observer-reported outcome is an assessment determined by an observer without professional training relevant to the measurement, i.e. a non-clinician observer such as a teacher or caregiver. This type of assessment is often used when the patient is unable to self-report (e.g. infants, young children). Finally, a patient-reported outcome is a measurement based on a report that comes directly from the patient (i.e. the study participant) about the status of particular aspects of, or events related to, their health condition [9].

1.2 Problems with outcomes

Clinical trials seek to evaluate whether an intervention is effective and safe by comparing the effects of interventions on outcomes, and by measuring differences in outcomes between groups. Clinical decisions about the care of individual patients are made on the basis of these outcomes, so clearly the selection of outcomes to be measured and reported in trials is critical. The chosen outcomes need to be relevant to health service users and others involved in making decisions and choices about health care. However, a lack of adequate attention to the choice of outcomes in clinical trials has led to avoidable waste in both the production and reporting of research, and the outcomes included in research have not always been those that patients regard as most important or relevant [13].

Inconsistencies in outcomes have caused problems for people trying to use health care research, illustrated by the following two examples [14]: (1) a review of oncology trials found that more than 25,000 outcomes appeared only once or twice [15] and (2) in 102/143 (71%) Cochrane reviews, the authors were unable to obtain the findings for key outcomes in the included trials, with 26 (18%) missing data for the review’s prespecified primary outcome from over half of the patients included in the research [16]. In addition, variability in how outcomes are defined and measured can make it difficult, or impossible, to synthesise and apply the results of different research studies. For example, a survey of 10,000 controlled trials involving people with schizophrenia found that 2194 different measurement scales had been used [17].

Alongside this inconsistency in the measurement of outcomes, outcome-reporting bias adds further to the problems faced by users of research who wish to make well-informed decisions about health care. Outcome-reporting bias has been defined as the selection of a subset of the original recorded outcomes, on the basis of the results, for inclusion in the published reports of trials and other research [18]. Empirical evidence shows that outcomes that are statistically significant are more likely to be fully reported [19]. Selective reporting of outcomes means that fully informed decisions cannot be made about the care of patients, resource allocation, research priorities and study design. This can lead to the use of ineffective or even harmful interventions, and to the waste of health care resources that are already limited [20].
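The mechanism by which selective reporting misleads can be illustrated with a small simulation. The sketch below assumes, purely for illustration, that every trial records five independent, normally distributed outcomes with no true treatment effect, and that only outcomes reaching |z| > 1.96 are reported. The function name and all parameter values are hypothetical; this is a toy model, not a method from the cited studies.

```python
import random
import statistics


def simulate_outcome_reporting_bias(n_trials=1000, n_outcomes=5, n_per_arm=30, seed=1):
    """Hypothetical simulation of outcome-reporting bias.

    Assumptions (illustrative only): each trial records n_outcomes independent,
    normally distributed outcomes with NO true treatment effect, and reports
    only those outcomes whose two-sided z-test gives |z| > 1.96.
    """
    rng = random.Random(seed)
    se = (2.0 / n_per_arm) ** 0.5  # standard error of a difference in means, unit variance
    recorded, reported = [], []
    for _ in range(n_trials):
        for _ in range(n_outcomes):
            treatment = [rng.gauss(0.0, 1.0) for _ in range(n_per_arm)]
            control = [rng.gauss(0.0, 1.0) for _ in range(n_per_arm)]
            effect = abs(statistics.fmean(treatment) - statistics.fmean(control))
            recorded.append(effect)
            if effect / se > 1.96:  # selected for publication on the basis of the result
                reported.append(effect)
    mean_reported = statistics.fmean(reported) if reported else float("nan")
    return statistics.fmean(recorded), mean_reported, len(recorded), len(reported)


mean_recorded, mean_reported, n_recorded, n_reported = simulate_outcome_reporting_bias()
print(f"{n_reported} of {n_recorded} recorded outcomes reported")
print(f"mean |effect| recorded: {mean_recorded:.2f}, reported: {mean_reported:.2f}")
```

Because the true effect is zero throughout, any apparent effect among the reported outcomes is produced entirely by selecting results on their statistical significance.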

1.3 Standardising outcomes

1.3.1 Core outcome sets (COS)

These issues of inconsistency and outcome-reporting bias could be reduced with the development and application of agreed standardised sets of outcomes, known as core outcome sets (COS), that should be measured and reported in all trials for a specific clinical area [21]. As previously noted [22], these sets represent ‘the minimum that should be measured and reported in all clinical trials of a specific condition and could also be suitable for use in other types of research and clinical audit’ [23]. It is to be expected that the core outcomes will always be collected and reported, and that researchers will likely also include other outcomes of particular relevance or interest to their specific study. Measuring a COS does not mean that outcomes in a particular trial necessarily need to be restricted to just those in the set.
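The ‘minimum, not maximum’ nature of a COS can be expressed as a simple set relationship: a trial is consistent with the core set if it measures every core outcome, whatever else it measures. The outcome names below are hypothetical, and matching by exact name is a simplification; in practice outcomes must also be defined and measured consistently.

```python
def missing_core_outcomes(core_set, trial_outcomes):
    """Return core outcomes that a trial does not measure (hypothetical check)."""
    return set(core_set) - set(trial_outcomes)


def includes_core_set(core_set, trial_outcomes):
    # A trial meets the COS if it measures every core outcome; extra outcomes are allowed.
    return not missing_core_outcomes(core_set, trial_outcomes)


core = {"pain", "physical function", "adverse events"}  # hypothetical COS
trial_a = {"pain", "physical function", "adverse events", "sleep quality"}
trial_b = {"pain", "sleep quality"}

print(includes_core_set(core, trial_a))              # True
print(sorted(missing_core_outcomes(core, trial_b)))  # ['adverse events', 'physical function']
```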

The first step in the development of a COS is typically to identify what to measure [22]. Once agreement has been reached regarding what should be measured, how the outcomes included in the core set should be defined and measured is then determined.

The use of COS will lead to higher-quality trials, and make it easier for the results of trials to be compared, contrasted and combined as appropriate, thereby reducing waste in research [22]. This approach would reduce heterogeneity between trials because all trials would measure and report the agreed important outcomes, lead to research that is more likely to have measured relevant outcomes due to the involvement of relevant stakeholders in the process of determining what is core, and be of potential value to use in clinical audit. Importantly, it would enhance the value of evidence synthesis by reducing the risk of outcome-reporting bias and ensuring that all trials contribute usable information.

1.3.2 Core outcome set initiatives

One of the earliest examples of an attempt to standardise outcomes is an initiative by the World Health Organisation in the 1970s relating to cancer trials [24]. More than 30 representatives from groups conducting cancer trials came together, and the result was a WHO Handbook of guidelines recommending the minimum requirements for data collection in cancer trials. Since then, the most notable work on outcome standardisation has been conducted by the OMERACT (Outcome Measures in Rheumatology) collaboration [25], which advocates the use of COS, designed using consensus techniques, in clinical trials in rheumatology. This and other relevant initiatives are described below.

OMERACT is an independent initiative of international health professionals interested in outcome measures in rheumatology. The first OMERACT conference on rheumatoid arthritis was held in Maastricht, the Netherlands, in 1992 [26]. It was motivated by discussions between two of the executive members, who compared the outcomes used in European clinical trials of rheumatoid arthritis with those used in North American trials and noted that they used different endpoints. This made it extremely difficult to compare the trials or combine them in meta-analyses. Over the last 20 years, OMERACT has served a critical role in the development and validation of clinical and radiographic outcome measures in rheumatoid arthritis, osteoarthritis, psoriatic arthritis, fibromyalgia and other rheumatic diseases. OMERACT strives to improve outcome measurement in rheumatology through a ‘data driven’, iterative consensus process involving relevant stakeholder groups [27].

An important aspect of OMERACT now is the integration of patients at each stage of the OMERACT process, but this was not always the case. Initially, OMERACT did not include patients in the process of developing COS. The patient perspective workshop at OMERACT 6 in 2002 addressed the question of looking at outcomes from the patient perspective. Fatigue emerged as a major outcome in rheumatoid arthritis, and it was agreed that this should be considered for inclusion in the core set [28–30]. This patient input, along with clinical trialist insight, epidemiologist assessment and industry perspective, has led OMERACT to become a prominent decision-making group in developing outcome measures for all types of clinical trials and observational research in rheumatology. OMERACT has now developed COS for many rheumatological conditions, and has described a conceptual framework for developing core sets in rheumatology (described in Chapter 2) [31].

Since OMERACT there have been other examples of similar COS initiatives to develop recommendations about the outcomes that should be measured in clinical trials. One example is the Initiative on Methods, Measurement, and Pain Assessment in Clinical Trials (IMMPACT) [32], whose aim is to develop consensus reviews and recommendations for improving the design, execution and interpretation of clinical trials of treatments for pain. The first IMMPACT meeting was held in November 2002, and there have been a total of 17 consensus meetings on clinical trials of treatments for acute and chronic pain in adults and children. Another exemplar is the Harmonising Outcome Measures for Eczema (HOME) Initiative [33]. This is an international group working to develop core outcomes to include in all eczema trials.

1.3.3 The COMET Initiative

The COMET (Core Outcome Measures in Effectiveness Trials) Initiative brings together people interested in the development and application of COS [34]. COMET aims to collate and stimulate relevant resources, both applied and methodological, to facilitate exchange of ideas and information, and to foster methodological research in this area. As previously described [35], specific objectives are to:

Raise awareness of current problems with outcomes in clinical trials

Encourage COS development and uptake

Promote Patient and Public Involvement (PPI) in COS development

Provide resources to facilitate these aims

Avoid unnecessary duplication of effort

Encourage evidence-based COS development

The COMET Initiative was launched at a meeting in Liverpool in January 2010, funded by the MRC North West Hub for Trials Methodology (NWHTMR). More than 110 people attended, with representatives from trialists, systematic reviewers, health service users, clinical teams, journal editors, trial funders, policy-makers, trials registries and regulators. The feedback was uniformly supportive, indicating a strong consensus that the time was right for such an initiative. The meeting was followed by a second meeting in Bristol in July 2011 which reinforced the need for COS across a wide range of areas of health and the role of COMET in helping to coordinate information about these. COMET has gone on to have subsequent successful international meetings in Manchester (2013), Rome (2014) [36] and Calgary (2015) [37] to affirm this.

For COS to be an effective solution, they need to be easily accessible by researchers and other key groups. Previously, it has been difficult to identify COS because they are hard to find in the academic literature. This might mean that they have not been used in new studies or that there has been unnecessary duplication of effort in developing new COS. The COMET Initiative sought to tackle this problem by undertaking a systematic review of COS (see section ‘Populating the COMET database’), and to reduce the possibility of waste in research by bringing these resources together in one place. The COMET website and database were launched in August 2011. The COMET Initiative database is a repository of studies relevant to the development of COS. In addition to the searchable database, the website provides:

Information about COMET, including aims and objectives and a description of the COMET Management Group

Information about upcoming and past COMET events, including workshops and meetings organised by the COMET Initiative

Resources for COS developers, including relevant publications, examples of grant-funded projects, examples of COS development protocols, plain language summaries and PPI resources

Relevant web links, including core outcome networks and collaborations, patient involvement, how to measure, and research funding

The growing awareness of the need for COS is reflected in the website and database usage figures [22, 38]. Use of the website continues to increase, with more than 20,900 visits in 2015 (25% increase over 2014), 15,366 unique visitors (25% increase), 13,049 new visitors (33% increase) and a rise in the proportion of visits from outside the UK (11,090 visits; 53% of all visits). By December 2015, a total of 9999 searches had been completed, with 3411 in 2015 alone (43% increase).

1.3.3.1 Populating the COMET database

A systematic review of COS was conducted in August 2013 [14] and subsequently updated to include all published COS up to, and including, December 2014 [39]. The aim of the systematic reviews was to identify studies that sought to determine which outcomes or domains to measure in all clinical trials in a specific condition, and to identify and describe the methodological techniques used in these studies. Together, the two reviews identified 227 published COS up to, and including, December 2014. The systematic reviews highlighted great variability in the ways that COS had been developed, particularly the methods used and the stakeholders included as participants in the process. The update demonstrated that recent studies appear to have adopted a more structured approach towards COS development and that public representation has increased. We refer to the results of these reviews in more detail throughout the Handbook.

As noted above, the types of studies included in the database are those in which COS have been developed, as well as studies relevant to COS development, including systematic reviews of outcomes and patients’ views. Individuals and groups who are planning or developing a COS, who have completed one or who have identified one in an ad hoc way can submit it for inclusion. There are now over 120 ongoing COS included in the COMET database (correct as of April 2016). Furthermore, we maintain a separate list of studies (not included in the database), to include information on studies that have not yet progressed beyond an expression of interest or a very early stage in their development. It also contains some studies where permission is needed before they can be included in the main, online database. This list currently includes approximately 90 studies (correct as of February 2016).

1.3.4 Other relevant initiatives

Whilst the initiatives described above are specific to the development of COS for trials in particular areas of health, there are a few other recent initiatives relevant to the improvement of outcome measurement. One such initiative is the Core Outcomes in Women’s Health (CROWN) initiative. This is an international group, led by journal editors, to harmonise outcome reporting in women’s health research [40]. This consortium aims to promote COS in the specialty, encourage researchers to develop COS and facilitate reporting of the development of COS.

The US National Institutes of Health (NIH) encourages the use of common data elements (CDEs) in NIH-supported research projects or registries. The NIH provide a resource portal that includes databases and repositories of data elements and Case Report Forms that may assist investigators in identifying and selecting data elements for use in their projects [41]. PROMIS is another NIH initiative and is part of the NIH goal to develop systems to support NIH-funded research supported by all of its institutes and centres [41]. PROMIS provides a system of measures of patient-reported health status for physical, mental and social health which can be used across chronic conditions (see description in ‘Ontologies for grouping individual outcomes into outcome domains’ in Chapter 2). Once it has been decided what outcomes should be measured, PROMIS is a source of information regarding how those outcomes could be measured.

Once a COS has been agreed, it is then important to determine how the outcomes included in the set should be defined and measured. Several measurement instruments may exist to measure a given outcome, usually with varying psychometric properties (e.g. reliability and validity). Important sources of information for selecting a measurement instrument for a COS are systematic reviews of measurement instruments. The COnsensus-based Standards for the selection of health Measurement INstruments (COSMIN) initiative collates systematic reviews of the measurement properties of available instruments that intend to measure (aspects of) health status or (health-related) quality of life. An overview of these reviews and guidelines for performing such reviews can be found on the COSMIN website [42]. COSMIN has also developed a checklist describing which measurement properties are important and standards for how to evaluate them [43]. The COSMIN checklist facilitates the selection of the most appropriate patient-reported outcome (PRO) measure amongst competing instruments. A collaboration between COSMIN and COMET has recently resulted in the development of a guideline on how to select outcome measurement instruments for outcomes included in a COS [44]. COSMIN has recently started a new project with the aim of developing a separate version of the COSMIN checklist for non-PRO measurements.

The Clinical Outcome Assessments (COA) staff at the US Food and Drug Administration (FDA), previously known as the Study Endpoints and Labelling Development (SEALD) Study Endpoints Team, aim to encourage the development and application of patient-focussed endpoint measures in medical product development to describe clinical benefit in labelling. The COA staff engage with stakeholders to improve clinical outcome measurement standards and policy development, by providing guidance on COA development, validation and interpretation of clinical benefit endpoints in clinical trials. The FDA define a COA as a ‘measure of patient’s symptoms, overall mental state, or the effects of a disease or condition on how the patient functions’. Put simply, the COA staff work to ensure that the evidence provided about an outcome instrument can be relied upon in the context of drug development and regulatory decision-making.

The Clinical Data Interchange Standards Consortium (CDISC) is a global Standards Development Organisation (SDO) ‘to develop and support global, platform-independent data standards that enable information system interoperability to improve medical research and related areas of health care’ [45]. CDISC aims to establish worldwide industry standards to support the electronic acquisition, exchange, submission and archiving of clinical research data and metadata to improve data quality and streamline medical and biopharmaceutical product development and research processes. The Coalition for Accelerating Standards and Therapies (CFAST) Initiative is a CDISC partnership, set up to accelerate clinical research and medical product development by creating and maintaining data standards, tools and methods for conducting research in therapeutic areas that are important to public health [45]. One of its objectives is to identify common standards for representing clinical data for drug studies in priority therapeutic areas. This includes standardising definitions of outcomes, and the way in which outcomes are described.

1.4 The aim of this Handbook

As stated above, one of the aims of the COMET Initiative is to encourage evidence-based COS development. Although some guidance exists for COS developers [21, 46, 47], it is varied in the level of detail and is often unclear regarding the level of evidence available to support particular methodological recommendations.

The aim of the Handbook is to describe current knowledge in the area of COS development, implementation, review and uptake, and to make recommendations for practice and research as appropriate. The Handbook will be updated as further research is undertaken and reported, in order to continue to inform good methodological practice in this area.

Figure 1 illustrates the approach taken to COS development in this Handbook. Steps 1–4 are covered in detail in Chapter 2, and describe a process for determining what to measure, the COS. Step 5, determining how to measure the outcomes in the COS, is covered briefly since much has been written and described elsewhere. Step 5 is crucial to achieve future uptake and thereby realise the benefits of COS development. Chapter 3 covers implementation, review and feedback. Chapter 4 provides recommendations for practice, proposes a methodological research agenda, and discusses other areas where the use of COS may be beneficial.

Fig. 1. The core outcome set (COS) development process

Chapter 2: Developing a core outcome set

2.1 Background

The development of a COS in health care involves working with key stakeholders to prioritise large numbers of outcomes and achieve consensus as to the core set. Various methods have been used to develop a COS and it is uncertain which are most suitable, accurate and efficient. Research to identify optimal methods of developing COS is ongoing and there is currently wide variation in the approaches used [14]. Methods include the Delphi technique [48, 49], nominal group technique [50, 51], consensus development conference [52] and semistructured group discussion [53]. Many studies have used a combination of methods to reach consensus; for example, Ruperto et al. [54] used the Delphi approach followed by the nominal group technique at a face-to-face meeting, whilst Harman et al. [55], Potter et al. [56], van’t Hooft et al. [57] and Blazeby et al. [58] used the Delphi approach followed by face-to-face semistructured discussion.
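In a Delphi survey, participants repeatedly rate each candidate outcome, and a prespecified rule decides which outcomes have reached consensus. This section does not define such a rule, so the sketch below assumes one commonly used in COS studies: on a 1–9 importance scale, at least 70% of participants rate an outcome 7–9 and fewer than 15% rate it 1–3. The thresholds, function name and ratings are illustrative assumptions only.

```python
def consensus_in(scores, high=0.70, low=0.15):
    """Assumed 'consensus in' rule for one outcome rated on a 1-9 scale.

    Thresholds are illustrative assumptions: at least `high` of participants
    rate 7-9 AND fewer than `low` rate 1-3.
    """
    if not scores:
        raise ValueError("no scores supplied")
    n = len(scores)
    share_critical = sum(7 <= s <= 9 for s in scores) / n
    share_unimportant = sum(1 <= s <= 3 for s in scores) / n
    return share_critical >= high and share_unimportant < low


round_two = {  # hypothetical round-two ratings from 10 panellists
    "mortality": [9, 8, 9, 7, 8, 9, 9, 6, 8, 7],
    "length of stay": [5, 7, 4, 8, 6, 3, 7, 5, 6, 2],
}
core = [name for name, scores in round_two.items() if consensus_in(scores)]
print(core)  # ['mortality']
```

The choice of thresholds materially affects which outcomes are retained, which is one reason different consensus methods can produce overlapping but not identical core sets.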

One example where consensus work has been undertaken in two different ways is in paediatric asthma. The American Thoracic Society/European Respiratory Society employed an expert panel approach [59], whereas other researchers combined results from a Delphi survey with clinicians and interviews with parents and children [60]. The results were overlapping but not identical. Female sexual dysfunction is another disease area where different methods have been used to obtain consensus. In one study, a literature review was undertaken and critiqued by experts [61], whereas in another study, a modified Delphi method was used to develop consensus definitions and classifications [62]. Both studies resulted in the same primary outcome; however, secondary outcomes differed. Similarly, multiple COS have also been developed for systemic lupus erythematosus. OMERACT adopted a nominal group process to rank outcome domains [63], whereas EULAR adopted a consensus building approach [64]. The results from both studies were very similar, with EULAR recommending other additional outcomes.

COS developers have identified that methodological guidance for COS development would be helpful [65]. There is limited empirical evidence, however, regarding whether different methods lead to similar or different conclusions, and there is a need to develop evidence-based approaches to COS development.

The OMERACT Handbook is a useful resource for those wishing to develop COS in the area of rheumatology under the umbrella of the OMERACT organisation [46]. We have previously identified issues to be considered in the development of COS more generally [21] and expand on those here, together with additional ones identified since this earlier publication. We present information about how COS developers have tackled these issues using data from our previous systematic reviews [14, 39] and describe results from methodological research studies where available.

In the systematic review (227 COS identified), 63% of studies made recommendations about what to measure only. Some of the remaining studies also made recommendations about how to measure the outcomes that they included in their core set, with 35% of studies doing this as a single process, considering both what to measure and how to measure. The remaining 2% of studies in the systematic review of COS considered what to measure and how to measure outcomes included in the core set as a two-stage process, first considering what to measure and then considering how to measure. Thus, there appears to be consistency in that the first step in the process is typically to gain agreement about ‘what’ to measure, with decisions about ‘how’ and ‘when’ to measure these outcomes usually later in the process. This two-stage process has the advantage of being able to identify gaps where further research would be needed, e.g. if an outcome is deemed to be of core importance but no outcome measurement instrument exists with adequate psychometric properties.
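The gap-finding advantage of the two-stage process can be sketched as follows: once the core outcomes are agreed, each is checked against the available instruments, and any outcome without an instrument judged to have adequate psychometric properties is flagged for further research. The instrument names, outcomes and the single `adequate_properties` flag are hypothetical simplifications of what is in practice a detailed appraisal.

```python
from dataclasses import dataclass


@dataclass
class Instrument:
    name: str
    outcome: str
    adequate_properties: bool  # assumed summary of a psychometric appraisal


def find_measurement_gaps(core_outcomes, instruments):
    """Return core outcomes with no instrument judged to have adequate properties."""
    covered = {i.outcome for i in instruments if i.adequate_properties}
    return [outcome for outcome in core_outcomes if outcome not in covered]


core_outcomes = ["fatigue", "pain", "work participation"]  # hypothetical COS
instruments = [
    Instrument("Scale A", "fatigue", True),
    Instrument("Scale B", "pain", True),
    Instrument("Questionnaire C", "work participation", False),
]
print(find_measurement_gaps(core_outcomes, instruments))  # ['work participation']
```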

This chapter provides guidance on developing consensus about what to measure, i.e. a COS, and provides recommendations for finding and selecting instruments for measuring the outcomes in the core set, i.e. the how.

2.2 Scope of a core outcome set

The scope of a COS refers to the specific area of health or health care of interest to which the COS is to be applied. The scope should be described in terms of the health condition, target population and interventions that the COS is to be applicable to, thus covering the first three elements of the PICO (Population, Intervention, Comparator, Outcomes) structure for a clinical trial.

Defining the scope can be one of the most difficult aspects of the process, but clarity from the outset is likely to reduce later misinterpretation and ambiguity. It will also help to focus the development of the COS and help potential users decide on its relevance to their work.

2.2.1 Health condition and target population

For example, in prostate cancer, a COS may be developed for all patients or it may focus on patients with localised disease.

2.2.2 Interventions

For example, a COS may be created for use in all trials of interventions to treat localised prostate cancer or just for surgery.

Of the 227 COS published up to the end of 2014, 53% did not specify whether the COS was intended for all interventions or a particular intervention type, 7% were for any intervention, and 40% were for a specific intervention type.

2.2.3 Setting

The focus of this Handbook is on the development of COS for effectiveness trials. A distinction is made between efficacy and effectiveness trials, since developing a COS to cover both designs may lead to difficulties with respect to particular domains such as health care resource use [48]. COS are equally applicable in other settings; for example, routine clinical practice (see ‘Chapter 4’).

2.3 Establishing the need for a core outcome set

2.3.1 Does a relevant core outcome set already exist?

The first thing to do is find out whether a relevant COS exists by reviewing the academic literature.

One of the difficulties in this area of research has been to identify whether studies have already been done, or are underway, to develop a COS. The COMET Initiative has developed an online searchable database, enabling researchers to check for existing or ongoing work before embarking on a new project, thus minimising unnecessary duplication of effort. A video of ‘How to search the COMET database’ can be found on the COMET website [66].

The COMET database is populated through an annual systematic review update of published studies, and by COS developers registering their new projects. To avoid missing any ongoing projects not yet registered in the COMET database, it is recommended that researchers contact other experts in the particular health condition, as well as the COMET project coordinator, to check whether any related work is ongoing. It may also be prudent to apply the COMET search strategy [14] with additional filter terms for the area of interest for the recent period since the last COMET annual update.

Although there may be no exact match for the scope of interest, it may be that a related COS exists, e.g. a COS for all interventions in the condition of interest has been developed but a COS for a specific intervention type is sought, or a COS was developed by relevant stakeholders in countries other than that of the team with the current interest, or a COS was developed with the same scope but did not involve obtaining patients’ views.

2.3.2 Is a core outcome set needed?

If a relevant COS does not exist, a review of previous trials [67] or systematic reviews [68] in the area can provide evidence of need for a COS. Systematic reviewers are starting to use the outcome matrix recommended by the ORBIT project [69] to display the outcomes reported in the eligible studies. This matrix may demonstrate inconsistency of outcomes measured to date in addition to potential outcome-reporting bias.
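An outcome matrix of this kind can be sketched as a table of studies against outcomes, marking which outcomes each study reports. This is a minimal sketch with hypothetical trials and outcomes; it records only whether an outcome was reported and does not reproduce the ORBIT project’s own classification of reporting.

```python
from typing import Dict, List, Set

# Hypothetical extraction: outcomes reported by each eligible trial.
reported: Dict[str, Set[str]] = {
    "Trial 1": {"mortality", "pain", "length of stay"},
    "Trial 2": {"pain", "quality of life"},
    "Trial 3": {"mortality", "adverse events"},
}


def outcome_matrix(reported: Dict[str, Set[str]]) -> List[List[str]]:
    """Build a study-by-outcome matrix with 'X' where an outcome is reported."""
    outcomes = sorted(set().union(*reported.values()))
    rows = [["Study"] + outcomes]
    for study in sorted(reported):
        rows.append([study] + ["X" if o in reported[study] else "" for o in outcomes])
    return rows


def outcomes_reported_by_all(reported: Dict[str, Set[str]]) -> Set[str]:
    """Outcomes common to every study; an empty set signals inconsistency."""
    return set.intersection(*reported.values())


for row in outcome_matrix(reported):
    print(" | ".join(f"{cell:15}" for cell in row))
print(outcomes_reported_by_all(reported))  # set()
```

In this toy example no single outcome is reported by all three trials, which is the kind of inconsistency that can support the case for a COS.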

The rest of this chapter is written from the premise that the development of a new COS is warranted. If a COS already exists, but the quality could be improved by additional work related to particular stakeholder groups, countries, or alternative consensus methods, then certain sections below will also be of relevance. The issue of quality assessment is discussed in ‘Quality assessment/critical appraisal’ below and in ‘Chapter 4’.

2.3.3 Avoiding unnecessary duplication of effort

The COMET database is a useful resource for researchers to see what work has been done in their area of interest and for research funders wishing to avoid unnecessary duplication of effort when supporting new COS activities, as illustrated by the following two examples.

Example 1

In September 2014 Valerie Page (Watford General Hospital, UK) contacted COMET via the website to register the development of a COS in delirium. We followed up the request for additional information so that we could register this in the database, and in the meantime we logged this on the private non-database list that we use to keep track of work that we know about prior to inclusion in the database. Whilst waiting for this information to be returned, in May 2015 we received a second request for registration of COS development in the same clinical area by Louise Rose from the University of Toronto, Canada. The researchers were unaware of each other’s work. We got in touch with both researchers and asked for permission to share details of their work, as well as to pass on contact details. In September 2015 we received confirmation that Louise Rose and Valerie Page, with the European Delirium Association and American Delirium Society, are now working collaboratively on this. Details of this collaborative effort to develop a COS for delirium can be found in the database [70].

Example 2

Benjamin Allin (University of Oxford, UK) started planning a study to develop a COS for infants with gastroschisis in early 2015. He checked the COMET database to see if a COS existed, but nothing was registered at that time. He contacted COMET in September 2015 to register his project. On receiving this request, the COMET project coordinator checked the COMET database to find out if there was any relevant work in this area and identified an ongoing study registered in the same area of gastroschisis. This latter work had been registered by Nigel Hall (University of Southampton and Southampton Children’s Hospital, UK) in June 2015. Again, the two groups were put in touch and met to discuss the proposed core sets, which resulted in a plan to collaborate on producing one COS rather than two. The existing gastroschisis COS entry in the database has been updated to reflect this collaborative effort [71].

2.4 Study protocol

There are potential sources of bias in the COS development process, and preparing a protocol in advance may help to reduce these biases, improve transparency and share methods with others. We recommend that a protocol be developed prior to the start of the study and made publicly available, either through a link on the COMET registration entry or a journal publication [72–74]. Just as the SPIRIT guidance was developed for clinical trial protocols, there is a need to agree on the content of COS study protocols.

2.5 Project registration

One of the aims of the COMET Initiative is to provide a means of identifying existing, ongoing and planned COS studies. COS developers should be encouraged to register their project in a free-to-access, unrestricted public repository, such as the COMET database, which is the only such repository we are aware of.

The following information about the scope and methods used is recorded in the database for existing and ongoing work:

Clinical areas for which the outcomes are being considered, identifying both primary disease and types of intervention

Target population (age and sex), and any other details about the population within the health area

Setting for intended use (e.g. research and/or practice)

Method of development to be used for the COS

People and organisations involved in identifying and selecting the outcomes, recording how the relative contributions will be used to define the COS

Details of any associated publications, including the protocol and the final report, can be added to the original registration entry in the COMET database.
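The information listed above can be thought of as a structured record. The sketch below is a hypothetical illustration only: the class and field names are invented here and are not the actual COMET database schema. It shows how a developer might check that every item is filled in before registering.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class CosRegistration:
    """Hypothetical registration record; field names are illustrative,
    not the actual COMET database schema."""

    clinical_area: str  # primary disease or condition
    intervention_types: List[str]  # types of intervention covered
    target_population: str  # age, sex and any other population details
    intended_setting: List[str]  # e.g. ["research", "practice"]
    development_method: str  # method to be used to develop the COS
    participants: List[str]  # people and organisations selecting outcomes
    contribution_rule: str  # how their relative contributions define the COS
    publications: List[str] = field(default_factory=list)  # protocol, final report

    def missing_fields(self) -> List[str]:
        """Return the names of required fields that are still empty."""
        required = [
            "clinical_area", "intervention_types", "target_population",
            "intended_setting", "development_method", "participants",
            "contribution_rule",
        ]
        return [name for name in required if not getattr(self, name)]


entry = CosRegistration(
    clinical_area="localised prostate cancer",
    intervention_types=["surgery"],
    target_population="adult men",
    intended_setting=["research"],
    development_method="Delphi survey followed by a consensus meeting",
    participants=["patients", "urologists", "trialists"],
    contribution_rule="",  # not yet decided
)
entry.publications.append("protocol (journal article)")
print(entry.missing_fields())  # ['contribution_rule']
```

Publications are kept as a separate, initially empty list because, as noted above, they are typically added to an existing entry after registration.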

2.6 Stakeholder involvement

It is important to consider which groups of people should be involved in deciding which outcomes are core to measure, and why. Bringing diverse stakeholders together to try to reach a consensus is seen as the future of collaborative, influential research.

Key stakeholders may include health service users, health care practitioners, trialists, regulators, industry representatives, policy-makers, researchers and the public. Decisions regarding the stakeholder groups to be involved, how they are to be identified and approached, and the number from each group will be dependent upon the particular scope of the COS as well as upon existing knowledge, the methods of COS development to be used, and practical feasibility considerations. For example, a COS for an intervention that aims to improve body image, e.g. breast reconstruction following mastectomy, is likely to have predominantly patients as the key stakeholders [56].

The stages of involvement during the process should also be considered for each stakeholder group. For example, it may be considered appropriate to involve methodologists in determining how to measure particular outcomes but not in determining what to measure. These decisions should be documented and explained in the study protocol.

Consideration should be given to the representativeness of the sample of stakeholders and the ability of people across the different groups to engage with the chosen consensus method (including online activities and face-to-face meetings).

Consideration should be given to potential conflicts of interest within the group developing the COS (for example, the developers of measurement instruments in the area of interest or those whose work is focussed on a specific outcome).

2.6.1 Patient and public involvement and participation

COMET recognises the expertise and crucial contribution of patients and carers in developing COS. COS need to include outcomes that are most relevant to patients and carers, and the best way to do this is to include them in COS development. Examples exist where patients have identified an outcome important to them as a group that might not have been considered if the COS had been developed by practitioners on their own [75, 76]. However, it is worth noting that examples also exist where health professionals have identified areas that patients were reluctant to talk about in focus groups; for example, sexual health [77].

2.6.1.1 Patient and public participation

We refer to patients taking part in the COS study as ‘research participants’ and the activity as research ‘participation’. People involved in a COS study as research participants give their views on the importance of outcomes and may also subsequently be asked their opinion on how those outcomes are to be measured.

Of the 227 COS that had been published up to the end of December 2014, 44 (19%) studies reported including patient participants in the COS development process. However, of these 44 COS, only 26 (59%) studies provided details of how patients had participated in the development process. The most commonly used methods to include patient participants were the Delphi technique and semistructured group discussion, which were used in 38% and 35% of studies, respectively. Three of the 26 (12%) COS studies were developed with only patients as participants. Of the remaining 23 studies, patients participated alongside clinicians throughout the development process in 19 (83%) studies, compared with two (9%) studies where patients and clinicians participated separately throughout the whole development process. In the two remaining studies, patients and clinicians participated separately in the initial stages, but then alongside each other during the final stages of the development process. For the 21 studies where patients and clinicians participated alongside each other for all or part of the COS development process, the percentage of patient participants included ranged from 4 to 50%.
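Because each percentage in this paragraph uses a different denominator, a short calculation makes the chain explicit. The counts are those reported above; the only assumption is rounding to the nearest whole percentage.

```python
from typing import List, Tuple

# (description, numerator, denominator) as reported in the paragraph above.
counts: List[Tuple[str, int, int]] = [
    ("COS including patient participants", 44, 227),
    ("...of which described how patients participated", 26, 44),
    ("...of which had patients as the only participants", 3, 26),
    ("mixed studies: alongside clinicians throughout", 19, 23),
    ("mixed studies: separately throughout", 2, 23),
]


def rounded_pct(numerator: int, denominator: int) -> int:
    """Percentage rounded to the nearest whole number."""
    return round(100 * numerator / denominator)


# The denominators chain together: 26 - 3 = 23 mixed studies,
# split 19 + 2 + 2 (alongside, separate, separate then alongside),
# and 19 + 2 = 21 studies with patients alongside clinicians at some stage.
assert 26 - 3 == 23 and 19 + 2 + 2 == 23 and 19 + 2 == 21

for label, num, den in counts:
    print(f"{label}: {num}/{den} = {rounded_pct(num, den)}%")
```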

Of ongoing COS studies (n = 127 as of 12 April 2016), 88% now include patients as participants. The question now is not whether patients should participate, but rather the nature of that participation. It is recommended that, as a minimum, both health professionals and patients be included in the decision-making process concerning what to measure, unless there is good reason to do otherwise. ‘Qualitative methods in core outcome set development’ below discusses considerations to enhance patient participation in a COS.

2.6.1.2 Patient and public involvement

When planning a COS study that involves patients as research participants, it is important to also involve patients in designing the study. We refer to patients who are involved in designing and overseeing a COS study as ‘public research partners’ and to this activity as ‘patient and public involvement’ (PPI). PPI has been defined as research ‘being carried out “with” or “by” members of the public rather than “to”, “about” or “for” them’ [78].

Involving public research partners in both the design and oversight of the COS development study may have the potential to:

Provide advice on the best ways of identifying and accessing particular patient populations

Inform discussions about ethical aspects of the study

Facilitate the design of more appropriate study information

Support the development of more relevant materials to promote the study

Enable ongoing troubleshooting opportunities for patient participation issues during the study, e.g. recruitment and retention issues of study participants

Inform the development of a dissemination strategy of COS study results for patient participants and the wider patient population

Ensure that the COS is relevant to patients and, crucially, that patients see it as relevant and can trust that the development process has genuinely taken account of the patient perspective

Involving public research partners in designing and overseeing the COS study requires that researchers plan for this involvement. They might choose different methods of doing this; for example, they might hold one or two discussion groups in the planning stage and then have one or two public contributors involved on an ongoing basis in the Study Advisory Group (SAG). Morris et al. (2015), for instance, engaged parents at various stages of the research process and consulted parents from their ‘Family Faculty’ in designing a plain language summary of the results of their COS [79]. Numerous resources now exist to help researchers plan and budget for PPI in research; in the UK, for example, INVOLVE provides many such resources [80].

COMET has also produced a checklist for COS developers to consider with public research partners when planning their COS study. This can be found on the COMET website.

2.7 Determining ‘what’ to measure – the outcomes in a core outcome set

2.7.1 Identifying existing knowledge about outcomes

It is recommended that potentially relevant outcomes are identified from existing work to inform the consensus process. The following data sources should be considered: systematic reviews of published studies, reviews of published qualitative work, investigation of items collected in national audit data sets, and interviews or focus groups with key stakeholders to understand their views on outcomes of importance. Depending on the resources available, protocols within clinical trial registries may also be a useful source of information.

2.7.1.1 Systematic review of outcomes in published studies

Systematic reviews are advantageous because they can efficiently identify an inclusive list of outcomes being reported by researchers in a given area. Nevertheless, it is important to note that systematic reviews of outcomes only aggregate the opinions of previous researchers about which outcomes they deemed important to measure; hence the need for subsequent consensus development to agree with the wider community of stakeholders which outcomes should be included in a COS.

The scope of the systematic review should be carefully considered in the context of the COS to ensure that outcomes are included from all relevant studies without unnecessary data collection. The clinical area should be clearly defined and appropriate databases accessed accordingly. Commonly used databases include Medline, CINAHL, Embase, the Cochrane Database of Systematic Reviews and PsycINFO. In the systematic reviews of COS [14, 39], 57 (25%) studies carried out a review of outcomes [65]. The number of databases searched was not reported for 17 studies (30%), and two studies did not perform an electronic database search. Thirty-eight studies described which databases they searched (Table 1).

Table 1.

Description of databases searched (n = 38)

| Number of databases searched | Studies (n) | Databases searched |
|---|---|---|
| 1 | 18 | Medline (n = 9) |
| | | PubMed (n = 7) |
| | | Central Register of Controlled Trials (n = 2) |
| 2 | 8 | Medline and Embase (n = 4) |
| | | PubMed and Central Register of Controlled Trials (n = 1) |
| | | Medline and CancerLit (n = 1) |
| | | Medline and Central Register of Controlled Trials (n = 1) |
| | | Medline and PubMed (n = 1) |
| 3 | 4 | Medline, CINAHL and Embase (n = 2) |
| | | Medline, Embase and Cochrane Central Register of Controlled Trials (n = 1) |
| | | PubMed, CINAHL and PsycINFO (n = 1) |
| 4 | 1 | PubMed, Medline, Embase and the Cochrane Collaboration (n = 1) |
| 5 | 3 | Medline, PreMedline, CancerLit, PubMed (National Library of Medicine) and Cochrane Library (n = 1) |
| | | Medline, Embase, PsycINFO, Cochrane Library and CINAHL (n = 1) |
| | | Cochrane Wounds Group Specialised Register, The Cochrane Central Register of Controlled Trials (CENTRAL) (The Cochrane Library), Medline, Embase and CINAHL (n = 1) |
| 6 | 0 | |
| 7 | 2 | Cochrane Oral Health Group’s Trials Register (CENTRAL), Medline, Embase, Science Citation Index Expanded, Social Science Citation Index, Index to Scientific and Technical Proceedings, System for Information on Grey Literature in Europe (n = 1) |
| | | Cochrane Skin Group Specialised Register, the Cochrane Central Register of Controlled Trials in The Cochrane Library (Issue 4, 2009), Medline, Embase, AMED, PsycINFO, LILACS (n = 1) |
| 8 | 2 | CINAHL (Cumulative Index to Nursing and Allied Health Literature), Embase, Medline, National Criminal Justice Reference Service (NCJRS), PsycINFO, Sociological Abstracts, The Cochrane Database, The Patient-reported Health Instruments (PHI) website (n = 1) |
| | | Medline, PubMed, Embase, PsycINFO, CINAHL, Web of Science, Cochrane Central Register of Controlled Trials, and Cochrane Database of Systematic Reviews (n = 1) |

There is no recommended time window for systematic reviews of outcomes. Some COS studies may examine all the available academic literature, which may be an enormous task in common disease areas. Scoping searches are useful to estimate the number of studies likely to be identified in a specific area. Overly large reviews are resource intensive and may not yield important additional outcomes. One strategy is to perform the systematic review in stages to check whether outcome saturation is reached. For example, a review of trials published over the last 5 years may be conducted initially and the outcomes extracted. The search may then be extended, and the additional outcomes checked against the original list. If there are no further outcomes of importance, the systematic review may be considered complete. For most areas a recent search (e.g. covering the past 24 months) is recommended as a minimum to capture up-to-date developments and outcomes relevant to that COS. Seventeen (30%) of the studies in the systematic reviews of COS did not state the date range searched, and seven (12%) did not apply any date restrictions to their search. The number of years searched in the remaining 33 studies ranged from 2 to 59. Frequencies are provided in Table 2.
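The staged strategy can be sketched as a loop over progressively wider search windows that stops once a window adds nothing new. This is a minimal sketch under stated assumptions: `search_window` and the outcome lists are hypothetical stand-ins for a real search-and-extraction step, and the code treats any new outcome name as being of importance, whereas in practice that judgement is made by the review team.

```python
from typing import Callable, List, Set, Tuple


def staged_review(search_window: Callable[[int], Set[str]],
                  windows_years: List[int]) -> Tuple[Set[str], int]:
    """Widen the search window until no new outcomes appear.

    search_window(years) is a hypothetical extraction step returning the
    outcome names found in trials published in the last `years` years.
    Returns the accumulated outcome list and the window at which it stopped.
    """
    outcomes: Set[str] = set()
    for years in windows_years:
        found = search_window(years)
        new = found - outcomes
        if outcomes and not new:
            # Saturation: the extended search added nothing to the list.
            return outcomes, years
        outcomes |= found
    # Saturation not demonstrated within the windows tried.
    return outcomes, windows_years[-1]


# Illustrative extractions: wider windows include everything from narrower ones.
hypothetical = {
    5: {"pain", "mortality"},
    10: {"pain", "mortality", "wound infection"},
    15: {"pain", "mortality", "wound infection"},
}
final, stopped_at = staged_review(lambda y: hypothetical[y], [5, 10, 15])
print(sorted(final), stopped_at)
```

Here the 10-year window adds one outcome and the 15-year window adds none, so the sketch stops at 15 years. If the last window still yields new outcomes, the function returns without having demonstrated saturation, which would argue for extending the search further.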

Table 2.

Number of years searched (n = 33)

| Number of years searched | Number of studies |
|---|---|
| Fewer than 5 | 1 |
| 5 to 9 | 3 |
| 10 to 14 | 12 |
| 15 to 19 | 2 |
| 20 to 24 | 6 |
| 25 to 29 | 1 |
| 30 to 34 | 1 |
| 35 to 39 | 4 |
| 40 or more | 3 |

Data extraction should be considered in terms of:

Study characteristics

Outcomes

Outcome measurement instruments and/or definitions provided by the authors for each outcome

In terms of outcome extraction from the academic literature, it is recommended that all outcomes are extracted verbatim from the source manuscript [81]. This transparency is important to allow external critical review of the COS right back to its inception. In addition, it is recommended that the outcome definitions supplied by the authors, and the measurement instruments they used, are extracted, as this will inform the selection of the outcome measurement set at a later stage. This is necessary because outcome definitions may vary widely between investigators and it is often unclear what an outcome is measuring [67, 82–84].

2.7.1.2 How to extract outcomes from the academic literature to inform the questionnaire survey

Some outcomes are likely to be the same but to have been defined or measured in different ways in different publications. For example, in a review of outcomes for colorectal cancer surgery, some 17 different definitions were identified for ‘anastomotic leakage’ [85]. The first step is to group these different definitions together under the same outcome name, extracting the wording of each description verbatim. Similarly, a review of outcomes for weight loss surgery found that different terminology is used for weight loss itself in the academic literature [84]; the 41 different outcome assessments referring to weight were all categorised into one item for a subsequent Delphi questionnaire survey.
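Keeping each verbatim definition attached to the outcome name it is grouped under preserves the audit trail described above. A minimal sketch, assuming the reviewers have already decided which outcome name each extract belongs to; the study labels and wording below are invented for illustration.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# (source, verbatim text, outcome name assigned by reviewers): hypothetical data.
extracted: List[Tuple[str, str, str]] = [
    ("Study A", "Anastomotic leak confirmed radiologically", "anastomotic leakage"),
    ("Study B", "Clinical leak requiring reoperation", "anastomotic leakage"),
    ("Study C", "Percentage excess weight loss at 12 months", "weight loss"),
    ("Study D", "Change in BMI from baseline", "weight loss"),
]


def group_by_outcome(rows: List[Tuple[str, str, str]]) -> Dict[str, List[Tuple[str, str]]]:
    """Map each outcome name to the verbatim (source, definition) pairs behind it."""
    groups: Dict[str, List[Tuple[str, str]]] = defaultdict(list)
    for source, verbatim, outcome in rows:
        groups[outcome].append((source, verbatim))
    return dict(groups)


grouped = group_by_outcome(extracted)
for outcome, items in grouped.items():
    print(f"{outcome}: {len(items)} verbatim definitions")
```

Storing the verbatim text rather than overwriting it with the outcome name means any later reader can trace a Delphi item back to the exact wording in each source paper.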

The next step is to group these outcomes into outcome domains, constructs which can be used to classify broad aspects of the effects of interventions, e.g. functional status. Outcomes from multiple domains may be important to measure in trials, and several outcomes within a domain may be relevant or important. Researchers first create a set of outcome domains into which the outcomes can be grouped (see ‘Ontologies for grouping individual outcomes into outcome domains’ below). These domains should be discussed and agreed by the team before the list is categorised. Each outcome is then mapped independently to a domain, which provides transparency. For example, in a systematic review of studies evaluating the management of otitis media with effusion in children with cleft palate, a total of 43 outcomes were listed under 13 domain headings (see Table 18 in [81]).
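Mapping outcome names onto a domain list that has been agreed in advance can be sketched as follows. The domains and mappings are hypothetical; the point illustrated is that any outcome assigned to a domain outside the agreed list is flagged for team discussion rather than silently creating a new domain.

```python
from typing import Dict, List, Set, Tuple

# Domains agreed by the team before categorisation begins (hypothetical).
agreed_domains: Set[str] = {"physiological/clinical", "functional status", "adverse events"}

# One researcher's outcome-name-to-domain mapping (hypothetical).
outcome_to_domain: Dict[str, str] = {
    "anastomotic leakage": "adverse events",
    "weight loss": "physiological/clinical",
    "return to work": "functional status",
    "sleep quality": "sleep",  # not an agreed domain, so flagged below
}


def domain_summary(mapping: Dict[str, str],
                   domains: Set[str]) -> Tuple[Dict[str, List[str]], List[str]]:
    """Group outcome names by agreed domain and list any that fall outside them."""
    by_domain: Dict[str, List[str]] = {d: [] for d in sorted(domains)}
    unmatched: List[str] = []
    for outcome, domain in mapping.items():
        if domain in by_domain:
            by_domain[domain].append(outcome)
        else:
            unmatched.append(outcome)
    return by_domain, unmatched


by_domain, unmatched = domain_summary(outcome_to_domain, agreed_domains)
for domain, outcomes in by_domain.items():
    print(f"{domain}: {outcomes}")
print("needs discussion:", unmatched)
```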

It is recommended that the categorisation of each verbatim outcome definition to an outcome name, and of each outcome name to an outcome domain, is performed independently by two researchers from different professional backgrounds. These may include expert health service researchers, clinicians (e.g. surgeons, dieticians, nurses, health psychologists) and methodologists. Where two researchers work on this process, a senior researcher will need to resolve differences and make final decisions.
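The independent double categorisation with senior adjudication can be sketched as below. The categorisations are hypothetical, and the simple percentage agreement shown is an illustrative addition, not a statistic the Handbook prescribes; it is not chance-corrected.

```python
from typing import Dict, List

# Two researchers' independent outcome-to-domain decisions (hypothetical).
researcher_a: Dict[str, str] = {
    "weight loss": "physiological/clinical",
    "return to work": "functional status",
    "wound pain": "pain",
}
researcher_b: Dict[str, str] = {
    "weight loss": "physiological/clinical",
    "return to work": "social",
    "wound pain": "pain",
}


def disagreements(a: Dict[str, str], b: Dict[str, str]) -> List[str]:
    """Items the two researchers categorised differently."""
    return sorted(item for item in a if a[item] != b.get(item))


def percent_agreement(a: Dict[str, str], b: Dict[str, str]) -> float:
    """Simple proportion of items given the same category (not chance-corrected)."""
    return sum(a[item] == b.get(item) for item in a) / len(a)


def reconcile(a: Dict[str, str], b: Dict[str, str],
              senior: Dict[str, str]) -> Dict[str, str]:
    """Accept agreements; take the senior researcher's decision otherwise."""
    if a.keys() != b.keys():
        raise ValueError("both researchers must categorise the same items")
    final: Dict[str, str] = {}
    for item in a:
        if a[item] == b[item]:
            final[item] = a[item]
        elif item in senior:
            final[item] = senior[item]
        else:
            raise KeyError(f"unresolved disagreement: {item!r}")
    return final


print(disagreements(researcher_a, researcher_b))
print(round(percent_agreement(researcher_a, researcher_b), 2))
final = reconcile(researcher_a, researcher_b, {"return to work": "functional status"})
```

Raising an error for an unresolved disagreement, rather than defaulting to either researcher, mirrors the requirement that a senior researcher makes the final decision on every difference.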

2.7.1.3 Systematic review of studies to identify outcomes of importance to health service users

Similarly, it is necessary to systematically review the academic literature to identify Patient-reported Outcome Measures (PROMs) and then extract patient-reported outcome domains from them, often at the level of the individual questionnaire item [86]. This granular approach is recommended because the scale names used in PROMs and the scores attributed to the combined items are often inconsistent [86]. The full process for this is described in Fig. 1 of the paper by Macefield et al. At this stage it is worth extracting details of each PROM’s development and validity, which will be helpful when selecting measures with which to assess the core outcomes.

A PRO long list extracted from PROMs may be supplemented with additional domains derived from a review of qualitative research studies if time allows (e.g. [87, 88]). It is recommended that interpretation of data from qualitative papers is guided by experts in the field.

2.7.2 Identifying and filling the gaps in existing knowledge

It is important to identify which key stakeholder groups’ views are not encompassed by systematic reviews of outcomes in published studies or the existing academic literature more generally, and decide whether these are gaps that need to be filled. An initial list from published clinical studies may be supplemented by undertaking qualitative research with key stakeholders whose views are important yet unlikely to be represented within systematic reviews of outcomes in previous studies. Where resources are limited, consultation with an advisory group whose membership reflects the key stakeholders may be used as an alternative to qualitative research, but it should be noted that such consultation is not qualitative research and the information arising from it does not have the same standing as the knowledge generated by research.

Qualitative interviews or focus groups with key stakeholders, especially patients, are recommended, particularly if the PROMs have lacked detailed patient participation in their development. The following section outlines in more detail how qualitative work may contribute to COS development; it is recommended that such research is guided by researchers with expertise in qualitative methods. Interviews should be performed with a purposeful sample and use a semistructured interview schedule to elicit outcomes of importance to that population. The interview schedule may be informed by the domain list generated from the academic literature or may follow a grounded theory approach, starting with very open questions. Interviews are audio-recorded, transcribed and analysed for content. The information can then be used to create new outcome domains or supplement the long list [89, 90].

2.7.3 Ontologies for grouping individual outcomes into outcome domains

Outcome domain models or frameworks aim to provide structure to the conceptualisation of domains [91] and have been used to classify outcomes measured in clinical trials in particular conditions. Despite their intended use as frameworks, the different models are not always consistent with one another. In a review of Health-related Quality of Life (HRQoL) models, Bakas et al. found wide variations in terminology for analogous HRQoL concepts [91]. Outcome hierarchies have been proposed for specific conditions [92] and for cancer [93].

Several conceptual frameworks have been proposed to date to classify health, disease and outcomes. They cover somewhat different areas of outcomes, and some of them are described below.

2.7.3.1 Outcome-related frameworks

World Health Organisation (WHO)

The WHO definition of health, although strictly a definition of health, can be considered a framework as it includes three broad health domains [94]: physical, mental and social wellbeing. This definition has not been amended since 1948 but is a useful starting place to study health. In a scoping review of conceptual frameworks, Idzerda et al. point out that although the three domains are clearly outlined, no further information about what should be included within each domain is provided [95].

Patient-reported Outcomes Measurement Information System (PROMIS)

The PROMIS domain framework builds on the WHO definition of health to provide subordinate domains beneath the broad headings stated above [41]: physical (symptoms and functions), mental (affect, behaviour and cognition) and social wellbeing (relationships and function). It was developed for adult and paediatric measures as a way of organising outcome measurement tools.

World Health Organisation International Classification of Functioning Disability and Health (WHO ICF)

The International Classification of Functioning, Disability and Health (ICF) offers a framework to describe functioning, disability and health in a range of conditions. The ICF focuses on the assessment of an individual’s functioning in day-to-day life. It covers body functions, activities, and participation in basic areas and roles of social life, providing domains for the biological, psychological, social and environmental aspects of functioning [96]. In many clinical areas, ICF core sets have been developed; these identify the most relevant ICF domains for a particular health condition.

5Ds

5Ds is presented as a systematic structure for representing patient outcomes and includes five ‘dimensions’: death, discomfort, disability, drug or therapeutic toxicity, and dollar cost [97]. This representation of patient outcome was developed specifically for rheumatic diseases, and the authors claim that each dimension represents a patient outcome directly related to patient welfare; for example, they describe how a patient with arthritis may want to be alive, free of pain, functioning normally, experiencing minimal side effects and financially solvent. The framework assumes that outcomes are multidimensional and holds that it is critical for the ‘concept of outcome’ to be oriented to patient values.

Wilson and Cleary

Wilson and Cleary [98] propose a taxonomy or classification for different measures of health outcome. They suggest that one problem with other models is the lack of specification about how outcomes interrelate. They divide outcomes into five levels: biological and physiological factors, symptoms, functioning, general health perceptions, and overall quality of life. In addition to classifying these outcome measures, they propose specific causal relationships between them that link traditional clinical outcomes to measures of health-related quality of life. For example, ‘Characteristics of the environment’ are related to ‘Social and psychological supports’ which in turn relates to ‘Overall quality of life’. Ferrans et al. [99] revised the Wilson and Cleary model to further clarify and develop individual and environmental factors.

Outcome Measures in Rheumatology (OMERACT) Filter 2.0

The OMERACT Filter 2.0 [31] is a conceptual framework that encompasses ‘the complete content of what is measurable in a trial’, that is, a conceptual framework for the measurement of health conditions in the setting of interventions. It comprises three core areas (death, life impact and pathophysiological manifestations) and one strongly recommended area (resource use). These core areas are then further categorised into core domains. The authors liken the areas to ‘large containers’ for the concepts of interest (domains and subdomains). They recommend that the ICF domains are also considered under life impact (ICF domains: activity and participation) and pathophysiological manifestations (ICF domains: body function and structure). Although OMERACT recommends the inclusion in a COS of at least one outcome reflecting each core area, empirical evidence is emerging that this is not always considered appropriate [48].
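OMERACT’s recommendation that a COS include at least one outcome for each core area can be expressed as a simple coverage check. The area labels follow the text; the candidate COS and the assignment of its outcomes to areas are hypothetical. Given that this recommendation is not always considered appropriate, a failed check is best treated as a prompt for discussion rather than a rule.

```python
from typing import Dict, List

# Area labels as described for OMERACT Filter 2.0 in the text.
CORE_AREAS = ("death", "life impact", "pathophysiological manifestations")
STRONGLY_RECOMMENDED = ("resource use",)


def uncovered_areas(cos: Dict[str, str]) -> Dict[str, List[str]]:
    """Return core and strongly recommended areas with no outcome in the COS.

    `cos` maps each outcome in a candidate COS to the area it reflects
    (a hypothetical assignment made by the developers).
    """
    covered = set(cos.values())
    return {
        "core": [a for a in CORE_AREAS if a not in covered],
        "strongly recommended": [a for a in STRONGLY_RECOMMENDED if a not in covered],
    }


candidate = {
    "mortality": "death",
    "physical function": "life impact",
    "pain": "life impact",
    "joint damage on imaging": "pathophysiological manifestations",
}
print(uncovered_areas(candidate))
```

For this invented candidate set all three core areas are covered, and only the strongly recommended resource use area is missing.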

Outcome Measures Framework (OMF)

The Outcome Measures Framework (OMF) project was funded by the Agency for Healthcare Research and Quality (a branch of the U.S. Department of Health and Human Services) to create a conceptual framework for development of standard outcome measures used in patient registries [100]. The OMF has three top-level broad domains: characteristics, treatments and outcomes. There are six subcategories within the outcomes domain: survival, disease response, events of interest, patient/caregiver-reported outcomes, clinician-reported outcomes and health system utilisation. The model was designed so that it can be used to define outcome measures in a standard way across medical conditions. Gliklich et al. conclude that ‘as the availability of health care data grows, opportunities to measure outcomes and to use these data to support clinical research and drive process improvement will increase’.

Survey of Cochrane reviews

Rather than attempting to define outcome domains as others have done, Smith et al. [101] reviewed the outcomes reported in Cochrane reviews to see whether similar outcomes occurred across different disease categories, in an attempt to manage and organise outcome data. Fifteen categories of outcomes emerged as being prominent across Cochrane Review Groups, encompassing person-level outcomes, resource-based outcomes, and research/study-related outcomes. The 15 categories are: adverse events or effects (AE), mortality/survival, infection, pain, other physiological or clinical, psychosocial, quality of life, activities of daily living (ADL), medication, economic, hospital, operative, compliance (with treatment), withdrawal (from treatment or study) and satisfaction (patient, clinician, or other health care provider). The authors recognise that these 15 categories could be collapsed further.

2.7.3.2 Use of outcome-related frameworks in core outcome set studies

In the systematic reviews of COS [14, 39], 17 studies provided some detail about how outcomes were grouped or classified (Table 3).

Table 3. Methods for classifying/grouping outcomes (n = 17)

| Reference | Method for classifying/grouping outcomes |
|---|---|
| Duncan (2000) [225] | Each outcome measure was classified into one of the following categories: death; pathophysiological parameters (blood pressure, laboratory values and recanalisation); impairment; activity; or participation. Measures were classified according to the system used by Roberts and Counsell [226], which classes the Rankin/modified Rankin scale as a measure of activity rather than participation |
| Sinha (2012) [60] | Each outcome was grouped into one of the following six outcome domains, some of which were further divided into subdomains: disease activity, physical consequence of disease, functional status, social outcomes and quality of life, side effects of therapy and health resource utilisation. Where it was unclear which domain was appropriate, this was resolved by discussion between the authors. Reference given in support of this approach: Sinha et al. 2008 [227] |
| Broder (2000) [129] | List developed by staff at institution (but no further detail) |
| Distler (2008) [228] | The results of this literature search were discussed at the first meeting of the Steering Committee. Based on this discussion, a list of 17 domains and 86 tools was set up for the first stage of the Delphi exercise to define outcome measures for a clinical trial in PAH-SSc. Domains were defined as a grouping of highly related features that describe an organ, disease, function, or physiology (e.g. cardiac function, pulmonary function, and quality of life) |
| Devane (2007) [173] | Outcome measures addressing similar dimensions or events were discussed by the team and collapsed where possible. For example, various modes of delivery/birth were presented as ‘mode of birth (e.g. spontaneous vaginal, forceps, vaginal breech, caesarean section, vacuum extraction)’. This pilot tool was tested for clarity with a sample of 12 participants, including 3 maternity care consumers, and subsequently refined |
| Smaïl-Faugeron (2013) [111] | Because we expected a large diversity in reported outcomes, we grouped similar outcomes into overarching outcome categories by a small-group consensus process. The group of experts consisted of 6 dental surgeons specialising in paediatric dentistry, including 3 clinical research investigators. First, the group identified outcomes that were identical despite different terms used across trials. Second, different but close outcomes (i.e. outcomes that could be compared across studies or combined in a meta-analysis) were grouped together into outcome domains. Finally, the group, with consensus, determined several outcome categories and produced a reduced-outcome inventory |
| Merkies (2006) [229] | In advance of the workshop, a list of outcome measures applied in treatment trials was prepared, including their scientific soundness, WHO and quality of life classification (the WHO classification referred to is the ICF) |
| Rahn (2011) [230] | From this outcome inventory, the outcomes were organised and grouped into eight proposed overarching outcome domains: 1. Bleeding; 2. Quality of life; 3. Pain; 4. Sexual health; 5. Patient satisfaction; 6. Bulk-related complaints; 7. Need for subsequent surgical treatment and 8. Adverse events. Categories were determined based on their applicability to all potential interventions for abnormal uterine bleeding and the physician expert group’s consensus of their relevance for informing patient choices. Outcomes related to cost, resource use, or those determined by the review group to have limited relevance for assessing clinical effectiveness were excluded from categorisation and further analyses |
| Chow (2002) [231] | Some detail, but process not described: the endpoints employed in previous bone metastases trials of fractionation schedules were identified and listed in the first consensus survey under the following headings: 1. Pain assessments; 2. Analgesic assessments and primary endpoint; 3. Endpoint definitions; 4. Timing, frequency and duration of follow-up assessment; 5. When to determine a response; 6. Progression and duration of response; 7. Radiotherapy techniques; 8. Co-interventions following radiotherapy; 9. Re-irradiation; 10. Non-evaluable patients (lost to follow-up) and statistics; 11. Other endpoints; 12. Other new issues and suggestions and 13. Patient selection issues |
| Van Der Heijde (1997) [232] | Grouped into patient-assessed, physician-assessed or physician-ordered measures |
| Chiu (2014) [233] | A wide variety of different outcome measures were reported [in the studies included in the systematic review]. We classified these into 4 categories: postoperative alignment, sensory status, control measures and long-term change |
| Fong (2014) [139] | Twenty-one maternal and 24 neonatal outcomes were identified by our systematic reviews, one randomised controlled trial and two surveys. The maternal components included complications associated with pre-eclampsia and were broadly classified under neurological, respiratory, haematological, cardiovascular, gastrointestinal, renal and other categories. The neonatal components were prematurity-associated complications involving respiratory, neurological, gastrointestinal and cardiovascular systems, and management-based outcomes such as admission to the neonatal unit, inotropic support and use of assisted ventilation |
| Fraser (2013) [234] | Based on an overall evaluation of intra-arterial head and neck chemotherapy, there are several outcome variables that should be monitored and reported when designing future trials. These outcome variables can be categorised into ‘Procedure-related’, ‘Disease control’ and ‘Survival’ |
| Goldhahn (2014) [235] | The group reached an agreement to use the ICF, thereby identifying key domains within this framework using the nominal group technique. Recently, the WHO has established core sets for hand conditions [236]. A Comprehensive Core Set of 117 ICF categories was selected as appropriate for conducting a comprehensive, multidisciplinary assessment. A Brief Core Set of 23 ICF categories was selected and considered more appropriate for individual health care professionals. The body functions contained in these core sets include emotional functions, touch function, sensory functions related to temperature and other stimuli, sensation of pain, mobility of joint functions, stability of joint functions, muscle power functions, control of voluntary movement functions, and protective functions of the skin. The group agreed that the ICF categories would be consistent with clinicians’ current practice patterns of focussing on pain, joint range of motion and hand strength [237] |
| Saketkoo (2014) [238] | The Delphi process began with an ‘item-collection’ stage called tier 0, wherein participants nominated an unrestricted number of potential domains (qualities to measure) perceived as relevant for inclusion in a hypothetical 1-year RCT. This exercise produced a list of >6700 items, reduced only for redundancy, organised into 23 domains and 616 instruments, and supplemented by expert advisory teams of pathologists and radiologists |
| Smelt (2014) [159] | We then grouped the answers [from round 1 of the Delphi questionnaires] according to the presence of strong similarity. During this process, we followed an inductive method, i.e. answers were examined and those considered to be more or less the same were grouped as one item. No fixed number of items was set beforehand in order to accommodate all new opinions. The answers were grouped by two of the authors (AS and VdG) separately, to ensure independence of assessments. Any discrepancies were resolved through a discussion with two other authors (ML and DK), who also checked whether they agreed with the items as formulated by AS and VdG |
| Wylde (2014) [137] | The pain features that were retained after the three rounds of the Delphi study were reviewed and systematically categorised into core outcome domains by members of the research team [86]. The IMMPACT recommendations [239] were used as a broad framework for this process. Each individual feature was reviewed to determine whether it was appropriate to group it into an IMMPACT-recommended pain outcome (pain intensity, the use of rescue treatments, pain quality, temporal components of pain) or a new pain outcome domain. The developed outcome domains were then reviewed to ensure that the features they encompassed adequately reflected the domain and that the features were conceptually similar. These core outcome domains were subsequently discussed and refined by the Project Steering Committee and the PEP-R group |

ICF International Classification of Functioning, Disability and Health, PAH-SSc pulmonary arterial hypertension associated with systemic sclerosis, PEP-R Patient Experience Partnership in Research (a patient and public involvement group specialising in musculoskeletal research), WHO World Health Organisation

2.7.3.3 Use of outcomes recommended in core outcome sets to inform an outcome taxonomy

Based on the classification of outcomes in two previous cohorts of Cochrane systematic reviews [101, 102] and the outcomes recommended in 198 COS [14], the following taxonomy has been proposed:

- Mortality
  - Includes subsets: all-cause, cause-specific, quality of death, etc.
- Physiological (or Pathophysiological)
  - Disease activity (e.g. cancer recurrence, asthma exacerbation; includes ‘physical consequence of disease’, etc.)
  - Blood pressure, laboratory values, recanalisation
- Infection
  - New, recurrent
- Pain
- Quality of life
  - Includes Health-related Quality of Life (HRQoL)
- Mental health
- Psychosocial (includes behavioural)
- Function (or Functional status)
  - Does this cover activities? Participation? (See the Roberts and Counsell paper [226] referenced in the review of stroke outcomes)
- Compliance with/withdrawal from treatment
- Satisfaction
  - Reported by patient, health professional, etc.
- Resource use (or health resource utilisation)
  - Includes subsets: hospital, community, additional treatment, etc.
- Adverse events (or side effects)
  - Be clear that this could include outcomes such as death or pain when they are unanticipated harmful effects of an intervention

Pilot work is underway with selected Cochrane Review Groups to test the taxonomy for applicability. To date, one additional outcome domain, knowledge, has been identified as missing from the list.

2.7.4 Determining inclusion and wording of items to be considered in the initial round of the consensus exercise

It is important to spend time on the structure, content and wording of the list of items, to avoid ambiguous language and an imbalance in the granularity with which items are selected and described. Participants in the consensus process may identify such issues, necessitating revisions to the list during subsequent rounds [48]. A SAG (see ‘Achieve global consensus’ below) can provide valuable input at the design stage, prior to the start of the formal consensus process.

Reviewing existing knowledge, and conducting research to fill gaps in that knowledge, can result in a long list of items. COS developers need to consider whether to retain the full list in the consensus exercise or to reduce its size using explicit criteria. Preparatory work on how best to explain the importance of scoring all items on the list may help to improve levels of participation.

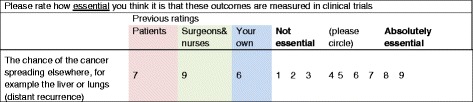

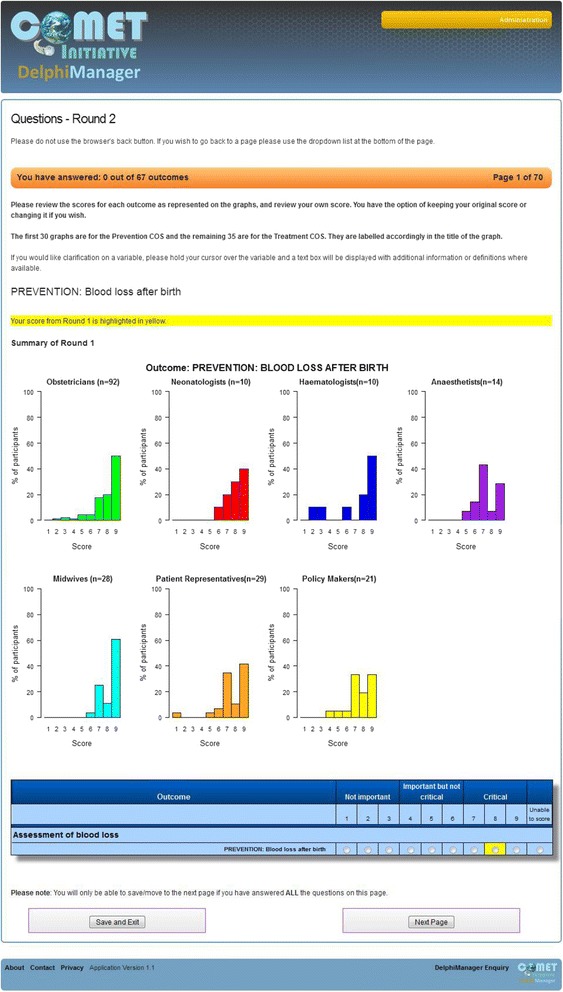

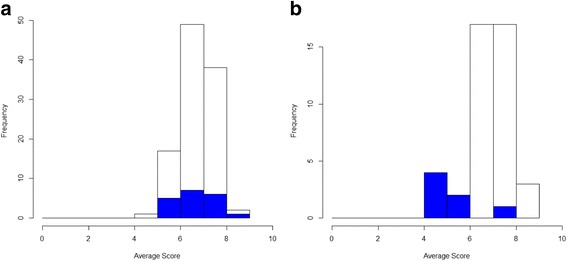

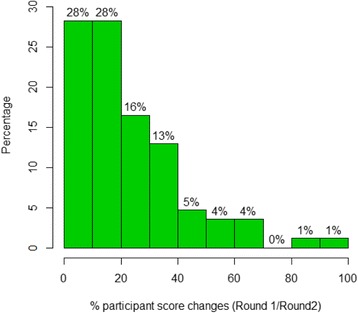

As noted in the section on qualitative research in COS development, qualitative research involves patients and other stakeholders describing their views and experiences in their own terms. It therefore gives COS developers access to the words, phrases and language that patients use to describe how conditions or interventions affect them. COS developers can incorporate the words that patients use in interviews and focus groups to label and explain outcome items in a Delphi, thereby ensuring that the items are understandable and accessible to patients. Pilot or pretesting work involving cognitive or ‘think aloud’ interviews, which examine how patients and other stakeholders interpret the draft items, can help to refine the outcome labels and explanations [103, 104]. As the name suggests, this technique involves asking participants to think aloud as they work through the draft Delphi, providing a running commentary on what they are thinking as they read the items and consider their responses. This allows COS developers to understand the items from the perspective of participants. Cognitive interviews are widely used in questionnaire development to refine instruments and ensure they are understandable to the target groups.