Abstract

Behavior chains are composed of sequences of behaviors that minimally include procurement and then consumption. This review surveys recent research from this laboratory that has examined the properties of discriminated heterogeneous behavior chains. Unlike an earlier review (Thrailkill & Bouton, 2016a), it also discusses work examining what makes chained behavior persistent and what makes it relapse. Results suggest that the responses in a discriminated heterogeneous behavior chain may become associated, so that extinction of either one reduces the strength of the other. Evidence also suggests that the goal of the first (procurement) response may be the next (consumption) response, rather than the upcoming discriminative stimulus (a putative conditioned reinforcer) or the primary reinforcer at the end of the chain. Further studies suggest that methods that promote generalization across acquisition and extinction (partial reinforcement and delivery of noncontingent reinforcers during extinction) lead to greater persistence of the procurement response. A third set of studies analyzed the contextual control and relapse of chained behaviors. The context controls both the acquisition and extinction of chained behaviors. In addition, a separately extinguished consumption response is renewed when returned to the context of the chain. The research expands our understanding of chains as well as of the learning processes that govern instrumental behavior in general.

Keywords: Relapse, Persistence, Extinction, Context, Heterogeneous chains, Discriminated operant

Operant behaviors are typically organized into chains of linked behaviors, as Skinner (e.g., 1934) observed many years ago. A behavior chain minimally involves a response that leads directly to the reinforcer (a consumption response) and at least one more response that provides access to the consumption response (a procurement response; terminology from Collier, 1981). Understanding behavior chains may have important translational implications, because unhealthy behaviors such as drug abuse, overeating, and smoking are all part of a chain. For example, smoking behavior involves not only inhaling smoke from a cigarette (consumption), but also seeking out and buying cigarettes (procurement). To stop smoking, one must quit buying the cigarettes in addition to inhaling them. Importantly, the procurement behavior (e.g., buying cigarettes in the minimart) and the consumption behavior (e.g., smoking outside by the street) often each take place in the presence of their own discriminative stimulus (SD). Clinical research also suggests an important role for procurement-related stimuli in the maintenance of unhealthy consumption behaviors (Conklin, Perkins, Salkeld, & McClernon, 2008). On this view, because behavior is often part of a chain, understanding behavior chains will be relevant for developing approaches that address problematic operant behavior.

The term “behavior chain” has several possible meanings in the literature. In one, the term refers to a sequence of linked behaviors in which responses during one stimulus are followed by another stimulus that is thought to (a.) reinforce the previous response and (b.) set the occasion for the next one (Gollub, 1977; Kelleher & Gollub, 1962). For example, a pigeon pecking a key on a so-called chained schedule of reinforcement pecks in the presence of different stimuli that signal different response requirements; because the pecking response in each component is the same, this is a homogeneous behavior chain. In another example, a rat may be required to press a lever in one stimulus and then pull a chain in another; because the chain involves topographically dissimilar responses, this is a heterogeneous chain. Both types of chains can be distinguished from other “chaining” or response-sequence tasks that do not involve distinct SDs for each response (e.g., Balleine, Garner, Gonzales, & Dickinson, 1995; Ostlund, Winterbauer, & Balleine, 2009). The term behavior chain can also refer to tasks that differentially reinforce different response patterns, with distinct SDs occasioning the start of each pattern (e.g., Bachá-Méndez, Reid, & Mendoza-Soylovna, 2007; Grayson & Wasserman, 1979). Such response patterns can function as distinct operant units; that is, the entire sequence can be sensitive to its reinforcement contingencies (e.g., Reid, Chadwick, Dunham, & Miller, 2001; Schwartz, 1982).

The present review focuses on the type of chain in which different behaviors are signaled by different SDs, which, for greater precision, we call discriminated heterogeneous chains. The main reason for this focus is that discriminated heterogeneous chains are the kind involved in smoking and drug taking, where, as described above, different procurement and consumption responses take place in the presence of different SDs. Our recent work has studied how extinction can reveal what holds such chains together and what takes them apart (see Thrailkill & Bouton, 2016a, for a review). We begin by briefly reviewing studies that address the associative content of discriminated chains. We then emphasize studies that extend this approach to address the applied questions of what makes chained behavior persistent and what makes it relapse. We address similarities and differences between simple behaviors and behaviors that occur as part of a chain. Overall, the work has revealed several important details about behavior chains with possible implications for the applied and theoretical understanding of operant behavior.

The associative structure of a discriminated behavior chain

Earlier research on heterogeneous chains suggested that organisms may learn an association between the responses in the chain (Olmstead, Lafond, Dickinson, & Everitt, 2001; Zapata, Minney, & Shippenberg, 2010). For instance, Olmstead and colleagues (2001) trained rats to press a lever (R1) to insert a second lever (R2), on which a response led to an outcome (O), an intravenous infusion of cocaine. (Notice that there were no SDs for either response other than the presence of the individual levers.) After learning this chain (R1-R2-O), the rats received sessions in which the R2 lever was presented by itself and responding on it was either reinforced or not reinforced (extinguished). In a test, R1 responding was then measured in extinction. Responding on R1 was weaker after R2’s extinction than after its reinforcement. This led the authors to suggest that rats decreased R1 because it no longer led to a valued outcome (the R2-reinforcement combination). However, it was unclear whether the strength of R1 reflected its relation to the R2 response, the R2 SD, the primary reinforcer, or some combination of these. In addition, because R2 was extinguished in one group and reinforced in the other, it was impossible to know which manipulation (extinction or continued reinforcement of R2) was responsible for the difference in R1.

To address these concerns, Thrailkill and Bouton (2016b) studied whether extinction of R2 weakens R1 after training with a discriminated heterogeneous chain. Figure 1 provides a diagram of the procedure. After an intertrial interval (ITI), a procurement SD (S1; e.g., a light) turned on and set the occasion for a procurement response (R1; e.g., lever press). Completing the procurement requirement (e.g., random ratio 4; RR 4) simultaneously turned off S1 and turned on S2. In S2, completing the response requirement (e.g., RR 4) on the consumption manipulandum (R2; e.g., chain pull) terminated S2, delivered the food-pellet reinforcer, and initiated the next ITI. After learning this discriminated chain, groups received either extinction of R2 in S2, or extinction of S2 without the opportunity to make R2 (the response manipulanda were removed from the chamber). After extinction, each group received a test with extinction presentations of S1 with both the R1 and R2 manipulanda present. Groups that could perform R2 during extinction made the fewest S1R1 responses. In contrast, the group that received extinction of S2 without the opportunity to learn not to make R2 made about as many S1R1 responses as a nonextinguished control group. Thus, extinguishing whatever Pavlovian excitatory value S2 had did not weaken R1 responding. Instead, extinction of S2R2 weakened R1 only when the rats could make R2 during extinction. This result is consistent with other research showing that extinction of simple responses requires learning to inhibit the response when it is nonreinforced (Bouton, Trask, & Carranza-Jasso, 2016; Rescorla, 1997).

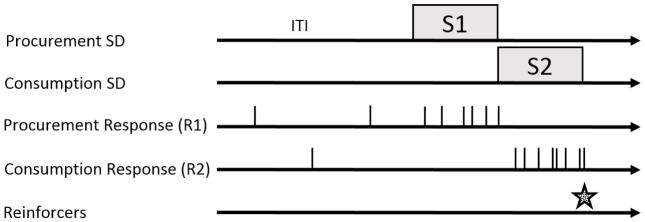

Figure 1.

Diagram of events in a discriminated heterogeneous instrumental chain. Procurement (R1; e.g., lever press) and consumption responses (R2; e.g., chain pull) can occur freely. After an intertrial interval (ITI), the procurement SD (S1) turns on. Procurement responses (R1) during S1 can produce the simultaneous offset of S1 and onset of the consumption SD (S2). Consumption responses (R2) during S2 can produce the offset of S2, delivery of the reinforcer (food), and the onset of the next ITI.
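The contingencies diagrammed in Figure 1 can be expressed as a small state machine. The Python sketch below is illustrative only: it assumes RR 4 on both links, as in the example above, and implements a random ratio as a fixed 1-in-4 chance that any given response completes the requirement. The class and function names are hypothetical and do not describe the laboratory's control software.

```python
import random
from dataclasses import dataclass, field


@dataclass
class ChainTrial:
    """Hypothetical model of one S1R1-S2R2-O trial with random-ratio links."""
    ratio_r1: int = 4  # assumed RR 4 for the procurement response (R1) in S1
    ratio_r2: int = 4  # assumed RR 4 for the consumption response (R2) in S2
    rng: random.Random = field(default_factory=random.Random)
    state: str = "S1"  # progresses S1 -> S2 -> ITI
    events: list = field(default_factory=lambda: ["S1 on"])

    def respond(self, response: str) -> None:
        """Record a response; only the response signaled by the current SD advances the chain."""
        self.events.append(response)
        if self.state == "S1" and response == "R1":
            # Random ratio: each R1 completes the requirement with probability 1/ratio_r1.
            if self.rng.random() < 1 / self.ratio_r1:
                self.state = "S2"
                self.events.append("S1 off / S2 on")
        elif self.state == "S2" and response == "R2":
            if self.rng.random() < 1 / self.ratio_r2:
                self.state = "ITI"
                self.events += ["S2 off", "food", "ITI"]
        # Any other response (e.g., R2 during S1) has no programmed consequence.


def run_trial(seed: int = 0) -> ChainTrial:
    """Simulate an idealized subject that always makes the response the current SD signals."""
    trial = ChainTrial(rng=random.Random(seed))
    while trial.state != "ITI":
        trial.respond("R1" if trial.state == "S1" else "R2")
    return trial


print(run_trial(seed=1).events)
```

Because both responses can occur freely, an R2 emitted during S1 is simply recorded and has no effect; the chain advances only when the signaled response completes its requirement.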

Importantly, a further experiment established that the effect of R2 extinction on R1 was specific to the R1 with which R2 had been chained. Rats learned two separate chains (S1R1-S2R2-O and S3R3-S4R4-O), and then received extinction of S2R2. In a test, rats received extinction presentations of S1R1 and S3R3. Extinguishing S2R2 specifically weakened R1, that is, the response that had preceded it in its chain. Rats responded on the nonextinguished R3 response as much as did an untreated control group. Therefore, the effect of extinguishing R2 on R1 was not the result of generalization between responses or of a general state of frustration that inhibited all behavior.

Instead, the results suggest that the rats had learned to associate the two responses, and that this response association makes R1 sensitive to the current status of R2. Extinction of R2 (but not S2) seemed to weaken R1 in a manner analogous to how devaluing the reinforcing outcome of a simple operant response can decrease performance of that response (Adams, 1982; Dickinson & Balleine, 2002). By the same logic, our results suggest that R2 (but not S2) may be a goal of the procurement response (R1).

The results are reminiscent of findings from Pavlovian serial compound conditioning methods in which two CSs are conditioned in series, i.e., CS1→CS2-US (Holland & Ross, 1981). In those studies, extinction of either conditioned stimulus (CS1 or CS2) weakened responding to the other CS. If this logic applies to a discriminated operant behavior chain, we would expect not only that extinction of R2 would weaken R1 (as above), but also that extinction of R1 would weaken R2. Thrailkill and Bouton (2015a) therefore addressed this possibility using analogous designs. After training the discriminated heterogeneous chain (S1R1-S2R2-O), rats received extinction of R1 or of S1 before testing R2. Specifically, groups received extinction of R1 in S1, or presentations of S1 alone without the opportunity to make the response. After extinction, groups received extinction test presentations of S2 with both manipulanda present. Groups that were able to make R1 during extinction, but not the group that was merely exposed to S1, showed suppressed R2 responding relative to a no-treatment control. Clearly, extinguishing S1R1 weakened R2, but making the response (R1) was again required. A further experiment with the two-chain procedure found that the effect of R1’s extinction was specific to the R2 with which it had been trained. Because the effects were specific to the associated response, the results suggest that R1 extinction may weaken R2 through some form of “mediated” extinction (Holland & Ross, 1981; Holland & Wheeler, 2009). In Pavlovian conditioning, presentation of a stimulus is thought to activate the representation of an associated but absent stimulus, which then allows for new learning about the absent stimulus (Holland, 1990). In our operant case, making R1 in extinction might analogously have activated a representation of the absent R2. Practically, this finding suggests that addressing procurement (R1) behaviors may be an additional way of weakening associated consumption (R2) behaviors.

The studies up to this point suggest that chained responses are sensitive to manipulations of the strength of one another: Procurement (R1) extinction weakens consumption (R2), and consumption (R2) extinction weakens procurement (R1). However, the extent to which the value of the reinforcing outcome at the end of the chain influenced the responses remained unclear. To address this question, Thrailkill and Bouton (2017) turned to the traditional reinforcer devaluation method (Adams, 1982; Dickinson, 1985). In a typical example of this method, after acquiring a lever-press response for food pellets, rats learn an aversion to the pellets through multiple pairings of them with lithium chloride. The pairings “devalue” the outcome and cause the rat to reject the food pellets. Next, rats are allowed to emit the lever-press response in extinction. Testing in extinction is important because it ensures that any effect of devaluation on operant responding reflects the rat’s knowledge or memory of the response-outcome relation rather than direct contact with the devalued outcome. If the response is suppressed by devaluation of its outcome, it is said to be goal-directed, with the outcome serving as its goal.

We adapted the reinforcer devaluation method to ask how changing the value of the reinforcer influences the responses in our discriminated heterogeneous chain (Thrailkill & Bouton, 2017). After learning the usual chain, rats received aversion conditioning with the reinforcing food pellets in the chambers: Half the rats received a nausea-inducing injection of lithium chloride immediately following consumption of the pellets (Paired), and half received the same exposure to pellets and lithium chloride but separated by about 24 hrs (Unpaired). Once the Paired rats rejected the pellets, the groups received a test with extinction presentations of either S1 or S2 with both R1 and R2 manipulanda in the chamber. Surprisingly, neither R1 nor R2 was affected by reinforcer devaluation; Paired and Unpaired rats made both responses at similarly high rates during the test. Such results suggest that the value of the reinforcing outcome had little to do with the strength of either response. Further, given the earlier finding that R1 was weakened after extinction (devaluation) of R2 but not S2 (Thrailkill & Bouton, 2016b), the analysis suggests that R2 behavior, rather than the conditioned reinforcer (S2) or the primary reinforcer (the food pellet), serves as the “goal” for R1.

It is worth noting that insensitivity to devaluation is a hallmark of operant responses that have undergone extensive training and are said to be “habitual” (e.g., Dickinson, 1985, 1994; Dickinson, Balleine, Watt, Gonzales, & Boakes, 1995; Thrailkill & Bouton, 2015b). It was therefore possible that training had been extensive enough to convert the chained responses into habits. Indeed, Zapata et al. (2010) found that the effect of extinguishing R2 on R1 diminished with extended training in a related procedure. However, rats were insensitive to outcome devaluation in the above experiment after less training (6 sessions) than they had received in the study in which R2 extinction weakened R1 responding (8 sessions; Thrailkill & Bouton, 2016b, Experiment 1). Therefore, rather than being merely due to the formation of a habit, R1’s insensitivity to outcome devaluation suggests that its goal is R2 and not the reinforcer.

To summarize, our studies have identified several features of the associative structure underlying a discriminated heterogeneous behavior chain. The two responses in the chain are associated, so that the extinction of either response suppresses the other. Moreover, animals need to make the response in order for the associated response to be extinguished; merely presenting the stimulus that set the occasion for the extinguished response had no effect (see also Bouton et al., 2016). Finally, the R2 behavior, rather than R1’s putative conditioned reinforcer (S2) or its primary reinforcer (the food pellet), is arguably the goal of R1. These results inform the following sections, in which we turn to studies that address the persistence of the chain in extinction and how chained behaviors relapse.

Persistence of chained behaviors

One way to study the persistence of a behavior is to measure how rapidly it extinguishes when the reinforcer is removed. Resistance to extinction has been the focus of many studies with simple free-operant and discriminated-operant procedures (Amsel, 1967; Capaldi, 1966, 1967; Vurbic & Bouton, 2014). But to our knowledge, there has been little previous work on the persistence of behavior trained in a chain. The issue has applied relevance, because it is sometimes important to create persistent chains. For example, consider the behavior of bomb- or drug-detecting dogs. These animals may search (R1) a line of cars (S1); when they detect a target odor (S2), they are reinforced with a toy or treat for performing a detection response (R2) that alerts the dog’s handler to the presence of the target. Search dogs must persist in searching, and maintain a high level of detection accuracy, while undergoing long periods of extinction in which searching often ends without a target. Understanding which variables influence search persistence during extinction can have significant implications for training.

Extinction of simple instrumental responses is slower when training involves reinforcement of only some responses (i.e., partial reinforcement, PRF) as opposed to every response (i.e., continuous reinforcement, CRF). This partial-reinforcement extinction effect (PREE) is a well-studied property of both operant and Pavlovian behavior, and has been shown in a range of situations and species, including dogs (e.g., Feuerbacher & Wynne, 2011; Mackintosh, 1974). The PREE is thought to occur, at least partly, because introducing nonreinforced responses during training increases the similarity between training and extinction (Capaldi, 1966; 1994). On this view, responding in extinction persists because the organism has been reinforced after occasions in which responding has gone nonreinforced.

Thrailkill, Kacelnik, Porritt, and Bouton (2016) therefore studied the effects of partial reinforcement on the persistence of R1 during extinction after it was trained in our discriminated heterogeneous chain. Training followed a method similar to that illustrated in Figure 1. Half the rats received training in which S1 turned on and R1 ended S1 (and transitioned to S2) after an average of 10 s (a variable interval 10-s schedule; VI 10 s). The other half transitioned to S2 after an average of 30 s (VI 30 s). The idea was that rats in the 30-s group (Long) would make more nonreinforced S1R1 responses prior to reinforcement during training. Each of these two groups was further divided into two groups for which S2 either always followed completion of S1R1 (continuous reinforcement) or did so on only a third of the trials (partial reinforcement). Thus, one of the groups that had VI 30 s in S1 transitioned to S2 on every trial (Group Long CRF), and the other transitioned only 1/3 of the time (Group Long PRF). The same was true for the two groups that had VI 10 s in S1 (Groups Short CRF and Short PRF). Following training, we examined extinction of S1R1 on its own. To compare rats in the Long and Short groups, all rats received S1 trials of a common duration in extinction (the geometric mean of the average S1 durations; Church & Deluty, 1977). If training with a Long S1 duration and partial reinforcement increases generalization from training to extinction, then the corresponding groups should show greater persistence of S1R1 responding in extinction.
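As a worked example, assuming the common test duration was the geometric mean of the two programmed S1 means (10 s and 30 s), it comes to √(10 × 30) ≈ 17.3 s, shorter than the 20-s arithmetic mean. A minimal Python check using the standard library's `statistics.geometric_mean` (the wrapper name is hypothetical):

```python
from statistics import geometric_mean


def common_test_duration(mean_s1_durations_s):
    """Common S1 duration for extinction: geometric mean of the training S1 means (s)."""
    return geometric_mean(mean_s1_durations_s)


duration = common_test_duration([10, 30])  # VI 10 s (Short) and VI 30 s (Long)
print(f"{duration:.2f} s")  # -> 17.32 s
```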

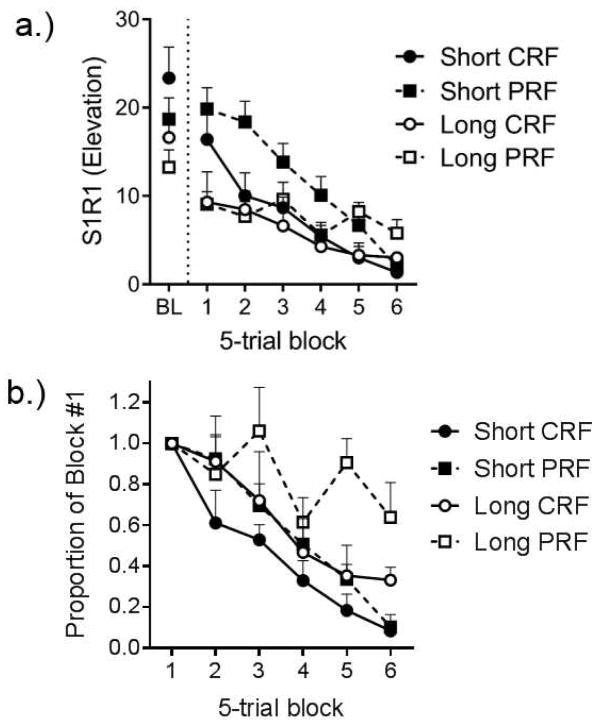

Figure 2 shows the results. At the end of training (left), R1 responding in the group that had received the combination of the 30-s VI and partial reinforcement was lower than that in the other groups. However, this group was arguably the most persistent in extinction, as is shown on the right side of Figure 2a. Responding in the Short (VI 10 s) groups began the session with the highest rate of responding, but also decreased the fastest within the session, whereas the Long (VI 30 s) groups started at a lower rate but decreased more slowly. Similarly, training with partial reinforcement seemed to prolong S1R1 responding in extinction in the PRF groups. The pattern is clearer in Figure 2b, where S1R1 responding during extinction is plotted as a proportion of the first block. Here the data clearly suggest that resistance to extinction was greatest in Group Long PRF.

Figure 2.

Extinction of R1 following training with partial versus continuous reinforcement. Response rate elevation refers to the response rate (/min) on R1 in the presence of S1 minus the R1 response rate during the 30-s period before S1 onset. a.) Response rate elevation in the final session of training (BL; Left) and in each block of five S1R1 extinction trials in the test session (Right). b.) Response rate elevation scores in 5-trial blocks, expressed as a proportion of responding in the first block (Block #1) of the test session with common-duration S1 trials. Error bars are the standard error of the mean. Adapted from Thrailkill et al. (2016a).
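The two dependent measures defined in this caption can be sketched in a few lines of Python. The counts in the usage lines are hypothetical, and averaging per-trial scores into 5-trial blocks before taking proportions is an assumption about the order of operations.

```python
def elevation(sd_responses, sd_seconds, pre_responses, pre_seconds=30.0):
    """Response rate (/min) during the SD minus the rate (/min) in the pre-SD period."""
    sd_rate = sd_responses * 60 / sd_seconds
    pre_rate = pre_responses * 60 / pre_seconds
    return sd_rate - pre_rate


def block_means(trial_scores, block_size=5):
    """Average per-trial scores into consecutive blocks (5-trial blocks by default)."""
    blocks = [trial_scores[i:i + block_size] for i in range(0, len(trial_scores), block_size)]
    return [sum(b) / len(b) for b in blocks]


def proportion_of_first_block(block_scores):
    """Express each block relative to Block #1, as in the persistence plots."""
    first = block_scores[0]
    if first == 0:
        raise ValueError("Block #1 elevation is zero; proportions are undefined")
    return [score / first for score in block_scores]


# Hypothetical trial: 12 R1 responses in a 20-s S1, 3 in the 30-s pre-S1 period.
print(elevation(12, 20, 3))  # 36.0 - 6.0 = 30.0
print(proportion_of_first_block([20.0, 10.0, 5.0]))  # [1.0, 0.5, 0.25]
```

Normalizing to Block #1 removes differences in starting level between groups, which is why it makes differences in the rate of decline easier to see.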

The results are consistent with theoretical accounts of the PREE, which predict that training with partial reinforcement will increase persistence in extinction because experience with nonreinforced trials allows the organism to generalize from training to extinction (Bouton, Woods, & Todd, 2014; Capaldi, 1966, 1967). Consistent with this view, Group Short PRF was slower to extinguish than Group Long CRF. Notably, these two groups received the same cumulative time in S1 and the same number of reinforced S2R2 opportunities in training (one S2 per 30 s of S1 time). This pattern is incompatible with accounts of the PREE that emphasize the accumulation of nonreinforced time in determining persistence in extinction (Gallistel & Gibbon, 2000): because training arranged the same rate of reinforcement in S1 in the two groups, such an account would predict the same rate of extinction. But the larger point is that training a discriminated heterogeneous chain with partial reinforcement increases the persistence of R1.
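The equivalence of Groups Short PRF and Long CRF follows from simple arithmetic: expected S1 time per transition to S2 is the mean VI value divided by the probability that a trial ends in S2. A sketch, assuming each S1 presentation lasted its programmed VI mean on average whether or not it ended in S2:

```python
from fractions import Fraction


def s1_seconds_per_s2(mean_vi_s, p_transition):
    """Expected cumulative S1 time in training per transition to S2 (seconds)."""
    return Fraction(mean_vi_s) / Fraction(p_transition)


groups = {
    "Short CRF": (10, 1),
    "Short PRF": (10, Fraction(1, 3)),
    "Long CRF": (30, 1),
    "Long PRF": (30, Fraction(1, 3)),
}

for name, (vi, p) in groups.items():
    print(f"{name}: {s1_seconds_per_s2(vi, p)!s} s of S1 per S2")
```

Short PRF and Long CRF both come out at 30 s, whereas Short CRF gives 10 s and Long PRF gives 90 s. Exact fractions avoid rounding the 1/3 transition probability.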

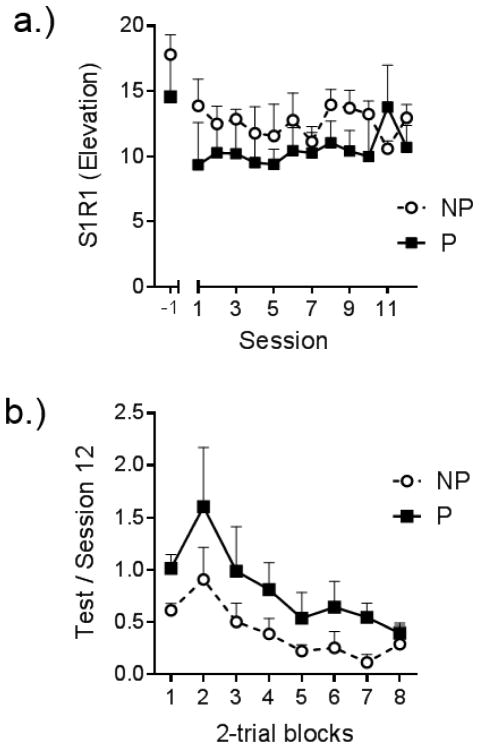

A second method that is known to slow the loss of responding in extinction is to present the reinforcer noncontingently. Suspending the response-reinforcer contingency, but continuing to deliver the reinforcer, results in slower extinction than removing the reinforcer entirely (e.g., Baker, 1990; Rescorla & Skucy, 1969; Winterbauer & Bouton, 2011). One hypothesis is that reinforcers acquire a discriminative function for the response in training. That is, a reinforcer that follows a response often precedes the reinforcement of a subsequent response. Therefore, we studied whether presenting the reinforcer noncontingently in extinction would likewise slow the extinction of chained responding (Thrailkill et al., 2016, Experiment 3). Two groups of rats learned the S1R1-S2R2-O chain under conditions in which each group also received two noncontingent deliveries of the food-pellet reinforcer during the last 20 s of the ITI. (Though not tested experimentally, the added pellets seemed to slow down S1R1 responding during training.) Nevertheless, as shown in Figure 3a, rats learned and performed the chain reliably by the end of the training phase. Each group then received a series of extinction trials with S1R1; half the rats continued to receive free pellets in the ITI before S1, and the other half had the pellets removed during the test. The results (Figure 3b) clearly suggest that extinction of S1R1 was slower in the group tested with noncontingent pellets (P) than in the group tested without them (NP). Notably, in another experiment we found that introducing noncontingent reinforcers for the first time during S1R1 extinction reduced S1R1 responding, presumably by creating generalization decrement (Thrailkill et al., 2016, Experiment 2). Therefore, both presenting the noncontingent pellets during training and continuing to deliver them in the ITI during extinction were important for enhancing the persistence of S1R1 in extinction.

Figure 3.

Extinction of S1R1 following training with noncontingent reinforcers. Response rate elevation refers to the response rate (/min) on R1 in the presence of S1 minus the R1 response rate during the 30-s period before S1 onset. a.) Response rate elevation in the final session of training without noncontingent pellets (-1; Left), followed by each session of training with noncontingent pellets prior to each S1R1 (Right). b.) Response rate elevation scores in the test for groups tested with (P) or without (NP) noncontingent pellets, expressed as a proportion of S1R1 responding in the final training session with noncontingent pellets (Session 12); 5-trial blocks are expressed as a proportion of responding in the first block of the session (Block #1). Error bars are the standard error of the mean. Adapted from Thrailkill et al. (2016a).
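The pellet arrangement in this experiment amounts to a scheduling rule: two free pellets in the last 20 s of each ITI during training, and, in the test, pellets only for Group P. The Python sketch below assumes pellet times are drawn uniformly within that window and uses a hypothetical 90-s ITI; the actual timing rule is not specified here, and the function names are illustrative.

```python
import random


def pellet_times(iti_s, n_pellets=2, window_s=20.0, rng=None):
    """Noncontingent pellet times (s from ITI onset) within the final window_s of the ITI.

    Assumption: times are drawn uniformly at random within the window.
    """
    if iti_s < window_s:
        raise ValueError("ITI must be at least as long as the pellet window")
    rng = rng if rng is not None else random.Random()
    start = iti_s - window_s
    return sorted(start + rng.uniform(0, window_s) for _ in range(n_pellets))


def iti_pellets(phase, group, iti_s, rng=None):
    """Both groups get ITI pellets in training; in the test only Group P keeps them."""
    if phase == "training" or group == "P":
        return pellet_times(iti_s, rng=rng)
    return []  # Group NP in the extinction test: pellets removed


rng = random.Random(0)
print(iti_pellets("training", "NP", iti_s=90, rng=rng))  # two times between 70 and 90 s
print(iti_pellets("test", "NP", iti_s=90, rng=rng))      # []
```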

The results of these studies of the persistence of R1 in extinction are compatible with what is known about extinction with simple discriminated- and free-operant responses. Training with partial reinforcement may reduce the overall rate of responding during training, but it paradoxically increases persistence in extinction by increasing the generalization of training to extinction. Presenting noncontingent reinforcers during training may have a similar effect, presumably because the noncontingent reinforcers increased the similarity of training and extinction. The results are all consistent with theoretical accounts of resistance to extinction (Bouton et al., 2014; Capaldi, 1966, 1994). Our results suggest methods that might encourage persistence of chained behaviors in applied settings, such as search behavior in bomb- and drug-detecting working dogs.

Relapse of chained behaviors

Extinction learning is adaptive and fundamental to how organisms change their behavior given new conditions. A critical aspect of extinction learning is that it involves new learning that interferes with the original learning (Bouton, 1993). A number of so-called relapse phenomena illustrate that extinction is not erasure (e.g., Bouton, 2004). Perhaps the simplest example of relapse is reinstatement (e.g., Reid, 1958; Rescorla & Skucy, 1969). Typically, a reinforced response is nonreinforced (extinguished) until it reaches a low level. If the reinforcer is then presented not contingent on behavior, the extinguished response can return. Such “reinstatement” has been studied extensively in Pavlovian and operant conditioning, and is one of the most widely discussed examples of relapse in the instrumental learning literature (Bouton, 2004; Shaham, Shalev, Lu, de Wit, & Stewart, 2003; Venniro, Caprioli, & Shaham, 2016).

Interestingly, to our knowledge no studies have directly addressed reinstatement of responses after extinction of a behavior chain. We therefore conducted two experiments (unpublished) to evaluate reinstatement of extinguished chained behaviors. In one experiment, outlined in Table 1, two groups of eight rats learned the discriminated chain in the manner described initially (see Thrailkill & Bouton, 2016b, Experiment 1). The groups then received extinction of either R1 or R2 separately, in their respective SDs. In either case, responses could turn off the SD according to the RR 4 schedule that had been used in training, but no reinforcers were presented. After three sessions of extinction (each containing 30 trials), all rats received a test consisting of 10 further extinction trials followed by an extended ITI that included the presentation of four noncontingent food-pellet reinforcers after 2 min. The reinforcers were spaced by an average of 4 s. After an additional 2 min, the rats received further extinction trials. Figure 4a shows R1 and R2 responding for the different groups during the reinstatement test session: the two 5-trial blocks of continued extinction that began the test session (left), and the two 5-trial blocks that followed the noncontingent pellets (right). The figure suggests that the pellets reinstated both R1 and R2. We compared responding (measured as the elevation of response rate in the SD above a 30-s pre-SD period) on the last extinction block with that on the first test block in each group with Wilcoxon signed-rank tests. Responding increased significantly from the last extinction block to the first test block in Group R1, T = 34, p = .02, and in Group R2, T = 36, p = .01. The results suggest that, like extinguished simple operant and Pavlovian responses, extinguished chained procurement and consumption responses are reinstated by noncontingent presentation of the reinforcer. There was no evidence that the noncontingent pellets were more effective at reinstating one response than the other.
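For readers who want to reproduce this kind of comparison, the stdlib-only Python sketch below computes T as the sum of the ranks of positive differences (last extinction block to first test block), giving tied absolute differences their average rank and dropping zero differences, and then an exact two-sided p value by enumerating all sign assignments, which is feasible for groups of eight (256 assignments). The scores in the usage lines are hypothetical, not the experiment's data, and this is not necessarily the procedure used to obtain the p values reported above.

```python
from itertools import product


def signed_rank_T(before, after):
    """Sum of ranks of positive (after - before) differences; zero differences are dropped."""
    diffs = [a - b for b, a in zip(before, after) if a != b]
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied absolute differences; ties share the average rank.
        while j + 1 < len(order) and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    T = sum(r for r, d in zip(ranks, diffs) if d > 0)
    return T, ranks


def exact_two_sided_p(T, ranks):
    """Exact p under the null that each rank is equally likely to carry either sign."""
    sums = [sum(r for r, s in zip(ranks, signs) if s)
            for signs in product((0, 1), repeat=len(ranks))]
    n = len(sums)
    upper = sum(s >= T for s in sums) / n
    lower = sum(s <= T for s in sums) / n
    return min(1.0, 2 * min(upper, lower))


# Hypothetical elevation scores for eight rats (not the experiment's data).
last_extinction = [2, 4, 1, 3, 5, 2, 0, 1]
first_test = [10, 9, 8, 12, 3, 6, 11, 4]
T, ranks = signed_rank_T(last_extinction, first_test)
print(T, exact_two_sided_p(T, ranks))  # 35.0 0.015625
```

With eight subjects the largest possible T is 36 (every rat increases), so values near 36 indicate that nearly all rats showed an increase.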

Table 1.

Design of experiments that examined reinstatement of chained behaviors

| Experiment | Training | Extinction | Test |
|---|---|---|---|
| Separate Extinction | S1R1→S2R2+ | S1R1− | S1R1−, pellets, S1R1− |
| | | S2R2− | S2R2−, pellets, S2R2− |
| Chain Extinction | S1R1→S2R2+ | S1R1→S2R2− | S1R1→S2R2−, pellets, S1R1→S2R2− |

Note. S1R1 and S2R2 refer to discriminated procurement and consumption responses; + and − refer to reinforced and nonreinforced trials.

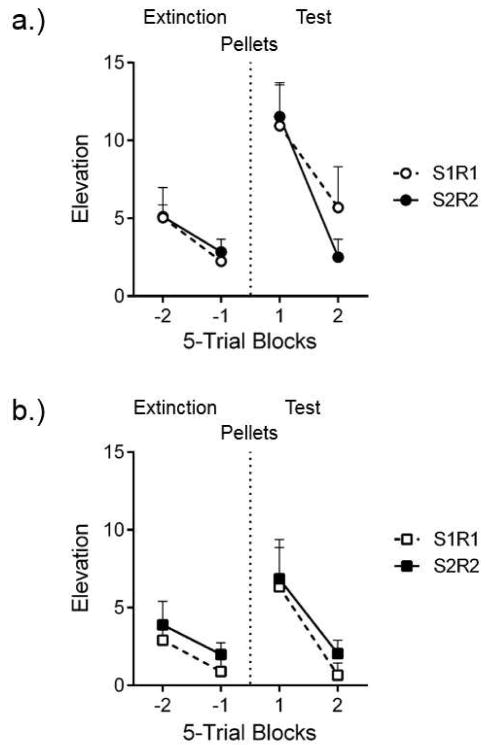

Figure 4.

Reinstatement of extinguished chained responses. Mean response rate elevation scores for the S1R1 and S2R2 responses (a.) after separate extinction of the two responses in separate groups and (b.) after extinction of both responses within the chain. In each panel, elevation scores in one set of 5-trial blocks of extinction (Extinction) are followed by those in a second set of 5-trial blocks of extinction (Test). The two sets of blocks were separated by a 240-s interval that included 4 noncontingent pellet deliveries separated by 4 s on average (Pellets). Error bars are the standard error of the mean and are only appropriate for between-group comparisons.

A companion experiment assessed reinstatement of R1 and R2 after extinction of the whole chain. The design is also shown in Table 1. One group of eight rats learned the discriminated chain (S1R1-S2R2-O) and then received 90 extinction trials of the entire chain (S1R1-S2R2−), distributed over three daily sessions, instead of separate extinction of S1R1 and S2R2 as in the preceding experiment. During extinction, S1 was presented and transitioned to S2 when R1 met the usual RR 4 requirement; trials also transitioned to S2 noncontingently if the rats failed to meet the RR 4 requirement within 20 s. In a test session, the rats received 10 further extinction trials, followed by noncontingent presentation of four food-pellet reinforcers in the ITI (as above). Beginning 2 min later, the rats received further extinction trials in which the SDs for the entire chain were presented. Figure 4b shows R1 and R2 responding during the test session. The figure again shows the two 5-trial blocks of continued extinction at the start of the test (left) and then the first two 5-trial blocks of test trials after the noncontingent pellets were presented (right). Both R1 and R2 were reinstated. Responding again increased from the last block of extinction to the first block of testing on R1, T = 34, p = .02, and on R2, T = 32, p < .05. Overall, the results suggest that extinction of R1 and R2, whether separately or within the chain, did not erase the learning that supports discriminated chained responding. Visual comparison nonetheless suggests that reinstatement was weaker following extinction of the entire chain. Although this comparison confounds extinction procedure with experiment and must be interpreted cautiously, one possibility is that extinction of the entire chain produced stronger extinction because, as described above, extinction of each response weakens both itself and the other response associated with it in the chain (Thrailkill & Bouton, 2015a, 2016b).
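The S1 termination rule used during whole-chain extinction (R1 could end S1 on the usual RR 4; otherwise S1 gave way to S2 after 20 s) can be written as a small function. This Python sketch assumes response times are measured from S1 onset and that RR 4 is implemented as a 1-in-4 chance per response; the function name and signature are hypothetical.

```python
import random


def s1_transition(r1_times, ratio=4, timeout_s=20.0, rng=None):
    """Return (time, contingent) for the S1 -> S2 transition in chain extinction.

    S1 ends at the first R1 that completes the random-ratio requirement, or
    noncontingently at timeout_s if the requirement has not been met by then.
    """
    rng = rng if rng is not None else random.Random()
    for t in sorted(r1_times):
        if t >= timeout_s:
            break  # an R1 at or after the timeout falls outside S1
        if rng.random() < 1 / ratio:
            return t, True
    return timeout_s, False


print(s1_transition([], rng=random.Random(0)))  # (20.0, False): no responding
print(s1_transition([3.5, 7.0], ratio=1))       # (3.5, True): RR 1 completes on the first R1
```

Because S2 follows S1 on every trial either way, each extinction trial exposes the rat to both links of the chain, which is what distinguishes this procedure from separate extinction of S1R1 or S2R2.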

Another compelling and well-known relapse phenomenon is the renewal effect, which suggests that extinction learning is especially sensitive to the context in which it is expressed (Bouton, 1993; Bouton & Todd, 2014). Although renewal was first demonstrated with extinguished Pavlovian responses (e.g., Bouton & Bolles, 1979; Bouton & King, 1983), many studies now suggest that operant extinction is similarly at least partly specific to the context in which it is learned. In a typical experiment (e.g., Bouton, Todd, Vurbic, & Winterbauer, 2011), the response is acquired in a distinct physical context defined by a set of olfactory, visual, and tactile cues (Context A). Extinction of the response then takes place in a second context defined by a different set of cues (Context B). Once extinguished, the response increases (renews) when it is tested back in Context A (ABA renewal). Importantly, renewal also occurs when the test takes place in a third context (ABC renewal), and when acquisition and extinction occur in the same context and testing occurs in a different one (AAB renewal). ABC and AAB renewal suggest that merely removing the response from the extinction context is sufficient for it to be renewed. These findings, together with several other studies (e.g., Todd, 2013; Todd, Vurbic, & Bouton, 2014), suggest that extinction at least partly involves context-dependent inhibitory learning. Renewal of extinguished operant responding has been shown in rats (e.g., Bouton et al., 2011; Nakajima, Tanaka, Urushihara, & Imada, 2000) and pigeons (e.g., Berry, Sweeney, & Odum, 2014), and with responding reinforced by drugs as well as by food (e.g., Crombag & Shaham, 2002; Fuchs, Eaddy, Su, & Bell, 2007).

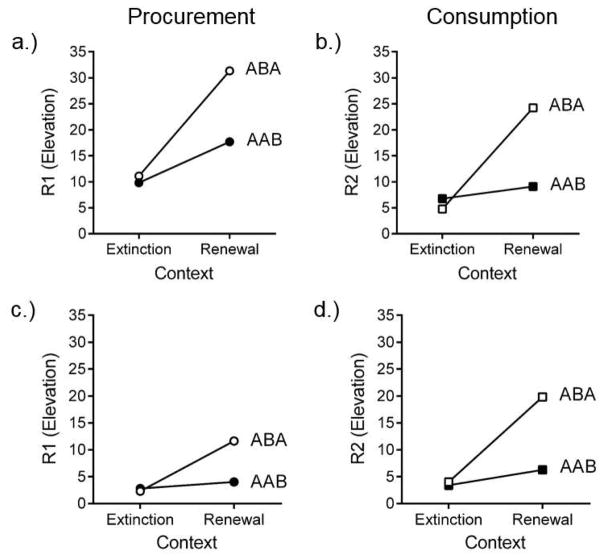

We recently extended our analysis of discriminated heterogeneous behavior chains to ask whether extinction of chained behaviors is context-specific in the way that has been established for simple operant responses (Thrailkill, Trott, Zerr, & Bouton, 2016). An initial study examined renewal of individual responses that were extinguished separately after training in the chain. Two groups of rats learned the usual chain in one context (Context A) and then received extinction of either S1R1 or S2R2. For half the rats in each group, extinction took place in a different context (Context B); for the other half, it took place in the acquisition context (Context A). All rats then received a test of the extinguished response in both Contexts A and B in a counterbalanced order (the methods generally followed Bouton et al., 2011, Experiment 1, where ABA and AAB renewal of simple operant responses were studied). As can be seen in Figures 5a and 5b, responding in each group remained suppressed in the extinction context and was renewed in the renewal context. The effect was larger in the groups extinguished in Context B and tested in Context A, but it was also reliable in the groups extinguished in Context A and tested in Context B, a pattern that also seems to hold for simple operants (Bouton et al., 2011). Therefore, as with simple operant and Pavlovian responses, the extinction of a chained response depends on the context in which it is expressed.

Figure 5.

Renewal of extinguished chained responses. Mean response rate elevation scores for ABA and AAB renewal following (a. and b.) separate extinction of the responses in different groups and (c. and d.) extinction of the responses in the chain. See text for details. Adapted from Thrailkill, Trott, et al. (2016).

A second experiment examined renewal of S1R1 and S2R2 following extinction of the full chain. After the same training in Context A, two groups received extinction of the entire chain in Context A or Context B. As described above, extinction consisted of trials that always included opportunities to make S1R1 followed by S2R2. Rats in each group were then tested with either S1R1 or S2R2 in the extinction context and in the other (renewal) context, in a counterbalanced order. The test results are shown in Figures 5c and 5d. As before, responding in each group remained suppressed in the extinction context and was renewed in the renewal context. Note that, as with the reinstatement results described earlier, the levels of responding in the renewal and extinction contexts across experiments suggest that extinguishing the entire chain may produce stronger extinction of each response, again perhaps because extinction of one response also weakens the other (Thrailkill & Bouton, 2015a, 2016b). However, this interpretation will require a direct experimental comparison for support.
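The extinction-trial rule described for these experiments (S1 gives way to S2 when R1 meets the RR 4 requirement, or noncontingently if the requirement is not met within 20 s) can be sketched as a small Python function. The sketch assumes that RR 4 denotes a random-ratio schedule on which each R1 independently completes the requirement with probability 1/4. It takes a hypothetical list of R1 times measured from S1 onset, and the names `s1_transition`, `RR_MEAN`, and `S1_TIMEOUT_S` are illustrative rather than taken from the authors' control software. What happens during S2 in extinction is not modeled.

```python
import random

RR_MEAN = 4          # RR 4, assumed here to be a random ratio: p = 1/4 per response
S1_TIMEOUT_S = 20.0  # S1 switches to S2 noncontingently after 20 s


def s1_transition(r1_times, rng):
    """Return (S2 onset in s from S1 onset, whether the switch was response-contingent).

    r1_times: sorted times of R1 responses during S1 (hypothetical input).
    rng: any object with a random() method returning a float in [0, 1).
    """
    for t in r1_times:
        if t >= S1_TIMEOUT_S:
            break  # presses at or after 20 s fall after S2 onset and cannot end S1
        if rng.random() < 1 / RR_MEAN:
            return t, True  # this R1 completed the random-ratio requirement
    return S1_TIMEOUT_S, False  # requirement not met within 20 s


if __name__ == "__main__":
    rng = random.Random(1)
    # Hypothetical rat pressing every 2 s (2, 4, ..., 18 s): estimate how often
    # S1 ends contingently on R1 rather than by the 20-s timeout.
    presses = [2.0 * k for k in range(1, 10)]
    trials = [s1_transition(presses, rng) for _ in range(10_000)]
    share = sum(contingent for _, contingent in trials) / len(trials)
    print(f"contingent S1->S2 transitions: {share:.2f}")
```

Analytically, a rat that makes k R1 responses in the first 20 s of S1 reaches S2 contingently with probability 1 - (3/4)^k, about .92 for the nine presses in the example, so the simulated share should fall near that value. The 20-s ceiling is what guarantees that every extinction trial still offers an opportunity to make S2R2, even after R1 has largely stopped.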

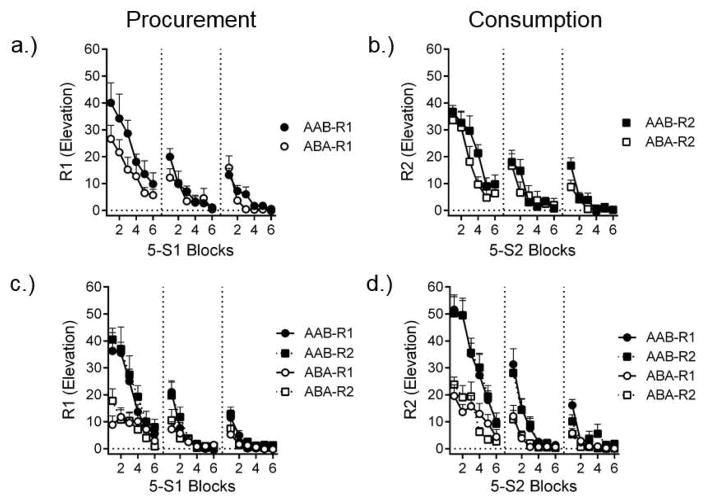

Although each response was renewed in the renewal context, it is also notable that the context switch after conditioning had different effects on R1 and R2 in the two experiments. The results from the extinction phase of both experiments are shown in Figure 6. When S1R1 and S2R2 were extinguished individually (Experiment 1), R1 was weaker at the beginning of extinction in Context B than in Context A (Figure 6a). That result is consistent with our work with simple operants suggesting that operant behavior is weakened when the context is changed after conditioning (e.g., Bouton et al., 2011; Bouton, Todd, & León, 2014; Thrailkill & Bouton, 2015b; see Trask, Thrailkill, & Bouton, 2016 for further discussion). However, in the groups that received extinction of S2R2, switching from Context A to Context B had no effect (Figure 6b). The pattern suggests that changing the context after acquisition had a stronger effect on procurement (R1) than on consumption (R2); procurement behaviors may therefore be more sensitive to the context than the consumption responses that follow them in a chain. Interestingly, as the bottom panels of Figure 6 show, when extinction was conducted with the whole chain (Experiment 2), both procurement and consumption were weakened by the context switch. In this case, R2 was always performed immediately after R1, which had itself been weakened by the context switch. It seems, therefore, that being in the “context” of the preceding response in the chain (i.e., S1R1) was important for consumption to be sensitive to the context switch: consumption (R2) was weaker when it followed a procurement response (R1) that had been weakened by the context change.

Figure 6.

Contextual control of nonextinguished chained responses. Mean response rate elevation scores for R1 and R2 over blocks of 5 trials in three extinction sessions. AAB and ABA refer to whether extinction sessions were conducted in the same context as acquisition training (AAB) or in a different context (ABA). The top row (a. and b.) shows extinction of R1 and R2 in separate groups. The bottom row (c. and d.) shows extinction of R1 and R2 in the groups that would eventually be tested with either R1 or R2 but that had each response extinguished as part of extinction of the entire chain. See text for details. Adapted from Thrailkill, Trott, et al. (2016).

Taken together, the two experiments suggest that the procurement response may be the functional “context” for the consumption response. In training, consumption responding is reinforced only when it occurs immediately after the procurement response. This relation may make procurement a strong enough cue for consumption to overshadow or block control by more global contextual cues. We therefore reasoned that if procurement functions like a physical context for consumption, then a consumption response extinguished outside the chain should be renewed when it is returned to, and tested in, the “context” of the chain. We tested this idea in a third experiment. Rats received extinction of S2R2 after training in the usual S1R1-S2R2-O chain. Following extinction, some rats again received S2R2 trials preceded by S1R1, and others merely received continued S2R2 extinction trials. As predicted, rats increased their S2R2 responding when it was tested again in the context of the chain. Crucially, that result required that the rats be able to perform R1 (i.e., that the R1 manipulandum be present in the chamber) during the test. Simply presenting S1 before S2R2, without the opportunity to make R1, did not produce renewal of R2. Thus, renewal of S2R2 upon return to the chain depended on the rats being able to make R1: preceding behaviors can function as a context, in addition to exteroceptive, interoceptive, and temporal cues.

These results are consistent with the work reviewed above suggesting that responses in the chain become associated (Thrailkill & Bouton, 2015a, 2016b). A final experiment clinched the idea by testing whether renewal of extinguished consumption by the procurement response was specific to the associated procurement response. Rats first received training with two separate chains, following the procedure described above (S1R1-S2R2-O and S3R3-S4R4-O). All rats then received extinction of both consumption responses (S2R2 and S4R4). Rats were then tested with trials in which making S1R1 led either to S2R2, the consumption response with which it had been trained (congruent trials), or to S4R4, the consumption response from the other chain (incongruent trials). If renewal depends on the specific association formed in the chain, it should occur only on congruent trials, and this is exactly what the experiment found. In addition, a second group, which was extinguished and tested without the opportunity to make R1 (the R1 manipulandum was removed from the chamber), did not increase its extinguished R2 responding following S1. The results therefore suggest that preceding behaviors can play the role of context and renew extinguished consumption responses through a specific association that requires the response to be made.

Our studies of extinction and relapse deepen our understanding of the processes that control chained behavior. Presenting the reinforcer from training reinstates extinguished procurement and consumption responses, and changing the context after extinction can renew extinguished chained responses. These results are consistent with the literature showing similar effects on simple operant and Pavlovian responses. Further consistent with that literature, the procurement response is weakened by a context change after conditioning. The consumption response, in contrast, may not be; the results of the context-change and renewal tests suggest that procurement can serve as the context for consumption. These results expand the definition of context to include preceding behavior, alongside the physical, temporal, and reinforcer contexts that previous work suggests control the retrieval and expression of extinction learning (Bouton, 2004; Bouton & Trask, 2016).

Summary

Since behavior often takes place in the form of a chain, an integrative understanding of the processes controlling chained behaviors may have broad implications. While the chain structure of operant behavior has been recognized for many years (e.g., Skinner, 1934), the field has only recently begun to understand the learning processes that underlie the chain. The research reviewed above has identified several important variables that contribute to the persistence and relapse of behaviors that are part of a discriminated behavior chain.

First, learning in the discriminated chain method appears to reflect a lawful set of associative relations. The experiments confirm that S1 and S2 occasion their associated responses, R1 and R2: rats made each response during its SD, but not during the ITIs. However, one of the most important associations seems to be the link between the two responses in the chain: extinction of procurement weakens the consumption response (Thrailkill & Bouton, 2015a), and extinction of consumption weakens the procurement response (Thrailkill & Bouton, 2016b). In each case, the effect was specific to responses associated in the chain and required that animals have the opportunity to actually perform, and inhibit, the behavior during extinction (Bouton et al., 2016). Extinguishing the Pavlovian properties of the SDs that occasioned the responses had no effect on the tendency to make either response. In addition, judging from the effects of reinforcer devaluation, the representation of the reinforcing outcome seemed to play little, if any, role in controlling the procurement or consumption response (Thrailkill & Bouton, 2017). Thus, in a discriminated chain, the goal of the procurement response appears to be the opportunity to make the consumption response, rather than reaching the next SD (a putative conditioned reinforcer) or the primary reinforcer.

Second, persistence in extinction depends at least partly on generalization from training to extinction. A long tradition of research on resistance to extinction of simple Pavlovian and instrumental responses suggests that including nonreinforcement in training produces greater persistence during extinction (Capaldi, 1966, 1994). Likewise, training that increased the number of nonreinforced procurement responses slowed extinction of procurement (Thrailkill, Kacelnik, et al., 2016). Another way to increase generalization is to continue presenting stimuli from training during extinction. Consistent with this, we found that noncontingent presentations, during extinction, of the reinforcer that had been presented during training slowed extinction. Overall, the results are consistent with the view that training variables that influence generalization from training to extinction strongly influence resistance to extinction. In our example of working dogs that search for and identify target odors, training methods that promote generalization from training to situations in which targets are rare may help promote search persistence.

Third, extinction does not destroy the original learning: extinguished procurement and consumption responses readily returned after reinstating presentations of the training reinforcer. The physical context also controls extinguished chained behaviors; extinguished procurement and consumption responses relapse (renew) when the context is changed (Thrailkill, Trott, et al., 2016). This suggests that extinction of each response in the chain, whether extinguished separately or together with the other responses in the chain, involves new inhibitory learning that is specific to the context. In contrast, after conditioning alone (before extinction), the context seems to control procurement behavior but not consumption. This leads to a fourth insight: procurement functions as the primary context for the consumption response. A separately extinguished consumption response is renewed when it is returned to the context of the chain, and this effect depends on the association between the procurement and consumption responses in the chain.

Overall, the research has expanded our understanding of the associative content and control of behavior in discriminated heterogeneous behavior chains. It also illustrates the value of the methodological and conceptual tools that have been developed for understanding simpler operant and Pavlovian responses.

Highlights.

Operant behavior consists of chains of linked procurement and consumption responses.

Chained behaviors are associated, and procurement is motivated by the opportunity to make the consumption response.

Persistence in extinction depends on generalization across training and extinction conditions.

Contexts control chained behaviors before and after extinction, where they can influence relapse.

Acknowledgments

This research was supported by NIH Grant R01 DA033123 to MEB.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Adams CD. Variations in the sensitivity of instrumental responding to reinforcer devaluation. The Quarterly Journal of Experimental Psychology B: Comparative and Physiological Psychology. 1982;34:77–98.

- Amsel A. Partial reinforcement effects on vigor and persistence: advances in frustration theory derived from a variety of within-subjects experiments. In: Spence KW, Spence JT, editors. The Psychology of Learning and Motivation. Vol. 1. New York NY: Academic Press; 1967. pp. 1–65.

- Bachá-Méndez G, Reid AK, Mendoza-Soylovna A. Resurgence of integrated behavioral units. Journal of the Experimental Analysis of Behavior. 2007;87:5–24. doi: 10.1901/jeab.2007.55-05.

- Baker AG. Contextual conditioning during free operant extinction: Unsignaled, signaled, and backward-signaled noncontingent food. Animal Learning & Behavior. 1990;18:59–70.

- Balleine BW, Garner C, Gonzalez F, Dickinson A. Motivational control of heterogeneous instrumental chains. Journal of Experimental Psychology: Animal Behavior Processes. 1995;21:203–217.

- Berry MS, Sweeney MM, Odum AL. Effects of baseline reinforcement rate on operant ABA and ABC renewal. Behavioural Processes. 2014;108:87–93. doi: 10.1016/j.beproc.2014.09.009.

- Bouton ME. Context, time, and memory retrieval in the interference paradigms of Pavlovian learning. Psychological Bulletin. 1993;114:80–99. doi: 10.1037/0033-2909.114.1.80.

- Bouton ME. Context and behavioral processes in extinction. Learning & Memory. 2004;11:485–494. doi: 10.1101/lm.78804.

- Bouton ME, Bolles RC. Contextual control of the extinction of conditioned fear. Learning and Motivation. 1979;10:445–466.

- Bouton ME, King DA. Contextual control of the extinction of conditioned fear: tests for the associative value of the context. Journal of Experimental Psychology: Animal Behavior Processes. 1983;9:248–265.

- Bouton ME, Todd TP. A fundamental role for context in instrumental learning and extinction. Behavioural Processes. 2014;104:13–19. doi: 10.1016/j.beproc.2014.02.012.

- Bouton ME, Todd TP, León SP. Contextual control of discriminated operant behavior. Journal of Experimental Psychology: Animal Learning and Cognition. 2014;40:92–105. doi: 10.1037/xan0000002.

- Bouton ME, Todd TP, Vurbic D, Winterbauer NE. Renewal after the extinction of free operant behavior. Learning & Behavior. 2011;39:57–67. doi: 10.3758/s13420-011-0018-6.

- Bouton ME, Trask S. Role of the discriminative properties of the reinforcer in resurgence. Learning & Behavior. 2016;44:137–150. doi: 10.3758/s13420-015-0197-7.

- Bouton ME, Trask S, Carranza-Jasso R. Learning to inhibit the response during instrumental (operant) extinction. Journal of Experimental Psychology: Animal Learning and Cognition. 2016;42:246–258. doi: 10.1037/xan0000102.

- Bouton ME, Woods AM, Todd TP. Separation of time-based and trial-based accounts of the partial reinforcement extinction effect. Behavioural Processes. 2014;101:23–31. doi: 10.1016/j.beproc.2013.08.006.

- Capaldi EJ. Partial reinforcement: a hypothesis of sequential effects. Psychological Review. 1966;73:459–477. doi: 10.1037/h0023684.

- Capaldi EJ. A sequential hypothesis of instrumental learning. In: Spence KW, Spence JT, editors. The Psychology of Learning and Motivation. Vol. 1. Oxford, UK: Academic Press; 1967. pp. 67–144.

- Capaldi EJ. The sequential view: from rapidly fading stimulus traces to the organization of memory and the abstract concept of number. Psychonomic Bulletin & Review. 1994;1:156–181. doi: 10.3758/BF03200771.

- Church RM, Deluty MZ. Bisection of temporal intervals. Journal of Experimental Psychology: Animal Behavior Processes. 1977;3:216–228. doi: 10.1037//0097-7403.3.3.216.

- Collier GH. Determinants of choice. In: Bernstein DJ, editor. Nebraska symposium on motivation. Lincoln, NE: University of Nebraska Press; 1981. pp. 67–127.

- Conklin CA, Robin N, Perkins KA, Salkeld RP, McClernon FJ. Proximal versus distal cues to smoke: The effects of environments on smokers’ cue reactivity. Experimental and Clinical Psychopharmacology. 2008;16:207–214. doi: 10.1037/1064-1297.16.3.207.

- Crombag HS, Shaham Y. Renewal of drug seeking by contextual cues after prolonged extinction in rats. Behavioral Neuroscience. 2002;116:169–173. doi: 10.1037//0735-7044.116.1.169.

- Dickinson A. Actions and habits: The development of behavioural autonomy. Philosophical Transactions of the Royal Society of London, Series B. 1985;308:67–78.

- Dickinson A. Instrumental conditioning. In: Mackintosh NJ, editor. Animal learning and cognition: Handbook of perception and cognition series. 2. San Diego, CA: Academic Press; 1994. pp. 45–79.

- Dickinson A, Balleine BW. The role of learning in the operation of motivational systems. In: Gallistel CR, editor. Learning, Motivation & Emotion, Stevens’ Handbook of Experimental Psychology. Vol. 3. New York, NY: Wiley; 2002. pp. 497–533.

- Dickinson A, Balleine B, Watt A, Gonzalez F, Boakes RA. Motivational control after extended instrumental training. Animal Learning & Behavior. 1995;23:197–206.

- Feuerbacher EN, Wynne CDL. A History of Dogs as Subjects in North American Experimental Psychological Research. Comparative Cognition & Behavior Reviews. 2011;6:46–71.

- Fuchs RA, Eaddy JL, Su ZI, Bell GH. Interactions of the basolateral amygdala with the dorsal hippocampus and dorsomedial prefrontal cortex regulate drug context-induced reinstatement of cocaine-seeking in rats. European Journal of Neuroscience. 2007;26:487–498. doi: 10.1111/j.1460-9568.2007.05674.x.

- Gallistel CR, Gibbon J. Time, rate and conditioning. Psychological Review. 2000;107:289–344. doi: 10.1037/0033-295x.107.2.289.

- Gollub LR. Conditioned reinforcement: Schedule effects. In: Honig WK, Staddon JER, editors. Handbook of operant behavior. Englewood Cliffs, NJ: Prentice-Hall; 1977. pp. 288–312.

- Grayson RJ, Wasserman EA. Conditioning of two-response patterns of key pecking in pigeons. Journal of the Experimental Analysis of Behavior. 1979;31:23–39. doi: 10.1901/jeab.1979.31-23.

- Holland PC. Event representation in Pavlovian conditioning: Image and action. Cognition. 1990;37:105–131. doi: 10.1016/0010-0277(90)90020-k.

- Holland PC, Ross RT. Within-compound associations in serial compound conditioning. Journal of Experimental Psychology: Animal Behavior Processes. 1981;7:228–241.

- Holland PC, Wheeler DS. Representation-mediated food aversions. In: Reilly S, Schachtman T, editors. Conditioned taste aversion: Behavioral and neural processes. Oxford, UK: Oxford University Press; 2009. pp. 196–225.

- Kelleher RT, Gollub LR. A review of positive conditioned reinforcement. Journal of the Experimental Analysis of Behavior. 1962;5:543–597. doi: 10.1901/jeab.1962.5-s543.

- Mackintosh NJ. The Psychology of Animal Learning. Oxford, UK: Academic Press; 1974.

- Nakajima S, Tanaka S, Urushihara K, Imada H. Renewal of extinguished lever-press responses upon return to the training context. Learning and Motivation. 2000;31:416–431.

- Olmstead MC, Lafond MV, Everitt BJ, Dickinson A. Cocaine seeking by rats is a goal-directed action. Behavioral Neuroscience. 2001;115:394–402.

- Ostlund SB, Winterbauer NE, Balleine BW. Evidence of action sequence chunking in goal-directed instrumental conditioning and its dependence on the dorsomedial prefrontal cortex. The Journal of Neuroscience. 2009;29:8280–8287. doi: 10.1523/JNEUROSCI.1176-09.2009.

- Reid RL. The role of the reinforcer as a stimulus. British Journal of Psychology. 1958;49:202–209. doi: 10.1111/j.2044-8295.1958.tb00658.x.

- Reid AK, Chadwick CZ, Dunham M, Miller A. The development of functional response units: The role of demarcating stimuli. Journal of the Experimental Analysis of Behavior. 2001;76:303–320. doi: 10.1901/jeab.2001.76-303.

- Rescorla RA. Response inhibition in extinction. The Quarterly Journal of Experimental Psychology: Section B. 1997;50:238–252.

- Rescorla RA, Skucy JC. Effect of response-independent reinforcers during extinction. Journal of Comparative and Physiological Psychology. 1969;67:381–389.

- Schwartz B. Interval and ratio reinforcement of a complex sequential operant in pigeons. Journal of the Experimental Analysis of Behavior. 1982;37:349–357. doi: 10.1901/jeab.1982.37-349.

- Shaham Y, Shalev U, Lu L, de Wit H, Stewart J. The reinstatement model of drug relapse: history, methodology and major findings. Psychopharmacology. 2003;168:3–20. doi: 10.1007/s00213-002-1224-x.

- Skinner BF. The extinction of chained reflexes. Proceedings of the National Academy of Sciences. 1934;20:234–237. doi: 10.1073/pnas.20.4.234.

- Thrailkill EA, Bouton ME. Extinction of chained instrumental behaviors: Effects of procurement extinction on consumption responding. Journal of Experimental Psychology: Animal Learning and Cognition. 2015a;41:232–246. doi: 10.1037/xan0000064.

- Thrailkill EA, Bouton ME. Contextual control of instrumental actions and habits. Journal of Experimental Psychology: Animal Learning and Cognition. 2015b;41:69–80. doi: 10.1037/xan0000045.

- Thrailkill EA, Bouton ME. Extinction and the associative structure of heterogeneous instrumental chains. Neurobiology of Learning and Memory. 2016a;133:61–68. doi: 10.1016/j.nlm.2016.06.005.

- Thrailkill EA, Bouton ME. Extinction of chained instrumental behaviors: Effects of consumption extinction on procurement responding. Learning & Behavior. 2016b;44:85–96. doi: 10.3758/s13420-015-0193-y.

- Thrailkill EA, Bouton ME. Effects of outcome devaluation on instrumental behaviors in a discriminated heterogeneous chain. Journal of Experimental Psychology: Animal Learning & Cognition. 2017;43:88–95. doi: 10.1037/xan0000119.

- Thrailkill EA, Kacelnik A, Porritt F, Bouton ME. Increasing the persistence of a heterogeneous behavior chain: Studies of extinction in a rat model of search behavior of working dogs. Behavioural Processes. 2016;129:44–53. doi: 10.1016/j.beproc.2016.05.009.

- Thrailkill EA, Trott JM, Zerr C, Bouton ME. Contextual control of chained instrumental behaviors. Journal of Experimental Psychology: Animal Learning & Cognition. 2016;42:401–414. doi: 10.1037/xan0000112.

- Todd TP. Mechanisms of renewal after the extinction of instrumental behavior. Journal of Experimental Psychology: Animal Behavior Processes. 2013;39:193–207. doi: 10.1037/a0032236.

- Todd TP, Vurbic D, Bouton ME. Mechanisms of renewal after the extinction of discriminated operant behavior. Journal of Experimental Psychology: Animal Learning and Cognition. 2014;40:355–368. doi: 10.1037/xan0000021.

- Trask S, Thrailkill EA, Bouton ME. Occasion setting, inhibition, and the contextual control of extinction in Pavlovian and instrumental (operant) learning. Behavioural Processes. 2016;137:64–72. doi: 10.1016/j.beproc.2016.10.003.

- Venniro M, Caprioli D, Shaham Y. Animal models of drug relapse and craving: from drug priming-induced reinstatement to incubation of craving after voluntary abstinence. Progress in Brain Research. 2016;224:25–52. doi: 10.1016/bs.pbr.2015.08.004.

- Vurbic D, Bouton ME. A contemporary behavioral perspective on extinction. In: McSweeney FK, Murphy ES, editors. The Wiley-Blackwell Handbook of Operant and Classical Conditioning. New York, NY: Wiley; 2014. pp. 53–76.

- Winterbauer NE, Bouton ME. Mechanisms of resurgence II: response-contingent reinforcers can reinstate a second extinguished behavior. Learning & Motivation. 2011;42:154–164. doi: 10.1016/j.lmot.2011.01.002.

- Zapata A, Minney VL, Shippenberg TS. Shift from goal-directed to habitual cocaine seeking after prolonged experience in rats. The Journal of Neuroscience. 2010;30:15457–15463. doi: 10.1523/JNEUROSCI.4072-10.2010.