Abstract

Purpose

Wayfinding, the process of determining and following a route between an origin and a destination, is an integral part of everyday tasks. The purpose of this study was to investigate the impact of glaucomatous visual field loss on wayfinding behavior using an immersive virtual reality (VR) environment.

Methods

This cross-sectional study included 31 glaucoma patients and 20 healthy subjects without evidence of overall cognitive impairment. Wayfinding experiments were modeled after the Morris water maze navigation task and conducted in an immersive VR environment. Two rooms were built that varied only in the complexity of the visual scene, in order to promote allocentric-based (room A, with multiple visual cues) versus egocentric-based (room B, with a single visual cue) spatial representations of the environment. Wayfinding tasks in each room consisted of revisiting previously visible targets that subsequently became invisible.

Results

For room A, glaucoma patients spent on average 35.0 seconds to perform the wayfinding task, whereas healthy subjects spent an average of 24.4 seconds (P = 0.001). For room B, no statistically significant difference was seen in average time to complete the task (26.2 seconds versus 23.4 seconds, respectively; P = 0.514). For room A, each 1-dB worse binocular mean sensitivity was associated with a 3.4% increase in time to complete the task (P = 0.001).

Conclusions

Glaucoma patients performed significantly worse on allocentric-based wayfinding tasks conducted in a VR environment, suggesting visual field loss may affect the construction of spatial cognitive maps relevant to successful wayfinding. VR environments may represent a useful approach for assessing functional vision endpoints for clinical trials of emerging therapies in ophthalmology.

Keywords: glaucoma, wayfinding, virtual reality, sense of direction, cognitive map

Wayfinding is the process of determining and following a path or route between an origin and a destination.1 As a process humans use to orient and navigate on foot or with a vehicle, wayfinding is an integral part of people's daily life, occurring in many situations, such as walking inside a home or a building, navigating through a city, or driving across a country. Wayfinding requires the proper encoding, processing, and retrieval of spatial information, and visual cues are often the main source of information about the environment. With vision, a traveler can acquire information about the surrounding environment from which an intended travel path can be planned and obstacles detected and avoided.

Studies that artificially restricted the extent of the field of view have demonstrated an association between navigational ability and visual field extent.2–4 Lovie-Kitchin et al.4 found that the smaller the solid angle of visual field subtended at the eye, the poorer the performance on a navigation task. As a progressive optic neuropathy, glaucoma is a prototypical disease causing peripheral visual field loss. It is the leading cause of irreversible blindness worldwide, and glaucomatous visual field loss has been shown to impact many daily activities.5–9 However, few studies have examined the impact of glaucomatous visual field loss on efficient wayfinding. Turano and colleagues evaluated mobility performance in glaucoma by measuring the time taken to navigate an established physical travel path.5 However, their study did not address whether glaucoma subjects had increased difficulty in wayfinding tasks.

There are several difficulties in studying navigation and wayfinding in humans with real-world tasks. Some derive from the large spatial scale of these tasks, which makes it difficult, for example, to modify or remove specific visual cues in systematic ways. Real-world navigation experiments may also be difficult for older subjects because of the physical effort required for repeated observations. Technological advances have enabled researchers to conduct experiments in virtual reality (VR) settings. VR experiments may elicit responses similar to those in real situations,10 with the advantage that experimental conditions can be easily manipulated in systematic and reproducible ways, allowing hypothesis testing of specific factors influencing wayfinding behavior.11 Furthermore, participants' spatial behavior can be easily recorded across multiple observations during VR experiments.

The purpose of the present study was to investigate wayfinding behavior and spatial cognition in patients with glaucoma using an immersive VR environment, the Virtual Environment Human Navigation Task (VEHuNT).

Methods

This was a cross-sectional study conducted at the Visual Performance Laboratory of the University of California San Diego (UCSD). Written informed consent was obtained from all participants, and the institutional review board and human subjects committee approved all methods. All methods adhered to the tenets of the Declaration of Helsinki for research involving human subjects, and the study was conducted in accordance with the regulations of the Health Insurance Portability and Accountability Act.

All participants underwent a comprehensive ophthalmologic examination including review of medical history, visual acuity, slit-lamp biomicroscopy, intraocular pressure measurement, gonioscopy, ophthalmoscopic examination, stereoscopic optic disc photography, and standard automated perimetry (SAP) using the Swedish Interactive Threshold Algorithm with the 24-2 strategy of the Humphrey Field Analyzer II (model 750; Carl Zeiss Meditec, Inc., Dublin, CA, USA). Visual acuity was measured using the Early Treatment Diabetic Retinopathy Study chart, and letter acuity was expressed as the logarithm of the minimum angle of resolution. Only subjects with open angles on gonioscopy were included. Subjects were excluded if they had any other ocular or systemic disease that could affect the optic nerve or the visual field. Optic nerve damage was assessed by masked grading of stereophotographs.

Glaucoma was defined by the presence of repeatable abnormal SAP tests (pattern standard deviation with P < 0.05 and/or a Glaucoma Hemifield Test outside normal limits) and corresponding optic nerve damage in at least one eye. Control subjects had no evidence of optic nerve damage and had normal SAP tests in both eyes. Only reliable SAP tests were included (less than 33% fixation losses or false-negative errors and less than 15% false-positive errors). The severity of visual field loss was represented by the binocular mean sensitivity (MS) of the SAP 24-2 test. Binocular MS was calculated as the average of the sensitivities of the integrated binocular visual field obtained according to the binocular summation model described by Nelson-Quigg et al.12 According to this model, the binocular sensitivity can be estimated using the following formula:

$$S_{b} = \sqrt{S_{r}^{2} + S_{l}^{2}}$$

where Sr and Sl are the monocular threshold sensitivities for corresponding visual field locations of the right and left eyes, respectively. In order to calculate the binocular sensitivity from the formula above, light sensitivity had to be converted to a linear scale (apostilbs) and then converted back to a logarithmic scale (decibels).
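The conversion described above can be sketched in a few lines. This is a minimal illustration, not the authors' code: it assumes the usual perimetric convention that linear sensitivity is proportional to 10^(dB/10), assumes the quadratic-summation form S_b = sqrt(S_r^2 + S_l^2) for the binocular summation model, and assumes the right- and left-eye arrays are already aligned to corresponding locations of the integrated field. The function names are hypothetical.

```python
import numpy as np

def db_to_linear(db):
    """dB -> linear sensitivity, assuming dB = 10*log10(L_max / L_threshold)."""
    return 10.0 ** (np.asarray(db, dtype=float) / 10.0)

def linear_to_db(lin):
    """Linear sensitivity -> dB (inverse of db_to_linear)."""
    return 10.0 * np.log10(lin)

def binocular_sensitivity_db(s_right_db, s_left_db):
    """Pointwise binocular sensitivity: S_b = sqrt(S_r^2 + S_l^2) on the linear scale."""
    s_r = db_to_linear(s_right_db)
    s_l = db_to_linear(s_left_db)
    return linear_to_db(np.sqrt(s_r ** 2 + s_l ** 2))

def binocular_mean_sensitivity(s_right_db, s_left_db):
    """Binocular MS (dB): average of the pointwise binocular sensitivities."""
    return float(np.mean(binocular_sensitivity_db(s_right_db, s_left_db)))

# Hypothetical pointwise sensitivities (dB) at three corresponding locations.
right = [30, 28, 0]
left = [30, 10, 25]
print(binocular_mean_sensitivity(right, left))  # ≈ 28.17
```

Because summation happens on the linear scale, the better eye dominates each location: a 0-dB point in one eye barely lowers the binocular value when the fellow eye sees 25 dB there. Two equal eyes gain about 1.5 dB (10·log10 √2).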

Subjects also completed a general cognitive screening test, the Montreal Cognitive Assessment (MoCA).13 The MoCA is a cognitive screening tool developed to detect mild cognitive dysfunction. It assesses different cognitive domains: attention and concentration, executive functions, memory, language, visual-constructional skills, conceptual thinking, calculations, and orientation. It is a one-page, 30-point test administered by a trained technician in approximately 15 minutes, and a score of 26 or above is considered normal. Only subjects with normal scores were included in the study.

VR Environment

VEHuNT was implemented using the 4kAVE, a derivative of the cave automatic virtual environment (CAVE) VR system.14 The 4kAVE consists of three vertically oriented 4K-resolution, 80-inch, 3D light-emitting diode (LED) screens, with the lateral screens angled at 45° relative to the central screen (Fig. 1). Proprietary software developed at UCSD for CAVE systems created spatial displays that covered approximately 150° of a participant's field of view. Participants sat at a narrow table, wore polarizing glasses to perceive a 3D image during testing, and navigated through the virtual environment using a steering wheel and an accelerator pedal. The VEHuNT assessed spatial cognition by having subjects perform wayfinding tasks in sparsely furnished virtual rooms with different arrangements of visual cues, such as colored walls, paintings on the walls, and 3D objects.

Figure 1.

The VEHuNT used a cave automatic virtual environment (CAVE) to present an immersive VR environment for studying wayfinding tasks. It consisted of three vertically oriented 4K 80-inch 3D LED screens, with the lateral screens angled at 45° relative to the central screen. Participants wore polarizing glasses to perceive a 3D image during testing and navigated through the virtual environment using a steering wheel and an accelerator pedal.

Subjects were first given a training session to familiarize themselves with navigating through the virtual environment. During the training session, subjects were virtually placed near a wall in a square room and asked to “drive” along a green-tiled circular path to a target located elsewhere in the room. This training was repeated until subjects were comfortable with the virtual environment and the controls.

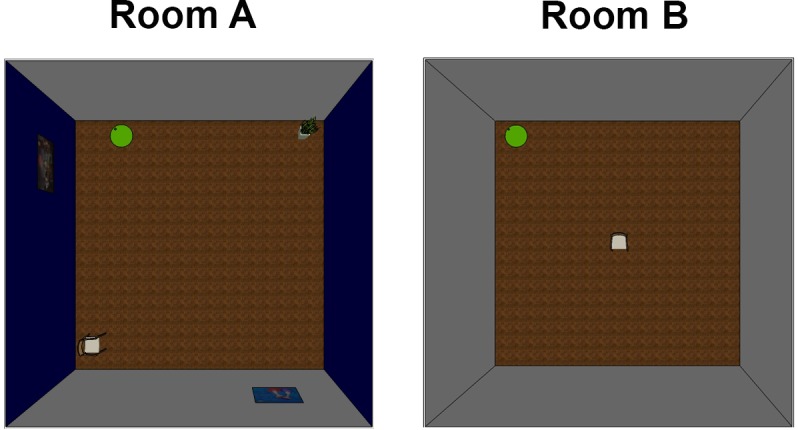

After the training session, subjects performed the actual series of wayfinding experiments, which were modeled after the Morris water maze classically used to assess spatial cognition in rat models.15 Two virtual rooms were built. The two rooms were geometrically identical (20 m × 20 m × 3 m) but contained different sets of visual cues designed to assess the impact of visual cues on navigation and spatial cognition in subjects with visual loss. Room A contained multiple peripheral visual cues: two large symmetric colored wall cues, two asymmetric wall cues (paintings), and two asymmetric floor cues (a chair and a plant, both located peripherally) (Fig. 2, left). Because of its multiple visual cues, this room promoted the use of an allocentric reference frame and cognitive mapping to assist wayfinding (see Discussion). The second room (room B) contained only one central cue, a chair near the center of the room (Fig. 2, right). The chair was placed at a fixed angle, providing directional information. The presence of a single cue promoted egocentric reference frame-based navigation, decreasing the need for a cognitive map for successful wayfinding.

Subjects were first instructed to drive to a clearly indicated location in each of the two virtual rooms (visible target), marked by a green cone on the floor. Subjects were instructed to inspect the room and pay attention to the surroundings while driving to the target. In subsequent trials, subjects had to revisit the initial location, but the location was no longer visibly marked (hidden target). The target reappeared only when the subject's path intersected the target area (the base of the cone), which terminated the trial. Five hidden-target trials were obtained for each room, with subjects placed at a different starting position and heading in each. An additional trial with the target visible occurred after the first hidden-target trial in each room, allowing subjects who had not noted the target location during the first exposure to do so. The time to complete the task was recorded for each trial and used as the outcome metric in the study. The length of the path taken from origin to destination was also recorded.
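The two outcome metrics of a hidden-target trial, time to reach the target area and path length, can be derived from a logged trajectory. The sketch below is illustrative only. The sampling format `(time_s, x_m, y_m)`, the function `score_trial`, and the target radius are hypothetical because the size of the cone base is not reported. The 120-second limit is taken from the Statistical Analysis section.

```python
import math
from typing import Sequence, Tuple

Sample = Tuple[float, float, float]  # hypothetical log format: (time_s, x_m, y_m)

def score_trial(samples: Sequence[Sample], target_xy: Tuple[float, float],
                target_radius_m: float, max_time_s: float = 120.0):
    """Return (time_to_target_s, path_length_m, censored) for one hidden-target trial.

    The trial ends at the first sample whose position lies inside the target
    area (a disk of radius target_radius_m), or when max_time_s elapses.
    """
    tx, ty = target_xy
    t0, x_prev, y_prev = samples[0]
    path_m = 0.0
    for t, x, y in samples:
        elapsed = t - t0
        if elapsed > max_time_s:
            # Time ceiling reached before the target was found: a censored trial.
            return max_time_s, path_m, True
        path_m += math.hypot(x - x_prev, y - y_prev)  # add this step's length
        x_prev, y_prev = x, y
        if math.hypot(x - tx, y - ty) <= target_radius_m:
            return elapsed, path_m, False
    # The recording ended without the path ever intersecting the target area.
    return min(samples[-1][0] - t0, max_time_s), path_m, True

# Hypothetical trajectory: driving along the x-axis at 1 m/s.
track = [(0.0, 0.0, 0.0), (1.0, 1.0, 0.0), (2.0, 2.0, 0.0), (3.0, 3.0, 0.0)]
print(score_trial(track, target_xy=(3.0, 0.0), target_radius_m=0.5))  # (3.0, 3.0, False)
```

The `censored` flag marks trials that hit the ceiling. Those are the observations the Tobit models treat as right-censored.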

Figure 2.

The two rooms used in the experiment. The rooms were geometrically identical but contained a different set of visual cues designed to assess the impact of visual cues on navigation and spatial cognition. Room A contained multiple peripheral visual cues, with two large, symmetric colored wall cues, two asymmetric wall cues (paintings), and two asymmetric floor cues (chair and plant, both located peripherally). The second room (room B) contained only one central cue, a chair near the center of the room.

Subjective Assessment of Spatial and Navigational Abilities

Each participant's subjective assessment of his or her spatial and navigational abilities was evaluated with the Santa Barbara Sense of Direction Scale (SBSOD) questionnaire.16 This self-report questionnaire asks about everyday tasks such as finding one's way in the environment and learning the layout of a new environment. Participants answered 15 questions using a 7-point Likert scale. The questionnaire has previously been shown to be internally consistent and to have good test-retest reliability.16 Rasch analysis of the SBSOD questionnaires was performed to obtain a final Rasch-calibrated score of subjective navigational ability for each participant. Correlations of SBSOD scores with performance on a spatial task have been regarded as evidence of the ecologic validity of the task.17
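The specific Rasch model and software used are not reported. As an assumption, the sketch below uses the Andrich rating-scale model, a common choice for Likert items. It shows how a person measure (in logits) follows from a raw questionnaire score once item difficulties and category thresholds have been calibrated. All parameter values are hypothetical. The sketch also assumes responses were recoded to 0 to 6, with negatively worded items reversed.

```python
import numpy as np
from scipy import optimize

def rsm_category_probs(theta, delta, taus):
    """Andrich rating-scale model: P(X = k | theta) for categories k = 0..len(taus).

    theta: person measure (logits); delta: item difficulty; taus: shared thresholds.
    """
    taus = np.asarray(taus, dtype=float)
    # Category k has logit sum_{j<=k} (theta - delta - tau_j); category 0 has an empty sum.
    logits = np.concatenate(([0.0], np.cumsum(theta - delta - taus)))
    logits -= logits.max()  # guard against overflow in exp
    p = np.exp(logits)
    return p / p.sum()

def expected_score(theta, delta, taus):
    """Expected category score on one item for a person at theta."""
    p = rsm_category_probs(theta, delta, taus)
    return float(np.dot(np.arange(len(p)), p))

def person_measure(raw_score, deltas, taus, lo=-10.0, hi=10.0):
    """Maximum-likelihood person measure with item parameters held fixed.

    Under the Rasch model the ML estimate solves: observed raw score = expected raw score.
    """
    max_score = len(deltas) * len(taus)
    if not 0 < raw_score < max_score:
        raise ValueError("extreme scores have no finite ML estimate")

    def gap(th):
        return sum(expected_score(th, d, taus) for d in deltas) - raw_score

    return optimize.brentq(gap, lo, hi)

# Hypothetical calibration: 15 items of equal difficulty, 7 categories (6 thresholds).
taus = [-1.5, -1.0, -0.5, 0.5, 1.0, 1.5]
deltas = [0.0] * 15
print(person_measure(45, deltas, taus))  # close to 0.0: midpoint score on a symmetric scale
```

Unlike a summed Likert score, the resulting measure is on an interval (logit) scale, so it can enter a regression model directly.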

Statistical Analysis

Descriptive statistics included the mean and standard deviation of the variables. The normality assumption was assessed by inspection of histograms and with the Shapiro-Wilk test. Fisher's exact test was used for group comparisons of categorical variables. Student's t-test was used for group comparisons of normally distributed variables, and the Wilcoxon rank-sum (Mann-Whitney) test was used for group comparisons of non-normally distributed continuous variables.
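The test-selection rule above can be expressed as a short SciPy sketch. The analyses themselves were run in Stata, so this is only a Python analogue. The function names are hypothetical. The histogram inspection described in the text is a visual judgment and is not automated here; only the Shapiro-Wilk branch is.

```python
import numpy as np
from scipy import stats

def compare_groups(a, b, alpha=0.05):
    """Compare a continuous variable between two groups.

    Uses Student's t-test if both groups pass Shapiro-Wilk at level alpha,
    otherwise the Wilcoxon rank-sum (Mann-Whitney U) test.
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    _, p_a = stats.shapiro(a)
    _, p_b = stats.shapiro(b)
    if p_a > alpha and p_b > alpha:
        _, p = stats.ttest_ind(a, b)  # equal_var=True by default: Student's t-test
        return "Student's t-test", float(p)
    _, p = stats.mannwhitneyu(a, b, alternative="two-sided")
    return "Wilcoxon rank-sum (Mann-Whitney)", float(p)

def compare_categorical(table_2x2):
    """Fisher's exact test on a 2 x 2 contingency table (e.g., sex by group)."""
    _, p = stats.fisher_exact(table_2x2)
    return float(p)
```

Tuple unpacking of the SciPy result objects is used on purpose: it works both with older releases that return plain tuples and with newer ones that return result objects.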

Times to complete the wayfinding tasks were right-skewed (skewed toward longer completion times); therefore, a natural logarithmic transformation was applied to reduce skewness. Tobit models were used to investigate the relationship between times to complete the tasks and variables such as diagnosis (glaucoma versus control) and severity of visual field loss. Tobit models were chosen because the time to complete the task was limited to a maximum of 120 seconds (ceiling effect). Models were adjusted for potential confounding by age, sex, and race. Generalized estimating equations were used to account for the correlation between multiple observations (i.e., from multiple trials) from each subject, with a robust sandwich variance estimator. Statistical analyses were performed with log-transformed variables; however, to facilitate interpretation, descriptive results are reported in back-transformed original units. For regression models that used log time as the dependent variable, we also report results as the percent change in time to complete the task for a one-unit change in the independent variable.
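A Tobit model handles the 120-second ceiling by treating trials that hit it as right-censored. Such a trial contributes the probability that the latent (uncensored) log time exceeds the ceiling, rather than a density value. The sketch below fits that likelihood by maximum likelihood with SciPy. It is a simplified illustration, not the Stata models used in the study: it omits the GEE adjustment and the clustered sandwich standard errors for repeated trials, and the simulated data in the usage example are hypothetical.

```python
import numpy as np
from scipy import optimize, stats

def tobit_negloglik(params, X, y, upper):
    """Negative log-likelihood of a Tobit model right-censored at `upper`.

    params = (beta_0, ..., beta_k, log_sigma); X already includes an intercept column.
    """
    beta, sigma = params[:-1], np.exp(params[-1])
    xb = X @ beta
    cens = y >= upper  # trials that hit the time ceiling
    # Uncensored log times contribute the normal log-density of the residual.
    ll = stats.norm.logpdf(y[~cens], loc=xb[~cens], scale=sigma).sum()
    # Censored trials contribute log P(latent log time >= ceiling).
    ll += stats.norm.logsf(upper, loc=xb[cens], scale=sigma).sum()
    return -ll

def fit_tobit(x, y, upper):
    """Fit y = b0 + x @ b + e, e ~ N(0, sigma^2), with right-censoring at `upper`."""
    X = np.column_stack([np.ones(len(y)), x])
    start = np.append(np.linalg.lstsq(X, y, rcond=None)[0], np.log(y.std()))
    res = optimize.minimize(tobit_negloglik, start, args=(X, y, upper), method="BFGS")
    return res.x[:-1], float(np.exp(res.x[-1]))

# Hypothetical simulated log times with a 120-s ceiling.
rng = np.random.default_rng(1)
x = rng.normal(size=2000)
upper = np.log(120.0)
y = np.minimum(4.5 + 0.3 * x + rng.normal(scale=0.4, size=2000), upper)
beta, sigma = fit_tobit(x, y, upper)
```

Running ordinary least squares on the clipped values would bias the slope toward zero. The censored-likelihood term corrects for this, and in this simulation the estimates come out close to the true values of 4.5, 0.3, and 0.4.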

All statistical analyses were performed using commercially available software (Stata, version 14; StataCorp LP, College Station, TX, USA). The α level (type I error) was set at 0.05.

Results

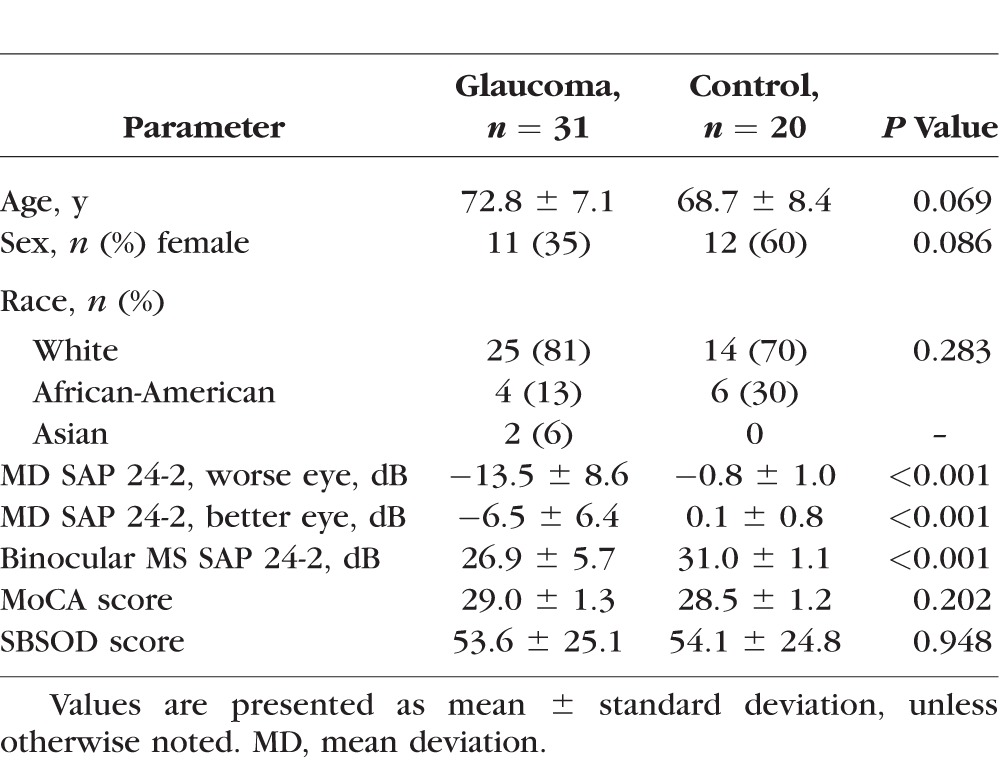

The study included 31 glaucoma patients and 20 normal control subjects. Table 1 shows demographic and clinical characteristics of the included subjects. Mean age was similar between the glaucoma and control groups (72.8 ± 7.1 vs. 68.7 ± 8.4 years; P = 0.069). There were also no statistically significant differences in sex or race between groups. As expected, glaucoma subjects had significantly worse visual field sensitivity than controls, with average binocular MS of 26.9 ± 5.7 vs. 31.0 ± 1.1 dB, respectively (P < 0.001).

Table 1.

Demographic and Clinical Characteristics of Subjects Included in the Study

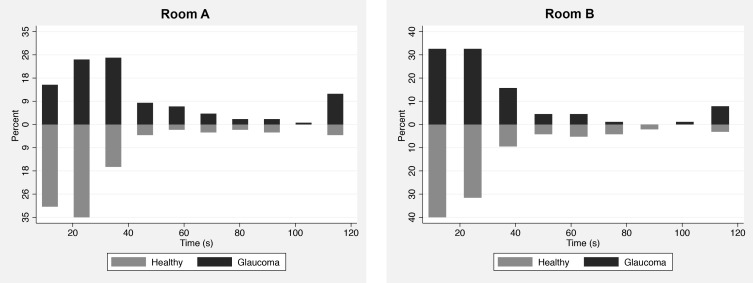

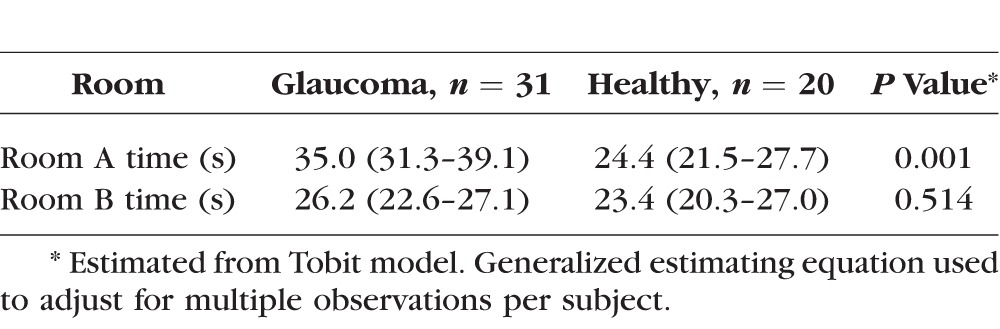

Table 2 shows the average and 95% confidence interval (CI) of times to complete the wayfinding task for glaucoma and healthy subjects according to the type of room. Significant differences were seen between glaucoma and healthy subjects for hidden target tasks performed in room A (room with multiple visual cues), but not in room B (room with central chair only). For room A, glaucoma patients spent an average of 35.0 seconds (95% CI: 31.3–39.1) to perform the wayfinding task, whereas healthy subjects spent an average of 24.4 seconds (95% CI: 21.5–27.7) (P = 0.001; Tobit model). For room B, no statistically significant difference was seen between glaucoma and healthy subjects in average time to complete the task [26.2 seconds (95% CI: 22.6–27.1) versus 23.4 seconds (95% CI: 20.3–27.0), respectively; P = 0.514; Tobit model]. Figure 3 shows histograms of raw times to complete the wayfinding tasks for glaucoma and healthy subjects in each room.
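Because the analyses were run on log seconds, the back-transformed means are geometric means, and their intervals are asymmetric in seconds but symmetric on the log scale. The sketch below assumes Wald-type intervals on the log scale. It shows the back-transformation, how to recover the log-scale standard error from a reported interval, and how to check that a point estimate sits at the geometric midpoint of its interval. The function names are hypothetical.

```python
import math

Z_95 = 1.959963984540054  # standard-normal quantile for a two-sided 95% interval

def back_transform(mean_log, se_log, z=Z_95):
    """Convert a log-seconds estimate and its Wald CI to seconds (geometric-mean scale)."""
    return (math.exp(mean_log),
            math.exp(mean_log - z * se_log),
            math.exp(mean_log + z * se_log))

def se_from_ci(lower, upper, z=Z_95):
    """Recover the log-scale standard error implied by a back-transformed 95% CI."""
    return (math.log(upper) - math.log(lower)) / (2 * z)

# Room A, glaucoma group (values from the text): 35.0 s, 95% CI 31.3-39.1 s.
se = se_from_ci(31.3, 39.1)
center = math.exp((math.log(31.3) + math.log(39.1)) / 2)  # geometric midpoint of the CI
print(round(center, 1))  # 35.0
```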

Table 2.

Mean and 95% Confidence Interval (CI) of Times to Complete the Wayfinding Tasks With Hidden Targets for Glaucoma and Healthy Subjects According to the Type of Room

Figure 3.

Histograms showing the distribution of times to complete the wayfinding tasks in each room for glaucomatous and healthy subjects.

For glaucoma patients, the average time taken to complete the hidden target task in room A was significantly longer than for room B (35.0 vs. 26.2 seconds, respectively; P = 0.008; Tobit model). For normal subjects, there was no statistically significant difference in time to complete the task with hidden target between the two rooms (24.4 vs. 23.4 seconds; P = 0.721; Tobit model).

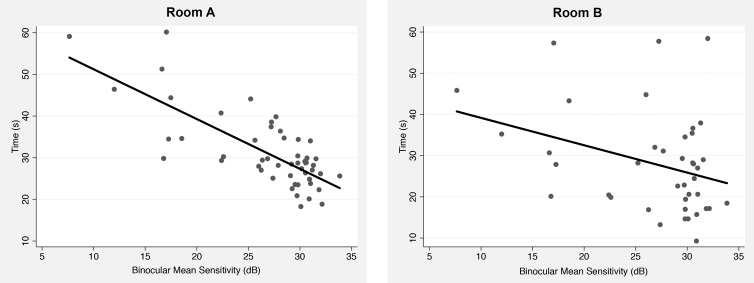

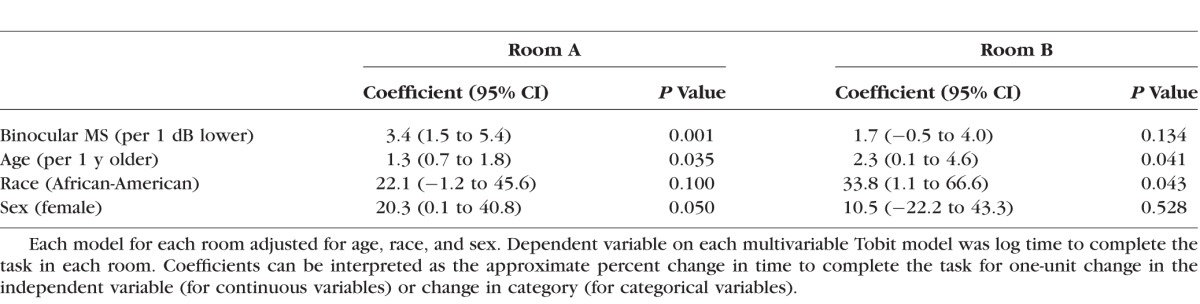

We also investigated the relationship between time to complete the task and severity of visual field loss in models adjusting for age, sex, and race. Table 3 shows results of Tobit multivariable models investigating the relationship between time to complete the hidden target task and binocular MS for each of the rooms used in the VEHuNT. A significant relationship between time to complete the task and visual field loss was seen for room A but not for room B. For room A, each 1-dB worse binocular MS was associated with a 3.4% (95% CI: 1.5%–5.4%; P = 0.001) increase in time to complete the task. Older age and female sex were also significantly associated with longer times to complete the wayfinding task. Figure 4 illustrates the relationship between predicted times to complete the hidden target task in each room and binocular MS, adjusting for age, sex, and race. The relationship was significantly stronger for room A, with R2 = 57% (95% CI: 44%–70%), than for room B, with R2 = 11% (95% CI: 0%–23%).
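With log time as the dependent variable, a coefficient β translates into a percent change of 100·(e^β − 1) per unit of the predictor, and effects compound multiplicatively over several units. The sketch below takes the reported 3.4% per 1-dB worse binocular MS, recovers the implied log-scale coefficient, and extrapolates to a larger deficit. It assumes the log-linear relationship holds over that range. `percent_change` is a hypothetical helper, and `delta` counts dB of worsening, so a coefficient on MS itself would carry the opposite sign.

```python
import math

def percent_change(beta_log, delta=1.0):
    """Percent change in time for a `delta`-unit change in a predictor of log time."""
    return 100.0 * (math.exp(beta_log * delta) - 1.0)

beta = math.log(1.034)  # log-scale coefficient implied by a 3.4% increase per 1-dB worse MS
print(round(percent_change(beta, 1), 1))   # 3.4
print(round(percent_change(beta, 10), 1))  # 39.7
```

A 10-dB worse binocular MS therefore corresponds to roughly a 40% longer completion time, not 10 × 3.4% = 34%.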

Table 3.

Results of Tobit Multivariable Models Investigating the Association Between Time to Complete the Wayfinding Task in Each Room and Visual Field Loss Assessed by Integrated Binocular MS

Figure 4.

Scatterplots with fitted regression line showing the relationship between times to complete the wayfinding task for each room and integrated binocular MS.

In the analysis of path length for the hidden target tasks, no significant differences were seen between glaucoma and control subjects in either room (P = 0.930 for room A and P = 0.800 for room B).

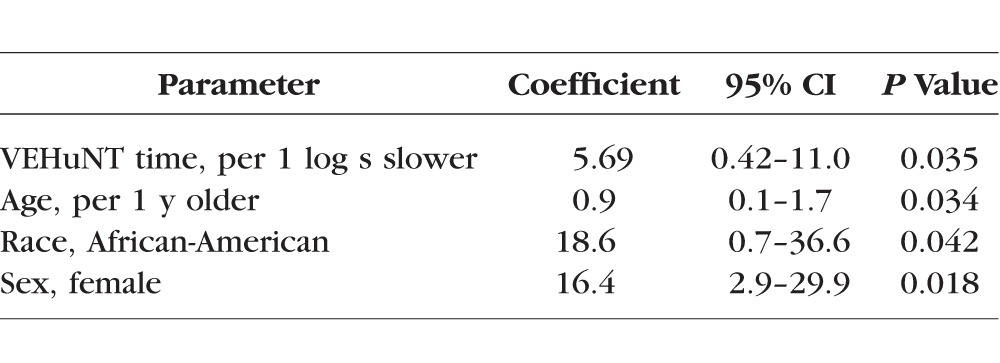

A statistically significant relationship was observed between Rasch scores from the SBSOD questionnaire and time to complete the hidden target tasks in the VEHuNT (P = 0.035; Table 4), even after adjustment for age, race, and sex.

Table 4.

Multivariable Model Evaluating Factors Associated With Results on the Santa Barbara Sense of Direction Scale (SBSOD) Questionnaire

Discussion

In the present study, glaucoma patients were found to perform significantly worse than healthy subjects in wayfinding tasks that promoted allocentric-based spatial reference frames to successfully complete the task, suggesting that in the presence of significant visual field loss, glaucoma patients may have difficulty building accurate representations of the spatial structure of an environment. Our results may have significant implications for a better understanding of how vision loss in glaucoma may affect the ability to perform many everyday tasks that are related to wayfinding, such as driving in a city or walking through a building.

Significant differences were seen in the ability of glaucoma patients to perform wayfinding tasks according to the type of virtual environment used. Two different rooms were used, varying only in the complexity of the visual scene. Times to complete the wayfinding tasks were on average over 40% longer for glaucoma compared to healthy subjects for room A. However, no significant difference between the groups was seen for room B. Importantly, when only glaucoma subjects were considered, times to complete the task in room A were significantly longer than in room B. This indicates that the longer times for glaucoma patients were not only the result of worse general performance of this group in wayfinding tasks but also depended on the type of visual scene presented. In agreement with this result, the relationship between time to complete the task and severity of visual field loss was much stronger for room A (R2 = 57%) than room B (R2 = 11%).
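The "over 40%" figure follows directly from the room A means reported in Table 2. The same arithmetic applied to room B gives a much smaller gap, which was not statistically significant. Both ratios are computed from back-transformed (geometric) means:

$$\frac{35.0 - 24.4}{24.4} \approx 0.434 \quad (\text{room A}), \qquad \frac{26.2 - 23.4}{23.4} \approx 0.120 \quad (\text{room B})$$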

Spatial information is stored in memory according to two general frames of reference: egocentric and allocentric.18,19 Egocentric frames of reference use the organism as the center of the organization of surrounding space. By contrast, allocentric frames of reference are centered on external objects or on the environment itself. By having a central chair placed in a fixed orientation in relation to the target, room B promoted an egocentric-based solution to the wayfinding problem. In contrast, room A promoted an allocentric-based strategy to solve the wayfinding problem. In order to successfully complete the task with the hidden target in room A, the subject had to initially build an accurate mental representation of the spatial structure of the environment, or cognitive map, containing the location of the target in relation to the several landmarks or visual cues present in that room.20 Subsequently, the subject would have to use the cognitive map in order to provide guidance on how to navigate toward the hidden target. Cognitive maps code the spatial relationship between things in the world and can be used to recognize places, compute distances and directions, and help a subject find the way from where the subject is to where the subject wants to be in complex environments.1
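The distinction between the two frames can be made concrete with a little geometry. A cognitive map stores the target in room (allocentric) coordinates. To steer toward it, the navigator must convert that location into an egocentric distance and bearing from the current position and heading, and the result differs on every trial because each hidden-target trial starts from a new position and heading. The sketch below is a simplified illustration of that conversion only. It is not part of the VEHuNT software, and the function name is hypothetical.

```python
import math

def allocentric_to_egocentric(agent_xy, heading_rad, target_xy):
    """Express a room-frame (allocentric) target location in the agent's own frame.

    Returns (distance_m, bearing_rad), with bearing measured counterclockwise
    from the agent's heading (0 = straight ahead, positive = to the left).
    """
    dx = target_xy[0] - agent_xy[0]
    dy = target_xy[1] - agent_xy[1]
    distance = math.hypot(dx, dy)
    bearing = math.atan2(dy, dx) - heading_rad
    # Wrap to (-pi, pi] so that left and right turns are unambiguous.
    bearing = math.atan2(math.sin(bearing), math.cos(bearing))
    return distance, bearing

# The same remembered target seen from two hypothetical starting poses.
print(allocentric_to_egocentric((0.0, 0.0), 0.0, (0.0, 5.0)))          # ahead-left, 90 degrees
print(allocentric_to_egocentric((0.0, 0.0), math.pi / 2, (0.0, 5.0)))  # straight ahead
```

In room B, by contrast, a single oriented chair allows a simpler strategy: the subject can hold one fixed egocentric relation to the chair, such as "just past its left side", without recomputing a map-to-self transformation.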

There is evidence that loss of peripheral vision may prevent building of accurate cognitive maps.21,22 The peripheral vision helps provide information about the global structure of the environment within each fixation such that when an object appears in the periphery, eye and head movements can bring it to the fovea for further processing and accurate localization.23 Visual information from successive fixations is then bound together to create cohesive representations of the environment in a cognitive map. Loss of peripheral vision may impede the ability to perform effective visual searches in the environment and attend to navigationally relevant objects. In addition, in the presence of significant peripheral vision loss, the visual information acquired from successive fixations may not be coherent enough to allow an accurate representation of the location of objects in space relative to each other and the global environment structure.

In our study, the loss of peripheral field in glaucoma patients may have made it difficult for them to build an accurate cognitive map of the virtual environment, which later translated into difficulties in performing the wayfinding tasks with hidden targets in room A. The longer times taken by glaucoma patients in room A may reflect an increased number of eye fixations needed to capture spatial relations and build the cognitive map, as a result of ineffective visual searches. It is also possible that, because of their restricted field of view, some glaucoma patients never had the opportunity to code the target relative to a feature in the scene while the target was visible. However, this seems unlikely, as subjects were free to move their heads and eyes as they navigated through the virtual environment during the initial task with a visible target. Future studies employing eye-tracking methodology could help clarify these issues and explore the compensatory behaviors associated with visual field loss. Importantly, for healthy subjects, no differences were found in the times to complete the tasks between the two rooms. This suggests that the delay in completing the task in the more complex room for glaucoma patients was due to the loss of peripheral vision rather than to some other inherent characteristic that made the task longer in the more complex room.

To the best of our knowledge, our study is the first to assess wayfinding performance in glaucoma patients in a VR environment. The use of VR allows for highly controllable and repeatable experiments. Wayfinding and other metrics derived from VR platform experiments could potentially be used as endpoints for assessing functional vision in clinical trials of emerging therapies, and the VR environment described in our study could potentially be used in a variety of eye conditions other than glaucoma. As a more ecologically valid, objective, and reproducible assessment, VR-based platforms could overcome limitations of laboratory-based physical mobility courses.10

The use of VR for studying navigation tasks has limitations. It is difficult to compare the deficits seen in the VR world directly with those seen in real-world wayfinding tasks, since good tests of real-world wayfinding are not readily available. However, several previous studies have shown the validity of VR for assessing wayfinding in a variety of conditions, such as traumatic brain injury and stroke.10,11,24–26 Shi et al.27 also showed the benefits of using VR to study wayfinding in emergency evacuations, such as from buildings on fire. The significant association shown in our study between wayfinding performance in the VEHuNT and the subjective assessment of navigational ability by the SBSOD questionnaire is an indication of its clinical validity. Further, we were able to replicate well-known results from conventional wayfinding studies, such as a significant effect of sex on allocentric but not egocentric-based navigation,28–30 as well as a significant effect of age on wayfinding performance.31,32

In conclusion, glaucoma patients performed significantly worse than healthy subjects on allocentric-based wayfinding tasks conducted in a VR environment. Our findings suggest that glaucomatous visual field loss may affect the construction of spatial cognitive maps relevant to successful wayfinding, which may translate into significant difficulties in many daily tasks that are wayfinding dependent. VR environments may represent a useful approach for assessing functional vision endpoints for clinical trials of emerging therapies in ophthalmology.

Acknowledgments

Supported by National Institutes of Health/National Eye Institute Grants EY025056 (FAM) and EY021818 (FAM).

Disclosure: F.B. Daga, None; E. Macagno, None; C. Stevenson, None; A. Elhosseiny, None; A. Diniz-Filho, None; E.R. Boer, None; J. Schulze, None; F.A. Medeiros, None

References

1. Golledge RG. Wayfinding Behavior: Cognitive Mapping and Other Spatial Processes. Baltimore, MD: The Johns Hopkins University Press; 1999.

2. Pelli DG. The visual requirements of mobility. In: Woo GC, ed. Low Vision. New York: Springer; 1987.

3. Hassan SE, Hicks JC, Lei H, Turano KA. What is the minimum field of view required for efficient navigation? Vision Res. 2007; 47: 2115–2123.

4. Lovie-Kitchin J, Mainstone J, Robinson J, Brown B. What areas of the visual field are important for mobility in low vision patients? Clin Vision Sci. 1990; 5: 249–263.

5. Turano KA, Rubin GS, Quigley HA. Mobility performance in glaucoma. Invest Ophthalmol Vis Sci. 1999; 40: 2803–2809.

6. Diniz-Filho A, Boer ER, Gracitelli CP, et al. Evaluation of postural control in patients with glaucoma using a virtual reality environment. Ophthalmology. 2015; 122: 1131–1138.

7. Abe RY, Diniz-Filho A, Costa VP, et al. The impact of location of progressive visual field loss on longitudinal changes in quality of life of patients with glaucoma. Ophthalmology. 2016; 123: 552–557.

8. Medeiros FA, Gracitelli CP, Boer ER, et al. Longitudinal changes in quality of life and rates of progressive visual field loss in glaucoma patients. Ophthalmology. 2015; 122: 293–301.

9. Ramulu P. Glaucoma and disability: which tasks are affected, and at what stage of disease? Curr Opin Ophthalmol. 2009; 20: 92–98.

10. van der Ham IJM, Faber AME, Venselaar M, et al. Ecological validity of virtual environments to assess human navigation ability. Front Psychol. 2015; 6: 637.

11. Skelton RW, Ross SP, Nerad L, Livingstone SA. Human spatial navigation deficits after traumatic brain injury shown in the arena maze, a virtual Morris water maze. Brain Inj. 2006; 20: 189–203.

12. Nelson-Quigg JM, Cello K, Johnson CA. Predicting binocular visual field sensitivity from monocular visual field results. Invest Ophthalmol Vis Sci. 2000; 41: 2212–2221.

13. Nasreddine ZS, Phillips NA, Bedirian V, et al. The Montreal Cognitive Assessment, MoCA: a brief screening tool for mild cognitive impairment. J Am Geriatr Soc. 2005; 53: 695–699.

14. Cruz-Neira C, Sandin DJ, DeFanti TA, et al. The CAVE: audio visual experience automatic virtual environment. Commun ACM. 1992; 35: 64–72.

15. Vorhees CV, Williams MT. Morris water maze: procedures for assessing spatial and related forms of learning and memory. Nat Protoc. 2006; 1: 848–858.

16. Hegarty M. Development of a self-report measure of environmental spatial ability. Intelligence. 2002; 30: 425–447.

17. Palermo L, Iaria G, Guariglia C. Mental imagery skills and topographical orientation in humans: a correlation study. Behav Brain Res. 2008; 192: 248–253.

18. Nadel L, Hardt O. The spatial brain. Neuropsychology. 2004; 18: 473–476.

19. Zaehle T, Jordan K, Wustenberg T, et al. The neural basis of the egocentric and allocentric spatial frame of reference. Brain Res. 2007; 1137: 92–103.

20. Tolman EC. Cognitive maps in rats and men. Psychol Rev. 1948; 55: 189–208.

21. Fortenbaugh FC, Hicks JC, Turano KA. The effect of peripheral visual field loss on representations of space: evidence for distortion and adaptation. Invest Ophthalmol Vis Sci. 2008; 49: 2765–2772.

22. Fortenbaugh FC, Hicks JC, Hao L, Turano KA. Losing sight of the bigger picture: peripheral field loss compresses representations of space. Vision Res. 2007; 47: 2506–2520.

23. Peterson MS, Kramer AF, Irwin DE. Covert shifts of attention precede involuntary eye movements. Percept Psychophys. 2004; 66: 398–405.

24. van Veen HAHC, Distler HK, Braun SJ, Bulthoff HH. Navigating through a virtual city: using virtual reality technology to study human action and perception. Future Gener Comput Syst. 1998; 14: 231–242.

25. Livingstone SA, Skelton RW. Virtual environment navigation tasks and the assessment of cognitive deficits in individuals with brain injury. Behav Brain Res. 2007; 185: 21–31.

26. Carelli L, Rusconi ML, Scarabelli C, et al. The transfer from survey (map-like) to route representations into Virtual Reality Mazes: effect of age and cerebral lesion. J Neuroengineering Rehabil. 2011; 8: 6.

27. Shi NJ, Lin CY, Yang CH. A virtual-reality-based feasibility study of evacuation time compared to traditional calculation method. Fire Saf J. 2000; 34: 377–391.

28. Li R. Why women see differently from the way men see? A review of sex differences in cognition and sports. J Sport Health Sci. 2014; 3: 155–162.

29. Galea LAM, Kimura D. Sex-differences in route-learning. Pers Individ Dif. 1993; 14: 53–65.

30. Malinowski JC, Gillespie WT. Individual differences in performance on a large-scale, real-world wayfinding task. J Environ Psychol. 2001; 21: 73–82.

31. Wiener JM, de Condappa O, Harris MA, Wolbers T. Maladaptive bias for extrahippocampal navigation strategies in aging humans. J Neurosci. 2013; 33: 6012–6017.

32. Moffat SD, Resnick SM. Effects of age on virtual environment place navigation and allocentric cognitive mapping. Behav Neurosci. 2002; 116: 851–859.