Keywords: smooth pursuit, manual interception, prediction, perception-action, visual context

Abstract

In our natural environment, we interact with moving objects that are surrounded by richly textured, dynamic visual contexts. Yet most laboratory studies on vision and movement show visual objects in front of uniform gray backgrounds. Context effects on eye movements have been widely studied, but it is less well known how visual contexts affect hand movements. Here we ask whether eye and hand movements integrate motion signals from target and context similarly or differently, and whether context effects on eye and hand change over time. We developed a track-intercept task requiring participants to track the initial launch of a moving object (“ball”) with smooth pursuit eye movements. The ball disappeared after a brief presentation, and participants had to intercept it in a designated “hit zone.” In two experiments (n = 18 human observers each), the ball was shown in front of a uniform or a textured background that either was stationary or moved along with the target. Eye and hand movement latencies and speeds were similarly affected by the visual context, but eye and hand interception (eye position at time of interception, and hand interception timing error) did not differ significantly between context conditions. Eye and hand interception timing errors were strongly correlated on a trial-by-trial basis across all context conditions, highlighting the close relation between these responses in manual interception tasks. Our results indicate that visual contexts similarly affect eye and hand movements but that these effects may be short-lasting, affecting movement trajectories more than movement end points.

NEW & NOTEWORTHY In a novel track-intercept paradigm, human observers tracked a briefly shown object moving across a textured, dynamic context and intercepted it with their finger after it had disappeared. Context motion significantly affected eye and hand movement latency and speed, but not interception accuracy; eye and hand position at interception were correlated on a trial-by-trial basis. Visual context effects may be short-lasting, affecting movement trajectories more than movement end points.

During natural behaviors such as ball sports, observers instinctively track the ball with their eyes to hit or catch it optimally (Hayhoe and Ballard 2005; Land and McLeod 2000). Interceptive movements are guided and continuously updated by current visual information about the ball’s position, velocity, and spin available during the ongoing movement (Zhao and Warren 2015). In addition, interceptive hand movements must be initiated in anticipation of target motion to overcome neuromuscular delays, and thus require prediction (Mrotek and Soechting 2007; Wolpert and Ghahramani 2000). Keeping the eye on a moving target by engaging in smooth pursuit eye movements enhances the ability to predict a target’s trajectory in perception tasks (Bennett et al. 2010; Spering et al. 2011). Similarly, it has been assumed that smooth pursuit also enhances motion prediction in manual tasks (Brenner and Smeets 2011; Delle Monache et al. 2015; Mrotek 2013; Soechting and Flanders 2008). Indeed, Leclercq et al. (2012, 2013) identified eye velocity as the key extraretinal signal taken into account when planning a manual tracking response.

We recently provided further evidence for this assumption by showing that better smooth pursuit coincided with more accurate hand movements in a task in which human observers tracked and predictively intercepted the trajectory of a simulated baseball (Fooken et al. 2016). In this task, observers viewed a small object (the “ball”) moving along a curved trajectory toward a designated “hit zone.” The ball always disappeared after a brief presentation, before reaching the hit zone. Observers were instructed to continue to track the ball and to intercept it by pointing at it rapidly with their index finger at its assumed location anywhere within the hit zone. Interception performance was best predicted by observers’ eye position error across the entire ball trajectory, i.e., the closer the eyes to the actual position of the ball, the more accurate the interception. These findings confirm the close relation between smooth pursuit and motion prediction for interceptive hand movements.

In most laboratory studies on eye and hand movements, participants view, track, or intercept small objects in front of uniform, nontextured backgrounds. Yet natural environments are richly structured and dynamic. The present study addresses the question whether and how dynamic visual contexts affect eye and hand movements. It extends previous results by including a dynamic visual context to investigate context effects on eye and hand movements when intercepting a disappearing object. We first present evidence from the literature indicating that smooth pursuit eye movements are generally affected by visual contexts and that they integrate motion signals from target and context following a vector-averaging model. However, studies investigating context effects on hand movements have produced more variable results. The main research question to be answered here is whether target and context motion signals are integrated similarly (both following vector averaging) or differently for eye and hand movements, and whether context effects change over time.

Context effects on eye movements and motion perception.

Previous studies have already established that smooth pursuit eye movements are strongly affected by visual contexts: pursuit of a small target moving across a stationary textured context is slower, and pursuit across a dynamic context is faster compared with pursuit across uniform backgrounds (Collewijn and Tamminga 1984; Lindner et al. 2001; Masson et al. 1995; Niemann and Hoffmann 1997; for a review, see Spering and Gegenfurtner 2008). These findings suggest that the smooth pursuit system integrates target and context motion following a vector-averaging algorithm (Spering and Gegenfurtner 2008) similar to how it integrates motion signals from two sources in general (Groh et al. 1997; Lisberger and Ferrera 1997). Despite close links between smooth pursuit and visual motion perception (Schütz et al. 2011; Spering and Montagnini 2011), there is evidence for differential context effects on pursuit and perception. When human observers track a small moving object across a dynamic textured background, pursuit follows the vector average, i.e., when context velocity increases, the eyes move faster (Spering and Gegenfurtner 2007). However, motion perception can follow relative motion (motion contrast), i.e., when context velocity increases, the object may appear to move slower (Brenner 1991; Smeets and Brenner 1995a; Spering and Gegenfurtner 2007; Zivotofsky 2005). Relative motion signals seem to influence target velocity judgments the most when the context moves in the direction opposite to the target (Brenner and van den Berg 1994); they also affect the direction of saccades (Zivotofsky et al. 1998) and the initial phase of the optokinetic nystagmus (Waespe and Schwarz 1987).

Context effects on hand movements.

Effects of relative motion have also been observed for hand movements. A moving visible target was intercepted with a lower velocity when it was presented in front of a background moving in the same direction as the target vs. in front of a background moving in the opposite direction (Smeets and Brenner 1995a). A background moving orthogonally to the main motion of a target triggered a deviation of the hand trajectory away from the background’s motion direction (Brouwer et al. 2003; Smeets and Brenner 1995b). Similarly, pointing errors were shifted in the direction of relative motion when pointing at an anticipated target location in the presence of a moving background (Soechting et al. 2001). Interestingly, interception position was not affected by background motion direction when targets were visible (Brouwer et al. 2003; Smeets and Brenner 1995a, 1995b), consistent with observations that perceived target motion, but not perceived target position, is influenced by motion of the background. Even when no position information is available due to occlusion of the target before interception, Brouwer et al. (2002) found that participants used a default (average) target speed rather than differently perceived speeds (due to background motion) of the target to estimate interception position.

However, there is also evidence supporting a vector-averaging model. Hand movement trajectories toward stationary targets were initially shifted in the direction of context motion (Brenner and Smeets 1997, 2015; Mohrmann-Lendla and Fleischer 1991; Saijo et al. 2005). Importantly, this shift persists (i.e., is not compensated for) if continuous foveal information about the actual target position is not available, which in turn shifts interception errors in direction of background motion (see also Whitney et al. 2003). Similarly, Whitney and Goodale (2005) report overshooting a remembered location more or less, depending on whether the context moved along with or against the direction of a prior pursuit target. Thompson and Henriques (2008) found a differential effect of context on saccadic eye movements and interception: observers first tracked a target in front of different background textures and then made a saccade to a remembered target position. The amplitude of the memory saccade scaled with background motion direction, but manual interception did not.

In sum, it appears that moving contexts affect smooth pursuit eye movements in a relatively consistent manner, and in line with a vector-averaging model. By contrast, context effects on perception tend to follow relative motion signals (motion contrast). Context effects on interception responses are variable: their direction and magnitude depend on the specifics of stimuli and task, such as whether observers had to hit stationary, dynamic, visible, or remembered objects, and when and for how long the moving context was presented.

Comparing context effects on pursuit and interception of a disappearing target.

In the present study, we showed observers the initial launch of a ball moving along a curved trajectory across a uniform or textured, stationary or continuously moving background; the background always moved in the same direction as the target. As in Fooken et al. (2016), observers had to intercept the target with their index finger after it entered a hit zone. Critically, the target disappeared from view after brief presentation, preventing observers from using information about the target position when intercepting its estimated position within the hit zone. In two experiments, we compared smooth pursuit and interception responses across different contexts.

This study aims at investigating whether motion signals from target and context are integrated similarly or differently for eye and hand movements. Previous studies have already established that pursuit consistently behaves in line with a vector-averaging model (Lisberger and Ferrera 1997; Spering and Gegenfurtner 2008). Here we investigate whether hand movements also integrate target and context motion signals consistent with the predictions of a vector-averaging model, or if hand movements follow a different model, such as motion contrast. Our study differs from previous investigations of context effects on eye and hand movements in at least two important ways: 1) Smooth pursuit eye movements and manual interception responses were assessed simultaneously and in the same trials, and 2) the target disappeared before interception, rendering the context the only visual motion signal driving eye and hand at interception. Manipulating the speed of the dynamic context—either moving at the same speed as the target (experiment 1) or moving faster (experiment 2)—allows us to compare different models of target-context motion signal integration, such as vector averaging and motion contrast. Figure 1 summarizes specific hypotheses for the three context conditions tested in this study.

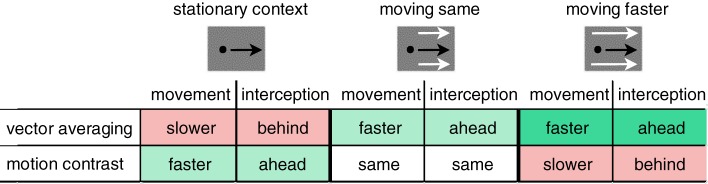

Fig. 1.

Predictions of vector-averaging vs. motion-contrast models for context effects on smooth pursuit eye and hand movements in the three target-context configurations tested in this study. Cells shaded in red indicate slower movements (e.g., slower eye velocity and finger peak velocity) and interception behind the target (e.g., negative timing error in eye and hand), cells shaded in green indicate faster movements and interception ahead of the target (e.g., positive timing error) as compared with the effect of a uniform, nontextured context. Hypothesis testing included measures of movement trajectory and interception for both eye and finger.

Following a vector-averaging model, we would expect a stationary context to slow down eye and hand movements and to elicit interception at a location that the target passed already, i.e., the eye or hand would lag behind the target. A context moving in the same direction as the target would lead to an increase in movement speed and would cause interceptions at a location before the target reaches it, i.e., the eye or hand would be ahead of the target. Following a motion-contrast model, a stationary context would increase movement speed and elicit interceptions before the target reaches the interception location. A dynamic context moving in the same direction and at the same speed as the target would have no effect on movement or interception, as compared with a uniform context. A dynamic context moving faster would decrease movement speed and trigger interceptions at a location that the target passed already. To test these hypotheses, we computed early measures, obtained during the movement phase (latency and relative velocity of pursuit, catch-up saccade properties, latency and peak velocity of the finger), as well as late measures, obtained at the time of interception (eye position and interception error).
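The direction of these predictions can be illustrated with a small numerical sketch. It is not the authors' model: the baseline gain below 1, the context weight, and the contrast factor are hypothetical values chosen only so that each rule reproduces the qualitative predictions stated above, and the function names are introduced here for illustration.

```python
TARGET_SPEED = 24.5  # deg/s, initial target speed reported in the Methods


def vector_average(v_target, v_context, gain=0.8, w_context=0.3):
    """Movement speed if target and context signals are averaged.

    gain (< 1) and w_context are hypothetical illustration values.
    v_context=None stands for a uniform background (no context signal).
    """
    driven = gain * v_target
    if v_context is None:
        return driven
    return (1 - w_context) * driven + w_context * v_context


def motion_contrast(v_target, v_context, gain=0.8, k=0.3):
    """Movement speed if the response follows target motion relative to context.

    k is a hypothetical weight on the relative-motion signal.
    """
    if v_context is None:
        return gain * v_target
    return gain * (v_target + k * (v_target - v_context))


def effect(model, v_context):
    """Direction of the predicted change relative to a uniform background."""
    diff = model(TARGET_SPEED, v_context) - model(TARGET_SPEED, None)
    if abs(diff) < 1e-9:
        return "no effect"
    return "faster" if diff > 0 else "slower"


CONTEXTS = {
    "stationary": 0.0,
    "dynamic, same speed": TARGET_SPEED,       # experiment 1
    "dynamic, faster": 1.5 * TARGET_SPEED,     # experiment 2
}

# (vector-averaging prediction, motion-contrast prediction) per context
predictions = {
    name: (effect(vector_average, v), effect(motion_contrast, v))
    for name, v in CONTEXTS.items()
}
```

Under these assumptions, vector averaging predicts a faster movement for a same-speed context only because the baseline response is slower than the target; with a baseline gain of exactly 1 the averaged signal would equal the uniform case.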

METHODS

Observers.

Participants were 36 right-handed adults (mean age 24.8 yr, std = 4.3; 19 female) with normal or corrected-to-normal visual acuity and no history of neurological, psychiatric or eye disease, n = 18 in each experiment. Normal visual acuity was confirmed using ETDRS visual acuity charts (Original Series Chart “R,” Precision Vision, La Salle, IL) at a test distance of 4 m. All observers had binocular visual acuity of 20/20 or better. The dominant hand was defined as the hand used for writing. All observers, except authors M.S. and P.K., were unaware of the purpose of the study and were compensated at a rate of $10/h. Experimental protocols were in accordance with the Declaration of Helsinki and were approved by the Behavioral Research Ethics Board at the University of British Columbia, and observers gave written, informed consent before participating.

Visual stimuli and apparatus.

A solid black dot (ball), with a diameter of 0.38°, moved along a curved path, simulated to be the natural trajectory of a batted baseball. In the following equations, ẍ and ÿ are the horizontal and vertical acceleration components, taking into account ball mass (m), gravitational acceleration (g), aerodynamic drag force (FD), and Magnus force (FM) as induced by the baseball’s spin; θ is the angle between the velocity vector and the horizontal:

$$\ddot{x} = -\frac{F_D}{m}\cos\theta - \frac{F_M}{m}\sin\theta \tag{1}$$

$$\ddot{y} = -g - \frac{F_D}{m}\sin\theta + \frac{F_M}{m}\cos\theta \tag{2}$$

The drag force (FD) and the Magnus force (FM) are defined as

$$F_D = \frac{1}{2}\,\rho\, C_D\, A\, v^2 \tag{3}$$

$$F_M = \gamma\, f\, v\, C_D \tag{4}$$

in which A is the cross-sectional area of the baseball, ρ is the air density, γ is an empirical constant determined by measurements of a spinning baseball in a wind tunnel by Watts and Ferrer (1987), f refers to the frequency with which the simulated ball spins, v denotes the ball’s velocity, and CD is the drag coefficient (for conditions and constants used in the simulation, see Fooken et al. 2016). The ball moved at an initial speed of 24.5°/s and was launched at one of three different angles (30, 35, 40°) to increase task difficulty. The ball always appeared at the left side of the screen and moved toward the right; a dark gray line (2 pixels wide) separated the screen into two halves with the hit zone on the right (Fig. 2A). The ball was presented on one of three possible backgrounds in separate blocks of trials: a uniform gray background (35.9 cd/m²), or a textured background at the same mean luminance, either stationary or moving in the target direction. Backgrounds were images or movies of random textures, Motion Clouds (Leon et al. 2012), generated in PsychoPy 2 (Peirce 2007). These stimuli are richly textured (Fig. 2A) and have many of the same properties as natural images (Leon et al. 2012; Simoncini et al. 2012). We followed parameter settings of a previous study assessing perception and ocular following in response to these stimuli (Simoncini et al. 2012) and set Motion Clouds to a fixed spatial frequency of 0.15 cycles per degree (cpd) with bandwidth 0.08 cpd. The bandwidth of the envelope of the speed plane that defines the jitter of the mean motion was set to 5%, i.e., in each frame, 95% of the pattern moved in a coherent motion direction. In trials with stationary textures, one of 20 possible Motion Cloud images was shown, randomized across trials. In trials with dynamic textures, a Motion Cloud movie was played in the background. Stationary or moving backgrounds were shown from the trial start during the fixation period until time of interception (Fig. 2A). In experiment 1, the dynamic background moved at a horizontal velocity equivalent to the mean velocity of the target at launch (24.5°/s); in experiment 2, the background moved 50% faster than the target (~36.7°/s).
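A trajectory of this kind can be obtained by numerically integrating the acceleration components. The sketch below is only an illustration under stated assumptions: it uses standard quadratic drag and assumes a Magnus term of the form γ·f·v·CD built from the symbols listed above, it works in physical units rather than degrees of visual angle, and every constant (mass, area, CD, γ, spin frequency, step size) is a hypothetical placeholder rather than a value from the study (those are given in Fooken et al. 2016). The function name `simulate_ball` is introduced here.

```python
import math


def simulate_ball(speed, launch_deg, *, mass=0.145, g=9.81, rho=1.2,
                  area=0.0042, c_d=0.3, gamma=0.002, spin_hz=30.0,
                  dt=0.001, duration=1.5):
    """Integrate a 2D ball flight with drag and an assumed Magnus term.

    All default constants are hypothetical illustration values (SI units).
    Returns a list of (x, y) positions, one per time step.
    """
    theta = math.radians(launch_deg)
    x, y = 0.0, 0.0
    vx, vy = speed * math.cos(theta), speed * math.sin(theta)
    path = [(x, y)]
    for _ in range(int(round(duration / dt))):
        v = math.hypot(vx, vy)
        theta = math.atan2(vy, vx)            # angle of velocity vs. horizontal
        f_d = 0.5 * rho * c_d * area * v * v  # quadratic drag force
        f_m = gamma * spin_hz * v * c_d       # assumed Magnus force form
        ax = -(f_d / mass) * math.cos(theta) - (f_m / mass) * math.sin(theta)
        ay = -g - (f_d / mass) * math.sin(theta) + (f_m / mass) * math.cos(theta)
        # Semi-implicit Euler step: update velocity first, then position.
        vx += ax * dt
        vy += ay * dt
        x += vx * dt
        y += vy * dt
        path.append((x, y))
    return path


# Example: three launch angles as in the task, with a hypothetical launch speed.
paths = {angle: simulate_ball(30.0, angle) for angle in (30, 35, 40)}
```

Setting `c_d=0` removes both forces in this sketch, so the result can be checked against the analytic parabola.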

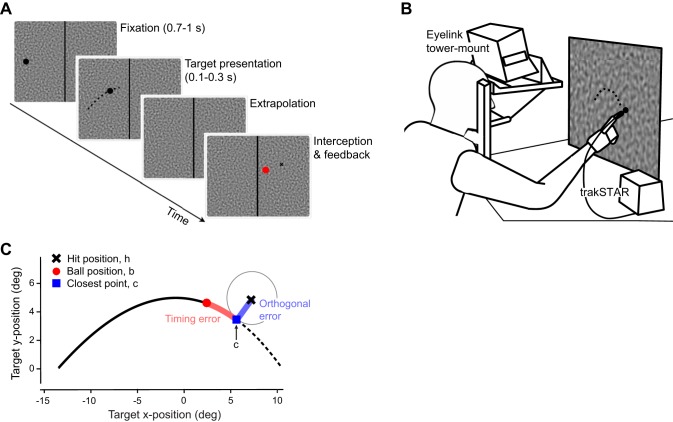

Fig. 2.

A: timeline of a single trial with a structured background. Each trial started with 1) fixation on the target on the left side of the screen for 700–1,000 ms, followed by 2) brief (100 or 300 ms) stimulus motion to the right after which 3) the target disappeared until 4) the observer intercepted in the “hit zone,” located on the right of the screen. Performance feedback at the end of each trial showed true target end position (red disk) relative to finger position (black cross). B: cartoon of setup showing an observer and the relative positions of eye tracker, magnetic finger tracker, and translucent screen for back-projection. All reach movements were with the right hand into ipsilateral body space. C: interception accuracy was calculated as timing error (red) and orthogonal error (blue). Example shows positive errors, indicating that interception occurred above the trajectory and ahead of the target.

Visual stimuli were back-projected using a PROPixx video projector (VPixx Technologies, Saint-Bruno, QC, Canada) with a refresh rate of 60 Hz and a resolution of 1,280 (H) × 1,024 (V) pixels. The screen was a 44.5 cm × 36 cm translucent display consisting of nondistorting projection screen material (Twin White Rosco screen, Rosco Laboratories, Markham, ON, Canada) clamped between two glass panels and fixed in an aluminum frame (Fig. 2B). Stimulus display and data collection were controlled by a Windows PC with an NVIDIA GeForce GT 430 graphics card running MATLAB 7.1 and Psychtoolbox 3.0.8 (Brainard 1997; Pelli 1997). Observers were seated at a distance of 46 cm with their head supported by a chin and forehead rest and viewed the stimuli binocularly. Using these setup parameters, 1° of visual angle corresponded to 0.8 cm.
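The conversion between visual angle and screen distance follows from the viewing geometry. A minimal sketch, assuming a flat screen viewed straight on at the reported 46 cm (the function names are introduced here for illustration):

```python
import math

VIEWING_DISTANCE_CM = 46.0  # reported viewing distance


def deg_to_cm(deg, distance_cm=VIEWING_DISTANCE_CM):
    """Size on the screen (cm) of a centered stimulus spanning `deg` degrees."""
    return 2.0 * distance_cm * math.tan(math.radians(deg) / 2.0)


def cm_to_deg(size_cm, distance_cm=VIEWING_DISTANCE_CM):
    """Visual angle (deg) of a centered stimulus of `size_cm` on the screen."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


one_degree_cm = deg_to_cm(1.0)      # close to the reported 0.8 cm
screen_width_deg = cm_to_deg(44.5)  # horizontal extent of the 44.5-cm screen
```

The linear factor of 0.8 cm per degree is a small-angle approximation; for eccentric positions on a flat screen the exact tangent relation gives slightly larger distances per degree.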

Experimental procedure and design.

Each trial started with fixation on the ball located on the left side of the screen for 700–1,000 ms (uniform distribution). During fixation, the eye tracker performed a drift correction. The ball then moved rightward toward the hit zone and was occluded after a presentation duration of either 100 or 300 ms for the remainder of the trajectory (Fig. 2A). Observers were instructed to track the ball with their eyes and to intercept it as accurately as possible (hit/catch it) with their index finger once it had entered the hit zone. If interception occurred after the trajectory had ended (depending on launch angle, this time interval was 1.2–1.6 s, including visible and invisible parts of the trajectory), observers received a “time out” message. After each interception observers placed their hand on a fixed resting position on the table. At the end of each trial, observers received feedback about their finger interception position (red dot) and the actual ball position at time of interception (black cross; Fig. 2A). All observers completed the task with their dominant right hand, reaching at the target in the hit zone located in ipsilateral body space.

Each participant completed three blocks of trials, one for each type of background. Block order was randomized to control for possible training effects. Each block in each experiment started with 32 baseline trials in which the ball moved across the respective background and its trajectory was fully visible, followed by 4 demo trials and 84 interception trials, 42 trials per presentation duration, randomly interleaved.

Eye and hand movement recordings and preprocessing.

Position of the right eye was recorded with a video-based eye tracker (tower-mounted Eyelink 1000, SR Research, Ottawa, ON, Canada; Fig. 2B) at a sampling rate of 1,000 Hz. All data were analyzed off-line using custom-made routines in MATLAB. Eye position and velocity profiles were filtered using a low-pass, second-order Butterworth filter with cutoff frequencies of 15 Hz (position) and 30 Hz (velocity). Saccades were detected when five consecutive frames exceeded a fixed velocity criterion of 35°/s; saccade on- and offsets were then determined as the nearest reversal in the sign of acceleration. All saccades were excluded from pursuit analysis. Pursuit onset was detected within a 300-ms interval around stimulus motion onset (starting 100 ms before onset) in each individual trace. We first fitted each 2D position trace with a piecewise linear function, consisting of two linear segments and one breakpoint. The least-squares fitting error was then minimized iteratively (using the function lsqnonlin in MATLAB) to identify the best location of the breakpoint, defined as the time of pursuit onset.
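The velocity criterion for saccade detection can be sketched as follows. This is a simplified illustration, not the authors' MATLAB routine: it assumes an already filtered eye speed trace sampled at 1,000 Hz, and it omits the refinement of saccade on- and offsets to the nearest sign reversal of acceleration. The function name is introduced here.

```python
def detect_saccades(speed_deg_s, threshold=35.0, min_samples=5):
    """Find runs of at least `min_samples` consecutive samples above threshold.

    speed_deg_s: sequence of eye speed values (deg/s), one per sample.
    Returns a list of (first_index, last_index) pairs, one per detected run.
    """
    runs = []
    start = None
    for i, s in enumerate(speed_deg_s):
        if s > threshold:
            if start is None:
                start = i  # a supra-threshold run begins here
        else:
            if start is not None and i - start >= min_samples:
                runs.append((start, i - 1))
            start = None
    # Handle a run that continues to the end of the trace.
    if start is not None and len(speed_deg_s) - start >= min_samples:
        runs.append((start, len(speed_deg_s) - 1))
    return runs


# Synthetic trace: slow pursuit, one 8-sample saccade, one 3-sample blip.
trace = [10.0] * 20 + [100.0] * 8 + [10.0] * 10 + [50.0] * 3 + [10.0] * 5
saccades = detect_saccades(trace)
```

Samples inside the returned index ranges would then be excluded before computing pursuit measures.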

Movements of observers’ right index finger were tracked with a magnetic tracker (3D Guidance trakSTAR, Ascension Technology, Shelburne, VT) at a sampling rate of 240 Hz (Fig. 2B). A lightweight sensor was attached to the observer’s fingertip with a small Velcro strap. The 2D finger interception position was recorded in x- and y-screen-centered coordinates for each trial. Finger latency was computed as the first frame exceeding a velocity threshold of 5 cm/s following stimulus onset. Each trial was manually inspected and we excluded trials with blinks and those in which observers moved their hand too early, i.e., before stimulus onset, too late (time out), or in which finger movement was not detected (8.8% in experiment 1, 7.9% in experiment 2).
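The finger latency criterion amounts to a threshold crossing on the finger speed trace. A minimal sketch, assuming a speed trace in cm/s sampled at 240 Hz and a known sample index for stimulus onset (the function name and arguments are hypothetical):

```python
def finger_latency_ms(speed_cm_s, stimulus_onset_idx, fs_hz=240.0, threshold=5.0):
    """Latency (ms) of the first sample at or after stimulus onset whose
    speed exceeds `threshold`; None if the finger never moves."""
    for i in range(stimulus_onset_idx, len(speed_cm_s)):
        if speed_cm_s[i] > threshold:
            return (i - stimulus_onset_idx) * 1000.0 / fs_hz
    return None


# Synthetic trace: rest, sub-threshold drift for 80 samples, then movement.
trace = [0.0] * 100 + [1.0] * 80 + [6.0] * 10
latency = finger_latency_ms(trace, stimulus_onset_idx=100)
```

At 240 Hz the temporal resolution of this measure is about 4.2 ms per sample.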

Eye and hand movement data analyses.

To test our hypotheses, we computed the following eye movement measures: pursuit latency, relative eye velocity (calculated as gain: eye velocity divided by target velocity in the interval 140 ms after pursuit onset to interception) and cumulative catch-up saccade amplitude, defined as the total amplitude of all catch-up saccades in a given trial, i.e., the total distance covered by saccades (Fooken et al. 2016). These measures define the quality of the smooth component of the pursuit movement. We also calculated the 2D eye position error at the time of interception (see definition of “timing error,” below; Fig. 2C); this measure defines the accuracy of the eye at time of interception.

For interception movements, we analyzed finger latency, finger peak velocity, and interception accuracy. Interception accuracy was calculated as follows. First, the hit position, h, is defined as the 2D position of the finger when it first makes contact with the screen; the ball position at that time is denoted as b (see Fig. 2C). The point on the ball trajectory closest to h is denoted c. We now define the timing error as the signed distance from the ball position to the closest point, i.e., ∥c−b∥ if c is ahead of b, and −∥c−b∥ if c is behind b in the horizontal (+x) direction. A positive timing error (in degrees, where 1° = 40.8 ms) implies that the observer touched the screen before the time that the ball would have reached the hit position. We also calculated timing error for the eye, defined in the same way as for the finger (as the signed distance from the ball position to the closest point on the trajectory, c, relative to the eye’s position at time of hit, h). For the eye, a positive timing error indicates that the eye landed ahead of the target. Similarly, we define the “orthogonal error” (offset) as the signed distance from c to h, i.e., ∥h−c∥ if h is above c, and −∥h−c∥ if h is below c in the vertical (+y) direction. A positive orthogonal error (given in degrees, where 1° = 0.8 cm) indicates that the observer touched the screen above the trajectory.
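These definitions can be written out in a short sketch. It assumes the ball trajectory is available as a densely sampled list of (x, y) points in degrees and approximates c by the nearest sample; the function name and the data layout are hypothetical.

```python
import math

# 1 deg of timing error in ms, given the initial target speed of 24.5 deg/s.
MS_PER_DEG = 1000.0 / 24.5


def interception_errors(trajectory, ball_idx, hit):
    """Return (timing_error, orthogonal_error) in degrees.

    trajectory: list of (x, y) ball positions (deg).
    ball_idx:   index of the ball position b at the time of the hit.
    hit:        (x, y) position h of the finger (or eye) at that time.
    """
    b = trajectory[ball_idx]
    # c: sampled trajectory point closest to the hit position h
    c = min(trajectory, key=lambda p: math.dist(p, hit))
    timing = math.dist(c, b)
    if c[0] < b[0]:          # c behind b along +x: negative timing error
        timing = -timing
    orthogonal = math.dist(hit, c)
    if hit[1] < c[1]:        # h below c along +y: negative orthogonal error
        orthogonal = -orthogonal
    return timing, orthogonal


# Toy example on a straight horizontal trajectory sampled every 0.1 deg.
traj = [(i * 0.1, 0.0) for i in range(101)]
ahead_above = interception_errors(traj, 50, (6.0, 0.5))
behind_below = interception_errors(traj, 50, (4.0, -0.3))
```

Multiplying a timing error in degrees by `MS_PER_DEG` gives the approximate temporal equivalent used in the text (about 40.8 ms per degree).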

Statistical analysis.

A standard score (z-score) analysis was performed on all eye and finger measures across all trials and observers; individual observers’ values deviating from the respective measure’s group mean by > 3 std (mostly due to small undetected saccades) were flagged as outliers and excluded from further analyses (1.2% on average across all measures and experiments). Statistical analyses focused on measures reflecting the movement itself (e.g., eye latency, relative pursuit velocity, cumulative catch-up saccade amplitude and finger latency, peak velocity) and the interception (e.g., eye and interception timing errors). Any observed effects of context on movement and interception (Fig. 1) were confirmed with repeated-measures analysis of variance (ANOVA) with within-subjects factors context, duration and launch angle, and between-subjects factor experiment. Post hoc comparisons between context conditions (pairwise t-tests with Bonferroni corrections applied separately for each ANOVA) and context × experiment interactions were analyzed to reveal any differential effects of contexts on dependent measures.
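The outlier screening step can be sketched as below. This is an illustration only: it assumes the sample standard deviation is used and that values exactly at the cutoff are retained, neither of which is specified in the text, and the function name is hypothetical.

```python
import statistics


def exclude_outliers(values, z_max=3.0):
    """Drop values deviating from the mean by more than z_max standard deviations.

    Uses the sample standard deviation (n - 1 denominator), an assumption.
    """
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)
    return [v for v in values if abs(v - mean) <= z_max * sd]


# Toy data: 19 plausible values and one extreme value.
data = [1.0 + 0.01 * i for i in range(19)] + [100.0]
cleaned = exclude_outliers(data)
```

With a single pass, one extreme value inflates the standard deviation used for the cutoff, so a lone outlier can only exceed 3 std when the sample is large enough (more than about 10 values).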

To control for possible effects of block order, we also ran each ANOVA with between-subjects factor block order, but we found no significant main effects or interactions with this factor; thus our results do not include this variable. Effects of presentation duration and launch angle on eye and hand measures are not the focus of this study and are thus reported selectively.

To investigate whether context modulated the relation between eye and hand, we performed trial-by-trial correlations between eye and interception timing error on an individual observer basis. We then calculated each observer’s slope for each context condition and experiment and tested whether the average slope across observers differed from zero using t-tests. Regression analyses were performed in R; all other statistical analyses were performed in IBM SPSS Statistics Version 24 (Armonk, NY).
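The two-stage analysis can be outlined in a few lines. The sketch assumes that hand timing error is regressed on eye timing error (the text does not state which variable is the predictor) and computes only the t statistic and degrees of freedom; obtaining a P value would require a t distribution from a statistics package. It is not the R or SPSS code used in the study, and the function names are hypothetical.

```python
import math
import statistics


def ols_slope(x, y):
    """Least-squares slope of y regressed on x."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    return sxy / sxx


def one_sample_t(values, mu=0.0):
    """Return (t, df) for a one-sample t-test of the mean against mu."""
    n = len(values)
    se = statistics.stdev(values) / math.sqrt(n)
    return (statistics.fmean(values) - mu) / se, n - 1


# Stage 1: one slope per observer (toy eye/hand timing errors per trial).
observers = {
    "obs1": ([0.0, 1.0, 2.0, 3.0], [0.1, 1.0, 2.1, 2.9]),
    "obs2": ([-1.0, 0.0, 1.0, 2.0], [-0.4, 0.1, 0.5, 1.2]),
    "obs3": ([0.5, 1.5, 2.5, 3.5], [0.2, 1.0, 1.7, 2.6]),
}
slopes = [ols_slope(eye, hand) for eye, hand in observers.values()]

# Stage 2: test whether the mean slope across observers differs from zero.
t_value, df = one_sample_t(slopes)
```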

RESULTS

We compared pursuit and manual interception accuracy in response to target motion across one of three contexts: a uniform gray context, a stationary textured context, or a dynamic context moving at the same speed (experiment 1) or at a faster speed as compared with the target (experiment 2). We report results in two parts: first, we present context effects on smooth pursuit in interception trials, in which the ball disappeared from view. Second, we report context effects on hand movements and compare findings for eye and hand.

Context effects on pursuit.

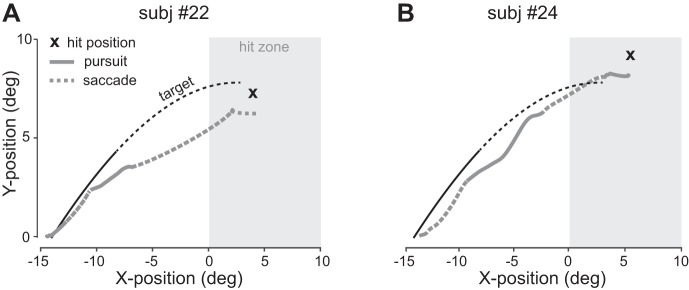

Short target presentation durations resulted in a transient pursuit response of relatively low velocity. Figure 3 shows eye position traces and hit positions from individual trials of two observers, showing that smooth tracking was supplemented by frequent catch-up saccades, M = 2.7 (std = 0.36) saccades per trial on average. In some trials, observers made large saccades along the extrapolated target trajectory (Fig. 3A); in other trials, observers attempted to continue to track the target smoothly for longer periods of time (Fig. 3B).

Fig. 3.

A and B: individual 2D eye position traces from typical trials of two observers. In both trials, the target was launched at an angle of 35°, moved across a uniform gray background, and was shown for 300 ms (the dashed part of the target trajectory indicates the ball’s flight between target disappearance and interception). subj, Subject.

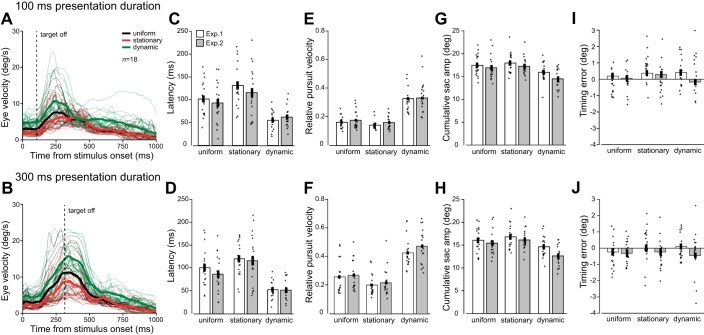

Despite the transient pursuit response, context effects on pursuit were clearly visible: a stationary context slowed pursuit, a dynamic context sped up pursuit for both presentation durations (compare red and green lines in Fig. 4, A and B). This observation was confirmed by repeated-measures ANOVA revealing significant main effects of context on pursuit latency [F(2,68) = 47.89, P < 0.001, η2 = 0.59; Fig. 4, C and D], relative pursuit velocity [F(2,68) = 144.42, P < 0.001, η2 = 0.81; Fig. 4, E and F], and cumulative saccade amplitude [F(2,68) = 34.13, P < 0.001, η2 = 0.50; Fig. 4, G and H]. These findings confirm the hypothesis that smooth pursuit follows vector averaging when integrating motion signals from a disappearing target and a stationary or dynamic context.

Fig. 4.

Context effects on smooth pursuit eye movements during interception trials in experiments 1 and 2. A and B: mean eye velocity traces for individual observers (n = 18) in experiment 1, averaged across launch angles, in response to a target presented for 100 ms (A) or 300 ms (B). C and D: mean latency (ms) in response to three types of context in experiment 1 (white, n = 18) and 2 (gray, n = 18) averaged across launch angles. Each data point is the mean for one observer. E and F: relative pursuit velocity. G and H: cumulative catch-up saccade amplitude. I and J: Timing error (deg). Error bars denote ±1 standard error of the mean. All pairwise Bonferroni-corrected post hoc comparisons for pursuit measures latency, relative velocity, and cumulative saccade amplitude (sac amp) were significant at P < 0.001.

However, results are different for eye timing error at interception, a measure obtained at a later time point. A vector-averaging model would predict the eye to lag behind the target in the stationary context condition and to be ahead in the dynamic context condition. Yet context effects on eye timing errors were not in line with this model: mean eye timing errors were similar across context conditions [no main effect of context, F(2,68) = 1.18, P = 0.31, η2 = 0.03; Fig. 4, I and J]. Even though there was a small trend for errors to differ between dynamic contexts moving along with the target (positive eye timing error) vs. contexts moving faster (negative eye timing error), the context × experiment interaction was nonsignificant [F(2,68) = 2.07, P = 0.13, η2 = 0.06].

Results in Fig. 4 are shown separately by presentation duration, because significant effects of duration were observed for relative pursuit velocity and cumulative saccade amplitude (both P < 0.001). All context and duration effects were constant across experiments (no main effects, all P > 0.14), and we found no interaction between launch angle and context (all P > 0.25); hence, results were averaged across launch angles. To summarize, context effects on pursuit suggest general impairment of the smooth component of the movement in the presence of a stationary context and pursuit enhancement when tracking a target in the presence of a dynamic context, in line with a vector-averaging model. By contrast, we did not find support for context effects on eye position (timing error) at time of interception and found no evidence that eye interception followed vector averaging.

Context effects on manual interception.

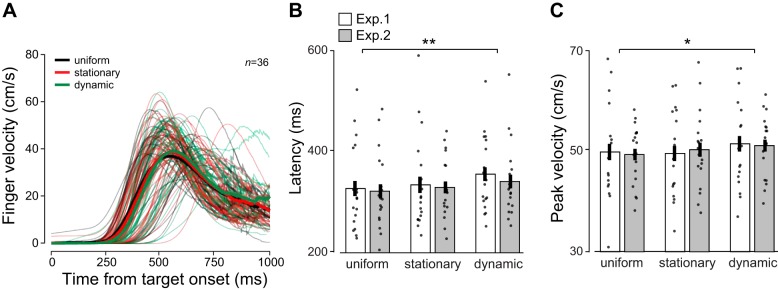

In both experiments, observers performed rapid reach movements toward the predicted target location. On average, these reaches were initiated with a latency of 335.5 ms after stimulus onset (334 and 337 ms for 100- and 300-ms presentation duration, respectively), took 899 ms to complete, and reached a mean peak velocity of 50 cm/s. Figure 5A shows mean and individual finger velocity traces, averaged across angles, durations, and experiments (no main effects, all P > 0.23), and aligned to target onset. Finger latencies were shortest for uniform contexts (M = 325.2, std = 13.5), intermediate for stationary contexts (M = 332.6, std = 13.3), and longest for dynamic contexts (M = 349.0, std = 12.5). Across experiments, a repeated-measures ANOVA showed a significant main effect of context on finger latency [F(2,68) = 5.59, P = 0.006, η2 = 0.14; Fig. 5B] and no context × experiment interaction (F < 1, P = 0.77). Peak velocity was lowest for uniform contexts (M = 49.56, std = 7.8), intermediate for stationary contexts (M = 49.91, std = 8.1), and highest for dynamic contexts (M = 51.29, std = 7.6). Across experiments, peak velocity was significantly affected by context [F(2,68) = 4.06, P = 0.02, η2 = 0.11; Fig. 5C], and there was no context × experiment interaction (F < 1, P = 0.56). The finding of elevated peak velocity for dynamic contexts is in alignment with what we found for the eye movement: relative pursuit velocity was also highest when the context was dynamic, consistent with a vector-averaging model. However, the finding of increased finger latency does not match the finding that pursuit latency was shortest for dynamic contexts.
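As a quick consistency check on the effect sizes reported above, the following minimal sketch recovers η2 from an F statistic and its degrees of freedom. It assumes that the reported η2 values are partial eta-squared, which the text does not state explicitly; the function name `partial_eta_squared` is introduced here for illustration only.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta-squared recovered from an F statistic and its degrees of freedom.

    Assumption: the eta-squared values in the text are partial eta-squared.
    """
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Main effect of context, using the F values and df reported in the text
latency_eta = partial_eta_squared(5.59, 2, 68)        # finger latency
peak_velocity_eta = partial_eta_squared(4.06, 2, 68)  # finger peak velocity

print(round(latency_eta, 2), round(peak_velocity_eta, 2))  # prints: 0.14 0.11
```

Under this assumption, both values agree with the effect sizes reported for finger latency and peak velocity.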

Fig. 5.

Effects of context on finger latency and peak velocity. A: mean finger velocity traces for individual observers in experiments 1 and 2 (n = 36 total) averaged across presentation durations. Bold traces are averages across observers. Note that the peak of mean velocity traces does not match peak velocity shown in C, because mean traces were aligned to movement onset, not peak. B: latency (ms) for different contexts averaged across presentation durations. Each data point is the mean for one observer. C: peak velocity (cm/s) for different contexts. Asterisks indicate results of Bonferroni-corrected post hoc comparisons, *P < 0.05, **P < 0.01.

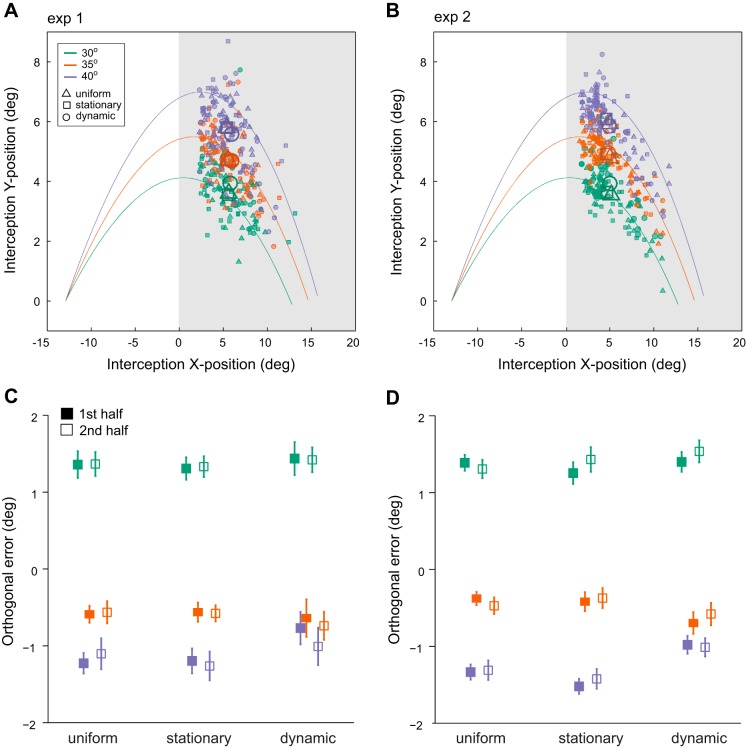

Next, we analyzed context effects on interception accuracy. Figure 6 shows 2D interception positions for three launch angles and three contexts for experiment 1 (Fig. 6A) and experiment 2 (Fig. 6B). Each data point is the mean interception position in the hit zone for one observer in a given condition. Overall, observers tended to intercept relatively early in the hit zone. For both experiments, interception locations were similar for the different context conditions (denoted by symbol type in Fig. 6, A and B).

Fig. 6.

2D finger interception positions in experiment 1 (A) and experiment 2 (B) within the hit zone (gray area on the right). Each data point shows the mean for one observer; larger symbols denote means across observers in a given condition. Context types are denoted by different symbols. C and D: mean orthogonal error. Solid symbols represent the mean of the first half, open symbols the mean of the second half of trials within each block. Launch angles are denoted by color. One degree of visual angle corresponds to 0.8 cm. Error bars denote ±1 standard error of the mean.

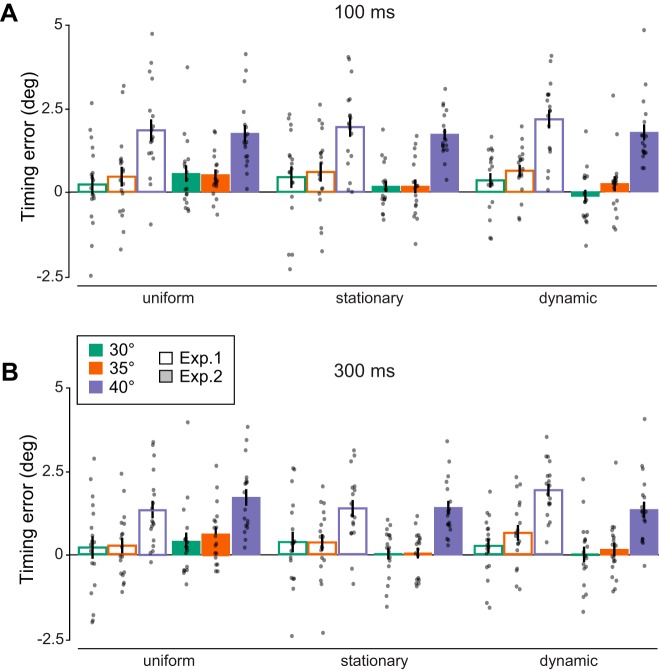

Figure 7 summarizes the results for interception timing error for both presentation durations separately. A main effect of duration [F(1,34) = 13.50, P = 0.001, η2 = 0.28] indicates improved interception accuracy with longer vs. shorter stimulus presentation (compare Fig. 7A and Fig. 7B). Similar to the results obtained for eye timing error, context effects on interception timing error were nonsignificant (no main effect of context, P = 0.82). If interception position had followed vector averaging, we would have expected interceptions behind or ahead of the target in the presence of a stationary or dynamic context, respectively (irrespective of whether the dynamic context moved faster than or at the same speed as the target). Instead, observers tended to point ahead more compared with the uniform condition when the context moved along with the target (positive difference in timing error, M = 0.28°, std = 0.84), and ahead less when the context moved faster (negative difference in timing error, M = −0.37°, std = 1.03). Yet, the context × experiment interaction for interception timing error was nonsignificant [F(2,68) = 2.11, P = 0.13, η2 = 0.06].

Fig. 7.

Context effects on interception in experiments 1 and 2 (n = 18 each). A: interception timing error in degrees for different contexts and launch angles for a target shown for 100 ms. Each data point is the mean for one observer. B: same conditions as in A for 300-ms presentation duration. All error bars denote ±1 standard error of the mean.

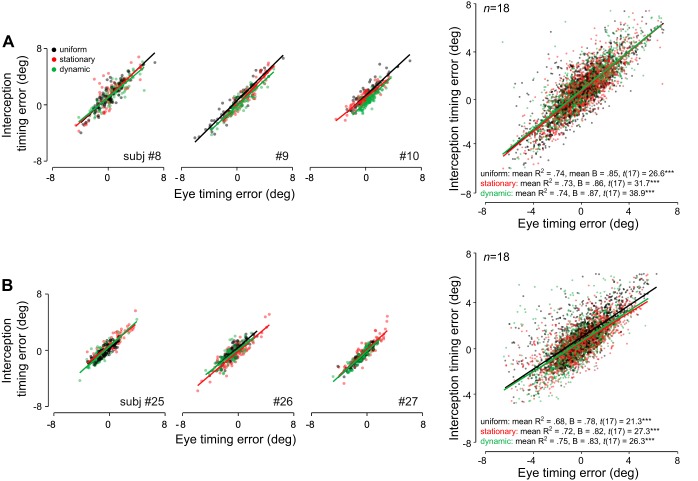

The observed similarities between eye and hand movement at time of interception were supported by a strong positive relationship between accuracy (timing error) in eye and hand across context conditions. Figure 8 shows trial-by-trial correlations for individual observers (three per experiment; left) and across the entire group (right). Regression slopes averaged across observers differed significantly from zero for all context conditions in both experiments (Fig. 8). These results were consistent across launch angles, with all slopes significantly different from zero (all t > 22.3, P < 0.001).
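The slope analysis described above can be illustrated with a minimal sketch: fit a least-squares slope of interception timing error on eye timing error for each observer, then test the observer slopes against zero with a one-sample t-test. The data below are synthetic, and the number of trials, the noise levels, and the helper names `ols_slope` and `one_sample_t` are assumptions made for illustration; this is not the authors' analysis code.

```python
import math
import random
import statistics


def ols_slope(x, y):
    """Least-squares slope of y regressed on x."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def one_sample_t(values, mu=0.0):
    """t statistic for the mean of `values` against `mu` (df = n - 1)."""
    n = len(values)
    sem = statistics.stdev(values) / math.sqrt(n)
    return (statistics.fmean(values) - mu) / sem


# Synthetic data only: 18 hypothetical observers with 100 trials each
rng = random.Random(0)
slopes = []
for _ in range(18):
    eye_err = [rng.gauss(0.0, 1.0) for _ in range(100)]          # eye timing error (deg)
    hand_err = [0.8 * e + rng.gauss(0.0, 0.5) for e in eye_err]  # hand error coupled to eye error
    slopes.append(ols_slope(eye_err, hand_err))

t_value = one_sample_t(slopes)
print(f"mean slope = {statistics.fmean(slopes):.2f}, t({len(slopes) - 1}) = {t_value:.1f}")
```

Testing observer-level slopes, rather than pooling all trials into one regression, keeps the observer as the unit of analysis, which matches the description of slopes averaged across observers.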

Fig. 8.

Relation between hand movement accuracy and eye movement accuracy at time of interception. A: interception timing error vs. eye timing error in experiment 1 for three representative observers and n = 18 for each context condition. B: same relation for experiment 2. Each data point is the error in a single trial for one observer in a given context condition; significance values are for t-tests comparing average regression slopes to zero, ***P < 0.001.

Motion signals or learned contingencies?

A few additional observations are worth noting. Figures 6 and 7 show that launch angle affected interception: timing error was largest for the steepest launch angle [F(2,68) = 238.75, P < 0.001, η2 = 0.88; Fig. 7]. Moreover, observers consistently intercepted above the target trajectory for the shallowest launch angle of 30° (M = 1.4°, std = 0.6) and below the trajectory for the steepest angle of 40° (M = −1.16°, std = 0.68), close to the spatial average of the three trajectories (Fig. 6, A and B). This observation was confirmed by a repeated-measures ANOVA revealing a main effect of launch angle on orthogonal error [F(2,68) = 747.99, P < 0.001, η2 = 0.96]. This behavior indicates that observers might have used a simple heuristic, intercepting close to the average to increase their likelihood of hitting within the ball’s range, rather than learning detailed statistics of the ball trajectories.

To further investigate whether observers learned a contingency between launch angle and feedback based on their pointing position, we analyzed orthogonal errors separately for the first and second half of each block. If observers formed an implicit association between a specific launch angle and feedback position, orthogonal errors should decrease over the course of each block due to learning. Results are shown in Fig. 6, C and D, and do not support this assumption. Mean orthogonal errors across presentation durations for the three contexts and launch angles do not decrease systematically but are largely stable across each block of trials.
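The split-half comparison can be sketched as follows. The block of orthogonal errors is hypothetical, and the helper name `split_half_means` is introduced here for illustration; the sketch only shows the form of the comparison, not the reported data.

```python
from statistics import fmean


def split_half_means(block_errors):
    """Mean orthogonal error (deg) in the first and second half of one block."""
    half = len(block_errors) // 2
    return fmean(block_errors[:half]), fmean(block_errors[half:])


# Hypothetical signed orthogonal errors (deg) for one launch angle within one block
block = [1.5, 1.3, 1.4, 1.6, 1.6, 1.4, 1.5, 1.3]
first, second = split_half_means(block)

# A clear reduction in error magnitude from the first to the second half would
# point to learning; a change near zero corresponds to stable errors.
change = abs(second) - abs(first)
print(f"first half = {first:.2f} deg, second half = {second:.2f} deg, change = {change:+.2f} deg")
```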

DISCUSSION

Many studies have investigated how the oculomotor system integrates visual information from multiple sources. Smooth pursuit and saccadic eye movements commonly follow the vector average of multiple available motion or position signals (Findlay 1982; Lisberger 2015; Lisberger and Ferrera 1997; Van der Stigchel and Nijboer 2011). However, motion integration might rely on different mechanisms for perception. When tracking a small visual target in the presence of a dynamic visual context, perception follows motion contrast or relative motion signals, rather than the vector average (Brenner 1991; Smeets and Brenner 1995a; Spering and Gegenfurtner 2007; Zivotofsky 2005). It is unclear how target and continuous context motion signals are integrated for manual interception movements.

Context effects on eye and hand.

Here we investigated how different naturalistic visual contexts affect eye and hand movements during a task that required observers to smoothly track a briefly presented visual target with their eyes. Observers had to extrapolate and predict the target trajectory by pointing at its assumed end location with their finger. In two experiments, we showed that visual contexts—motion clouds (Leon et al. 2012)—severely impacted smooth pursuit eye movements. Stationary textured contexts impaired smooth pursuit (latency, mean velocity, catch-up saccades), whereas dynamic textured contexts enhanced smooth pursuit. These context effects are consistent with the predictions of a vector-averaging model. Our study extends earlier findings, obtained with sinusoidal gratings, random dot patterns or stripes in the background (reviewed in Spering and Gegenfurtner 2008) to contexts with naturalistic spatiotemporal energy profiles in a task that involves a disappearing target. Target disappearance resulted in a transient smooth pursuit response, supported by catch-up saccades. Previous studies describing saccadic and smooth tracking of an occluded target observed synergy between the two systems (Orban de Xivry et al. 2006; Orban de Xivry and Lefèvre 2007). In line with this model, we found that saccadic compensation for smooth pursuit scaled with context: slower pursuit in response to a stationary context was accompanied by larger and more frequent catch-up saccades (larger cumulative saccade amplitude), whereas faster pursuit in response to a dynamic context required fewer and smaller catch-up saccades.

Similarly, hand movement measures obtained during the early phase of the hand movement, before interception, showed a signature of context. Dynamic contexts increased finger latency and finger peak velocity. The increase in peak velocity could reflect vector-averaging mechanisms for the computation of finger velocity. Alternatively, increased finger peak velocity in the presence of dynamic contexts could reflect a tradeoff between latency and speed in this condition.

However, the accuracy of eye and hand movements at time of interception (eye and interception timing error) was not significantly affected by context. These findings indicate that context effects might be short-lasting and may exert larger effects on the trajectory than on the end-point accuracy of a given movement. Taken together, our findings show striking similarities in how eye and hand movements respond to textured contexts. Consistent with this result, eye and interception timing errors were strongly correlated on a trial-by-trial basis across all context conditions.

We also observed similarities between eye and hand in response to presentation durations. Both pursuit (relative velocity and cumulative saccade amplitude) and interception accuracy improved with longer presentation duration. These results are consistent with findings showing that the ocular pursuit system requires more than 200 ms of initial target presentation to extract acceleration information used to guide predictive pursuit (Bennett et al. 2007).

While context motion signals affected eye and hand similarly, we observed differences in terms of how each movement was affected by the ball’s initial trajectory. Whereas pursuit was unaffected, interception timing and orthogonal error depended on the ball’s launch angle, in line with reports in the literature. When intercepting a target that disappeared soon after its launch, temporal interception accuracy decreased with increasing time of invisible flight, indicating accumulation of temporal errors over time (de la Malla and López-Moliner 2015). This finding indicates that visual memory decays quickly during invisible tracking, resulting in larger timing errors for trajectories with later entry into the hit zone (launch angle of 40°), as observed in our study. Stable orthogonal errors over the course of each block of trials indicate that observers did not simply learn a contingency between the target’s launch angle and the pointing position (feedback).

Mechanisms of motion integration for pursuit and interception.

Following a vector-averaging model, a context moving along with the target should lead to an overestimation of target speed. This should result in higher eye and finger velocity, as well as in eye and finger end points located ahead of the true target position (e.g., positive timing error). Overestimation should be even stronger when the context moves faster than the target. While we found evidence for motion integration in line with a vector-averaging model for movement parameters such as latency and velocity, motion integration for final eye and interception positions did not follow vector averaging. These results are largely in line with previous studies indicating little or no effect of context on interception positions (Brouwer et al. 2003; Smeets and Brenner 1995a, 1995b; Thompson and Henriques 2008), despite context effects on movement trajectories (e.g., Smeets and Brenner 1995a, 1995b). Although we observed a small trend in timing errors consistent with a motion-contrast model, these trends were not supported by statistical analyses. These null effects could be due to noise, i.e., the variability in hand movements (van Beers et al. 2004), or to lack of power. Previous studies indicate that, under some circumstances, the motor system might take relative motion into account when executing interception movements. For example, Soechting et al. (2001) found that goal-directed pointing movements were influenced by the Duncker illusion, in which a stationary target is perceived as moving in the opposite direction to a moving context (relative motion). Other studies found that the illusion triggers deviations of the hand trajectory away from the context’s motion direction (Brouwer et al. 2003; Smeets and Brenner 1995b). Regardless of the direction of the effect—vector averaging or motion contrast—we observed similarities rather than differences between the two response modalities in terms of context effects.

Common motor programs for eye and hand movements.

In line with a model of common processing mechanisms, eye and hand are closely related when tracking and intercepting the target in the presence of a uniform background and textured context (Fig. 8). This finding extends the well-known result that “gaze leads the hand” (Ballard et al. 1992; Land 2006; Sailer et al. 2005; Smeets et al. 1996); is anchored on the target when pointing, hitting, catching, or tracking (Brenner and Smeets 2011; Cesqui et al. 2015; Gribble et al. 2002; Neggers and Bekkering 2000; van Donkelaar et al. 1994); and depends on task requirements during object manipulation (Belardinelli et al. 2016; Johansson et al. 2001). In our paradigm, the pointing movement was directed at an extrapolated, invisible target position, and eye and finger end positions often did not coincide at the same location (Fig. 3). Hence, it is interesting that eye and hand timing errors were correlated even in the absence of a visible target anchor. This finding is in agreement with one of the first reports of a close link between eye and hand movements in a visually guided reaching task (Fisk and Goodale 1985). This study revealed cofacilitation of eye and reaching movements when movement directions were aligned—i.e., eye movement to the right paired with a right-handed reaching movement toward an ipsilateral target and vice versa for left: saccades were initiated faster and reached higher peak velocities when accompanied by an aligned hand movement. Shared computations for eye and hand have been shown to be useful in computational models of interception (Yeo et al. 2012).

More recent behavioral and neurophysiological studies have confirmed the close relation between eye movements and reaching. A concurrent hand movement improves the timing, speed, and accuracy of saccades (Dean et al. 2011; Epelboim et al. 1997; Fisk and Goodale 1985; Lünenburger et al. 2000; Snyder et al. 2002) and of smooth pursuit eye movements (Chen et al. 2016; Niehorster et al. 2015). Shared reference frames in parietal cortical areas might underlie both eye and hand movements (Scherberger et al. 2003; Snyder et al. 2002), and recent studies have revealed such mechanisms in lateral intraparietal cortex (Balan and Gottlieb 2009; Yttri et al. 2013). These neurophysiological studies, conducted under standard stimulus conditions with uniform backgrounds, support the notion of close coupling between eye and hand movements. Whether these findings generalize to more complex and naturalistic task and stimulus conditions is an unanswered question. Our data provide behavioral evidence for the close relation between eye and hand movements in a naturalistic interception task.

GRANTS

This work was supported by a German Academic Scholarship Foundation (Studienstiftung des deutschen Volkes) stipend to P. Kreyenmeier and an NSERC Discovery Grant (RGPIN 418493) and a Canada Foundation for Innovation (CFI) John R. Evans Leaders Fund to M. Spering.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the authors.

AUTHOR CONTRIBUTIONS

P.K. performed experiments; P.K. and J.F. analyzed data; P.K. and J.F. prepared figures; P.K. drafted manuscript; P.K., J.F., and M.S. approved final version of manuscript; J.F. and M.S. conceived and designed research; J.F. and M.S. interpreted results of experiments; M.S. edited and revised manuscript.

ACKNOWLEDGMENTS

The authors thank Laurent Perrinet for help creating Motion Cloud stimuli and Thomas Geyer and members of the Spering laboratory for comments on the manuscript. Data were presented in preliminary form at the 2016 Vision Sciences Society meeting in St. Petersburg, FL (Kreyenmeier et al. 2016).

REFERENCES

- Balan PF, Gottlieb J. Functional significance of nonspatial information in monkey lateral intraparietal area. J Neurosci 29: 8166–8176, 2009. doi: 10.1523/JNEUROSCI.0243-09.2009.

- Ballard DH, Hayhoe MM, Li F, Whitehead SD, Frisby JP, Taylor JG, Fisher RB. Hand-eye coordination during sequential tasks. Philos Trans R Soc Lond B Biol Sci 337: 331–338, 1992. doi: 10.1098/rstb.1992.0111.

- Belardinelli A, Stepper MY, Butz MV. It’s in the eyes: planning precise manual actions before execution. J Vis 16(1): 18, 2016. doi: 10.1167/16.1.18.

- Bennett SJ, Baures R, Hecht H, Benguigui N. Eye movements influence estimation of time-to-contact in prediction motion. Exp Brain Res 206: 399–407, 2010. doi: 10.1007/s00221-010-2416-y.

- Bennett SJ, Orban de Xivry JJ, Barnes GR, Lefèvre P. Target acceleration can be extracted and represented within the predictive drive to ocular pursuit. J Neurophysiol 98: 1405–1414, 2007. doi: 10.1152/jn.00132.2007.

- Brainard DH. The psychophysics toolbox. Spat Vis 10: 433–436, 1997. doi: 10.1163/156856897X00357.

- Brenner E. Judging object motion during smooth pursuit eye movements: the role of optic flow. Vision Res 31: 1893–1902, 1991. doi: 10.1016/0042-6989(91)90184-7.

- Brenner E, Smeets JBJ. Fast responses of the human hand to changes in target position. J Mot Behav 29: 297–310, 1997. doi: 10.1080/00222899709600017.

- Brenner E, Smeets JBJ. Continuous visual control of interception. Hum Mov Sci 30: 475–494, 2011. doi: 10.1016/j.humov.2010.12.007.

- Brenner E, Smeets JBJ. How moving backgrounds influence interception. PLoS One 10: e0119903, 2015. doi: 10.1371/journal.pone.0119903.

- Brenner E, van den Berg AV. Judging object velocity during smooth pursuit eye movements. Exp Brain Res 99: 316–324, 1994. doi: 10.1007/BF00239598.

- Brouwer AM, Brenner E, Smeets JBJ. Hitting moving objects: is target speed used in guiding the hand? Exp Brain Res 143: 198–211, 2002. doi: 10.1007/s00221-001-0980-x.

- Brouwer AM, Middelburg T, Smeets JBJ, Brenner E. Hitting moving targets: a dissociation between the use of the target’s speed and direction of motion. Exp Brain Res 152: 368–375, 2003. doi: 10.1007/s00221-003-1556-8.

- Cesqui B, Mezzetti M, Lacquaniti F, d’Avella A. Gaze behavior in one-handed catching and its relation with interceptive performance: what the eyes can’t tell. PLoS One 10: e0119445, 2015. doi: 10.1371/journal.pone.0119445.

- Chen J, Valsecchi M, Gegenfurtner KR. LRP predicts smooth pursuit eye movement onset during the ocular tracking of self-generated movements. J Neurophysiol 116: 18–29, 2016. doi: 10.1152/jn.00184.2016.

- Collewijn H, Tamminga EP. Human smooth and saccadic eye movements during voluntary pursuit of different target motions on different backgrounds. J Physiol 351: 217–250, 1984. doi: 10.1113/jphysiol.1984.sp015242.

- de la Malla C, López-Moliner J. Predictive plus online visual information optimizes temporal precision in interception. J Exp Psychol Hum Percept Perform 41: 1271–1280, 2015. doi: 10.1037/xhp0000075.

- Dean HL, Martí D, Tsui E, Rinzel J, Pesaran B. Reaction time correlations during eye-hand coordination: behavior and modeling. J Neurosci 31: 2399–2412, 2011. doi: 10.1523/JNEUROSCI.4591-10.2011.

- Delle Monache S, Lacquaniti F, Bosco G. Eye movements and manual interception of ballistic trajectories: effects of law of motion perturbations and occlusions. Exp Brain Res 233: 359–374, 2015. doi: 10.1007/s00221-014-4120-9.

- Epelboim J, Steinman RM, Kowler E, Pizlo Z, Erkelens CJ, Collewijn H. Gaze-shift dynamics in two kinds of sequential looking tasks. Vision Res 37: 2597–2607, 1997. doi: 10.1016/S0042-6989(97)00075-8.

- Findlay JM. Global visual processing for saccadic eye movements. Vision Res 22: 1033–1045, 1982. doi: 10.1016/0042-6989(82)90040-2.

- Fisk JD, Goodale MA. The organization of eye and limb movements during unrestricted reaching to targets in contralateral and ipsilateral visual space. Exp Brain Res 60: 159–178, 1985. doi: 10.1007/BF00237028.

- Fooken J, Yeo S-H, Pai DK, Spering M. Eye movement accuracy determines natural interception strategies. J Vis 16(14): 1, 2016. doi: 10.1167/16.14.1.

- Gribble PL, Everling S, Ford K, Mattar A. Hand-eye coordination for rapid pointing movements. Arm movement direction and distance are specified prior to saccade onset. Exp Brain Res 145: 372–382, 2002. doi: 10.1007/s00221-002-1122-9.

- Groh JM, Born RT, Newsome WT. How is a sensory map read out? Effects of microstimulation in visual area MT on saccades and smooth pursuit eye movements. J Neurosci 17: 4312–4330, 1997.

- Hayhoe M, Ballard D. Eye movements in natural behavior. Trends Cogn Sci 9: 188–194, 2005. doi: 10.1016/j.tics.2005.02.009.

- Johansson RS, Westling G, Bäckström A, Flanagan JR. Eye-hand coordination in object manipulation. J Neurosci 21: 6917–6932, 2001.

- Kreyenmeier P, Fooken J, Spering M. Similar effects of visual context dynamics on eye and hand movements. J Vis 16(12): 457, 2016. doi: 10.1167/16.12.457.

- Land MF. Eye movements and the control of actions in everyday life. Prog Retin Eye Res 25: 296–324, 2006. doi: 10.1016/j.preteyeres.2006.01.002. [DOI] [PubMed] [Google Scholar]

- Land MF, McLeod P. From eye movements to actions: how batsmen hit the ball. Nat Neurosci 3: 1340–1345, 2000. doi: 10.1038/81887. [DOI] [PubMed] [Google Scholar]

- Leclercq G, Blohm G, Lefèvre P. Accurate planning of manual tracking requires a 3D visuomotor transformation of velocity signals. J Vis 12(5): 6, 2012. doi: 10.1167/12.5.6. [DOI] [PubMed] [Google Scholar]

- Leclercq G, Blohm G, Lefèvre P. Accounting for direction and speed of eye motion in planning visually guided manual tracking. J Neurophysiol 110: 1945–1957, 2013. doi: 10.1152/jn.00130.2013. [DOI] [PubMed] [Google Scholar]

- Leon PS, Vanzetta I, Masson GS, Perrinet LU. Motion clouds: model-based stimulus synthesis of natural-like random textures for the study of motion perception. J Neurophysiol 107: 3217–3226, 2012. doi: 10.1152/jn.00737.2011. [DOI] [PubMed] [Google Scholar]

- Lindner A, Schwarz U, Ilg UJ. Cancellation of self-induced retinal image motion during smooth pursuit eye movements. Vision Res 41: 1685–1694, 2001. doi: 10.1016/S0042-6989(01)00050-5. [DOI] [PubMed] [Google Scholar]

- Lisberger SG. Visual guidance of smooth pursuit eye movements. Annu Rev Vis Sci 1: 447–468, 2015. doi: 10.1146/annurev-vision-082114-035349. [DOI] [PubMed] [Google Scholar]

- Lisberger SG, Ferrera VP. Vector averaging for smooth pursuit eye movements initiated by two moving targets in monkeys. J Neurosci 17: 7490–7502, 1997. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lünenburger L, Kutz DF, Hoffmann KP. Influence of arm movements on saccades in humans. Eur J Neurosci 12: 4107–4116, 2000. doi: 10.1046/j.1460-9568.2000.00298.x. [DOI] [PubMed] [Google Scholar]

- Masson G, Proteau L, Mestre DR. Effects of stationary and moving textured backgrounds on the visuo-oculo-manual tracking in humans. Vision Res 35: 837–852, 1995. doi: 10.1016/0042-6989(94)00185-O. [DOI] [PubMed] [Google Scholar]

- Mohrmann-Lendla H, Fleischer AG. The effect of a moving background on aimed hand movements. Ergonomics 34: 353–364, 1991. doi: 10.1080/00140139108967319. [DOI] [PubMed] [Google Scholar]

- Mrotek LA. Following and intercepting scribbles: interactions between eye and hand control. Exp Brain Res 227: 161–174, 2013. doi: 10.1007/s00221-013-3496-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mrotek LA, Soechting JF. Target interception: hand-eye coordination and strategies. J Neurosci 27: 7297–7309, 2007. doi: 10.1523/JNEUROSCI.2046-07.2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Neggers SFW, Bekkering H. Ocular gaze is anchored to the target of an ongoing pointing movement. J Neurophysiol 83: 639–651, 2000. [DOI] [PubMed] [Google Scholar]

- Niehorster DC, Siu WW, Li L. . Manual tracking enhances smooth pursuit eye movements. J Vis 15(15): 11, 2015. doi: 10.1167/15.15.11. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Niemann T, Hoffmann KP. The influence of stationary and moving textured backgrounds on smooth-pursuit initiation and steady state pursuit in humans. Exp Brain Res 115: 531–540, 1997. doi: 10.1007/PL00005723. [DOI] [PubMed] [Google Scholar]

- Orban de Xivry JJ, Bennett SJ, Lefèvre P, Barnes GR. Evidence for synergy between saccades and smooth pursuit during transient target disappearance. J Neurophysiol 95: 418–427, 2006. doi: 10.1152/jn.00596.2005. [DOI] [PubMed] [Google Scholar]

- Orban de Xivry JJ, Lefèvre P. Saccades and pursuit: two outcomes of a single sensorimotor process. J Physiol 584: 11–23, 2007. doi: 10.1113/jphysiol.2007.139881. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Peirce JW. PsychoPy—psychophysics software in Python. J Neurosci Methods 162: 8–13, 2007. doi: 10.1016/j.jneumeth.2006.11.017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pelli DG. The VideoToolbox software for visual psychophysics: transforming numbers into movies. Spat Vis 10: 437–442, 1997. doi: 10.1163/156856897X00366. [DOI] [PubMed] [Google Scholar]

- Saijo N, Murakami I, Nishida S, Gomi H. Large-field visual motion directly induces an involuntary rapid manual following response. J Neurosci 25: 4941–4951, 2005. doi: 10.1523/JNEUROSCI.4143-04.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sailer U, Flanagan JR, Johansson RS. Eye-hand coordination during learning of a novel visuomotor task. J Neurosci 25: 8833–8842, 2005. doi: 10.1523/JNEUROSCI.2658-05.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Scherberger H, Goodale MA, Andersen RA. Target selection for reaching and saccades share a similar behavioral reference frame in the macaque. J Neurophysiol 89: 1456–1466, 2003. doi: 10.1152/jn.00883.2002. [DOI] [PubMed] [Google Scholar]

- Schütz AC, Braun DI, Gegenfurtner KR. . Eye movements and perception: a selective review. . J Vis 11(5): 9, 2011. doi: 10.1167/11.5.9. [DOI] [PubMed] [Google Scholar]

- Simoncini C, Perrinet LU, Montagnini A, Mamassian P, Masson GS. More is not always better: adaptive gain control explains dissociation between perception and action. Nat Neurosci 15: 1596–1603, 2012. doi: 10.1038/nn.3229. [DOI] [PubMed] [Google Scholar]

- Smeets JBJ, Brenner E. Perception and action are based on the same visual information: distinction between position and velocity. J Exp Psychol Hum Percept Perform 21: 19–31, 1995a. doi: 10.1037/0096-1523.21.1.19. [DOI] [PubMed] [Google Scholar]

- Smeets JBJ, Brenner E. Prediction of a moving target’s position in fast goal-directed action. Biol Cybern 73: 519–528, 1995b. doi: 10.1007/BF00199544. [DOI] [PubMed] [Google Scholar]

- Smeets JBJ, Hayhoe MM, Ballard DH. Goal-directed arm movements change eye-head coordination. Exp Brain Res 109: 434–440, 1996. doi: 10.1007/BF00229627. [DOI] [PubMed] [Google Scholar]

- Snyder LH, Calton JL, Dickinson AR, Lawrence BM. Eye-hand coordination: saccades are faster when accompanied by a coordinated arm movement. J Neurophysiol 87: 2279–2286, 2002. [DOI] [PubMed] [Google Scholar]

- Soechting JF, Engel KC, Flanders M. The Duncker illusion and eye-hand coordination. J Neurophysiol 85: 843–854, 2001. [DOI] [PubMed] [Google Scholar]

- Soechting JF, Flanders M. Extrapolation of visual motion for manual interception. J Neurophysiol 99: 2956–2967, 2008. doi: 10.1152/jn.90308.2008. [DOI] [PubMed] [Google Scholar]

- Spering M, Gegenfurtner KR. Contrast and assimilation in motion perception and smooth pursuit eye movements. J Neurophysiol 98: 1355–1363, 2007. doi: 10.1152/jn.00476.2007. [DOI] [PubMed] [Google Scholar]

- Spering M, Gegenfurtner KR. Contextual effects on motion perception and smooth pursuit eye movements. Brain Res 1225: 76–85, 2008. doi: 10.1016/j.brainres.2008.04.061. [DOI] [PubMed] [Google Scholar]

- Spering M, Montagnini A. Do we track what we see? Common versus independent processing for motion perception and smooth pursuit eye movements: a review. Vision Res 51: 836–852, 2011. doi: 10.1016/j.visres.2010.10.017. [DOI] [PubMed] [Google Scholar]

- Spering M, Schütz AC, Braun DI, Gegenfurtner KR. Keep your eyes on the ball: smooth pursuit eye movements enhance prediction of visual motion. J Neurophysiol 105: 1756–1767, 2011. doi: 10.1152/jn.00344.2010. [DOI] [PubMed] [Google Scholar]

- Thompson AA, Henriques DYP. Updating visual memory across eye movements for ocular and arm motor control. J Neurophysiol 100: 2507–2514, 2008. doi: 10.1152/jn.90599.2008. [DOI] [PubMed] [Google Scholar]

- van Beers RJ, Haggard P, Wolpert DM. The role of execution noise in movement variability. J Neurophysiol 91: 1050–1063, 2004. doi: 10.1152/jn.00652.2003. [DOI] [PubMed] [Google Scholar]

- Van der Stigchel S, Nijboer TCW. The global effect: what determines where the eyes land? J Eye Mov Res 4: 1–13, 2011. https://www.ncbi.nlm.nih.gov/entrez/query.fcgi?cmd=Retrieve&db=PubMed&list_uids=21603125&dopt=Abstract. doi: 10.16910/jemr.4.2.3.

- van Donkelaar P, Lee RG, Gellman RS. The contribution of retinal and extraretinal signals to manual tracking movements. Exp Brain Res 99: 155–163, 1994. doi: 10.1007/BF00241420.

- Waespe W, Schwarz U. Slow eye movements induced by apparent target motion in monkey. Exp Brain Res 67: 433–435, 1987. doi: 10.1007/BF00248564.

- Watts RG, Ferrer R. The lateral force on a spinning sphere: aerodynamics of a curveball. Am J Phys 55: 40–44, 1987. doi: 10.1119/1.14969.

- Whitney D, Goodale MA. Visual motion due to eye movements helps guide the hand. Exp Brain Res 162: 394–400, 2005. doi: 10.1007/s00221-004-2154-0.

- Whitney D, Westwood DA, Goodale MA. The influence of visual motion on fast reaching movements to a stationary object. Nature 423: 869–873, 2003. doi: 10.1038/nature01693.

- Wolpert DM, Ghahramani Z. Computational principles of movement neuroscience. Nat Neurosci 3 Suppl: 1212–1217, 2000. doi: 10.1038/81497.

- Yeo SH, Lesmana M, Neog DR, Pai DK. Eyecatch: simulating visuomotor coordination for object interception. ACM Trans Graph 31: 42, 2012. doi: 10.1145/2185520.2185538.

- Yttri EA, Liu Y, Snyder LH. Lesions of cortical area LIP affect reach onset only when the reach is accompanied by a saccade, revealing an active eye-hand coordination circuit. Proc Natl Acad Sci USA 110: 2371–2376, 2013. doi: 10.1073/pnas.1220508110.

- Zhao H, Warren WH. On-line and model-based approaches to the visual control of action. Vision Res 110: 190–202, 2015. doi: 10.1016/j.visres.2014.10.008.

- Zivotofsky AZ. A dissociation between perception and action in open-loop smooth-pursuit ocular tracking of the Duncker Illusion. Neurosci Lett 376: 81–86, 2005. doi: 10.1016/j.neulet.2004.11.031.

- Zivotofsky AZ, White OB, Das VE, Leigh RJ. Saccades to remembered targets: the effects of saccades and illusory stimulus motion. Vision Res 38: 1287–1294, 1998. doi: 10.1016/S0042-6989(97)00288-5.