Abstract

Joint clustering of multiple networks has been shown to be more accurate than clustering individual networks separately. Many multi-view and multi-domain network clustering methods have been developed for joint multi-network clustering. These methods typically assume that there is a common clustering structure shared by all networks, and that different networks provide complementary information on this underlying clustering structure. However, this assumption is too strict to hold in many emerging real-life applications, where multiple networks have diverse data distributions. More commonly, the networks under consideration belong to different underlying groups, and only networks in the same underlying group share similar clustering structures. Better clustering performance can be achieved by treating such groups separately. As a result, an ideal method should be able to automatically detect network groups so that networks in the same group share a common clustering structure. To address this problem, we propose a novel method, ComClus, to simultaneously group and cluster multiple networks. ComClus treats node clusters as features of networks and uses them to differentiate network groups. Network grouping and clustering are coupled and mutually enhanced during the learning process. Extensive experimental evaluation on a variety of synthetic and real datasets demonstrates the effectiveness of our method.

I. Introduction

Network (or graph) clustering is the fundamental problem of discovering closely related objects in a network. In many emerging applications, multiple networks are generated from different conditions or domains, such as gene co-expression networks collected from different tissues of model organisms [1] and social networks generated at different time points [2]. These applications have driven recent research interest in the joint clustering of multiple networks, which has been shown to significantly improve clustering accuracy over single-network clustering methods [3].

The key advantage of multi-network clustering methods is that they leverage the clustering structure shared across all networks, since a consensus clustering structure is more robust to the incompleteness and noise in individual networks. For example, multi-view network clustering methods [3]–[5] work on multiple representations (views) of the same set of data objects. Different views can provide complementary information on the underlying data distribution. Multi-domain network clustering [6], [7] integrates networks of different sets of objects, and uses mappings between objects in different networks to penalize inconsistent clusterings.

To be successful, the existing multi-network clustering methods typically assume that different networks share a consensus clustering structure. This basic assumption, however, is too simplistic for real-world applications. Consider an important bioinformatics problem, gene co-expression network clustering [1]. In a gene co-expression network, each node is a gene and an edge represents the functional association between two connected genes. To enhance performance, we can use multiple gene co-expression networks collected in different tissues. Recent studies show that genes have tissue-specific roles and form tissue-specific interactions [8]. The same set of genes may form a cluster (e.g., a functional module) in similar tissues but not in others. Thus we cannot assume that gene co-expression networks from different tissues form similar clustering structures.

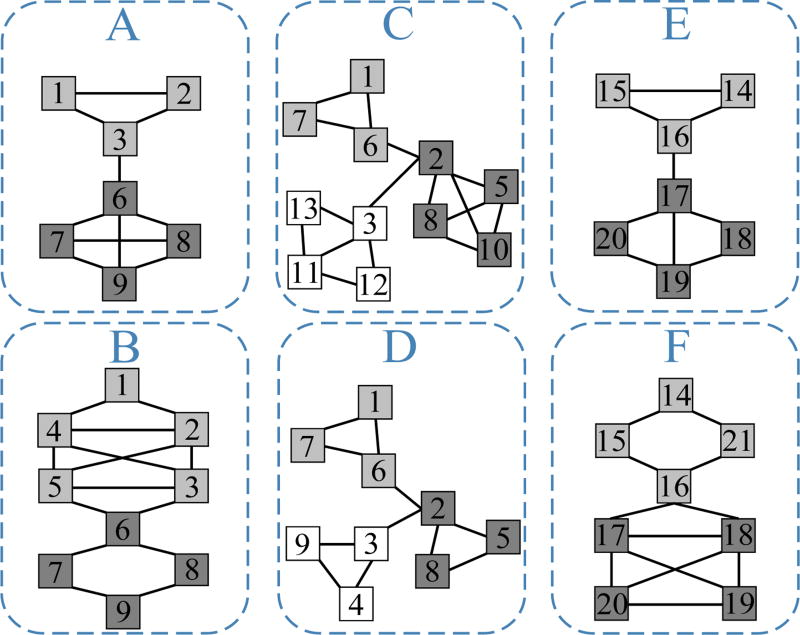

In this paper, we study a novel and generalized problem where we cannot simply assume that the given networks share a consensus clustering structure. Consider the six networks in Fig. 1, which may represent gene co-expression networks from different tissues. Clearly, they do not share a common clustering structure. For example, nodes {1, 2, 3} form a cluster in network A but are in three different clusters in network C. However, by visual inspection, we can partition these networks into three groups, i.e., {A, B}, {C, D} and {E, F}, since {A, B} share an underlying clustering structure, and so do {C, D} and {E, F}. This is reasonable in practice. For example, a set of similar tissues can share many similar gene clusters. As another example, consider the co-author networks of different research areas [9]. Similar areas usually attract many similar author clusters (e.g., research sub-communities). Thus an ideal method to cluster this collection of networks should be able to (1) automatically detect network groups s.t. networks in the same group share a common clustering structure, and (2) enhance clustering accuracy by using group-wise consensus structures.

Fig. 1. An example of six networks. These networks can be grouped into {A, B}, {C, D} and {E, F} according to their clustering structures.

However, real-life networks are often diverse, noisy and incomplete. Even a group of similar networks may not have exactly the same clustering structure. Instead, they may share only a subset of their clusters, and the shared clusters may only partially match. In Fig. 1, networks {C, D} share only two clusters, {1, 7, 6} and {2, 5, 8}, and the other nodes are irrelevant. Therefore, to group networks effectively, an ideal method should identify the subset of clusters that are common among them. This is a novel and non-trivial challenge. The existing multi-network clustering methods either assume all clusters are common [3]–[5] or simply enhance common clusters without identifying them [1], [6], [7], and thus cannot tackle this problem.

In this paper, we propose a novel method, ComClus, to address these challenges. ComClus is novel in combining metric learning [10] with non-negative matrix factorization [11]. Briefly, ComClus treats node clusters as features of networks and groups together networks sharing the same feature subspace (i.e., a common subset of clusters). In ComClus, network grouping and common cluster detection are coupled and mutually enhanced during the learning process. Correctly grouping networks that share a common clustering structure can resolve ambiguities and hence refine common cluster detection. Correct detection of common clusters in turn reduces the possibility that a network is assigned to the wrong group. Experimental results on both synthetic and real-life datasets suggest the effectiveness of the proposed method.

II. Related Work

Several approaches have been developed for multi-network clustering. Multi-view clustering is among the most popular ones [3]–[5]. In these methods, views can be either networks or data-feature matrices of the same set of objects. Recent methods on multi-domain network clustering [6], [7] integrate networks of different sets of objects by cross-network object mapping relationships. Ensemble clustering [12] does not simultaneously cluster multiple data views, but aims to find an agreement among individual clustering results. All these methods make the simplifying assumption that multiple networks or views share a consensus clustering structure.

Multiple networks can also be represented by the tensor model. However, existing tensor decomposition methods, such as CP and Tucker decompositions [13], are good for co-clustering multiple matrices, but are not designed for network data where two modes of the tensor are symmetric. Moreover, tensor decomposition also limits all networks to share a single common underlying clustering structure.

Some methods detect communities in multi-layer networks [2], [14]. Each layer is a distinct network, and the same set of objects is represented in different layers. These approaches aim to identify communities that are consistent in some layers, not to enhance clustering accuracy by using consensus. Thus they have a different goal from ours (and from the approaches mentioned above) and cannot be applied to solve our problem.

In [1], the authors developed a method, NoNClus, to cluster multiple networks with multiple underlying clustering structures. Our work differs markedly from [1], which studies a different problem: enhancing clustering accuracy by using network group information that is already known. In practice, however, such network group information may not be available beforehand. NoNClus can neither identify common clusters among networks nor group networks by their different clustering structures.

III. The Problem

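Let $\mathcal{A} = \{A^{(1)}, \dots, A^{(g)}\}$ be the g given member networks. Each network is represented by its adjacency matrix $A^{(i)} \in \mathbb{R}_{+}^{n_i \times n_i}$, where $n_i$ is the number of nodes in $A^{(i)}$. Moreover, $\mathcal{V}^{(i)}$ represents the set of nodes in $A^{(i)}$, and $\mathcal{I}^{(ij)} = \mathcal{V}^{(i)} \cap \mathcal{V}^{(j)}$ represents the set of common nodes between $A^{(i)}$ and $A^{(j)}$.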

A network group $\mathcal{A}^{(p)}$ is a subset of $\mathcal{A}$ such that networks in $\mathcal{A}^{(p)}$ share a common underlying clustering structure. In this paper, we consider each network to belong to exactly one group. That is, if there are k network groups, then $\bigcup_{p=1}^{k} \mathcal{A}^{(p)} = \mathcal{A}$, and for any p ≠ q, $\mathcal{A}^{(p)} \cap \mathcal{A}^{(q)} = \emptyset$. In Fig. 1, the six networks can be grouped as {A, B}, {C, D} and {E, F}.

The member networks in the same group share a set of common clusters. These clusters are used as features to characterize each network group and distinguish one group from another. In Fig. 1, the common clusters of network group {C, D} are clusters {1, 7, 6} and {2, 5, 8}.

Our goal is to simultaneously group and cluster the given member networks $\mathcal{A}$, such that (1) the member networks are partitioned into k groups with each group sharing a common set of clusters; and (2) the common clusters in each group are identified and their accuracies are enhanced. Note that we focus on finding non-overlapping clusters, which is also the common setting of the existing multi-view (domain) network clustering methods [1], [3]–[7].

IV. The ComClus Algorithm

In this section, we introduce ComClus, a novel subspace NMF method that incorporates metric learning [10] with NMF to learn cluster-level features for simultaneously grouping and clustering different member networks.

A. Preliminaries

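Non-negative matrix factorization (NMF) [15] is widely used for clustering. We adopt the symmetric version of NMF (SNMF) [11] as the basic approach for clustering a single network. Given the adjacency matrix $G \in \mathbb{R}_{+}^{n \times n}$ of a network, SNMF minimizes the following objective function

$$\mathcal{L}_{\mathrm{SNMF}} = \left\|G - VV^{T}\right\|_F^2 = \sum_{i,j=1}^{n} \left(G_{ij} - v_{i*} v_{j*}^{T}\right)^2, \quad \text{s.t. } V \ge 0 \tag{1}$$

where $\|\cdot\|_F$ is the Frobenius norm, $v_{i*} \in \mathbb{R}_{+}^{1 \times k}$ is a k-dimensional latent vector of node i, and $V = [v_{1*}^{T}, \dots, v_{n*}^{T}]^{T} \in \mathbb{R}_{+}^{n \times k}$ is the factor matrix of G. An entry $V_{ij}$ indicates the degree to which node i belongs to cluster j.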
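To make Eq. (1) concrete, the following is a minimal NumPy sketch of SNMF on a toy network. It is an illustration under stated assumptions rather than the solver used in this paper: the damped multiplicative update (with damping `beta = 0.5`) is one commonly used rule for symmetric NMF, and the function names, iteration count and toy matrix are hypothetical.

```python
import numpy as np

def snmf(G, k, n_iter=300, beta=0.5, seed=0, eps=1e-12):
    """Approximate a symmetric non-negative matrix G by V @ V.T with V >= 0.

    Assumption: uses a damped multiplicative update, a common choice for
    symmetric NMF; the paper does not state which solver it uses for Eq. (1).
    """
    rng = np.random.default_rng(seed)
    V = rng.random((G.shape[0], k))          # random non-negative start
    for _ in range(n_iter):
        numer = G @ V
        denom = V @ (V.T @ V) + eps          # eps avoids division by zero
        V *= (1.0 - beta) + beta * numer / denom   # keeps V non-negative
    return V

def snmf_loss(G, V):
    """Squared Frobenius reconstruction error of Eq. (1)."""
    return np.linalg.norm(G - V @ V.T, "fro") ** 2

# Toy network: two disconnected 3-node cliques (self-loops kept for simplicity).
G = np.kron(np.eye(2), np.ones((3, 3)))
V = snmf(G, k=2)
labels = V.argmax(axis=1)   # node i is assigned to the cluster with largest V[i, j]
```

Reading cluster labels off the row-wise maximum of `V` mirrors the interpretation of $V_{ij}$ as a degree of membership.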

B. Clusters as Network Features

Next, we develop a subspace SNMF method to learn the set of clusters that can be used as features to characterize each member network in $\mathcal{A}$. Let $\mathcal{V} = \bigcup_{i=1}^{g} \mathcal{V}^{(i)}$ be the global set of nodes in all member networks. In SNMF, i.e., Eq. (1), each node i is represented in a k-dimensional latent space by $v_{i*}$ for a single network. Given multiple networks $\mathcal{A}$, we aggregate their latent spaces into a single global h-dimensional latent space, where h is the number of latent dimensions. Then each node x in $\mathcal{V}$ is represented by a global latent vector $u_{x*} \in \mathbb{R}_{+}^{1 \times h}$.

In Eq. (1), each entry Gij is approximated by the inner product between vi* and vj*, where the full spaces of the k-dimensional latent vectors vi* and vj* are used for approximation. In our method, when approximating an entry in one member network A(i), we only use a subspace of the global h-dimensional ux* and uy*.

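Specifically, for each network $A^{(i)}$, we define a metric vector $w^{(i)} \in \mathbb{R}_{+}^{h \times 1}$ whose entry $w_p^{(i)}$ indicates the importance of the global latent dimension p to network $A^{(i)}$. Therefore, when we approximate an entry $A_{xy}^{(i)}$, we use $u_{x*}\,\mathrm{diag}(w^{(i)})\,u_{y*}^{T}$, where $\mathrm{diag}(w^{(i)})$ is the diagonal matrix whose diagonal is $w^{(i)}$. Using the square loss function, we can collectively approximate $A^{(i)}$ by minimizing

$$\mathcal{L}_{A}^{(i)} = \sum_{x, y \in \mathcal{V}^{(i)}} \left(A_{xy}^{(i)} - u_{x*}\,\mathrm{diag}(w^{(i)})\,u_{y*}^{T}\right)^2 \tag{2}$$

In general, different networks can have different node sets $\mathcal{V}^{(i)}$, and thus different sizes. Let $n = |\mathcal{V}|$. We define for each network $A^{(i)}$ a mapping matrix $O^{(i)} \in \{0, 1\}^{n_i \times n}$ s.t. $O^{(i)}_{xy} = 1$ iff node x in $\mathcal{V}^{(i)}$ and node y in $\mathcal{V}$ represent the same object. Letting $U = [u_{1*}^{T}, \dots, u_{n*}^{T}]^{T} \in \mathbb{R}_{+}^{n \times h}$ be a global latent factor matrix, we obtain the matrix form of Eq. (2)

$$\mathcal{L}_{A}^{(i)} = \left\|A^{(i)} - O^{(i)} U\,\mathrm{diag}(w^{(i)})\,U^{T} (O^{(i)})^{T}\right\|_F^2 \tag{3}$$

Then for all member networks, we have

$$\mathcal{L}_{A} = \sum_{i=1}^{g} \left\|A^{(i)} - O^{(i)} U\,\mathrm{diag}(w^{(i)})\,U^{T} (O^{(i)})^{T}\right\|_F^2 \tag{4}$$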

In Eq. (4), all member networks share the same latent factor matrix U. When approximating $A^{(i)}$, a sub-block of U is used. Fig. 2 illustrates the idea. In this process, $O^{(i)}$ selects the rows of U for $A^{(i)}$, which corresponds to the selection of node set $\mathcal{V}^{(i)}$ from $\mathcal{V}$, while $\mathrm{diag}(w^{(i)})$ selects the columns of U for $A^{(i)}$, which corresponds to the selection of a latent subspace. Therefore, if two networks $A^{(i)}$ and $A^{(j)}$ share many nodes, they will have a large overlap in the rows of U. If $A^{(i)}$ and $A^{(j)}$ further show similar clustering structures, it is highly likely that they will share similar columns in U, i.e., similar $w^{(i)}$ and $w^{(j)}$. This is because using similar sub-blocks of U achieves good approximations for both $A^{(i)}$ and $A^{(j)}$. On the other hand, if $A^{(i)}$ and $A^{(j)}$ have dissimilar clustering structures, using similar subspaces of U (i.e., similar sub-blocks of U) to approximate both will result in a large loss function value. By minimizing $\mathcal{L}_A$, $w^{(i)}$ and $w^{(j)}$ then tend to lie in separate subspaces of U.

Fig. 2. An illustration of the subspace-based SNMF for two networks.

In Eq. (1), the latent dimensions (i.e., columns) of V represent clusters of nodes. Thus in our subspace SNMF, the columns of U represent latent clusters. Each $w^{(i)}$ (recall that $\mathrm{diag}(w^{(i)})$ selects the columns of U) is a cluster-level feature vector in which an entry $w_p^{(i)}$ indicates the selection of the pth latent cluster for network $A^{(i)}$. Therefore, $w^{(i)}$ carries the clustering structure information of network $A^{(i)}$ and can be used as a feature for network grouping.
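The row and column selection in Eq. (4) can be sketched numerically as follows. This is a hedged illustration, assuming that the metric vectors are stored as the columns of a matrix `W`; the function name `subspace_loss` and the toy matrices are hypothetical and not taken from the paper's code.

```python
import numpy as np

def subspace_loss(A_list, O_list, U, W):
    """Sum over networks of ||A_i - O_i U diag(w_i) U^T O_i^T||_F^2, as in Eq. (4).

    Assumption: column i of W holds the metric vector w^(i).
    """
    total = 0.0
    for i, (A, O) in enumerate(zip(A_list, O_list)):
        Ui = O @ U                       # rows of U for the nodes of network i
        approx = (Ui * W[:, i]) @ Ui.T   # equals Ui @ diag(w_i) @ Ui.T
        total += np.linalg.norm(A - approx, "fro") ** 2
    return total

# Toy setup: 4 global nodes, h = 2 latent clusters, two member networks.
U = np.array([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]])
O1 = np.eye(4)             # network 1 contains all four nodes
O2 = np.eye(4)[[0, 1]]     # network 2 contains only global nodes 0 and 1
W = np.array([[1.0, 1.0],  # network 1 uses both latent clusters,
              [1.0, 0.0]]) # network 2 uses only the first one
A1 = U @ np.diag(W[:, 0]) @ U.T
A2 = (O2 @ U) @ np.diag(W[:, 1]) @ (O2 @ U).T
loss = subspace_loss([A1, A2], [O1, O2], U, W)
```

Because the toy networks are built from the same `U` and `W`, the loss is zero here; changing a column of `W` so that a network selects the wrong latent cluster makes the loss positive.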

C. Regularization on Network Node Sets

In this section, we develop a regularizer to encode the similarity between the node sets of different networks. The intuition is based on the following observation. Consider the special case in which two networks A(1) and A(2) share few or no nodes, which makes O(1) and O(2) different. Their selected sub-blocks from U will have little overlap and be separated vertically. Using the example in Fig. 2, in this case U(2) may lie vertically below U(1). A(1) and A(2) can then be well approximated by the two different sub-blocks of U regardless of whether w(1) and w(2) are similar. Thus it is possible that w(1) and w(2) are similar while O(1) and O(2) are different. However, this is counterintuitive, since two networks with few common nodes should be considered dissimilar and we expect them to have dissimilar structural feature vectors w(1) and w(2). To address this issue, we regularize on the similarity of the network node sets. The details are as follows.

We measure the similarity between $w^{(i)}$ and $w^{(j)}$ by their inner product $(w^{(i)})^{T} w^{(j)}$. To penalize the similarity when $A^{(i)}$ and $A^{(j)}$ share few nodes, we propose the following penalty function.

$$\sum_{i,j=1}^{g} \Phi_{i,j}\,(w^{(i)})^{T} w^{(j)} \tag{5}$$

where $\Phi_{i,j}$ is the penalty strength on $(w^{(i)})^{T} w^{(j)}$. A proper $\Phi_{i,j}$ should have a high value when $|\mathcal{I}^{(ij)}|$ is small and a low value when $|\mathcal{I}^{(ij)}|$ is large. We use the following logistic function¹ to measure the penalty strength.

| (6) |

where , λ is a parameter that can be set to log(999) s.t. $\Phi_{i,j} \in [10^{-3}, 1 - 10^{-3}]$.
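The behaviour described for the penalty strength can be illustrated with a short sketch. The exact form of Eq. (6) is not reproduced here, so the code below rests on two loudly stated assumptions: the overlap is normalised by the smaller of the two node sets, and the logistic is decreasing and centred at an overlap ratio of 0.5. The function name `penalty_strength` is hypothetical. With λ = log(999), this particular form does reach the stated range of [10^-3, 1 - 10^-3].

```python
import math

def penalty_strength(n_common, n_i, n_j, lam=math.log(999)):
    """Illustrative logistic penalty: high for small overlap, low for large overlap.

    ASSUMPTION: the overlap ratio x = |I_ij| / min(n_i, n_j) and the form
    1 / (1 + exp(lam * (2x - 1))) are guesses consistent with the text,
    not the paper's confirmed Eq. (6).
    """
    x = n_common / min(n_i, n_j)
    return 1.0 / (1.0 + math.exp(lam * (2.0 * x - 1.0)))

no_overlap = penalty_strength(0, 10, 10)      # two networks with no common nodes
full_overlap = penalty_strength(10, 10, 20)   # smaller network fully contained
half_overlap = penalty_strength(5, 10, 10)    # half of the nodes shared
```

Other monotonically decreasing functions of the overlap would serve the same purpose; the logistic is convenient because λ directly controls its range.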

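Let $W = [w^{(1)}, \dots, w^{(g)}] \in \mathbb{R}_{+}^{h \times g}$ and let $\Phi \in \mathbb{R}_{+}^{g \times g}$ be the matrix whose (i, j)th entry is $\Phi_{i,j}$. Then we have

$$\sum_{i,j=1}^{g} \Phi_{i,j}\,(w^{(i)})^{T} w^{(j)} = \left\|\Phi \circ (W^{T} W)\right\|_1 \tag{7}$$

where $\circ$ is the entry-wise product, and $\|\cdot\|_1$ is the ℓ1 norm.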
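The equivalence between the pairwise penalty and its matrix form in Eq. (7) can be checked numerically. The sketch below assumes that W denotes the matrix whose columns are the metric vectors w(i) and that the ℓ1 norm is taken entry-wise; the random test matrices are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
h, g = 4, 3
W = rng.random((h, g))        # column i plays the role of w^(i), non-negative
Phi = rng.random((g, g))
Phi = (Phi + Phi.T) / 2       # penalty strengths are symmetric in (i, j)

# Pairwise form: sum over all (i, j) of Phi_ij * <w^(i), w^(j)>.
pairwise = sum(Phi[i, j] * (W[:, i] @ W[:, j])
               for i in range(g) for j in range(g))

# Matrix form: entry-wise l1 norm of Phi ∘ (W^T W).
matrix_form = np.abs(Phi * (W.T @ W)).sum()
```

The two quantities agree because every entry of Φ and of WᵀW is non-negative, so the absolute values in the ℓ1 norm have no effect.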

D. Network Grouping

In order to assign member networks into k groups while detecting common clusters within each network group, we define k centroid vectors $\{s^{(1)}, \dots, s^{(k)}\}$, where $s^{(j)} \in \mathbb{R}_{+}^{h \times 1}$. Member networks in the same group share the same centroid vector. That is, if network $A^{(i)}$ belongs to group $\mathcal{A}^{(j)}$, we want to minimize the difference $\|w^{(i)} - s^{(j)}\|_2^2$. Therefore, $s^{(j)}$ represents the consistent cluster feature subspace of member networks in group $\mathcal{A}^{(j)}$, and large entries in $s^{(j)}$ indicate the shared latent dimensions, i.e., common clusters, in group $\mathcal{A}^{(j)}$.

Let $v_{i*} \in \{0, 1\}^{1 \times k}$ be the group membership vector of $A^{(i)}$, i.e., $v_{ij} = 1$ iff $A^{(i)} \in \mathcal{A}^{(j)}$, and let $S = [s^{(1)}, \dots, s^{(k)}]$. We can collectively minimize the difference between the cluster feature vectors and centroid vectors by minimizing

$$\sum_{i=1}^{g} \left\|w^{(i)} - S v_{i*}^{T}\right\|_2^2 \tag{8}$$

Equivalently, let $V = [v_{1*}^{T}, \dots, v_{g*}^{T}]^{T} \in \{0, 1\}^{g \times k}$ and let W be the matrix whose columns are the $w^{(i)}$; then we have

$$\left\|W - S V^{T}\right\|_F^2 \tag{9}$$

Eq. (9) can be interpreted as a co-clustering of W by NMF [15], [16]. Thus we can relax the {0, 1} constraint on V s.t. $V \in \mathbb{R}_{+}^{g \times k}$ to avoid mixed integer programming [17], which is difficult to solve. An entry $V_{ij}$ then indicates the degree to which $A^{(i)}$ belongs to network group $\mathcal{A}^{(j)}$.
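As a small numerical illustration of the grouping loss, the sketch below compares the per-network form with the matrix form for a one-hot membership matrix. It assumes W has the feature vectors w(i) as columns, S has the centroids s(j) as columns, and row i of V is the membership vector of network i; all values are hypothetical toy data.

```python
import numpy as np

# h = 2 latent clusters, g = 4 networks, k = 2 groups (toy values).
W = np.array([[1.0, 0.9, 0.0, 0.1],
              [0.0, 0.1, 1.0, 0.9]])      # column i is w^(i)
S = np.array([[0.95, 0.05],
              [0.05, 0.95]])              # column j is the centroid s^(j)
V = np.array([[1, 0], [1, 0], [0, 1], [0, 1]], dtype=float)  # one-hot rows

# Per-network form: distance of each w^(i) to the centroid of its own group.
per_network = sum(np.sum((W[:, i] - S @ V[i]) ** 2) for i in range(W.shape[1]))

# Matrix form: squared Frobenius norm of W - S V^T.
matrix_form = np.linalg.norm(W - S @ V.T, "fro") ** 2

# After relaxing V to non-negative reals, groups are read off by row-wise argmax.
groups = V.argmax(axis=1)
```

With binary V both forms coincide; the matrix form remains well defined once V is relaxed to non-negative real values.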

E. The Unified Model

Combining the loss function of subspace SNMF in Eq. (4), the penalty function in Eq. (7) and the loss function of network grouping in Eq. (9), we obtain a unified objective function for simultaneous multi-network grouping and clustering.

$$\mathcal{J} = \sum_{i=1}^{g} \left\|A^{(i)} - O^{(i)} U\,\mathrm{diag}(w^{(i)})\,U^{T} (O^{(i)})^{T}\right\|_F^2 + \alpha \left\|\Phi \circ (W^{T} W)\right\|_1 + \beta \left\|W - S V^{T}\right\|_F^2 \tag{10}$$

where α and β are two parameters controlling the importance of the penalty function and of network grouping, respectively. Note that $W$ and $\{w^{(i)}\}_{i=1}^{g}$ are two different representations of the same variables; we keep both of them in our algorithm.

Formally, we formulate a joint optimization problem as

$$\min_{U, V, S, W \ge 0} \; \mathcal{J} + \rho \left(\|U\|_1 + \|V\|_1 + \|S\|_1\right) \tag{11}$$

In Eq. (11), we also add ℓ1 norms on U, V and S to provide the option of sparseness constraints. This can be useful when nodes (member networks) do not belong to many clusters (network groups) [16] and each network group does not have many common clusters. ρ is a controlling parameter. Intuitively, the larger ρ is, the sparser {U, V, S} are.
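For readers who want to evaluate the full objective, the following sketch adds up its four parts for given factor matrices. It is a reading of Eqs. (10) and (11) under stated assumptions rather than the authors' implementation: W stores the metric vectors as columns, the ℓ1 norms are entry-wise, and the function name `comclus_objective` and the toy inputs are hypothetical. It only evaluates the objective and does not perform the optimization.

```python
import numpy as np

def comclus_objective(A_list, O_list, U, W, V, S, Phi, alpha, beta, rho):
    """Evaluate fit + alpha * penalty + beta * grouping + rho * sparsity."""
    fit = 0.0
    for i, (A, O) in enumerate(zip(A_list, O_list)):
        Ui = O @ U                                   # rows of U used by network i
        fit += np.linalg.norm(A - (Ui * W[:, i]) @ Ui.T, "fro") ** 2
    penalty = np.abs(Phi * (W.T @ W)).sum()          # node-set regularizer
    grouping = np.linalg.norm(W - S @ V.T, "fro") ** 2
    sparsity = np.abs(U).sum() + np.abs(V).sum() + np.abs(S).sum()
    return fit + alpha * penalty + beta * grouping + rho * sparsity

# Toy case: one network on two nodes that is fit exactly (g = 1, h = 2, k = 1).
U = np.eye(2)
W = np.array([[1.0], [1.0]])
V = np.array([[1.0]])
S = np.array([[1.0], [1.0]])
Phi = np.array([[0.001]])
A = U @ np.diag(W[:, 0]) @ U.T
value = comclus_objective([A], [np.eye(2)], U, W, V, S, Phi,
                          alpha=1.0, beta=1.0, rho=0.1)
```

In this toy case the fit and grouping terms vanish, so the value consists only of the small self-penalty and the sparsity term.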

V. Learning Algorithm

Since the objective function in Eq. (11) is not jointly convex, we adopt an alternating minimization framework that alternately solves for U, V, S and W until a stationary point is reached. For the details, please refer to the online Supplementary Material².

Cluster Membership Inference

After obtaining U, V, S, and W, we can infer the network group of $A^{(i)}$ by $j^* = \arg\max_j V_{ij}$. We can infer the cluster membership of node x in $A^{(i)}$ by $p^* = \arg\max_p (U\,\mathrm{diag}(w^{(i)}))_{xp}$. Also, for a node x, we can infer its membership to a common cluster shared in network group j by $p^* = \arg\max_p (U\,\mathrm{diag}(s^{(j)}))_{xp}$. In addition, we can sort the values in $s^{(j)}$ in descending order to identify the most common clusters in network group j.
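The inference rules above reduce to a few argmax operations. The sketch below applies them to small hypothetical factor matrices, assuming S stores the centroids s(j) as columns and V stores one row per network; the numbers are made up for illustration only.

```python
import numpy as np

U = np.array([[0.9, 0.1, 0.0],
              [0.8, 0.0, 0.3],
              [0.1, 0.7, 0.2],
              [0.0, 0.2, 0.9]])           # n = 4 nodes, h = 3 latent clusters
V = np.array([[0.8, 0.2],
              [0.1, 0.9]])                # g = 2 networks, k = 2 groups
S = np.array([[1.0, 0.0],
              [0.6, 0.1],
              [0.0, 0.9]])                # column j is the centroid s^(j)

# Network group of each network: argmax over the columns of V.
group_of_network = V.argmax(axis=1)

# Common-cluster membership of each node within group j = 0:
# argmax over p of (U diag(s^(j)))_{xp}, computed by scaling the columns of U.
j = 0
common_cluster_of_node = (U * S[:, j]).argmax(axis=1)

# Latent clusters of group j ranked from most to least common.
ranked_clusters = np.argsort(-S[:, j])
```

Scaling the columns of `U` by `S[:, j]` suppresses latent clusters that group j does not share, so a node is only assigned to a cluster that is common within that group.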

VI. Experimental Results

Simulation Study

We first evaluate ComClus using synthetic datasets. The member networks are generated as follows. Suppose we have k network groups. For each network group, we first generate an underlying clustering structure with dc clusters (30 nodes per cluster). The k underlying clustering structures have the same set of nodes but different node cluster memberships. Then member networks are generated from each underlying clustering structure. Based on an underlying clustering structure, each member network is appended with dn irrelevant (“noisy”) clusters (30 nodes per cluster). The noisy clusters of different networks may have different nodes.

Fig. 3(a) shows an example using dc = 3 and dn = 3, where non-zero entries are set to 1. To add noise, we randomly flip a fraction ω0 of the 1 entries in the matrix to 0 and a fraction ω1 of the 0 entries to 1. To generate member networks of different sizes, we randomly remove or add a fraction ε of the nodes in the resulting matrix. ε follows a normal distribution with mean μ and standard deviation σ, and its value is restricted to lie between 0 and 1. An example member network with 193 nodes generated using ω0 = 80%, ω1 = 5%, μ = 0.1, σ = 0.05 is shown in Fig. 3(b).

Fig. 3. Synthetic dataset generation, shown by network adjacency matrices.
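The first two generation steps (block structure, then entry flipping) can be sketched as below. This is a simplified illustration under explicit assumptions: flips are decided on the upper triangle and mirrored so that the matrix stays symmetric, the diagonal is treated like any other entry, and the node removal and addition step controlled by ε is omitted. The function name `make_member_network` is hypothetical.

```python
import numpy as np

def make_member_network(n_clusters, cluster_size, w0, w1, seed=0):
    """Block-diagonal 0/1 adjacency matrix with randomly flipped entries.

    ASSUMPTION: flips are drawn once per unordered node pair and mirrored,
    which the text does not specify; the epsilon step is left out.
    """
    rng = np.random.default_rng(seed)
    labels = np.repeat(np.arange(n_clusters), cluster_size)
    clean = (labels[:, None] == labels[None, :]).astype(int)
    iu = np.triu_indices(clean.shape[0])          # upper triangle incl. diagonal
    vals = clean[iu]
    r = rng.random(vals.shape[0])
    # A 1 survives with probability 1 - w0; a 0 becomes 1 with probability w1.
    flipped = np.where(vals == 1, (r >= w0).astype(int), (r < w1).astype(int))
    noisy = np.zeros_like(clean)
    noisy[iu] = flipped
    noisy = np.maximum(noisy, noisy.T)            # mirror to the lower triangle
    return noisy, labels

# Six clusters of 30 nodes with the noise levels quoted in the text.
A, labels = make_member_network(6, 30, w0=0.8, w1=0.05)
```

Setting both noise levels to zero returns the clean block-diagonal matrix, which is a quick sanity check on the flipping logic.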

Using this generation process, we generate two types of synthetic datasets. Both have k = 5 network groups, each with 10 networks (thus 50 networks in total). In the first dataset, dc = 6 and dn = 0. All member networks have the same set of 180 nodes. ω0 and ω1 are set to 80% and 5%, respectively, to simulate noise. We refer to this dataset as the SynView dataset. In the second dataset, dc = 3 and dn = 3. To simulate noise, we set ω0 = 80%, ω1 = 5%, μ = 0.1, σ = 0.05. Thus different networks have different node sets and sizes. We refer to this dataset as the SynNet dataset.

We compare ComClus with the state-of-the-art methods, including (1) SNMF [11]; (2) Spectral clustering (Spectral) [18]; (3) Multi-view pair-wise co-regularized spectral clustering (PairCRSC) [3]; (4) Multi-view centroid-based co-regularized spectral clustering (CentCRSC) [3]; (5) Multi-view co-training spectral clustering (CTSC) [5]; (6) Tensor factorization (TF) [13]; (7) multi-domain co-regularized graph clustering (CGC) [6]; and (8) NoNClus [1].

SNMF and spectral clustering can only be applied to single networks. PairCRSC, CentCRSC, CTSC and TF can only be applied to the SynView dataset. CGC is a recent multi-domain graph clustering method that can be applied to the SynNet dataset. NoNClus can be applied to the SynNet dataset provided that the similarity between networks is available. Thus, we generate a similarity matrix for the 50 member networks using the same method described above, setting ω0 = 80%, ω1 = 5%, μ = 0, σ = 0. The generated similarity matrix allows the member networks to be partitioned into 5 groups.

The accuracies of common clusters are evaluated using both normalized mutual information (NMI) and purity accuracy (ACC), which are standard evaluation metrics. Table I shows the averaged results of different methods over 100 runs. The NMIs and ACCs are averaged over all member networks. The parameters are tuned for optimal performance of all methods.
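For reference, both metrics can be computed from label lists alone. The sketch below is a plain-Python illustration; it assumes that NMI is normalised by the geometric mean of the two entropies, which is one common convention and may differ from the normalisation used in the reported experiments. The function names are hypothetical.

```python
import math
from collections import Counter

def purity(true_labels, pred_labels):
    """Fraction of objects that carry the majority true label of their cluster."""
    by_cluster = {}
    for t, p in zip(true_labels, pred_labels):
        by_cluster.setdefault(p, Counter())[t] += 1
    return sum(max(c.values()) for c in by_cluster.values()) / len(true_labels)

def nmi(true_labels, pred_labels):
    """Mutual information divided by sqrt(H(true) * H(pred)).

    ASSUMPTION: geometric-mean normalisation; other variants exist.
    """
    n = len(true_labels)
    ct, cp = Counter(true_labels), Counter(pred_labels)
    joint = Counter(zip(true_labels, pred_labels))
    mi = sum(c / n * math.log(c * n / (ct[t] * cp[p]))
             for (t, p), c in joint.items())
    ht = -sum(c / n * math.log(c / n) for c in ct.values())
    hp = -sum(c / n * math.log(c / n) for c in cp.values())
    return mi / math.sqrt(ht * hp) if ht > 0 and hp > 0 else 0.0

truth = [0, 0, 1, 1]
perfect = [1, 1, 0, 0]      # same partition, different label names
uninformative = [0, 1, 0, 1]
```

Both metrics are invariant to how clusters are named, so a clustering that matches the ground truth up to a relabelling scores 1.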

TABLE I. Clustering accuracy on synthetic datasets.

| Method | SynView NMI | SynView ACC | SynNet NMI | SynNet ACC |
|---|---|---|---|---|
| SNMF | 0.4853 | 0.6900 | 0.3391 | 0.7659 |
| Spectral | 0.4723 | 0.6698 | 0.3145 | 0.7408 |
| PairCRSC | 0.3280 | 0.5437 | − | − |
| CentCRSC | 0.6541 | 0.8155 | − | − |
| CTSC | 0.4604 | 0.6587 | − | − |
| TF | 0.4803 | 0.5431 | − | − |
| CGC | 0.1291 | 0.3322 | 0.4498 | 0.7625 |
| NoNClus | 0.5572 | 0.7320 | 0.3824 | 0.7454 |
| ComClus | 0.9764 | 0.9766 | 0.8605 | 0.9896 |

From Table I, we observe that ComClus achieves significantly better performance than the other methods on both datasets. The multi-view/domain clustering methods, PairCRSC, CentCRSC, CTSC, TF and CGC, assume that all member networks share the same underlying clustering structure and thus cannot handle these datasets. NoNClus differentiates network clustering structures based entirely on the similarity between member networks, which makes it sensitive to noise in the similarity matrix. In contrast, ComClus is able to automatically group networks based on their shared clusters and to use the grouping information to further improve the clustering of individual networks.

20Newsgroup Dataset

Next we evaluate ComClus using the 20Newsgroup dataset³. We use 12 news groups of 3 categories, Comp, Rec and Talk⁴, corresponding to 3 underlying clustering structures, each with 4 clusters (news groups). In this study, we generate 10 member networks from each category. Thus there are 30 member networks forming 3 groups corresponding to the 3 categories. Each member network contains 200 randomly sampled documents from the 4 news groups in a category (50 documents from each news group). The adjacency matrix of documents is computed based on the cosine similarity between document contents.
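A document network of this kind can be built from a document-term matrix as sketched below. The representation of document contents is not specified in the text, so the sketch assumes plain non-negative term-count or tf-idf rows; removing self-loops is likewise an assumption, and the function name `cosine_adjacency` is hypothetical.

```python
import numpy as np

def cosine_adjacency(X, eps=1e-12):
    """Cosine similarity between the rows of a document-term matrix X.

    ASSUMPTION: rows are non-negative term weights, and self-similarities
    are zeroed out so that the network has no self-loops.
    """
    norms = np.linalg.norm(X, axis=1, keepdims=True)
    Xn = X / np.maximum(norms, eps)    # guard against empty documents
    A = Xn @ Xn.T
    np.fill_diagonal(A, 0.0)
    return A

# Three toy documents over a two-word vocabulary.
X = np.array([[1.0, 0.0],
              [2.0, 0.0],
              [0.0, 3.0]])
A = cosine_adjacency(X)
```

With non-negative term weights every similarity lies in [0, 1], so the result can be used directly as a non-negative weighted adjacency matrix for SNMF-style methods.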

The common nodes in different member networks are generated as follows. For any two member networks from the same category, a document in one network is randomly mapped to a document with the same cluster label (e.g., comp.graphics) in another network. For any two member networks from different categories, the documents are randomly mapped with one-to-one relationship. We vary the ratio of common nodes, γ, from 0 to 1 to evaluate its effects.

For comparison, the single-network clustering methods SNMF and Spectral clustering are performed on individual member networks. The widely used k-means clustering [19] is also selected as a baseline method; it is applied to the original document-word matrix instead of the network data. Note that the multi-view clustering methods PairCRSC, CentCRSC, CTSC, and TF cannot be applied here since they require a full mapping of nodes between networks. We omit CGC since it is very slow on tens of networks. To apply NoNClus, we calculate the cosine similarity between the overall word frequencies of member networks.

Fig. 4 shows the averaged NMI and ACC of different methods over 100 runs. In general, ComClus achieves better performance than the other methods. Note that ComClus outperforms NoNClus even though NoNClus uses high-quality similarity information between member networks. This shows the importance of grouping and clustering multiple networks simultaneously. The blue dotted curve in Fig. 4 shows that ComClus achieves higher NMI and ACC of network grouping as more common nodes are added. This confirms that better common cluster detection enhances network grouping.

Fig. 4. Performance on the 20Newsgroup dataset with varying common node ratios. The blue dotted curve shows the grouping performance of ComClus.

Reality Mining Dataset

In this section, we evaluate ComClus on the MIT reality mining proximity networks [20]. From the original dataset, we obtain 371 proximity networks of 91 subjects (e.g., faculty, staff, students). Each network is constructed from one day between July 2004 and July 2005. In a proximity network, a pair of subjects is linked if their phones detect each other (within a certain distance) at least once on that day.

As analyzed in [20], subjects have different roles at work and off campus, which is reflected in different subject clusters (e.g., working groups or social communities) in the in-campus and off-campus settings. As suggested by [20], we split each of the 371 proximity networks at 8 p.m. to obtain two groups of networks, in-campus and off-campus, respectively.

Since many proximity networks are very sparse without obvious structure, we process them in two steps. First, we extract the networks from September to December 2004, a period that generally has more data collected than other periods. Then we aggregate the networks by month. The resulting dataset, RM-month, contains 8 proximity networks, 4 of which are in-campus and 4 of which are off-campus.

Next we evaluate ComClus to see if it can (1) automatically group in (off)-campus networks together; and (2) enhance common subject clusters in in (off)-campus networks.

First, we observe that ComClus correctly groups in-campus and off-campus networks. To evaluate the subject clusters in in-campus networks, we use the ground truth from the dataset, which indicates the subjects’ affiliations, i.e., MIT media lab or business school. The averaged NMIs (over all in-campus networks) of different methods are shown in Table II. Here NoNClus is omitted because network similarity is not available in this dataset. For spectral-based methods, we report the best results, which are given by CTSC. As can be seen, ComClus exactly recovers the subject clusters in in-campus networks, while none of the baseline methods achieves this accuracy. This shows the importance of grouping networks and enhancing clustering by group-wise consensus. For off-campus networks, since there is no ground truth, we use internal density [21] as the cluster quality measure. As shown in Table II, ComClus achieves the best averaged density, which indicates its capability to discover meaningful clusters in off-campus networks. These results imply that subjects may have different communities during and after work.

TABLE II. Performance on RM-month dataset.

| Measure | SNMF | CTSC | CGC | TF | ComClus |
|---|---|---|---|---|---|
| NMI | 0.7278 | 0.8705 | 0.9083 | 0.9066 | 1.0000 |
| Density | 0.2019 | 0.1822 | 0.1702 | 0.1852 | 0.2253 |

VII. Conclusion

In this paper, we generalize the existing multi-network clustering methods to consider network groups and use group-wise consensus to enhance clustering accuracy. We treat node clusters as features of networks and propose a novel method ComClus to infer the shared cluster-level feature subspaces in network groups. ComClus is not only able to simultaneously group networks and detect common clusters, but also mutually enhance the performance of both procedures. Extensive experiments on synthetic and real datasets demonstrate the effectiveness of our method.

Acknowledgments

This work was partially supported by NSF grant IIS-1162374, NSF CAREER, and NIH grant R01 GM115833.

Footnotes

1. Other functions can also be used. We choose the logistic function because its range and shape are easy to control.

2. The supplementary material, experimental codes and datasets are available at http://filer.case.edu/jxn154/ComClus.

4. Comp: comp.graphics, comp.os.ms-windows.misc, comp.sys.ibm.pc.hardware, comp.sys.mac.hardware; Rec: rec.autos, rec.motorcycles, rec.sport.baseball, rec.sport.hockey; Talk: talk.politics.guns, talk.politics.mideast, talk.politics.misc, talk.religion.misc.

References

- 1. Ni J, Tong H, Fan W, Zhang X. Flexible and robust multi-network clustering. KDD. 2015.

- 2. Mucha PJ, Richardson T, Macon K, Porter MA, Onnela J-P. Community structure in time-dependent, multiscale, and multiplex networks. Science. 2010;328(5980):876–878. doi: 10.1126/science.1184819.

- 3. Kumar A, Rai P, Daumé H. Co-regularized multi-view spectral clustering. NIPS. 2011.

- 4. Zhou D, Burges CJ. Spectral clustering and transductive learning with multiple views. ICML. 2007.

- 5. Kumar A, Daumé H. A co-training approach for multi-view spectral clustering. ICML. 2011.

- 6. Cheng W, Zhang X, Guo Z, Wu Y, Sullivan PF, Wang W. Flexible and robust co-regularized multi-domain graph clustering. KDD. 2013.

- 7. Liu R, Cheng W, Tong H, Wang W, Zhang X. Robust multi-network clustering via joint cross-domain cluster alignment. ICDM. 2015.

- 8. Bossi A, Lehner B. Tissue specificity and the human protein interaction network. Mol. Syst. Biol. 2009;5(1). doi: 10.1038/msb.2009.17.

- 9. Ni J, Tong H, Fan W, Zhang X. Inside the atoms: ranking on a network of networks. KDD. 2014.

- 10. Xing EP, Jordan MI, Russell S, Ng AY. Distance metric learning with application to clustering with side-information. NIPS. 2002.

- 11. Ding CH, He X, Simon HD. On the equivalence of nonnegative matrix factorization and spectral clustering. SDM. 2005.

- 12. Strehl A, Ghosh J. Cluster ensembles - a knowledge reuse framework for combining partitionings. AAAI/IAAI. 2002.

- 13. Kolda TG, Bader BW. Tensor decompositions and applications. SIAM Review. 2009;51(3):455–500.

- 14. Boden B, Günnemann S, Hoffmann H, Seidl T. Mining coherent subgraphs in multi-layer graphs with edge labels. KDD. 2012.

- 15. Lee DD, Seung HS. Algorithms for non-negative matrix factorization. NIPS. 2001.

- 16. Hsieh C-J, Dhillon IS. Fast coordinate descent methods with variable selection for non-negative matrix factorization. KDD. 2011.

- 17. Boyd S, Vandenberghe L. Convex optimization. Cambridge University Press; 2004.

- 18. Shi J, Malik J. Normalized cuts and image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2000;22(8):888–905.

- 19. MacQueen J, et al. Some methods for classification and analysis of multivariate observations. Proceedings of the fifth Berkeley symposium on mathematical statistics and probability. Vol. 1. California, USA: 1967. pp. 281–297.

- 20. Eagle N, Pentland AS, Lazer D. Inferring friendship network structure by using mobile phone data. Proc. Natl. Acad. Sci. USA. 2009;106(36):15274–15278. doi: 10.1073/pnas.0900282106.

- 21. Leskovec J, Lang KJ, Mahoney M. Empirical comparison of algorithms for network community detection. WWW. 2010.