Abstract

Many studies, using a variety of imaging techniques, have shown that deafness induces functional plasticity in the brains of adults with late-onset deafness and, in children, changes the way the auditory brain develops. Cross-modal plasticity refers to evidence that stimuli of one modality (e.g. vision) activate neural regions devoted to a different modality (e.g. hearing) that are not normally activated by those stimuli. Other studies have shown that multimodal brain networks (such as those involved in language comprehension, and the default mode network) are altered by deafness, as evidenced by changes in patterns of activation or connectivity within the networks. In this paper, we summarise what is already known about brain plasticity due to deafness and propose that functional near-infrared spectroscopy (fNIRS) is an imaging method with the potential to provide prognostic and diagnostic information for cochlear implant users. Currently, patient history factors account for only 10% of the variation in post-implantation speech understanding, and very few post-implantation behavioural measures of hearing ability correlate with speech understanding. As a non-invasive, inexpensive and user-friendly imaging method, fNIRS provides an opportunity to study brain function both before and after implantation. Here, we explain the principle of fNIRS measurements and illustrate its use in studying brain network connectivity and function with example data.

Keywords: fNIRS, cochlear implants, deafness, brain plasticity, connectivity in brain networks

1 Introduction

1.1 Deafness, Language and Brain Plasticity: Evidence from Imaging Studies

Speech understanding relies on complex multimodal networks that involve vision, hearing and sensorimotor areas, as well as memory and frontal lobe functions, mostly in the left hemisphere (LH), and it encompasses a range of elements such as phonology, semantics and syntax. The right hemisphere (RH) has fewer specialised functions for language processing, and its role seems to lie mostly in evaluating the communication context (Vigneau et al., 2011). Multiple imaging studies have shown that adults who have undergone periods of profound post-lingual deafness show changes in brain activity and function in language-associated brain areas that are not observed in normally-hearing individuals, and that further functional plasticity occurs as a result of cochlear implantation.

Lee et al. (2003) used positron emission tomography (PET) to compare resting-state activity in 9 profoundly deaf individuals and 9 age-matched normal-hearing controls. They found that glucose metabolism in some regions (the anterior cingulate gyri, Brodmann area 24 [BA24], and the superior temporal cortices, BA41 and BA42) was lower than in normally-hearing people but increased significantly with duration of deafness, and concluded that plasticity occurs in the sensory-deprived mature brain. In a later paper, the same group used resting-state PET data to show that, before implantation, children who had good speech understanding 3 years after implantation had enhanced metabolic activity in the left prefrontal cortex and decreased metabolic activity in the right Heschl’s gyrus and the posterior superior temporal sulcus, compared with children who had poor speech understanding (Lee et al., 2007). They argued that increased resting-state activity in auditory areas may reflect cross-modal plasticity that is detrimental to later success with a cochlear implant (CI). A recent paper by Dewey and Hartley (2015) using functional near-infrared spectroscopy (fNIRS) has supported the proposal that cross-modal plasticity occurs in deafness: they demonstrated that the auditory cortex of deaf individuals is abnormally activated by simple visual stimuli (moving or flashing dots).

Not all studies have suggested that brain changes due to deafness are detrimental to the ability to adapt to listening with a CI. Giraud et al. used PET to study the activity induced by speech stimuli in CI users and found that, compared to normal-hearing listeners, they had altered functional specificity of the superior temporal cortex and showed a contribution of visual regions to sound recognition (Giraud, Price, Graham, & Frackowiak, 2001). Furthermore, the contribution of the visual cortex to speech recognition increased over time after implantation (Giraud, Price, Graham, Truy, et al., 2001), suggesting that the CI users were actively using enhanced audio-visual integration to facilitate their learning of the novel speech sounds received through the CI. In contrast, Rouger et al. (2012) suggested a negative impact of cross-modal plasticity: they found that the right temporal voice area (TVA) was abnormally activated in CI users by a visual speech-reading task, and that this activity declined over time after implantation while the activity in Broca’s area (normally activated by speech reading) increased. Coez et al. (2008) also used PET to study activation of the TVA by voice stimuli in CI users with poor and good speech understanding. The voice stimuli induced bilateral activation of the TVA along the superior temporal sulcus in both normal-hearing listeners and CI users with good speech understanding, but not in CI users with poor understanding. This result is consistent with the proposal of Rouger et al. that the TVA is ‘taken over’ by visual speech-reading tasks in CI users who do not understand speech well. Strelnikov et al. (2013) measured PET resting-state activity and activations elicited by auditory and audio-visual speech in CI users soon after implantation, and found that good speech understanding after 6 months of implant use was predicted by a higher activation level of the right occipital cortex and a lower activation in the right middle superior temporal gyrus. They suggested that the pre-implantation functional changes due to reliance on lip-reading were advantageous to the development of good speech understanding through a CI via enhanced audio-visual integration. The overall picture that emerges from these studies suggests that the functional changes that occur during deafness can both enhance and degrade the ability to adapt to CI listening, and that this may depend on the strategies each person uses while deaf to facilitate communication.

Lazard et al. have published a series of studies using functional magnetic resonance imaging (fMRI) in which they related pre-implant data to post-implant speech understanding. They found that, when doing a rhyming task with written words, CI candidates who later achieved good speech understanding showed an activation pattern consistent with use of the normal phonological pathway, whereas those with poor outcomes used a route normally associated with lexical-semantic understanding (Lazard et al., 2010). They further compared activity evoked by speech and non-speech imagery in the right and left posterior superior temporal gyrus/supramarginal gyrus (PSTG/SMG) (Lazard et al., 2013). These areas are normally specialised for phonological processing in the left hemisphere and for environmental sound processing in the right hemisphere. They found that responses in the left PSTG/SMG to speech imagery and responses in the right PSTG/SMG to environmental sound imagery both declined with duration of deafness, as memories of these sounds are lost. However, abnormally high activity was observed in response to phonological visual items in the right PSTG/SMG (contralateral to the zone where phonological activity decreased over time). They suggested that this pattern may be explained by recruitment of the right PSTG/SMG for phonological processing, rather than for processing of environmental sounds.

In summary, the studies reviewed above have shown functional changes due to periods of deafness, some of which are detrimental to post-implant speech understanding. On the other hand, increased activity in the occipital lobe, both at rest and in response to auditory or audio-visual stimuli, was positively correlated with post-implant speech understanding. The functional changes reported in these studies involve not just the auditory cortex but the distributed multimodal language networks, and reliance on lip-reading while deaf may be a major factor driving these changes.

1.2 Functional Near Infra-red Spectroscopy

Light in the near-infrared spectrum (wavelengths 650–1000 nm) passes relatively easily through human tissues. Oxygenated and deoxygenated haemoglobin (HbO and HbR, respectively) have different absorption spectra in this range: HbO absorbs longer wavelengths (above about 800 nm) more strongly than HbR, whereas HbR absorbs shorter wavelengths more strongly. These differential absorption spectra make it possible, when near-infrared light of two or more wavelengths is used to image cortical activity, to separate changes in the concentration of HbO from changes in the concentration of HbR. In response to stimulus-driven activity in the brain, the supply of oxygenated blood to the active region increases. When this supply exceeds the demand for oxygen, the concentration of HbR decreases. Thus, neural activity produces a characteristic pattern in which HbO and HbR concentrations change in opposite directions. The changes in HbO and HbR resulting from a neural response to a stimulus, or from resting-state activity, can be analysed to produce activation patterns and to derive measures of connectivity between regions of interest, both within and across hemispheres.
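Separating the HbO and HbR changes amounts to solving a small linear system. Under the modified Beer-Lambert law, the change in optical density at wavelength λ is ΔOD(λ) = [ε_HbO(λ)·ΔHbO + ε_HbR(λ)·ΔHbR]·d·DPF, where ε are extinction coefficients, d is the source-detector distance and DPF is the differential pathlength factor; two wavelengths give two equations in two unknowns. The sketch below assumes 760 and 850 nm, a 3-cm separation and a single DPF of 6; the extinction coefficients are approximate illustrative values, and the function name `mbll_unmix` is hypothetical. It is not the NIRS-SPM implementation.

```python
import numpy as np

# Approximate molar extinction coefficients (cm^-1 M^-1, base-10 absorbance)
# as (eps_HbO, eps_HbR). ASSUMPTION: illustrative values from commonly used
# tabulated haemoglobin spectra; use the table from your own analysis software.
EXTINCTION = {
    760: (1486.6, 3843.7),  # HbR absorbs more strongly at 760 nm
    850: (2526.4, 1798.6),  # HbO absorbs more strongly at 850 nm
}


def mbll_unmix(delta_od, wavelengths=(760, 850), separation_cm=3.0, dpf=6.0):
    """Convert optical-density changes, -log10(I/I0), at two wavelengths into
    concentration changes (mol/L) of HbO and HbR (modified Beer-Lambert law).

    delta_od: array of shape (2, n_samples), one row per wavelength.
    dpf: differential pathlength factor. ASSUMPTION: one value for both
         wavelengths; real pipelines often use age- and wavelength-specific DPFs.
    """
    delta_od = np.asarray(delta_od, dtype=float)
    eps = np.array([EXTINCTION[w] for w in wavelengths])  # rows: wavelengths
    path_cm = separation_cm * dpf  # effective optical path length
    # Solve eps @ [dHbO, dHbR] = delta_od / path for every sample at once.
    conc = np.linalg.solve(eps, delta_od / path_cm)
    return conc[0], conc[1]


# Hypothetical activation: HbO rises while HbR falls (values in mol/L).
true_hbo = np.array([0.0, 1.0e-6, 2.0e-6])
true_hbr = np.array([0.0, -0.3e-6, -0.6e-6])
eps = np.array([EXTINCTION[760], EXTINCTION[850]])
delta_od = eps @ np.vstack([true_hbo, true_hbr]) * (3.0 * 6.0)  # forward model
hbo, hbr = mbll_unmix(delta_od)
print(hbo, hbr)  # recovers the simulated concentration changes
```

Because each wavelength is absorbed by both chromophores, the system is best conditioned when one wavelength lies below the point where the two spectra cross (around 800 nm) and the other lies above it, which is one reason such wavelength pairs are commonly chosen.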

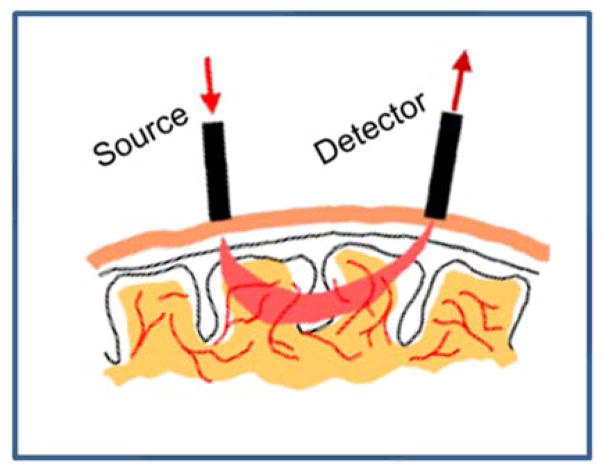

In an fNIRS imaging system, optodes are placed at various locations on the scalp. Multiple channels can be used, and each fNIRS channel consists of two optodes: a source and a detector. The source emits light into the head, directed perpendicular to the scalp surface, and the detector measures the light that re-emerges at the scalp. The detected light has been scattered along a banana-shaped path through the tissue, reaching a depth of approximately half the source-detector distance (see Figure 1). The source-detector distance therefore determines both the depth of the cortical activity that is measured and the amount of light from the source that reaches the detector. A separation of 3 cm is currently thought to give an optimal compromise between imaging depth and signal strength at the detector. In a montage of multiple optodes, each source can be paired with several surrounding detectors to form a multi-channel measurement system. In continuous-wave systems, each source emits light at two wavelengths, and the light from different sources can be amplitude-modulated at different frequencies so that the contributions of different sources reaching the same detector can be separated.

Figure 1.

Schematic of fNIRS optodes and the light path in a single channel
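The separation of sources that share a detector can be illustrated with frequency-division multiplexing: if each source's light is amplitude-modulated at its own carrier frequency, multiplying the detector signal by a sine and a cosine at one carrier and averaging (synchronous, or lock-in, demodulation) recovers that source's contribution. This is a sketch under assumed, hypothetical carrier frequencies and sampling rate; it does not describe how the NIRScout system, or any particular instrument, separates its sources (some systems instead switch sources on in turn).

```python
import numpy as np


def lockin_amplitude(signal, fs, f_ref):
    """Amplitude of the component of `signal` at f_ref (Hz), obtained by
    multiplying with in-phase and quadrature references and averaging."""
    t = np.arange(len(signal)) / fs
    in_phase = np.mean(signal * np.cos(2 * np.pi * f_ref * t))
    quadrature = np.mean(signal * np.sin(2 * np.pi * f_ref * t))
    return 2.0 * np.hypot(in_phase, quadrature)


# Hypothetical example: two sources share one detector, each modulated at its
# own carrier (1.0 kHz and 1.3 kHz are illustrative values, not instrument specs).
fs = 20_000.0                     # detector sampling rate (Hz), assumed
t = np.arange(2000) / fs          # 0.1 s window: whole cycles of both carriers
f1, f2 = 1000.0, 1300.0
amp1, amp2 = 0.8, 0.3             # light reaching the detector from each source
detector = (
    1.5                                          # ambient / DC light
    + amp1 * np.cos(2 * np.pi * f1 * t + 0.4)    # source 1 contribution
    + amp2 * np.cos(2 * np.pi * f2 * t - 1.1)    # source 2 contribution
)
est1 = lockin_amplitude(detector, fs, f1)
est2 = lockin_amplitude(detector, fs, f2)
print(round(float(est1), 6), round(float(est2), 6))  # 0.8 0.3
```

Averaging over a whole number of cycles of every carrier makes the cross-terms cancel exactly in this toy case; a practical demodulator would low-pass filter the products instead.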

For studying language areas of the brain in CI users, fNIRS offers some advantages over other imaging methods: compared with PET, it is non-invasive; in contrast to fMRI, it can easily be used with implanted devices, is silent, and is more robust to head movements; compared with EEG/MEG, it has much better spatial resolution and, in CI users, is free from electrical or magnetic artifacts from the device or the stimuli. The portability, low cost and patient-friendly nature of fNIRS (similar to EEG) make it a plausible method to contribute to the routine clinical management of patients, including infants and children.

However, fNIRS also has limitations, so the research or clinical questions that can validly be investigated with it need to be considered carefully. The spatial resolution is limited by the density of the optodes used, and is generally not as good as that of fMRI or PET. Importantly, the depth of imaging is limited, so fNIRS is only suitable for imaging areas of the cortex near the surface [although other designs of fNIRS systems, which use pulsed laser light rather than continuous-wave light-emitting diodes, can derive three-dimensional images of the brain, at least in infants (Cooper et al., 2014)]. Nevertheless, fNIRS has been used successfully in a range of studies of language processing in hearing adults and children (Quaresima et al., 2012), so it shows great promise for assessing language processing in deaf populations and in people with cochlear implants. The ability of fNIRS to image language processing in CI users has already been demonstrated (Sevy et al., 2010).

In this paper, we describe methods and present preliminary data for two investigations using fNIRS to compare CI users and normally-hearing listeners. In the first experiment, we measure resting-state connectivity; in the second, we compare the activation of, and connectivity within, cortical language pathways in response to visual and auditory speech stimuli.

2 Methods

2.1 fNIRS equipment and data acquisition

Data were acquired using a multichannel 32-optode (16 sources and 16 detectors) NIRScout system. Each source LED emitted light at two wavelengths (760 and 850 nm). The sources and detectors were mounted in a pre-selected montage using an EASYCAP with grommets to hold the fNIRS optodes, which allowed registration of channel positions on a standard brain template using the international 10–20 system. To ensure optimal signal detection in each channel, the cap was fitted first and the hair under each grommet was moved aside before the optodes were placed in the grommets. For CI users, the cap was fitted over the transmission coil, with the speech processor hanging below the cap. The data were exported to MATLAB or NIRS-SPM for analysis.

2.2 Resting-State Connectivity in CI users using fNIRS

In this study, we compare the resting-state connectivity of a group of normal-hearing listeners with that of a group of experienced adult CI users. Brain function in the resting state, or its default-mode activity (Raichle & Snyder, 2007), is thought to reflect the ability of the brain to predict changes in the environment and to track any deviation from its predictions. Resting-state connectivity (measured as the correlation of resting-state activity between different cortical regions) is influenced by the functional organisation of the brain, and hence is expected to reflect plastic changes such as those due to deafness. We hypothesised that CI users would exhibit resting-state connectivity different from that of normally-hearing listeners.

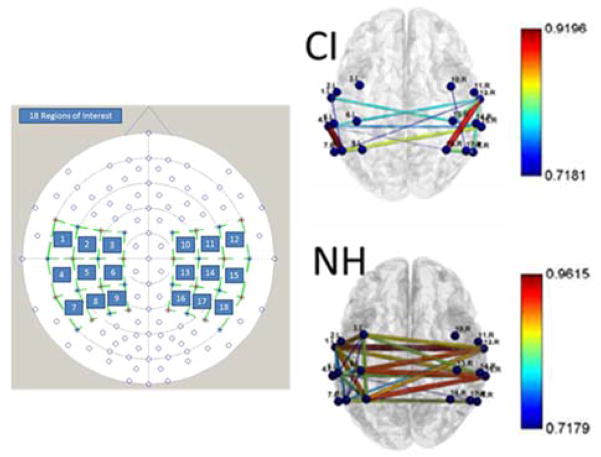

We used a 4 × 4 montage of 8 sources and 8 detectors (24 channels) in each hemisphere (Figure 2), which covered the auditory and somatosensory regions of the brain. Sources and detectors were separated by 3 cm. Data in each channel were pre-processed to remove ‘glitches’ due to head movements, and ‘good’ channels were identified by a significant cross-correlation (r > 0.75) between the data for the two wavelengths. Any ‘poor’ channels were discarded. The data were then converted to HbO and HbR concentration changes using the modified Beer-Lambert law (Cope et al., 1988).

Figure 2.

Example data showing resting-state connectivity. The left panel shows the montage used and the 18 regions of interest. The right panels show the connectivity maps: line colour and thickness denote the strength of connectivity (r) between the different brain regions of interest (the colour bar denotes r-values). The data clearly show a lack of inter-hemispheric connectivity in the CI user compared to the NH listener.
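The channel-quality criterion used in this experiment (r > 0.75 between the two wavelengths) can be sketched as follows. The reasoning is that a channel with good optode-scalp contact records the same physiological fluctuations, such as the cardiac pulsation, at both wavelengths, whereas a poorly coupled channel is dominated by independent noise. The source does not specify how the cross-correlation was computed, so the zero-lag Pearson correlation used here is an assumption, as are the function name and the synthetic signals.

```python
import numpy as np


def wavelength_correlation_mask(raw_wl1, raw_wl2, threshold=0.75):
    """Flag channels whose two wavelength signals are strongly correlated.

    raw_wl1, raw_wl2: arrays of shape (n_channels, n_samples) holding the raw
    intensities at the two wavelengths (e.g. 760 and 850 nm).
    Returns (mask, r), where mask[i] is True for a 'good' channel.
    ASSUMPTION: zero-lag Pearson correlation of the raw signals.
    """
    a = np.asarray(raw_wl1, dtype=float)
    b = np.asarray(raw_wl2, dtype=float)
    a = a - a.mean(axis=1, keepdims=True)
    b = b - b.mean(axis=1, keepdims=True)
    r = (a * b).sum(axis=1) / np.sqrt((a**2).sum(axis=1) * (b**2).sum(axis=1))
    return r > threshold, r


# Synthetic demo (not study data): channels 0 and 1 carry a shared pulsation,
# channel 2 records only independent noise at each wavelength.
rng = np.random.default_rng(1)
fs = 10.0
t = np.arange(1000) / fs                 # 100 s of data
pulse = np.sin(2 * np.pi * 1.0 * t)      # cardiac-like 1 Hz pulsation
silent = np.zeros_like(t)                # no shared physiology (poor contact)


def noisy(signal, sd):
    """Add independent white noise with standard deviation sd."""
    return signal + sd * rng.standard_normal(t.size)


channels = [(pulse, 0.1), (pulse, 0.2), (silent, 1.0)]
wl1 = np.vstack([noisy(s, sd) for s, sd in channels])
wl2 = np.vstack([noisy(s, sd) for s, sd in channels])
mask, r = wavelength_correlation_mask(wl1, wl2)
print(mask, np.round(r, 2))
```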

Because resting-state connectivity is based on correlations between activity in different channels, it is important to remove carefully any components of the data (such as fluctuations from heartbeat or breathing, or movement artifacts present in all channels) that would produce a correlation but are not related to neural activity. The processing steps used to remove these unwanted signals were: minimizing regional drift using a discrete cosine transform; removing global drift using PCA ‘denoising’ techniques; and low-pass filtering (0.08 Hz) to remove heartbeat and breathing fluctuations. Finally, the connectivity between channels was calculated using Pearson’s correlation, r. To account for missing channels, the data were reduced to 9 regions of interest in each hemisphere by averaging the data from groups of 4 channels.
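The resting-state processing chain described above can be sketched in NumPy. Apart from the 0.08-Hz low-pass cutoff and the averaging of channels into regions of interest, every choice here is an assumption: the 128-s DCT cutoff, removal of only the first principal component as the ‘global’ signal, and a brick-wall FFT low-pass in place of whatever filter the original analysis used. The function names and channel groupings are hypothetical.

```python
import numpy as np


def dct_drift_basis(n_samples, fs, cutoff_s=128.0):
    """DCT-II basis vectors (columns) with periods longer than cutoff_s seconds.
    ASSUMPTION: a 128-s cutoff, a common default rather than the study's value."""
    n = np.arange(n_samples)
    k_max = int(np.floor(2 * n_samples / (fs * cutoff_s)))
    k = np.arange(k_max + 1)  # k = 0 is the constant term
    return np.cos(np.pi * np.outer(2 * n + 1, k) / (2 * n_samples))


def remove_dct_drift(data, fs, cutoff_s=128.0):
    """Regress slow drifts out of each channel (data: samples x channels)."""
    basis = dct_drift_basis(data.shape[0], fs, cutoff_s)
    beta, *_ = np.linalg.lstsq(basis, data, rcond=None)
    return data - basis @ beta


def remove_global_components(data, n_components=1):
    """Remove the first n principal components, treated as global signals."""
    centred = data - data.mean(axis=0)
    if n_components == 0:
        return centred
    u, s, vt = np.linalg.svd(centred, full_matrices=False)
    global_part = (u[:, :n_components] * s[:n_components]) @ vt[:n_components]
    return centred - global_part


def fft_lowpass(data, fs, cutoff_hz=0.08):
    """Zero Fourier components above cutoff_hz (a crude brick-wall filter)."""
    spectrum = np.fft.rfft(data, axis=0)
    freqs = np.fft.rfftfreq(data.shape[0], d=1.0 / fs)
    spectrum[freqs > cutoff_hz] = 0.0
    return np.fft.irfft(spectrum, n=data.shape[0], axis=0)


def roi_connectivity(data, fs, rois, n_global_components=1):
    """ROI x ROI Pearson correlation matrix from (samples x channels) data.
    rois: list of channel-index lists (a hypothetical grouping)."""
    x = remove_dct_drift(np.asarray(data, dtype=float), fs)
    x = remove_global_components(x, n_global_components)
    x = fft_lowpass(x, fs)
    roi_series = np.column_stack([x[:, idx].mean(axis=1) for idx in rois])
    return np.corrcoef(roi_series, rowvar=False)


# Synthetic demo (not study data): ROIs A and B share a slow fluctuation,
# ROI C has its own, and every channel carries a linear drift plus noise.
rng = np.random.default_rng(0)
fs = 5.0
t = np.arange(3000) / fs                      # 600 s
shared = np.sin(2 * np.pi * 0.05 * t + 0.3)
own = np.sin(2 * np.pi * 0.02 * t)
data = np.column_stack([shared] * 4 + [own] * 2)
data = data + 0.002 * t[:, None] + 0.5 * rng.standard_normal(data.shape)
rois = [[0, 1], [2, 3], [4, 5]]
r = roi_connectivity(data, fs, rois, n_global_components=0)
print(np.round(r, 2))
```

Removing global components is itself a judgment call: with few channels it can introduce spurious negative correlations between regions, which is why the demonstration sets `n_global_components=0`, so that the expected pattern (high r between A and B, near zero between A and C) is easy to check.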

2.3 Language Networks in CI users using fNIRS

In the second study, we aimed to measure the activity in, and connectivity between, regions in the brain involved in language processing in response to auditory and visual speech stimuli. We hypothesised that, compared to normal-hearing listeners, CI users would show altered functional organisation, and furthermore that this difference would be correlated with lip-reading ability and with their auditory speech understanding.

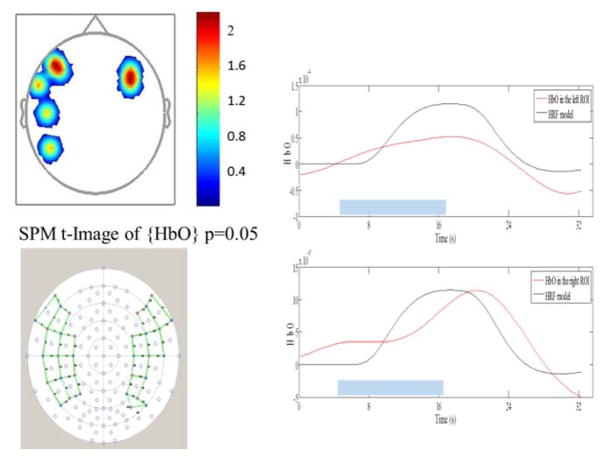

The optode montage for this experiment is shown in Figure 3. fNIRS data were collected using a block design in two sessions. In the first session, 12-s blocks of visual spondee words alternated with blocks of auditory spondees, with blocks separated by silent gaps of 15–25 s. Between stimulus blocks, participants were asked to focus on a black screen in front of them, which was also used to present the visual stimuli. The auditory stimuli were presented via EAR-4 insert earphones to normally-hearing listeners, or via direct audio input to the CI users. Sounds were presented only to the right ear (the CI users were selected to have unilateral right implants). In the second session, blocks of sentences alternated between auditory-alone and audio-visual presentation.

Figure 3.

Left panels show the montage of optodes used in Experiment 2 and the activation pattern elicited in one NH listener when watching visual spondees. The colour bar shows the t-values. The right panels show the average bilateral haemodynamic response functions from the regions of greatest activation in each hemisphere. The blue blocks indicate ‘stimulus-on’ times.
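Activation maps such as the t-value map in Figure 3 are typically obtained with a general linear model (GLM): each condition's stimulus-on periods are convolved with a model haemodynamic response, and a t-value is computed for each channel. The sketch below builds such a model for the session-1 design (12-s visual and auditory blocks alternating, separated by 15–25 s silences). The double-gamma response shape, the uniform gap jitter, the sampling rate and all function names are assumptions, and the data are synthetic; NIRS-SPM's own implementation may differ.

```python
import math

import numpy as np


def double_gamma_hrf(fs, length_s=30.0):
    """Double-gamma haemodynamic response (peak near 5 s, undershoot near 15 s).
    ASSUMPTION: SPM-like shape parameters chosen for illustration."""
    t = np.arange(0.0, length_s, 1.0 / fs)
    peak = t**5 * np.exp(-t) / math.gamma(6)
    undershoot = t**15 * np.exp(-t) / math.gamma(16)
    hrf = peak - undershoot / 6.0
    return hrf / hrf.sum()


def block_schedule(n_blocks, block_s=12.0, gap_s=(15.0, 25.0), seed=0):
    """Alternating visual ('V') and auditory ('A') blocks separated by silent
    gaps drawn uniformly from gap_s (ASSUMPTION: uniform jitter)."""
    rng = np.random.default_rng(seed)
    onset, schedule = rng.uniform(*gap_s), []
    for i in range(n_blocks):
        schedule.append(("V" if i % 2 == 0 else "A", onset))
        onset += block_s + rng.uniform(*gap_s)
    return schedule


def design_matrix(schedule, n_samples, fs, block_s=12.0, conditions=("V", "A")):
    """One HRF-convolved boxcar regressor per condition, plus a constant."""
    boxcars = np.zeros((n_samples, len(conditions)))
    for label, onset in schedule:
        start = int(round(onset * fs))
        stop = min(n_samples, int(round((onset + block_s) * fs)))
        boxcars[start:stop, conditions.index(label)] = 1.0
    hrf = double_gamma_hrf(fs)
    regs = [np.convolve(col, hrf)[:n_samples] for col in boxcars.T]
    return np.column_stack(regs + [np.ones(n_samples)])


def contrast_t(y, X, contrast):
    """Ordinary-least-squares t-value for a contrast of GLM coefficients."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    dof = len(y) - np.linalg.matrix_rank(X)
    sigma2 = resid @ resid / dof
    c = np.asarray(contrast, dtype=float)
    se = np.sqrt(sigma2 * (c @ np.linalg.pinv(X.T @ X) @ c))
    return float(c @ beta / se)


# Synthetic HbO trace for one channel: strong visual, weak auditory response.
fs = 10.0
schedule = block_schedule(n_blocks=10)
n_samples = int(math.ceil((schedule[-1][1] + 12.0 + 25.0) * fs))
X = design_matrix(schedule, n_samples, fs)
rng = np.random.default_rng(2)
y = X @ np.array([1.0, 0.2, 0.0]) + 0.5 * rng.standard_normal(n_samples)
t_visual = contrast_t(y, X, [1, 0, 0])
t_auditory = contrast_t(y, X, [0, 1, 0])
print(round(t_visual, 1), round(t_auditory, 1))
```

Because fNIRS noise is serially correlated, an ordinary-least-squares t-value like this one overstates significance; the sketch shows the structure of the model rather than a validated statistic.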

3 Results

3.1 Preliminary fNIRS Data for Resting-State Connectivity

Figure 2 shows example data for resting-state connectivity in one CI user and one normal-hearing (NH) listener. The NH data are representative of all NH listeners tested to date, and show particularly strong connectivity between homologous regions in the left and right hemispheres, a result consistent with other fNIRS studies of resting-state connectivity (Medvedev, 2014). To date, all the CI users, like the example shown, have consistently shown lower inter-hemispheric connectivity than the NH listeners. Further work is being conducted to evaluate group differences in more detail.
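One way to summarise inter-hemispheric connectivity as a single number per participant is the mean correlation between each region of interest and its homologue in the opposite hemisphere. A minimal sketch, assuming an 18 × 18 ROI correlation matrix ordered with the 9 left-hemisphere ROIs first and that left ROI i mirrors right ROI i (both hypothetical conventions); averaging in Fisher-z space is a common choice, not one reported here, and the matrices below are toy inputs rather than study data.

```python
import numpy as np


def homologous_connectivity(r, n_per_hemisphere=9):
    """Average correlation between each left-hemisphere ROI and its mirror ROI.

    r: (2n x 2n) ROI correlation matrix ordered [left ROIs..., right ROIs...].
    ASSUMPTION: left ROI i is homologous to right ROI i. Coefficients are
    averaged in Fisher-z space and transformed back to r.
    """
    r = np.asarray(r, dtype=float)
    n = n_per_hemisphere
    pairs = np.diag(r[:n, n:2 * n])               # r(left_i, right_i)
    pairs = np.clip(pairs, -0.999999, 0.999999)   # keep arctanh finite
    return float(np.tanh(np.mean(np.arctanh(pairs))))


def toy_matrix(r_homologous, n=9):
    """Hypothetical ROI matrix with a fixed left-right homologous correlation."""
    r = np.eye(2 * n)
    idx = np.arange(n)
    r[idx, idx + n] = r[idx + n, idx] = r_homologous
    return r


print(round(homologous_connectivity(toy_matrix(0.8)), 3))  # 0.8
print(round(homologous_connectivity(toy_matrix(0.2)), 3))  # 0.2
```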

3.2 Preliminary fNIRS Data for Language Networks

Figure 3 shows example data from one NH participant in the second experiment: the activation pattern and haemodynamic response functions in response to visual spondees. The activation pattern shows the expected activation of Broca’s area (which is associated with articulation of speech) and its right-hemisphere homologue, and some activation of language-related areas in the left hemisphere. Further work is being undertaken to compare activation patterns and connectivity in language pathways between CI users and NH listeners, and to correlate relevant differences with individual behavioural measures of speech understanding and lip-reading ability.

4 Discussion

Our preliminary data show that fNIRS has the potential to provide insight into the way that brain functional organisation is altered by deafness and subsequent cochlear implantation. fNIRS may be particularly useful for longitudinal studies that track changes over time, so that changes in brain function can be correlated with simultaneous changes in behavioural performance. In this way, new knowledge can be gained about functional organisation and how it relates to individuals’ ability to process language. For example, an fNIRS pre-implant test may, in the future, provide valuable prognostic information for clinicians and patients, and guide individual post-implant therapies designed to optimise outcomes. Its low cost makes routine clinical use of fNIRS feasible, and its patient-friendly nature should make it particularly useful for studying language development in deaf children.

Acknowledgments

This research was supported by a veski fellowship to CMM, an Australian Research Council Grant (FT130101394) to AKS, a Melbourne University PhD scholarship to ZX, the Australian Fulbright Commission for a fellowship to RL, the Lions Foundation, and the Melbourne Neuroscience Institute. The Bionics Institute acknowledges the support it receives from the Victorian Government through its Operational Infrastructure Support Program.

References

- Coez A, Zilbovicius M, Ferrary E, Bouccara D, Mosnier I, Ambert-Dahan E, Bizaguet E, Syrota A, Samson Y, Sterkers O. Cochlear implant benefits in deafness rehabilitation: PET study of temporal voice activations. Journal of Nuclear Medicine. 2008;49(1):60–67. doi: 10.2967/jnumed.107.044545.

- Cooper RJ, Magee E, Everdell N, Magazov S, Varela M, Airantzis D, Gibson AP, Hebden JC. MONSTIR II: a 32-channel, multispectral, time-resolved optical tomography system for neonatal brain imaging. Review of Scientific Instruments. 2014;85(5):053105. doi: 10.1063/1.4875593.

- Cope M, Delpy DT, Reynolds EO, Wray S, Wyatt J, van der Zee P. Methods of quantitating cerebral near infrared spectroscopy data. Advances in Experimental Medicine and Biology. 1988;222:183–189. doi: 10.1007/978-1-4615-9510-6_21.

- Dewey RS, Hartley DE. Cortical cross-modal plasticity following deafness measured using functional near-infrared spectroscopy. Hearing Research. 2015. doi: 10.1016/j.heares.2015.03.007.

- Giraud AL, Price CJ, Graham JM, Frackowiak RS. Functional plasticity of language-related brain areas after cochlear implantation. Brain. 2001;124(Pt 7):1307–1316. doi: 10.1093/brain/124.7.1307.

- Giraud AL, Price CJ, Graham JM, Truy E, Frackowiak RS. Cross-modal plasticity underpins language recovery after cochlear implantation. Neuron. 2001;30(3):657–663. doi: 10.1016/s0896-6273(01)00318-x.

- Lazard DS, Lee HJ, Truy E, Giraud AL. Bilateral reorganization of posterior temporal cortices in post-lingual deafness and its relation to cochlear implant outcome. Human Brain Mapping. 2013;34(5):1208–1219. doi: 10.1002/hbm.21504.

- Lazard DS, Lee HJ, Gaebler M, Kell CA, Truy E, Giraud AL. Phonological processing in post-lingual deafness and cochlear implant outcome. NeuroImage. 2010;49(4):3443–3451. doi: 10.1016/j.neuroimage.2009.11.013.

- Lee HJ, Giraud AL, Kang E, Oh SH, Kang H, Kim CS, Lee DS. Cortical activity at rest predicts cochlear implantation outcome. Cerebral Cortex. 2007;17(4):909–917. doi: 10.1093/cercor/bhl001.

- Lee JS, Lee DS, Oh SH, Kim CS, Kim JW, Hwang CH, Koo J, Kang E, Chung JK, Lee MC. PET evidence of neuroplasticity in adult auditory cortex of postlingual deafness. Journal of Nuclear Medicine. 2003;44(9):1435–1439.

- Medvedev AV. Does the resting state connectivity have hemispheric asymmetry? A near-infrared spectroscopy study. NeuroImage. 2014;85(Pt 1):400–407. doi: 10.1016/j.neuroimage.2013.05.092.

- Quaresima V, Bisconti S, Ferrari M. A brief review on the use of functional near-infrared spectroscopy (fNIRS) for language imaging studies in human newborns and adults. Brain and Language. 2012;121(2):79–89. doi: 10.1016/j.bandl.2011.03.009.

- Raichle ME, Snyder AZ. A default mode of brain function: a brief history of an evolving idea. NeuroImage. 2007;37(4):1083–1090; discussion 1097–1099. doi: 10.1016/j.neuroimage.2007.02.041.

- Rouger J, Lagleyre S, Demonet JF, Fraysse B, Deguine O, Barone P. Evolution of crossmodal reorganization of the voice area in cochlear-implanted deaf patients. Human Brain Mapping. 2012;33(8):1929–1940. doi: 10.1002/hbm.21331.

- Sevy AB, Bortfeld H, Huppert TJ, Beauchamp MS, Tonini RE, Oghalai JS. Neuroimaging with near-infrared spectroscopy demonstrates speech-evoked activity in the auditory cortex of deaf children following cochlear implantation. Hearing Research. 2010;270(1–2):39–47. doi: 10.1016/j.heares.2010.09.010.

- Strelnikov K, Rouger J, Demonet JF, Lagleyre S, Fraysse B, Deguine O, Barone P. Visual activity predicts auditory recovery from deafness after adult cochlear implantation. Brain. 2013;136(Pt 12):3682–3695. doi: 10.1093/brain/awt274.

- Vigneau M, Beaucousin V, Herve PY, Jobard G, Petit L, Crivello F, Mellet E, Zago L, Mazoyer B, Tzourio-Mazoyer N. What is right-hemisphere contribution to phonological, lexico-semantic, and sentence processing? Insights from a meta-analysis. NeuroImage. 2011;54(1):577–593. doi: 10.1016/j.neuroimage.2010.07.036.