Abstract

This research examines whether visual and haptic map learning yield functionally equivalent spatial images in working memory, as evidenced by similar encoding biases and updating performance. In three experiments, participants learned four-point routes either by seeing or by feeling the maps. At test, blindfolded participants made spatial judgments about the maps from imagined perspectives that were either aligned or misaligned with the maps as represented in working memory. Results from Experiments 1 and 2 revealed a highly similar pattern of latencies and errors between visual and haptic conditions. These findings extend the well-known alignment biases for visual map learning to haptic map learning, provide further evidence of haptic updating, and, most importantly, show that learning from the two modalities yields very similar performance across all conditions. Experiment 3 found the same encoding biases and updating performance with blind individuals, demonstrating that functional equivalence cannot be due to visual recoding and is consistent with an amodal hypothesis of spatial images.

Most models of human spatial cognition are based exclusively on visual input. In a notable departure, Bryant (1997) proposed a spatial representation system (SRS) that provides a common representational format for the different input modalities of vision, hearing, touch, and language. Similarly, the notion of metamodal brain organization has been proposed to explain neuroimaging findings showing that brain regions traditionally considered to process sensory-specific information can be recruited to process similar stimuli from other input modalities (see Pascual-Leone & Hamilton, 2001, for a discussion). Indeed, a growing body of behavioral evidence suggests that learning from different input modalities, including spatial language, leads to similar performance on spatial tasks (Avraamides, Loomis, Klatzky, & Golledge, 2004; Giudice, Klatzky, & Loomis, 2009; Klatzky, Lippa, Loomis, & Golledge, 2003; Loomis, Lippa, Golledge, & Klatzky, 2002). Extending Bryant's (1997) theory, Loomis and colleagues have interpreted this functionally equivalent behavior in terms of a “spatial image” that can be created from information provided by any of these modalities. The spatial image is a transient working memory representation of environmental locations and layout. In contrast to a 2-D pictorial image, spatial images are fully three-dimensional and externalized in perceptual/motor space, just as percepts (perceptual representations) are. However, whereas percepts exist only while supported by sensory input, spatial images persist in working memory for some time thereafter. A number of other researchers have proposed and supported this idea of a transient representation of surrounding space in working memory (e.g., Byrne, Becker, & Burgess, 2007; Mou, McNamara, Valiquette, & Rump, 2004; Waller & Hodgson, 2006; Wang & Spelke, 2000), mainly in connection with visual input.

Functional equivalence has been demonstrated for spatial updating tasks involving vision, spatial hearing, and spatial language (for a review, see Loomis, Klatzky, Avraamides, Lippa, & Golledge, 2007). In these studies, participants perceived either a single target location or a small array of targets and were then required to walk blindly to a given target along a direct or indirect path. The finding of highly similar terminal locations across input modalities for all paths supports the hypothesis that participants were updating functionally equivalent spatial images (Klatzky et al., 2003; Loomis et al., 2002). Further evidence for this hypothesis was obtained with allocentric distance and direction judgments between remembered objects learned through vision and language (Avraamides et al., 2004, Experiment 3).

Functional equivalence can be interpreted in at least three ways: modality-specific spatial images that result in equivalent performance on spatial tasks (separate but equal hypothesis), spatial images of common sensory format, most probably visual (visual recoding hypothesis), and abstract spatial images not allied with any input modality (amodality hypothesis [Bryant, 1997]). As will become apparent, our results favor the amodality hypothesis.

Three experiments extend the study of functional equivalence to haptic and visual inputs by comparing spatial behavior for maps learned through vision and touch. Building on the notion of the spatial image, we hypothesize that haptic and visual map learning will yield spatial images showing equivalent biases and updating behavior. The experiments exploit a well-known phenomenon in the spatial cognition literature, the “alignment effect.” The effect was originally reported in connection with the interpretation of visual maps (Levine, 1982; Levine, Jankovic, & Palij, 1982): when the upward direction of a visual map was misaligned with the observer's facing direction in the physical environment, judging the direction of environmental features represented on the map was slower and less accurate than when the map was aligned with the observer's physical heading. A similar alignment effect has been reported for blind people feeling tactual maps (Rossano & Warren, 1989). Subsequent research has centered on alignment effects associated with spatial memory: when a person's facing direction during recall is aligned with the facing direction during learning of a visual array, directional judgments are faster and more accurate than when the facing direction during testing is misaligned (Diwadkar & McNamara, 1997; Mou et al., 2004; Presson, DeLange, & Hazelrigg, 1989; Presson & Hazelrigg, 1984; Roskos-Ewoldsen, McNamara, Shelton, & Carr, 1998; Rossano & Moak, 1998; Sholl & Bartels, 2002; Sholl & Nolin, 1997; Waller, Montello, Richardson, & Hegarty, 2002). Although most spatial memory studies have dealt with visual learning, the alignment effect has also been demonstrated for spatial layouts specified by spatial hearing and verbal description, with alignment biases similar to those found for visually specified layouts (Avraamides & Pantelidou, 2008; Shelton & McNamara, 2004; Yamamoto & Shelton, 2009).
The orientation specificity demonstrated by the alignment effect contrasts with orientation-free representations, which are argued to be equally accessible from any orientation (Evans & Pezdek, 1980; Levine et al., 1982; Presson et al., 1989; but see McNamara, Rump, & Werner, 2003).

Several lines of research suggest that haptic learning also leads to orientation-specific memory representations. For example, haptic scene recognition is faster and more accurate from the learning orientation than from other orientations (Newell, Ernst, Tjan, & Bülthoff, 2001; Newell, Woods, Mernagh, & Bülthoff, 2005), and haptic reproduction of layouts from an orientation different from that at learning is slower and more error prone than from the same orientation (Ungar, Blades, & Spencer, 1995). However, these studies were not aimed at characterizing the nature of haptic bias or the ensuing spatial image, which is the current focus. The present work is concerned, in part, with comparing alignment effects in spatial working memory resulting from visual and haptic encoding of tactile maps. Previous work comparing memory alignment effects following visual and non-visual exploration of target arrays supports the haptic-visual equivalence predicted in the current studies. For instance, Levine et al. (1982, Experiment 5) compared performance following visual learning with that following kinesthetic learning (the participant's finger was passively guided along an unseen route map), and Yamamoto and Shelton (2005) compared performance after visual learning with that following proprioceptive learning (blindfolded participants were guided through a room-sized layout of six objects). While visual and non-visual alignment effects were similar in both studies, neither used a within-subjects design to test equivalence between modalities, nor did either directly address haptic and visual learning of spatial layouts, which are the primary goals of the current studies. To investigate haptic-visual equivalence, the present experiments compare performance after map learning from both modalities across several within-subjects conditions.

In addition to comparing alignment biases between haptic and visual encoding, the current experiments also investigate haptic spatial updating. Several studies have already demonstrated haptic updating, e.g., haptic scene recognition for perspectives from locations other than that at learning (Pasqualotto, Finucane, & Newell, 2005), updating the locations of haptically perceived targets after moving to a novel orientation from that experienced during learning (Barber & Lederman, 1988; Hollins & Kelley, 1988), and rotational updating of locations encoded by touching objects with a cane (May & Vogeley, 2006; Gall-Peters & May, 2007). Our work extends these earlier studies by directly comparing haptic and visual updating performance.

Based on the various lines of evidence discussed above, we predict that the results of Experiments 1 and 2 comparing haptic and visual map learning will show equivalent alignment biases and updating behavior, consistent with the amodality hypothesis. To show that equivalent performance cannot be explained by the visual recoding hypothesis, Experiment 3 tests blind participants, whose behavior cannot depend on vision, on an analogous haptic map learning task.

Experiment 1

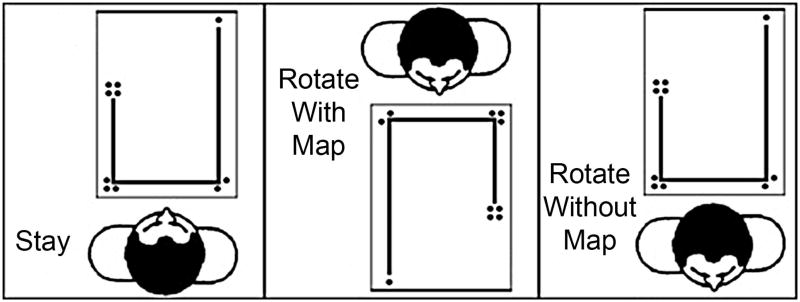

Following Waller et al. (2002), participants in this experiment made aligned and misaligned judgments in three conditions that manipulated how the maps in memory were oriented with respect to the body (see Figure 2 for a depiction of each condition). In the Stay condition, aligned and misaligned judgments were made from the same physical orientation relative to the map as during learning. In the Rotate With Map condition, observer and map were tethered during a 180° rotation so that the map remained in front of them after turning. In the third condition, Rotate Without Map, the observer rotated 180° while standing in place, so that the stationary map was behind them after turning.

Figure 2.

Physical orientation of the participant with respect to the map during the testing phase. The left panel illustrates the Stay condition (equivalent to the learning perspective for all conditions), the center panel shows the Rotate With Map condition, and the right panel illustrates the Rotate Without Map condition. Participants made one aligned and one misaligned judgment of relative direction (JRD) in each response type condition.

As map information and amount of learning were matched between inputs, the hypothesis of functionally equivalent spatial images predicts that test performance should be similar after haptic and visual map learning across all conditions. Evidence for functional equivalence is obtained if there is a tight coupling for latencies and errors between inputs, and evidence for non-equivalence is found if latency and error data significantly differ between haptic and visual trials.

Results from the Stay condition are expected to extend the traditional alignment effect with visual map learning to touch, showing that aligned judgments with both learning modalities are made faster and more accurately than misaligned judgments. The rotate conditions allow comparison of updating performance between input modalities. This provides a stronger test of functional equivalence, as responses require mental transformations of the spatial image. As the spatial image is postulated as a working memory representation critical in the planning and execution of spatial behavior, once formed, it is assumed to be fixed with respect to the environment, even as the participant moves. Under this hypothesis, the two rotate conditions should yield a reliably different pattern of results between aligned and misaligned judgments, indicating the presence or absence of an updated spatial image. Updating is not expected in the Rotate With Map condition as the rotation does not change the map's orientation at test with respect to its representation in working memory. As such, test performance on aligned and misaligned trials should not reliably differ between the Rotate With Map and Stay conditions because judgments for both can be performed from a testing perspective which is congruent with the spatial image as initially perceived from the map at learning. It is a different matter with the Rotate Without Map condition, for after the rotation, the participant is now standing with their back to the map, thereby facing the opposite direction from the map's initial orientation. Here, we expect updating of the spatial image with respect to the participant's body, thus keeping the spatial image fixed with respect to the environment at test. 
If the spatial image of the map is indeed externalized in the same way as the perceived map, we expect the 180° rotation to reverse the alignment effect: judgments that were aligned and easy to perform from the learning orientation before the rotation become misaligned and difficult after it, and judgments that were misaligned and difficult from the learning perspective become aligned and are made faster and more accurately after the rotation (for similar reasoning, see Waller et al., 2002, p. 1059).¹ In this view, updating reflects a change in the working memory representation, not a modification of the remembered perspective in long-term memory (LTM). Addressing LTM contributions would require a design that fully dissociates the difference between the learning and imagined headings from the difference between the actual and imagined headings (Mou et al., 2004), which is not the intent of the present work.
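The alignment logic above can be made concrete with a small sketch. It assumes nominal headings only (perfect 180° turns, with imagined headings expressed in map coordinates where 0° is the map's “up” direction, i.e., the facing direction at learning). The names `TEST_HEADING`, `alignment`, and the `updated` flag are hypothetical illustrations, not part of the study's software. With updating, a trial is classified against the participant's map-relative heading at test; without updating, against the learning heading.

```python
from enum import Enum


class Condition(Enum):
    STAY = "Stay"
    ROTATE_WITH_MAP = "Rotate With Map"
    ROTATE_WITHOUT_MAP = "Rotate Without Map"


# Participant heading in map coordinates after the wait interval, in degrees.
# 0 = map "up" (the facing direction during learning). Nominal values only:
# real turns are imperfect, and this table is an illustrative assumption.
TEST_HEADING = {
    Condition.STAY: 0.0,
    Condition.ROTATE_WITH_MAP: 0.0,       # observer and map rotate together
    Condition.ROTATE_WITHOUT_MAP: 180.0,  # observer turns, map stays behind
}


def angular_offset(a: float, b: float) -> float:
    """Smallest unsigned difference between two headings, in [0, 180]."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def alignment(imagined_heading: float, condition: Condition,
              updated: bool = True, tolerance: float = 1e-6) -> str:
    """Classify a JRD as aligned or misaligned (hypothetical helper).

    updated=True compares the imagined heading with the map-relative heading
    at test; updated=False compares it with the learning heading (0 deg).
    """
    reference = TEST_HEADING[condition] if updated else 0.0
    if angular_offset(imagined_heading, reference) <= tolerance:
        return "aligned"
    return "misaligned"
```

With `updated=True`, an imagined heading of 180° becomes the aligned trial in the Rotate Without Map condition, which is the predicted reversal; with `updated=False`, the learning heading stays aligned, which corresponds to the non-updating pattern.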

Method

Participants

Twenty-four participants (twelve male), aged 17 to 22, took part in the experiment. All gave informed consent and received Psychology course credit or monetary compensation for their time.

Materials

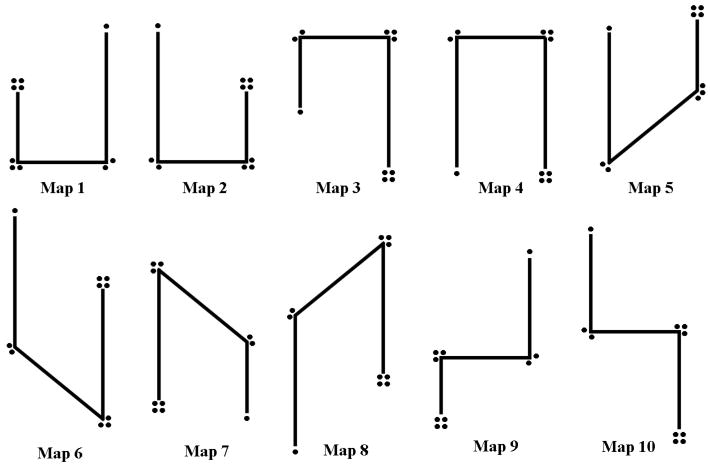

The experiment employed nine routes modified from Presson et al. (1989). All routes comprised four points connected by three straight-line segments. The routes ranged from 36 to 44 cm in length and were constructed by gluing wooden popsicle sticks (2 mm thick and 7 mm wide) onto a 40 × 40 cm board, thus defining a map. Figure 1 shows all routes used in Experiments 1-3 (orthogonal orientations of these routes were also used). Segments one and three were always parallel to each other, and the two turns created by segment two were ±45°, 90°, or 135°. The four points along each route were marked with 1, 2, 3, or 4 tactile bumps glued at the four vertices. These spherical bumps (3 mm high and 1 cm in diameter, spaced 1 cm center to center) designated locations 1-4 along the path, from which subsequent judgments were made.

Figure 1.

Examples of the 10 maps used in all experiments. Each map contains tactile indicators (raised dots) of positions 1, 2, 3, and 4. Participants were instructed to learn the maps (by haptic or visual inspection) and then make two judgments of relative direction per map, one aligned and one misaligned with respect to the orientation at test.

For all conditions, participants sat at a 65 cm high table with the map positioned directly in front of them. Except for the hand and arm movements necessary for haptic exploration, they were instructed not to move their head or body during map learning. Apart from the visual inspection trials, participants wore a Virtual Research V8 head-mounted display (HMD) throughout the experiment. The HMD served two purposes: with its screens blanked, it acted as a blindfold during the haptic trials and the wait period, and during the testing phase it displayed text instructions to the participant (see Procedure for details). An Intersense IS-300 inertial tracker was mounted on the HMD to record head direction during testing. The tracker had an accuracy of 3° RMS and a resolution of 0.02°. Version 2.5 of the Vizard software package (WorldViz, Santa Barbara, CA; www.worldviz.com) was used to coordinate the sequence of experimental conditions and record participant orientation data.

Procedure

All participants learned four-point maps through both vision and haptic exploration and made one aligned and one misaligned judgment per map at test in each of three response type conditions. Thus, the experiment used a fully balanced 2 (learning modality) by 2 (alignment) by 3 (response type) within-participants design. Each participant trained and tested on nine paths: three practice paths and six experimental paths (one for each modality × response type combination). Trials were blocked by response type condition and alternated between modalities, with each block preceded by a practice map. An aligned and a misaligned judgment were made for each map in the experimental conditions, with two identical judgments performed with corrective feedback in the practice conditions (one each for haptic and visual learning).
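The six experimental maps and twelve test judgments implied by this design can be enumerated as follows. This is only a sketch: the fixed block and trial order, the integer map labels, and the omission of the three practice maps are simplifying assumptions, since the actual orders were counterbalanced.

```python
from itertools import product

MODALITIES = ("haptic", "visual")
RESPONSE_TYPES = ("Stay", "Rotate With Map", "Rotate Without Map")
ALIGNMENTS = ("aligned", "misaligned")


def experimental_trials():
    """Return (response_type, modality, map_label, alignment) tuples.

    Blocks follow response type; modalities alternate within each block.
    Each experimental map receives one aligned and one misaligned JRD.
    Map labels 1-6 are hypothetical placeholders for the real routes.
    """
    trials = []
    map_label = 0
    for response_type in RESPONSE_TYPES:
        for modality in MODALITIES:
            map_label += 1
            for alignment in ALIGNMENTS:
                trials.append((response_type, modality, map_label, alignment))
    return trials


trials = experimental_trials()
# Every cell of the 2 x 2 x 3 design occurs exactly once per participant.
cells = {(m, a, r) for r, m, _, a in trials}
assert cells == set(product(MODALITIES, ALIGNMENTS, RESPONSE_TYPES))
```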

The experiment consisted of three phases: a training period, a wait interval, and a testing period, described below. For all trials, participants were initially blindfolded using the HMD as a mask. The training phase allowed 30 sec of map exploration. During this period, participants were instructed to learn the geometry of the path and the location of each of the numbered points at the four vertices using haptic or visual inspection. For visual learning, training was initiated by lifting the HMD to see the map and concluded by lowering the HMD to its blindfold position. For haptic map learning, training commenced upon placing the index finger of the participant's dominant hand on the bottom left corner of the route and concluded by having them remove their hand from the map. A beep indicated termination of training and initiation of a 30 sec wait interval.

During the waiting phase, blindfolded participants stood up and did one of three things. In the Stay condition, they waited 30 sec in place. In the Rotate With Map condition, they were handed the map used during learning and asked to rotate 180° in place while holding the map in front of them. They were told when to stop turning and allowed to touch only the edge of the map frame, not the route itself. In this way, the body-stimulus coupling was preserved (0° offset) before, during and after rotation. In the Rotate Without Map condition, participants were instructed to turn 180° in place away from the map. To ensure that they understood that the map was now behind them (180° offset), they reached back with both hands to feel the corners of the frame upon completing the rotation. Participants in all three conditions were handed a joystick (dominant hand) near the end of the wait interval, following any rotation. The joystick was used to initiate responses during the testing phase.

The testing phase required participants to imagine that they were standing at one of the four numbered locations on the route while facing a second location and then to turn in place to face a third location. In the literature, this is referred to as a judgment of relative direction (JRD). Instructions were delivered to the participant as text messages through the HMD. The cuing instructions, presented immediately after the 30 sec waiting period, specified the location and orientation on the route at which participants should imagine themselves standing, e.g., “you are standing at location 3, location 4 is directly in front of you”. Once they had imagined being oriented at the specified location, they pulled the joystick's trigger. This advanced the display to the prompting instructions, which specified where to turn, e.g., “turn to face location 1”. No physical translations were made; participants simply rotated in place as if preparing to walk a straight-line path between the two locations. Participants pulled the joystick's trigger a second time to indicate that they had completed their turning response, and the computer logged the angle turned. Previous work has suggested that blindfolded rotations are less accurate than rotations in which participants can see their feet, possibly due to decay of the proprioceptive trace (Montello, Richardson, Hegarty, & Provenza, 1999). Thus, to help calibrate rotation, a constant auditory beacon, produced by an electronic metronome placed 1.83 m in front of the participant, was presented simultaneously with the prompting instructions to provide a fixed frame of reference during pointing. The time between the onset of the cuing instructions and the first trigger pull was recorded and termed orientation time. The time between the onset of the prompting instructions and the second trigger pull was also recorded and termed turning time.
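The two latency measures and the angle turned can be derived from per-trial event times and tracker yaw readings roughly as sketched below. The `TrialLog` fields, their units, the use of yaw at prompt onset as the start of the turn, and the assumption that yaw increases clockwise are all illustrative choices; they do not describe the actual Vizard log format.

```python
from dataclasses import dataclass


def wrap180(angle: float) -> float:
    """Map an angle in degrees to [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


@dataclass
class TrialLog:
    """Hypothetical per-trial record (times in s, yaw in degrees)."""
    cue_onset: float        # cuing instructions appear
    first_trigger: float    # participant reports being oriented
    prompt_onset: float     # "turn to face ..." appears
    second_trigger: float   # participant reports the turn is complete
    yaw_at_prompt: float    # head yaw when the prompt appears (assumed turn start)
    yaw_at_response: float  # head yaw at the second trigger pull


def orientation_time(log: TrialLog) -> float:
    """Cue onset to first trigger pull."""
    return log.first_trigger - log.cue_onset


def turning_time(log: TrialLog) -> float:
    """Prompt onset to second trigger pull."""
    return log.second_trigger - log.prompt_onset


def angle_turned(log: TrialLog) -> float:
    """Signed head rotation over the turn, wrapped to [-180, 180)."""
    return wrap180(log.yaw_at_response - log.yaw_at_prompt)
```

Wrapping means that a head rotation from 350° to 80° is scored as a 90° turn rather than as -270°, consistent with the instruction to turn the minimum distance.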

For all JRD trials, participants were instructed to respond as quickly as possible without compromising accuracy and to turn the minimum distance between targets, i.e., all rotations from the imagined facing direction to the destination should be 180° or less. Two test trials were given per map, one aligned with the map's orientation at testing and one misaligned (order and map presentation were counterbalanced). The two alignment trials were separated by a 20 sec waiting interval during which, after the first response, participants were turned back either to the learning orientation (Stay condition) or to the 180°-opposed orientation (Rotate With Map and Rotate Without Map conditions).
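The correct response for a JRD follows from the map geometry: it is the signed difference between the direction from the imagined standing location to the target and the imagined facing direction, wrapped so that no turn exceeds 180°. The sketch below assumes 2-D map coordinates in centimeters, with +y as map “up” and clockwise turns positive. The route coordinates in `ROUTE` are hypothetical, chosen only to resemble the four-point routes described under Materials (segments one and three parallel, two 45° turns).

```python
import math

# Hypothetical route: segments 1 and 3 run parallel ("up" the map),
# segment 2 introduces two 45-degree turns. Units are cm.
ROUTE = {1: (0.0, 0.0), 2: (0.0, 10.0), 3: (10.0, 20.0), 4: (10.0, 30.0)}


def wrap180(angle: float) -> float:
    """Map an angle in degrees to [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def bearing(frm, to) -> float:
    """Direction from one map point to another, in degrees.

    0 = +y (map "up"); positive angles are clockwise.
    """
    dx, dy = to[0] - frm[0], to[1] - frm[1]
    return math.degrees(math.atan2(dx, dy))


def correct_jrd_turn(points, stand, face, target) -> float:
    """Signed minimal turn for "stand at `stand`, face `face`, turn to `target`"."""
    imagined_heading = bearing(points[stand], points[face])
    target_direction = bearing(points[stand], points[target])
    return wrap180(target_direction - imagined_heading)


# The cue example from the text: stand at 3, face 4, turn to face 1.
print(round(correct_jrd_turn(ROUTE, 3, 4, 1), 1))  # -153.4
```

A negative value denotes a counterclockwise turn. The intermediate `imagined_heading` is the quantity that, compared with the participant's actual heading relative to the map, determines whether a trial counts as aligned or misaligned.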

Results

The three dependent measures of orientation time, turning time, and absolute pointing error (the absolute difference between the angle turned and the correct angle) were analyzed for each participant. Gender was initially included in all statistical analyses; however, because no reliable gender effects were observed, all analyses reported in this article collapse over gender. Data from one male participant were excluded because of a corrupted log file. The means and standard errors for all dependent measures, separated by learning modality, alignment, and response type conditions for Experiments 1-3, are provided in Table 1 of Appendix A.
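Absolute pointing error compares the angle turned with the correct angle. The sketch below assumes both are signed angles in degrees. The text does not state whether differences were wrapped to the shortest angular distance, so the `wrap` flag is an assumption that allows both readings to be computed.

```python
def wrap180(angle: float) -> float:
    """Map an angle in degrees to [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def absolute_pointing_error(turned: float, correct: float, wrap: bool = True) -> float:
    """Absolute difference between the angle turned and the correct angle.

    With wrap=True the result is the shortest angular distance, in [0, 180];
    with wrap=False it is the plain absolute difference.
    """
    diff = turned - correct
    return abs(wrap180(diff)) if wrap else abs(diff)


print(absolute_pointing_error(170.0, -170.0))              # 20.0
print(absolute_pointing_error(170.0, -170.0, wrap=False))  # 340.0
```

The two readings differ only when the response and the correct turn lie on opposite sides of 180°, e.g., a 170° clockwise turn when the correct turn is 170° counterclockwise.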

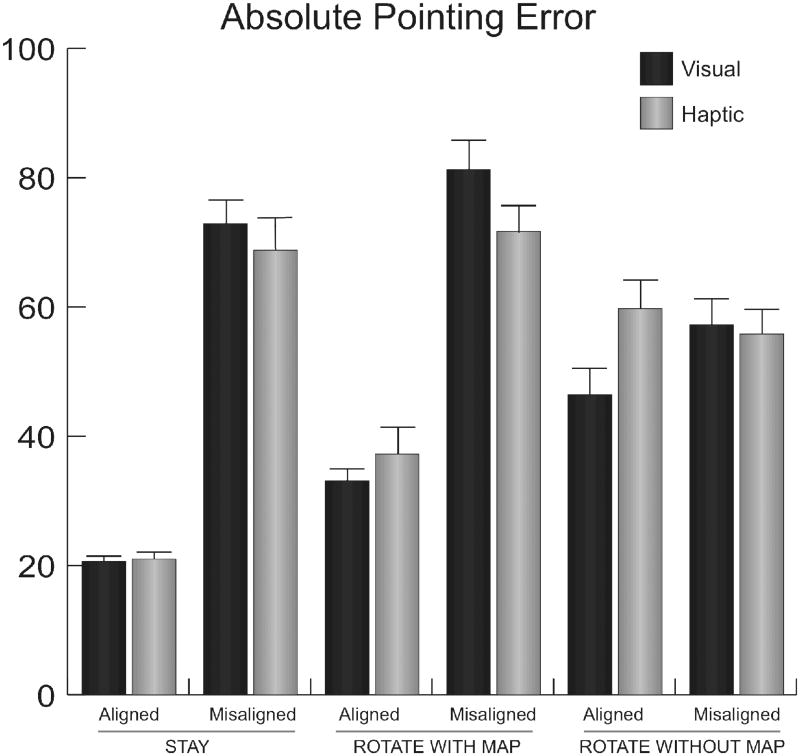

The most striking finding from Experiment 1 was the highly similar pattern of performance between modalities on all dependent measures in all response type conditions. A strong alignment effect, in both latencies and errors, was observed after haptic and visual learning in the Stay and Rotate With Map conditions. Mean performance in the Stay condition showed that trials aligned with the testing (and learning) orientation took approximately 3.7 s less time to imagine being oriented, were executed 2.0 s faster, and had 50° less pointing error than misaligned trials. The Rotate With Map condition showed a similar pattern: aligned judgments took 1.9 s less time to orient, were executed 2.1 s faster, and had 41.3° less error than misaligned judgments. The Rotate Without Map condition showed a small numeric trend toward updating, indicated by an advantage for trials aligned at test, but overall performance was generally slow and inaccurate across modalities for both aligned and misaligned trials. Because all significant alignment effects in the RT data also appeared in the errors, Figure 3 shows only pointing error for aligned and misaligned judgments in each response type condition, as we consider this measure the most relevant to our questions of interest.

Figure 3.

Mean absolute pointing error for Experiment 1, blocked by response type condition and collapsed across learning modality. Error bars represent 1 SEM.

The inferential tests confirm what is evident from the descriptive data. A 2 (modality) by 2 (alignment) by 3 (response type) repeated measures ANOVA was conducted for each of the three dependent measures of orientation time, turning time, and absolute pointing error. Significant main effects of alignment were observed for all dependent measures, with aligned judgments made faster and more accurately than misaligned judgments: orientation time, F (1, 22) = 9.779, p = 0.005, η2 = 0.31; turning time, F (1, 22) = 7.832, p = 0.011, η2 = 0.27; and absolute pointing error, F (1, 22) = 11.365, p = 0.003, η2 = 0.34. None of the dependent measures revealed a significant main effect of modality, all ps > 0.234, or a modality by response type interaction, all ps > 0.194. The lack of reliable differences, coupled with very small effect sizes for these measures (η2 < 0.06 and η2 < 0.05, respectively), provides statistical support for the development of functionally equivalent spatial images built up from haptic and visual learning.
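The reported effect sizes can be checked against the F ratios. Assuming that η2 here denotes partial eta squared, it can be recovered from an F test as F·df_effect / (F·df_effect + df_error). For example, the alignment effect on orientation time, F (1, 22) = 9.779, gives 0.31, the value reported above. This is a consistency check only, not the authors' analysis code.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F ratio and its degrees of freedom."""
    return (f * df_effect) / (f * df_effect + df_error)


# Main effects of alignment in Experiment 1 (df = 1, 22).
for measure, f in [("orientation time", 9.779), ("pointing error", 11.365)]:
    print(measure, round(partial_eta_squared(f, 1, 22), 2))
```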

Significant alignment by response type interactions were observed for orientation time, F (2, 44) = 6.385, p = 0.004, η2 = 0.23, and absolute pointing error, F (2, 44) = 8.031, p = 0.01, η2 = 0.27. Subsequent Dunn-Sidak pairwise comparisons, collapsed across modality, confirmed that the interaction was driven by the Stay and Rotate With Map conditions, in which orientation times were reliably shorter and pointing errors reliably smaller on aligned than on misaligned trials, ps < 0.05. By contrast, overall performance in the Rotate Without Map condition was generally slow and inaccurate for both aligned and misaligned judgments, and there was no significant effect of alignment on any of the dependent measures, all ps > 0.217.

Discussion

There are two important findings from Experiment 1. First, the results of the Stay condition, showing that haptic aligned trials were reliably faster and more accurate than misaligned trials, demonstrate that haptic map learning is susceptible to the same encoding biases long known for visual map learning. Second, the highly similar performance patterns observed between haptic and visual learning in all conditions, in both latencies and errors, provide compelling evidence for the development and accessing of functionally equivalent spatial images. We had also hoped to show unambiguous evidence of spatial updating in the Rotate Without Map condition, which would manifest as a reversal of the alignment effect. The predicted result was not observed; mean performance in the Rotate Without Map condition was generally slow and inaccurate across all testing trials. However, analysis of individual participant data revealed that the mean data masked consistent individual differences. That is, 14 of 23 participants (61%) showed clear evidence of updating, namely a reversal of the alignment effect after rotation. The remaining 9 participants (39%) did not update after the rotation, i.e., they retained a preference for the learning orientation after turning, similar to the Stay and Rotate With Map conditions. Given the similar results of Waller et al. (2002, Experiment 2), obtained with a comparable condition involving rotating in place after viewing a simple map on the ground, this pattern is not surprising. Although Waller and colleagues found a reliable but small alignment effect for the average participant after rotation, both aligned and misaligned trials were relatively slow and inaccurate.
Moreover, as in our results, some of their participants clearly exhibited an alignment effect like that in their Stay condition, whereas others exhibited a clear reversal of the alignment effect. Interestingly, our pattern of mixed alignment effects provides still further evidence for the functional equivalence of spatial images from touch and vision, as 19 of the 23 participants exhibited the same pattern for both haptic and visual trials.
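The individual-difference analysis can be sketched as a per-participant classification. The criterion below (smaller pointing error on the Rotate Without Map judgment aligned with the post-rotation heading than on the judgment aligned with the learning heading) is a hypothetical stand-in, because the text does not specify how “clear evidence” of updating was defined; the function names are likewise illustrative.

```python
def shows_reversal(err_test_aligned: float, err_learning_aligned: float) -> bool:
    """Hypothetical criterion for updating in the Rotate Without Map condition.

    err_test_aligned: error on the JRD aligned with the heading after rotation.
    err_learning_aligned: error on the JRD aligned with the learning heading.
    """
    return err_test_aligned < err_learning_aligned


def same_pattern(haptic: tuple, visual: tuple) -> bool:
    """True if haptic and visual trials yield the same classification.

    Each argument is (err_test_aligned, err_learning_aligned).
    """
    return shows_reversal(*haptic) == shows_reversal(*visual)


def percent(count: int, total: int) -> float:
    return 100.0 * count / total


# Proportions reported in the text (23 analyzed participants).
print(f"updaters: {percent(14, 23):.1f}%, non-updaters: {percent(9, 23):.1f}%")
# updaters: 60.9%, non-updaters: 39.1%
```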

Experiment 2

Various studies have shown that spatial updating resulting from physical body rotation is more accurate than updating associated with imagined display rotation (Simons & Wang, 1998; Simons, Wang, & Roddenberry, 2002; Wang & Simons, 1999; Wraga, Creem-Regehr, & Proffitt, 2004). The design of Experiment 2 builds on these ideas and is based in part on the influential work of Simons and Wang (1998), who investigated spatial updating during observer movement versus movement of the stimulus array. They had participants visually learn the arrangement of five items on a circular table. The object array was then occluded, and either the display was rotated by a fixed angle or participants physically walked the same angle around the display. The experiments used a recognition/change detection task in which, at test, participants were required to determine whether the perspective after self- or stimulus-rotation matched the perspective at learning or had changed. Performance was significantly more accurate when perspective changes were caused by the participant's own movement than when they were caused by rotation of the display. The superior updating performance during self-motion is thought to result from vestibular and proprioceptive information available in the walking condition rather than from changes in retinal projection (Simons et al., 2002). Supporting this view, the same updating advantage for observer movement versus display movement has been found with a haptic variant of the Simons and Wang paradigm (Pasqualotto et al., 2005).

In the current experiment, haptic and visual map learning were followed by aligned and misaligned JRDs made in three response type conditions: a Stay condition identical to that of Experiment 1; a Rotate Map condition, in which the stationary participant manually turned the map 180°; and a Rotate Around Map condition, in which the participant walked 180° around a stationary map. In line with the results of Experiment 1, we predicted that the Stay condition would show the traditional alignment effect. By contrast, based on previous work comparing observer and display rotation, we expected performance in the Rotate Around Map condition to show clear evidence of updating: judgments aligned with the body's orientation relative to the map after rotation, but misaligned with the learning perspective, were expected to be made faster and more accurately than judgments misaligned at testing and aligned at learning. As with the display rotation conditions in previous studies, our Rotate Map condition was predicted to show little evidence of updating, but if updating did occur, judgments aligned with the testing perspective should be faster and more accurate than misaligned judgments. Cross-condition comparisons are of particular interest for haptic updating, because these have not previously been examined in the literature. However, our primary interest is functional equivalence: whether similar variations in latency and error are observed in each condition for touch and vision.

Method

Twenty-four participants (twelve male), aged 17 to 25, took part in the experiment (none had participated in Experiment 1). All gave informed consent and received Psychology course credit or monetary compensation for their time. Except for the events during the 30 sec interval between map training and testing, the procedure and stimuli were identical to those of Experiment 1. After haptic or visual map training, participants were blindfolded, stood up, and then performed one of three actions before receiving the test instructions through the HMD. In the Stay condition, they waited 30 sec in place at the 0° learning orientation, as in the previous experiment. In the Rotate Map condition, they remained at the 0° learning orientation but grasped the edge of the map and rotated the physical display 180° in place (contact was made only with the frame, not with the raised route). In this way, the map remained in front of them at the start of testing but was oriented 180° from how it was learned. In the Rotate Around Map condition, participants were instructed to walk to the opposite side of the map so that they faced the map from a position 180° opposed to the learning orientation. To ensure that they understood that the map was still in front of them upon reaching the other side, they were asked to touch each corner of the frame.

Results

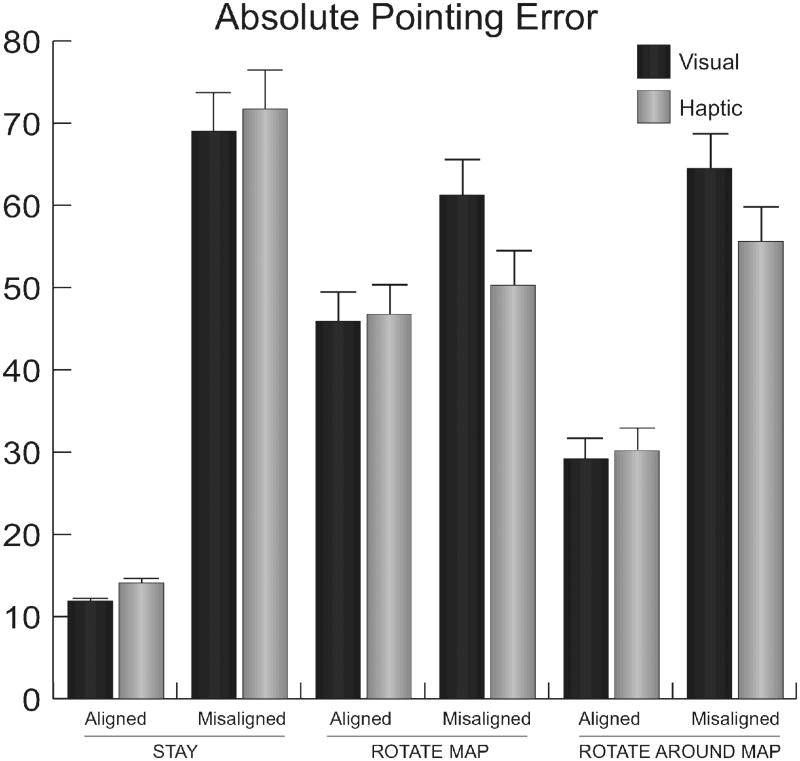

The mean values for the three dependent measures of Experiment 2 are summarized in Table 1 of the Appendix. Corroborating the findings of Experiment 1, haptic and visual map learning produced a highly similar pattern of results for aligned and misaligned judgments across all dependent measures and response-type conditions. As in Experiment 1, performance in the Stay condition after both haptic and visual learning showed that trials aligned with the testing (and learning) orientation were made faster and more accurately than misaligned trials, with an advantage for aligned judgments of approximately 1.6 s in orientation time, 2.2 s in turning time, and 57.4° in pointing error. The results of Experiment 2 also provide clear evidence for haptic updating after observer movement, indicated by an advantage for aligned trials after participant rotation. Judgments in the Rotate Around Map condition that were aligned with the testing orientation but misaligned with the learning orientation were made faster and more accurately than those misaligned at testing: participants took approximately 1.4 s less time to orient, responded 3.2 s faster, and had 30° less error on aligned than on misaligned judgments. The Rotate Map condition showed a hint of updating, with orientation time 0.92 s faster, turning time 0.26 s faster, and error 9.4° smaller for aligned judgments. However, performance in this condition was generally slow and inaccurate for both aligned and misaligned trials. Figure 4 shows the aligned and misaligned errors for each response-type condition.
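The aligned-vs.-misaligned advantages quoted above correspond to differences between the Combined (visual + haptic) rows of Table 1 for Experiment 2. The sketch below recomputes them from those table values. The dictionary layout and the helper name `aligned_advantage` are illustrative choices.

```python
# Combined (visual + haptic) means for Experiment 2, copied from Table 1.
# Each entry is (aligned, misaligned); times in seconds, pointing error in degrees.
EXP2_COMBINED = {
    "Stay": {"orientation": (6.48, 8.06), "turning": (5.66, 7.89), "error": (12.99, 70.38)},
    "Rotate Map": {"orientation": (7.13, 8.05), "turning": (6.62, 6.88), "error": (46.35, 55.77)},
    "Rotate Around Map": {"orientation": (6.66, 8.10), "turning": (5.93, 9.14), "error": (29.94, 60.08)},
}


def aligned_advantage(aligned: float, misaligned: float) -> float:
    """Misaligned minus aligned; positive values mean aligned judgments were faster or more accurate."""
    return round(misaligned - aligned, 2)


advantages = {
    condition: {measure: aligned_advantage(*pair) for measure, pair in measures.items()}
    for condition, measures in EXP2_COMBINED.items()
}

for condition, values in advantages.items():
    print(condition, values)
```

Rounded to one decimal place, these reproduce the 1.6 s, 2.2 s, and 57.4° (Stay), 1.4 s, 3.2 s, and 30° (Rotate Around Map), and 0.92 s, 0.26 s, and 9.4° (Rotate Map) figures reported in the text.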

Figure 4.

Mean absolute pointing error for Experiment 2, blocked by response type condition and collapsed across learning modality. Error bars represent 1 SEM.

A 2 (modality) by 2 (alignment) by 3 (response type) repeated measures ANOVA was conducted for each of the dependent measures of orientation time, turning time and absolute pointing error. Significant main effects of alignment were observed for all dependent measures, with aligned judgments made faster and more accurately than misaligned judgments: orientation time, F (1, 23) = 5.58, p = 0.027, η2 = 0.20; turning time, F (1, 23) = 10.222, p = 0.004, η2 = 0.31; and absolute pointing error, F (1, 23) = 16.831, p = 0.001, η2 = 0.42. None of the dependent measures revealed significant main effects of modality, all ps > 0.42 and η2 < 0.026. Significant alignment by response type interactions were observed for turning time, F (2, 46) = 5.615, p < 0.007, η2 = 0.20; and absolute pointing error, F (2, 46) = 4.781, p = 0.013, η2 = 0.17.
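The effect sizes reported here are consistent with partial eta squared. This can be recovered from an F statistic and its degrees of freedom as partial η² = (F × df_effect) / (F × df_effect + df_error), because F = (SS_effect / df_effect) / (SS_error / df_error). The sketch below applies that identity to the Experiment 2 F values. Reading the reported η2 as partial η² is our inference from the numbers, not something stated in the analysis, and the function name is illustrative.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared, SS_effect / (SS_effect + SS_error), recovered from an F ratio."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F statistics reported for Experiment 2: (label, F, df_effect, df_error, reported eta^2).
EXP2_EFFECTS = [
    ("alignment, orientation time", 5.58, 1, 23, 0.20),
    ("alignment, turning time", 10.222, 1, 23, 0.31),
    ("alignment, pointing error", 16.831, 1, 23, 0.42),
    ("alignment x response type, turning time", 5.615, 2, 46, 0.20),
    ("alignment x response type, pointing error", 4.781, 2, 46, 0.17),
]

for label, f_value, df1, df2, reported in EXP2_EFFECTS:
    eta = partial_eta_squared(f_value, df1, df2)
    print(f"{label}: computed {eta:.3f}, reported {reported:.2f}")
```

Each computed value rounds to the reported η2, which is how the identification with partial η² was checked.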

The absence of significant main effects of modality across measures agrees with the results of Experiment 1 and speaks to the close relation between haptic and visual performance in all response modes. The reversal of the alignment effect observed in the Rotate Around Map condition, that is, greater accuracy for trials aligned vs. misaligned at testing, t (47) = 2.171, p = 0.018, provides compelling evidence that participants were acting on an updated spatial image. Finally, although there was a small reversal of the alignment effect in the Rotate Map condition, mean latencies and errors were generally large for both aligned and misaligned trials, and no significant differences between aligned and misaligned trials were obtained for any dependent measure, all ps > 0.1.

Discussion

Replicating the results of Experiment 1, performance in the Stay condition after both haptic and visual encoding showed a clear alignment effect in latencies and errors for the orientation at test. More importantly, the finding that haptic and visual trials also led to nearly identical latencies and errors in the Rotate Around Map condition provides evidence for equivalent updating across inputs. These findings add to the sparse literature showing haptic updating (Pasqualotto et al., 2005; Barber & Lederman, 1988; Hollins & Kelley, 1988) and extend a well-established body of work showing that people are better able to update changes in perspective after physical body movement than after display rotation (Simons & Wang, 1998; Simons et al., 2002). By contrast, pointing in the Rotate Map condition, where participants stayed in place but rotated the map, was inaccurate for both aligned and misaligned trials, as expected. Thus, our findings clearly demonstrate that the sensorimotor cues associated with walking around the map facilitate updating more than manually rotating the display does. Taken together, the results of Experiment 2 extend those of Experiment 1 by showing that haptic and visual map learning lead to comparable perceptual biases and updating performance across all conditions. We interpret these data as providing compelling evidence for the building up of, and access to, functionally equivalent spatial images.

Experiment 3

The goals of Experiment 3 were twofold: first, to investigate the alignment effect and haptic spatial updating in blind persons, and second, to rule out visual recoding as an explanation for the equivalent performance after visual and haptic learning. The ability of blind people to perform tasks such as path integration and spatial updating (Loomis et al., 1993; Loomis et al., 2002; Klatzky et al., 1995) and mental rotation (Carpenter & Eisenberg, 1978; Marmor & Zabeck, 1976) is presumably mediated by spatial imagery built up from nonvisual spatial information such as proprioception, audition, and haptics. Tactile maps are an important tool for supporting these behaviors, as they provide access to environmental relations that are otherwise difficult to obtain from nonvisual perception (for review, see Golledge, 1991). However, little is known about alignment biases, or about the ability to update representations built up from haptic map learning, in the blind. A study of the up = forward correspondence in blind participants did find a strong alignment effect for haptic maps (Rossano & Warren, 1989), which suggests that we will find a similar effect in our Stay condition. However, Rossano and Warren (1989) did not address mental transformations of space, such as those required in the spatial updating condition of the current experiment. Indeed, difficulties with spatial updating and inference tasks among blind individuals are frequently cited in the literature (Byrne & Salter, 1983; Dodds, Howarth, & Carter, 1982; Espinosa, Ungar, Ochaita, Blades, & Spencer, 1998; Rieser, Guth, & Hill, 1982, 1986).

Experiment 3 investigates these issues by asking blind participants to learn haptic maps and then make aligned and misaligned JRDs in stay and update conditions. Because spatial images are not restricted to the visual modality and the haptic information available during encoding was matched across all experiments, we expect blind participants to show the same pattern of results as the sighted participants in the first two studies. That is, a strong alignment effect should be observed in the Stay condition, and walking around the map should facilitate updating (as in the Rotate Around Map condition of Experiment 2). Although formal study of these issues has been limited in the literature on blind spatial cognition, several lines of evidence support our predictions. For instance, research with blind participants shows greater accuracy for reproducing an array of objects from the learning orientation than from other orientations (Ungar et al., 1995), increased directional errors when the up-equals-forward rule is violated (Rossano & Warren, 1989), and a recognition cost for scenes after rotation (Pasqualotto & Newell, 2007). Accurate spatial updating of small haptic displays, of similar scale to those in the current study, has also been demonstrated in the blind (Barber & Lederman, 1988; Hollins & Kelley, 1988).

The second goal of this experiment was to test the amodality hypothesis. The similar encoding biases and mental transformations observed for haptic and visual map learning in Experiments 1 and 2 indicate functional equivalence of spatial images. We favor the amodality hypothesis (Bryant, 1997) as the explanation of these findings: on this view, sensory information from different encoding modalities gives rise to amodal spatial images. An alternative explanation of functionally equivalent behavior is the visual recoding hypothesis, which asserts that all inputs are converted into a visually based spatial image (Lehnert & Zimmer, 2008; Pick, 1974). From this perspective, it could be argued that the alignment effect occurs because the map is represented as a visual, picture-like image that retains the learning orientation in memory (Shepard & Cooper, 1982). Tentative support for the recoding hypothesis comes from a study by Newell and colleagues showing that scene recognition after changes in orientation was similar for haptic and visual learning, but that cross-modal performance after haptic learning was worse than after visual learning (Newell et al., 2005). On the basis of this evidence, the authors suggested that haptic information is recoded into visual coordinates in memory, so that the decrement in cross-modal performance after haptic learning reflects a recoding cost arising from inefficiencies in converting one representation into another.

Our latency data from Experiments 1 and 2 provide no evidence for the recoding cost predicted by the visual recoding hypothesis; no reliable RT differences were observed between learning modalities. While we consider the development of an amodal spatial image the more parsimonious explanation of our results, these data cannot definitively rule out visual recoding as the source of functional equivalence. If visual recoding were responsible, blind participants in the current study should show a different pattern of results, as they are presumably not invoking a visually mediated representation to support behavior. Conversely, if behavior is mediated by amodal spatial images, the presence or absence of vision should have no bearing on post-encoding processes, and performance in the haptic conditions should not differ between the participant groups of Experiments 1-3. Previous findings of similar spatial updating performance between blindfolded-sighted and blind participants after learning object locations through spatial hearing and spatial language provide tentative evidence for amodality and for updating of a common spatial image (Loomis et al., 2002).

Method

Ten blind participants took part in the study (see Table 2 in Appendix A for full participant details). All gave informed consent and received payment in exchange for their time. The procedure was similar to that of the first two experiments. Participants learned ten tactile maps (see Figure 1) and then made aligned and misaligned judgments in two response-mode conditions. The Stay condition was identical to that of the previous experiments. The Rotate (update) condition required participants to walk 90° around the map before making their judgments at test. Note that the learning position was the same for both conditions (the 0° heading) and that initial exploration along the vertical axis encouraged learning of this dominant reference direction for all layouts, independent of their global orientation. Using a 90° rotation instead of the 180° rotation of Experiments 1 and 2 ensured that JRDs were made from a novel point of observation in the update condition. This procedure is often used in studies addressing long-term memory contributions, as it fully dissociates the physical, imagined, and learning orientations (Mou et al., 2004). Although our interest in the spatial image concerns working memory, this modification simplifies interpretation of our results: because judgments in the update condition are parallel to the 90-270° axis of the map, there can be no contribution from a disparity between the learning heading and the imagined heading, as can occur with a 180° rotation (see Mou et al., 2004 for a thorough discussion). As in the earlier experiments, evidence for spatial updating is obtained in the Rotate condition if judgments aligned with the orientation at test show a speed-accuracy advantage over those that are misaligned. However, because we now use a 90° rather than a 180° rotation, updating no longer appears as a reversal of the alignment effect with respect to the learning orientation.
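The geometric argument for the 90° rotation can be checked with a few lines of arithmetic. The sketch assumes headings in the map's frame (learning heading = 0°), that the judgments aligned and misaligned at test differ by 180°, and that the participant's heading at test equals the rotation walked around the map. It reports how far each judgment's imagined heading lies from the learning heading. The function names are hypothetical.

```python
def angular_difference(a_deg: float, b_deg: float) -> float:
    """Smallest absolute difference between two headings, in degrees (0 to 180)."""
    d = (a_deg - b_deg) % 360
    return min(d, 360 - d)


def learning_disparities(rotation_deg: float, learning_heading: float = 0.0) -> dict:
    """Disparity from the learning heading for the judgments aligned and misaligned
    at test after walking rotation_deg around the map
    (assumes the misaligned heading is the aligned heading + 180°)."""
    aligned_at_test = rotation_deg % 360
    misaligned_at_test = (rotation_deg + 180) % 360
    return {
        "aligned": angular_difference(aligned_at_test, learning_heading),
        "misaligned": angular_difference(misaligned_at_test, learning_heading),
    }


print("180° walk:", learning_disparities(180))
print("90° walk:", learning_disparities(90))
```

After a 180° walk, the judgment aligned at test is maximally disparate from the learning heading, while the misaligned one coincides with it. An updating advantage and a learning-orientation advantage therefore pull in opposite directions. After a 90° walk, both judgments lie 90° from the learning heading, so any aligned-vs.-misaligned difference can be attributed to the orientation at test.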

Results

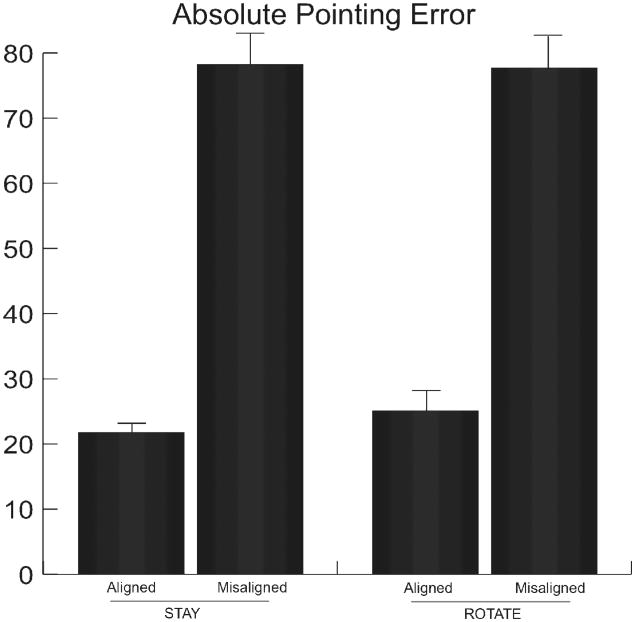

The mean values for the three dependent measures of Experiment 3 are summarized in Table 1 of the Appendix. Replicating the results of the first two experiments, performance in the Stay condition showed a clear alignment effect: trials aligned with the testing (and learning) orientation were made faster and more accurately than misaligned trials, with a 1.5 s advantage in orientation time, a 2.1 s advantage in turning time, and a 56° reduction in pointing error. In support of updating of the spatial image, the same pattern of results was observed in the Rotate condition, with a 2.7 s advantage in orientation time, a 3.1 s advantage in turning time, and a 52.6° reduction in pointing error for aligned vs. misaligned judgments. Figure 5 shows aligned and misaligned pointing error for the Stay and Rotate conditions for the blind participants.

Figure 5.

Mean absolute pointing error for Experiment 3, blocked by response type condition. Error bars represent 1 SEM.

A 2 (alignment) by 2 (response type) repeated measures ANOVA was conducted for each of the dependent measures of orientation time, turning time, and absolute pointing error. Significant main effects of alignment were observed for all dependent measures, with aligned judgments made faster and more accurately than misaligned judgments: orientation time, F (1, 9) = 6.374, p = 0.032, η2 = 0.41; turning time, F (1, 9) = 8.073, p = 0.019, η2 = 0.47; and absolute pointing error, F (1, 9) = 12.962, p = 0.001, η2 = 0.59. As would be expected given accurate updating, there was neither a main effect of response mode, all ps > 0.54 and η2 < 0.043, nor a response mode by alignment interaction, all ps > 0.46 and η2 < 0.043.

Although all of our participants were functionally blind (e.g., they used a long cane or dog guide), the sample was not homogeneous; thus, it is possible that factors such as age of onset of blindness or residual light perception had an unintended effect on our results. We believe this is unlikely, given the similar performance across blind participants, the fact that none had more than light perception and minimal near-shape perception, and the average duration of stable blindness of 37 years for the sample (see Table 2 in Appendix A). However, to address these factors, we performed additional sub-analyses on the error data. A mixed-model ANOVA with group (total blindness vs. residual vision) as a between-subjects factor and repeated measures on alignment and response mode revealed main effects of alignment, F (1, 9) = 42.383, p = 0.001, η2 = 0.57, and group, F (1, 9) = 4.631, p = 0.04, η2 = 0.13. There was no main effect of response mode (Stay or Rotate) and no significant interaction, all ps > 0.15 and η2 < 0.06. Subsequent t-tests on aligned and misaligned performance showed that the main effect of group was driven by differences in misaligned performance, with the totally blind participants showing greater error than participants with residual vision (93.2° vs. 62.7°), t (18) = 2.014, p = 0.05. Given the extremely small sizes of our blind subgroups and the associated variability, it is difficult to draw definitive conclusions from these results. What is clear from the data, however, is that participants in both blind groups showed a strong alignment effect. While the totally blind participants performed reliably worse than those with some residual vision on misaligned trials, this finding is not particularly meaningful, as both groups were quite inaccurate. Of greater note, the totally and residually blind groups showed comparable and accurate performance on aligned trials in both the Stay condition (23.1° vs. 20.5°) and the Rotate condition (29.4° vs. 21.2°), respectively. Formal analyses comparing the eight congenitally blind participants with the two adventitiously blind participants are not appropriate given the extremely small and unbalanced samples, but it is worth noting informally that both groups showed a similar alignment effect across Stay and Rotate conditions. Mean errors for the congenitally and adventitiously blind groups, respectively, were: Stay aligned (22.1° vs. 20.4°), Rotate aligned (24.1° vs. 29.3°), Stay misaligned (80.1° vs. 70.6°), and Rotate misaligned (78.2° vs. 75.9°).
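Two of the sample summaries above can be reproduced from the appendix tables. The first is the mean duration of stable blindness (Table 2). The second is the overall Experiment 3 means in Table 1, which should equal the subgroup means weighted by group size: eight congenitally and two adventitiously blind participants, per the Onset column of Table 2. The sketch assumes each participant contributes equally to their subgroup mean. The names `pooled_mean` and `SUBGROUP_ERRORS` are illustrative.

```python
# "Years (stable)" column of Table 2, in row order.
YEARS_STABLE = [33, 57, 39, 55, 28, 25, 62, 32, 16, 20]
mean_years = sum(YEARS_STABLE) / len(YEARS_STABLE)


def pooled_mean(congenital: float, adventitious: float,
                n_congenital: int = 8, n_adventitious: int = 2) -> float:
    """Size-weighted mean of two subgroup means (8 congenital, 2 adventitious by default)."""
    total = n_congenital + n_adventitious
    return (n_congenital * congenital + n_adventitious * adventitious) / total


# Subgroup mean pointing errors (degrees) quoted in the text: (congenital, adventitious).
SUBGROUP_ERRORS = {
    "Rotate aligned": (24.1, 29.3),
    "Stay misaligned": (80.1, 70.6),
    "Rotate misaligned": (78.2, 75.9),
}

print(f"Mean years of stable blindness: {mean_years:.1f}")
for cell, (congenital, adventitious) in SUBGROUP_ERRORS.items():
    print(f"{cell}: pooled {pooled_mean(congenital, adventitious):.2f}°")
```

The pooled values agree with the Table 1 means for the Rotate aligned (25.14°), Stay misaligned (78.22°), and Rotate misaligned (77.71°) cells to within the rounding of the subgroup means to one decimal place. The mean of 36.7 years matches the roughly 37 years quoted above.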

Discussion

The results of Experiment 3 are unequivocal with respect to the questions motivating the study. Our first question was whether blind map learners show alignment effects similar to those of their sighted counterparts. The results of the Stay condition confirmed that aligned judgments were made faster and more accurately than misaligned judgments. The almost identical advantage for aligned trials after rotation provides a definitive answer to our second question, whether blind participants show evidence of haptic updating. Had they not updated for their rotation after walking 90° to a novel orientation, i.e., had they responded as if their current heading were the same as at learning, systematic errors of at least 90° would be expected for both aligned and misaligned judgments. However, not only were aligned judgments made significantly faster and more accurately than misaligned judgments in the Rotate condition, but performance in the Stay and Rotate conditions also did not reliably differ. Indeed, the magnitude of the alignment effect was similar in the two conditions across dependent measures (see Figure 5 for pointing error): orientation time, 1.5 vs. 2.7 s; turning time, 2.1 vs. 3.1 s; and pointing error, 56° vs. 52° for the Stay vs. Rotate conditions, respectively. These results provide clear evidence for both a haptic alignment effect and accurate spatial updating in the blind.
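The prediction that a non-updating participant would err by about 90° follows from simple bearing arithmetic. In the sketch below, a target's egocentric bearing is its direction in the map frame minus the participant's heading. A participant who ignores the 90° walk computes bearings from the learning heading (0°) instead of the true heading (90°). The function names and target directions are hypothetical illustrations.

```python
def wrap180(angle_deg: float) -> float:
    """Wrap an angle to the interval [-180, 180)."""
    return (angle_deg + 180) % 360 - 180


def egocentric_bearing(target_dir_map: float, heading_map: float) -> float:
    """Direction of a target relative to the body, with both angles in the map's frame."""
    return wrap180(target_dir_map - heading_map)


def pointing_error(target_dir_map: float, true_heading: float, assumed_heading: float) -> float:
    """Absolute error when the response is computed from assumed_heading
    instead of the participant's true heading."""
    correct = egocentric_bearing(target_dir_map, true_heading)
    response = egocentric_bearing(target_dir_map, assumed_heading)
    return abs(wrap180(response - correct))


# After walking 90° around the map, the true heading in map coordinates is 90°.
for target in (0, 45, 135, 225, 315):
    print(target, "updated:", pointing_error(target, 90, 90),
          "not updated:", pointing_error(target, 90, 0))
```

Because the offset is the same for every target, a failure to update would appear as a systematic error equal to the full, unregistered 90° rotation on aligned and misaligned trials alike, before any response noise is added.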

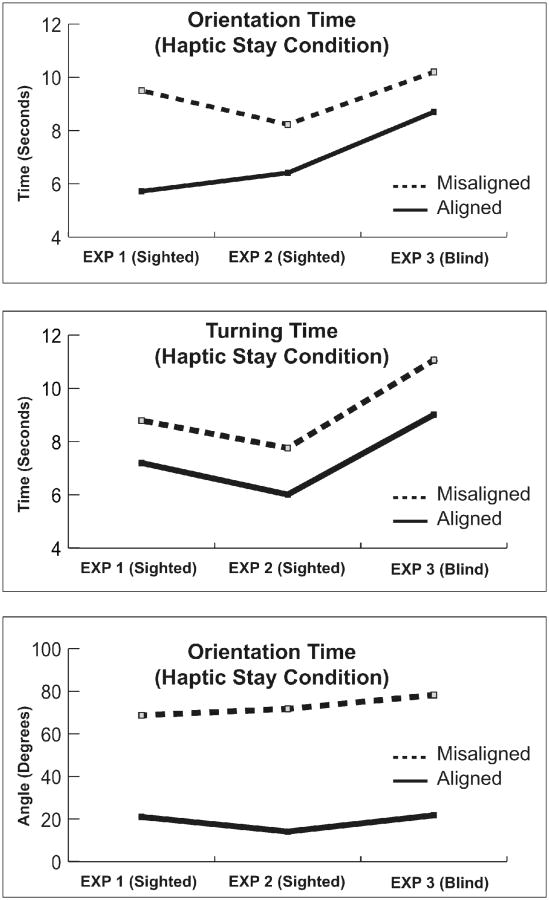

Experiment 3 also aimed to address whether visual recoding could account for the functional equivalence observed in Experiments 1 and 2. Our data do not support the recoding hypothesis. Results from blind participants show a pattern of latencies and errors highly similar to that observed in the analogous haptic conditions with blindfolded-sighted participants in the previous studies. Figure 6 shows the results for our three dependent measures of orientation time, turning time, and absolute pointing error for the identical Stay condition used in each of the three experiments (comparisons are not shown for the various rotate conditions because of methodological differences between studies). As is clear from Figure 6, comparable speed-accuracy benefits were observed for aligned vs. misaligned trials in the blind and blindfolded-sighted groups. The slightly larger mean values for the blind participants are not surprising given the smaller sample and greater variability; importantly, the magnitude of the effect was similar between groups across measures. We interpret the absence of reliable differences between the haptic and visual conditions of Experiments 1 and 2, coupled with the similar performance in haptic conditions between blindfolded-sighted and blind participants in Experiments 1-3, as consistent with the development of an amodal spatial image rather than a visually recoded representation. The similar performance of the blind subgroups on all aligned trials, especially in the Rotate condition, which requires updating, provides additional evidence for the amodal hypothesis and shows that visual recoding cannot account for our results.

Figure 6.

Mean orientation time (top panel), turning time (middle panel), and absolute pointing error (bottom panel) for the haptic Stay conditions of Exps 1-3. The figure shows a similar pattern of performance for blind and sighted participants: aligned judgments were faster and more accurate than misaligned judgments for all participant groups across experiments.

General Discussion

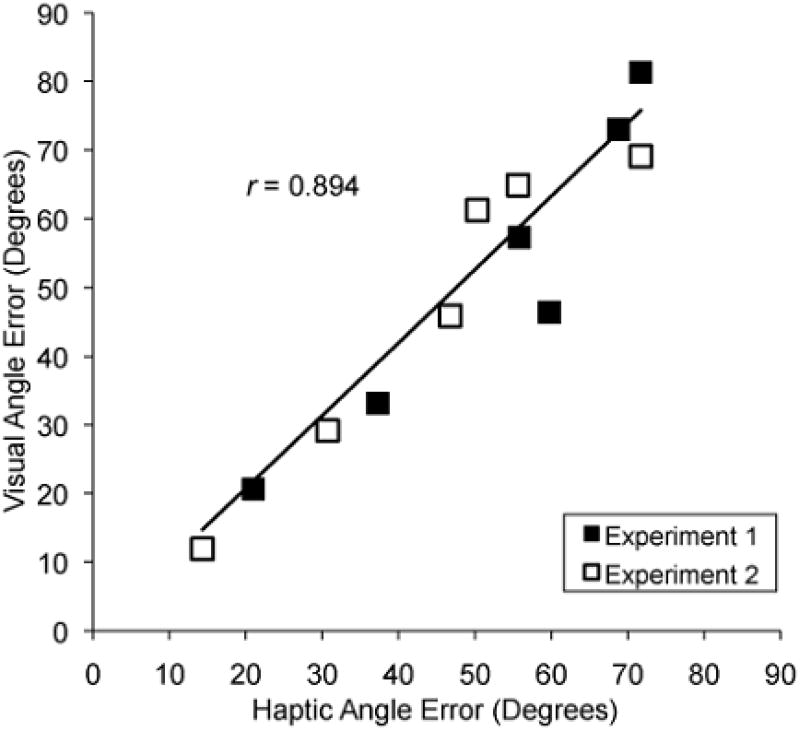

The most important finding from these experiments is the nearly identical mean response times and mean absolute pointing errors after haptic and visual learning of spatial layouts in all conditions. These results provide strong evidence for functional equivalence of spatial images from touch and vision. Figure 7 presents the striking similarity of haptic and visual error data, averaged over participants, from all conditions of Experiments 1 and 2. The tight covariation is indicated by the high correlation coefficient (r (12) = 0.894, p < 0.01).

Figure 7.

Scatterplot showing correlation of mean absolute pointing error for haptic and visual trials by experiment. The solid line represents identical performance for the two modalities.

The findings from the various haptic map learning conditions are important in their own right. Results from the Stay conditions across experiments clearly demonstrate that haptic map learning is susceptible to the same alignment biases long known for visual map learning (Levine et al., 1982; Presson & Hazelrigg, 1984). Beyond the near-identical encoding biases between input modalities, performance in conditions requiring a mental transformation of the representation (i.e., the Rotate Around Map condition of Experiment 2 and the Rotate condition of Experiment 3) provides clear support for haptic updating of the spatial image. The results of Experiment 3 are especially important, as previous work has suggested that blind persons perform poorly on updating tasks that require inferring object relations (Byrne & Salter, 1983; Dodds et al., 1982; Espinosa et al., 1998; Rieser et al., 1982, 1986). The current work, which ensured consistent information availability for blind and sighted participants, adds to a growing body of evidence showing similar updating performance between groups (Giudice, 2004; Loomis et al., 1993; Loomis et al., 2002). The updating observed in these conditions is consistent with the idea that changes in one's heading and/or position can cause an updating of the spatial image held in working memory. Conversely, the results of the Rotate With Map condition of Experiment 1 support this same view: updating was not observed when participants made aligned and misaligned judgments after turning in place while holding the map, i.e., while maintaining a 0° heading difference between the physical (and perceived) body orientation and the map.

Our results are consistent with evidence demonstrating the importance of the reference axis defined between an observer and a visual object array for the formation of spatial memory (Waller, Lippa, & Richardson, 2007), as well as with findings showing that observer movement does not necessarily modify the privileged status of the learning orientation in memory (Mou et al., 2004; Shelton & McNamara, 2001; Waller et al., 2002; Kelly, Avraamides, & Loomis, 2007). Our results also agree with other studies showing functionally equivalent updating performance between encoding modalities such as vision, spatial hearing, and spatial language (Avraamides et al., 2004; Klatzky et al., 2003; Loomis et al., 2002). The current findings extend the equivalence hypothesis to touch and vision, including comparisons between sighted and blind participants.

Finally, we interpret the tight covariation of measures between touch and vision as evidence for the amodality hypothesis, i.e., the development of sensory-independent spatial images in working memory. This interpretation conflicts with the alternative hypothesis of visual recoding (Lehnert & Zimmer, 2008; Pick, 1974; Newell et al., 2005). Support for this alternative would have been obtained if (1) performance in the haptic conditions of Experiments 1 and 2 had been slower than in the analogous visual conditions, indicating a recoding cost, or (2) the blind participants in Experiment 3 had shown a notably different pattern of performance from that of the sighted participants in the first two experiments, indicating use of a fundamentally different process in the absence of visual recoding. Neither outcome was observed in our data. With regard to prediction 1, there were no reliable differences between the mean latencies for touch and vision across the response conditions of Experiments 1 and 2. Notably, RTs were faster after haptic learning 50% of the time for both orientation and turning times, and the speed advantage after visual learning in the remaining cases was only 0.6 s and 0.7 s, respectively, on the same measures. With respect to prediction 2, the similar pattern of results for blind and blindfolded-sighted participants on haptic trials across experiments demonstrates that vision is not necessary for performing the tasks, an outcome that is inconsistent with the view that participants were acting on a visually recoded spatial image.

Of the two remaining hypotheses, we favor the amodality hypothesis over the separate-but-equal hypothesis because of the absence of reliable differences in the latency and error data between the haptic and visual conditions of Experiments 1 and 2. If modality-specific information affected the time needed to access the named perspective in working memory for each JRD, or the processing time required to transform separate spatial images once instantiated, one would expect reliable differences between haptic and visual conditions in orientation time and turning time, respectively. The absence of these differences in our data inclines us toward the amodality hypothesis, that equivalent behavior is mediated by an amodal spatial image. However, this argument for amodality is not airtight, and more decisive tests are needed to distinguish between the two hypotheses. One promising test would rely on interference. If a visual distracter can be found that interferes with the retention of a spatial image in working memory, evidence for amodality would be obtained if that distracter were equally effective in interfering with spatial images of the same locations formed from visual and haptic input.

Acknowledgments

Authors' Note: The authors thank Brandon Friedman, Ryan Magnuson, and Brendan McHugh for their assistance in running participants, Jon Kelly for his assistance in programming and Jonathan Bakdash for his contributions to the statistical analyses. This work was supported by NRSA grant 1F32EY015963-01 to the first author and NSF grant BCS-0745328 to the third author.

Appendix A

Table 1.

Mean (±SEM) orientation time, turning time, and pointing error for aligned and misaligned trials at test across all experiments. Combined rows give the average of visual and haptic trials, as described for Exps 1 and 2.

| Condition | Modality | Orientation time (s), aligned | Orientation time (s), misaligned | Turning time (s), aligned | Turning time (s), misaligned | Pointing error (°), aligned | Pointing error (°), misaligned |
|---|---|---|---|---|---|---|---|
| Experiment 1 (N=23) | | | | | | | |
| Stay | Visual | 7.21 (0.61) | 10.81 (1.50) | 7.51 (1.22) | 9.88 (1.36) | 20.64 (3.48) | 72.93 (11.21) |
| | Haptic | 5.72 (0.39) | 9.50 (1.17) | 7.20 (0.87) | 8.79 (1.13) | 21.00 (3.56) | 68.77 (14.44) |
| | Combined | 6.47 (0.38) | 10.16 (0.95) | 7.36 (0.74) | 9.33 (0.88) | 20.82 (2.39) | 70.85 (9.04) |
| Rotate With Map | Visual | 6.77 (1.07) | 8.44 (1.34) | 6.46 (0.82) | 8.77 (1.26) | 33.14 (5.76) | 81.30 (14.42) |
| | Haptic | 6.74 (0.60) | 8.90 (1.20) | 5.98 (0.56) | 7.88 (0.56) | 37.26 (8.66) | 71.69 (12.08) |
| | Combined | 6.75 (0.60) | 8.67 (0.89) | 6.22 (0.49) | 8.33 (0.68) | 35.20 (5.15) | 76.50 (9.33) |
| Rotate Without Map | Visual | 8.73 (0.93) | 9.37 (1.46) | 7.32 (1.05) | 9.13 (1.26) | 46.44 (11.97) | 57.30 (12.45) |
| | Haptic | 8.12 (0.96) | 8.01 (0.80) | 10.48 (1.73) | 9.35 (2.24) | 59.75 (13.19) | 55.80 (11.57) |
| | Combined | 8.43 (0.75) | 8.69 (0.82) | 8.90 (1.03) | 9.24 (1.27) | 53.10 (8.86) | 56.55 (8.40) |
| Experiment 2 (N=24) | | | | | | | |
| Stay | Visual | 6.55 (0.70) | 7.89 (1.07) | 5.31 (0.37) | 8.01 (0.69) | 11.87 (1.67) | 69.03 (13.02) |
| | Haptic | 6.41 (0.57) | 8.23 (1.11) | 6.01 (0.54) | 7.76 (0.75) | 14.11 (2.21) | 71.74 (12.93) |
| | Combined | 6.48 (0.45) | 8.06 (0.76) | 5.66 (0.33) | 7.89 (0.51) | 12.99 (1.38) | 70.38 (9.08) |
| Rotate Map | Visual | 7.04 (0.81) | 7.60 (0.98) | 6.27 (0.42) | 7.06 (0.97) | 45.93 (10.08) | 61.24 (12.07) |
| | Haptic | 7.22 (0.78) | 8.51 (1.22) | 6.97 (0.62) | 6.71 (0.71) | 46.77 (10.26) | 50.31 (11.77) |
| | Combined | 7.13 (0.56) | 8.05 (0.77) | 6.62 (0.38) | 6.88 (0.59) | 46.35 (7.11) | 55.77 (8.38) |
| Rotate Around Map | Visual | 6.96 (0.75) | 8.78 (1.13) | 5.71 (0.45) | 10.57 (1.94) | 29.17 (6.21) | 64.53 (12.60) |
| | Haptic | 6.35 (0.81) | 7.42 (1.13) | 6.16 (0.58) | 7.71 (0.73) | 30.17 (6.55) | 55.64 (12.61) |
| | Combined | 6.66 (0.55) | 8.10 (0.80) | 5.93 (0.36) | 9.14 (1.05) | 29.94 (4.50) | 60.08 (8.84) |
| Experiment 3 (N=10) | | | | | | | |
| Stay | Haptic (Blind) | 8.70 (0.59) | 10.20 (1.31) | 9.01 (0.82) | 11.06 (1.23) | (2.28) | 78.22 (11.74) |
| Rotate | Haptic (Blind) | 8.24 (0.77) | 10.96 (1.26) | 7.89 (0.82) | 10.94 (1.73) | 25.14 (4.53) | 77.71 (11.91) |

Table 2. Blind Participant Information.

Blind participant details from Experiment 3.

| Sex | Etiology of Blindness | Residual Vision | Age | Onset | Years (stable) |
|---|---|---|---|---|---|
| m | Leber's Congenital Amaurosis | light & minimal shape perception | 34 | birth | 33 |
| m | Congenital Glaucoma | none | 57 | birth | 57 |
| m | Leber's Hereditary Optic Neuropathy | none | 39 | 26 | 39 |
| m | Retinopathy of Prematurity | none | 55 | birth | 55 |
| f | Unknown Diagnosis | light perception | 28 | birth | 28 |
| m | Retinitis Pigmentosa | none | 49 | 24 | 25 |
| m | Retinopathy of Prematurity | none | 62 | birth | 62 |
| m | Retinitis Pigmentosa | light perception | 32 | birth | 32 |
| f | Retinitis Pigmentosa | light & minimal shape perception | 51 | birth | 16 |
| m | Congenital Glaucoma | light & minimal shape perception | 20 | birth | 20 |

Footnotes

Unlike Waller et al. (2002), we define aligned and misaligned judgments in terms of the expected orientation of the spatial image of the map relative to the self. Under the assumption that the spatial image is externalized in the way that a perceptual representation is, we assume that the spatial image, at the moment of testing, has the same position and orientation relative to the self as the physical map. (As the data reveal, however, this latter assumption is not always supported.) Thus, if the spatial image is updated by 180° with respect to the self as the result of a 180° rotation by the participant, a judgment that was misaligned during learning becomes aligned during testing. In contrast, Waller et al. (2002), as well as other researchers, define alignment and misalignment with respect to the view of the map during the learning phase. For these authors, a judgment defined as aligned during learning continued to be defined as aligned even when the participant reversed orientation with respect to the physical map. We adopt our convention because it is more closely tied to what is represented in working memory when spatial updating is successful.

References

- Avraamides MN, Kelly JW. Multiple systems of spatial memory and action. Cognitive Processing. 2008;9:93–106. doi: 10.1007/s10339-007-0188-5.

- Avraamides MN, Loomis JM, Klatzky RL, Golledge RG. Functional equivalence of spatial representations derived from vision and language: Evidence from allocentric judgments. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2004;30:804–814. doi: 10.1037/0278-7393.30.4.804.

- Avraamides MN, Pantelidou S. Does body orientation matter when reasoning about depicted or described scenes? In: Freksa C, Newcombe NS, Gärdenfors P, Wölfl S, editors. Spatial Cognition VI: Lecture Notes in Artificial Intelligence. Vol. 5248. Berlin: Springer; 2008. pp. 8–21.

- Barber PO, Lederman SJ. Encoding direction in manipulatory space and the role of visual experience. Journal of Visual Impairment & Blindness. 1988;82:99–106.

- Bryant DJ. Representing space in language and perception. Mind & Language. 1997;12:239–264.

- Byrne P, Becker S, Burgess N. Remembering the past and imagining the future: A neural model of spatial memory and imagery. Psychological Review. 2007;114:340–375. doi: 10.1037/0033-295X.114.2.340.

- Byrne RW, Salter E. Distances and directions in the cognitive maps of the blind. Canadian Journal of Psychology. 1983;37:293–299. doi: 10.1037/h0080726.

- Carpenter PA, Eisenberg P. Mental rotation and the frame of reference in blind and sighted individuals. Perception & Psychophysics. 1978;23:117–124. doi: 10.3758/bf03208291.

- Diwadkar VA, McNamara TP. Viewpoint dependence in scene recognition. Psychological Science. 1997;8:302–307.

- Dodds AG, Howarth CI, Carter D. Mental maps of the blind: The role of previous visual experience. Journal of Visual Impairment & Blindness. 1982;76:5–12.

- Espinosa MA, Ungar S, Ochaita E, Blades M, Spencer C. Comparing methods for introducing blind and visually impaired people to unfamiliar urban environments. Journal of Environmental Psychology. 1998;18:277–287.

- Evans GW, Pezdek K. Cognitive mapping: Knowledge of real-world distance and location information. Journal of Experimental Psychology: Human Learning and Memory. 1980;6:13–24.

- Gall-Peters A, May M. Taking spatial perspectives after action- and language-based learning. 48th meeting of the Psychonomic Society; Long Beach, CA; 2007.

- Giudice NA. Navigating novel environments: A comparison of verbal and visual learning. Unpublished dissertation. University of Minnesota, Twin Cities, MN; 2004.

- Giudice NA, Klatzky RL, Loomis JM. Evidence for amodal representations after bimodal learning: Integration of haptic-visual layouts into a common spatial image. Spatial Cognition & Computation. 2009;9:287–304.

- Golledge RG. Tactual strip maps as navigational aids. Journal of Visual Impairment & Blindness. 1991;85:296–301.

- Hollins M, Kelley EK. Spatial updating in blind and sighted people. Perception & Psychophysics. 1988;43:380–388. doi: 10.3758/bf03208809.

- Kelly JW, Avraamides MN, Loomis JM. Sensorimotor alignment effects in the learning environment and in novel environments. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2007;33:1092–1107. doi: 10.1037/0278-7393.33.6.1092.

- Klatzky RL, Golledge RG, Loomis JM, Cicinelli JG, Pellegrino JW. Performance of blind and sighted in spatial tasks. Journal of Visual Impairment & Blindness. 1995;89:70–82.

- Klatzky RL, Lippa Y, Loomis JM, Golledge RG. Encoding, learning, and spatial updating of multiple object locations specified by 3-d sound, spatial language, and vision. Experimental Brain Research. 2003;149:48–61. doi: 10.1007/s00221-002-1334-z.

- Lehnert G, Zimmer HD. Common coding of auditory and visual spatial information in working memory. Brain Research. 2008;1230:158–167. doi: 10.1016/j.brainres.2008.07.005.

- Levine M, Jankovic IN, Palij M. Principles of spatial problem solving. Journal of Experimental Psychology: General. 1982;111:157–175.

- Loomis JM, Klatzky RL, Avraamides M, Lippa Y, Golledge RG. Functional equivalence of spatial images produced by perception and spatial language. In: Mast F, Jäncke L, editors. Spatial processing in navigation, imagery, and perception. Springer; 2007. pp. 29–48.

- Loomis JM, Klatzky RL, Golledge RG, Cicinelli JG, Pellegrino JW, Fry PA. Nonvisual navigation by blind and sighted: Assessment of path integration ability. Journal of Experimental Psychology: General. 1993;122:73–91. doi: 10.1037//0096-3445.122.1.73.

- Loomis JM, Lippa Y, Golledge RG, Klatzky RL. Spatial updating of locations specified by 3-d sound and spatial language. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2002;28:335–345. doi: 10.1037//0278-7393.28.2.335.

- Marmor G, Zaback L. Mental rotation by the blind: Does mental rotation depend on visual imagery? Journal of Experimental Psychology: Human Perception and Performance. 1976;2:515–521. doi: 10.1037//0096-1523.2.4.515.

- May M, Vogeley K. Remembering where on the basis of spatial action and spatial language. International Workshop on Knowing that and Knowing how in Space; Bonn, Germany; 2006.

- McNamara TP, Rump B, Werner S. Egocentric and geocentric frames of reference in memory of large-scale space. Psychonomic Bulletin & Review. 2003;10:589–595. doi: 10.3758/bf03196519.

- Montello DR, Richardson AE, Hegarty M, Provenza M. A comparison of methods for estimating directions in egocentric space. Perception. 1999;28:981–1000. doi: 10.1068/p280981.

- Mou W, McNamara TP, Valiquette CM, Rump B. Allocentric and egocentric updating of spatial memories. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2004;30:142–157. doi: 10.1037/0278-7393.30.1.142.

- Newell FN, Ernst MO, Tjan BS, Bülthoff HH. Viewpoint dependence in visual and haptic object recognition. Psychological Science. 2001;12:37–42. doi: 10.1111/1467-9280.00307.

- Newell FN, Woods AT, Mernagh M, Bülthoff HH. Visual, haptic and crossmodal recognition of scenes. Experimental Brain Research. 2005;161:233–242. doi: 10.1007/s00221-004-2067-y.

- Pascual-Leone A, Hamilton R. The metamodal organization of the brain. In: Casanova C, Ptito M, editors. Vision: From neurons to cognition. Progress in Brain Research, Vol. 134, Ch. 27. Amsterdam: Elsevier; 2001. pp. 427–445.

- Pasqualotto A, Finucane CM, Newell FN. Visual and haptic representations of scenes are updated with observer movement. Experimental Brain Research. 2005;166:481–488. doi: 10.1007/s00221-005-2388-5.

- Pasqualotto A, Newell FN. The role of visual experience on the representation and updating of novel haptic scenes. Brain and Cognition. 2007;65:184–194. doi: 10.1016/j.bandc.2007.07.009.

- Pick HL Jr. Visual coding of nonvisual spatial information. In: Liben L, Patterson A, Newcombe N, editors. Spatial representation and behavior across the life span. New York: Academic Press; 1974. pp. 39–61.

- Presson CC, DeLange N, Hazelrigg MD. Orientation specificity in spatial memory: What makes a path different from a map of the path? Journal of Experimental Psychology: Learning, Memory, and Cognition. 1989;15:887–897. doi: 10.1037//0278-7393.15.5.887.

- Presson CC, Hazelrigg MD. Building spatial representations through primary and secondary learning. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1984;10:716–722. doi: 10.1037//0278-7393.10.4.716.

- Rieser JJ, Guth DA, Hill EW. Mental processes mediating independent travel: Implications for orientation and mobility. Journal of Visual Impairment & Blindness. 1982;76:213–218.

- Rieser JJ, Guth DA, Hill EW. Sensitivity to perspective structure while walking without vision. Perception. 1986;15:173–188. doi: 10.1068/p150173.

- Roskos-Ewoldsen B, McNamara TP, Shelton AL, Carr W. Mental representations of large and small spatial layouts are orientation dependent. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1998;24:215–226. doi: 10.1037//0278-7393.24.1.215.

- Rossano MJ, Moak J. Spatial representations acquired from computer models: Cognitive load, orientation specificity and the acquisition of survey knowledge. British Journal of Psychology. 1998;89:481–497.

- Rossano MJ, Warren DH. The importance of alignment in blind subjects' use of tactual maps. Perception. 1989;18:805–816. doi: 10.1068/p180805.

- Shelton AL, McNamara TP. Systems of spatial reference in human memory. Cognitive Psychology. 2001;43:274–310. doi: 10.1006/cogp.2001.0758.

- Shelton AL, McNamara TP. Orientation and perspective dependence in route and survey learning. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2004;30:158–170. doi: 10.1037/0278-7393.30.1.158.

- Shepard RN, Cooper LA. Mental images and their transformations. Cambridge: MIT Press; 1982.

- Sholl MJ, Bartels GP. The role of self-to-object updating in orientation-free performance on spatial-memory tasks. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2002;28:422–436. doi: 10.1037//0278-7393.28.3.422.

- Sholl MJ, Nolin TL. Orientation specificity in representations of place. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1997;23:1494–1507.

- Simons DJ, Wang RF. Perceiving real-world viewpoint changes. Psychological Science. 1998;9:315–320.

- Simons DJ, Wang RF, Roddenberry D. Object recognition is mediated by extraretinal information. Perception & Psychophysics. 2002;64:521–530. doi: 10.3758/bf03194723.

- Ungar S, Blades M, Spencer C. Mental rotation of a tactile layout by young visually impaired children. Perception. 1995;24:891–900. doi: 10.1068/p240891.

- Waller D, Hodgson E. Transient and enduring spatial representations under disorientation and self-rotation. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2006;32:867–882. doi: 10.1037/0278-7393.32.4.867.

- Waller D, Lippa Y, Richardson A. Isolating observer-based reference directions in human spatial memory: Head, body, and the self-to-array axis. Cognition. 2008;106:157–183. doi: 10.1016/j.cognition.2007.01.002.

- Waller D, Montello DR, Richardson AE, Hegarty M. Orientation specificity and spatial updating of memories for layouts. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2002;28:1051–1063. doi: 10.1037//0278-7393.28.6.1051.

- Wang RF, Simons DJ. Active and passive scene recognition across views. Cognition. 1999;70:191–210. doi: 10.1016/s0010-0277(99)00012-8.

- Wang RF, Spelke ES. Updating egocentric representations in human navigation. Cognition. 2000;77:215–250. doi: 10.1016/s0010-0277(00)00105-0.

- Wraga M, Creem-Regehr SH, Proffitt DR. Spatial updating of virtual displays during self- and display rotation. Memory & Cognition. 2004;32:399–415. doi: 10.3758/bf03195834.

- Yamamoto N, Shelton AL. Visual and proprioceptive representations in spatial memory. Memory & Cognition. 2005;33:140–150. doi: 10.3758/bf03195304.

- Yamamoto N, Shelton AL. Orientation dependence of spatial memory acquired from auditory experience. Psychonomic Bulletin & Review. 2009;16:301–305. doi: 10.3758/PBR.16.2.301.