Abstract

Stochastic gradient-based Monte Carlo methods such as stochastic gradient Langevin dynamics are useful tools for posterior inference on large scale datasets in many machine learning applications. These methods scale to large datasets by using noisy gradients calculated using a mini-batch or subset of the dataset. However, the high variance inherent in these noisy gradients degrades performance and leads to slower mixing. In this paper, we present techniques for reducing variance in stochastic gradient Langevin dynamics, yielding novel stochastic Monte Carlo methods that improve performance by reducing the variance in the stochastic gradient. We show that our proposed method has better theoretical guarantees on convergence rate than stochastic Langevin dynamics. This is complemented by impressive empirical results obtained on a variety of real world datasets, and on four different machine learning tasks (regression, classification, independent component analysis and mixture modeling). These theoretical and empirical contributions combine to make a compelling case for using variance reduction in stochastic Monte Carlo methods.

1 Introduction

Monte Carlo methods are the gold standard in Bayesian posterior inference due to their asymptotic convergence properties; however convergence can be slow in large models due to poor mixing. Gradient-based Monte Carlo methods such as Langevin Dynamics and Hamiltonian Monte Carlo [10] allow us to use gradient information to more efficiently explore posterior distributions over continuous-valued parameters. By traversing contours of a potential energy function based on the posterior distribution, these methods allow us to make large moves in the sample space. Although gradient-based methods are efficient in exploring the posterior distribution, they are limited by the computational cost of computing the gradient and evaluating the likelihood on large datasets. As a result, stochastic variants are a popular choice when working with large data sets [15].

Stochastic gradient methods [13] have long been used in the optimization community to decrease the computational cost of gradient-based optimization algorithms such as gradient descent. These methods replace the (expensive, but accurate) gradient evaluation with a noisy (but computationally cheap) gradient evaluation on a random subset of the data. With appropriate scaling, this gradient evaluated on a random subset of the data acts as a proxy for the true gradient. A carefully designed schedule of step sizes ensures convergence of the stochastic algorithm.

A similar idea has been employed to design stochastic versions of gradient-based Monte Carlo methods [15, 1, 2, 9]. By evaluating the derivative of the log likelihood on only a small subset of data points, we can drastically reduce computational costs. However, using stochastic gradients comes at a cost: While the resulting estimates are unbiased, they do have very high variance. This leads to an increased probability of selecting paths with high deviation from the true gradient, leading to slower convergence.

A number of variations have been proposed on the basic stochastic gradient Langevin dynamics (Sgld) model of [15]: [4] incorporates a momentum term to improve posterior exploration; [6] proposes using additional variables to stabilize fluctuations; [12] proposes modifications to facilitate exploration of the probability simplex; [7] provides sampling solutions for correlated data. However, none of these methods directly tries to reduce the variance in the computed stochastic gradient.

As was the case with the original Sgld algorithm, we look to the optimization community for inspiration, since high variance is also detrimental in stochastic gradient based optimization. A plethora of variance reduction techniques have recently been proposed to alleviate this issue for the stochastic gradient descent (Sgd) algorithm [8, 5, 14]. By incorporating a carefully designed (usually unbiased) term into the update sequence of Sgd, these methods reduce the variance that arises due to the stochastic gradients in Sgd, thereby providing strong theoretical and empirical performance.

Inspired by these successes in the optimization community, we propose methods for reducing the variance in stochastic gradient Langevin dynamics. Our approach bridges the gap between the faster (in terms of iterations) convergence of non-stochastic Langevin dynamics, and the faster per-iteration speed of Sgld. While our approach draws its motivation from the stochastic optimization literature, it is to our knowledge the first approach that aims to directly reduce variance in a gradient-based Monte Carlo method. While our focus is on Langevin dynamics, our approach is easily applicable to other gradient-based Monte Carlo methods.

Main Contributions

We propose a new Langevin algorithm designed to reduce variance in the stochastic gradient, with minimal additional computational overhead. We also provide a memory efficient variant of our algorithm. We demonstrate theoretical convergence to the true posterior under reasonable assumptions, and show that the rate of convergence has a tighter bound than one previously shown for Sgld. We complement these theoretical results with empirical evaluation showing impressive speed-ups versus a standard Sgld algorithm, on a variety of machine learning tasks such as regression, classification, independent component analysis and mixture modeling.

2 Preliminaries

Let $X = \{x_i\}_{i=1}^{N}$ be a set of data items modeled using a likelihood function $p(X \mid \theta) = \prod_{i=1}^{N} p(x_i \mid \theta)$, where the parameter θ has prior distribution p(θ). We are interested in sampling from the posterior distribution $p(\theta \mid X) \propto p(\theta) \prod_{i=1}^{N} p(x_i \mid \theta)$. If N is large, standard Langevin Dynamics is not feasible due to the high cost of repeated gradient evaluations; a more scalable approach is to use a stochastic variant [15], which we will refer to as stochastic gradient Langevin dynamics, or Sgld. Sgld uses a classical Robbins-Monro stochastic approximation to the true gradient [13]. At each iteration t of the algorithm, a subset $X_t = \{x_{t1}, \dots, x_{tn}\}$ of the data is sampled and the parameters are updated by using only this subset of data, according to

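$$\Delta\theta_t = \frac{h_t}{2}\left(\nabla \log p(\theta_t) + \frac{N}{n}\sum_{i=1}^{n} \nabla \log p(x_{ti} \mid \theta_t)\right) + \eta_t \tag{1}$$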

where $\eta_t \sim N(0, h_t)$, and $h_t$ is the learning rate. The step sizes $h_t$ are set such that $\sum_{t} h_t = \infty$ and $\sum_{t} h_t^2 < \infty$. This provides an approximation to a first order Langevin diffusion, with dynamics

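$$d\theta_t = -\frac{1}{2}\nabla U(\theta_t)\,dt + dW_t \tag{2}$$

where U is the unnormalized negative log posterior and $W_t$ is a standard Brownian motion. Equation 2 has stationary distribution ρ(θ) ∝ exp{−U(θ)}. Let ϕ̄ = ∫ ϕ(θ)ρ(θ)dθ, where ϕ represents a test function of interest. For a numerical method that generates samples $\{\theta_t\}_{t=1}^{T}$, let ϕ̂ denote the empirical average $\frac{1}{T}\sum_{t=1}^{T}\phi(\theta_t)$. Furthermore, let ψ denote the solution to the Poisson equation ℒψ = ϕ − ϕ̄, where ℒ is the generator of the diffusion, given by

$$\mathcal{L}\psi = -\frac{1}{2}\nabla U \cdot \nabla\psi + \frac{1}{2}\sum_{i=1}^{d}\frac{\partial^2 \psi}{\partial \theta_i^2} \tag{3}$$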

The decreasing step size $h_t$ in our approximation (Equation 1) means we do not have to incorporate a Metropolis-Hastings step to correct for the discretization error relative to Equation 2; however, it comes at the cost of slowing the mixing rate of the algorithm. We note that, while the discretized Langevin diffusion is Markovian, its convergence guarantees rely on the quality of the approximation, rather than on standard Markov chain Monte Carlo analyses that exploit this Markovian property.
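The Sgld update described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' implementation: the toy model (unit-variance Gaussian likelihood with a standard normal prior on the mean), the function name `sgld_step`, and the particular step-size constants are all assumptions made for the example.

```python
import numpy as np

def sgld_step(theta, X, grad_log_prior, grad_log_lik, h, n, rng):
    """One Sgld update: half a step along a minibatch gradient estimate plus N(0, h) noise."""
    N = X.shape[0]
    idx = rng.integers(0, N, size=n)  # minibatch indices, sampled with replacement
    # Rescaling by N / n makes the minibatch sum an unbiased estimate of the full sum.
    grad_lik = (N / n) * grad_log_lik(X[idx], theta).sum(axis=0)
    eta = rng.normal(0.0, np.sqrt(h), size=theta.shape)  # variance h, so std sqrt(h)
    return theta + 0.5 * h * (grad_log_prior(theta) + grad_lik) + eta

# Toy model (assumption): x_i ~ N(theta, 1) with prior theta ~ N(0, 1).
rng = np.random.default_rng(0)
X = rng.normal(2.0, 1.0, size=(1000, 1))
grad_log_prior = lambda theta: -theta
grad_log_lik = lambda x, theta: x - theta  # one row per data point

theta = np.zeros(1)
samples = []
for t in range(3000):
    h_t = 1e-3 * (10 + t) ** -0.55  # decreasing step size (illustrative constants)
    theta = sgld_step(theta, X, grad_log_prior, grad_log_lik, h_t, 10, rng)
    samples.append(theta[0])

# Conjugacy gives the exact posterior mean for this toy model.
posterior_mean = X.sum() / (len(X) + 1)
```

For this conjugate model the exact posterior is available, so the late samples can be compared against `posterior_mean` as a sanity check.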

A second source of error comes from the use of stochastic approximations to the true gradients. This is equivalent to using an approximate generator ℒ̃t = ℒ+ΔVt, where ΔVt = (∇Ut − ∇U) · ∇ and ∇Ut is the current stochastic approximation to ∇U. The key contribution of this paper will be replacing the Robbins-Monro approximation to U with a lower-variance approximation, thus reducing the error.

To see more clearly the effect of the variance of our stochastic approximation on the estimator error, we present a result derived for Sgld by [3]:

Theorem 1

[3] Let $U_t$ be an unbiased estimate of U and $h_t = h$ for all t ∈ {1, . . . , T}. Then under certain reasonable assumptions (concretely, assumption [A1] in Section 4), for a smooth test function ϕ, the MSE of Sgld at time K = hT is bounded, for some C > 0 independent of (T, h), in the following manner:

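$$\mathbb{E}\left(\hat{\phi} - \bar{\phi}\right)^2 \le C\left(\underbrace{\frac{1}{T^2}\sum_{t=1}^{T}\mathbb{E}\left[\lVert \Delta V_t \rVert^2\right]}_{T_1} + \frac{1}{Th} + h^2\right) \tag{4}$$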

Here ||.|| represents the operator norm.

We clearly see that the MSE depends on the variance term $\mathbb{E}[\lVert \Delta V_t \rVert^2]$, which in turn depends on the variance of the noisy stochastic gradients. Since, for consistency, we require h → 0 as T → ∞ (see footnote 1), provided $\mathbb{E}[\lVert \Delta V_t \rVert^2]$ is bounded by a constant τ, the variance term (labeled $T_1$ in Equation 4) ceases to dominate as T → ∞, meaning that the effect of noise in the stochastic gradient becomes negligible. However, outside this asymptotic regime, the effect of the variance term in Equation 4 remains significant. This motivates our efforts in this paper to decrease the variance of the approximate gradient, while maintaining an unbiased estimator.

An easy way to decrease the variance is to use larger minibatches. However, this comes at a considerable computational cost, undermining the whole benefit of using Sgld. Inspired by the recent success of variance reduction techniques in stochastic optimization [14, 8, 5], we take a rather different approach to reduce the effect of noisy gradients.
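To make the cost of the larger-minibatch route concrete, the following short derivation may help. The symbols $g_i$, $G$, $\hat{G}$ and $\bar{g}$ are notation introduced here for illustration, and the minibatch indices $i_1, \dots, i_n$ are assumed to be drawn uniformly with replacement.

```latex
% Notation (introduced for this sketch): g_i = \nabla \log p(x_i \mid \theta),
% G = \sum_{i=1}^{N} g_i, \bar{g} = G / N, and \hat{G} is the minibatch estimate.
\hat{G} = \frac{N}{n}\sum_{j=1}^{n} g_{i_j},
\qquad
\mathbb{E}\big[\hat{G}\big] = \frac{N}{n}\cdot n \cdot \frac{1}{N}\sum_{i=1}^{N} g_i = G,
\qquad
\mathbb{E}\,\big\lVert \hat{G} - G \big\rVert^2
  = \frac{N^2}{n}\cdot\frac{1}{N}\sum_{i=1}^{N}\lVert g_i - \bar{g} \rVert^2 .
```

The estimator is unbiased, but its variance falls only as 1/n: halving it requires doubling the minibatch size, and hence the per-iteration gradient cost.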

3 Variance Reduction for Langevin Dynamics

As we have seen in Section 2, reducing the variance of our stochastic approximation can reduce our estimation error. In this section, we introduce two approaches for variance reduction, based on recent variance reduction algorithms for gradient descent [5, 8]. The first algorithm, Saga-Ld, is appropriate when our bottleneck is computation; it yields improved convergence with minimal additional computational costs over Sgld. The second algorithm, Svrg-Ld, is appropriate when our bottleneck is memory; while its computational cost is generally higher than that of Saga-Ld, its memory requirement is lower, with a memory overhead beyond that of stochastic Langevin dynamics that scales as O(d). In practice, we found that computation was a greater bottleneck in the examples considered, so our experimental section focuses only on Saga-Ld; however, on larger datasets with easily computable gradients, Svrg-Ld may be the optimal choice.

Algorithm 1.

Saga-Ld

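| 1: | Input: $\alpha_0^i = \theta_0 \in \mathbb{R}^d$ for $i \in \{1, \dots, N\}$, step sizes $\{h_t > 0\}_{t=0}^{T-1}$ |

| 2: | $g_\alpha = \sum_{i=1}^{N} \nabla \log p(x_i \mid \alpha_0^i)$ |

| 3: | for t = 0 to T − 1 do |

| 4: | Uniformly randomly pick a set $I_t$ from $\{1, \dots, N\}$ (with replacement) such that $\lvert I_t \rvert = n$ |

| 5: | Randomly draw $\eta_t \sim N(0, h_t)$ |

| 6: | $\theta_{t+1} = \theta_t + \frac{h_t}{2}\left(\nabla \log p(\theta_t) + \frac{N}{n}\sum_{i \in I_t}\left(\nabla \log p(x_i \mid \theta_t) - \nabla \log p(x_i \mid \alpha_t^i)\right) + g_\alpha\right) + \eta_t$ |

| 7: | $\alpha_{t+1}^i = \theta_t$ for $i \in I_t$ and $\alpha_{t+1}^i = \alpha_t^i$ for $i \notin I_t$ |

| 8: | $g_\alpha = g_\alpha + \sum_{i \in I_t}\left(\nabla \log p(x_i \mid \alpha_{t+1}^i) - \nabla \log p(x_i \mid \alpha_t^i)\right)$ |

| 9: | end for |

| 10: | Output: Iterates $\{\theta_t\}_{t=1}^{T}$ |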

3.1 Saga-Ld

The increased variance in Sgld is due to the fact that we only have information from n ≪ N data points at each iteration. However, inspired by a minibatch version of the Saga algorithm [5], we can include information from the remaining data points via an approximate gradient, and partially update the average gradient in each operation. We call this approach Saga-Ld.

Under Saga-Ld, we explicitly store N approximate gradients $\{g_{\alpha^i}\}_{i=1}^{N}$, corresponding to the N data points. Concretely, let $\alpha_t = (\alpha_t^i)_{i=1}^{N}$ be a set of vectors, initialized as $\alpha_0^i = \theta_0$ for all i ∈ [N], and initialize $g_{\alpha^i} = \nabla \log p(x_i \mid \alpha_0^i)$ and $g_\alpha = \sum_{i=1}^{N} g_{\alpha^i}$. As we iterate through the data, if a data point is not selected in the current minibatch, we approximate its gradient with $g_{\alpha^i}$. If $I_t = \{i_{1t}, \dots, i_{nt}\}$ is the minibatch selected at iteration t, this means we approximate the gradient as

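$$\sum_{i=1}^{N} \nabla \log p(x_i \mid \theta_t) \approx \frac{N}{n}\sum_{i \in I_t}\left(\nabla \log p(x_i \mid \theta_t) - g_{\alpha^i}\right) + g_\alpha \tag{5}$$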

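When Equation (5) is used for MAP estimation it corresponds to Saga [5]. However, by injecting noise into the parameter update in the following manner

$$\Delta\theta_t = \frac{h_t}{2}\left(\nabla \log p(\theta_t) + \frac{N}{n}\sum_{i \in I_t}\left(\nabla \log p(x_i \mid \theta_t) - g_{\alpha^i}\right) + g_\alpha\right) + \eta_t, \qquad \eta_t \sim N(0, h_t) \tag{6}$$

we can adapt it for sampling from the posterior. After updating $\theta_{t+1} = \theta_t + \Delta\theta_t$, we let $\alpha_{t+1}^i = \theta_t$ for $i \in I_t$. Note that we do not need to explicitly store the $\alpha_t^i$; instead we just update the corresponding gradients $g_{\alpha^i}$ and the overall approximate gradient $g_\alpha$. The Saga-Ld algorithm is summarized in Algorithm 1.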

The approximation in Equation (6) gives an unbiased estimate of the true gradient, since the minibatch $I_t$ is sampled uniformly at random from [N], and the $\alpha_t^i$ are independent of $I_t$. Saga-Ld offers two key properties: (i) As shown in Section 4, Saga-Ld has better convergence properties than Sgld; (ii) The computational overhead is minimal, since Saga-Ld does not require explicit calculation of the full gradient. Instead, it simply makes use of gradients that are already being calculated in the current minibatch. Combined, we end up with a similar computational complexity to Sgld, with a much better convergence rate.

The only downside of Saga-Ld, when compared with Sgld, is in terms of memory storage. Since we need to store N individual gradients gαi, we typically have a storage overhead of O(Nd) relative to Sgld. Fortunately, in many applications of interest to machine learning, the cost can be reduced to O(N) (please refer to [5] for more details), and in practice the cost of the higher memory requirements is typically outweighed by the improved convergence and low computational cost.
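The procedure described in this subsection can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the authors' code: the function name `saga_ld`, the toy Gaussian model, and the constant step size are hypothetical, and the stored gradients are kept as an explicit (N, d) array, which is the O(Nd) memory overhead discussed above.

```python
import numpy as np

def saga_ld(X, theta0, grad_log_prior, grad_log_lik, step_sizes, n, rng):
    """Sketch of Saga-Ld: Langevin updates using a Saga-style variance-reduced gradient."""
    N = X.shape[0]
    theta = theta0.copy()
    g_i = grad_log_lik(X, theta)  # stored per-datum gradients, shape (N, d)
    g = g_i.sum(axis=0)           # running sum of the stored gradients
    samples = []
    for h in step_sizes:
        idx = rng.integers(0, N, size=n)        # minibatch, sampled with replacement
        fresh = grad_log_lik(X[idx], theta)     # gradients at the current theta
        # Fresh minibatch gradients correct the stale sum g.
        grad_est = (N / n) * (fresh - g_i[idx]).sum(axis=0) + g
        eta = rng.normal(0.0, np.sqrt(h), size=theta.shape)
        theta_next = theta + 0.5 * h * (grad_log_prior(theta) + grad_est) + eta
        # Refresh the stored gradients for the minibatch; the sequential loop keeps
        # g equal to g_i.sum(axis=0) even when an index is drawn more than once.
        for j, i in enumerate(idx):
            g += fresh[j] - g_i[i]
            g_i[i] = fresh[j]
        theta = theta_next
        samples.append(theta.copy())
    return np.array(samples)

# Toy model (assumption): x_i ~ N(theta, 1) with prior theta ~ N(0, 1).
rng = np.random.default_rng(0)
X = rng.normal(2.0, 1.0, size=(1000, 1))
samples = saga_ld(X, np.zeros(1), lambda th: -th, lambda x, th: x - th,
                  step_sizes=[1e-4] * 2000, n=10, rng=rng)
posterior_mean = X.sum() / (len(X) + 1)
```

Each iteration evaluates only n new gradients, so the per-step cost matches Sgld apart from the bookkeeping on `g_i` and `g`.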

3.2 Svrg-Ld

If the memory overhead of Saga-Ld is not acceptable, we can use a variant that reduces storage requirements, at the cost of higher computational demands. The memory complexity for Saga-Ld is high because the approximate gradient $g_\alpha$ is updated at each step. This can be avoided by updating the approximate gradient every m iterations in a single evaluation, and never storing the individual gradients $g_{\alpha^i}$. Concretely, after every m iterations, we evaluate the gradient on the entire data set at the current parameter value θ̃, obtaining $\tilde{g} = \sum_{i=1}^{N} \tilde{g}_i$, where $\tilde{g}_i = \nabla \log p(x_i \mid \tilde{\theta})$ is the current local gradient. g̃ then serves as an approximate gradient until the next global evaluation. This yields an update of the form

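$$\Delta\theta_t = \frac{h_t}{2}\left(\nabla \log p(\theta_t) + \frac{N}{n}\sum_{i \in I_t}\left(\nabla \log p(x_i \mid \theta_t) - \tilde{g}_i\right) + \tilde{g}\right) + \eta_t \tag{7}$$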

Without adding noise ηt the update sequence in Equation (7) corresponds to the stochastic variance reduction gradient descent algorithm [8]. Pseudocode for this procedure is given in Algorithm 2.

Algorithm 2.

Svrg-Ld

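| 1: | Input: $\tilde{\theta} = \theta_0 \in \mathbb{R}^d$, epoch length m, step sizes $\{h_t > 0\}_{t=0}^{T-1}$ |

| 2: | for t = 0 to T − 1 do |

| 3: | if (t mod m = 0) then |

| 4: | $\tilde{\theta} = \theta_t$ |

| 5: | $\tilde{g} = \sum_{i=1}^{N} \nabla \log p(x_i \mid \tilde{\theta})$ |

| 6: | end if |

| 7: | Uniformly randomly pick a set $I_t$ from $\{1, \dots, N\}$ (with replacement) such that $\lvert I_t \rvert = n$ |

| 8: | Randomly draw $\eta_t \sim N(0, h_t)$ |

| 9: | $\theta_{t+1} = \theta_t + \frac{h_t}{2}\left(\nabla \log p(\theta_t) + \frac{N}{n}\sum_{i \in I_t}\left(\nabla \log p(x_i \mid \theta_t) - \nabla \log p(x_i \mid \tilde{\theta})\right) + \tilde{g}\right) + \eta_t$ |

| 10: | end for |

| 11: | Output: Iterates $\{\theta_t\}_{t=1}^{T}$ |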

While the memory requirements are lower, the computational cost is higher, due to the cost of a full update of g̃. Further, convergence may be negatively affected because, as we move further from θ̃, g̃ will be further from the true gradient. In practice, we found Saga-Ld to be a more effective algorithm on the datasets considered, so in the interest of space we relegate further details about Svrg-Ld to the appendix.
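The Svrg-Ld variant can be sketched along the same lines. This is an illustrative NumPy sketch under assumptions: the function name `svrg_ld`, the toy Gaussian model, and the step-size and epoch-length values are hypothetical choices for the example. Only the snapshot `theta_tilde` and the full-gradient sum `g_tilde` are stored, which is where the O(d) memory overhead comes from.

```python
import numpy as np

def svrg_ld(X, theta0, grad_log_prior, grad_log_lik, step_sizes, n, m, rng):
    """Sketch of Svrg-Ld: Langevin updates with a periodically refreshed full gradient."""
    N = X.shape[0]
    theta = theta0.copy()
    samples = []
    for t, h in enumerate(step_sizes):
        if t % m == 0:
            # Full pass over the data: take a snapshot and its exact gradient sum.
            theta_tilde = theta.copy()
            g_tilde = grad_log_lik(X, theta_tilde).sum(axis=0)
        idx = rng.integers(0, N, size=n)  # minibatch, sampled with replacement
        # Each minibatch gradient is evaluated twice: at theta and at the snapshot.
        diff = grad_log_lik(X[idx], theta) - grad_log_lik(X[idx], theta_tilde)
        grad_est = (N / n) * diff.sum(axis=0) + g_tilde
        eta = rng.normal(0.0, np.sqrt(h), size=theta.shape)
        theta = theta + 0.5 * h * (grad_log_prior(theta) + grad_est) + eta
        samples.append(theta.copy())
    return np.array(samples)

# Toy model (assumption): x_i ~ N(theta, 1) with prior theta ~ N(0, 1).
rng = np.random.default_rng(0)
X = rng.normal(2.0, 1.0, size=(1000, 1))
samples = svrg_ld(X, np.zeros(1), lambda th: -th, lambda x, th: x - th,
                  step_sizes=[1e-4] * 2000, n=10, m=100, rng=rng)
posterior_mean = X.sum() / (len(X) + 1)
```

In this toy model the per-datum gradient is linear in θ with the same slope for every data point, so the correction happens to cancel the minibatch noise exactly; that is a property of the example, not of Svrg-Ld in general.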

4 Analysis

Our motivation in this paper was to improve the convergence of Sgld, by reducing the variance of the gradient estimate. As we saw in Theorem 1, a high variance $\mathbb{E}[\lVert \Delta V_t \rVert^2]$, corresponding to noisy stochastic gradients, leads to a large bound on the MSE of a test function. We expand this analysis to show that the algorithms introduced in this paper yield a tighter bound.

Theorem 1 required a number of assumptions, given below in [A1]. Discussion of the reasonableness of these assumptions is provided in [3].

[A1] We assume the functional ψ that solves the Poisson equation ℒψ = ϕ − ϕ̄ is bounded up to 3rd-order derivatives by some function Γ, i.e., $\lVert \mathcal{D}^k \psi \rVert \le C_k \Gamma^{p_k}$, where $\mathcal{D}^k$ is the kth order derivative (for k = 0, 1, 2, 3), and $C_k, p_k > 0$. We also assume that the expectation of Γ on $\{\theta_t\}$ is bounded ($\sup_t \mathbb{E}\,\Gamma^p(\theta_t) < \infty$) and that Γ is smooth such that $\sup_{s \in (0,1)} \Gamma^p(s\theta + (1 - s)\theta') \le C\left(\Gamma^p(\theta) + \Gamma^p(\theta')\right)$, $\forall \theta, \theta'$, $p \le \max_k 2p_k$, for some C > 0.

In our analysis of Saga-Ld and Svrg-Ld, we make the assumptions in [A1], and add the following further assumptions about the smoothness of our gradients:

[A2] We assume that the functions $\log p(x_i \mid \theta)$ are Lipschitz smooth with constant L for all i ∈ [N], i.e. $\lVert \nabla \log p(x_i \mid \theta) - \nabla \log p(x_i \mid \theta') \rVert \le L \lVert \theta - \theta' \rVert$ for all i ∈ [N] and $\theta, \theta' \in \mathbb{R}^d$. We assume that $(\Delta V_t \psi(\theta))^2 \le C' \lVert \nabla U_t(\theta) - \nabla U(\theta) \rVert^2$ for some constant C′ > 0 for all $\theta \in \mathbb{R}^d$, where ψ is the solution to the Poisson equation for our test function. We also assume that $\lVert \nabla \log p(\theta) \rVert \le \sigma$ and $\lVert \nabla \log p(x_i \mid \theta) \rVert \le \sigma$ for some σ and all i ∈ [N] and $\theta \in \mathbb{R}^d$.

The Lipschitz smoothness assumption is very common both in the optimization literature [11] and when working with Itô diffusions [3]. The bound on $(\Delta V_t \psi(\theta))^2$ holds when the gradient norm $\lVert \nabla \psi \rVert$ is bounded.

Loosely, these assumptions encode the idea that the gradients don’t change too quickly, so that we limit the errors introduced by incorporating gradients based on previous values of θ. With these assumptions, we state the following key results for Saga-Ld and Svrg-Ld, which are proved in the supplement.

Theorem 2

Let ht = h for all t ∈ {1, . . . , T }. Under the assumptions [A1],[A2], for a smooth test function ϕ, the MSE of Saga-Ld (in Algorithm 1) at time K = hT is bounded, for some C > 0 independent of (T, h) in the following manner:

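$$\mathbb{E}\left(\hat{\phi} - \bar{\phi}\right)^2 \le C\left(\frac{N^2 \min\left\{\sigma^2,\ \frac{N^2}{n^2}\left(L^2 h^2 \sigma^2 + hd\right)\right\}}{nT} + \frac{1}{Th} + h^2\right) \tag{8}$$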

A similar result can be shown for Svrg-Ld in Algorithm 2:

Theorem 3

Let ht = h for all t ∈ {1, . . . , T }. Under the assumptions [A1],[A2], for a smooth test function ϕ, the MSE of Svrg-Ld (in Algorithm 2) at time K = hT is bounded, for some C > 0 independent of (T, h) in the following manner:

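$$\mathbb{E}\left(\hat{\phi} - \bar{\phi}\right)^2 \le C\left(\frac{N^2 \min\left\{\sigma^2,\ m^2\left(L^2 h^2 \sigma^2 + hd\right)\right\}}{nT} + \frac{1}{Th} + h^2\right) \tag{9}$$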

The result in Theorem 3 is qualitatively equivalent to that in Theorem 2 when m = ⌊N/n⌋. In general, such a choice of m is preferable because, in this case, the overall cost of calculating the full gradient in Algorithm 2 becomes insignificant.

In order to assess the theoretical convergence of our proposed algorithm, we compare the bounds for Svrg-Ld (Theorem 3) and Saga-Ld (Theorem 2) with those obtained for Sgld (Theorem 1). Under the assumptions in this section, it is easy to show that the variance term $T_1$ in Theorem 1 becomes $O(N^2\sigma^2/(Tn))$. In contrast, both Theorem 2 and Theorem 3 show that, due to a reduction in variance, Svrg-Ld and Saga-Ld exhibit a much weaker dependence. More specifically, this is manifested in the form of the following bound:

$$O\left(\frac{N^2 \min\left\{\sigma^2,\ \frac{N^2}{n^2}\left(L^2 h^2 \sigma^2 + hd\right)\right\}}{nT}\right)$$

Note that this is tighter than the corresponding bound on Sgld. We also note that, similar to Sgld, Saga-Ld and Svrg-Ld require h → 0 as T → ∞. In such a scenario, the convergence becomes significantly faster relative to Sgld as h → 0.

5 Experiments

We present our empirical results in this section. We focus on applying our stochastic gradient method to four different machine learning tasks, carried out on benchmark datasets: (i) Bayesian linear regression, (ii) Bayesian logistic regression, (iii) independent component analysis, and (iv) mixture modeling. We focus on Saga-Ld, since in the applications considered, the convergence and computational benefits of Saga-Ld outweigh the memory benefits of Svrg-Ld.

In order to reduce the initial computational costs associated with calculating the initial average gradient, we use a variant of Algorithm 1 that calculates gα (in line 2 of Algorithm 1) in an online fashion and reweights the updates accordingly. Note that such a heuristic is also commonly used in the implementation of Sag and Saga in the context of optimization [14, 5].

In all our experiments, we use a decreasing step size for Sgld as suggested by [15]. In particular, we use $\epsilon_t = a(b + t)^{-\gamma}$, where the parameters a, b and γ are chosen for each dataset to give the best performance of the algorithm on that particular dataset. For Saga-Ld, due to the benefit of variance reduction, we use a simple two-phase constant step size selection strategy. In each of these phases, a constant step size is chosen such that Saga-Ld gives the best performance on the particular dataset. The minibatch size, n, in both Sgld and Saga-Ld is held at a constant value of 10 throughout our experiments. All algorithms are initialized to the same point, and the same sequence of minibatches is pre-generated and used in both algorithms.
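The two step-size strategies can be written down directly. The sketch below is illustrative only: the function names, the switch point `switch_at`, and the two constants `h_first` and `h_second` are hypothetical, since the text does not state how the two phases are delimited or which values were selected.

```python
def sgld_step_size(t, a, b, gamma):
    """Polynomially decaying schedule a * (b + t) ** (-gamma)."""
    return a * (b + t) ** (-gamma)

def two_phase_step_size(t, h_first, h_second, switch_at):
    """Two-phase constant schedule; switch_at is a hypothetical changeover iteration."""
    return h_first if t < switch_at else h_second

# Example values (assumptions, not the settings used in the experiments).
sgld_schedule = [sgld_step_size(t, a=1e-3, b=10.0, gamma=0.55) for t in range(5)]
saga_schedule = [two_phase_step_size(t, 1e-3, 1e-4, switch_at=3) for t in range(5)]
```

The decaying schedule has three coupled parameters to tune per dataset, whereas the two-phase schedule only needs two constants and a changeover point.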

5.1 Regression

We first demonstrate the performance of our algorithm on Bayesian regression. Formally, we are provided with inputs $Z = \{(x_i, y_i)\}_{i=1}^{N}$, where $x_i \in \mathbb{R}^d$ and $y_i \in \mathbb{R}$. The distribution of the ith output $y_i$ is given by $p(y_i \mid x_i) = \mathcal{N}(\beta^\top x_i, \sigma_e)$, where $p(\beta) = \mathcal{N}(0, \lambda^{-1} I)$. Due to conjugacy, the posterior distribution over β is also normal, and the gradients of the log-likelihood and the log-prior are given by $\nabla_\beta \log P(y_i \mid x_i, \beta) = (y_i - \beta^\top x_i)x_i / \sigma_e$ and $\nabla_\beta \log P(\beta) = -\lambda\beta$. We ran experiments on 11 standard UCI regression datasets, summarized in Table 1 (see footnote 2). In each case, we set the prior precision λ = 1, and we partitioned our dataset into training (70%), validation (10%), and test (20%) sets. The validation set is used to select the step size parameters, and we report the mean square error (MSE) evaluated on the test set, using 5-fold cross-validation.
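As a sanity check on the gradients for this model, the sketch below compares closed-form gradients of the Gaussian log-likelihood and log-prior against central finite differences. It treats the second argument of the normal density as a variance (`noise_var`, an assumption about the notation) and uses hypothetical function names; it is not taken from the authors' code.

```python
import numpy as np

def log_lik(beta, x, y, noise_var=1.0):
    """log N(y; beta^T x, noise_var), with noise_var interpreted as a variance."""
    r = y - beta @ x
    return -0.5 * r ** 2 / noise_var - 0.5 * np.log(2 * np.pi * noise_var)

def grad_log_lik(beta, x, y, noise_var=1.0):
    """Gradient of log_lik with respect to beta."""
    return (y - beta @ x) * x / noise_var

def log_prior(beta, lam=1.0):
    """log N(beta; 0, I / lam), up to an additive constant."""
    return -0.5 * lam * (beta @ beta)

def grad_log_prior(beta, lam=1.0):
    """Gradient of log_prior with respect to beta."""
    return -lam * beta

def numerical_grad(f, beta, eps=1e-6):
    """Central finite-difference gradient of a scalar function f at beta."""
    g = np.zeros_like(beta)
    for j in range(beta.size):
        e = np.zeros_like(beta)
        e[j] = eps
        g[j] = (f(beta + e) - f(beta - e)) / (2 * eps)
    return g

rng = np.random.default_rng(0)
beta, x, y = rng.normal(size=3), rng.normal(size=3), 0.7
lik_ok = np.allclose(grad_log_lik(beta, x, y),
                     numerical_grad(lambda b: log_lik(b, x, y), beta), atol=1e-5)
prior_ok = np.allclose(grad_log_prior(beta),
                       numerical_grad(log_prior, beta), atol=1e-5)
```

Both flags should come out true; a sign error in either closed-form gradient would make the corresponding check fail.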

Table 1.

Summary of datasets used for regression.

| Datasets | concrete | noise | parkinson | bike | toms | protein | casp | kegg | 3droad | music | |

|---|---|---|---|---|---|---|---|---|---|---|---|


| N | 1030 | 1503 | 5875 | 17379 | 45730 | 45730 | 53500 | 64608 | 434874 | 515345 | 583250 |

| P | 8 | 5 | 21 | 12 | 96 | 9 | 9 | 27 | 2 | 90 | 77 |

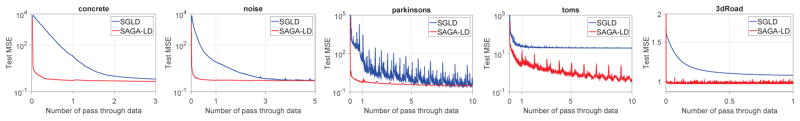

The average test MSE on a subset of datasets is reported in Figure 1. Due to space constraints, we relegate the remaining experimental results to the appendix. As shown in Figure 1, Saga-Ld converges much faster than the Sgld method (taking less than one pass through the whole dataset in many cases). This performance gain is consistent across all the datasets. Furthermore, the step size selection was much simpler for Saga-Ld than Sgld.

Figure 1.

Performance comparison of Sgld and Saga-Ld on a regression task. The x-axis and y-axis represent the number of passes through the entire data and the average test MSE, respectively. Additional experiments are provided in the appendix.

5.2 Classification

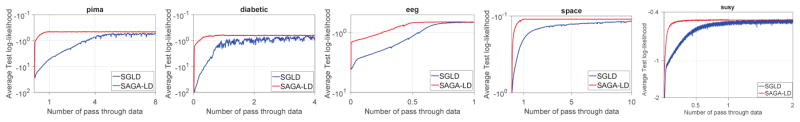

We next turn our attention to classification, using Bayesian logistic regression. In this case, the input is the set $Z = \{(x_i, y_i)\}_{i=1}^{N}$, where $x_i \in \mathbb{R}^d$ and $y_i \in \{0, 1\}$. The distribution of the output $y_i$ for a given sample $x_i$ is given by $P(y_i = 1) = \phi(\beta^\top x_i)$, where $p(\beta) = \mathcal{N}(0, \lambda^{-1} I)$ and $\phi(z) = 1/(1 + \exp(-z))$. Here, the gradients of the log-likelihood and the log-prior are given by $\nabla_\beta \log P(y_i \mid x_i, \beta) = (y_i - \phi(\beta^\top x_i))x_i$ and $\nabla_\beta \log P(\beta) = -\lambda\beta$, respectively. Again, λ is set to 1 for all experiments, and the dataset split and parameter selection method are exactly the same as in our regression experiments. We run experiments on five binary classification datasets in the UCI repository, summarized in Table 2, and report the test set log-likelihood for each dataset, using 5-fold cross-validation. Figure 2 shows the performance of Sgld and Saga-Ld for the classification datasets. As we saw with the regression task, Saga-Ld converges faster than Sgld on all the datasets, demonstrating the efficiency of our algorithm in this setting.
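The logistic regression gradient stated above can be checked numerically in the same way. The following NumPy sketch uses hypothetical function names and a numerically stable form of the Bernoulli log-likelihood; it is an illustration, not the authors' implementation.

```python
import numpy as np

def sigmoid(z):
    """Logistic function 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + np.exp(-z))

def log_lik(beta, x, y):
    """Bernoulli log-likelihood for y in {0, 1}, written with logaddexp for stability."""
    z = beta @ x
    # log sigmoid(z) = -log(1 + exp(-z)) and log(1 - sigmoid(z)) = -log(1 + exp(z))
    return -y * np.logaddexp(0.0, -z) - (1 - y) * np.logaddexp(0.0, z)

def grad_log_lik(beta, x, y):
    """Closed-form gradient (y - sigmoid(beta^T x)) x."""
    return (y - sigmoid(beta @ x)) * x

def numerical_grad(f, beta, eps=1e-6):
    """Central finite-difference gradient of a scalar function f at beta."""
    g = np.zeros_like(beta)
    for j in range(beta.size):
        e = np.zeros_like(beta)
        e[j] = eps
        g[j] = (f(beta + e) - f(beta - e)) / (2 * eps)
    return g

rng = np.random.default_rng(0)
beta, x = rng.normal(size=4), rng.normal(size=4)
checks = [np.allclose(grad_log_lik(beta, x, y),
                      numerical_grad(lambda b: log_lik(b, x, y), beta), atol=1e-5)
          for y in (0, 1)]
```

The check is run for both labels, since the two branches of the log-likelihood contribute different terms to the gradient.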

Table 2.

Summary of the datasets used for classification.

| Datasets | pima | diabetic | eeg | space | susy |

|---|---|---|---|---|---|


| N | 768 | 1151 | 14980 | 58000 | 100000 |

| d | 8 | 20 | 15 | 9 | 18 |

Figure 2.

Comparison of performance of Sgld and Saga-Ld for Bayesian logistic regression. The x-axes and y-axes represent the number of effective passes through the dataset and the test log-likelihood, respectively.

5.3 Bayesian Independent Component Analysis

To evaluate performance under a Bayesian Independent Component Analysis (ICA) model, we assume our dataset is distributed according to

| (10) |

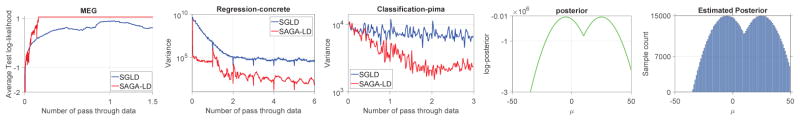

where W ∈ ℝd×d, , and . The gradient of the log-likelihood and the log-prior are where for all j ∈ [d] and ∇W log(p(W)) = −λW respectively. All other parameters are set as before. We used a standard ICA dataset for our experiment3, comprisein 17730 time-points with 122 channels from which we extracted the first 10 channels. Further experimental details are similar to those for regression and classification. The performance (in terms of test set log likelihood) of Sgld and Saga-Ld for the ICA task is shown in Figure 3. As seen in Figure 3, similar to the regression and classification tasks, Saga-Ld outperforms Sgld in the ICA task.
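For readers who want to check an ICA log-likelihood gradient numerically, here is a small NumPy sketch. It assumes the common ICA source density p(y) = 1/(4 cosh²(y/2)) and a square unmixing matrix W; both the density and the function names are assumptions made for illustration and may differ from the exact model used in the experiments.

```python
import numpy as np

def log_lik(W, x):
    """log |det W| + sum_j log p(w_j^T x), assuming p(y) = 1 / (4 cosh(y / 2) ** 2)."""
    y = W @ x
    _, logabsdet = np.linalg.slogdet(W)
    # log cosh(u) = logaddexp(u, -u) - log 2, which avoids overflow for large |u|.
    log_cosh = np.logaddexp(y / 2, -y / 2) - np.log(2.0)
    return logabsdet + np.sum(-np.log(4.0) - 2.0 * log_cosh)

def grad_log_lik(W, x):
    """Closed-form gradient: inverse transpose of W minus outer(tanh(Wx / 2), x)."""
    y = W @ x
    return np.linalg.inv(W).T - np.outer(np.tanh(y / 2), x)

def numerical_grad(f, W, eps=1e-6):
    """Central finite-difference gradient of a scalar function f with respect to W."""
    G = np.zeros_like(W)
    for j in range(W.shape[0]):
        for k in range(W.shape[1]):
            E = np.zeros_like(W)
            E[j, k] = eps
            G[j, k] = (f(W + E) - f(W - E)) / (2 * eps)
    return G

rng = np.random.default_rng(0)
W = np.eye(3) + 0.1 * rng.normal(size=(3, 3))  # well-conditioned test matrix
x = rng.normal(size=3)
grad_ok = np.allclose(grad_log_lik(W, x),
                      numerical_grad(lambda M: log_lik(M, x), W), atol=1e-5)
```

The log-prior gradient −λW is linear and needs no separate check.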

Figure 3.

The left plot shows the performance of Sgld and Saga-Ld for the ICA task. The next two plots show the variance of Sgld and Saga-Ld for regression and classification. The rightmost two plots show the true and estimated posteriors using Saga-Ld for the mixture modeling task.

5.4 Mixture Model

Finally, we evaluate how well Saga-Ld estimates the true posterior of parameters of mixture models. We generated 20,000 data points from a mixture of two Gaussians, given by $p(x \mid \mu, \sigma, \gamma) = \tfrac{1}{2}\mathcal{N}(x \mid \mu, \sigma^2) + \tfrac{1}{2}\mathcal{N}(x \mid -\mu + \gamma, \sigma^2)$, where μ = −5, γ = 20, and σ = 5. We estimate the posterior distribution over μ, holding the other variables fixed. The two plots on the right of Figure 3 show that we are able to estimate the true posterior correctly.

Discussion

Our experiments provide a compelling reason to use variance reduction techniques for Sgld, complementing the theoretical justification given in Section 4. The hypothesized variance reduction is demonstrated in Figure 3, where we compare the variances of Sgld and Saga-Ld with respect to the true gradient on regression and classification tasks. As we see from all of the experimental results in this section, Saga-Ld converges with far fewer samples than Sgld. This is especially important in hierarchical Bayesian models where, typically, the size of the model used is proportional to the number of observations. Thus, with Saga-Ld, we can achieve better performance with very few samples. Another advantage is that, while we require the step size to tend to zero, we can use a much simpler schedule than Sgld.

6 Discussion and Future Work

Saga-Ld is a new stochastic Langevin method that obtains improved convergence by reducing the variance in the stochastic gradient. An alternative method, Svrg-Ld, can be used when memory is at a premium. For both Saga-Ld and Svrg-Ld, we proved a tighter convergence bound than the one previously shown for stochastic gradient Langevin dynamics. We also showed, on a variety of machine learning tasks, that Saga-Ld converges to the true posterior faster than Sgld, suggesting the widespread use of Saga-Ld in place of Sgld.

We note that, unlike other stochastic Langevin methods, our sampler is non-Markovian. Since our convergence guarantees are based on bounding the error relative to the full Langevin diffusion rather than on properties of a Markov chain, this does not impact the validity of our sampler.

While we showed the efficacy of applying our proposed variance reduction technique to Sgld, the strategy is generic and can also be applied to other gradient-based MCMC techniques such as [1, 2, 9, 6, 12]. We leave this as future work.


Footnotes

1. In particular, if $h \propto T^{-1/3}$, we obtain the optimal convergence rate for the above upper bound.

2. The datasets can be downloaded from https://archive.ics.uci.edu/ml/index.html

3. The dataset can be downloaded from https://www.cis.hut.fi/projects/ica/eegmeg/MEG_data.html.

30th Conference on Neural Information Processing Systems (NIPS 2016), Barcelona, Spain.

Contributor Information

Avinava Dubey, Department of Machine Learning, Carnegie-Mellon University, Pittsburgh, PA 15213.

Sashank J. Reddi, Department of Machine Learning, Carnegie-Mellon University, Pittsburgh, PA 15213.

Barnabás Póczos, Department of Machine Learning, Carnegie-Mellon University, Pittsburgh, PA 15213.

Alexander J. Smola, Department of Machine Learning, Carnegie-Mellon University, Pittsburgh, PA 15213

Eric P. Xing, Department of Machine Learning, Carnegie-Mellon University, Pittsburgh, PA 15213

Sinead A. Williamson, IROM/Statistics and Data Science, University of Texas at Austin, Austin, TX 78712

References

1. Ahn Sungjin, Korattikara Anoop, Welling Max. Bayesian posterior sampling via stochastic gradient Fisher scoring. ICML. 2012.

2. Ahn Sungjin, Shahbaba Babak, Welling Max. Distributed stochastic gradient MCMC. ICML. 2014.

3. Chen Changyou, Ding Nan, Carin Lawrence. On the convergence of stochastic gradient MCMC algorithms with high-order integrators. NIPS. 2015.

4. Chen Tianqi, Fox Emily B, Guestrin Carlos. Stochastic gradient Hamiltonian Monte Carlo. ICML. 2014.

5. Defazio Aaron, Bach Francis, Lacoste-Julien Simon. SAGA: A fast incremental gradient method with support for non-strongly convex composite objectives. NIPS. 2014.

6. Ding Nan, Fang Youhan, Babbush Ryan, Chen Changyou, Skeel Robert D, Neven Hartmut. Bayesian sampling using stochastic gradient thermostats. NIPS. 2014.

7. Girolami Mark, Calderhead Ben. Riemann manifold Langevin and Hamiltonian Monte Carlo methods. Journal of the Royal Statistical Society: Series B (Statistical Methodology). 2011.

8. Johnson Rie, Zhang Tong. Accelerating stochastic gradient descent using predictive variance reduction. NIPS. 2013.

9. Ma Yi-An, Chen Tianqi, Fox Emily. A complete recipe for stochastic gradient MCMC. NIPS. 2015.

10. Neal Radford. MCMC using Hamiltonian dynamics. In Handbook of Markov Chain Monte Carlo. 2010.

11. Nesterov Yurii. Introductory Lectures on Convex Optimization: A Basic Course. Springer; 2003.

12. Patterson Sam, Teh Yee Whye. Stochastic gradient Riemannian Langevin dynamics on the probability simplex. NIPS. 2013.

13. Robbins Herbert, Monro Sutton. A stochastic approximation method. The Annals of Mathematical Statistics. 1951 Sep;22(3):400–407.

14. Schmidt Mark W, Le Roux Nicolas, Bach Francis R. Minimizing finite sums with the stochastic average gradient. 2013. arXiv:1309.2388.

15. Welling Max, Teh Yee Whye. Bayesian learning via stochastic gradient Langevin dynamics. ICML. 2011.
