Abstract

Evaluation of clinical images is essential for diagnosis in many specialties. Therefore, the development of computer vision algorithms to help analyze biomedical images will be important. In ophthalmology, optical coherence tomography (OCT) is critical for managing retinal conditions. We developed a convolutional neural network (CNN) that detects intraretinal fluid (IRF) on OCT in a manner indistinguishable from clinicians. Using 1,289 OCT images, the CNN segmented images with a 0.911 cross-validated Dice coefficient, compared with segmentations by experts. Additionally, the agreement among experts was similar to the agreement between experts and the CNN. Our results reveal that a CNN can be trained to perform automated segmentations of clinically relevant image features.

OCIS codes: (150.1135) Algorithms, (110.4500) Optical coherence tomography

1. Introduction

Over the past decade there has been increased interest in deep learning, a promising class of machine learning models that uses multiple neural network layers to rapidly extract data in a nonlinear fashion [1,2]. Trained with large data sets, deep learning has been used successfully for pattern recognition, signal processing, and statistical analysis [2]. Additionally, deep convolutional neural networks (CNN) have facilitated breakthroughs in image processing and segmentation [1]. These results have important clinical implications, particularly with regard to the interpretation of results from medical imaging procedures.

A major advance in computer vision was the development of SegNet, a CNN algorithm that learns segmentations from a training set of segmented images and applies pixel-wise segmentations to novel images [3]. Deep learning approaches based on SegNet have been used successfully for automated segmentation in medical imaging problems such as identifying abnormalities in the brain and segmentation of histopathological cells [4,5]. Although growing, the application of deep learning in ophthalmology has been limited to detecting retinal abnormalities from fundus photographs [6,7], glaucoma from perimetry [8], segmentation of foveal microvasculature [9], and grading of cataracts [10].

Macular edema, characterized by loss of the blood retinal barrier in retinal microvasculature, leads to accumulation of intraretinal fluid (IRF) and decreased vision [11]. With the advent of optical coherence tomography (OCT), the measurement of macular edema inferred by central retinal thickness (CRT) has become one of the most important clinical endpoints in retinal diseases [12,13]. However, the extent and severity of IRF are not fully captured by CRT [14]. Thus, the segmentation of IRF on OCT images would enable more precise quantification of macular edema.

Nevertheless, manual segmentations, which require identification by a retinal expert, are extremely time consuming and show variable interpretation, repeatability, and interobserver agreement [15,16]. Prior studies involving automated OCT segmentations were limited by additional manual input requirements and small validation cohorts [17,18]. In addition, semi-automated methods included artifacts in segmentations [19], inaccurately detected microcysts [20], and could not distinguish cystic from non-cystic fluid accumulations [21]. A CNN was recently used for automated segmentation of the anatomic retinal boundaries by Fang et al.; however, it has not yet been applied to automated segmentation of IRF [22]. Additionally, we previously demonstrated that deep learning is effective in classifying OCT images of patients with age-related macular degeneration [23]. Using the same OCT electronic medical record database, we sought to apply a CNN for automated IRF segmentation and to validate our deep learning algorithm.

2. Materials and methods

This study was approved by the Institutional Review Board of the University of Washington (UW) and was in adherence with the tenets of the Declaration of Helsinki and the Health Insurance Portability and Accountability Act.

Macular OCT scans from the period 2006-2016 were extracted using an automated extraction tool from the Heidelberg Spectralis imaging database at the University of Washington Ophthalmology Department. All scans were obtained using a 61-line raster macula scan, and every image of each macular OCT was extracted. Clinical variables were extracted in an automated fashion from the Epic electronic medical records database in tandem with macular OCT volumes from patients who were identified as relevant to the study because they had received therapy for diabetic macular edema, retinal vein occlusions, and/or macular degeneration. The central slice from these OCT volumes was randomly selected for manual segmentation.

A custom browser-based application was implemented (using HTML5) and a webpage was created to passively record the paths drawn by clinicians when segmenting the images. IRF was defined as “an intraretinal hyporeflective space surrounded by reflective septae” as used in other studies [17,24]. Each segmentation result was then reviewed by an independent retina-trained clinician and inaccurate segmentations were rejected with feedback and sent for resegmentation. These images were then divided: 70% were designated as a training set used for optimization of the CNN. The remaining 30% were designated as a validation set. These were used for cross-validation during the optimization procedure. An additional final test set of 30 images was randomly selected, ensuring that none of the patients from the training and validation sets were present in the test set. The images in the test set were segmented by four independent clinicians.

Since retinal OCT images have variable widths, a vertical section 432 pixels tall and 32 pixels wide was chosen a priori, with the plan to slide this window across the images and collect the predicted probabilities from the CNN. The output probabilities for each pixel were then averaged to create a probability distribution map. Probability distribution maps were visualized to ascertain the certainty of the model in labeling pixels as intraretinal fluid. For binary segmentation, a pixel was labeled as having intraretinal fluid if its averaged probability was greater than 0.5.
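The sliding-window averaging described above can be sketched in plain Python as follows. This is only an illustration under stated assumptions, not the published implementation: `predict_window` is a hypothetical stand-in for the trained CNN, the one-pixel `stride` is an assumption (the step size is not stated in the text), and the image is assumed to be already cropped to the window height.

```python
from typing import Callable, List

Image = List[List[float]]  # rows x columns of pixel values or probabilities

WINDOW_WIDTH = 32  # window width given in the text
THRESHOLD = 0.5    # binarization threshold given in the text


def sliding_window_probabilities(
    image: Image,
    predict_window: Callable[[Image], Image],  # hypothetical stand-in for the CNN
    window_width: int = WINDOW_WIDTH,
    stride: int = 1,  # assumption: the text does not state the step size
) -> Image:
    """Average the per-pixel probabilities of every window covering a pixel."""
    height, width = len(image), len(image[0])
    if width < window_width:
        raise ValueError("image is narrower than the window")
    sums = [[0.0] * width for _ in range(height)]
    counts = [0] * width  # number of windows that covered each column
    starts = list(range(0, width - window_width + 1, stride))
    # Cover the right edge when the stride does not divide the width evenly.
    if starts[-1] != width - window_width:
        starts.append(width - window_width)
    for x0 in starts:
        window = [row[x0:x0 + window_width] for row in image]
        probs = predict_window(window)
        for r in range(height):
            for c in range(window_width):
                sums[r][x0 + c] += probs[r][c]
        for c in range(window_width):
            counts[x0 + c] += 1
    return [[sums[r][c] / counts[c] for c in range(width)] for r in range(height)]


def binarize(prob_map: Image, threshold: float = THRESHOLD) -> List[List[int]]:
    """Label a pixel as IRF when its averaged probability exceeds the threshold."""
    return [[1 if p > threshold else 0 for p in row] for row in prob_map]
```

With a real model, `predict_window` would return one probability per pixel of the 32-pixel-wide window; pixels near the image edges are covered by fewer windows, which the per-column count accounts for.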

In the training set, data augmentation was performed by varying the position of the window on the OCT image. Care was taken in the validation set to ensure that no overlap was present in any of the validation images. In addition, the training and validation sets were balanced such that 50% of the images contained at least one pixel marked as intraretinal fluid while the other half were from images without any intraretinal fluid. Before being input to the model, each image was normalized by subtracting the mean overall intensity of the training set and then dividing by the standard deviation.
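As an illustration of the normalization step, the following sketch computes the intensity statistics once from the training windows and applies them to any window. The function names are hypothetical, and the use of the population standard deviation of the training set is an assumption; the text does not specify which estimator was used.

```python
from statistics import fmean, pstdev
from typing import List, Tuple

Window = List[List[float]]


def fit_intensity_stats(training_windows: List[Window]) -> Tuple[float, float]:
    """Return the mean and standard deviation of all training-set pixels."""
    pixels = [p for window in training_windows for row in window for p in row]
    # Assumption: population standard deviation over the whole training set.
    return fmean(pixels), pstdev(pixels)


def normalize(window: Window, mean: float, std: float) -> Window:
    """Subtract the training mean and divide by the training standard deviation."""
    if std == 0:
        raise ValueError("standard deviation is zero; cannot normalize")
    return [[(p - mean) / std for p in row] for row in window]
```

The same `mean` and `std` from the training set would be reused for validation and test windows so that all inputs share one scale.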

A smoothed Dice coefficient, with the smoothness parameter set to 1.0, was used as the loss function and as the assessment metric for the final held-out test set.
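One common formulation of a smoothed Dice coefficient adds the smoothness term to both the numerator and the denominator. The sketch below uses that form as an assumption; the exact expression used for training is not spelled out in the text, and `smoothed_dice` is an illustrative name.

```python
from typing import List, Sequence


def smoothed_dice(
    y_true: Sequence[Sequence[float]],
    y_pred: Sequence[Sequence[float]],
    smooth: float = 1.0,  # smoothness parameter; 1.0 as stated in the text
) -> float:
    """Smoothed Dice: (2 * |A and B| + s) / (|A| + |B| + s) over all pixels."""
    t: List[float] = [v for row in y_true for v in row]
    p: List[float] = [v for row in y_pred for v in row]
    if len(t) != len(p):
        raise ValueError("masks must contain the same number of pixels")
    intersection = sum(a * b for a, b in zip(t, p))
    return (2.0 * intersection + smooth) / (sum(t) + sum(p) + smooth)
```

The smoothing term keeps the value defined when both masks are empty (the coefficient is then 1.0), which matters here because half of the training windows contain no intraretinal fluid.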

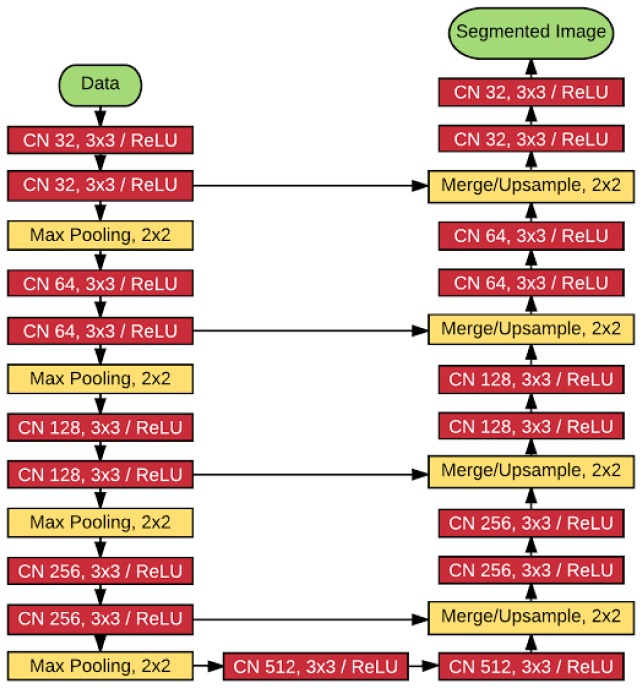

The CNN used was a modified version of the U-net autoencoder architecture [25], with a total of 18 convolutional layers (Fig. 1). The final activation function was a sigmoid function that generates a prediction of a binary segmentation map.

Fig. 1.

Schematic of the deep learning module using a total of 18 convolutional layers. CN, convolutional neural network layer. ReLU, rectified linear unit. The first number after CN refers to the number of filters and the second set of numbers refers to the filter sizes. The Max Pooling and Upsampling windows are also shown. Stride was set to 1 on all layers, and merge was performed using concatenation.

Training was performed on a single server with dual Intel Xeon 3.40 GHz processors, 256 GB of RAM, SSD-based RAID 6, and 8 NVIDIA P100 GPUs. Batch size was set to 1,192 and parallelized across the 8 GPUs such that each GPU processed a batch of 149. All weights were initialized using a random uniform distribution. The Adam optimizer for stochastic gradient descent was used with an initial learning rate of 1e-7. All training was performed using Keras (https://keras.io) and TensorFlow (https://www.tensorflow.org/) on NVIDIA Tesla P100 graphics processing units (GPUs) with the NVIDIA CUDA (v8.0) and cuDNN (v5.5.1) libraries (http://www.nvidia.com). Statistics were performed using R (http://www.r-project.org). The model, along with the trained final weights and the code to run the model, has been published as an open source project (https://github.com/uw-biomedical-ml/irf-segmenter).

In assessing the final held-out test set, the CNN performed inference using a sliding window generating an array of probabilities for each pixel. Then this output was compared using the above Dice coefficient to baseline segmentations performed by clinicians.

In addition, the CNN was compared against four clinicians. For this comparison, an individual clinician was chosen as ground truth, and the Dice coefficients of the other three clinicians as well as the CNN were computed against this ground truth. This was repeated for all four clinicians so that Dice coefficients with each clinician as baseline were computed. The coupled Dice coefficients showed no statistical difference between the clinicians and the CNN.
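The comparison procedure above can be sketched as a loop over reference clinicians. This is a minimal illustration, not the authors' analysis code: the function and rater names are hypothetical, and the Dice form (with smoothness 1.0) is one common formulation assumed here.

```python
from statistics import fmean
from typing import Dict, List

Mask = List[List[int]]


def dice(a: Mask, b: Mask, smooth: float = 1.0) -> float:
    """Smoothed Dice between two binary masks (assumed formulation)."""
    fa = [v for row in a for v in row]
    fb = [v for row in b for v in row]
    overlap = sum(x * y for x, y in zip(fa, fb))
    return (2.0 * overlap + smooth) / (sum(fa) + sum(fb) + smooth)


def pairwise_mean_dice(
    segmentations: Dict[str, List[Mask]],  # rater name -> one mask per test image
    clinicians: List[str],                 # raters that may serve as ground truth
) -> Dict[str, Dict[str, float]]:
    """For each clinician taken as ground truth, score every other rater."""
    table: Dict[str, Dict[str, float]] = {}
    for reference in clinicians:
        table[reference] = {}
        for rater, masks in segmentations.items():
            if rater == reference:
                continue  # a rater is not compared with itself
            scores = [dice(t, p) for t, p in zip(segmentations[reference], masks)]
            table[reference][rater] = fmean(scores)
    return table
```

Passing the CNN output as an extra entry in `segmentations` but not in `clinicians` means it is scored against every clinician without ever serving as ground truth, matching the design described in the text.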

3. Results

Manual segmentations of 1,289 OCT macular images were used for training and cross-validation. A total of 1,919,680 sections of 934 manually segmented OCT images were designated as a training set: these were presented to the CNN, and the segmentation errors from these images were used to adjust the weights in different layers of the network. Another 1,088 sections of 355 images were used for cross-validation: they were presented to the CNN during training and segmentation errors were recorded, but were not used to adjust the weights in the network layers.

The model was trained with 200,000 iterations and periodically assessed against the validation set (cross-validation). Training time was 7.25 days using parallelized training across 8 x NVIDIA P100 GPUs. Inference time on the test set was 13.2 seconds per OCT B scan image with a sliding window using a single NVIDIA P100 GPU.

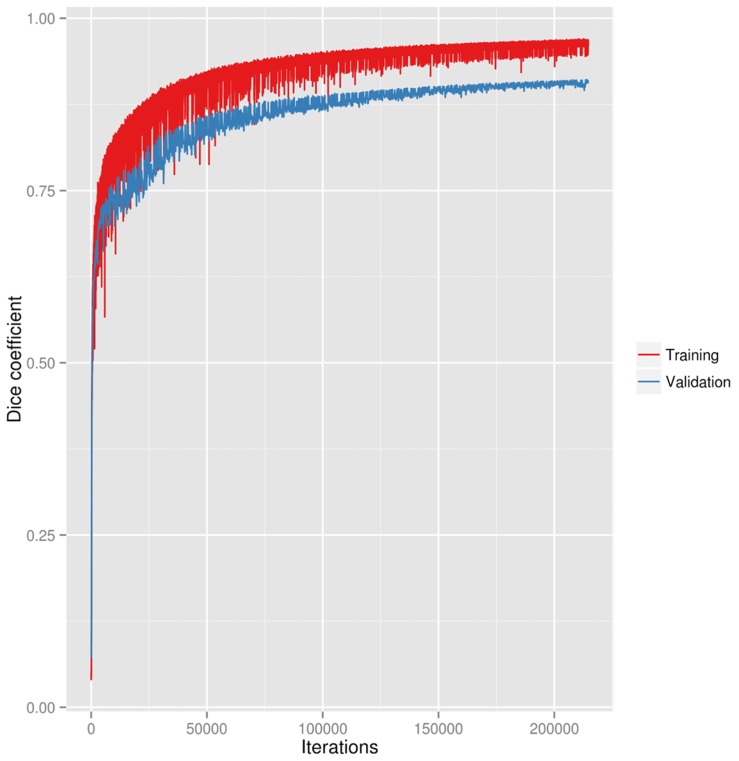

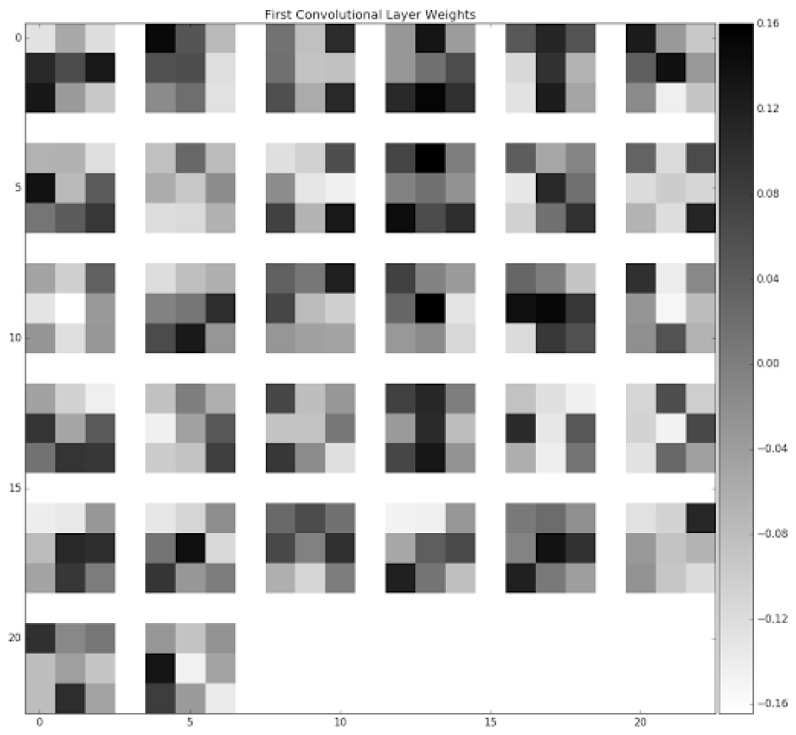

Final trained parameters of the first convolutional layer are shown in Fig. 2. The learning curve showing the Dice coefficients of the training iterations and the validation set is shown in Fig. 3. The model achieved a maximal cross-validation Dice coefficient of 0.911.

Fig. 2.

Visualization of trained weights of the first layer of convolutional filters.

Fig. 3.

Learning curve of the neural network training and validation.

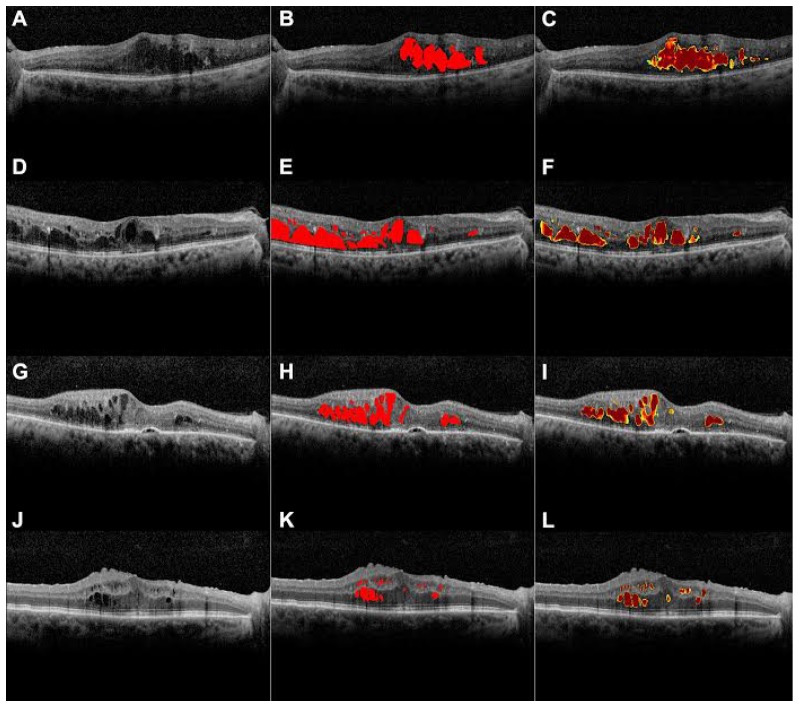

The weights that produced the highest cross-validated coefficient were then used for comparison against manual segmentations in a held out test set. Example deep learning segmentations of intraretinal fluid compared with manual segmentations from the held out test set are shown in Fig. 4.

Fig. 4.

Example segmentations of intraretinal fluid (IRF) from the held-out test set by deep learning. A, an example optical coherence tomography (OCT) image with IRF. B, manual segmentation of IRF. C, automated segmentation by deep learning. D, an example OCT image with IRF. E, manual segmentation of IRF. F, automated segmentation by deep learning. G, an example OCT image with IRF and pigment epithelial detachment (PED). H, manual segmentation of IRF avoiding PED. I, deep learning correctly segments IRF cysts but not PED. J, an example OCT image with IRF and shadowing under vasculature. K, manual segmentation of IRF. L, deep learning correctly segments IRF cysts but avoids the shadowing under the retinal vasculature.

To assess the reliability of the deep learning algorithm, we compared the Dice coefficients for deep learning automated segmentations against manual segmentations by four independent clinicians. The pairwise comparisons of Dice coefficients are shown in Table 1.

Table 1. Mean Dice coefficients with standard deviations.

| Baseline | Clinician 2 | Clinician 3 | Clinician 4 | Deep Learning |
|---|---|---|---|---|
| Clinician 1 | 0.744 (0.185) | 0.771 (0.155) | 0.809 (0.164) | 0.754 (0.135) |
| Clinician 2 | | 0.710 (0.190) | 0.747 (0.189) | 0.703 (0.182) |
| Clinician 3 | | | 0.722 (0.209) | 0.725 (0.117) |
| Clinician 4 | | | | 0.730 (0.176) |

The clinician in the first column is the baseline from whom Dice coefficients are computed for each row.

The mean Dice coefficients for human interrater reliability and deep learning were 0.750 and 0.729, respectively, both reaching a value generally accepted as excellent agreement (Fig. 5) [26]. No statistically significant difference was found between clinicians and deep learning (p = 0.247).

Fig. 5.

Pairwise comparison of Dice coefficients from the held-out test set. The mean Dice coefficients for human interrater reliability and deep learning were 0.750 and 0.729, respectively.
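As a quick arithmetic check, the two summary values can be approximately recovered from the rounded entries of Table 1. The sketch below assumes the summaries are unweighted averages of the table cells, which the text does not state explicitly. Under that assumption the clinician-to-clinician mean comes to about 0.7505 and the deep learning mean to about 0.728; the small gap from the reported 0.729 is plausibly due to rounding of the individual table entries.

```python
from statistics import fmean

# Mean Dice values copied from Table 1 (standard deviations omitted).
clinician_pairs = [0.744, 0.771, 0.809, 0.710, 0.747, 0.722]
deep_learning = [0.754, 0.703, 0.725, 0.730]

# Assumption: the summary values are unweighted means of the table cells.
human_mean = fmean(clinician_pairs)
dl_mean = fmean(deep_learning)

print(f"human interrater mean: {human_mean:.4f}")
print(f"deep learning mean:    {dl_mean:.4f}")
```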

4. Discussion

Automated segmentation using deep learning has become increasingly popular in medical imaging; however, comparatively little has been applied in ophthalmology, and the validation of proposed models has been limited to small sample sizes. Zheng et al. evaluated semi-automated segmentation of intra- and subretinal fluid (SRF) in OCT images of 37 patients [17]. Initial automated segmentation was performed, followed by coarse and fine segmentation of fluid regions. Then an expert manually selected each region of potential IRF and SRF. This semi-automated segmentation had a Dice coefficient of 0.721 to 0.785 compared with manual segmentation, which is similar to our study. An advantage of our algorithm is that it is a fully automated system requiring no human input. Esmaeili et al. used a recursive Gaussian filter and a 3D fast curvelet transform to determine curvelet coefficients, which were denoised with a 3D sparse dictionary. The average Dice coefficients for the segmentation of intraretinal cysts in the whole 3D volume and within the central 3 mm diameter were 0.65 and 0.77, respectively [27]. However, the validation of their study included only four 3D-OCT images, a much smaller sample than in our study. Chiu et al. reported a Dice coefficient of 0.53 using automated detection of IRF in OCT images of 6 patients with diabetic macular edema. Both the Dice coefficient and the intermanual segmenter correlation (0.58) were much lower than in our study [21]. Wang et al. recently applied a fuzzy level set method for automated segmentation of IRF with results similar to human graders [28]. However, their study consisted of only 10 patients and was limited by segmentation artifacts requiring integration with OCT angiography for verification. A benefit of our method is that it does not require OCT angiography, which is not readily available in most practices.

Our study shows that a CNN can be trained to identify clinical features that are diagnostic, similar to how clinicians interpret an OCT image. The deep learning algorithm successfully identified areas of IRF by generating pixelwise probabilities that were then averaged to create a segmentation map. Our model was trained with more than 200,000 iterations without overfitting. Additional advantages of this study include the use of a publicly available model and training strategy for precise localization and segmentation of images. Furthermore, the deep learning model is fast and, unlike semi-automated segmentation programs, requires no additional human input. To our knowledge, the use of a validated CNN for accurate segmentation of IRF has not previously been shown.

Our training data sets originated from images from a single academic center; thus, our results may not be generalizable. However, our training data set was randomly selected from all consecutive retinal OCT images obtained at our institution during a 10-year period; therefore, a wide variety of pathologies has likely been included, increasing its overall applicability to other data sets. In summary, deep learning shows promising results in accurate segmentation and automated quantification of intraretinal fluid volume on OCT imaging, and our open-source algorithm may be useful for future clinical and research applications.

Disclosures

The authors declare that there are no conflicts of interest related to this article.

The contents do not represent the views of the U.S. Department of Veterans Affairs or the United States Government.

Acknowledgments

This work was supported by Research to Prevent Blindness. The hardware used in the completion of this project was donated by NVIDIA.

Funding

National Eye Institute (NEI) (K23EY02492); Latham Vision Science Innovation Grant; Research to Prevent Blindness; The Gordon & Betty Moore Foundation; Alfred P. Sloan Foundation.

References and links

- 1. Mitchell J. B., “Machine learning methods in chemoinformatics,” Wiley Interdiscip. Rev. Comput. Mol. Sci. 4(5), 468–481 (2014). 10.1002/wcms.1183

- 2. LeCun Y., Bengio Y., Hinton G., “Deep learning,” Nature 521(7553), 436–444 (2015). 10.1038/nature14539

- 3. Kendall A., Badrinarayanan V., Cipolla R., “Bayesian SegNet: Model uncertainty in deep convolutional encoder-decoder architectures for scene understanding,” arXiv [cs.CV] (2015).

- 4. Korfiatis P., Kline T. L., Erickson B. J., “Automated segmentation of hyperintense regions in FLAIR MRI using deep learning,” Tomography 2(4), 334–340 (2016). 10.18383/j.tom.2016.00166

- 5. Hatipoglu N., Bilgin G., “Cell segmentation in histopathological images with deep learning algorithms by utilizing spatial relationships,” Med. Biol. Eng. Comput. (2017).

- 6. Abràmoff M. D., Lou Y., Erginay A., Clarida W., Amelon R., Folk J. C., Niemeijer M., “Improved automated detection of diabetic retinopathy on a publicly available data set through integration of deep learning,” Invest. Ophthalmol. Vis. Sci. 57(13), 5200–5206 (2016). 10.1167/iovs.16-19964

- 7. Burlina P., Pacheco K. D., Joshi N., Freund D. E., Bressler N. M., “Comparing humans and deep learning performance for grading AMD: A study in using universal deep features and transfer learning for automated AMD analysis,” Comput. Biol. Med. 82, 80–86 (2017). 10.1016/j.compbiomed.2017.01.018

- 8. Asaoka R., Murata H., Iwase A., Araie M., “Detecting preperimetric glaucoma with standard automated perimetry using a deep learning classifier,” Ophthalmology 123(9), 1974–1980 (2016). 10.1016/j.ophtha.2016.05.029

- 9. Prentašic P., Heisler M., Mammo Z., Lee S., Merkur A., Navajas E., Beg M. F., Šarunic M., Loncaric S., “Segmentation of the foveal microvasculature using deep learning networks,” J. Biomed. Opt. 21(7), 075008 (2016). 10.1117/1.JBO.21.7.075008

- 10. Gao X., Lin S., Wong T. Y., “Automatic feature learning to grade nuclear cataracts based on deep learning,” IEEE Trans. Biomed. Eng. 62(11), 2693–2701 (2015). 10.1109/TBME.2015.2444389

- 11. Coscas G., Cunha-Vaz J., Soubrane G., “Macular edema: definition and basic concepts,” Dev. Ophthalmol. 47, 1–9 (2010). 10.1159/000320070

- 12. Keane P. A., Sadda S. R., “Predicting visual outcomes for macular disease using optical coherence tomography,” Saudi J. Ophthalmol. 25(2), 145–158 (2011). 10.1016/j.sjopt.2011.01.003

- 13. Kumar A., Sahni J. N., Stangos A. N., Campa C., Harding S. P., “Effectiveness of ranibizumab for neovascular age-related macular degeneration using clinician-determined retreatment strategy,” Br. J. Ophthalmol. 95(4), 530–533 (2011). 10.1136/bjo.2009.171868

- 14. Browning D. J., Glassman A. R., Aiello L. P., Beck R. W., Brown D. M., Fong D. S., Bressler N. M., Danis R. P., Kinyoun J. L., Nguyen Q. D., Bhavsar A. R., Gottlieb J., Pieramici D. J., Rauser M. E., Apte R. S., Lim J. I., Miskala P. H., Diabetic Retinopathy Clinical Research Network, “Relationship between optical coherence tomography-measured central retinal thickness and visual acuity in diabetic macular edema,” Ophthalmology 114(3), 525–536 (2007). 10.1016/j.ophtha.2006.06.052

- 15. Srinivas S., Nittala M. G., Hariri A., Pfau M., Gasperini J., Ip M., Sadda S. R., “Quantification of intraretinal hard exudates in eyes with diabetic retinopathy by optical coherence tomography,” Retina 1 (2017).

- 16. Golbaz I., Ahlers C., Stock G., Schütze C., Schriefl S., Schlanitz F., Simader C., Prünte C., Schmidt-Erfurth U. M., “Quantification of the therapeutic response of intraretinal, subretinal, and subpigment epithelial compartments in exudative AMD during anti-VEGF therapy,” Invest. Ophthalmol. Vis. Sci. 52(3), 1599–1605 (2011). 10.1167/iovs.09-5018

- 17. Zheng Y., Sahni J., Campa C., Stangos A. N., Raj A., Harding S. P., “Computerized assessment of intraretinal and subretinal fluid regions in spectral-domain optical coherence tomography images of the retina,” Am. J. Ophthalmol. 155(2), 277–286 (2013). 10.1016/j.ajo.2012.07.030

- 18. Lang A., Carass A., Swingle E. K., Al-Louzi O., Bhargava P., Saidha S., Ying H. S., Calabresi P. A., Prince J. L., “Automatic segmentation of microcystic macular edema in OCT,” Biomed. Opt. Express 6(1), 155–169 (2015). 10.1364/BOE.6.000155

- 19. Hu Z., Medioni G. G., Hernandez M., Hariri A., Wu X., Sadda S. R., “Segmentation of the geographic atrophy in spectral-domain optical coherence tomography and fundus autofluorescence images,” Invest. Ophthalmol. Vis. Sci. 54(13), 8375–8383 (2013). 10.1167/iovs.13-12552

- 20. Swingle E. K., Lang A., Carass A., Al-Louzi O., Saidha S., Prince J. L., “Segmentation of microcystic macular edema in Cirrus OCT scans with an exploratory longitudinal study,” Proc. SPIE Int. Soc. Opt. Eng. 9417 (2015).

- 21. Chiu S. J., Allingham M. J., Mettu P. S., Cousins S. W., Izatt J. A., Farsiu S., “Kernel regression based segmentation of optical coherence tomography images with diabetic macular edema,” Biomed. Opt. Express 6(4), 1172–1194 (2015). 10.1364/BOE.6.001172

- 22. Fang L., Cunefare D., Wang C., Guymer R. H., Li S., Farsiu S., “Automatic segmentation of nine retinal layer boundaries in OCT images of non-exudative AMD patients using deep learning and graph search,” Biomed. Opt. Express 8(5), 2732–2744 (2017). 10.1364/BOE.8.002732

- 23. Lee C. S., Baughman D. M., Lee A. Y., “Deep learning is effective for classifying normal versus age-related macular degeneration optical coherence tomography images,” Ophthalmology Retina, in press.

- 24. Sahni J., Stanga P., Wong D., Harding S., “Optical coherence tomography in photodynamic therapy for subfoveal choroidal neovascularisation secondary to age related macular degeneration: a cross sectional study,” Br. J. Ophthalmol. 89(3), 316–320 (2005). 10.1136/bjo.2004.043364

- 25. Ronneberger O., Fischer P., Brox T., “U-Net: Convolutional networks for biomedical image segmentation,” in Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015 (Springer, Cham, 2015), pp. 234–241.

- 26. Zijdenbos A. P., Dawant B. M., Margolin R. A., Palmer A. C., “Morphometric analysis of white matter lesions in MR images: method and validation,” IEEE Trans. Med. Imaging 13(4), 716–724 (1994). 10.1109/42.363096

- 27. Esmaeili M., Dehnavi A. M., Rabbani H., Hajizadeh F., “Three-dimensional segmentation of retinal cysts from spectral-domain optical coherence tomography images by the use of three-dimensional curvelet based K-SVD,” J. Med. Signals Sens. 6(3), 166–171 (2016).

- 28. Wang J., Zhang M., Pechauer A. D., Liu L., Hwang T. S., Wilson D. J., Li D., Jia Y., “Automated volumetric segmentation of retinal fluid on optical coherence tomography,” Biomed. Opt. Express 7(4), 1577–1589 (2016). 10.1364/BOE.7.001577