Abstract

This paper explores the synchronization problem for a class of generalized reaction-diffusion neural networks with mixed time-varying delays. The mixed time-varying delays under consideration comprise both discrete and distributed delays. Owing to the development and merits of digital controllers, sampled-data control is a natural choice for establishing synchronization in continuous-time systems. Using a newly introduced integral inequality, less conservative criteria that ensure the global asymptotic synchronization of the considered generalized reaction-diffusion neural networks with mixed delays are established in terms of linear matrix inequalities (LMIs). The resulting easy-to-test LMI-based synchronization criteria depend on the delay bounds as well as on the reaction-diffusion terms, which makes them more practical. By solving these LMIs with the Matlab LMI control toolbox, the desired sampled-data controller gain can be readily obtained. Finally, numerical examples are given to demonstrate the validity of the derived LMI-based synchronization criteria.

Keywords: Generalized neural networks, Reaction-diffusion, Integral inequality, Sampled-data control, Linear matrix inequality

Introduction

Over the last few decades, neural networks have attracted substantial interest because of their applications in many practical areas, including automatic control, image and signal processing, fault diagnosis, combinatorial optimization and associative memory (Young et al. 1997; Atencia et al. 2005; Hopfield 1984; Diressche and Zou 1998). All such applications depend heavily on the qualitative properties of neural networks. Synchronization, one of the collective dynamical behaviors and one of the most fascinating phenomena of neural networks, has been widely explored in the literature; see, for example, He et al. (2017), Shen et al. (2017), Bao et al. (2015), Tong et al. (2015) and Prakash et al. (2016). Depending on the choice of neuron states (internal or external), neural networks can be classified as local field neural networks (LFNNs) or static neural networks (SNNs). These two classes can be transformed into one another only under certain assumptions, which are not always practicable, so their dynamical behaviors would otherwise have to be analyzed independently. To avoid this complication, a unified model that includes both LFNNs and SNNs was constructed, and its stability investigated, in Zhang and Han (2011). This generalized neural network model has been applied in areas where the different types of neural networks are used, which has prompted researchers to analyze the dynamics of generalized neural networks; see, for example, Zheng et al. (2015), Manivannan et al. (2016), Liu et al. (2015a), Rakkiyappan et al. (2016a, b) and Li and Cao (2016). This line of research is still far from complete. The stability of switched Hopfield neural networks of neutral type was analyzed in Manivannan et al. (2016), where some delay-dependent stability criteria were established by constructing a new Lyapunov–Krasovskii functional. The problem of stability and pinning synchronization of delayed inertial memristive neural networks was investigated by employing the matrix measure and the Halanay inequality (Rakkiyappan et al. 2016a). Some LMI-based criteria were presented to guarantee the stability of reaction-diffusion delayed memristor-based neural networks (Li and Cao 2016). Based on Lyapunov theory and analytical techniques, the fixed-time synchronization of delayed memristive recurrent neural networks was studied in Cao and Li (2017).

Furthermore, owing to finite switching speeds and traffic congestion in signal transmission, time delays arise in various dynamical systems and may be detrimental to successful applications of neural networks. It is evident from the literature that most research on delayed neural networks has been confined to the simple case of discrete delays. In reality, however, neural networks frequently exhibit a special nature due to a multitude of parallel pathways with a variety of axon sizes and lengths, so there is a distribution of conduction velocities along these pathways and hence a distribution of propagation delays. Therefore, to construct a realistic neural network model, it is essential to include both discrete and distributed delays. The qualitative analysis of various neural networks with discrete and distributed delays has accordingly been reported in the literature; see, for instance, Wang et al. (2006), Zheng and Cao (2014), Rakkiyappan and Dharani (2017), Lee et al. (2015), Zhang et al. (2009) and Yang et al. (2014). The robust stability of generalized neural networks with discrete and distributed delays was analyzed in Wang et al. (2006), and robust synchronization for a class of coupled delayed neural networks was examined in Zheng and Cao (2014). In addition, integral inequalities are used extensively in the stability and synchronization analysis of delayed systems, since they provide bounds for integrals of quadratic functions. Jensen’s inequality is the most widely used and has served as a dominant tool in the stability analysis of time-delay systems. Recently, an auxiliary function-based integral inequality was introduced in Park et al. (2015), and it was proved that this class of integral inequalities produces tighter bounds than Jensen’s inequality. Furthermore, it is proved in Chen et al. (2016a) that there exists a general inequality based on orthogonal polynomials that contains existing inequalities such as the Bessel-Legendre inequality, Jensen’s inequality, the Wirtinger-based inequality and the auxiliary function-based inequality as special cases. Motivated by this fact, this paper employs the general integral inequality based on orthogonal polynomials to derive new, less conservative criteria for the synchronization of generalized reaction-diffusion neural networks with mixed time-varying delays.
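To make the comparison between integral inequalities concrete, the following minimal Python sketch evaluates, for a scalar function x, two lower bounds on the integral of the squared derivative over [a, b]: the one given by Jensen’s inequality and the one given by the Wirtinger-based inequality in its commonly stated scalar form. The test function x(s) = exp(s), the interval [0, 1], the midpoint quadrature and the helper names are illustrative assumptions and are not taken from the paper.

```python
import math

def midpoint_integral(f, a, b, n=20000):
    """Approximate the integral of f over [a, b] with the composite midpoint rule."""
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))

def derivative_energy_bounds(x, dx, a, b):
    """Return (exact, jensen, wirtinger) for the scalar integral of dx(s)**2 over [a, b].

    jensen    = (x(b) - x(a))**2 / (b - a)
    wirtinger = jensen + 3 * omega**2 / (b - a), where
    omega     = x(b) + x(a) - 2 / (b - a) * (integral of x over [a, b])
    """
    length = b - a
    exact = midpoint_integral(lambda s: dx(s) ** 2, a, b)
    jensen = (x(b) - x(a)) ** 2 / length
    omega = x(b) + x(a) - 2.0 / length * midpoint_integral(x, a, b)
    wirtinger = jensen + 3.0 * omega ** 2 / length
    return exact, jensen, wirtinger

# Illustrative choice (an assumption, not from the paper): x(s) = exp(s) on [0, 1],
# whose derivative is again exp(s).
exact, jensen, wirtinger = derivative_energy_bounds(math.exp, math.exp, 0.0, 1.0)
print(f"exact={exact:.6f}  Jensen={jensen:.6f}  Wirtinger={wirtinger:.6f}")
```

In this example the Wirtinger-based bound lies between the Jensen bound and the exact value, which is the sense in which a tighter inequality reduces conservatism.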

Diffusion phenomena, one of the main factors that degrade system performance, are often encountered in neural networks and electric circuits when electrons move in a nonuniform electromagnetic field. This implies that the structure and dynamics of a neural network depend not only on the time evolution of each variable but also strongly on its spatial position; the reaction-diffusion system arises in response to this phenomenon. Recently, neural networks with diffusion terms and Markovian jumping have been extensively investigated in the literature; see Wang et al. (2012a), Gan (2012), Yang et al. (2013), Zhou et al. (2007), Li et al. (2015) and references therein. The exponential synchronization of reaction-diffusion Cohen-Grossberg neural networks and Hopfield neural networks with time-varying delays was investigated in Gan (2012) and Wang et al. (2012a), respectively. To our knowledge, phenomena such as time delays and diffusion effects may lead to undesirable behaviors such as oscillation and instability. Consequently, in order to exploit the advantages of such networks and restrain their disadvantages, some control techniques should be adopted to realize synchronization in generalized reaction-diffusion neural networks.

As far as we know, most contributions to nonlinear control theory are based on continuous-time control, such as adaptive control, non-fragile control and feedback control. The finite-time passivity problem was addressed by constructing a non-fragile state feedback controller (Rajavel et al. 2017). The problem of exponential filtering for discrete-time switched neural networks was investigated via the average dwell time approach and the piecewise Lyapunov function technique (Cao et al. 2016). The key requirement in implementing such continuous-time controllers is that the input signal be continuous, which cannot always be ensured in real-time situations. Moreover, owing to rapid progress in intelligent instruments and digital measurement, continuous-time controllers are nowadays generally replaced by discrete-time controllers to achieve better stability, precision and performance. Further, in the digital control method known as sampled-data control, the response network receives signals from the drive system only at discrete instants, so the amount of information transferred decreases. This is very advantageous in controller implementation because it reduces the control cost. For this reason, sampled-data control theory has attracted considerable attention from researchers and fruitful work has been reported; see, for example, Liu et al. (2015b), Liu and Zhou (2015), Lee and Park (2017), Rakkiyappan et al. (2015a, b), Su and Shen (2015) and Lee et al. (2014). In all of the above-mentioned works, only Jensen’s inequality was used to handle the integral terms that occur when deriving the synchronization criteria. In contrast, this paper aims to derive synchronization criteria for generalized neural networks with mixed delays using a general integral inequality that contains Jensen’s inequality and many other existing inequalities as special cases, which has not yet been done in the literature.

In view of the above discussion, neither mixed delays nor reaction-diffusion effects can be neglected when modeling neural networks, since both can influence the dynamics of such networks. However, it is not easy to handle all of them together within the unified framework of a generalized neural network model. Hence, this problem is of great interest and there is still room for improvement. The main goal of this paper is to establish global asymptotic synchronization criteria for generalized reaction-diffusion neural networks with mixed delays, based on a newly introduced general integral inequality that is derived from orthogonal polynomials and includes many existing inequalities, such as the Wirtinger-based inequality, Jensen’s inequality and the Bessel-Legendre inequality, as special cases. By constructing a suitable Lyapunov–Krasovskii functional with triple and quadruple integral terms, new solvability criteria are derived in terms of LMIs that depend on the size of the delays, the sampling period and the diffusion terms. Finally, two numerical examples with simulation results are presented to show the effectiveness of the derived theoretical results.

The rest of this paper is organized as follows. In the “Synchronization analysis problem” section, the problem under investigation is described, and the “Main results” section is devoted to establishing some new synchronization criteria. The “Numerical examples” section gives two numerical examples to demonstrate the effectiveness of our theoretical results. Finally, the conclusion is presented in the “Conclusions” section.

Notations and preliminaries

Throughout this paper, $\mathbb{R}^n$ represents the n-dimensional Euclidean space. For a matrix X, $X > 0$ ($X < 0$) means that X is a positive (negative) definite symmetric matrix, and $X^T$ and $X^{-1}$ denote the transpose and the inverse of a square matrix X, respectively. The symbol $*$ in a symmetric matrix indicates the elements that are induced by symmetry. The shorthand $\mathrm{diag}\{\cdots\}$ denotes a diagonal or block diagonal matrix. $\mathbb{S}_n$ and $\mathbb{S}_n^{+}$ denote the sets of symmetric and symmetric positive definite matrices of $\mathbb{R}^{n \times n}$, respectively.

Before proceeding, we present some necessary lemmas which will be employed to derive the main results.

Lemma 1

(Lu 2008) Let be a cube and let w(x) be a real-valued function belonging to which satisfies Then

Lemma 2

(Chen et al. 2016a) Let (s) be a multiple integrable function and The following integral inequalities hold:

| 1 |

| 2 |

| 3 |

where

Lemma 3

(Gu et al. 2003) For any matrix and a scalar vector function such that the following integrations are well defined, then

Lemma 4

(Park et al. 2011) For any vector matrices and real scalars satisfying the following inequality holds:

subject to
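Lemma 4 is the reciprocally convex combination lemma of Park et al. (2011). As a rough illustration only, the sketch below checks its scalar special case numerically: for r > 0, |s| ≤ r and α in (0, 1), the quantity (1/α) r x² + (1/(1 − α)) r y² should be no smaller than r x² + 2 s x y + r y². The reduction to scalars, the random sampling ranges and all names are assumptions made for this example.

```python
import random

def reciprocally_convex_gap(alpha, r, s, x, y):
    """Left side minus right side of the scalar reciprocally convex bound."""
    left = r * x * x / alpha + r * y * y / (1.0 - alpha)
    right = r * x * x + 2.0 * s * x * y + r * y * y
    return left - right

random.seed(0)
min_gap = float("inf")
for _ in range(10000):
    alpha = random.uniform(0.01, 0.99)
    r = random.uniform(0.1, 5.0)
    s = random.uniform(-r, r)      # |s| <= r makes [[r, s], [s, r]] positive semidefinite
    x = random.uniform(-3.0, 3.0)
    y = random.uniform(-3.0, 3.0)
    min_gap = min(min_gap, reciprocally_convex_gap(alpha, r, s, x, y))

# A non-negative value (up to rounding) is consistent with the lemma.
print("smallest observed gap:", min_gap)
```

The point of the lemma is that the right-hand side no longer contains the factors 1/α and 1/(1 − α), so a bound involving a time-varying delay ratio can be replaced by a condition that is independent of that ratio.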

Synchronization analysis problem

Consider the generalized reaction-diffusion neural network model with mixed time-varying delay components as

| 4 |

where and with where is a positive constant. is the transmission diffusion operator along the lth neuron, is the state of the lth neuron, denotes the decay rate of the lth neuron, and are the connection strength, the time-varying delay connection weight, and the distributed time-varying delay connection strength of the jth neuron on the lth neuron, respectively. is the value of the synaptic connectivity from neuron j to l. represents the neuron activation function. and are the time-varying delay and distributed time-varying delay, where and d are positive constants. Also, The initial and Dirichlet boundary conditions of system (4) are given by

| 5 |

and

| 6 |

respectively, where is the Banach space of continuous functions from to with .

Remark 1

It can be clearly seen that the model (4) is a generalized neural network model which incorporates some familiar neural networks as its particular cases. (i) Letting the model in (4) reduces to the reaction-diffusion static neural networks model and (ii) Letting , it reduces to a classic reaction-diffusion local field neural networks model.

For simplicity, we represent system (4) in a compact form as

| 7 |

where ,

Assumption 1

For any the neuron activation function is continuous and bounded and satisfies

| 8 |

where and are some real constants and may be positive, zero or negative.

Remark 2

As it has been mentioned in Liu et al. (2013), the constants can be positive, negative or zero. Therefore, the resulting activation functions could be nonmonotonic and more general than the usual sigmoid functions. In addition, when using the Lyapunov stability theory to analyze the stability of dynamic systems, such a description is particularly suitable since it quantifies the lower and upper bounds of the activation functions that offer the possibility of reducing the induced conservatism.
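Assuming that Assumption 1 has the usual sector form, in which the difference quotient (f(a) − f(b))/(a − b) of each activation function lies between a lower and an upper constant, the following sketch estimates such constants numerically. The two activation functions, the sampling grid and the helper name are illustrative assumptions and are not the functions used in the paper.

```python
import math

def sector_bounds(f, lo=-5.0, hi=5.0, n=200):
    """Estimate the smallest and largest difference quotient of f on a grid over [lo, hi]."""
    pts = [lo + (hi - lo) * i / n for i in range(n + 1)]
    quotients = [
        (f(a) - f(b)) / (a - b)
        for i, a in enumerate(pts)
        for b in pts[i + 1:]
    ]
    return min(quotients), max(quotients)

# tanh is monotone, so its difference quotients are positive and at most 1.
tanh_lo, tanh_hi = sector_bounds(math.tanh)

# A hypothetical nonmonotonic activation: its lower constant becomes negative.
def nonmonotone(u):
    return math.tanh(u) - 0.5 * math.sin(u)

nm_lo, nm_hi = sector_bounds(nonmonotone)
print("tanh:", tanh_lo, tanh_hi)
print("nonmonotone:", nm_lo, nm_hi)
```

The second function shows why allowing a negative lower constant matters: a purely sigmoid-type condition with a zero lower bound would exclude it.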

To observe the synchronization of system (4), the slave system is designed as

| 9 |

where is the state vector and is the sampled-data controller to be designed. The boundary and initial conditions for (9) are given by

| 10 |

| 11 |

Define the error vector as Subtracting (4) from (9), we arrive at the error system as follows:

| 12 |

where

This paper adopts the following sampled-data controller:

| 13 |

where is the gain matrix to be obtained, denotes the sampling instant and satisfies

Using the input delay approach, we have and Then the controller in (13) becomes

| 14 |

Thus, (12) can be written as

| 15 |
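The input delay approach can be illustrated on a deliberately simplified scalar toy problem. The sketch below is not the error system (15): it drops the diffusion and delay terms and simulates only de/dt = a e(t) + u(t) with the sampled-data law u(t) = −k e(t_k), which corresponds to a scalar gain K = −k held constant on each sampling interval, while recording the sawtooth delay t − t_k. Uniform sampling, the values of a, k and h, the forward Euler scheme and the function name are all assumptions chosen for illustration.

```python
def simulate_sampled_data(a=1.0, k=3.0, h=0.1, e0=1.0, samples=50, substeps=100):
    """Forward-Euler simulation of de/dt = a*e(t) - k*e(t_k) with zero-order hold.

    Returns the error at each sampling instant and the largest input delay
    t - t_k encountered on the integration grid.
    """
    dt = h / substeps
    e = e0
    at_samples = [e]
    max_delay = 0.0
    for _sample in range(samples):
        e_held = e                      # measurement e(t_k), held until t_{k+1}
        for step in range(substeps):
            delay = step * dt           # sawtooth delay t - t_k, always below h
            max_delay = max(max_delay, delay)
            e += dt * (a * e - k * e_held)
        at_samples.append(e)
    return at_samples, max_delay

errors, max_delay = simulate_sampled_data()
print("error after", len(errors) - 1, "samples:", errors[-1])
print("largest input delay on the grid:", max_delay)
```

Between two sampling instants the controller sees only the held value e(t_k), so the closed loop behaves like a system with a time-varying delay that resets to zero at each sample and never reaches the sampling period h.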

Main results

In this section, by constructing a Lyapunov–Krasovskii functional and using advanced techniques, new criteria that ensure the global asymptotic synchronization of the considered generalized neural networks with reaction-diffusion terms and mixed delays are established. A design method for the sampled-data controller of the considered generalized reaction-diffusion neural networks is then proposed.

Further, let us define

Theorem 1

The generalized neural networks with reaction-diffusion terms and mixed time-varying delays can be globally asymptotically synchronized under a sampled-data controller, if there exist matrices such that the following LMIs hold:

| 16 |

| 17 |

with

Proof

Consider the Lyapunov–Krasovskii functional candidate

| 18 |

where

The time derivative of V(t, x) is computed as follows:

| 19 |

| 20 |

| 21 |

| 22 |

| 23 |

| 24 |

| 25 |

| 26 |

| 27 |

Now, by applying Lemma 2 to the integral terms in (21)–(25), we arrive at

| 28 |

| 29 |

| 30 |

| 31 |

| 32 |

| 33 |

| 34 |

| 35 |

| 36 |

Further,

| 37 |

| 38 |

According to the error system (15), we can have

| 39 |

Then, by using Green’s formula, Dirichlet boundary condition and Lemma 1 on (39) and adding upon to results in

| 40 |

Moreover, for positive diagonal matrices we have from Assumption 1 that

| 41 |

| 42 |

| 43 |

| 44 |

Now, by employing Lemma 4 to (29), (30) and (33), we obtain

| 45 |

Upon adding all along with (41)–(44), we have

| 46 |

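where is given in (17). Since the LMI (17) holds, the derivative of V(t, x) is negative along the trajectories of the error system (15). This proves that the error system is globally asymptotically stable, that is, the considered generalized reaction-diffusion neural networks with mixed time-varying delays are globally asymptotically synchronized under the sampled-data controller.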

Remark 3

Lemma 1 is used to deal with the reaction-diffusion terms. In many studies on the qualitative analysis of reaction-diffusion neural networks, see for example Wang et al. (2012b), Liu (2010), Wang and Zhang (2010) and Lv et al. (2008), the effect of the diffusion terms has been eliminated. In contrast, the results derived in this paper retain the effect of the diffusion terms. Moreover, the results presented here are generic, and no restriction is imposed on the time-varying delay.

Remark 4

Note that Theorem 1 provides a synchronization scheme for generalized delayed reaction-diffusion neural networks within the framework of the input delay approach. The results are expressed in the form of LMIs. An advantage of the LMI approach is that the LMI conditions can be verified easily and efficiently with available LMI solver software.
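To illustrate what verifying an LMI means in the simplest possible setting, the sketch below checks the classical Lyapunov LMI (P > 0 and AᵀP + PA < 0) for a given 2×2 candidate. It does not reproduce the LMIs (16) and (17), and it only tests a supplied candidate rather than searching for one as an LMI solver would. The matrices A and P and the helper names are assumptions for this example.

```python
def transpose(m):
    """Transpose of a matrix stored as a list of rows."""
    return [list(row) for row in zip(*m)]

def matmul(a, b):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def add(a, b):
    """Entrywise sum of two matrices of equal size."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def is_positive_definite_2x2(m):
    """Sylvester's criterion for a symmetric 2x2 matrix."""
    return m[0][0] > 0 and m[0][0] * m[1][1] - m[0][1] * m[1][0] > 0

def lyapunov_lmi_holds(a, p):
    """Check P > 0 and A^T P + P A < 0 for 2x2 matrices with P symmetric."""
    lhs = add(matmul(transpose(a), p), matmul(p, a))
    neg_lhs = [[-x for x in row] for row in lhs]
    return is_positive_definite_2x2(p) and is_positive_definite_2x2(neg_lhs)

A = [[-2.0, 1.0], [0.0, -3.0]]      # hypothetical stable system matrix
P = [[1.0, 0.0], [0.0, 1.0]]        # candidate Lyapunov matrix
print(lyapunov_lmi_holds(A, P))
```

An LMI solver automates the search for such a P (and, in the setting of Theorem 1, for the remaining decision matrices), which is why conditions stated as LMIs are considered easy to test.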

If the effects of diffusion are ignored, then the following result is obtained.

Theorem 2

The generalized neural networks with mixed time-varying delays can be globally asymptotically synchronized under a sampled-data controller, if there exist matrices such that (16) and the following LMI hold:

| 47 |

with

where

Proof

The proof follows from Theorem 1 with the second term of in (18) being neglected.

Remark 5

In general, the computational complexity depends on the size of the LMIs and the number of decision variables. The results in Theorem 1 are derived by constructing a suitable Lyapunov–Krasovskii functional with triple and quadruple integral terms and by using a newly introduced integral inequality technique, which produces tighter bounds than existing ones such as the Wirtinger-based inequality, Jensen’s inequality and the auxiliary function-based integral inequalities. The derived synchronization criteria for the considered neural networks with reaction-diffusion terms and time-varying delays are less conservative than other conditions in the literature, as illustrated in the next section. It should also be noted that this relaxation is obtained at the cost of a larger number of decision variables. Larger maximum allowable upper bounds are desirable, but in order to reduce the computational burden and time consumption, our future work will focus on reducing the number of decision variables.

Remark 6

It is worth mentioning that only a few works concerning the qualitative analysis of generalized neural networks have been published in the existing literature (Zheng et al. 2015; Liu et al. 2015a; Rakkiyappan et al. 2016b). In Zheng et al. (2015) and Liu et al. (2015a), the stability analysis of a class of generalized neural networks with time-varying delays was carried out based on a free-matrix-based inequality and an improved inequality, respectively. By constructing an augmented Lyapunov–Krasovskii functional with more information on the activation functions, improved delay-dependent stability criteria for generalized neural networks with additive time-varying delays were presented in Rakkiyappan et al. (2016b). However, none of these works includes diffusion phenomena in the neural network model, which cannot be ignored when electrons move in a nonuniform electromagnetic field. Therefore, to build high-quality neural networks, the activations must be allowed to vary in space as well as in time, in which case the model should be expressed by partial differential equations. Only very few works in the existing literature investigate the synchronization of generalized reaction-diffusion neural networks with time-varying delays, namely Gan (2012) and Gan et al. (2016). Different from the existing literature, the present paper focuses on the synchronization of generalized reaction-diffusion neural networks with mixed time-varying delays. Here, a discrete controller, namely the sampled-data controller, is employed to realize synchronization. Moreover, by using a new class of integral inequalities for quadratic functions, from which almost all existing integral inequalities can be obtained, such as Jensen’s inequality, the Wirtinger-based inequality, the Bessel-Legendre inequality, the Wirtinger-based double integral inequality and the auxiliary function-based integral inequalities, less conservative synchronization criteria that depend on the delays as well as the reaction-diffusion terms are derived. The obtained results are formulated as LMIs, which can be solved efficiently with the Matlab LMI control toolbox.

Numerical examples

This section presents two numerical examples with simulations to verify the validity of the theoretical results obtained in this paper.

Example 1

Consider the drive-response generalized neural network model (7) and (9)

| 48 |

and

| 49 |

with the parameters

We let and Moreover, the activation function is considered to be Through simple calculation, we arrive at The sampling period is taken as

Next, by using Matlab LMI toolbox, the sufficient conditions in Theorem 1 are verified under the above chosen parameters and were found to be feasible with the control gain matrix

Thus, we conclude that the generalized neural network model with mixed delays under a sampled-data controller is globally asymptotically synchronized.

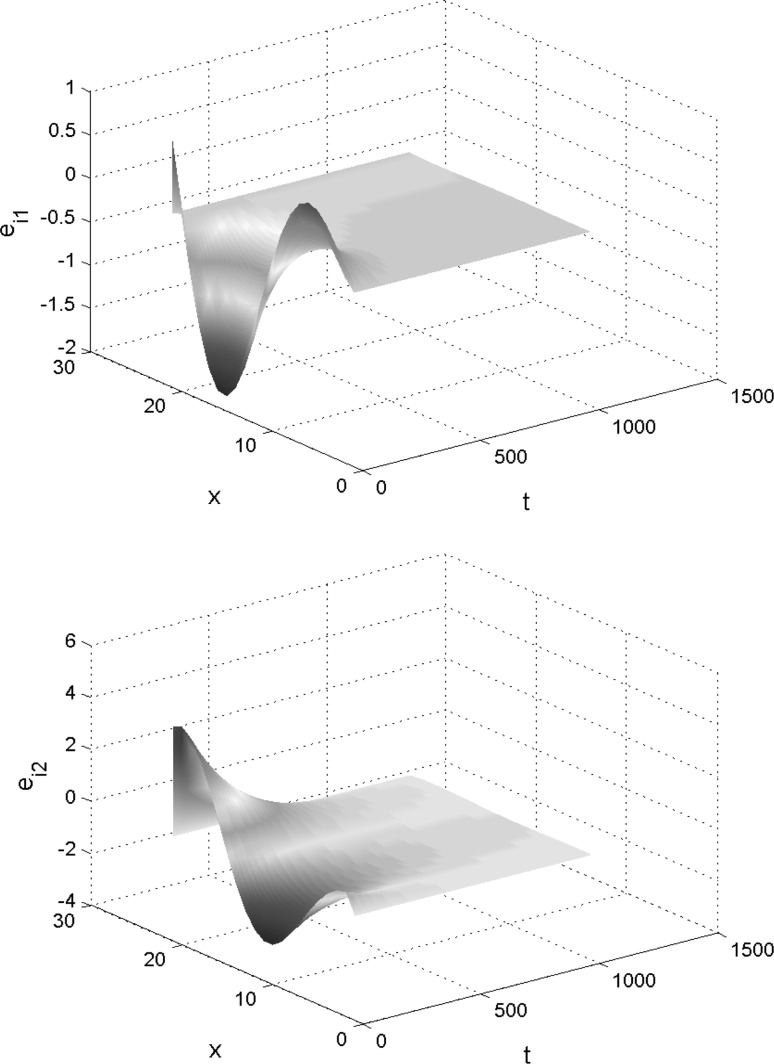

From Fig. 1, one can see that, under the designed controller, the error dynamics of the generalized reaction-diffusion neural networks converge to zero. The simulation results demonstrate the effectiveness of the controller for the asymptotic synchronization of generalized reaction-diffusion neural networks with mixed delays.

Fig. 1.

Dynamical behavior of the error states and
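The following sketch does not regenerate Fig. 1, since the parameter values of Example 1 are not available here. It only illustrates, under assumed values, how a sampled-data controller can drive a reaction-diffusion error state with Dirichlet boundary conditions to zero. The scalar linear error equation ∂e/∂t = D ∂²e/∂x² + a e − k e(t_k, x) on 0 < x < 1, the coefficients D, a, k and h, the initial profile and the explicit finite-difference scheme are all hypothetical choices.

```python
import math

def simulate_rd_error(D=0.1, a=2.0, k=4.0, h=0.1, T=5.0, nx=19, dt=0.001):
    """Explicit finite differences for e_t = D e_xx + a e - k e(t_k, x), e = 0 at x = 0, 1.

    Returns the sup-norm of the error at every sampling instant.
    """
    dx = 1.0 / (nx + 1)
    assert D * dt / dx ** 2 <= 0.5, "explicit scheme stability condition violated"
    substeps = round(h / dt)
    # Interior grid values; the boundary values stay at zero (Dirichlet condition).
    e = [math.sin(math.pi * (i + 1) * dx) + 0.5 * math.sin(3 * math.pi * (i + 1) * dx)
         for i in range(nx)]
    norms = [max(abs(v) for v in e)]
    for _sample in range(round(T / h)):
        held = e[:]                      # sampled measurement e(t_k, x)
        for _step in range(substeps):
            new = []
            for i in range(nx):
                left = e[i - 1] if i > 0 else 0.0
                right = e[i + 1] if i < nx - 1 else 0.0
                lap = (left - 2.0 * e[i] + right) / dx ** 2
                new.append(e[i] + dt * (D * lap + a * e[i] - k * held[i]))
            e = new
        norms.append(max(abs(v) for v in e))
    return norms

norms = simulate_rd_error()
print("initial sup-norm:", norms[0], " final sup-norm:", norms[-1])
```

With these assumed values the uncontrolled equation (k = 0) grows, whereas the sampled-data feedback makes the sup-norm of the error decay, which is the qualitative behavior reported for the error states in Fig. 1.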

Moreover, this paper uses novel integral inequalities to bound some cross terms that arise when computing the time derivative of the Lyapunov–Krasovskii functional. To show the effectiveness of these integral inequalities, the maximum allowable upper bounds are calculated and presented in Table 1.

Table 1.

The maximum allowable upper bounds for different

| Parameter value | 0.2 | 0.4 | 0.6 | 0.8 |
| --- | --- | --- | --- | --- |
| Maximum allowable upper bound | 0.9024 | 0.8842 | 0.8005 | 0.6522 |

Example 2

Consider the generalized neural network model in Example 1 without diffusion term as

| 50 |

where we take

and the activation function is chosen to be and let A straightforward calculation yields The sampling period is chosen as Verifying the conditions of Theorem 2 using the Matlab LMI toolbox, the LMIs are found to be feasible and the controller gain is calculated as

Table 2 lists the maximum allowable upper bound for different

Table 2.

The maximum allowable upper bounds for different

It can be seen from Table 2 that Theorem 2 achieves larger allowable upper bounds than those obtained in Chen et al. (2016b). This implies that Theorem 2 is less conservative, which is a consequence of using the newly introduced general integral inequality lemma.

Conclusions

Over the past few years, the synchronization of reaction-diffusion neural networks has become an active area of research. However, only a few works on the synchronization problem for generalized reaction-diffusion neural networks have been reported. This paper focuses on the synchronization of generalized reaction-diffusion neural networks with mixed time-varying delay components under a sampled-data controller. By virtue of a general integral inequality based on orthogonal polynomials and Lyapunov–Krasovskii functionals with triple and quadruple integral terms, new, less conservative synchronization criteria have been derived in terms of LMIs. The resulting LMIs are solved numerically with the MATLAB LMI toolbox. Finally, numerical simulations are presented to illustrate the validity of the proposed theoretical results.

In practical sampled-data systems, control packets can be lost because of several factors, which may induce undesirable behavior in the controlled system. Therefore, it is worth considering the synchronization of generalized reaction-diffusion neural networks using sampled-data control with control packet loss, which will be one of our future research topics.

Contributor Information

S. Dharani, Email: sdharanimails@gmail.com

R. Rakkiyappan, Email: rakkigru@gmail.com

Jinde Cao, Email: jdcao@seu.edu.cn

Ahmed Alsaedi, Email: aalsaedi@hotmail.com

References

- Atencia M, Joya G, Sandoval F. Dynamical analysis of continuous higher order Hopfield neural networks for combinatorial optimization. Neural Comput. 2005;17:1802–1819. doi: 10.1162/0899766054026620.

- Bao H, Park JH, Cao J. Matrix measure strategies for exponential synchronization and anti-synchronization of memristor-based neural networks with time-varying delays. Appl Math Comput. 2015;270:543–556.

- Cao J, Li R. Fixed-time synchronization of delayed memristor-based recurrent neural networks. Sci China Inf Sci. 2017;60(3):032201. doi: 10.1007/s11432-016-0555-2.

- Cao J, Rakkiyappan R, Maheswari K, Chandrasekar A. Exponential filtering analysis for discrete-time switched neural networks with random delays using sojourn probabilities. Sci China Technol Sci. 2016;59(3):387–402. doi: 10.1007/s11431-016-6006-5.

- Chen J, Xu S, Chen W, Zhang B, Ma Q, Zou Y. Two general integral inequalities and their applications to stability analysis for systems with time-varying delay. Int J Robust Nonlinear Control. 2016;26:4088–4103. doi: 10.1002/rnc.3551.

- Chen G, Xia J, Zhuang G. Delay-dependent stability and dissipativity analysis of generalized neural networks with Markovian jump parameters and two delay components. J Franklin Inst. 2016;353:2137–2158. doi: 10.1016/j.jfranklin.2016.02.020.

- Diressche P, Zou X. Global attractivity in delayed Hopfield neural network models. SIAM J Appl Math. 1998;58:1878–1890. doi: 10.1137/S0036139997321219.

- Gan Q. Global exponential synchronization of generalized stochastic neural networks with mixed time-varying delays and reaction-diffusion terms. Neurocomputing. 2012;89:96–105. doi: 10.1016/j.neucom.2012.02.030.

- Gan Q, Lv T, Fu Z. Synchronization criteria for generalized reaction-diffusion neural networks via periodically intermittent control. Chaos. 2016;26:043113. doi: 10.1063/1.4947288.

- Gu K, Kharitonov V, Chen J. Stability of time-delay systems. Boston: Birkhauser; 2003.

- He W, Qian F, Cao J. Pinning-controlled synchronization of delayed neural networks with distributed-delay coupling via impulsive control. Neural Netw. 2017;85:1–9. doi: 10.1016/j.neunet.2016.09.002.

- Hopfield J. Neurons with graded response have collective computational properties like those of two-state neurons. Proceedings of National Academy of Sciences, USA. 1984;81:3088–3092.

- Lee TH, Park JH. Improved criteria for sampled-data synchronization of chaotic Lure systems using two new approaches. Nonlinear Anal Hybrid Syst. 2017;24:132–145. doi: 10.1016/j.nahs.2016.11.006.

- Lee T, Park J, Lee S, Kwon O. Robust sampled-data control with random missing data scenario. Int J Control. 2014;87:1957–1969. doi: 10.1080/00207179.2014.896476.

- Lee T, Park JH, Park M, Kwon O, Jung H. On stability criteria for neural networks with time-varying delay using Wirtinger-based multiple integral inequality. J Franklin Inst. 2015;352:5627–5645. doi: 10.1016/j.jfranklin.2015.08.024.

- Li R, Cao J. Stability analysis of reaction-diffusion uncertain memristive neural networks with time-varying delays and leakage term. Appl Math Comput. 2016;278:54–69.

- Li X, Rakkiyappan R, Sakthivel R. Non-fragile synchronization control for Markovian jumping complex dynamical networks with probabilistic time-varying coupling delay. Asian J Control. 2016;17:1678–1695. doi: 10.1002/asjc.984.

- Liu X. Synchronization of linearly coupled neural networks with reaction-diffusion terms and unbounded time delays. Neurocomputing. 2010;73:2681–2688. doi: 10.1016/j.neucom.2010.05.003.

- Liu H, Zhou G. Finite-time sampled-data control for switching T-S fuzzy systems. Neurocomputing. 2015;156:294–300. doi: 10.1016/j.neucom.2015.04.008.

- Liu Y, Wang Z, Liang J, Liu X. Synchronization of coupled neutral type neural networks with jumping-mode-dependent discrete and unbounded distributed delays. IEEE Trans Cybern. 2013;43:102–114. doi: 10.1109/TSMCB.2012.2199751.

- Liu Y, Lee S, Kwon O, Park JH. New approach to stability criteria for generalized neural networks with interval time-varying delays. Neurocomputing. 2015;149:1544–1551. doi: 10.1016/j.neucom.2014.08.038.

- Liu X, Yu W, Cao J, Chen S. Discontinuous Lyapunov approach to state estimation and filtering of jumped systems with sampled-data. Neural Netw. 2015;68:12–22. doi: 10.1016/j.neunet.2015.04.001.

- Lu J. Global exponential stability and periodicity of reaction-diffusion delayed recurrent neural networks with Dirichlet boundary conditions. Chaos Solitons Fractals. 2008;35:116–125. doi: 10.1016/j.chaos.2007.05.002.

- Lv Y, Lv W, Sun J. Convergence dynamics of stochastic reaction-diffusion recurrent neural networks with continuously distributed delays. Nonlinear Anal Real World Appl. 2008;9:1590–1606. doi: 10.1016/j.nonrwa.2007.04.003.

- Manivannan R, Samidurai R, Cao J, Alsaedi A. New delay-interval-dependent stability criteria for switched Hopfield neural networks of neutral type with successive time-varying delay components. Cogn Neurodyn. 2016;10(6):543–562. doi: 10.1007/s11571-016-9396-y.

- Park P, Ko J, Jeong C. Reciprocally convex approach to stability of systems with time-varying delays. Automatica. 2011;47:235–238. doi: 10.1016/j.automatica.2010.10.014.

- Park P, Lee W, Lee S. Auxiliary function-based integral inequalities for quadratic functions and their applications to time-delay systems. J Franklin Inst. 2015;352:1378–1396. doi: 10.1016/j.jfranklin.2015.01.004. [DOI] [Google Scholar]

- Prakash M, Balasubramaniam P, Lakshmanan S. Synchronization of Markovian jumping inertial neural networks and its applications in image encryption. Neural Netw. 2016;83:86–93. doi: 10.1016/j.neunet.2016.07.001. [DOI] [PubMed] [Google Scholar]

- Rajavel S, Samidurai R, Cao J, Alsaedi A, Ahmad B. Finite-time non-fragile passivity control for neural networks with time-varying delay. Appl Math Comput. 2017;297:145–158. [Google Scholar]

- Rakkiyappan R, Dharani S. Sampled-data synchronization of randomly coupled reaction-diffusion neural networks with Markovian jumping and mixed delays using multiple integral approach. Neural Comput Appl. 2017;28:449C462. doi: 10.1007/s00521-015-2079-5. [DOI] [Google Scholar]

- Rakkiyappan R, Dharani S, Cao J. Synchronization of neural networks with control packet loss and time-varying delay via stochastic sampled-data controller. IEEE Trans Neural Netw Learn Syst. 2015;26:3215–3226. doi: 10.1109/TNNLS.2015.2425881. [DOI] [PubMed] [Google Scholar]

- Rakkiyappan R, Dharani S, Zhu Q. Stochastic sampled-data synchronization of coupled neutral-type delay partial differential systems. J Franklin Inst. 2015;352:4480–4502. doi: 10.1016/j.jfranklin.2015.06.019.

- Rakkiyappan R, Premalatha S, Chandrasekar A, Cao J. Stability and synchronization analysis of inertial memristive neural networks with time delays. Cogn Neurodyn. 2016;10(5):437–451. doi: 10.1007/s11571-016-9392-2.

- Rakkiyappan R, Sivasamy R, Park JH, Lee T. An improved stability criterion for generalized neural networks with additive time-varying delays. Neurocomputing. 2016;171:615–624. doi: 10.1016/j.neucom.2015.07.004.

- Shen H, Zhu Y, Zhang L, Park JH. Extended dissipative state estimation for Markov jump neural networks with unreliable links. IEEE Trans Neural Netw Learn Syst. 2017;28:346–358. doi: 10.1109/TNNLS.2015.2511196.

- Su L, Shen H. Mixed passive synchronization for complex dynamical networks with sampled-data control. Appl Math Comput. 2015;259:931–942.

- Tong D, Zhou W, Zhou X, Yang J, Zhang L, Xu X. Exponential synchronization for stochastic neural networks with multi-delayed and Markovian switching via adaptive feedback control. Commun Nonlinear Sci Numer Simul. 2015;29:359–371. doi: 10.1016/j.cnsns.2015.05.011.

- Wang Z, Zhang H. Global asymptotic stability of reaction-diffusion Cohen-Grossberg neural networks with continuously distributed delays. IEEE Trans Neural Netw. 2010;21:39–49. doi: 10.1109/TNN.2009.2033910.

- Wang Z, Shu H, Liu Y, Ho DW, Liu X. Robust stability analysis of generalized neural networks with discrete and distributed time delays. Chaos Solitons Fractals. 2006;30:886–896. doi: 10.1016/j.chaos.2005.08.166.

- Wang Y, Lin P, Wang L. Exponential stability of reaction-diffusion high-order Markovian jump Hopfield neural networks with time-varying delays. Nonlinear Anal Real World Appl. 2012;13:1353–1361. doi: 10.1016/j.nonrwa.2011.10.013.

- Wang K, Teng Z, Jiang H. Adaptive synchronization in an array of linearly coupled neural networks with reaction-diffusion terms and time delays. Commun Nonlinear Sci Numer Simul. 2012;17:3866–3875. doi: 10.1016/j.cnsns.2012.02.020.

- Yang X, Cao J, Yang Z. Synchronization of coupled reaction-diffusion neural networks with time-varying delays via pinning-impulsive controller. SIAM J Control Optim. 2013;51:3486–3510. doi: 10.1137/120897341.

- Yang X, Cao J, Yu W. Exponential synchronization of memristive Cohen-Grossberg neural networks with mixed delays. Cogn Neurodyn. 2014;8:239–249. doi: 10.1007/s11571-013-9277-6.

- Young S, Scott P, Nasrabadi N. Object recognition using multilayer Hopfield neural network. IEEE Trans Image Process. 1997;6:357–372. doi: 10.1109/83.557336.

- Zhang X, Han Q. Global asymptotic stability for a class of generalized neural networks with interval time-varying delays. IEEE Trans Neural Netw. 2011;22:1180–1192. doi: 10.1109/TNN.2011.2147331.

- Zhang H, Wang Z, Liu D. Global asymptotic stability and robust stability of a class of Cohen-Grossberg neural networks with mixed delays. IEEE Trans Circuits Syst I. 2009;56:616–629. doi: 10.1109/TCSI.2008.2002556.

- Zheng C, Cao J. Robust synchronization of coupled neural networks with mixed delays and uncertain parameters by intermittent pinning control. Neurocomputing. 2014;141:153–159. doi: 10.1016/j.neucom.2014.03.042.

- Zheng H, He Y, Wu M, Xiao P. Stability analysis of generalized neural networks with time-varying delays via a new integral inequality. Neurocomputing. 2015;161:148–154. doi: 10.1016/j.neucom.2015.02.055.

- Zhou Q, Wan L, Sun J. Exponential stability of reaction-diffusion generalized Cohen-Grossberg neural networks with time-varying delays. Chaos Solitons Fractals. 2007;32:1713–1719. doi: 10.1016/j.chaos.2005.12.003.