Abstract

Background

Patients in general medical-surgical wards who experience unplanned transfer to the intensive care unit (ICU) show evidence of physiologic derangement 6–24 h prior to their deterioration. With increasing availability of electronic medical records (EMRs), automated early warning scores (EWSs) are becoming feasible.

Objective

To describe the development and performance of an automated EWS based on EMR data.

Materials and methods

We used a discrete-time logistic regression model to obtain an hourly risk score to predict unplanned transfer to the ICU within the next 12 h. The model was based on hospitalization episodes from all adult patients (≥18 years) admitted to 21 Kaiser Permanente Northern California (KPNC) hospitals from 1/1/2010 to 12/31/2013. Eligible patients met these entry criteria: initial hospitalization occurred at a KPNC hospital; the hospitalization was not for childbirth; and the EMR had been operational at the hospital for at least 3 months. We evaluated the performance of this risk score, called Advanced Alert Monitor (AAM), and compared it against two other EWSs (eCART and NEWS) in terms of their sensitivity, specificity, negative predictive value, positive predictive value, and area under the receiver operating characteristic curve (c statistic).

Results

A total of 649,418 hospitalization episodes involving 374,838 patients met inclusion criteria, with 19,153 of the episodes experiencing at least one outcome. The analysis data set had 48,723,248 hourly observations. Predictors included physiologic data (laboratory tests and vital signs); neurological status; severity of illness and longitudinal comorbidity indices; care directives; and health services indicators (e.g. elapsed length of stay). AAM showed better performance compared to NEWS and eCART in all the metrics and prediction intervals. The AAM AUC was 0.82 compared to 0.79 and 0.76 for eCART and NEWS, respectively. Using a threshold that generated 1 alert per day in a unit with a patient census of 35, the sensitivity of AAM was 49% (95% CI: 47.6–50.3%) compared to the sensitivities of eCART and NEWS scores of 44% (42.3–45.1) and 40% (38.2–40.9), respectively. For all three scores, about half of alerts occurred within 12 h of the event, and almost two thirds within 24 h of the event.

Conclusion

The AAM score is an example of a score that takes advantage of multiple data streams now available in modern EMRs. It highlights the ability to harness complex algorithms to maximize signal extraction. The main challenge in the future is to develop detection approaches for patients in whom data are sparser because their baseline risk is lower.

Keywords: Critical care, Physiologic monitoring, Deterioration, Electronic health records, Early warning score, Risk score, Patient safety

1. Introduction

Beginning in the 1990s [1], multiple reports have documented that patients in general medical-surgical wards who experience unplanned transfer to the intensive care unit (ICU) show evidence of physiologic derangement 6–24 h prior to their deterioration. The realization that these clinical changes were often missed led to the development of rapid response teams (RRTs) [2–4]. These teams are expected to intervene prior to patients’ having an actual cardiac arrest or other patient decompensation. At an intuitive level, it seems that deaths and unanticipated ICU admissions could be reduced if patients experiencing physiologic derangement were identified earlier and more systematically. This has led to the development of various early warning scores (EWSs) [5–13].

Some of the existing EWSs, such as the modified Early Warning Score (MEWS) [5], National Early Warning Score (NEWS) [6,7], VitalPAC™ Early Warning Score (VIEWS) [8], Pediatric Early Warning Score (PEWS) [9], Bedside PEWS [10], and Children’s Hospital Early Warning Score (C-CHEWS) [11] were constructed based on expert opinion. Others, such as the Rothman Index [12] and the electronic Cardiac Arrest Risk Triage (eCART) score [13] were derived using statistical modeling. More recently, fully automated implementations of EWSs using real time electronic data capture have also been described [14,15].

In an earlier paper [16], we described the development of a score based on retrospective data captured from a comprehensive electronic medical record (EMR). This score, which we will refer to as EDIP (for Early Detection of Impending Physiologic deterioration), was developed as “proof of concept” for a planned pilot project at two community hospitals. The EDIP score was developed assuming a scenario in which the score would be run twice daily with a “look forward” time frame of up to 12 h. This would give clinicians ample lead time to take preventive actions. Predictors included two externally validated [17] scores (the Laboratory Acute Physiology Score, or LAPS [18], composed of laboratory test results, and a longitudinal COmorbidity Point Score, or COPS [18]), individual vital signs, neurological status checks, transpired hospital length of stay, and patient end of life care directives. The EDIP score compared favorably with MEWS, with a c-statistic of 0.775 compared to 0.698 for MEWS(re) [16].

In this report we describe how we developed the Advanced Alert Monitor (AAM) score, an updated version of the EDIP score. It has an explicitly stated “look forward” time frame of up to 12 h. This updated version is in actual use in a pilot at two community hospitals in Kaiser Permanente Northern California (KPNC), an integrated health care delivery system, and deployment to the remaining 19 KPNC hospitals will begin in the summer of 2016.

2. Materials and methods

This project was approved by the KPNC Institutional Review Board for the Protection of Human Subjects, which has jurisdiction over the 21 hospitals described in this paper.

The KPNC hospitals from which we extracted data serve a total population of approximately 3.9 million Kaiser Foundation Health Plan, Inc., members who are cared for by the 8500 physicians of The Permanente Medical Group, Inc., under a mutual exclusivity arrangement. As we have described earlier [18,19], all KPNC hospitals and clinics employ the same information systems with a common medical record number and also can track care covered by the plan but delivered elsewhere. In July of 2006, KPNC began deployment of the Epic EMR (www.epicsystems.com), which has been adapted for internal use and is known as KP HealthConnect (KPHC), a process that was completed in March of 2010.

Data collected at the point of care through KPHC are stored in two repositories, one that holds data in real time for actual clinical transactions, and one that holds data following a brief (~1 min) delay (Chronicles). At the end of each day, data are transferred to a third data repository, known as Clarity, hosted on a Teradata Data Base Management System platform [20]. This third data repository serves as the primary data warehouse for analytic work in KPNC. In addition to capturing physiologic data and care directives, Clarity captures admission and discharge times, admission and discharge diagnoses and procedures (assigned by professional coders), and bed histories permitting quantification of intra- and inter-hospital transfers. A 1:1 correspondence between real time data and Clarity does not exist. This means that not all the information available in Clarity at the end of the day is necessarily available in real time, and some data in the real time environment is not transmitted to Clarity. For example, admission diagnosis may not be entered by the clinician until later in the day, and discharge diagnosis codes of record (assigned by professional coders after reviewing clinicians’ documentation) may lag 1–2 days from real time. These can constitute important limitations for model development. In this report, we describe analyses involving retrospective data (Clarity).

For this report, our study population consisted of all patients admitted to the 21 KPNC hospitals who met these criteria: hospitalization began from 1/1/2010 through 12/31/2013; initial hospitalization occurred at a KPNC hospital (i.e., for inter-hospital transfers, the first hospital stay occurred within KPNC); age ⩾18 years; hospitalization was not for childbirth; and KPHC had been operational at the hospital for at least 3 months.

3. Analytic approach

3.1. Predictors

We employed retrospective data captured from the Clarity relational database to develop the score. Table 1 summarizes the predictors used in the model. These fall into several discrete categories: individual physiologic data points (laboratory tests and vital signs, which, for ward patients, are typically obtained every 4–8 h); neurological status checks obtained from nursing flowsheets [16]; open source composite indices for severity of illness (Laboratory-based Acute Physiology Score, version 2, LAPS2) and longitudinal comorbidity burden (COmorbidity Point Score, version 2, COPS2) [19]; end of life care directives (i.e., physician orders regarding patient resuscitation preferences); and health care utilization services indicators (e.g., transpired length of stay in the hospital at a given time point, whether a patient had been in the ICU during the hospital stay). The table also indicates how some of these data elements were transformed for analytic purposes (e.g., by standardization or polynomial transformations). Detailed description of the statistical strategies employed for variable transformation and selection is provided in the appendix (Appendix B).

Table 1.

Predictors employed for model development.

| Predictor | Values or transformation<sup>a</sup> |
|---|---|
| Laboratory tests<sup>b</sup> | |
| Anion gap | Linear |
| Bicarbonate | Quadratic |
| Glucose | Linear |
| Hematocrit | Cubic |
| Lactate | Linear |
| Log blood urea nitrogen | Linear |
| Log creatinine | Quadratic |
| Sodium | Linear |
| Troponin | Linear |
| Troponin missing flag | Indicator |
| Total white blood cell count | Linear |
| Vital signs<sup>c</sup> | |
| Latest diastolic blood pressure | Quadratic |
| Instability<sup>d</sup> of systolic blood pressure | Linear |
| Latest systolic blood pressure | Cubic |
| Latest heart rate | Cubic |
| Log heart rate instability | Quadratic |
| Log oxygen saturation instability | Linear |
| Logit<sup>e</sup> latest oxygen saturation | Cubic |
| Logit worst oxygen saturation | Linear |
| Log respiratory rate instability | Linear |
| Log temperature instability | Quadratic |
| Latest temperature | Quadratic |
| Latest respiratory rate | Cubic |
| Worst respiratory rate | Linear |
| Latest neurological status | Linear |
| (Anion gap ÷ serum bicarbonate) × 1000 | Linear |
| Shock index (latest heart rate ÷ latest systolic blood pressure) | Linear |
| Composite indices<sup>f</sup> | |
| LAPS2 | Cubic |
| LAPS2 at hospital entry time | Linear |
| Log COPS2 | Linear |
| Other | |
| Log transpired length of stay<sup>g</sup> | Linear |
| Logit age | Quadratic |
| Sex | Male indicator |
| Care directive<sup>h</sup> | Full code or not full code |
| Season | Season 1: months 11, 12, 1, 2 |
| | Season 2: months 3, 4, 5, 6 |
| | Season 3: months 7, 8, 9, 10 |
| Time of day | Time frame 1: 01:00–07:00 |
| | Time frame 2: 07:00–12:00 |
| | Time frame 3: all else |
| Admit category | 1 ED<sup>i</sup>, SURGICAL |
| | 2 NON-ED, SURGICAL |
| | 3 ED, MEDICAL |
| | 4 NON-ED, MEDICAL |
| Hospital | 20 hospital indicators |
| Log (transpired length of stay × LAPS2) | Linear |

<sup>a</sup> Refers to those instances where a variable was transformed prior to use in modeling. The transformations we employed were (a) none (variable introduced in models as a linear term), (b) quadratic polynomial (the variable and its square), and (c) cubic polynomial (variable, its square, and its cube).

<sup>b</sup> All laboratory tests extracted were the latest (most recent) in the preceding 72 h.

<sup>c</sup> For vital signs, we extracted all values in the preceding 24 h and then selected based on this data group as indicated. We deleted the following vital signs: systolic blood pressures >300, isolated systolic or diastolic blood pressures, heart rates >300, respiratory rates >80, oxygen saturations <50%, temperatures <85° or >108° Fahrenheit. We kept the highest, lowest and most deranged vital sign recorded in the past 24 h; for laboratory tests, we took the most recent value in the past 72 h. Most deranged was defined as the largest absolute difference from: 100 for systolic blood pressure, 70 for diastolic blood pressure, 75 for heart rate, 11 for respiratory rate, 98 for temperature and 100 for O2 saturation.

<sup>d</sup> For a given variable, instability is defined as the difference between the highest and lowest value within a 24 h period (e.g., if a patient’s heart rate over a 24 h period ranged between 78 and 196, heart rate instability would equal 118).

<sup>e</sup> Logit = log (X ÷ (1 – X)).

<sup>f</sup> LAPS2, Laboratory-based Acute Physiology Score, version 2. This is a score measuring acute physiologic instability based on the 72 h preceding HET. See text and citation 19 for details. COPS2, COmorbidity Point Score, version 2. This is a longitudinal score based on 12 months of patient data; the higher the score, the greater the mortality risk due to comorbid illness. See text and citation 19 for details.

<sup>g</sup> This refers to the length of time a patient had been in the hospital when a measurement was made, as opposed to the usual total hospital length of stay.

<sup>h</sup> Refers to physician order regarding a patient’s preference regarding resuscitation in the event of an inpatient cardiac arrest. See text and citations 16 and 19 for details.

<sup>i</sup> ED: emergency department; SURGICAL: the initial hospital unit to which the patient was admitted was the operating room.
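The derived vital-sign predictors defined in the footnotes above (instability, most deranged value, logit, and shock index) can be sketched in a few lines of Python. This is an illustrative sketch, not the authors' implementation: the function and dictionary names are assumptions, and the handling of boundary values (for example, an oxygen saturation of exactly 100%, for which the logit is undefined) is not specified in the text and is left out here.

```python
import math

# Reference values for "most deranged", as listed in the table footnote.
# The dictionary keys are hypothetical names chosen for this sketch.
REFERENCE = {
    "systolic_bp": 100,
    "diastolic_bp": 70,
    "heart_rate": 75,
    "respiratory_rate": 11,
    "temperature": 98,
    "oxygen_saturation": 100,
}


def instability(values):
    """Highest minus lowest value observed in the 24 h window."""
    return max(values) - min(values)


def most_deranged(name, values):
    """Value with the largest absolute difference from its reference."""
    ref = REFERENCE[name]
    return max(values, key=lambda v: abs(v - ref))


def logit(x):
    """log(x / (1 - x)) for a proportion strictly between 0 and 1."""
    return math.log(x / (1.0 - x))


def shock_index(heart_rate, systolic_bp):
    """Latest heart rate divided by latest systolic blood pressure."""
    return heart_rate / systolic_bp


# The footnote's own example: heart rate ranging between 78 and 196.
print(instability([78, 120, 196]))
```

With the footnote's heart rate example (78 to 196), `instability` returns 118, matching the value given in the text.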

As we have described previously [18,19], we concatenated hospital stays into episodes, an important consideration in KPNC, which has many regionalized services that lead to considerable numbers of inter-hospital transports. We did not include diagnoses as predictors because we found that we could not capture them reliably in real time. Finally, for comparison purposes, we assigned each patient two recently published scores: NEWS [6] and eCART [13] (Appendix B).

3.2. Outcome

The outcome variable is the same composite outcome employed in our previous report [16]: (a) transfer to the ICU from either the ward or transitional care unit (TCU, also known as stepdown unit) where the patient stayed in the ICU for ⩾6 h or died in the ICU, or (b) death outside the ICU in a patient whose care directive was “full code” (i.e., had the patient survived, s/he would have been transferred to the ICU) or (c) transfer to the ICU from either the ward or TCU where the patient stayed in the ICU for <6 h if, following this transfer, the next hospital unit was the operating room. We excluded patients with a “comfort care only” care directive. Note that, in KPNC, nurse staffing is richest in the ICU and most limited on the ward, with TCU staffing in between these two.

3.3. Analysis dataset

We transformed patient-level data and created a dataset where the unit of analysis was an hour in the hospital (Appendix Fig. A.1). For example, a patient admitted at 7 pm on a Wednesday and discharged at 10 am the following Friday would contribute 39 rows to the data set, with the first row being the one corresponding to the hour when the patient was first placed in the ward or TCU (“rooming in” time, which we refer to as hospital entry time, or HET). Using this structure, we could assign patients an hourly score, permitting us to also predict multiple events per patient. We kept the highest, lowest and most deranged (Appendix B) vital sign recorded in the past 24 h; for laboratory tests, we took the most recent value in the past 72 h. Care directives were set to the prior hourly value if not missing and, if missing at HET, were set to “full code.” We truncated episodes with a length of stay greater than 15 days (~3% of all episodes) to 15 days to reduce their possible disproportionate impact on the model. In this hourly dataset, the outcome variable had a value of 1 if a patient experienced the outcome within 12 h of the current time point rather than only at the single time point of the event (Appendix Fig. A.1). Therefore, the outcome variable was set to 1 for at most 12 rows prior to the actual event. This was done so that not only the information at the time of the event was used to predict outcomes, but also information about the pattern of variables in the 12 h prior to the event. We hypothesized that this would allow us to build a model that can predict outcomes with some hours of lead-time before the event. Episodes with more than one ICU transfer were split into separate episodes starting at the time when the patient was transferred back to the ward or TCU.
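The hourly data structure and the 12 h look-ahead outcome label described above can be illustrated with a short Python sketch. The function name, the tuple layout, and the choice to label a row 1 when the event falls strictly after the row's hour and no more than 12 h later are assumptions made for illustration; the exact boundary handling is described in the paper's appendix, not here. Rows after the event are simply labeled 0 in this sketch, whereas the paper ends or splits episodes at ICU transfer.

```python
from datetime import datetime, timedelta


def hourly_rows(entry, discharge, event=None, horizon_h=12):
    """Return one (timestamp, label) pair per hour in the hospital.

    label is 1 when the event occurs within `horizon_h` hours after
    the row's timestamp, and 0 otherwise (assumed boundary rule).
    """
    rows = []
    n_hours = int((discharge - entry).total_seconds() // 3600)
    for i in range(n_hours):
        t = entry + timedelta(hours=i)
        label = 0
        if event is not None:
            lead_h = (event - t).total_seconds() / 3600
            label = 1 if 0 < lead_h <= horizon_h else 0
        rows.append((t, label))
    return rows


# Wednesday 7 pm to Friday 10 am, as in the example in the text.
entry = datetime(2013, 1, 2, 19)
discharge = datetime(2013, 1, 4, 10)
print(len(hourly_rows(entry, discharge)))
```

For the admission in the text (Wednesday 7 pm to Friday 10 am) the sketch produces 39 rows, and an event 20 h after entry marks exactly 12 preceding rows as positive.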

We split the dataset randomly into 3 parts: (a) Training 1: used for variable selection and model fitting, containing 75% of the episodes with HET in 2010 and 2011 and 37.5% of the episodes with HET in 2012 and 2013; (b) Training 2: used to compare model performance and select a final model from the Training 1 models set. It contained 25% of the episodes with HET in 2010 and 2011 and 12.5% of the episodes with HET in 2012 and 2013; and (c) Validation: used for assessing the performance of the final model and contained half of the eligible episodes with HET in 2012 and 2013. We reserved the Validation dataset for testing of the final model with the selected set of coefficients; it was not used during the modeling process or for model selection. We also created a Training “light” data set for expediting the model building and fitting process. This consisted of all episodes in the Training 1 data set that experienced an outcome at any point of their stay and 10 times as many randomly selected episodes that did not experience the outcome.
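The construction of the Training “light” data set (all episodes with an outcome plus 10 times as many randomly selected episodes without one) amounts to case-control style downsampling at the episode level. A minimal sketch follows, assuming episodes are represented as `(episode_id, had_outcome)` pairs; that representation, the function name, and the fixed random seed are illustrative choices, not details from the paper.

```python
import random


def training_light(episodes, ratio=10, seed=0):
    """Keep all outcome episodes plus `ratio` times as many controls.

    episodes: list of (episode_id, had_outcome) pairs (assumed layout).
    """
    rng = random.Random(seed)
    cases = [e for e in episodes if e[1]]
    controls = [e for e in episodes if not e[1]]
    # Guard against asking for more controls than exist.
    n_controls = min(len(controls), ratio * len(cases))
    return cases + rng.sample(controls, n_controls)


episodes = [(i, i < 5) for i in range(1000)]
light = training_light(episodes)
print(len(light))
```

Sampling whole episodes, rather than individual hourly rows, keeps each retained patient trajectory intact for the discrete-time model.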

3.4. Statistical methods

The percent of episodes experiencing the outcome is small (~3%) and the percent of outcomes experienced on an hourly basis is much smaller (~0.04%). The very small fraction of events experienced on an hourly basis led to a “class imbalance” problem. This problem occurs when the number of non-events is much larger than the number of events and the usual modeling techniques have poor accuracy [21,22]. We used the Training “light” data set to develop the models to deal with both the class-imbalance and computational problems associated with a large data set [23]. We left the Training 2 and Validation data sets intact, however, to compare models and evaluate their performance on a data set that reflects the real time use of the score without the need for complicated weighting schemes. Consistent with our previous work, we included all hospitalizations experienced by the patients in our cohort, ignoring within-patient clustering (random) effects; given the rich data available to us, we found that within-patient clustering showed minimal effects on our results.

Given the operating environment where the model was going to be implemented and the clinical use of the model, the modeling options were restricted to models that could be easily instantiated in Java and that would allow the identification of the specific model components with a substantial contribution to the score at the time when the score exceeded the defined cutoff (an alert). These restrictions made it difficult to consider complicated machine learning algorithms such as neural networks or support vector machines [24,25] that are not immediately transparent and can be difficult to implement using different programming languages.

To give us an idea of possible loss in performance by using less sophisticated approaches, we tested a variety of statistical modeling and machine learning approaches including discrete-time logistic regression [26,27], logistic model trees [28] and multiple ensemble models [29]. Some ensemble models included weighted combinations of estimates from various models stratified by the most predictive variables. Our final model was a discrete-time logistic regression equation that is easily programmed into Java for real time scoring. A discrete-time logistic regression model is similar to the counting process formulation of the Cox proportional hazards model, with several attractive features: time-varying covariates can be easily estimated; competing risks models are more intuitive and easy to interpret; and obtaining estimates more often than on an hourly basis is not necessary and can be overwhelming. None of the alternative approaches we tested showed better performance, likely because our processing speed and storage limitations prevented us from testing a larger number of modeling strategies (Appendix Table A.4).
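To make concrete why a logistic regression equation is both easy to port and easy to explain, the following Python sketch scores one hourly observation and reports each predictor's contribution to the log-odds. All coefficients, the intercept, and the predictor names are hypothetical values invented for illustration; the fitted coefficients are in the paper's Appendix Table A.3 and are not reproduced here. The sketch also uses raw values and omits the standardization and log or logit transformations listed in Table 1.

```python
import math

# Hypothetical intercept and coefficients, for illustration only.
INTERCEPT = -9.0
TERMS = {
    # name: [linear, squared, cubed] coefficients
    "heart_rate": [0.010, 0.0, 0.0],
    "respiratory_rate": [0.050, 0.001, 0.0],
    "laps2": [0.012, 0.0, 0.0],
}


def contributions(x):
    """Per-predictor contribution to the linear predictor (log-odds)."""
    return {
        name: sum(b * x[name] ** (p + 1) for p, b in enumerate(betas))
        for name, betas in TERMS.items()
    }


def hourly_risk(x):
    """Predicted probability from the logistic link."""
    eta = INTERCEPT + sum(contributions(x).values())
    return 1.0 / (1.0 + math.exp(-eta))


obs = {"heart_rate": 90, "respiratory_rate": 18, "laps2": 60}
parts = contributions(obs)
print(max(parts, key=parts.get), hourly_risk(obs))
```

Because the linear predictor is a plain sum, the largest terms in `contributions` identify which components drive a score above the cutoff, which is the transparency requirement described in the text.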

The list of potential independent variables described above, including some possible transformations and interactions, was reduced using the Training 1 data set through backwards selection with a p-value greater than 0.05 for removal of variables via the discrete time logistic regression. The impact of possible overfitting and correlated data structure was assessed as part of the validation process with an independent validation data set. Detailed description of the statistical strategies employed for variable selection is provided in the appendix (Appendix B).

We performed analyses using SAS 9.2.

3.5. Evaluating model performance

Clinical prediction classifiers are commonly evaluated using a number of measures that quantify the model’s calibration and discrimination. These include Nagelkerke’s pseudo-R² for overall performance [30], the Hosmer-Lemeshow p-value for calibration [27], and the c statistic [31,32]. However, using these measures presents a number of problems when one tries to predict extremely rare outcomes [33]. The most important of these is that a classifier that tries to maximize the accuracy of its classification rule when predicting a rare outcome may obtain an accuracy of 99% just by classifying all observations as non-events, and model improvements (e.g., as quantified by increases in the c statistic) are overshadowed by the large true negative rate.

Consequently, during the modeling process, we employed a more operational metric, the work-up to detection ratio (W:D) or number needed to evaluate [34], defined as the ratio of observations classified as positive to the true positive observations. From a clinician’s perspective, the W:D (the number of patients one would need to evaluate in order to identify a patient with the outcome) is an important metric for assessing an early warning score. The W:D is equivalently the inverse of the positive predictive value (PPV). In addition to the W:D, one also needs to consider the number of alerts per day, which is of critical interest to the clinicians who actually respond to them. After discussions with the clinicians who would be the end-users of the EWS, we used the Training 2 data set to define a cutoff (“training” cutoff) that yielded, on average, at most 1 unique alert per day in a hospital unit with an average daily census of ~35 patients (in a hospital with ~7000 discharges per year and an average LOS of 4 days). By “unique” we mean that alerts are counted once per patient episode, so that multiple alerts for the same patient within an episode count as a single alert. We used this training cutoff to calculate the sensitivity, specificity, PPV (precision), and negative predictive value (NPV) during model selection and validation.
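The relationship between the W:D ratio and the other alert metrics can be checked with a small Python sketch that computes them from a 2×2 table of counts. The function name and the example counts are made up for illustration and are not study data.

```python
def alert_metrics(tp, fp, fn, tn):
    """Standard 2x2 metrics plus the work-up to detection ratio."""
    ppv = tp / (tp + fp)
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": ppv,
        "npv": tn / (tn + fn),
        # Observations classified positive per true positive (1 / PPV).
        "wd_ratio": (tp + fp) / tp,
    }


# Illustrative counts only.
m = alert_metrics(tp=20, fp=80, fn=20, tn=880)
print(m["ppv"], m["wd_ratio"])
```

With these example counts the PPV is 0.20 and the W:D is 5, that is, five alerted patients would need to be evaluated to find one with the outcome.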

We assessed the performance of the final AAM model using an episode-based version of the Validation dataset. This episode-level data set kept one observation per episode with an alert variable to indicate if the episode contained at least one alert, the time when the first alert was fired for alerted episodes and the time of the event. We assessed the occurrence of the outcome based on the first alert using 3 time frames following this first alert: 12 h, 24 h, and any time after the first alert but prior to discharge. Additional alerts (instances of an elevated score) would be handled differently, given that a clinical response would have been initiated already (Fig. A.2), so we did not employ them for assessing performance metrics.
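The episode-level evaluation described above (one record per episode, keyed on the first alert and checked against three time frames) might be sketched as follows. The function name, the list-of-hourly-scores input, and the use of a strict "greater than" comparison with the cutoff are assumptions for illustration.

```python
def first_alert_outcome(scores, cutoff, event_hour=None):
    """Summarize one episode by its first alert.

    scores: hourly scores, index = hours since hospital entry time.
    event_hour: hour of the outcome, or None if no outcome occurred.
    """
    first = next((h for h, s in enumerate(scores) if s > cutoff), None)
    if first is None:
        return {"alert": False, "first_alert_hour": None}
    # Lead time is only defined when the event follows the first alert.
    if event_hour is None or event_hour < first:
        lead = None
    else:
        lead = event_hour - first
    return {
        "alert": True,
        "first_alert_hour": first,
        "event_within_12h": lead is not None and lead <= 12,
        "event_within_24h": lead is not None and lead <= 24,
        "event_after_alert": lead is not None,
    }


print(first_alert_outcome([1, 2, 8, 9, 3], cutoff=7.5, event_hour=20))
```

Later scores above the cutoff within the same episode are ignored here, mirroring the decision not to use repeat alerts for the performance metrics.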

3.6. Comparison of AAM to similar EWSs

We compared the AAM to the NEWS [6] and eCART [13] scores because these are two publicly available EWSs that use vital signs and laboratory data. We calculated these scores every hour and for all episodes of the Validation data set. Details of the calculations for these two scores are described in the appendix (Appendix C). We used the episode-level version of the Validation data set described above to compare the performance of the AAM score against the NEWS and eCART scores by assessing each score’s number of alerts, sensitivity, W:D, PPV, NPV, and specificity, based on a range of cutoffs and the training cutoff defined above. We also calculated the percentage of total events alerted 2, 6, 12 and 24 h prior to the event to compare the amount of lead time for action provided by the various measures. Given that no consensus exists over the use of an hourly data structure (with repeat alerts) vs. an episode-based structure for evaluating model performance and that the c-statistic is widely used to measure model performance, we calculated the c-statistic using both the hourly and the episode-based structured data.

4. Results

A total of 649,418 episodes involving 374,838 patients met our inclusion criteria, with 19,153 (3%) of the episodes experiencing at least one outcome. The analysis data set had 48,723,248 hourly observations split into the Training 1 (24,492,639), Training 2 (12,042,741) and Validation (12,186,823) subgroups. The Validation partition had a higher representation of recent data than the Training partitions to assess the final model’s performance on the most recent data possible (Appendix Table A.1). We used the Training 1 data set for variable selection. However, we used the Training “light” data set for model fitting because our computing environment did not allow for fast processing and fitting various models on a data set with close to 25 million observations. This smaller data set had 8,311,355 hourly observations with 106,997 episodes and 10,239 outcomes.

The patients’ characteristics were similar to those previously described [16,35]. Table 2 shows that, at hospital entry time (HET), patients who experienced an outcome were older (median age 70 vs. 66 years), more likely to be male (52.5% vs. 45.4%), more chronically ill (median COPS2 of 44 vs. 21) and more acutely ill (median LAPS2 score of 87 vs. 53) than those who did not experience an outcome. They were also more than five times more likely to die and had a median hospital stay that was 6 days longer. The patient characteristics at HET were similar across the Training and Validation data sets (Appendix Table A.2).

Table 2.

Cohort characteristics.<sup>a</sup>

| Characteristic | Episodes without an event<sup>b</sup> | Episodes with at least one event | All episodes |
|---|---|---|---|
| Number of episodes | 630,265 | 19,153 | 649,418 |
| Number of patients | | | |
| Age<sup>c</sup> | 66.0, 64.6 ± 17.7 | 70.0, 67.4 ± 15.7 | 67.0, 64.7 ± 17.7 |
| >65 years (n, %) | 342,019 (54.3) | 11,948 (62.4) | 353,967 (54.5) |
| Male (n, %) | 286,203 (45.4) | 10,063 (52.5) | 296,266 (45.6) |
| COPS2<sup>d</sup> | 21.0, 39.1 ± 41.1 | 44.0, 57.1 ± 49.2 | 21.0, 39.6 ± 41.5 |
| LAPS2<sup>e</sup> | 53.0, 59.1 ± 34.8 | 87.0, 88.5 ± 38.8 | 54.0, 59.9 ± 35.3 |
| 30-day<sup>f</sup> mortality (n, %) | 27,752 (4.4) | 4,699 (24.5) | 32,451 (5.0) |
| Total hospital length of stay | 2.0, 3.6 ± 5.4 | 8.0, 11.8 ± 16.3 | 2.0, 3.9 ± 6.2 |

<sup>a</sup> Unit of analysis is an episode, which may span several hospitalization stays in those instances where a patient experienced inter-hospital transport (see text and citations 19 and 20 for details). All data are based on a time zero set to the time when the patient was first placed in a hospital unit that was not the emergency department (which we refer to as hospital entry time, or HET; see citation 20 for details).

<sup>b</sup> Refers to the study adverse outcome (see text and citation 16 for details).

<sup>c</sup> For age, COPS2, LAPS2, and length of stay, numbers reported are median, mean, and standard deviation.

<sup>d</sup> COPS2, COmorbidity Point Score, version 2. This is a longitudinal score based on 12 months of patient data; the higher the score, the greater the mortality risk due to comorbid illness. See text and citation 19 for details.

<sup>e</sup> LAPS2, Laboratory-based Acute Physiology Score, version 2. This is a score measuring acute physiologic instability based on the 72 h preceding HET. See text and citation 19 for details.

<sup>f</sup> Mortality is measured 30 days from hospital entry time (HET).

The outcomes tended to occur early, with 25% occurring within 12 h of hospital admission and 50% of them occurring by 36 h. About 2% of the events occurred within an hour of admission to the hospital or of transfer back to the ward or TCU following discharge from the ICU. These were not included in the score evaluation because they cannot be predicted in our current operational setting. Fig. 1 shows the mean AAM score in the 24 h prior to the event for event episodes (episodes experiencing an event) compared to a random 24-h time period for control episodes (episodes that did not result in an event) in the full data set. The graph shows that the control episodes have generally lower scores than event episodes and that the AAM score starts increasing about 8 h prior to the event with the average score being close to 12 at the time of the event. Similar figures for eCART and NEWS scores are shown in the appendix (Appendix Figs. A.3 and A.4).

Fig. 1.

Mean value of the AAM score in the 24 h prior to event. The figure compares score trajectories among episodes where deterioration (outcome defined in text) did and did not occur. Episodes where the outcome occurred are shown at top (upper points and grey fitted line). For episodes without an outcome (lower points and dashed fitted line), we selected a random 24 h period. Episodes without the outcome have generally lower scores than episodes with the outcome. The figure shows that the AAM score starts increasing about 8 h prior to the event with the average score being close to 12 at the time of the event.

Table 1 shows the final set of predictors and their associated transformations used for all statistical modeling and machine learning approaches we tested. The appendix (Table A.3) contains the beta coefficients in the model used currently in practice. We fitted various statistical models including discrete-time logistic models: (1) stratified by the values of key predictors (respiratory and heart rates and LAPS2) at HET or at any point in the episode; (2) stratified by changes in LAPS2 scores in the last 6–24 h; or (3) stratified by recursive partitioning based on the values of key predictors at HET (Appendix Table A.4). All models we tested showed that LAPS2, respiratory rate, heart rate and blood pressure were the most important predictors.

Fig. 2 and Table A.5 (Appendix) show the distribution of alerts triggered by hours between alerts and events for the three scores based on the Validation data set and the training cutoffs (50 for eCART, 8 for NEWS and 7.5 for AAM). The three curves are very similar, with 52% of AAM alerts occurring within 12 h, 65% within 24 h and 35% more than 24 h before the event. Likewise, 54% and 50% of eCART and NEWS alerts occurred within 12 h of the event and 67% and 65% within 24 h of the event.

Fig. 2.

Percent of alerts triggered by hours between alert and event for AAM, eCART and NEWS. This figure shows the distribution of alerts triggered by hours between the alert and the event for AAM, eCART and NEWS based on the Validation data set for episodes experiencing an event. An alert was triggered if the score was greater than the training cutoff. Each respective score’s training cutoff was determined so that there would be no more than 1 alert per day in a hospital with an average daily census of ~35 patients. The cutoff used for eCART was 50, for NEWS was 8 and for AAM was 7.5.

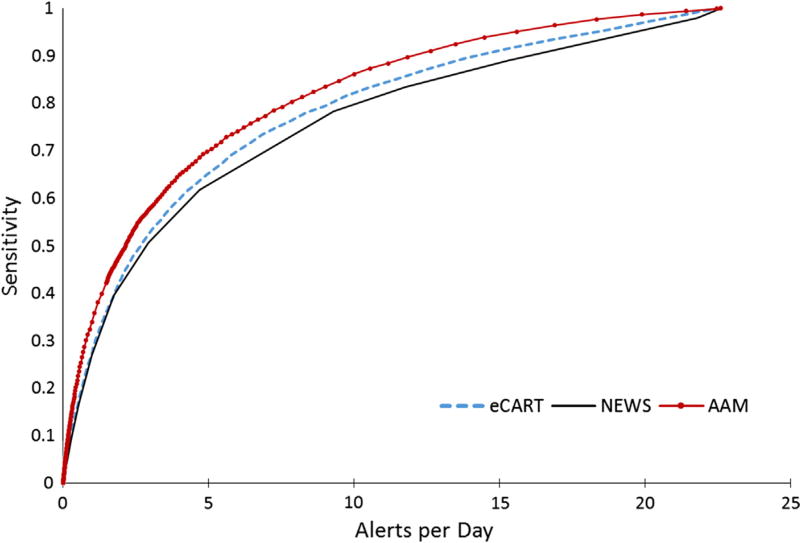
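The alert-burden rule used to set each training cutoff (no more than 1 alert per day at a census of ~35) can be sketched as a simple search over candidate cutoffs. This is a minimal illustration under stated assumptions, not the authors' procedure: it assumes each episode contributes at most one alert (based on its maximum score), and the function name, inputs and toy numbers are hypothetical.

```python
def choose_cutoff(max_scores, patient_days, candidates, census=35, max_alerts_per_day=1.0):
    """Return (cutoff, alerts_per_day) for the lowest cutoff meeting the alert budget.

    max_scores   : highest score reached in each episode (assumption: one alert per episode)
    patient_days : total patient-days represented by those episodes
    candidates   : cutoffs to try
    census       : average daily census of the reference unit
    """
    for cutoff in sorted(candidates):
        # An alert fires when the score is greater than the cutoff.
        n_alerts = sum(1 for score in max_scores if score > cutoff)
        # Scale alerts per patient-day to a unit holding `census` patients.
        rate = n_alerts * census / patient_days
        if rate <= max_alerts_per_day:
            return cutoff, rate
    return None


if __name__ == "__main__":
    toy_scores = [2, 9, 6, 8, 3, 10, 1, 7]  # hypothetical per-episode maxima
    print(choose_cutoff(toy_scores, patient_days=70, candidates=[5, 6, 7, 8, 9]))
```

Choosing the lowest qualifying cutoff keeps sensitivity as high as the alert budget allows.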

Figs. 3–6 compare the sensitivity and specificity of AAM, NEWS, and eCART across the full range of cutoffs, which yield a range of W:D ratios and alerts per day. AAM showed better sensitivity than NEWS and eCART for the same PPV, W:D ratio or number of alerts per day. Table 3 also shows the sensitivity, PPV and W:D ratios for predicting an event within 12 and 24 h of the alert or over the entire hospitalization episode, based on the training cutoff. The overall sensitivity of AAM was 49%, compared with 44% for eCART and 40% for NEWS at the training cutoffs. The W:D ratio and PPV were more favorable for AAM, with 16.2% of the alerts ever experiencing an outcome compared to 14.4% for eCART and 15.2% for NEWS. The NPV and specificity measures were similar for all 3 scores. An NPV of ~98% for all 3 scores indicates that about 2% of non-alerted patients eventually experienced an outcome. Lastly, the AAM episode-based c-statistic was 0.82 (95% CI: 0.81–0.83), which was higher than the c-statistics of 0.79 (95% CI: 0.77–0.80) and 0.76 (95% CI: 0.75–0.78) for eCART and NEWS, respectively. The AAM c-statistic computed on the hourly Validation data, 0.82, was also higher than those of eCART and NEWS (0.74 for both).

Fig. 3.

eCART, NEWS and AAM receiver operating characteristic curves. This figure shows the Receiver Operating Characteristic (ROC) curve for all three scores using the episode-based Validation data. The ROC curve is a standard technique for summarizing a classification model’s discrimination performance. The graph shows each score’s sensitivity (true-positive rate) vs. 1 minus specificity (false-positive rate) across all cutoffs. The c-statistic, or area under the ROC curve (AUC), measures the probability that, given two patients (one who experienced the event and one who did not), the model will assign a higher score to the former [32]. Given that there is no consensus over the use of an hourly data structure (with repeat alerts) vs. an episode-based structure for evaluating model performance, we calculated the c-statistic using both the hourly and the episode-based structured data. Both the curves and the c-statistics show that AAM has better discrimination ability than eCART, which performs better than NEWS, across all cutoffs.
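The pairwise interpretation of the c-statistic given in this caption can be computed directly. The sketch below is an illustration only: it uses made-up scores, counts ties as half-concordant (a common convention, assumed here), and is a brute-force version that would be too slow for a data set of the size analyzed in this study.

```python
def c_statistic(scores_event, scores_no_event):
    """Probability that a randomly chosen event case outscores a non-event case.

    Ties count as one half (assumed convention).
    """
    concordant = 0.0
    for e in scores_event:
        for n in scores_no_event:
            if e > n:
                concordant += 1.0
            elif e == n:
                concordant += 0.5
    return concordant / (len(scores_event) * len(scores_no_event))


if __name__ == "__main__":
    # Hypothetical scores: two episodes with the event, two without.
    print(c_statistic([3, 5], [1, 4]))
```

A value of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation of event and non-event cases.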

Fig. 6.

eCART, NEWS and AAM specificity vs. alerts per day. The figure shows the specificity of each score against the number of alerts per day in a hospital with an average daily census of 70 patients as the cutoffs move across the scores’ range. All three scores have similar specificity.

Table 3.

Comparison of eCART, NEWS and AAM<sup>a</sup> scores using validation data.

| Performance metric | eCART | NEWS | AAM |
|---|---|---|---|
| Training cutoff<sup>b</sup> | 50 | 8 | 7.5 |
| Number of episodes<sup>c</sup> | 168,811 | 168,811 | 168,811 |
| Number of alerts | 15,274 | 13,129 | 15,288 |
| % alerts with events (PPV<sup>d</sup>) within: | | | |
| 12 h | 7.8% | 7.6% | 8.4% |
| 24 h | 9.7% | 9.8% | 10.5% |
| Entire hospitalization episode | 14.4% | 15.2% | 16.2% |
| W:D<sup>e</sup> at: | | | |
| 12 h | 12.7 | 13.1 | 11.9 |
| 24 h | 10.3 | 10.2 | 9.5 |
| Entire hospitalization episode | 6.9 | 6.6 | 6.2 |
| Number not alerted | 153,537 | 155,682 | 153,523 |
| % of no alerts with no event (NPV<sup>d</sup>) | 98.2% | 98.0% | 98.3% |
| Number of events | 5,047 | 5,047 | 5,047 |
| Sensitivity in prior: | | | |
| 12 h | 23.7% | 19.9% | 25.4% |
| 24 h | 29.4% | 25.6% | 31.9% |
| Entire hospitalization episode | 43.7% | 39.5% | 48.9% |
| Number with no event | 163,764 | 163,764 | 163,764 |
| Specificity | 92.0% | 93.2% | 92.2% |

<sup>a</sup> See text for details on the 3 scores (NEWS, National Early Warning Score; eCART, electronic Cardiac Arrest Triage; AAM, Advanced Alert Monitor). The table indicates specific metrics (left column) when the three scores were applied to hourly retrospective data.

<sup>b</sup> Each respective score’s training cutoff was determined so that there would be no more than 1 alert per day in a hospital with an average daily census of ~35 patients. An alert was triggered if the score was greater than the training cutoff.

<sup>c</sup> In those instances where the patient was transferred to intensive care more than once, we split the original episode into separate episodes (each time a patient returned to the ward, a new episode began) to fully evaluate episodes that had multiple outcomes.

<sup>d</sup> PPV, positive predictive value; NPV, negative predictive value.

<sup>e</sup> W:D, work-up to detection ratio (also known as number needed to evaluate).
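The metrics in Table 3 are linked through the episode counts, and W:D is simply the reciprocal of PPV. The sketch below works through the AAM column as an illustration. The number of alerted episodes with an event (2,468) is not reported in the table; it is an assumption back-calculated here from the rounded 48.9% sensitivity, so the results agree with the published figures only to within about 0.1 percentage point.

```python
def alert_metrics(n_episodes, n_alerted, n_events, n_true_pos):
    """Derive episode-level performance metrics from four counts."""
    false_neg = n_events - n_true_pos             # events that were never alerted
    true_neg = n_episodes - n_alerted - false_neg  # no alert and no event
    ppv = n_true_pos / n_alerted
    return {
        "ppv": ppv,
        "wd": 1.0 / ppv,  # work-up to detection ratio = alerts per true positive
        "sensitivity": n_true_pos / n_events,
        "specificity": true_neg / (n_episodes - n_events),
        "npv": true_neg / (n_episodes - n_alerted),
    }


if __name__ == "__main__":
    # AAM column of Table 3; n_true_pos is back-calculated (assumption).
    aam = alert_metrics(n_episodes=168_811, n_alerted=15_288,
                        n_events=5_047, n_true_pos=2_468)
    for name, value in aam.items():
        print(f"{name}: {value:.3f}")
```

Reading W:D this way makes the clinical workload explicit: at the training cutoff, roughly six alerted episodes are evaluated for each one that goes on to experience the outcome.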

We evaluated the variation in AAM performance across medical centers and the effect of data quality errors on the AAM score using the Validation data set and the Training cutoff. The performance varied across medical centers, with the PPV ranging from 0.11 to 0.23; the NPV from 0.97 to 0.99; the sensitivity from 0.38 to 0.56; the specificity from 0.88 to 0.95; and the hourly-based c-statistic from 0.76 to 0.85 (see Table A.6). We tested the effect of data quality and data capture errors that are likely to occur during implementation by randomly altering the values of the most predictive variables (Bicarbonate, Latest heart rate, Latest respiratory rate, Latest temperature, Latest diastolic blood pressure, Latest systolic blood pressure, Latest oxygen saturation, LAPS2 score, DNR). We substituted the normalized values of the continuous variables with a random draw between −1 and 1 (i.e. within 1 standard deviation) for 10–20% of the observations. We reversed the value of the binary variable (DNR) for 10–20% of randomly selected observations. We then calculated the PPV, NPV, sensitivity, specificity and c-statistic on these modified data. We repeated this process 100 times. A change in 10% of the observations resulted in a 0.3% increase in sensitivity ±1%; a 1% decrease in specificity ±0.5%; a 2% decrease in PPV ±0.2% and a 0.01% increase in NPV ±0.2%. A change in 20% of the observations resulted in a 1.6% increase in sensitivity ±0.8%; a 1.4% decrease in specificity ±0.14%; a 2.4% decrease in PPV ±0.26% and a 0.1% increase in NPV ±0.03%. The effect on the c-statistic was a mean decrease of 0.0137 (95% CI: 0.013, 0.0143) with a random change in these variables in 20% of the observations.
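The data-corruption step of this robustness check can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: the variable names are hypothetical, only two continuous predictors are shown, and the sketch assumes the same randomly selected observations are altered for every variable, which the text does not specify.

```python
import random

CONTINUOUS = ["heart_rate", "resp_rate"]  # hypothetical normalized predictors
BINARY = "dnr"                            # hypothetical 0/1 care-directive flag


def perturb(observations, fraction, rng):
    """Return (corrupted copy, indices altered) for a random fraction of rows."""
    n_alter = round(fraction * len(observations))
    chosen = set(rng.sample(range(len(observations)), n_alter))
    corrupted = []
    for i, obs in enumerate(observations):
        row = dict(obs)  # copy so the original data stay intact
        if i in chosen:
            for name in CONTINUOUS:
                # Replace the normalized value with a draw within 1 SD of the mean.
                row[name] = rng.uniform(-1.0, 1.0)
            row[BINARY] = 1 - row[BINARY]  # reverse the binary flag
        corrupted.append(row)
    return corrupted, chosen


if __name__ == "__main__":
    rng = random.Random(0)
    data = [{"heart_rate": 2.5, "resp_rate": 1.8, "dnr": 0} for _ in range(1000)]
    for _ in range(100):  # 100 repetitions, as described in the text
        noisy, _ = perturb(data, fraction=0.10, rng=rng)
        # Re-score `noisy` and recompute PPV, NPV, sensitivity, etc. here.
```

Repeating the corruption many times and summarizing the spread of each metric gives the mean changes and ± ranges of the kind reported above.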

5. Discussion

Various ‘early warning scores’ (EWS), or ‘track-and-trigger systems’, are in use today in the UK [6,36] and the US [12,13]. These scores or systems aim to identify and care for patients who present with or develop acute illness in the hospital. Using a very large patient database, we have developed, validated and implemented an automated EWS that can be used to alert hospitalists outside the ICU when a patient is likely to deteriorate in the next 12 h. The score is among the first open-source EWSs based on routinely collected EMR data elements (including vital signs and laboratory data) to be successfully implemented in practice. The model has built-in redundancies that help improve the accuracy of the score when similar predictors are missing. For example, the LAPS2 includes vital signs as well as laboratory test results and functions as a very complex interaction term that is generated even in the presence of missing data (in fact, it employs a specific imputation procedure for results in patients at higher risk [19]). Moreover, the implementation of the AAM score in real time shows a high correlation with the retrospective score, with a manageable number of alerts per day and reasonable performance.

The AAM score can be reported every hour and has a prediction window of 12 h, giving enough time for the clinical staff to respond. The ViEWS and other published EWSs [8,36] are reported only upon data entry and predict death within a 24 h window. Compared to eCART and NEWS, AAM showed better performance on all the measures for the same amount of work for clinicians (in terms of average alerts per day).

Because our model includes many more data elements and was developed on a much broader patient base, it is not surprising that it has good statistical performance. This suggests that it is possible to improve prediction with more complex models. Emerging machine learning technologies and increasingly versatile EMRs make development, testing, implementation, and maintenance of increasingly complex models feasible. Our goal with AAM was to maximize signal extraction, an approach consistent with expected improvements in data processing capability.

The AAM score has some opportunities for improvement. The first limitation was that the computing environment where we built the model was slow and had a limited number of machine learning algorithms. In addition, the model deployment environment limited us to a model that could be translated by a Java programmer into a single equation. Since the AAM model was developed, both the data management technology for storing and manipulating very large data sets in distributed data platforms and the availability of sophisticated machine learning algorithms designed to run in a parallel processing environment have increased rapidly. This new technology allows model development to be based on the full data set rather than a case/control sample of the data. Based on our recent experience, it reduces the time needed for variable identification, transformation and selection, model development, and validation. Many of these applications have an added capability of providing ready-made Java code that can more easily be transferred into operational environments, expanding the possible model choices beyond those available when AAM modeling started [37,38]. Currently, we are using this technology to design new predictors, test different data structures, and explore alternative modeling algorithms to update the AAM model. In particular, we will be testing the use of rates of change as additional predictors in the model. When we started the project we were essentially limited to logistic or Cox regression, but now we are testing the use of pattern recognition, fuzzy logic, random forest, gradient boosting trees, and a variety of ensemble models.

Our analysis was performed at a single health care delivery system, which may limit the generalizability of our results. In addition, we cannot rule out that the better AAM performance we observed arises because AAM was evaluated using internal data similar to the data on which it was developed, whereas eCART and NEWS were evaluated with data that are “external” to those used to develop those models. However, our database contains data from 21 hospitals that vary in size, local practices, and culture. Despite these limitations, it remains one of the largest repositories of deterioration events in the world.

Some of the AAM predictors have been controversial in the literature. The AAM score includes the variables age and care directives – predictors that have been purposefully excluded from other EWSs [6]. However, other models have also found age predictive [13,39] and care directive orders might not be consistently recorded in other settings. At KPNC hospitals, when a physician enters admission orders, end-of-life care directives are mandatory (“hard stop” in the EMR) or the physician’s admission orders will not be processed. In addition, due to the model transparency, these two variables can be excluded from the implemented version of the score with little impact to its predictive ability. Moreover, the AAM is not being employed in isolation: its use is integrated with a clinical response arm as well as protocols that include explicit mechanisms for eliciting and respecting patient preferences with respect to escalation of care.

As described in our earlier work [16], using the combined outcome of transfer to ICU and death to evaluate the system’s effect on patient outcomes carries some limitations. Transfers to ICU criteria vary across facilities as they depend on the availability of beds and local practices. In addition, this outcome poses a problem if used as a measure of success when evaluating the use of this score in practice because, in many cases, the goal of alerting scores should be to get patients to the ICU sooner, which would not affect the outcome of “transfer to the ICU” (in fact, an optimal early warning system might increase the overall transfer rate to the ICU) [40]. However, preliminary evaluation results, a description of which exceeds the scope of this report, show improvement in outcomes of care such as inpatient and 30-day mortality, LOS, and cost for pilot facilities, suggesting that the combined ICU transfer or mortality as an outcome for model development is a good proxy for undesirable outcomes and helps to identify the right patients.

Our modeling data structure had one observation per patient per hour until a length of stay of 15 days was reached. This structure allowed us to obtain hourly probability estimates, but it has some limitations. The resultant dataset can repeat the same vital sign or laboratory values for one to three days, or until new measurements are taken. A given value for a patient-hour may, therefore, refer to a measurement taken 72 h earlier. There is no indication that a new measurement has been taken unless it differs from the previous measurement, thereby changing the displayed ‘most recent’ value. Information about any but the final measurement taken in a given hour is lost, so frequency of measurement cannot be analyzed. Similarly, the possible significance of gaps between measurements is not captured in this structure and cannot be utilized in modeling.
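The carry-forward behavior described here can be illustrated with a short sketch. It is an assumption-laden illustration rather than the study's data pipeline: the function name, the 72 h carry limit as a parameter, and the toy heart-rate values are hypothetical. The third element of each row (hours since the measurement) is exactly the information that the hourly structure does not retain.

```python
def hourly_locf(measurements, n_hours, max_carry_hours=72):
    """Build one row per hour, carrying the most recent value forward.

    measurements : (hour, value) pairs in chronological order
    Returns a list of (hour, value, hours_since_measurement) tuples.
    """
    by_hour = {}
    for hour, value in measurements:
        # Only the final measurement within an hour survives.
        by_hour[hour] = value
    rows = []
    last_hour = None
    for h in range(n_hours):
        if h in by_hour:
            last_hour = h
        if last_hour is not None and h - last_hour <= max_carry_hours:
            rows.append((h, by_hour[last_hour], h - last_hour))
        else:
            rows.append((h, None, None))  # nothing recent enough to carry forward
    return rows


if __name__ == "__main__":
    # Hypothetical heart-rate readings: two in hour 0, one in hour 3.
    for row in hourly_locf([(0, 80), (0, 85), (3, 90)], n_hours=5):
        print(row)
```

Keeping the age of each carried-forward value alongside the value itself is one way a future data structure could expose measurement frequency and gaps to the model.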

6. Concluding remarks

Automated physiology-based predictive models embedded in EMRs are not mature technologies. No practitioner consensus or legal mandate regarding their role yet exists. However, it is clear that the role of predictive analytics in medicine will expand [41], so it is important to view our results from the standpoint of future research needs. We conclude by highlighting three of these. The first is that of metrics. Since physiologic scores are based on repeated measurements, and since it takes clinicians some time to mount a response to patient instability, patient scores may be above threshold for some time (i.e., patients trigger repeated alerts). The score’s performance metrics may vary depending on how one handles repeated alerts. Thus, there is a need for consensus on how one defines score performance metrics for repeat alerts. This would require additional analyses across scoring systems (e.g., how long do patients, on average, remain above a given threshold?).

A second issue that merits future research attention is how to detect deterioration among patients who are healthier on admission. As is the case with all existing EWSs, the three models we described are inherently biased towards identifying patients with greater physiologic derangement. They are less useful in detection of deterioration of initially healthy patients (e.g., elective surgical patients) in whom data collection is more limited. Thus, future research in this area needs to focus on such patients. These patients are likely to be younger, so even small increases in the ability to detect deterioration would result in substantial gains in terms of years of life saved.

Finally, as scores such as AAM and eCART are used in actual practice, data from patients who reach alert thresholds will be “contaminated” by clinician treatment in response to alerts. This will pose challenges to the development, updating and evaluation of predictive models, and future research must begin considering these.

Fig. 4.

eCART, NEWS and AAM precision-recall curves. Precision-Recall (PR) curves of all three scores described in this paper. The PR curves show the scores’ positive predictive value (PPV or Precision) against the sensitivity (recall). PR curves are commonly used to present results of binary classifiers of outcomes when the outcome variable is extremely rare. The PPV or Precision is the inverse of the work-up to detection ratio (W:D). The figure shows that the AAM has higher sensitivity than eCART and NEWS for any given PPV or W:D ratio.

Fig. 5.

eCART, NEWS and AAM sensitivity vs. alerts per day. The figure shows the sensitivity of each score against the number of alerts per day in a hospital with an average daily census of 70 patients as the cutoffs move across the scores’ range. The AAM score has higher sensitivity than eCART and NEWS across all alerts per day.

Acknowledgments

This work was funded by the Gordon and Betty Moore Foundation (grant no. 2663.01, “Early detection, prevention, and mitigation of impending physiologic deterioration in hospitalized patients outside intensive care: Phase 3, pilot”), The Permanente Medical Group, Inc., and Kaiser Foundation Hospitals, Inc. Dr. Vincent Liu was supported by the National Institutes of Health’s K23 GM112018. We thank Dr. Tracy Lieu for reviewing the manuscript and Ms. Rachel Lesser for editing and formatting the manuscript.

Appendix A. Supplementary material

Supplementary data associated with this article can be found, in the online version, at http://dx.doi.org/10.1016/j.jbi.2016.09.013.

Footnotes

Conflict of interest

The authors declared that there is no conflict of interest.

References

- 1. Schein RM, Hazday N, Pena M, Ruben BH, Sprung CL. Clinical antecedents to in-hospital cardiopulmonary arrest. Chest. 1990;98:1388–1392. doi: 10.1378/chest.98.6.1388.

- 2. Hournihan F, Bishop G, Hillman KM, Dauffurn K, Lee A. The medical emergency team: a new strategy to identify and intervene in high-risk surgical patients. Clin. Intens. Care. 1995;6:269–272.

- 3. Goldhill DR, Worthington LM, Mulcahy AJ, Tarling MM. Quality of care before admission to intensive care. Deaths on the wards might be prevented. BMJ. 1999;318:195.

- 4. Goldhill DR, Worthington L, Mulcahy A, Tarling M, Sumner A. The patient-at-risk team: identifying and managing seriously ill ward patients. Anaesthesia. 1999;54:853–860. doi: 10.1046/j.1365-2044.1999.00996.x.

- 5. Subbe CP, Kruger M, Rutherford P, Gemmel L. Validation of a modified early warning score in medical admissions. Q. J. Med. 2001;94:521–526. doi: 10.1093/qjmed/94.10.521.

- 6. Physicians LRCo, editor. NHS, National Early Warning Score (NEWS) Standardising the assessment of acute-illness severity in the NHS, report of a working party. Royal College of Physicians; London: 2012.

- 7. Smith GB, Prytherch DR, Meredith P, Schmidt PE, Featherstone PI. The ability of the national early warning score (NEWS) to discriminate patients at risk of early cardiac arrest, unanticipated intensive care unit admission and death. Resuscitation. 2013;84:465–470. doi: 10.1016/j.resuscitation.2012.12.016.

- 8. Prytherch DR, Smith GB, Schmidt PE, Featherstone PI. ViEWS-towards a national early warning score for detecting adult inpatient deterioration. Resuscitation. 2010;81:932–937. doi: 10.1016/j.resuscitation.2010.04.014.

- 9. Fraser DD, Singh RN, Frewen T. The PEWS score: potential calling criteria for critical care response teams in children’s hospitals. J. Crit. Care. 2006;21:278–279. doi: 10.1016/j.jcrc.2006.06.006.

- 10. Parshuram CS, Hutchison J, Middaugh K. Development and initial validation of the bedside paediatric early warning system score. Crit. Care. 2009;13:R135. doi: 10.1186/cc7998.

- 11. McLellan MC, Connor JA. The cardiac children’s hospital early warning score (C-CHEWS). J. Pediatr. Nurs. 2013;28:171–178. doi: 10.1016/j.pedn.2012.07.009.

- 12. Rothman MJ, Rothman SI, Beals JT. Development and validation of a continuous measure of patient condition using the electronic medical record. J. Biomed. Inform. 2013;46:837–848. doi: 10.1016/j.jbi.2013.06.011.

- 13. Churpek M, Yuen T, Winslow C, Robicsek A, Meltzer D, Gibbons R, Edelson D. Multicenter development and validation of a risk stratification tool for ward patients. Am. J. Respir. Crit. Care Med. 2014;190:649–655. doi: 10.1164/rccm.201406-1022OC.

- 14. Kollef MH, Chen Y, Heard K, LaRossa GN, Lu C, Martin NR, Martin N, Micek ST, Bailey T. A randomized trial of real-time automated clinical deterioration alerts sent to a rapid response team. J. Hosp. Med. 2014;9:424–429. doi: 10.1002/jhm.2193.

- 15. Evans RS, Kuttler KG, Simpson KJ, Howe S, Crossno PF, Johnson KV, Schreiner MN, Lloyd JF, Tettelbach WH, Keddington RK, Tanner A, Wilde C, Clemmer TP. Automated detection of physiologic deterioration in hospitalized patients. J. Am. Med. Inform. Assoc.: JAMIA. 2015;22:350–360. doi: 10.1136/amiajnl-2014-002816.

- 16. Escobar GJ, LaGuardia J, Turk BJ, Ragins A, Kipnis P, Draper D. Early detection of impending physiologic deterioration among patients who are not in intensive care: development of predictive models using data from an automated electronic medical record. J. Hosp. Med. 2012;7:388–395. doi: 10.1002/jhm.1929.

- 17. van Walraven C, Escobar GJ, Greene JD, Forster AJ. The Kaiser Permanente inpatient risk adjustment methodology was valid in an external patient population. J. Clin. Epidemiol. 2010;63:798–803. doi: 10.1016/j.jclinepi.2009.08.020.

- 18. Escobar G, Greene J, Scheirer P, Gardner M, Draper D, Kipnis P. Risk adjusting hospital inpatient mortality using automated inpatient, outpatient, and laboratory databases. Med. Care. 2008;46:232–239. doi: 10.1097/MLR.0b013e3181589bb6.

- 19. Escobar GJ, Gardner M, Greene JG, Draper D, Kipnis P. Risk-adjusting hospital mortality using a comprehensive electronic record in an integrated healthcare delivery system. Med. Care. 2013;51:446–453. doi: 10.1097/MLR.0b013e3182881c8e.

- 20. Teradata Integrated Data Warehouses. Available at: <http://www.teradata.com/products-and-services/Integrated-Data-Warehouse-Overview/?LangType=1033&LangSelect=true>.

- 21. Japkowicz N, Shaju S. The class imbalance problem: a systematic study. Intell. Data Anal. 2002;6(5):429–449.

- 22. Galar M, Fernandez A, Barrenechea E, Bustince H, Herrera F. A review on ensembles for the class imbalance problem: bagging-, boosting-, and hybrid-based approaches. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2012;42:463–484.

- 23. Liu XY, Wu J, Zhou ZH. Exploratory undersampling for class-imbalance learning. IEEE Trans. Syst. Man Cybernet. Part B, Cybernet.: Publ. IEEE Syst. Man Cybernet. Soc. 2009;39:539–550. doi: 10.1109/TSMCB.2008.2007853.

- 24. Bishop CM. Pattern Recognition and Machine Learning. Springer; Berlin: 2006.

- 25. Hornik K, Meyer D. Support vector machines in R. J. Stat. Softw. 2006;15:1–28.

- 26. Allison PD. Discrete-time methods for the analysis of event histories. Sociol. Methodol. 1982;13:61–98.

- 27. Allison PD, editor. Logistic Regression Using SAS: Theory and Application. Second ed. 2012.

- 28. Landwehr N, Hall M, Frank E. Logistic model trees. Mach. Learn. 2005;59:161–205.

- 29. Rokach L. Ensemble-based classifiers. Artif. Intell. Rev. 2010;33:1–39.

- 30. Nagelkerke N. A note on a general definition of the coefficient of determination. Biometrika. 1991;78:691–692.

- 31. Harrell FE, Jr, Lee KL, Califf RM, Pryor DB, Rosati RA. Regression modelling strategies for improved prognostic prediction. Stat. Med. 1984;3:143–152. doi: 10.1002/sim.4780030207.

- 32. Steyerberg EW. Clinical Prediction Models: A Practical Approach to Development, Validation, and Updating. Springer; New York, NY: 2009.

- 33. Saito T, Rehmsmeier M. The precision-recall plot is more informative than the ROC plot when evaluating binary classifiers on imbalanced datasets. PLoS ONE. 2015;10:e0118432. doi: 10.1371/journal.pone.0118432.

- 34. Romero-Brufau S, Huddleston JM, Escobar GJ, Liebow M. Why the C-statistic is not informative to evaluate early warning scores and what metrics to use. Crit. Care. 2015;19:285. doi: 10.1186/s13054-015-0999-1.

- 35. Escobar GJ, Greene JD, Gardner MN, Marelich GP, Quick B, Kipnis P. Intra-hospital transfers to a higher level of care: contribution to total hospital and intensive care unit (ICU) mortality and length of stay (LOS). J. Hosp. Med. 2011;6:74–80. doi: 10.1002/jhm.817.

- 36. Smith GB, Prytherch DR, Schmidt PE, Featherstone PI. Review and performance evaluation of aggregate weighted ‘track and trigger’ systems. Resuscitation. 2008;77:170–179. doi: 10.1016/j.resuscitation.2007.12.004.

- 37. Schutt R, O’Neil C. Doing Data Science: Straight Talk from the Frontline. O’Reilly Media, Inc.; 2013.

- 38. Lantz B. Machine Learning With R. Packt Publishing Ltd.; 2013.

- 39. Duckitt RW, Buxton-Thomas R, Walker J, Cheek E, Bewick V, Venn R, Forni LG. Worthing physiological scoring system: derivation and validation of a physiological early-warning system for medical admissions. An observational, population-based single-centre study. Br. J. Anaesth. 2007;98:769–774. doi: 10.1093/bja/aem097.

- 40. Pedersen NE, Oestergaard D, Lippert A. End points for validating early warning scores in the context of rapid response systems: a Delphi consensus study. Acta Anaesthesiol. Scand. 2016;60(5):616–622. doi: 10.1111/aas.12668.

- 41. Bates DW, Saria S, Ohno-Machado L, Shah A, Escobar G. Big data in health care: using analytics to identify and manage high-risk and high-cost patients. Health Aff. 2014;33:1123–1131. doi: 10.1377/hlthaff.2014.0041.