Abstract

Developmental changes in children’s sensitivity to the role of acoustic variation in the speech stream in conveying speaker affect (vocal paralanguage) were examined. Four-, 7- and 10-year-olds heard utterances in three formats: low-pass filtered, reiterant, and normal speech. The availability of lexical and paralinguistic information varied across these three formats in a way that required children to base their judgments of speaker affect on different configurations of cues in each format.

Across ages, the best performance was obtained when a rich array of acoustic cues was present and when there was no competing lexical information. Four-year-olds performed at chance when judgments had to be based solely on speech prosody in the filtered format and they were unable to selectively attend to paralanguage when discrepant lexical cues were present in normal speech. Seven-year-olds were significantly more sensitive to the paralinguistic role of speech prosody in filtered speech than were 4-year-olds and there was a trend toward greater attention to paralanguage when lexical and paralinguistic cues were inconsistent in normal speech. An integration of the ability to utilize prosodic cues to speaker affect with attention to paralanguage in cases of lexical/paralinguistic discrepancy was observed for 10-year-olds. The results are discussed in terms of the development of a perceptual bias emerging out of selective attention to language.

The human voice is a rich instrument from which the sounds of language, emotion and music emanate. Because of its role in producing myriad phonetic contrasts that are the basis for spoken language, the voice is often thought of primarily as a vehicle for speech. However, underlying the speech stream are complex acoustic (or prosodic) variations that are not, by definition, linguistic. Instead, these cues can provide information about speaker characteristics such as gender, affect, intent and attitude. They also provide potential cues for the segmentation of speech into meaningful linguistic units.

A conceptual distinction can be made between two functions of prosodic variation in speech: the linguistic function and the paralinguistic function (Garnica, 1978; Street, 1990). Prosody facilitates speech intelligibility by marking lexical and grammatical information (linguistic function). On the other hand, it also conveys affective information (paralinguistic function). With the possible exception of harmonic structure, both linguistic prosody and paralanguage exploit a common set of acoustic variables (i.e. fundamental frequency, intensity and duration) but toward different ends. Harmonic structure (energy concentrations reflecting the resonance characteristics of the vocal tract) conveys information critical to the identification of vowels. It has been identified as an important paralinguistic cue but does not play a role in linguistic prosody (Scherer & Oshinsky, 1977; Scherer, Banse, Wallbott & Goldbeck, 1991).

Linguistic prosody

In linguistic prosody, changes in fundamental frequency (F0, perceived as pitch), intensity and duration help language learners to parse the speech stream (Peters, 1985). They facilitate the grouping of syllables into meaningful units (Morgan, 1994), mark clause boundaries (Hirsh-Pasek et al., 1987; Jusczyk et al., 1992) and mark new lexical items (Fernald & Mazzie, 1991). The notion that prosody is crucial to the segmentation and identification of individual lexical items in infancy (Cutler & Mehler, 1993; Jusczyk, 1993) and in adulthood (Jusczyk, 1993; Cutler, 1996) has recently been incorporated into many theories of speech perception.

Paralanguage

In contrast, paralanguage provides more global information about the speaker (Garnica, 1978; Street, 1990). For example, changes in F0, intensity, duration and harmonics account for the majority of the variance in adults’ judgments of speaker affect (Lieberman & Michaels, 1962; Scherer & Oshinsky, 1977; Ladd, Silverman, Tolkmitt, Bergman & Scherer, 1985). In infant-directed speech, the functional intent (approval, comfort or attention-bid) of utterances can be reliably discriminated by the shape of the F0 contour (Katz, Cohn & Moore, 1996).

Infants demonstrate a basic sensitivity to paralinguistic cues. Newborns prefer the voice of their mother over the voice of a stranger (DeCasper & Fifer, 1980). By 5 months of age, infants respond differentially to approving and prohibiting maternal utterances (Fernald, 1993). By 7 months of age, infants discriminate complex acoustic signals based on their F0 and harmonic structure (Clarkson & Clifton, 1985; Clarkson, Clifton & Perris, 1988). At months of age, infants begin to regulate their behavior differentially to vocal expressions of happiness and fear (Svejda, 1981). At 9 months of age, there is preliminary evidence that infant behavior is regulated by vocal paralanguage even when it is inconsistent with the lexical content of maternal utterances (Lawrence & Fernald, 1993). This finding is not surprising given the rather limited receptive vocabulary of the average 9-month-old. However, these studies do suggest that, by about 9 months of age, infants are beginning to demonstrate sensitivity to the major paralinguistic functions of the human voice.

Despite infants’ sensitivity to vocal paralanguage, toddlers’ behavior in experimental settings is better predicted on the basis of lexical cues (e.g. ‘No, no, don’t touch’) than on the basis of paralanguage (e.g. an approving voice) when lexical content and vocal paralanguage conflict (Lawrence & Fernald, 1993; J. Lacks, personal communication, August 1997). This type of effect has also been reported for older children; 5- to 9-year-olds base affective judgments on lexical rather than paralinguistic cues (Bugental, Kaswan & Love, 1970; Solomon & Ali, 1972; Reilly & Muzekari, 1986). More importantly, this effect obtains in 7- to 10-year-olds even when exceedingly careful methodological controls are implemented (Friend & Bryant, 2000). This tendency of semantic meaning to interfere with judgments based on other cues is reminiscent of the classic Stroop effect, suggesting that there may be a general bias toward the lexicalization of meaning in affective as well as other domains. Indeed, it has been reported that 3-year-olds evince lexical/paralinguistic interference in their judgments of speaker gender (Jerger, Martin & Pirozzolo, 1988) and that 5- to 6-year-olds exhibit interference between lyrical (lexical) and melodic (prosodic or paralinguistic) elements of song stimuli (Morrongiello & Roes, 1990).

Scherer (1991, p. 146) has claimed that ‘Affect vocalizations are the closest we can get to the pure biological expression of emotion and one of the most rudimentary forms of communication’. The tendency of young children to be biased toward lexical, rather than paralinguistic, affective interpretations is interesting given evidence of infant sensitivity to vocal paralanguage. At issue is whether the relative weighting of competing lexical and paralinguistic cues changes with development and what mechanisms might account for this change.

In this paper we examine children’s sensitivity to vocal paralanguage in several speech formats. An important distinction in child development research is that of a child’s aptitude versus his or her ability to demonstrate that aptitude in a particular experimental context. With this distinction in mind, speech formats were carefully selected to provide a developmental assessment of conditions (a) in which children were expected to exhibit the greatest aptitude and (b) in which performance was expected to be limited by attention to language.

Specifically, children’s sensitivity to happy and angry vocal paralanguage in utterances with consistent or discrepant lexical content was assessed under conditions in which the availability of lexical and paralinguistic information was controlled. Sensitivity was conceptualized in classical signal detection terms as the distance between two competing signal distributions (happy and angry) each corresponding to one level of affect conveyed paralinguistically. The more consistently children rate instances of angry paralanguage as ‘angry’ and happy paralanguage as ‘happy’, the greater the psychophysical distance between these distributions and hence the greater children’s sensitivity to the distinction between happy and angry paralanguage.
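The distance between the two distributions described above is conventionally indexed by d′. The following is a minimal sketch of that index under the equal-variance Gaussian model. It is not the analysis reported in this paper, which is based on the number of judgments matching the paralinguistic content. The function name, the treatment of ‘angry’ as the signal category and the log-linear correction for extreme rates are assumptions made for illustration.

```python
from statistics import NormalDist


def d_prime(hits, misses, false_alarms, correct_rejections):
    """Equal-variance d' for a happy/angry judgment task.

    Assumed coding (hypothetical, not from the paper):
      hit               = angry paralanguage judged 'angry'
      miss              = angry paralanguage judged 'happy'
      false alarm       = happy paralanguage judged 'angry'
      correct rejection = happy paralanguage judged 'happy'
    """
    # Log-linear correction keeps the rates away from 0 and 1,
    # where the inverse normal CDF is undefined.
    hit_rate = (hits + 0.5) / (hits + misses + 1.0)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1.0)
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(fa_rate)


# A child who answers the same way regardless of the voice shows no separation;
# a child who labels every utterance by its paralanguage shows the largest separation.
no_separation = d_prime(3, 3, 3, 3)
full_separation = d_prime(6, 0, 0, 6)
```

Under these assumptions, larger values correspond to the greater "psychophysical distance" between happy and angry paralanguage described in the text.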

Theoretical approach

In the present view, young children treat lexical content as a richer, more precise and more reliable indicator of speaker affect than paralanguage. This occurs despite the fact that lexical information is easily dissimulated and is hence a less reliable cue to speaker affect than vocal paralanguage. By adulthood, a fine-tuning of affective interpretations has taken place. This secondary fine-tuning leads to a shift away from exclusively lexical interpretations (Mehrabian & Wiener, 1967; Solomon & Yaeger, 1969; Argyle, Alkema & Gilmour, 1971; Reilly & Muzekari, 1986; but cf. O’Sullivan, Ekman, Friesen & Scherer, 1985). The only estimate of the transition away from primarily lexical interpretations in the literature places it as late as early adolescence (Solomon & Ali, 1972). Any account of the developmental mechanism underlying changes in sensitivity to paralinguistic cues must be sufficiently flexible to accommodate both the early shift from a reliance on paralanguage in infancy and the later shift from a reliance on lexical content in childhood.

One potential mechanism underlying these shifts in the salience of lexical and paralinguistic cues can be thought of as a perceptual bias emerging from selective attention to language. Specifically, it is argued that young children are biased to process acoustic variation in the speech stream in such a way as to maximize the salience of individual lexical items (Cutler & Mehler, 1993; Jusczyk, 1993; Cutler, 1996). Variations in F0, intensity and duration facilitate attention to these lexical items. Once attention is directed to lexical meaning, resource limitations may ensure that paralanguage becomes, in effect, a subordinate function of nonlinguistic, acoustic, variation in speech. In early childhood, paralanguage may augment linguistic meaning, but not disconfirm or supplant it. This only becomes apparent when lexical content contrasts in meaning with vocal paralanguage. This line of reasoning predicts that a bias to base interpretations on lexical information should be associated with a limited ability to utilize acoustic variation in the speech signal paralinguistically; children may be unable, initially, to coordinate the dual function (linguistic and affective) of acoustic variation in speech. Conversely, improvements in the ability to utilize acoustic variation paralinguistically should be associated with a reduction in the bias to attend to lexical cues in discrepant speech (i.e. judgments of speaker affect should match more closely the paralinguistic content of utterances).

In order to examine the development of this process, an experiment was conducted in which the relative availability of lexical and paralinguistic information in a set of utterances was controlled in three speech formats. Children 4, 7 and 10 years of age heard these utterances and made judgments of the affective state of the speaker. Of particular interest was the relation between children’s sensitivity to paralinguistic cues in isolation and their tendency to be biased toward lexical interpretations of utterances that contained a discrepancy between lexical and paralinguistic cues to speaker affect. Each speech format is described in detail below.

In all speech formats, prosodic variation (linguistic and affective) remained intact. The speech formats differed in the extent to which harmonic structure (the resonances of the vocal tract) and lexical content were preserved in addition to speech prosody. Importantly, as mentioned previously, harmonic structure is thought to influence affective judgments (a speaker may clench teeth in anger, for example, thereby changing the resonance properties of the vocal tract and providing a cue to speaker affect) but is not thought to contribute to linguistic prosody.

In the full speech format, children heard tape recordings of a woman reading sentences in happy and angry affect. Lexical and paralinguistic affect was completely crossed so that in half of the sentences the paralinguistic affect was consistent with the lexical content and in the other half it was discrepant. All lexical information and acoustic variation remained intact, thereby allowing an assessment of the relative weighting of lexical and paralinguistic cues in guiding children’s affective judgments.

In the reiterant (prosody and harmonics) format, children heard intonational analogs of the full speech utterances that were produced by replacing the original syllables of the full speech utterances with nonsense syllables. This procedure eliminates meaningful lexical content but preserves prosodic variation and harmonic structure (Nakatani & Schaffer, 1977; Liberman & Streeter, 1978; Friend & Farrar, 1994). This procedure makes it possible to assess the development of children’s ability to utilize prosodic variation and harmonic structure paralinguistically in the absence of lexical content.

In the filtered (prosodic information only) format, children heard utterances that were filtered to remove all frequencies above the speaker’s peak F0. This procedure blurs lexical content and eliminates harmonic structure while preserving prosodic variation. The procedure makes it possible to assess the development of children’s ability to utilize prosodic variation paralinguistically in the absence of both lexical content and harmonic structure.

Four-year-olds’ affective attributions were expected to be governed by lexical, rather than paralinguistic, information in the full speech format (Bugental et al., 1970; Solomon & Ali, 1972; Reilly & Muzekari, 1986; Friend & Mahelona, 1997). A limitation on the paralinguistic processing of prosodic variation was expected to account, in part, for this tendency. As a result, 4-year-olds were expected to perform at chance in the filtered format in which it was necessary to base affective judgments primarily on speech prosody. Four-year-olds were expected to perform relatively well on reiterant speech because it preserves both harmonic structure and speech prosody without competing lexical content.

Based on prior research, 7-year-olds were also expected to interpret full speech items lexically but a significant shift toward greater reliance on paralinguistic cues was predicted to occur between 4 and 7 years of age. Seven-year-olds were expected to perform above chance in the filtered format reflecting a developmental improvement in the ability to use speech prosody paralinguistically. Like 4-year-olds, 7-year-olds were expected to perform relatively well in the reiterant speech format. The same pattern of improvement predicted from 4 to 7 years of age was also predicted to continue from 7 to 10 years of age. In essence, reliance on lexical content was expected to decrease with age, sensitivity to paralinguistic information contained in the filtered speech format was expected to increase with age, and sensitivity to paralinguistic information in the reiterant speech context was expected to be evident at all ages.

These predicted patterns of performance would be reflected in statistically significant effects of age, speech format and discrepancy, and in interactions of speech format with discrepancy and of speech format with discrepancy and age. To recap, the full speech format assessed the relative weighting of lexical and paralinguistic cues, the reiterant format assessed sensitivity to prosodic variation and harmonic structure in the absence of lexical information, and the filtered format assessed children’s paralinguistic sensitivity to prosodic variations in isolation. The reiterant speech format also served as a manipulation check on comprehension of the task. All children were expected to perform well in this format and thus demonstrate both an understanding of the task and a nascent ability to decode vocal paralanguage.

Method

Participants

The participants were 90 children recruited from university housing and from local schools and day-care centers. Only females were employed in the interest of establishing the presence of a genuine developmental phenomenon prior to investigating potential gender differences. The children were all native speakers of American English and all had normal hearing as assessed by parental report. Each child received an honorarium of $5.00 for research participation. The data from six children were not included in analyses because their ages at the time of testing fell outside the desired age ranges for the current study. There were 28 children in each of three age groups: 4-year-olds (M = 4;7, range 4;1 to 4;11), 7-year-olds (M = 7;7, range 7;0 to 8;1) and 10-year-olds (M = 10;8, range 10;1 to 11;10).

Stimulus development

Full speech and reiterant speech

Eighteen sentences were taken from transcripts of mothers talking to their children. For each utterance, lexical content was rated by adults in a previous study as representing either happiness or anger at a minimum inter-rater agreement of 0.80 (Friend & Bryant, 2000). Agreement was calculated for each utterance as agreements (the number of judges who rated the utterance in the intended direction) divided by agreements plus disagreements (the total number of judges). For this particular study, the criterion of 0.80 agreement required that at least 10 of 12 judges rated the lexical content of each utterance in the intended direction. Half of the sentences were rated as happy and half were rated as angry. An additional five sentences were selected which were rated in a separate study as affectively neutral at a minimum inter-rater agreement of 0.75 (or three of four judges) (Bugental et al., 1970). Each happy and angry sentence was read in both happy and angry vocal paralanguage. The adequacy of the paralanguage manipulation was assessed by having judges rate the reiterant version of each stimulus since this version eliminated the potentially confounding influence of lexical content (see below).
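The agreement index described above is simple enough to check by hand, and a short sketch makes the arithmetic behind the two criteria explicit. The function names are hypothetical; only the formula (agreements divided by agreements plus disagreements) and the panel sizes of 12 and 4 judges come from the text.

```python
def agreement(n_intended, n_judges):
    """Proportion of judges who rated an utterance in the intended direction."""
    return n_intended / n_judges


def min_judges_needed(criterion, n_judges):
    """Smallest number of agreeing judges whose agreement meets the criterion."""
    for k in range(n_judges + 1):
        if agreement(k, n_judges) >= criterion:
            return k
    return None  # unreachable for criteria between 0 and 1


# Happy and angry sentences: criterion of 0.80 with 12 judges.
needed_affective = min_judges_needed(0.80, 12)

# Neutral sentences: criterion of 0.75 with 4 judges.
needed_neutral = min_judges_needed(0.75, 4)
```

With 12 judges, 9 agreements give 0.75, which falls short of 0.80, so 10 agreements are the minimum, matching the figure stated in the text.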

The original and reiterant stimuli were generated by the experimenter, a female native speaker of American English. The stimuli were read into a Shure Model SM81 condenser microphone and recorded using a Teac Model A-3300SX laboratory tape recorder. The microphone was affixed to the experimenter’s head at a constant distance of 15.3 cm.

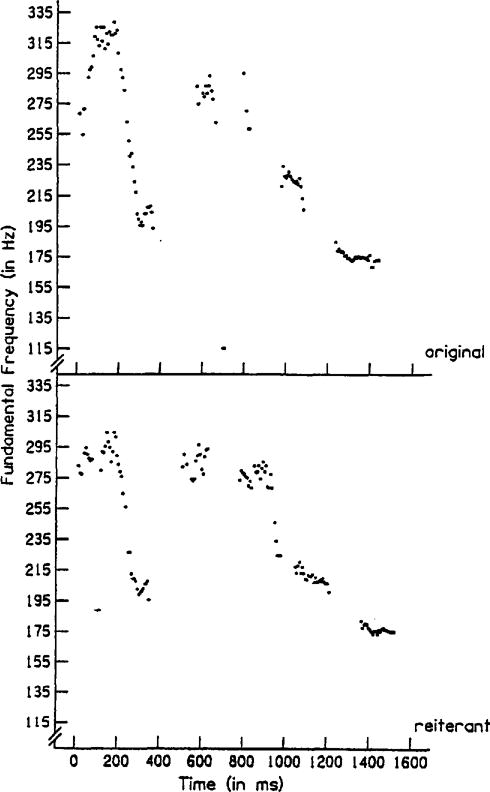

Following the reading of each sentence, a reiterant stimulus was generated. The reiterant stimulus was produced by replacing each syllable of the original sentence with one of three meaningless syllables (ma, ba or sa). The basis for choosing one of these syllables was the similarity of F0 contours for the original and reiterant syllables.

Reiterant speech was used to create a stimulus that was as acoustically similar to the original stimulus as possible without using meaningful lexical content. The reiterant syllables were produced to maximize their acoustic similarity to the target syllables. The acoustic similarity between stimuli was determined in two stages. First, F0 contours, obtained from a Visi-Pitch Model 6087 DS, were compared for the reiterant and original stimuli. Several original–reiterant stimulus pairs were generated for each stimulus sentence. Figure 1 provides the F0 contour for the sentence ‘You’re my favorite person’, read in happy intended affect, and for its reiterant counterpart ‘ma ma sasama basa’. The vocal affect of each reiterant stimulus was rated by adults in a separate experiment as happy, angry or neutral at a minimum inter-rater agreement of 0.75 (M = 0.91, range = 0.75–1.00, n = 100; Friend & Farrar, 1994). Mean ratings of the vocal affect of the happy, angry and neutral stimuli are provided in Table 1. These data suggest that the different levels of vocal paralanguage were comparable in their affective intensity.

Figure 1.

Fundamental frequency contours for the sentence ‘You’re my favorite person’, read in a happy affective voice.

Table 1.

Mean validation ratings (and standard deviations) of happy, neutral and angry reiterant stimuli

| Paralanguage manipulation | Mean rating |
|---|---|
| Happy | 5.02 (0.87) |
| Neutral | 2.54 (0.64) |
| Angry | 4.79 (0.86) |

Notes: All stimuli were rated on two scales, one ranging from 1 (not happy) to 7 (very happy) and one ranging from 1 (not angry) to 7 (very angry). The value reported for happy stimuli is from the ‘happy’ scale, the value for angry stimuli is from the ‘angry’ scale, and the value for the neutral stimuli is the average of the two scales. N = 100.

Next, F0 mean, F0 range, dB mean and dB standard deviation (relative dB obtained for F0 only) were measured for each member of those pairs of original and reiterant utterances which had the most visually similar F0 contours. An original–reiterant stimulus pair was chosen for inclusion in the study if two criteria were met: (1) both utterances produced visually similar F0 contours, and (2) the utterances differed in their values on the four acoustic variables by |z| < 1.5. These criteria were met for 15 stimulus pairs: six happy sentences read in both happy and angry voice, six angry sentences read in both happy and angry voice, and three neutral sentences read in neutral voice (see Tables 3–5, later, for the lexical content of items in the final stimulus set). The original and reiterant stimuli were digitized at a sampling rate of 20 kHz using a 12-bit A/D converter and high-frequency preemphasis (Whalen, Wiley, Rubin & Cooper, 1990). A waveform-editing program was used to remove any noise from the beginnings and ends of the stimuli.
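The two inclusion criteria can be expressed as a small decision rule. The text does not say how the z scores were standardized, so the sketch below assumes that each original–reiterant difference is divided by a per-variable standard deviation supplied by the caller. The function name, the variable keys and every numeric value are hypothetical and are not measurements from the study.

```python
Z_LIMIT = 1.5  # criterion stated in the text: |z| < 1.5 on each variable


def acoustically_matched(original, reiterant, spread, contours_similar):
    """Return True if an original-reiterant pair meets both criteria.

    original, reiterant: dicts of acoustic measures for the two utterances.
    spread: dict of per-variable standard deviations (assumed standardizer).
    contours_similar: outcome of the visual F0-contour comparison.
    """
    if not contours_similar:
        return False
    for name, sd in spread.items():
        z = (original[name] - reiterant[name]) / sd
        if abs(z) >= Z_LIMIT:
            return False
    return True


# Hypothetical values for the four acoustic variables named in the text.
spread = {"f0_mean": 20.0, "f0_range": 40.0, "db_mean": 3.0, "db_sd": 1.0}
original = {"f0_mean": 250.0, "f0_range": 180.0, "db_mean": 62.0, "db_sd": 4.0}
close_copy = {"f0_mean": 240.0, "f0_range": 200.0, "db_mean": 61.0, "db_sd": 4.5}
poor_copy = {"f0_mean": 200.0, "f0_range": 200.0, "db_mean": 61.0, "db_sd": 4.5}

keep = acoustically_matched(original, close_copy, spread, contours_similar=True)
drop = acoustically_matched(original, poor_copy, spread, contours_similar=True)
```

Here the second pair fails because its mean F0 differs by 2.5 assumed standard deviations, even though the other three variables are within the limit.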

Table 3.

Percentage of correct judgments of paralinguistic content by 4-year-olds as a function of stimulus and speech format

| Lexical content | Paralinguistic content | Speech format: Filtered | Speech format: Reiterant | Speech format: Full |
|---|---|---|---|---|
| You’ll never behave yourself | Angry | 48 | 52 | 91 |
| Don’t play around with me child | Angry | 58 | 77 | 91 |
| You’re being punished you stay right there | Angry | 55 | 82 | 94 |
| Oh good you got them all | Happy | 61 | 77 | 80 |
| You play very well | Happy | 48 | 63 | 82 |
| You’re my favorite person | Happy | 58 | 77 | 87 |
| You’ll never behave yourself | Happy | 61 | 64 | 55 |
| Don’t play around with me child | Happy | 60 | 64 | 25 |
| You’re being punished you stay right there | Happy | 61 | 83 | 41 |
| Oh good you got them all | Angry | 48 | 76 | 36 |
| You play very well | Angry | 43 | 73 | 27 |
| You’re my favorite person | Angry | 45 | 66 | 38 |

Note: Values represent proportion of children correct (out of 28).

Table 5.

Percentage of correct judgments of paralinguistic content by 10-year-olds as a function of stimulus and speech format

| Lexical content | Paralinguistic content | Speech format: Filtered | Speech format: Reiterant | Speech format: Full |
|---|---|---|---|---|
| You’ll never behave yourself | Angry | 92 | 73 | 100 |
| Don’t play around with me child | Angry | 86 | 96 | 98 |
| You’re being punished you stay right there | Angry | 96 | 95 | 100 |
| Oh good you got them all | Happy | 85 | 83 | 93 |
| You play very well | Happy | 73 | 96 | 98 |
| You’re my favorite person | Happy | 91 | 80 | 98 |
| You’ll never behave yourself | Happy | 91 | 98 | 73 |
| Don’t play around with me child | Happy | 79 | 96 | 61 |
| You’re being punished you stay right there | Happy | 75 | 96 | 57 |
| Oh good you got them all | Angry | 88 | 95 | 94 |
| You play very well | Angry | 59 | 89 | 55 |
| You’re my favorite person | Angry | 89 | 100 | 98 |

Note: Values represent proportion correct (out of 28).

Low-pass filtered stimuli

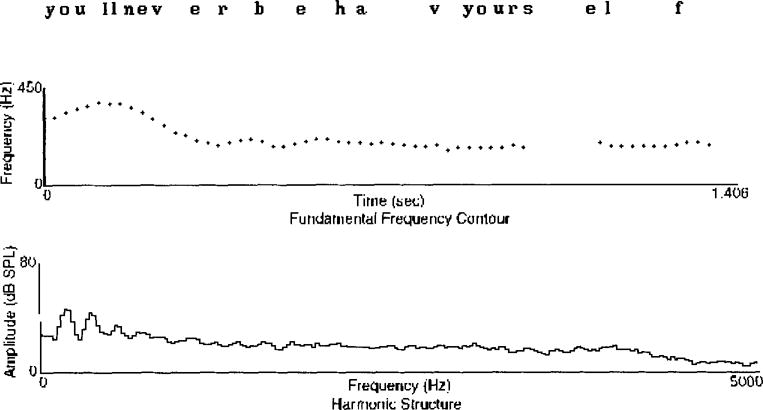

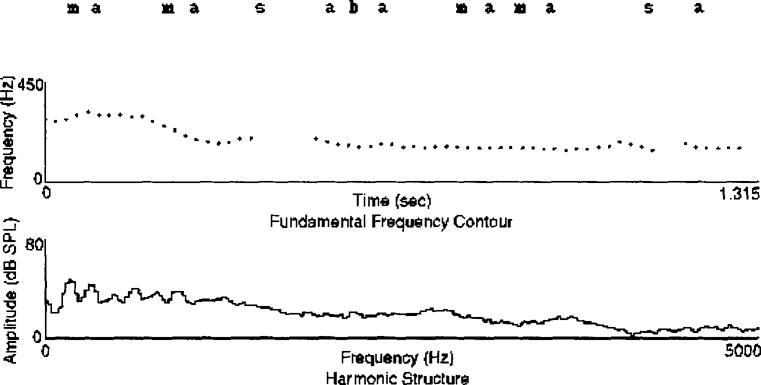

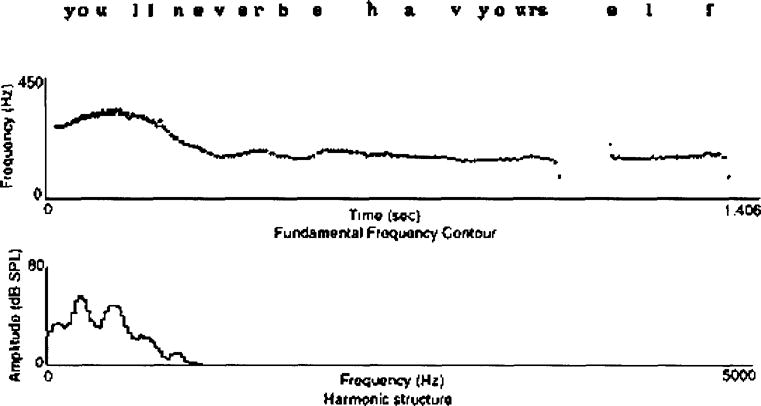

The digitized original stimuli were low-pass filtered at 400 Hz with a roll-off of 48 dB/octave using cascading ILS software filters and were recorded using a Teac Model A-3300SX laboratory tape recorder. The stimuli have the sound of muffled speech, as though someone is speaking behind a closed door. (See Figures 2, 3 and 4 for acoustic descriptions of full, filtered and reiterant speech stimuli. Note that the F0 contour is preserved in all cases, the distribution of energy across the frequency spectrum is preserved in full and reiterant speech, and the contribution of lexical content is preserved in full speech.)
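To give a sense of how steep a 48 dB/octave roll-off is, the sketch below computes the attenuation of an idealized low-pass response at several frequencies. The filter family used by the ILS software is not stated in the text; a Butterworth magnitude response is assumed here purely for illustration, with the order inferred from the usual rule of roughly 6 dB/octave per filter order. The function name and the probe frequencies are hypothetical.

```python
import math

CUTOFF_HZ = 400.0                 # cutoff stated in the text
ROLLOFF_DB_PER_OCTAVE = 48.0      # roll-off stated in the text
ORDER = int(ROLLOFF_DB_PER_OCTAVE / 6.0)  # assumed Butterworth order (8)


def attenuation_db(freq_hz, cutoff_hz=CUTOFF_HZ, order=ORDER):
    """Attenuation (positive dB) of an ideal Butterworth low-pass filter."""
    ratio = freq_hz / cutoff_hz
    return 10.0 * math.log10(1.0 + ratio ** (2 * order))


# Energy well below the cutoff passes almost untouched, whereas energy one
# and two octaves above the cutoff is strongly attenuated.
below_cutoff = attenuation_db(200.0)
at_cutoff = attenuation_db(400.0)
one_octave_up = attenuation_db(800.0)
two_octaves_up = attenuation_db(1600.0)
```

Under these assumptions, each octave above the cutoff adds about 48 dB of attenuation, which is consistent with the description of the filtered stimuli as muffled speech in which the F0 contour survives but the higher-frequency energy does not.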

Figure 2.

Fundamental frequency and harmonic characteristics of an angry lexical content/happy paralanguage stimulus in full speech format.

Figure 3.

Fundamental frequency and harmonic characteristics of an angry lexical content/happy paralanguage stimulus in reiterant speech format.

Figure 4.

Fundamental frequency and harmonic characteristics of an angry lexical content/happy paralanguage stimulus in filtered speech format.

Procedure

Three stimulus sets (reiterant, low-pass filtered, full) were presented to listeners. The three neutral stimuli were presented at the beginning and end of each stimulus set to provide listeners with a baseline of the speaker’s voice in each condition. Of the affective stimuli, six were consistent (three happy lexical content/happy voice, three angry lexical content/angry voice) and six were discrepant (three happy lexical content/angry voice, three angry lexical content/happy voice). Within each set, the stimuli were arranged in both random and reverse orders with the restriction that no more than two stimuli representing the same vocal affect (happy or angry) occurred in succession. The six possible orders in which the stimulus sets (reiterant, low-pass filtered, full) could occur were completely counterbalanced across participants (and approximately counterbalanced within age groups).
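The ordering scheme described above can be sketched in a few lines. This is an illustration under stated assumptions, not the procedure used to build the tapes: it treats the ‘reverse’ order as the random order played backwards, handles only the 12 affective stimuli (the flanking neutral stimuli are left out), and uses rejection sampling to satisfy the run restriction. All function names are hypothetical.

```python
import itertools
import random

FORMATS = ("reiterant", "low-pass filtered", "full")


def format_orders():
    """All possible presentation orders of the three stimulus sets."""
    return list(itertools.permutations(FORMATS))


def longest_run(affects):
    """Length of the longest run of identical vocal affects in a sequence."""
    longest = current = 0
    previous = None
    for affect in affects:
        current = current + 1 if affect == previous else 1
        longest = max(longest, current)
        previous = affect
    return longest


def constrained_order(affects, rng, max_run=2):
    """Shuffle until no vocal affect occurs more than max_run times in a row."""
    order = list(affects)
    while True:
        rng.shuffle(order)
        if longest_run(order) <= max_run:
            return list(order)


def random_and_reverse(affects, rng):
    """A constrained random order and its reversal (assumed 'reverse' order)."""
    first = constrained_order(affects, rng)
    return first, first[::-1]


# Six happy-voice and six angry-voice stimuli per set, as in the text.
vocal_affects = ["happy"] * 6 + ["angry"] * 6
random_order, reverse_order = random_and_reverse(vocal_affects, random.Random(0))
```

Reversing a sequence leaves its runs unchanged, so the reversed order satisfies the same restriction. The six set orders divide evenly into the 84 retained children but not into 28, which is consistent with counterbalancing that is complete across participants and only approximate within each age group.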

Stimuli were presented in a single-walled sound-attenuating chamber (Tracoustics) using a Teac Tascam Model 32 reel-to-reel tape deck and Beyerdynamic DT320 mkII stereo headphones. Stimulus intensity was set at a comfortable listening level of approximately 60 to 70 dB SPL and adjusted to maximize the similarity of stimulus intensities across sets. Within each stimulus set, children heard both the random and reverse orders for a total of 36 stimulus presentations. The orders were counterbalanced across children so that one-half of the children heard the stimuli in the random and then the reverse order and the other half heard the stimuli in reverse and then random order.

Each stimulus set was flanked by three neutral stimuli at the beginning and at the end of the set. This flanking arrangement served two purposes. First, it provided children with baseline vocal expressions in each speech format against which the other, affective, stimuli in the set could be judged. Second, because the neutral stimuli occurred at both the beginnings and ends of stimulus sets (children heard all stimulus sets in counterbalanced sequence), children were afforded comparisons of the baseline vocal expressions across speech formats. In this way, the effect of the novelty of the speech formats on children’s affective ratings was minimized.

After each stimulus presentation, the children were asked to tell the experimenter whether the woman’s voice sounded ‘happy’ or ‘mad’ by pointing to one of two schematic faces representing these emotions. Children were told, ‘On this tape you will hear recordings of a mother talking to her little girl in a toy store. Sometimes she feels happy and sometimes she feels mad. Your job is to tell me when she feels happy and when she feels mad by pointing to one of these faces. Sometimes it will be difficult to understand what she’s saying and that’s okay. The words are not really important. What’s really important is how she sounds.’ These instructions were intended to encourage children to use paralinguistic, rather than lexical, cues as the basis for their judgments. The tape was stopped after each of the first three stimuli to ascertain that the children understood the procedure. Each child was asked, ‘How does she feel? Does she feel happy or does she feel mad?’ For subsequent stimuli, each child simply pointed to the face that was most like how the mother felt each time she heard an utterance. The tape was stopped as needed and the question repeated. If the child found it difficult to decide, she was asked, ‘Does she feel more happy or more mad?’ If children made more than one response only the final response was recorded.

Results

All analyses were conducted at α = 0.01 and all planned comparisons were conducted using a Bonferroni correction for family-wise error. A Speech format (3) × Paralanguage (2) × Lexical content (2) × Age (3) × Order (6) repeated measures mixed models analysis of variance (ANOVA) was conducted on the number of judgments (out of a possible six) that accurately reflected the paralinguistic content of the stimuli. The purpose of this initial analysis was to determine whether the Paralanguage, Lexical content and Order terms were necessary or whether a reduced model could account for the data. Two findings relevant to this concern were (1) the absence of a main effect of Order and (2) the presence of an interaction of Paralanguage and Lexical content: planned comparisons revealed that children’s ratings were more consistent with the paralinguistic content of stimuli when lexical and paralinguistic information were consistent (M = 5.02) than when they were discrepant (M = 4.19, t = 8.99, p < 0.01). There were no differences in accuracy between different levels of consistent or discrepant messages. For these reasons, the Order term was dropped from the model and the Paralanguage and Lexical content terms were collapsed into a single term, Discrepancy, with two levels: consistent and discrepant.

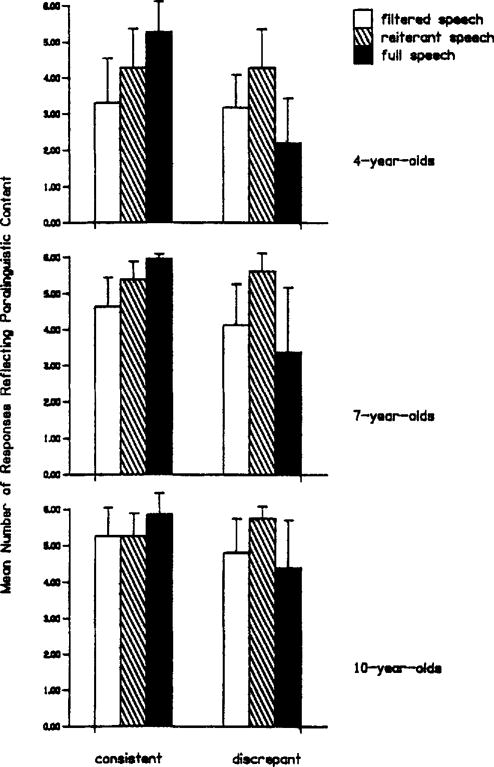

A Speech format (3) × Discrepancy (2) × Age (3) repeated measures mixed models ANOVA yielded main effects of Speech format (F(2,80) = 41.7), Discrepancy (F(1,81) = 86.29) and Age (F(2,81) = 62.87). In addition, the predicted interactions of Speech format × Discrepancy (F(2,80) = 92.67) and Speech format × Discrepancy × Age (F(4,162) = 4.49) were significant.

In general, planned comparisons yielded support for the hypotheses (see Table 2 for means and standard deviations). Because the lower-order effects are subsumed by the predicted Speech format × Discrepancy × Age interaction, we proceed with a decomposition of that interaction with a focus on changes in patterns of paralinguistic sensitivity with age (see Figure 5). The interaction is best explained on the basis of three predicted effects: (1) a tendency for lexical content to govern affective judgments of discrepant utterances in the full speech format and a decrease with age in this tendency; (2) an increase with age in the sensitivity to the paralinguistic function of prosodic variation in filtered speech; and (3) relatively high sensitivity across ages in the reiterant speech format. These effects, as a function of age, are discussed below.

Table 2.

Mean number correct (and standard deviations) as a function of age, speech format and discrepancy

| Speech format | Discrepancy | 4-year-olds | 7-year-olds | 10-year-olds |
|---|---|---|---|---|
| Filtered | Consistent | 3.30 (1.24) | 4.64 (0.80) | 5.25 (0.80) |
| Filtered | Discrepant | 3.17 (0.92) | 4.12 (1.14) | 4.80 (0.94) |
| Reiterant | Consistent | 4.27 (1.09) | 5.38 (0.50) | 5.25 (0.63) |
| Reiterant | Discrepant | 4.28 (1.08) | 5.62 (0.50) | 5.75 (0.34) |
| Full | Consistent | 5.27 (0.84) | 5.96 (0.13) | 5.87 (0.58) |
| Full | Discrepant | 2.21 (1.23) | 3.38 (1.79) | 4.39 (1.31) |

Note: The maximum possible number correct is 6.
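Because each cell in Table 2 is a mean out of six items, the means convert directly to percentages; this is how a figure such as 71% correct for 4-year-olds in reiterant speech arises. The following minimal Python sketch illustrates the conversion. The helper name `percent_correct` is an assumption introduced here, not part of the original analysis.

```python
MAX_CORRECT = 6  # maximum possible number correct per cell (Table 2 note)


def percent_correct(mean_correct: float, max_correct: int = MAX_CORRECT) -> float:
    """Convert a mean number correct into a percentage of the maximum."""
    if not 0 <= mean_correct <= max_correct:
        raise ValueError("mean must lie between 0 and the maximum")
    return 100.0 * mean_correct / max_correct


# 4-year-olds, reiterant format, consistent items (M = 4.27 in Table 2).
reiterant_4yo = percent_correct(4.27)

# Chance sensitivity is described in the text as three of six items correct.
chance = percent_correct(3)
```

Expressing the means this way makes it easier to compare each cell with the chance level of three items out of six.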

Figure 5.

Paralinguistic sensitivity as a function of age, speech format and discrepancy.

Four-year-olds

As predicted, 4-year-olds’ judgments of full speech stimuli were governed more by lexical than by paralinguistic information. Four-year-olds’ judgments of consistent full speech stimuli were significantly more concordant with paralinguistic information than were their judgments of discrepant full speech stimuli (t(27) = 9.16, p < 0.01). Also as predicted, 4-year-olds’ performance in the filtered format did not differ from chance, reflecting an inability to use prosodic variation paralinguistically (t(27) = −3.36, p > 0.01). Finally, 4-year-olds’ difficulty in the filtered format did not reflect a general insensitivity to paralinguistic cues. As predicted, children performed relatively well in the reiterant format as indicated by a comparison of their performance in the reiterant and filtered formats (t(27) = 5.14, p < 0.01). This pattern of performance suggests that 4-year-olds are able to decode vocal paralanguage given a rich array of paralinguistic cues and no competing lexical content in the reiterant format. However, they find it difficult to ignore discrepant lexical content in full speech and do not appear to be sensitive to prosodic cues to speaker affect in filtered speech.

Seven-year-olds

As predicted, lexical information was also weighted more heavily than paralinguistic information in 7-year-olds’ judgments of full speech. Like 4-year-olds, these children’s judgments of consistent, full speech, stimuli were significantly more concordant with paralinguistic information than their judgments of discrepant, full speech, stimuli (t(27) = 7.55, p < 0.01). In contrast to the hypothesis that there would be a reduction with age in the tendency to base affective judgments on lexical content, the trend toward reduced reliance on lexical content was not significant between ages 4 and 7. However, the predicted improvement in paralinguistic sensitivity to prosodic variation in filtered speech was observed between age 4 and age 7 (t(54) = 5.46, p < 0.01). In addition, 7-year-olds, like 4-year-olds, were significantly more sensitive to paralinguistic information in the reiterant speech format than in the filtered speech format (t(27) = 6.78, p < 0.01). This pattern of performance suggests that at least some 7-year-olds find it difficult to ignore discrepant lexical content in full speech, even though their sensitivity to prosodic cues to speaker affect in filtered speech shows a developmental improvement over that of 4-year-olds.

Ten-year-olds

Ten-year-olds continued to demonstrate a bias in making affective judgments on the basis of lexical content when lexical content conflicted with paralinguistic cues (t(27) = 6.09, p < 0.01). The reduction in reliance on lexical content in discrepant full speech was not significant from age 7 to age 10; however, there was a significant reduction in the influence of lexical content on children’s judgments from age 4 to age 10 (t(54) = 3.85, p < 0.01). There were two important departures from the data reported for 4- and 7-year-olds: (1) the improvement in sensitivity to prosodic variation in filtered speech between 7 and 10 years of age was not significant; and (2) there was no significant difference in 10-year-olds’ performance across the reiterant and filtered formats. This pattern of performance indicates that 10-year-olds, relative to 4-year-olds, are significantly more sensitive to the paralinguistic content of affectively discrepant messages. The absence of a difference between 7- and 10-year-olds in the filtered speech format suggests that the significant improvement in sensitivity occurs between age 4 and age 7, preceding the improvement in sensitivity to paralinguistic information in discrepant, full speech. Further, the absence of a difference between the filtered and reiterant formats suggests that 10-year-olds’ sensitivity to prosodic variation in filtered speech is comparable with their sensitivity to the full array of paralinguistic cues present in reiterant speech.

The general pattern of performance across age, speech format and discrepancy was maintained relatively well for average performance on individual stimuli as well as for average performance across stimuli. Percentage correct for each stimulus as a function of discrepancy and speech format is given for each age group in Tables 3, 4 and 5. These tables reveal that, for each age group, children were more accurate in their ratings of consistent full speech items than in their ratings of discrepant full speech items, reflecting the predicted reliance on lexical content in cases of discrepancy. For the 4- and 7-year-olds, performance on reiterant items was generally more accurate than performance on the same items in the filtered format, reflecting an ability to utilize paralinguistic cues in the reiterant, but not in the filtered, format.

Table 4.

Percentage of correct judgments of paralinguistic content by 7-year-olds as a function of stimulus and speech format

| Lexical content | Paralinguistic content | Filtered | Reiterant | Full |
|---|---|---|---|---|
| You’ll never behave yourself | Angry | 75 | 68 | 100 |
| Don’t play around with me child | Angry | 82 | 94 | 100 |
| You’re being punished you stay right there | Angry | 87 | 100 | 98 |
| Oh good you got them all | Happy | 70 | 89 | 98 |
| You play very well | Happy | 60 | 100 | 100 |
| You’re my favorite person | Happy | 89 | 86 | 100 |
| You’ll never behave yourself | Happy | 80 | 87 | 66 |
| Don’t play around with me child | Happy | 64 | 96 | 36 |
| You’re being punished you stay right there | Happy | 66 | 98 | 60 |
| Oh good you got them all | Angry | 73 | 88 | 75 |
| You play very well | Angry | 53 | 92 | 27 |
| You’re my favorite person | Angry | 75 | 100 | 73 |

Note: Values represent the percentage of correct judgments (n = 28).

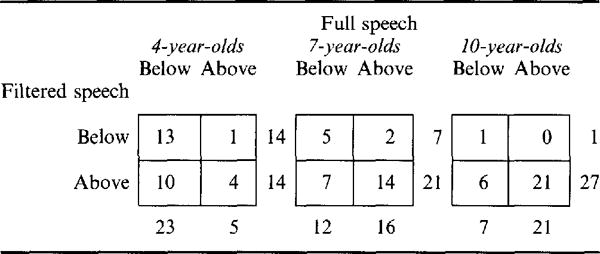

Individual performance

To determine the extent to which individual sensitivity to paralinguistic cues in full, discrepant speech could be predicted on the basis of sensitivity to prosodic variation in filtered speech, two analyses were conducted. In the first analysis, children were cross-classified as above or below chance sensitivity (three items correct out of six) for full, and filtered, discrepant speech (see Table 6 for cross-classification data). The purpose of the cross-classification was to compare individual children’s performance across different instantiations (filtered and full speech) of the same set of discrepant stimuli. The majority of children in each age group exhibited comparable sensitivity to paralinguistic cues across full and filtered versions of these utterances. This is consistent with the predicted correspondence between the paralinguistic utilization of prosodic variation and a reduction in lexical bias in cases of discrepancy.

Table 6.

Cross-classification of individual cases falling above and below chance sensitivity in discrepant filtered and full speech formats

Note: Values represent the number of cases out of 28 for each age group.
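The cross-classification described above can be sketched in a few lines of Python. This is an illustrative sketch only: the scores below are hypothetical and are not data from the study, the function names are introduced here, and the grouping of a score exactly at chance (three of six) with the "below" category is an assumption, since the text does not specify how such scores were handled.

```python
from collections import Counter

CHANCE = 3  # three of six items correct


def classify(score: int) -> str:
    """Label a score 'above' or 'below' chance.

    Assumption: a score exactly at chance is grouped with 'below'.
    """
    return "above" if score > CHANCE else "below"


def cross_classify(filtered, full):
    """Count children in each (filtered, full) cell of the 2 x 2 table."""
    if len(filtered) != len(full):
        raise ValueError("need one score per child in each format")
    return Counter((classify(f), classify(u)) for f, u in zip(filtered, full))


# Hypothetical scores for five children (not data from the study).
filtered_scores = [5, 4, 2, 6, 3]
full_scores = [4, 2, 1, 5, 5]
table = cross_classify(filtered_scores, full_scores)
```

Each key of `table` is a (filtered, full) pair of labels, so the cell for children who are above chance on filtered speech but below chance on full, discrepant speech is `table[("above", "below")]`.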

There were important developmental shifts, however, in the distribution of sensitivity to paralinguistic cues in the two speech formats. Individual 4-year-olds were distributed evenly above (n = 14) and below (n = 14) chance sensitivity in the filtered speech format, yet the majority of these children (n = 23) fell below chance for full, discrepant speech. In contrast, most individual 7-year-olds (n = 21) performed above chance on filtered speech and were more evenly distributed above (n = 16) and below (n = 12) chance sensitivity to paralinguistic cues in full, discrepant speech. With respect to performance on full, discrepant utterances, this sample appeared to represent a transitional group composed of two main subgroups: children with low sensitivity to paralinguistic cues (like the 4-year-old sample) and children with high sensitivity (like the 10-year-old sample). In the 10-year-old sample, most children (n = 21) performed above chance in both the filtered and full speech formats. The observed pattern of sensitivity suggests that proficiency in attributing affect on the basis of prosodic variation in filtered speech appears developmentally prior to proficiency in attention to paralinguistic cues in full, discrepant speech. In only three of 84 cases did children exhibit the opposite pattern: above chance sensitivity to paralanguage in full, discrepant speech and below chance sensitivity in filtered speech. To further explore the possibility that the utilization of F0 cues in filtered speech is associated with attention to paralinguistic cues in full, discrepant speech, a second, quantitative analysis was undertaken.

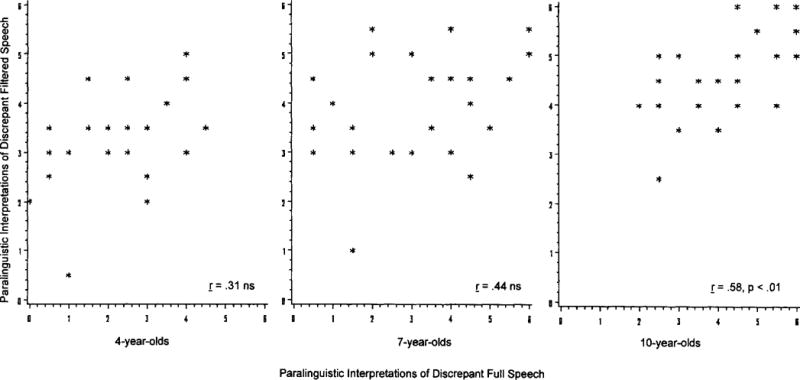

In the second analysis, the number of utterances that children judged in accordance with paralinguistic cues was plotted for filtered, discrepant speech relative to full, discrepant speech for each age group and a Pearson product–moment correlation coefficient was calculated (see Figure 6). If the ability to utilize prosodic variation as a paralinguistic cue is associated with attention to the paralinguistic content of discrepant utterances, then sensitivity to paralanguage in the filtered speech format should correspond to, or precede, sensitivity in full, discrepant speech. The scatterplots reveal that this predicted pattern of performance was obtained, across ages, for approximately two-thirds of the sample. However, there were important differences in the distribution of children’s scores across these formats with age. In particular, at age 4, relative performance across the filtered and full speech formats tended to cluster toward the low end of sensitivity and there was no significant linear relation across formats. In contrast to the 4-year-old sample, 7-year-olds evinced substantial variability in their sensitivity to paralinguistic cues, again suggesting, as in the previous analysis, the possibility of a developmental transition. Importantly, this variability was observed primarily for utterances in full, discrepant speech, whereas performance in the filtered speech format was consistently above chance. Accordingly, as with the 4-year-old sample, the correlation between performance on filtered and full discrepant speech was not significant for the 7-year-old sample. (It should be noted, however, that this analysis was quite conservative, using α = 0.01 with a Bonferroni correction for family-wise error. In a less conservative analysis, the observed correlation, r = 0.44, p = 0.018, would be significant.) At age 10, relative performance in the filtered and full speech formats appeared considerably more uniform than at younger ages, tended to be clustered toward the high end of sensitivity for both formats, and revealed a significant linear relationship (r = 0.58, p < 0.01).
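To make the comparison between the two reported correlations concrete, the following minimal Python sketch converts each Pearson r into the corresponding t statistic (with n − 2 degrees of freedom) and checks the reported 7-year-old p value against two criteria. It is a sketch under stated assumptions, not the analysis used in the study: the function name `r_to_t` is introduced here, and the 0.05 level is assumed as the "less conservative" criterion, which the text does not name explicitly.

```python
import math


def r_to_t(r: float, n: int) -> float:
    """t statistic for testing a Pearson r against zero, with n - 2 df."""
    if n < 3 or not -1 < r < 1:
        raise ValueError("need n >= 3 and -1 < r < 1")
    return r * math.sqrt((n - 2) / (1 - r ** 2))


N_PER_GROUP = 28  # children per age group (Figure 6 caption)

t_age7 = r_to_t(0.44, N_PER_GROUP)   # 7-year-olds, r = 0.44
t_age10 = r_to_t(0.58, N_PER_GROUP)  # 10-year-olds, r = 0.58

# Reported p value for the 7-year-olds, compared against two criteria.
p_age7 = 0.018
significant_at_01 = p_age7 < 0.01  # criterion stated in the paper
significant_at_05 = p_age7 < 0.05  # assumed "less conservative" criterion
```

With 28 children per group, the same conversion applies to both correlations, so the difference in the resulting t values reflects only the difference in r.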

Figure 6.

Individual sensitivity to paralanguage in discrepant utterances as a function of sensitivity to paralanguage in filtered speech. Values are the number of stimuli judged in accordance with paralinguistic cues in each format for each individual. The maximum possible score was 6. Multiple occurrences of the same relative scores on both axes result in some hidden observations; for each group, n = 28.

Both individual differences analyses indicate that, across ages, the basic relation between sensitivity to paralinguistic cues in filtered and full speech formats remains constant (i.e. in most cases, sensitivity to filtered speech corresponds to or precedes sensitivity to full, discrepant speech). Even so, the correlational data suggest that sensitivity to prosodic variation is not significantly related to 4-year-olds’ interpretations of affectively discrepant messages. Rather, 4-year-olds show variable sensitivity to prosodic variation in filtered speech and extremely limited sensitivity to paralanguage in full, discrepant speech. From age 4 to age 7, sensitivity in both formats improves but the relation between formats is not significant. It is not until 10 years of age that children’s performance across filtered and full speech formats appears to consolidate and a clear linear relationship emerges between sensitivity to prosodic variation in filtered speech and attention to paralinguistic content in full, discrepant speech.

Discussion

The observed developmental changes in paralinguistic sensitivity from 4 to 10 years of age are consistent with a model of vocal affect perception in which the ability to use specific acoustic cues paralinguistically improves over the course of childhood. As predicted, in cases of discrepancy, 4-year-olds made affective attributions based on lexical content. As a result, 4-year-olds’ poorest performance was obtained for full, discrepant, utterances. Evidence of limited sensitivity to the paralinguistic function of prosodic variation was provided by 4-year-olds’ chance sensitivity, on average, to filtered speech. The fact that 4-year-olds were sensitive to paralinguistic cues (71% correct) in reiterant speech suggests that children’s sensitivity in cases of discrepancy is not based upon the gradual acquisition of sensitivity to paralinguistic cues. The pattern of performance is clear: 4-year-olds are sensitive to paralinguistic information when multiple cues (prosodic variation and harmonic structure) and no competing words are present (as in the reiterant speech format). They exhibit limited sensitivity to paralanguage carried by prosodic variation alone, and when faced with discrepancy between lexical and paralinguistic cues 4-year-olds do not selectively attend to vocal paralanguage.

Seven-year-olds evinced a trend toward greater sensitivity, relative to 4-year-olds, to paralanguage in filtered and in full discrepant speech; however, this trend reached significance for filtered speech only. The analysis of individual performance revealed that, whereas 7-year-olds showed greater sensitivity to full discrepant speech, relative to 4-year-olds, they also displayed greater variability. Nearly one-half of 7-year-olds performed similarly to 4-year-olds (i.e. basing their attributions primarily on lexical content) while the other half performed more similarly to 10-year-olds (basing their attributions primarily on paralinguistic cues). In contrast, 21 of 28 7-year-olds showed above chance sensitivity to paralanguage in filtered speech. This pattern of findings suggests that sensitivity to the paralinguistic function of prosodic variation in the speech stream may precede sensitivity to paralanguage in the presence of competing lexical content. Seven-year-olds appear to represent a transitional group with respect to their ability to utilize their demonstrated sensitivity to paralanguage (in filtered and reiterant speech) in the service of interpreting discrepant utterances.

Improvement in sensitivity to paralanguage in full discrepant speech was observed for the 10-year-old sample. Importantly, it is at this age that a significant relation between performance in the filtered and full speech formats obtains. This reflects a synthesis of sensitivity to the paralinguistic function of prosodic variation with attention to paralanguage in the context of discrepancy, an integration that had only begun to emerge in the 7-year-old sample. The shift toward sensitivity to the paralinguistic function of acoustic cues appears to be fairly protracted, particularly in cases of discrepancy. This seems reasonable given evidence that prosodic variations play an important role in the facilitation of speech comprehension even among older children and adults (Cutler & Foss, 1977; Aitchison & Chiat, 1981; Cutler & Norris, 1988; Jusczyk, 1993; Cutler, 1996). The integration of the prosodic and paralinguistic functions of acoustic variations in the speech stream appears to extend until at least age 7 and is not realized in a significant reduction in lexical interpretations of discrepancy until sometime between ages 7 and 10.

The fact that all groups performed relatively well in the reiterant format indicates that the developmental changes observed in paralinguistic sensitivity in filtered and full discrepant speech cannot be explained on the basis of a limited ability to extract affective information from acoustic cues in general. Clearly, across ages, children were able to extract affective information from acoustic cues in the present experiment given that the following two conditions were met: (1) conflicting lexical content was absent; and (2) a complex configuration of acoustic cues (prosodic variation and harmonic structure) was present. This is important because it suggests that even the youngest children comprehended the task and that the observed developmental changes in sensitivity across speech formats have implications for understanding the emerging integration of linguistic and affective processing.

The effects obtained in the present experiment are viewed as emerging out of the process of selective attention to language. Specifically, it is proposed that prosodic variation facilitates the efficient processing of the child’s native language by focusing attention on those aspects of the speech stream that are most relevant to comprehension. In this view, affective information conveyed paralinguistically is secondary in importance to affective information conveyed lexically. Paralinguistic information is relegated to a subordinate role in which it may modify lexical information but not supplant it.

Developing facility in the paralinguistic interpretation of discrepant utterances involves a two-step process: first, the utilization of speech prosody toward paralinguistic ends, and second, shifting attention from, or inhibiting, salient lexical information. The first part of this process was evident in the developmental changes observed in children’s sensitivity to the paralinguistic function of prosodic variation in filtered speech. The second part of this process was evident in the developmentally subsequent improvement in the ability to attribute meaning on the basis of paralanguage in discrepant speech. As a whole, this process may reflect a shift from local (lexicon-based) to global (paralanguage-based) attributions of meaning.

Importantly, the lexical bias investigated in the present research is expected to apply generally to all cases in which a discrepancy exists between lexical and prosodic cues, not just to those cases relevant to affect detection. For example, Moore, Harris and Patriquin (1993) have found that 4-year-olds are unable to utilize pitch markers (rising or falling intonation) as cues to relative certainty and rely instead upon the lexical terms ‘think’ and ‘know’. In this case, children were unable to utilize speech prosody as a cue to speaker certainty. In the present view, the mechanism underlying this inability reflects a bias to attend to the salience of acoustic markers in highlighting lexical content.

Two additional mechanisms that may influence children’s sensitivity to vocal paralanguage are the role of experience in either promoting or delaying sensitivity and the extent to which children’s judgments are influenced by factors such as uncertainty. With regard to the first issue, it is expected that the bias toward lexical interpretations of utterances may have adaptive consequences for language acquisition and may therefore be somewhat resistant to environmental influences. After all, in the majority of cases, one could expect a lexical bias to lead to correct conclusions about utterance meaning (thanks to an anonymous reviewer for this point). However, further research is required to determine the extent to which the developmental sequence reported may be modulated by extensive exposure to particular kinds of communication or by explicit training.

With regard to the role of uncertainty, the present experiment took a signal detection approach to assessing children’s sensitivity to the difference between happy and angry affective vocalizations. In this approach, it is important to obtain children’s ‘best guesses’ even in those cases in which they are uncertain. Indeed, children’s errors as well as their correct judgments are extremely important in establishing an estimate of the perceptual distance between these two types of vocalization. For this reason, a forced-choice response format was utilized. Nevertheless, uncertainty ratings might further clarify the pattern of results obtained across ages and speech formats. For example, a lexical bias may be most evident when children are uncertain about the meaning of a stimulus. A potentially useful technique in future research may be a combination of a forced-choice approach followed by a certainty rating.

In the present paper, a working model based on a bias emerging from selective attention to language has been proposed to account for the observed developmental changes in sensitivity to vocal paralanguage. An impetus for developing such a general model is that, in emotion as well as in other domains, discrepancy between lexical and paralinguistic cues results in interference for young children. Directions for future research include (1) establishing the age of onset of a bias to attend to lexical content and the relation of this bias to the language acquisition process, (2) specifying the role of working memory in permitting children to consider both lexical and paralinguistic cues in utterance interpretation, and (3) evaluating the role of social-cognitive factors specific to the domain of emotions such as hypothesis testing about the reliability of lexical versus paralinguistic cues.

Acknowledgments

This research was supported, in part, by Haskins Laboratories through grants NICHD #T32-HD07318 awarded to the Department of Psychology, University of Florida, NICHD #HD-07893 awarded to the author, and NIMH #R03MH44032 awarded to M. Jeffrey Farrar. Data collection was conducted under the joint sponsorship of the University of California, Irvine, and the University of Florida. A report of this research was presented at the biennial meeting of the Society for Research in Child Development, April 1995. Grateful acknowledgement is extended to several schools for their participation: University Montessori, Westpark Montessori, Irvine Child Development Center, Verano Place Preschool, Jenny Hart Early Education Center, Children’s Center, Irvine Community Nursery School, South Coast Early Childhood Center, Good Shepherd Preschool, Turtle Rock Preschool, UCI Extended Day Care, The Dolphin Club, UCI Farm School, Anderson Elementary School, Harborview Elementary School and Mariner’s Elementary School. Gratitude is also extended to M. Jeffrey Farrar, Dan I. Slobin, Brian MacWhinney, Helen Heyming and anonymous reviewers for their thoughtful comments on earlier versions of this manuscript.

References

- Aitchison J, Chiat C. Natural phonology or natural memory? The interaction between phonological processes and recall mechanisms. Language and Speech. 1981;24:311–326.

- Argyle M, Alkema F, Gilmour R. The communication of friendly and hostile attitudes by verbal and non-verbal signals. European Journal of Social Psychology. 1971;1:385–402.

- Bugental DE, Kaswan JW, Love LR. Perception of contradictory meanings conveyed by verbal and nonverbal channels. Journal of Personality and Social Psychology. 1970;16:647–655. doi: 10.1037/h0030254.

- Clarkson MG, Clifton RK. Infant pitch perception: evidence for responding to pitch categories and the missing fundamental. Journal of the Acoustical Society of America. 1985;77:1521–1528. doi: 10.1121/1.391994.

- Clarkson MG, Clifton RK, Perris EE. Infant timbre perception: discrimination of spectral envelopes. Perception and Psychophysics. 1988;43:15–20. doi: 10.3758/bf03208968.

- Cutler A. Prosody and the word boundary problem. In: Morgan JL, Demuth K, editors. Signal to syntax: Bootstrapping from speech to grammar in early acquisition. Mahwah, NJ: Lawrence Erlbaum; 1996.

- Cutler A, Foss DJ. On the role of sentence stress in sentence processing. Language and Speech. 1977;20:1–10. doi: 10.1177/002383097702000101.

- Cutler A, Mehler J. The periodicity bias. Journal of Phonetics. 1993;21:103–108.

- Cutler A, Norris D. The role of strong syllables in segmentation for lexical access. Journal of Experimental Psychology: Human Perception and Performance. 1988;14:113–121.

- DeCasper AJ, Fifer WP. Of human bonding: newborns prefer their mothers’ voices. Science. 1980;208:1174–1176. doi: 10.1126/science.7375928.

- Fernald A. Approval and disapproval: infant responsiveness to vocal affect in familiar and unfamiliar languages. Child Development. 1993;64:657–674.

- Fernald A, Mazzie C. Prosody and focus in speech to infants and adults. Developmental Psychology. 1991;27:209–221.

- Friend M, Bryant JB. A developmental lexical bias in the interpretation of discrepant messages. Merrill-Palmer Quarterly. 2000;46:140–167.

- Friend M, Farrar MJ. A comparison of the validity of content-masking procedures for obtaining judgments of discrete affective states. Journal of the Acoustical Society of America. 1994;96:1283–1290. doi: 10.1121/1.410276.

- Friend M, Mahelona CL. The functional significance of facial, vocal, and lexical signals: Bridging the gap between infancy and early childhood. Paper presented at the Society for Research in Child Development. Washington, DC: 1997.

- Garnica OK. Some prosodic and paralinguistic features of speech to young children. In: Snow CE, Ferguson CA, editors. Talking to children. Cambridge: Cambridge University Press; 1978. pp. 63–88.

- Hirsh-Pasek K, Kemler Nelson DG, Jusczyk PW, Cassidy KW, Druss B, Kennedy L. Clauses are perceptual units for young infants. Cognition. 1987;26:269–286. doi: 10.1016/s0010-0277(87)80002-1.

- Jerger S, Martin RC, Pirozzolo FJ. A developmental study of the auditory Stroop effect. Brain and Language. 1988;35:86–104. doi: 10.1016/0093-934x(88)90102-2.

- Jusczyk PW. From general to language-specific capacities: the WRAPSA model of how speech perception develops. Journal of Phonetics. 1993;21:3–28.

- Jusczyk PW, Hirsh-Pasek K, Kemler Nelson DG, Kennedy L, Woodward A, Piwoz J. Perception of acoustic correlates of major phrasal units by young infants. Cognitive Psychology. 1992;24:252–293. doi: 10.1016/0010-0285(92)90009-q.

- Katz GS, Cohn JF, Moore CA. A combination of vocal F0 dynamic and summary features discriminates between three pragmatic categories of infant directed speech. Child Development. 1996;67:205–217. doi: 10.1111/j.1467-8624.1996.tb01729.x.

- Ladd DR, Silverman KEA, Tolkmitt F, Bergman G, Scherer KR. Evidence for the independent function of contour type, voice quality, and F0 range in signaling speaker affect. Journal of the Acoustical Society of America. 1985;78:435–444.

- Lawrence LL, Fernald A. When prosody and semantics conflict: infants’ sensitivity to discrepancies between tone of voice and verbal content. Paper presented at the biennial meeting of the Society for Research in Child Development. New Orleans, LA: 1993.

- Liberman MY, Streeter LA. Use of nonsense-syllable mimicry in the study of prosodic phenomena. Journal of the Acoustical Society of America. 1978;63:231–233.

- Lieberman P, Michaels SB. Some aspects of fundamental frequency and envelope amplitude as related to the emotional content of speech. Journal of the Acoustical Society of America. 1962;34:922–927.

- Mehrabian A, Wiener M. Decoding of inconsistent communications. Journal of Personality and Social Psychology. 1967;6:109–114. doi: 10.1037/h0024532.

- Moore C, Harris L, Patriquin M. Lexical and prosodic cues in the comprehension of relative certainty. Journal of Child Language. 1993;20:153–167. doi: 10.1017/s030500090000917x.

- Morgan JL. Converging measures of speech segmentation in preverbal infants. Infant Behavior and Development. 1994;17:389–403.

- Morrongiello BA, Roes CL. Children’s memory for new songs: integration or independent storage of words and tunes? Journal of Experimental Child Psychology. 1990;50:25–38. doi: 10.1016/0022-0965(90)90030-c.

- Nakatani LH, Schaffer JA. Hearing ‘words’ without words: prosodic cues for word perception. Journal of the Acoustical Society of America. 1978;63:234–245. doi: 10.1121/1.381719.

- O’Sullivan M, Ekman P, Friesen W, Scherer K. What you say and how you say it: the contribution of speech content and voice quality to judgments of others. Journal of Personality and Social Psychology. 1985;48:54–62. doi: 10.1037//0022-3514.48.1.54.

- Peters A. Language segmentation: operating principles for the perception and analysis of language. In: Slobin DI, editor. The crosslinguistic study of language acquisition, Vol 2: Theoretical issues. Hillsdale, NJ: Lawrence Erlbaum; 1985. pp. 1029–1067.

- Reilly SS, Muzekari LH. Effects of emotional illness and age upon the resolution of discrepant messages. Perceptual and Motor Skills. 1986;62:823–829. doi: 10.2466/pms.1986.62.3.823.

- Scherer KR. Emotion expression in speech and music. In: Sundberg J, Nord L, Carlson R, editors. Music, language, speech, and brain. London: Macmillan; 1991. pp. 146–156. (Wenner-Gren Center International Symposium Series).

- Scherer KR, Oshinsky JS. Cue utilization in emotion attribution from auditory stimuli. Motivation and Emotion. 1977;1:331–346.

- Scherer KR, Banse R, Wallbott HG, Goldbeck T. Vocal cues in emotion encoding and decoding. Motivation and Emotion. 1991;15:123–148.

- Solomon D, Ali FA. Age trends in the perception of verbal reinforcers. Developmental Psychology. 1972;7:238–243.

- Solomon D, Yaeger J. Effects of content and intonation on the perception of verbal reinforcers. Perceptual and Motor Skills. 1969;28:319–327. doi: 10.2466/pms.1969.28.1.319.

- Street RL Jr. The communicative functions of paralanguage and prosody. In: Giles H, Robinson WP, editors. Handbook of Language and Social Psychology. Chichester: Wiley; 1990.

- Svejda MJ. The development of infant sensitivity to affective messages in the mother’s voice. (University Microfilms No. 8209948). Dissertation Abstracts International. 1981;42(11):4623B.

- Whalen DH, Wiley ER, Rubin PE, Cooper FS. The Haskins Laboratories’ pulse code modulation (PCM) system. Behavior Research Methods, Instruments, and Computers. 1990;22:550–559.