Abstract

In an effort to improve patient safety, researchers at the Johns Hopkins University designed and implemented a comprehensive Web-based Intensive Care Unit Safety Reporting System (ICUSRS). The ICUSRS collects data about adverse events and near misses from all ICU staff. This report presents data from 854 reports submitted by 18 diverse ICUs across the United States. Reporting is voluntary, and the data collected are confidential, with patient, provider, and reporter information deidentified. Preliminary data include system factors reported, degree of patient harm, reporting times, and evaluations of the system. Qualitative and quantitative data are reported back to the ICU site study teams and frontline staff through monthly reports, case discussions, and a quarterly newsletter.

In its report "To Err Is Human,"1 the Institute of Medicine (IOM) called attention to health care errors as a nationwide patient safety problem, responsible for a significant number of deaths each year. The report identified system failures, rather than individual incompetence, as the primary cause of these errors and noted that attempts to track and reduce health care errors lag far behind safety improvements in other high-risk industries. In aviation, the Aviation Safety Reporting System (ASRS) was implemented in 1976 to collect reports of errors and near misses. The ASRS has received more than 474,000 reports to date and issued 2,500 safety alerts, with action taken on 42% of these alerts,2 and it has significantly reduced deaths in commercial aviation.1

A key factor in aviation's success3,4 is that its incident reporting focuses on fixing systems rather than on blaming individuals. The IOM report generated many programs to improve safety, but health care institutions continue to struggle with how to encourage medical providers to report errors and near misses. A major roadblock to identifying hazards and mistakes is the oath all medical providers take to "do no harm"2 and the shame, guilt, and liability associated with mistakes that harm patients.5

Billings6 and other researchers argue that a successful reporting system should be voluntary, nonpunitive, easy to complete, and capable of providing feedback to staff.6,7,8 Given strong evidence that virtually every patient admitted to an intensive care unit (ICU) experiences a potentially life-threatening error,9,10 incident reporting is clearly essential for learning how to reduce the likelihood of medical mistakes.3,11

There are a number of Web-based event reporting systems in health care. U.S. Pharmacopeia's MedMarx system (http://www.medmarx.com) and the Institute for Safe Medication Practices' Medication Errors Reporting Program (http://www.ismp.org/Pages/communications.asp) collect data on medication errors. The Emergency Care Research Institute (ECRI) (http://www.ecri.org) collects reports of adverse events involving medical products. Mekhjian et al.12 recently implemented a voluntary reporting system that collects data about errors, events, and near misses at a single institution, Ohio State University. All of these systems focus on a specific type of event (e.g., medications) or are limited to one institution. In contrast, our system collects all types of incidents from multiple ICUs that do not belong to the same health system.

This report describes the design and implementation of a comprehensive Web-based safety reporting system (ICUSRS) in a cohort of diverse ICUs. The ICUSRS links multiple ICUs in an effort to assemble a community of learning regarding safety problems. This approach is particularly important for rare events that lead to significant harm, where a rare event at a single hospital may not stimulate improvement efforts. The objectives of this project were to develop an incident reporting system that caregivers in a diverse group of ICUs would use; to identify factors contributing to incidents from a systems perspective; and to disseminate lessons learned in an effort to broadly improve safety.

Background

The ICUSRS was developed by a team of medical and public health researchers at the Johns Hopkins University as part of a demonstration project funded by the Agency for Healthcare Research and Quality. Through a partnership with the Society of Critical Care Medicine (SCCM), 30 ICUs across the United States were identified and recruited to participate in the ICUSRS project. The goal was to recruit geographically diverse hospitals that were willing to participate in reporting and improvement efforts, rather than to obtain a representative sample of hospitals or ICUs. Participants included both adult and pediatric ICUs, covering surgical, medical, trauma, and cardiac services. A study team was assembled at each ICU site, including the ICU director or another ICU attending (principal investigator), the nurse manager (coprincipal investigator), a nurse educator, and a risk manager.

Developing an incident reporting system that would accommodate the needs of a diverse group of ICUs, caregivers, and incidents was a challenge. While the aviation industry collects over 30,000 reports annually,8,13 most attempts to collect similar data in health care have resulted in underreporting.2,9 Factors most often cited as causes of underreporting include time pressures, fear of punitive or liability actions, lack of perceived benefit, worries about reputation and peer disapproval, and staff underestimation or misunderstanding of what constitutes a reportable event.14,15,16,17 Barach et al.18 estimate that between 50% and 96% of incidents go unreported each year.

Usability Issues

To address underreporting and develop a system that ICU staff would use more often,2,9,18,19 we sought to remove as many barriers to reporting as possible. Our first decision was to use electronic reporting rather than paper or scannable forms. Use of computers and Web technology in health care was growing, particularly in ICUs, where a computer is increasingly stationed at every bedside. We expected an electronic format to be more efficient and cost-effective than paper or scanned forms because it would eliminate lost forms, illegible handwriting, and double data entry (from paper to database), along with some sources of data entry error. We chose a Web-based interface, which is accessible from any computer with Internet access, whether in the workplace, at home, or on the road.

To help move away from a culture of blame and reduce providers' reluctance to report incidents, we made the ICUSRS voluntary, anonymous, and confidential. While virtually all medical institutions have some in-hospital mechanism for reporting incidents, these systems are typically mandatory and not anonymous, focus blame on individuals,20 and as a result suffer from severe underreporting.2

Deciding how much information we could collect without breaching provider and patient confidentiality was another challenge. We do not ask for names, and we avoid collecting data that could identify individual patients, providers, or organizational units. For example, we ask for the month and year of the incident but not the exact day, and for the patient's age range instead of an exact age (http://icusrs.org, select the Member Zone tab, then the Training Form tab). We do not record IP addresses. Reporters who want to guarantee anonymity can elect not to identify their ICU, although we request ICU identifiers solely to send individualized data to each ICU site and to give each ICU ownership of its data. Because reports are anonymous, multiple ICU staff members may report the same incident. Since the purpose of the ICUSRS is to focus on system failures (i.e., how work is organized) and their effect on outcomes,21 not on specific patients or medical providers, we are not opposed to multiple reports of the same incident. Each provider adds a perspective based on their professional background, which may help to illuminate factors contributing to that incident.
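The coarsening described above (month and year instead of a full date, an age range instead of an exact age, no names, and an optional ICU identifier) can be sketched as a small transformation applied before a record is stored. This is a minimal illustration, not the ICUSRS implementation: the field names and the age bands are hypothetical, because the text does not list the actual ranges.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Hypothetical age bands; the actual ICUSRS age ranges are not listed in the text.
AGE_BANDS = [(0, 17, "0-17"), (18, 44, "18-44"), (45, 64, "45-64"), (65, 150, "65+")]


@dataclass
class RawIncident:
    """What a reporter might know at the bedside (hypothetical fields)."""
    reporter_name: str
    patient_name: str
    incident_date: date
    patient_age: int
    icu_id: Optional[str]  # None when the reporter declines to identify the ICU
    narrative: str


def age_band(age: int) -> str:
    """Map an exact age onto a coarse range."""
    for low, high, label in AGE_BANDS:
        if low <= age <= high:
            return label
    raise ValueError(f"age out of range: {age}")


def deidentify(raw: RawIncident) -> dict:
    """Build the stored record: no names, no exact day, no exact age."""
    return {
        "month": raw.incident_date.month,
        "year": raw.incident_date.year,
        "age_band": age_band(raw.patient_age),
        "icu_id": raw.icu_id,
        # Free text can still contain identifiers; the text says these are
        # removed by hand before monthly reports are sent to sites.
        "narrative": raw.narrative,
    }
```

The design choice worth noting is that identifying fields are dropped at the point of storage rather than filtered at display time, so the stored record never holds a name, an exact day, or an exact age.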

The ICUSRS study has two levels of confidentiality and protection of data against discoverability. First, the ICUSRS is a research project, and the data collected under it are protected by Institutional Review Board (IRB) approval through the Johns Hopkins University School of Medicine. In addition, each ICU site obtained IRB approval through its internal peer review process. IRB approval protects these data from discoverability in a court of law. Second, our project is funded by the Agency for Healthcare Research and Quality (AHRQ). Projects funded by AHRQ are protected under 42 U.S.C., Section 301(d), which states that "projects may not be compelled to identify names or other identifying information from the database in Federal, State, or local courts," and the data collected during the period of AHRQ funding are protected in perpetuity. It should be noted, however, that this protection from discoverability has not yet been tested in court, and state laws regarding discoverability of data vary widely. There is Congressional interest in stronger legal protection for information voluntarily reported for the purposes of patient safety and quality improvement, as evidenced by recent action on related bills in the House and Senate.

Other issues we had to address were staff time pressures, staff interest in avoiding duplicate reporting, and the perception that reporting incidents produced no benefit. The reporting form needed to be as short as possible for rapid entry, yet request enough information to support analysis of contributing system factors. Check boxes and drop-down menus were used to limit typing and to prompt reporters for important aspects of the incident that might otherwise be overlooked. Pop-up windows provide definitions and examples to make questions clearer to the user.

Despite the benefits of check boxes, experience from the Food and Drug Administration (FDA), ASRS,6 and others21 suggests that information from check boxes alone is insufficient to understand what happened; additional context surrounding the incident is needed. To offset the possible bias of check boxes guiding responses and to enrich the data collected, we also included free-text boxes. These give users an opportunity to tell us in their own words what happened, which often provides a richer account of the incident and the context in which it occurred.

Frontline staff were very concerned that implementing the ICUSRS would require them to report the same incident more than once. We therefore tried to minimize duplicate reporting of information to the ICUSRS and to each ICU site's existing reporting systems. Some duplicate reporting was unavoidable at sites where risk management wanted to maintain its own reporting mechanisms. To help reduce duplication, we incorporated questions about medical devices and medication errors. The FDA Center for Devices and Radiological Health requires that caregivers report problems with equipment and devices. We therefore partnered with the FDA and included a series of questions on our Web-based survey about the role of equipment and medical devices in the reported incident. Data abstracted from these questions are forwarded to the FDA without ICU site identifiers. Because medication errors are common11,22 and in-hospital reporting methods for them already exist, we also included basic questions about medication errors. These questions resemble those of the MedMarx reporting system, whose format is familiar to many hospitals, and address the route of administration and the point in the prescribing or dispensing process at which the incident occurred.

A print option was also provided to encourage use and possible adoption of the ICUSRS. Printing in real time allows ICU sites to use reports for case discussions or for local quality improvement or risk management efforts. Printed reports omit patient, provider, and ICU identifiers, thus safeguarding anonymity and confidentiality. However, the printed version provides a blank box at the top of page one where a patient's name, hospital ID, and other information can be added for more detailed follow-up by risk management, quality improvement, or ICU leaders.

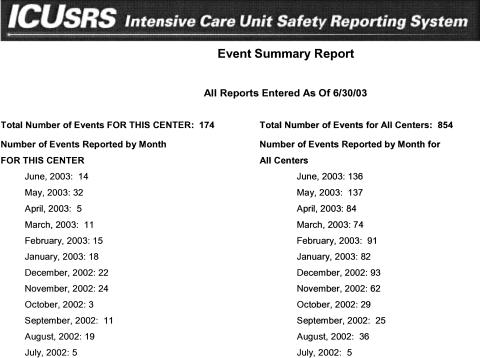

Finally, we attempted to address staff attitudes that "their efforts to report incidents are wasted because there is little, if any, feedback, particularly from mandatory reporting systems." To change these attitudes, we designed a monthly report, sent in PDF format to each ICU site, covering cases submitted in the previous month (Figure 1). The report includes quantitative data specific to the ICU site (left column) and aggregate data from all other participating ICU sites (right column) for that month. The ICU site study team sees the text description and system factors reported for each incident, along with other quantitative data such as total events to date, types of events reported, and types of providers reporting. ICU site teams use the monthly report to identify areas in which safety can be improved in their ICU. Before reports are sent, they are read by a research scientist with a background in critical care nursing and outcomes research or by the Research Program Coordinator, who has a background in health services research and medicine; the reader removes all identifiers (i.e., provider names, institution names, specific dates). Two cases, one adverse event and one near miss, are also highlighted in discussion format and sent with the monthly report (adverse event: http://icusrs.org/caseconf.cfm; near miss: http://icusrs.org/success.cfm).

Figure 1.

Monthly ICU report.
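The two-column layout of the monthly report amounts to splitting one month's reports into the receiving site's counts and the counts for all other sites. The sketch below is a hypothetical illustration of that split; the dictionary keys are invented, and sending unattributed reports (no ICU identifier) to the aggregate column is an assumption made here, not something the text specifies.

```python
from collections import Counter


def monthly_columns(reports, site, year, month):
    """Split one month's reports into this site's counts and all other sites' counts.

    Each report is a dict with hypothetical keys "icu_id", "year", "month",
    and "event_type". Reports with no ICU identifier (icu_id None) cannot be
    attributed to a site, so this sketch places them in the aggregate column.
    """
    site_counts, other_counts = Counter(), Counter()
    for r in reports:
        if (r["year"], r["month"]) != (year, month):
            continue  # outside the reporting month
        column = site_counts if r["icu_id"] == site else other_counts
        column[r["event_type"]] += 1
    return site_counts, other_counts
```

For example, calling `monthly_columns(reports, "A", 2003, 2)` returns the left-column counts for a hypothetical site "A" and the right-column counts for everyone else in February 2003.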

Web-Based System Platform

In launching a Web-based reporting system, we also had to address compatibility with existing computer technology at participating ICU sites. Our system needed to be easily accessible through the most common Internet browsers (e.g., Netscape, Internet Explorer), with limited graphic content to accommodate slower computers and lower-bandwidth connections.

Two Dell PowerEdge 2500 SC Pentium III servers, each connected to an APC Back-UPS 1400 uninterruptible power supply, house the Web interface and database. One server hosts the SQL Server database, and the second runs Microsoft IIS and ColdFusion, the latter handling front-end entry of data into the database. The Web interface is encrypted with Secure Sockets Layer (SSL) technology, requiring that users have Internet Explorer 5 or higher, or a comparable Web browser, with 128-bit encryption.

We contracted with Davison and Associates (Silver Spring, MD) to program the Web interface and database. They were chosen for their considerable experience with Web-based survey design and data management projects and their previous work for organizations requiring high-security Web sites (e.g., the Federal Bureau of Investigation [FBI] and FDA).

Data Collected

While the first objective was to build a system staff would use, the ICUSRS study was also designed to collect as much information as possible about harmful or potentially harmful incidents in ICUs and to apply these data to patient safety improvement efforts. The ICUSRS collects information about all types of incidents, i.e., events that could or did lead to patient harm, whether they involved a medication, a device, a fall, or another event. Classification of harm from adverse events varies widely,23,24 and most schemes focus on biologic harm. We measured harm from a broad perspective. To evaluate types of patient harm, we asked whether the patient died or suffered physiologic changes, physical injury, psychological distress, or discomfort; whether there was patient or family dissatisfaction; and whether there was an anticipated or actual increase in length of stay.

We define an incident that causes harm as an adverse event (e.g., drug overdose) and an incident that does not cause harm as a near miss (e.g., a wrong medication dose in patient orders caught before administration). The literature and guidelines offer many definitions of adverse events and near misses: some separate preventable from nonpreventable events, some include all harm, and some evaluate errors regardless of whether they led to harm25,26 (www.ahrq.gov). For example, the IOM defines an adverse event as "an injury resulting from a medical intervention" and a near miss as the "occurrence of an error that did not result in harm."2 We recognized that little is known about the relative value of reporting near misses versus adverse events, and some suggest that near misses, or "close calls," yield information as important as that from adverse events.8 As a result, we kept the definitions clear and encouraged staff to report all incidents regardless of whether they led to harm.

The data collected from frontline staff through free text and structured check boxes are then coded by experts in critical care medicine. Initially, 50% (N = 426) of cases were independently coded by a second person, and the two codings were compared to determine inter-rater reliability. Because inter-rater reliability was 99.6%, the proportion of cases recoded was reduced to 20%. Inter-rater reliability testing continues in order to maintain the integrity of coding and data analysis. Coding and analyzing free text is challenging, and we are developing a taxonomy for coding qualitative data. We are also researching methods for automatically coding event types and system factors from free-text data. Because this is a multisite system, automatic coding of text is likely to be more feasible than continued hand coding.
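The text reports inter-rater reliability as 99.6% without naming the statistic. The sketch below assumes simple percent agreement between the two coders and also computes Cohen's kappa, a standard measure that corrects agreement for chance; the paper does not say kappa was used. The code labels in the example are hypothetical.

```python
from collections import Counter


def percent_agreement(coder_a, coder_b):
    """Share of cases to which both coders assigned the same code."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("need two non-empty code lists of equal length")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return matches / len(coder_a)


def cohens_kappa(coder_a, coder_b):
    """Agreement corrected for the agreement expected by chance."""
    n = len(coder_a)
    p_observed = percent_agreement(coder_a, coder_b)
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    # Chance agreement: product of each coder's marginal counts, summed over codes.
    p_chance = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_chance == 1:
        # Both coders used one identical code throughout; kappa is undefined,
        # so this sketch treats it as perfect agreement.
        return 1.0
    return (p_observed - p_chance) / (1 - p_chance)


coder_1 = ["team", "team", "patient", "task"]
coder_2 = ["team", "team", "patient", "patient"]
print(percent_agreement(coder_1, coder_2))  # 0.75
print(round(cohens_kappa(coder_1, coder_2), 3))  # 0.6
```

When most cases fall into a few codes, kappa can be noticeably lower than raw agreement, which is one reason to report which statistic a reliability figure refers to.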

The science of incident reporting is immature. Based on discussions with other groups, such as the FDA's device reporting system (www.fda.gov/medwatch/index.html), the Australian Incident Monitoring Study in Intensive Care,27 ASRS,6 and the Veterans Administration,21,28 and on our own experience, it is unclear what relative value structured versus text data provide. One important goal of our study was to better understand the contributions of structured versus text data to improving patient safety. We hope to delineate the value of each in future analyses.

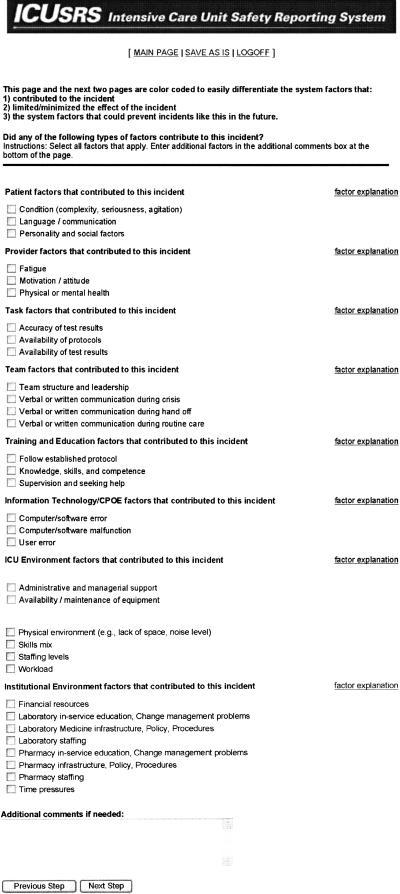

Following the IOM report and other studies,2,29 we viewed system failures as the driving force in investigating incidents. Accordingly, the ICUSRS asks a series of questions about factors that contributed to the incident (Figure 2), factors that limited the impact of the incident, and the reporter's perception of how this type of incident could be prevented in the future. We categorized how work is organized into patient, provider, task, team, training/education, information technology, ICU environment, and institutional environment factors to better understand where system failures occur (Table 1). Information technology included computerized physician order entry (CPOE) and other technology systems. Three ICU sites implemented some form of information technology system; because these sites did not start using the systems until the last quarter of the first year of reporting to the ICUSRS, data for this system factor are not included in this report. Other data collected include the patient's clinical status (e.g., therapies being received at the time of the incident), general details of the incident (e.g., when and where it occurred), degree of patient harm, and the health care providers involved in the patient's care and in the incident.

Figure 2.

Factors contributing to the incident.

Table 1.

Contributing System Factors Reported

| System Factors | Percent of Incidents* (N = 854) | Percent of Factors (N = 1,858) | Examples |
|---|---|---|---|
| Training & education | 54% | 37% | Knowledge, skills, and competence; failure to follow established protocol; supervision and seeking help |
| Team factors | 31% | 17% | Verbal or written communication during hand-off; verbal or written communication during routine care; verbal or written communication during crisis; team structure and leadership |
| Patient factors | 27% | 14% | Condition (acuity, agitated); language/communication barrier; personality and social factors (religious beliefs) |
| ICU environment | 22% | 13% | Staffing levels; skills mix (intern & new RN, no senior physician); workload; availability/maintenance of equipment; administrative & managerial support; physical environment (e.g., lack of space, noise level) |
| Provider factors | 19% | 10% | Fatigue; motivation/attitude; physical or mental health |
| Task factors | 10% | 5% | Availability of protocols; availability of test results; accuracy of test results |
| Institutional environment | 8% | 4% | Financial resources; time pressures |

*Percent of all incidents with at least one of these factors checked.

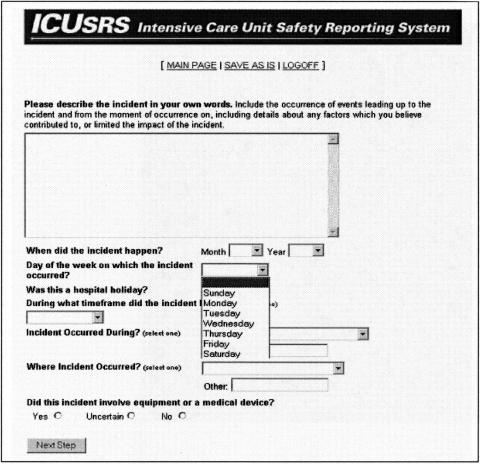

Although our reporting system was developed for ICU implementation, the questions are generic and could be used in other areas of the hospital. However, many of the drop-down menus provide ICU-specific options. For example, response options for "Where Incident Occurred?" are Within the ICU; Operating room; Transportation within hospital; Transportation outside hospital; and During testing outside the ICU (Figure 3).

Figure 3.

Drop-down menu with ICU-specific options.

There is also an opportunity for reporters to evaluate the ICUSRS by completing a five-item questionnaire at the end of the reporting system. Two of the questions are open ended and three are check boxes.

Entering a Report

Any ICU staff member who sees an incident can report to the ICUSRS. This includes, but is not limited to, nurses, physicians, respiratory therapists, pharmacists, unit clerks, and aides. Our current reporting system consists of nine screens with two additional screens that appear if the reporter answers yes to either the medical device/equipment or medication error questions. The first three screens require password entry, ask whether a new entry is being submitted or a previous submission edited, and allow reporters to identify their ICU. The remaining six screens involve questions about the incident being reported. A final evaluation questionnaire can be accessed at the end of the reporting system by choosing “Continue” before confirming submission.
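The screen flow just described (three entry screens, six incident screens, two conditional screens, and an optional evaluation at the end) can be sketched as a function that builds the sequence of screens for one report. The screen names are hypothetical, and placing the two conditional screens after the six incident screens is an assumption; the text says only that they appear when the reporter answers yes.

```python
# Hypothetical screen names; the text gives only the counts and the purpose
# of the first three screens.
ENTRY_SCREENS = ["password", "new_or_edit", "identify_icu"]
INCIDENT_SCREENS = [f"incident_{i}" for i in range(1, 7)]


def screen_sequence(device_involved: bool, medication_error: bool,
                    wants_evaluation: bool = False) -> list:
    """Return the screens a reporter walks through for one report.

    Assumption: the two conditional screens are appended after the six
    incident screens, and the optional evaluation questionnaire comes last.
    """
    screens = ENTRY_SCREENS + INCIDENT_SCREENS  # new list: 9 base screens
    if device_involved:
        screens.append("device_equipment")
    if medication_error:
        screens.append("medication_error")
    if wants_evaluation:
        screens.append("evaluation")
    return screens
```

A report with no device or medication involvement therefore passes through the nine base screens, and one answering yes to both passes through eleven.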

System Status

The ICUSRS went live on July 1, 2002, in 3 adult ICUs at the Johns Hopkins Hospital. Additional participating ICUs from across the United States began using the reporting system after their IRB granted internal approval for human subjects research and a two-day training visit was completed. As of June 30, 2003, 18 ICUs from 11 hospitals were reporting, and 854 adverse events or near misses had been submitted. The ICUSRS has replaced the incident reporting systems at 2 of the 11 hospitals. We identified 13 (less than 2%) duplicate incidents by matching the following 9 variables: reporting ICU, event time frame, day of the week, month, location, harm (death versus no death), patient age category, gender, and mechanical ventilation status at the time of the event (yes or no). The duplicate reports are included in the figures reported here.
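Duplicate detection by exact matching on the nine variables listed above can be sketched as grouping reports on a composite key. The key names are hypothetical; "day" is taken to mean day of the week, since exact dates are not collected; and counting duplicates as the reports beyond the first in each group is one possible convention, not necessarily the one behind the figure of 13.

```python
from collections import defaultdict

# The nine matching variables named in the text; key names are hypothetical.
# "day_of_week" assumes "day" means day of the week, since exact dates are not collected.
MATCH_FIELDS = (
    "icu_id", "time_frame", "day_of_week", "month", "location",
    "died", "age_category", "gender", "ventilated",
)


def duplicate_groups(reports):
    """Group report IDs whose nine matching variables are all identical."""
    groups = defaultdict(list)
    for r in reports:
        key = tuple(r[field] for field in MATCH_FIELDS)
        groups[key].append(r["report_id"])
    return [ids for ids in groups.values() if len(ids) > 1]


def count_extra_reports(groups):
    """Reports beyond the first in each group (one way to count duplicates)."""
    return sum(len(ids) - 1 for ids in groups)
```

Because the variables are deliberately coarse, two genuinely different incidents can share all nine values, so a matched group is best treated as a candidate for manual review rather than a confirmed duplicate.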

Because evidence suggests that adverse events are often caused by the alignment of multiple system failures,30 reporters were asked to check all factors that contributed to the incident. Of 854 incidents, 775 used the check box options to report contributing factors. Of these 775, 280 (36%) had two system factors checked, and 257 (33%) had three or four system factors checked (Table 2). Seventy-nine reports did not have any of the check box options in the contributing factors list chosen; many of these described contributing factors in the text box at the bottom of the factor list (Figure 2).

Table 2.

Number of System Factors Involved in an Incident (N = 854)

| # System Factors Involved | N (775*) | Percent |
|---|---|---|
| One | 218 | 28 |
| Two | 280 | 36 |
| Three | 161 | 21 |
| Four | 96 | 12 |
| Five | 20 | 3 |

*Seventy-nine incidents reported did not have any system factors checked; many, however, did provide this information in the text box at the end of the list.

Table 1 describes the categories of system factors reported as contributing to the incident, with the corresponding check-box options. For example, under patient factors, reporters could check acuity of patient, language barrier, and personality or social factors. Data in this table are analyzed in two ways. First, by the percentage of incidents in which a factor category (e.g., provider, task, or team) was checked at least once: training and education was cited in 54% of incidents, team factors in 31%, and patient factors in 27%. Second, by the percentage of all factors checked: training and education accounted for 37% of contributing factors and team factors for 17%.
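The two percentage columns of Table 1 use different denominators: the number of incidents (N = 854) and the total number of factors checked (N = 1,858). The sketch below computes both from per-incident lists of checked boxes. It assumes each checked box counts as one factor; the text does not state exactly how the 1,858 factors were tallied.

```python
from collections import Counter


def factor_percentages(incidents):
    """Compute the two percentage columns of a Table 1-style summary.

    `incidents` holds one list per incident, with one category name per
    checked box (a category can appear several times). Incidents with no
    boxes checked are empty lists and still count in the incident
    denominator, matching the N = 854 used for Table 1.
    """
    n_incidents = len(incidents)
    incidents_with = Counter()   # incidents citing the category at least once
    factor_counts = Counter()    # every checked box
    for checked in incidents:
        factor_counts.update(checked)
        incidents_with.update(set(checked))
    n_factors = sum(factor_counts.values())
    pct_incidents = {c: 100 * k / n_incidents for c, k in incidents_with.items()}
    pct_factors = {c: 100 * k / n_factors for c, k in factor_counts.items()}
    return pct_incidents, pct_factors
```

Because one incident can cite several categories, the percent-of-incidents column can sum to more than 100% (in Table 1 it sums to 171%), while the percent-of-factors column sums to 100%.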

Degree of harm is presented in Table 3. Reporters could answer or skip each harm category, so sample sizes vary across categories, and the percentage responding yes or no is calculated separately for each category. For example, physiologic change was answered for 772 of 854 incidents, with 32% reporting harm and 68% reporting no harm. Because degree of harm is based on the reporter's opinion rather than on a root cause analysis, answers are subjective and may be influenced by medical experience and job category. This is not to say, however, that health care providers' perceptions are any less credible than a purely objective approach to analyzing harm. Our taxonomy for coding type of events and degree of harm is still evolving.
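Because each harm category can be left unanswered, each row of Table 3 has its own denominator. A minimal sketch of that calculation follows; the category keys are hypothetical, and an unanswered category is represented here as a missing key or `None`.

```python
def harm_percentages(responses, categories):
    """Per-category yes/no percentages with per-category denominators.

    `responses` holds one dict per report mapping a harm category to True
    (harm) or False (no harm); a missing key or None means the reporter
    left that category unanswered, so the report is excluded from its N.
    """
    table = {}
    for category in categories:
        answered = [r.get(category) for r in responses
                    if r.get(category) is not None]
        n = len(answered)
        if n == 0:
            table[category] = (0, None, None)
            continue
        yes = sum(answered)  # True counts as 1
        table[category] = (n, 100 * yes / n, 100 * (n - yes) / n)
    return table
```

This mirrors the physiologic change row: only the 772 reports that answered that category enter its denominator, not all 854.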

Table 3.

Degree of Harm Reported (N = 854)

| Harm Classification | N* | Percent Yes | Percent No |
|---|---|---|---|
| Physiologic change | 772 | 32 | 68 |
| Discomfort | 754 | 26 | 74 |
| Physical injury | 771 | 21 | 79 |
| Patient/relative dissatisfaction | 727 | 21 | 79 |
| Psychological distress | 733 | 18 | 82 |
| Anticipated/actual prolonged stay | 736 | 14 | 86 |

*Numbers vary because reporters were able to select or not select any harm category when reporting.

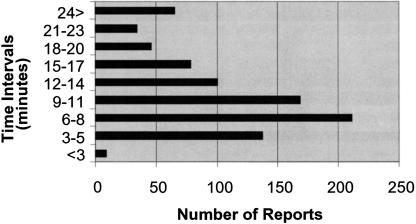

The average time to complete a report is 12.45 minutes (SD 8.41). Reports most often took 6 to 8 minutes, accounting for 25% (n = 211) of reports, followed by 9 to 11 minutes at 20% (n = 168) (Figure 4). Reports are timed in seconds: each screen is timed separately, and the screen times are summed to give the total time spent on a report. The learning curve, the length of the narrative description, and whether a reporter was distracted during submission all affected completion time; the longest report, for example, took 97 minutes. A "save as is" option allows reporters to save an unfinished report and return later to complete it. This feature was added because of the hectic nature of critical care, the time needed to describe particularly complex incidents, and the desire to accommodate any hesitation on the part of reporters to submit a report immediately. Each report is given a unique identification number, assigned sequentially across all ICU sites, which is displayed only once, when the reporter saves and submits data. Reporters must write down this number and identify their ICU if they wish to locate and edit the report.

Figure 4.

Time to complete a report.
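The timing scheme described above (per-screen times in seconds, summed per report, then summarized as a mean, an SD, and 3-minute intervals such as 6 to 8 and 9 to 11 minutes) can be sketched as follows. Two details are assumptions: fractional minutes are truncated when assigning a bin (so 8.9 minutes falls in 6 to 8), and the sample standard deviation is used, since the text does not say which SD was reported.

```python
import statistics
from collections import Counter


def total_minutes(screen_seconds):
    """Sum the per-screen timings (seconds) into minutes for one report."""
    return sum(screen_seconds) / 60


def interval_label(minutes, width=3):
    """Place a report in a 3-minute bin such as "6-8" or "9-11"."""
    start = (int(minutes) // width) * width  # truncates fractional minutes
    return f"{start}-{start + width - 1}"


def summarize(reports):
    """Mean, sample SD, and binned counts of completion times."""
    totals = [total_minutes(screens) for screens in reports]
    mean = statistics.mean(totals)
    sd = statistics.stdev(totals) if len(totals) > 1 else 0.0
    return mean, sd, Counter(interval_label(t) for t in totals)


# Three hypothetical reports, each a list of per-screen times in seconds.
mean, sd, bins = summarize([[120, 300, 60], [400, 140], [600]])
print(mean, sd, bins)  # 9.0 1.0 Counter({'9-11': 2, '6-8': 1})
```

Note that per-screen timing still counts any time a reporter spends distracted on an open screen, which is consistent with the long tail (up to 97 minutes) described above.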

Evaluations of the ICUSRS were completed for 90 of the 854 reports submitted. Although all staff trained to use the ICUSRS were asked to evaluate the reporting system, doing so was optional. Because reporting is anonymous, there was no way to track whether the same reporter completed the evaluation screen more than once. As such, the data presented do not necessarily reflect a representative sample of reporters. Although time to complete the Web-based survey was a concern raised during staff training, 66% (n = 59) of respondents checked that it "was not time consuming," whereas 24% (n = 22) checked that it "was time consuming." Ninety percent (n = 77) found the system "user friendly," and 68% (n = 58) checked "questions were clear," whereas 19% (n = 16) checked "not clear." We also obtained feedback on the ICUSRS from study teams at an annual meeting held in conjunction with the SCCM Annual Congress in January 2003 and incorporated changes into the second iteration described below.

Examples of positive comments given for an open-ended question of whether the Web-based survey captured information relevant to the incident included, “it allows for open but structured discussion of the incident,” and “I think it captures most of the information very well.” Constructive criticisms included, “better explanation of what factor limited/minimized event means,” and “leaves out outside contributors to safety incidents as in this case, the pharmacy.”

Because one of our objectives is to encourage use, the ICUSRS asks all ICU staff and study teams to tell us how the reporting system can be more useful. As a result, the ICUSRS underwent a second iteration (released October 6, 2003) based on comments from the system's evaluation screen and from the annual meeting with study teams at the SCCM Congress. We added clearer explanations and instructions for all system factors, including those that limited or minimized the incident. We also added check box options for pharmacy and laboratory medicine under the "Institutional Environment" factor category. Future iterations of the ICUSRS will be released no more often than every six months. When modifying an instrument or survey tool, it is imperative to consider how changes will affect data analysis. In addition, changing the system requires staff retraining and time to adjust to a new version, which may frustrate staff and discourage reporting. Any revisions undertaken for the remainder of this funding period (ending August 30, 2004) will be based on user or expert input and will involve aesthetic changes for ease of reporting or rewording of questions to increase users' understanding.

At a meeting with ICU teams held at the Society of Critical Care Medicine Congress in 2003, we learned that teams wanted to be able to manipulate their own data in real time. As a result, study teams can log in to the ICUSRS Web site and view the case descriptions submitted from their ICU site, if the reporter gives permission when completing the form. Only 2% (n = 17) of reporters have not identified their ICU. We are developing reporting capabilities that will enable PIs to query other data of interest from their unit at any time and generate reports. Team members also shared ideas about how best to integrate the ICUSRS with existing reporting systems and how to facilitate staff reporting. Although reporting to a single system was preferable, the ability to print each report and attach it to existing incident reporting forms was considered equally beneficial. Suggestions for facilitating reporting included incorporating a reminder during rounds or on checklists, training and educating staff on why and how to report incidents, and sharing data and examples of incidents with ICU sites.

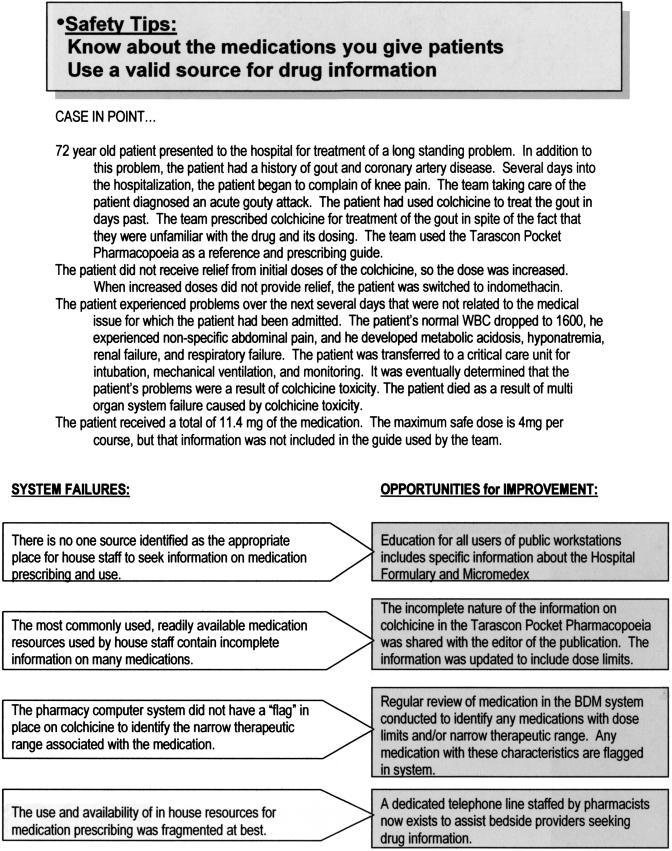

While study teams are encouraged to share data from the monthly reports with frontline ICU staff, it is not clear how often this occurs. We therefore now send a one-page summary of the case discussions that accompany the monthly report for posting on staff bulletin boards (Figure 5). We believe posting cases is an excellent educational tool for all ICU staff and will also keep patient safety at the forefront of daily patient care. We also publish a quarterly newsletter and forward copies to all ICU staff. Each issue includes one human interest story profiling a staff member from a participating site who contributes to safety. The remainder of the newsletter updates staff on the project's activities, expands on one common safety problem, and provides tips for improving safety. ICU staff and study teams have stressed the importance of the newsletter in educating and involving everyone in patient safety.

Figure 5. Summary of case discussions.

Discussion

Health care carries a significant risk of patient injury, and efforts are needed to identify problems before they harm additional patients. Incident reporting is an important mechanism for improving safety. Traditional incident reporting methods are mandatory and focus mainly on the unsafe actions of individual health workers, thereby fostering a culture of blame. The result has been gross underreporting and little, if any, progress in averting the 44,000 annual deaths from adverse events.2,3 It is therefore imperative that voluntary and anonymous reporting systems be implemented so that we can learn from incidents. For this reason, we collect general and specific information about each incident, particularly contributing system factors, and the categories of health care providers involved in the patient's care and in the incident. We also collect data on the patient's clinical status and the degree of harm resulting from the incident, but only a limited set of identifying variables, to preserve the anonymity of reporter and patient.

The ICUSRS collects data from a cohort of ICUs across the United States to identify hazards from rare or less common events that no single hospital could detect on its own. We believe this approach is novel because it allows ICUs to share lessons learned. For example, data from one site revealed more medication incidents on Thursdays; on investigation, the site found that the pharmacist did not attend ICU rounds on Thursdays. Nevertheless, we acknowledge that we and others are early in the journey of learning how to use incident reporting to improve patient safety. The 854 submissions show that ICU staff are comfortable reporting incidents to an anonymous system.
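A pattern like the Thursday medication incidents can surface from a simple day-of-week tally of reports. The Python sketch below is illustrative only: it assumes each report carries an incident date and a category label, and the records and the `incidents_by_weekday` helper are hypothetical, not ICUSRS data or code. A tally like this only flags a pattern; as at that site, finding the cause still requires investigation.

```python
from collections import Counter
from datetime import date

# Weekday names indexed by date.weekday() (Monday == 0); avoids locale-dependent strftime.
WEEKDAYS = ["Monday", "Tuesday", "Wednesday", "Thursday",
            "Friday", "Saturday", "Sunday"]

# Hypothetical example records: (incident date, category). Not ICUSRS data.
reports = [
    (date(2004, 1, 5), "medication"),   # Monday
    (date(2004, 1, 8), "medication"),   # Thursday
    (date(2004, 1, 8), "equipment"),    # Thursday, different category
    (date(2004, 1, 13), "medication"),  # Tuesday
    (date(2004, 1, 15), "medication"),  # Thursday
    (date(2004, 1, 22), "medication"),  # Thursday
]


def incidents_by_weekday(records, category):
    """Count incidents of one category by the weekday on which they occurred."""
    return Counter(
        WEEKDAYS[day.weekday()] for day, cat in records if cat == category
    )


counts = incidents_by_weekday(reports, "medication")
peak_day, peak_count = counts.most_common(1)[0]
print(f"Most medication incidents on {peak_day}: {peak_count}")
```

Using `date.weekday()` with a fixed list of names keeps the tally independent of the machine's locale settings.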

The ICUSRS received 854 incident reports in the first year of reporting, confirming that staff will use the system. We do not know whether the ICUSRS increased the frequency of reporting, because most sites either would not release or did not have sufficient data on the number of reports submitted from their ICU in the year before implementing the ICUSRS. However, during initial site visit meetings with risk management and the ICU study team, discussion typically turned to the small number of reports being submitted. For example, during one site visit meeting, the nurse manager and risk manager mentioned that only four incident reports had been filed from that unit in the previous year. At the start of day 2, two incidents had already been reported to the ICUSRS from this site. This site has been reporting for 10 months (August 8, 2003, through June 30, 2004) and has submitted 24 incidents to the ICUSRS.

Many experts advocate analyzing system factors as the key to identifying failures and making safety improvements.30,31,32 In the first year of reporting, we found that training and education was a major factor contributing to incidents. This category includes knowledge, skills, supervision, seeking help, and failure to follow established protocol. When reporters selected failure to follow established protocol, it was most often because the provider was too inexperienced to know about the protocol. Team factors also contributed to incidents, particularly written and verbal communication among team members and the structure of a well-functioning team. In addition, 72% of reported incidents involved more than one contributing system factor. Most incidents did not lead to harm, although 21% led to physical injury and 14% were anticipated to increase ICU length of stay.
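Figures such as the 72% of incidents with more than one contributing system factor are proportions computed over coded reports. As a minimal Python sketch, assume each report has been coded into a set of system-factor labels plus a physical-injury flag; the `Report` class, the factor labels, and the four sample records below are hypothetical and do not reproduce ICUSRS data or its coding taxonomy.

```python
from dataclasses import dataclass, field


@dataclass
class Report:
    """Hypothetical coded incident report (illustrative fields only)."""
    factors: set[str] = field(default_factory=set)  # contributing system factors
    physical_injury: bool = False


def share(reports, predicate):
    """Fraction of reports satisfying predicate; 0.0 for an empty list."""
    if not reports:
        return 0.0
    return sum(1 for r in reports if predicate(r)) / len(reports)


# Hypothetical sample; factor labels are assumptions, not the ICUSRS taxonomy.
reports = [
    Report({"training_education", "team"}),
    Report({"training_education"}),
    Report({"team", "pharmacy", "training_education"}, physical_injury=True),
    Report({"laboratory"}),
]

multi_factor = share(reports, lambda r: len(r.factors) > 1)
injured = share(reports, lambda r: r.physical_injury)
print(f"multi-factor: {multi_factor:.0%}, physical injury: {injured:.0%}")
```

Keeping the factors as a set per report means a multi-factor incident is counted once in the proportion, however many factors it carries; tallying factors individually would instead give how often each category appears.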

A main concern of frontline staff was the time needed to complete a report. To address this barrier, we designed a Web-based reporting system that can be accessed from any computer with an Internet connection, even from home if time spent reporting an incident at work is a problem. Based on user evaluations and critiques from study teams, we revised the form. The second version gives better instructions for completing the system factor screens and adds check-box options for pharmacy and laboratory medicine. Sixty-six percent of reporters who completed the ICUSRS evaluation found it “not time consuming,” and 68% found the questions clear.

We included several methods of feedback to encourage staff to report, learn from mistakes, and implement safety improvement efforts. Study teams use the monthly report to identify trends within their own ICU. Study teams and frontline staff also learn about the types of incidents that are occurring in ICUs and recommendations for improving safety through the case discussions, one-page case bulletin board summary, and the quarterly newsletter.

Conclusion

To realize the benefits of incident reporting, incidents must be submitted, coded, and used by caregivers to improve safety. Thus far, the ICUSRS has shown that a diverse group of ICUs will submit incidents. The ICUSRS currently collects information about adverse events and near misses from a cohort of 23 ICUs across the United States, and we plan to add another 3 ICUs. We are collecting data rapidly while developing a coding taxonomy informed by other patient safety experts who are also working on appropriate taxonomies. We are still exploring how to analyze the data and how to use them to improve safety. Our reporting system focuses on analyzing system failures. Although there is limited research evaluating the success of such a reporting strategy in health care, aviation's ASRS, on which the ICUSRS is modeled, has been highly successful in identifying and rectifying safety problems in aviation.6 We look forward to broader implementation of the ICUSRS and to evaluating its effectiveness in facilitating incident reporting and in improving patient safety and health care quality.

Supported by the Agency for Healthcare Research and Quality (grant number U18HS11902).

References

- 1. Kohn L, Corrigan J, Donaldson M, editors. To err is human: building a safer health system. Institute of Medicine Report. Washington, DC: National Academy Press, 1999.

- 2. Aviation Safety Reporting System. Significant Program Safety Products. Available at http://www.hq.nasa.gov/congress/asrs.html. Accessed January 4, 2005.

- 3. Reason J. Human error: models and management. BMJ. 2000;320:768–70.

- 4. Leape LL, Brennan TA, Laird N, et al. The nature of adverse events in hospitalized patients. Results of the Harvard Medical Practice Study II. N Engl J Med. 1991;324(6):377–84.

- 5. Leape L. Foreword: Preventing medical accidents: Is “systems analysis” the answer? Am J Law Med. 2001;27:145–8.

- 6. The NASA Aviation Safety Reporting System: lessons learned from voluntary incident reporting. Chicago, IL: National Patient Safety Foundation; 1999.

- 7. Wu AW, Pronovost P, Morlock L. ICU incident reporting systems. J Crit Care. 2002;17(2):86–94.

- 8. Leape LL. Reporting of adverse events. N Engl J Med. 2002;347(20):1633–8.

- 9. Andrews L, Stocking C, Krizek T, et al. An alternative strategy for studying adverse events in medical care. Lancet. 1997;349(9048):309–13.

- 10. Donchin Y, Gopher D, Olin M, et al. A look into the nature and causes of human errors in the intensive care unit. Crit Care Med. 1995;23:294–300.

- 11. Bates D, Spell N, Cullen D, et al. The costs of adverse drug events in hospitalized patients. JAMA. 1997;277(4):307–11.

- 12. Mekhjian H, Bentley T, Ahmad A, Marsh G. Development of a web-based event reporting system in an academic environment. JAMIA. 2004;11(1):11–8.

- 13. Connell L. Statement before the Subcommittee on Oversight and Investigations. Veterans' Affairs, Oversight and Investigations; 2000.

- 14. Cullen D, Bates D, Small S, Cooper J, Nemeskal A, Leape L. The incident reporting system does not detect adverse drug events: a problem for quality improvement. Jt Comm J Qual Improv. 1995;21:541–8.

- 15. Stanhope N, Crowley-Murphy M, Vincent C, O'Connor A, Taylor-Adams S. An evaluation of adverse incident reporting. J Eval Clin Pract. 1999;5:5–12.

- 16. Medical error reporting: professional tensions between confidentiality and liability. Boston, MA: Massachusetts Health Policy Forum, 2001.

- 17. Kivlahan C, Sangster W, Nelson K, Buddenbaum J, Lobenstein K. Developing a comprehensive electronic adverse event reporting system in an academic health center. Jt Comm J Qual Improv. 2002;28(11):583–94.

- 18. Medical errors and patient safety in Massachusetts: What is the role of the commonwealth? Boston, MA: Massachusetts Health Policy Forum; 2000.

- 19. Leape L. A systems analysis approach to medical error. J Eval Clin Pract. 1997;3:213–22.

- 20. Anderson D, Webster C. A systems approach to the reduction of medication error on the hospital ward. J Adv Nurs. 2001;35:34–41.

- 21. Bagian J. Promoting patient safety at VA: Learning from close calls. Forum, VA: Health Services Research & Development; 2001, October:1,2–8.

- 22. Pronovost PJ, Weast B, Schwartz M, et al. Medication reconciliation: a practical tool to reduce the risk for medication errors. J Crit Care. 2003;18(4):201–5.

- 23. Pronovost PJ, Nolan T, Zeger S, Miller M, Rubin H. How can clinicians measure safety and quality in acute care? Lancet. 2004;363(9414):1061–7.

- 24. Classen D, Metzger J. Improving medication safety: the measurement conundrum and where to start. Int J Qual Health Care. 2003;15(Suppl 1):i41–7.

- 25. Quality Interagency Coordination Task Force to the President. Doing what counts for patient safety: Federal actions to reduce medical errors and their impact. Washington, DC: 2000, pp 29–31.

- 26. The Sentinel Event Policy. Specific Research Needs and Questions. JCAHO; September 11, 2000.

- 27. Beckmann U, West L, Groombridge G, et al. The Australian Incident Monitoring Study in Intensive Care: AIMS-ICU. The development and evaluation of an incident reporting system in intensive care. Anaesthesia and Intensive Care. 1996;24(3):314–9.

- 28. Bagian J, Lee C, Gosbee J, et al. Developing and deploying a patient safety program in a large health care delivery system: you can't fix what you don't know about. Jt Comm J Qual Improv. 2001;27(10):522–32.

- 29. Reason J. Managing the Risks of Organizational Accidents. Burlington, VT: Ashgate Publishing Company; 2000.

- 30. Reason J. Human Error. Cambridge, England: Cambridge University Press; 1990.

- 31. Vincent C. Understanding and responding to adverse events. N Engl J Med. 2003;348(11):1051–6.

- 32. Leape L. Why should we report adverse events? J Eval Clin Pract. 1999;5:1–4.