Abstract

Objective: To identify the most frequent obstacles preventing physicians from answering their patient-care questions and the most requested improvements to clinical information resources.

Design: Qualitative analysis of questions asked by 48 randomly selected generalist physicians during ambulatory care.

Measurements: Frequency of reported obstacles to answering patient-care questions and recommendations from physicians for improving clinical information resources.

Results: The physicians asked 1,062 questions but pursued answers to only 585 (55%). The most commonly reported obstacle to the pursuit of an answer was the physician's doubt that an answer existed (52 questions, 11%). Among pursued questions, the most common obstacle was the failure of the selected resource to provide an answer (153 questions, 26%). During audiotaped interviews, physicians made 80 recommendations for improving clinical information resources. For example, they requested comprehensive resources that answer questions likely to occur in practice with emphasis on treatment and bottom-line advice. They asked for help in locating information quickly by using lists, tables, bolded subheadings, and algorithms and by avoiding lengthy, uninterrupted prose.

Conclusion: Physicians do not seek answers to many of their questions, often suspecting a lack of usable information. When they do seek answers, they often cannot find the information they need. Clinical resource developers could use the recommendations made by practicing physicians to provide resources that are more useful for answering clinical questions.

Practicing physicians often have questions about how to care for their patients. Some questions seek highly specific information about individual patients (e.g., “What is this rash?”), but others could potentially be answered in generally available information resources (e.g., “What are the screening guidelines for women with a family history of breast cancer?”). Most questions asked by primary care physicians go unanswered, either because answers are not pursued or because, once pursued, answers cannot be found.1,2,3,4 Theoretically, the process of asking and answering clinical questions can be divided into five steps in which the physician (1) recognizes an uncertainty, (2) formulates a question, (3) pursues an answer, (4) finds an answer, and (5) applies the answer to patient care.5

Previous studies have identified many obstacles to answering clinical questions.3,4,5,6 Covell and colleagues6 found that lack of time and poorly organized personal libraries prevented the answering of many questions. In a case report, Schneeweiss7 asked how long postlactation amenorrhea should be expected to last. The subject was not addressed in three obstetric textbooks, not indexed well in MEDLINE, and not directly answered in the literature. He reasoned that it would be impractical for practicing physicians to pursue many of their questions.

Obstacles to answering clinical questions can be grouped into physician-related obstacles and resource-related obstacles. Physician-related obstacles include the failure to recognize an information need,3,5 the decision to pursue questions only when answers are thought to exist,4 the preference for the most convenient resource rather than the most appropriate one,8 and the tendency to formulate questions that are difficult to answer with general resources.6,8,9,10,11 For example, in a study by Covell and colleagues,6 a physician was more likely to ask questions of the sort, “Should I test the serum procainamide level in this patient?” rather than “What are the indications for measuring serum procainamide?”

Resource-related obstacles include the excessive time and effort required to find answers in existing resources,3,12,13,14 the lack of access to information resources,8,15 the difficulty navigating the overwhelming body of literature to find the specific information that is needed,16 the inability of literature search technology to directly answer clinical questions,17,18,19 and the lack of evidence that addresses questions arising in practice.8,9,13,19,20,21

Potential solutions to help overcome these obstacles have been proposed. For example, physicians are advised to ask questions in a format that can be directly answered with evidence.11 Sackett and colleagues11 recommend the “PICO” format to ensure that the question includes information about the patient, the intervention, the relevant comparison, and the outcome of interest.
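As a rough illustration of the PICO idea, the sketch below stores the four elements as a small Python data structure and reports which ones a question leaves unspecified. The class name `PicoQuestion`, its method, and the hand mapping of the screening question quoted earlier are assumptions made for illustration only. They do not come from Sackett and colleagues or from this study.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class PicoQuestion:
    """One clinical question split into the four PICO elements (hypothetical helper)."""
    patient: Optional[str] = None
    intervention: Optional[str] = None
    comparison: Optional[str] = None
    outcome: Optional[str] = None

    def missing_elements(self) -> list[str]:
        """Return the names of PICO elements that are still unspecified."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


# The breast cancer screening question from the introduction, mapped by hand.
# This mapping is illustrative; the study did not code questions this way.
screening = PicoQuestion(
    patient="women with a family history of breast cancer",
    intervention="screening",
)

print(screening.missing_elements())  # ['comparison', 'outcome']
```

In this reading, the question names a patient group and an intervention but no comparison or outcome, which is the kind of gap the PICO format is meant to expose.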

Investigators are also working on methods to make resources more accessible at the point of care.18,22,23,24,25 For example, Cimino and colleagues18,22,26 have created “infobuttons,” which link clinical information, such as laboratory results, to information resources, such as PubMed and Micromedex, using desktop and handheld computers. Ebell and colleagues24,25 have developed a resource for handheld computers (InfoRetriever) that presents up-to-date evidence to help guide patient-care decisions.

To help overcome the mismatch between the clinician's needs and the typical format of research literature, Florance27 proposed a “clinical extract” to help physicians glean information that could be directly applied to patient care. A related proposal describes the need for “informationists” with training in both information science and clinical medicine to help physicians answer questions that arise on hospital rounds or in the office.28 Pilot programs have been met with encouragement but also have faced many challenges and less than total acceptance by busy physicians.29

These solutions are promising and the investigators who developed them continue to strive for wider application,18 but at this time such solutions remain outside the mainstream of practice. Cimino and Li18 and Smith30 have noted the importance of understanding the information needs of practicing physicians before designing systems to help meet those needs. The current study attempts to further that understanding by providing the practicing physician's perspective on information needs through extensive observations and interviews.

This is a study of obstacles and solutions from the practicing physician's perspective. Our research questions were “Which obstacles to answering questions occur most often in practice, and what recommendations do practicing physicians have for improving clinical information resources?” These resources are generally developed without formal mechanisms to identify physicians' information needs or to determine when the resource fails to address these needs. This study attempts to provide a better understanding of how information resources might answer patient-care questions more successfully.

Methods

Overview

The principal investigator observed physicians as they saw patients in the office. Between patient visits, the physician reported questions to the investigator, who recorded them in a series of field notes. During a 20-minute audiotaped interview, the investigator asked physicians to report their general views on obstacles to answering questions and recommendations for authors. The field notes and interview transcripts were then analyzed using qualitative methods.

Participants

General internists, general pediatricians, and family physicians were eligible for the study if they were younger than 45 years old and practiced in the eastern third of Iowa. This region, which consists of small cities and towns, was chosen because of its proximity to the principal investigator. The age limit was imposed because younger physicians have been reported to ask more questions.31 A total of 351 physicians met these criteria. Using a database maintained by the University of Iowa, physicians were invited in random order with the goal of recruiting approximately 50. This number was based on the frequency of questions occurring in a previous study31 and on our estimate of the number needed to sample to the “point of redundancy.”32,33 In this study, the point of redundancy occurred when newly recruited physicians reiterated recommendations cited by previously recruited physicians without adding any new ones. In retrospect, few new recommendations were added after the midpoint of the study. However, one of our goals was to estimate the frequencies of different obstacles and recommendations. Therefore, we continued to recruit to our preset estimate of 50 physicians, who we predicted would yield approximately 1,000 questions. To improve the generalizability of our findings, we invited ten minority physicians outside the random selection process and outside the age and geographic limits. In summary, we invited a total of 56 physicians (46 randomly and ten because of their minority status).

Procedures

Each invited physician received a letter followed by a phone call requesting participation. Two methods were used to collect data for this study: (1) field notes based on observations of physicians during clinic and (2) audiotaped interviews with these same physicians in which they were asked to suggest improvements to clinical resources, such as textbooks, review articles, and medical Web sites. One of the investigators (JWE) observed each participant for four half-days, which were spaced at approximately one-month intervals. The investigator stood in the hallway and recorded questions on a standard form between patient visits. We defined a “clinical question” as a question that pertains to a health care provider's management of one or more patients, potentially answerable in a print or electronic resource. Using a paraphrase of this definition, physicians were asked to report questions that occurred as they saw their patients. Common types of questions that did not meet this definition were “What was her serum potassium last week?” and “What is this rash?” The exact wording of the question was recorded along with field notes about attempts to answer it, reasons for not pursuing an answer, and the clinical context (patient's age, gender, and reason for visit). Physicians were asked to “think aloud”34,35 as they decided whether to pursue answers and as they succeeded or failed in their attempts. This is a typical set of field notes about one multipart question:

PPD [purified protein derivative] on someone who's had a BCG [bacille Calmette-Guérin vaccine], adopted from India, thinks she had the BCG. This [name of resource] is better than it used to be. Can't assume a positive PPD is from the BCG, especially if it was given long ago or if the country has a lot of TB [tuberculosis]. “It would be nice if there were clear guidelines on this: If the BCG was given more than 5 years previously and the PPD is positive, do this. How long does the PPD stay positive after the BCG? What is the conversion rate for BCG? Is it 100%? And how big should the PPD be to say it's positive? Because otherwise you are committed to nine months of treatment. The [name of resource] is still vague, you know, ‘if high-risk area, probably positive.’ Well, what do I DO? And then the whole thing with the TB skin test, whom should you screen? You know the [name of resource] is just ridiculous with this. They have this huge list of questions you're supposed to ask. Who has time to do that?”

Whether to extract individual questions from a closely related series like this is a decision that has not been formally studied. In our analysis, we chose to lump closely related questions if they were all expressed in rapid succession and pertained to a single patient. Thus, this example was counted as one question.

In addition to questions that arose during the observation period, physicians were asked to record questions that had occurred previously. Most of these previous questions occurred between the four observation periods.

At the end of the fourth observation period, the investigator conducted a 20-minute, semistructured interview. The audiotaped interviews generally took place in the physician's office and were later transcribed. The interview began with a request that the physician criticize the answer provided by the investigators to one of the questions from a previous observation. These answers consisted of one-page summaries of the literature that were directly related to the questions. Our purpose was to further characterize the physician's perspective on improving clinical resources. These comments were followed by three items related to information resources in general: (1) “Please describe the qualities of ideal information resources and ideal answers.” (2) “What are the problems and frustrations that you have experienced in the past when trying to answer your clinical questions?” (3) “What advice would you offer to medical Web site developers and textbook editors to help make their resources more useful?” The investigator used silence and open-ended follow-up questions to encourage a free flow of ideas related to these three questions.

Analysis

We used the field notes about physicians' questions to determine the frequency of obstacles to answering questions. The list of potential obstacles had been generated in a previous study,3 but that study did not include frequencies of obstacle occurrence. Each question was reviewed and coded by the principal investigator (JWE). The questions were then divided equally and randomly among the other four investigators (JAO, MLC, MHE, MER) who coded them independently. Thus, each question was coded by two investigators. Discrepancies between the two investigators were identified and reconciled during subsequent electronic mail discussions.
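The double-coding scheme described above can be sketched in a few lines of Python. The text says only that questions were divided "equally and randomly" among the four other investigators. The shuffle-then-deal mechanism, the function name `assign_second_coders`, and the fixed seed below are assumptions for illustration, not the procedure actually used.

```python
import random

PRIMARY_CODER = "JWE"                      # coded every question
SECOND_CODERS = ["JAO", "MLC", "MHE", "MER"]


def assign_second_coders(question_ids, coders, seed=0):
    """Shuffle the questions, then deal them round-robin so group sizes differ by at most one."""
    rng = random.Random(seed)              # seed is an assumption, used only for reproducibility
    shuffled = list(question_ids)
    rng.shuffle(shuffled)
    return {qid: coders[i % len(coders)] for i, qid in enumerate(shuffled)}


# 1,062 questions, numbered 1..1062 for this sketch
assignment = assign_second_coders(range(1, 1063), SECOND_CODERS)

# Each question ends up with exactly two coders: JWE plus one other investigator
coders_for_question_1 = (PRIMARY_CODER, assignment[1])

counts = {c: sum(1 for v in assignment.values() if v == c) for c in SECOND_CODERS}
print(counts)  # {'JAO': 266, 'MLC': 266, 'MHE': 265, 'MER': 265}
```

Because 1,062 is not divisible by four, an exactly equal split is not possible. Under this sketch two investigators receive 266 questions and two receive 265.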

We used the interview transcripts to generate a taxonomy of recommendations for authors to help them meet the needs of practicing physicians. In this report, we use the term “author” as shorthand for the more accurate, but more cumbersome, “clinical information resource developer.” The recommendations taxonomy was developed using an iterative process similar to the “constant comparative method” of qualitative analysis,32,36 in which the investigators reviewed the initial interviews, drafted a taxonomy, reviewed more interviews, revised the taxonomy, and so on, until a final comprehensive taxonomy was approved by all investigators. Initially, the investigators developed a set of recommendations based on their attempts to answer questions in a previous study.3 Several methods for organizing the recommendations were discussed before settling on the final method, which consisted of two main groups: content and access. The principal investigator (JWE) used the first 20 interviews to revise the initial taxonomy of recommendations. The interviews and draft taxonomy were then distributed equally and randomly among the other four investigators (JAO, MLC, MHE, MER). The investigators coded the interviews using the draft taxonomy and made suggestions for changing it based on this coding exercise. The principal investigator then revised the taxonomy and distributed it to the investigators for further comment. After the final revision was agreed to by all investigators, it was used to code the first 32 interviews (including the first 20 that were recoded), again with the principal investigator coding all interviews and the other investigators dividing the 32 equally and randomly. After all interviews were completed, the final 16 were coded in the same fashion. Only minor wording changes were made to the taxonomy during these last two coding exercises. 
The frequency with which each recommendation was mentioned in the interviews was thus determined by two independent investigators (the principal investigator and one other investigator). Recommendations mentioned more than once in an interview were counted only once. Discrepancies in coding were identified, and consensus was reached during subsequent discussions that took place using electronic mail. The study was approved by the University of Iowa Human Subjects Committee.

Results

Participants

Forty-eight (86%) of the 56 invited physicians agreed to participate. The mean age of participants was 38 years, and 21 (44%) were female. The study sample included 16 general internists, 17 general pediatricians, and 15 family physicians. Thirty-eight physicians practiced in small cities with populations between 50,000 and 120,000. The remaining ten practiced in smaller towns. Each physician was observed for approximately 16 hours (four visits per physician with four hours per visit), resulting in a total of 768 hours of observation time. The study included 14 minority physicians, nine of whom were selected outside the random sample. The minorities included two Native-American, five Asian, four African-American, and three Hispanic physicians.

Obstacles to Answering Questions

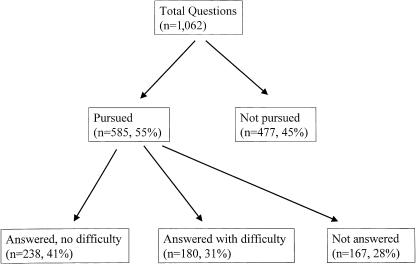

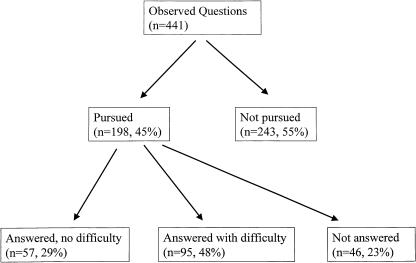

The 48 physicians asked 1,062 questions (5.5 questions per physician per half-day observation period), including 441 questions (42%) about patients seen during the observation period (2.3 questions per half day) and 621 (58%) about patients seen previously (3.2 questions per half day). Physicians pursued answers during or before the observation period to 585 (55%) of their questions but were unable to answer 167 (28%) of those pursued (Figure 1). (Figure 2 presents analogous data but limited to questions asked during the observation period.) The physicians answered 238 (41%) of their questions without difficulty and 180 (31%) with difficulty (Figure 1). “Without difficulty” was defined as a complete answer (information completely answered question as judged by the physician) that was quickly found in the first resource consulted. “Difficulty” was defined as either an incomplete answer (n = 128, 71% of 180), a complete answer that required more than one resource to find (n = 45, 25%), or a complete answer that was difficult to find in the first resource consulted (n = 7, 4%). These assessments were based on experiences reported by physicians for questions occurring before the study visit and on direct observations for questions occurring during the study visit. The resources used most commonly are listed in Table 1. Although no single resource accounted for more than 7% of the answers, the ten resources in Table 1 account for 37% of pursued questions (215/585).
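The question rates in the paragraph above follow from the counts reported there. The short Python sketch below recomputes them as a consistency check. It assumes, as the text implies, that each of the 48 physicians contributed four half-day observation periods. The variable names are ours.

```python
# Counts reported in the Results text
PHYSICIANS = 48
HALF_DAYS_EACH = 4             # four half-day observation visits per physician
QUESTIONS_CURRENT = 441        # about patients seen during the observation period
QUESTIONS_PREVIOUS = 621       # about patients seen previously
PURSUED = 585
OUTCOMES = {
    "answered without difficulty": 238,
    "answered with difficulty": 180,
    "not answered": 167,
}

half_days = PHYSICIANS * HALF_DAYS_EACH
total = QUESTIONS_CURRENT + QUESTIONS_PREVIOUS


def per_half_day(n):
    """Questions per physician per half-day, rounded to one decimal place."""
    return round(n / half_days, 1)


print(half_days, total)                    # 192 1062
print(per_half_day(total))                 # 5.5
print(per_half_day(QUESTIONS_CURRENT))     # 2.3
print(per_half_day(QUESTIONS_PREVIOUS))    # 3.2
print(round(100 * PURSUED / total))        # 55
print(sum(OUTCOMES.values()) == PURSUED)   # True
```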

Figure 1. Attempts of 48 physicians to answer their questions.

Figure 2. Attempts of 48 physicians to answer their questions, limited to questions that arose during observation periods.

Table 1. Most Common Resources Used to Answer 585 Pursued Questions

| Resource | n (%)† |
|---|---|
| A. General categories of resources* | |
| Single textbook | 181 (31) |
| Human (informal consultation) | 107 (18) |
| Desktop computer application including the Web | 71 (12) |
| Multiple textbooks | 46 (8) |
| Human plus textbook | 35 (6) |
| Single journal article | 25 (4) |
| Handheld computer | 22 (4) |
| Refrigerator‡ | 11 (2) |
| Multiple journal articles | 9 (2) |
| Computer plus human plus textbook | 9 (2) |
| B. Specific nonhuman resources§ | |
| UpToDate (available at www.uptodate.com) | 41 (7) |
| Nelson Textbook of Pediatrics. Philadelphia: WB Saunders | 35 (6) |
| E-pocrates (available at www.epocrates.com) | 25 (4) |
| Monthly Prescribing Reference. London: Prescribing Reference, Inc. | 22 (4) |
| The Harriet Lane Handbook. St. Louis: Mosby | 21 (4) |
| Tarascon Pocket Pharmacopoeia. Loma Linda: Tarascon Publishing | 16 (3) |
| MICROMEDEX (available at www.micromedex.com) | 15 (3) |
| Sanford Guide to Antimicrobial Therapy. Hyde Park: Antimicrobial Therapy, Inc. | 14 (2) |
| Red Book: 2003 Report of the Committee on Infectious Diseases. Elk Grove Village, IL: American Academy of Pediatrics | 14 (2) |
| Griffith's 5-Minute Clinical Consult. Philadelphia: Lippincott Williams & Wilkins | 12 (2) |

*Part A includes only the ten most common categories.

†n denotes the number of questions for which the resource was used. The denominator for the percentages is 585 (number of questions pursued).

‡Refrigerator denotes informal notes, torn out pages, memos, and so on, that are fastened to walls, refrigerators, cupboard doors, and so on, in the clinic.

§Part B includes only the ten most common resources.

Reasons for not pursuing an answer were identified for 212 (44%) of the 477 nonpursued questions. The investigator did not ask why the physician failed to pursue the remaining 265 questions because of the busyness of the physician and the investigator's sense that this potentially threatening inquiry would stifle further reporting of questions.

The most commonly reported reason for not pursuing an answer was the expectation that no useful information would be found (Table 2). For example, an internist seeing a 62-year-old man said:

“One question is the management of fasting hyperglycemia or impaired glucose tolerance. Nobody really talks about how often to check blood sugars or how aggressive to be with lipids in that setting.” I (investigator) asked whether the physician planned to pursue an answer. He said, “My guess is there are no concrete recommendations.”

Table 2. Most Common Obstacles to Answering Patient-care Questions

| Obstacle | Frequency n (%)† |
|---|---|
| A. Obstacles preventing pursuit of answers (212 questions)* | |
| Doubt about the existence of relevant information | 52 (25) |
| Ready availability of consultation leading to a referral rather than a search | 47 (22) |
| Lack of time to initiate a search for information | 41 (19) |
| Question not important enough to justify a search for information | 31 (15) |
| Uncertainty about where to look for information | 16 (8) |
| B. Obstacles to finding answers to pursued questions (585 questions)* | |
| Topic or relevant aspect of topic not included in the selected resource | 153 (26) |
| Failure of the resource to anticipate ancillary information needs | 41 (7) |

*Physicians did not pursue answers to 477 questions. They provided reasons for their decision not to pursue answers to 212 questions. When the analysis was limited to questions about patients seen during the observation periods (excluding questions about previous patients), the relative frequencies of the most common obstacles were similar. The five most common obstacles preventing pursuit of an answer were “doubt about the existence of relevant information” (32 questions, 13% of 243 not pursued), “lack of time to initiate a search for information” (18 questions, 7%), “question not important enough to justify a search for information” (18 questions, 7%), “ready availability of consultation leading to a referral rather than a search” (14 questions, 6%), and “resource needed was physically distant” (eight questions, 3%). The two most common obstacles among pursued questions were “topic or relevant aspect of topic not included in the selected resource” (44 questions, 22% of 198 pursued) and “inadequacy of resource's index” (12 questions, 6%).

†Percentages do not add to 100% because only the most common obstacles are included.
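The same obstacle count yields different percentages depending on the denominator, which explains why "doubt about the existence of relevant information" appears as 25% in Table 2 and as 11% in the abstract. The Python sketch below makes the two denominators explicit. The helper name `pct` is ours, and the counts are those reported in the text.

```python
NOT_PURSUED = 477          # all questions for which no answer was pursued
WITH_REASON = 212          # nonpursued questions for which a reason was recorded
PURSUED = 585              # questions for which an answer was pursued


def pct(count, denominator):
    """Whole-number percentage, as reported in the tables."""
    return round(100 * count / denominator)


doubt_answer_exists = 52
topic_not_in_resource = 153

print(pct(doubt_answer_exists, WITH_REASON))   # 25  (Table 2, part A)
print(pct(doubt_answer_exists, NOT_PURSUED))   # 11  (abstract)
print(pct(topic_not_in_resource, PURSUED))     # 26  (Table 2, part B, and abstract)
print(pct(WITH_REASON, NOT_PURSUED))           # 44  (share of nonpursued questions with a recorded reason)
```

Because reasons were recorded for fewer than half of the nonpursued questions, the 11% figure is best read as a lower bound on how often this obstacle occurred.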

A commonly reported reason for not pursuing an answer was “ready availability of consultation leading to a referral rather than a search.” In some cases, physicians may have decided that patient care would be better served by a referral rather than pursuit of an answer. However, time pressures appeared to play a greater role. For example, an internist asked, “Why does he have this elevated alkaline phosphatase? I will ask a gastroenterologist because I think I would waste a lot of time trying to look this up.”

Another internist described

a middle-aged man with erectile dysfunction who had a low free testosterone. FSH [follicle-stimulating hormone] and LH [luteinizing hormone] were normal. “Would I need to do an MRI [magnetic resonance imaging] of the sella? The TSH [thyroid-stimulating hormone] was normal. I sent him to endocrine. It was either that or a phone call, and sometimes it just comes down to not having enough time—the referral is quicker.”

Once an answer was pursued, the most commonly encountered obstacle was not finding the needed information in the resource selected by the physician. For example, a family physician, who saw a 72-year-old woman with abdominal pain, asked:

“What are the signs and symptoms of mesenteric artery occlusion and how do you test for it? She has end-stage coronary artery disease with stents in her coronary arteries. I looked in [two textbooks and one Web site]. There was no listing under ‘mesenteric artery’ or ‘vascular occlusion.’ I spent over an hour looking for an answer and came up with nothing useful.”

Recommendations for Authors

The investigators extracted 80 recommendations for authors from the interview transcripts. This list included separate entries for each variation on a recommendation theme (Appendix 1, available as an online data supplement at www.jamia.org). We then deleted 39 recommendations that were mentioned by fewer than five physicians and combined similar recommendations among the remaining 41. The result was a shorter list of 22 repeatedly mentioned recommendations, which fell into two groups: 12 about the “content” of the resource and ten about “access” to information within the resource (Table 3). The most common content recommendation was to provide comprehensive information that anticipates and answers the specific needs of practicing physicians. For example, when one internist was asked about the frustrations that she had experienced in the past when trying to answer her questions, she said:

“Not finding the concise answer that I want. I need a two-sentence answer that tells me what I can do in between patients. Because I can't read through a whole article … like when we were talking about hypercoagulability … I couldn't find anywhere, ‘OK, for a hypercoagulability workup, it should be this, this, and this.’”
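The first reduction step described above, which drops recommendations mentioned by fewer than five physicians, can be sketched as a simple count-and-filter. The Python below is a minimal illustration under stated assumptions. The function names and the toy data are hypothetical, and the labels "be concise" and "use color coding" are placeholders rather than coded study data. Using a set of physician identifiers per recommendation mirrors the rule that repeat mentions within one interview were counted only once.

```python
from collections import defaultdict

MIN_PHYSICIANS = 5   # threshold stated in the text


def physicians_per_recommendation(mentions):
    """Count distinct physicians per recommendation from (physician_id, recommendation) pairs."""
    by_rec = defaultdict(set)
    for physician_id, rec in mentions:
        by_rec[rec].add(physician_id)     # a set ignores repeat mentions by the same physician
    return {rec: len(ids) for rec, ids in by_rec.items()}


def keep_repeated(counts, minimum=MIN_PHYSICIANS):
    """Keep only recommendations mentioned by at least `minimum` physicians."""
    return {rec: n for rec, n in counts.items() if n >= minimum}


# Hypothetical toy data, not taken from the interviews
mentions = [(p, "be concise") for p in range(1, 7)]           # physicians 1-6
mentions += [(1, "be concise")]                               # repeat mention by physician 1
mentions += [(p, "use color coding") for p in range(1, 4)]    # physicians 1-3 only

counts = physicians_per_recommendation(mentions)
kept = keep_repeated(counts)
print(counts)  # {'be concise': 6, 'use color coding': 3}
print(kept)    # {'be concise': 6}
```

The second step, combining similar recommendations among the remaining 41 into 22, relied on investigator judgment and is not something this sketch attempts to automate.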

Table 3. Recommendations for Authors Based on Interviews with 48 Physicians

| Content | n (%)* |
|---|---|
| 1. Comprehensive information | |
| a. Topic coverage: Cover topics comprehensively by anticipating and answering clinical questions that are likely to occur | 36 (75) |
| b. Direct answers: Answer clinical questions directly | 14 (29) |
| c. Treatment: Include and emphasize detailed treatment recommendations; provide full prescribing information† | 20 (42) |
| d. Summary: Provide a summary with bottom-line recommendations | 15 (31) |
| e. Specific information: Provide enough detail so that a physician unfamiliar with the topic could apply the information directly to patient care | 13 (27) |
| f. Vagueness: Avoid vague statements | 6 (13) |
| g. Action: Tell the physician what to do; physicians must decide what to do (and when to do nothing), even when the evidence is insufficient | 20 (42) |
| 2. Trust | |
| a. Evidence: Provide a rationale for recommendations by citing evidence; separate descriptions of original research from clinical recommendations | 25 (52) |
| b. Practicality: Temper original research with practical considerations (e.g., recommended management in rural hospitals and clinics) | 19 (40) |
| c. Experience: Temper original research with clinical experience; address important questions regardless of the evidence available | 14 (29) |
| d. Updates: Update the information frequently | 16 (33) |
| e. Authority: Present information in a recognized, respected, authoritative resource | 8 (17) |
| Access | |
| 1. Index and search function | |
| a. Index cross referencing: Include alternate terms, clinically oriented terms, and common abbreviations with page numbers at each term | 17 (35) |
| b. Computer search function: Provide a user-friendly, intuitive interface and search function | 20 (42) |
| c. Search speed: Provide a search function or index that is quick to use | 14 (29) |
| d. Interactive computer interface: Allow entry of patient-specific data, such as age and gender, to help narrow the search | 6 (13) |
| 2. Clinical organization | |
| a. Conciseness: Be concise, succinct, and to the point | 26 (54) |
| b. Clinical findings: Organize from the clinician's perspective, starting with undiagnosed clinical findings (e.g., “The Approach to Dyspnea”) as well as with diseases (e.g., “The Approach to Pneumonia”) | 13 (27) |
| c. Algorithms: Present recommendations using a stepwise approach or an algorithm in which all important outcomes are addressed | 14 (29) |
| d. Rapid information access: Help physicians locate information quickly and easily by using lists, tables, bullets, and bolded subheadings; avoid lengthy uninterrupted prose | 37 (77) |
| e. Links: Provide links to related topics with full text of cited articles | 12 (25) |
| 3. Physical and temporal accessibility: Ensure physical and temporal accessibility by presenting information in commonly available books, journals, and Web sites that are available 24 hours a day | 17 (35) |

*n denotes number of physicians making the recommendation. Percentages are number of physicians making recommendation divided by total number of physicians (n = 48).

†Trade names, generic names, starting dose, usual dose, maximum dose, pediatric dose, geriatric dose, dosage in renal failure, dosage forms, tablet description, indications, contraindications, drug interactions, safety in pregnancy, safety in breast-feeding, safety in children, adverse effects with information about which adverse effects are most important clinically, specific clinical and laboratory monitoring recommendations (avoid vague statements such as “monitor liver function periodically”), serum drug level monitoring, treatment of overdose, clinically important kinetics, criteria for stopping drug. Include prescribing details for drugs that are commonly used in children, regardless of their approval status.

When another internist was asked what advice he would have for medical Web site developers and textbook editors, he said “mainly to try to find out for each subject, the real-life questions that come up, and don't invent questions and try to answer them.”

Other common recommendations included providing current information, providing an evidence-based rationale for recommendations, and telling the physician specifically “what to do.” For example, an internist said “ … like the thyroid antibodies that we talked about. It never told me exactly what do I do with an abnormal thyroid-peroxidase antibody even though their thyroid function tests are normal.”

The most common “access” recommendations focused on making the resource efficient to use. For example, when one family physician was asked to describe the qualities of ideal information resources, she said,

“Quick access. Something that I can get a hold of very quickly and look it up very quickly. The problem that I run into with a lot of stuff is that it's buried and I can't get that information. Charts, tables, bold print always help. Relevant information without all the … you know, I appreciate clinical studies and all that, but I don't need to read through that when I'm trying to address a patient question immediately. It's good to have those referenced, or the information there, but I need a short synopsis. What do I need to do to this patient right now?”

Discussion

Key Findings

The physicians in this study pursued more than half of the questions that they asked. They cited doubt about the existence of needed information as the most common reason for not pursuing a question. Other common reasons included lack of time and relative lack of question importance. Once an answer was pursued, the most commonly encountered obstacle was the absence of needed information in the selected resource. When physicians were asked to recommend improvements to clinical resources, they requested comprehensive resources that answered their questions. They wanted rapid access to concise answers that were easy to find and told them what to do in highly specific terms. Their specific recommendations (as detailed in Appendix 1, available as an online data supplement at www.jamia.org) could be helpful to clinical information resource developers.

Previous Studies

Our findings are consistent with those of Gorman and Helfand,4 who found that only two factors predicted pursuit of answers: the physician's belief that a definitive answer existed and the urgency of the patient's problem. In that study, only 88 of the 295 questions (30%) were pursued, and 70 of these 88 were answered. In other studies, the proportion of pursued questions ranged from 29% to 92%.14,37,38,39,40 Covell and colleagues6 found 81 barriers that hindered internists from answering their questions, and lack of time was the most frequent.6 In that study, physicians who pursued answers found that 34% of the information was not helpful: 25% only partially answered the question and 9% was considered unreliable. Connelly and colleagues8 developed a model based on studies of family physicians in which availability and applicability of resources were more important than quality. They noted that “quickness of decision and action is usually required” and that “the resulting time constraints generally preclude extensive evaluation of alternatives.”41

In previous studies, Ely and colleagues3,31,42 developed a taxonomy of generic questions and identified 59 potential obstacles to answering questions. However, the design of these studies did not allow the investigators to determine the frequency with which these obstacles occurred in practice. The current study reports the frequency of obstacles and the recommendations of physicians for overcoming these obstacles.

Limitations

We studied a relatively small number of primary-care physicians, who practiced in a limited geographic area, and we excluded those older than age 45 who might have different experiences and perceptions regarding information seeking. The extent to which our findings can be generalized to other physicians is unknown. In our introductory letter to physicians, we said that our purpose was to learn about obstacles to answering questions. This statement may have led physicians to focus more on difficult questions than easily answered ones. Conversely, the investigator's presence may have stimulated more (and perhaps less important) questions than would have otherwise occurred—a type of “Hawthorne effect” in which an individual's behavior is altered by the observation itself.43,44 A review by Gorman45 found that the frequency of questions is highly dependent on the methods used to collect them. Previous studies, which did not focus on obstacles to answering questions, have provided different frequency estimates of unanswered questions.6,31,40

Some recommendations from physicians might be considered unrealistic by information resource developers. For example, it would be difficult for authors to answer practice-generated questions without a mechanism for collecting such questions and making them available. Our study was not designed to show whether following the recommendations would actually help answer questions or improve patient care.

We limited the study to younger physicians to increase the number of questions during each observation period. However, we analyzed data from a previous study in which physicians of all ages were included31 and found that the frequency of the ten most common question types did not differ by age group. (A 2 × 10 table [two age groups by ten question types] yielded a chi-square of 7.68 [nine degrees of freedom, p = 0.57].)
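The arithmetic in the parenthetical above can be checked with a short sketch. It assumes only the reported values (a 2 × 10 table and a chi-square of 7.68) and uses a standard closed form for the upper tail of the chi-square distribution, which applies when the degrees of freedom are odd. The function name `chi2_sf_odd_df` is a hypothetical helper introduced here for illustration; the source does not say what software the authors used.

```python
import math


def chi2_sf_odd_df(x: float, df: int) -> float:
    """Upper-tail probability P(X >= x) for a chi-square variable.

    Uses the closed form that is valid only for odd degrees of freedom:
    erfc(sqrt(x/2)) plus a finite sum of (df - 1) / 2 terms.
    """
    if df < 1 or df % 2 == 0:
        raise ValueError("this closed form only covers odd degrees of freedom")
    if x <= 0:
        return 1.0
    tail = math.erfc(math.sqrt(x / 2.0))
    # First term of the finite sum: sqrt(2x/pi) * exp(-x/2)
    term = math.sqrt(2.0 * x / math.pi) * math.exp(-x / 2.0)
    for j in range(1, (df - 1) // 2 + 1):
        tail += term
        term *= x / (2 * j + 1)  # next term divides by the next odd number
    return tail


# Values reported in the text: two age groups by ten question types.
rows, cols = 2, 10
chi_square = 7.68

df = (rows - 1) * (cols - 1)         # degrees of freedom for an r x c table
p_value = chi2_sf_odd_df(chi_square, df)

print(df, round(p_value, 2))
```

Under these assumptions the degrees of freedom come out to nine and the p-value rounds to the reported 0.57, well above conventional significance thresholds.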

The completeness of the data collection varied according to the busyness of the physician. Judgments about whether to pursue ancillary data about a question were subjective and based on factors such as the willingness of the physician to divulge information needs, the state of rapport between investigator and physician, and the intensity of annoyed looks from the nurse who was trying to keep the physician on schedule. The physicians who declined participation often cited “busyness” and fear that we would “slow them down.” In previous studies, we found that if physicians knew that they would be hounded for details every time they disclosed an uncertainty, they became less willing to report their uncertainties, especially if they were behind schedule. If we had put a higher priority on complete data collection in the current study, the resulting selection bias involving participants and the questions that they reported might have been at least as concerning as the lack of ancillary data for some questions.

In the interviews, we asked open-ended questions, which may have led us to underestimate the number of physicians who would have endorsed specific recommendations. For example, more than six physicians might have agreed that authors should avoid vague statements (▶) if all physicians had been asked about vagueness. However, our use of open-ended prompts may have provided a more accurate description and frequency estimate of the recommendations that physicians believe are most important.

Conclusion

The physicians in this study were unable to answer many of their patient-care questions because the resources they consulted did not contain the needed information. Such gaps are potentially correctable. To make their resources more useful, authors could benefit from two kinds of information. First, they could follow recommendations such as those in ▶ and Appendix 1 (available as an online data supplement at www.jamia.org). Second, they could access a database of actual questions, such as the Clinical Questions Collection at the National Library of Medicine (prototype available at http://clinques.nlm.nih.gov/). The investigators are working with the Lister Hill National Center for Biomedical Communications to build this database, which currently holds 4,654 clinical questions that were collected in this study and previous studies.14,31,46 New clinical questions from additional studies, conducted under institutional review board approval, are being added. Authors can consult this database to strengthen their awareness of questions that actually arise in practice about various topics. For example, an author writing about pneumonia would find 51 questions in the current prototype using the key word, “pneumonia.” Among these questions are “What are the indications for hospitalizing a patient with pneumonia?” and “Are there rules, similar to the Ottawa ankle rules, for when to get a chest x-ray to rule out pneumonia?” These practical and important issues might not otherwise be covered in typical monographs on pneumonia. However, it remains to be seen whether implementing this strategy for covering a clinical topic will be helpful to clinicians.
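The keyword lookup described above can be pictured with a minimal sketch. It does not reflect the actual interface or schema of the Clinical Questions Collection, which the text does not describe; `find_questions` and the three-item sample list are hypothetical, and the sample questions are simply the ones quoted in this article.

```python
def find_questions(questions, keyword):
    """Return the questions that contain the keyword, ignoring case."""
    needle = keyword.casefold()
    return [q for q in questions if needle in q.casefold()]


# Hypothetical sample: questions quoted in this article, not database records.
sample_questions = [
    "What are the indications for hospitalizing a patient with pneumonia?",
    "Are there rules, similar to the Ottawa ankle rules, for when to get "
    "a chest x-ray to rule out pneumonia?",
    "What are the screening guidelines for women with a family history "
    "of breast cancer?",
]

matches = find_questions(sample_questions, "pneumonia")
for question in matches:
    print(question)
```

An author drafting a chapter could scan the matching questions and confirm that each one is answered somewhere in the text.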

The physicians in this study had a focused and forceful message: Authors should anticipate and answer questions that arise in practice and should answer them with actionable, step-by-step advice. Talking about a disease or clinical finding is not enough. Busy practitioners need immediate, easy-to-find advice on what to do when faced with a disease or finding. Recommendations about what to do should be communicated in the form of algorithms, bulleted lists, tables, and concise prose.

Supplementary Material

An abstract describing preliminary results of this study was published and presented at the Society of Teachers of Family Medicine Annual Spring Conference, Atlanta, GA, September 21, 2003.

Supported by a grant from the National Library of Medicine (1R01LM07179-01).

Dr. Osheroff is employed by Thomson MICROMEDEX, Greenwood Village, CO. This company disseminates decision support and reference information to health care providers and could benefit financially if it followed the recommendations described in this manuscript (as could its competitors). Similar considerations apply to his past consultation to Merck on their Merck Medicus project. Dr. Ebell is employed by Group for Organization Learning and Development, Athens, GA, and Michigan State University, East Lansing, MI. In addition, he is an editor at American Family Physician and has developed a computer application, “InfoRetriever,” which helps physicians answer their patient-care questions; he owns stock in the company that licenses this software. All these associations could benefit financially if they endorsed the recommendations made in this paper. However, the authors do not believe this represents a conflict of interest in the usual sense.

The authors thank the 48 physicians who participated in this study.

References

- 1. Currie LM, Graham M, Allen M, Bakken S, Patel V, Cimino JJ. Clinical information needs in context: an observational study of clinicians while using a clinical information system. Proc AMIA Symp. 2003:190–4.

- 2. Allen M, Currie LM, Graham M, Bakken S, Patel VL, Cimino JJ. The classification of clinicians' information needs while using a clinical information system. Proc AMIA Symp. 2003:26–30.

- 3. Ely JW, Osheroff JA, Ebell MH, et al. Obstacles to answering doctors' questions about patient care with evidence: qualitative study. BMJ. 2002;324:710–3.

- 4. Gorman PN, Helfand M. Information seeking in primary care: how physicians choose which clinical questions to pursue and which to leave unanswered. Med Decis Making. 1995;15:113–9.

- 5. Ebell M. Information at the point of care: answering clinical questions. J Am Board Fam Pract. 1999;12:225–35.

- 6. Covell DG, Uman GC, Manning PR. Information needs in office practice: are they being met? Ann Intern Med. 1985;103:596–9.

- 7. Schneeweiss R. Morning rounds and the search for evidence-based answers to clinical questions. J Am Board Fam Pract. 1997;10:298–300.

- 8. Connelly DP, Rich EC, Curley SP, Kelly JT. Knowledge resource preferences of family physicians. J Fam Pract. 1990;30:353–9.

- 9. Feinstein AR, Horwitz RI. Problems in the “evidence” of “evidence-based medicine”. Am J Med. 1997;103:529–35.

- 10. Bergus GR, Randall CS, Sinift SD, Rosenthal DM. Does the structure of clinical questions affect the outcome of curbside consultations with specialty colleagues? Arch Fam Med. 2000;9:541–7.

- 11. Sackett DL, Richardson WS, Rosenberg W, Haynes RB. Evidence-Based Medicine. How to Practice and Teach EBM. New York: Churchill Livingstone; 1997.

- 12. Fozi K, Teng CL, Krishnan R, Shajahan Y. A study of clinical questions in primary care. Med J Malaysia. 2000;55:486–92.

- 13. Chambliss ML, Conley J. Answering clinical questions. J Fam Pract. 1996;43:140–4.

- 14. D'Alessandro DM, Kreiter CD, Peterson MW. An evaluation of information seeking behaviors of general pediatricians. Pediatrics. 2004;113:64–9.

- 15. Bell DS, Daly DM, Robinson P. Is there a digital divide among physicians? A geographic analysis of information technology in Southern California physician offices. J Am Med Inform Assoc. 2003;10:484–93.

- 16. Wyatt J. Use and sources of medical knowledge. Lancet. 1991;338:1368–73.

- 17. Cimino JJ. Linking patient information systems to bibliographic resources. Methods Inf Med. 1996;35:122–6.

- 18. Cimino JJ, Li J. Sharing infobuttons to resolve clinicians' information needs. Proc AMIA Symp. 2003:815.

- 19. Greer AL. The two cultures of biomedicine: can there be a consensus? JAMA. 1987;258:2739–40.

- 20. Gorman P. Does the medical literature contain the evidence to answer the questions of primary care physicians? Preliminary findings of a study. Proc Annu Symp Comput Appl Med Care. 1993:571–5.

- 21. Hersh WR, Crabtree MK, Hickam DH, et al. Factors associated with success in searching MEDLINE and applying evidence to answer clinical questions. J Am Med Inform Assoc. 2002;9:283–93.

- 22. Lei J, Chen ES, Stetson PD, McKnight LK, Mendonca EA, Cimino JJ. Development of infobuttons in a wireless environment. Proc AMIA Symp. 2003:906.

- 23. Sackett DL, Straus SE. Finding and applying evidence during clinical rounds: The “evidence cart.” JAMA. 1998;280:1336–8.

- 24. Ebell MH, Barry HC. InfoRetriever: rapid access to evidence-based information on a handheld computer. MD Comput. 1998;15:289–97.

- 25. Ebell MH, Slawson D, Shaughnessy A, Barry H. Update on InfoRetriever software. J Med Libr Assoc. 2002;90:343.

- 26. Cimino JJ, Li J, Graham M, et al. Use of online resources while using a clinical information system. Proc AMIA Symp. 2003:175–9.

- 27. Florance V. Clinical extracts of biomedical literature for patient-centered problem solving. Bull Med Libr Assoc. 1996;84:375–85.

- 28. Davidoff F, Florance V. The informationist: a new health profession? Ann Intern Med. 2000;132:996–8.

- 29. Greenhalgh T, Hughes J, Humphrey C, Rogers S, Swinglehurst D, Martin P. A comparative case study of two models of a clinical informaticist service. BMJ. 2002;324:524–9.

- 30. Smith R. What clinical information do doctors need? BMJ. 1996;313:1062–8.

- 31. Ely JW, Osheroff JA, Ebell MH, et al. Analysis of questions asked by family doctors regarding patient care. BMJ. 1999;319:358–61.

- 32. Lincoln Y, Guba E. Naturalistic Inquiry. Beverly Hills, CA: Sage Publications; 1985.

- 33. Crabtree BF, Miller WL. Doing Qualitative Research. 2nd ed. Thousand Oaks, CA: Sage Publications; 1999.

- 34. Fonteyn M, Kuipers B, Grobe S. A description of think aloud method and protocol analysis. Qualitative Health Res. 1993;3:430–41.

- 35. Ericsson KA, Simon H. Protocol Analysis. Verbal Reports on Data. Cambridge, MA: MIT Press; 1993.

- 36. Glaser BG, Strauss AL. The Discovery of Grounded Theory: Strategies for Qualitative Research. New York: A. de Gruyter; 1967.

- 37. D'Alessandro MP, Nguyen BC, D'Alessandro DM. Information needs and information-seeking behaviors of on-call radiology residents. Acad Radiol. 1999;6:16–21.

- 38. Green ML, Ciampi MA, Ellis PJ. Residents' medical information needs in clinic: are they being met? Am J Med. 2000;109:218–23.

- 39. Cogdill KW, Friedman CP, Jenkins CG, Mays BE, Sharp MC. Information needs and information seeking in community medical education. Acad Med. 2000;75:484–6.

- 40. Gorman PN. Information needs in primary care: a survey of rural and nonrural primary care physicians. Medinfo. 2001;10:338–42.

- 41. Curley SP, Connelly DP, Rich EC. Physicians' use of medical knowledge resources: preliminary theoretical framework and findings. Med Decis Making. 1990;10:231–41.

- 42. Ely JW, Osheroff JA, Gorman PN, et al. A taxonomy of generic clinical questions: classification study. BMJ. 2000;321:429–32.

- 43. Mayo E. The Human Problems of an Industrial Civilization. New York: MacMillan; 1933.

- 44. Wickstrom G, Bendix T. The “Hawthorne effect”—what did the original Hawthorne studies actually show? Scand J Work Environ Health. 2000;26:363–7.

- 45. Gorman PN. Information needs of physicians. J Am Soc Inf Sci. 1995;46:729–36.

- 46. Ely JW, Osheroff JA, Ferguson KJ, Chambliss ML, Vinson DC, Moore JL. Lifelong self-directed learning using a computer database of clinical questions. J Fam Pract. 1997;45:382–8.
