Abstract

Spike-timing-dependent plasticity (STDP) is considered critical to learning and memory functions in the human brain. Across various types of synapse, STDP is observed as different profiles of Hebbian and anti-Hebbian learning rules. However, the specific roles of diverse STDP profiles in memory formation still remain elusive. Here, we show that the symmetry of the learning rate profile in STDP is crucial to determining the character of stored memory. Using computer simulations, we found that an asymmetric learning rate generates flexible memory that is volatile and easily overwritten by newly appended information. Moreover, a symmetric learning rate generates stable memory that can coexist with newly appended information. In addition, by combining these two conditions, we could realize a hybrid memory type that operates in a way intermediate between stable and flexible memory. Our results demonstrate that various attributes of memory functions may originate from differences in the synaptic stability.

Introduction

Since Hebb established the concept of activity-dependent synaptic modulation1, synaptic plasticity of neural circuits has been considered the key mechanism of learning and memory function. Based on this dogma, there has been a number of efforts to explain the fundamental mechanism of memory—from molecular scale synaptic changes2 to population scale memory traces in neural circuits3. Extensive research has been done to examine the process of memory allocation4–6, how existing memories can be manipulated7–9, and how synaptic changes can form a specific type of memory10. A recent study also provided evidence of the link between synaptic changes and memory formation11. Despite such progress and findings, one important question still remains to be answered: What is the specific mechanism by which neural plasticity forms different types of memory?

Memory can be categorized into two types: flexible and stable forms of memory. The common ground in the dichotomous classifications is that the former decays quickly and is easily replaced by new input, whereas the latter decays relatively slowly and is robust against newly appended input12, 13. An intriguing finding from a recent study of the primate caudate nucleus, is that flexible and stable memories can be encoded in the same system14, 15. This finding raises the argument that flexible and stable memory might be formed in the same neural circuit, and may not require a completely different mechanism of memory formation. Although a number of theoretical models have been proposed for simulating memories with either flexible or stable features, little is known how to realize both types of memory in the same circuit.

Spike-timing-dependent-plasticity (STDP) is considered a key mechanism for memory formation in neural networks16–18 in which synaptic strength is updated according to the exact timing of pre- and postsynaptic spikes19–21. Diverse profiles of Hebbian and anti-Hebbian STDP have been observed across various types of post-synaptic cells22, 23 and synapses24–28. It has been reported that they can also change dynamically depending on the pre- and postsynaptic activity pattern29, synaptic cooperativity30, and synaptic strength at the moment19, 20. Computational models using STDP have successfully reproduced certain features of neuronal memory and memory circuits such as the assembly of inter-neural connectivity, pattern of sequential neural firing and noise robustness31–33. However, the mechanism for achieving flexible and stable memory characteristics has not been successfully explained based on these STDP rules.

Here, we introduce a novel model that asserts that the mathematical ‘stability’ of the synaptic learning rule would determine the characteristic of a memory stored in a neural network. Our hypothesis is that alteration of the learning rate symmetry in STDP can differentially regulate the synaptic stability so that it leads to generation of either flexible or stable memories. To test our idea, we designed a computer simulation using a model feedforward network to implement two types of symmetry profiles for learning rate: asymmetric learning rate STDP (AR)34, 35 and symmetric learning rate STDP (SR) as examples of two different profiles of synaptic stability. In this model, we defined “memory” as the ability of a system to retrieve consistent response spike patterns when a pre-trained input pattern was received repeatedly. Then we examined the performance of the system in terms of memory “sustainability”—how long the stored memories could last—and “appendability”—how robustly the previously stored memory could survive when new information was appended to memory. Our results showed that the difference in the synaptic stability profile could determine the characteristics of stored memory. Ultimately, we were able to implement a hybrid type of memory from the precise control of learning rate symmetry, which exhibited intermediate properties between flexible and stable memories. Our results may provide new insight for the role of STDP learning rate profile in memory formation.

Results

Symmetric and asymmetric learning rate models of STDP

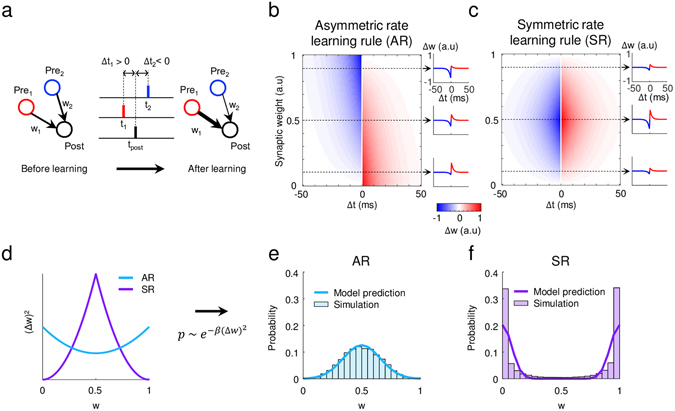

In a spike-timing-dependent-plasticity (STDP) model, change of synaptic strength or weight (w) depends on relative timing of pre- and postsynaptic spikes, Δt = tpost − tpre. For example, spikes of Δt > 0 leads to long term potentiation (LTP), while spikes of Δt < 0 leads to long-term depression (LTD) (Fig. 1a). For properly scaled synaptic modulation, the learning rate Δw is considered a function of synaptic strength w (Fig. 1b); so that any particular synapses could not become excessively strong16, 19, 20, 34. Here, we consider two models of learning rules—namely, asymmetric and symmetric rate STDP (Fig. 1b,c) where the weight-dependent scaling of learning rate for LTP and LTD is asymmetric or symmetric, respectively. First, asymmetric rate STDP (Fig. 1b) is a typically accepted form of learning rate model, and is sometimes called multiplicative weight dependence in theoretical studies34, 35. In this model, the learning rate Δw is biased towards positive (LTP) for weak synapses (w ≈ 0) and is biased towards negative (LTD) for strong synapse (w ≈ 1), so that weak synapses are likely to strengthen while strong synapse are easily weakened. On the other hand, in the symmetric rate STDP model (Fig. 1c), Δw is maximum around the mid-range of w (≈0.5) for both LTP and LTD, and decreases as w increases to ‘1’ or decreases to ‘0’. In this instance, any synapses, once they become very weak or strong by learning, tend to retain their synaptic weights.

Figure 1.

Weight-dependent learning rules: (a) A model of spike-timing-dependent plasticity (STDP). Synaptic strength change (Δw) depends on the relative spike timing between pre- and postsynaptic neurons (Δt). Spikes of Δt > 0 leads to long term potentiation (LTP), while spike of Δt < 0 leads to long-term depression (LTD). (b) Asymmetric learning rate (AR) model: Learning rates for LTP (red) and LTD (blue) are asymmetric for strong and weak synapses. (c) Symmetric learning rate (SR) model: Learning rates for LTP (red) and LTD (blue) are symmetric for strong and weak synapses. (d) Instability of the synapses (Δw2) for AR and SR models. (e,f) Weight density function for AR and SR was predicted from Boltzmann distribution (colored lines), and simulated using a single synapse model (histograms).

To predict the characteristics of each learning rule, we defined the ‘instability’ of the synapses as follows.

| 1 |

Synaptic instability indicates how much the synapse changes from the weight dependent learning rule, thus it is a function of the current value of synaptic weight. From the equations of AR and SR learning rate models in Fig. 1b,c, we mathematically calculated the synaptic instability (Fig. 1d). Then, in order to calculate the probability distribution of the synaptic state, we approximated the density function of w using the Boltzmann distribution of ∆w, by assuming that change of w can be approximated as a binary random-walk process with noisy inputs as

| 2 |

The estimated model probability distribution predicted that each synapse in the SR model converges to ‘0’ or ‘1’, while in the AR model it converges to 0.5. (Fig. 1e,f, colored lines). We verified this theoretical prediction with a model simulation of a single synapse (see Methods for details). In the simulation, an input neuron was connected to an output neuron and the two neurons were driven by 10 Hz random Poisson spike trains. Then spike pairs were generated randomly to input and output neurons for LTP and LTD, and the synaptic weight was updated by a given STDP rule. As a result, the simulated probability density function well matched the theoretical predictions (Fig. 1e,f, histograms). To summarize, each synapse in the SR model is stable at ‘0’ or ‘1’, while in the AR model, it is stable at 0.5. Due to this difference in instability profile in the learning rule, the SR model will try to retain synaptic weights located at ‘0’ and ‘1’, thus preserving memories well. On the other hand, synapses in the AR model will try to converge at 0.5, thus tending to erase stored memory.

Both symmetric and asymmetric learning rate models can learn input spike patterns

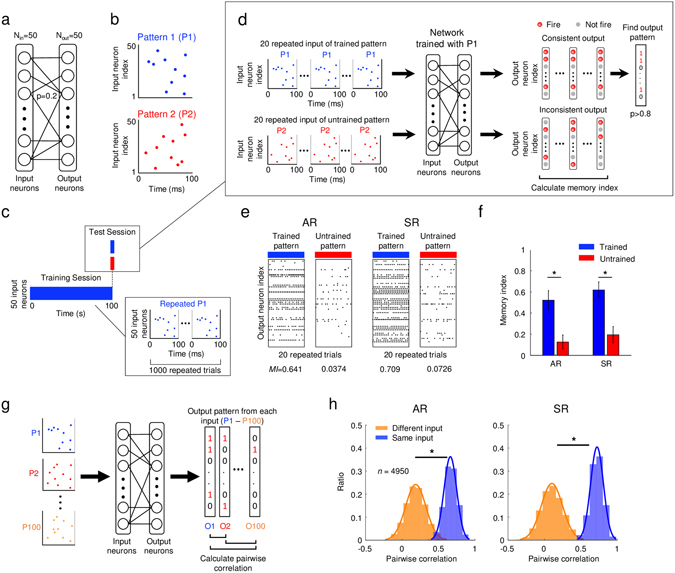

Using MATLAB, we constructed a model neural network where both input and output layers were composed of 50 leaky integrate-and-fire model neurons (Fig. 2a). Feedforward connections between the two layers were modelled as sparse-and-random synaptic connections, with connection probability of 0.2 between all pairs of input and output neurons. Next, as a simulation of arbitrary information given to the system, we designed “input spike patterns” consisted of 50 input neurons with a 100 ms time window. Every 50 input neurons fired exactly once with random timing within 100 ms such that the firing rate of each neuron was 10 Hz (Fig. 2b). Throughout the study, we used this temporal spike pattern to train our model network. As a simulation of learning and memory processes, we generated the same input patterns 1000 times (100 s in total) to train the network (Fig. 2c). The synaptic weight of each connection was updated using the STDP rules of either asymmetric or symmetric learning rate. For consistency between asymmetric and symmetric learning rate model simulations, all the parameters including the structure of random initial connectivity, synaptic weights, and input patterns were kept identical so that the difference in output activity was induced only from different learning rate profiles (Fig. 1b,c). After the pattern training process, in both the AR and SR models, we observed that the synaptic weights had bimodal distribution, similar to other traditional STDP learning systems16, 35 (Supplementary Fig. 1).

Figure 2.

Asymmetric learning rule (AR) and symmetric learning rule (SR) models for volatile/non-volatile memory: (a) A feedforward network model for memory: Input neurons (Nin = 50) were sparsely connected to output neurons (Nout = 50) with connection probability of 0.2. (b) Model input spike patterns: Each input neuron generates a spike at random timing within a 100-ms window. (c) Memory training and test scheme: In training sessions, a particular input pattern (P1) is fed into a network 1,000 times, for 100 s. (d) In each test session, the consistency of network response for trained and untrained patterns were estimated for 20 repeated inputs of identical patterns. (e) Response consistency of AR and SR models after training for one input pattern: Both AR and SR systems generated consistent firing patterns for trained input patterns, and inconsistent patterns for untrained input patterns. (f) Memory performance of the AR and SR models for 100 input patterns: Both AR and SR networks showed significantly higher memory indices for trained input patterns than for untrained input patterns (Mann-Whitney U-test: *p < 10−16, n = 100). Error bars represent the standard deviation. (g) To examine how the trained output pattern varies depending on the input pattern, 100 identical networks were trained from 100 different input patterns. The output pattern for each input was measured, and then pairwise correlations between them were calculated. (h) Distribution of the pairwise correlation for different and same inputs. The response correlation for different inputs (orange) was significantly lower than that for the same inputs (blue) in both AR and SR cases (Mann-Whitney U-test: *p < 10−16, n = 4950).

After training session of each network, we tested to see if the trained pattern was memorized so that the network could selectively respond to the pattern (Fig. 2d). We observed that both asymmetric and symmetric learning rate model networks could induce a consistent output spike pattern for the trained input; thus could distinguish the trained and untrained input patterns (Fig. 2e). To evaluate quantitatively how consistently the network responded to each trained pattern, we measured the “memory index” as an averaged pairwise cross-correlation between binary patterns of output firings for repeated trials (see Methods for details). As a result, for both asymmetric and symmetric learning rate models, the memory indices for trained pattern were significantly higher than those of untrained patterns, or randomly-ordered series of patterns (Fig. 2f and Supplementary Fig. 2) (Mann-Whitney U-test, p < 10−16 for both AR and SR models). We repeated the same simulation with different initial weights and confirmed that the trained and untrained patterns were consistently distinguishable regardless of the initial conditions (Supplementary Fig. 3). This result indicates that both learning rate models are capable of training networks to memorize and identify temporal spike patterns.

Then, to investigate how the trained output pattern varies depending on the input pattern36, we examined variation in the response pattern of the output neurons, which indicates a set of neurons consistently respond (above 80% chance) to a trained input (Fig. 2d, output pattern). We extracted 100 “output patterns” from 100 different input patterns, and calculated the pairwise correlations within 100 output patterns (Fig. 2g). Then, the distribution of pairwise correlation across different inputs was compared to that within the same input. In both the AR and SR models, we found that the response correlation for different inputs (Fig. 2h, orange) was significantly lower than that for same inputs (Fig. 2h, blue) (Mann-Whitney U-test, p < 10−16 for both AR and SR models). Therefore, we concluded that output patterns after training for different inputs are readily distinguishable in our model, whereas output patterns after training for the same inputs are fairly consistent.

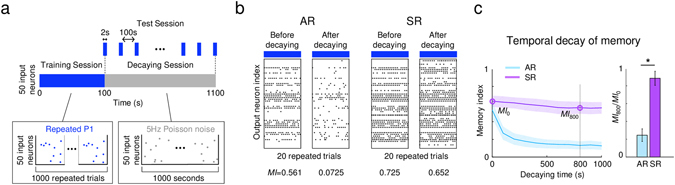

Symmetric learning rate model can form stable memory

The amount of memory decaying over time is a distinct factor in determining the degree of memory stability12. To test the decaying characteristics of each model after training with a particular input pattern, we introduced a 5-Hz Poisson noise input to the network as simulation of spontaneous neural activity in the network (Fig. 3a, decaying session). During the decaying session, we measured the memory index for every 100 s, to estimate the temporal degradation of memory (Fig. 3a, test session). As a result, we observed that the responses of the AR model after the decaying session were noticeably disturbed, indicating that the stored memory pattern was being erased, while that of the SR model remained nearly intact (Fig. 3b). Thus, the estimated memory index of the AR model decreased significantly whereas the SR model did not show a noticeable drop in the index.

Figure 3.

Comparison of AR and SR models for decaying by noise (a) Test scheme of memory decay: After 100 s of training, 5-Hz Poisson spikes were introduced to the networks for 1000 s to erase the stored memory. In decay sessions, we estimated the memory index every 100 s. (b) Response of AR and SR models after 1000 s of decay: The response pattern of the AR model became inconsistent output firing after the decay session, while the SR model showed consistent output firing even after decaying. (c) The time course of the memory index during the decay session: Memories in the AR model decayed rapidly, while those of the SR model did not show noticeable decay. The memory index ratio (MI 800/MI 0) of SR was significantly higher than that of AR (Mann-Whitney U-test: *p < 10−16, n = 100). Shaded area and error bars represent the standard deviation.

To quantify how much the initial memory was being preserved at each moment during the decaying session, we investigated the time-course of the memory index change (Fig. 3c). As expected, the memory decay over time appeared significant only in the AR model where the initial value of memory index quickly dropped as soon as the noise was introduced. Then we calculated the ratio of indices before the decaying session (MI 0) and after 800 s of decaying (MI 800). The ratio of the SR model, MI 800/MI 0 = 0.8848 was significantly higher than that of the AR model, 0.2452 (Mann-Whitney U-test, p < 10−16). We repeated the same simulation under different conditions of noise level, and confirmed that the observed difference between the AR and SR models was fairly consistent (Supplementary Fig. 4). To investigate how the SR model preserves old memories at the synaptic level, we examined synaptic weight changes during the decay process (Supplementary Fig. 5). During the decaying session, a bimodal distribution of the synaptic weights was sustained in the SR but not in the AR model. Given that synaptic weights hold memory information in this system, sustained bimodal weights in the SR model would be more likely to preserve old memory. Overall, these results indicate that the SR model can encode non-volatile or stable memory, while the AR model encodes more volatile or flexible memories.

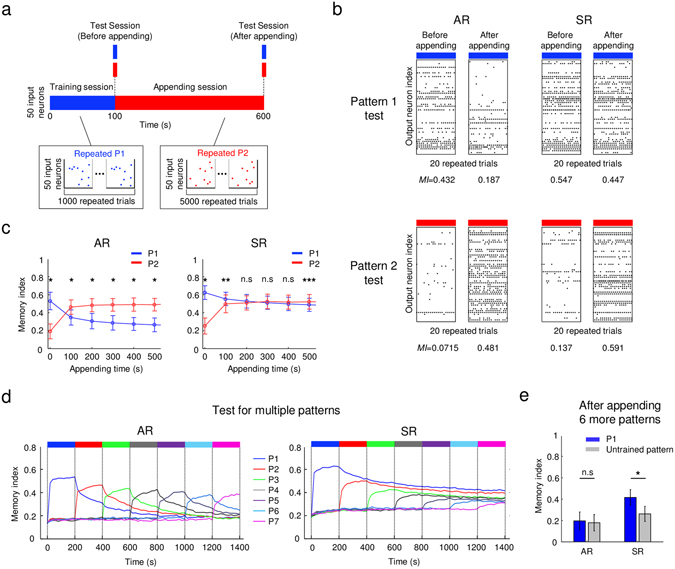

Symmetric learning rate model can append new memories

The ability to collect multiple memories is also a crucial feature of stable memory. For this, newly appended memories must not degrade previously stored memories. To test if this memory accumulation can be achieved in our models, both AR and SR networks were trained with multiple input patterns provided consecutively (Fig. 4a). First, we trained the network to memorize a pattern P1 for 100 s (training session), then we retrained the network with another pattern P2 (appending session). We tested the memory performance of the network response to P1 and P2, for every 100 s during the appending session. We first observed that both asymmetric and symmetric rate models showed a consistent response pattern for P2 after appending, confirming that the pattern P2 was memorized (Fig. 4b, bottom). In the AR model, however, the network response to P1 was noticeably altered, indicating that the previously stored memory was disturbed by the newly appended memory (Fig. 4b, top left). On the other hand, in the SR model, the response activity to pattern P1 was observed to be consistent even after appending (Fig. 4b, top right), suggesting that the information of both P1 and P2 was memorized in the circuit. For further quantitative analysis, we measured the instantaneous values of memory index, for every 100 s during the appending session (Fig. 4c). As expected, the memory index for P1 in the AR model decreased rapidly as soon as the training of P2 started, while that in the SR system changed only slightly.

Figure 4.

Comparison of AR and SR models for appending new memories: (a) Test scheme for appending memory: After 100 s of training with pattern P1, another input pattern P2 was trained for 500 s to test the ability of each model to append memory, and the memory indices for P1 and P2 were measured before and after each appending session. (b) The response of each model to old and new input patterns: The AR model lost P1 memory after appending P2 (left), while the SR model did not lose P1 memory even after appending new memory (right). (c) Memory index change during appending session: In the AR model, the memory index for the old pattern (P1) decayed fast as the memory of the new pattern (P2) formed. In the SR model, the memory index for P1 slightly decreased but was kept as high as that of the newly formed memory of P2 (Mann-Whitney U-test: *p < 10−16, **p < 10−4, ***p < 0.01, n = 100). (d) Memory index change for appending multiple patterns: Different temporal patterns (P1-P7) were sequentially introduced to the model networks: In the AR model, old memories rapidly decayed as soon as new patterns were introduced. In the SR model, both old memories and new memories were kept together, well above the response level for the untrained inputs. (e) P1 memory index after appending six new patterns. In the AR model, the memory index of P1 was remarkably decreased, indistinguishable from that of an untrained pattern (Mann-Whitney U-test, p = 0.2232, n = 100). On the other hand, in the SR model, the memory index of the P1 after training was still significantly higher than that of an untrained input (Mann-Whitney U-test: *p < 10−16, n = 100). Error bars represent the standard deviation.

We repeated the same test for multiple inputs. We sequentially gave seven different input spike patterns, each for 200 s, to both the AR and SR models. Then we assessed the memory index of each pattern during training (Fig. 4d). In the AR model, the trace of old patterns rapidly decayed, to be replaced by newly appended memories. The memory index of the pattern P1 after appending six different new patterns (P2-P7) was markedly reduced (Fig. 4d, left and Fig. 4e, left). The condition became indistinguishable from that of an untrained pattern (Mann-Whitney U-test, p = 0.2232). On the other hand, in the SR model, the memory of old pattern was preserved. The memory index of the pattern P1 after training of P2–P7 was reduced to some extent, but still significantly higher than that for untrained input (Mann-Whitney U-test: *p < 10−16) (Fig. 4d, right and Fig. 4e, right). Therefore, we confirmed that the memory of P1 in the AR model was lost after training with new patterns, whereas the memory of P1 remained after the accumulation of new memories in the SR system. This also indicates that the memory in the SR model network cannot be easily erased, because it will accumulate all the input patterns that the capacity of the system allows. To investigate how memories are allocated in the synapses of the network during this process, we measured the ratio of synaptic weight, which converges to ‘0’ or ‘1’, and indicated the information contained (Supplementary Fig. 6). As the number of appending patterns increased, in the SR model, the ratio of synaptic weights converged to either ‘0’ or ‘1’ monotonically increased, while the ratio of converged weights of AR model remained constant (≈0.4) through appending session. This result shows that, in the SR model, new inputs were stacked in available synapses that did not contain previous information, while in the AR model, new inputs were overwritten on synapses storing previous memories.

Linear combination of two learning models generates a hybrid memory

Next, we tested to see if we could create a different type of memory system by combining the AR and SR models. For this, we linearly integrated two STDP kernels, taking the weighted summation of the asymmetric and symmetric learning rate profiles (Fig. 5a), with a parameter α as the proportion of symmetric rate STDP in the linear combination. By regulating α, we could control the properties of new memory, making them closer to AR (α = 0) or SR (α = 1) models. As a result, for 0 < α < 1, we could create a new type of memory that decayed slower than AR but faster than in the SR model, and that had an intermediate feature of memory appendability between that in the AR and SR models. We refer to this type of memory as “hybrid memory.”

Figure 5.

A hybrid memory model: (a) Hybrid learning rule was defined as the weighted sum of AR and SR models, and the portion of SR was denoted by α. (b) Temporal decay of the memory in the hybrid model was tested as in Fig. 3c. The hybrid model with α = 0.5 showed intermediate characteristics between the AR and SR models (orange line). The error bars represent the standard deviation for 100 trials. (c) Modulation of memory decay for various values of α: As α increased, the ratio of memory indices (MI 800/MI 0) increased linearly, suggesting that the characteristics of the hybrid model make a linear transition from the AR to the SR model. (d) The decay of old memory with newly appended memory in the hybrid model was tested as in Fig. 4c. The memory index in the hybrid models with α = 0.5 and 0.99 were intermediate values between those of the AR and SR models, but showed nonlinear transition as α varied. (e) The proportion of old memory was maintained as α varied: As α increased from 0 to 0.99, the ratio of memory indices (MI 800/MI 0) increased linearly. After this, the ratio abruptly increased as α changed from 0.99 to 1 (Wilcoxon signed rank test: *p < 10−9, n = 100). The data point at α = 1 was excluded from the linear regression (dashed line). The error bars represent the standard deviation.

First, to examine the decay properties of a hybrid memory (α = 0.5), the network was tested in a decaying session (5 Hz Poisson noise, 1000 s) after training of an input pattern, as previously. Once every 100 s during the decaying session, we measured the memory index. As expected, the hybrid network showed intermediate characteristics in the memory decay test: the stored memory decayed faster than in the SR, but slower than in the AR models (Fig. 5b). In addition, as α increased, the ratio of the memory indices, MI 800/MI 0 increased linearly (Fig. 5c). This suggests that the transition from AR to SR model could be continuous.

Similarly, we also examined the memory appending feature of the hybrid model. With models of various α, we trained the network with a pattern P1 for 100 s, and then trained with another pattern P2 for 1000 s, while estimating the memory indices of pattern P1 for every 100 s. Different from the memory decay feature, the hybrid memory showed a nonlinear transition from AR to SR model. As we increased α from 0 to 1, the profile of the memory index of the seed pattern P1 in the hybrid memory model, differed only slightly from that of the AR model, up to a fairly high value of α (≈0.9) (Fig. 5d). For a very high value of α (near 1), the profile of the memory index suddenly transitioned to that of the SR model (Fig. 5d, α = 0.99). To examine this transition quantitatively, we measured the ratio of memory indices MI 800/MI 0 while varying α. As α increased from 0 to 0.99, MI 800/MI 0 slightly increased, but suddenly spiked when α increased from 0.99 to 1 (Fig. 5e, Wilcoxon signed rank test, p < 10−9). This implies that, the memory appending feature may not be consistent with the memory decaying feature in the model. For quantitative analysis on this nonlinear transition for varied α, we fitted each decay curve in Fig. 5b,d to an exponential function and examined the fitted parameters (Supplementary Fig. 7). As a result, we found that the decay rate, amplitude, and asymptote of the fitted curves showed a linear change for α < 0.9, and then spiked nonlinearly, near α = 1. Therefore, we concluded that the hybrid model showed a linear transition in its memory feature in a large regime of parameters, and became nonlinear only near α = 1 (like the pure SR model), which showed a stable memory characteristic.

Discussion

In this study, we introduced our hypothesis that the stability profile of the synaptic learning rule would determine the characteristic of a memory stored in a neural network, and showed that our simulated results supported this idea. The SR model retained encoded memories without being affected by noise or new memories and did not lose its ability to learn new information. On the other hand, the AR model continuously replaced stored memories with newly encoded inputs, and the hybrid model showed intermediate properties between those of the SR and AR models. In the current study, we focused on comparing SR and AR models to illustrate our theoretical idea regarding the effect of the synaptic stability and the STDP profile on memory function. However, these models are two extreme cases of our conceptual model, whereas the most generalized form of our theoretical model is the hybrid case with characteristics of both models. In addition, considering that (1) stability and flexibility are both basic properties of memory circuits, (2) the relative degree of stability and flexibility may vary from circuit to circuit, we may also suggest that our hybrid model is a reasonable design for describing memory characteristics of various circuits. For these reasons, we suggest that the hybrid model is the most realistic form of STDP that we may expect to observe in the experimental data. Various local neural circuits involved in memory function may have different properties in terms of stability and flexibility. This can be readily assessed by our hybrid model, and an experimental study may be able to observe various STDP profiles in local circuits, providing a good match to our hybrid model. In addition, these results may account for the mechanism of selectively controlled memory storage in the brain that, in turn, allows adaptation to both fast-changing and long-lasting environments.

One promising candidate with which to test this idea is the basal ganglia system, where flexible and stable value information is coded distinctively in the caudate nucleus subregions14, 15. Previous studies reported that flexible and stable value information are coded distinctively in the caudate nucleus subregions: the caudate “head” responded to flexible values, whereas the caudate “tail” was more sensitive to stable values. More interestingly, the neurons in the caudate “body” responded to both flexible and stable values in a mixed manner. This finding implies that fast-changing and long-lasting memories can potentially be formed in very similar neural circuits, and our model can provide a plausible explanation for the formation of flexible and stable memories within local neural circuits. This experimental observation can be readily reproduced in our model network in which the STDP learning rate symmetry profile gradually varies depending on where a neuron is located in the caudate nucleus. Using a hybrid STDP model, even the intermediate memory property of the caudate “body” can be readily realized.

Our finding that STDP symmetry is a potential key determinant of memory type can be explained in terms of synaptic strength stability. A number of theoretical circuit studies propose that distribution of synaptic strength tends to become bimodal during learning16, 35, 37, 38, and that the bimodally converged synapses are thought to play an important role in memory storage. In our model simulations, we confirmed that synapse strengths converged to either minimum or maximum value during the learning process, indicating that information is stored in these converged synapses (Supplementary Figs 1 and 3). In the SR model, the weight of a synapse is bi-stable at its minimum and maximum due to its stability profile; thus, a converged synapse cannot easily escape from its converged weight value (Supplementary Fig. 5). Notably, the network can store new information until every single synaptic connection is converged to minimum or maximum, and thus the number of available synaptic connections in the network will determine the memory capacity of the system. On the other hand, due to the synaptic stability profile of the AR model34, 35, 39, converged synapse strength could easily decay toward the mid-range value of weight by noise or other inputs, similar to the decay of stored memory in previous simulation studies on memory lifetime40, 41.

It is also notable that, even though we used a spike-pair-based model, our model does not require any particular type of STDP model. Our model idea is simply that memory performance depends on the STDP instability profile, regardless of the specific details of the type of STDP model. For this reason, we chose the spike-pair-based model as the simplest form of STDP, but adequate to generate different profiles of synaptic stability. We confirmed that our main result is consistent even with different types of STDP models such as the voltage-based model (Supplementary Fig. 8), as long as the profile of synaptic stability is designed similarly.

One of the distinctive features of our simulation is that the measured memory performance in terms of consistency of output spike pattern, which is assumed to be the formation of a memory engram, was compatible with previous observations. In fear-conditioning experiments8, 42, a consistent formation of neural firing pattern was reported in mice that showed well trained freezing behavior. Thus, in our model, a consistent firing pattern of output neurons approximates the selective firing of neurons or the structure of an engram in the hippocampus or lateral amygdala. In this way, we could quantitatively simulate the process of memory formation, decay and modulation, in terms of firing pattern consistency, and could also estimate the “sustainability” and “appendability” of stored memory.

Although we successfully showed the characteristics of memories formed with different STDP learning rate profiles, a number of additional studies ought to be conducted to confirm the biological validity of the model. Firstly, for simplicity, the model network used in this study does not contain any inhibitory interactions. Probably, lateral inhibition plays a crucial role in competition between neuronal assemblies during memory formation43–45. Although we focused on the effect of different STDP learning rate symmetry in the current study, we will extend our work to consider a more complete scenario on the mechanism of dynamic memory formation by including lateral inhibition, neural competition and synchronized neural activities. Secondly, in the simulations, we introduced some amount of noise fluctuation in the neural membrane potential which facilitated spike generation in the initial stage of learning by stochastic resonance. Stochastic resonance allowed initiation of synapse strength modulation by STDP that required output spikes induced by input spikes. Considering the fact that the amplitude of noise used in our simulation was higher than that reported in experiments46, noise may not be the only source of initiation of synaptic modulation. For example, another possible candidate is spontaneous gamma oscillation47–49, commonly observed across various brain regions. It is known that the amplitude and phase of gamma oscillation can be dynamically modulated by various network properties, such as theta frequency rhythm or local inhibition level. Therefore, we believe that the introduction of spontaneous gamma oscillation to the network may play a role in the initiation of spike-timing-dependent synaptic modulation by stochastic resonance, as well as dynamic control of learning and memory. Realization of this scenario will also be an important part of our follow-up studies. Lastly, in this case we manually balanced the network activity, but doing so was limited to a specific range of input firing rates. In order to make the system stable for any arbitrary input, we believe a homeostatic plasticity mechanism50 should be applied to the system in future studies.

Although one of the key components of our model, the symmetric learning rate profile, is yet to be explicitly observed in experiments, our results can still be valid not only with SR and AR models, but with any other plasticity model that could generate a stable and an unstable condition of learning. The bottom line of the SR model is that the synapses become stable as synaptic strength becomes either very strong or very weak. This condition can be achieved by various factors, such as neuromodulator that affects to LTP and LTD simultaneously51, 52, gain modulation or controlled excitability53 by feedback loop that temporally inhibits spike generation of target neurons with recently strengthened synapses. These scenarios can be validated experimentally, based on our theoretical prediction.

Overall, we propose a simple but powerful model that explains important features of memory formation. We believe that our model can shed light on the study of how memory is formed, erased and controlled.

Methods

Single Neuron Model

All simulations were performed using MATLAB codes. For a single neuron simulation, we used a leaky-integrate-and-fire neuron model. The membrane voltage of neuron j at time t can be updated by:

| 3 |

where C is membrane capacitance, gL is leak conductance, EL is resting potential, and Esyn is reversal potential. We used the commonly accepted values for physiological parameters (C = 1 μF, gL = 0.4 μS, EL = −65 mV, Esyn = −5 mV, dt = 1 ms). A Gaussian noise current, Inoise is given to each neuron with mean 0 and standard deviation 1.2 nA. Voltage gated channel conductance gj is determined by the following equation

| 4 |

where Si(t) denotes the spike train of presynaptic neuron i, and wij means synaptic weight between pre- and post-synaptic neuron. Time constant τsyn determines the decay speed of EPSP and csyn implicates the size of the excitatory postsynaptic conductance evoked by an input spike. When Vj reaches Ethreshold = 55 mV, an action potential is generated immediately and Vj is reset to resting potential EL. We used the commonly accepted values for these parameters (τsyn = 3 ms, csyn = 0.12 μS ms−1, dt = 1 ms).

Mathematical Model of Synaptic Plasticity

The update of the synaptic weight is determined by the spike timing interval Δt = tpost − tpre as in the following equations:

| 5 |

where k and τ are parameters that determine the amplitude and decay of STDP kernel, and ε+(w) and ε−(w) denote weight-dependent learning rates for positive and negative values of Δt. We set k+ = 0.06, k− = −0.09, τ+ = 3 ms, τ− = 15 ms. To make w stay in range wmin < wij < wmax, we implemented three different learning rate profiles: asymmetric (AR), symmetric (SR), and hybrid. The AR model, frequently called the multiplicative learning rate34, 35, is defined as

| 6 |

where wmax = 1 and wmin = 0. In this model, the weight change (Δw) is maximum at w = 0 for LTP and w = 1 for LTD.

On the other hand, the SR model uses a two-sided, linear-bound method as in

| 7 |

The hybrid model uses a linear combination of εAR and εSR as

| 8 |

where α denotes the proportion of the symmetric rate STDP rule.

The synaptic instability (Fig. 1d) was defined as

| 9 |

The learning rates of LTP and LTD were estimated separately, due to the asymmetry of the AR model.

The probability density function of w was approximated using the Boltzmann distribution of ∆w, by assuming that change of w can be approximated as a binary random walk process with noisy inputs as

| 10 |

Model Simulation of a Single Synapse

We designed a single synapse model that consisted of one presynaptic neuron, one postsynaptic neuron, and their connection (Fig. 1e). The synaptic weight between the pre- and postsynaptic neuron was initialized to a random value between ‘0’ and ‘1’. Two neurons were driven by 10 Hz random Poisson spike trains for 1000 s. Then spike pairs to the input and output neurons for LTP and LTD were generated randomly, and the synaptic weight was updated by the given STDP rule, either AR or SR. We examined the probability distributions in 10,000 trials of how weights changed after sufficient time (1000 s).

Model Neural Network

In this study, the model network we used consists of two layers, 50 excitatory input neurons, and 50 output neurons, with sparse random feedforward connections. The connection probability between each input and output neuron was set to 0.2. Initially, the synaptic weights or connection strength between input and output neurons were randomly sampled from normal distribution, with mean 0.5 and standard deviation 0.05.

Model Input Pattern for Memory Training

To train the network, we designed “input spike patterns” consisting of 50 input neurons with a 100 ms time window. Every 50 input neurons fired exactly once with a random timing within 100 ms such that the firing rate of each neuron was 10 Hz. Training for each pattern was done for 100 s by feeding 1000 successive identical patterns with no delay.

Test of Memory Performance

To confirm the network had “memorized” a trained pattern, we tested the response of the network with a trained and an untrained pattern. Each test input pattern was given repeatedly (20 times), and we represented firings of output neurons as a binary number—if it fires at least once, then ‘1’—to simply indicate if a neuron is involved in the memory pattern. Even if an output neuron fired more than once per trial, we counted it as ‘1’ (fire). When there was no firing at all during one trial, we counted it as ‘0’ (not fired). Then we calculated the memory index from these 20 binary response vectors. The memory index was defined as the average pairwise cross-correlation between output neuronal firing for repeated inputs as

| 11 |

where Sm denotes the mth binary vector of output firing, Npair is the number of all possible pairs, 20C2 = 190, and Nfiring is the number of output neurons fired at least once during 20 repetitions of input. Thus, the memory index is normalized in the range from 0 to 1.

Test of the Variation of Output Pattern

To examine how the output pattern varies depending on the input pattern, we defined the “output response pattern” as a set of neurons that consistently (above 80% chance) respond to a trained input. Thus, this “output pattern” represents a set of neurons involved in memory after the training. We compared this output pattern within the same input and across different inputs. From “100 different input patterns” and “100 same input patterns”, we trained the 100 identical networks and got 100 output patterns in the form of binary vectors. Then we calculated the pairwise correlations within 100 output patterns (total 4950 pairs). The response correlation “within the same input” and “across different inputs” was compared (Fig. 2h).

Test of Memory Decay

To simulate the temporal decay of memory with background noise, a 5-Hz Poisson random spike train was given to the trained network for T seconds. To quantify the amount of memory maintained in the network, the ratio between the memory index at t = T and at t = 0 was estimated as

| 12 |

where t denotes the time in the decay session.

Electronic supplementary material

Acknowledgements

We thank William Newsome, Bong-Kiun Kaang, Min Whan Jung and Yong-Seok Lee for providing comments on earlier versions of this manuscript. This research was supported by Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Science, ICT & Future Planning (NRF-2016R1C1B2016039, NRF-2016R1E1A2A01939949) and the Future Systems Healthcare Project of KAIST (to S.P.).

Author Contributions

Y.P. designed, performed and analyzed the simulations and wrote the manuscript. W.C. analyzed the simulations and revised the manuscript. S.P. conceived the project, designed the simulations and wrote the manuscript. All authors discussed and commented on the manuscript.

Competing Interests

The authors declare that they have no competing interests.

Footnotes

Electronic supplementary material

Supplementary information accompanies this paper at doi:10.1038/s41598-017-05929-2

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Hebb DO. The Organization of Behavior. Organ. Behav. 1949;911:335. [Google Scholar]

- 2.Bliss TV, Collingridge GL. A synaptic model of memory: long-term potentiation in the hippocampus. Nature. 1993;361:31–39. doi: 10.1038/361031a0. [DOI] [PubMed] [Google Scholar]

- 3.Josselyn SA, Köhler S, Frankland PW. Finding the engram. Nat. Rev. Neurosci. 2015;16:521–534. doi: 10.1038/nrn4000. [DOI] [PubMed] [Google Scholar]

- 4.Silva AJ, Zhou Y, Rogerson T, Shobe J, Balaji J. Molecular and cellular approaches to memory allocation in neural circuits. Science. 2009;326:391–5. doi: 10.1126/science.1174519. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Rogerson T, et al. Synaptic tagging during memory allocation. Nat. Rev. Neurosci. 2014;15:157–69. doi: 10.1038/nrn3667. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Han J-H, et al. Neuronal Competition and Selection During Memory Formation. Science. 2007;316:457–460. doi: 10.1126/science.1139438. [DOI] [PubMed] [Google Scholar]

- 7.Han, J-hee & Josselyn, S. Selective Erasure of fear memory. 323, 1492–1496 (2009). [DOI] [PubMed]

- 8.Ramirez S, et al. Creating a False Memory in the Hippocampus. Science. 2013;341:387–391. doi: 10.1126/science.1239073. [DOI] [PubMed] [Google Scholar]

- 9.Redondo RL, et al. Bidirectional switch of the valence associated with a hippocampal contextual memory engram. Nature. 2014;513:426–430. doi: 10.1038/nature13725. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Mongillo G, Barak O, Tsodyks M. Synaptic theory of working memory. Science. 2008;319:1543–6. doi: 10.1126/science.1150769. [DOI] [PubMed] [Google Scholar]

- 11.Hayashi-Takagi A, et al. Labelling and optical erasure of synaptic memory traces in the motor cortex. Nature. 2015;525:333–8. doi: 10.1038/nature15257. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Cowan N. What are the differences between long-term, short-term, and working memory? Prog. Brain Res. 2008;169:323–38. doi: 10.1016/S0079-6123(07)00020-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Tetzlaff C, Kolodziejski C, Markelic I, Wörgötter F. Time scales of memory, learning, and plasticity. Biol. Cybern. 2012;106:715–726. doi: 10.1007/s00422-012-0529-z. [DOI] [PubMed] [Google Scholar]

- 14.Kim HF, Hikosaka O. Distinct basal ganglia circuits controlling behaviors guided by flexible and stable values. Neuron. 2013;79:1001–10. doi: 10.1016/j.neuron.2013.06.044. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Kim HF, Ghazizadeh A, Hikosaka O. Dopamine Neurons Encoding Long-Term Memory of Object Value for Habitual Behavior. Cell. 2015;163:1165–1175. doi: 10.1016/j.cell.2015.10.063. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Song S, Miller KD, Abbott LF. Competitive Hebbian learning through spike-timing-dependent synaptic plasticity. Nat. Neurosci. 2000;3:919–26. doi: 10.1038/78829. [DOI] [PubMed] [Google Scholar]

- 17.Gerstner W, Kempter R, van Hemmen JL, Wagner H. A neuronal learning rule for sub-millisecond temporal coding. Nature. 1996;383:76–81. doi: 10.1038/383076a0. [DOI] [PubMed] [Google Scholar]

- 18.Morrison A, Diesmann M, Gerstner W. Phenomenological models of synaptic plasticity based on spike timing. Biol. Cybern. 2008;98:459–478. doi: 10.1007/s00422-008-0233-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Bi GQ, Poo MM. Synaptic modifications in cultured hippocampal neurons: dependence on spike timing, synaptic strength, and postsynaptic cell type. J. Neurosci. 1998;18:10464–10472. doi: 10.1523/JNEUROSCI.18-24-10464.1998. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Sjöström PJ, Turrigiano GG, Nelson SB. Rate, timing, and cooperativity jointly determine cortical synaptic plasticity. Neuron. 2001;32:1149–64. doi: 10.1016/S0896-6273(01)00542-6. [DOI] [PubMed] [Google Scholar]

- 21.Wang H-X, Gerkin RC, Nauen DW, Bi G-Q. Coactivation and timing-dependent integration of synaptic potentiation and depression. Nat. Neurosci. 2005;8:187–93. doi: 10.1038/nn1387. [DOI] [PubMed] [Google Scholar]

- 22.Fino E, Deniau J-M, Venance L. Cell-specific spike-timing-dependent plasticity in GABAergic and cholinergic interneurons in corticostriatal rat brain slices. J. Physiol. 2008;586:265–82. doi: 10.1113/jphysiol.2007.144501. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Fino E, Paille V, Deniau JM, Venance L. Asymmetric spike-timing dependent plasticity of striatal nitric oxide-synthase interneurons. Neuroscience. 2009;160:744–754. doi: 10.1016/j.neuroscience.2009.03.015. [DOI] [PubMed] [Google Scholar]

- 24.Feldman DE. The spike timing dependence of plasticity. Neuron. 2012;75:556–571. doi: 10.1016/j.neuron.2012.08.001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Froemke RC, Dan Y. Spike-timing-dependent synaptic modification induced by natural spike trains. Nature. 2002;416:433–438. doi: 10.1038/416433a. [DOI] [PubMed] [Google Scholar]

- 26.Froemke RC, Poo M-M, Dan Y. Spike-timing-dependent synaptic plasticity depends on dendritic location. Nature. 2005;434:221–5. doi: 10.1038/nature03366. [DOI] [PubMed] [Google Scholar]

- 27.Celikel T, Szostak VA, Feldman DE. Modulation of spike timing by sensory deprivation during induction of cortical map plasticity. Nat. Neurosci. 2004;7:534–541. doi: 10.1038/nn1222. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Mishra RK, Kim S, Guzman SJ, Jonas P. Symmetric spike timing-dependent plasticity at CA3-CA3 synapses optimizes storage and recall in autoassociative networks. Nat. Commun. 2016;7:11552. doi: 10.1038/ncomms11552. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Markram H, Lubke J, Frotscher M, Sakmann B. Regulation of synaptic efficacy by coincidence of postsynaptic APs and EPSPs. Science. 1997;275:213–215. doi: 10.1126/science.275.5297.213. [DOI] [PubMed] [Google Scholar]

- 30.Sjöström PJ, Häusser M. A cooperative switch determines the sign of synaptic plasticity in distal dendrites of neocortical pyramidal neurons. Neuron. 2006;51:227–38. doi: 10.1016/j.neuron.2006.06.017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Clopath C, Büsing L, Vasilaki E, Gerstner W. Connectivity reflects coding: a model of voltage-based STDP with homeostasis. Nat. Neurosci. 2010;13:344–352. doi: 10.1038/nn.2479. [DOI] [PubMed] [Google Scholar]

- 32.Zenke F, Agnes EJ, Gerstner W. Diverse synaptic plasticity mechanisms orchestrated to form and retrieve memories in spiking neural networks. Nat. Commun. 2015;6:6922. doi: 10.1038/ncomms7922. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Klampfl S, Maass W. Emergence of dynamic memory traces in cortical microcircuit models through STDP. J. Neurosci. 2013;33:11515–29. doi: 10.1523/JNEUROSCI.5044-12.2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.van Rossum MC, Bi GQ, Turrigiano GG. Stable Hebbian learning from spike timing-dependent plasticity. J. Neurosci. 2000;20:8812–21. doi: 10.1523/JNEUROSCI.20-23-08812.2000. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Gütig R, Aharonov R, Rotter S, Sompolinsky H. Learning input correlations through nonlinear temporally asymmetric Hebbian plasticity. J. Neurosci. Off. J. Soc. Neurosci. 2003;23:3697–3714. doi: 10.1523/JNEUROSCI.23-09-03697.2003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Buzsáki G. Neural Syntax: Cell Assemblies, Synapsembles, and Readers. Neuron. 2010;68:362–385. doi: 10.1016/j.neuron.2010.09.023. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Barbour B, Brunel N, Hakim V, Nadal JP. What can we learn from synaptic weight distributions? Trends Neurosci. 2007;30:622–629. doi: 10.1016/j.tins.2007.09.005. [DOI] [PubMed] [Google Scholar]

- 38.Toyoizumi T, Pfister J-P, Aihara K, Gerstner W. Generalized Bienenstock-Cooper-Munro rule for spiking neurons that maximizes information transmission. Proc. Natl. Acad. Sci. USA. 2005;102:5239–5244. doi: 10.1073/pnas.0500495102. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Standage, D. & Trappenberg, T. The Trouble with Weight-Dependent STDP. in 2007 International Joint Conference on Neural Networks 1348–1353, doi:10.1109/IJCNN.2007.4371154 (IEEE, 2007).

- 40.Fusi S, Abbott LF. Limits on the memory storage capacity of bounded synapses. Nat. Neurosci. 2007;10:485–493. doi: 10.1038/nn1859. [DOI] [PubMed] [Google Scholar]

- 41.Fusi S, Drew PJ, Abbott LF. Cascade models of synaptically stored memories. Neuron. 2005;45:599–611. doi: 10.1016/j.neuron.2005.02.001. [DOI] [PubMed] [Google Scholar]

- 42.Liu X, et al. Optogenetic stimulation of a hippocampal engram activates fear memory recall. Nature. 2012;484:381–385. doi: 10.1038/484410a. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Kim D, Paré D, Nair SS. Assignment of model amygdala neurons to the fear memory trace depends on competitive synaptic interactions. J. Neurosci. 2013;33:14354–14358. doi: 10.1523/JNEUROSCI.2430-13.2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Lucas EK, Jegarl AM, Morishita H, Clem RL. Multimodal and Site-Specific Plasticity of Amygdala Parvalbumin Interneurons after Fear Learning. Neuron. 2016;91:629–643. doi: 10.1016/j.neuron.2016.06.032. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Stefanelli T, Bertollini C, Lüscher C, Muller D, Mendez P. Hippocampal Somatostatin Interneurons Control the Size of Neuronal Memory Ensembles. Neuron. 2016;89:1074–1085. doi: 10.1016/j.neuron.2016.01.024. [DOI] [PubMed] [Google Scholar]

- 46.Stacey WC, Durand DM. Synaptic noise improves detection of subthreshold signals in hippocampal CA1 neurons. J. Neurophysiol. 2001;86:1104–1112. doi: 10.1152/jn.2001.86.3.1104. [DOI] [PubMed] [Google Scholar]

- 47.Penttonen M, Kamondi A, Acsády L, Buzsáki G. Gamma frequency oscillation in the hippocampus of the rat: Intracellular analysis in vivo. Eur. J. Neurosci. 1998;10:718–728. doi: 10.1046/j.1460-9568.1998.00096.x. [DOI] [PubMed] [Google Scholar]

- 48.Atallah BV, Scanziani M. Instantaneous Modulation of Gamma Oscillation Frequency by Balancing Excitation with Inhibition. Neuron. 2009;62:566–577. doi: 10.1016/j.neuron.2009.04.027. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Lundqvist M, et al. Gamma and Beta Bursts Underlie Working Memory. Neuron. 2015;90:152–164. doi: 10.1016/j.neuron.2016.02.028. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Turrigiano GG, Nelson SB. Homeostatic plasticity in the developing nervous system. Nat. Rev. Neurosci. 2004;5:97–107. doi: 10.1038/nrn1327. [DOI] [PubMed] [Google Scholar]

- 51.Seol GH, et al. Neuromodulators Control the Polarity of Spike-Timing-Dependent Synaptic Plasticity. Neuron. 2007;55:919–929. doi: 10.1016/j.neuron.2007.08.013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Huang S, et al. Pull-Push neuromodulation of LTP and LTD enables bidirectional experience-induced synaptic scaling in visual cortex. Neuron. 2012;73:497–510. doi: 10.1016/j.neuron.2011.11.023. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Yiu AP, et al. Neurons Are Recruited to a Memory Trace Based on Relative Neuronal Excitability Immediately before Training. Neuron. 2014;83:722–735. doi: 10.1016/j.neuron.2014.07.017. [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.