Abstract

Research ethics has gained renewed importance due to the changing scientific landscape and increasing demands and competition in academia. These changes are further exacerbated by scarce(r) resources in some countries, on the one hand, and by advances in genomics, on the other. In this paper, we highlight the challenges these developments pose to scientific integrity. To mark key developments in research ethics, we distinguish between what we call research ethics 1.0 and research ethics 2.0. Whereas research ethics 1.0 focuses on individual integrity and informed consent, research ethics 2.0 entails social scientific integrity within the broader perspective of a research network. This research network can be regarded as a network of responsibilities in which all stakeholders involved must jointly meet the ethical challenges posed to research.

Keywords: Research ethics, Scientific integrity, Research network, Network of responsibility

Introduction

Scarce resources and limited funding opportunities resulting from the global economic crisis have recently posed various challenges to research. Ethical conduct is once again in the spotlight, yet existing approaches to research ethics have so far failed to frame these challenges. It is widely accepted that researchers have an obligation towards their fellow researchers to provide accurate and reliable research. Fabricating, misrepresenting, or falsifying data reflects not only on the individual researcher or research group condoning these practices. It can also influence the results of other research and ultimately affect policy and people's lives. Upholding norms such as knowledge, truth, and the avoidance of error is a necessary condition for reliable and trustworthy interpersonal collaboration and reflects the social responsibility inherent in research.

Events in the 20th century, such as the Tuskegee study and research conducted under the Nazi regime, highlighted the extent to which ethics is crucial in research. Thereafter, the integrity of scientists was considered to be of key importance. Since then, the principles stated in the Belmont Report [1], namely respect for persons, beneficence, and justice, have guided research ethics. The development of guidelines for research conduct and the evolution of informed consent have also been described by Pelias [2]. The developments up to this stage, which mainly concerned the relationship between researcher and research subject, are what we refer to as research ethics 1.0.

Recently, research ethics has regained importance due to the changing scientific landscape and increasing demands and competition in academia. Specifically in genomic research, a highly complex field in terms of both data complexity and privacy concerns, scientists' integrity is key because highly sensitive data are involved that can affect the as-yet unknown future of individuals, including future generations. However, we can no longer focus solely on the individual researcher or research group. The scientific process is embedded in a complex network of stakeholders that need to be addressed more systematically. Meslin and Cho [3] analysed the existing “common set of ethical principles” in research ethics and proposed a reframing of the “social contract” between science and society, highlighting the need to focus on the broader context of research. With this paper, we extend the existing body of knowledge on the development and evolution of research ethics by highlighting current challenges to scientific integrity. To mark key developments in research ethics, we distinguish between what we call research ethics 1.0 and research ethics 2.0. Whereas research ethics 1.0 focuses on individual integrity and informed consent, research ethics 2.0 entails social scientific integrity within the broader perspective of a research network, as actors other than the researcher and the research subject increasingly move into the core of research ethics. The aim of this paper is thus to provide a comprehensive overview of recent challenges to research ethics that become evident through what we call the “research network” perspective. In this way, the research network serves as a framework through which changes and complexities in research ethics can be modelled and systematically conceptualised.

Research Ethics 1.0: Focussing on “Informed Consent”

A basic moral conflict in research ethics is the balance between the good of the individual and the good of the population. Certain medically oriented research questions that ultimately aim to benefit the population or future generations of patients can only be answered by involving individuals. Depending on the intervention, drug, or placebo under investigation, however, those individuals may take part in studies with uncertain outcomes. Two historic medical studies that failed to adhere to ethical standards exemplify the severe consequences. Although nearly every public health lecture and textbook in the Western world mentions these studies and their misconduct is widely known, they illustrate what shaped research ethics during the twentieth century. In these examples of scientific misconduct, research subjects were not treated as ends in themselves but were harmed for the benefit of others, while being inadequately informed (or not informed at all) about the nature of the research, or pressured to participate.

The Tuskegee study, which was initiated by the US Public Health Service in 1932, aimed to reveal the consequences of untreated syphilis [2]. Four hundred already infected African American men from Tuskegee, AL, were followed for four decades (from 1932 until 1972) in a purely observational study that continued even after effective penicillin treatment became available, and the subjects were not even informed about it. Treatment was only offered to the subjects after the study was publicly exposed in 1972 [4,5].

The other example that raised awareness of the rights of human research subjects is the study carried out at Willowbrook State School, New York, from 1956 until 1971, which intended to find a cure for infectious hepatitis. The school for “mentally retarded” children admitted new pupils only after their parents consented to place them in the hepatitis unit, where they were deliberately infected with the virus in order to “determine a prophylactic agent” [2,6].

Events like these laid the ground for ethical questions concerning research and the protection of study subjects. Responses to such immoral studies had already emerged after the biomedical experiments conducted under the Nazi regime. It turned out that although rules for the protection of human research subjects already existed in Nazi Germany, they were violated by Nazi doctors in their “research” [7]. In response to this misconduct, the Nuremberg Code was set forth during “The Nazi Doctors Trial” in 1947; it sets out ten principles for protecting research subjects, the key norms of which are summarised in Box 1. The Nuremberg Code can hence be regarded as the “foundation of modern protection of human rights” [2, p. 74].

Box 1.

The Nuremberg Code 1947 [2]

| No. | Norm |
|---|---|
| 1 | Human subjects must give voluntary, informed consent, without coercion or duress |
| 2 | Experiments with human subjects should be preceded by experiments with animals |
| 3 | Experiments should be justified by the anticipated results |
| 4 | Experiments should be conducted by qualified scientists |
| 5 | Experiments should avoid physical and mental suffering and injury |
| 6 | Experiments should not entail an expectation of death or disabling injury |

In 1964, the World Medical Association established the Declaration of Helsinki and has regularly updated it ever since. It became the gold standard for research involving human subjects. It was a further elaboration of the Nuremberg Code, paying attention to the distinction between therapeutic and non-therapeutic research and to responsibility towards vulnerable groups [8]. The Belmont Report, issued by the US National Commission for the Protection of Human Subjects of Biomedical and Behavioural Research in 1978, also served as a basis for several other codes of ethics through its three principles. Respect for persons emphasises the autonomy of research subjects and the protection of those with diminished autonomy; beneficence seeks to minimise risks and maximise possible benefits. Justice stresses the importance of distributing the chances and risks of research fairly and treating subjects in a just way. These principles are also in line with the four principles of biomedical ethics proposed by Beauchamp and Childress [9] in 1979, which have offered an influential normative framework ever since.

Research Ethics 2.0: New Perspectives on Norms, Values, and Integrity

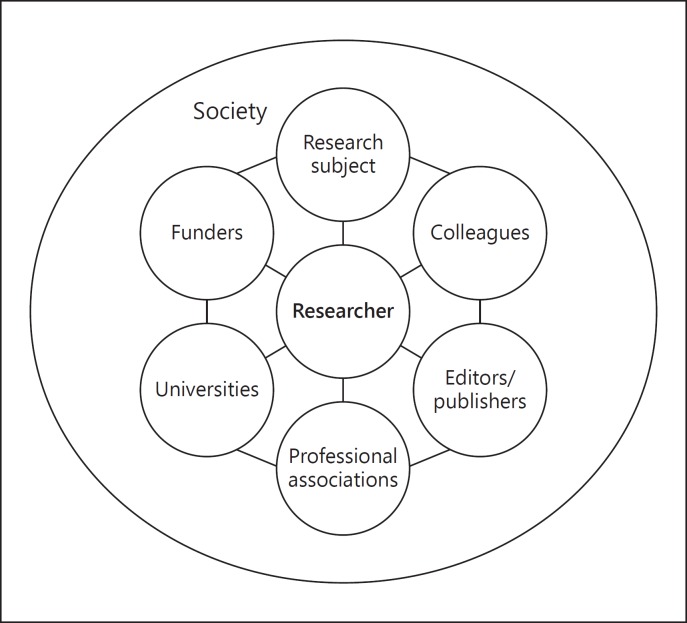

While research ethics during the 20th century was characterised by scrutinising behaviour mainly in the relationship between researcher and research subject, the 21st century has brought to the forefront further issues that go beyond this bilateral relationship. They span multiple levels and have diverse underlying causes. Even though these “new” issues may have existed before, they are becoming increasingly important due to the growing interdependence between stakeholders. In what follows, we describe these levels and the challenges they pose for various stakeholders, in what we call research ethics 2.0. Instead of focusing only on the relationship between researcher and research subject, research ethics 2.0 takes into account the broader social dimension of research, which we depict as a research network. We could also term it a “network of responsibilities,” since the researcher(s) stand in different relations of moral responsibility to other stakeholders and actors (Fig. 1). The stakeholders were identified through a literature review, followed by a grouping of the key stakeholders involved in the research realm. Even though we offer a researcher-centric view of the network, the relationships and mutual responsibilities between the other actors also play an influential role.

Fig. 1.

The research network as a network of responsibilities.

At the heart of the research process lies the researcher, who is influenced by several stakeholders and factors. Academic competition puts pressure on researchers. Funding opportunities, prestige, and recognition often depend on the number of articles published, the magnitude of the studies conducted, and the number of high-profile journals in which one has published. The difficulty of winning research funds is further exacerbated in times of austerity, while the pressure to publish ever more papers in order to obtain doctoral degrees and/or pursue research careers may create questionable incentives for inaccurate research or even wasted research effort. All these situations pose a challenge to researchers' scientific integrity. According to the Swiss Academy of Medical Sciences [10], scientific integrity can be defined as “the voluntary commitment by researchers to comply with the basic rules of good scientific practice: Honesty, self-criticism and fairness are essential for morally correct behaviour.” However, the pressure to meet these demands can lead researchers to engage in so-called “scientific misconduct,” which is defined as “fabrication, falsification, or plagiarism (FFP) in proposing, performing, or reviewing research, or in reporting research results” [11]. According to Bouter [12, p. 150], many variants of misconduct, or “questionable research practices,” have emerged in the grey zone between conducting research according to the rules and the three “deadly sins” of scientific integrity (FFP). He states that such behaviour may, in the best case, stem simply from unawareness of how research should be done or, in the worst case, from researchers knowingly engaging in wrongdoing for various reasons. Based on an empirical study of US researchers' behaviour with regard to integrity, Martinson et al. [13] argue that commonplace questionable research practices are a bigger threat to science than “high-profile misconduct cases such as fraud” (p. 737). Their survey of more than 3,000 early- and mid-career researchers in the US, covering ten common and six other research behaviours previously identified in focus group discussions, revealed that 33% of respondents admitted to having engaged in at least one of those behaviours. These range from falsifying, polishing, or “cooking” research data, to using others' ideas without obtaining permission or giving credit, to changing the design or results of a study in response to pressure from a funding source. Other questionable practices in this grey zone include not publishing disappointing study results, publishing the same data in several publications, selectively reporting results or withholding other details, and using inadequate research designs.

Behaviours shaped by social structures are also important to take into account. These include authorship questions, such as who can or should be an author, and the promotion (or lack thereof) of young scientists [14]. A lack of self-criticism among scientists, on the one hand, and the burden of multiple, potentially overwhelming obligations, on the other (including promoting young academics, acquiring funding, serving on review and university boards, publishing, and teaching), further facilitate misconduct when not every task can be carried out accurately. Additionally, questions arise about the just handling of research resources, and not only since the economic crisis; we return to these in the discussion of funders below.

Martinson et al. [13] rightly claim that current analyses focus only on researchers and their misconduct but fail to consider the broader research environment, including its structures, which often trigger researchers' misconduct through what Bouter [12] and Sandel [15], for instance, call “perverse incentives.”

Taking the broader research environment into consideration extends the focus to the research network. In the researcher-centric network of responsibilities (Fig. 1), the researcher is connected to all other stakeholders. Even though the research environment is vast and complex, we identify seven stakeholders besides the researcher: research subjects, colleagues, editors/publishers, professional associations, universities, funders, and society at large.

The classic relation to the research subject, already mentioned above, focuses on avoiding harm and properly informing the research subject. Especially in the context of genomic research, big data, and digitalisation, new challenges continuously arise, such as the right not to know, data protection, and privacy, all of which have to be embedded in considerations of informed consent. Current research, for example advances in gene editing, is pushing beyond affecting only the individual research subject and extends the knowledge gained, and its consequences, to genetically related persons and thus to future generations. Here, existing and traditional models of informed consent, such as opt-out, waiver, no consent, and open or categorical consent, need to be revised in order to meet the challenges posed. Incidental findings from genomic data, e.g. a predisposition to disease, furthermore raise the question of how to handle such information with regard to truthfulness and individual autonomy. Existing models should also be revised to protect the privacy of biological relatives.

It is also important to focus on the non-exploitation of research subjects. Especially in times of austerity, non-exploitation becomes relevant in studies where people become research subjects because of the incentives, often financial, that they receive [16]. Recruiting research subjects at the cost of exposing them to potentially adverse side effects of a study is morally unjustifiable, and not merely since the Tuskegee experiments and the Declaration of Helsinki. Some forms of exploitation continue to occur, often in low-income countries [17]. It is well established that in poor settings, such as underserved communities and countries, inequitable access to resources plays an important role in people's decisions to take part in research [18,19], and that in resource-poor settings, voluntary informed consent is undermined by financial incentives [16]. Taking part in research can also be a way to obtain better, or even basic, health care when health care services are otherwise not readily accessible. This applies, for instance, to early access to usually costly genomic treatments in research studies, where research subjects are often patients who wish to access and benefit from emerging treatments [20].

However, according to Chalmers and Glasziou [21], there is often a mismatch between research questions and the outcomes patients expect. Moreover, data become available too late, on average nine years later [21]. It must hence be a researcher's responsibility to ensure timely and unbiased publication of study findings and to match research questions to patients' expectations [21]. When these requirements are not met, research ends up as “waste,” which is estimated to account for as much as 85% of research [21].

In addition to research subjects, the researcher is related to his/her circle of colleagues, including those in the academic environment and collaborators from other public and private institutions. Here, it is important that the relationship is defined by the ethical principles of professional roles, such as honesty in sharing study results or newly developed concepts, and trust that this information will not be used without permission or adequate credit. Sharing confidential information, e.g. about participants who should remain anonymous, or failing to store study data securely so that other colleagues can access raw material, is also questionable, as it undermines the moral duty towards research subjects to disclose information only to participating researchers.

During the focus group discussions in the study by Martinson et al. [13], a concern was also raised about questionable relationships with students, research subjects, or clients. Concerning students, questions of authorship are a central ethical concern. While in Germany, at least, it used to be common (and still is in some disciplines) for students' theses to be published without their names in the author list, the opposite also often occurs: colleagues who want to accelerate their careers, or PhD students, are listed as authors on various publications to which they have contributed little or nothing at all. This practice distorts competition and must therefore be regarded as unfair.

On a related note, peer review, according to Bouter [12] the dominant form of quality assessment, often affects or is affected by relationships between colleagues. When assessing research proposals or manuscripts, objectivity is difficult to ensure. In some biomedical or niche disciplines, colleagues' work can be identified even when manuscripts are submitted anonymously. Rejecting competitors' manuscripts, or punishing colleagues with whom reviewers are in dispute, could be an easy, yet dishonest, way to advance one's own research career [22]. Lawrence [22] even reports from his experience as an editor that half of all submissions he received asked that the manuscript not be sent to certain reviewers, citing “conflicts of interest” and fear that confidential data and ideas would be misused. Lawrence, however, suggests that this could also be a convenient way to circumvent particularly critical or unfavourable reviewers. Reviewing for different journals can also impose a considerable workload on scientists that is not recognised or valued as part of their research or faculty-related work. Whether reviewers' evaluation work should be recognised, and how this could be operationalised, needs to be assessed and the possible options discussed. Transparency, anonymity, and disclosure are central issues to consider here [23].

Sharing study results and methods is another issue pertaining to the relationship with colleagues that is gaining increasing attention with advances in genomic research. By improving sharing between colleagues, research waste can be greatly reduced, as existing data can be reused and existing methods applied [24]. New research can then refer to and build on existing knowledge instead of duplicating research in new settings.

The search for the “right” reviewers is an issue editors deal with daily, and it leads us to the next stakeholder in the network of responsibilities: editors and publishers. Researchers often have a bias towards publishing in popular journals, as the probability of being cited is deemed to be higher and impact factors count for their careers. This system is morally questionable and leads to the dominance of certain journals, with which lesser-known journals cannot compete. Unfair competition among researchers is also evident in the long path to publication, which can take several years from submission to actual publication, depending on reviewers' availability and promptness and on editors' decisions about whether to publish and in which volume of their journal. Native English speakers also have a competitive advantage, since most journals publish in English, which poses a barrier to scientists from non-English-speaking countries. The responsibility of editors and publishers for ensuring progress in research is central, as they usually decide which research papers are commissioned and published, and thereby which research receives a platform for further communication and discussion. Here, the publication of negative results needs to be addressed: such results are often not seen as “successful” or interesting enough to publish, yet they advance knowledge and show other researchers which scientific approaches or experiments are not fruitful.

Editors and publishers have the power to put topics and theses on the agenda and hence to shape not only the academic context to a great extent but also society as a whole. Conversely, failing to focus on promising topics also has an impact. Their role thus entails a responsibility towards society to shape or push the research agenda in the “right” direction. They also have a responsibility towards research subjects, which they fulfil by checking that research ethics approval has been granted. However, many publishers and editors are themselves under pressure, facing what Richard Horton [25] of The Lancet describes as a “crisis” of publishing. They are experiencing a paradigm shift from a focus on the quality of publications to the quantity of publications issued by journals. With the emergence of so-called mega journals, which publish approximately 30,000 articles a year, motives other than scientific excellence are emphasised. These motives relate more to a high market share and associated benefits, such as “revenue growth, cost control, and profitability” [25, p. 322], even more so in times of economic hardship. For publishers and editors who used to function as gatekeepers of scientific publications based on excellence, this change has affected their core values and their decisions about what counts as science and relevant research. Moral questions about the purpose of scientific publications arise, reflecting or indicating a change in publishers' values. Instead of focusing only on volume and market share, Horton sees it as publishers' responsibility to focus on the added value of science to society. Hence, publishers' integrity in not pursuing profitability alone is key to developing science at a time when big datasets can easily provide material for all sorts of publications. The question remains, however, whether gatekeeping research for publication, with the aim of publishing only high-quality research, adversely affects transparency. Finding the right balance in this regard will remain a key challenge in the years to come.

Professional communities are often organised in professional associations, another stakeholder in the network of responsibilities. Professional associations pool their members' scientific experience and knowledge and can hence be intellectually supportive of their members. Prime responsibilities that professional associations should take on include developing guidelines for conducting research and establishing links between researchers to strengthen research. Yet promoting international collaboration through scholarships or travel grants may become more difficult for professional associations, as in some cases their budgets have been reduced in recent years due to unpaid membership fees. By steering the research of their scientific community in the direction they deem promising, professional associations take on a leadership role. However, as in every community, challenges can emerge from internal power struggles, personal disagreements, or differing values. Hence, defining a common value set can help to align motives and avoid conflicts of interest. In addition to their interdependencies with, and responsibilities towards, colleagues, professional associations are accountable to society and can and should aim to promote the added value of their research activities.

Universities, as institutional organisations within the research network, also subscribe to the aim of promoting the added value of research. Their role as institutions is to provide safe and fruitful working environments for researchers and their study results by ensuring proper data storage, upholding the anonymity of research subjects, and providing the necessary infrastructure to support research endeavours. In light of scarce resources, buying new technology or renovating existing buildings or offices remains challenging. Investment priorities should be set fairly and objectively so that standards are upheld and all departments within a university are treated equitably. To meet the obligation, or legal requirement, to regulate research by assessing its ethical implications, institutional review boards have to be established and research ethics training should be institutionalised. Moreover, universities often set incentives for research, which, however, must be designed fairly.

Funders have a crucial role within the network of responsibilities, as research in nearly all cases depends on the funding agencies involved. These funders vary widely: they may come from the public sphere, such as government, advocacy groups, or non-governmental, non-profit organisations, or from private corporations, industry being the most prominent. Each funder has various moral obligations, for example to be accountable for where its funding comes from and for what purpose it is offered. Academics who sit on advisory boards of industrial companies can easily get into conflicts of interest, which can result in exploiting either industry or university resources or the people working there [26]. Using public research funds to serve an industry's aim of expanding its for-profit product range is morally questionable in terms of justice. However, as austerity measures have heavily affected government budgets for research and development since 2008, private funding is becoming increasingly important and can yield new funding schemes, such as mutual funds or research bonds, as proposed by Moses and Dorsey [27]. There is evidence that austerity measures have negatively affected not only pharmaceutical growth [28] but also publicly funded independent clinical trials, which could not be carried out because funds had not been disbursed since 2012, as was the case in Italy [29]. The scheme from which this funding was generated was based on a 5% tax on pharmaceutical marketing, introduced in 2005. This type of revenue can be regarded as a fair means of redirecting excess profits from the pharmaceutical sector to usually underfunded public or independent research. Funders also have to respond to political interests or even “hypes” in certain research fields, where the demand for grants is strong. Acting on this demand can create inequalities between different fields of study [30]. Fewer funding opportunities have led to increased competition for EU grants. Specifically for resource-poor countries, EU grants are often an essential means of funding research and maintaining research infrastructure, e.g. in EU member states that were hit hard by the economic crisis. Along the same lines, continuing low levels of funding will create more uncertainty for young researchers, who may then prefer to seek careers in other, mostly private, sectors.

Here, the question arises whether the liberal approach of awarding grants competitively is still justifiable in times of austerity, or whether mechanisms with a stronger focus on solidarity should be introduced. In short, low levels of funding have adverse consequences for society in general, as biomedical and genomic research generates prosperity and other economic benefits by creating jobs and valuable products and methods [3,27].

Even if funds are available, further issues arise with regard to the estimated 85% of research that is wasted, as mentioned above [21], such as whether it is ethically justifiable to fund research that cannot be translated into practice or that aims to answer irrelevant or already answered research questions. The open science movement raises novel questions about how to handle raw data and study results funded by public resources. There are arguments for sharing data publicly through open access, which promotes access for, and thus the freedom of, lay persons, as well as arguments that such access might result in the misuse or misinterpretation of data. A thorough discussion of this issue, however, goes beyond the scope of this paper. The role of funders, in collaboration with other stakeholders such as researchers, universities, and society, is crucial in defining common standards for funding practices.

With regard to society – our last stakeholder – new challenges have to be met in times where amounts of data increase rapidly and funding for research is scarce. Society's role in research increased in the last years due to the fact that advocacy groups, e.g. patient advocacy groups, and community engagement actions were paid more regard to in defining research priorities on the political level [31]. By this engagement, societal values became part of the scientific undertaking. Resnik argues that even though science is deemed objective, evidence-based, and “value-free,” it nevertheless involves researchers' epistemic and non-epistemic values [32]. Being aware and transparent about those values is central to improving the perception of science in society and strengthening their link. According to Meslin and Cho [3], research during the era of personalised medicine needs an update on what they call “social contract between science and society.” Hitherto, research was based on the precautionary principle as well as non-maleficence and protectionism, which all resulted in a request for more regulation and requirements of researchers and delineates a lack of trust in the latter. This lack of trust is also noticeable in the unsteady support to scientists by society, when support comes easily if results are progressive, but can be withdrawn rapidly when researchers are deemed to be driven by personal or industry-related motives [3]. Due to the changing landscape in research following novelties in genomic understanding and big data availability, such lack of trust can hinder advances tremendously. Therefore, a new framework has to be established, which should still root in avoiding harm, but moreover provides scientists with the necessary trust to conduct research freely, however with the best interest for society's needs and values. Hence, the social contract between scientists and society is increasingly based on integrity of scientists. 
Within their “recipe for reciprocity,” Meslin and Cho propose four ways in which researchers can demonstrate their integrity to society, namely by “(1) a clear articulation of goals and visions of what constitutes benefit, without overstatement of benefit, (2) a commitment to achieving these goals over the pursuit of individual interests, (3) greater transparency, and (4) involvement of the public in the scientific process” [3, p. 379]. In return, society would offer “(1) trust in the process and goals of science, (2) a greater willingness to volunteer to participate in research, (3) sustained, reliable funding, and (4) support for greater academic freedom, free from manipulation by political goals or ideology” [3, p. 379].

Besides emphasising values or principles for researchers to act upon, Meslin and Cho propose greater inclusion of society in science. Citizen science, a relatively new term for a rather old approach, involves laypersons as volunteers in research projects, e.g. in data collection, recruitment of research subjects, or communicating results to society. Besides assistance with data collection, its advantages include better insight for volunteers into science and scientific methods and the opportunity to engage with issues that are relevant to them and their environment [33]. However, ethical concerns arise with regard to data integrity and sharing, intellectual property and authorship rights, responsible oversight and training of volunteers, and exploitation [34].

For scientists, strengthening their commitment to public benefit will contribute to their integrity and foster valuable relationships with society that are better placed to meet society's needs.

Concluding Remarks

Research practice and the researchers' environment have changed in recent years. Economic pressure in times of austerity and genomic advances can be seen as two drivers of research ethics 2.0. The network of responsibilities described above shows the many, and growing, ethical issues that researchers and other stakeholders face today. The integrity of research, which implies adherence “to the basic rules of good scientific practice (such as) honesty and sincerity, self-discipline, self-criticism, and fairness” [10], is challenged at diverse levels. Our claim is that focusing on research ethics and integrity at these various levels and taking all stakeholders into account can make research better, more truthful, and thereby more socially acceptable. Social acceptability matters because it influences support for research in general. As Gunn pointed out in an editorial in 1917, scientific misconduct, waste, or sloppy methods can undermine the integrity of “the whole public health movement” and harm human welfare [35]. Although this statement was made a century ago, it still holds and is even more pertinent with regard to genomic research.

In some cases, integrity might hinder research, for instance when concerns about conflicts of interest take precedence over available funding. In other cases, it promotes research, e.g. when professional associations engage in research collaborations. Overall, however, applying ethics and moral values enhances research and is thus not only a means to an end but also a necessary end in itself. Moral values can therefore be regarded as drivers of science, as they provide accountability to society and, in turn, secure public trust in science. What is new about the recent challenges mentioned is that research ethics can no longer focus solely on the relationship between researcher and subject [36]. All stakeholders within the broader research field form a network of mutual responsibilities, rights, and duties.

It is crucial to strengthen integrity in the years to come. A promising way to do this is through education. Bouter [12] suggests implementing education and training in scientific integrity for PhD students as well as for permanent academic staff. This could be done not only through lectures but, more profoundly, through proven didactic methods such as group work and case studies [37]. Moreover, existing policies should be reframed on the basis of the network of responsibilities outlined here in order to provide better guidance for the various stakeholders involved. For example, such policies could better protect research subjects or involve volunteers through citizen science, especially for research in times of scarce resources.

The field of research ethics, however, is still developing, and more and more aspects of research collaboration are being discussed. Many issues therefore remain to be identified. The account provided in this paper does not aim to be comprehensive. Rather, it is a heuristic attempt to involve all stakeholders concerned within a network approach. It extends the classic bilateral relationship between researcher and research subjects to a network of responsibilities and aims to specify some of the challenges at hand. Given the many developments ahead, continued discussion is needed on how ethic(ist)s can support research. Central topics and challenges in genomic research that require further ethical assessment include data sharing and the use of existing data, open science concepts, and the role of science in the age of digitalisation.

Disclosure Statement

The authors have no disclosures to declare.

Acknowledgements

We thank two anonymous reviewers for their valuable input and remarks.

References

- 1. The National Commission for the Protection of Human Subjects of Biomedical and Behavioral Research. The Belmont Report: Ethical Principles and Guidelines for the Protection of Human Subjects of Research. Washington: US Government Printing Office; 1978.

- 2. Pelias MK. Human subjects, third parties, and informed consent: a brief historical perspective of developments in the United States. Community Genet. 2006;9:73–77. doi: 10.1159/000091483.

- 3. Meslin EM, Cho MK. Research ethics in the era of personalized medicine: updating science's contract with society. Public Health Genomics. 2010;13:378–384. doi: 10.1159/000319473.

- 4. Brasel KJ. Research ethics primer. J Surg Res. 2005;128:221–225. doi: 10.1016/j.jss.2005.07.003.

- 5. Jones J. Bad Blood: The Tuskegee Syphilis Experiment. New York: Free Press; 1993.

- 6. Goldman L. The Willowbrook debate. World Med. 1971;7:23–25.

- 7. Sass HM. Reichsrundschreiben 1931: pre-Nuremberg German regulations concerning new therapy and human experimentation. J Med Philos. 1983;8:99–111. doi: 10.1093/jmp/8.2.99.

- 8. Schüklenk U, Ashcroft R. International research ethics. Bioethics. 2000;14:158–172. doi: 10.1111/1467-8519.00187.

- 9. Beauchamp TL, Childress JF. Principles of Biomedical Ethics. 7th ed. New York: Oxford University Press; 2013.

- 10. Swiss Academy of Medical Sciences. Research Ethics. 2016. http://www.samw.ch/en/Ethics/Research-ethics.html

- 11. OSTP. Federal Policy on Research Misconduct. 2005. http://www.ostp.gov/html/001207_3.html

- 12. Bouter LM. Commentary: perverse incentives or rotten apples? Account Res. 2015;22:148–161. doi: 10.1080/08989621.2014.950253.

- 13. Martinson BC, Anderson MS, de Vries R. Scientists behaving badly. Nature. 2005;435:737–738. doi: 10.1038/435737a.

- 14. Tijdink JK, Schipper K, Bouter LM, Maclaine Pont P, de Jonge J, Smulders YM. How do scientists perceive the current publication culture? A qualitative focus group interview study among Dutch biomedical researchers. BMJ Open. 2016;6:e008681. doi: 10.1136/bmjopen-2015-008681.

- 15. Sandel MJ. What Money Can't Buy: The Moral Limits of Markets. New York: Farrar, Straus and Giroux; 2012.

- 16. Abadie R. The “mild-torture economy”: exploring the world of professional research subjects and its ethical implications. Rev Saúde Coletiva. 2015;25:709–728.

- 17. Nuffield Council on Bioethics. The Ethics of Research Related to Healthcare in Developing Countries. London: Nuffield Council on Bioethics; 2002.

- 18. Lynoe N, Ziauddin H. Obtaining informed consent in Bangladesh. N Engl J Med. 2001;344:460–461. doi: 10.1056/NEJM200102083440617.

- 19. Sreenivasan G. Does informed consent to research require comprehension? Lancet. 2003;362:2016–2018. doi: 10.1016/S0140-6736(03)15025-8.

- 20. Makhoul J, Shaito Z. Completing the picture: research participants' experiences of biomedical research. Eur J Public Health. 2016;26(suppl 1):ckw164.004.

- 21. Chalmers I, Glasziou P. Avoidable waste in the production and reporting of research evidence. Lancet. 2009;374:86–89. doi: 10.1016/S0140-6736(09)60329-9.

- 22. Lawrence P. The politics of publication. Nature. 2003;422:259–261. doi: 10.1038/422259a.

- 23. Resnik DB. Scientific misconduct and research integrity. In: ten Have HAMJ, Gordijn B, editors. Handbook of Global Bioethics. Dordrecht: Springer; 2014. pp. 799–810.

- 24. Moher D, Glasziou P, Chalmers I, Nasser M, Bossuyt PMM, Korevaar DA, et al. Increasing value and reducing waste in biomedical research: who's listening? Lancet. 2016;387:1573–1586. doi: 10.1016/S0140-6736(15)00307-4.

- 25. Horton R. Offline: what is the point of scientific publishing? Lancet. 2015;385:1166.

- 26. Spier RE. Ethics and the funding of research and development at universities. Sci Eng Ethics. 1998;4:375–384.

- 27. Moses H, Dorsey ER. Biomedical research in an age of austerity. JAMA. 2012;308:2341. doi: 10.1001/jama.2012.14846.

- 28. Sharma D, Martini LG. Austerity versus growth: the impact of the current financial crisis on pharmaceutical innovation. Int J Pharm. 2013;443:242–244. doi: 10.1016/j.ijpharm.2012.12.022.

- 29. Turone F. Italy's austerity measures have led to delays in allocating research funds. BMJ. 2015;350:h785. doi: 10.1136/bmj.h785.

- 30. Master Z, Resnik DB. Hype and public trust in science. Sci Eng Ethics. 2013;19:321–335. doi: 10.1007/s11948-011-9327-6.

- 31. Landeweerd L, Townend D, Mesman J, Van Hoyweghen I. Reflections on different governance styles in regulating science: a contribution to “Responsible Research and Innovation.” Life Sci Soc Policy. 2015;11:8. doi: 10.1186/s40504-015-0026-y.

- 32. Resnik DB, Elliott KC. The ethical challenges of socially responsible science. Account Res. 2016;23:31–46. doi: 10.1080/08989621.2014.1002608.

- 33. Riesch H, Potter C. Citizen science as seen by scientists: methodological, epistemological and ethical dimensions. Public Underst Sci. 2014;23:107–120. doi: 10.1177/0963662513497324.

- 34. Resnik DB, Elliott KC, Miller AK. A framework for addressing ethical issues in citizen science. Environ Sci Policy. 2015;54:475–481.

- 35. Gunn SM. Editorial. Am J Public Health. 1917;7:40–41.

- 36. Schröder-Bäck P. Ethical aspects of public health research: individual, corporate and social perspectives. Eur J Public Health. 2015;25:41.

- 37. Aceijas C, Brall C, Schröder-Bäck P, Otok R, Maeckelberghe E, Stjernberg L, Strech D, Tulchinsky T. Teaching ethics in schools of public health in the European region: findings from a screening survey. Public Health Rev. 2012;34:146–155.