Abstract

Objective

To assess the utility and cost of using routinely collected inpatient data for large-scale audit.

Design

Comparison of audit data items collected nationally in a designed audit of inflammatory bowel disease (UK IBD audit) with routinely collected inpatient data; surveys of audit sites to compare costs.

Setting

National Health Service hospitals across England, Wales and Northern Ireland that participated in the UK IBD audit.

Patients

Patients in the UK IBD audit.

Interventions

None.

Main outcome measures

Percentage agreement between designed audit data items collected for the UK IBD audit and routine inpatient data items; costs of conducting the designed UK IBD audit and the routine data audit.

Results

There were very high matching rates between the designed audit data and routine data for a small subset of basic important information collected in the UK IBD audit, including mortality; major surgery; dates of admission, surgery, discharge and death; principal diagnoses; and sociodemographic patient characteristics. There were lower matching rates for other items, including source of admission, primary reason for admission, most comorbidities, colonoscopy and sigmoidoscopy. Routine data did not cover most detailed information collected in the UK IBD audit. Using routine data was much less costly than collecting designed audit data.

Conclusion

Although valuable for large population-based studies, and less costly than designed data, routine inpatient data are not suitable for the evaluation of individual patient care within a designed audit.

Background

The quality of care for people with inflammatory bowel disease (IBD) has been the subject of two recent national audits,1 2 and a third is in progress. Measurement of the quality of care through designed audit requires extensive time and resources for data collection. Nevertheless, as guidelines for the management of care have multiplied, the number of audits undertaken across the UK has increased in recent years. At the same time, substantial amounts of administrative data are collected routinely on hospital admissions (for both inpatients and day cases). It is unclear, though, how far these routinely collected data might be used for large-scale national audit, and whether this would be an effective method of auditing quality of care, as a pointer for in-depth local audit.

For the first and second national IBD audits,1 2 all hospitals in the UK were invited to submit data extracted from hospital records on 20 consecutive admissions with ulcerative colitis (UC) and 20 with Crohn's disease (CD). Following these audits, a working group of professional organisations has published recommended national standards for IBD care.3 These audits have provided us with an opportunity to assess how far the audit can be replicated using central returns, what the costs of this alternative method would be and whether it might provide an effective way of conducting prospective, sustainable, national audit as a high-level measure of the quality of care.

The aim of this study was to assess the potential for routinely collected data to support a national audit of the management of inpatients with IBD. The main objectives were to compare the data collected in the designed UK IBD audit with routinely collected inpatient data, and to compare the costs of the two methods of audit.

Methods

We used the first UK IBD audit as the basis for comparison with routine data. All district general and teaching hospitals in the UK were invited to participate, and the audit covered retrospective data collection for a median of 19 patients per site who were hospitalised with CD or UC in 2006. We obtained the full dataset for the first UK IBD audit and corresponding administrative inpatient data for Wales (Patient Episode Database for Wales),4 England (Hospital Episode Statistics (HES))5 and Northern Ireland (Hospital Inpatient System).6

Matching patient cases across the UK IBD audit and routine data

We used the following anonymised patient identifiers to match patient records from the UK IBD audit with the corresponding routine data: date of admission, year of birth, hospital, sex and specified principal diagnosis. As both the routine data and the designed audit data sometimes contained errors for individual patient identifiers, we incorporated error margins when matching records in order to increase the likelihood of ‘true-positive’ matches while, at the same time, not inflating the number of ‘false-positive’ matches.

These error margins were as follows. For date of admission, we specified an admission date of plus or minus 3 days, as there were some discrepancies of up to 3 days for admissions that occurred around weekends. For location we matched first using hospital codes, then by trust, as we found that for a substantial minority of hospitals in England and a few in Wales, the hospital trust rather than the admitting hospital was specified in the routine data.

For age, we incorporated an error margin of 1 year either side of the year of birth, as the UK IBD audit collected only the year of birth, whereas the routine data provided the age of the patient. For principal diagnosis, we found that in some cases a more general diagnosis of IBD rather than CD or UC had been specified or coded in the routine data, or that CD, UC or IBD had been recorded as a secondary diagnosis. Therefore, to minimise the risk of ‘false-negative’ matches, we matched first on diagnoses of CD or UC, and second on IBD in any position on the inpatient record.
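The matching rules above can be sketched as follows. This is a minimal illustration, not the study's implementation: the record classes, field names and the rule for ranking candidates (location tier before diagnosis tier) are assumptions, as is treating any spell in the same trust as a second-tier location match.

```python
from dataclasses import dataclass
from datetime import date
from typing import Iterable, Optional, Tuple


@dataclass(frozen=True)
class AuditCase:
    admitted: date
    birth_year: int
    hospital: str
    trust: str
    sex: str
    diagnosis: str  # "CD" or "UC"


@dataclass(frozen=True)
class RoutineSpell:
    admitted: date
    age: int                 # age in years at admission
    hospital: Optional[str]  # None when only the trust was recorded
    trust: str
    sex: str
    codes: Tuple[str, ...]   # ICD-10 codes, principal diagnosis first


PRINCIPAL_STEM = {"CD": "K50", "UC": "K51"}
IBD_STEMS = ("K50", "K51", "K52")


def within_margins(case: AuditCase, spell: RoutineSpell) -> bool:
    """Sex must agree; admission date within 3 days; birth year within 1 year."""
    if case.sex != spell.sex:
        return False
    if abs((case.admitted - spell.admitted).days) > 3:
        return False
    implied_birth_year = spell.admitted.year - spell.age
    return abs(implied_birth_year - case.birth_year) <= 1


def location_tier(case: AuditCase, spell: RoutineSpell) -> Optional[int]:
    """1 = same hospital code, 2 = same trust, None = no location match."""
    if spell.hospital is not None and spell.hospital == case.hospital:
        return 1
    if spell.trust == case.trust:
        return 2
    return None


def diagnosis_tier(case: AuditCase, spell: RoutineSpell) -> Optional[int]:
    """1 = principal diagnosis is the audited CD/UC, 2 = IBD in any position."""
    if spell.codes and spell.codes[0].startswith(PRINCIPAL_STEM[case.diagnosis]):
        return 1
    if any(code.startswith(IBD_STEMS) for code in spell.codes):
        return 2
    return None


def best_match(case: AuditCase, spells: Iterable[RoutineSpell]) -> Optional[RoutineSpell]:
    """Return the candidate with the lowest (location, diagnosis) tier, if any."""
    candidates = []
    for spell in spells:
        if not within_margins(case, spell):
            continue
        loc, dx = location_tier(case, spell), diagnosis_tier(case, spell)
        if loc is None or dx is None:
            continue
        candidates.append(((loc, dx), spell))
    if not candidates:
        return None
    return min(candidates, key=lambda item: item[0])[1]
```

Widening any margin raises the chance of recovering a true match but also admits more candidates per case, which is the trade-off between true-positive and false-positive matches described above.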

When the above criteria were used, matching rates for CD were 89% of cases for both Wales and Northern Ireland, and 65% for England. For UC, the corresponding rates were 90% for Wales, 84% for Northern Ireland and 64% for England. As the shortfall in matching in England and Wales was attributable largely to a minority of hospitals, we excluded hospitals in which ≤40% of cases were successfully matched. After this exclusion, matching rates for CD and UC, respectively, were 92% and 96% in Wales, 91% and 91% in England and 89% and 84% in Northern Ireland.
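The exclusion step can be illustrated with a short sketch. The input shape (a list of hospital and matched-flag pairs) and the function names are assumptions made for illustration; only the ≤40% threshold comes from the text.

```python
from collections import Counter


def match_rates_by_hospital(cases):
    """cases: iterable of (hospital, matched) pairs; returns hospital -> match rate."""
    totals, hits = Counter(), Counter()
    for hospital, matched in cases:
        totals[hospital] += 1
        hits[hospital] += int(matched)
    return {hospital: hits[hospital] / totals[hospital] for hospital in totals}


def overall_rate_after_exclusion(cases, threshold=0.40):
    """Overall match rate after dropping hospitals whose own rate is <= threshold."""
    cases = list(cases)
    rates = match_rates_by_hospital(cases)
    kept = [matched for hospital, matched in cases if rates[hospital] > threshold]
    return sum(kept) / len(kept) if kept else None
```

Because the excluded hospitals are by construction those that matched poorly, the overall rate can only rise after exclusion, so the post-exclusion figures describe hospitals where matching worked rather than all participating hospitals.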

The International Classification of Diseases (ICD-10) codes used for CD, UC and IBD were, respectively, K50, K51 and K50–K52. Episodes of care were record linked to generate inpatient spells and diagnostic codes were based on diagnoses recorded across the inpatient admissions. Details of the data items in HES that were used to compare the UK IBD audit and the administrative inpatient data are provided in the footnotes to table 1.
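As an illustration of the record linkage described here, the sketch below merges episodes into spells and labels each spell from its pooled ICD-10 codes. The linkage rule (same patient, next episode starting on or before the previous episode's end date) and the dictionary keys are assumptions, since the exact rule used in the study is not stated.

```python
from datetime import date


def link_episodes_into_spells(episodes):
    """Merge contiguous episodes for the same patient and pool their codes.

    episodes: list of dicts with keys patient_id, start, end, codes.
    """
    spells = []
    ordered = sorted(episodes, key=lambda ep: (ep["patient_id"], ep["start"]))
    for ep in ordered:
        last = spells[-1] if spells else None
        same_spell = (
            last is not None
            and last["patient_id"] == ep["patient_id"]
            and ep["start"] <= last["end"]
        )
        if same_spell:
            last["end"] = max(last["end"], ep["end"])
            for code in ep["codes"]:
                if code not in last["codes"]:
                    last["codes"].append(code)
        else:
            spells.append({
                "patient_id": ep["patient_id"],
                "start": ep["start"],
                "end": ep["end"],
                "codes": list(ep["codes"]),
            })
    return spells


def ibd_labels(codes):
    """Label a spell using K50 (CD), K51 (UC) and K50-K52 (IBD)."""
    stems = {code[:3].upper() for code in codes}
    labels = set()
    if "K50" in stems:
        labels.add("CD")
    if "K51" in stems:
        labels.add("UC")
    if stems & {"K50", "K51", "K52"}:
        labels.add("IBD")
    return labels
```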

Table 1.

Numbers of cases in the UK IBD audit in which the data items were recorded and percentage of cases in which the data items were matched in the routine inpatient data

| UK IBD audit data item* | England CD, cases (N) | England CD, % matches | England UC, cases (N) | England UC, % matches | Wales CD, cases (N) | Wales CD, % matches | Wales UC, cases (N) | Wales UC, % matches | Northern Ireland CD, cases (N) | Northern Ireland CD, % matches | Northern Ireland UC, cases (N) | Northern Ireland UC, % matches |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Primary reason for admission | 1491 | 74 | 1401 | 84 | 167 | 77 | 148 | 91 | 141 | 77 | 133 | 83 |
| Source of admission | 1141 | 75 | 1341 | 74 | 138 | 79 | 145 | 87 | 115 | 77 | 130 | 75 |
| Specialty of care in first 24 h | 1182 | 53 | 1085 | 53 | 140 | 48 | 128 | 50 | 117 | 66 | 116 | 65 |
| Mortality during admission | 1491 | 100 | 1401 | 100 | 167 | 100 | 148 | 100 | 141 | 99 | 132 | 99 |
| Date of death (±3 days)† | 17 | 94 | 31 | 84 | 3 | 33 | 2 | 0 | 1 | | | |
| Date of discharge (±3 days) | 1491 | 89 | 1353 | 89 | 165 | 93 | 146 | 90 | 141 | 95 | 132 | 90 |
| Mortality after discharge | 1443 | 98 | 1334 | 98 | 163 | 97 | 139 | 97 | NA | NA | NA | NA |
| Major comorbidities recorded | 285 | 28 | 392 | 38 | 34 | 47 | 42 | 50 | 23 | 22 | 41 | 27 |
| Diabetes† | 33 | 70 | 85 | 80 | 6 | 100 | 10 | 100 | 2 | 50 | 10 | 50 |
| Ischaemic heart disease† | 81 | 32 | 139 | 38 | 12 | 58 | 13 | 62 | 8 | 25 | 13 | 46 |
| COPD† | 103 | 14 | 103 | 19 | 12 | 0 | 10 | 20 | 10 | 10 | 11 | 0 |
| Renal failure† | 18 | 50 | 7 | 57 | 1 | 100 | 1 | 100 | 0 | | 2 | 0 |
| Stroke† | 18 | 6 | 26 | 4 | 1 | 100 | 4 | 0 | 1 | 100 | 4 | 0 |
| Liver disease† | 4 | 21 | 20 | 10 | 0 | | 2 | 0 | 0 | | 0 | |
| Peripheral vascular disease† | 8 | 13 | 10 | 10 | 2 | 50 | 1 | 0 | 2 | 0 | 0 | |
| Sigmoidoscopy/colonoscopy | NA | NA | 672 | 70 | NA | NA | 73 | 67 | NA | NA | 58 | 48 |
| Date of sigmoidoscopy/colonoscopy (±3 days) | NA | NA | 223 | 71 | NA | NA | 50 | 26 | NA | NA | 58 | 22 |
| Surgery performed‡ | 1491 | 82 | 1195 | 96 | 167 | 84 | 129 | 96 | 141 | 92 | 117 | 91 |
| Date of surgery (±3 days) | 320 | 96 | 356 | 92 | 42 | 95 | 46 | 85 | 30 | 93 | 35 | 60 |
| Specialism of the operating surgeon | 572 | 8 | 350 | 9 | 69 | 12 | 46 | 2 | 41 | 15 | 35 | 11 |
| Indications for surgery | 536 | 0 | 349 | 0 | 62 | 0 | 43 | 0 | 0 | 35 | 0 | |
| Type of surgical intervention‡ | 699 | 22 | 460 | 44 | 80 | 20 | 58 | 52 | 44 | 7 | 36 | 53 |
| Postsurgical complications† | 137 | 0 | 148 | 0 | 75 | 0 | 8 | 50 | 8 | 0 | 8 | 0 |

*The data items used in the routine inpatient data for England (Hospital Episode Statistics) were as follows: primary reason for admission (method of admission); source of admission (source of admission); specialty of care in first 24 h (specialty responsible for the first 24 h of care); specialism of surgeon (specialty in which the consultant was working during the episode of surgery); mortality during admission, date of death, mortality after discharge (date of death and method of discharge); date of discharge (date of discharge); major comorbidities, indications for surgery, postsurgical complications (all diagnosis codes); sigmoidoscopy/colonoscopy, type of surgical intervention, surgery performed (all operation codes); date of sigmoidoscopy, date of surgery (date of operation). The OPCS-4 codes used for sigmoidoscopy/colonoscopy were H22, H25, H28 and for surgery (colectomy) were H04–H11, H33. The ICD-10 codes used for comorbidities were as follows: diabetes (E10–E14), ischaemic heart disease (I20–I25), COPD (J41–J44), renal failure (N17–N19), stroke (I61–I69), liver disease (K70–K77) and peripheral vascular disease (I70–I74).

†Figures for these audit data items are sometimes based on very low numbers of cases.

‡The relatively low percentage match for CD, compared with that for UC, arises because patients with Crohn's disease were more likely to have surgery other than colectomy (elsewhere along the gastrointestinal tract rather than on the colon), and colectomy was used as the basis for these comparisons.

CD, Crohn's disease; COPD, chronic obstructive pulmonary disease; ICD-10, International Classification of Diseases; NA, data items were not collected; UC, ulcerative colitis.
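The comorbidity comparison in table 1 can be sketched as below, using the ICD-10 ranges listed in the footnote. The function names, the input shapes and the choice to compare three-character code stems are assumptions for illustration; a percentage is computed only over cases in which the audit recorded the comorbidity, mirroring the table's "Cases (N)" denominator.

```python
# ICD-10 three-character ranges taken from the footnote to table 1.
COMORBIDITY_RANGES = {
    "diabetes": ("E10", "E14"),
    "ischaemic heart disease": ("I20", "I25"),
    "COPD": ("J41", "J44"),
    "renal failure": ("N17", "N19"),
    "stroke": ("I61", "I69"),
    "liver disease": ("K70", "K77"),
    "peripheral vascular disease": ("I70", "I74"),
}


def has_comorbidity(codes, name):
    """True if any diagnosis code on the record falls in the named range."""
    low, high = COMORBIDITY_RANGES[name]
    return any(low <= code[:3].upper() <= high for code in codes)


def percent_matched(audit_case_ids, routine_codes, name):
    """Percentage of audit-recorded cases also flagged in the routine data.

    audit_case_ids: ids of cases in which the audit recorded the comorbidity.
    routine_codes: dict mapping case id -> diagnosis codes on the matched record.
    """
    if not audit_case_ids:
        return None  # no denominator, so no percentage
    hits = sum(
        has_comorbidity(routine_codes.get(case_id, ()), name)
        for case_id in audit_case_ids
    )
    return round(100 * hits / len(audit_case_ids))
```

With denominators as small as one or two cases, a single coded or uncoded record moves the percentage between 0 and 100, which is why several comorbidity rows in table 1 should be read with caution.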

Cost comparison

The second UK IBD audit, conducted during 2008, was used to estimate the cost of providing designed national audit data. This exercise was limited to staff costs, because of expected difficulties encountered by trusts in estimating non-staff resources used in audit activities. Previous studies, however, have shown that non-staff costs represent only a small percentage of total audit costs.7 8

A questionnaire was sent by email to all local audit leads, asking them to identify all staff who contributed to the audit, to provide details of the title and grade of each contributing staff member and to estimate the time spent by each contributor on any aspect of audit activity. Responses were requested at trust level and anonymity was assured.

Where staff grades were provided, hourly rates including on-costs and overheads were taken from Curtis.9 Where job titles were provided without an associated grade, a search was made on the online National Health Service (NHS) job website,10 to identify advertised posts which most closely resembled the stated job title. Hourly rates were assigned to these posts by matching them with the nearest salary band in Curtis.9
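The costing steps can be sketched as follows. The band labels, salaries and hourly rates below are made-up placeholders and are not taken from Curtis; the function names and the nearest-salary rule for assigning a band are likewise assumptions that only illustrate the approach described.

```python
# Placeholder figures only: (band label, annual salary, hourly rate including
# on-costs and overheads). These are NOT the published unit costs.
PLACEHOLDER_BANDS = [
    ("band A", 20000, 18.0),
    ("band B", 30000, 27.0),
    ("band C", 45000, 40.0),
]


def nearest_band(advertised_salary):
    """Pick the band whose salary is closest to an advertised post's salary."""
    return min(PLACEHOLDER_BANDS, key=lambda band: abs(band[1] - advertised_salary))


def trust_staff_cost(contributions):
    """contributions: list of (hours, hourly_rate) pairs for one trust."""
    return sum(hours * rate for hours, rate in contributions)


def cost_per_patient(total_cost, patients_submitted):
    """Staff cost divided by the number of patients submitted to the audit."""
    return total_cost / patients_submitted
```

For example, a trust reporting 10 hours at the placeholder band B rate and 5 hours at the band C rate would be costed with `trust_staff_cost([(10, 27.0), (5, 40.0)])`.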

Unlike the designed audit, the routine data replication required start-up costs such as capital equipment, preparatory work and staff training. Therefore, two separate analyses of the costs of the routine data replication are provided. The first analysis shows costs as they were incurred in the study. The second analysis reports projected costs of running subsequent routine data audits assuming that no additional staff training is required and that other resources required are already in place. For further details of the methods used for the costing of the routine data audit see the footnotes to table 2.

Table 2.

Costs of audit based on routine inpatient data

| Cost* | Notes | £ |
|---|---|---|
| Recorded costs for initial audit | | |
| Labour (managerial) | Managing and overseeing audit tasks: (4 h/week × 14 weeks) = 56 h @ £50.33 = £2818.48 | 2818.48 |
| Labour (audit) | Training: (2 weeks @ 7.5 h/day, 5 days/week) 75 h @ £26.96 = £2022.00 | 18 198.00 |
| | Developing audit criteria: (2 weeks @ 7.5 h/day, 5 days/week) 75 h @ £26.96 = £2022.00 | |
| | Audit: (14 weeks @ 7.5 h/day, 5 days/week) 525 h @ £26.96 = £14 154.00 | |
| Supplies/training costs | Consumables: £50 | 1160.00 |
| | Specialist software: SQL software purchased @ £125 | |
| | Hospital Episode Statistics course (£485) and associated online link (£500) | |
| | Note: other generic software packages are omitted as it is assumed that an audit facility would already possess appropriate packages | |
| Furniture and equipment | Desk and drawers for audit staff only @ £1400 | 473.50 |
| | PC for audit staff only @ £650 | |
| | Note: annuitised over 5 years @ 5% | |
| Total | | 22 649.98 |
| Estimated cost of subsequent audits | | |
| Labour (managerial) | Managing and overseeing audit tasks: (4 h/week × 12 weeks) = 48 h @ £50.33 | 2415.84 |
| Labour (audit) | Audit: (12 weeks) 450 h @ £26.96 | 12 132.00 |
| Supplies | Consumables: £50 | 50.00 |
| Furniture and equipment | Continuing annuitised costs as per first audit | 473.50 |
| Total | | 15 071.34 |

*For consistency with the designed audit costs, salary costs include uplifts for on-costs (22.5%) and overheads (41.5%). Capital costs such as furniture and equipment are annuitised over a 5-year lifespan using a 5% discount rate. Although training produces a flow of benefit over time, it was not possible to predict this timespan. Training costs have thus been attributed to the year in which they were incurred. All costs are at 2009 prices.
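The arithmetic behind table 2 can be checked with a short sketch. The standard annuity factor for payments in arrears is assumed here; the paper does not state the formula, but this one reproduces the £473.50 annual figure for £2050 of furniture and equipment over 5 years at 5%. Variable names are illustrative.

```python
def annuity_factor(rate: float, years: int) -> float:
    """Present value of 1 per year for `years` years at discount rate `rate`."""
    return (1 - (1 + rate) ** -years) / rate


def equivalent_annual_cost(capital: float, rate: float = 0.05, years: int = 5) -> float:
    """Spread a capital outlay into equal annual amounts."""
    return capital / annuity_factor(rate, years)


HOURLY_MANAGER = 50.33  # hourly rates as given in table 2
HOURLY_AUDITOR = 26.96

# Desk and drawers (1400) plus PC (650), annuitised: about 473.50 per year.
furniture = round(equivalent_annual_cost(1400 + 650), 2)

initial = {
    "labour_managerial": 56 * HOURLY_MANAGER,              # 4 h/week x 14 weeks
    "labour_audit": (75 + 75 + 525) * HOURLY_AUDITOR,      # training, criteria, audit
    "supplies_training": 50 + 125 + 485 + 500,
    "furniture_equipment": furniture,
}

subsequent = {
    "labour_managerial": 48 * HOURLY_MANAGER,              # 4 h/week x 12 weeks
    "labour_audit": 450 * HOURLY_AUDITOR,
    "supplies": 50,
    "furniture_equipment": furniture,
}

initial_total = round(sum(initial.values()), 2)        # 22649.98
subsequent_total = round(sum(subsequent.values()), 2)  # 15071.34
```

The difference between the two totals, about £7579, is made up of training, criteria development, specialist software and course fees, and the two extra weeks of audit and managerial labour in the initial audit.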

Results

Using the matching criteria described above, the UK IBD audit data items were compared across designed audit and routine administrative inpatient data (table 1 and figure 1). Although there was variation between countries, there were very high matches (90–100%) for mortality information (both for deaths that occurred in hospital as well as for those that occurred after discharge); surgery; dates of admission, surgery, discharge and death; patient age and sex; principal diagnosis; and hospital/trust.

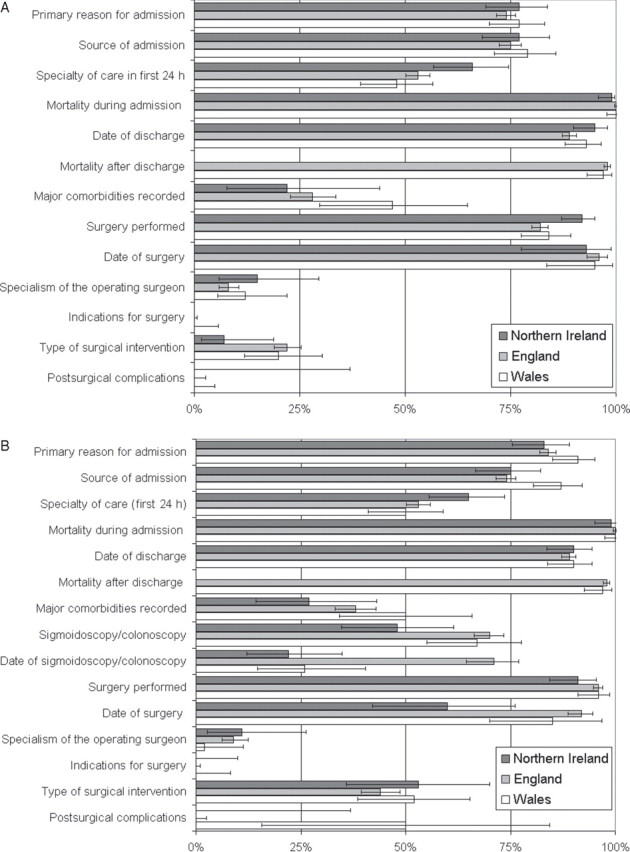

Figure 1.

Summary of matching audit data items from the UK inflammatory bowel disease audit to routine inpatient data in Wales, England and Northern Ireland for (A) Crohn's disease and (B) ulcerative colitis. Horizontal bars denote 95% CIs. For further details see table 1.
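The method used to derive the 95% CIs in figure 1 is not stated. As one plausible approach, the sketch below computes a Wilson score interval for a matching percentage; the choice of the Wilson method and the function name are assumptions.

```python
from math import sqrt


def wilson_interval(successes: int, n: int, z: float = 1.96):
    """Wilson score interval for a proportion; returns (lower, upper)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z * z / n
    centre = (p + z * z / (2 * n)) / denom
    half_width = z * sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return max(0.0, centre - half_width), min(1.0, centre + half_width)
```

For instance, the 74% match for primary reason for admission among 1491 CD cases in England corresponds to roughly 1103 matched cases (a count reconstructed from the rounded percentage, so approximate), and `wilson_interval(1103, 1491)` gives an interval a few percentage points wide. Rows based on one to three cases produce intervals spanning most of the 0–100% range.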

There were lower matching rates (typically 65–89% but sometimes >90%) for primary reason for admission; source of admission; and recording of colonoscopy or sigmoidoscopy for UC. Matching rates were lower still for specialty of care in the first 24 h and for comorbidities, and were very low or even zero for indications for surgery; specialism of the operating surgeon; and for postsurgical complications. Figure 1 illustrates the consistency across England, Wales and Northern Ireland, both for CD (figure 1A) and for UC (figure 1B).

The overall matching rates for all recorded major comorbidities for CD and UC were, respectively, 47% and 50% in Wales, 28% and 38% in England and 22% and 27% in Northern Ireland (table 1). Of individual comorbidities, matching rates were 50–100% for diabetes, 25–62% for ischaemic heart disease and renal failure and <25% for chronic obstructive pulmonary disease, stroke, liver disease and peripheral vascular disease (table 1).

We were not able to match many of the other detailed data items collected in the UK IBD audit, largely because the data items are not collected by routine data systems or because of the limitations of ICD and Office of Population Censuses and Surveys disease and procedure coding for specialised IBD-specific symptoms, procedures and complications.

Cost comparison

Designed audit costs were obtained from 48 returned questionnaires covering 54 of 222 sites which submitted data to the national audit. This represents an overall response rate of 24.3%. A comparison of responding and non-responding sites showed the sample to be over-represented by sites treating a higher number of inpatients with IBD (responding sites mean (SD) total admissions = 204.2 (212.4) vs 143.0 (149.4) in all audit sites reporting total admissions). The sample was also slightly over-represented by sites which submitted data on higher numbers of patients to the national audit (mean (SD) number of patients submitted in responding sites = 34.1 (7.8) vs 30.6 (10.6) in sites which had submitted at least one patient to the audit). Three questionnaires were incomplete and were excluded.

Designed data audit staff costs ranged across trusts from £902 to £10 898 (mean=£2827, SD=£2070). Costs per patient ranged from £24 to £193 (mean=£76, SD=£44). These suggest that the cost for all trusts which participated in the national audit was over £500 000.
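The figures in this section can be cross-checked with simple arithmetic. The paper does not show how the national estimate was derived, so both scalings below are assumptions: the first applies the mean trust cost to each of the 222 sites, and the second scales the number of sites by the ratio of questionnaires to sites covered (48 to 54) to approximate the number of trusts.

```python
responding_sites = 54
audit_sites = 222
questionnaires = 48
mean_cost_per_trust = 2827  # pounds, from responding trusts

# Response rate: 54 of 222 sites, about 24.3%.
response_rate = 100 * responding_sites / audit_sites

# Assumption 1: every site costs as much as the mean responding trust.
estimate_per_site = mean_cost_per_trust * audit_sites

# Assumption 2: sites are grouped into trusts at the ratio seen among responders.
approx_trusts = audit_sites * questionnaires / responding_sites
estimate_per_trust = mean_cost_per_trust * approx_trusts
```

Both rough estimates exceed £500 000, consistent with the statement above, although responding sites treated more inpatients than average and may not be representative of all sites.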

For the routine data audit replication, table 2 ‘recorded costs for initial audit’ shows costs as incurred (including preparatory work and training), and table 2 ‘estimated cost of subsequent audits’ estimates costs that would be incurred if the same audit team were to carry out subsequent audits (assuming no preparatory work, reduced capital costs and use of trained staff). Total costs were respectively £22 650 in the initial audit and £15 071 in subsequent audits.

Discussion

We found high matching rates between the UK IBD audit and routine administrative inpatient data for a small subset of basic important information that is typically collected in designed audits. This includes mortality; major surgery; dates of admission, surgery, discharge and death; principal diagnoses; sociodemographic patient characteristics (including age, sex and social deprivation); and hospital/trust.

We found lower matching rates for some further data items, including source of admission, primary reason for admission, some comorbidities and recording of colonoscopy or sigmoidoscopy. Coding of endoscopy may be omitted when the procedure is undertaken in the context of a more complex intervention(s) for severe IBD, which was more typical of the patient case mix in the UK IBD audit.

Routinely collected administrative data were substantially or largely incomplete or did not include most detailed information collected in the UK IBD audit. These include symptoms on admission, detailed disease history, many comorbidities, disease severity, imaging, dietetic details, details of most therapeutic interventions, indications for surgery, details of the surgeon, details of many surgical complications and details of discharge arrangements.

The anonymised patient identifiers made available in the designed UK IBD audit lacked discriminatory power for matching cases, particularly in the event of transcribing errors in either the routine or the designed audit data. For future studies, additional or more discriminatory anonymised patient identifiers would be recommended.

We employed a matching method that incorporated error margins to overcome this lack of discriminatory power. This would have included some ‘false-positive’ matches as a trade-off for the improvement in ‘true-positive’ matches. The true rates of successful matching between the UK IBD audit and the routine data may therefore have been slightly lower than those reported. This shortfall in matching naturally lowers the observed accuracy of the comparisons of data items between the UK IBD audit and the routine data.

In England, in particular, the trust, rather than the hospital to which the patient was admitted, was recorded on the routine data for some hospital admissions. As trusts can comprise many hospitals, it is important that the recording of hospital codes should be improved.

An important outcome measure that was collected by the designed UK IBD audit, and can be measured accurately through administrative data, is case death. Other outcome measures that are collected in many designed audits and that can potentially be assessed using record linkage of routine data include re-admission rates and infection rates for major infections such as Clostridium difficile and methicillin-resistant Staphylococcus aureus, although these were not assessed in this study.

We found that administrative data are not suitable for detecting more subtle deficiencies in hospital care that rely on more specialised outcome measures, such as adherence to disease management guidelines and postoperative and therapeutic complications. However, a potential advantage of administrative inpatient data is that they should cover all admitted patients, whereas some patient cases may be excluded from clinical audit if, for example, the notes are unavailable. As administrative inpatient data were used to inform the sampling frame of the UK IBD audit, the audit should not have been subject to the biases in patient inclusion that can affect some audits.

The case fatality rate for IBD was 1.2% overall, which is too low for discriminatory comparisons across hospitals. Record linkage of routine inpatient data can be used to identify variation in case death across hospitals for more common, high-risk conditions. The length of the observation period required for such comparisons depends heavily on the hospital admission rate and the case fatality rate for the disease or condition being studied. However, it is important that comparison of mortality across hospitals should be made carefully, because of variations in patient disease severity and case mix that often require adjustment. As we have found in this study, many comorbidities are not recorded comprehensively on inpatient data during single admissions. Record linkage to previous admissions and to alternative data sources (such as primary care data, cancer registry data and operational systems) has the potential to measure comorbidities more comprehensively. Unlike routine administrative inpatient data, however, these more specialised data sources often do not cover national or large regional populations.

The costs to NHS trusts of providing designed data to the UK IBD audit were much greater than the costs of carrying out the audit using routinely collected data alone, but the conclusions that can be drawn will be much more limited.

Many studies have demonstrated the considerable value of routine inpatient data for population studies, including studies of disease incidence, outcomes and risk factors.11–18 This study confirms our previous work that identified the limitations of these data when analysed at the level of the individual patient or healthcare professional.19 The weaknesses result from a lack of detailed clinical content, the process of data extraction from poor inpatient records, the use of coding classifications that lack the granularity of a detailed clinical terminology and a lack of the clinical engagement necessary to ensure rigorous data validation.20

This study was an attempt to demonstrate the wider potential of HES data, and to harness national audit as a driver to improve clinical engagement in data collection and validation at the point of care. However, we conclude that if prospective, continuous, sustainable national audits are to be conducted using routinely collected data in place of designed audit data, much change, effort and leadership will be needed to ensure that the data are collected and coded in sufficient detail and with accuracy.

Acknowledgments

The authors are grateful to the Health Information Research Unit (HIRU), School of Medicine, Swansea University, for preparing and providing access to the project-specific linked datasets from the Secure Anonymised Information Linkage (SAIL) system, which is funded by the Wales Office of Research and Development. The authors are also grateful to the following for various advice and help: Dr Keith Leiper and other members of the UK IBD audit steering committee; Calvin Down, Valerie Porter and Jane Ingham (Royal College of Physicians); Professor Michael Goldacre and Leicester Gill (Unit of Health-Care Epidemiology, University of Oxford); Professor Ronan Lyons, David Ford, Dr Sarah Rodgers, Caroline Brooks, Steven Macey, Tracey Hughes, Rohan D’silva, Jean-Philippe Verplancke (all School of Medicine, Swansea University); Dr Gareth John (National Public Health Service for Wales); Christine Kennedy and Rachel Stewart (Department of Health, Social Services & Public Safety, Belfast); Linda Baumber and Linda Davies (Singleton Hospital, Swansea); and UK IBD site contacts that responded to questionnaires for the costing sections of the project.

Footnotes

Funding: This study was funded by The Health Foundation. The views expressed are those of the authors and not necessarily those of the funding body.

Competing interests: None.

Provenance and peer review: Commissioned; externally peer reviewed.

References

- 1. UK IBD Audit Steering Group. National Results for the Organisation and Process of IBD Care in the UK, February 2007. London: Royal College of Physicians, 2007.

- 2. UK IBD Audit Steering Group. UK IBD Audit 2nd Round (2008). National Results for the Organisation & Process of Adult IBD Care in the UK Generic Hospital Report. London: Royal College of Physicians, 2009.

- 3. The IBD Standards Group. Quality Care: Service Standards for the Healthcare of People Who Have Inflammatory Bowel Disease (IBD). London: Oyster Healthcare Communications, 2009.

- 4. Health Solutions Wales. Patient Episode Database Wales (PEDW). http://www.hsw.wales.nhs.uk/page.cfm?orgid=166&pid=4262 (accessed 26 January 2011).

- 5. The Information Centre, NHS. Hospital Episode Statistics. http://www.hesonline.nhs.uk/Ease/servlet/ContentServer?siteID=1937&categoryID=571 (accessed 26 January 2011).

- 6. Department of Health, Social Services and Public Safety. Hospital Inpatient System (HIS). http://www.dhsspsni.gov.uk/index/stats_research/stats-activity_stats-2/hospital_inpatients-3.htm (accessed 26 January 2011).

- 7. Lock P, McElroy B, Mackenzie M. The hidden cost of clinical audit: a questionnaire study of NHS staff. Health Policy 2000;51:181–90.

- 8. Robinson MB, Thompson E, Black NA. Why is evaluation of the cost effectiveness of audit so difficult? The example of thrombolysis for suspected acute myocardial infarction. Qual Health Care 1998;7:19–26.

- 9. Curtis L. Unit Costs of Health and Social Care. Report for Personal Social Services Research Unit (PSSRU). London: Personal Social Services Research Unit (PSSRU), 2008.

- 10. NHS Jobs. http://www.jobs.nhs.uk (accessed 1 June 2009).

- 11. Koskenvuo M, Kaprio J, Langinvainio H, et al. Changes in incidence and prognosis of ischaemic heart disease in Finland: a record linkage study of data on death certificates and hospital records for 1972 and 1981. Br Med J (Clin Res Ed) 1985;290:1773–5.

- 12. Eland IA, Sturkenboom MJ, Wilson JH, et al. Incidence and mortality of acute pancreatitis between 1985 and 1995. Scand J Gastroenterol 2000;35:1110–16.

- 13. Lawrence DM, Holman CD, Jablensky AV, et al. Death rate from ischaemic heart disease in Western Australian psychiatric patients 1980–1998. Br J Psychiatry 2003;182:31–6.

- 14. Weir NU, Gunkel A, McDowall M, et al. Study of the relationship between social deprivation and outcome after stroke. Stroke 2005;36:815–19.

- 15. Roberts SE, Williams JG, Yeates D, et al. Mortality in patients with and without colectomy admitted to hospital for ulcerative colitis and Crohn's disease: record linkage studies. BMJ 2007;335:1033.

- 16. Saposnik G, Baibergenova A, Bayer N, et al. Weekends: a dangerous time for having a stroke? Stroke 2007;38:1211–15.

- 17. Roberts SE, Williams JG, Meddings D, et al. Incidence and case fatality for acute pancreatitis in England: geographical variation, social deprivation, alcohol consumption and aetiology – a record linkage study. Aliment Pharmacol Ther 2008;28:931–41.

- 18. Button LA, Roberts SE, Evans PA, et al. Hospitalized incidence and case fatality for upper gastrointestinal bleeding from 1999 to 2007: a record linkage study. Aliment Pharmacol Ther 2011;33:64–76.

- 19. Croft GP, Williams JO, Mann RY, et al. Can Hospital Episode Statistics support appraisal and revalidation? Randomised study of physician attitudes. Clin Med 2007;7:332–8.

- 20. Audit Commission. Information and Data Quality in the NHS: Key Messages from Three Years of Independent Review. London: Audit Commission, 2004.