Abstract

Despite evolutionary claims about the function of facial behaviors across phylogeny, rarely are those hypotheses tested in a comparative context – i.e., by evaluating how nonhuman animals process such behaviors. Further, while increasing evidence indicates that humans make meaning of faces by integrating contextual information, including that from the body, the extent to which nonhuman animals process contextual information during affective displays is unknown. In the present study, we evaluated the extent to which rhesus macaques (Macaca mulatta) process dynamic affective displays of conspecifics that included both facial and body behaviors. Contrary to hypotheses that they would preferentially attend to faces during affective displays, monkeys looked for longest, most frequently, and first at conspecifics’ bodies rather than their heads. These findings indicate that macaques, like humans, attend to available contextual information during the processing of affective displays, and that the body may also be providing unique information about affective states.

Keywords: Macaca mulatta, nonhuman primate, face perception, affect perception, naturalistic displays

Introduction

It is widely believed that facial behaviors communicate veridical information about primate emotions (Chevalier-Skolnikoff, 1973; Ekman, 1972; Keltner & Ekman, 2000; Preuschoft, 1992; Shariff & Tracy, 2011; Visalberghi, Valenzano, & S., 2006). Yet, a growing human literature suggests that the story is more complicated. For example, humans use contextual information to help understand facial behaviors. Providing perceivers with linguistic labels, conceptual information, or narrative or visual information about the context in which displays occur shifts how accurately they are able to categorize facial behaviors associated with emotions (Barrett & Gendron, 2016; Barrett, Lindquist, & Gendron, 2007; Hassin, Aviezer, & Bentin, 2013). Contextual information is so powerful that it even drives whether people accurately categorize facial displays as being associated with positive or negative affective states (Kayyal, Widen, & Russell, 2015). Increasing evidence from humans indicates that contextual information – especially information about the body – influences how we understand facial behaviors (Hassin et al., 2013). The body also appears to communicate information about an individual’s emotion (de Gelder, 2006; de Gelder, de Borst, & Watson, 2015; Klin, Jones, Schultz, Volkmar, & Cohen, 2002; Kret & de Gelder, 2010; Kret, Stekelenburg, de Gelder, & Roelofs, 2015; Riby & Hancock, 2008; Smilek, Birmingham, Cameron, Bischof, & Kingstone, 2006; for reviews, see Enea & Iancu, 2015; Hassin et al., 2013).
Given the strong evolutionary claims made about homologies of emotion-related facial behaviors (Ekman, 1972; Keltner & Ekman, 2000; Shariff & Tracy, 2011) and the importance of bodies for communicating social information (Holland, Wolf, Looser, & Cuddy, 2016), and given the absence of comparative data, evaluating how nonhuman primates process information about bodies during affective displays is critically important for establishing strong evolutionary theory. Macaque monkeys, the most widely used species in research (Carlsson, Schapiro, Farah, & Hau, 2004), like humans, have a broad repertoire of stereotyped facial behaviors and body postures (Andrew, 1963; Bliss-Moreau & Moadab, 2017; Chevalier-Skolnikoff, 1973; Hinde & Rowell, 1962; Maestripieri, 1997; Redican, 1975; van Hooff, 1967) that are often assumed to be expressions of emotions (but see Bliss-Moreau & Moadab, 2017). The extent to which they use contextual information, including that related to the body, to make meaning of facial behaviors is unknown.

While the extent to which macaques process body information during affective displays is unknown, information about the body modulates human emotion perception (Aviezer et al., 2008; Meeren, van Heijnsbergen, & de Gelder, 2005). For example, when human faces that, in isolation, are thought to convey “disgust” are placed on bodies that convey other emotions (e.g., “fear”, “anger”, “sadness”), accurate categorization of the face drops significantly (e.g., only 11% categorized correctly when presented with an “angry” body) (Aviezer et al., 2008; for a review, see Hassin et al., 2013). Yet, visual attention to static human faces engaged in emotion-related behavior was greater than visual attention to static human bodies engaged in emotion-related behavior (Kret, Stekelenburg, Roelofs, & de Gelder, 2013; Shields, Engelhardt, & Ietswaart, 2012).

Despite the importance of understanding how body information, and contextual information more generally, influences emotion perception, and the strong evolutionary claims that are made about the importance of facial behaviors for communicating emotions, few studies have tested macaques with dynamic, content-rich stimuli that mimic naturalistic affective displays. Instead, macaques are typically tested with static and/or isolated facial behaviors lacking contextual information – including bodies (Keating & Keating, 1982; Wilson & Goldman-Rakic, 1994; Guo et al., 2003; Gothard et al., 2004; Deaner et al., 2005; Gibboni et al., 2009; Dahl et al., 2010; Hirata et al., 2010; Leanard et al., 2012; Hanley et al., 2012; Paukner et al., 2013; Dal Monte et al., 2014; Machado et al., 2015). Brain regions supporting perception of bodies generating affective displays appear to be homologous across macaques and humans (de Gelder & Partan, 2009), suggesting homology in perceptual processes. Yet, only a few studies have included both faces and bodies, and those that did used static images. For example, when rhesus macaques were shown static images of full-bodied conspecifics with neutral faces (i.e., those with no affect-related display), they looked longest and most frequently at hands (Hu et al., 2013). When viewing static positive or negative affective content, rhesus monkeys spent more time looking at faces relative to bodies (MacFarland et al., 2013). Whether this effect is driven by affective content is not clear because there was no neutral condition.

Methodological choices, like testing macaques only with isolated faces or static conspecific images, leave open questions about whether macaques integrate other information (such as body posture, context, social environment, etc.) with the face to understand intentions and actions. Evidence from the human literature indicates that dynamic facial behaviors are perceived as more intense, arousing, and realistic than static facial behaviors (Krumhuber, Kappas, & Manstead, 2013). In daily life, both humans and macaques must understand the intentions and actions of conspecifics whose behavior is dynamic, not static. Thus, evaluating how macaques process dynamic, naturalistic affective displays should provide ethologically relevant insights about the nature of emotion-related communication.

As a first step towards understanding how macaques use contextual information to make meaning of facial behaviors, we tested the hypothesis that rhesus macaques attend to information other than the face – specifically the body – during realistic, dynamic affective displays. We also hypothesized that subjects would look longest, most frequently, and first at the faces/heads of conspecifics engaged in affective displays, but would also pay significant attention to their bodies (that is, fixation durations and frequencies for bodies would be non-zero). Further, we hypothesized that attention to whole conspecifics (faces plus bodies) would be greatest when conspecifics engaged in affective behaviors relative to neutral behaviors; this effect would also manifest in less time attending to information other than the conspecifics (e.g., the conspecific’s caging, filming backdrop, etc.) for videos with affective content as compared to neutral content.

Methods

Experimental procedures were carried out at the California National Primate Research Center (CNPRC) at the University of California, Davis (UC Davis) and were approved by the UC Davis Institutional Animal Care and Use Committee in accordance with the recommendations in the Guide for the Care and Use of Laboratory Animals of the National Institutes of Health.

Subjects and Living Conditions

Subjects were 6 adult male rhesus macaques (M=7.39, SD=1.29) that were born into large, semi-naturalistic social groups (ranging from 60–150 monkeys/group living in 0.2 ha; 30.5 m × 61.0 m × 2.4 m) at the CNPRC. All subjects lived in these groups for at least 2 years before being relocated into indoor housing. Due to compatibility issues, one animal had no access to a social partner for the duration of his participation in the experiment. The other animals were paired with a compatible male social partner and housed in standard caging (size based on animal weight). They had access to their social partner either 6 hours per day, 5 days a week, or 24 hours per day, depending on pair compatibility. Pairs were allowed to interact either in full contact or restricted contact through a one-inch mesh grate. Animal rooms were maintained at 17.78–28.89°C and on a 12/12 light/dark cycle (lights on at 0600). Subjects were fed twice daily (Lab Diet #5047, PMI Nutrition International INC, Brentwood, MO), were provided with fresh produce biweekly, and had access to water ad libitum and a variety of enrichment devices.

Experimental Protocol

Animal training, equipment, and experimental stimuli are fully detailed in previous publications (Bliss-Moreau, Machado, & Amaral, 2013; Machado, Bliss-Moreau, Platt, & Amaral, 2011). Here, we analyzed in greater detail the attention associated with a subset of the social videos from those studies: the “subject-directed” videos (Figure 1). Subject-directed videos were 30-second videos in which a single conspecific generated affective or non-affective behaviors toward the camera. Videos included multiple “scenes” that featured different conspecifics, but each scene only had one conspecific. Conspecifics and subjects had never physically interacted but may have been housed in the same room and been in visual contact at times prior to the experiment. Monkeys viewed 60 videos that belonged to one of three categories: aggressive (including threats, cage displays, etc.), submissive (including bared teeth displays, lipsmacks, submissive body postures, etc.) or neutral (including movement within the cage such as walking, hanging, foraging, etc., but no facial or body posture displays). Daily test sessions included three phases: a) calibration, in which the eye-tracker was calibrated for each monkey by having him fixate on small videos presented in nine positions on the monitor; b) test-chamber acclimation, in which animals watched ten 30-second video screen savers to acclimate them to the chamber; and c) the experimental phase (see Figure 1 for details), which consisted of 50 videos per day. Testing occurred over 12 days.

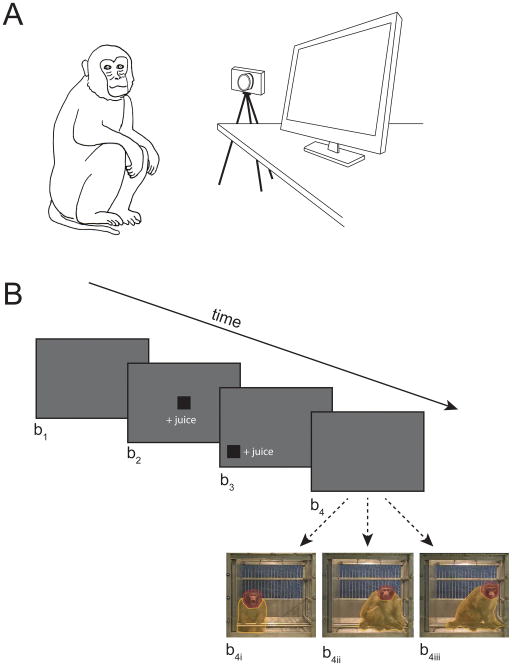

Figure 1.

Experimental Design. A) Testing occurred with subjects seated in a box chair, with their heads secured using custom-fit thermoplastic helmets and their arms and feet tethered and secured comfortably to the chair using leather straps (1.3 cm × 3 mm × 1 m). Subjects sat in front of an infrared eye tracker, depicted here as a camera (Applied Science Laboratories, Bedford, MA; model R-HS-S6; positioned 53.34 cm from the animals’ eyes) and a large computer monitor (60.96 cm diagonal; Gateway Inc., Irvine, CA; positioned 127 cm from the animals’ eyes) in a darkened sound-attenuated chamber (Acoustic Systems, Austin, TX; 2.1 m×2.4 m×1.1 m). Auditory distractions were masked with a white noise generator (60 dB). B) The experiment began with a grey screen for 10 s (b1), followed by a fixation target in the center of the screen (b2), and a fixation target at the periphery of the screen (b3). Each fixation screen required that the subjects fixate on the target for at least 500 ms before advancing to the next screen. Successful fixation was rewarded with juice dispensed from an automatic juice dispenser (Crist Instrument Co., Inc.; model # 5-RLD-E3) with curved mouthpiece (Crist Instrument Co., Inc.; model # 5-RLD-00A) attached to the top-left of the chair. Thirty-second videos were presented after the second target fixation (b4). For the present report, we analyzed data from videos in which a single conspecific engaged in aggressive (b4i), submissive (b4ii), and neutral (b4iii) behaviors. ROIs were drawn around the conspecific’s head (red) and body (yellow).

Eye-Tracking Data Collection and Processing

Foveal gaze location and duration data were used to infer visual attention specific to two ROIs within each subject-directed video, rather than global attention to the entire video (as previously analyzed in Machado et al., 2011). ROIs were hand drawn on each frame of the 30-second video using Applied Science Laboratories (ASL) software (Results Plus, Bedford, MA). Fixation and dwell data for each ROI were extracted using Results Plus with the default settings. Fixation onset occurred when gaze coordinates remained within a 1° × 1° visual angle for 100 ms, and the fixation terminated when gaze coordinates left that space for greater than 360 ms. Total fixation duration was calculated by summing the individual fixations within each ROI for each video (i.e., 30-sec max). Fixation frequency was calculated as the total number of unique fixations within each ROI for each video.
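The fixation criteria described above can be illustrated with a minimal Python sketch. It is not the Results Plus implementation, whose internals are not described here. It assumes gaze samples arrive as time-sorted (time in ms, x in degrees, y in degrees) tuples, interprets the 1° × 1° criterion as a box centered on the onset window, and measures each fixation from onset to the last sample inside that box. All names (`detect_fixations`, `summarize_by_roi`, `roi_of`, `hold`) and the gaze trace are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Fixation:
    start_ms: float
    end_ms: float
    x: float  # box center, degrees of visual angle
    y: float

    @property
    def duration_ms(self):
        return self.end_ms - self.start_ms


def detect_fixations(samples, box_deg=1.0, onset_ms=100.0, exit_ms=360.0):
    """Detect fixations in time-sorted (t_ms, x_deg, y_deg) gaze samples."""
    fixations, i, n = [], 0, len(samples)
    half = box_deg / 2
    while i < n:
        # Candidate onset window: samples i..j spanning at least onset_ms.
        j = i
        while j < n and samples[j][0] - samples[i][0] < onset_ms:
            j += 1
        if j >= n:
            break  # not enough data left to confirm an onset
        xs = [s[1] for s in samples[i:j + 1]]
        ys = [s[2] for s in samples[i:j + 1]]
        if max(xs) - min(xs) > box_deg or max(ys) - min(ys) > box_deg:
            i += 1  # gaze moved too much; slide the window forward
            continue
        cx, cy = (min(xs) + max(xs)) / 2, (min(ys) + max(ys)) / 2
        # Extend until gaze has been outside the box for more than exit_ms.
        last_in = j
        for k in range(j + 1, n):
            t, x, y = samples[k]
            if abs(x - cx) <= half and abs(y - cy) <= half:
                last_in = k
            elif t - samples[last_in][0] > exit_ms:
                break
        fixations.append(Fixation(samples[i][0], samples[last_in][0], cx, cy))
        i = last_in + 1
    return fixations


def summarize_by_roi(fixations, roi_of):
    """Total fixation duration (ms) and fixation count per ROI label."""
    totals = {}
    for f in fixations:
        entry = totals.setdefault(roi_of(f), {"duration_ms": 0.0, "count": 0})
        entry["duration_ms"] += f.duration_ms
        entry["count"] += 1
    return totals


def hold(t0, t1, x, y):
    """Synthetic steady gaze at (x, y), one sample every 10 ms."""
    return [(t, x, y) for t in range(t0, t1, 10)]


# Synthetic gaze trace (NOT data from this study).
samples = (hold(0, 500, 0.0, 0.0)          # steady gaze
           + hold(500, 600, 5.0, 5.0)      # 100 ms excursion, absorbed
           + hold(600, 1000, 0.0, 0.0)     # return to the same location
           + hold(1000, 2000, 10.0, 0.0))  # new location
fixations = detect_fixations(samples)
# Hypothetical static ROIs; the study's ROIs were drawn frame by frame.
totals = summarize_by_roi(fixations, lambda f: "head" if f.x < 5 else "body")
print(totals)
```

In this sketch, the per-ROI duration and count correspond to the total fixation duration and fixation frequency measures. Because excursions shorter than the exit threshold are absorbed into the ongoing fixation, the brief look away in the synthetic trace does not split the first fixation in two.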

Data Analysis Strategy

Statistical analyses were completed using IBM SPSS Statistics version 23 (IBM Corp. Released 2013. IBM SPSS Statistics for Windows, Version 22.0. Armonk, NY: IBM Corp.). Data were evaluated for non-normality and corrected when appropriate as indicated below. When Mauchly’s Test of Sphericity was significant, we used Greenhouse-Geisser corrected degrees of freedom. We used a series of repeated measures ANOVAs with video type (submissive, aggressive, or neutral) and ROI (head, body) as the repeated factors. It is important to note that because the videos included a substantial amount of space outside the head and body, looking at the head or body was not necessarily a zero-sum trade-off.
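The structure of this design (video type × ROI, both repeated within subjects) can be sketched in Python as follows. This is an illustrative stand-in for the SPSS procedure, not a reproduction of it: the function `rm_anova_2way` is a hypothetical helper, it applies no sphericity correction, and the scores are random numbers with the same shape as the design (6 subjects × 3 video types × 2 ROIs), not data from this study.

```python
import random
from itertools import product
from statistics import fmean


def rm_anova_2way(y, name_a="A", name_b="B"):
    """Two-way, fully within-subjects ANOVA for balanced data.

    y[s][a][b] holds the score of subject s at level a of factor A and
    level b of factor B. Each effect is tested against its own
    effect-by-subject interaction. No sphericity correction is applied.
    """
    n, A, B = len(y), len(y[0]), len(y[0][0])
    S, As, Bs = range(n), range(A), range(B)

    # Grand, marginal, and cell means.
    g = fmean(y[s][a][b] for s, a, b in product(S, As, Bs))
    m_a = [fmean(y[s][a][b] for s, b in product(S, Bs)) for a in As]
    m_b = [fmean(y[s][a][b] for s, a in product(S, As)) for b in Bs]
    m_s = [fmean(y[s][a][b] for a, b in product(As, Bs)) for s in S]
    m_ab = [[fmean(y[s][a][b] for s in S) for b in Bs] for a in As]
    m_as = [[fmean(y[s][a][b] for b in Bs) for s in S] for a in As]
    m_bs = [[fmean(y[s][a][b] for a in As) for s in S] for b in Bs]

    # Sums of squares for the effects ...
    ss_a = n * B * sum((m - g) ** 2 for m in m_a)
    ss_b = n * A * sum((m - g) ** 2 for m in m_b)
    ss_ab = n * sum((m_ab[a][b] - m_a[a] - m_b[b] + g) ** 2
                    for a, b in product(As, Bs))
    # ... and for their subject-interaction error terms.
    ss_as = B * sum((m_as[a][s] - m_a[a] - m_s[s] + g) ** 2
                    for a, s in product(As, S))
    ss_bs = A * sum((m_bs[b][s] - m_b[b] - m_s[s] + g) ** 2
                    for b, s in product(Bs, S))
    ss_abs = sum((y[s][a][b] - m_ab[a][b] - m_as[a][s] - m_bs[b][s]
                  + m_a[a] + m_b[b] + m_s[s] - g) ** 2
                 for s, a, b in product(S, As, Bs))

    def effect(ss, ss_err, df):
        df_err = df * (n - 1)
        return {"F": (ss / df) / (ss_err / df_err), "df": (df, df_err),
                "eta_p2": ss / (ss + ss_err)}

    return {
        name_a: effect(ss_a, ss_as, A - 1),
        name_b: effect(ss_b, ss_bs, B - 1),
        f"{name_a} x {name_b}": effect(ss_ab, ss_abs, (A - 1) * (B - 1)),
    }


# Synthetic scores only (NOT data from this study): 6 subjects x
# 3 video types x 2 ROIs, mirroring the shape of the design.
rng = random.Random(0)
scores = [[[rng.uniform(0, 10) for _ in range(2)] for _ in range(3)]
          for _ in range(6)]
results = rm_anova_2way(scores, "video_type", "roi")
for name, r in results.items():
    print(f"{name}: F{r['df']} = {r['F']:.2f}, eta_p2 = {r['eta_p2']:.2f}")
```

With six subjects, this layout yields degrees of freedom of (2, 10) for video type, (1, 5) for ROI, and (2, 10) for their interaction, before any Greenhouse-Geisser adjustment.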

Results

Fixation Duration

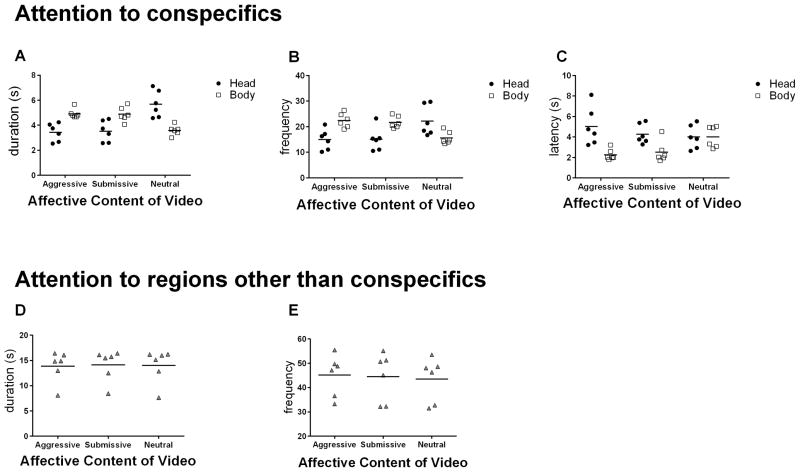

A main effect of affective content indicated that fixation duration differed based on the meaning of the conspecifics’ behaviors, F(2,10)=5.98, p=0.020, ηp2=0.54. Subjects fixated for significantly longer on videos with neutral content as compared to videos with affective content; neutral>aggressive: t(5)=2.74, p=0.041, d=1.16; neutral>submissive: t(5)=2.79, p=0.039, d=0.93. There was not a significant difference between the two conditions with affective content, t(5)=0.20, p=0.846, d=0.03. This main effect of affective content was qualified by an interaction with ROI, F(2,10)=79.40, p<0.0001, ηp2=0.94. Contrary to our hypotheses, while viewing videos of either aggressive or submissive conspecifics, subjects fixated for longer durations on bodies than heads. In contrast, while watching the neutral conspecifics, subjects fixated longer on their heads than their bodies. There was no significant main effect of ROI, indicating that across all videos, subjects fixated for comparable durations on heads and bodies, F(1,5)=0.35, p=0.58, ηp2=0.07. See Figure 2a.

Figure 2.

Visual Attention During Dynamic Affective Displays. Horizontal lines represent mean values. A) Each monkey’s average total fixation duration for conspecifics’ heads and bodies, by affective content of video. B) Each monkey’s average total fixation frequency for conspecifics’ heads and bodies, by affective content of video. C) Each monkey’s latency to first fixation for conspecifics’ heads and bodies, by affective content of video. D) Each monkey’s average total fixation duration for areas outside the head and body ROIs. E) Each monkey’s average total fixation frequency for areas outside the head and body ROIs.

Fixation Frequency

Patterns of fixation frequency mirrored those of fixation duration, revealing a significant affective content X ROI interaction, F(2,10)=50.73, p<0.001, ηp2=0.91, that contrasted with our hypotheses. While viewing aggressive or submissive conspecifics, subjects fixated more frequently on their bodies than heads. In contrast, while viewing neutral conspecifics, subjects fixated more frequently on their heads than bodies. Neither the main effect of video type nor that of ROI was significant, indicating that across all trials subjects fixated equally often on aggressive, submissive, and neutral videos, F(2,10)=0.40, p=0.68, ηp2=0.07, and at comparable frequencies on both heads and bodies, F(1,5)=2.75, p=0.16, ηp2=0.35. See Figure 2b.

First Fixation Latency

We next evaluated whether affective content might influence whether subjects looked at heads or bodies first, by evaluating the latency to first fixation. Overall, subjects fixated first on conspecifics’ bodies, as indicated by a main effect of ROI, F(1,5)=8.36, p=0.034, ηp2=0.63. Importantly, this was true only for videos depicting aggressive and submissive affective behaviors, as indicated by a significant affective content X ROI interaction, F(2,10)=13.71, p=0.001, ηp2=0.73. For videos in which conspecifics generated affectively neutral behaviors, the latencies to fixate first on heads and bodies were statistically equivalent. Affective content did not significantly influence how quickly subjects made their first fixations, F(2,10)=1.08, p=0.38, ηp2=0.18 – that is, the presence of affective behavior did not capture attention more rapidly (Figure 2c).

Non-Conspecific Fixations

Finally, we evaluated whether attention to regions other than the conspecifics (i.e., all areas of the video outside of the head + body; e.g., caging) varied by affective content. Affective content did not significantly influence either the duration or frequency of fixations on areas other than the conspecifics’ heads and bodies, F(2,10)=0.243, p=0.789, ηp2=0.046, and F(2,10)=1.75, p=0.224, ηp2=0.26, respectively. See Figures 2d and 2e. Taken together, these analyses suggest that attention allocation to regions other than the conspecifics was similar for both affective and neutral information.
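As a consistency check on the effect sizes reported in this section, partial eta squared can be recovered from an F statistic and its degrees of freedom. This is a standard identity rather than an additional analysis, and the subscripted labels below are notation introduced here for illustration.

$$
\eta_p^2 = \frac{SS_{\text{effect}}}{SS_{\text{effect}} + SS_{\text{error}}} = \frac{F \cdot df_{\text{effect}}}{F \cdot df_{\text{effect}} + df_{\text{error}}}
$$

For the affective content X ROI interaction on fixation duration, F(2,10)=79.40, this gives

$$
\eta_p^2 = \frac{79.40 \times 2}{79.40 \times 2 + 10} = \frac{158.80}{168.80} \approx 0.94,
$$

which matches the reported value.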

Discussion

Across several metrics of attention, our data demonstrate that not only do rhesus macaques attend to the bodies of conspecifics during dynamic affective displays, but they attend to bodies for the longest period of time, most frequently, and first. In addition, we observed no significant differences in either fixation duration or frequency between the two affective content types – aggressive behaviors and submissive behaviors – indicating that both classes of behavior were prioritized similarly. These findings suggest that monkeys encode information about the bodies of conspecifics while processing affective displays, providing support for the hypothesis that, like human facial displays (e.g., Aviezer et al., 2008; Kayyal et al., 2015; Meeren et al., 2005; Wenzler, Levine, van Dick, Oertel-Knochel, & Aviezer, 2016; for a review, see Hassin et al., 2013), monkey facial displays have multiple meanings that are context dependent. In this view, the position, movement, and shape (e.g., crook of the tail) of the body are all important sources of that contextual information. In all likelihood, monkeys, like humans, require contextual information beyond that provided by the body to fully understand facial behaviors. Accumulating evidence from biological anthropology indicates that the same facial behavior, the silent bared teeth display, has multiple meanings depending on the context in which it occurs (social peace versus social conflict) (Beisner & McCowan, 2014). Together, these findings suggest facial behaviors are not evolved “expressions” of emotion that can be “read” alone. This idea stands in stark contrast to the predominant evolutionary views about the meaning of facial behaviors (e.g., Ekman, 1972; Keltner & Ekman, 2000; Shariff & Tracy, 2011).

One surprising finding from this experiment was that subjects fixated more frequently on heads than bodies of conspecifics who were not generating affective displays. The lack of clear affective information in these displays may signal the perceiver to continue processing the available visual scene, increasing the extent to which the face is scanned. Another possibility is that the effect was driven by visual properties of the videos themselves. Compared to videos with affective content, videos with neutral content included more frames in which the camera zoomed in on the face, rendering it larger. This possibility should be explored in future testing using a new set of neutral videos. However, had this been the case, we would have expected to see shorter fixation durations and fewer fixations on areas outside the conspecific (because there would be less area outside) – which was not the case. Taken together, these data indicate that neutral and affective social information are prioritized similarly in attention. This is consistent with other findings from our laboratory that demonstrated that rhesus macaques were more behaviorally reactive to neutral social information than to neutral nonsocial information (Bliss-Moreau, Bauman, & Amaral, 2011).

In conclusion, our findings clearly indicate that rhesus macaques, like humans, attend to contextual information – in this case, bodies – during dynamic affective displays. Understanding the extent to which and the process by which that information shapes an understanding of faces in humans and nonhuman animals is an important avenue for future research since it forms the core of our social decision-making processes and is impaired in many psychiatric disorders.

Acknowledgments

Thank you to the team of undergraduates in the Bliss-Moreau Laboratory who drew the ROIs on each frame of the stimulus videos. This research was funded by F32MH087067 to EBM, K99MH083883 to CJM and R37MH57502. Additional support was provided by the base grant of the California National Primate Research Center (OD011107). EBM was supported by K99MH10138 during the preparation of this manuscript. CJM is now at Cuesta College, San Luis Obispo, California.

References

- Andrew RJ. The origin and evolution of the calls and facial expressions of the primates. Behaviour. 1963;20:1–109.

- Aviezer H, Hassin RR, Ryan J, Grady C, Susskind J, Anderson A, … Bentin S. Angry, disgusted, or afraid? Studies on the malleability of emotion perception. Psychol Sci. 2008;19(7):724–732. doi: 10.1111/j.1467-9280.2008.02148.x.

- Barrett LF, Gendron M. The importance of context: Three corrections to Cordaro, Keltner, Tshering, Wangchuk, and Flynn (2016). Emotion. 2016;16(6):803–806. doi: 10.1037/emo0000217.

- Barrett LF, Lindquist KA, Gendron M. Language as context for the perception of emotion. Trends Cogn Sci. 2007;11(8):327–332. doi: 10.1016/j.tics.2007.06.003.

- Beisner BA, McCowan B. Signaling context modulates social function of silent bared-teeth displays in rhesus macaques (Macaca mulatta). Am J Primatol. 2014;76(2):111–121. doi: 10.1002/ajp.22214.

- Bliss-Moreau E, Bauman MD, Amaral DG. Neonatal amygdala lesions result in globally blunted affect in adult rhesus macaques. Behav Neurosci. 2011;125(6):848–858. doi: 10.1037/a0025757.

- Bliss-Moreau E, Machado CJ, Amaral DG. Macaque cardiac physiology is sensitive to the valence of passively viewed sensory stimuli. PLoS One. 2013;8(8):e71170. doi: 10.1371/journal.pone.0071170.

- Bliss-Moreau E, Moadab G. The faces monkeys make. 2017.

- Carlsson HE, Schapiro SJ, Farah I, Hau J. Use of primates in research: a global overview. Am J Primatol. 2004;63(4):225–237. doi: 10.1002/ajp.20054.

- Chevalier-Skolnikoff S. Facial expression of emotion in nonhuman primates. In: Ekman P, editor. Darwin and facial expression: A century of research and review. New York: Academic Press; 1973. pp. 11–89.

- de Gelder B. Towards the neurobiology of emotional body language. Nat Rev Neurosci. 2006;7(3):242–249. doi: 10.1038/nrn1872.

- de Gelder B, de Borst AW, Watson R. The perception of emotion in body expressions. Wiley Interdiscip Rev Cogn Sci. 2015;6(2):149–158. doi: 10.1002/wcs.1335.

- de Gelder B, Partan S. The neural basis of perceiving emotional bodily expressions in monkeys. Neuroreport. 2009;20(7):642–646. doi: 10.1097/WNR.0b013e32832a1e56.

- Ekman P. Universals and Cultural Differences in Facial Expressions of Emotions. In: Cole J, editor. Nebraska Symposium on Motivation. Lincoln, NB: University of Nebraska Press; 1972. pp. 207–282.

- Enea V, Iancu S. Processing emotional body expressions: state-of-the-art. Soc Neurosci. 2015:1–12. doi: 10.1080/17470919.2015.1114020.

- Hassin RR, Aviezer H, Bentin S. Inherently ambiguous: Facial expressions of emotions, in context. Emotion Review. 2013;5(1):60–65.

- Hinde RA, Rowell TE. Communication by postures and facial expressions in the rhesus monkey (Macaca mulatta). Proceedings of the Zoological Society of London. 1962;138(1):1–21.

- Holland E, Wolf EB, Looser C, Cuddy A. Visual attention to powerful postures: People avert their gaze from nonverbal dominance displays. Journal of Experimental Social Psychology. 2016;68:60–67.

- Hu YZ, Jiang HH, Liu CR, Wang JH, Yu CY, Carlson S, … Hu XT. What interests them in the pictures?--differences in eye-tracking between rhesus monkeys and humans. Neurosci Bull. 2013;29(5):553–564. doi: 10.1007/s12264-013-1367-2.

- Kayyal M, Widen S, Russell JA. Context is more powerful than we think: contextual cues override facial cues even for valence. Emotion. 2015;15(3):287–291. doi: 10.1037/emo0000032.

- Keltner D, Ekman P. Facial Expression of Emotion. In: Lewis MH-J, editor. Handbook of emotions. 2. New York: Guilford Publications; 2000. pp. 236–249.

- Klin A, Jones W, Schultz R, Volkmar F, Cohen D. Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Arch Gen Psychiatry. 2002;59(9):809–816. doi: 10.1001/archpsyc.59.9.809. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/12215080.

- Kret ME, de Gelder B. Social context influences recognition of bodily expressions. Exp Brain Res. 2010;203(1):169–180. doi: 10.1007/s00221-010-2220-8.

- Kret ME, Stekelenburg JJ, de Gelder B, Roelofs K. From face to hand: Attentional bias towards expressive hands in social anxiety. Biol Psychol. 2015. doi: 10.1016/j.biopsycho.2015.11.016.

- Kret ME, Stekelenburg JJ, Roelofs K, de Gelder B. Perception of face and body expressions using electromyography, pupillometry and gaze measures. Frontiers in Psychology. 2013;4:28. doi: 10.3389/fpsyg.2013.00028.

- Krumhuber EG, Kappas A, Manstead ASR. Effects of Dynamic Aspects of Facial Expressions: A Review. Emotion Review. 2013;5(1):41–46. doi: 10.1177/1754073912451349.

- Machado CJ, Bliss-Moreau E, Platt ML, Amaral DG. Social and nonsocial content differentially modulates visual attention and autonomic arousal in Rhesus macaques. PLoS One. 2011;6(10):e26598. doi: 10.1371/journal.pone.0026598.

- Maestripieri D. Gestural communication in macaques: Usage and meaning of nonvocal signals. Evolution of Communication. 1997;1(2):193–222.

- Meeren HK, van Heijnsbergen CC, de Gelder B. Rapid perceptual integration of facial expression and emotional body language. Proceedings of the National Academy of Sciences of the United States of America. 2005;102(45):16518–16523. doi: 10.1073/pnas.0507650102.

- Preuschoft S. Laughter and Smile in Barbary Macaques (Macaca sylvanus). Ethology. 1992;91(3):220–236.

- Redican WK. Facial expressions in nonhuman primates. In: Rosenblum LA, editor. Primate Behavior: Developments in field and laboratory research. NY, NY: Academic Press, Inc; 1975. pp. 103–194.

- Riby DM, Hancock PJ. Viewing it differently: social scene perception in Williams syndrome and autism. Neuropsychologia. 2008;46(11):2855–2860. doi: 10.1016/j.neuropsychologia.2008.05.003.

- Shariff AF, Tracy JL. What are emotion expressions for? Current Directions in Psychological Science. 2011;20(6):395–399.

- Shields K, Engelhardt PE, Ietswaart M. Processing emotion information from both the face and body: an eye-movement study. Cognition & Emotion. 2012;26(4):699–709. doi: 10.1080/02699931.2011.588691.

- Smilek D, Birmingham E, Cameron D, Bischof W, Kingstone A. Cognitive Ethology and exploring attention in real-world scenes. Brain Res. 2006;1080(1):101–119. doi: 10.1016/j.brainres.2005.12.090.

- van Hooff JARAM. The facial displays of the catarrhine monkeys and apes. In: Morris D, editor. Primate Ethology. Aldine, New York: London, Weidenfeld & Nicolson; 1967. pp. 7–68.

- Visalberghi E, Valenzano DR, SP Facial displays in tufted capuchins. International Journal of Primatology. 2006;27(6):1689–1707.

- Wenzler S, Levine S, van Dick R, Oertel-Knochel V, Aviezer H. Beyond pleasure and pain: Facial expression ambiguity in adults and children during intense situations. Emotion. 2016;16(6):807–814. doi: 10.1037/emo0000185.