Abstract

This paper presents a technique to image the complex index of refraction of a sample across three dimensions. The only required hardware is a standard microscope and an array of LEDs. The method, termed Fourier ptychographic tomography (FPT), first captures a sequence of intensity-only images of a sample under angularly varying illumination. Then, using principles from ptychography and diffraction tomography, it computationally solves for the sample structure in three dimensions. The experimental microscope demonstrates a lateral spatial resolution of 0.39 μm and an axial resolution of 3.7 μm at the Nyquist–Shannon sampling limit (0.54 and 5.0 μm at the Sparrow limit, respectively) across a total imaging depth of 110 μm. Unlike competing methods, this technique quantitatively measures the volumetric refractive index of primarily transparent and contiguous sample features without the need for interferometry or any moving parts. Wide field-of-view reconstructions of thick biological specimens suggest potential applications in pathology and developmental biology.

OCIS codes: (180.6900) Three-dimensional microscopy, (110.6955) Tomographic imaging

1. INTRODUCTION

It is challenging to image thick samples with a standard microscope. High-resolution objective lenses offer a shallow depth of field, which requires one to axially scan through the sample to visualize its three-dimensional (3D) shape. Unfortunately, refocusing does not remove light from areas above and below the plane of interest. This longstanding problem has inspired a number of solutions, the most widespread being confocal designs, two-photon excitation methods, light sheet microscopy, and optical coherence tomography. These methods “gate out” light from sample areas away from the point of interest and offer excellent signal enhancement, especially for thick, fluorescent samples [1].

Such gating techniques also encounter several problems. First, they typically must scan out each image, which might require physical movement and can be time consuming. Second, the available signal (i.e., the number of ballistic photons) decreases exponentially with depth. To overcome this limit, one must use a high NA lens, which provides a proportionally smaller image field of view (FOV). Finally, little light is backscattered when imaging non-fluorescent samples that are primarily transparent, such as commonly seen in embryology, in model organisms such as zebrafish, and after the application of recent tissue clearing [2] and expansion [3] techniques.

Instead of capturing just the ballistic photons emerging from the sample, one might instead image the entire optical field, which includes light that has scattered. Several techniques have been proposed to enable depth selectivity without gating for ballistic light. One might perform optical sectioning through digital deconvolution of a focal stack [4]. Light-field imaging [5] and point-spread function engineering [6] are two other alternatives. All three of these methods primarily operate with incoherent light, e.g., from fluorescent samples. They are thus not ideal tools for obtaining the refractive index distribution of a primarily transparent and non-fluorescent medium.

To do so, it is useful to use coherent illumination. For example, the amplitude and phase of a digital hologram may be computationally propagated to different depths within a thick sample, much like refocusing a microscope. However, the field at out-of-focus planes still influences the final result. Several techniques also aim for depth selectivity by using quasi-coherent illumination or through acquiring multiple images [7–10].

A very useful framework to summarize how coherent light scatters through thick samples is diffraction tomography (DT), as first developed by Wolf [11]. In a typical DT experiment, one illuminates a sample of interest with a series of tilted plane waves and measures the resulting complex diffraction patterns in the far field. These measurements may then be combined with a suitable algorithm into a tomographic reconstruction. An early demonstration of DT by Lauer is a good example [12]. Typically, the reconstruction algorithm assumes the first Born [13,14] or the first Rytov [15] approximation. It is also possible to apply the projection approximation, which models light as a ray. As a synthetic aperture technique, DT comes with the additional benefit of improving the resolution of an imaging element beyond its traditional diffraction-limit cutoff [12].

However, as a technique that models both the amplitude and phase of a coherent field, most implementations of DT require a reference beam and holographic measurement, or some sort of phase-stable interference (including spatial light modulator coding strategies, e.g., as in Ref. [16]). Since it is critical to control for interferometric stability [13] and thus limit the motion and phase drift to sub-micrometer variations, DT has been primarily implemented in well-controlled, customized setups. Several prior works have considered solving DT from intensity-only measurements to possibly remove the need for a reference beam [17–26]. However, while some applied the first Born approximation, these works also required customized setups and typically imposed additional sample constraints (e.g., a known sample support). They did not operate within a standard microscope or connect their reconstruction algorithms to ptychography, from which improvements like computational aberration correction [27] and multiplexed reconstruction [28] may be easily adopted.

Here, we perform DT using standard intensity images captured under variable LED illumination from an array source. Our technique, termed Fourier ptychographic tomography (FPT), acquires a sequence of images while changing the light pattern displayed on the LED array. Then, it combines these images using a phase retrieval-based ptychographic reconstruction algorithm, which computationally segments a thick sample into multiple planes, as opposed to physically rejecting light from above and below one plane of interest. Similar to DT, FPT also improves the lateral image resolution beyond the standard cutoff of the imaging lens. The end result is an accurate three-dimensional map of the complex index of refraction of a volumetric sample obtained directly from a sequence of standard microscope images.

2. RELATED WORK

To begin, a number of techniques attempt 3D imaging without applying the first Born approximation. These include lensless on-chip devices [29], lensless setups that assume an appropriate linearization [30], and methods relying upon effects like defocusing (e.g., the transport of intensity equation [31]) or spectral variations [32]. These techniques do not necessarily fit within a standard microscope setup or offer the ability to simultaneously improve spatial resolution. Two related works for 3D imaging, which also do not use DT with the first Born approximation, are by Tian and Waller [33] and Li et al. [34]. These two setups are quite similar to ours, and we discuss their operations in more detail below.

As mentioned above, there are also several prior works applying DT under the first Born approximation that only use intensity measurements [17–26]. While some of these works examine phase retrieval as a reconstruction algorithm, they must either shift the focal plane [22,24] or source [25] axially between each measurement, or must assume constraints on the sample [19,20,23,26] to successfully recover the phase. These prior works do not connect DT to ptychographic phase retrieval (i.e., they do not recover the phase by using diversity between the variably illuminated DT images). Connections between phase retrieval and DT under the first Born approximation have also been explored within the context of volume hologram design [35].

The field of x-ray ptychography also offers a number of methods to image 3D samples with intensity measurements [36–38]. However, none of these ptychography methods seem to directly modify DT under the first Born or Rytov approximation, to the best of our knowledge. A popular technique appears to use standard two-dimensional (2D) ptychographic solvers to determine the complex field for individual projections of a slowly rotated sample, which are subsequently combined using conventional DT techniques [39].

Fourier ptychography (FP) [40] uses a standard microscope and no moving parts to simultaneously improve image resolution and measure quantitative phase but is restricted to thin samples. FPT effectively extends FP into the third dimension. As noted above, Tian and Waller [33] and Li et al. [34] also examine the problem of 3D imaging from intensities in a standard microscope. These two examples adopted their reconstruction technique from a 3D ptychography method [37,38] that splits up the sample into a specified number of infinitesimally thin slices (each under the projection approximation) and applies the beam propagation method (i.e., assumes small-angle scattering) [41]. This “multi-slice” approach remains accurate within a different domain of optical scattering than the first Born approximation (i.e., for different types of samples and setups, see chapter 2 in Ref. [42]). For example, one may include both forward and backscattered light in a DT solver to accurately reconstruct a 3D sample under the Born approximation [12]. However, the multi-slice method does not directly account for backscattered light. Its projection approximation also assumes the lateral divergence of the optical field gradient at each slice is zero. Conversely, the validity of the first Born approximation breaks down when the total amount of absorption and phase shift from a sample is large [43], whereas this appears to impact the multi-slice approach less (e.g., it can image two highly absorbing layers separated by a finite amount of free space [33,34]).

Thus, while the experimental setup of FPT is similar to prior work [33,34,40], our reinterpretation of ptychography within the physical framework of DT (under the first Born approximation) allows us to accurately reconstruct new specimen types in 3D without measuring phase. For example, primarily transparent samples of continuously varying optical density, which are often encountered in biology, typically obey the first Born approximation. Accordingly, we have used FPT to compute some of the first quantitatively accurate 3D maps of clear samples that contain contiguous features (e.g., an unstained nematode parasite and starfish embryo) from standard microscope images.

In addition, FPT offers a clear picture of the location and amount of data it captures in 3D Fourier space. Such knowledge currently helps us to establish various solution guarantees for 2D image phase retrieval [44]. These guarantees may also extend to the current case of 3D tomographic phase retrieval (e.g., to support its potential clinical use). Furthermore, instead of specifying an arbitrary number of sample slices and their location in a 3D volume, FPT simply inserts measured data into its appropriate 3D Fourier space location and ensures phase consistency between each measurement. By solving for the first term in the Born expansion, we intend this approach to serve as a general framework for eventually forming quantitatively accurate tomographic maps of complex biological samples with sub-micrometer resolutions.

3. METHOD OF FPT

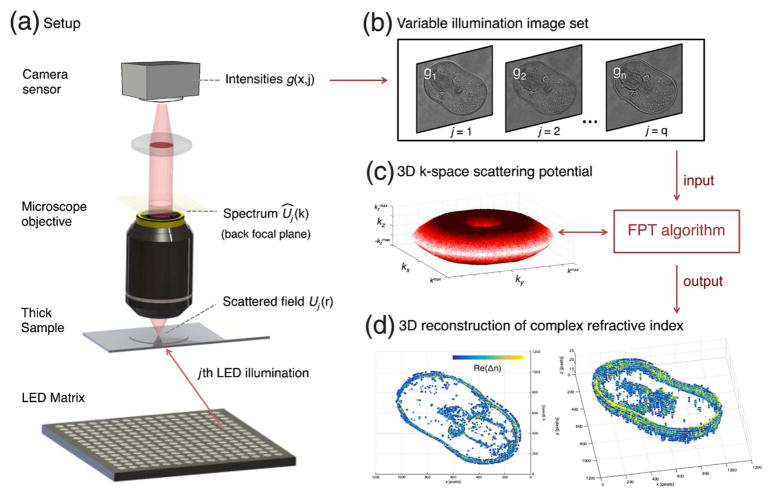

In this section, we develop a mathematical expression for our image measurements using the FPT framework and then summarize our reconstruction algorithm. We use the vector r = (rx, ry, rz) to define the 3D sample coordinates and the vector k = (kx, ky, kz) to define the corresponding k-space (wave vector) coordinates (see Fig. 1).

Fig. 1.

Setup for Fourier ptychographic tomography (FPT). (a) Labeled diagram of the FPT microscope, including optical functions of interest. (b) FPT captures multiple images under varied LED illumination. (c) A ptychography-inspired algorithm combines these images in a 3D k-space representation of the complex sample. (d) FPT outputs a 3D tomographic map of the complex index of refraction of the sample. Included images are experimental measurements from a starfish embryo (real index component, threshold applied; see Fig. 7).

A. Image Formation in FPT

It is helpful to begin our discussion by introducing a quantity termed the scattering potential, which contains the complex index of refraction of an arbitrarily thick volumetric sample,

$$V(\mathbf{r}) = \frac{k^2}{4\pi}\left[n^2(\mathbf{r}) - n_b^2\right] \tag{1}$$

Here, n(r) is the spatially varying and complex refractive index profile of the sample, nb is the index of refraction of the background (which we assume is constant), and k = 2π/λ is the wave-number in vacuum. We note that n(r) = nr(r) + 1i · nim(r), where nr is associated with the sample’s refractive index, nim is associated with its absorptivity, and we define 1i = √−1 for notational clarity. We typically neglect the dependence of n on λ since we illuminate with quasi-monochromatic light. This dependence cannot be neglected when imaging with polychromatic light. Finally, we use the term “thick” for samples that do not obey the thin sample approximation, which requires the sample thickness to be much less than a bound set by θmax, the magnitude of the maximum scattering angle [45].

Next, to understand what happens to light when it passes through this volumetric sample, we define the complex field that results from illuminating the thick sample, U(r), as a sum of two fields: U(r) = Ui(r) + Us(r). Here, Ui(r) is the field “incident” upon the sample (i.e., from one LED) and Us(r) is the resulting field that “scatters” off of the sample. We may insert this decomposition into the scalar wave equation for light propagating through an inhomogeneous medium and use Green’s theorem to determine the scattered field as [11]

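$$U_s(\mathbf{r}') = \int G(|\mathbf{r}' - \mathbf{r}|)\, V(\mathbf{r})\, U(\mathbf{r})\, d\mathbf{r} \tag{2}$$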

Here, G(|r′ − r|) is the Green’s function connecting light scattered from various sample locations, denoted by r, to an arbitrary location r′. V(r) is the scattering potential from Eq. (1). Since U(r) is unknown at all sample locations, it is challenging to solve Eq. (2). Instead, it is helpful to apply the first Born approximation, which replaces U(r) in the integrand with Ui(r). This approximation assumes that Ui(r) ≫ Us(r). It is the first term in the Born expansion that describes the scattering response of an arbitrary sample [11]. It assumes a weakly scattering medium. Specifically, the first Born approximation remains valid when the relative index shift δn = |n(r) − nb| and sample thickness t obey the relation, ktδn/2 ≪ 1 [43]. We expect FPT to remain quantitatively accurate with samples obeying this condition. By including higher-order terms, the above framework may in principle include samples with stronger scattering [46,47].
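As a quick numerical check of this validity condition, the following Python sketch evaluates ktδn/2 for a set of illustrative values. The function name `born_parameter` and the example numbers (a 12 μm feature with an index contrast of 0.01 at λ = 632 nm, chosen to resemble a bead sample considered in the experiments) are assumptions made here for illustration.

```python
import math

def born_parameter(wavelength_um, thickness_um, delta_n):
    """Return k * t * delta_n / 2, the quantity that should be << 1
    for the first Born approximation to hold (k = 2*pi/lambda in vacuum)."""
    k = 2.0 * math.pi / wavelength_um
    return k * thickness_um * delta_n / 2.0

# Illustrative values only: 12 um thick feature, index contrast 0.01, 632 nm light.
value = born_parameter(wavelength_um=0.632, thickness_um=12.0, delta_n=0.01)
print(f"k*t*dn/2 = {value:.2f}")
```

For these values the parameter evaluates to roughly 0.6, so such a sample sits near the edge of the weak-scattering regime rather than deep inside it.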

Our system sequentially illuminates the sample with an LED array, which contains q = qx × qy sources positioned a large distance l from the sample (in a uniform grid, with inter-LED spacing c; see Fig. 1). It is helpful to label each LED with a 2D counter variable (jx, jy), where −qx/2 ≤ jx ≤ qx/2 and −qy/2 ≤ jy ≤ qy/2, as well as a single counter variable j, where 1 ≤ j ≤ q. Assuming each LED acts as a spatially coherent and quasi-monochromatic source (central wavelength λ) placed at a large distance from the sample, the incident field takes the form of a plane wave traveling at a variable angle such that θjx = tan⁻¹(jx · c/l) and θjy = tan⁻¹(jy · c/l) with respect to the x- and y-axes, respectively. We may express the jth field incident upon the sample as

$$U_i^{(j)}(\mathbf{r}) = \exp\left(1i\,\mathbf{k}_j \cdot \mathbf{r}\right) \tag{3}$$

where kj is the wave vector of the jth LED plane wave,

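$$\mathbf{k}_j = (k_{jx}, k_{jy}, k_{jz}) = k\left(\sin\theta_{jx},\; \sin\theta_{jy},\; \sqrt{1 - \sin^2\theta_{jx} - \sin^2\theta_{jy}}\right) \tag{4}$$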

As θjx and θjy vary, kj will always assume values along a spherical shell in 3D (kx, ky, kz) space (i.e., the Ewald sphere), since the value of kjz is a deterministic function of kjx and kjy.
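The illumination geometry can be checked numerically with a short NumPy sketch. It assumes the plane-wave parameterization k_j = k(sin θjx, sin θjy, √(1 − sin²θjx − sin²θjy)) and an odd number of LEDs per side centered on the optical axis. The function name `led_wave_vectors` is hypothetical, and the example values (31 × 31 LEDs, 4 mm pitch, 135 mm array distance, λ = 632 nm) are taken from the experimental setup described in Section 4.

```python
import numpy as np

def led_wave_vectors(q_side, pitch_mm, dist_mm, wavelength_um):
    """Wave vectors k_j (rad/um) for a q_side x q_side LED grid (q_side odd),
    centered on the optical axis at distance dist_mm below the sample."""
    k = 2.0 * np.pi / wavelength_um
    half = q_side // 2
    j = np.arange(-half, half + 1)                    # LED counters j_x, j_y
    jx, jy = np.meshgrid(j, j, indexing="ij")
    sin_x = np.sin(np.arctan(jx * pitch_mm / dist_mm))
    sin_y = np.sin(np.arctan(jy * pitch_mm / dist_mm))
    kz_term = 1.0 - sin_x**2 - sin_y**2
    if np.any(kz_term <= 0):
        raise ValueError("illumination angle too steep for a propagating wave")
    # k_jz is fixed by k_jx and k_jy, so every k_j has length k.
    return k * np.stack([sin_x, sin_y, np.sqrt(kz_term)], axis=-1)

k0 = 2.0 * np.pi / 0.632
kj = led_wave_vectors(q_side=31, pitch_mm=4.0, dist_mm=135.0, wavelength_um=0.632)
on_sphere = np.allclose(np.linalg.norm(kj, axis=-1), k0)   # Ewald sphere check
na_illum = kj[-1, 15, 0] / k0     # sin(theta) of the outermost LED along x
print(on_sphere, round(float(na_illum), 2))
```

The outermost on-axis LED gives an illumination NA of about 0.41 for this geometry, and every wave vector has length k, which is the Ewald sphere constraint in numerical form.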

After replacing U(r) in Eq. (2) with Ui(j)(r) from Eq. (3) and additionally approximating the Green’s function G as a far-field response, the following relationship emerges between the scattering potential V and the Fourier transform of the jth scattered field, Ûs(j)(k), in the far field [11]:

$$\hat{U}_s^{(j)}(\mathbf{k}) = \hat{V}(\mathbf{k} - \mathbf{k}_j) \tag{5}$$

We refer to V̂(k) as the k-space scattering potential, which is the three-dimensional Fourier transform of V(r), with k the scattered wave vector in the far field. For simplicity, we have left out a multiplicative pre-factor (−1iπ/kz) on the right-hand side of Eq. (5), and instead assume it is included within the function V̂ for the remainder of this presentation. The field scattered by the sample and viewed at a large distance, Ûs(j)(k), is given by the values along a specific manifold (or spherical “shell”) of the k-space scattering potential, here written as V̂(k − kj). We illustrate the geometric connection between V̂(k − kj) and Ûs(j)(k) for a 2D optical geometry in Fig. 2(b). The center of the jth shell is defined by the incident wave vector, kj. For a given shell center, each value of V̂(k − kj) lies on a spherical surface at a radial distance of |k| = k [see colored arcs in Fig. 2(b)]. As kj varies with the changing LED illumination, the shell center shifts along a second shell with the same radius [since kj is itself constrained to lie on an Ewald sphere; see gray circle in Fig. 2(b)].

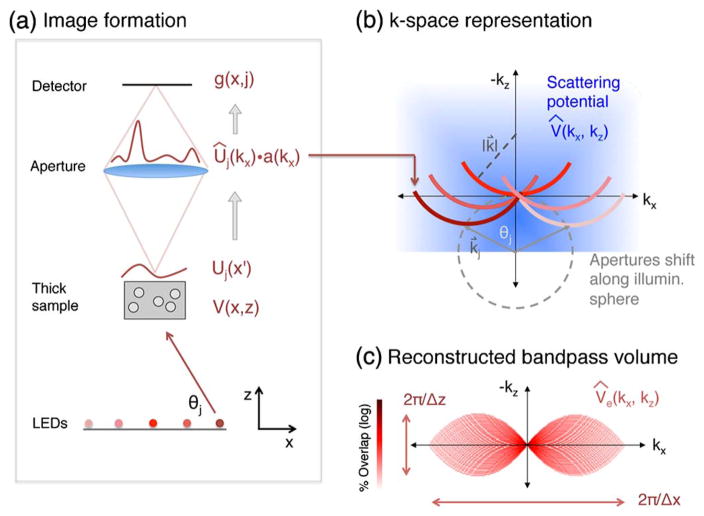

Fig. 2.

Mathematical summary of FPT. (a) The field from the jth LED scatters through the sample and exits its top surface as Uj(x′) (in 2D). This field forms Ûj(kx) at the microscope back focal plane, where it is bandlimited by the microscope aperture a(kx) before propagating to the image plane to form the jth sampled intensity image. (b) Under the first Born approximation, each detected image is the squared magnitude of the Fourier transform of one colored “shell” in (kx, kz) space. (c) By filling in this space with a ptychographic phase-retrieval algorithm, FPT reconstructs the complex values within the finite bandpass volume V̂e(kx, kz) (color indicates expected bowl overlap for this example). The Fourier transform of this reconstruction yields our complex refractive index map with resolutions Δx and Δz along x and z.

The goal of DT is to determine all the complex values within the volumetric function V̂ from a set of q scattered fields, Ûs(j)(k), which are often measured holographically [12,15]. Each 2D holographic measurement maps to the complex values of V̂ along one 2D shell. The values from multiple measurements [i.e., the multiple shells in Fig. 2(b)] can be combined to form a k-space scattering potential estimate, V̂e. Nearly all stationary optical setups will yield only an estimate, since it is challenging to measure data from the entire k-space scattering potential without rotating the sample. Figures 1(c) and 2(c) display typical measurable volumes, also termed a bandpass, from a limited-angle illumination and detection setup. Once sampled, an inverse 3D Fourier transform of the band-limited V̂e(k) yields the desired complex scattering potential estimate, Ve(r), which contains the quantitative index of refraction.

In FPT, we do not measure the scattered fields holographically. Instead, we use a standard microscope to detect image intensities and apply a ptychographic phase-retrieval algorithm to solve for the unknown complex potential. The scattered fields in Eq. (5) are defined at the microscope objective back focal plane (i.e., its Fourier plane), whose 2D coordinates k2D = (kx, ky) are Fourier conjugate to the microscope focal plane coordinates (x, y). If we neglect the effect of the constant background plane wave term (i.e., Ui in the sum U = Ui + Us), we may now write the jth shifted field at our microscope back focal plane as Û(j)(k2D) = V̂(k2D − kj2D, kz − kjz). These new coordinates highlight the 3D to 2D mapping from V̂ to Û, where again kz is a deterministic function of k2D, and the same applies between kjz and kj2D.

Each shifted, scattered field is then bandlimited by the microscope aperture function, a(k2D), before propagating to the image plane. The limited extent of a(k2D) (defined by the imaging system NA) sets the maximum extent of each shell along kx and ky. The jth intensity image acquired by the detector is given by the squared Fourier transform of the bandlimited field at the microscope back focal plane:

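$$g(x, y, j) = \left|\mathcal{F}\left[\hat{V}\left(\mathbf{k}_{2D} - \mathbf{k}_{j2D},\; k_z - k_{jz}\right) a(\mathbf{k}_{2D})\right]\right|^2 \tag{6}$$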

Here, F denotes a 2D Fourier transform with respect to k2D, and we neglect the effects of magnification (for simplicity) by assuming the image plane coordinates match the sample plane coordinates, (x, y). The goal of FPT is to determine the complex 3D function V̂ from the real, non-negative data matrix g(x, y, j). A final 3D Fourier transform of V̂ yields the desired scattering potential, and subsequently the refractive index distribution, of the thick sample.

B. FPT Reconstruction Algorithm

Equation (6) closely resembles the data matrix measured by FP [40], but now the intensities are sampled from shells within a 3D space (i.e., the curves in Fig. 2). We use an iterative reconstruction procedure, mirroring that from FP [40], to “fill in” the k-space scattering potential with data from each recorded intensity image. Ptychography and FP require at least approximately 50%–60% data redundancy (i.e., overlapping measurements in k-space) to ensure the successful convergence of the phase retrieval process [48]. With such a similar problem structure, FPT will also require overlap between shell regions in 3D k-space. With one extra dimension, overlap is less frequent and more images are needed for an accurate reconstruction. Both a smaller LED array pitch and a larger array-sample distance along z increase the amount of k-space overlap. An example cross section of FPT k-space overlap is shown in Fig. 2(c). As we demonstrate experimentally, several hundred images are sufficient for a complex reconstruction that offers an approximately 2× increase in resolution along (x, y) and contains approximately 30 unique axial slices. Additional overlap (i.e., more images across the same angular range) will increase robustness to noise.

It is important to select the correct limits and discretization of 3D k-space (i.e., the FOV and resolution of the complex sample reconstruction). The maximum resolvable wave vector along kx and ky is proportional to k(NAo + NAi), where NAo is the objective NA and NAi is the maximum NA of LED illumination. This lateral spatial resolution limit matches FP [49]. The maximum resolvable wave vector range along kz is also determined as a function of the objective and illumination NA as kzmax = k[2 − √(1 − NAo²) − √(1 − NAi²)]. As shown in Ref. [12], this relationship is easily derived from the geometry of the k-space bandpass volume in Fig. 2. We typically specify the maximum imaging range along the axial dimension, zmax, to approximately match twice the expected sample thickness. This then sets the discretization level along kz: Δkz = 2π/zmax. The total number of resolved slices along z is set by the ratio kzmax/Δkz.
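The sampling choices in this paragraph can be worked through numerically. The sketch below assumes an axial bandpass extent of k[2 − √(1 − NAo²) − √(1 − NAi²)], a form that reproduces the 3.7 μm axial value quoted for the experimental system, and uses that system's parameters (λ = 632 nm, NAo = 0.4, NAi = 0.41, zmax = 110 μm). The function name `fpt_sampling` and its return layout are hypothetical.

```python
import numpy as np

def fpt_sampling(wavelength_um, na_obj, na_illum, z_max_um):
    """Nyquist voxel sizes and slice count implied by the k-space bandpass."""
    k = 2.0 * np.pi / wavelength_um
    kx_max = k * (na_obj + na_illum)                 # lateral half-width of bandpass
    kz_range = k * (2.0 - np.sqrt(1.0 - na_obj**2) - np.sqrt(1.0 - na_illum**2))
    period_xy = 2.0 * np.pi / kx_max                 # smallest resolved lateral period
    voxel_xy = period_xy / 2.0                       # two samples per period
    voxel_z = 2.0 * np.pi / kz_range                 # kz_range is the full axial extent
    dkz = 2.0 * np.pi / z_max_um                     # axial k-space discretization
    n_slices = kz_range / dkz
    return period_xy, voxel_xy, voxel_z, n_slices

period_xy, voxel_xy, voxel_z, n_slices = fpt_sampling(0.632, 0.4, 0.41, 110.0)
print(f"lateral period {period_xy:.2f} um, lateral voxel {voxel_xy:.2f} um")
print(f"axial voxel {voxel_z:.1f} um, about {n_slices:.0f} slices")
```

The lateral bandpass spans ±k(NAo + NAi), so the Nyquist voxel is half the smallest resolved period, whereas the axial expression already describes the full extent along kz.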

We now summarize the FPT reconstruction algorithm:

1. Initialize a discrete estimate of the unknown k-space scattering potential, V̂e(k), using an appropriate 3D array size (see above). In our experiments, we form a refocused light field with the raw intensity image set and use its 3D Fourier transform for initialization [33]. However, we have noticed that simpler alternative initializers, such as the 3D Fourier transform of a single raw image padded along all three dimensions or a 3D array containing a constant value, also often lead to an accurate reconstruction.

2. For the jth image, compute the center coordinate, kj, and select values along its associated shell (radius k, maximum width 2k · NAo). This selection process samples a discrete 2D function, d̂j(kx, ky), from the 3D k-space volume. The selected voxels must partially overlap with voxels from adjacent shells. Currently, no interpolation is used to map voxels from the discrete shell to pixels within d̂j(kx, ky).

3. Fourier transform d̂j(kx, ky) to the image plane to create dj(x, y) and constrain its amplitudes to match the measured amplitudes from the jth image. For our experiments, we use the amplitude update form, d′j(x, y) = √g(x, y, j) · dj(x, y)/|dj(x, y)|. More advanced alternating projection-based updates are also available [50].

4. Inverse 2D Fourier transform the image plane update, d′j(x, y), back to 2D k-space to form d̂′j(kx, ky). Use the values of d̂′j(kx, ky) to replace the voxel values of V̂e(k) at locations where voxel values were extracted in step 2.

5. Repeat steps 2–4 for all j = 1 to q images. This completes one iteration of the FPT algorithm. Continue for a fixed number of iterations, or until satisfying some error metric. At the end, 3D inverse Fourier transform V̂e(k) to recover the complex scattering potential, Ve(r).
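The update loop can be sketched in a reduced 2D (x, z) geometry, where each "image" is a 1D intensity profile. This is a minimal illustration under stated assumptions, not the authors' implementation: LED wave vectors are snapped to the kx grid, shells are mapped to voxels by nearest-neighbor rounding, images are kept on the high-resolution grid (no downsampling), pupil recovery is omitted, and the inverse FFT is used to go from k-space to the image plane. All names and parameter values (`shell_indices`, `extract`, `fpt_iteration`, the grid sizes, the disk phantom) are hypothetical.

```python
import numpy as np

WAVELENGTH, NA_OBJ = 0.632, 0.4      # um, objective NA (illustrative)
NX, DX = 128, 0.25                   # lateral samples, lateral pixel (um)
NZ, ZMAX = 16, 40.0                  # axial slices, axial range (um)
K = 2.0 * np.pi / WAVELENGTH

def shell_indices(m_j):
    """Voxel indices (FFT order) of the shell for an LED with k_jx = m_j * dkx."""
    dkx, dkz = 2.0 * np.pi / (NX * DX), 2.0 * np.pi / ZMAX
    kx = 2.0 * np.pi * np.fft.fftfreq(NX, d=DX)
    pupil = np.abs(kx) <= K * NA_OBJ                 # aperture a(kx)
    kz = np.sqrt(K**2 - kx[pupil]**2)                # detected k_z on the Ewald sphere
    kjz = np.sqrt(K**2 - (m_j * dkx)**2)             # illumination k_jz
    ix = (np.flatnonzero(pupil) - m_j) % NX          # index of kx - k_jx
    iz = np.rint((kz - kjz) / dkz).astype(int) % NZ  # nearest voxel for kz - k_jz
    return pupil, iz, ix

def extract(v_hat, shell):
    """Step 2: sample the band-limited shell d_hat_j(kx) from the k-space volume."""
    pupil, iz, ix = shell
    d_hat = np.zeros(NX, dtype=complex)
    d_hat[pupil] = v_hat[iz, ix]
    return d_hat

def fpt_iteration(v_hat, images, shells):
    """Steps 2-4 applied once to every image (one full iteration)."""
    for g, shell in zip(images, shells):
        pupil, iz, ix = shell
        d = np.fft.ifft(extract(v_hat, shell))           # step 3: to image plane
        d_new = np.sqrt(g) * np.exp(1j * np.angle(d))    # keep phase, replace amplitude
        v_hat[iz, ix] = np.fft.fft(d_new)[pupil]         # step 4: write back to shell
    return v_hat

# Simulate noise-free data from a weak disk-shaped phantom (arbitrary scale).
z = (np.arange(NZ) - NZ // 2) * (ZMAX / NZ)
x = (np.arange(NX) - NX // 2) * DX
zz, xx = np.meshgrid(z, x, indexing="ij")
phantom = 0.01 * (zz**2 + xx**2 < 6.0**2)
v_true = np.fft.fft2(np.fft.ifftshift(phantom))
shells = [shell_indices(m) for m in range(-20, 21, 4)]   # overlapping shells
images = [np.abs(np.fft.ifft(extract(v_true, s)))**2 for s in shells]

# Sanity check: the true spectrum is left unchanged by one iteration.
v_fixed = fpt_iteration(v_true.copy(), images, shells)

# Step 1 with a constant initializer, then step 5: a fixed number of iterations.
v_est = np.full((NZ, NX), 1.0 + 0.0j)
for _ in range(5):
    v_est = fpt_iteration(v_est, images, shells)
recon = np.fft.fftshift(np.fft.ifft2(v_est))             # estimate of Ve(r)
```

Writing the phase as exp(1i · angle(d)) rather than d/|d| avoids a division by zero at dark pixels. The fixed-point check only confirms that the true spectrum is consistent with the update; it says nothing about convergence from an arbitrary starting point, which depends on shell overlap and noise.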

In practice, we also implement a pupil function recovery procedure [27] as we update each extracted shell from k-space, which helps remove possible microscope aberrations. As with other alternating projections-based ptychography solvers, the per iteration cost of the above FPT algorithm is O(n log n), using the big-O notation. Additional details regarding algorithm robustness and convergence are in Supplement 1.

4. RESULTS AND DISCUSSION

We experimentally verify our reconstruction technique using a standard microscope outfitted with an LED array. The microscope uses an infinity corrected objective lens (NAo = 0.4, Olympus MPLN, 20 ×) and a digital detector containing 4.54 μm pixels (Prosilica GX 1920, 1936 × 1456 pixel count). The LED array contains 31 × 31 surface-mounted elements (model SMD3528, center wavelength λ = 632 nm, 20 nm approximate bandwidth, 4 mm LED pitch, 150 μm active area diameter). We position the LED array 135 mm beneath the sample to create a maximum illumination NA of NAi = 0.41. This leads to an effective lateral NA of NAo + NAi = 0.81 and a lateral resolution gain along (x, y) of slightly over a factor of 2 (from a 1.6 μm minimum resolved spatial period in the raw images to a 0.78 μm minimum resolved spatial period in the reconstruction). The associated axial Nyquist resolution is computed at 3.7 μm. We reconstruct samples across a total depth range of approximately zmax = 110 μm, which is approximately 20 times larger than the stated objective lens DOF of 5.8 μm.

For most of the reconstructions presented below, we capture and process q = 675 images from the same fixed pattern of LEDs. Some LEDs from within the 31 × 31 array produce images containing a shadow of the microscope back focal plane. We do not use these LEDs so as to avoid certain reconstruction artifacts. We typically use the following parameters for FPT reconstruction: each raw image is cropped to 1000 × 1000 pixels, the reconstruction voxel size is 0.39 μm × 0.39 μm × 3.7 μm for Nyquist–Shannon rate sampling, the reconstruction array contains approximately 2100 × 2100 × 30 voxels (110 μm total depth), and the algorithm runs for 5 iterations. The first Born approximation should remain valid across this total imaging depth. The primarily transparent and somewhat sparse samples that we test next justify the relatively large 110 μm depth that we typically use.

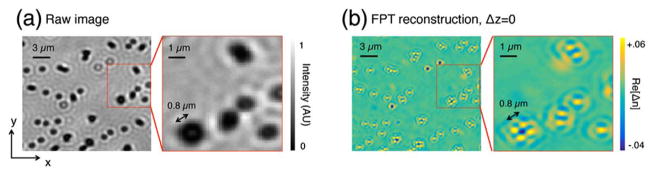

A. Quantitative Verification

First, we verify the ability of FPT to improve the lateral image resolution. The sample consists of 800 nm diameter microspheres (index of refraction ns = 1.59) immersed in oil (index of refraction no = 1.515). We highlight a small group of these microspheres in Fig. 3. The single raw image in Fig. 3(a) (generated from the center LED) cannot resolve the individual spheres gathered in small clusters. Based upon the coherent Sparrow limit for resolving two points (0.68λ/NAo), this raw image cannot resolve points that are closer than 1.1 μm. After FPT reconstruction, we obtain the complex index of refraction in Fig. 3(b), where we show the real component of the recovered index. The FPT reconstruction along the Δz = 0 slice clearly resolves the spheres within each cluster. This 800 nm distance is close to the expected Sparrow limit for the FPT reconstruction: 0.68λ/(NAo + NAi) = 540 nm. The ringing features around each sphere indicate a jinc-like point-spread function, as expected theoretically from the circular shape of the finite FPT bandpass along kx, ky [in Fig. 1(c)] for all reconstructions. The resulting constructive interference forms the undesired dip feature at the center of each cluster.

Fig. 3.

Improved lateral resolution with FPT. (a) Single raw image of 0.8 μm microspheres. Beads within each cluster are not resolved. (b) Refractive index (real) from Δz = 0 slice (1 of 30) of the FPT reconstruction, resolving each microsphere.

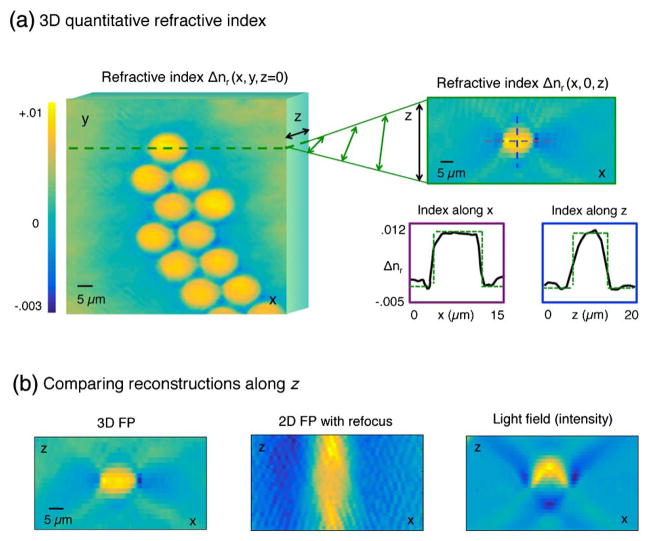

Second, we check the quantitative accuracy of FPT by imaging microspheres that extend across more than just a few reconstruction voxels. Figure 4 displays a reconstruction of 12 μm diameter microspheres (index of refraction ns = 1.59) immersed in oil (index of refraction no = 1.58). We use the same data capture and post-processing steps as in Fig. 3. Here, we display a cropped section (200 × 200 × 15 voxels) of the full 3D reconstruction, which required 221 seconds of computation time on a standard laptop. We again display the real (non-absorptive) component of the recovered index across both a lateral slice (along the Δz = 0 plane) and a vertical slice (along the Δy = 25 μm plane). We also include detailed 1D traces along the center of the vertical slice.

Fig. 4.

FPT quantitatively measures refractive index in 3D. (a) Tomographic reconstruction of 12 μm microspheres in oil with lateral (Δz = 0) slice on left, axial (Δy = 25 μm) slice on right, and one-dimensional plots of index shift along both x and z. (b) Digitally propagated FP reconstruction (middle) and refocused light field (right) created from the same data. FPT (left) best matches the expected spherical bead profile.

Three observations here are noteworthy. First, the measured index shift approximately matches the expected shift of Δn = ns − no = 0.01 across the entire bead, thus demonstrating quantitatively accurate performance across this limited volume. Currently, we only expect quantitative recovery for samples that meet the first Born approximation condition (ktδn/2 ≪ 1). This 12 μm microsphere sample approximately satisfies the condition (ktδn/2 = 0.59). Second, for any given one-dimensional trace through a microsphere center, we would ideally expect a perfect rect function (between Δn = 0 and Δn = 0.01). This is unlike 2D FP, which reconstructs the phase delay through each sphere and forms a parabolically shaped phase measurement (due to the varying thickness of each sphere along the optical axis). While FPT resolves an approximate step function through the center of the sphere along the lateral (x) dimension, it does not along the axial (z) direction. This is caused by the limited volume of 3D k-space that FPT measures (i.e., the limited bandpass or “missing cone” of information surrounding the kz axis). While our stationary sample/detector setup cannot avoid this missing cone, various methods are available to computationally fill it in [51].

Finally, we compare FPT with two alternative techniques for 3D imaging in Fig. 4(b). First, we use the same dataset to perform 2D FP and then holographically refocus its reconstructed optical field. We obtain this FP reconstruction using the same number of images (q = 675) and follow the procedure in Ref. [40] after focusing the objective lens at the axial center of the 12 μm microspheres. The “out-of-focus noise” above and below the plane of the microsphere, created by digital propagation of the complex field via the angular spectrum method, noticeably hides its spherical shape. Second, we interpret the same raw image set as a light field and perform light-field refocusing [5]. While the refocused light field approximately resolves the outline of the microsphere along z, it does not offer a quantitative picture of the sample interior, nor a measure of its complex index of refraction. The areas above the microsphere are very bright due to its lensing effect (i.e., the light field displays the optical intensity at each plane and thus displays high energy where the microsphere focuses light).
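For reference, digital refocusing of a complex 2D field by the angular spectrum method can be sketched as follows. This is a generic textbook form, not the code used for the comparison above: the function name `angular_spectrum_propagate`, the square pixel assumption, and the choice to discard evanescent components are all assumptions made here.

```python
import numpy as np

def angular_spectrum_propagate(field, dz_um, wavelength_um, pixel_um):
    """Propagate a complex 2D field by dz_um in a uniform medium.
    Each plane-wave component picks up the phase exp(1i * kz * dz);
    evanescent components (kx^2 + ky^2 >= k^2) are set to zero."""
    k = 2.0 * np.pi / wavelength_um
    ny, nx = field.shape
    kx = 2.0 * np.pi * np.fft.fftfreq(nx, d=pixel_um)
    ky = 2.0 * np.pi * np.fft.fftfreq(ny, d=pixel_um)
    kxx, kyy = np.meshgrid(kx, ky)                   # shape (ny, nx)
    kz_sq = k**2 - kxx**2 - kyy**2
    propagating = kz_sq > 0
    kz = np.sqrt(np.where(propagating, kz_sq, 0.0))
    transfer = np.where(propagating, np.exp(1j * kz * dz_um), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

# Round trip on a random field; 0.5 um pixels keep every component propagating.
rng = np.random.default_rng(0)
field0 = rng.normal(size=(32, 32)) + 1j * rng.normal(size=(32, 32))
field_z = angular_spectrum_propagate(field0, 5.0, 0.632, 0.5)
field_back = angular_spectrum_propagate(field_z, -5.0, 0.632, 0.5)
print(np.allclose(field_back, field0))
```

Because this operation only rephases the spectrum of a single 2D field, light from out-of-focus structure is redistributed rather than removed, which is consistent with the out-of-focus noise described above.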

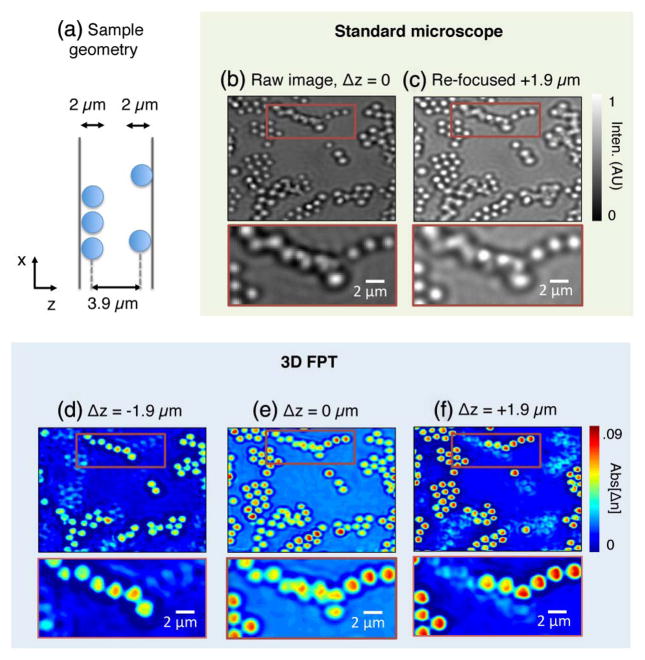

We verify the axial resolution of FPT in Fig. 5 using a sample containing two closely separated layers of 2 μm microspheres (ns = 1.59) distributed across the surface of a glass slide with oil in between (no = 1.515). The axial separation between the two microsphere layers, measured from the center of each sphere along z, is 3.9 μm [i.e., the separation between the microscope slide surfaces is 5.9 μm; see Fig. 5(a)]. This almost matches the expected axial resolution limit of 3.7 μm for the FPT microscope.

Fig. 5.

Testing the axial resolution of FPT. (a) The sample contains two layers of microspheres separated by a thin layer of oil. Raw images (b) focused at the center of the two layers and (c) on the top layer do not clearly resolve overlapping microspheres. (d)–(f) Slices of the FPT tomographic reconstruction, showing |Δn|, clearly resolve each sphere within the two individual sphere layers.

Conventional microscope images of the sample, using the center LED for illumination, are in Figs. 5(b) and 5(c). Here, we focus on the center of the two layers (Δz = 0) as well as the top microsphere layer (Δz = 1.9 μm) in an attempt to distinguish the two separate layers. At the top of each image (where microspheres in the two layers overlap), it is especially hard to resolve each sphere or determine which sphere is in a particular layer. These challenges are due in part to the limited amount of information contained within the optical intensity at each plane, as opposed to the sample’s complex refractive index.

Next, we return the focus to the Δz = 0 plane and implement FPT. We display three slices of our 3D scattering potential reconstruction in Figs. 5(d)–5(f). Here, we show the absolute values of the potential near the plane of the top layer, at the center, and near the plane of the bottom layer. The originally indistinguishable spheres within the top and bottom layers are now clearly resolved in each z-plane. Due to the system’s limited axial resolution, the reconstruction at the middle plane (Δz = 0) still shows the presence of spheres from both layers. Comparing Figs. 5(b) and 5(c) with Figs. 5(e) and 5(f), it is clear that the axial resolution of FPT is sharper than manual refocusing. Not only is each sphere layer distinguishable (as predicted theoretically), but we now also have quantitative information about the sample’s complex refractive index.

B. Biological Experiments

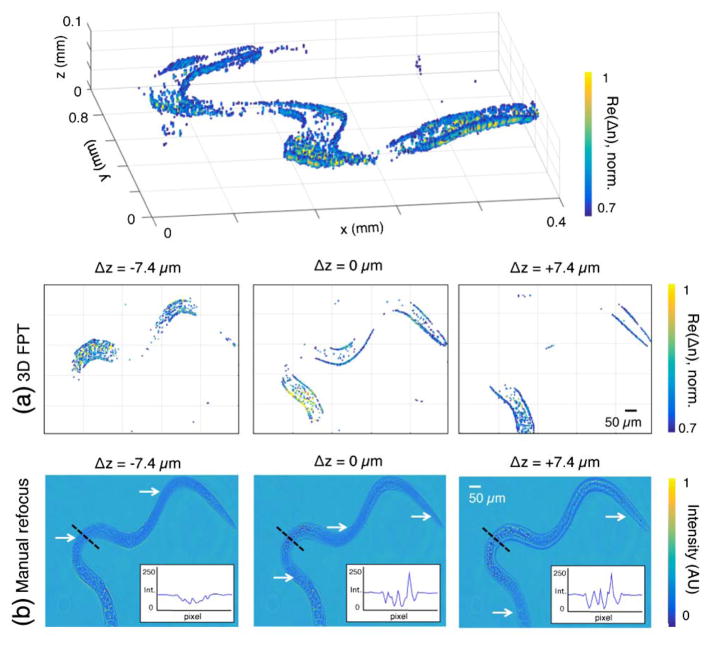

For our first biological demonstration, we reconstruct a Trichinella spiralis parasite in 3D (see Fig. 6 and Visualization 1 for the complete tomogram). Since the worm extended along a larger distance than the width of our detector, we performed FPT twice and shifted the FOV between acquisitions to capture the left and right sides of the worm. We then merged the two tomographic reconstructions with a simple averaging operation (matching that from FP [40], 10% overlap). The total captured volume here is 0.8 mm × 0.4 mm × 110 μm. If our setup included a digital detector that occupied the entire microscope FOV, the fixed imaging volume would be 1.1 mm × 1.1 mm × 110 μm, and no movement would be needed for this example.
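The merging step can be sketched as follows. This is a minimal illustration of averaging two volumes over a shared strip, assuming a (z, y, x) array layout, a shift purely along x, and an overlap already expressed in voxels; the function name and the toy volumes are hypothetical and do not reproduce the actual stitching code.

```python
import numpy as np

def merge_with_overlap(left, right, overlap_px):
    """Stitch two volumes along x (last axis), averaging the shared columns."""
    if left.shape[:-1] != right.shape[:-1]:
        raise ValueError("volumes must match on all axes except x")
    nx_left, nx_right = left.shape[-1], right.shape[-1]
    if not 0 <= overlap_px <= min(nx_left, nx_right):
        raise ValueError("overlap_px must fit inside both volumes")
    total = nx_left + nx_right - overlap_px
    merged = np.zeros(left.shape[:-1] + (total,), dtype=np.result_type(left, right))
    weight = np.zeros(total)  # number of volumes contributing to each x column
    merged[..., :nx_left] += left
    weight[:nx_left] += 1
    merged[..., total - nx_right:] += right
    weight[total - nx_right:] += 1
    return merged / weight

# Toy volumes with a 10% overlap along x (1 of 10 columns).
left = np.ones((2, 3, 10))
right = 3.0 * np.ones((2, 3, 10))
merged = merge_with_overlap(left, right, overlap_px=1)
```

Columns covered by only one volume pass through unchanged, while the shared columns take the mean of the two reconstructions. The same function works for complex-valued Δn volumes.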

Fig. 6.

Tomographic reconstruction of a Trichinella spiralis parasite. (a) The worm’s curved trajectory resolved within various z planes. (b) Refocusing the same distance to each respective plane does not clearly distinguish each in-focus worm segment (marked by white arrows). Since the worm is primarily transparent, in-focus worm sections exhibit minimal intensity contrast, presenting significant challenges for segmentation (see intensity along each black dash in inset plots, where black dash location is in-focus in left image). FPT, on the other hand, exhibits maximum contrast at each worm voxel. See Visualization 1.

A thresholded 3D reconstruction of the parasite index is at the top of Fig. 6 (real component, threshold applied at Re[Δn] > 0.7 after |Δn| is normalized to 1, under-sampled for clarity). The maximum real index variation across the tomogram before normalization is approximately 0.06, which can be seen without thresholding in Visualization 1. Its 3D curved trajectory is especially clear in the three separate z-slices of the reconstructed tomogram in Fig. 6(a). The two downward bends in the parasite body are lower than the upward bend in the middle, as well as at its front and back ends. It is very challenging to resolve these depth-dependent sample features by simply refocusing a standard microscope. Figure 6(b) displays such an attempt, where the same three z planes are brought into focus manually. Since the sample is primarily transparent, in-focus areas in each standard image actually exhibit minimal contrast, as marked by arrows in Fig. 6(b). We plot the intensity through a fixed worm section (black dash) in each of the three insets. The intensity contrast drops by over a factor of 2 at in-focus locations, which will pose a significant challenge to any depth segmentation technique (e.g., focal stack deconvolution [4]). Since FPT effectively offers 3D phase contrast, points along the parasite within its reconstruction voxels instead show maximum contrast, which enables direct segmentation via thresholding, as shown in the plots in Fig. 6(a).
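The thresholding described above can be written in a few lines. The sketch below assumes the tomogram is available as a complex NumPy array of Δn values; the function name and the toy input are hypothetical.

```python
import numpy as np

def threshold_real_index(delta_n, level=0.7):
    """Scale so max |delta_n| is 1, then keep voxels with Re[delta_n] above level."""
    peak = np.max(np.abs(delta_n))
    if peak == 0:
        # An all-zero tomogram has nothing to segment.
        return np.zeros(delta_n.shape, dtype=bool)
    normalized = delta_n / peak
    return normalized.real > level

# Toy values spanning the roughly 0.06 index range mentioned in the text.
delta_n = np.array([0.06 + 0.0j, 0.03 + 0.01j, 0.05j, -0.06 + 0.0j])
mask = threshold_real_index(delta_n)
```

The resulting Boolean mask marks the voxels that would be rendered in a thresholded view. Voxels with a large imaginary part but a small real part are excluded, since only the real component is compared against the threshold.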

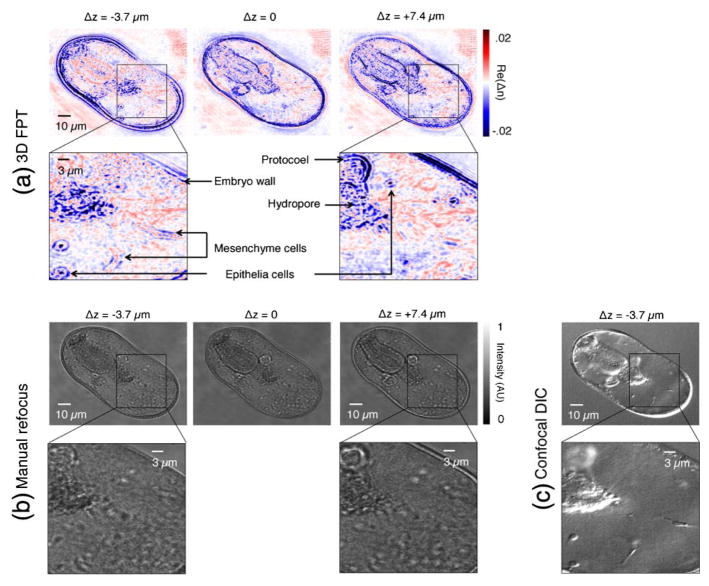

For our second 3D biological example, we tomographically reconstruct a starfish embryo at its larval stage [see Fig. 7(a) and Visualization 2]. Here, we again show three different closely spaced z-slices of the reconstructed scattering potential (Re[Δn], no thresholding applied). Each z-slice contains sample features that are not present in the adjacent z-slices. For example, the large oval structure in the upper left of the Δz = 0 plane, which is a developing stomach, nearly completely disappears in the Δz = −3.7 μm plane. Now at this z-slice, however, small structures, which we expect to be developing mesenchyme cells [52] and various epithelial cells [53], clearly appear in the lower right. We confirm the presence of these structures with a differential interference contrast (DIC) confocal microscope in Fig. 7(c) (Zeiss LSM 510, 0.8 NA, λ = 633 nm, 0.2 μm scan step). A DIC confocal scan is one of the few possible imaging options for this thick and primarily transparent sample, but suffers from a much smaller FOV (approximately 4% of the total effective FPT FOV). It also does not quantitatively measure the refractive index and requires mechanical scanning. Finally, we attempt to refocus through the embryo using a standard microscope (NA = 0.4) in Fig. 7(b). Both the particular plane of the developing stomach and even the presence of the mesenchyme cells are completely missing from the refocused images. This is due to the inability of the standard microscope to segment each particular plane of interest, the inability to accurately reconstruct transparent structures without a phase contrast mechanism, and an inferior lateral resolution with respect to FPT.

Fig. 7.

3D reconstruction of a starfish embryo at larval stage. (a) Three different axial planes of the FPT tomogram show significant feature variation (e.g., the developing stomach is almost completely missing from the Δz = −3.7 μm plane, and the expected developing mesenchyme cells are only visible in the Δz = −3.7 μm plane). (b) Such axial information, and even certain structures [e.g., mesenchyme cells and various epithelial cells, marked in (a)], are completely missing from standard microscope images after manual refocusing. (c) A high-resolution DIC confocal scan of the Δz = −3.7 μm plane confirms the presence of the structures of interest. See Visualization 2.

5. CONCLUSIONS

We have performed diffraction tomography using intensity measurements captured with a standard microscope and an LED illuminator. The current system offers a lateral resolution of approximately 400 nm at the Nyquist–Shannon sampling limit (550 nm at the Sparrow limit and 800 nm full period limit) and an axial resolution of 3.7 μm at the sampling limit. The maximum axial extent attempted thus far was 110 μm along z, and we demonstrated quantitative measurement of the complex index of refraction through several types of thick specimen with contiguous features.

To improve the experimental setup, an alternative LED array geometry that enables a higher angle of illumination will increase the resolution. Also, we set the number of captured images here to match the data redundancy required by ptychography [48]. However, we have observed that reconstructions are successful with far fewer images than otherwise expected. Along with using a multiplexed illumination strategy [28], this may help speed up the tomogram capture time. In addition, we did not explicitly account for the finite LED spectral bandwidth or attempt polychromatic capture, which can potentially provide additional information about volumetric samples [32,35]. Finally, we set our reconstruction range along the z-axis somewhat arbitrarily at 110 μm. We expect to be able to extend this axial range further in the future.

Subsequent work should also examine connections between FPT, multi-slice-based techniques for ptychography [33,34], and machine learning for 3D reconstruction [54]. While prior work already specifies sample conditions under which the first Born [43] and multi-slice [42] approximations remain accurate, it is not yet clear if this translates directly to reconstructions that require phase retrieval. By merging these approaches, it may be possible to increase the domain of sample validity beyond what is currently achieved by each technique independently.

Finally, FPT may also adopt alternative computational tools to help improve ptychographic DT under the first Born approximation. We used the well-known alternating projections phase retrieval update. Other solvers based upon convex optimization [55] or alternative gradient descent techniques [56,57] may perform better in the presence of noise. Alternative approximations besides first Born approximation (e.g., Rytov [15]) are also available to simplify the Born series. In addition, the resolution is currently impacted by the missing cone in 3D k-space, and various methods are available to fill this cone in by assuming the sample is positive only, sparse, or of a finite spatial support [51]. Finally, methods exist to solve for the full Born series by taking into account the effects of multiple scattering [46,47]. Connecting this type of multiple scattering solver to FPT may aid with the reconstruction of increasingly turbid biological samples.
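For readers unfamiliar with alternating projections, the core measurement-domain step can be sketched as a magnitude replacement: the current estimate of the complex field keeps its phase but takes its amplitude from the recorded intensity. The snippet below is a generic illustration of that single step, not the full FPT update; the function name and the small `eps` guard are assumptions.

```python
import numpy as np

def replace_magnitude(field_estimate, measured_intensity, eps=1e-12):
    """Keep the estimated phase but enforce the measured amplitude."""
    # Clip negative intensities (e.g., from background subtraction) before the root.
    amplitude = np.sqrt(np.maximum(measured_intensity, 0.0))
    # Unit-modulus phase factor; eps avoids division by zero at dark pixels.
    phase_factor = field_estimate / (np.abs(field_estimate) + eps)
    return amplitude * phase_factor

# Toy example: three pixels with known measured intensities.
estimate = np.array([1.0 + 1.0j, -2.0j, 3.0 + 0.0j])
intensity = np.array([4.0, 9.0, 1.0])
updated = replace_magnitude(estimate, intensity)
```

A complete solver would alternate this step with a second constraint in the Fourier or k-space domain. The convex and gradient-based solvers cited above replace this hard projection with an explicit cost function, which is one reason they may behave differently under noise.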

Acknowledgments

Funding. National Institutes of Health (NIH) (1R01AI096226-01); The Caltech Innovation Initiative (CI2) Program (13520135).

The authors would like to thank J. Brake, B. Judkewitz, and I. Papadopoulos for the helpful discussions and feedback.

Footnotes

See Supplement 1 for supporting content.

References

- 1. Ntziachristos V. Going deeper than microscopy: the optical imaging frontier in biology. Nat Methods. 2010;7:603–614. doi: 10.1038/nmeth.1483.

- 2. Chung K, Deisseroth K. CLARITY for mapping the nervous system. Nat Methods. 2013;10:508–513. doi: 10.1038/nmeth.2481.

- 3. Chen F, Tillberg PW, Boyden ES. Expansion microscopy. Science. 2015;347:543–548. doi: 10.1126/science.1260088.

- 4. Agard DA. Optical sectioning microscopy: cellular architecture in three dimensions. Annu Rev Biophys Bioeng. 1984;13:191–219. doi: 10.1146/annurev.bb.13.060184.001203.

- 5. Broxton M, Grosenick L, Yang S, Cohen N, Andalman A, Deisseroth K, Levoy M. Wave optics theory and 3D deconvolution for the light field microscope. Opt Express. 2013;21:25418–25439. doi: 10.1364/OE.21.025418.

- 6. Pavani SRP, Piestun R. Three dimensional tracking of fluorescent microparticles using a photon-limited double-helix response system. Opt Express. 2008;16:22048–22057. doi: 10.1364/oe.16.022048.

- 7. Dubois A, Vabre L, Boccara AC, Beaurepaire E. High-resolution full-field optical coherence tomography with a Linnik microscope. Appl Opt. 2002;41:805–812. doi: 10.1364/ao.41.000805.

- 8. Adie SG, Graf BW, Ahmad A, Carney PS, Boppart SA. Computational adaptive optics for broadband optical interferometric tomography of biological tissue. Proc Natl Acad Sci USA. 2012;109:7175–7180. doi: 10.1073/pnas.1121193109.

- 9. Matthews TE, Medina M, Maher JR, Levinson H, Brown WJ, Wax A. Deep tissue imaging using spectroscopic analysis of multiply scattered light. Optica. 2014;1:105–111.

- 10. Streibl N. Three-dimensional imaging by a microscope. J Opt Soc Am A. 1985;2:121–127.

- 11. Wolf E. Three-dimensional structure determination of semi-transparent objects from holographic data. Opt Commun. 1969;1:153–156.

- 12. Lauer V. New approach to optical diffraction tomography yielding a vector equation of diffraction tomography and a novel tomographic microscope. J Microsc. 2002;205:165–176. doi: 10.1046/j.0022-2720.2001.00980.x.

- 13. Debailleul M, Simon B, Georges V, Haeberle O, Lauer V. Holographic microscopy and diffractive microtomography of transparent samples. Meas Sci Technol. 2008;19:074009.

- 14. Cotte Y, Toy F, Jourdain P, Pavillon N, Boss D, Magistretti P, Marquet P, Depeursinge C. Marker-free phase nanoscopy. Nat Photonics. 2013;7:113–117.

- 15. Sung Y, Choi W, Fang-Yen C, Badizadegan K, Dasari RR, Feld MS. Optical diffraction tomography for high resolution live cell imaging. Opt Express. 2009;17:266–277. doi: 10.1364/oe.17.000266.

- 16. Kim K, Yaqoob Z, Lee K, Kang JW, Choi Y, Hosseini P, So TC, Park Y. Diffraction optical tomography using a quantitative phase imaging unit. Opt Lett. 2014;39:6935–6938. doi: 10.1364/OL.39.006935.

- 17. Devaney AJ. Structure determination from intensity measurements in scattering experiments. Phys Rev Lett. 1989;62:2385–2388. doi: 10.1103/PhysRevLett.62.2385.

- 18. Maleki MH, Devaney AJ, Schatzberg A. Tomographic reconstruction from optical scattered intensities. J Opt Soc Am A. 1992;9:1356–1363.

- 19. Maleki MH, Devaney AJ. Phase-retrieval and intensity-only reconstruction algorithms for optical diffraction tomography. J Opt Soc Am A. 1993;10:1086–1092.

- 20. Wedberg TC, Stamnes JJ. Comparison of phase retrieval methods for optical diffraction tomography. Pure Appl Opt. 1995;4:39–54.

- 21. Takenaka T, Wall DJN, Harada H, Tanaka M. Reconstruction algorithm of the refractive index of a cylindrical object from the intensity measurements of the total field. Microwave Opt Technol Lett. 1997;14:182–188.

- 22. Gbur G, Wolf E. Diffraction tomography without phase information. Opt Lett. 2002;27:1890–1892. doi: 10.1364/ol.27.001890.

- 23. Gureyev TE, Davis TJ, Pogany A, Mayo SC, Wilkins SW. Optical phase retrieval by use of first Born and Rytov-type approximations. Appl Opt. 2004;43:2418–2430. doi: 10.1364/ao.43.002418.

- 24. Anastasio MA, Shi D, Huang Y, Gbur G. Image reconstruction in spherical-wave intensity diffraction tomography. J Opt Soc Am A. 2005;22:2651–2661. doi: 10.1364/josaa.22.002651.

- 25. Huang Y, Anastasio MA. Statistically principled use of in-line measurements in intensity diffraction tomography. J Opt Soc Am A. 2007;24:626–642. doi: 10.1364/josaa.24.000626.

- 26. D’Urso M, Belkebir K, Crocco L, Isernia T, Litman A. Phaseless imaging with experimental data: facts and challenges. J Opt Soc Am A. 2008;25:271–281. doi: 10.1364/josaa.25.000271.

- 27. Ou X, Zheng G, Yang C. Embedded pupil function recovery for Fourier ptychographic microscopy. Opt Express. 2014;22:4960–4972. doi: 10.1364/OE.22.004960.

- 28. Tian L, Li X, Ramchandran K, Waller L. Multiplexed coded illumination for Fourier Ptychography with an LED array microscope. Biomed Opt Express. 2014;5:2376–2389. doi: 10.1364/BOE.5.002376.

- 29. Isikman SO, Bishara W, Mavandadi S, Yu FW, Feng S, Lau R, Ozcan A. Lens-free optical tomographic microscope with a large imaging volume on a chip. Proc Natl Acad Sci USA. 2011;108:7296–7301. doi: 10.1073/pnas.1015638108.

- 30. Gureyev TE, Paganin DM, Myers GR, Nesterets YI, Wilkins SW. Phase-and-amplitude computer tomography. Appl Phys Lett. 2006;89:034102.

- 31. Bronnikov AV. Theory of quantitative phase-contrast computed tomography. J Opt Soc Am A. 2002;19:472–480. doi: 10.1364/josaa.19.000472.

- 32. Kim T, Zhou R, Mir M, Babacan SD, Carney PS, Goddard LL, Popescu G. White light diffraction tomography of unlabeled live cells. Nat Photonics. 2014;8:256–263.

- 33. Tian L, Waller L. 3D intensity and phase imaging from light field measurements in an LED array microscope. Optica. 2015;2:104–111.

- 34. Li P, Batey DJ, Edo TB, Rodenburg JM. Separation of three-dimensional scattering effects in tilt-series Fourier ptychography. Ultramicroscopy. 2015;158:1–7. doi: 10.1016/j.ultramic.2015.06.010.

- 35. Gerke TD, Piestun R. Aperiodic volume optics. Nat Photonics. 2010;10:1–6.

- 36. Dierolf M, Menzel A, Thibault P, Schneider P, Kewish CM, Wepf R, Bunk O, Pfeiffer F. Ptychographic X-ray computed tomography at the nanoscale. Nature. 2010;467:436–439. doi: 10.1038/nature09419.

- 37. Maiden AM, Humphry MJ, Rodenburg JM. Ptychographic transmission microscopy in three dimensions using a multi-slice approach. J Opt Soc Am A. 2012;29:1606–1614. doi: 10.1364/JOSAA.29.001606.

- 38. Godden TM, Suman R, Humphry MJ, Rodenburg JM, Maiden AM. Ptychographic microscope for three-dimensional imaging. Opt Express. 2014;22:12513–12523. doi: 10.1364/OE.22.012513.

- 39. Putkunz CT, Pfeifer MA, Peele AG, Williams GJ, Quiney HM, Abbey B, Nugent KA, McNulty I. Fresnel coherent diffraction tomography. Opt Express. 2010;18:11746–11753. doi: 10.1364/OE.18.011746.

- 40. Zheng G, Horstmeyer R, Yang C. Wide-field, high-resolution Fourier ptychographic microscopy. Nat Photonics. 2013;7:739–745. doi: 10.1038/nphoton.2013.187.

- 41. Roey JV, Donk JV, Lagasse PE. Beam-propagation method: analysis and assessment. J Opt Soc Am. 1981;71:803–810.

- 42. Paganin DM. Coherent X-Ray Optics. Oxford University; 2006.

- 43. Chen B, Stamnes JJ. Validity of diffraction tomography based on the first Born and the first Rytov approximations. Appl Opt. 1998;37:2996–3006. doi: 10.1364/ao.37.002996.

- 44. Jaganathan K, Eldar YC, Hassibi B. Phase retrieval: an overview of recent developments. 2015. arXiv:1510.07713v1.

- 45. Nugent K. Coherent methods in the X-ray sciences. Adv Phys. 2010;59:1–99.

- 46. Wang YM, Chew WC. An iterative solution of the two-dimensional electromagnetic inverse scattering problem. Int J Imaging Syst Technol. 1989;1:100–108.

- 47. Tsihrintzis GA, Devaney AJ. Higher-order diffraction tomography: reconstruction algorithms and computer simulation. IEEE Trans Image Process. 2000;9:1560–1572. doi: 10.1109/83.862637.

- 48. Bunk O, Dierolf M, Kynde S, Johnson I, Marti O, Pfeiffer F. Influence of the overlap parameter on the convergence of the ptychographical iterative engine. Ultramicroscopy. 2008;108:481–487. doi: 10.1016/j.ultramic.2007.08.003.

- 49. Ou X, Horstmeyer R, Zheng G, Yang C. High numerical aperture Fourier ptychography: principle, implementation and characterization. Opt Express. 2015;23:3472–3491. doi: 10.1364/OE.23.003472.

- 50. Marchesini S. A unified evaluation of iterative projection algorithms for phase retrieval. Rev Sci Instrum. 2007;78:011301. doi: 10.1063/1.2403783.

- 51. Tam KC, Perez-Mendez V. Tomographical imaging with limited-angle input. J Opt Soc Am. 1981;71:582–592.

- 52. Hamanaka G, Matsumoto M, Imoto M, Kaneko H. Mesenchyme cells can function to induce epithelial cell proliferation in starfish embryos. Dev Dyn. 2010;239:818–827. doi: 10.1002/dvdy.22211.

- 53. Agassiz A. Embryology of the Starfish, in Vol. 5 of L. Agassiz Contributions to the Natural History of the United States. 1864. Cambridge: p. 76.

- 54. Kamilov US, Papadopoulos IN, Shoreh MH, Goy A, Vonesch C, Unser M, Psaltis D. Learning approach to optical tomography. Optica. 2015;2:517–522.

- 55. Horstmeyer R, Chen RC, Ou X, Ames B, Tropp JA, Yang C. Solving ptychography with a convex relaxation. New J Phys. 2015;17:053044. doi: 10.1088/1367-2630/17/5/053044.

- 56. Bian L, Suo J, Zheng G, Guo K, Chen F, Dai Q. Fourier ptychographic reconstruction using Wirtinger flow optimization. Opt Express. 2015;23:4856–4866. doi: 10.1364/OE.23.004856.

- 57. Yeh LH, Dong J, Zhong J, Tian L, Chen M, Tang G, Soltanolkotabi M, Waller L. Experimental robustness of Fourier ptychography phase retrieval algorithms. Opt Express. 2015;23:33214–33240. doi: 10.1364/OE.23.033214.
