Abstract

Purpose

Even older adults with relatively mild hearing loss report hearing handicap, suggesting that hearing handicap is not completely explained by reduced speech audibility.

Method

We examined the extent to which self-assessed ratings of hearing handicap using the Hearing Handicap Inventory for the Elderly (HHIE; Ventry & Weinstein, 1982) were significantly associated with measures of speech recognition in noise that controlled for differences in speech audibility.

Results

One hundred sixty-two middle-aged and older adults had HHIE total scores that were significantly associated with audibility-adjusted measures of speech recognition for low-context but not high-context sentences. These findings were driven by HHIE items involving negative feelings related to communication difficulties that also captured variance in subjective ratings of effort and frustration that predicted speech recognition. The average pure-tone threshold accounted for some of the variance in the association between the HHIE and audibility-adjusted speech recognition, suggesting an effect of central and peripheral auditory system decline related to elevated thresholds.

Conclusion

The accumulation of difficult listening experiences appears to produce a self-assessment of hearing handicap resulting from (a) reduced audibility of stimuli, (b) declines in the central and peripheral auditory system function, and (c) additional individual variation in central nervous system function.

Age-related hearing loss is a chronic condition of aging that can diminish quality of life for the approximately two thirds of older adults who have hearing loss (Chia et al., 2007; Lin, Thorpe, Gordon-Salant, & Ferrucci, 2011). Hearing impairment and greater self-assessment of hearing handicap predict social isolation (Cook, Brown-Wilson, & Forte, 2006; Weinstein & Ventry, 1982), which appears to contribute to depression (Dawes et al., 2015). These quality of life concerns are related to treatment-seeking behavior (Knutson, Johnson, & Murray, 2006; Ng & Loke, 2015). It is unfortunate that many older adults experience limited satisfaction with available interventions, including hearing aids, particularly for challenging listening conditions (Bertoli, Bodmer, & Probst, 2010; Humes, Wilson, Barlow, Garner, & Amos, 2002). These observations, together with evidence of neuroanatomical associations with hearing and word recognition in older adults (Eckert, Cute, Vaden, Kuchinsky, & Dubno, 2012; Harris, Dubno, Keren, Ahlstrom, & Eckert, 2009), suggest that the reduced audibility of speech associated with elevated thresholds is not the only factor contributing to self-assessed hearing handicap.

Hearing handicap is often measured with the Hearing Handicap Inventory for the Elderly/Adults (HHIE/HHIA; Ventry & Weinstein, 1982), which provides emotional, social, and total handicap scores. Both the emotional and social subscales of the HHIE/HHIA exhibit significant but modest associations with pure-tone thresholds (Gates, Murphy, Rees, & Fraher, 2003; Lichtenstein, Bess, & Logan, 1988; Newman, Weinstein, Jacobson, & Hug, 1990; Sindhusake et al., 2001; Weinstein & Ventry, 1983a) and with word recognition (Newman et al., 1990; Saunders & Forsline, 2006; Weinstein & Ventry, 1983b). The modest association between HHIE and speech recognition is also observed when the target speech is presented with competing speech or in a background noise (Gates, Feeney, & Mills, 2008; Golding, Mitchell, & Cupples, 2005). For example, pure-tone thresholds and scores for the Speech Perception In Noise Test (SPIN; Kalikow, Stevens, & Elliott, 1977) accounted for at most 40% of the variance in the HHIE total score, whereas word recognition in quiet accounted for 22% of the variance in HHIE scores (Matthews, Lee, Mills, & Schum, 1990). It is important to note that the association between self-assessed hearing handicap and word recognition in noise has been observed even after controlling for variance in pure-tone thresholds (Wiley, Cruickshanks, Nondahl, & Tweed, 2000). These findings provide further evidence that self-assessed hearing handicap is not simply due to reduced speech audibility related to hearing loss.

Older adults consistently exhibit speech recognition that is poorer than predicted from their pure-tone thresholds (Dubno, Dirks, & Schaefer, 1989). This effect can be shown with a variety of speech stimuli when the articulation index (AI) is used to predict speech recognition on the basis of the importance-weighted audibility of the speech (Dubno et al., 2008; Gates, Feeney, & Higdon, 2003), including when target speech stimuli are presented with a background of noise (Schum, Matthews, & Lee, 1991). One aim of the current study was to determine the strength of association between audibility-adjusted speech recognition in noise and self-assessed hearing handicap.

AI values are often used for adjusting speech recognition scores to account for individual differences in the audibility of the stimuli. Although pure-tone thresholds are used to compute the AI-based predictions of speech recognition, this approach is different from controlling for pure-tone thresholds because the AI focuses on the audibility of stimuli within the average speech spectrum. The average pure-tone threshold would therefore more strongly represent individual differences across the peripheral and central auditory systems that occur with elevated thresholds in relatively lower and higher frequencies. We predicted that middle-aged and older adults with poorer-than-predicted speech recognition in noise would be more likely to report significant hearing handicap than individuals with better performance. We also predicted that this effect would be more pronounced when using AI-adjusted speech recognition scores compared with when pure-tone thresholds were covaried in a regression model.

Hearing handicap measures typically reflect the self-assessment of handicap across many prior daily life experiences rather than the self-assessment of handicap during or immediately after a speech recognition task. Extracting meaning from sparse perceptual representations during a speech recognition task can be effortful (Kramer, Kapteyn, Festen, & Kuik, 1997), particularly when there are competing stimuli or competing behavioral demands (Fraser, Gagné, Alepins, & Dubois, 2010; Kuchinsky et al., 2013; Mackersie & Cones, 2011; Tun, McCoy, & Wingfield, 2009). Because we planned to examine the association between HHIE ratings and audibility-adjusted speech recognition in noise, we also examined self-assessed ratings of workload immediately after the task. These workload ratings provided an opportunity to determine the extent to which variance in workload characterizes the same or unique variance in speech recognition in noise as the HHIE.

The National Aeronautics and Space Administration (NASA) Task Load Index (Hart & Staveland, 1988) has been used in recent hearing studies to assess listening effort and frustration (Bologna, Chatterjee, & Dubno, 2013; Mackersie & Cones, 2011; Zekveld, Kramer, Kessens, Vlaming, & Houtgast, 2009). Older adults with hearing impairment are more likely to report elevated effort during consonant recognition compared with older adults with normal hearing (Ahlstrom, Horwitz, & Dubno, 2014). In addition, effort ratings following a gap detection task were highest for older adults with the poorest gap detection thresholds (Harris, Eckert, Ahlstrom, & Dubno, 2010). These types of findings have contributed to the growing interest in understanding the listening effort that older listeners with hearing loss experience (e.g., McGarrigle et al., 2014). We predicted that these task-specific ratings of effort and frustration would explain significant variance in speech recognition.

Method

Participants

The participants examined in this study were selected from the Medical University of South Carolina human subject database, which includes audiometric measures, demographic information, medical and hearing health histories, and responses to self-report questionnaires (e.g., Dubno, Eckert, Lee, Matthews, & Schmiedt, 2013). Participants were included in the current study if the data from the Revised SPIN (R-SPIN; Bilger, 1984), NASA Task Load Index obtained after the SPIN test, and HHIE were available. There were 162 middle-aged to older adults with these data (56.2 to 88.7 years, mean 68.9 years; 64% women, 36% men; 87% with greater than a high school education [five cases with missing data]; and 50% with a self-reported history of high blood pressure [one case with missing data]). Participants were typically scheduled for multiple visits because of the time required to perform audiometric assessments, a blood draw for genetic analysis, a brief screening of mental status, collection of medical and hearing health histories, and completion of self-report questionnaires. The HHIE/HHIA was always obtained on the first visit prior to any audiometric testing or discussions about hearing loss. Although pure-tone thresholds were obtained on the same day as the HHIE/HHIA, thresholds obtained on the day of SPIN testing were used in these analyses. For this reason, the dates for audiometric and HHIE data collection varied for each participant. There was an average of 4.06 months (SD = 6.18) between the audiometric and HHIE measures. No significant associations were observed between the duration between visits and the measures described below.

Audiometric Measures

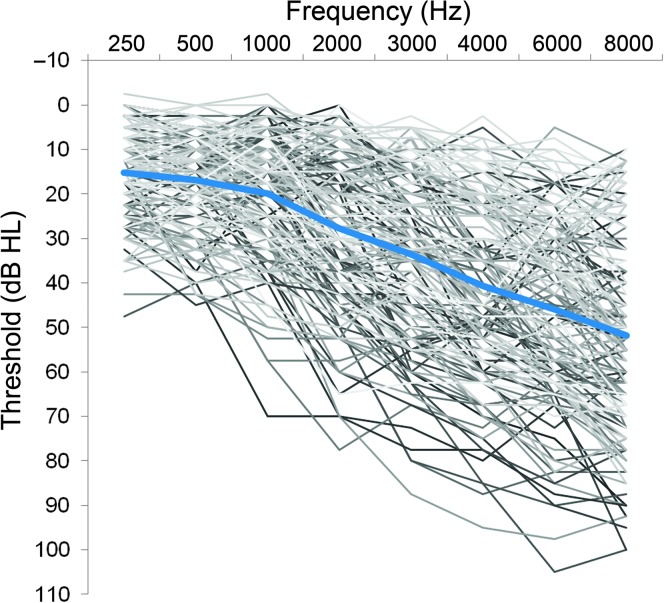

Audiometric assessment was performed using Madsen OB822 or Orbiter 922 clinical audiometers (GN Otometrics A/S, Taastrup, Denmark). These audiometers were calibrated to appropriate American National Standards Institute (2010) specifications and equipped with TDH-39 headphones mounted in MX-41/AR cushions (Telephonics, Farmingdale, NY). Pure-tone thresholds were measured using the guidelines recommended by the American Speech-Language-Hearing Association (2005). Figure 1 shows (a) the broad range of ear-averaged pure-tone thresholds across participants and (b) a generally mild-to-moderately severe gradually sloping hearing loss commonly observed in middle-aged to older adults. A pure-tone average (PTA) was obtained from both ears using pure-tone thresholds at 250, 500, 1000, 2000, 3000, 4000, 6000, and 8000 Hz. A PTA across ears was used because the speech recognition analyses involved an average of highly correlated left and right ear scores from the SPIN (both left and right ear low- or high-context observed scores: r = .71, p < .001). The results presented below using the averaged scores were not substantively different when left or right ear scores were used (results not shown).

Figure 1.

Left and right ear averaged pure-tone thresholds for each of the 162 participants. The blue line represents the mean audiogram.
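The ear-averaged PTA described in this section can be sketched as follows. This is a minimal illustration, assuming the PTA is the simple mean of the thresholds at the eight listed frequencies across both ears; the function name and the example audiogram are hypothetical and are not taken from the study data.

```python
from statistics import mean

# Frequencies (Hz) named in the text for the pure-tone average.
PTA_FREQUENCIES_HZ = (250, 500, 1000, 2000, 3000, 4000, 6000, 8000)


def pure_tone_average(left_db_hl, right_db_hl):
    """Mean threshold (dB HL) over the PTA frequencies and both ears.

    Each argument maps frequency in Hz to a threshold in dB HL.
    Assumption: every frequency and both ears are weighted equally.
    """
    values = []
    for ear in (left_db_hl, right_db_hl):
        for freq in PTA_FREQUENCIES_HZ:
            values.append(ear[freq])
    return mean(values)


# Hypothetical gradually sloping audiogram, for illustration only.
left = {250: 15, 500: 15, 1000: 20, 2000: 30,
        3000: 40, 4000: 50, 6000: 55, 8000: 60}
right = {250: 10, 500: 15, 1000: 20, 2000: 25,
         3000: 35, 4000: 45, 6000: 55, 8000: 65}
pta = pure_tone_average(left, right)
```

Because the PTA spans 250 to 8000 Hz, it includes frequencies that carry little weight in the audibility prediction for speech.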

Speech Recognition in Noise Measures

One of four R-SPIN test lists that includes 25 high-context (semantic and syntactic information) and 25 low-context (syntactic information) sentences was presented in multitalker babble to measure recognition for the final key word in each sentence. The SPIN sentences were presented at 50 dB sensation level relative to the participant's calculated babble threshold with babble presented at a +8 dB signal-to-noise ratio (Bilger, 1984). If the initial presentation level of the SPIN sentences was uncomfortably loud, the level was reduced to a tolerable loudness level while maintaining the +8 dB signal-to-noise ratio. Predicted scores for the SPIN low- and high-context sentences were obtained using AI values (Bell, Dirks, & Trine, 1992). These values were computed using each participant's pure-tone thresholds, the average spectra and overall levels of the final words from the SPIN sentences and the multitalker babble (Dubno et al., 2008), and the frequency–importance function developed for the SPIN materials (Dirks, Bell, Rossman, & Kincaid, 1986). Last, the transfer functions relating AI to speech recognition scores for the SPIN sentences (Dirks et al., 1986) were used to predict scores for high- and low-context sentences. Differences between measured and predicted scores (observed − predicted) indicate the extent to which key word recognition in high- and low-context sentences was better or worse than predicted given the importance-weighted speech audibility of the sentences for each participant (i.e., negative values are poorer-than-predicted scores after considering importance-weighted audibility of the speech).
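The logic of an importance-weighted audibility index and the observed − predicted difference can be illustrated with a simplified sketch. This is not the Bell et al. (1992) procedure or the SPIN frequency–importance function: the band importance weights, the levels, and the 30 dB dynamic range below are assumptions chosen for illustration, and the predicted score is passed in directly because the Dirks et al. (1986) transfer functions are not reproduced here.

```python
def band_audibility(speech_peak_db, noise_db, threshold_db, dynamic_range_db=30.0):
    """Fraction (0 to 1) of the speech dynamic range that is audible in one band.

    Assumption: all levels share one reference, and the audible range is
    limited by whichever is higher, the noise or the listener's threshold.
    """
    floor = max(noise_db, threshold_db)
    fraction = (speech_peak_db - floor) / dynamic_range_db
    return min(1.0, max(0.0, fraction))


def articulation_index(importance, speech_peak_db, noise_db, threshold_db):
    """Importance-weighted sum of band audibility (weights must sum to 1)."""
    if abs(sum(importance) - 1.0) > 1e-6:
        raise ValueError("band importance weights must sum to 1")
    return sum(
        w * band_audibility(s, n, t)
        for w, s, n, t in zip(importance, speech_peak_db, noise_db, threshold_db)
    )


def observed_minus_predicted(observed_pct, predicted_pct):
    """Negative values mean poorer-than-predicted recognition."""
    return observed_pct - predicted_pct


# Hypothetical four-band example (not the SPIN materials).
importance = [0.2, 0.3, 0.3, 0.2]
speech_peaks = [60.0, 60.0, 55.0, 50.0]
babble = [45.0, 45.0, 40.0, 35.0]
thresholds = [20.0, 30.0, 45.0, 60.0]
ai = articulation_index(importance, speech_peaks, babble, thresholds)
difference = observed_minus_predicted(62.0, 70.0)
```

In this example the highest band contributes nothing because the threshold exceeds the speech peaks there. Audibility in the lower bands is limited by the babble rather than by the listener's thresholds.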

Self-Assessed Hearing Handicap, Effort, and Frustration Measures

The 25-item HHIE/HHIA was used to measure the self-assessed social/situational (12 questions) and emotional (13 questions) consequences of hearing impairment (Newman et al., 1990; Ventry & Weinstein, 1982). Depending on the study participant's age, either the HHIE (age ≥ 60 years) or the HHIA (age < 60 years) was administered by paper and pencil. The HHIA differs from the HHIE by the substitution of three questions that were considered to be more appropriate for younger adults, thus leaving 22 common questions between forms (Newman et al., 1990). All 25 questions on the HHIE were included in the analysis; however, because of the small sample size (n = 2), the three additional substitute questions on the HHIA were not included. The HHIE acronym and question labels are used to describe the results (i.e., S-1, E-2 for social/situational and emotional, respectively). These acronyms and HHIE questions are included in online Supplemental Material S1.

The HHIE includes questions involving embarrassment, irritability, frustration, self-worth and depression, changes in activities, and communication problems related to self-assessed hearing handicap. Participants are asked to indicate the extent to which they agree with a question about their perceived handicap (yes indicating total agreement [score = 4], sometimes indicating partial agreement [score = 2], no indicating either disagreement or “nonapplicable” [score = 0]). The responses for 13 items provide an emotional subscale score and the responses for 12 items are summed to a social/situational subscale score. The sum of all 25 items provides a total score. The total score indicates the degree of self-assessed hearing handicap, with higher scores indicating more handicap (0–16 = no self-perceived handicap, 18–42 = mild-to-moderate self-assessed handicap, 44–100 = significant self-assessed handicap). The HHIE social and emotional subscale scores exhibited a high degree of colinearity (Spearman r = .88), and thus the HHIE total score was used in the regression analyses described later. We also considered the degree to which each HHIE item related to speech recognition to better understand why the HHIE total score related to audibility-adjusted speech recognition. This post hoc examination described below includes appropriate procedures to control for false positive results and cautious interpretation of significant results.
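The HHIE scoring rules described above can be sketched as follows. The item labels follow Table 3 and the point values and category cutoffs follow the text; the function name, the dictionary layout, and the example responses are hypothetical.

```python
# Item labels as listed in Table 3 (12 social/situational, 13 emotional).
SOCIAL_ITEMS = ("S-1", "S-3", "S-6", "S-8", "S-10", "S-11",
                "S-13", "S-15", "S-16", "S-19", "S-21", "S-23")
EMOTIONAL_ITEMS = ("E-2", "E-4", "E-5", "E-7", "E-9", "E-12", "E-14",
                   "E-17", "E-18", "E-20", "E-22", "E-24", "E-25")
RESPONSE_POINTS = {"yes": 4, "sometimes": 2, "no": 0}


def score_hhie(responses):
    """Return subscale scores, total score, and handicap category.

    `responses` maps each item label to "yes", "sometimes", or "no".
    """
    missing = [item for item in SOCIAL_ITEMS + EMOTIONAL_ITEMS
               if item not in responses]
    if missing:
        raise ValueError(f"missing responses for: {missing}")
    social = sum(RESPONSE_POINTS[responses[item]] for item in SOCIAL_ITEMS)
    emotional = sum(RESPONSE_POINTS[responses[item]] for item in EMOTIONAL_ITEMS)
    total = social + emotional
    # Totals are always even, so the cutoffs 16 and 42 leave no gaps.
    if total <= 16:
        category = "no self-perceived handicap"
    elif total <= 42:
        category = "mild-to-moderate"
    else:
        category = "significant"
    return {"social": social, "emotional": emotional,
            "total": total, "category": category}


# Hypothetical respondent who answers "sometimes" to every item.
example = {item: "sometimes" for item in SOCIAL_ITEMS + EMOTIONAL_ITEMS}
result = score_hhie(example)
```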

Participants also completed a modified version of the NASA Task Load Index immediately following the SPIN task to assess the effort and frustration experienced while performing the SPIN task. The effort question asked, “How hard did you have to work to accomplish your level of performance?” The frustration question asked, “How insecure, discouraged, irritated, stressed, and annoyed were you?” Participants rated their effort and frustration using a 20-point scale. Higher scores indicated higher effort or frustration. Additional workload constructs are included in the questionnaire (e.g., physical demand and temporal demand) but were not examined in the current study.

Data Analyses

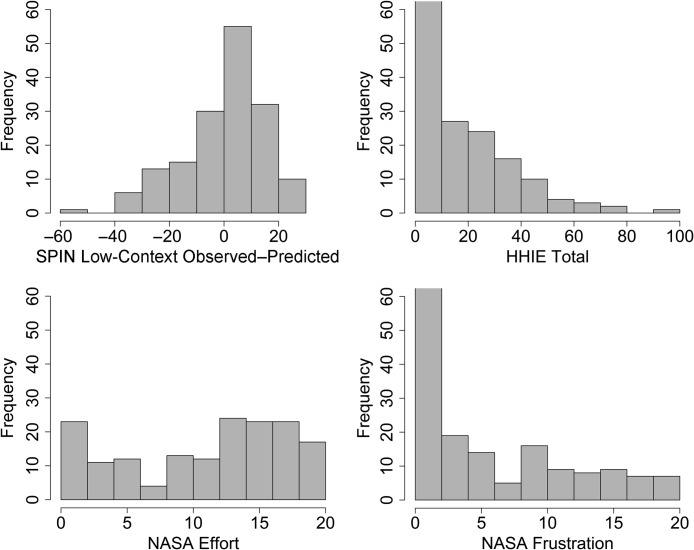

One-sample Kolmogorov–Smirnov tests indicated that the low- and high-context SPIN, HHIE, and NASA variables had significantly non-normal distributions (all ps < .001, Figure 2). For this reason, these variables were used in Spearman nonparametric correlations to test the prediction that HHIE total scores were associated with SPIN low- and high-context scores. Hierarchical regression analyses also were performed using SPSS v22 (IBM, Armonk, NY) to determine the extent to which the associations between HHIE total and SPIN scores were statistically dependent on control variables that might mediate significant HHIE and SPIN score associations. Hierarchical regressions also were performed to examine the unique predictive power of the HHIE total score compared with the NASA effort and NASA frustration ratings. Rank-ordered SPIN low- and high-context scores, HHIE total, and NASA variables were used in these regressions, and the normal probability P–P plots of the standardized residuals were examined to ensure that the residual variance met the assumption of normality.

Figure 2.

Histograms for the Speech Perception In Noise Test (SPIN) low-context observed–predicted differences (average across ears), Hearing Handicap Inventory for the Elderly (HHIE) total scores, National Aeronautics and Space Administration (NASA) effort ratings, and NASA frustration ratings demonstrate non-normal distributions, and thus these variables were used in nonparametric Spearman correlations or rank ordered prior to use with multiple regression.
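The rank-based approach used in these analyses can be illustrated with a short sketch: values are converted to ranks (ties receive the average rank), and the Spearman coefficient is the Pearson correlation of those ranks. The analyses in this study were run in SPSS; the functions and example values below are hypothetical and only show the idea.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1..n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value is tied with this one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def spearman(x, y):
    """Spearman correlation as the Pearson correlation of average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical scores for seven listeners (not study data).
hhie_total = [0, 4, 10, 18, 18, 40, 62]
spin_low_diff = [12.0, 8.5, 3.0, -1.0, -4.5, -10.0, -30.0]
rho = spearman(hhie_total, spin_low_diff)
```

The same rank transformation can be applied to each variable before it enters a regression, which is one way to read the "rank-ordered" variables described in this section.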

The control variables in the first regression described in the “Results” section were PTA, age, sex, education level, and self-reported high blood pressure. Although PTA can account for the reduced audibility of the stimuli, it also can serve as a proxy for the magnitude of age-related changes in the auditory periphery and throughout the auditory system that could have additive effects on speech recognition in noise. The sex variable was included on the basis of evidence that men are more likely than women to exhibit poor word recognition when there is a competing message even after controlling for differences in speech audibility (Dubno, Lee, Matthews, & Mills, 1997; Gates, Cooper, Kannel, & Miller, 1990; Nash et al., 2011; Wiley et al., 1998). Education level was defined as (a) a high school education or less or (b) greater than a high school education. Education level was used to control for potential individual differences in lexical access and fluency (Schneider et al., 2013). Last, the self-reported high blood pressure measure was examined because of evidence that high blood pressure can relate to declines in auditory function (Nash et al., 2011) and the morphology of brain regions (Eckert et al., 2013) that support executive functions during speech-in-noise tasks (Vaden et al., 2013).

Results

Self-Assessed Hearing Handicap and Speech Recognition in Noise

Descriptive statistics are presented for the SPIN, HHIE, NASA, and PTA variables in Table 1. A one-sample t test demonstrated that participants were just as likely to have poorer-than-predicted SPIN low-context scores as better-than-predicted low-context scores, t(161) = 0.42 (ns), which is consistent with the 0.50 mean low-context observed–predicted speech recognition. In contrast, participants were more likely to demonstrate better-than-predicted than poorer-than-predicted high-context scores, t(161) = 5.07, p < .001, as indicated by the 3.63 mean high-context observed–predicted speech recognition. This high-context effect appeared to be due, at least in part, to participants (even those with hearing loss) demonstrating at or near ceiling performance for high-context sentences (see Table 1).

Table 1.

Descriptive statistics for the SPIN, HHIE, NASA, and PTA variables.

| | SPIN low observed (0%–100%) | SPIN low observed–predicted | SPIN high observed (0%–100%) | SPIN high observed–predicted | HHIE total (0–100 points) | NASA effort (1–20 points) | NASA frustration (1–20 points) | PTA (dB HL) |
|---|---|---|---|---|---|---|---|---|
| Mean | 66.43 | 0.50 | 95.33 | 3.63 | 18.41 | 11.42 | 6.40 | 31.82 |
| Minimum | 2.00 | −52.58 | 44.00 | −23.02 | 0.00 | 1.00 | 1.00 | 9.06 |
| Maximum | 98.00 | 23.95 | 100.00 | 45.29 | 96.00 | 20.00 | 20.00 | 65.00 |

Note. SPIN = Speech Perception In Noise Test; HHIE = Hearing Handicap Inventory for the Elderly; NASA = National Aeronautics and Space Administration; PTA = pure-tone average.

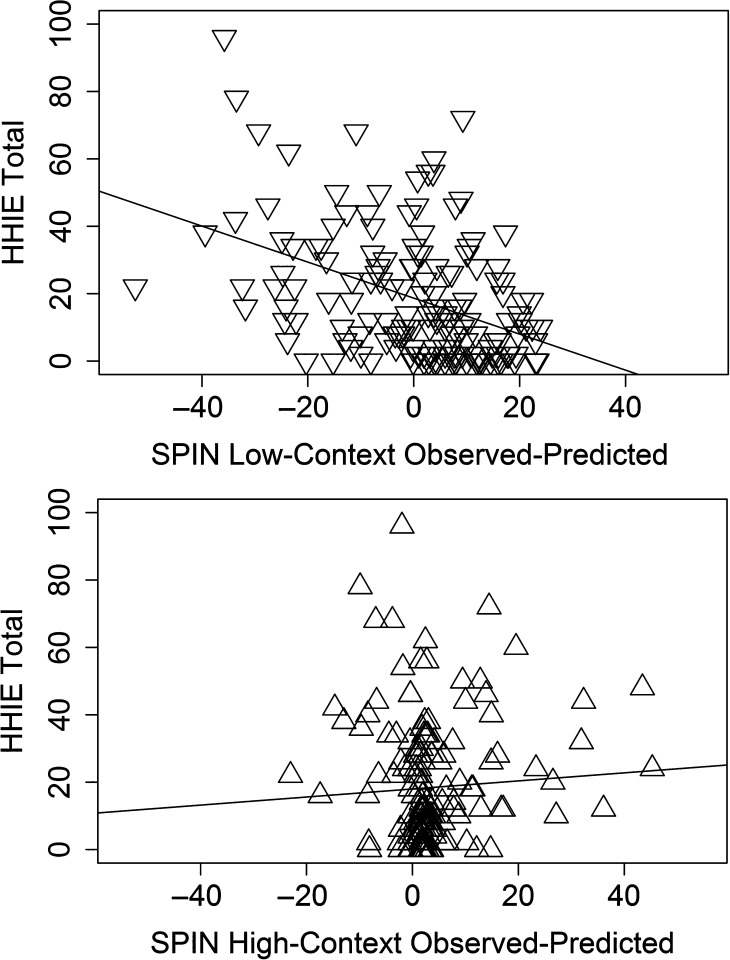

Hearing handicap (HHIE total score) was significantly negatively correlated with SPIN low-context observed–predicted speech recognition (see Table 2, Figure 3), that is, poorer-than-predicted speech recognition was more likely in participants with greater self-reported hearing handicap. In contrast, there was no significant association between hearing handicap and SPIN high-context observed–predicted differences (see Table 2, Figure 3), which may relate to the restricted distribution of observed–predicted differences for high-context sentences.

Table 2.

Spearman correlations between SPIN low- and high-context observed–predicted differences, HHIE total scores, and NASA effort and frustration ratings.

| | SPIN low observed | SPIN low observed–predicted | SPIN high observed | SPIN high observed–predicted | HHIE total | NASA effort |
|---|---|---|---|---|---|---|
| SPIN low observed–predicted | .78** | | | | | |
| SPIN high observed | .75** | .50** | | | | |
| SPIN high observed–predicted | −.07 | .41** | .11 | | | |
| HHIE total | −.58** | −.41** | −.48** | .05 | | |
| NASA effort | −.40** | −.26** | −.29** | .18* | .44** | |
| NASA frustration | −.35** | −.29** | −.31** | −.03 | .39** | .61** |

Note. SPIN = Speech Perception In Noise Test; HHIE = Hearing Handicap Inventory for the Elderly; NASA = National Aeronautics and Space Administration.

*p < .05.

**p < .001 and survive Bonferroni correction (p < .05/25 correlations).

Figure 3.

Scatter plots of the Speech Perception In Noise Test (SPIN) low- and high-context observed–predicted scores with the Hearing Handicap Inventory for the Elderly (HHIE) total score.

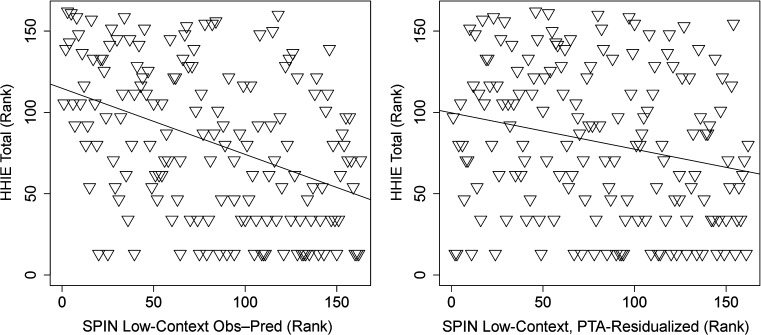

We then examined the extent to which the HHIE association with speech recognition was significantly greater when controlling for audibility compared with when the average pure-tone threshold was covaried from the SPIN low-context observed score. The relationships between HHIE and SPIN low-context observed–predicted and PTA-adjusted measures (r = −.41 and r = −.22, respectively) are shown in Figure 4. A test for the difference between these dependent correlations (Steiger, 1980) revealed a significantly stronger association between the HHIE and the SPIN low-context observed–predicted score compared with the SPIN low-context observed score that was adjusted for PTA (z = −3.24, p = .001).

Figure 4.

Hearing Handicap Inventory for the Elderly (HHIE) associations with SPIN low-context performance that is adjusted for audibility and adjusted for pure-tone average (PTA). Left: Scatterplot of the relationship between SPIN low-context observed–predicted (Obs–Pred) and the HHIE total score (r = −.41, p < .001). Right: Scatterplot of the relationship between SPIN low-context observed score, which was residualized for the pure-tone threshold, and the HHIE total score (r = −.22, p < .001). Rank-ordered data are presented so that the same scale can be presented for each variable.

These results indicate that (a) reduced speech audibility does not alone explain why people with speech recognition problems experience hearing handicap and (b) elevated pure-tone thresholds at frequencies that are not strongly weighted in the AI prediction of speech recognition also contribute to the relationship between the HHIE and speech recognition. This pure-tone threshold variance may relate to declines in both the auditory periphery and the central auditory system.
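The comparison of dependent correlations reported above can be sketched with a form of Steiger's (1980) Z test for two correlations that share one variable. This is an illustrative implementation under stated assumptions, not the authors' analysis code. The correlation between the two SPIN measures is not reported in the text, so the value of `r23` below is a hypothetical placeholder.

```python
import math


def steiger_z(r12, r13, r23, n):
    """Z test for the difference between dependent correlations r12 and r13.

    Variable 1 is shared (here, the HHIE total score); r23 is the correlation
    between the two other variables. Returns (z, two-sided p value).
    """
    z12 = math.atanh(r12)  # Fisher r-to-z transform
    z13 = math.atanh(r13)
    r_bar = (r12 + r13) / 2.0
    # Covariance term for the two correlations, using the pooled r_bar.
    psi = (r23 * (1 - 2 * r_bar ** 2)
           - 0.5 * r_bar ** 2 * (1 - 2 * r_bar ** 2 - r23 ** 2))
    s = psi / (1 - r_bar ** 2) ** 2
    z = (z12 - z13) * math.sqrt(n - 3) / math.sqrt(2 - 2 * s)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p value
    return z, p


# r12 and r13 are the reported HHIE correlations; r23 is hypothetical.
z_stat, p_value = steiger_z(r12=-0.41, r13=-0.22, r23=0.70, n=162)
```

The test statistic grows as `r23` increases, because two highly correlated measures that nonetheless differ in their relation to the HHIE provide stronger evidence of a real difference.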

HHIE Item Analysis

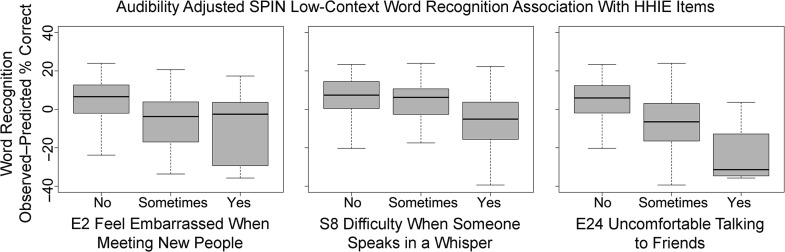

Inspection of the HHIE item responses revealed that scores (0, 2, or 4) from 13 of the 25 items exhibited a significant Bonferroni-corrected association with SPIN low-context observed–predicted differences (p < .05/25 comparisons; see Table 3). The following seven items, which were strongly positively related to the HHIE total score (Spearman rs = .67 to .74, ps < .001), exhibited the strongest associations with SPIN low-context observed–predicted differences (Spearman rs = −.33 to −.38): embarrassment when meeting new people (HHIE question label E-2); frustration talking to family members (E-5); difficulty hearing when someone speaks in a whisper (S-8); feeling handicapped by a hearing problem (E-9); difficulty visiting with friends, relatives, or neighbors (S-10); problems listening to the TV or radio (S-15); and feeling uncomfortable when talking to friends (E-24). Figure 5 presents examples of these significant associations. Together, the 13 significant correlations suggested that handicap was experienced as a consequence of communication problems, particularly in social settings. In contrast, the five items related to doing something less often (e.g., going shopping or listening to the radio or TV) were not significantly related to speech recognition. There were no significant correlations between the HHIE items and SPIN high-context observed–predicted scores.

Table 3.

Spearman correlations between SPIN low-context observed–predicted differences and HHIE question responses.

| HHIE Social | Spearman's ρ | HHIE Emotional | Spearman's ρ |
|---|---|---|---|
| S-1 | −.18* | E-2 | −.34*** |
| S-3 | −.17* | E-4 | −.26*** |
| S-6 | −.26*** | E-5 | −.33*** |
| S-8 | −.36*** | E-7 | −.14 |
| S-10 | −.34*** | E-9 | −.34*** |
| S-11 | −.02 | E-12 | −.21** |
| S-13 | −.17* | E-14 | −.15 |
| S-15 | −.38*** | E-17 | −.29*** |
| S-16 | −.15 | E-18 | −.24** |
| S-19 | −.23** | E-20 | −.28*** |
| S-21 | −.25*** | E-22 | −.20* |
| S-23 | −.15 | E-24 | −.36*** |
| | | E-25 | −.28*** |

Note. Please see online Supplemental Material S1 for HHIE item questions. SPIN = Speech Perception In Noise Test; HHIE = Hearing Handicap Inventory for the Elderly.

*p < .05.

**p < .01.

***p < .001 and survive Bonferroni correction (p < .05/25 correlations).

Figure 5.

Box plots provide examples of Hearing Handicap Inventory for the Elderly (HHIE) items that were significantly correlated with Speech Perception In Noise Test (SPIN) low-context observed–predicted differences. Note that some items (E-2, E-24) were more sensitive to poorer-than-predicted speech recognition in noise than other items (S-8).

Resilience of the Hearing Handicap and Speech Recognition in Noise Association

PTA, education level, age, and high blood pressure predicted variance in SPIN low-context observed–predicted scores (see Table 4). Hierarchical regression was performed to determine the extent to which these control variables could explain the significant association between the low-context observed–predicted differences and HHIE total score. Although PTA accounted for 11% of the 15% of the variance in the relationship between the HHIE and speech recognition, the relationship between the HHIE and speech recognition remained significant (4% unique shared variance, p < .05, with PTA in the model) due to the relatively large sample (see Table 5). These results indicate that participants with more hearing loss and more hearing handicap were more likely to have poorer-than-predicted speech recognition. They also indicate that some participants with relatively better hearing also performed more poorly than predicted and reported significant hearing handicap.

Table 4.

Hearing and demographic Spearman correlations between SPIN low- and high-context observed–predicted differences, HHIE total scores, and NASA effort and frustration ratings.

| Demographic and hearing | SPIN low observed–predicted | SPIN high observed–predicted | HHIE total | NASA effort | NASA frustration |
|---|---|---|---|---|---|
| Sex (Male 0, Female 1) | .00 | −.29*** | −.14 | −.27*** | −.21** |
| Education (≤ HS 0, > HS 1) | .19* | .19* | −.15 | −.11 | −.07 |
| Age | −.17* | .02 | .11 | .02 | .13 |
| High blood pressure (No 0, Yes 1) | −.19* | −.17 | .09 | −.01 | .15 |
| PTA | −.44*** | .23*** | .58*** | .35*** | .29*** |

Note. SPIN = Speech Perception In Noise Test; HHIE = Hearing Handicap Inventory for the Elderly; NASA = National Aeronautics and Space Administration; HS = high school; PTA = pure-tone average.

*p < .05.

**p < .01.

***p < .001.

Table 5.

Hierarchical regression results showing the relationship between the HHIE and SPIN low-context observed–predicted difference after accounting for control variables.

| Hierarchical level | Variable | Standard beta | % of unique variance | t score | p value |
|---|---|---|---|---|---|
| 1) Multiple R = .39**, % variance = 15, F(1, 155) = 27.96, p < .001 | | | | | |
| | HHIE | −.39 | 15 | −5.29 | ** |
| 2) Multiple R = .47**, % variance = 22, F(2, 155) = 21.71, p < .001 | | | | | |
| | HHIE | −.21 | 4 | −2.45 | * |
| | PTA | −.32 | 8 | −3.64 | ** |
| 3) Multiple R = .51**, % variance = 26, F(6, 155) = 8.69, p < .001 | | | | | |
| | HHIE | −.19 | 3 | −2.20 | * |
| | PTA | −.33 | 7 | −3.26 | ** |
| | Sex | −.11 | 1 | −1.44 | ns |
| | Education | .11 | 2 | 1.54 | ns |
| | High blood pressure | −.09 | 1 | −1.25 | ns |
| | Age | −.01 | 0 | −0.07 | ns |

Note. HHIE = Hearing Handicap Inventory for the Elderly; SPIN = Speech Perception In Noise Test; PTA = pure-tone average; ns = nonsignificant.

*p < .05.

**p < .001.

The hierarchical regression described above included a third level with the control variables sex, education, high blood pressure, and age. The addition of these variables to the regression model had a limited impact on the association between hearing handicap and SPIN low-context observed–predicted differences (see Table 5). These results indicate that hearing handicap accounts for significant unique variance in SPIN low-context observed–predicted differences relative to sex, age, education level, and self-reported high blood pressure.
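The partitioning of variance between the HHIE and PTA can be checked approximately from the correlations reported in Tables 2 and 4, using the standard formula for the squared multiple correlation with two predictors. This is only a rough sketch: it plugs in full-sample Spearman coefficients, whereas the regressions in Table 5 used rank-ordered data from the cases with complete control variables, so small differences from the tabled values are expected. The variable names are hypothetical.

```python
def two_predictor_r_squared(r_y1, r_y2, r_12):
    """R squared for outcome y regressed on predictors 1 and 2.

    r_y1 and r_y2 are the outcome-predictor correlations; r_12 is the
    correlation between the two predictors.
    """
    return (r_y1 ** 2 + r_y2 ** 2 - 2 * r_y1 * r_y2 * r_12) / (1 - r_12 ** 2)


# Reported correlations (Tables 2 and 4).
r_spin_hhie = -0.41   # SPIN low observed-predicted with HHIE total
r_spin_pta = -0.44    # SPIN low observed-predicted with PTA
r_hhie_pta = 0.58     # HHIE total with PTA

r2_both = two_predictor_r_squared(r_spin_hhie, r_spin_pta, r_hhie_pta)
# Unique variance = total R squared minus R squared for the other predictor alone.
unique_hhie = r2_both - r_spin_pta ** 2
unique_pta = r2_both - r_spin_hhie ** 2
```

With these inputs the two predictors together explain roughly 23% of the variance and the HHIE contributes about 4% uniquely, which is close to the second level of Table 5.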

HHIE and NASA Task Load Index Contributions to Predicting Speech Recognition in Noise

Participants who reported higher NASA effort and frustration ratings immediately after the SPIN task were significantly more likely to exhibit poorer-than-predicted SPIN low-context speech recognition (see Table 2). The significant associations between these variables and HHIE (Spearman correlations: Effort × HHIE r = .44, p < .001; Frustration × HHIE r = .39, p < .001) suggested that they explained the same variance in SPIN scores as the HHIE. Although the NASA effort and frustration measures accounted for variance in audibility-adjusted speech recognition that was explained by the HHIE, they were not unique predictors of SPIN scores in comparison to the HHIE (see Table 6). Thus, self-assessed hearing handicap on the basis of a broad range of daily life communication experiences and listening difficulty was a better predictor of speech recognition scores than ratings of effort and frustration obtained immediately following the speech recognition task.

Table 6.

Hierarchical regression results showing the relationship between the HHIE and SPIN low-context observed–predicted difference after accounting for listening effort and frustration during the SPIN task.

| Hierarchical level | Variable | Standard beta | % of unique variance | t score | p value |
|---|---|---|---|---|---|
| 1) Multiple R = .41*, % variance = 17, F(1, 161) = 32.59, p < .001 | | | | | |
| | HHIE | −.39 | 17 | −5.71 | * |
| 2) Multiple R = .44*, % variance = 19, F(3, 161) = 12.72, p < .001 | | | | | |
| | HHIE | −.35 | 10 | −4.31 | * |
| | NASA effort | −.02 | 0 | −0.12 | ns |
| | NASA frustration | −.14 | 1 | −1.57 | ns |

Note. HHIE = Hearing Handicap Inventory for the Elderly; SPIN = Speech Perception In Noise Test; NASA = National Aeronautics and Space Administration; ns = nonsignificant.

*p < .001.

Discussion

The results of this study indicate that individual differences in the auditory periphery and at higher centers, which are not related to threshold elevation, may contribute to hearing handicap and speech recognition difficulties experienced by middle-aged and older adults. We observed that the expected association between low-context speech recognition and hearing handicap remained significant after accounting for audibility using the AI and when also controlling for the average pure-tone threshold. Together, these results suggest that additional peripheral and central factors contribute at least some small amount of variance to hearing handicap. This variance appears to be due to multiple negative communication experiences, particularly in social settings, rather than just effort and frustration during speech recognition. An unexpected finding was that effort and frustration measured immediately after the task were less strongly related to speech recognition than were HHIE items. Together, the results indicate that self-assessed HHIE hearing handicap is sensitive to speech recognition difficulties over and above the effects of reduced speech audibility because of the accumulation and recall of negative communication experiences.

Replication and Extension of HHIE and Speech Recognition Findings

The results of the current study are consistent with previous findings that people with more self-assessed hearing handicap exhibit poorer word recognition (Gates et al., 2008; Golding et al., 2005; Newman et al., 1990; Saunders & Forsline, 2006; Weinstein & Ventry, 1983b) and increased hearing loss (Gates, Murphy, et al., 2003; Lichtenstein et al., 1988; Sindhusake et al., 2001; Weinstein & Ventry, 1983a). We observed that hearing handicap was related to speech recognition before and after accounting for differences in audibility and/or pure-tone thresholds.

The hearing handicap associations with speech recognition were specific to sentences with limited semantic context. Hearing handicap was not significantly associated with a difference between observed and predicted scores for high-context sentences. Participants who reported hearing handicap were equally likely to demonstrate better- or worse-than-predicted key word recognition for the SPIN high-context sentences. This pattern suggests that the experience of handicap is most pronounced when few language-level cues are available to help determine sentence context that could narrow the lexical search for identifying key words. This is important because older adults can benefit from contextual semantic cues to a similar extent as younger adults (Dubno, Ahlstrom, & Horwitz, 2000; Sheldon, Pichora-Fuller, & Schneider, 2008; Stine-Morrow, Soederberg Miller, & Nevin, 1999), and it could explain why aural rehabilitation counseling involving communication strategies can reduce the self-assessment of hearing handicap (Hawkins, 2005).

Although an association between hearing handicap and speech recognition has been reported previously (Wiley et al., 2000), a novel result of the current study is that associations between hearing handicap and speech recognition in noise are stronger when controlling for audibility than when controlling for an average pure-tone threshold. Wiley et al. (2000) demonstrated that word recognition (Northwestern University NU-6 word lists) in a competing message was associated with HHIE short form scores with an odds ratio of 0.95 after statistically controlling for hearing thresholds. We observed a similar odds ratio for the hearing handicap and speech recognition in noise association after controlling for PTA in the observed SPIN low-context scores (0.74; r values were converted to an odds ratio by obtaining the d effect size and then converting d to an odds ratio). A larger odds ratio was observed for the AI-adjusted SPIN low-context scores (1.61). These results suggest that individual differences in the auditory periphery and at higher centers that are (a) related to elevated pure-tone thresholds and (b) unrelated to pure-tone thresholds contribute to the hearing handicap and speech recognition difficulties experienced by middle-aged and older adults.
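The conversion from r to an odds ratio described above can be sketched as follows. The text does not state which formulas were used, so this sketch assumes two widely used conversions: d = 2r / √(1 − r²), and ln(OR) = d·π / √3 (a logistic approximation). The function names and the example r value are hypothetical and are not taken from the study.

```python
import math


def r_to_d(r: float) -> float:
    """Convert a correlation r to Cohen's d (assumed formula: d = 2r / sqrt(1 - r^2))."""
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    return 2.0 * r / math.sqrt(1.0 - r * r)


def d_to_odds_ratio(d: float) -> float:
    """Convert Cohen's d to an odds ratio (assumed formula: ln(OR) = d * pi / sqrt(3))."""
    return math.exp(d * math.pi / math.sqrt(3.0))


def r_to_odds_ratio(r: float) -> float:
    """Chain the two conversions: r -> d -> odds ratio."""
    return d_to_odds_ratio(r_to_d(r))


# Hypothetical example value, chosen only for illustration.
example_r = -0.2
print("d =", r_to_d(example_r))
print("odds ratio =", r_to_odds_ratio(example_r))
```

Under these formulas, a negative correlation maps to an odds ratio below 1, a positive correlation maps to an odds ratio above 1, and r = 0 maps to exactly 1. The sign convention therefore matters when odds ratios from different studies are compared.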

It is important to emphasize that PTA and hearing handicap shared considerable variance in audibility-adjusted speech recognition. To be specific, the percentage of significant unique variance in speech recognition explained by the HHIE dropped from 17% to 4% after including PTA in the regression model. This result was not unexpected given the evidence that events contributing to impaired hearing can exaggerate central auditory system structure and function declines in older animals (Fernandez, Jeffers, Lall, Liberman, & Kujawa, 2015). Cascading declines from the auditory periphery to central centers, perhaps due to a loss of input from sensory fibers (Fetoni, Troiani, Petrosini, & Paludetti, 2015), would contribute to an association between PTA and speech recognition after accounting for audibility.

We have previously observed that age group differences in word recognition were due to variation in Heschl's gyrus gray matter volume that was statistically independent of PTA in participants with relatively normal hearing (Harris et al., 2009). Variation in Heschl's gyrus gray matter volume within older and younger groups was also predictive of word recognition, suggesting that normative developmental variation may also contribute to poorer-than-predicted speech recognition. Aging- and development-related variation may therefore contribute to the modest yet significant variance in poorer-than-predicted speech recognition in noise that was associated with greater perceived hearing handicap.

Added Value of the HHIE Item Analyses

The HHIE item analysis results suggest an additional explanation: affective or emotional responses to listening difficulty, particularly in social settings, account for the audibility- and PTA-adjusted association between speech recognition and hearing handicap. For example, participants who reported feeling frustrated, embarrassed, irritable, uncomfortable, and left out in social settings were more likely to have poorer-than-predicted speech recognition. These results are consistent with our observation that the NASA frustration measure was related to speech recognition. Some of these same HHIE emotional distress items were related to hearing loss (e.g., embarrassment, frustration, social engagement restrictions) and predicted depressive symptoms in the Blue Mountains Hearing Study (Gopinath et al., 2012). The association between feelings of depression (HHIE item E-22) and speech recognition was relatively weak (p < .05, uncorrected) in the current study, perhaps because of the relatively few cases reporting depressed feelings (no = 91%; sometimes = 7%; yes = 2% of cases).

We also observed that few people reported performing activities “less often” because of a hearing problem (e.g., shopping, attending religious services, listening to the radio or TV). This is an important observation because it suggests that hearing handicap and hearing problems are not deterring people from activities that they may enjoy and may be consistent with the relatively limited evidence for feelings of depression in our sample.

The Predictive Strength of the HHIE Versus NASA Ratings of Effort and Frustration

Ratings of effort and frustration from the NASA Task Load Index were expected to be robust predictors of speech recognition in noise because they were obtained immediately after completion of the SPIN task. These variables were significantly correlated with SPIN low-context observed–predicted differences, but they were no longer significant predictors when the HHIE total score was included in a regression model. Thus, the NASA ratings captured effort and frustration variance that the HHIE also measures, including through items that do not specifically characterize effort or frustration.

Post hoc examination was performed to better understand why the HHIE total score was a better predictor of audibility-adjusted speech recognition than the NASA measures. For example, the reduction in the association between NASA frustration and speech recognition could not be attributed to a single HHIE item (e.g., E-5: frustration talking to family members). Instead, multiple HHIE items (e.g., E-10 and S-15: hearing problem causing difficulty) appeared to chip away at the unique variance in speech recognition that was explained by the NASA frustration rating. These findings indicate that the accumulation and recall of difficult listening experiences can be a stronger predictor of speech recognition than ratings of effort and frustration alone obtained immediately after the speech recognition task.

Summary

The lower reported quality of life experienced by some older adults with communication difficulties is often attributed to reduced speech audibility due to hearing loss. This hearing loss effect on speech recognition and hearing handicap is clear in the results of the current study as demonstrated by the significant associations between hearing handicap and SPIN low- and high-context observed scores (see Table 2). The results further show that self-assessed hearing handicap is driven by factors that are additive to effects of threshold elevations that reduce audibility. In addition to changes in the auditory periphery beyond threshold elevation, these factors may include changes in higher order executive function that supports speech recognition in challenging listening conditions and/or affective systems (Vaden, Kuchinsky, Ahlstrom, Dubno, & Eckert, 2015; Vaden et al., 2013). Together, the results demonstrate that the HHIE is a good tool for characterizing auditory and potential nonauditory factors that contribute to difficulties in speech recognition in noise.

Acknowledgments

This work was supported (in part) by the National Institute on Deafness and Other Communication Disorders Grant P50 DC 000422 (awarded to Judy R. Dubno), Medical University of South Carolina Center for Biomedical Imaging, South Carolina Clinical and Translational Research (SCTR) Institute, and National Institutes of Health/National Center for Research Resources Grant UL1 RR029882 (awarded to K. T. Brady). This investigation was conducted in a facility constructed with support from Research Facilities Improvement Program Grant C06 RR14516 (awarded to R. K. Crouch) from the National Center for Research Resources, National Institutes of Health.

References

- Ahlstrom J. B., Horwitz A. R., & Dubno J. R. (2014). Spatial separation benefit for unaided and aided listening. Ear and Hearing, 35, 72–85. doi:10.1097/AUD.0b013e3182a02274

- American National Standards Institute. (2010). Specifications for audiometers. (ANSI S3.6-2010, revision of ANSI S3.6-2004). New York, NY: Author.

- American Speech-Language-Hearing Association. (2005). Guidelines for manual pure-tone threshold audiometry. Retrieved from http://www.asha.org/policy

- Bell T. S., Dirks D. D., & Trine T. D. (1992). Frequency-importance functions for words in high- and low-context sentences. Journal of Speech and Hearing Research, 35, 950–959.

- Bertoli S., Bodmer D., & Probst R. (2010). Survey on hearing aid outcome in Switzerland: Associations with type of fitting (bilateral/unilateral), level of hearing aid signal processing, and hearing loss. International Journal of Audiology, 49, 333–346. doi:10.3109/14992020903473431

- Bilger R. C. (1984). Manual for the clinical use of the Speech Perception In Noise (SPIN) Test. Champaign-Urbana: University of Illinois.

- Bologna W. J., Chatterjee M., & Dubno J. R. (2013). Perceived listening effort for a tonal task with contralateral competing signals. The Journal of the Acoustical Society of America, 134, EL352–EL358. doi:10.1121/1.4820808

- Chia E. M., Wang J. J., Rochtchina E., Cumming R. R., Newall P., & Mitchell P. (2007). Hearing impairment and health-related quality of life: The Blue Mountains Hearing Study. Ear and Hearing, 28, 187–195. doi:10.1097/AUD.0b013e31803126b6

- Cook G., Brown-Wilson C., & Forte D. (2006). The impact of sensory impairment on social interaction between residents in care homes. International Journal of Older People Nursing, 1, 216–224. doi:10.1111/j.1748-3743.2006.00034.x

- Dawes P., Emsley R., Cruickshanks K. J., Moore D. R., Fortnum H., Edmondson-Jones M., & Munro K. J. (2015). Hearing loss and cognition: The role of hearing aids, social isolation and depression. PLoS One, 10, e0119616. doi:10.1371/journal.pone.0119616

- Dirks D. D., Bell T. S., Rossman R. N., & Kincaid G. E. (1986). Articulation index predictions of contextually dependent words. The Journal of the Acoustical Society of America, 80, 82–92.

- Dubno J. R., Ahlstrom J. B., & Horwitz A. R. (2000). Use of context by young and aged adults with normal hearing. The Journal of the Acoustical Society of America, 107, 538–546.

- Dubno J. R., Dirks D. D., & Schaefer A. B. (1989). Stop-consonant recognition for normal-hearing listeners and listeners with high-frequency hearing loss. II: Articulation index predictions. The Journal of the Acoustical Society of America, 85, 355–364.

- Dubno J. R., Eckert M. A., Lee F. S., Matthews L. J., & Schmiedt R. A. (2013). Classifying human audiometric phenotypes of age-related hearing loss from animal models. Journal of the Association for Research in Otolaryngology, 14, 687–701. doi:10.1007/s10162-013-0396-x

- Dubno J. R., Lee F. S., Matthews L. J., Ahlstrom J. B., Horwitz A. R., & Mills J. H. (2008). Longitudinal changes in speech recognition in older persons. The Journal of the Acoustical Society of America, 123, 462–475. doi:10.1121/1.2817362

- Dubno J. R., Lee F. S., Matthews L. J., & Mills J. H. (1997). Age-related and gender-related changes in monaural speech recognition. Journal of Speech, Language, and Hearing Research, 40, 444–452.

- Eckert M. A., Cute S. L., Vaden K. I. Jr., Kuchinsky S. E., & Dubno J. R. (2012). Auditory cortex signs of age-related hearing loss. Journal of the Association for Research in Otolaryngology, 13, 703–713. doi:10.1007/s10162-012-0332-5

- Eckert M. A., Kuchinsky S. E., Vaden K. I. Jr., Cute S. L., Spampinato M. V., & Dubno J. R. (2013). White matter hyperintensities predict low frequency hearing in older adults. Journal of the Association for Research in Otolaryngology, 14, 425–433. doi:10.1007/s10162-013-0381-4

- Fernandez K. A., Jeffers P. W., Lall K., Liberman M. C., & Kujawa S. G. (2015). Aging after noise exposure: Acceleration of cochlear synaptopathy in “recovered” ears. Journal of Neuroscience, 35, 7509–7520. doi:10.1523/JNEUROSCI.5138-14.2015

- Fetoni A. R., Troiani D., Petrosini L., & Paludetti G. (2015). Cochlear injury and adaptive plasticity of the auditory cortex. Frontiers in Aging Neuroscience, 7, 8. doi:10.3389/fnagi.2015.00008

- Fraser S., Gagné J. P., Alepins M., & Dubois P. (2010). Evaluating the effort expended to understand speech in noise using a dual-task paradigm: The effects of providing visual speech cues. Journal of Speech, Language, and Hearing Research, 53, 18–33. doi:10.1044/1092-4388(2009/08-0140)

- Gates G. A., Cooper J. C. Jr., Kannel W. B., & Miller N. J. (1990). Hearing in the elderly: The Framingham cohort, 1983–1985. Part I: Basic audiometric test results. Ear and Hearing, 11, 247–256.

- Gates G. A., Feeney M. P., & Higdon R. J. (2003). Word recognition and the articulation index in older listeners with probable age-related auditory neuropathy. Journal of the American Academy of Audiology, 14, 574–581.

- Gates G. A., Feeney M. P., & Mills D. (2008). Cross-sectional age-changes of hearing in the elderly. Ear and Hearing, 29, 865–874.

- Gates G. A., Murphy M., Rees T. S., & Fraher A. (2003). Screening for handicapping hearing loss in the elderly. Journal of Family Practice, 52, 56–62.

- Golding M., Mitchell P., & Cupples L. (2005). Risk markers for the graded severity of auditory processing abnormality in an older Australian population: The Blue Mountains Hearing Study. Journal of the American Academy of Audiology, 16, 348–356.

- Gopinath B., Hickson L., Schneider J., McMahon C. M., Burlutsky G., Leeder S. R., & Mitchell P. (2012). Hearing-impaired adults are at increased risk of experiencing emotional distress and social engagement restrictions five years later. Age and Ageing, 41, 618–623.

- Harris K. C., Dubno J. R., Keren N. I., Ahlstrom J. B., & Eckert M. A. (2009). Speech recognition in younger and older adults: A dependency on low-level auditory cortex. Journal of Neuroscience, 29, 6078–6087. doi:10.1523/JNEUROSCI.0412-09.2009

- Harris K. C., Eckert M. A., Ahlstrom J. B., & Dubno J. R. (2010). Age-related differences in gap detection: Effects of task difficulty and cognitive ability. Hearing Research, 264, 21–29. doi:10.1016/j.heares.2009.09.017

- Hart S. G., & Staveland L. E. (1988). Development of NASA-TLX (Task Load Index): Results of empirical and theoretical research. Advances in Psychology, 52, 139–183.

- Hawkins D. B. (2005). Effectiveness of counseling-based adult group aural rehabilitation programs: A systematic review of the evidence. Journal of the American Academy of Audiology, 16, 485–493.

- Humes L. E., Wilson D. L., Barlow N. N., Garner C. B., & Amos N. (2002). Longitudinal changes in hearing aid satisfaction and usage in the elderly over a period of one or two years after hearing aid delivery. Ear and Hearing, 23, 428–438. doi:10.1097/01.AUD.0000034780.45231.4B

- Kalikow D. N., Stevens K. N., & Elliott L. L. (1977). Development of a test of speech intelligibility in noise using sentence materials with controlled word predictability. The Journal of the Acoustical Society of America, 61, 1337–1351.

- Knutson J. F., Johnson A., & Murray K. T. (2006). Social and emotional characteristics of adults seeking a cochlear implant and their spouses. British Journal of Health Psychology, 11(Pt. 2), 279–292. doi:10.1348/135910705X52273

- Kramer S. E., Kapteyn T. S., Festen J. M., & Kuik D. J. (1997). Assessing aspects of auditory handicap by means of pupil dilatation. Audiology, 36, 155–164.

- Kuchinsky S. E., Ahlstrom J. B., Vaden K. I. Jr., Cute S. L., Humes L. E., Dubno J. R., & Eckert M. A. (2013). Pupil size varies with word listening and response selection difficulty in older adults with hearing loss. Psychophysiology, 50, 23–34. doi:10.1111/j.1469-8986.2012.01477.x

- Lichtenstein M. J., Bess F. H., & Logan S. A. (1988). Validation of screening tools for identifying hearing-impaired elderly in primary care. Journal of the American Medical Association, 259, 2875–2878.

- Lin F. R., Thorpe R., Gordon-Salant S., & Ferrucci L. (2011). Hearing loss prevalence and risk factors among older adults in the United States. Journals of Gerontology: Series A: Biological Sciences and Medical Sciences, 66, M582–M590. doi:10.1093/gerona/glr002

- Mackersie C. L., & Cones H. (2011). Subjective and psychophysiological indexes of listening effort in a competing-talker task. Journal of the American Academy of Audiology, 22, 113–122. doi:10.3766/jaaa.22.2.6

- Matthews L. J., Lee F. S., Mills J. H., & Schum D. J. (1990). Audiometric and subjective assessment of hearing handicap. Archives of Otolaryngology—Head & Neck Surgery, 116, 1325–1330.

- McGarrigle R., Munro K. J., Dawes P., Stewart A. J., Moore D. R., Barry J. G., & Amitay S. (2014). Listening effort and fatigue: What exactly are we measuring? A British Society of Audiology Cognition in Hearing Special Interest Group “white paper.” International Journal of Audiology, 53, 433–445.

- Nash S. D., Cruickshanks K. J., Klein R., Klein B. E., Nieto F. J., Huang G. H., & Tweed T. S. (2011). The prevalence of hearing impairment and associated risk factors: The Beaver Dam Offspring Study. Archives of Otolaryngology—Head & Neck Surgery, 137, 432–439. doi:10.1001/archoto.2011.15

- Newman C. W., Weinstein B. E., Jacobson G. P., & Hug G. A. (1990). The Hearing Handicap Inventory for Adults: Psychometric adequacy and audiometric correlates. Ear and Hearing, 11, 430–433.

- Ng J. H., & Loke A. Y. (2015). Determinants of hearing-aid adoption and use among the elderly: A systematic review. International Journal of Audiology, 54, 291–300. doi:10.3109/14992027.2014.966922

- Saunders G. H., & Forsline A. (2006). The Performance-Perceptual Test (PPT) and its relationship to aided reported handicap and hearing aid satisfaction. Ear and Hearing, 27, 229–242. doi:10.1097/01.aud.0000215976.64444.e6

- Schneider A. L., Gottesman R. F., Mosley T., Alonso A., Knopman D. S., Coresh J., & Selvin E. (2013). Cognition and incident dementia hospitalization: Results from the atherosclerosis risk in communities study. Neuroepidemiology, 40, 117–124. doi:10.1159/000342308

- Schum D. J., Matthews L. J., & Lee F. S. (1991). Actual and predicted word-recognition performance of elderly hearing-impaired listeners. Journal of Speech and Hearing Research, 34, 636–642.

- Sheldon S., Pichora-Fuller M. K., & Schneider B. A. (2008). Priming and sentence context support listening to noise-vocoded speech by younger and older adults. The Journal of the Acoustical Society of America, 123, 489–499. doi:10.1121/1.2783762

- Sindhusake D., Mitchell P., Smith W., Golding M., Newall P., Hartley D., & Rubin G. (2001). Validation of self-reported hearing loss: The Blue Mountains Hearing Study. International Journal of Epidemiology, 30, 1371–1378.

- Steiger J. H. (1980). Tests for comparing elements of a correlation matrix. Psychological Bulletin, 87, 245–251.

- Stine-Morrow E. A., Soederberg Miller L. M., & Nevin J. A. (1999). The effects of context and feedback on age differences in spoken word recognition. Journals of Gerontology: Series B: Psychological Sciences and Social Sciences, 54, P125–P134.

- Tun P. A., McCoy S., & Wingfield A. (2009). Aging, hearing acuity, and the attentional costs of effortful listening. Psychology and Aging, 24, 761–766. doi:10.1037/a0014802

- Vaden K. I. Jr., Kuchinsky S. E., Ahlstrom J. B., Dubno J. R., & Eckert M. A. (2015). Cortical activity predicts which older adults recognize speech in noise and when. Journal of Neuroscience, 35, 3929–3937. doi:10.1523/JNEUROSCI.2908-14.2015

- Vaden K. I. Jr., Kuchinsky S. E., Cute S. L., Ahlstrom J. B., Dubno J. R., & Eckert M. A. (2013). The cingulo-opercular network provides word-recognition benefit. Journal of Neuroscience, 33, 18979–18986. doi:10.1523/JNEUROSCI.1417-13.2013

- Ventry I. M., & Weinstein B. E. (1982). The hearing handicap inventory for the elderly: A new tool. Ear and Hearing, 3, 128–134.

- Weinstein B. E., & Ventry I. M. (1982). Hearing impairment and social isolation in the elderly. Journal of Speech and Hearing Research, 25, 593–599.

- Weinstein B. E., & Ventry I. M. (1983a). Audiologic correlates of hearing handicap in the elderly. Journal of Speech and Hearing Research, 26, 148–151.

- Weinstein B. E., & Ventry I. M. (1983b). Audiometric correlates of the Hearing Handicap Inventory for the Elderly. Journal of Speech and Hearing Disorders, 48, 379–384.

- Wiley T. L., Cruickshanks K. J., Nondahl D. M., & Tweed T. S. (2000). Self-reported hearing handicap and audiometric measures in older adults. Journal of the American Academy of Audiology, 11, 67–75.

- Wiley T. L., Cruickshanks K. J., Nondahl D. M., Tweed T. S., Klein R., & Klein B. E. (1998). Aging and word recognition in competing message. Journal of the American Academy of Audiology, 9, 191–198.

- Zekveld A. A., Kramer S. E., Kessens J. M., Vlaming M. S., & Houtgast T. (2009). User evaluation of a communication system that automatically generates captions to improve telephone communication. Trends in Amplification, 13, 44–68. doi:10.1177/1084713808330207
