Abstract

Background

Spaced education is a novel method that improves medical education through online repetition of core principles often paired with multiple-choice questions. This model is a proven teaching tool for medical students, but its effect on resident learning is less established. We hypothesized that repetition of key clinical concepts in a “Clinical Pearls” format would improve knowledge retention in medical residents.

Methods

We evaluated a novel, email-based spaced education reinforcement program using a randomized, self-matched design in which residents were quizzed on medical knowledge that either was or was not reinforced with electronically administered spaced education. Both reinforced and non-reinforced knowledge was later tested with four quizzes.

Results

Overall, respondents incorrectly answered 395 of 1008 questions (0.39; 95% CI, 0.36–0.42). Incorrect response rates varied by quiz (range 0.34–0.49; p = 0.02), but not significantly by post-graduate year (PGY1 0.44, PGY2 0.33, PGY3 0.38; p = 0.08). Although there was no evidence of benefit among residents (RR = 1.01; 95% CI, 0.83–1.22; p = 0.95), we observed a significantly lower risk of incorrect responses to reinforced material among interns (RR = 0.83, 95% CI, 0.70–0.99, p = 0.04).

Conclusions

Overall, repetition of Clinical Pearls did not significantly improve test scores among junior and senior residents. However, among interns, repetition of the Clinical Pearls was associated with significantly higher test scores, perhaps reflecting their greater attendance at didactic sessions and engagement with Clinical Pearls. Although the study was limited by a low response rate, we employed test and control questions within the same quiz, limiting the potential for selection bias. Further work is needed to determine the optimal spacing and content load of Clinical Pearls to maximize retention among medical residents. This particular protocol of spaced education, however, was unique and readily reproducible, suggesting its potential efficacy for intern education within a large residency program.

Introduction

Internal medicine residency curricula are designed around six core competencies, designated by the Accreditation Council for Graduate Medical Education, that are required for graduation, including patient care, medical knowledge, professionalism, systems-based practice, practice-based learning, and communication skills [1]. Residents reach many of these competencies through direct patient care on inpatient and outpatient teaching services [2,3]. Medical knowledge in particular must be supplemented with small- and large-group didactic sessions. However, these sessions are often sandwiched between busy days of patient care, leaving little room for reflection and retention of subject matter.

Knowledge retention among medical housestaff remains a pervasive concern [4]. Repetition of key clinical concepts has been shown to improve retention of medical knowledge. Much of this research has involved spaced education (SE), a novel, evidence-based form of online education demonstrated to improve knowledge acquisition in randomized trials [4–6]. The SE protocol classically involves a spacing effect and testing effect. The spacing effect includes repeated exposure to medical knowledge over a given time period to reinforce and consolidate retention. The testing effect typically leverages the principle that testing improves a learner’s performance. Previous studies have shown this model is successful with medical students, pediatric residents and surgical trainees [4, 7–9].

We sought to determine whether a modified version of SE focused on the spacing effect could be applied to a novel, email-based reinforcement program on medical facts amongst medical house-officers, using a randomized, self-matched design in which interns and residents were tested on material that was or was not further reinforced at random with electronically-administered SE.

Methods

The study was conducted at a single large, urban academic medical center with approximately 649 licensed beds. The internal medicine residency program is composed of approximately 160 housestaff. As education research, the project was deemed exempt from IRB approval by the Beth Israel Deaconess Medical Center Committee on Clinical Investigations.

To maximize retention, the residency program previously instituted a series of weekly "Clinical Pearls", or key learning points, from lectures and didactic sessions given throughout the week. These pearls are sent to all medical housestaff via email weekly and cover topics from major lecture series (Case Conferences, Morbidity and Mortality conference, Grand Rounds, Noon Conferences, Intern and Resident Report). All residents receive these “Pearls” each week, regardless of their individual rotation and attendance at conference. The Pearls document, which is drafted each week by a resident during his or her teaching elective, is typically one to two pages in length, in bullet-point format, and organized by day of week and didactic session.

Prior to this study, a survey of housestaff showed that 22% of residents read these emails weekly, while 72% read a Clinical Pearls email at least monthly. More than 60% of residents never referred back to old Clinical Pearls weekly emails. We hypothesized that synthesizing this learning material every month and testing this material would increase readership and improve retention.

From December 2013 to May 2014, one author (JM) compiled all weekly Pearls emails over a calendar month. Each individual learning point, or Pearl, underwent simple randomization to be reinforced or not reinforced via SE. Pearls that were not reinforced underwent no further repetition (i.e., the standard of care within the program). The reinforced Pearls were incorporated into a larger monthly document organized by subspecialty. This document was emailed each month to all housestaff in both Microsoft® Word and Portable Document Format (PDF) versions. The document contained reinforced Pearls from both the current and prior month (i.e., the February email contained reinforced Pearls from both January and February, etc.).
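The allocation step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the text specifies simple randomization but not the allocation probability or the tool used, so the 0.5 probability, the function name `randomize_pearls`, the seed, and the placeholder Pearl labels are all hypothetical.

```python
import random


def randomize_pearls(pearls, seed=None):
    """Assign each Pearl independently to one of two arms (simple randomization).

    Assumption: each Pearl has a 0.5 chance of being reinforced; the
    source does not state the allocation probability.
    """
    rng = random.Random(seed)
    arms = {"reinforced": [], "not_reinforced": []}
    for pearl in pearls:
        arm = "reinforced" if rng.random() < 0.5 else "not_reinforced"
        arms[arm].append(pearl)
    return arms


# Hypothetical placeholder Pearls standing in for one month of weekly emails.
month_pearls = [f"Pearl {i}" for i in range(1, 21)]
allocation = randomize_pearls(month_pearls, seed=2013)

# Only the reinforced arm would be carried into the monthly document.
monthly_document = allocation["reinforced"]
```

Because each Pearl is allocated independently, the two arms need not be of equal size in any given month; the quizzes described below draw an equal number of questions from each arm regardless.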

To test the spacing effect of the reinforced material, we sent out eight original multiple choice questions each month to the entire housestaff based directly on the Clinical Pearls. Half of these questions covered material that had been randomly reinforced by spaced learning in the weekly and monthly documents, and half covered material that had only appeared in the weekly email; these were not distinguished on the survey in any way (Table 1).

Table 1. Questions written each week.

| | Clinical Pearl Reinforced | Clinical Pearl NOT Reinforced |
|---|---|---|
| Week 1 | 1 Question written | 1 Question written |
| Week 2 | 1 Question written | 1 Question written |
| Week 3 | 1 Question written | 1 Question written |
| Week 4 | 1 Question written | 1 Question written |

Each week, after each individual Pearl was randomized to SE or no SE, two questions were written by either a resident on their teaching elective or by the author (JM). One question pertained to clinical information to be reinforced by SE, and the other was based on a Pearl not reinforced by SE, for a total of eight questions per month. This strategy was repeated for five months.

The eight questions covered material from weeks one and three of the most recent month and weeks two and four of the month prior (Table 2), ensuring that all weeks’ lectures and Pearls were evaluated with questions.

Table 2. Composition of each quiz for housestaff.

| Quiz | Content covered | Length |
|---|---|---|
| Quiz 1 (After Month 2) | Week 2/4 Questions from MONTH 1 and Week 1/3 Questions from MONTH 2 | 8 Questions |
| Quiz 2 (After Month 3) | Week 2/4 Questions from MONTH 2 and Week 1/3 Questions from MONTH 3 | 8 Questions |
| Quiz 3 (After Month 4) | Week 2/4 Questions from MONTH 3 and Week 1/3 Questions from MONTH 4 | 8 Questions |
| Quiz 4 (After Month 5) | Week 2/4 Questions from MONTH 4 and Week 1/3 Questions from MONTH 5 | 8 Questions |

Each monthly eight question quiz included questions from the current month and the previous month. Four questions were taken from material randomized to SE and four questions were taken from material not randomized to SE.
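The quiz composition in Table 2 follows a regular pattern that can be written out programmatically. The sketch below is illustrative only; the function names `quiz_composition` and `quiz_questions` and the dictionary layout are hypothetical and were not part of the study workflow.

```python
def quiz_composition(quiz_number):
    """Return the (month, week) pairs covered by a quiz, following Table 2.

    Quiz k is sent after month k + 1 and covers weeks 2 and 4 of month k
    plus weeks 1 and 3 of month k + 1.
    """
    prior_month = quiz_number
    current_month = quiz_number + 1
    return [(prior_month, 2), (prior_month, 4),
            (current_month, 1), (current_month, 3)]


def quiz_questions(quiz_number):
    """List the eight question slots of a quiz: one reinforced and one
    unreinforced question for each covered week."""
    slots = []
    for month, week in quiz_composition(quiz_number):
        for arm in ("reinforced", "not_reinforced"):
            slots.append({"month": month, "week": week, "arm": arm})
    return slots


for k in range(1, 5):
    print(f"Quiz {k}: {quiz_composition(k)}")
```

Each call to `quiz_questions` yields eight slots, four per arm, matching the balance between reinforced and unreinforced material described above.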

Subjects responded anonymously to these questions. No questions were repeated across surveys, and answers were not provided until all responses to questions were collected. These multiple choice questions were written by a resident on their teaching elective or by the author (JM) based directly on the weekly Clinical Pearls emails. All questions were reviewed by two reviewers (JM and AV) to ensure standardization of the level of difficulty, question format, and quality of the content tested. Questions were edited as necessary to meet the standards agreed upon between the two reviewers prior to study initiation. To maximize power, an incorrect response rate near but below 50% was sought.

In August 2014, during the subsequent academic year, a post-study survey was sent out to junior and senior residents who were housestaff during the study (S1 Fig). Using a Likert scale, the survey assessed how often housestaff read the weekly and monthly Pearls as well as the educational impact of the Pearls and quizzes. The residents were also asked to provide free text responses describing the barriers to reading Clinical Pearls and answering the quiz questions.

Statistical methods

Because correct responses were more common than incorrect, we evaluated the likelihood of any given question being answered incorrectly as the primary outcome. We present the overall proportion of incorrect responses with exact binomial confidence intervals.
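For readers who wish to reproduce an exact binomial interval, the following is a minimal sketch of the Clopper-Pearson method using only the Python standard library. It is not the software used in the study (which is not named in the text); the function names and the bisection approach are assumptions made for illustration. The counts 395 and 1008 are those reported in the Results.

```python
import math


def binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p), summed in log space for stability."""
    if p <= 0.0:
        return 1.0
    if p >= 1.0:
        return 1.0 if k >= n else 0.0
    total = 0.0
    for i in range(k + 1):
        log_term = (math.lgamma(n + 1) - math.lgamma(i + 1)
                    - math.lgamma(n - i + 1)
                    + i * math.log(p) + (n - i) * math.log1p(-p))
        total += math.exp(log_term)
    return min(total, 1.0)


def clopper_pearson(x, n, alpha=0.05):
    """Exact (Clopper-Pearson) two-sided confidence interval for x successes in n trials."""

    def solve(f, target):
        # f is decreasing in p, so bisection finds the p with f(p) = target.
        lo, hi = 0.0, 1.0
        for _ in range(60):
            mid = (lo + hi) / 2
            if f(mid) > target:
                lo = mid
            else:
                hi = mid
        return (lo + hi) / 2

    # Lower limit: P(X >= x | p) = alpha / 2, i.e. P(X <= x - 1 | p) = 1 - alpha / 2.
    lower = 0.0 if x == 0 else solve(lambda p: binom_cdf(x - 1, n, p), 1 - alpha / 2)
    # Upper limit: P(X <= x | p) = alpha / 2.
    upper = 1.0 if x == n else solve(lambda p: binom_cdf(x, n, p), alpha / 2)
    return lower, upper


lower, upper = clopper_pearson(395, 1008)
print(f"{395 / 1008:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```

This interval treats all 1008 responses as independent; it does not account for the clustering of responses within residents and quizzes that the regression models below address.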

To evaluate the effect of reinforcement on the frequency of correct answers, we used generalized estimating equations to account for clustering of questions within-resident and within-quiz. We estimated the relative risk of an incorrect response to questions that had been reinforced with SE relative to those that had not, using a log link, binomial distribution, and exchangeable correlation matrix. We adjusted for post-graduate year (in three categories) except in analyses stratified by year. We tested the effect of post-graduate year and the specific quiz given similarly using type three tests with two or three degrees of freedom, respectively.

Results

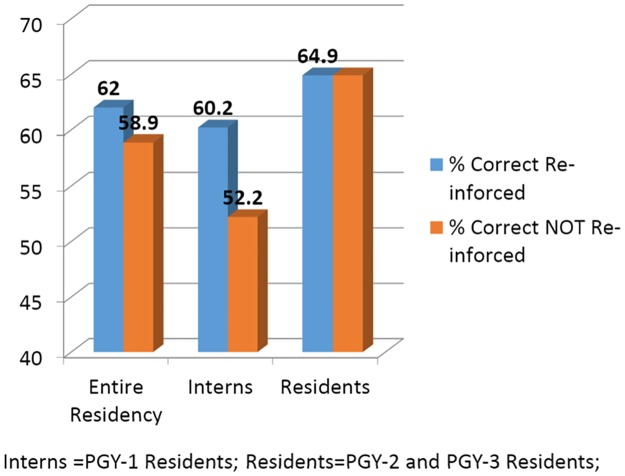

A total of four quizzes, each comprising eight questions, were distributed to housestaff. On average, 31 (SD 6.55) house officers responded to each quiz, representing a response rate of 20%. Interns comprised 47% of respondents, and junior and senior residents comprised the remainder. All respondents answered all the questions on each quiz, providing an identical number of responses to questions on reinforced and unreinforced material. Overall, respondents incorrectly answered 395 of 1008 questions (39%; 95% confidence interval [CI], 36%-42%). Incorrect response rates varied by quiz (range 34%-49%; p = 0.02) but not significantly by post-graduate year (PGY) (PGY1 44%, PGY2 33%, PGY3 38%; p = 0.08). In crude analyses, 188 of 504 questions on reinforced material were answered incorrectly, compared with 207 incorrect responses to unreinforced material (Fig 1).

Fig 1. Quiz results on material reinforced and not reinforced.

Quiz questions on reinforced material were answered correctly more often than questions on material that was not reinforced. Interns = PGY-1 Residents; Residents = PGY-2 and PGY-3 Residents.
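As a worked check of the crude counts reported above, an unadjusted relative risk can be computed directly from 188 of 504 versus 207 of 504 incorrect responses. The sketch below uses the standard large-sample interval on the log scale; it assumes independent responses and ignores clustering, so it is not the adjusted estimate from the generalized estimating equations and should not be expected to match it exactly. The function name `crude_relative_risk` is hypothetical.

```python
import math


def crude_relative_risk(events_a, n_a, events_b, n_b, z=1.96):
    """Unadjusted relative risk of group A vs. group B with a log-scale Wald interval.

    Assumes independent observations (no clustering), which is a
    simplification relative to the analysis described in the text.
    """
    rr = (events_a / n_a) / (events_b / n_b)
    se_log = math.sqrt(1 / events_a - 1 / n_a + 1 / events_b - 1 / n_b)
    lower = math.exp(math.log(rr) - z * se_log)
    upper = math.exp(math.log(rr) + z * se_log)
    return rr, lower, upper


# Counts reported in the Results: incorrect responses / questions answered.
rr, lower, upper = crude_relative_risk(188, 504, 207, 504)
print(f"crude RR = {rr:.2f} (95% CI {lower:.2f} to {upper:.2f})")

# Consistency check on the totals: 188 + 207 = 395 incorrect of 504 + 504 = 1008.
assert 188 + 207 == 395 and 504 + 504 == 1008
```

The crude estimate is roughly 0.91, close to the adjusted overall relative risk, which is what one would expect when reinforced and unreinforced questions are balanced within every quiz.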

We next evaluated the relative risk of an incorrect response overall and among interns and residents. In analyses accounting for clustering and adjusted for post-graduate year, the relative risk of answering a reinforced question incorrectly compared with an unreinforced question was 0.90 (95% CI, 0.79–1.03; p = 0.14). Although there was no evidence of benefit among junior and senior residents (RR = 1.01; 95% CI, 0.83–1.22; p = 0.95), we observed a significantly lower risk of incorrect responses to reinforced material among interns (RR = 0.83, 95% CI, 0.70–0.99, p = 0.04) (Table 3).

Table 3. Relative risk of answering a question on reinforced (vs. unreinforced) material incorrectly.

| Class | RR | 95% CI | P-value |
|---|---|---|---|
| All housestaff | 0.90 | 0.79–1.03 | 0.14 |
| PGY-1 | 0.83 | 0.69–0.99 | 0.04 |
| PGY-2 and PGY-3 | 1.00 | 0.82–1.22 | 0.95 |

RR = Relative Risk; CI = Confidence Interval. Data were clustered by individual and month of quiz and adjusted for PGY class.

A total of 27 housestaff responded to the post-study survey (S1 Fig). Of these, 73% of responders felt the addition of the monthly Clinical Pearls helped them learn. However, 56% wished for immediate feedback to question responses and 48% felt that the reinforcement program with reminder messages involved too many emails.

Discussion

In this randomized trial of a focused form of SE tied to an existing email system to reinforce key teaching points from weekly lectures, we found no statistical difference in retention of reinforced versus unreinforced material among our housestaff overall. However, retention was significantly better with reinforced material among interns.

There are several reasons that interns may have been most likely to respond to this intervention. Interns may have a steeper learning curve and therefore may be more motivated to review Clinical Pearls emphasized in didactic sessions, as more of this material is likely to be new. Indeed, their rate of incorrect responses was modestly higher than that of junior and senior residents, although residents of all years appeared to find the questions challenging (as intended). In addition, interns may be less likely to suffer from email fatigue, a common problem with this type of intervention in an increasingly digitalized housestaff environment. Our post-study survey suggested that many housestaff were put off by the reinforcement of Clinical Pearls because of the frequency of emails.

Repetition of clinical material has been shown to improve retention over time in other studies as well. A 2012 study of CPR skills and knowledge similarly showed that recertification status blunted the deterioration of knowledge-based skills (i.e. scene safety and EMS activation) over time. However, components requiring specific skills, such as chest marking, declined over time despite recertification status [10].

In traditional models of SE, when students submit an answer, the student is immediately presented with the correct answer and an explanation of the topic [7]. In addition to augmenting retention, regimented multiple-choice quizzes may also improve medical residents’ standardized testing results. Recent work has shown that a continuous 12-month multiple-choice testing program improved PGY-3 in-service training exam scores [11]. This, coupled with didactic exam attendance, may help combat declining American Board of Internal Medicine passing rates [12]. Although we did not formally evaluate the testing effect in our trial, our results suggest that quizzes supplemented with immediate feedback may be received warmly, as the majority of respondents wanted quizzes to contain answers. Nonetheless, for long-term sustainability, we tested a design that only required compilation and reinforcement of existing email-based teaching points. Future studies could contrast this method to one in which bullet points are reinforced by testing, rather than reiteration, to determine if the added burden of quiz creation would merit its incremental effort.

Our study has both strengths and weaknesses. A particular strength of this study was its method of randomization. We did not randomize house officers; in our experience, substantial crossover can occur in novel interventions that only some residents receive. Instead, the subject matter was randomized, thereby allowing the entire housestaff to participate in the program. This eliminated unintended crossover between groups and also allowed each quiz to serve as its own control, increasing the efficiency of the design. We studied approximately 16 weeks of reinforced material, allowing for a broad range of questions and a large sample size of questions. The SE was all administered electronically, which required 1–2 hours of organizational labor each week, although most of the effort inherent in this study was devoted to studying its effect rather than to the intervention itself. We suspect that as smartphone applications that can be harnessed for medical education become more ubiquitous, the process of SE import and extraction may simplify [13–16]. Importantly, this intervention was well-liked among the housestaff. Based on our post-intervention survey, 74% of house officers agreed the Clinical Pearls were a good learning exercise and 67% of house officers said they learned from the monthly quizzes, despite a one-week delay in receiving the answer key. We feel this particular protocol of spaced education, though unique, is easily reproducible and feasible even within a large and busy medical residency program. Barriers include the necessity for motivated and experienced residents to compile Clinical Pearls and organize quizzes. In our program, a dedicated house officer on a teaching elective greatly facilitated the protocol.

Limitations of this study include a low response rate. The multiple choice quizzes were optional and were given online, with the link provided via email. However, the fact that each quiz included both intervention and control questions substantially minimizes the bias that a low response rate might otherwise produce. In addition, we had no means of determining who read the weekly or monthly Pearls. Therefore, it was unclear whether those who read the Pearls were the same residents who took the quizzes; if not, the benefit of this intervention may have been markedly underestimated. This study also did not use a typical SE protocol. In our study, housestaff were not given immediate feedback to their quiz responses, and questions were not repeated in a regimented program as is typically performed with SE. Our effect size may have been stronger had we used a more quintessential model, albeit with less immediate applicability to programs like ours.

We have no objective measure of the quality of our assessment questions, although they were all edited by two co-authors, including an associate residency program director. Two observations suggest that these questions achieved their desired aims. First, we aimed for a correct-response rate similar to that measured, suggesting that our calibration of difficulty was accurate. Second, the fact that we observed an effect of SE using these questions, albeit only among interns, suggests that the questions were sufficiently sensitive to change; had they been substantially easier or more difficult, it would have been impossible to detect such an effect.

In summary, in this randomized trial, we found that reinforcement of learning material significantly improved knowledge retention among medical interns, but not among junior and senior residents. SE appears to be a promising and readily incorporated modality for improving retention when added to low-cost, highly-accessible electronic reinforcement systems.

Supporting information

Clinical Pearls Assessment Survey.

(TIFF)

IRB determination email.

(MSG)

IRB determination form.

(DOCX)

Acknowledgments

The authors acknowledge Dr. C. Christopher Smith and the internal medicine residents at Beth Israel Deaconess Medical Center for their support and contribution to this educational intervention.

Data Availability

All relevant data are within the paper and its Supporting Information files.

Funding Statement

The authors received no specific funding for this work.

References

1. Chaudhry SI, Lien C, Ehrlich J, Lane S, Cordasco K, McDonald FS, et al. Curricular content of internal medicine residency programs: a nationwide report. The American journal of medicine 2014;127:1247–54. doi: 10.1016/j.amjmed.2014.08.009

2. Holmboe ES, Bowen JL, Green M, Gregg J, DiFrancesco L, Reynolds E, et al. Reforming internal medicine residency training. A report from the Society of General Internal Medicine's task force for residency reform. Journal of general internal medicine 2005;20:1165–72. doi: 10.1111/j.1525-1497.2005.0249.x

3. Association of Program Directors in Internal M, Fitzgibbons JP, Bordley DR, Berkowitz LR, Miller BW, Henderson MC. Redesigning residency education in internal medicine: a position paper from the Association of Program Directors in Internal Medicine. Annals of internal medicine 2006;144:920–6.

4. Kerfoot BP, Brotschi E. Online spaced education to teach urology to medical students: a multi-institutional randomized trial. American journal of surgery 2009;197:89–95. doi: 10.1016/j.amjsurg.2007.10.026

5. Shaw T, Long A, Chopra S, Kerfoot BP. Impact on clinical behavior of face-to-face continuing medical education blended with online spaced education: a randomized controlled trial. The Journal of continuing education in the health professions 2011;31:103–8. doi: 10.1002/chp.20113

6. Kerfoot BP, Kearney MC, Connelly D, Ritchey ML. Interactive spaced education to assess and improve knowledge of clinical practice guidelines: a randomized controlled trial. Annals of surgery 2009;249:744–9. doi: 10.1097/SLA.0b013e31819f6db8

7. Kerfoot BP, Baker H, Pangaro L, Agarwal K, Taffet G, Mechaber A, et al. An online spaced-education game to teach and assess medical students: a multi-institutional prospective trial. Academic medicine: journal of the Association of American Medical Colleges 2012;87:1443–9.

8. Gyorki DE, Shaw T, Nicholson J, Baker C, Pitcher M, Skandarajah A, et al. Improving the impact of didactic resident training with online spaced education. ANZ journal of surgery 2013;83:477–80. doi: 10.1111/ans.12166

9. Mathes EF, Frieden IJ, Cho CS, Boscardin CK. Randomized controlled trial of spaced education for pediatric residency education. Journal of graduate medical education 2014;6:270–4. doi: 10.4300/JGME-D-13-00056.1

10. Anderson GS, Gaetz M, Statz C, Kin B. CPR skill retention of first aid attendants within the workplace. Prehospital and disaster medicine 2012;27:312–8. doi: 10.1017/S1049023X1200088X

11. Mathis BR, Warm EJ, Schauer DP, Holmboe E, Rouan GW. A multiple choice testing program coupled with a year-long elective experience is associated with improved performance on the internal medicine in-training examination. Journal of general internal medicine 2011;26:1253–7. doi: 10.1007/s11606-011-1696-7

12. McDonald FS, Zeger SL, Kolars JC. Factors associated with medical knowledge acquisition during internal medicine residency. Journal of general internal medicine 2007;22:962–8. doi: 10.1007/s11606-007-0206-4

13. Sayedalamin Z, Alshuaibi A, Almutairi O, Baghaffar M, Jameel T, Baig M. Utilization of smart phones related medical applications among medical students at King Abdulaziz University, Jeddah: A cross-sectional study. Journal of infection and public health 2016;9:691–7. doi: 10.1016/j.jiph.2016.08.006

14. Dimond R, Bullock A, Lovatt J, Stacey M. Mobile learning devices in the workplace: 'as much a part of the junior doctors' kit as a stethoscope'? BMC medical education 2016;16:207. doi: 10.1186/s12909-016-0732-z

15. Blumenfeld O, Brand R. Real time medical learning using the WhatsApp cellular network: a cross sectional study following the experience of a division's medical officers in the Israel Defense Forces. Disaster and military medicine 2016;2:12. doi: 10.1186/s40696-016-0022-7

16. Cavicchi A, Venturini S, Petrazzuoli F, Buono N, Bonetti D. INFORMEG, a new evaluation system for family medicine trainees: feasibility in an Italian rural setting. Rural and remote health 2016;16:3666.
