Abstract

Health-care service delivery models have evolved from a practitioner-centered approach toward a patient-centered ideal. Concurrently, increasing emphasis has been placed on the use of empirical evidence in decision-making to increase clinical accountability. The way in which clinicians use empirical evidence and client preferences to inform decision-making provides insight into the health-care delivery models used in clinical practice. The present study aimed to investigate the sources of information audiologists use when discussing rehabilitation choices with clients, and to discuss the findings within the context of evidence-based practice and patient-centered care. To assess changes that may have occurred over time, this study used a questionnaire based on one of the few studies of decision-making behavior in audiologists, published in 1989. The present questionnaire was completed by 96 audiologists who attended the World Congress of Audiology in 2014. The responses were analyzed using qualitative and quantitative approaches. Results suggest that audiologists rank clinical test results and client preferences as the most important factors for decision-making. Discussion with colleagues or experts was also frequently reported as an important source influencing decision-making. Approximately 20% of audiologists mentioned using research evidence to inform decision-making when no clear solution was available. Information shared at conferences was ranked low in terms of importance and reliability. This study highlights an increase in awareness of concepts associated with evidence-based practice and patient-centered care within audiology settings, consistent with current research-to-practice dissemination pathways. It also suggests that these pathways may not be sufficient for effective clinical implementation of these practices.

Keywords: decision-making, audiology, evidence-based practice, patient-centered care

Introduction

The type of information used by various health-care professionals in their clinical decision-making has been widely studied (Heiwe et al., 2011; Metcalfe et al., 2001; Sosnowy, Weiss, Maylahn, Pirani, & Katagiri, 2013; Straus, Tetroe, & Graham, 2011). There is, however, a paucity of current research relating to decision-making in audiology. Clinical decision-making is a contextual, continuous, and evolving process, where data are gathered, interpreted, and evaluated in order to select a course of action (Tiffen, Corbridge, & Slimmer, 2014). The way that clinicians make decisions about patient care provides insights into their style of practice. A study conducted by Doyle in 1989 surveyed Australian audiologists’ breadth of decision-making, including the frequency and topics of decisions relating to diagnostic (e.g., which test to use), rehabilitative (e.g., which type of hearing device to recommend), and procedural decisions (e.g., how to manage waiting lists). That study also surveyed the sources of information used when making decisions (e.g., client’s preferences, journals, or colleagues); the perceived degree of difficulty in reaching decisions; and the perceived confidence in decisions. The findings suggested that audiologists considered published literature a low priority for assisting clinical decisions, as it was seldom mentioned and consistently ranked below other sources of information. Interestingly, audiologists perceived some degree of difficulty in making decisions due to complex and sometimes conflicting information sources; however, they generally felt confident in their decisions (Doyle, 1989).

Over the last 30 years, health-care service delivery models have evolved away from the long-standing practitioner-centered framework, where decisions are unilateral and formed primarily from expert opinion, toward a model that is more patient-centered, placing greater emphasis on patient engagement and shared decision-making (Richards, Montori, Godlee, Lapsley, & Paul, 2013). As described by Berwick (2016), there has been a transition from an era in which clinicians had the authority to judge the quality of their own work toward an era promoting outcome measurement and accountability to patients, third-party funding bodies, and society. This transition resulted from unexplained variations between practices and patient outcomes, high rates of injuries from errors, and increased intervention costs (Brennan et al., 1991; McIntyre & Popper, 1983; Vincent, Neale, & Woloshynowych, 2001; Wilson et al., 1995). Clinical practice guidelines (CPG), including performance measures (Williams, Schmaltz, Morton, Koss, & Loeb, 2005) and checklists (Cohen, Littenberg, Wetzel, & Neuhauser, 1982; Haynes et al., 2009), were introduced to minimize practice variability and error rates. Ideally, these guidelines are developed based on principles of evidence-based practice (EBP; Haines & Jones, 1994; Woolf, 1992). That is, guidelines should be developed from the systematic identification and synthesis of the best available scientific evidence, in conjunction with clinical expertise and patient values (National Health and Medical Research Council, 1999; Sackett, Rosenberg, Gray, Haynes, & Richardson, 1996; Wolf, 1999). Clinicians’ imperative to align their practice with EBP is reflected in the professional practice standards of many professional bodies.

While the important role of patient input is recognized in EBP, the specific concept of patient-centered care (PCC) has also received greater attention in health care in recent years. In its most basic form, PCC concerns patient–clinician interaction and emphasizes relationship building and the sharing of input and control in information exchange and decision-making. That is, PCC advocates a more biopsychosocial and mutualistic approach (Roter, 2000), whereby the patient’s beliefs, goals, and perspectives are taken into account in the development of practice guidelines and the delivery of health care (Montori, Brito, & Murad, 2013).

EBP and PCC models may be more difficult to achieve within health-care professions that also function as a business practice, where financial interests might be in conflict with the patient’s best interest. For example, audiologists in many countries can both assess hearing and dispense hearing aids to the general public (Metz, 2000), and recent reports suggest that audiology has a focus on product sales (Lowy, 2013; Shaw, 2016). Audiology therefore provides an interesting field to evaluate the adoption of EBP and PCC practices.

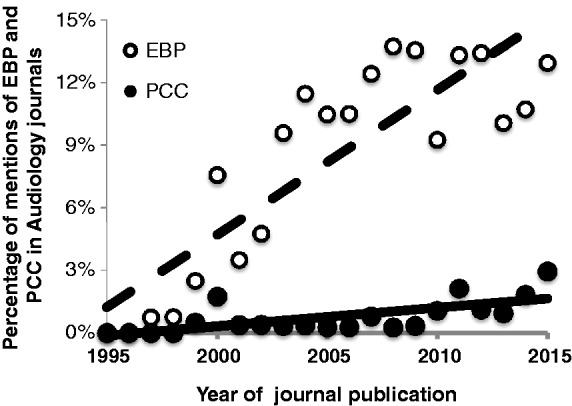

Over the last decade, several authors have studied or promoted the benefits of using EBP (Cox, 2005; Moodie et al., 2011; Wong & Hickson, 2011) and PCC in audiology (Ekberg, Grenness, & Hickson, 2014; Grenness, Hickson, Laplante-Lévesque, & Davidson, 2014; Grenness, Hickson, Laplante-Lévesque, Meyer, & Davidson, 2015a; Grenness, Hickson, Laplante-Lévesque, Meyer, & Davidson, 2015b; Laplante-Lévesque, Hickson, & Grenness, 2014; Laplante-Lévesque, Hickson, & Worrall, 2010; Poost-Foroosh, Jennings, Shaw, Meston, & Cheesman, 2011). As shown in Figure 1, among all articles (n = 6,152) including “audiology” in the title, abstract, or keywords, none included “evidence-based practice” or “patient-centered care” in 1995. Twenty years later, the inclusion of EBP and PCC had increased to 14% and 3%, respectively (based on the Scopus database, Elsevier). Given the increase in publications and the formalization by professional associations of the expectation of EBP and PCC, it is expected that the patterns of information used to influence decision-making by audiologists may have changed since Doyle (1989). Yet some research suggests that, contrary to EBP, audiologists are not effectively using empirical research evidence (Moodie et al., 2011). For example, the use of real-ear measurements for hearing aid fitting, a widely evidenced process for verifying and documenting individuals’ amplification settings (Aazh & Moore, 2007) and improving patient satisfaction (Kochkin et al., 2010), is reported to be inconsistent across the United States. One survey found that only 52% of the 420 participating clinicians routinely used real-ear measurements for hearing aid verification (Mueller & Picou, 2010). Moreover, recent evidence suggests that patient involvement in decisions, a central tenet of PCC and EBP, is limited in audiology practice (Ekberg et al., 2014; Grenness et al., 2015b).

Figure 1.

Percentage of articles over time, in audiology journals, that mention evidence-based practice (EBP) and patient-centered care (PCC).

Due to the increased focus on EBP and PCC, in combination with indications of notable discrepancies between recommended best practice and current practice, there is a need to reevaluate how clinical decisions are made in audiology. This questionnaire-based study aimed to: (a) identify how audiologists rate the importance and reliability of different sources of information; (b) identify which sources of information audiologists mention accessing in difficult clinical scenarios; (c) assess perceived difficulty and confidence in decision-making; (d) evaluate how these aspects of decision-making (a–c) have changed relative to Doyle (1989); and (e) assess how compatible current clinical decision-making behaviors are with EBP and PCC.

Methodology

Participants

Participants were practicing audiologists who attended the XXXII World Congress of Audiology (WCA; Brisbane, Australia, 2014). They were invited to complete an online or paper version of the questionnaire described below. Attendees were approached directly at the parallel WCA Trade Exposition, where the researchers were stationed at the HEARing Cooperative Research Centre booth. A web link to the survey, and an invitation to visit the booth to complete it, were also advertised during WCA presentations. A total of 96 questionnaires were completed, out of an estimated 1,138 practicing audiologists who attended the conference. This number was estimated from the number of certificates of attendance requested from Audiology Australia for the purpose of continuing professional development programs (personal communication with the Chair of the Congress as well as the Operation Manager of Audiology Australia). This corresponds to a response rate of approximately 8.4% (96/1,138). Demographic characteristics of the respondents are shown in Table 1.

Table 1.

Demographic Characteristics of Participants.

| Characteristics | % |
|---|---|
| Region of practice | |
| Asia Pacific | 90% |
| (Australia only) | (84%) |
| Asia and Middle East | 6% |
| Europe and Americas | 4% |
| Gender | |
| Male | 33% |
| Female | 67% |
| Age (years) | |
| <30 | 28% |
| ≥30 and <50 | 55% |
| ≥50 | 17% |
| Education | |
| Undergraduate | 9% |
| Masters or Postgraduate diploma | 80% |
| PhD or AuD | 11% |
| Experience (years) | |
| <10 | 51% |
| 10–20 | 28% |
| >20 | 16% |
| Funding of practice | |
| Public | 43% |
| Private | 35% |
| Both | 22% |

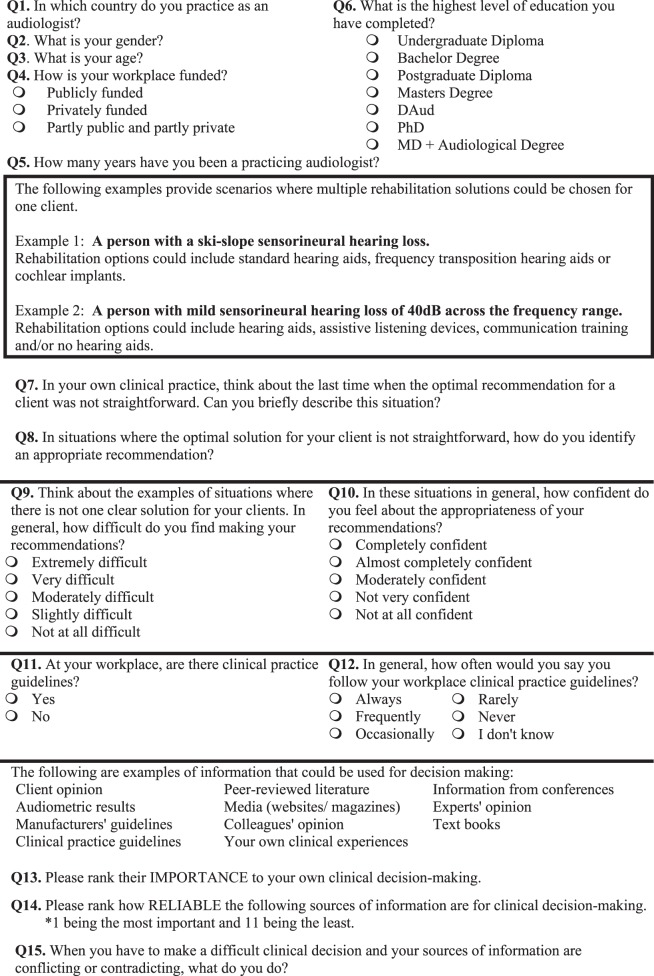

Questionnaire

The questionnaire was based on Doyle’s (1989) survey of Australian audiologists’ decision-making. Doyle (1989) covered three types of decisions: (a) diagnostic, for example, the selection of particular tests, referrals for additional investigations, or the classification of results; (b) rehabilitative, for example, the decision to fit a hearing aid, the prescription of hearing aid characteristics, or referral for a particular therapy; and (c) procedural, for example, the formulation of client eligibility criteria, the allocation of time for client contact, the development of waiting list policies, or referral procedures. The questionnaire in this study focused only on rehabilitative decisions. To help respondents conceptualize the difficult decisions being addressed, examples of scenarios in which multiple rehabilitation options were possible were provided. Respondents were then asked to give an example of a situation in which the optimal solution was not straightforward and to describe how they arrived at their recommendation.

Questionnaire items included rating scales, ranking questions, and open-ended questions relating to decision-making behaviors. The topics covered in the questionnaire included decision-making difficulties, confidence in recommendations, and information used when making decisions. Ranking questions required participants to order common sources of information used in decision-making both in terms of importance and reliability. The questionnaire was piloted with 10 audiologists and feedback regarding the questions was incorporated before distribution. The complete questionnaire is found in the Appendix.

Analysis

Wilcoxon signed-rank tests with Bonferroni correction for multiple testing were used to compare the ranking questions, while a multiple linear regression analysis was used to determine whether age, experience, or practice funding may influence respondents’ self-reported use of CPG. The use of CPG was measured on a Likert-type scale, which can be argued to be ordinal and would therefore formally violate the assumptions of linear regression. However, as extensively discussed in the literature, the gains in power outweigh the small biases that treating such data as interval may cause (cf. Knapp, 1990; Labovitz, 1970). A p value of .05 was used to determine significance.
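The paired importance-versus-reliability comparison can be sketched in Python as follows. This is a minimal illustration, not the authors’ analysis script: it assumes each respondent contributes one importance rank and one reliability rank per source, uses the normal approximation to the Wilcoxon signed-rank statistic (zero differences dropped, average ranks for ties, tie-corrected variance), and applies the Bonferroni correction by dividing α = .05 by the number of sources compared. With the 11 sources of Table 2 this gives a per-test threshold of about .0045. The function names and dictionary layout are hypothetical.

```python
import math


def wilcoxon_signed_rank(x, y):
    """Two-sided Wilcoxon signed-rank test for paired samples x and y.

    Normal approximation: zero differences are dropped, tied absolute
    differences receive average ranks, and the variance is corrected
    for ties. Returns (W_plus, z, p).
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        return 0.0, 0.0, 1.0  # no informative pairs
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        # Extend j over a run of equal absolute differences.
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1  # positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p value
    return w_plus, z, p


def compare_rankings(importance, reliability, alpha=0.05):
    """Per-source paired test of importance vs. reliability ranks.

    importance, reliability: dict mapping source -> list of ranks, where
    the i-th entry of each list comes from the same respondent.
    """
    threshold = alpha / len(importance)  # Bonferroni: alpha / number of tests
    results = {}
    for source, imp in importance.items():
        _, z, p = wilcoxon_signed_rank(imp, reliability[source])
        results[source] = {"z": z, "p": p, "significant": p < threshold}
    return threshold, results
```

Because 1 is the most important or reliable rank, a negative z for a source indicates that it tended to be ranked as more important than reliable.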

The responses to the two open-ended questions were initially analyzed using a qualitative deductive approach to content analysis (Elo & Kyngäs, 2008; Graneheim & Lundman, 2004). The questions were as follows: (a) In situations where the optimal solution for your client is not straightforward, how do you identify an appropriate recommendation? (b) When you have to make a difficult clinical decision and your sources of information are conflicting or contraindicating, what would you do? The two questions were similar, but the first was placed at the beginning of the questionnaire and focused on difficult scenarios where more than one option is possible, whereas the second was placed at the end of the questionnaire and focused on conflicting information. Responses to the second question may therefore have been more influenced by the content of the questionnaire itself than responses to the first. Responses to the open-ended questions were read several times, and recurring ideas were identified, then compared and contrasted across participant responses to extract meaning (Graneheim & Lundman, 2004). The meaning units were then condensed into codes and categorized. The initial categorization followed a structured matrix based on the information used in decision-making in Doyle’s (1989) study (i.e., client history; client characteristic; information from client’s family and friends; referral or file notes; test results; texts, journals, or other publications). Aspects of the data that did not fit the matrix were further analyzed to build new categories using an inductive approach (Elo & Kyngäs, 2008). Two investigators reviewed the survey responses, first independently and then together, until consensus was reached on the final categories. The final categories identified were: client goals and preferences; discussion with colleagues or experts; trial and error; texts, journals, or other publications; further test results; and clinical experience. The categories were then analyzed quantitatively to determine how frequently each was mentioned. This study was approved by Macquarie University’s Human Sciences Research Ethics Sub-Committee.
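The quantitative step, counting how often each category was mentioned and whether it was mentioned alone or together with other categories (the breakdown reported in the Results), can be sketched as below. The sketch assumes that each respondent’s coded answer is stored as a set of category labels and that percentages use the number of respondents as the denominator; the function name, labels, and four-respondent example are hypothetical and are not study data.

```python
from collections import Counter


def tally_categories(coded_responses):
    """Summarize coded open-ended answers.

    coded_responses: list with one set of category labels per respondent
    (an empty set means no codable answer). Percentages use the number of
    respondents as the denominator. Returns (summary, total_mentions).
    """
    n_respondents = len(coded_responses)
    mentioned = Counter()
    alone = Counter()
    for categories in coded_responses:
        mentioned.update(categories)  # +1 for each category this respondent used
        if len(categories) == 1:
            alone.update(categories)  # category was the only source mentioned
    summary = {}
    for category, n in mentioned.items():
        summary[category] = {
            "n": n,
            "pct_mentioned": 100 * n / n_respondents,
            "pct_alone": 100 * alone[category] / n_respondents,
            "pct_combined": 100 * (n - alone[category]) / n_respondents,
        }
    return summary, sum(mentioned.values())


# Hypothetical coded answers from four respondents.
responses = [
    {"client goals"},
    {"client goals", "trial and error"},
    {"colleagues"},
    {"client goals", "publications", "colleagues"},
]
summary, total = tally_categories(responses)
print(summary["client goals"])  # n=3, 75% mentioned, 25% alone, 50% combined
print(total)                    # 7 mentions across 4 respondents
```

Because one respondent can contribute several categories, the total number of mentions can exceed the number of respondents, as in Tables 3 and 4.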

Results

Demographic Profile of Respondents

The majority of participants who completed the questionnaire were from Australia (n = 81) and held either a Master’s degree or a postgraduate diploma in audiology as their highest qualification (80%). This is consistent with the requirements to practice audiology in Australia, where a postgraduate diploma was sufficient until the beginning of 2000, after which the qualification required was a Master’s degree (Upfold, 2008). Additional characteristics of the respondents are outlined in Table 1.

Conceptualization of Difficult Clinical Situations

After reading examples of audiology rehabilitation scenarios where the intervention was not straightforward (see scenarios in Appendix), respondents were asked to write an example of their own. This question was included to help respondents to further conceptualize this type of situation before answering the rest of the questionnaire. Respondents noted multiple examples of difficult situations which involved factors such as: selecting the most appropriate intervention for particular types of hearing losses (n = 65/96, e.g., high or low frequency slope, conductive and mixed losses, cochlear implantation for less than profound losses, hearing asymmetries, auditory processing disorders); or the complications associated with aging (n = 6/96), multidisability (n = 6/96), or low client motivation to pursue intervention (n = 7/96). These examples are consistent with decision-making relating to rehabilitative decisions.

Ranking of Information Sources for Decision-Making

As demonstrated in Table 2, information sources with the highest average importance ranking were audiometric test results, clinical experience, and client opinion. In contrast, media, textbooks, and information from conferences were ranked as the least important sources for decision-making.

Table 2.

Importance and Reliability Rankings of Information Sources for Decision-Making.ᵃ

| | Audiometric results | Clinical experience | Client opinion | Practice guidelines | Peer-reviewed literature | Colleagues' opinion | Experts' opinion | Manufacturers' guidelines | Conferences | Textbooks | Media |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Importance ranking | | | | | | | | | | | |
| Mean | 2.6 | 3.3 | 3.5 | 4.2 | 6.1 | 6.3 | 6.7 | 7.1 | 7.9 | 8.3 | 10.2 |
| Median | 2.0 | 3.0 | 3.0 | 4.0 | 7.0 | 6.0 | 7.0 | 7.0 | 8.0 | 9.0 | 11.0 |
| SD | 1.8 | 1.9 | 2.6 | 2.5 | 2.7 | 2.1 | 2.2 | 2.5 | 2.0 | 2.4 | 1.4 |
| Reliability ranking | | | | | | | | | | | |
| Mean | 3.0 | 3.9 | 5.4 | 4.7 | 5.0 | 6.2 | 6.0 | 7.1 | 7.4 | 7.3 | 10.1 |
| Median | 2.0 | 4.0 | 5.0 | 4.0 | 5.0 | 6.0 | 6.0 | 7.0 | 8.0 | 8.0 | 11.0 |
| SD | 2.3 | 2.4 | 3.3 | 2.7 | 2.7 | 2.6 | 2.6 | 2.6 | 2.2 | 2.8 | 1.8 |

ᵃRanking scale: 1 = most important or reliable; 11 = least important or reliable.

The pattern of results was different for ratings of the information source’s reliability, with audiometric data, past experience, and CPGs demonstrating the highest average rankings (Table 2). However, similar to importance ratings, media, textbooks, and information from conferences had the lowest average reliability rankings. Peer-reviewed literature and the opinion of colleagues and experts were consistently ranked middle of the range for both importance and reliability.

Similarly, in Doyle (1989), the majority of respondents ranked information from clients and test results as the most important sources of information, and information from texts, journals, and other publications was ranked lower than all other categories. In the present study, Bonferroni-corrected Wilcoxon signed-rank tests identified four sources whose importance and reliability rankings differed significantly (p < .004). Clients’ opinion and past clinical experience were ranked as more important than reliable, whereas peer-reviewed literature and textbooks were ranked as more reliable than important, relative to the other sources.

Self-Reported Use of Information in Decision-Making

Two open-ended questions asked respondents how they arrived at a recommendation when faced with a scenario in which there was no clear solution, and what they would do if presented with conflicting information. Participants’ responses were analyzed using qualitative deductive content analysis and grouped into six categories according to the information they used in such scenarios (Table 3).

Table 3.

Categorization of Open-Ended Responses for the Question “In situations where the optimal solution for your client is not straightforward, how do you identify an appropriate recommendation?”

| Category | N |
|---|---|
| Client goals and preferences | 52 |
| Discussion with colleagues or experts | 26 |
| Trial and error | 26 |
| Texts, journals or other publications | 20 |
| Further test results | 11 |
| Clinical experience | 6 |
| Total | 141ᵃ |

ᵃMultiple responses possible; mean number of sources reported = 1.45.

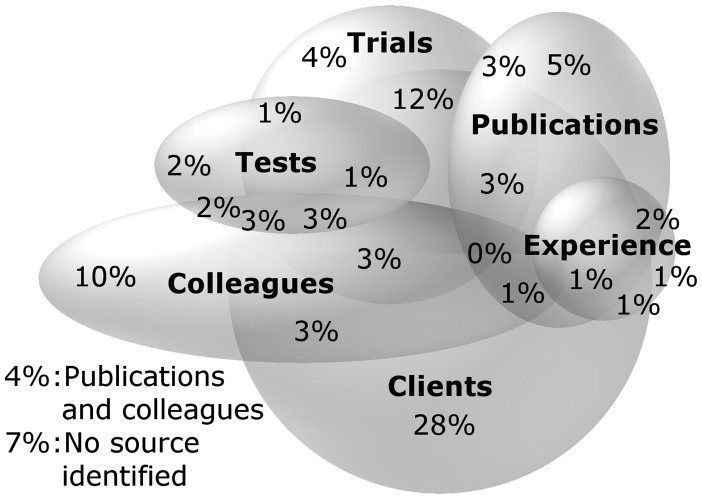

Results indicated that 54% of the 96 respondents considered clients’ goals and preferences in their decision-making, and 42% reported using a combination of multiple sources of information (as illustrated in Figure 2). For example, 12% mentioned a combination of client factors and trial and error: “I ask clients how they feel about options or what they would like to try first.” A further 28% mentioned client factors as their only source of information, for example: “By speaking with the client and identifying their wants and needs.” Interestingly, in these difficult cases, 10% reported referring only to colleagues or experts. By comparison, while 5% reported using only texts, journals, or other publications when a decision was not straightforward, 21% mentioned a combination of publications with other sources of information: “I would make a decision based on my clinical experience and peer reviewed literature. Also I would consult my colleague.” And: “[I would] weight everything from results, practice guidelines, textbooks and colleagues opinion.”

Figure 2.

Illustration of the combinations of sources of information identified by audiologists in response to the question: “In situations where the optimal solution for your client is not straightforward, how do you identify an appropriate recommendation?” Because exact proportional scaling and overlap of six variables cannot be represented, this illustration is an approximation.

The second open-ended question asked clinicians what they would do when faced with conflicting information sources (Table 4). For this question, the largest proportion of the 96 respondents (39%) stated that they would use a combination of different sources of information (Figure 3), while 30% reported that they would refer only to a colleague or expert, for example, “Consult peers and clinical leaders.” Another 26% reported that they would refer to colleagues or experts in conjunction with other sources of information, such as texts, journals, or other publications: “[I would] check literature and discuss with colleagues.” Use of patients’ goals and preferences in conjunction with other sources was reported by 21% of participants, while 10% reported using patients’ goals and preferences alone, for example: “[I] give preference to the option that the client is most interested in, I hand the choice over.” Client factors, alone or in combination with other factors, were thus mentioned less frequently in response to the second question than to the first. References to colleagues or expert opinion were more frequent, while mentions of publications remained similar. Only 4% of respondents stated that they would use texts, journals, or other publications alone in these scenarios, for example, “Refer to literature.” Similarly, 7% of participants reported that they would use their professional experience to guide their decision-making, without mentioning any other factors, as illustrated by the statements: “[I] trust my judgment,” and “[I] choose the source I find most reliable or base it on my previous experience in a similar situation.”

Table 4.

Categorization of Open-Ended Responses for the Question “When you have to make a difficult clinical decision and your sources of information are conflicting or contraindicating, what would you do?”

| Category | N |
|---|---|
| Client goals and preferences | 31 |
| Discussion with colleagues or experts | 54 |
| Trial and error | 14 |
| Texts, journals or other publications | 22 |
| Further test results | 6 |
| Clinical experience | 16 |
| Total | 143ᵃ |

ᵃMultiple responses possible; mean number of sources reported = 1.48.

Figure 3.

Illustration of the combinations of sources of information identified by audiologists in response to the question: “When you have to make a difficult clinical decision and your sources of information are conflicting or contraindicating, what would you do?” Because exact proportional scaling and overlap of six variables cannot be represented, this illustration is an approximation.

Because EBP is based on using a combination of different sources of information (empirical evidence, patient factors, and clinical expertise), it was not identified as a specific category in Tables 3 and 4. Across both open-ended questions, however, 13 clinicians mentioned EBP directly or indirectly in situations where there was no clear solution for the client as demonstrated by the following quotes: “All decisions are made on some-what evidence-based practice,” “I base my recommendations […] on my professional knowledge of the scientific evidence plus my clinical experience of similar situations […] fitting the needs of the patient.”

A total of 81 audiologists reported having CPG available at their clinics. Within this group, a multiple regression analysis was used to assess factors that may affect the use of CPG, specifically: gender, age, years of practice, level of education, and whether the clinic is publicly or privately funded. Basic descriptive statistics and coefficients are shown in Table 5. The seven-predictor model accounted for 27% of the variance and suggested that older age, fewer years in practice, and working in a publicly funded clinic significantly influenced how often guidelines were followed, F(7, 71) = 3.967, p < .002. Specifically, on a scale of 1 to 5 where 1 = Always and 5 = Never, each decade of age increased the tendency to follow guidelines by 0.6, whereas each decade of practice decreased the tendency to follow guidelines by 0.7 (the reversed signs in Table 5 arise because lower values indicate more frequent compliance with guidelines). Consequently, clinicians who began practice at an older age tended to follow guidelines more often than clinicians of the same age who began practice relatively young. Compared with clinicians in fully privately funded practice, clinicians in publicly funded practice tended to follow guidelines more often (by 0.5 on the 1 to 5 scale). Note that while the tolerance was above 0.15 and the variance inflation factor was below 6 for all variables, the correlation between age and experience (r = .89) indicated potential problems with multicollinearity. Nevertheless, the full model is presented with both variables (age and experience) included because their effects run in opposite directions, providing tentative explanations for contradictory findings in previous research. As a robustness check, an ordinal regression analysis corroborated the findings of the linear regression analysis.
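The per-decade effects and the multicollinearity check follow from simple arithmetic on the Table 5 estimates, sketched below. The two constants are the unstandardized B values for age and years of practice (per year, on the 1 = Always to 5 = Never scale); the helper names are hypothetical. The variance inflation factor computed from the single correlation r = .89 is only a lower bound: regressing age on all other predictors explains at least as much variance as experience alone, so the model VIF for age can only be larger (and its tolerance, the reciprocal of the VIF, smaller).

```python
# Unstandardized coefficients from Table 5 (per year; 1 = Always ... 5 = Never).
B_AGE = -0.06
B_YEARS_PRACTICE = 0.07


def predicted_change(delta_age_years, delta_practice_years):
    """Change in predicted score, holding all other predictors fixed.

    Negative values mean guidelines are predicted to be followed more often.
    """
    return B_AGE * delta_age_years + B_YEARS_PRACTICE * delta_practice_years


def vif_from_pairwise_r(r):
    """VIF implied by one pairwise correlation; a lower bound on the model VIF."""
    return 1 / (1 - r ** 2)


print(round(predicted_change(10, 0), 2))    # one decade older: -0.6
print(round(predicted_change(0, 10), 2))    # one decade more practice: 0.7
print(round(predicted_change(0, -10), 2))   # same age, 10 fewer years: -0.7
vif = vif_from_pairwise_r(0.89)
print(round(vif, 2), round(1 / vif, 3))     # 4.81 0.208
```

The last two lines show why the pairwise correlation alone is compatible with the reported thresholds (tolerance above 0.15, VIF below 6) while still signalling substantial shared variance between age and experience.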

Table 5.

Multiple Linear Regression of How Often Clinical Guidelines Are Followed.

| Variable | p | R² | Adjusted R² | B | Std err B | Squared partial correlation |
|---|---|---|---|---|---|---|
| Model | ** | 0.27 | 0.19 | | | |
| Constant | **** | | | 2.18 | 0.22 | |
| Genderᵃ | | | | 0.17 | 0.16 | 0.02 |
| Age | **** | | | −0.06 | 0.02 | 0.16 |
| Years of practice | **** | | | 0.07 | 0.02 | 0.19 |
| Education < Master’sᵇ | | | | 0.17 | 0.17 | 0.01 |
| Education > Master’sᵇ | | | | −0.27 | 0.29 | 0.01 |
| Fully public fundingᶜ | ** | | | −0.50 | 0.17 | 0.12 |
| Mixed fundingᶜ | | | | 0.07 | 0.20 | 0.00 |

ᵃCoded 1 for female and 0 for male.

ᵇComparison group is the group with a Master’s degree.

ᶜComparison group is those fully privately funded.

*p < .05. **p < .01. ***p < .001. ****p < .0005.
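The footnotes to Table 5 imply a dummy coding in which the seven predictors are gender, age, years of practice, two education indicators (reference: Master’s degree), and two funding indicators (reference: fully privately funded). A minimal sketch of building one design-matrix row under that coding, and of reading a contrast between two profiles off the reported B values, follows; the level names, function names, and example profile are hypothetical.

```python
# Unstandardized B values from Table 5, in design-row order.
COEFFICIENTS = [2.18, 0.17, -0.06, 0.07, 0.17, -0.27, -0.50, 0.07]

EDUCATION_LEVELS = ("below_masters", "masters", "above_masters")
FUNDING_LEVELS = ("private", "public", "mixed")


def design_row(gender, age, years_practice, education, funding):
    """Return [constant, 7 predictors] under the Table 5 coding.

    Master's degree and fully private funding are the reference groups,
    so they are represented by zeros in their indicator columns.
    """
    if gender not in ("female", "male"):
        raise ValueError(f"unknown gender code: {gender!r}")
    if education not in EDUCATION_LEVELS:
        raise ValueError(f"unknown education level: {education!r}")
    if funding not in FUNDING_LEVELS:
        raise ValueError(f"unknown funding type: {funding!r}")
    return [
        1.0,                                           # constant
        1.0 if gender == "female" else 0.0,            # 1 female, 0 male
        float(age),                                    # years
        float(years_practice),                         # years
        1.0 if education == "below_masters" else 0.0,  # Education < Master's
        1.0 if education == "above_masters" else 0.0,  # Education > Master's
        1.0 if funding == "public" else 0.0,           # fully public funding
        1.0 if funding == "mixed" else 0.0,            # mixed funding
    ]


def contrast(row_a, row_b, coefficients=COEFFICIENTS):
    """Difference in predicted score (1 = Always ... 5 = Never) between two rows."""
    return sum(b * (xa - xb) for b, xa, xb in zip(coefficients, row_a, row_b))


public = design_row("female", 40, 12, "masters", "public")
private = design_row("female", 40, 12, "masters", "private")
print(round(contrast(public, private), 2))  # -0.5
```

Comparing two rows that differ only in one indicator recovers that indicator’s B value, which is how the 0.5-point difference between publicly and fully privately funded practice is read from the table.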

In addition, respondents in publicly funded clinics considered the guidelines more important in decision-making and deemed them more reliable than did respondents in privately funded clinics (independent-samples median test: p < .05 for both variables; median difference = 1 for importance and 2 for reliability).
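The independent-samples median test can be sketched as follows. This is one common form, Mood’s median test without continuity correction, chosen for illustration; the software and options used in the study are not reported here, so the function should be read as an assumption rather than the authors’ procedure. Values above the pooled median are counted in each group, and the resulting 2 × 2 chi-square statistic (1 degree of freedom) is converted to a p value.

```python
import math
from statistics import median


def median_test(group_a, group_b):
    """Two-group Mood's median test (chi-square, 1 df, no continuity correction).

    Returns (pooled_median, table, chi2, p). Table rows are groups;
    columns are counts above / not above the pooled median.
    """
    pooled = median(list(group_a) + list(group_b))
    a_above = sum(1 for v in group_a if v > pooled)
    b_above = sum(1 for v in group_b if v > pooled)
    table = [[a_above, len(group_a) - a_above],
             [b_above, len(group_b) - b_above]]
    n = len(group_a) + len(group_b)
    row_totals = [sum(row) for row in table]
    col_totals = [table[0][j] + table[1][j] for j in range(2)]
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            if expected > 0:  # an empty column contributes nothing
                chi2 += (table[i][j] - expected) ** 2 / expected
    p = math.erfc(math.sqrt(chi2 / 2))  # upper tail of chi-square with 1 df
    return pooled, table, chi2, p
```

For ranking data such as the guideline importance ranks, the two groups would be the rankings given by respondents in publicly and in privately funded clinics.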

Perceived Difficulty and Confidence in Decision-Making

In this study, participants reported moderate or slight difficulty in making recommendations when there was not one clear solution (Figure 4). In Doyle (1989), when respondents were asked to rate their degree of difficulty in the decision-making in general (not just when there was not one clear solution), they mainly rated a slight degree of difficulty.

Figure 4.

Level of perceived difficulty in making recommendations. The X-axis represents the percentage of audiologists who reported each level of perceived difficulty.

Interestingly, when asked to rate their confidence in decision-making in these situations, the vast majority of respondents reported that they were moderately to completely confident in their decisions (98% in Doyle [1989] and 99% in this study; Figure 5). Only two respondents reported that they were “not very” or “not at all” confident in their decision, despite respondents reporting some degree of difficulty. As in Doyle (1989), a negative correlation was found between confidence in decisions and perceived difficulty (r = −.41, p < .001).
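As an arithmetic check on the reported significance, a Pearson correlation r from n pairs can be converted to a t statistic with n − 2 degrees of freedom. The sketch below assumes n = 96, that is, that all respondents answered both items, which is not stated. To stay within the Python standard library, it approximates the two-sided p value with the normal distribution; at 94 degrees of freedom this slightly understates the t-based p value, but both are far below .001 for t ≈ −4.36. The helper names are hypothetical.

```python
import math


def pearson_r(x, y):
    """Sample Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic for H0: rho = 0, with n - 2 degrees of freedom (|r| < 1)."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)


def approx_two_sided_p(t):
    """Normal approximation to the two-sided p value of a t statistic."""
    return math.erfc(abs(t) / math.sqrt(2))


t = r_to_t(-0.41, 96)  # assumed n = 96
print(round(t, 2), approx_two_sided_p(t) < 0.001)  # -4.36 True
```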

Figure 5.

Level of perceived confidence in the appropriateness of recommendations. The X-axis represents the percentage of audiologists who reported each level of perceived confidence.

Discussion

The aims of this study were to (a) identify how audiologists rate different sources of information that influence their decision-making, in terms of importance and reliability; (b) identify which sources of information audiologists mention accessing in difficult clinical scenarios; (c) assess perceived difficulty and confidence in decision-making; (d) evaluate how these aspects of decision-making (a–c) have changed over the past several decades (Doyle, 1989); and (e) assess how compatible current clinical decision-making behavior is with EBP and PCC. The results indicated that audiologists consider client factors and test results the most important sources for their clinical decision-making. In comparison, information from peer-reviewed literature and textbooks was ranked lower than test results and client factors, in both this study and Doyle (1989). In the present study, respondents’ rankings suggested that while clients’ opinion was an important factor in decision-making, it was considered somewhat less reliable. The opposite was observed for peer-reviewed literature, which was ranked as more reliable than important for decision-making when the recommendation was not straightforward. This pattern of responses suggests that clinicians distinguish between the importance and the reliability of different information sources. However, it is not clear how audiologists use this information in their daily practice to integrate the best available evidence with patients’ preferences and values as part of EBP. Clinical decision-making requires a balance between identifying information that is important and understanding its reliability and applicability to the clinical issue. This is typically not a simple task in complex clinical scenarios. As such, clinicians appear to adopt different styles of service delivery, as suggested by the following two quotes: “I will be firm on my findings,” indicating greater weight on test results, in contrast to “I give preference to the option that the client is most interested in …,” suggesting greater weight on patient preferences.

The opinion of colleagues or experts featured prominently when clinicians described how they arrived at a recommendation in more complex scenarios, yet it was ranked mid-range in terms of importance and reliability. Specifically, in difficult clinical decision-making situations, results suggested that clinicians were more likely to consult an expert or colleague than to search for published empirical evidence. A preference for oral sources over written sources, such as books, guidelines, and scientific papers, is in line with the knowledge-seeking behaviors of other professionals. For example, many health professionals rely more on their peers than on empirical research evidence during clinical decision-making (Lyons, Brown, Tseng, Casey, & McDonald, 2011; Mayer & Piterman, 1999; McAlister, Graham, Karr, & Laupacis, 1999; Rappolt & Tassone, 2002; Vallino-Napoli & Reilly, 2004). Evidence from outside health care reveals that the information-seeking behaviors of professionals follow a similar pattern: employees prefer contacting colleagues directly before consulting written sources, even when these colleagues are in other, including competing, organizations (Allen, James, & Gamlen, 2007; Ibrahim, Fallah, & Reilly, 2008; Lundmark & Klofsten, 2014; Schrader, 1991). Information technologies may also have expanded options for accessing the collective clinical expertise of audiologists, through channels such as social media, distribution lists, or online forums. This collective clinical expertise may be particularly helpful in cases where there is no existing research evidence or where practitioners encounter difficulties implementing CPGs. However, as with all elements of EBP, it should not be used in isolation (Sackett et al., 1996).

CPG enable clinicians to make informed decisions about clinical care (see Woolf, 1992). Although CPG may be developed without rigorous methodology or patient involvement, and are often based on consensus within a specific clinic (Shaneyfelt, Mayo-Smith, & Rothwangl, 1999), they have the potential to decrease variability in practice and reduce error rates. In this study, among audiologists who reported having CPG at their workplace, those who reported using them often were more likely to work in a publicly funded than in a privately funded organization. This may be partly due to differences in organizational culture (Dodek, Cahill, & Heyland, 2010; Hung, Leidig, & Shelley, 2014) as well as to the standardized assessment requirements for public funding in Australia (Australian Government Department of Health, 2015). Hung et al. (2014) demonstrated that primary care providers in clinics with a stronger “group, hierarchical, and rational” culture reported greater adherence to clinical guidelines. In Australia, Australian Hearing, established as a federal agency in 1947, is the largest provider of government-funded services. One of its objectives is to maintain consistent standards of clinical service across its centers through regular education and support for clinical staff, which fosters a strong and cohesive group culture.

The results also indicated that clinicians with longer clinical experience tended to report following CPG less often, whereas older clinicians tended to report following guidelines more often. Interestingly, this result contrasts with Chan et al. (2002), who evaluated the compliance of nurses in Hong Kong with Universal Precautions (UP; i.e., preventing or minimizing exposure to blood and bodily fluids). They found a positive relationship between age and compliance, but also a significant positive relationship between years of experience and compliance. Another study, however, assessing hospital-based physicians in the United States, showed that compliance with Universal Precautions decreases with age (Michalsen et al., 1997). Because both studies report only univariate analyses, they cannot separate the effects of age and experience, which are confounded because older respondents are, on average, also more experienced, a point raised by Michalsen et al. (1997). Our results indicated that although age and experience are correlated, their effects on compliance with guidelines may go in opposite directions. If so, this could explain the contradictory findings in previous research. There are theoretical explanations for these differing effects of experience and age. As experience increases, clinicians may develop clinical practice routines and their own “evidence base” that reduce the perceived need for guidelines (Harrison, Légaré, Graham, & Fervers, 2010). In addition, age in general may be positively associated with compliance because of generational differences in organizational behavior (Becton, Walker, & Jones-Farmer, 2014) and well-established age-related changes in personality traits such as agreeableness and conscientiousness (Soto, John, Gosling, & Potter, 2011).
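The confounding argument above can be illustrated with simulated data. The Python sketch below is purely hypothetical: it assumes a positive effect of age (+0.05 per year) and a negative effect of experience (-0.08 per year) on an arbitrary compliance score, with experience closely tracking age. None of these values come from this study, Chan et al. (2002), or Michalsen et al. (1997), and the function names are illustrative. It compares a univariate slope (one predictor at a time) with ordinary least squares slopes estimated for both predictors together.

```python
import random


def simulate(n=5000, seed=1):
    """Generate hypothetical (age, experience, compliance) data for n clinicians.

    All parameters are illustrative assumptions, not estimates from any study.
    """
    rng = random.Random(seed)
    ages, exps, comp = [], [], []
    for _ in range(n):
        age = rng.uniform(35, 65)
        # Experience tracks age closely (career start assumed between ages 25 and 35).
        experience = age - 25 - rng.uniform(0, 10)
        # Assumed true effects: +0.05 per year of age, -0.08 per year of experience.
        score = 0.05 * age - 0.08 * experience + rng.gauss(0, 1)
        ages.append(age)
        exps.append(experience)
        comp.append(score)
    return ages, exps, comp


def center(values):
    """Subtract the mean so that the intercept drops out of the slope formulas."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def univariate_slope(x, y):
    """OLS slope of y on x alone (a 'univariate' analysis)."""
    xc, yc = center(x), center(y)
    return dot(xc, yc) / dot(xc, xc)


def adjusted_slopes(x1, x2, y):
    """OLS slopes of y on x1 and x2 fitted together (2x2 normal equations)."""
    a, b, yc = center(x1), center(x2), center(y)
    s11, s22, s12 = dot(a, a), dot(b, b), dot(a, b)
    s1y, s2y = dot(a, yc), dot(b, yc)
    det = s11 * s22 - s12 ** 2
    return (s22 * s1y - s12 * s2y) / det, (s11 * s2y - s12 * s1y) / det


if __name__ == "__main__":
    ages, exps, comp = simulate()
    print("age alone:           ", round(univariate_slope(ages, comp), 3))
    print("experience alone:    ", round(univariate_slope(exps, comp), 3))
    b_age, b_exp = adjusted_slopes(ages, exps, comp)
    print("age, adjusted:       ", round(b_age, 3))
    print("experience, adjusted:", round(b_exp, 3))
```

Under these assumptions, the single-predictor slope for age is expected to be negative (about -0.03), even though the assumed effect of age is positive, whereas fitting both predictors together recovers slopes close to the assumed +0.05 and -0.08. A univariate estimate reflects the net of both effects, so samples with different age and experience profiles could plausibly yield conflicting associations.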

With regard to the self-reported degree of difficulty and confidence in decision-making, the results of the current study are also similar to those of Doyle (1989). In particular, although audiologists reported some degree of difficulty, they still reported being confident in their decisions. The reasons for this remain to be explored; however, a number of clinicians stated that they would present all the options to the client and then let the client decide. This behavior appears to shift decision-making control and responsibility to the client (Say, Murtagh, & Thomson, 2006) and does not acknowledge the importance of providing decisional support to patients throughout the decision-making process (Elwyn et al., 2012; Stiggelbout et al., 2012). Further, in some cases, it was unclear whether clinicians understood how to effectively involve the patient in shared decision-making. Shared decision-making is specific to the patient and takes into account the research evidence, clinician expertise, and patient preferences and values (Légaré & Witteman, 2013). Not all patients, however, want to be fully involved in the decision-making process, and many factors influence this preference (Say et al., 2006). For example, patients with higher socioeconomic status prefer a more active role in the decision-making process than those with lower socioeconomic status (Murray, Pollack, White, & Lo, 2007). Although the client makes the final choice, the clinician can facilitate the process by narrowing the options, framing the information around the patient’s needs, or choosing to include or omit information at their discretion (Hibbard, Slovic, & Jewett, 1997). At the same time, it has been suggested that audiologists often play the dominant role in decision-making (Grenness et al., 2014).
This is worthy of further exploration, as multiple barriers hinder the uptake of shared decision-making by health-care professionals, including limited motivation, time constraints, and the perception that it may not lead to improved patient outcomes or health-care processes (see Gravel, Légaré, & Graham, 2006). It therefore remains unclear whether shared decision-making is underused in audiology practice because clinicians do not understand how to implement it or because the barriers to its implementation have not yet been effectively addressed.

Over half of the surveyed audiologists responded that they would use multiple sources of information when their sources conflicted or contradicted each other. Among these respondents, further research, peer-reviewed literature, or CPG were frequently mentioned. This finding suggests a greater self-reported use of research in current clinical decision-making than in Doyle’s (1989) study. Client factors were also commonly mentioned as one of these multiple sources, which is in line with the EBP and PCC models, and some clinicians mentioned EBP directly. Although responses varied and suggested a range of practice styles, the overall results suggest a considerable increase in awareness of EBP and PCC for decision-making in audiology, consistent with the increased attention to EBP and PCC in the literature over the past few decades. Despite this, little is known about the extent to which EBP is implemented in audiological practice; moreover, the evidence that does exist on the implementation of PCC suggests significant scope for improvement (Grenness et al., 2015a, 2015b). Assessing the quality, reliability, and applicability of empirical research evidence to a clinical question requires skills and time (Mullen & Streiner, 2006; Straus & McAlister, 2000). If clinicians do not have adequate critical appraisal skills, the EBP model is unlikely to be implemented (Rambur, 1999), as little guidance is available when different forms of information are conflicting or vague. In addition, developing the psychosocial skills required for a truly patient-centered approach is complex and goes beyond theoretical knowledge of PCC (Levinson, Lesser, & Epstein, 2010).

It is important to note that the results of the current study are based on questionnaire responses from 96 audiologists who attended the XXXII World Congress of Audiology, 81 of whom practiced in Australia. This number represents only a small proportion of the total membership of Audiology Australia, the main professional body for audiologists in the country (over 2,000 members). Respondents may therefore have had characteristics that biased the results toward more reports of EBP and PCC than would have been obtained from a more representative sample, including practicing audiologists who did not attend the conference or who attended but chose not to respond to the questionnaire. This self-selection bias limits the generalizability of the findings. For example, respondents who attended the conference and agreed to complete the questionnaire may have been more likely to be interested or involved in research, leading to more frequent reports of using EBP and PCC.

Implications for Knowledge Translation and Professional Development in Audiology

Typical knowledge translation approaches in audiology include publication in peer-reviewed literature and presentations at conferences and seminars. These translation pathways may facilitate the dissemination of knowledge about EBP and PCC but may not be sufficient for effective implementation of these practices in clinical settings (i.e., a measurable change in clinical behavior toward greater use of EBP and PCC). In this study, peer-reviewed literature and information from conferences were not ranked as important or reliable sources of information by the audiologists. This finding is not unique to audiology; the clinical implications of research are seldom highlighted in publications and presentations, making it difficult for clinicians to grasp their everyday applicability to patients (Heiwe et al., 2011; Metcalfe et al., 2001; Moodie et al., 2011). Moreover, as noted in multiple fields, knowing about an evidence-based intervention is not sufficient to adopt or implement it in a scalable and sustainable manner within clinics (Damschroder et al., 2009). As suggested by Moodie et al. (2011), the audiology profession would benefit from working toward an integrated model of knowledge translation, in which front-line clinicians are integrated into the research process, rather than a research-driven hierarchical model, in which researchers identify the empirical evidence and inform clinics about it. An integrated model of knowledge translation would involve an “active collaboration between researchers and research users (clinicians) in […] designing the research questions, shared decision-making regarding methodology, data collection and tools development involvement, interpretation of the findings, and dissemination and implementation of the research results” (Moodie et al., 2011, p. 11).
The literature on knowledge translation and implementation in health care indicates that interactive sessions with practitioners can be effective in changing behavior toward EBP and shared decision-making (Davis et al., 1999; Grol & Grimshaw, 2003). Further, frameworks such as the Consolidated Framework for Implementation Research can be used to guide teams of clinicians and researchers toward the most effective implementation strategies (Kirk et al., 2016).

Conclusion

This study suggests that many audiologists value patient preferences, as part of multiple sources of information, when making rehabilitative clinical decisions, which is aligned with PCC. While research evidence was reported to be used less often than expert opinion in complex decision cases, the reported use of empirical research evidence appears to have increased since 1989. Overall, while positive changes toward EBP and PCC appear to have occurred, the audiology profession may further benefit from reassessing its knowledge translation and implementation pathways.

Appendix

Questionnaire: Clinical Decision Making in Audiology

Declaration of Conflicting Interests

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding

The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This study was supported by the HEARing Cooperative Research Centre, established and supported under the Business Cooperative Research Centres Programme of the Australian Government. The authors acknowledge the support of the Organizing Committee of the XXXII World Congress of Audiology and Audiology Australia for supporting data collection. In addition, the Australian Hearing Hub, an initiative of Macquarie University and the Australian Government, provided financial support for the publication of this research article.

References

- Aazh H., Moore B. C. (2007) The value of routine real ear measurement of the gain of digital hearing aids. Journal of the American Academy of Audiology 18(8): 653–664. doi:10.3766/jaaa.18.8.3.

- Allen J., James A. D., Gamlen P. (2007) Formal versus informal knowledge networks in R&D: A case study using social network analysis. R&D Management 37(3): 179–196.

- Australian Government Department of Health (2015) Hearing rehabilitation outcomes for voucher holders. Hearing Services Program. Retrieved from http://hearingservices.gov.au/wps/wcm/connect/hso/9f30e126-30c2-435c-999080d414c85ad8/Hearing+Rehabilitation+Outcomes+2015.pdf?MOD=AJPERES&CONVERT_TO=url&CACHEID=9f30e126-30c2-435c-9990-80d414c85ad8.

- Becton J. B., Walker H. J., Jones-Farmer A. (2014) Generational differences in workplace behavior. Journal of Applied Social Psychology 44(3): 175–189. doi:10.1111/jasp.12208.

- Berwick D. M. (2016) Era 3 for medicine and health care. JAMA 315(13): 1329–1330. doi:10.1001/jama.2016.1509.

- Brennan T. A., Leape L. L., Laird N. M., Hebert L., Localio A. R., Lawthers A. G., Hiatt H. H. (1991) Incidence of adverse events and negligence in hospitalized patients: Results of the Harvard Medical Practice Study I. New England Journal of Medicine 324(6): 370–376. doi:10.1056/nejm199102073240604.

- Chan R., Molassiotis A., Eunice C., Virene C., Becky H., Chit-Ying L., … Ivy Y. (2002) Nurses’ knowledge of and compliance with universal precautions in an acute care hospital. International Journal of Nursing Studies 39(2): 157–163. doi:10.1016/S0020-7489(01)00021-9.

- Cohen D. I., Littenberg B., Wetzel C., Neuhauser D. V. (1982) Improving physician compliance with preventive medicine guidelines. Medical Care 20(10): 1040–1045. doi:10.1097/00005650-198210000-00006.

- Cox R. M. (2005) Evidence-based practice in provision of amplification. Journal of the American Academy of Audiology 16(7): 419–438. doi:10.3766/jaaa.16.7.3.

- Damschroder L. J., Aron D. C., Keith R. E., Kirsh S. R., Alexander J. A., Lowery J. C. (2009) Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implementation Science 4(1): 1.

- Davis D., O'Brien M. A. T., Freemantle N., Wolf F. M., Mazmanian P., Taylor-Vaisey A. (1999) Impact of formal continuing medical education: Do conferences, workshops, rounds, and other traditional continuing education activities change physician behavior or health care outcomes? JAMA 282(9): 867–874.

- Dodek P., Cahill N. E., Heyland D. K. (2010) The relationship between organizational culture and implementation of clinical practice guidelines: A narrative review. Journal of Parenteral and Enteral Nutrition 34(6): 669–674. doi:10.1177/0148607110361905.

- Doyle J. (1989) A survey of Australian audiologists’ clinical decision-making. Australian Journal of Audiology 11(2): 75.

- Ekberg K., Grenness C., Hickson L. (2014) Addressing patients' psychosocial concerns regarding hearing aids within audiology appointments for older adults. American Journal of Audiology 23(3): 337–350. doi:10.1044/2014_AJA-14-0011.

- Elo S., Kyngäs H. (2008) The qualitative content analysis process. Journal of Advanced Nursing 62(1): 107–115. doi:10.1111/j.1365-2648.2007.04569.x.

- Elwyn G., Frosch D., Thomson R., Joseph-Williams N., Lloyd A., Kinnersley P., … Edwards A. (2012) Shared decision making: A model for clinical practice. Journal of General Internal Medicine 27(10): 1361–1367. doi:10.1007/s11606-012-2077-6.

- Graneheim U. H., Lundman B. (2004) Qualitative content analysis in nursing research: Concepts, procedures and measures to achieve trustworthiness. Nurse Education Today 24(2): 105–112. doi:10.1016/j.nedt.2003.10.001.

- Gravel K., Légaré F., Graham I. D. (2006) Barriers and facilitators to implementing shared decision-making in clinical practice: A systematic review of health professionals’ perceptions. Implementation Science 1(1): 16. doi:10.1186/1748-5908-1-16.

- Grenness C., Hickson L., Laplante-Lévesque A., Davidson B. (2014) Patient-centred care: A review for rehabilitative audiologists. International Journal of Audiology 53(S1): S60–S67. doi:10.3109/14992027.2013.847286.

- Grenness C., Hickson L., Laplante-Lévesque A., Meyer C., Davidson B. (2015a) Communication patterns in audiologic rehabilitation history-taking: Audiologists, patients, and their companions. Ear and Hearing 36(2): 191–204. doi:10.1097/aud.0000000000000100.

- Grenness C., Hickson L., Laplante-Lévesque A., Meyer C., Davidson B. (2015b) The nature of communication throughout diagnosis and management planning in initial audiologic rehabilitation consultations. Journal of the American Academy of Audiology 26(1): 36–50. doi:10.3766/jaaa.26.1.5.

- Grol R., Grimshaw J. (2003) From best evidence to best practice: Effective implementation of change in patients' care. The Lancet 362(9391): 1225–1230.

- Haines A., Jones R. (1994) Implementing findings of research. British Medical Journal 308(6942): 1488–1492. doi:10.1136/bmj.308.6942.1488.

- Harrison M. B., Légaré F., Graham I. D., Fervers B. (2010) Adapting clinical practice guidelines to local context and assessing barriers to their use. Canadian Medical Association Journal 182(2): E78–E84. doi:10.1503/cmaj.081232.

- Haynes A. B., Weiser T. G., Berry W. R., Lipsitz S. R., Breizat A. H. S., Dellinger E. P., Gawande A. A. (2009) A surgical safety checklist to reduce morbidity and mortality in a global population. New England Journal of Medicine 360(5): 491–499. doi:10.1056/NEJMsa0810119.

- Heiwe S., Kajermo K. N., Tyni-Lenné R., Guidetti S., Samuelsson M., Andersson I.-L., Wengström Y. (2011) Evidence-based practice: Attitudes, knowledge and behaviour among allied health care professionals. International Journal for Quality in Health Care 23(2): 198–209. doi:10.1093/intqhc/mzq083.

- Hibbard J. H., Slovic P., Jewett J. J. (1997) Informing consumer decisions in health care: Implications from decision-making research. The Milbank Quarterly 75(3): 395–414. doi:10.1111/1468-0009.00061.

- Hung D. Y., Leidig R., Shelley D. R. (2014) What’s in a setting?: Influence of organizational culture on provider adherence to clinical guidelines for treating tobacco use. Health Care Management Review 39(2): 154–163. doi:10.1097/hmr.0b013e3182914d11.

- Ibrahim S. E., Fallah M. H., Reilly R. R. (2008) Localized sources of knowledge and the effect of knowledge spillovers: An empirical study of inventors in the telecommunications industry. Journal of Economic Geography 9: 405–431. doi:10.1093/jeg/lbn049.

- Kirk M. A., Kelley C., Yankey N., Birken S. A., Abadie B., Damschroder L. (2016) A systematic review of the use of the Consolidated Framework for Implementation Research. Implementation Science 11(1): 1.

- Knapp T. R. (1990) Treating ordinal scales as interval scales: An attempt to resolve the controversy. Nursing Research 39(2): 121–123.

- Kochkin S., Beck D. L., Christensen L. A., Compton-Conley C., Fligor B., Kricos P. B., Northern J. (2010) MarkeTrak VIII: The impact of the hearing healthcare professional on hearing aid user success. Hearing Review 17(4): 12–34. doi:10.1097/01.HJ.0000399150.30374.45.

- Labovitz S. (1970) The assignment of numbers to rank order categories. American Sociological Review 35: 515–524.

- Laplante-Lévesque A., Hickson L., Grenness C. (2014) An Australian survey of audiologists' preferences for patient-centredness. International Journal of Audiology 53(S1): S76–S82. doi:10.3109/14992027.2013.832418.

- Laplante-Lévesque A., Hickson L., Worrall L. (2010) Factors influencing rehabilitation decisions of adults with acquired hearing impairment. International Journal of Audiology 49(7): 497–507. doi:10.3109/14992021003645902.

- Légaré F., Witteman H. O. (2013) Shared decision making: Examining key elements and barriers to adoption into routine clinical practice. Health Affairs 32(2): 276–284. doi:10.1377/hlthaff.2012.1078.

- Levinson W., Lesser C. S., Epstein R. M. (2010) Developing physician communication skills for patient-centered care. Health Affairs 29(7): 1310–1318. doi:10.1377/hlthaff.2009.0450.

- Lowy H. (2013) The real cost of hearing aids. HearingHQ 13: 13–17. Retrieved from https://issuu.com/thetangellogroup/docs/hearinghq_apr13_issue.

- Lundmark E., Klofsten M. (2014) Linking individual-level knowledge sourcing to project-level contributions in large R&D-driven product-development projects. Project Management Journal 45(6): 73–82. doi:10.1002/pmj.21457.

- Lyons C., Brown T., Tseng M. H., Casey J., McDonald R. (2011) Evidence-based practice and research utilisation: Perceived research knowledge, attitudes, practices and barriers among Australian paediatric occupational therapists. Australian Occupational Therapy Journal 58(3): 178–186. doi:10.1111/j.1440-1630.2010.00900.x.

- Mayer J., Piterman L. (1999) The attitudes of Australian GPs to evidence-based medicine: A focus group study. Family Practice 16(6): 627–632. doi:10.1093/fampra/16.6.627.

- McAlister F. A., Graham I., Karr G. W., Laupacis A. (1999) Evidence-based medicine and the practicing clinician. Journal of General Internal Medicine 14(4): 236–242. doi:10.1046/j.1525-1497.1999.00323.x.

- McIntyre N., Popper K. (1983) The critical attitude in medicine: The need for a new ethics. British Medical Journal 287(6409): 1919–1923. doi:10.1136/bmj.287.6409.1919.

- Metcalfe C., Lewin R., Wisher S., Perry S., Bannigan K., Moffett J. K. (2001) Barriers to implementing the evidence base in four NHS therapies: Dieticians, occupational therapists, physiotherapists, speech and language therapists. Physiotherapy 87(8): 433–441. doi:10.1016/s0031-9406(05)65462-4.

- Metz M. J. (2000) Some ethical issues related to hearing instrument dispensing. Seminars in Hearing 21(1): 63–74. doi:10.1055/s-2000-6826.

- Michalsen A., Delclos G. L., Felknor S. A., Davidson A. L., Johnson P. C., Vesley D., … Gershon R. R. (1997) Compliance with universal precautions among physicians. Journal of Occupational and Environmental Medicine 39(2): 130–137. doi:10.1097/00043764-199702000-00010.

- Montori V. M., Brito J. P., Murad M. H. (2013) The optimal practice of evidence-based medicine: Incorporating patient preferences in practice guidelines. JAMA 310(23): 2503–2504. doi:10.1001/jama.2013.281422.

- Moodie S. T., Kothari A., Bagatto M. P., Seewald R., Miller L. T., Scollie S. D. (2011) Knowledge translation in audiology: Promoting the clinical application of best evidence. Trends in Amplification 15(1): 5–22. doi:10.1177/1084713811420740.

- Mueller H. G., Picou E. M. (2010) Survey examines popularity of real-ear probe-microphone measures. The Hearing Journal 63(5): 27–28. doi:10.1097/01.hj.0000373447.52956.25.

- Mullen E. J., Streiner D. L. (2006) The evidence for and against evidence-based practice. In: Foundations of evidence-based social work practice, pp. 21–34. doi:10.1093/brief-treatment/mhh009.

- Murray E., Pollack L., White M., Lo B. (2007) Clinical decision-making: Patients' preferences and experiences. Patient Education and Counseling 65(2): 189–196. doi:10.1016/j.pec.2006.07.007.

- National Health and Medical Research Council (1999) A guide to the development, implementation and evaluation of clinical practice guidelines. Australia: Author.

- Poost-Foroosh L., Jennings M. B., Shaw L., Meston C. N., Cheesman M. F. (2011) Factors in client-clinician interaction that influence hearing aid adoption. Trends in Amplification 15(3): 127–139. doi:10.1177/1084713811430217.

- Rambur B. (1999) Fostering evidence-based practice in nursing education. Journal of Professional Nursing 15(5): 270–274. doi:10.1016/S8755-7223(99)80051-9.

- Rappolt S., Tassone M. (2002) How rehabilitation therapists gather, evaluate, and implement new knowledge. Journal of Continuing Education in the Health Professions 22(3): 170–180. doi:10.1002/chp.1340220306.

- Richards T., Montori V. M., Godlee F., Lapsley P., Paul D. (2013) Let the patient revolution begin. BMJ 346: f2614. doi:10.1136/bmj.f2614.

- Roter D. (2000) The enduring and evolving nature of the patient–physician relationship. Patient Education and Counseling 39(1): 5–15. doi:10.1016/s0738-3991(99)00086-5.

- Sackett D. L., Rosenberg W. M. C., Gray J. A. M., Haynes R. B., Richardson W. S. (1996) Evidence based medicine: What it is and what it isn't. It's about integrating individual clinical expertise and the best external evidence. British Medical Journal 312(7023): 71–72. doi:10.1136/bmj.312.7023.71.

- Say R., Murtagh M., Thomson R. (2006) Patients' preference for involvement in medical decision making: A narrative review. Patient Education and Counseling 60(2): 102–114. doi:10.1016/j.pec.2005.02.003.

- Schrader S. (1991) Informal technology transfer between firms: Cooperation through information trading. Research Policy 20(2): 153–170. doi:10.1016/0048-7333(91)90077-4.

- Shaneyfelt T. M., Mayo-Smith M. F., Rothwangl J. (1999) Are guidelines following guidelines?: The methodological quality of clinical practice guidelines in the peer-reviewed medical literature. JAMA 281(20): 1900–1905. doi:10.1001/jama.281.20.1900.

- Shaw G. (2016) Disruptive innovation in hearing health care: Seismic shift or ripple effect? The Hearing Journal 69(5): 16–18. doi:10.1097/01.hj.0000483266.92816.3e.

- Sosnowy C. D., Weiss L. J., Maylahn C. M., Pirani S. J., Katagiri N. J. (2013) Factors affecting evidence-based decision making in local health departments. American Journal of Preventive Medicine 45(6): 763–768. doi:10.1016/j.amepre.2013.08.004.

- Soto C. J., John O. P., Gosling S. D., Potter J. (2011) Age differences in personality traits from 10 to 65: Big Five domains and facets in a large cross-sectional sample. Journal of Personality and Social Psychology 100(2): 330.

- Stiggelbout A. M., Van der Weijden T., De Wit M. P. T., Frosch D., Légaré F., Montori V. M., … Elwyn G. (2012) Shared decision making: Really putting patients at the centre of healthcare. BMJ 344: e256. doi:10.1136/bmj.e256.

- Straus S. E., McAlister F. A. (2000) Evidence-based medicine: A commentary on common criticisms. Canadian Medical Association Journal 163(7): 837–841.

- Straus S. E., Tetroe J. M., Graham I. D. (2011) Knowledge translation is the use of knowledge in health care decision making. Journal of Clinical Epidemiology 64(1): 6–10. doi:10.1016/j.jclinepi.2009.08.016.

- Tiffen J., Corbridge S. J., Slimmer L. (2014) Enhancing clinical decision making: Development of a contiguous definition and conceptual framework. Journal of Professional Nursing 30(5): 399–405. doi:10.1016/j.profnurs.2014.01.006.

- Upfold L. J. (2008) A history of Australian audiology. Sydney, Australia: Phonak Pty Ltd.

- Vallino-Napoli L. D., Reilly S. (2004) Evidence-based health care: A survey of speech pathology practice. International Journal of Speech-Language Pathology 6(2): 107–112. doi:10.1080/14417040410001708530.

- Vincent C., Neale G., Woloshynowych M. (2001) Adverse events in British hospitals: Preliminary retrospective record review. British Medical Journal 322(7285): 517–519. doi:10.1136/bmj.322.7285.517.

- Williams S. C., Schmaltz S. P., Morton D. J., Koss R. G., Loeb J. M. (2005) Quality of care in U.S. hospitals as reflected by standardized measures, 2002–2004. New England Journal of Medicine 353(3): 255–264. doi:10.1056/NEJMsa043778.

- Wilson M. R., Runciman W. B., Gibberd R. W., Harrison B. T., Newby L., Hamilton J. D. (1995) The Quality in Australian Health Care Study. Medical Journal of Australia 163(9): 458–471.

- Wolf K. E. (1999) Preparing for evidence-based speech-language pathology and audiology. Texas Journal of Audiology and Speech Pathology 23: 69–74.

- Wong L., Hickson L. (2011) Evidence-based practice in audiology: Evaluating interventions for children and adults with hearing impairment. San Diego, CA: Plural Publishing Inc.

- Woolf S. H. (1992) Practice guidelines, a new reality in medicine: II. Methods of developing guidelines. Archives of Internal Medicine 152(5): 946–952. doi:10.1001/archinte.1992.00400170036007.