Abstract

In an implicit phonological priming paradigm, deaf bimodal bilinguals made semantic relatedness decisions for pairs of English words. Half of the semantically unrelated pairs had phonologically related translations in American Sign Language (ASL). As in previous studies with unimodal bilinguals, targets in pairs with phonologically related translations elicited smaller negativities than targets in pairs with phonologically unrelated translations within the N400 window. This suggests that the same lexicosemantic mechanism underlies implicit co-activation of a non-target language, irrespective of language modality. In contrast to unimodal bilingual studies that find no behavioral effects, we observed phonological interference, indicating that bimodal bilinguals may not suppress the non-target language as robustly. Further, a subset of bilinguals who were aware of the ASL manipulation (as determined at debrief) exhibited an effect of ASL phonology in a later time window (700–900 ms). Overall, these results indicate modality-independent language co-activation that persists longer for bimodal bilinguals.

Keywords: implicit phonological priming, bimodal bilingualism, American Sign Language, N400

1. Introduction

A preponderance of evidence now demonstrates that users of two spoken languages (“unimodal” bilinguals) access both of their languages, even when only one language is used overtly (e.g., Bijeljac-Babic, Biardeau, & Grainger, 1997; Guo, Misra, Tam, & Kroll, 2012; Midgley, Holcomb, Van Heuven, & Grainger, 2008; Spivey & Marian, 1999; Thierry & Wu, 2007; Van Heuven, Dijkstra, & Grainger, 1998; Wu & Thierry, 2010). That is, recognizing a word in one language prompts co-activation of the translation equivalent (e.g., Ferré, Sánchez-Casas, & Guasch, 2006; Guo et al., 2012; Thierry & Wu, 2007) and form-similar words in the other language (e.g., Grossi, Savill, Thomas, & Thierry, 2012; Midgley et al., 2008; Spivey & Marian, 1999; Van Heuven et al., 1998). Considerably less is known about analogous processes in “bimodal” bilinguals, who know both a spoken and a signed language. There is some evidence from event-related potentials (ERPs) suggesting that spoken language translation equivalents are activated during sign processing (Hosemann, 2015), and recent behavioral evidence suggests that sign translation equivalents may also be activated during visual or auditory word recognition, with activation propagating directly through lexical links or indirectly via shared semantic representations (e.g., Giezen, Blumenfeld, Shook, Marian, & Emmorey, 2015; Kubus, Villwock, Morford, & Rathmann, 2015; Morford, Kroll, Piñar, & Wilkinson, 2014; Morford, Wilkinson, Villwock, Piñar, & Kroll, 2011; Shook & Marian, 2012; Villameriel, Dias, Costello, & Carreiras, 2016). However, these behavioral studies only index the endpoint of a number of processes, making it difficult to determine whether the effects result from lexicosemantic interactivity or from later, more explicit translation processes.
Here, we aimed to distinguish between these two possible mechanisms by using ERPs to track the time course of co-activation of American Sign Language (ASL) as deaf bimodal bilinguals read English words.

1.1 Implicit co-activation in unimodal bilinguals

The lexical representations of translation equivalents are co-activated in unimodal bilinguals, even at high levels of proficiency (e.g., Guasch, Sánchez-Casas, Ferré, & García-Albea, 2008; Guo et al., 2012; Thierry & Wu, 2007; Wu & Thierry, 2010). Some of the evidence regarding this cross-language activation comes from translation recognition paradigms, in which participants must decide whether a target in one language (e.g., ajo) is the correct translation of a prime from the other language (e.g., garlic). In form-distractor trials, the target is not the correct translation but is closely related to it in form (e.g., ojo, meaning ‘eye’ in Spanish; Talamas, Kroll, & Dufour, 1999). If the lexical representation of the translation equivalent is activated during processing of the prime, this form relationship should interfere with participants’ ability to reject the form-related target. Indeed, in proficient Chinese-English bilinguals, form-related targets elicited slower and less accurate responses and larger positivities, beginning in the P200 window and continuing through the late positive component (LPC), compared to unrelated control targets (Guo et al., 2012). The P200 effect was attributed to the perceptual similarity between the implicitly co-activated target translation and the overtly presented form-related distractor, and the LPC effect was attributed to controlled resolution of the conflict between these two lexical representations. Thus, not only do these results indicate that the form representation of the translation equivalent was accessed, but they also suggest that interference caused by the form-related target can occur at both perceptual and decision levels. However, this paradigm has the weaknesses that both languages are presented during the experiment and that the task requires explicit translation, which limits the generalizability of the results.

Thierry and Wu (2007) circumvented this dual-language setting in their implicit phonological priming paradigm, providing further evidence for the co-activation of translation equivalents in proficient bilinguals. These authors presented late proficient Chinese-English bilinguals with pairs of English words and asked them to judge whether the meanings of the two words were related. Some of the word pairs, when translated into Chinese, shared a character, whereas others did not. Although there was no behavioral effect of this hidden Chinese form manipulation, targets in pairs that had phonologically related translation equivalents elicited smaller amplitude N400s than targets in pairs that did not have form-related translations. The authors used the absence of a behavioral effect, together with debrief responses that indicated a lack of awareness of the translation manipulation, to argue that the co-activation of Chinese was automatic and unconscious. This contention was further supported by results from monolingual Chinese speakers who were presented with the Chinese translation equivalents and could overtly process the phonological relationship. In addition to an N400 priming effect similar to that found during implicit processing in the bilinguals, the monolinguals showed a behavioral interference effect: semantically unrelated (i.e., ‘no’ response) trials elicited slower and less accurate responses when they were phonologically related than when they were phonologically unrelated. Thus, this implicit priming paradigm demonstrates that unimodal bilinguals co-activate their languages, even in a monolingual setting in which the non-target language is irrelevant (see also Wu & Thierry, 2010). It also links behavioral effects to explicit processing of the phonological relationship and suggests that ‘online’ measures like ERPs may be needed to capture the implicit effect in this paradigm.

Motivated by the timing of their ERP effect, Thierry and Wu (2007) proposed that the implicit co-activation of Chinese translation equivalents occurred during or immediately after meaning retrieval. Presumably, activation spread from English primes (e.g., train) to their Chinese translation equivalents (e.g., huo che), either directly via lexical links or indirectly via a shared semantic store, and from those translations to their phonological neighbors (e.g., huo tui, meaning ‘ham’). In pairs with phonologically related translation equivalents, the Chinese translation of the subsequently presented English target (e.g., ham) would be among the co-activated neighbors, resulting in facilitated processing and smaller amplitude N400s. However, unlike in the translation recognition paradigm, this implicit activation of the non-target language is suppressed (Wu & Thierry, 2012) before it reaches consciousness and influences behavioral decision-level processes. This progression is consistent with the inhibitory control model of bilingual processing (e.g., Green, 1998), in which task-irrelevant schemas are automatically activated by a stimulus but subsequently suppressed. Together, then, these results suggest that translation equivalents are co-activated during word recognition in proficient unimodal bilinguals but are suppressed before they have behavioral consequences, unless translation is promoted by the task.

1.2 Implicit cross-modal co-activation in bimodal bilinguals: Behavioral evidence

Several recent studies using behavioral methods have extended the unimodal literature to provide preliminary evidence of cross-modal language co-activation in both hearing and deaf bimodal bilinguals (see Emmorey, Giezen, & Gollan, 2016; Ormel & Giezen, 2014, for reviews), and many of these studies employed the implicit phonological priming paradigm (e.g., Kubus et al., 2015; Morford et al., 2014; Morford et al., 2011; Villameriel et al., 2016). For example, Morford and colleagues (2011) compared processing of English word pairs with ASL translations that shared two out of three phonological parameters (handshape, location, movement) to word pairs with ASL translations that did not overlap phonologically. They found that phonological relatedness of the ASL translations led to faster ‘yes’ semantic relatedness judgments and slower ‘no’ judgments in deaf bimodal bilinguals, but not in ASL-naïve hearing controls. In a follow-up study, the same group found that the inhibitory effect of ASL phonological relatedness for semantically unrelated ‘no’ trials was greater for deaf participants with lower proficiency in English, as measured by the Passage Comprehension subtest of the Woodcock-Johnson III Tests of Achievement (Morford et al., 2014, Experiment 1). Thus, the implicit phonological priming paradigm also has the potential to index co-activation of languages that differ in modality, and bilinguals with lower second language (L2) proficiency may be more likely to strongly co-activate their native sign language (L1).

This pattern of behavioral results is commonly interpreted as support for implicit co-activation of translation equivalents via lexicosemantic spreading of activation in bimodal bilinguals (e.g., Kubus et al., 2015; Morford et al., 2014; Morford et al., 2011; Villameriel et al., 2016), just as in unimodal bilinguals. Curiously, however, the mere presence of behavioral effects in these bimodal bilinguals conflicts with the line of reasoning introduced by Thierry and Wu (2007; Wu & Thierry, 2010), in which behavioral effects in the implicit priming paradigm arise exclusively from overt phonological processing of the non-target language. In the present study, we examined whether it is indeed possible for implicit lexicosemantic spreading of activation to lead to behavioral effects in bimodal bilinguals or, alternatively, whether these behavioral effects reported in previous studies were induced by explicit processes.

In particular, these behavioral effects may have resulted from strategic translation, rather than being a consequence of implicit lexicosemantic interactivity. If the bimodal bilinguals in previous studies noticed the manipulation in the non-target sign language, they may have begun to actively recruit the sign translations and analyze the phonological relationship between them. This strategic translation account seems unlikely in the unimodal case (e.g., Thierry & Wu, 2007) because a) phonological relatedness in the non-target language did not affect behavior, b) debrief responses indicated that participants were unaware of the phonological relationship in the non-target language, and c) the timing of the N400 effect was more consistent with lexicosemantic processing than top-down translation. In contrast, interpretation of the behavioral studies with bimodal bilinguals is limited because there was no such debrief and the paradigm only indexed the endpoint of a succession of linguistic and cognitive processes. The time between target word onset and the response (roughly 625 to 850 ms on average) was sufficiently long to allow for an influence of overt translation processes. Without a better understanding of the processes that occur during this delay or information about whether participants were consciously aware of the translation manipulation, the possibility that previously reported behavioral effects in bimodal bilinguals resulted from controlled top-down translation remains viable. Including both an online measure and a debrief questionnaire, as in the present study, allows for an assessment of the plausibility of such an account, and thus a better characterization of the extent to which cross-language spreading of activation is similar within and across language modalities.

1.3 The Present Study

We used ERPs to investigate the time course of the co-activation of ASL in an implicit phonological priming paradigm in deaf bimodal bilinguals. A number of studies have suggested that sign language translations influence processing of print and spoken word recognition in bimodal bilinguals (e.g., Giezen et al., 2015; Kubus et al., 2015; Morford et al., 2014; Morford et al., 2011; Villameriel et al., 2016). However, the limited scope of these behavioral studies complicates their interpretation. Behavioral effects in bimodal bilinguals, which are absent in the corresponding studies with unimodal bilinguals (e.g., Thierry & Wu, 2007; Wu & Thierry, 2010), can be explained either as a different manifestation of the same lexicosemantic spreading of activation observed in unimodal bilinguals or as a result of explicit translation processing once participants become aware of the hidden phonological manipulation. An ERP study that investigates the time course of co-activation in bimodal bilinguals who do not report being consciously aware of the manipulation at debrief can distinguish between these two possibilities.

We predicted that, if the behavioral effects in previous studies with bimodal bilinguals index a different manifestation of the same implicit cross-language co-activation mechanism as in unimodal bilinguals, then word pairs with phonologically related translations in ASL should elicit slower responses than word pairs with phonologically unrelated translations in participants who report being unaware of the ASL phonological manipulation (Kubus et al., 2015; Morford et al., 2014, Experiment 1; Morford et al., 2011). Furthermore, targets in pairs with phonologically related ASL translations should elicit smaller N400 amplitudes than targets in pairs with phonologically unrelated ASL translations (e.g., Thierry & Wu, 2007).

If, instead, the behavioral effects in previous studies with bimodal bilinguals were due to conscious awareness of the phonological manipulation and explicit translation, many of the bimodal bilingual participants should report having noticed the ASL phonological manipulation at debrief. In this case, we might expect an additional ERP effect, appearing later than the N400, that is indicative of more explicit translation processes (e.g., Guo et al., 2012). Regardless of the underlying mechanism (i.e., spreading activation or strategic translation), we expected both RT and ERP effects of ASL phonological relatedness to correlate with English proficiency, such that readers with lower levels of L2 English proficiency would be more influenced by the phonological relationship in ASL (Morford et al., 2014).

Finally, based on an extensive semantic priming literature (e.g., Kutas & Hillyard, 1989) that includes evidence of semantic priming in L2 speakers (e.g., McLaughlin, Osterhout, & Kim, 2004; Phillips, Segalowitz, O’Brien, & Yamasaki, 2004), we expected faster responses and smaller amplitude N400s for semantically related trials as compared to semantically unrelated trials.

2. Experiment 1: Bimodal Bilinguals

2.1 Method

2.1.1. Participants

Of the 24 deaf signers (10 female; mean age 29.4 years, SD 4.5 years) who participated in this experiment, 17 were native signers (born into deaf, signing families), seven were early signers (acquired ASL before age 7), and three were left-handed. English proficiency was assessed using the reading comprehension subtest of the Peabody Individual Achievement Test – Revised (PIAT; Markwardt, 1989). Out of a total of 100 possible points, the average raw score for these participants was 85.9 (SE 1.6). Based on responses at debrief (see Procedure), 14 participants were included in an implicit co-activation subgroup and 10 were included in an explicit translation subgroup. Reading levels did not differ significantly between the implicit (mean 84.4, SE 2.2) and explicit (mean 87.9, SE 2.2) subgroups, t(22) = 1.07, p = .295. Participants were volunteers who received monetary compensation for their time. Informed consent was obtained from all participants in accordance with the institutional review board at San Diego State University. Data from an additional three participants were excluded from analyses due to high ERP artifact rejection rates.

2.1.2. Stimuli

Stimuli consisted of 100 word triplets composed of two primes and one target (e.g., gorilla, accent, bath; see Supplementary Table 1). Each triplet was used to form two word pairs that were either both semantically unrelated (e.g., gorilla-bath, accent-bath) or both semantically related (e.g., mouse-rat, squirrel-rat), for a total of 200 pairs. Semantically related and unrelated targets were balanced for English word length, orthographic neighborhood density (OLD20), average bigram counts, and orthographic form frequency (CELEX frequency per million) with metrics extracted from the English Lexicon Project (Balota et al., 2007) and McWord (Medler & Binder, 2005) databases (all ps > .25). Of the 100 semantically unrelated word pairs, 50 had phonologically related translations in ASL (e.g., gorilla and bath share handshape and location) and 50 had phonologically unrelated translations in ASL (e.g., accent and bath share no parameters). Primes in these two conditions were also balanced for English word length, OLD20, average bigram counts, and orthographic form frequency (all ps > .05). As described below, analyses of phonological priming included only these trials. Of the 100 semantically related pairs, 20 were phonologically related in ASL (e.g., mouse and rat share location and movement) and 80 were phonologically unrelated in ASL (e.g., squirrel and rat). Although there were too few pairs that were related both phonologically and semantically to be analyzed independently, these 20 trials were included to prevent participants from learning that phonologically related pairs always required a ‘no’ response in the semantic judgment task.

One pair from each triplet was assigned to one of two presentation lists such that each list contained one presentation of each target and half of the trials from each of the four prime conditions. The presentation order of these lists was counterbalanced across participants (e.g., half of the participants saw gorilla-bath in the first list and half saw accent-bath in the first list) to minimize effects of target repetition. Trial order was pseudorandomized within blocks to avoid having more than three consecutive trials with the same semantic relationship (and response).
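The ordering constraint above (no more than three consecutive trials with the same semantic relationship) can be met with simple rejection sampling. The sketch below is a hypothetical illustration, not the randomization procedure actually used: it reshuffles a block until the longest run of identical response labels is at most three. The block contents, the 12-trial block size, the `seed`, and the `max_tries` limit are all assumptions.

```python
import random
from itertools import groupby


def max_run_length(labels):
    """Length of the longest run of identical consecutive labels (0 if empty)."""
    return max((sum(1 for _ in run) for _, run in groupby(labels)), default=0)


def pseudorandomize(trials, key, max_run=3, seed=None, max_tries=100_000):
    """Return a shuffled copy of `trials` in which no more than `max_run`
    consecutive trials share the same key(trial).

    Rejection sampling: shuffle, check the constraint, and retry on failure.
    """
    rng = random.Random(seed)
    order = list(trials)
    for _ in range(max_tries):
        rng.shuffle(order)
        if max_run_length([key(t) for t in order]) <= max_run:
            return order
    raise RuntimeError("no valid order found; relax max_run or raise max_tries")


# Hypothetical 12-trial block: (prime, target, expected response).
block = [("mouse", "rat", "yes"), ("gorilla", "bath", "no")] * 6
ordered = pseudorandomize(block, key=lambda trial: trial[2], seed=7)
```

Rejection sampling is adequate for short blocks; for long sequences with tight run limits, a constructive or backtracking approach would waste fewer shuffles.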

The semantic relationship between pairs was determined through several rounds of semantic ratings from 15–16 hearing native speakers of English per round. On a paper response sheet, participants rated each pair on a 1–7 scale based on how similar in meaning the two words were (7 = very similar). Half of the participants saw the word pairs in a randomized order and the other half saw them in the reverse order. Pairs with mean semantic ratings of ≤ 2.5 were included in the semantically unrelated condition (mean 1.36, SD .34) and those with mean semantic ratings of ≥ 5.4 were included in the semantically related condition (mean 6.20, SD .34). These cut-offs are similar to, or more restrictive than, those of previous behavioral studies with bimodal bilinguals (Kubus et al., 2015; Morford et al., 2014; Morford et al., 2011; Villameriel et al., 2016). There was a significant difference between the ratings of semantically related and unrelated trials, t(198) = 100.43, p < .001. Importantly, for the semantically unrelated condition, the semantic ratings for trials with phonologically related ASL translations (mean 1.37, SD .28) did not significantly differ from those with phonologically unrelated translations (mean 1.34, SD .38), t(98) = .44, p = .658.
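The rating cut-offs and the counterbalanced presentation orders described above can be expressed as two small helpers. This is an illustrative sketch with hypothetical names (`classify_pair`, `rating_orders`); it assumes each pair's ratings are available as a list of integers and that pairs falling between the two cut-offs were simply not used.

```python
import random
from statistics import mean

UNRELATED_MAX = 2.5  # mean similarity rating at or below this: semantically unrelated
RELATED_MIN = 5.4    # mean similarity rating at or above this: semantically related


def classify_pair(ratings):
    """Map one pair's 1-7 similarity ratings to a semantic condition, or None if excluded."""
    m = mean(ratings)
    if m <= UNRELATED_MAX:
        return "unrelated"
    if m >= RELATED_MIN:
        return "related"
    return None  # intermediate pairs fall in neither condition


def rating_orders(pairs, seed=None):
    """Randomized order for half of the raters and its reverse for the other half."""
    forward = list(pairs)
    random.Random(seed).shuffle(forward)
    return forward, forward[::-1]


print(classify_pair([1, 2, 1, 1]))  # unrelated (mean 1.25)
print(classify_pair([4, 4, 3, 5]))  # None (mean 4.0)
```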

To determine the phonological relationship between ASL translations of the word pairs, we first needed to establish that each of the English words in the critical trials had a consistent translation in ASL. To do this, we presented single words to 16 deaf signers and elicited a single ASL translation. Two researchers (a deaf native ASL signer and a hearing L2 signer) determined whether or not the target sign was produced (with 94.4% interrater reliability); only signs with at least 75% consistency (i.e., produced by 12 out of 16 participants) were included.¹ These signs were then used to determine phonological relatedness. Within the critical semantically unrelated conditions, the ASL translations of phonologically related pairs shared two of three phonological parameters (handshape, location, and movement), as in previous studies (e.g., Morford et al., 2014; Morford et al., 2011), and the ASL translations of phonologically unrelated pairs shared none.
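The two inclusion rules in this paragraph, the 75% translation-consistency threshold and the two-of-three-parameter definition of ASL phonological relatedness, can be written as small predicates. This is a sketch under assumptions: signs are reduced to hypothetical categorical codes for handshape, location, and movement (not real ASL transcriptions), and pairs sharing exactly one or all three parameters are treated as belonging to neither critical condition, which the text implies but does not state.

```python
from fractions import Fraction

PARAMETERS = ("handshape", "location", "movement")


def is_consistent(responses, target_sign, threshold=Fraction(3, 4)):
    """True if at least `threshold` of elicited translations match the target sign."""
    matches = sum(1 for r in responses if r == target_sign)
    return Fraction(matches, len(responses)) >= threshold


def shared_parameters(sign_a, sign_b):
    """Number of the three major parameters on which two sign descriptions agree."""
    return sum(1 for p in PARAMETERS if sign_a[p] == sign_b[p])


def asl_phonology_condition(sign_a, sign_b):
    """'related' if two parameters are shared, 'unrelated' if none, otherwise None."""
    n = shared_parameters(sign_a, sign_b)
    if n == 2:
        return "related"
    if n == 0:
        return "unrelated"
    return None


# Hypothetical, schematic parameter codes mirroring the examples in the text:
# GORILLA and BATH share handshape and location; ACCENT and BATH share nothing.
GORILLA = {"handshape": "HS1", "location": "LOC1", "movement": "MOV1"}
BATH = {"handshape": "HS1", "location": "LOC1", "movement": "MOV2"}
ACCENT = {"handshape": "HS2", "location": "LOC2", "movement": "MOV3"}
```

Using `Fraction` keeps the threshold comparison exact, so 12 of 16 responses (exactly 75%) passes and 11 of 16 does not.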

2.1.3. Procedure

After participants provided informed consent, the EEG cap was placed and they were seated in a dimly lit room, in a comfortable chair about 140 cm from the stimulus monitor. Instructions were provided in both English and ASL, and a native deaf signer was present throughout testing.

All participants saw all 200 trials, consisting of an English prime and target presented in lowercase Arial font. Targets subtended a maximal horizontal visual angle of 3.27 degrees and a maximal vertical visual angle of .41 degrees (the longest target, government, was 1 cm tall and 8 cm long). Each trial began with a purple fixation cross that was presented at the center of the screen for 900 ms, followed by a white fixation cross that was presented for 500 ms, and a 500 ms blank screen. The prime was then displayed for 500 ms, followed by a 500 ms blank screen, before the target was presented for 750 ms. Thus, a 1000 ms SOA separated the prime and the target, identical to previous studies with bimodal bilinguals (e.g., Morford et al., 2014; Morford et al., 2011) and similar to previous studies with unimodal bilinguals that used a variable SOA between 1000 and 1200 ms (e.g., Thierry & Wu, 2007). After target offset, the screen remained blank until the next trial began 750 ms after the button press response.
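The reported visual angles follow from the standard formula θ = 2·arctan(s / 2d) for an object of size s viewed at distance d, and the 1000 ms SOA follows from the event durations. The check below uses the values given in the text (an 8 cm by 1 cm word at about 140 cm); `visual_angle_deg` and `onset_ms` are illustrative helpers, not part of the original materials.

```python
import math


def visual_angle_deg(size_cm, distance_cm):
    """Full visual angle (degrees) subtended by an object of size_cm viewed at distance_cm."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


# Trial sequence from the Procedure, as (event, duration in ms).
TRIAL = [
    ("purple fixation", 900),
    ("white fixation", 500),
    ("blank", 500),
    ("prime", 500),
    ("blank", 500),
    ("target", 750),
]


def onset_ms(events, name):
    """Onset of the first event called `name`, relative to trial start."""
    t = 0
    for event, duration in events:
        if event == name:
            return t
        t += duration
    raise KeyError(name)


print(round(visual_angle_deg(8, 140), 2))  # 3.27 (horizontal extent of "government")
print(round(visual_angle_deg(1, 140), 2))  # 0.41 (vertical extent)
print(onset_ms(TRIAL, "target") - onset_ms(TRIAL, "prime"))  # 1000 (prime-target SOA)
```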

Participants were instructed to press one button if the meanings of the two words were related and another button if they were not, as quickly and accurately as possible. Response hand was counterbalanced across participants. Short breaks occurred roughly every 12 trials and longer breaks were provided after each quarter of the experiment. Participants were instructed to blink during these breaks and when the purple fixation cross was on the screen between trials. The experiment began with a practice set of 12 word pairs not included in the experiment (three pairs from each of the four experimental conditions). Unlike in the main experiment, none of the practice targets were repeated.

After the ERP experiment, participants were asked whether they noticed anything special about the pairs of words and, if so, what and when they noticed, and whether they felt that this affected their ability to make semantic relatedness judgments. Based on their responses to the first question of this debrief questionnaire, 14 participants were included in an implicit co-activation subgroup (i.e., those who did not report noticing the phonological relationship in ASL) and 10 were included in an explicit translation subgroup (i.e., those who reported noticing the overlap in handshape, location, or movement of the ASL translations).

2.1.4. EEG Recording and Analysis

Participants were fitted with an elastic cap (Electro-Cap) with 29 active electrodes. An electrode placed on the left mastoid was used as a reference during recording and for subsequent analyses. An electrode located below the left eye was used to identify blink artifacts in conjunction with recordings from FP1; an electrode on the outer canthus of the right eye was used to identify artifacts due to horizontal eye movements. Using saline gel (Electro-Gel), mastoid and scalp electrode impedances were maintained below 2.5 kΩ and eye electrode impedances below 5 kΩ. EEG was amplified with SynAmsRT amplifiers (Neuroscan-Compumedics) with a bandpass of DC to 100 Hz and was sampled continuously at 500 Hz.

Offline, ERPs were time-locked to targets and averaged over a 1,000 ms epoch that included a 100 ms pre-stimulus baseline, and were low-pass filtered at 15 Hz. Electrodes were grouped into five regions of interest (ROIs), four of which were included in analyses (see Figure 1). The left anterior (LA) ROI included sites F3, F7, FC5, and T3; the right anterior (RA) ROI included sites F4, F8, FC6, and T4; the middle (M) ROI included sites FC1, FC2, Cz, CP1, and CP2; the left posterior (LP) ROI included sites CP5, T5, P3, and O1; and the right posterior (RP) ROI included sites CP6, T6, P4, and O2. Trials contaminated by eye movement or drift artifacts were excluded from all analyses (5 trials, or 2.5%, on average).

Figure 1. Electrode montage and ROIs.

The left anterior (LA) ROI is indicated in orange, the right anterior (RA) ROI is indicated in purple, the left posterior (LP) ROI is indicated in green, and the right posterior (RP) ROI is indicated in blue. The middle (M) ROI, indicated in grey, was not included in analyses.
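The baseline correction and ROI averaging steps can be sketched in plain Python. This is an illustrative sketch assuming averaged, artifact-free waveforms sampled at 500 Hz with the epoch starting 100 ms before target onset; it omits the 15 Hz low-pass filter, and including the window end point is an assumption. The ROI memberships are copied from the text; the middle ROI is left out because it was not analyzed.

```python
SAMPLING_RATE_HZ = 500
EPOCH_START_MS = -100                    # 500 samples span -100 to 898 ms
MS_PER_SAMPLE = 1000 / SAMPLING_RATE_HZ  # 2 ms per sample

ROIS = {
    "LA": ["F3", "F7", "FC5", "T3"],
    "RA": ["F4", "F8", "FC6", "T4"],
    "LP": ["CP5", "T5", "P3", "O1"],
    "RP": ["CP6", "T6", "P4", "O2"],
}


def sample_index(t_ms):
    """Index of the sample at latency t_ms relative to target onset."""
    return round((t_ms - EPOCH_START_MS) / MS_PER_SAMPLE)


def baseline_correct(wave):
    """Subtract the mean of the pre-stimulus samples (-100 to -2 ms)."""
    pre = wave[:sample_index(0)]
    offset = sum(pre) / len(pre)
    return [v - offset for v in wave]


def mean_amplitude(wave, start_ms, end_ms):
    """Mean of the samples from start_ms to end_ms inclusive."""
    segment = wave[sample_index(start_ms):sample_index(end_ms) + 1]
    return sum(segment) / len(segment)


def roi_mean_amplitude(erp, roi, start_ms=300, end_ms=500):
    """Baseline-corrected mean amplitude averaged over the channels of one ROI.

    `erp` maps channel name -> averaged waveform (list of microvolts).
    """
    values = [mean_amplitude(baseline_correct(erp[ch]), start_ms, end_ms) for ch in ROIS[roi]]
    return sum(values) / len(values)
```

Because baseline subtraction and averaging are both linear, applying the baseline to each channel before averaging within an ROI gives the same result as averaging first.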

To investigate whether the hidden phonological relationship of the ASL translations influenced processing, we compared ERPs for targets in semantically unrelated word pairs that were phonologically related and unrelated in ASL. Because of the triplet design, the ERPs in these two conditions were time-locked to identical targets (e.g., bath), preceded by primes that were phonologically related in ASL (e.g., gorilla) or not (e.g., accent). Mean N400 amplitude was calculated for each subject at each ROI between 300 and 500 ms, a window chosen based on visual inspection of the average waveforms and consistent with previous studies (e.g., Guo et al., 2012; Gutiérrez, Müller, Baus, & Carreiras, 2012). An ANOVA with factors ASL-phonology (Related, Unrelated), Hemisphere (Left, Right), and Anterior/Posterior (Anterior, Posterior) was used to analyze the N400 data. Because our primary question concerned implicit cross-language co-activation in bimodal bilinguals, but our final group also included participants who reported being aware of the manipulation in ASL, we conducted a similar ANOVA including only data from participants for whom the phonological manipulation in ASL was implicit. For the sake of completeness, we also report a similar analysis including only the participants who reported being explicitly aware of the manipulation.
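Because every factor in this ANOVA has two levels, each effect has one numerator degree of freedom, and its F(1, n − 1) equals the square of a one-sample t statistic computed on one contrast score per participant. The sketch below uses that equivalence to compute the ASL-phonology effects from per-participant ROI means; the data layout (a dict keyed by condition and ROI) and the function names are hypothetical, and this is not presented as the analysis code actually used.

```python
from math import sqrt
from statistics import mean, stdev

ROI_NAMES = ("LA", "RA", "LP", "RP")


def f_from_contrast(scores):
    """F(1, n-1) for a one-df within-subject effect: the squared one-sample t
    of the per-participant contrast scores against zero."""
    n = len(scores)
    t = mean(scores) / (stdev(scores) / sqrt(n))
    return t * t, (1, n - 1)


def phonology_contrasts(subject):
    """Per-participant contrast scores for the ASL-phonology effects.

    `subject` maps (condition, roi) -> mean amplitude in the analysis window;
    d[roi] is the unrelated-minus-related difference at that ROI.
    """
    d = {roi: subject[("unrelated", roi)] - subject[("related", roi)] for roi in ROI_NAMES}
    return {
        "Phonology": mean(d.values()),
        "Phonology x Hemisphere": (d["LA"] + d["LP"]) / 2 - (d["RA"] + d["RP"]) / 2,
        "Phonology x Hemisphere x Anterior/Posterior": d["LA"] - d["RA"] - d["LP"] + d["RP"],
    }


def phonology_anova(subjects):
    """F and degrees of freedom for each phonology effect across participants."""
    per_subject = [phonology_contrasts(s) for s in subjects]
    return {effect: f_from_contrast([c[effect] for c in per_subject]) for effect in per_subject[0]}
```

Rescaling a contrast (for example, averaging rather than summing the ROI differences) leaves its t, and therefore F, unchanged, so only the pattern of signs matters.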

Visual inspection of the phonological priming effect suggested that there was also a strong post-N400 effect; a point-by-point time course analysis of the effect confirmed this impression and indicated that it was most reliable between 700 and 900 ms. Thus, an ANOVA with factors ASL-phonology (Related, Unrelated), Hemisphere (Left, Right), and Anterior/Posterior (Anterior, Posterior) was also used to analyze mean amplitude within this late window. These effects were also examined separately for the implicit and explicit subgroups in planned comparisons.
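A point-by-point analysis of this kind can be sketched as a one-sample t-test against zero at every sample of each participant's unrelated-minus-related difference wave, followed by a search for the longest run of consecutive significant samples. The criterion below (|t| > 2.069, the two-tailed .05 critical value for df = 23, with no correction for multiple comparisons and no minimum run length) is an assumption for illustration; the text does not specify the criterion that was used.

```python
from math import sqrt
from statistics import mean, stdev

T_CRIT_DF23 = 2.069  # two-tailed alpha = .05 with df = 23 (n = 24 participants)


def pointwise_t(diff_waves):
    """One-sample t against zero at each sample.

    `diff_waves` holds one unrelated-minus-related waveform per participant,
    all sample-aligned; a sample with zero variance gets t = 0.
    """
    n = len(diff_waves)
    ts = []
    for samples in zip(*diff_waves):
        sd = stdev(samples)
        ts.append(mean(samples) / (sd / sqrt(n)) if sd > 0 else 0.0)
    return ts


def longest_significant_run(ts, times_ms, t_crit=T_CRIT_DF23):
    """(first_ms, last_ms) of the longest run of consecutive |t| > t_crit, or None."""
    best, start = None, None
    for i, t in enumerate(ts + [0.0]):  # trailing 0.0 closes a run at the epoch end
        if abs(t) > t_crit:
            if start is None:
                start = i
        elif start is not None:
            if best is None or i - start > best[1] - best[0] + 1:
                best = (start, i - 1)
            start = None
    return None if best is None else (times_ms[best[0]], times_ms[best[1]])
```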

Semantic priming analyses included only trials that had correct responses. Mean N400 amplitude was calculated for each subject at each ROI between 300 and 500 ms separately for semantically related and unrelated trials. An ANOVA with factors Semantics (Related, Unrelated), Hemisphere (Left, Right), and Anterior/Posterior (Anterior, Posterior) was used to analyze the N400 semantic priming effect. Significant effects were examined separately for the implicit and explicit subgroups in planned comparisons.

Finally, exploratory Pearson correlations were used to examine potential associations between the measures of English proficiency, the RT effects, and the ERP effects. For the RTs, difference scores were calculated by subtracting the phonologically and semantically related conditions from the respective unrelated conditions. Similarly, ERPs in the related conditions were subtracted from the ERPs in the respective unrelated conditions. Mean amplitude was calculated for the ROI(s) where the effects were strongest: the right anterior ROI for the N400 phonological effect, the average of the two right ROIs for the late (700–900 ms) phonological effect, and the right posterior ROI for the N400 semantic effect.
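The exploratory correlations pair one difference score per participant with an English proficiency score. A minimal sketch, with hypothetical helper names and no significance test: difference scores are computed as unrelated minus related, as described above, and Pearson's r is written out from its definition.

```python
from math import sqrt


def difference_scores(unrelated, related):
    """Per-participant unrelated-minus-related differences (RT in ms or amplitude in µV)."""
    return [u - r for u, r in zip(unrelated, related)]


def right_hemisphere_mean(ra, rp):
    """Average of the two right ROIs, as used for the late (700-900 ms) effect."""
    return [(a + p) / 2 for a, p in zip(ra, rp)]


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)
```

With this subtraction order, phonological interference (slower related trials) yields negative RT differences, whereas semantic facilitation yields positive ones; applied to the group means reported in the Results, these are 892 − 937 = −45 ms and 914 − 851 = 63 ms.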

2.2. Results

2.2.1. Behavioral Measures

Trials with incorrect responses (11 trials, or 5.5%, on average) or RTs shorter than 200 ms or longer than 2000 ms (2 trials, or 1%, on average) were discarded from all behavioral analyses.
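The exclusion rule can be sketched as a single pass over each participant's trials. With 200 trials per participant, 11 incorrect trials correspond to 5.5% and 2 out-of-range trials to 1%. The trial record format (a dict with `correct` and `rt` keys) is an assumption, as is counting incorrect trials first so that each excluded trial is counted only once.

```python
def usable_rt_trials(trials, min_rt=200, max_rt=2000):
    """Split trials into those kept for the RT analyses and exclusion counts.

    Each trial is a dict with 'correct' (bool) and 'rt' (ms). Incorrect trials
    are counted first; correct trials outside [min_rt, max_rt] count as RT outliers.
    """
    kept, n_incorrect, n_outlier = [], 0, 0
    for trial in trials:
        if not trial["correct"]:
            n_incorrect += 1
        elif not (min_rt <= trial["rt"] <= max_rt):
            n_outlier += 1
        else:
            kept.append(trial)
    return kept, n_incorrect, n_outlier
```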

2.2.1.1. ASL Phonological Effects

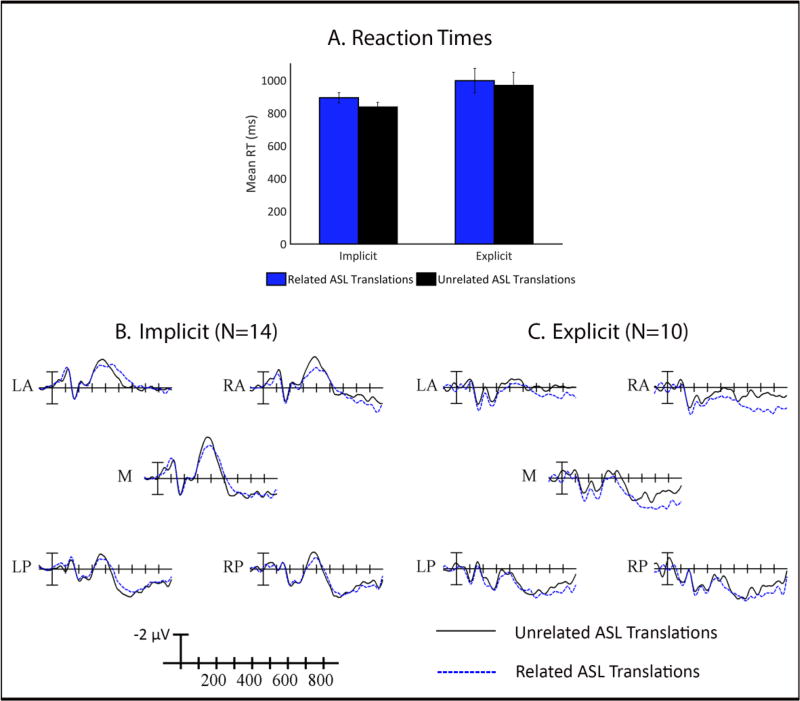

Only semantically unrelated trials, for which there were equal numbers of phonologically related and unrelated word pairs, were included in analyses of phonological interference. In line with our predictions, a linear mixed-effects model (with random intercepts for items and participants) indicated that RTs for phonologically related trials (mean 937 ms; SE = 36 ms) were slower than for phonologically unrelated trials (mean 892 ms; SE = 39 ms), t = 3.30, 95% CI = [19.31, 75.99]. This effect was significant for the implicit subgroup, t = 3.46, 95% CI = [24.93, 89.97], but not for the explicit subgroup, t = 1.61, 95% CI = [−6.94, 71.57] (see Figure 2A).
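The reported t values and confidence intervals are mutually consistent if each interval is a symmetric Wald interval (estimate ± 1.96 × SE) with t = estimate / SE. The check below is a hedged sketch under that assumption, since the text does not say how the intervals were computed. It back-calculates the fixed-effect estimate, SE, and t from each interval. The model estimate (about 47.65 ms for the whole group) need not equal the difference between the raw condition means (937 − 892 = 45 ms).

```python
from statistics import NormalDist

Z_975 = NormalDist().inv_cdf(0.975)  # about 1.96


def wald_t_from_ci(lower, upper, z=Z_975):
    """Recover (estimate, SE, t) from a symmetric 95% Wald interval: estimate +/- z * SE."""
    estimate = (lower + upper) / 2
    se = (upper - lower) / (2 * z)
    return estimate, se, estimate / se


for label, ci in [("whole group", (19.31, 75.99)),
                  ("implicit", (24.93, 89.97)),
                  ("explicit", (-6.94, 71.57))]:
    est, se, t = wald_t_from_ci(*ci)
    print(f"{label}: estimate {est:.2f} ms, SE {se:.2f} ms, t {t:.2f}")
```

The same check can be applied to the semantic priming intervals reported in the next section.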

Figure 2. ASL Phonological Effects for Each Subgroup.

(A) Mean reaction times (RTs) to correct semantically unrelated ‘no’ trials as a function of whether the ASL translations were phonologically related or unrelated, plotted separately for the implicit and explicit subgroups. The effect of ASL phonological relatedness, such that phonologically related trials (blue) elicited slower responses than phonologically unrelated trials (black), was significant for the implicit subgroup but not for the explicit subgroup. Grand average ERP waveforms elicited by targets in pairs with phonologically unrelated ASL translations and phonologically related translations are also plotted for the implicit subgroup alone (B) and the explicit subgroup alone (C). Each vertical tick marks 100 ms and negative is plotted up. The calibration bar marks 2 µV.

2.2.1.2. Semantic Priming Effects

As predicted, a linear mixed-effects model confirmed that semantically related trials (mean 851 ms; SE = 30 ms) elicited faster responses than semantically unrelated trials (mean 914 ms; SE = 38 ms), t = 3.17, 95% CI = [24.08, 101.88]. The semantic priming RT effect was significant for both the implicit subgroup, t = 2.18, 95% CI = [3.67, 70.38], and the explicit subgroup, t = 2.67, 95% CI = [26.36, 172.58].

2.2.2. ERPs: ASL Phonological Effects

2.2.2.1. Whole Group

Targets in phonologically unrelated pairs elicited larger negativities than targets in phonologically related pairs within the N400 window overall, especially in the right anterior ROI, Phonology × Hemisphere × Anterior/Posterior, F(1,23) = 5.75, p = .025, ηp² = .20 (see Figure 3).² Targets in phonologically unrelated pairs continued to elicit larger negativities than targets in phonologically related pairs from 700 to 900 ms, particularly over right hemisphere ROIs, Phonology × Hemisphere, F(1,23) = 4.46, p = .046, ηp² = .16.
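The effect sizes reported with each F test can be recovered with the standard identity ηp² = (F × df_effect) / (F × df_effect + df_error). The sketch below applies it to the two whole-group phonology effects. The helper name is hypothetical, and small discrepancies in the second decimal can arise from rounding of the reported F values.

```python
def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f * df_effect) / (f * df_effect + df_error)


reported = [  # (F, df_effect, df_error, reported partial eta squared)
    (5.75, 1, 23, 0.20),  # whole group, N400 three-way interaction
    (4.46, 1, 23, 0.16),  # whole group, 700-900 ms Phonology x Hemisphere
]
for f, df1, df2, eta in reported:
    print(round(partial_eta_squared(f, df1, df2), 2), eta)
```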

Figure 3. ASL Phonological Effects in Bimodal Bilinguals.

(A) Grand average ERP waveforms elicited by targets in pairs with phonologically unrelated ASL translations (black) and phonologically related translations (blue). Each vertical tick marks 100 ms and negative is plotted up. The calibration bar marks 2 µV. (B) Scalp voltage maps showing the difference in mean amplitude between phonologically unrelated and related trials for each of the analyzed time windows.

2.2.2.2. Implicit Subgroup

In separate analyses that included only the implicit subgroup, we found an N400 effect with the same right anterior distribution as in the whole bimodal bilingual group, Phonology × Hemisphere × Anterior/Posterior, F(1,13) = 5.09, p = .042, ηp² = .28 (see Figure 2B). The effect from 700 to 900 ms only approached significance, Phonology × Hemisphere, F(1,13) = 4.19, p = .061, ηp² = .24; it went in the expected direction over right hemisphere sites and in the opposite direction over left hemisphere sites.

2.2.2.3. Explicit Subgroup

Although the N400 effect went in the expected direction for the explicit subgroup, it did not reach significance, Phonology × Hemisphere × Anterior/Posterior, F(1,9) = 1.01, p = .340, ηp² = .10, possibly because of the smaller number of participants in this analysis. However, the late effect was widespread and significant, Phonology, F(1,9) = 5.95, p = .037, ηp² = .40 (see Figure 2C).

2.2.3. ERPs: N400 Semantic Priming Effects

There was a clear N400 semantic priming effect that was largest in the right posterior ROI, Semantics, F(1,23) = 84.11, p < .001, ηp² = .78, Semantics × Hemisphere, F(1,23) = 11.88, p = .002, ηp² = .34, Semantics × Anterior/Posterior, F(1,23) = 36.46, p < .001, ηp² = .61, Semantics × Hemisphere × Anterior/Posterior, F(1,23) = 4.9, p = .037, ηp² = .18 (see Figure 4). This effect remained significant for both the implicit subgroup, Semantics, F(1,13) = 131.03, p < .001, Semantics × Hemisphere, F(1,13) = 7.83, p = .015, Semantics × Anterior/Posterior, F(1,13) = 15.43, p = .002, and the explicit subgroup, Semantics, F(1,9) = 14.14, p = .004, Semantics × Hemisphere, F(1,9) = 5.32, p = .046, Semantics × Anterior/Posterior, F(1,9) = 25.21, p = .001.

Figure 4. Semantic Priming Effects in Bimodal Bilinguals.

(A) Grand average ERP waveforms elicited by targets in semantically unrelated (black) and semantically related (blue) pairs at each ROI. Each vertical tick marks 100 ms and negative is plotted up. The calibration bar marks 2 µV. (B) Scalp voltage maps showing the semantic priming effect on mean N400 amplitude (unrelated-related).

2.2.4. Correlations

A significant correlation between the RT effect of phonological relatedness and reading comprehension ability (PIAT scores), r = .46, p = .024, indicated that participants who had lower L2 English proficiency were more affected by the phonological relatedness of the ASL translations (i.e., had a larger RT difference between ASL related and unrelated pairs). The correlations between PIAT scores and the two ERP effects of ASL phonology were not significant, both ps > .39, and neither were the correlations between the RT and ERP effects of phonological relatedness, both ps > .09. Finally, neither of the semantic priming measures correlated with PIAT scores, both ps > .59.
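The reported correlation can be converted to a t statistic, t = r√(n − 2) / √(1 − r²), with n − 2 degrees of freedom. The Python sketch below assumes a Pearson correlation computed over all 24 bimodal bilinguals (the sample size implied by the F(1,23) tests above) and evaluates the two-tailed p value by integrating the Student t density numerically with Simpson's rule, using only the standard library; the helper names are hypothetical.

```python
import math

# Sketch: significance test for a correlation coefficient.
# ASSUMPTIONS: Pearson r, n = 24 participants, two-tailed test.


def r_to_t(r: float, n: int) -> tuple:
    """Convert a correlation r from n observations to (t, df)."""
    df = n - 2
    return r * math.sqrt(df) / math.sqrt(1.0 - r * r), df


def student_t_pdf(x: float, df: int) -> float:
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm) * (1.0 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t: float, df: int, steps: int = 2000) -> float:
    """Two-tailed p value, integrating the density over [0, |t|] (Simpson)."""
    a, b = 0.0, abs(t)
    h = (b - a) / steps  # steps must be even for Simpson's rule
    total = student_t_pdf(a, df) + student_t_pdf(b, df)
    for i in range(1, steps):
        weight = 4 if i % 2 else 2
        total += weight * student_t_pdf(a + i * h, df)
    area = total * h / 3.0  # P(0 <= T <= |t|)
    return 1.0 - 2.0 * area


t, df = r_to_t(0.46, 24)
p = two_tailed_p(t, df)
print(f"t({df}) = {t:.2f}, two-tailed p = {p:.3f}")
```

With these assumptions the result agrees with the reported p = .024, which lends some support to the inference that the correlation was computed over the full group of 24 participants.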

2.3. Discussion

Overall, these results support the notion that implicit priming in bimodal bilinguals occurs through automatic lexicosemantic interactivity across languages in two different modalities. Consistent with previous studies with bimodal bilinguals (e.g., Kubus et al., 2015; Morford et al., 2014; Morford et al., 2011; Villameriel et al., 2016), but not unimodal bilinguals (Thierry & Wu, 2007; Wu & Thierry, 2010), we found an RT interference effect for semantically unrelated (‘no’ response) trials that had phonologically related ASL translations, as compared to those that had phonologically unrelated ASL translations. This effect held for participants who reported not being aware of the phonological manipulation at debrief, suggesting that the results of previous behavioral studies cannot solely be attributed to explicit translation strategies. The ERP results in the implicit subgroup provide further evidence of lexicosemantic spreading of activation; an effect of the phonological relatedness of the ASL translations was found within the N400 window, consistent with previous studies with unimodal bilinguals (e.g., Thierry & Wu, 2007; Wu & Thierry, 2010), albeit with a distinct right anterior scalp distribution. Given that the timing of automatic language co-activation is similar across the two types of bilinguals, we suggest that the subsequent phonological RT interference found in bimodal, but not unimodal, bilinguals results from less robust suppression of the co-activated translation equivalent.

In the subgroup of 10 participants who reported noticing the manipulation, the most notable effect of ASL phonology appeared between 700 and 900 ms. This later effect, which might index explicit translation and post-lexical conscious analysis of the phonological relationship of the translations, confirms the utility of online measures for differentiating between implicit and explicit processing. It should be noted, however, that because this subgroup was so small, the null behavioral and N400 window effects should be interpreted with caution and deserve replication in a larger group of participants who are explicitly aware of the manipulation in the non-target language. We elaborate on the significance of these findings and potential follow-up studies in the General Discussion after presenting a control experiment with sign-naïve hearing participants.

3. Experiment 2: Sign-Naïve Controls

In order to ensure that the effects observed in Experiment 1 were a result of the phonological relatedness in ASL rather than some other uncontrolled variable, we conducted the same experiment with ASL-naïve hearing controls. If the effects observed in Experiment 1 are indeed related to co-activation of ASL, there should be no significant effects in the phonological analyses in this group. We did, however, expect to find reliable semantic priming effects, as reflected in faster RTs and smaller amplitude N400s for semantically related trials as compared to semantically unrelated trials.

3.1. Method

3.1.1. Participants

Twenty-four hearing non-signers (12 female; mean age 29.3 years, SD 8.1 years) who had no formal exposure to American Sign Language and no exposure to any language other than English before the age of 6 participated. The average PIAT raw score in this group was 89.8 (SE 1.88). Three participants were left-handed, and none had taken part in the semantic rating norming study described above. Participants received monetary compensation for their time, and informed consent was obtained as in Experiment 1. Data from an additional five participants were excluded due to high artifact rejection rates.

3.1.2. Stimuli and Procedure

The stimuli, procedure, and analyses were identical to those of Experiment 1, with two minor changes: instructions were provided both in print and verbally, and there was no debrief about phonological relatedness in ASL. On average, 2 trials (1%) per participant were rejected because of artifacts.

3.2. Results

3.2.1. Behavioral Effects

Trials with incorrect responses (7 trials, or 3.5%, on average) or with RTs shorter than 200 ms or longer than 2000 ms (2 trials, or 1%, on average) were eliminated. As predicted, a linear mixed-effects model indicated that there was no effect of ASL phonological relatedness on RTs in the sign-naïve control group, t = 1.29, 95% CI = [−9.82, 47.80]. Also as predicted, a linear mixed-effects model of RTs for correct trials indicated that semantically related trials (mean 859 ms, SE 49 ms) elicited faster responses than semantically unrelated trials (mean 936 ms, SE 50 ms), t = 3.69, 95% CI = [34.90, 114.04].

3.2.2. ERP Effects

There were no reliable effects of ASL phonology in the N400 window, all ps > .48, or the late window, all ps > .19, in this sign-naïve control group (see Figure 5). As in the deaf bimodal bilinguals in Experiment 1, the semantic priming effect was present and strongest in the right posterior ROI, Semantics, F(1,23) = 56.79, p < .001, ηp² = .71, Semantics × Hemisphere, F(1,23) = 14.38, p = .001, ηp² = .38, Semantics × Anterior/Posterior, F(1,23) = 31.96, p < .001, ηp² = .58, Semantics × Hemisphere × Anterior/Posterior, F(1,23) = 22.35, p < .001, ηp² = .49 (see Figure 6).

Figure 5. ASL Phonological Effects in Hearing Controls.

(A) Grand average ERP waveforms elicited by targets in pairs with phonologically unrelated ASL translations (black) and phonologically related translations (blue). Each vertical tick marks 100 ms and negative is plotted up. The calibration bar marks 2 µV. (B) Scalp voltage maps showing the difference in mean amplitude between phonologically unrelated and related trials for each of the analyzed time windows.

Figure 6. Semantic Priming Effects in Hearing Controls.

(A) Grand average ERP waveforms elicited by targets in semantically unrelated (black) and semantically related (blue) pairs at each ROI. Each vertical tick marks 100 ms and negative is plotted up. The calibration bar marks 2 µV. (B) Scalp voltage maps showing the semantic priming effect on mean N400 amplitude (unrelated-related).

3.2.3. Correlations

English proficiency as measured by the PIAT did not correlate with the RT or ERP effects of ASL phonology in this group, all ps > .29. There was also no correlation between the RT effect and either of the ASL phonology ERP effects, both ps > .41. Finally, PIAT scores did not correlate with the RT or the ERP semantic priming effects, both ps > .41.

3.3. Discussion

None of the ASL phonology effects were significant in this control group of sign-naïve hearing participants, confirming that the results observed in Experiment 1 were related to co-activation of the ASL translation equivalents in the deaf bimodal bilinguals, rather than some other factor that differed between the two prime lists. In contrast, the RT and N400 effects of semantic priming in this group were similar to those found in the bimodal bilinguals in Experiment 1.

4. General Discussion

In the first ERP study to investigate implicit phonological priming in bimodal bilinguals, we found that languages that differ in modality can be co-activated automatically, but are not suppressed to the same degree as languages that occur within the same modality. Targets in English word pairs with phonologically related ASL translations elicited smaller negativities within the N400 window than targets in word pairs with phonologically unrelated ASL translations, including when participants reported being unaware of the ASL manipulation. This result strengthens the interpretation, advanced in previous studies, that activation automatically spreads between languages that differ in modality. In contrast to previous studies with unimodal bilinguals (e.g., Thierry & Wu, 2007; Wu & Thierry, 2010), but consistent with previous behavioral studies with bimodal bilinguals (e.g., Kubus et al., 2015; Morford et al., 2014; Morford et al., 2011), word pairs with phonologically related ASL translations also elicited slower RTs than word pairs with phonologically unrelated ASL translations in the semantic relatedness judgment task. This RT interference effect was especially prominent for participants with lower levels of English reading comprehension, suggesting that deaf bimodal bilinguals with lower proficiency in English rely more on lexical links to the ASL translation equivalents. Finally, processing in participants who reported being aware of the hidden phonological manipulation was characterized by an ERP effect between 700 and 900 ms, likely associated with overt translation and assessment of the phonological relationship of the ASL translations. Together, this pattern of results demonstrates that the mechanism of cross-language co-activation is similar between unimodal and bimodal bilinguals, but that subsequent suppression of the non-target language is less robust in bimodal bilinguals. 
These results also lend preliminary insight into the limits of implicit priming in this paradigm and how processing might change as a result of explicit awareness of the hidden phonological manipulation.

4.1. N400 Amplitude Indexes Implicit Co-activation of a Sign Language

Overall, the time course of cross-language co-activation that we found in the implicit subgroup paralleled that observed in unimodal bilinguals in this paradigm (e.g., Thierry & Wu, 2007; Wu & Thierry, 2010), suggesting that similar mechanisms underlie implicit cross-language co-activation in all bilinguals, irrespective of language modality. Word pairs with phonologically related ASL translations elicited smaller negativities than those with phonologically unrelated ASL translations within the typical N400 window. It seems unlikely that this pattern resulted from some factor other than the phonological relationship of the ASL translations, as there were no significant phonological condition effects in the same window in the hearing sign-naïve control group in Experiment 2. Given the timing of this N400 effect and the lack of phonological overlap between English and ASL, interactivity at a lexicosemantic level seems to be the most plausible underlying mechanism, in agreement with unimodal bilingual studies (e.g., Thierry & Wu, 2007). This spreading of activation is formally represented in models of bimodal bilingual processing as direct lexical connections between the written word and the sign translation equivalent and/or indirect connections via a shared semantic store (Ormel, Hermans, Knoors, & Verhoeven, 2012; Shook & Marian, 2012). Thus, these results build on previous behavioral studies with bimodal bilinguals by demonstrating that automatic cross-language co-activation in this population occurs in a bottom-up fashion, rather than exclusively through top-down strategic translation.

The direction of this N400-like effect, though consistent with unimodal studies using this paradigm (e.g., Thierry & Wu, 2007; Wu & Thierry, 2010), is somewhat at odds with the only other study, to our knowledge, to investigate the electrophysiological indices of phonological priming in sign language using form-related versus unrelated sign pairs. Gutiérrez and colleagues (2012) investigated how phonological overlap between prime-target pairs in Spanish Sign Language influenced processing of the target sign in a delayed lexical decision task. Sign targets preceded by a prime that overlapped only in location elicited larger amplitude N400s than sign targets preceded by a phonologically unrelated prime, the opposite of the effect found here. One obvious difference between the two studies is that signs were never overtly presented here, whereas they were in the study by Gutiérrez et al. (2012). However, this is unlikely to be the cause of the reversal, because Thierry and Wu (2007) reported nearly identical phonological priming effects when Chinese characters were overtly presented to monolingual Chinese speakers as when they were implicitly activated in Chinese-English bilinguals.

The divergent N400 effects in the current study and in Gutiérrez et al. (2012) most likely relate to the degree of phonological overlap between the paired signs in each study. In studies on auditory word recognition, the nature of the phonological overlap between word pairs has been shown to influence processing. For example, within a single experiment, cohort member pairs (e.g., cone-comb) elicited larger amplitude N400s than an unrelated condition (e.g., cone-fox), whereas rhyme pairs (e.g., cone-bone) elicited smaller amplitude N400s than unrelated pairs (Desroches, Newman, & Joanisse, 2008). Gutiérrez and colleagues (2012) interpreted their larger N400 amplitude for location-overlap pairs as analogous to the processing of cohort member pairs. In contrast, the two-parameter overlap in the present study appears to be more consistent with the reduced amplitude N400 found for rhymes in spoken language. This interpretation is consistent with the behavioral sign literature, which demonstrates that both the specific parameter and the number of overlapping parameters influence priming effects (e.g., Dye & Shih, 2006) and explicit similarity ratings (e.g., Hildebrandt & Corina, 2002). For example, relative to a phonologically unrelated condition, location overlap by itself has been shown to lead to interference (e.g., Baus, Gutiérrez-Sigut, Quer, & Carreiras, 2008; Corina & Emmorey, 1993), whereas double overlap of location and movement typically leads to facilitation (e.g., Baus, Gutiérrez, & Carreiras, 2014; Dye & Shih, 2006). Here, we demonstrate that phonological overlap of two parameters facilitates processing as measured by N400 amplitude, even when the signs are implicitly activated rather than explicitly presented.

Finally, though the timing and direction of the N400 effect that we observed are in accordance with unimodal bilingual studies, the distribution is strikingly different. The effect of the implicit phonological relationship in ASL was localized to right anterior sites, in contrast to the more centro-posterior effect that typically characterizes N400 effects (e.g., Kutas & Federmeier, 2011). Importantly, this more typical N400 distribution characterized the semantic priming effect in these same participants (and in the hearing monolingual controls; see Figure 4 and Figure 6). Thus, the right anterior distribution seems to be specific to the phonological contrast, rather than specific to this group of deaf participants. This pattern of results suggests that unique neural generators may underlie form processing in a sign language. Specifically, the right anterior distribution may be related in part to the dynamic, visual-depictive nature of signs, given that more frontal N400s have been observed in response to pictures (e.g., Ganis, Kutas, & Sereno, 1996) and that right anterior regions have recently been implicated in three-dimensional spatial reasoning (Han, Cao, Cao, Gao, & Li, 2016). Further supporting this possibility is ongoing work in our lab in which we have observed an anterior negative response when comparing processing of signs with high versus low iconicity in a semantic decision task with deaf ASL signers (Emmorey, Sevcikova Sehyr, Midgley, & Holcomb, 2016). Very little is known about how phonological form in a signed language is neurally instantiated, but evidence is now accumulating to suggest that the biology of the linguistic articulators (i.e., the hands vs. the vocal tract) has an impact on the neural substrate for language (e.g., Corina, Lawyer, & Cates, 2013). 
Documenting how these typological differences do or do not affect processing is an important step toward developing a comprehensive account of the biological basis of language (see Bornkessel-Schlesewsky & Schlesewsky, 2016).

Nonetheless, with the exception of this difference in distribution, the N400 effects observed here are remarkably similar to those observed in unimodal bilinguals (Thierry & Wu, 2007; Wu & Thierry, 2010). The timing of the effect and the finding that it was especially strong for participants who did not report being aware of the phonological relationship support the notion that implicit automatic co-activation occurs at a lexical level in bimodal bilinguals.

4.2. Behavioral Interference Effects Specific to Bimodal Bilinguals

In contrast to the similarities between unimodal and bimodal bilinguals reflected within the N400 window, there seem to be strikingly different behavioral patterns between the two types of bilinguals. Semantically unrelated word pairs with phonologically related ASL translations elicited slower RTs than those with phonologically unrelated ASL translations, whereas previous studies with unimodal bilinguals have failed to find behavioral effects. By administering a debrief questionnaire and conducting separate analyses as a function of explicit awareness, we have shown that this behavioral effect persists even when processing of the hidden phonological relationship is implicit. Thus, our results clarify previous behavioral studies (Kubus et al., 2015; Morford et al., 2014; Morford et al., 2011; Villameriel et al., 2016) and suggest that the behavioral interference effect is indeed a reflection of longer lasting effects of implicit language co-activation when the two languages differ in modality, rather than an artifact of overt translation.

Although both this behavioral interference and the effect within the N400 window index implicit co-activation of ASL, they do so at different stages of processing. Targets in pairs with phonologically related translations elicited smaller amplitude N400s, suggesting facilitated processing, but these same pairs had slower RTs. This pattern of results is analogous to that found by studies of neighborhood density in which words with only a few neighbors elicit smaller amplitude N400s, but slower RTs, than words with many neighbors (e.g., Holcomb, Grainger, & O’Rourke, 2002). The interpretation from those studies is that the N400 indexes bottom-up lexicosemantic co-activation, whereas the behavioral effects reflect specific task demands (see also Grainger & Jacobs, 1996). Applying such a framework here, the facilitation within the N400 window would reflect pre-activation of the target ASL translation, and the behavioral interference would reflect conflict at the response decision level when there is something related about the trial (i.e., the phonology of the implicitly co-activated signs), but a ‘no’ response is required. The ability to track the earlier of these two effects is another reason why online measures are important for answering questions about language interactivity in bilinguals.

Given that the differences between unimodal and bimodal bilinguals arise between the N400 window and the behavioral response, we suggest that less robust suppression of the non-target language is what leads to the behavioral effect. A number of production studies have shown that hearing bimodal bilinguals are less likely than unimodal bilinguals to strongly inhibit a non-target language, most likely because the two languages use different articulators (see, e.g., Emmorey, Giezen, et al., 2016, for a review). If activation of ASL is less strongly suppressed for a similar reason during comprehension, the residual activation could give rise to the behavioral phonological interference effect observed here. Alternatively, it is worth noting that studies with bimodal bilinguals (including the present study) have consistently used a 1000 ms SOA, whereas studies with unimodal bilinguals have used variable SOAs ranging from 1000 to 1200 ms. The fact that our SOA was fixed and, on average, shorter could have led to stronger behavioral effects; how exactly the SOA influences the nature of both the behavioral and ERP implicit priming effects is an important question for future research.

If the modality explanation turns out to be correct, then the age at which the deaf bimodal bilinguals in these studies learned their L2, and the way in which they learned it, may also contribute to the reduced L1 suppression that we observed behaviorally. The bimodal bilinguals in the current study all had early exposure to both languages, whereas the Chinese-English unimodal bilinguals in the studies by Thierry and Wu (2007; Wu & Thierry, 2010) were first formally exposed to their L2 at the age of 12 (cf. Villameriel et al., 2016, for a discussion of age of acquisition effects in an implicit priming paradigm with hearing bimodal bilinguals). Simultaneous acquisition of the two languages may lead to greater sustained interactivity between them. Moreover, in the bilingual approach to deaf education, it is common to associate written English words with lexical signs, emphasizing the bidirectional relationship between the two languages (e.g., Mounty, Pucci, & Harmon, 2014). This “chaining” methodology may strengthen the lexicosemantic connections between translation equivalents in a way that is unique to deaf bimodal bilinguals. The relationships among these factors, the degree of suppression of the co-activated non-target language, and the resulting behavioral effects in this implicit paradigm warrant further attention. In particular, an implicit phonological priming study with unimodal bilinguals who have early L2 exposure would be useful in dissociating effects of modality from those of age of acquisition.

One factor that does appear to modulate the behavioral consequences of a co-activated non-target L1 sign language is L2 reading ability. The RT interference effect was stronger for deaf bimodal bilinguals with weaker English comprehension skills, replicating a similar finding based on a different measure of English proficiency (Morford et al., 2014). This correlation suggests that bilinguals who were weaker in their L2 English were more likely to co-activate the L1 ASL translations, or did so to a greater degree. As Morford and colleagues have discussed, this pattern is consistent with the predictions of the Revised Hierarchical Model (Kroll & Stewart, 1994). This model predicts that less proficient bilinguals rely more on direct lexical links to the L1 translation equivalents when processing words in their L2 (which should yield greater effects of ASL phonological relatedness), whereas more proficient bilinguals process their L2 via direct links to semantics (in which case, the form of the sign in ASL should have little to no effect on English processing). Interestingly, however, this correlation held only for the behavioral effect and not for the N400-like effect, supporting Morford et al.’s hypothesis that the correlation stems from individual differences in sensitivity to conflict at the decision level (p. 266). Given this increased reliance on ASL for weaker readers, one might predict that participants with lower levels of English proficiency would be more likely to explicitly notice the hidden phonological relationship. This was not borne out in the data, however, as mean reading levels were similar between the explicit and implicit subgroups. Thus, although the decision processes of less proficient English readers were more affected by the phonological relationship in ASL, this did not increase their chances of explicitly noticing the phonological relationship between the translation pairs.

4.3. A Possible Late Translation Effect in the Explicit Subgroup

The weaker language suppression and educational biases linking signs to words (“chaining”) might also help explain the fact that many of our participants (42%) reported noticing the phonological manipulation in ASL at debrief, whereas none of the unimodal bilinguals reported noticing the phonological manipulation in the studies presented by Thierry and Wu (2007; Wu & Thierry, 2010). This result reinforces the importance of a debrief questionnaire in studies of implicit phonological priming, particularly with bimodal bilinguals, who appear more likely than unimodal bilinguals to explicitly co-activate the non-target language and notice the manipulation. Contrasting the effect of ASL phonology between subgroups of bimodal bilinguals also allowed us to explore the signatures of explicit translation in this paradigm for the first time (to our knowledge).

The behavioral and N400 effects that we observed in the implicit subgroup were not significant in the explicit subgroup, but they did go in the same direction. There were fewer participants in the explicit subgroup, and the lack of significance may have been due to a lack of power. Another factor that could have led to a non-significant behavioral effect is the large variability within the explicit subgroup (as reflected in the error bars in Figure 2A), which may have been due to the use of individual strategies once participants became aware of the manipulation. This variability was also reflected in the introspective responses on the debrief questionnaire, with some participants reporting that they felt the ASL relationship interfered with their ability to make semantic relatedness judgments and others reporting that they felt it had no effect. A future study in which participants are informed beforehand about the phonological relatedness of the ASL translations, and are therefore explicitly aware of the manipulation, is needed to determine whether these effects emerge under explicit awareness. Indeed, we would not be surprised if, under these conditions, the behavioral and N400 effects turned out to be significant, as they presumably reflect processes that happen automatically in bilinguals during word recognition.

What is clear from these data is that a late, widespread effect of ASL phonology from 700 to 900 ms was present for the explicit subgroup. Reminiscent of the increased positivity associated with resolving conflict in the form-related condition of translation recognition paradigms (e.g., Guo et al., 2012), this late effect could be related either to the process of explicit translation or to the ensuing evaluation of the phonological relationship between ASL translations. In contrast, for the implicit subgroup these late processes did not reach significance and had a distinct, more lateralized distribution. Thus, in this first attempt to characterize differences in processing as a function of explicit awareness in this priming paradigm, there is some evidence of a dissociation between early automatic processing within the N400 window and later, more strategic translation processes. How explicit awareness of the implicit phonological manipulation modulates the earlier N400-like response awaits further research, however.

4.4. Conclusions

In an investigation of the time course of cross-language co-activation in deaf bimodal bilinguals, we found evidence of automatic co-activation of a non-target sign language via lexicosemantic spreading of activation within the N400 window, including when participants reported being unaware of the hidden manipulation. The unique right anterior distribution of the N400-like effect of phonological relatedness of implicitly co-activated signs suggests the involvement of distinct neural generators for processing phonological form in a visual-manual language. This result provides further evidence that the neurobiology of language processing is impacted by the distinct linguistic articulators employed by signed versus spoken languages (see Corina et al., 2013, for review). Moreover, once activated, the non-target ASL translation equivalents were not robustly suppressed, as reflected in behavioral interference effects that have not been reported in previous studies with unimodal bilinguals. In contrast, in the subgroup of participants who reported being aware of the phonological manipulation in ASL, neural processing was characterized by a late ERP effect, which we suggest reflects explicit translation processes and/or overt consideration of sign form overlap. Overall, then, these results emphasize that the implicit phonological priming paradigm is sensitive to cross-language co-activation in bimodal bilinguals as in unimodal bilinguals, but that the consequences of that co-activation may differ depending on language modality and the task-specific strategies that result from being explicitly aware of the hidden phonological manipulation.

Supplementary Material

Highlights.

- Deaf bilinguals read word pairs, some had ASL translations with form overlap

- N400-like (anterior) and RT effects in the group unaware of form overlap at debrief

- Lexicosemantic interactivity underlies implicit co-activation of sign language

- Weaker suppression of non-target language from another modality leads to RT effect

- Late ERP effect in group aware of form manipulation related to explicit translation

Acknowledgments

This research was funded by NIH grant DC014246 and NSF grant BCS 1439257. These sponsors had no role in the study design; collection, analysis, and interpretation of the data; writing of the report; or the decision to submit for publication. The authors wish to thank Lucinda O’Grady Farnady, Marcel Giezen, and the other members of the NeuroCognition Laboratory and the Laboratory for Language and Cognitive Neuroscience for their invaluable support in designing and conducting this project.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

The one exception was the target sign for the English word try, which was produced by only 11 of the 16 translation participants, or 68.8%. The remaining 5 participants used an initialized handshape, which is similar to, but does not completely overlap with, the handshape of the ASL translation of the corresponding prime, drive.

The lateralization of this effect cannot be attributed to the use of a left mastoid reference. In analyses with an average mastoid reference, the effect was almost identical (Phonology × Hemisphere × Anterior/Posterior interaction, F(1,23) = 5.84, p = .024, ηp² = .20).
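As a consistency check on the statistics in the footnote above, partial eta squared can be recovered from an F ratio and its degrees of freedom with the standard identity ηp² = (F × df_effect) / (F × df_effect + df_error). The short Python sketch below applies that identity to the reported F(1,23) = 5.84. The function name is illustrative rather than part of the authors' analysis pipeline, and the sketch assumes the effect size was computed from the same repeated-measures ANOVA term as the F value.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta squared from an F ratio and its degrees of freedom.

    Because F = (SS_effect / df_effect) / (SS_error / df_error), the ratio
    SS_effect / (SS_effect + SS_error) simplifies to
    (F * df_effect) / (F * df_effect + df_error).
    """
    scaled_f = f_value * df_effect
    return scaled_f / (scaled_f + df_error)


# Phonology x Hemisphere x Anterior/Posterior, average mastoid reference: F(1,23) = 5.84
eta_p2 = partial_eta_squared(5.84, df_effect=1, df_error=23)
print(f"{eta_p2:.2f}")  # prints 0.20
```

The unrounded value is about .202, which agrees with the reported ηp² = .20.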

References

- Balota DA, Yap MJ, Cortese MJ, Hutchinson KA, Kessler B, Loftis B, Treiman R. The English Lexicon Project. Behavior Research Methods. 2007;39(3):445–459. doi: 10.3758/bf03193014.

- Baus C, Gutiérrez E, Carreiras M. The role of syllables in sign production. Frontiers in Psychology. 2014;5(1254):1–7. doi: 10.3389/fpsyg.2014.01254.

- Baus C, Gutiérrez-Sigut E, Quer J, Carreiras M. Lexical access in Catalan Signed Language (LSC) production. Cognition. 2008;108:856–865. doi: 10.1016/j.cognition.2008.05.012.

- Bijeljac-Babic R, Biardeau A, Grainger J. Masked orthographic priming in bilingual word recognition. Memory & Cognition. 1997;25(4):447–457. doi: 10.3758/bf03201121.

- Bornkessel-Schlesewsky I, Schlesewsky M. The importance of linguistic typology for the neurobiology of language. Linguistic Typology. 2016;20(3):615–621.

- Corina DP, Emmorey K. Lexical priming in American Sign Language; Paper presented at the 34th Annual Meeting of the Psychonomic Society; Washington, DC. 1993.

- Corina DP, Lawyer LA, Cates D. Cross-linguistic differences in the representation of human language: Evidence from users of sign languages. Frontiers in Psychology. 2013;3(587). doi: 10.3389/fpsyg.2012.00587.

- Desroches AS, Newman RL, Joanisse MF. Investigating the time course of spoken word recognition: Electrophysiological evidence for the influences of phonological similarity. Journal of Cognitive Neuroscience. 2008;21(10):1893–1906. doi: 10.1162/jocn.2008.21142.

- Dye MWG, Shih S-I. Phonological priming in British Sign Language. In: Goldstein LM, Whalen DH, Best CT, editors. Papers in laboratory phonology. Berlin: Mouton de Gruyter; 2006. pp. 241–263.

- Emmorey K, Giezen MR, Gollan TH. Psycholinguistic, cognitive, and neural implications of bimodal bilingualism. Bilingualism: Language and Cognition. 2016;19(2):223–242. doi: 10.1017/S1366728915000085.

- Emmorey K, Sevcikova Sehyr Z, Midgley KJ, Holcomb PJ. Neurophysiological correlates of frequency, concreteness, and iconicity in American Sign Language; Paper presented at the 23rd Annual Meeting of the Cognitive Neuroscience Society; New York, NY. 2016.

- Ferré P, Sánchez-Casas R, Guasch M. Can a horse be a donkey? Semantic and form interference effects in translation recognition in early and late proficient and nonproficient Spanish-Catalan bilinguals. Language Learning. 2006;56(4):571–608.

- Ganis G, Kutas M, Sereno MI. The search for “common sense”: An electrophysiological study of the comprehension of words and pictures in reading. Journal of Cognitive Neuroscience. 1996;8(2):89–106. doi: 10.1162/jocn.1996.8.2.89.

- Giezen MR, Blumenfeld HK, Shook A, Marian V, Emmorey K. Parallel language activation and inhibitory control in bimodal bilinguals. Cognition. 2015;141:9–25. doi: 10.1016/j.cognition.2015.04.009.

- Grainger J, Jacobs AM. Orthographic processing in visual word recognition: A multiple read-out model. Psychological Review. 1996;103(3):518–565. doi: 10.1037/0033-295x.103.3.518.

- Green DW. Mental control of the bilingual lexico-semantic system. Bilingualism: Language and Cognition. 1998;1(2):67–81.

- Grossi G, Savill N, Thomas EM, Thierry G. Electrophysiological cross-language neighborhood density effects in late and early English-Welsh bilinguals. Frontiers in Psychology. 2012;3(408):1–11. doi: 10.3389/fpsyg.2012.00408.

- Guasch M, Sánchez-Casas R, Ferré P, García-Albea JE. Translation performance of beginning, intermediate and proficient Spanish-Catalan bilinguals: Effects of form and semantic relations. Mental Lexicon. 2008;3:289–308.

- Guo T, Misra M, Tam JW, Kroll JF. On the time course of accessing meaning in a second language: An electrophysiological and behavioral investigation of translation recognition. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2012;38(5):1165–1186. doi: 10.1037/a0028076.

- Gutiérrez E, Müller O, Baus C, Carreiras M. Electrophysiological evidence for phonological priming in Spanish Sign Language lexical access. Neuropsychologia. 2012;50:1335–1346. doi: 10.1016/j.neuropsychologia.2012.02.018.

- Han J, Cao B, Cao Y, Gao H, Li F. The role of right frontal brain regions in integration of spatial relation. Neuropsychologia. 2016;86:29–37. doi: 10.1016/j.neuropsychologia.2016.04.008.

- Hildebrandt U, Corina D. Phonological similarity in American Sign Language. Language and Cognitive Processes. 2002;17(6):593–612.

- Holcomb PJ, Grainger J, O’Rourke T. An electrophysiological study of the effects of orthographic neighborhood size on printed word perception. Journal of Cognitive Neuroscience. 2002;14(6):938–950. doi: 10.1162/089892902760191153.

- Hosemann J. The processing of German Sign Language sentences: Three event-related potential studies on phonological, morpho-syntactic, and semantic aspects. Unpublished doctoral dissertation. Georg-August-University Göttingen, Göttingen, Germany; 2015.

- Kroll JF, Stewart E. Category interference in translation and picture naming: Evidence for asymmetric connection between bilingual memory representations. Journal of Memory and Language. 1994;33(2):149–174.

- Kubus O, Villwock A, Morford JP, Rathman C. Word recognition in deaf readers: Cross-language activation of German Sign Language and German. Applied Psycholinguistics. 2015;36:831–854.

- Kutas M, Federmeier KD. Thirty years and counting: Finding meaning in the N400 component of the event-related brain potential (ERP). Annual Review of Psychology. 2011;62:621–647. doi: 10.1146/annurev.psych.093008.131123.

- Kutas M, Hillyard SA. An electrophysiological probe of incidental semantic association. Journal of Cognitive Neuroscience. 1989;1(1):38–49. doi: 10.1162/jocn.1989.1.1.38.

- Markwardt FC. Peabody Individual Achievement Test-Revised Manual. Circle Pines, MN: American Guidance Service; 1989.

- McLaughlin J, Osterhout L, Kim A. Neural correlates of second-language word learning: Minimal instruction produces rapid change. Nature Neuroscience. 2004;7(7):703–704. doi: 10.1038/nn1264.

- Medler DA, Binder JR. MCWord: An on-line orthographic database of the English language. 2005. Retrieved from http://www.neuro.mcw.edu/mcword.

- Midgley KJ, Holcomb PJ, Van Heuven WJB, Grainger J. An electrophysiological investigation of cross-language effects of orthographic neighborhood. Brain Research. 2008;1246:132–135. doi: 10.1016/j.brainres.2008.09.078.

- Morford JP, Kroll JF, Piñar P, Wilkinson E. Bilingual word recognition in deaf and hearing signers: Effects of proficiency and language dominance on cross-language activation. Second Language Research. 2014;30(2):251–271. doi: 10.1177/0267658313503467.

- Morford JP, Wilkinson E, Villwock A, Piñar P, Kroll JF. When deaf signers read English: Do written words activate their sign translations? Cognition. 2011;118(2):286–292. doi: 10.1016/j.cognition.2010.11.006.

- Mounty JL, Pucci CT, Harmon KC. How deaf American Sign Language/English bilingual children become proficient readers: An emic perspective. Journal of Deaf Studies and Deaf Education. 2014;19(3):333–346. doi: 10.1093/deafed/ent050.

- Ormel E, Giezen M. Bimodal bilingual cross-language interaction: Pieces of the puzzle. In: Marschark M, Tang G, Knoors H, editors. Bilingualism and bilingual deaf education. Oxford University Press; 2014. pp. 74–101.

- Ormel E, Hermans D, Knoors H, Verhoeven L. Cross-language effects in written word recognition: The case of bilingual deaf children. Bilingualism: Language and Cognition. 2012;15(2):288–303.

- Phillips NA, Segalowitz NS, O’Brien I, Yamasaki N. Semantic priming in a first and second language: Evidence from reaction time variability and event-related brain potentials. Journal of Neurolinguistics. 2004;17(2–3):237–262.

- Shook A, Marian V. Bimodal bilinguals co-activate both languages during spoken comprehension. Cognition. 2012;124(3):314–324. doi: 10.1016/j.cognition.2012.05.014.

- Spivey MJ, Marian V. Cross talk between native and second languages: Partial activation of an irrelevant lexicon. Psychological Science. 1999;10(3):281–284.

- Talamas A, Kroll JF, Dufour R. From form to meaning: Stages in the acquisition of second-language vocabulary. Bilingualism: Language and Cognition. 1999;2(1):45–58.

- Thierry G, Wu YJ. Brain potentials reveal unconscious translation during foreign-language comprehension. Proceedings of the National Academy of Sciences. 2007;104(30):12530–12535. doi: 10.1073/pnas.0609927104.

- Van Heuven WJB, Dijkstra T, Grainger J. Orthographic neighborhood effects in bilingual word recognition. Journal of Memory and Language. 1998;39(3):458–483.

- Villameriel S, Dias P, Costello B, Carreiras M. Cross-language and cross-modal activation in hearing bimodal bilinguals. Journal of Memory and Language. 2016;87:59–70.

- Wu YJ, Thierry G. Chinese-English bilinguals reading English hear Chinese. The Journal of Neuroscience. 2010;30(22):7646–7651. doi: 10.1523/JNEUROSCI.1602-10.2010.

- Wu YJ, Thierry G. Unconscious translation during incidental foreign language processing. NeuroImage. 2012;59(4):3468–3473. doi: 10.1016/j.neuroimage.2011.11.049.
