Keywords: fMRI, MVPA, cognitive control, somatosensory perception

Abstract

At any given moment, our brains receive input from multiple senses. Successful behavior depends on our ability to prioritize the most important information and ignore the rest. A multiple-demand (MD) network of frontal and parietal regions is thought to support this process by adjusting to code information that is currently relevant (Duncan 2010). Accordingly, the network is proposed to encode a range of different types of information, including perceptual stimuli, task rules, and responses, as needed for the current cognitive operation. However, most MD research has used visual tasks, leaving limited information about whether these regions encode other sensory domains. We used multivoxel pattern analysis (MVPA) of functional magnetic resonance imaging (fMRI) data to test whether the MD regions code the details of somatosensory stimuli, in addition to tactile-motor response transformation rules and button-press responses. Participants performed a stimulus-response task in which they discriminated between two possible vibrotactile frequencies and applied a stimulus-response transformation rule to generate a button-press response. For MD regions, we found significant coding of tactile stimulus, rule, and response. Primary and secondary somatosensory regions encoded the tactile stimuli and the button-press responses but did not represent task rules. Our findings provide evidence that MD regions can code nonvisual somatosensory task information, commensurate with a domain-general role in cognitive control.

NEW & NOTEWORTHY How does the brain encode the breadth of information from our senses and use this to produce goal-directed behavior? A network of frontoparietal multiple-demand (MD) regions is implicated but has been studied almost exclusively in the context of visual tasks. We used multivariate pattern analysis of fMRI data to show that these regions encode tactile stimulus information, rules, and responses. This provides evidence for a domain-general role of the MD network in cognitive control.

Successful behavior requires selection of, and attention to, task-relevant information from multiple senses. A well-specified network of frontal and parietal “multiple demand” (MD) regions has been implicated in controlling this process (Duncan 2010, 2013). It has been shown that neurons in these regions adjust their responses to selectively represent the information that is needed for behavior at any given moment (Duncan 2001, 2010, 2013). In doing so they are thought to drive adaptive focus, flexibly emphasizing information that is currently relevant for goal-directed behavior (Duncan 2001) and providing a source of bias to influence processing in more specialized brain systems (e.g., Dehaene et al. 1998; Desimone and Duncan 1995; Miller and Cohen 2001; Norman and Shallice 1980).

The MD network incorporates regions in the inferior frontal sulcus (IFS), anterior insula/frontal operculum (AI/FO), inferior frontal junction (IFJ), premotor cortex (PM), anterior cingulate/pre-supplementary motor area (ACC/pre-SMA), and intraparietal sulcus (IPS). Human neuroimaging data show activation of this network in response to a diverse range of cognitive demands including response conflict, task switching, perceptual difficulty, and different aspects of memory (e.g., Dosenbach et al. 2006; Duncan and Owen 2000; Nyberg et al. 2003; Stiers et al. 2010; Yeo et al. 2015), as well as in standard tests of general intelligence (e.g., Duncan et al. 2000; Woolgar et al. 2013). Comparing activation within subjects, Fedorenko et al. (2013) demonstrated strikingly similar activation patterns for seven tasks including manipulations of spatial and verbal working memory, the inhibition of distracting information, and arithmetic. Given this broad response, the MD network has elsewhere been referred to as a “task positive network” (Fox et al. 2005), “task activation ensemble” (Seeley et al. 2007), or “cognitive control network” (Cole and Schneider 2007).

In addition to activation in response to a wide range of tasks, the MD regions are thought to represent or “code” different types of information as needed in different tasks. For example, in single-unit recordings from nonhuman primates, the firing rates of single frontal and parietal neurons have been found to distinguish a range of task features including stimuli, rules and responses (e.g., Andersen et al. 1985; Asaad et al. 1998; Hoshi et al. 1998; Niki and Watanabe 1976; Snyder et al. 1997; Stoet and Snyder 2004). In human functional imaging, evidence for information coding comes from multivoxel pattern analysis (MVPA), which examines statistical regularities in fine-grained patterns of activation. Where patterns reliably distinguish between two conditions (e.g., red and green), the distinction between the conditions (e.g., color) is said to be represented or coded in that region. With this method, regions that are known to be active when participants perform a task can also be interrogated for their representational content.

In line with the proposal that the MD regions adapt their function to task context, MVPA has been used to demonstrate coding of a range of task features including aspects of visual stimuli, task rules, and responses in these regions (e.g., Bode and Haynes 2009; Li et al. 2007; Woolgar et al. 2011b, 2016). Moreover, multivoxel codes in these regions are responsive to changes in task demand. For example, they show stronger discrimination of visual stimuli under conditions of high perceptual difficulty, when stimuli are degraded or difficult to distinguish, compared with when input is strong (Woolgar et al. 2011a, 2015b), and stronger separation of highly confusable complex rules relative to simple ones (Woolgar et al. 2015a). MD coding is also stronger for visual stimuli at the focus of attention compared with those that are ignored (Woolgar et al. 2015b), and a single visual stimulus may be represented differently in different task contexts (Erez and Duncan 2015; Harel et al. 2014; Jackson et al. 2017). However, because this research used visual task presentation, we have limited information about the breadth of information that these regions can encode. In particular, we do not know whether these regions are capable of encoding information from other sensory modalities, as would be required of a domain-general cognitive control system.

In parallel to the detailed study of MD responses in visual tasks, a separate field of research has examined human brain responses to tactile stimuli. In addition to primary somatosensory regions, prefrontal and parietal brain regions are commonly active in tactile studies involving vibrotactile stimuli or haptic shape exploration (Kaas et al. 2007; Li Hegner et al. 2007; McGlone et al. 2002; Miquée et al. 2008; Numminen et al. 2004; Preuschhof et al. 2006; Sörös et al. 2007; Staines et al. 2002; Stoeckel et al. 2003). This research is complemented by neurophysiology studies demonstrating that firing rates of prefrontal and premotor neurons discriminate vibrotactile frequency (see Romo and Salinas 2003 for a review). These tactile-responsive frontoparietal regions are similar to those identified as the MD regions in visual studies. This raises the possibility that the MD regions represent aspects of tactile tasks in much the same way as they do for visual tasks. In the present study, we used MVPA to test whether tactile information is encoded in MD activations.

Using an fMRI-compatible stimulator, we presented vibrotactile stimuli of different frequencies to participants lying in the scanner. Participants performed a stimulus-response task in which they were required to discriminate between two possible vibrotactile frequencies and apply a stimulus-response transformation rule to generate a button-press response. This design allowed us to separate blood oxygen level-dependent (BOLD) responses associated with two vibrotactile frequencies, two stimulus-response transformation rules, and two button-press responses and to examine coding of each of these task features separately. In line with a domain-general role for the MD regions, patterns of activation indeed discriminated between the two frequencies of vibrotactile stimulation, as well as representing the current task rule and button-press response. We also examined information coding in a region of occipitotemporal (OT) cortex that is commonly coactivated with the MD pattern (Fedorenko et al. 2013). Although activation in this region is generally assumed to reflect visual processing, it is also seen in tactile studies that do not have overt visual processing demands (e.g., Amedi et al. 2001, 2002; Beauchamp et al. 2007; Pietrini et al. 2004; Sathian et al. 1997). In our data, the OT showed a similar profile to the MD regions, representing all three task features. Patterns of activation in primary and secondary somatosensory regions discriminated between the tactile stimuli and the button-press response, but did not represent task rules. Consistent with the proposal that the MD system is domain general, our data show that these regions code the details of vibrotactile stimuli as well as tactile-motor response transformation rules and button-press responses.

METHODS

Participants

We tested 21 participants and excluded 3 data sets due to technical issues (imperfect scanner trigger or scanning parameter settings). The 18 participants included in this study (7 female, 11 male; mean age 22 yr, SD 4.08 yr) all self-reported as right-handed and had normal or corrected-to-normal vision. We had approval for this study from the Macquarie University Human Research Ethics Committee and obtained written informed consent from all participants. Participants received $20 per hour for their participation.

Stimuli and Task Design

We used an event-related fMRI design, and participants were scanned while performing a tactile stimulus-response task. The task design was adapted from our previous visual studies to separate coding of stimulus features, task rules, and button-press responses (Woolgar et al. 2011a, 2011b, 2015a).

Vibrotactile stimuli were delivered to the right thumb using an MRI-compatible piezo tactile stimulator (PTS-C2; Dancer Design, St. Helens, UK; dancerdesign.co.uk). The stimulating surface consisted of an 8-mm round probe affixed in a small box. Stimulus presentation was controlled using MATLAB (The MathWorks, Natick, MA) and Psychtoolbox 3 (Brainard 1997).

On each trial, the tactile stimulus was either an 80-Hz (“slow”; except for 2 participants for whom it was 100 Hz) or a 200-Hz (“fast”) vibration. The task was to discriminate the two different frequencies (slow vs. fast) and respond by pressing one of two response buttons. Participants learnt two different stimulus-response mappings that determined the correct response button for each vibration frequency (Fig. 1A). On each trial, the current rule to use was specified by the color of a central fixation cross (red for rule 1 and blue for rule 2) on an otherwise blank screen. To manipulate perceptual difficulty, we varied the vibration amplitude (amplitude 1, at 1.75% of maximum system amplitude, was approximately 7 times lower than amplitude 2, at 12.5% of maximum system amplitude), producing relatively weak (“difficult”) and strong (“easy”) vibrations in separate blocks.

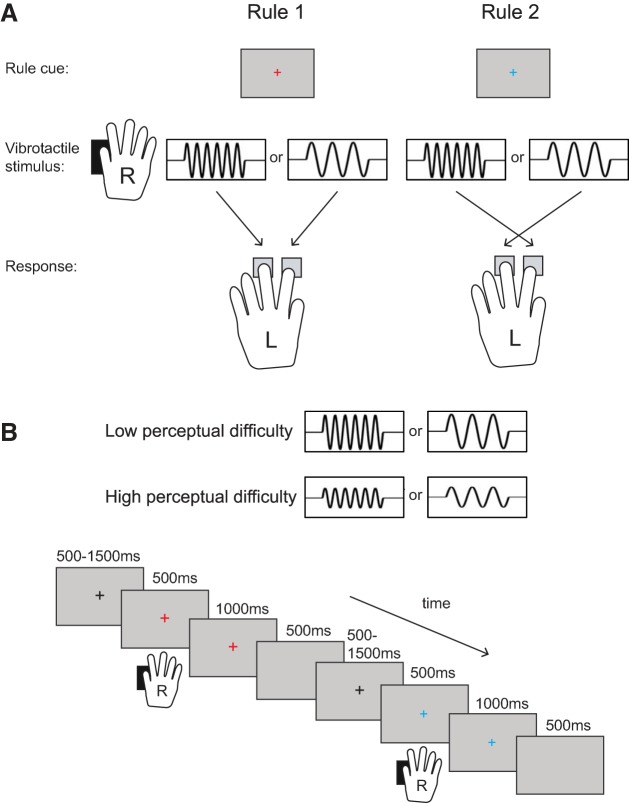

Fig. 1.

A: participants were presented with a colored fixation cross on a screen, and a vibrotactile stimulus was delivered to the thumb of their right hand. The color of the fixation cross indicated the stimulus-response mapping rule to be used on the current trial. For rule 1, participants had to press the left button (middle finger) for a “fast” vibration and the right button (index finger) for a “slow” vibration. For rule 2, the stimulus-response associations were reversed. We manipulated perceptual difficulty by varying the vibration amplitude in separate blocks. B: each trial began with the presentation of a black fixation cross (500-1,500 ms in duration, varied randomly over trials). Next, a vibration was delivered to the right thumb (500 ms). At the onset of the vibration the fixation cross changed color to indicate the rule for this trial. The colored fixation cross was visible for 1,500 ms, after which a blank screen was shown for 500 ms before the next trial began.

Each trial started with the presentation of a black fixation cross (500-1,500 ms in duration, varied randomly over trials; see Fig. 1B). Next, the vibration started simultaneously with a change in the fixation cross color. The vibration lasted 500 ms, and the fixation cross was visible for 1,500 ms. The color of the fixation cross (red or blue) indicated the rule for this trial. Finally, a blank screen was shown for 500 ms. Participants could respond at any time before the end of the trial (up to 2 s after stimulus onset).

Before scanning, participants learnt the task outside the scanner and practiced for ~20 min. They were familiarized with the different tactile stimuli and then learned each rule separately (half the participants started with rule 1 and half with rule 2). They then completed training blocks that included both rules. Training blocks alternated between high and low perceptual difficulty. During training, participants received feedback (“correct” or “incorrect”) after each trial as well as a summary of accuracy in percent correct at the end of each block. In the main task in the scanner, the summary was shown after each block, but no feedback was given on individual trials. Participants were instructed to respond as quickly and accurately as possible.

Acquisition

MRI data were acquired using a Siemens Verio 3T scanner (Erlangen, Germany) with a 32-channel head coil at Macquarie Medical Imaging, Macquarie University Hospital (Sydney, Australia). For functional data we used a gradient echo T2*-weighted sequence obtained every 2 s (repetition time, TR), consisting of 34 axial slices [echo time (TE) = 30 ms, flip angle = 78°, field of view (FOV) = 210 × 210 mm, in-plane resolution = 3 × 3 mm, slice thickness = 3 mm, and interslice gap = 0.7 mm]. We also obtained high-resolution structural images [3-dimensional magnetization-prepared rapid gradient-echo (MPRAGE) sequence, voxel size = 0.98 × 0.98 × 1 mm, FOV = 240 × 240 mm, 208 slices, TR = 2,000 ms, TE = 3.92 ms, flip angle = 9°].

Acquisition started with two runs of the experimental task, followed by two functional localizer runs for somatosensory and MD regions (see below), and ended with the structural scan.

Main experimental task.

Participants held the small tactile stimulator box in their right hand and centered their thumb pad on the probe without pressing down. Participants used the index and middle fingers of the left hand to press one of two buttons on a response box.

Participants performed alternating blocks of high and low perceptual difficulty with the block order counterbalanced across participants. There were two runs of 8 blocks, giving a total of 16 blocks (8 low and 8 high perceptual difficulty) with a 30-s break after each block. Each block contained 40 trials with equal numbers of slow and fast vibrations in the context of each of the two rules. Trial order was randomized within each block. The first four volumes of each run before the task started were discarded. Each run consisted of 610 echo-planar imaging (EPI) acquisitions and lasted 20.33 min.
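The block composition described above can be sketched in a few lines of Python. This is only an illustrative reconstruction, not the authors' MATLAB/Psychtoolbox code: the names `RULES` and `make_block` are hypothetical, the finger assignments follow the Fig. 1A caption, and the 10 trials per cell follow from 40 trials split equally over 2 rules × 2 frequencies.

```python
import random

# Stimulus-response mappings as described in the Fig. 1A caption:
# rule 1 maps fast -> middle finger and slow -> index finger; rule 2 reverses this.
RULES = {
    "rule1": {"fast": "middle", "slow": "index"},
    "rule2": {"fast": "index", "slow": "middle"},
}


def make_block(n_trials=40, seed=None):
    """Return a shuffled list of (rule, frequency, correct_finger) trials."""
    cells = [(rule, freq) for rule in RULES for freq in ("slow", "fast")]
    per_cell, remainder = divmod(n_trials, len(cells))
    if remainder:
        raise ValueError("n_trials must be divisible by the number of cells")
    trials = [
        (rule, freq, RULES[rule][freq])
        for rule, freq in cells
        for _ in range(per_cell)
    ]
    # Randomize trial order within the block.
    random.Random(seed).shuffle(trials)
    return trials


block = make_block(seed=0)
```

Because the two rules reverse the mapping, each finger is the correct response on half of the trials for either frequency and for either rule, which is what allows stimulus, rule, and response to be analyzed as separate features.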

Somatosensory localizer.

In addition to the main experiment, we ran an independent functional localizer to identify the primary (SI) and secondary (SII) somatosensory cortex. During 16-s blocks, participants received tactile vibration stimuli on the right thumb (as in the main experiment) with fixation-only baseline blocks in between. The run started with a baseline block followed by a tactile stimulation block with one of the frequencies (80 or 200 Hz, order counterbalanced across participants), followed by a block with the other frequency. Blocks of high and low frequency were repeated eight times, after which there was an additional baseline block at the end of the run. Tactile stimuli consisted of 500-ms vibrations followed by 500 ms without stimulation. A number of these vibrations had a short (100 ms) gap in the middle, making them a “target.” Targets were randomly presented eight times in each run (not more than 1 target per block, 8 targets in total). The task was to count the number of targets. Three participants misunderstood the task and counted the total number of stimulations per block. For all other participants, the mean reported number of targets was 9.2 (SD 1.5). Behavioral performance was not critical; the task was only included to encourage participants to pay attention to the stimuli. The tactile localizer run consisted of 210 EPI acquisitions and lasted ~7 min.

MD localizer.

Participants performed a spatial working memory task, which we used to identify voxels within the MD regions that were maximally responsive in a cognitively demanding nontactile task. We used these data for a secondary analysis of the MD regions (see Regions of Interest). We used the spatial working memory (WM) task described in Fedorenko et al. (2013). On each trial, participants were presented with a fixation cross (500 ms), followed by a series of four 3 × 4 grids (1,000 ms) in which either one (low WM) or two (high WM) squares were blue. Participants were required to remember the spatial locations of all the blue squares in the grid. Finally, participants were presented with a choice screen depicting two grids (maximum of 3,750 ms). One of these grids depicted the correct layout of the blue squares in the preceding grids (correct), and the other depicted the same grid with one incorrect square (incorrect). Participants indicated the correct grid by pressing the left or right button, at which point they were immediately shown feedback (a green tick for correct and a red cross for incorrect, 250 ms). If the participant responded in less than 3,750 ms, a fixation cross was shown until the next trial began (4,000 ms after choice screen). Participants performed six 32-s blocks each of low WM and high WM with the order counterbalanced across participants. Fixation blocks lasting 16 s were presented at the beginning of the run and after task blocks 4, 8, and 12. The run consisted of 225 EPI acquisitions and lasted ~7.5 min. As expected, participants were significantly more accurate on the low compared with high WM condition [93.6% in low, 77.8% in high, t(14) = 5.19, P < 0.001; behavioral data failed to record for 3 participants].

Analysis

Multivoxel pattern analysis (MVPA) was used to decode tactile stimulus frequency from fMRI activation patterns. The main analyses focused on MD regions. In addition, encoding of tactile stimulus frequency was also investigated for activation patterns in primary and secondary somatosensory areas and in OT.

Preprocessing

We employed SPM8 (Wellcome Trust Centre for Neuroimaging, University College London, London, UK) for fMRI data preprocessing. All volumes obtained per participant were slice-time corrected and spatially realigned to correct for head motion. To obtain spatial normalization parameters for each participant, the participant’s structural image was coregistered to the mean of the functional volumes. The structural image was then segmented and normalized to the standard MNI (Montreal Neurological Institute) T1 template included in SPM. For the tactile localizer only, the obtained transformation parameters were applied to normalize the coregistered functional volumes, which were additionally resampled to 2-mm isotropic voxels. This was done to facilitate group-level derivation of tactile-responsive regions of interest (ROIs; see below). Finally, spatial smoothing was performed for the native space EPI images to be used for decoding (4-mm FWHM isotropic Gaussian kernel) as well as the normalized functional images from the tactile localizer (6-mm FWHM isotropic Gaussian kernel).

Regions of Interest

MD and OT ROIs.

For our main analysis, we defined MD and OT ROIs based on group-level activation across seven different cognitive tasks in a prior imaging study (Fedorenko et al. 2013). We chose this approach because the MD regions are defined as regions that are activated by a wide range of cognitive demands (Duncan and Owen 2000) and for comparison with previous literature (Woolgar et al. 2016). We used the parcellated group-level activation image available at http://imaging.mrc-cbu.cam.ac.uk/imaging/MDsystem, which was created by reflecting group-level t-maps from the seven cognitive tasks across the midline, averaging the resulting maps, thresholding at t > 1.5, and dividing any clusters containing multiple peaks at t > 2.7 by assigning each voxel to the nearest subregion. To facilitate investigation of hemispheric effects, we further divided the bilateral ACC region at the midline to create symmetrical left and right ACC regions. For our analysis, we selected the 14 clusters in frontal and parietal cortex. These ROIs were centered around the left and right anterior IFS (aIFS; center of mass: ±35 47 19, volume in normal template: 5.0 cm³), left and right posterior IFS (pIFS; ±40 32 27, 5.7 cm³), left and right AI/FO (±34 19 2, 7.9 cm³), left and right IFJ (±43 4 32, 10.2 cm³), left and right PM (±28 2 56, 9.1 cm³), left and right ACC/pre-SMA (±7 15 46, 8.6 cm³), and left and right IPS (±30 −56 46, 34.1 cm³). We additionally included the clusters in left and right OT (±32 −71 −1, 23.2 cm³). These regions are shown in Fig. 2. All coordinates are given in MNI-152 space (McConnell Brain Imaging Centre, Montreal Neurological Institute, Montreal, QC, Canada).

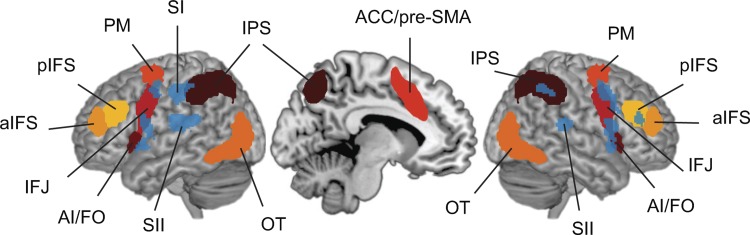

Fig. 2.

MD network regions and univariate tactile localizer activation. We depicted and labeled the MD network regions as well as OT that were associated with multiple demanding cognitive tasks in a prior imaging study (Fedorenko et al. 2013) (warm colors). On this MD network we superimposed the univariate tactile localizer activation from this study (blue). The tactile regions included SI and SII as well as additional regions that overlap with MD regions. aIFS, anterior inferior frontal sulcus; pIFS, posterior inferior frontal sulcus; AI/FO, anterior insula/frontal operculum; IFJ, inferior frontal junction; PM, premotor cortex; ACC/pre-SMA, anterior cingulate/pre-supplementary motor area; IPS, intraparietal sulcus; OT, occipitotemporal area; SI, somatosensory area I; SII, somatosensory area II.

To allow classification analysis to be carried out on native space data, ROIs were deformed to native space for each participant by applying the inverse of the normalization parameters. The mean number of voxels in native space for each of the MD and OT ROIs was as follows: left aIFS, 160 (SD 14); right aIFS, 167 (SD 11); left pIFS, 175 (SD 15); right pIFS, 187 (SD 15); left AI/FO, 249 (SD 16); right AI/FO, 251 (SD 15); left IFJ, 312 (SD 23); right IFJ, 320 (SD 25); left PM, 276 (SD 23); right PM, 281 (SD 23); left ACC/pre-SMA, 275 (SD 26); right ACC/pre-SMA, 275 (SD 26); left IPS, 1,011 (SD 76); right IPS, 1,014 (SD 70); left OT, 741 (SD 47); and right OT, 739 (SD 44).

Subject-specific MD and OT ROIs constrained by MD localizer data.

Given that regions responding to tactile stimulation have previously been found to be close to or overlap with our canonically defined MD and OT regions, it was possible that any coding of vibrotactile frequency in these regions in our main analysis could be driven by sub-ROI regions that are selective for “tactile” information. To examine this, we also carried out a more conservative analysis in which we restricted our ROIs, on an individual participant basis, to voxels that were activated by our MD localizer (following the logic of Fedorenko et al. 2010). Because the MD localizer involved spatial working memory in a visually presented task, and the same requirement for button-press responses between conditions, we reasoned that this was unlikely to activate regions specific to tactile information.

To define these restricted ROIs, we first carried out a standard general linear model (GLM) analysis of the MD localizer data for each participant in native space. Blocks of high and low WM were modeled using 32-s box car regressors, matching the 32-s task blocks. For each participant, we performed a whole brain mass-univariate analysis contrasting high minus low WM conditions. The resulting individual subject t-image was then thresholded at t = 2.34 (equivalent to P < 0.01, uncorrected). Finally, we took the intersection of the thresholded t-image and the 16 native space MD and OT regions for that participant. For each region, the result is a subject-specific ROI that indexes only the voxels activated by the localizer. For MVPA, we only used individual ROIs with at least 10 voxels.
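The restriction step amounts to intersecting a thresholded t-map with an ROI mask and discarding ROIs that end up too small. The following is a minimal sketch under simplifying assumptions: images are represented as flat Python lists rather than NIfTI volumes, the function name `restrict_roi` is hypothetical, and the toy values are invented purely for illustration. Only the threshold (t = 2.34) and the minimum size (10 voxels) come from the text.

```python
T_THRESHOLD = 2.34  # t threshold stated in the text (P < 0.01, uncorrected)
MIN_VOXELS = 10     # minimum ROI size stated in the text


def restrict_roi(t_map, roi_mask, t_threshold=T_THRESHOLD, min_voxels=MIN_VOXELS):
    """Return indices of ROI voxels whose t value exceeds the threshold.

    Returns None when fewer than `min_voxels` voxels survive, meaning the
    ROI would be skipped for this participant.
    """
    if len(t_map) != len(roi_mask):
        raise ValueError("t_map and roi_mask must have the same length")
    selected = [
        index
        for index, (t_value, in_roi) in enumerate(zip(t_map, roi_mask))
        if in_roi and t_value > t_threshold
    ]
    return selected if len(selected) >= min_voxels else None


# Toy example (invented values): 20 ROI voxels, 12 of which exceed threshold,
# plus 5 strongly activated voxels that lie outside the ROI.
toy_t_map = [3.0] * 12 + [1.0] * 8 + [4.0] * 5
toy_mask = [True] * 20 + [False] * 5
restricted = restrict_roi(toy_t_map, toy_mask)
```

In this toy case the 12 suprathreshold voxels inside the mask are kept, while activated voxels outside the mask never enter the ROI.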

At the chosen thresholds, not all participants showed localizer activation in every region, and one person did not show localizer activation in any MD region. The number of participants (N) who showed activation (≥10 voxels) and the mean number of ROI voxels (M; SD in parentheses) in each region were as follows: left aIFS: N = 11, M = 46 (SD 27); right aIFS: N = 13, M = 53 (SD 39); left pIFS: N = 11, M = 44 (SD 21); right pIFS: N = 13, M = 69 (SD 40); left AI/FO: N = 11, M = 46 (SD 24); right AI/FO: N = 12, M = 53 (SD 31); left IFJ: N = 13, M = 76 (SD 48); right IFJ: N = 14, M = 56 (SD 32); left PM: N = 12, M = 51 (SD 32); right PM: N = 13, M = 59 (SD 36); left ACC/pre-SMA: N = 14, M = 49 (SD 28); right ACC/pre-SMA: N = 13, M = 66 (SD 30); left IPS: N = 15, M = 248 (SD 174); right IPS: N = 16, M = 292 (SD 212); left OT: N = 17, M = 87 (SD 56); and right OT: N = 18, M = 85 (SD 60).

Somatosensory regions identified with tactile localizer.

With the independent tactile localizer data we identified primary (SI) and secondary (SII) somatosensory ROIs for each participant. First, we fitted a GLM to the tactile localizer data with one regressor for tactile stimulation periods (boxcar function convolved with the SPM canonical hemodynamic response function, high-pass filter cutoff 128 s). We ran a second-level group analysis for the contrast tactile stimulation > implicit baseline (P < 0.0001 uncorrected, cluster extent threshold of 50 voxels) and identified left SI (MNI coordinates −54, −20, 48; cluster size 279 voxels), left SII (−48, −38, 22; 775 voxels), and right SII (64, −32, 14; 250 voxels) (Fig. 2). These ROIs were deformed to native space for each participant by applying the inverse of the normalization parameters.

In the second-level group analysis, we identified several regions in addition to SI and SII: a left ventral premotor area (−54, 2, 14; 235 voxels), a right ventral premotor area (56, 14, 14; 297 voxels), left precentral area (−52, −4, 46; 106 voxels), right precentral area (52, 6, 44; 175 voxels), right inferior parietal area (52, −54, 50; 74 voxels), and a middle frontal area (40, 38, 22; 52 voxels). Consistent with previous work (e.g., Kaas et al. 2007; Li Hegner et al. 2007; McGlone et al. 2002), these regions overlap with MD regions (Fig. 2), so only SI and SII were included as sensory regions in our analysis.

Multivoxel Pattern Analysis

The two authors analyzed the data in parallel using different toolboxes: Pattern Recognition for Neuroimaging Toolbox (PRoNTo; Schrouff et al. 2013) and The Decoding Toolbox (TDT; Hebart et al. 2015). We obtained identical results. Both toolboxes employed a linear support vector machine (LIBSVM; Chang and Lin 2011) for classification with cost parameter C = 1.

First, to estimate voxelwise activity in each of our conditions, we ran a standard GLM analysis with SPM8. Beta values were estimated for slow and fast frequency, rule 1 and rule 2, and index finger and middle finger button-press response for each of the 16 blocks separately (8 low and 8 high perceptual difficulty). Each trial contributed to the estimation of three beta values (slow or fast frequency, rule 1 or rule 2, index or middle finger response). To account for trial-by-trial differences in reaction time (Todd et al. 2013), trials were modeled as epochs lasting from stimulus onset until response (Grinband et al. 2008; Henson 2007; Woolgar et al. 2014), as used in our previous work and elsewhere (e.g., Crittenden et al. 2015; Erez and Duncan 2015; Woolgar et al. 2011a, 2011b, 2015a, 2015b). Error trials were not modeled.

MVPA was used to examine multivoxel coding of vibrotactile frequency, rule, and response. Initially, we examined decoding in high and low perceptual difficulty blocks separately and compared the results from the different block types to establish whether perceptual difficulty affected decoding. To illustrate, the analysis of frequency coding in the low perceptual difficulty condition proceeded as follows. For a given participant and ROI, the pattern of beta values across the relevant voxels was extracted from each of the 16 relevant beta images (8 blocks of low perceptual difficulty × 2 frequencies). The linear support vector machine was trained to discriminate between the vectors for slow and fast frequencies. A leave-one-out eightfold classification design was used: the classifier was trained on data from seven of the eight blocks and subsequently tested for classification performance on the eighth block that was not used for training. This process was repeated eight times, using all possible combinations of training and testing blocks. The classification accuracies from the eight iterations were then averaged to give a mean accuracy score for this participant. This classification analysis was conducted for each feature (frequency, rule, and response) and for each level of perceptual difficulty (low, high) in each of our ROIs for each participant.
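The leave-one-block-out scheme can be illustrated with a short, self-contained Python sketch. Two caveats apply. First, the study used a linear support vector machine (LIBSVM, C = 1) via PRoNTo and TDT; to keep the example dependency-free, a nearest-centroid classifier is substituted here as a stand-in, so this is not the authors' classifier. Second, the toy patterns (5 "voxels", Gaussian noise) and all function names are invented for illustration.

```python
import random


def nearest_centroid_predict(train_x, train_y, test_x):
    """Stand-in classifier (not an SVM): pick the class with the closest mean pattern."""
    centroids = {}
    for label in sorted(set(train_y)):
        rows = [x for x, y in zip(train_x, train_y) if y == label]
        centroids[label] = [sum(col) / len(rows) for col in zip(*rows)]

    def squared_distance(a, b):
        return sum((u - v) ** 2 for u, v in zip(a, b))

    return [
        min(centroids, key=lambda lab: squared_distance(x, centroids[lab]))
        for x in test_x
    ]


def leave_one_block_out(patterns, labels, blocks, predict=nearest_centroid_predict):
    """Train on all blocks but one, test on the held-out block, average over folds."""
    fold_accuracies = []
    for held_out in sorted(set(blocks)):
        train_idx = [i for i, b in enumerate(blocks) if b != held_out]
        test_idx = [i for i, b in enumerate(blocks) if b == held_out]
        predictions = predict(
            [patterns[i] for i in train_idx],
            [labels[i] for i in train_idx],
            [patterns[i] for i in test_idx],
        )
        n_correct = sum(p == labels[i] for p, i in zip(predictions, test_idx))
        fold_accuracies.append(n_correct / len(test_idx))
    return sum(fold_accuracies) / len(fold_accuracies)


# Toy data: 8 blocks x 2 frequencies = 16 patterns, mirroring the 16 beta images.
rng = random.Random(0)
patterns, labels, blocks = [], [], []
for block in range(8):
    for label, mean in (("slow", 0.0), ("fast", 1.0)):
        patterns.append([mean + rng.gauss(0.0, 0.1) for _ in range(5)])
        labels.append(label)
        blocks.append(block)

accuracy = leave_one_block_out(patterns, labels, blocks)
```

Holding out whole blocks, rather than individual patterns, keeps the training and test estimates independent of one another, which is the point of the eightfold design described above.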

On the basis of our previous work with visual stimulation (Woolgar et al. 2011a, 2015b), we predicted an increase in coding of stimulus frequency under conditions of high relative to low perceptual difficulty. To examine this, for each task feature (frequency, rule, response), we entered the classification accuracies from each participant into a repeated-measures ANOVA. For the MD network, the three-way ANOVA had factors perceptual difficulty (high, low), region (aIFS, pIFS, AI/FO, IFJ, PM, ACC/pre-SMA, IPS) and hemisphere (left, right). For the OT region the ANOVA had factors perceptual difficulty (low, high) and hemisphere (left, right). For the tactile sensory system, the ANOVA had factors perceptual difficulty (low, high) and region (left SI, left SII, right SII).

We found no significant effect of perceptual difficulty on coding of any task feature in either network of interest or OT (see RESULTS). Therefore, we repeated the classification analyses, now pooling data across high and low perceptual difficulty blocks. This analysis proceeded as described above, but each classification fold contained twice the data (e.g., 32 beta values from 16 blocks × 2 frequencies). To test for coding of each task feature, the average classification accuracies in our ROIs (MD, OT region, tactile sensory) were compared with chance (50%). To this end, for the MD and tactile sensory network, we averaged the classification accuracies across different regions. To check whether decoding varied between the regions in the MD network, we also entered the classification accuracies into a two-way ANOVA with factors region (aIFS, pIFS, AI/FO, IFJ, PM, ACC/pre-SMA, IPS) and hemisphere (left, right). We followed up significant effects of region with post hoc t-tests for each region separately (data collapsed over hemisphere) and significant effects of hemisphere with post hoc t-tests for each hemisphere separately (data collapsed over region). In one case (response coding in the MD system), both main effects were seen, so we report t-tests for individual regions in each hemisphere separately. To check whether decoding varied between regions for the tactile sensory network, we entered the classification results into a one-way ANOVA with factor region (left SI, left SII, right SII). We corrected our analyses for multiple comparisons using the Benjamini and Hochberg false discovery rate (FDR) procedure (Benjamini and Hochberg 1995) and an FDR-corrected threshold of P(FDR) = 0.05.
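For readers unfamiliar with the Benjamini and Hochberg step-up procedure, a minimal Python sketch follows. It implements the standard procedure as generally described (sort the m P values, find the largest rank k with p(k) ≤ (k/m)·q, and reject the k smallest); the function name and the example P values are hypothetical and are not taken from this study.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Return a list of booleans, True where the null hypothesis is rejected at FDR level q."""
    m = len(p_values)
    # Indices of the P values in ascending order.
    order = sorted(range(m), key=lambda i: p_values[i])
    # Largest rank k (1-based) whose P value is at or below (k / m) * q.
    max_rank = 0
    for rank, index in enumerate(order, start=1):
        if p_values[index] <= rank / m * q:
            max_rank = rank
    # Reject every hypothesis ranked at or below max_rank (step-up rule).
    rejected = [False] * m
    for rank, index in enumerate(order, start=1):
        if rank <= max_rank:
            rejected[index] = True
    return rejected


# Hypothetical P values for five tests.
example = benjamini_hochberg([0.001, 0.008, 0.039, 0.041, 0.20])
```

With these made-up values only the two smallest P values survive, because 0.039 and 0.041 exceed their rank-specific thresholds of 0.03 and 0.04.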

To investigate whether any additional regions encoded the features of our tactile task, we also performed whole brain searchlight analyses (Kriegeskorte et al. 2006). We employed the searchlight function of The Decoding Toolbox (Hebart et al. 2015) using a sphere of radius 10 mm that was centered in turn on each voxel in each individual’s brain. Within each sphere, we performed the same MVPA analysis as for the ROI analyses, testing for frequency, rule, and response decoding, and assigned the resulting classification accuracy to the central voxel. This yielded three native space classification accuracy maps (1 per feature) for each person. To analyze coding at the group level, each classification accuracy map was normalized using the participant’s normalization parameters obtained during preprocessing and then spatially smoothed (8-mm FWHM isotropic Gaussian kernel). We then employed one-tailed t-tests to test for coding above chance (50%) at a statistical threshold of t = 4.69, equivalent to P < 0.001 (FDR corrected for multiple comparisons) in the frequency analysis, with an extent threshold of 20 voxels.
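The searchlight logic described above can be sketched in a few lines. This is an illustrative outline only, not The Decoding Toolbox API: the function names are hypothetical, the 3-mm isotropic voxel size is an assumption (the voxel size is not stated in this section), and `score_fn` is a placeholder for the cross-validated classification performed within each sphere.

```python
from itertools import product


def sphere_offsets(radius_mm, voxel_mm):
    """Voxel offsets (i, j, k) whose centers lie within radius_mm of the central voxel."""
    reach = int(radius_mm // voxel_mm)  # farthest whole-voxel step along one axis
    offsets = []
    for i, j, k in product(range(-reach, reach + 1), repeat=3):
        # Compare squared distances so that no square root is needed.
        if (i * i + j * j + k * k) * voxel_mm ** 2 <= radius_mm ** 2:
            offsets.append((i, j, k))
    return offsets


def searchlight(mask_voxels, radius_mm, voxel_mm, score_fn):
    """Center a sphere on each in-mask voxel and store score_fn(sphere) at that voxel."""
    mask = set(mask_voxels)
    offsets = sphere_offsets(radius_mm, voxel_mm)
    result = {}
    for (x, y, z) in mask:
        # Keep only sphere members that fall inside the brain mask.
        sphere = [(x + i, y + j, z + k) for i, j, k in offsets
                  if (x + i, y + j, z + k) in mask]
        result[(x, y, z)] = score_fn(sphere)
    return result


# The 10 mm radius is from the text; the 3 mm voxel size is hypothetical.
offsets = sphere_offsets(radius_mm=10.0, voxel_mm=3.0)
print(len(offsets))  # number of voxels in a full sphere

# Toy usage: a 3-voxel mask, with sphere size standing in for a decoding score.
toy_map = searchlight([(0, 0, 0), (1, 0, 0), (9, 9, 9)], 10.0, 3.0, len)
print(toy_map)
```

In a real analysis, `score_fn` would return the cross-validated classification accuracy for the voxels in the sphere, and the resulting map would then be normalized and smoothed as described.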

Univariate ROI Analysis

We also conducted univariate ROI analyses to examine whether there were group-level differences in activation between conditions. For each MD ROI, we calculated the mean beta estimate across all blocks for each task feature condition (slow frequency, fast frequency, rule 1, rule 2, index finger response, and middle finger response). We entered these means into three ANOVAs, one for each task feature, with factors condition (e.g., slow frequency, fast frequency), region (aIFS, pIFS, AI/FO, IFJ, PM, ACC/pre-SMA, IPS), and hemisphere (left, right).

RESULTS

Behavioral Performance

We analyzed behavioral performance on the main task in three separate two-way ANOVAs, one for each task feature. In the first analysis, the factors were perceptual difficulty (low, high) and frequency (slow, fast). As expected, participants were significantly faster [main effect of difficulty, F(1,17) = 18.44, P < 0.001] and more accurate [F(1,17) = 9.03, P = 0.008] in the easy condition than in the difficult condition (Table 1). There was also a main effect of frequency on reaction time [RT; F(1,17) = 4.97, P = 0.04], reflecting faster responses for fast compared with slow vibration trials. There was no main effect of frequency on percentage correct [F(1,17) = 0.91, P = 0.35] and no difficulty × frequency interaction for either measure [RT: F(1,17) = 3.78, P = 0.069; accuracy: F(1,17) = 2.51, P = 0.13]. In further analyses, we replaced the frequency factor with rule (rule 1, rule 2) or with response (index finger, middle finger). There was no significant main effect of these factors and no significant interaction with difficulty (all P > 0.05).

Table 1.

Reaction time and percentage correct scores for each frequency, rule, and response in low and high perceptual difficulty conditions

| | Low Perceptual Difficulty: RT, ms | Low Perceptual Difficulty: %Correct | High Perceptual Difficulty: RT, ms | High Perceptual Difficulty: %Correct |
|---|---|---|---|---|
| Mean | 904 (22) | 91.1 (1.6) | 955 (29) | 85.0 (2.4) |
| Frequency slow | 930 (26) | 89.3 (2.1) | 968 (30) | 85.4 (3.0) |
| Frequency fast | 881 (21) | 93.0 (1.5) | 945 (31) | 84.5 (2.1) |
| Rule 1 | 898 (24) | 90.4 (1.8) | 949 (30) | 85.7 (2.2) |
| Rule 2 | 911 (25) | 91.9 (1.5) | 963 (30) | 84.2 (2.6) |
| Response index finger | 908 (21) | 91.2 (1.6) | 963 (28) | 84.0 (2.7) |
| Response middle finger | 901 (26) | 91.1 (1.7) | 948 (31) | 85.9 (2.1) |

Values are averages. SE are given in parentheses.

In our design, each of the three task features (frequency, rule, and response) has an equal contribution from the other two task features (e.g., each frequency has an equal contribution from the 2 rules and 2 responses). However, since we modeled only correct trials (see methods), it was possible that our design became unbalanced (e.g., a certain response could be associated with more trials from a certain frequency, etc.). To check, we calculated trial-wise phi coefficients (mean square contingency coefficients) for each of the pairs of task features (frequency with rule, frequency with response, and rule with response) at the individual subject level. Reassuringly, all mean phi coefficients were <0.01 (frequency with response: −0.0000042, SD 0.0143; frequency with rule: −0.0057376, SD 0.0219; rule with response: −0.0088626, SD 0.0353), indicating little or no association between task components in the final set of data.
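For two binary task features, the phi coefficient can be computed from the 2 × 2 table of trial counts. The sketch below uses the standard formula; the function name and the trial lists are hypothetical and only illustrate how a balanced set of correct trials yields a coefficient of zero, whereas losing trials from one cell shifts it away from zero.

```python
from math import sqrt


def phi_coefficient(x, y):
    """Phi (mean square contingency) coefficient for two binary sequences coded 0/1."""
    pairs = list(zip(x, y))
    n11 = sum(1 for a, b in pairs if a == 1 and b == 1)
    n10 = sum(1 for a, b in pairs if a == 1 and b == 0)
    n01 = sum(1 for a, b in pairs if a == 0 and b == 1)
    n00 = sum(1 for a, b in pairs if a == 0 and b == 0)
    # Product of the four marginal totals of the 2 x 2 table.
    denom = sqrt((n11 + n10) * (n01 + n00) * (n11 + n01) * (n10 + n00))
    if denom == 0:
        raise ValueError("phi is undefined when a feature takes only one value")
    return (n11 * n00 - n10 * n01) / denom


# Hypothetical correct trials for one participant.
# frequency: 0 = slow, 1 = fast; response: 0 = index finger, 1 = middle finger.
frequency = [0, 0, 1, 1, 0, 0, 1, 1]
response = [0, 1, 0, 1, 0, 1, 0, 1]
balanced_phi = phi_coefficient(frequency, response)

# Dropping one trial (e.g., an error trial) unbalances the design slightly.
unbalanced_phi = phi_coefficient(frequency[:-1], response[:-1])
print(balanced_phi, unbalanced_phi)
```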

Multivoxel Coding

Effect of difficulty.

We tested for the effect of perceptual difficulty (stimulus amplitude) on coding of vibration frequency (high vs. low). In contrast to our previous results with visual stimulation (Woolgar et al. 2011a, 2015b), MD coding of stimulus feature (tactile frequency) did not differ significantly between high and low perceptual difficulty conditions [no main effect of difficulty, F(1,17) = 0.36, P = 0.56]. An ANOVA with factors difficulty (easy, difficult), region (aIFS, pIFS, AI/FO, IFJ, PM, ACC/pre-SMA, IPS), and hemisphere (left, right) showed there was a significant difficulty × hemisphere interaction [F(1,17) = 6.79, P = 0.018], but the main effect of difficulty was not significant in either hemisphere separately [left: F(1,17) = 0.24, P = 0.63; right: F(1,17) = 2.80, P = 0.11]. Moreover, stimulus frequency was significantly encoded in the MD regions in both hemispheres separately, in both perceptual difficulty conditions [mean classification accuracy and one-sample t-test against chance 50%, for the low perceptual difficulty condition: left, 60.9%, t(17) = 4.09, P < 0.001; right, 62.4%, t(17) = 3.96, P < 0.001; and high difficulty condition: left, 62.5%, t(17) = 3.84, P < 0.001; right, 57.2%, t(17) = 2.29, P = 0.018; all P values survive FDR correction for multiple comparisons].
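The one-sample tests against chance reported here take the form sketched below. The per-participant accuracies are hypothetical (the individual values are not given in the text), and the function name is illustrative; only the test statistic is computed, and it is compared with the standard one-tailed critical value for 17 degrees of freedom.

```python
from math import sqrt
from statistics import mean, stdev


def one_sample_t(accuracies, chance=50.0):
    """t statistic and degrees of freedom for mean(accuracies) versus chance."""
    n = len(accuracies)
    standard_error = stdev(accuracies) / sqrt(n)  # stdev uses the n - 1 denominator
    return (mean(accuracies) - chance) / standard_error, n - 1


# Hypothetical per-participant classification accuracies (%), n = 18.
accuracies = [62, 55, 71, 58, 49, 66, 60, 53, 68, 57, 64, 52, 70, 59, 61, 47, 65, 63]
t_value, df = one_sample_t(accuracies)

# One-tailed test at alpha = 0.05: the critical t for 17 degrees of freedom is about 1.740.
print(df, round(t_value, 2), t_value > 1.740)
```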

We also checked whether perceptual difficulty affected MD discrimination of the other task features (rule and response). For rule coding, there also was no main effect of difficulty and no difficulty × hemisphere interaction (all P > 0.05). There was a significant three-way interaction between difficulty, region, and hemisphere [F(6,102) = 2.77, P = 0.016], indicating a larger difficulty × region effect in the right hemisphere, but the difficulty × region interaction was not significant in either hemisphere separately [left: F(6,102) = 1.47, P = 0.20; right: F(6,102) = 1.89, P = 0.090]. There also was no main effect of difficulty in each hemisphere separately [left: F(1,17) = 0.12, P = 0.73, right: F(1,17) = 1.44, P = 0.25]. For response coding, there was no main effect of difficulty, no difficulty × hemisphere interaction, and no three-way interaction (all P > 0.05).

For OT and tactile regions, we also did not find any significant effect of difficulty (all P > 0.05). Given these results, our main analyses were carried out after data were collapsed across difficulty condition (see methods).

Tactile information coding in the MD system.

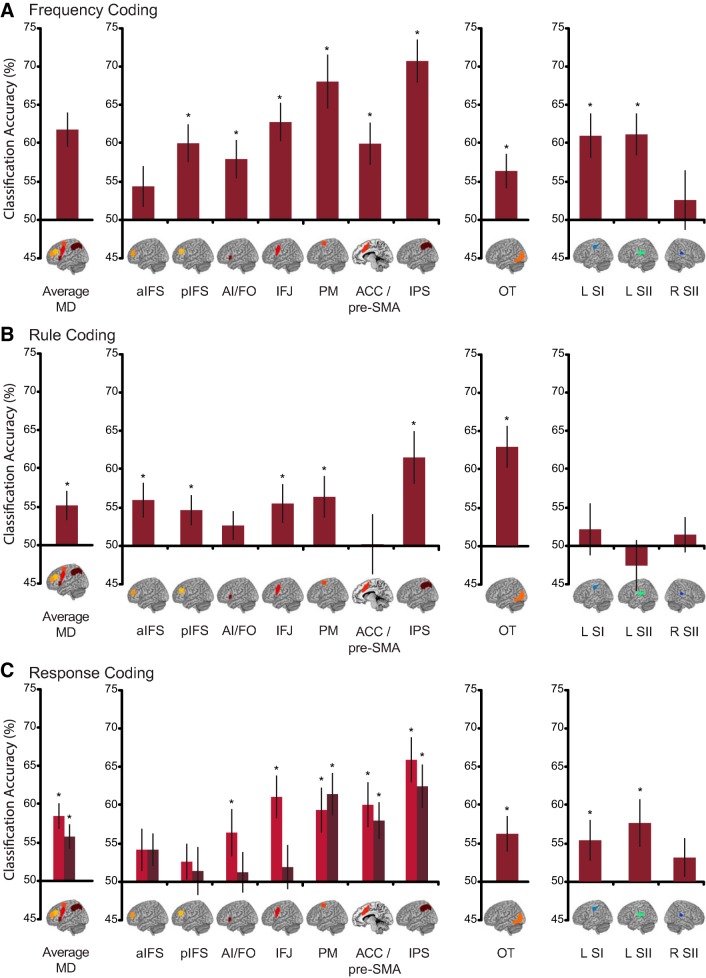

Our first question was whether somatosensory information was encoded in multivoxel patterns of activation in the MD system. We predicted that if these regions were truly “multisensory,” they should be capable of encoding the details of tactile sensory information. Indeed, classification of vibrotactile frequency in the MD network as a whole was significantly above chance [mean classification accuracy = 62%, t(17) = 5.32, P < 0.001; Fig. 3A, left]. There was no significant main effect of hemisphere [F(1,17) = 3.41, P = 0.082] and no significant hemisphere × region interaction [F(6,102) = 0.621, P = 0.713], but there was a main effect of region [F(6,102) = 10.73, P < 0.001]. When each region was considered separately, coding reached the FDR-corrected significance threshold (P = 0.003 last significant P value) in all but the aIFS region [1-tailed t-test against chance 50%, data collapsed over hemisphere; aIFS: t(17) = 1.63, P = 0.060; pIFS: t(17) = 4.05, P ≤ 0.001; AI/FO: t(17) = 3.15, P = 0.003; IFJ: t(17) = 5.08, P < 0.001; PM: t(17) = 5.12, P < 0.001; ACC/pre-SMA: t(17) = 3.58, P = 0.001; IPS: t(17) = 7.33, P < 0.001; Fig. 3A, second panel; see also Table 2].

Fig. 3.

MVPA results. Tactile frequency coding (A), rule coding (B), and response coding (C) are depicted for the average across the MD network (left panels), individual MD regions (second panels), OT (third panels), and somatosensory network regions (right panels). For response coding in the MD regions, we found a significant main effect of hemisphere (see text) and therefore plotted decoding accuracies for the hemispheres separately (light bars: left hemisphere; dark bars: right hemisphere). Error bars depict SE. *P < 0.05 after FDR correction for multiple comparisons. aIFS, anterior inferior frontal sulcus; pIFS, posterior inferior frontal sulcus; AI/FO, anterior insula/frontal operculum; IFJ, inferior frontal junction; PM, premotor cortex; ACC/pre-SMA, anterior cingulate/pre-supplementary motor area; IPS, intraparietal sulcus; OT, occipitotemporal area; SI, somatosensory area I; SII, somatosensory area II.

Table 2.

MVPA results in MD network

| Classification Accuracy, % | aIFS Left | aIFS Right | pIFS Left | pIFS Right | AI/FO Left | AI/FO Right | IFJ Left | IFJ Right | PM Left | PM Right | ACC/pre-SMA Left | ACC/pre-SMA Right | IPS Left | IPS Right |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Frequency | 54 (3.41) | 55 (2.59) | 63 (3.24) | 57 (2.88) | 61 (3.30) | 55 (3.76) | 65 (2.94) | 61 (3.50) | 68 (4.30) | 68 (3.38) | 62 (2.72) | 57 (3.36) | 71 (3.64) | 71 (2.83) |
| Rule | 57 (2.80) | 54 (2.80) | 56 (3.75) | 53 (1.91) | 51 (2.78) | 54 (2.72) | 57 (2.93) | 53 (3.44) | 58 (2.77) | 55 (3.73) | 52 (3.75) | 49 (3.54) | 63 (3.51) | 60 (3.76) |
| Response | 54 (2.78) | 54 (2.14) | 53 (2.36) | 51 (3.15) | 56 (3.10) | 51 (2.66) | 61 (2.80) | 52 (2.89) | 59 (2.96) | 61 (2.79) | 60 (2.96) | 58 (2.43) | 66 (2.95) | 63 (2.84) |

Values are vibrotactile frequency coding, rule coding, and response coding for all individual MD regions in both hemispheres. SE are given in parentheses.

Because regions responding to tactile stimulation have previously been found to be close to or overlap with our canonically defined MD regions, we carried out a more conservative analysis in which we restricted our MD ROIs, on an individual participant basis, to voxels that were activated by our visually presented working memory localizer (see methods). Classification of frequency remained above chance for the MD network as a whole [average of coding in all the subject-specific MD ROIs; classification accuracy = 59%, t(16) = 5.58, P < 0.001]. Because not every participant showed localizer activation in every region, the analysis of coding in each region separately had somewhat less statistical power than our main analysis. Nonetheless, coding reached our FDR-corrected significance threshold (P = 0.035 last significant P value) in five of the seven regions [1-tailed t-test against chance 50%, data collapsed over hemisphere: pIFS: t(12) = 2.59, P = 0.012; AI/FO: t(11) = 2.04, P = 0.033; IFJ: t(15) = 1.95, P = 0.035; PM: t(12) = 4.96, P < 0.001; IPS: t(15) = 7.61, P < 0.001]. There was no significant frequency coding in the subject-specific aIFS [t(13) = 1.31, P = 0.107] or ACC/pre-SMA [t(13) = 1.26, P = 0.12].

MD coding of other task features.

Having established that the MD regions coded the details of the vibrotactile stimuli, we next asked whether the MD regions also coded rule (rule 1 vs. rule 2) and response (index vs. middle finger) information in our tactile task. The MD network showed above chance coding of rule information [55%, t(17) = 2.71, P = 0.007, Fig. 3B, left]. There was no evidence that rule coding differed between hemispheres [F(1,17) = 2.72, P = 0.12], but it did differ between regions [F(6,102) = 3.31, P = 0.005; Fig. 3B, second panel; see also Table 2]. There was no significant hemisphere × region interaction [F(6,102) = 0.621, P = 0.71]. Coding reached our FDR-corrected significance threshold (P = 0.023 last significant P value) in five of the seven regions [1-tailed t-test against chance 50%, data collapsed over hemisphere: aIFS: t(17) = 2.60, P = 0.009; pIFS: t(17) = 2.34, P = 0.016; IFJ: t(17) = 2.16, P = 0.023; PM: t(17) = 2.34, P = 0.016; IPS: t(17) = 3.36, P = 0.002]. There was no significant rule coding in AI/FO [t(17) = 1.39, P = 0.091] or ACC/pre-SMA [t(17) = 0.055, P = 0.48].

The MD network also showed significant coding of response information [57%, t(17) = 4.73, P < 0.001; Fig. 3C, left]. For response, there was a main effect of region [F(6,102) = 5.13, P ≤ 0.001]. There was also a main effect of hemisphere [F(1,17) = 4.75, P = 0.044], with stronger decoding in the left hemisphere, although coding was significant in both hemispheres separately [left: 59%, t(17) = 5.05, P < 0.001; right: 56%, t(17) = 3.65, P < 0.001]. There was no significant hemisphere × region interaction [F(6,102) = 1.48, P = 0.19]. We followed up the main effects of region and hemisphere by testing coding in each region for each hemisphere separately (Fig. 3C, Table 2). In the left hemisphere, coding reached our FDR-corrected significance threshold (P = 0.027 last significant P value) in five regions [1-tailed t-test against chance 50%: AI/FO: t(17) = 2.07, P = 0.027; IFJ: t(17) = 3.96, P = 0.001; PM: t(17) = 3.17, P = 0.003; ACC/pre-SMA: t(17) = 3.40, P = 0.002; IPS: t(17) = 5.42, P < 0.001]. There was no significant response coding in the two remaining MD regions [aIFS: t(17) = 1.50, P = 0.076; pIFS: t(17) = 1.11, P = 0.14]. In the right hemisphere, coding reached our FDR-corrected significance threshold (P = 0.027 last significant P value) in three regions [1-tailed t-test against chance 50%: PM: t(17) = 4.11, P < 0.001; ACC/pre-SMA: t(17) = 3.29, P = 0.002; IPS: t(17) = 4.41, P < 0.001]. Response coding did not reach the FDR-corrected threshold in the other MD regions [aIFS: t(17) = 1.95, P = 0.034; pIFS: t(17) = 0.44, P = 0.33; AI/FO: t(17) = 0.46, P = 0.33; IFJ: t(17) = 0.66, P = 0.26].

Coding in OT.

Previous work with tactile stimuli (e.g., Amedi et al. 2001, 2002; Beauchamp et al. 2007; Pietrini et al. 2004; Sathian et al. 1997) has reported activation of a region of OT cortex that also commonly accompanies the MD pattern (Fedorenko et al. 2013). We therefore investigated whether the OT region also codes task features in this tactile task. For this region, we indeed found significant coding for all three task features [frequency: 56%, t(17) = 2.81, P = 0.006; rule: 63%, t(17) = 4.72, P < 0.001; and response: 56%, t(17) = 2.65, P = 0.008; Fig. 3, A–C, third panel]. We further tested for hemisphere differences and found no significant effect of hemisphere for any task feature [frequency: t(17) = 1.82, P = 0.086; rule: t(17) = −0.53, P = 0.60; and response: t(17) = 0.23, P = 0.82, 2-tailed paired t-tests].

Coding in tactile sensory regions.

Next, we investigated the coding of task features in tactile somatosensory regions. As expected we found significant coding of frequency [59%, t(17) = 4.51, P < 0.001] but no coding of rule [52%, t(17) = 1.01, P = 0.16; Fig. 3, A and B, fourth panel]. We also found significant coding of response [55%, t(17) = 3.34, P = 0.002; Fig. 3C, fourth panel], which may reflect the tactile events caused by pressing the response button. There was no significant effect of tactile region for any of the task features (all P > 0.05).

Whole brain searchlight analyses.

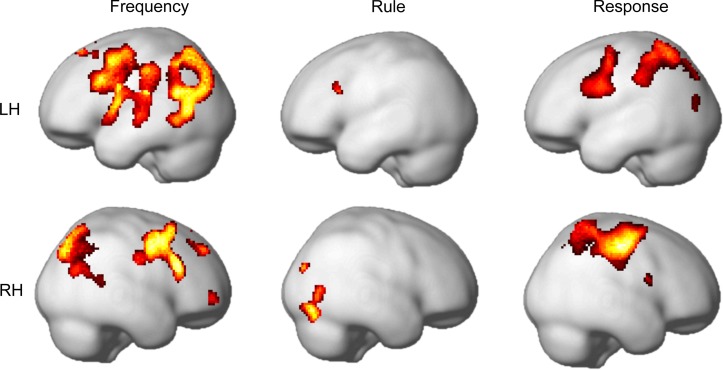

The results of the searchlight MVPA analyses were largely in line with our ROI results (Fig. 4, Table 3). Frequency coding was seen bilaterally in an extensive frontal region centered on the IFJ, extending dorsally to PM and ventrally to the AI/FO, and in the pIFS region and the ACC/pre-SMA in the right hemisphere. In addition, other frontal areas in the superior, middle, and orbital frontal cortex, as well as in the precentral gyrus, also showed frequency coding. In the parietal cortex, frequency coding was seen in an extensive region centered on the IPS. In addition, frequency decoding was seen in an area centered on the precuneus in the medial part of the posterior parietal cortex (not depicted). There was also evidence for frequency coding in occipital and occipitotemporal regions. These overlapped partly with the dorsal part of our OT ROI but peaked over more anterior occipital and temporal regions in the middle occipital and temporal cortex.

Fig. 4.

Searchlight results. Areas of significant coding of frequency, rule, and response in searchlight MVPA are depicted for the left hemisphere (LH; top) and the right hemisphere (RH; bottom). Data are thresholded at t = 4.69, equivalent to P < 0.001 with FDR correction in the frequency analysis, with an additional extent threshold of 20 voxels.

Table 3.

Peak coding of frequency, rule, and response in whole brain MVPA analyses

| Feature | Lobe | Cluster | Hemisphere | MNI X | MNI Y | MNI Z | Brodmann Areas | t-Score |
|---|---|---|---|---|---|---|---|---|
| Frequency | Frontal | IFJ | Left | −40 | 4 | 32 | 44 | 8.08 |
| | | | Right | 42 | 8 | 50 | 6 | 7.10 |
| | | pIFS | Right | 56 | 26 | 26 | 44/45 | 7.32 |
| | | Precentral gyrus | Left | −34 | −12 | 42 | 6 | 7.27 |
| | | Superior frontal gyrus | Right | 20 | 46 | 40 | 9 | 6.55 |
| | | Orbital medial frontal gyrus | Left | −8 | 36 | −10 | 11 | 5.92 |
| | | | Right | 42 | 56 | −2 | 46 | 6.16 |
| | | Medial superior frontal gyrus | Left | −8 | 34 | 58 | 8 | 5.42 |
| | | | Left | −14 | 38 | 24 | 32 | 5.34 |
| | | Cingulum | Right | 6 | −12 | 36 | 23 | 5.26 |
| | | Middle frontal gyrus | Left | −24 | 20 | 56 | 8 | 4.88 |
| | Parietal | Precuneus | Bilateral | −4 | −66 | 40 | 7 | 8.46 |
| | | IPS | Right | 34 | −52 | 40 | 40 | 8.05 |
| | | | Right | 26 | −76 | 48 | 7 | 7.33 |
| | | | Right | 12 | −70 | 36 | 7 | 6.97 |
| | Occipital | Middle occipital gyrus | Left | −32 | −82 | 36 | 19 | 7.91 |
| | | Superior occipital gyrus | Right | 26 | −76 | 38 | 19 | 7.55 |
| | Occipitotemporal | Middle occipital/middle temporal gyri | Left | −48 | −76 | 22 | 39 | 6.73 |
| | Temporal | Middle temporal gyrus | Left | −50 | −52 | 22 | 22 | 7.07 |
| Rule | Frontal | AI/FO | Left | −18 | 28 | 6 | 47 | 5.62 |
| | | IFJ | Left | −52 | 22 | 30 | 44 | 5.24 |
| | Occipital | Inferior occipital gyrus | Right | 40 | −84 | −12 | 19 | 5.77 |
| | | Fusiform gyrus | Right | 32 | −74 | −16 | 19 | 5.62 |
| | | Middle occipital gyrus | Right | 28 | −86 | 24 | 19 | 5.52 |
| | Occipitotemporal | Middle occipital/middle temporal gyrus | Right | 44 | −70 | 4 | 19/37 | 6.20 |
| Response | Frontal | Precentral gyrus (extending to PM) | Left | −48 | −2 | 52 | 6 | 6.40 |
| | | Precentral gyrus | Right | 46 | −16 | 50 | 4 | 15.38 |
| | | | Right | 56 | 6 | 20 | 6 | 5.28 |
| | | IFJ | Left | −52 | 4 | 30 | 44 | 8.89 |
| | | PM | Left | −28 | −12 | 52 | 6 | 6.40 |
| | | Rolandic operculum | Right | 36 | −24 | 20 | N/A | 5.85 |
| | Parietal | IPS | Left | −24 | −52 | 60 | 7 | 7.64 |
| | | | Left | −40 | −42 | 48 | 40 | 7.45 |
| | Occipital | Calcarine sulcus | Left | −4 | −72 | 16 | 17 | 6.62 |
| | | Middle occipital gyrus | Left | −40 | −84 | 20 | 19 | 5.14 |
| | | | Left | −36 | −82 | 10 | 19 | 5.12 |

Also in line with our ROI analyses, rule decoding appeared to be weaker compared with frequency decoding. In the whole brain analysis we found significant rule decoding in the IFJ and AI/FO regions as well as in middle and inferior occipital and occipitotemporal areas. In contrast to the ROI analyses, searchlight analyses did not reveal significant rule coding in parietal, premotor, and inferior frontal areas at the chosen threshold.

For response coding, searchlight results were mostly in line with ROI analyses. We found significant encoding in the PM, IFJ, IPS, and occipital areas. However, in contrast to ROI analyses, there was no significant encoding of response in the ACC/pre-SMA region.

Univariate Results

We found no significant main effect of, or interaction with, condition for any of the MD ANOVAs (all P > 0.05). Thus we did not find significant differences in the mean estimated signal between slow and fast vibrotactile frequency, rule 1 and rule 2, or index and middle finger responses in the MD network. This suggests that our multivariate results were not driven by large differences in overall activation at the group level.
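The distinction between mean activation and pattern information can be illustrated with a toy example in which two conditions have the same average signal across voxels but opposite voxel-wise patterns. The sketch below is an assumption-laden illustration: the beta values are hypothetical, and a nearest-centroid classifier with leave-one-block-out cross-validation stands in for whatever classifier was used in the actual analyses.

```python
def mean(values):
    return sum(values) / len(values)


def centroid(patterns):
    """Voxel-wise average of a list of patterns."""
    return [mean(voxel) for voxel in zip(*patterns)]


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def leave_one_block_out_accuracy(blocks):
    """blocks: list of dicts mapping condition label -> voxel pattern for that block."""
    correct = total = 0
    for test_idx, test_block in enumerate(blocks):
        # Train on every block except the held-out one.
        train = [b for i, b in enumerate(blocks) if i != test_idx]
        centroids = {label: centroid([b[label] for b in train]) for label in test_block}
        for label, pattern in test_block.items():
            # Assign the held-out pattern to the nearest training centroid.
            predicted = min(centroids, key=lambda c: sq_dist(pattern, centroids[c]))
            correct += predicted == label
            total += 1
    return correct / total


# Hypothetical beta patterns over four voxels in four blocks.
blocks = [
    {"slow": [2.0, 1.0, 2.0, 1.0], "fast": [1.0, 2.0, 1.0, 2.0]},
    {"slow": [2.2, 0.8, 1.8, 1.2], "fast": [0.8, 2.2, 1.2, 1.8]},
    {"slow": [1.9, 1.1, 2.1, 0.9], "fast": [1.1, 1.9, 0.9, 2.1]},
    {"slow": [2.1, 0.9, 1.9, 1.1], "fast": [0.9, 2.1, 1.1, 1.9]},
]

# Univariate view: mean signal per condition, averaged over voxels and blocks.
roi_means = {label: mean([mean(b[label]) for b in blocks]) for label in ("slow", "fast")}
# Multivariate view: cross-validated classification accuracy.
accuracy = leave_one_block_out_accuracy(blocks)
print(roi_means, accuracy)
```

In this toy case the two conditions have matching ROI means, so a univariate contrast would show no difference, yet the held-out patterns are classified correctly because the information lies in the distribution of signal across voxels.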

DISCUSSION

In this study we used MVPA of fMRI data to test whether the broad coding of task relevant information observed in the MD system for visual tasks extends to stimuli in the somatosensory domain. Our task allowed us to separate coding of vibrotactile stimulus frequency, tactile-motor stimulus-response mapping rule, and button-press response. In the MD regions we found significant encoding of all three task features, commensurate with a domain-general role for these regions. In the somatosensory network we found significant encoding of events that involve tactile stimulation (tactile frequency and button-press response) but not stimulus-response mapping rule. We also examined coding in OT, a region classically associated with visual processing but which is commonly active alongside the MD network (Fedorenko et al. 2013) and which has previously been associated with activations in tactile tasks (e.g., Amedi et al. 2001, 2002; Beauchamp et al. 2007; Pietrini et al. 2004; Sathian et al. 1997). In this region we found significant coding of vibrotactile frequency, rule, and button-press response.

In our data, the MD system represented all the information necessary to solve the somatosensory stimulus-response task, with significant coding of the details of the tactile stimuli, stimulus-response mapping rule, and motor response. These regions are well known to be active for a range of different cognitive demands (e.g., Dosenbach et al. 2006; Duncan and Owen 2000; Nyberg et al. 2003; Stiers et al. 2010; Yeo et al. 2015), and previous MVPA studies have demonstrated that they discriminate stimuli, rules, and responses in the context of visual tasks (e.g., Bode and Haynes 2009; Li et al. 2007; Woolgar et al. 2011b), with the strength of discrimination varying with changes in task demand (Woolgar et al. 2011a, 2015a). Although, to our knowledge, no studies have specifically assessed coding in MD ROIs using anything other than visual stimuli, a recent meta-analysis of studies using searchlight analyses found that coding of both visual and auditory stimuli has been reported at locations that fall within the MD system (Woolgar et al. 2016). In line with the proposition that these regions constitute a domain-general system capable of representing the features of a wide range of tasks (Duncan 2010, 2013), our data show that MD coding of task-relevant information extends to the somatosensory domain. Thus the MD system encodes task-relevant information from multiple senses.

Many previous studies have reported MD-like frontoparietal activation for tasks involving vibrotactile stimuli or haptic shape exploration (Kaas et al. 2007; Li Hegner et al. 2007; McGlone et al. 2002; Miquée et al. 2008; Numminen et al. 2004; Preuschhof et al. 2006; Sörös et al. 2007; Staines et al. 2002; Stoeckel et al. 2003). Our somatosensory localizer (monitoring vibrotactile stimulation for gaps minus rest with no vibrotactile stimulus) similarly produced frontoparietal activation overlapping with the canonical MD pattern. We go further, however, in demonstrating that patterns of activation in the MD regions also discriminate the details of vibrotactile stimulus frequency. We also expand on previous work with tactile stimuli by examining not only the coding of vibrotactile stimuli but also rules and behavioral responses used in the context of the tactile task, finding that these task features are also coded in frontoparietal cortex.

It is possible that the decoding of tactile information in the MD regions was driven by tactile-specific populations in frontoparietal cortex, rather than being the result of domain-general populations. Given the spatial resolution of fMRI, this alternative explanation cannot be ruled out completely, but we note that the pattern of results was the same even when we conducted a more conservative analysis and subselected MD voxels that were activated by a working memory localizer (high minus low spatial span) that had no vibrotactile component.

Our work converges with a broader literature that considers the way in which multisensory stimuli (i.e., stimuli presented simultaneously through multiple sensory modalities) are represented in the brain (e.g., Corbetta and Shulman 2002; Driver and Noesselt 2008; Ghazanfar and Schroeder 2006; van Atteveldt et al. 2014). For example, previous research has shown that neural activation when stimulating different sensory channels converges in a network of brain areas that includes frontal and parietal areas similar to the MD network (Corbetta and Shulman 2002; Driver and Noesselt 2008). Multisensory research has shown that information from different senses with respect to one feature can be combined and integrated when participants perform perceptual tasks (Ernst and Banks 2002; Yau et al. 2009) and that multisensory processing is flexible and can be influenced by task context and attention (Murray et al. 2016; Talsma et al. 2010; Tang et al. 2016; ten Oever et al. 2016). It has even been suggested that common neural mechanisms could support both top-down control and multisensory processing (van Atteveldt et al. 2014). In this study we cannot attest to the neural basis of multisensory processing per se because our stimuli were not multisensory, but we do show that the human frontoparietal MD system can encode task information derived from multiple different sensory modalities, namely, frequency information presented in the tactile modality and rule information presented through the visual domain, within a single task. Interesting next steps for future research could be to investigate whether representation in the MD system is independent of sensory modality (e.g., are multivoxel codes for implementing a particular rule or classifying a stimulus independent of input modality?) vs. modality tagged (maintaining separate codes for information from different sensory modalities) and the extent to which the MD system encodes information that is the result of combining input from multiple sensory modalities.

For the OT ROI, we found coding of all three task features. Our observation of vibrotactile frequency encoding in OT is in line with previous reports of OT activation for tactile input in the lateral occipital complex (LOC) and the medial superior temporal area (MST) (Amedi et al. 2001, 2002; Beauchamp et al. 2007; Pietrini et al. 2004; Sathian et al. 1997). Our data further show that activation in this classically visual region differentiates the details of the vibrotactile stimulus. Although we cannot completely rule out an explanation based on visualization strategies (Peltier et al. 2007; Sathian and Zangaladze 2001; Zhang et al. 2004), to drive our results any visualization would have to differentiate between the vibrotactile stimuli (i.e., a different visualization would need to be generated for the 2 frequencies, and this differential visualization would have to be carried out reliably over trials). Moreover, in our task, the visual system was needed to process the visual cue (albeit only a very simple visual stimulus). Similar considerations apply to the OT coding of rule, which was cued with a visual stimulus (red vs. blue fixation cross). Although the physical size of the color cue was small in our design, it is possible that OT could simply encode these visual representations, instead of more abstract rule representations, and a different design is needed to differentiate these possibilities. The observation of response coding in this region is harder to attribute to visual attributes. Previous work has suggested that OT regions activate with unseen bodily movements (Astafiev et al. 2004; Orlov et al. 2010). In the present study we show that it is possible to decode individual finger responses. However, these intriguing findings are tempered by the results of our whole brain searchlight analyses, which suggested that the peaks of frequency decoding were in the most anterior and superior parts of OT rather than in the more occipital regions, and which did not reveal rule or response decoding in this region at the chosen threshold. Certainly, the multisensory nature of OT was not as striking as, for example, that of the left IFJ, which showed significant coding of all three task features even at the relatively conservative threshold after corrections for multiple comparisons in the whole brain searchlight analyses. Further work is needed to investigate how OT task-related feature encoding differs from or is similar to the rest of the MD network encoding and what, if any, role this region has beyond encoding the details of visual stimuli.

In contrast to our previous findings involving visual tasks (Woolgar et al. 2011a, 2015a), we did not find an effect of difficulty on decoding, despite significant behavioral difficulty effects. In previous work, MD coding was only above chance for the more demanding version of the task (Woolgar et al. 2011a, 2015a), whereas in the present study we found significant encoding of tactile frequency in both the low and high perceptual difficulty conditions. Potentially, the tactile discrimination at both levels was more difficult than our previous visual tasks and thus always difficult enough to recruit the MD system. Another possible explanation relates to the different nature of visual and tactile stimuli. Tactile information always directly involves the body. Such direct relevance to the body is thought to facilitate information processing and specific response preparations (Graziano and Cooke 2006), which may make tactile stimuli a processing priority regardless of task. Frontoparietal regions, for example, are activated even when tactile stimuli are presented without an explicit task (McGlone et al. 2002). Future work could investigate if stimuli that are closer to the body and/or directly on the body are preferentially represented in the MD network.

An unexpected feature of the present data was the relatively weak representation of task rules in the MD system, where rule coding was significantly above chance but with classification accuracies that tended to be lower than for stimulus frequency. In our previous work using visual stimulus-response tasks, task rule has been the most strongly represented feature (Woolgar et al. 2011a, 2011b). Because our previous work focused on visual-motor rules, we did not know whether task rules would be represented at all in the context of our tactile task, and the observation that tactile-motor rules were significantly discriminable in the MD system speaks again to the adaptive nature of this system (Duncan 2001). Several differences in the paradigm may explain why rule was not as strongly represented in the present study, including the fact that the current rules were simpler, with only two response alternatives, compared with four in previous work (Woolgar et al. 2011a, 2011b). Another possibility is that the MD system adapts to focus its processing capacity on the most challenging aspect of the current task (Woolgar et al. 2011a), which in this case may have been the perceptual discrimination rather than the rule. In support of this idea, we have previously found that even complex task rules may be represented weakly if they are used in the context of even more complex rules (Woolgar et al. 2015a).

Another unexpected finding from the whole brain searchlight MVPA analyses was that it was possible to decode stimulus frequency from the precuneus. This region is typically associated with default mode activity (e.g., Cavanna 2007; Fransson and Marrelec 2008), and therefore we did not predict information coding in the context of our active task. Interestingly, precuneus coding of task-relevant information in active tasks has been reported in two other recent decoding studies (Crittenden et al. 2015; Woolgar et al. 2015a). One possibility is that the functional connectivity of the precuneus may switch to be more associated with task-active brain regions during active tasks (Utevsky et al. 2014).

A final consideration is the contribution that trial-by-trial reaction time (RT) differences may make to decoding (Todd et al. 2013). In our data, the two stimulus frequencies yielded appreciably different RTs, in line with previous behavioral work reporting frequency-dependent tactile sensitivity (Löfvenberg and Johansson 1984; Nordmark et al. 2012). To account for the resulting difference in time-on-task between conditions, we modeled the RT on each trial as part of the GLM from which beta estimates of activation were derived (Grinband et al. 2008; Henson 2007; Woolgar et al. 2014; see methods). The sensitivity of MVPA comes at the cost of specificity (Woolgar et al. 2014), and as with any study using MVPA, we cannot completely rule out a contribution of differences in difficulty to our ability to decode these task features. However, previous work using the same methods to account for RT differences has demonstrated that the resulting contribution of RT to decoding is minor (Crittenden et al. 2015; Erez and Duncan 2015; Woolgar et al. 2014).
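One common way to model RT in a GLM is the variable-epoch approach described by Grinband et al. (2008), in which each trial is represented as a boxcar lasting from stimulus onset to response and is then convolved with a hemodynamic response function. The sketch below illustrates that general idea only; it is not the analysis code used in this study. The function names, the double-gamma shape parameters, and the trial timings are all assumptions chosen for illustration.

```python
import math
import numpy as np

def gamma_pdf(t, shape, scale=1.0):
    """Gamma density evaluated at times t (zero for t <= 0)."""
    t = np.asarray(t, dtype=float)
    out = np.zeros_like(t)
    pos = t > 0
    out[pos] = (t[pos] ** (shape - 1) * np.exp(-t[pos] / scale)
                / (math.gamma(shape) * scale ** shape))
    return out

def double_gamma_hrf(dt, duration=32.0):
    """Illustrative double-gamma HRF sampled every dt seconds.
    The shape parameters (6 and 16, undershoot ratio 1/6) are assumed."""
    t = np.arange(0.0, duration, dt)
    hrf = gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0
    return hrf / hrf.sum()

def rt_epoch_regressor(onsets, rts, n_scans, tr, dt=0.1):
    """Build one regressor: a boxcar from each onset lasting that trial's RT,
    convolved with the HRF and sampled once per TR."""
    n_fine = int(round(n_scans * tr / dt))
    neural = np.zeros(n_fine)
    for onset, rt in zip(onsets, rts):
        start = int(round(onset / dt))
        stop = int(round((onset + rt) / dt))
        # Guarantee at least one fine-grained sample per trial.
        neural[start:max(stop, start + 1)] = 1.0
    bold = np.convolve(neural, double_gamma_hrf(dt))[:n_fine]
    step = int(round(tr / dt))
    return bold[::step][:n_scans]

# Hypothetical trial timings in seconds (not taken from the study).
onsets = [2.0, 12.0, 22.0]
rts = [0.6, 1.2, 0.9]
regressor = rt_epoch_regressor(onsets, rts, n_scans=20, tr=2.0)
```

Because the boxcar duration scales with RT, trials with longer time-on-task are assigned a proportionally larger predicted response, so the fitted beta estimates are less likely to differ between conditions simply because one condition took longer.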

In this study we addressed a gap in the literature regarding the representation of tactile information in the brain, and the breadth of information coding in the MD system. Although theoretical accounts posit that the MD regions are capable of representing information from different senses, the majority of work had been carried out in the visual domain, so this claim had not been tested empirically. We show that the MD network encodes task-relevant information from nonvisual input, including the details of vibrotactile stimuli, tactile-motor response mapping transformations, and button-press responses. The data suggest an adaptive system, capable of representing information from different sensory modalities as needed for goal-directed behavior.

GRANTS

This work was funded under the Australian Research Council (ARC)’s Discovery Projects funding scheme DP12102835 (to A. Woolgar). A. Woolgar and R. Zopf are recipients of ARC Discovery Early Career Researcher Award Fellowships DE120100898 and DE140100499, respectively.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the authors.

AUTHOR CONTRIBUTIONS

A.W. and R.Z. conceived and designed research; A.W. and R.Z. performed experiments; A.W. and R.Z. analyzed data; A.W. and R.Z. interpreted results of experiments; A.W. prepared figures; A.W. and R.Z. drafted manuscript; A.W. and R.Z. edited and revised manuscript; A.W. and R.Z. approved final version of manuscript.

ACKNOWLEDGMENTS

We thank Evelina Fedorenko for providing the stimulus delivery script for the working memory localizer.

Present address for A. Woolgar: Macquarie University, Sydney, Australia (email: alexandra.woolgar@mq.edu.au).

Footnotes

We changed the “slow” frequency from 100 to 80 Hz after acquiring data for two participants, because the subjective impression of these first two participants was that the frequency discrimination for 100/200 Hz was overly difficult. The average performance for these two participants was, however, comparable to that for the rest of the group (within 1 standard deviation of the group’s performance), and thus we did not exclude them from our analyses.

REFERENCES

- Amedi A, Jacobson G, Hendler T, Malach R, Zohary E. Convergence of visual and tactile shape processing in the human lateral occipital complex. Cereb Cortex 12: 1202–1212, 2002. doi: 10.1093/cercor/12.11.1202.

- Amedi A, Malach R, Hendler T, Peled S, Zohary E. Visuo-haptic object-related activation in the ventral visual pathway. Nat Neurosci 4: 324–330, 2001. doi: 10.1038/85201.

- Andersen RA, Essick GK, Siegel RM. Encoding of spatial location by posterior parietal neurons. Science 230: 456–458, 1985. doi: 10.1126/science.4048942.

- Asaad WF, Rainer G, Miller EK. Neural activity in the primate prefrontal cortex during associative learning. Neuron 21: 1399–1407, 1998. doi: 10.1016/S0896-6273(00)80658-3.

- Astafiev SV, Stanley CM, Shulman GL, Corbetta M. Extrastriate body area in human occipital cortex responds to the performance of motor actions. Nat Neurosci 7: 542–548, 2004. doi: 10.1038/nn1241.

- Beauchamp MS, Yasar NE, Kishan N, Ro T. Human MST but not MT responds to tactile stimulation. J Neurosci 27: 8261–8267, 2007. doi: 10.1523/JNEUROSCI.0754-07.2007.

- Benjamini Y, Hochberg Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J R Stat Soc B 57: 289–300, 1995.

- Bode S, Haynes JD. Decoding sequential stages of task preparation in the human brain. Neuroimage 45: 606–613, 2009. doi: 10.1016/j.neuroimage.2008.11.031.

- Brainard DH. The Psychophysics Toolbox. Spat Vis 10: 433–436, 1997. doi: 10.1163/156856897X00357.

- Cavanna AE. The precuneus and consciousness. CNS Spectr 12: 545–552, 2007. doi: 10.1017/S1092852900021295.

- Chang CC, Lin CJ. LIBSVM: A library for support vector machines. ACM Trans Intell Syst Technol 2: 1–27, 2011. doi: 10.1145/1961189.1961199.

- Cole MW, Schneider W. The cognitive control network: integrated cortical regions with dissociable functions. Neuroimage 37: 343–360, 2007. doi: 10.1016/j.neuroimage.2007.03.071.

- Corbetta M, Shulman GL. Control of goal-directed and stimulus-driven attention in the brain. Nat Rev Neurosci 3: 201–215, 2002. doi: 10.1038/nrn755.

- Crittenden BM, Mitchell DJ, Duncan J. Recruitment of the default mode network during a demanding act of executive control. eLife 4: e06481, 2015a. doi: 10.7554/eLife.06481.

- Dehaene S, Kerszberg M, Changeux JP. A neuronal model of a global workspace in effortful cognitive tasks. Proc Natl Acad Sci USA 95: 14529–14534, 1998. doi: 10.1073/pnas.95.24.14529.

- Desimone R, Duncan J. Neural mechanisms of selective visual attention. Annu Rev Neurosci 18: 193–222, 1995. doi: 10.1146/annurev.ne.18.030195.001205.

- Dosenbach NU, Visscher KM, Palmer ED, Miezin FM, Wenger KK, Kang HC, Burgund ED, Grimes AL, Schlaggar BL, Petersen SE. A core system for the implementation of task sets. Neuron 50: 799–812, 2006. doi: 10.1016/j.neuron.2006.04.031.

- Driver J, Noesselt T. Multisensory interplay reveals crossmodal influences on ‘sensory-specific’ brain regions, neural responses, and judgments. Neuron 57: 11–23, 2008. doi: 10.1016/j.neuron.2007.12.013.

- Duncan J. An adaptive coding model of neural function in prefrontal cortex. Nat Rev Neurosci 2: 820–829, 2001. doi: 10.1038/35097575.

- Duncan J. The multiple-demand (MD) system of the primate brain: mental programs for intelligent behaviour. Trends Cogn Sci 14: 172–179, 2010. doi: 10.1016/j.tics.2010.01.004.

- Duncan J. The structure of cognition: attentional episodes in mind and brain. Neuron 80: 35–50, 2013. doi: 10.1016/j.neuron.2013.09.015.

- Duncan J, Owen AM. Common regions of the human frontal lobe recruited by diverse cognitive demands. Trends Neurosci 23: 475–483, 2000. doi: 10.1016/S0166-2236(00)01633-7.

- Duncan J, Seitz RJ, Kolodny J, Bor D, Herzog H, Ahmed A, Newell FN, Emslie H. A neural basis for general intelligence. Science 289: 457–460, 2000. doi: 10.1126/science.289.5478.457.

- Erez Y, Duncan J. Discrimination of visual categories based on behavioral relevance in widespread regions of frontoparietal cortex. J Neurosci 35: 12383–12393, 2015. doi: 10.1523/JNEUROSCI.1134-15.2015.

- Ernst MO, Banks MS. Humans integrate visual and haptic information in a statistically optimal fashion. Nature 415: 429–433, 2002. doi: 10.1038/415429a.

- Fedorenko E, Duncan J, Kanwisher N. Broad domain generality in focal regions of frontal and parietal cortex. Proc Natl Acad Sci USA 110: 16616–16621, 2013. doi: 10.1073/pnas.1315235110.

- Fedorenko E, Hsieh PJ, Nieto-Castañón A, Whitfield-Gabrieli S, Kanwisher N. New method for fMRI investigations of language: defining ROIs functionally in individual subjects. J Neurophysiol 104: 1177–1194, 2010. doi: 10.1152/jn.00032.2010.

- Fox MD, Snyder AZ, Vincent JL, Corbetta M, Van Essen DC, Raichle ME. The human brain is intrinsically organized into dynamic, anticorrelated functional networks. Proc Natl Acad Sci USA 102: 9673–9678, 2005. doi: 10.1073/pnas.0504136102.

- Fransson P, Marrelec G. The precuneus/posterior cingulate cortex plays a pivotal role in the default mode network: Evidence from a partial correlation network analysis. Neuroimage 42: 1178–1184, 2008. doi: 10.1016/j.neuroimage.2008.05.059.

- Ghazanfar AA, Schroeder CE. Is neocortex essentially multisensory? Trends Cogn Sci 10: 278–285, 2006. doi: 10.1016/j.tics.2006.04.008.

- Graziano MS, Cooke DF. Parieto-frontal interactions, personal space, and defensive behavior. Neuropsychologia 44: 2621–2635, 2006. doi: 10.1016/j.neuropsychologia.2005.09.011.

- Grinband J, Wager TD, Lindquist M, Ferrera VP, Hirsch J. Detection of time-varying signals in event-related fMRI designs. Neuroimage 43: 509–520, 2008. doi: 10.1016/j.neuroimage.2008.07.065.

- Harel A, Kravitz DJ, Baker CI. Task context impacts visual object processing differentially across the cortex. Proc Natl Acad Sci USA 111: E962–E971, 2014. doi: 10.1073/pnas.1312567111.

- Hebart MN, Görgen K, Haynes JD. The Decoding Toolbox (TDT): a versatile software package for multivariate analyses of functional imaging data. Front Neuroinform 8: 88, 2015. doi: 10.3389/fninf.2014.00088.

- Henson RN. Efficient experimental design for fMRI. In: Statistical Parametric Mapping. The Analysis of Functional Brain Images, edited by Frackowiak RS, Ashburner JT, Kiebel SJ, Nichols TE, and Penny WD. London: Academic, 2007, p. 193–210. doi: 10.1016/B978-012372560-8/50015-2.

- Hoshi E, Shima K, Tanji J. Task-dependent selectivity of movement-related neuronal activity in the primate prefrontal cortex. J Neurophysiol 80: 3392–3397, 1998.

- Jackson J, Rich AN, Williams MA, Woolgar A. Feature-selective attention in frontoparietal cortex: multivoxel codes adjust to prioritize task-relevant information. J Cogn Neurosci 29: 310–321, 2017.

- Kaas AL, van Mier H, Goebel R. The neural correlates of human working memory for haptically explored object orientations. Cereb Cortex 17: 1637–1649, 2007. doi: 10.1093/cercor/bhl074.

- Kriegeskorte N, Goebel R, Bandettini P. Information-based functional brain mapping. Proc Natl Acad Sci USA 103: 3863–3868, 2006. doi: 10.1073/pnas.0600244103.

- Li S, Ostwald D, Giese M, Kourtzi Z. Flexible coding for categorical decisions in the human brain. J Neurosci 27: 12321–12330, 2007. doi: 10.1523/JNEUROSCI.3795-07.2007.

- Li Hegner Y, Saur R, Veit R, Butts R, Leiberg S, Grodd W, Braun C. BOLD adaptation in vibrotactile stimulation: neuronal networks involved in frequency discrimination. J Neurophysiol 97: 264–271, 2007. doi: 10.1152/jn.00617.2006.

- Löfvenberg J, Johansson RS. Regional differences and interindividual variability in sensitivity to vibration in the glabrous skin of the human hand. Brain Res 301: 65–72, 1984. doi: 10.1016/0006-8993(84)90403-7.

- McGlone F, Kelly EF, Trulsson M, Francis ST, Westling G, Bowtell R. Functional neuroimaging studies of human somatosensory cortex. Behav Brain Res 135: 147–158, 2002. doi: 10.1016/S0166-4328(02)00144-4.

- Miller EK, Cohen JD. An integrative theory of prefrontal cortex function. Annu Rev Neurosci 24: 167–202, 2001. doi: 10.1146/annurev.neuro.24.1.167.

- Miquée A, Xerri C, Rainville C, Anton JL, Nazarian B, Roth M, Zennou-Azogui Y. Neuronal substrates of haptic shape encoding and matching: a functional magnetic resonance imaging study. Neuroscience 152: 29–39, 2008. doi: 10.1016/j.neuroscience.2007.12.021.