Abstract

Motor-imagery tasks are a popular input method for controlling brain-computer interfaces (BCIs), partially due to their similarities to naturally produced motor signals. The use of functional near-infrared spectroscopy (fNIRS) in BCIs is still emerging and has shown potential as a supplement or replacement for electroencephalography. However, studies often use only two or three motor-imagery tasks, limiting the number of available commands. In this work, we present the results of the first four-class motor-imagery-based online fNIRS-BCI for robot control. Thirteen participants utilized upper- and lower-limb motor-imagery tasks (left hand, right hand, left foot, and right foot) that were mapped to four high-level commands (turn left, turn right, move forward, and move backward) to control the navigation of a simulated or real robot. A significant improvement in classification accuracy was found between the virtual-robot-based BCI (control of a virtual robot) and the physical-robot BCI (control of the DARwIn-OP humanoid robot). Differences were also found in the oxygenated hemoglobin activation patterns of the four tasks between the first and second BCI. These results corroborate previous findings that motor imagery can be improved with feedback and imply that a four-class motor-imagery-based fNIRS-BCI could be feasible with sufficient subject training.

1. Introduction

The ability to direct a robot using only human thoughts could provide a powerful mechanism for human-robot interaction with a wide range of potential applications, from medical to search-and-rescue to industrial manufacturing. As robots become more integrated into our everyday lives, from robotic vacuums to self-driving cars, it will also become more important for humans to be able to reliably communicate with and control them. Current robots are difficult to control, often requiring a large degree of autonomy (which is still an area of active research) or a complex series of commands entered through button presses or a computer terminal. Using thoughts to direct a robot's actions via a brain-computer interface (BCI) could provide a more intuitive way to issue instructions to a robot. This could augment current efforts to develop semiautonomous robots capable of working in environments unsafe for humans, which was the focus of a recent DARPA robotics challenge [1]. A brain-controlled robot could also be a valuable assistive tool for restoring communication or movement in patients with a neuromuscular injury or disease [2].

The ideal, field-deployable BCI system should be noninvasive, safe, intuitive, and practical to use. Many previous studies have focused on electroencephalography (EEG) and, to a lesser extent, functional magnetic resonance imaging (fMRI). Using these traditional neuroimaging tools, various proof-of-concept BCIs have been built to control the navigation of humanoid (i.e., human-like) robots [3–9], wheeled robots [10–12], flying robots [13, 14], robotic wheelchairs [15], and assistive exoskeletons [16]. More recently, functional near-infrared spectroscopy (fNIRS) has emerged as a good candidate for next-generation BCIs, as fNIRS measures the hemodynamic response similarly to fMRI [17, 18] but with miniaturized sensors that can be used in field settings and even outdoors [19, 20]. Compared with fMRI and EEG, it also provides a balanced trade-off between temporal and spatial resolution, which presents unique opportunities for investigating new approaches, mental tasks, information content, and signal processing for the development of new BCIs [21]. Several fNIRS-based BCI systems have already been investigated for use in robot control [22–27].

Motor imagery, or the act of imagining moving the body while keeping the muscles still, has been a popular choice for use in BCI studies [3, 11, 13, 22, 23, 28–37]. It is a naturalistic task, highly related to actual movements, which could make it a good choice for a BCI input. While motor-execution tasks produce activation levels that are easier to detect, motor imagery is often preferred because issues with possible proprioceptive feedback can be avoided [38]. EEG BCIs have shown success with up to four classes, typically right hand, left hand, feet, and tongue [11, 28, 29]. Other studies have shown potential for EEG to detect differences between right and left foot or leg motor imagery [39, 40] and even between individual fingers [41]. Studies have also used fNIRS to detect motor-imagery tasks, with many focusing on a single hand versus resting state [30], left hand versus right hand [31, 32], or three motor-imagery tasks and rest [33]. Shin and Jeong used fNIRS to detect left and right leg movement tasks in a four-class BCI [42], and in prior studies we presented preliminary offline classification results using left and right foot tasks separately in a four-class motor-imagery-based fNIRS-BCI [22, 23]. fNIRS has also been used to examine differences in motor imagery due to force of hand clenching or speed of tapping [34].

Many factors can affect the quality of recorded motor-imagery data. Kinesthetic motor imagery (i.e., imagining the feeling of the movement) has shown higher activation levels in the motor cortex than visual motor imagery (i.e., visualizing the movement) [43, 44]. Additionally, individual participants have varying levels of motor-imagery skill, which also affects the quality of the BCI [45–47]. In some participants, the use of feedback during motor-imagery training can increase the brain activation levels produced during motor imagery [48, 49].

Incorporating robot control into a BCI provides visual feedback and can increase subject motivation. Improved motivation and feedback, both visual and auditory, have demonstrated promise for reducing subject training time and improving BCI accuracy [50, 51]. The realism of feedback provided by a BCI may also have an effect on subject performance during motor imagery. For example, Alimardani et al. found a difference in subject performance in a follow-up session after receiving feedback from viewing a robotic gripper versus a lifelike android arm [52].

In this study, we report the first online results of a four-class motor-imagery-based fNIRS-BCI used to control both a virtual and physical robot. The four tasks used were imagined movement of upper and lower limbs: the left hand, left foot, right foot, and right hand. To the best of our knowledge, this is the first online four-class motor-imagery-based fNIRS-BCI, as well as the first online fNIRS-BCI to use left and right foot as separate tasks. We also examine the differences in oxygenated hemoglobin (HbO) activation between the virtual and physical-robot BCIs in an offline analysis.

2. Materials and Methods

Participants attended two training sessions, which were used to collect data for training an online classifier for the BCI, followed by a third session in which they used the BCI to control the navigation of both a virtual and a physical robot. This section outlines the methods used for data collection, the design of the BCI, and the offline analysis of the collected data following the completion of the BCI experiment.

2.1. Participants

Thirteen healthy participants volunteered to take part in this experiment. Subjects were aged 18–35, right-handed, English-speaking, and with vision correctable to 20/20. No subjects reported any physical or neurological disorders or were on medication. The experiment was approved by the Drexel University Institutional Review Board, and participants were informed of the experimental procedure and provided written consent prior to participating.

2.2. Data Acquisition

Data were recorded using fNIRS as described in our previous study [53]. fNIRS is a noninvasive, relatively low-cost, portable, and potentially wireless optical brain imaging technique [19]. Near-infrared light is used to measure changes in HbO and HbR (deoxygenated hemoglobin) levels due to the rapid delivery of oxygenated blood to active cortical areas through neurovascular coupling, known as the hemodynamic response [54].

Participants sat in a desk chair facing a computer monitor. They were instructed to sit with their feet flat on the floor and their hands in their lap or on chair arm rests with palms facing upwards. Twenty-four optodes (measurement locations) over the primary and supplementary motor cortices were recorded using a Hitachi ETG-4000 optical topography system, as shown in Figure 1. Each location recorded HbO and HbR levels at a 10 Hz sampling rate.

Figure 1.

fNIRS sensor layout of light sources (red squares) and detectors (blue squares). Adjacent sources and detectors are 3 cm apart and create 24 optodes (numbered 1–24).

2.3. Experiment Protocol

Motor-imagery and motor-execution data were recorded in three one-hour-long sessions on three separate days. The first two sessions were training days, used to collect initial data to train a classifier, and the third day used this classifier in a BCI to navigate both a virtual and physical robot to the goal location in a series of rooms. The two robots are described below in Section 2.3.3 Robot Control. The training session protocol included five tasks: a “rest” task and tapping of the right hand, left hand, right foot, and left foot. This protocol expands on a preliminary study reported previously [22, 23]. Data collection for the two training days has been described previously [53].

2.3.1. Tasks

Subjects performed all five tasks during the two training days (rest, along with the (actual or imagined) tapping of the right hand, left hand, right foot, and left foot). During the third session, only the four motor-imagery tasks were used to control the BCI.

Participants were instructed to self-pace their real or imagined movements at once per second for the duration of the trial. The hand-tapping task was curling and uncurling their fingers towards their palm as if squeezing an imaginary ball, while the foot-tapping task involved raising and lowering the toes while keeping the heel on the floor. While resting, subjects were instructed to relax their mind and refrain from moving. During motor-imagery tasks, subjects were instructed to refrain from moving and use kinesthetic imagery (i.e., imagine the feelings and sensations of the movement).

Each trial consisted of 9 seconds of rest, a 2-second cue indicating the type of upcoming task, and a 15-second task period. During the two training sessions the cue text indicated a specific task (e.g., “Left Foot”), while, during the robot-control task, it read “Free Choice,” indicating the subject should choose the task corresponding to the desired action of the robot. Trials during the training days ended with a 4-second display indicating that the task period had ended. During the robot-control session, the task was followed by a reporting period so that the subject could indicate which task they had performed. The BCI then predicted which task the user had performed and sent the corresponding command to the robot, which took the corresponding action. The timings for training and robot-control days are shown in Figure 2.
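As a rough illustration of the training-day trial timing, the sketch below converts the phase durations given above into sample ranges at the 10 Hz sampling rate reported in Section 2.2. The names `TRIAL_PHASES` and `phase_sample_ranges` are hypothetical and are not taken from the study software; the robot-control trials are omitted because the length of the reporting period is not stated.

```python
SAMPLING_RATE_HZ = 10  # fNIRS sampling rate reported in Section 2.2

# Training-day trial phases and their durations in seconds, in order.
TRIAL_PHASES = [("rest", 9), ("cue", 2), ("task", 15), ("end_display", 4)]


def phase_sample_ranges(phases, fs=SAMPLING_RATE_HZ):
    """Return {phase: (start, stop)} in samples, with stop exclusive."""
    ranges = {}
    start = 0
    for name, seconds in phases:
        stop = start + seconds * fs
        ranges[name] = (start, stop)
        start = stop
    return ranges


ranges = phase_sample_ranges(TRIAL_PHASES)
print(ranges["task"])  # (110, 260)
```

Under these assumptions each training trial spans 30 seconds (300 samples), of which the 150 task samples are the ones used for classification.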

Figure 2.

Trial timing diagrams for training sessions (a) and robot-control session (b).

2.3.2. Session Organization

In total, 60 motor-execution and 150 motor-imagery trials were collected during the training days, and an additional 60 subject-selected motor-imagery trials were recorded during the robot-control portion. The two training days were split into two runs, one for motor execution and one for motor imagery, which were repeated three times as shown in Figure 3. The protocol alternated between a run of 10 motor-execution trials and a run of 25 motor-imagery trials in order to reduce subject fatigue and improve their ability to perform motor imagery [55]. Each run had an equal number of the five tasks (rest and motor execution or motor imagery of the right hand, left hand, right foot, and left foot) in a randomized order. The third day (robot control) had two runs of 30 motor-imagery tasks, chosen by the user, which were used to control the BCI. The rest and motor-execution tasks were collected for offline analysis and were not used in the online BCI.
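The trial counts above follow directly from the run structure, and the short calculation below makes the arithmetic explicit. The variable names are illustrative only. It also shows why each training day contributes 60 non-rest motor-imagery trials, since one fifth of the trials in every run are rest trials.

```python
TASKS = ["rest", "right hand", "left hand", "right foot", "left foot"]
TRAINING_DAYS = 2
REPEATS_PER_DAY = 3              # each pair of runs is repeated three times
EXECUTION_TRIALS_PER_RUN = 10
IMAGERY_TRIALS_PER_RUN = 25

execution_total = TRAINING_DAYS * REPEATS_PER_DAY * EXECUTION_TRIALS_PER_RUN
imagery_total = TRAINING_DAYS * REPEATS_PER_DAY * IMAGERY_TRIALS_PER_RUN

# Every run holds equal numbers of the five tasks, so 4/5 of the
# motor-imagery run trials are actual imagery and 1/5 are rest.
imagery_per_day = REPEATS_PER_DAY * IMAGERY_TRIALS_PER_RUN
non_rest_imagery_per_day = imagery_per_day * (len(TASKS) - 1) // len(TASKS)

print(execution_total, imagery_total)  # 60 150
print(non_rest_imagery_per_day)        # 60
```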

Figure 3.

Trial organization protocol for the two training days and single robot-control day of the experiment.

2.3.3. Robot Control

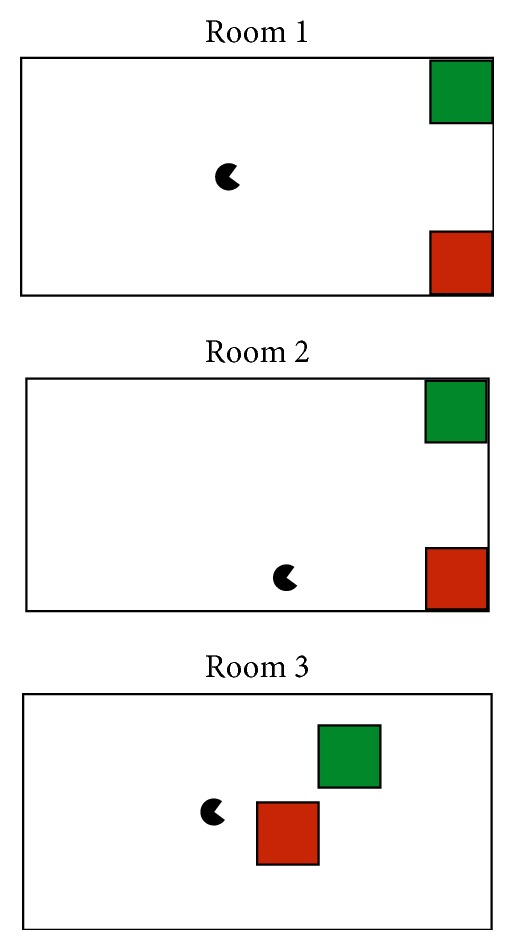

The robot-control session had two parts, beginning with control of a virtual robot using the MazeSuite program (http://www.mazesuite.com) [56, 57] and followed by control of the DARwIn-OP (Dynamic Anthropomorphic Robot with Intelligence-Open Platform) robot [58]. The objective in both scenarios was to use the BCI to navigate through a series of three room designs (shown in Figure 4), in which there was a single goal location (a green cube) and an obstacle (a red cube). A room was successfully completed if the user navigated the robot to the green cube, and it failed if the robot touched the red cube. After completion or failure of a room, the subject would advance to the next room. The sequence was designed such that the robot started closer to the obstacle in each successive room to increase the difficulty as the subject progressed. The run ended if the subject completed (or failed) all three rooms or reached the maximum of 30 trials. Each room could be completed in 5 or fewer movements, assuming perfect accuracy from the BCI.

Figure 4.

The three room layouts used during robot control.

To control the BCI, subjects selected a motor-imagery task corresponding to the desired action of the (virtual or physical) robot. The task-to-command mappings were as follows: left foot/walk forward, left hand/turn left 90°, right hand/turn right 90°, and right foot/walk backward. These four tasks were chosen to emulate a common arrow-pad setup, so that each action had a corresponding opposite action that could undo a movement. During BCI control, the original experiment display showed a reminder of the mapping between the motor-imagery tasks and the robot commands. A second monitor to the left of the experiment display showed a first-person view of the experiment room for either the virtual or physical robot. The experiment setup and example display screens are shown in Figure 5.
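The task-to-command mapping described above can be written as a small lookup table. This is a minimal sketch; the `Command` enum, the dictionary names, and the task label strings are hypothetical and do not come from the experiment code.

```python
from enum import Enum


class Command(Enum):
    FORWARD = "walk forward"
    BACKWARD = "walk backward"
    TURN_LEFT = "turn left 90 degrees"
    TURN_RIGHT = "turn right 90 degrees"


# Mapping from the predicted motor-imagery task to the robot command.
TASK_TO_COMMAND = {
    "left foot": Command.FORWARD,
    "right foot": Command.BACKWARD,
    "left hand": Command.TURN_LEFT,
    "right hand": Command.TURN_RIGHT,
}

# Each command has an opposite that undoes it, as on an arrow pad.
OPPOSITE = {
    Command.FORWARD: Command.BACKWARD,
    Command.BACKWARD: Command.FORWARD,
    Command.TURN_LEFT: Command.TURN_RIGHT,
    Command.TURN_RIGHT: Command.TURN_LEFT,
}


def command_for(predicted_task):
    """Translate the classifier's predicted task label into a robot command."""
    return TASK_TO_COMMAND[predicted_task]


print(command_for("left hand").value)  # turn left 90 degrees
```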

Figure 5.

The DARwIn-OP robot standing at the starting location of the first room (a), the first-person display of the virtual room (b), and the experiment display showing the mappings between motor-imagery tasks and robot commands (c).

The virtual robot was controlled using the built-in control functions of the MazeSuite program [56, 57]. The virtual environment and movements of the virtual robot were designed to replicate as closely as possible the physical room and movements of the DARwIn-OP, allowing the participants to acquaint themselves with the new robot-control paradigm before adding the complexities inherent in using a real robot. The virtual robot could make perfect 90° turns in place, and the forward and backward distance was adjusted to match the relative distance traveled by the DARwIn-OP robot as closely as possible. The goal and obstacle were shown as floating green and red cubes, respectively, that would trigger a success or failure state on contact with the virtual robot.

During the second run, the user controlled the DARwIn-OP in an enclosed area with a green box and red box marking the location of the goal and obstacle, respectively. Success or failure was determined by an experimenter watching the robot during the experiment. The DARwIn-OP is a small humanoid robot that stands 0.455 m tall, has 20 degrees of freedom, and walks on two legs in a similar manner to humans [58]. The robot received high-level commands from the primary experiment computer using TCP/IP over a wireless connection. Control of the DARwIn-OP was handled via a custom-built C++ class that called the robot's built-in standing and walking functions using prespecified parameters to control the movements at a high level. This class was then wrapped in a Python class for ease of communication with the experiment computer. The head position was lowered from the standard walking pose, in order to give a better view of the goal and obstacle. In order to turn as closely to 90° in place as possible, the robot used a step size of zero for approximately 3 seconds with a step angle of approximately 25° or −25°. When moving forward or backward, the DARwIn-OP used a step size of approximately 1 cm for 2 or 3 seconds, respectively. The exact values were empirically chosen for this particular robot.

2.4. Data Analysis

In addition to the evaluation of the classifier performance during the online BCI, a secondary offline analysis of the data was performed to further compare the two robot BCIs.

2.4.1. Online Processing

Motor-imagery data from the two training days were used to train a subject-specific classifier to control the BCI during the third day. The rest and motor-execution trials were excluded from the training set, as the BCI only used the four motor-imagery tasks. All data recordings from the training days for HbO, HbR, and HbT (total hemoglobin) were filtered using a 20th-order low-pass FIR filter with a 0.1 Hz cutoff. Artifacts and optodes with poor signal quality were identified and removed by the researcher. One subject was excluded from the online results due to insufficient data quality.
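To make the filtering step concrete, the following sketch designs a 20th-order (21-tap) low-pass FIR filter with a 0.1 Hz cutoff at the 10 Hz sampling rate. The text does not state the design method, so the windowed-sinc design, the Hamming window, and the causal application are all assumptions, and the function names are hypothetical.

```python
import numpy as np

FS_HZ = 10.0      # sampling rate from Section 2.2
CUTOFF_HZ = 0.1   # cutoff reported in the text
ORDER = 20        # a 20th-order FIR filter has ORDER + 1 = 21 taps


def design_lowpass(order=ORDER, cutoff_hz=CUTOFF_HZ, fs_hz=FS_HZ):
    """Windowed-sinc low-pass design (the Hamming window is an assumption)."""
    n = np.arange(order + 1) - order / 2.0
    normalized = 2.0 * cutoff_hz / fs_hz         # cutoff relative to Nyquist
    taps = normalized * np.sinc(normalized * n)  # ideal low-pass response
    taps *= np.hamming(order + 1)                # taper to reduce ripple
    return taps / taps.sum()                     # unity gain at 0 Hz


def apply_fir(signal, taps):
    """Causal FIR filtering; the first len(taps) - 1 outputs are a transient."""
    return np.convolve(signal, taps, mode="full")[: len(signal)]


taps = design_lowpass()
t = np.arange(0, 30, 1 / FS_HZ)
slow = 0.05 * t                                   # slow drift-like component
noisy = slow + 0.2 * np.sin(2 * np.pi * 3.0 * t)  # plus 3 Hz noise
filtered = apply_fir(noisy, taps)
```

With only 21 taps at 10 Hz, the transition band of such a filter is wide, so in practice it acts mainly as a smoothing filter that suppresses high-frequency noise.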

In addition to using only the low-pass filter, a variety of preprocessing methods were evaluated: correlation-based signal improvement (CBSI), common average referencing (CAR), task-related component analysis (TRCA), or both CAR and TRCA. CBSI uses the typically strong negative correlation between HbO and HbR to reduce head motion noise [59]. CAR is a simple method, commonly used in EEG, in which the average value of all optodes at each time point is used as a common reference (i.e., that value is subtracted from each optode at that time point). This enhances changes in small sets of optodes while removing global spatial trends from the data. TRCA creates signal components from a weighted sum of the recorded data signals [60]. It attempts to find components that maximize the covariance between instances of the same task while minimizing the covariance between instances of different tasks.
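Of the methods above, CAR is simple enough to show in a few lines. The sketch below subtracts the across-optode mean at every time point, assuming the data for one chromophore are stored as an array of shape (samples, optodes). The function name and the synthetic data are illustrative, and optodes removed for poor quality are not handled here.

```python
import numpy as np


def common_average_reference(data):
    """Subtract the across-optode mean at each time point.

    data: array of shape (n_samples, n_optodes) for one chromophore.
    """
    data = np.asarray(data, dtype=float)
    return data - data.mean(axis=1, keepdims=True)


rng = np.random.default_rng(0)
n_samples, n_optodes = 150, 24
global_trend = np.linspace(0.0, 1.0, n_samples)[:, None]  # shared drift
local = 0.01 * rng.standard_normal((n_samples, n_optodes))
local[:, 5] += 0.5                                         # one "active" optode
referenced = common_average_reference(global_trend + local)
```

In this synthetic example the shared drift is removed from every optode, while the localized change on the single active optode is largely preserved.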

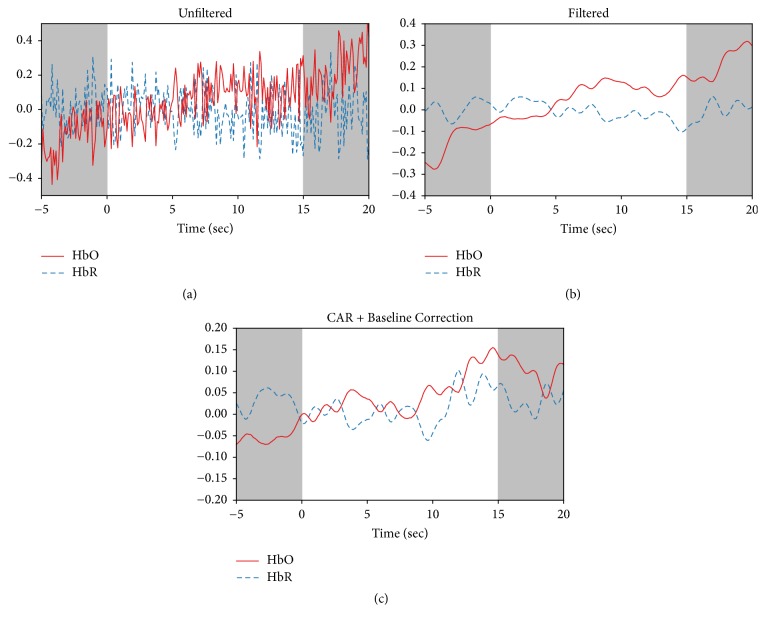

Individual task periods were extracted and baseline corrected, using the first 2 seconds of each task as the baseline level. Figure 6 shows an example of how preprocessing methods affect the recorded HbO and HbR for a single optode during one task period. Comparing Figures 6(a) and 6(b) shows how filtering removes a significant quantity of high-frequency noise from the signal. Figure 6(c) shows the change in the signal after applying CAR and baseline correction.
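A minimal sketch of the task extraction and baseline correction is given below. It assumes a recording stored as a (samples, optodes) array and takes the baseline level to be the mean of the first 2 seconds of the task, which is one plausible reading of the description above. The function names are hypothetical.

```python
import numpy as np

FS_HZ = 10
TASK_SECONDS = 15
BASELINE_SECONDS = 2


def extract_task_epoch(recording, task_onset_sample):
    """Cut one 15 s task period out of a (n_samples, n_optodes) recording."""
    stop = task_onset_sample + TASK_SECONDS * FS_HZ
    return recording[task_onset_sample:stop]


def baseline_correct(epoch):
    """Subtract each optode's mean over the first 2 s of the task (assumed)."""
    baseline = epoch[: BASELINE_SECONDS * FS_HZ].mean(axis=0, keepdims=True)
    return epoch - baseline


# Synthetic 30 s recording with a linear trend on 24 optodes.
recording = 0.01 * np.arange(300, dtype=float)[:, None] * np.ones((1, 24))
epoch = extract_task_epoch(recording, task_onset_sample=110)
corrected = baseline_correct(epoch)
```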

Figure 6.

Example of data analysis for a representative trial. Data from a single optode showing the original HbO and HbR signals (a), the data after filtering (b), and after applying CAR and baseline correction (c). Resting periods before and after the task are shown by gray boxes.

Four different types of features were calculated individually on each optode for HbO, HbR, and HbT. The features used were as follows: mean (average value of the last 10 seconds of the task), median (median of the last 10 seconds of the task), max (maximum value of the last 10 seconds of the task), and slope (slope of the line of best fit of the first 7 seconds of the task). Datasets were created using features calculated on HbO, HbT, or both HbO and HbR. Each feature set was reduced to between 4 and 8 features using recursive feature elimination. If both HbO and HbR were used, the specified number of features was selected for each chromophore. This resulted in 300 possible datasets (5 preprocessing methods, 3 chromophore combinations, 4 types of features, and 5 levels of feature reduction). Features in each dataset were normalized to have zero mean and unit variance.
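The four feature types and the count of candidate datasets can be sketched as follows. The code assumes a baseline-corrected task epoch of shape (150, optodes) at 10 Hz. Scaling the test data with the training statistics is a design choice made for this example rather than something stated in the text, and all names are hypothetical.

```python
from itertools import product

import numpy as np

FS_HZ = 10


def extract_features(epoch):
    """Compute the four feature types for a (150, n_optodes) task epoch."""
    last_10s = epoch[-10 * FS_HZ:]
    first_7s = epoch[: 7 * FS_HZ]
    t = np.arange(first_7s.shape[0]) / FS_HZ
    slope = np.polyfit(t, first_7s, deg=1)[0]  # per-optode slope, units per second
    return {
        "mean": last_10s.mean(axis=0),
        "median": np.median(last_10s, axis=0),
        "max": last_10s.max(axis=0),
        "slope": slope,
    }


def standardize(train, test):
    """Scale to zero mean and unit variance using the training statistics."""
    mu = train.mean(axis=0)
    sigma = train.std(axis=0)
    sigma[sigma == 0] = 1.0  # avoid division by zero for constant features
    return (train - mu) / sigma, (test - mu) / sigma


# A linear ramp of 0.02 units per second on three optodes.
t_full = np.arange(150) / FS_HZ
epoch = 0.02 * t_full[:, None] * np.ones((1, 3))
features = extract_features(epoch)

# Enumerate the candidate datasets described in the text.
PREPROCESSING = ["low-pass only", "CBSI", "CAR", "TRCA", "CAR+TRCA"]
CHROMOPHORES = ["HbO", "HbT", "HbO+HbR"]
FEATURE_TYPES = ["mean", "median", "max", "slope"]
N_SELECTED = range(4, 9)  # 4 to 8 features kept after elimination
datasets = list(product(PREPROCESSING, CHROMOPHORES, FEATURE_TYPES, N_SELECTED))
print(len(datasets))  # 300
```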

Prior to the BCI session, a linear discriminant analysis (LDA) classifier was trained on the data from the two training days, following the flow chart shown in Figure 7 [61]. LDA is one of the simplest classification methods commonly used in BCIs [38], requiring no parameter tuning, which reduces the number of possible choices when selecting a classifier. LDA was implemented using the Scikit-learn toolkit [62].

Figure 7.

Flow chart outlining the creation of the online classifier.

To select an online classifier, an LDA classifier was trained on one training day (60 motor-imagery trials) and tested on the other for each of the 300 feature sets. This was repeated with the two days reversed, and the feature set with the highest average accuracy was selected. The classifier was then retrained on both training days (120 motor-imagery trials) using the selected feature set and was used as the online classifier for both robot-control BCIs.
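The selection procedure above amounts to a two-fold, cross-day validation over the candidate feature sets. The sketch below uses the `LinearDiscriminantAnalysis` class from Scikit-learn with default settings and runs on synthetic data. The helper names, the dictionary layout of `feature_sets`, and the toy features are assumptions made for illustration, not the study's code.

```python
import numpy as np
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis


def cross_day_accuracy(X_day1, y_day1, X_day2, y_day2):
    """Average accuracy of train-on-one-day, test-on-the-other, both ways."""
    scores = []
    for X_tr, y_tr, X_te, y_te in (
        (X_day1, y_day1, X_day2, y_day2),
        (X_day2, y_day2, X_day1, y_day1),
    ):
        clf = LinearDiscriminantAnalysis()
        clf.fit(X_tr, y_tr)
        scores.append(clf.score(X_te, y_te))
    return float(np.mean(scores))


def select_and_train(feature_sets, y_day1, y_day2):
    """feature_sets maps a name to (X_day1, X_day2).

    Returns the best-scoring name and a classifier retrained on both days.
    """
    best_name, best_score = None, -1.0
    for name, (X1, X2) in feature_sets.items():
        score = cross_day_accuracy(X1, y_day1, X2, y_day2)
        if score > best_score:
            best_name, best_score = name, score
    X1, X2 = feature_sets[best_name]
    final = LinearDiscriminantAnalysis()
    final.fit(np.vstack([X1, X2]), np.concatenate([y_day1, y_day2]))
    return best_name, final


# Synthetic stand-in: 60 motor-imagery trials per day, 15 per task.
rng = np.random.default_rng(0)
y1 = np.repeat(np.arange(4), 15)
y2 = np.repeat(np.arange(4), 15)
informative = tuple(y[:, None] * 5.0 + rng.standard_normal((60, 4)) for y in (y1, y2))
noise = tuple(rng.standard_normal((60, 4)) for _ in range(2))
best_name, online_clf = select_and_train(
    {"informative": informative, "noise": noise}, y1, y2
)
print(best_name)
```

In the study this loop would run over all 300 feature sets rather than the two toy sets used here.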

Results are reported as accuracy (proportion of correct classifications), precision (positive predictive value), recall (sensitivity or true positive rate), F-score (the harmonic mean of precision and recall), and the area under the ROC curve (AUC). The F-score is calculated as F-Score = 2 × (precision × recall)/(precision + recall).
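The sketch below computes accuracy, precision, recall, and F-score from a four-class confusion matrix. The text does not say how the per-class values are combined, so the unweighted (macro) average across the four tasks is an assumption, as are the function names and the toy labels. AUC is omitted because it requires classifier scores rather than predicted labels.

```python
def confusion_matrix(y_true, y_pred, labels):
    """Rows are the true task and columns are the predicted task."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for true, pred in zip(y_true, y_pred):
        matrix[index[true]][index[pred]] += 1
    return matrix


def macro_metrics(matrix):
    """Accuracy plus macro-averaged precision, recall, and F-score."""
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(n))
    precisions, recalls = [], []
    for i in range(n):
        predicted_i = sum(matrix[r][i] for r in range(n))
        actual_i = sum(matrix[i])
        precisions.append(matrix[i][i] / predicted_i if predicted_i else 0.0)
        recalls.append(matrix[i][i] / actual_i if actual_i else 0.0)
    precision = sum(precisions) / n
    recall = sum(recalls) / n
    denom = precision + recall
    f_score = 2 * precision * recall / denom if denom else 0.0
    return {
        "accuracy": correct / total,
        "precision": precision,
        "recall": recall,
        "f_score": f_score,
    }


labels = ["LH", "RH", "LF", "RF"]
y_true = ["LH", "LH", "RH", "RH", "LF", "LF", "RF", "RF"]
y_pred = ["LH", "RH", "RH", "RH", "LF", "RF", "RF", "RF"]
metrics = macro_metrics(confusion_matrix(y_true, y_pred, labels))
print(metrics["accuracy"])  # 0.75
```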

2.4.2. Offline Processing

For the offline analysis, an automatic data-quality analysis was used on the BCI session data to determine which optodes and trials should be removed due to poor quality. This was done separately for the virtual and DARwIn-OP runs using a modified version of the method described by Takizawa et al. for fNIRS data [63]. Any optodes with a very high (near maximum) digital or analog gain were removed, as these were likely contaminated by noise. Areas with a standard deviation of 0 in a 2-second window of the raw light-intensity data were considered to have been saturated, and artifacts were determined to be areas with a change of 0.15 [mM] during a 2-second period on HbO and HbR data after application of the low-pass filter. Optodes that had at least 20 (of the original 30) artifact- and saturation-free trials were kept, with the remaining optodes being removed. Then, any trials with artifacts or saturated areas in any remaining good optodes were removed. An additional 5 subjects were excluded from the offline analysis due to insufficient data quality.
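The saturation, artifact, and retention rules above can be sketched as follows. This is an interpretation rather than the study's implementation: the "change of 0.15" is read here as the signal range within any 2-second window reaching 0.15, zero standard deviation is tested as all samples in the window being identical, and the gain check is left out because no numerical threshold is given. All names are hypothetical.

```python
import numpy as np

FS_HZ = 10
WINDOW = 2 * FS_HZ          # 2-second window in samples
ARTIFACT_THRESHOLD = 0.15   # change threshold from the text
MIN_CLEAN_TRIALS = 20       # of the original 30 trials per run


def has_saturation(raw_intensity):
    """True if any 2 s window is constant (zero standard deviation)."""
    for start in range(len(raw_intensity) - WINDOW + 1):
        window = raw_intensity[start:start + WINDOW]
        if window.max() == window.min():
            return True
    return False


def has_artifact(filtered_hb):
    """True if the range within any 2 s window reaches the threshold."""
    for start in range(len(filtered_hb) - WINDOW + 1):
        window = filtered_hb[start:start + WINDOW]
        if window.max() - window.min() >= ARTIFACT_THRESHOLD:
            return True
    return False


def keep_optodes(clean):
    """clean: boolean (n_trials, n_optodes). Keep optodes with enough clean trials."""
    return clean.sum(axis=0) >= MIN_CLEAN_TRIALS


def keep_trials(clean, optode_mask):
    """Keep trials that are clean on every retained optode."""
    return clean[:, optode_mask].all(axis=1)


rng = np.random.default_rng(0)
raw_ok = 1000 + rng.standard_normal(150)
raw_flat = raw_ok.copy()
raw_flat[50:80] = 4095.0             # clipped segment
smooth = 0.001 * np.arange(150)
jump = smooth.copy()
jump[70:] += 0.3                     # abrupt step, e.g. from head motion

clean = np.ones((30, 3), dtype=bool)
clean[:12, 1] = False                # optode 1 has only 18 clean trials
clean[0, 2] = False                  # optode 2 has 29 clean trials
optode_mask = keep_optodes(clean)
trial_mask = keep_trials(clean, optode_mask)
```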

CAR was used for all offline analysis, followed by task data extraction and baseline correction as in the online analysis. Offline analysis examined the average HbO activation levels during the first and last second of each trial. Statistical analysis was done using linear mixed models, with multiple tests being corrected using false discovery rate (FDR).
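The text does not name the FDR procedure that was used. A common choice is the Benjamini-Hochberg step-up procedure, and the sketch below implements it on that assumption; the function name and example p values are illustrative only.

```python
import numpy as np


def benjamini_hochberg(p_values):
    """Return Benjamini-Hochberg adjusted p values in the original order."""
    p = np.asarray(p_values, dtype=float)
    m = p.size
    order = np.argsort(p)
    scaled = p[order] * m / np.arange(1, m + 1)
    # Enforce monotonicity, working down from the largest p value.
    adjusted_sorted = np.minimum.accumulate(scaled[::-1])[::-1]
    adjusted = np.empty(m)
    adjusted[order] = np.minimum(adjusted_sorted, 1.0)
    return adjusted


adjusted = benjamini_hochberg([0.01, 0.04, 0.03, 0.005])
significant = adjusted < 0.05
```

Tests whose adjusted p value falls below 0.05 would then be reported as significant after correction.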

3. Results

Offline analysis found that optode (24 levels), the interaction of optode and task (4 levels: right hand, left hand, right foot, and left foot), and the interaction of optode and robot type (2 levels: virtual and DARwIn-OP) had a significant effect on the average HbO activation during the last second of each trial. A post hoc analysis run individually for each optode found no significant effect for task, robot type, or task∗robot type interaction. F-values and p values for the main effects are shown in Table 1, with the post hoc analysis available in Table S1 in Supplementary Material available online at https://doi.org/10.1155/2017/1463512.

Table 1.

Effects and interactions of task, optode, and robot type for the BCI control session.

| Effect | F-value | p value |
|---|---|---|
| Task | 0.011 | 0.998 |
| Optode | 10.982 | 0.000 |
| Robot type | 0.043 | 0.835 |
| Optode∗task | 1.393 | 0.018 |
| Task∗robot type | 0.008 | 0.999 |
| Optode∗robot type | 2.155 | 0.001 |
| Optode∗task∗robot type | 1.147 | 0.192 |

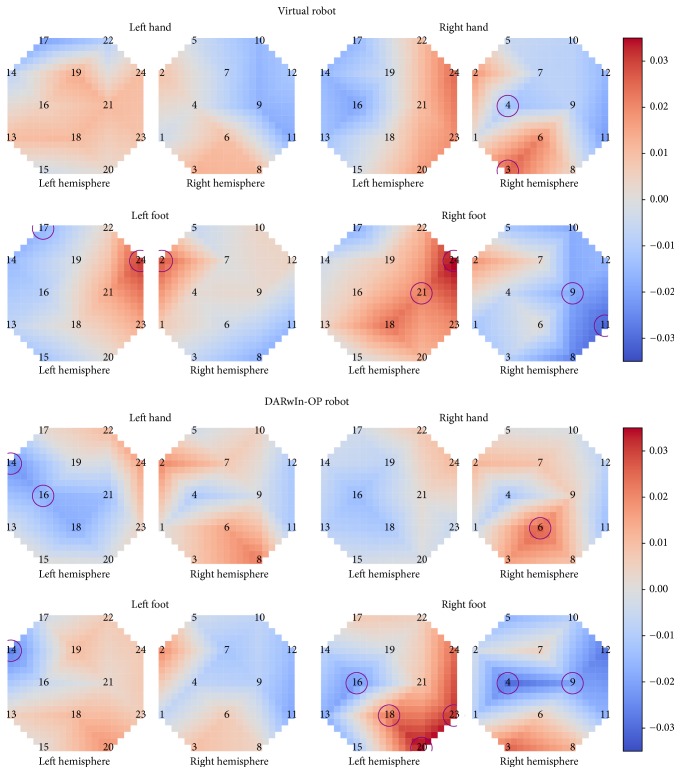

A second post hoc analysis, run individually for each optode under each task condition separately, showed that robot type had a significant effect on at least one optode under each task condition (p < 0.05, FDR corrected). The effect was found for two optodes (14 and 16) for the left hand task, one optode (14) for left foot, 6 optodes (4, 9, 16, 18, 20, and 23) for right foot, and one optode (6) for right hand. The full table of p values is available in Table S2 in Supplementary Material.

A comparison of topographic HbO activation levels demonstrated differences between individual tasks as well as between the two BCIs. Left hand showed a much more contralateral activation pattern with the DARwIn-OP robot, with two optodes on the ipsilateral side showing a significant decrease in HbO levels between the first and last second of the task. During control of the virtual robot, by contrast, it had a more ipsilateral activation pattern and no optodes with statistically significant changes in activation over the course of the task. Right hand, however, became strongly ipsilateral during the DARwIn-OP BCI, with one ipsilateral optode showing significant activation.

Right foot activation became more contralateral, with stronger activation being closer to Cz on the contralateral side and a significant decrease in activation on the ipsilateral side. Left foot changed from a centralized bilateral activation near Cz when controlling the virtual robot to a more diffuse and ipsilateral activation pattern during DARwIn-OP control. It did, however, show an optode with significant decrease in HbO activation on the ipsilateral side during DARwIn-OP control.

Topographic plots of the average HbO activation during the last second of each task across all subjects are shown in Figure 8. Optodes showing a significant difference in average HbO level between the first and last second of the task are circled (p < 0.05, FDR corrected).

Figure 8.

Average HbO activation for each task during virtual and DARwIn-OP robot BCIs. Optodes with significant differences in HbO activation levels between the first and last second of the task are circled (p < 0.05, FDR corrected).

While controlling the online four-class BCI, participants achieved an average accuracy of 27.12% for the entire session. Five participants (S1, S5, S7, S8, and S11) achieved an accuracy of 30% or higher, with the best (S8) reaching 36.67%. The online accuracy, precision, recall, F-Score, and AUC for each subject are detailed in Table 2.

Table 2.

Online BCI results.

| Subject | Accuracy (%) | Precision | Recall | F-Score | AUC |
|---|---|---|---|---|---|
| S1 | 30.00 | 0.31 | 0.29 | 0.30 | 0.50 |
| S2 | 27.12 | 0.32 | 0.29 | 0.30 | 0.50 |
| S3 | 25.00 | 0.19 | 0.25 | 0.22 | 0.47 |
| S4 | 21.67 | 0.30 | 0.26 | 0.28 | 0.50 |
| S5 | 30.00 | 0.28 | 0.28 | 0.28 | 0.54 |
| S6 | 26.67 | 0.37 | 0.28 | 0.32 | 0.50 |
| S7 | 35.00 | 0.36 | 0.37 | 0.37 | 0.59 |
| S8 | 36.67 | 0.34 | 0.32 | 0.33 | 0.53 |
| S9 | 18.33 | 0.22 | 0.21 | 0.21 | 0.54 |
| S10 | 20.00 | 0.20 | 0.21 | 0.20 | 0.45 |
| S11 | 31.67 | 0.22 | 0.25 | 0.24 | 0.49 |
| S12 | 23.33 | 0.23 | 0.23 | 0.23 | 0.52 |
| Avg. | 27.12 | 0.28 | 0.27 | 0.27 | 0.51 |

There was a significant increase in classification accuracy during DARwIn-OP control as compared to virtual robot control (one-sided paired t-test, t(11) = 2.077, p = 0.031), with the average accuracy increasing by 5.21 ± 2.51% (mean ± standard error). All but one subject achieved the same or better performance in the second run while controlling the DARwIn-OP compared to during the first run with the virtual robot, and two subjects achieved 40% accuracy. The online accuracy, precision, recall, F-Score, and AUC for each subject for each BCI individually are detailed in Table 3. One subject (S5) did not use the left hand task during the virtual robot run, and therefore no AUC value is listed.

Table 3.

Online BCI results for virtual and DARwIn-OP BCIs individually.

| Subject | Virtual: Accuracy (%) | Virtual: Precision | Virtual: Recall | Virtual: F-Score | Virtual: AUC | DARwIn-OP: Accuracy (%) | DARwIn-OP: Precision | DARwIn-OP: Recall | DARwIn-OP: F-Score | DARwIn-OP: AUC |
|---|---|---|---|---|---|---|---|---|---|---|
| S1 | 23.33 | 0.27 | 0.28 | 0.27 | 0.51 | 36.67 | 0.36 | 0.38 | 0.37 | 0.53 |
| S2 | 24.14 | 0.24 | 0.23 | 0.23 | 0.40 | 30.00 | 0.44 | 0.34 | 0.38 | 0.63 |
| S3 | 23.33 | 0.16 | 0.25 | 0.19 | 0.55 | 26.67 | 0.20 | 0.23 | 0.21 | 0.43 |
| S4 | 26.67 | 0.36 | 0.44 | 0.40 | 0.59 | 16.67 | 0.17 | 0.18 | 0.18 | 0.47 |
| S5 | 30.00 | 0.29 | 0.23 | 0.25 | N/A | 30.00 | 0.35 | 0.31 | 0.33 | 0.57 |
| S6 | 13.33 | 0.21 | 0.14 | 0.17 | 0.42 | 40.00 | 0.55 | 0.52 | 0.53 | 0.65 |
| S7 | 33.33 | 0.35 | 0.34 | 0.35 | 0.59 | 36.67 | 0.46 | 0.39 | 0.42 | 0.57 |
| S8 | 33.33 | 0.29 | 0.30 | 0.29 | 0.53 | 40.00 | 0.40 | 0.35 | 0.38 | 0.54 |
| S9 | 16.67 | 0.16 | 0.22 | 0.18 | 0.52 | 20.00 | 0.25 | 0.20 | 0.22 | 0.55 |
| S10 | 20.00 | 0.17 | 0.22 | 0.20 | 0.42 | 20.00 | 0.20 | 0.17 | 0.18 | 0.48 |
| S11 | 30.00 | 0.23 | 0.25 | 0.24 | 0.44 | 33.33 | 0.19 | 0.27 | 0.22 | 0.54 |
| S12 | 20.00 | 0.21 | 0.19 | 0.20 | 0.50 | 26.67 | 0.26 | 0.30 | 0.28 | 0.58 |
| Avg. | 24.51 | 0.24 | 0.26 | 0.25 | 0.50 | 29.72 | 0.32 | 0.30 | 0.31 | 0.54 |
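The paired comparison between the two runs can be recomputed from the per-subject accuracies in Table 3. The sketch below uses only the standard library and derives the mean difference, its standard error, and the paired t statistic. It does not compute the one-sided p value, which would need the cumulative distribution of the t distribution with 11 degrees of freedom. The variable names are illustrative.

```python
import math

# Per-subject accuracies (%) for S1 to S12, copied from Table 3.
virtual = [23.33, 24.14, 23.33, 26.67, 30.00, 13.33,
           33.33, 33.33, 16.67, 20.00, 30.00, 20.00]
darwin = [36.67, 30.00, 26.67, 16.67, 30.00, 40.00,
          36.67, 40.00, 20.00, 20.00, 33.33, 26.67]

diffs = [d - v for v, d in zip(virtual, darwin)]
n = len(diffs)
mean_diff = sum(diffs) / n
variance = sum((x - mean_diff) ** 2 for x in diffs) / (n - 1)  # sample variance
std_error = math.sqrt(variance / n)
t_stat = mean_diff / std_error

print(f"mean difference = {mean_diff:.2f}, SE = {std_error:.2f}, "
      f"t({n - 1}) = {t_stat:.3f}")
```

Because the table values are rounded to two decimals, the output should agree with the reported 5.21 ± 2.51% and t(11) = 2.077 up to small rounding differences.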

This improvement in performance appears to be reflected in the number of goals reached by the participants. While controlling the virtual robot, subject S11 was the only participant to run into an obstacle, and they were also the only participant to reach a goal. During control of the DARwIn-OP robot, two subjects (S2 and S5) reached two of the goals, and two others (S1 and S11) reached a single goal. Two subjects (S1 and S7) collided with an obstacle while navigating the DARwIn-OP.

Subjects S1 and S6, who showed the largest improvement between the virtual and DARwIn-OP BCIs, have confusion matrices that indicate differing methods used to increase accuracy. The confusion matrix of online classification results for subject S1 shows a strong diagonal pattern when controlling the DARwIn-OP, as expected for a well-performing classifier. Interestingly, left foot and right foot are never misclassified as the opposite foot, as might be expected based on their close proximity in homuncular organization, even though such misclassifications were present when controlling the virtual robot. Left hand was the most frequently misclassified task, commonly confused with left foot and right hand. Left foot tasks were also misclassified as left hand tasks but were correctly classified much more often. Subject S6, on the other hand, achieved higher accuracy when controlling the DARwIn-OP by primarily classifying the two hand tasks correctly. This subject's classifier had a strong tendency to predict right hand tasks during both BCIs, although actual right hand tasks were often misclassified during virtual robot control. The two foot tasks in both scenarios were frequently misclassified, typically as right hand. The confusion matrices are shown in Figure 9.

Figure 9.

Confusion matrices for the two subjects showing the most improvement between the virtual and DARwIn-OP online BCI results. The confusion matrices indicate different strategies for improving accuracy during DARwIn-OP control: S1 shows a mostly diagonal pattern while S6 shows a focus on correct classification of the two hand tasks.

4. Discussion

In this work, we present the results of a four-class motor-imagery-based BCI used to control a virtual and physical robot. There were significant differences in performance between controlling the virtual robot and the physical DARwIn-OP robot with the BCI. Subjects had significantly higher accuracy when controlling the DARwIn-OP than when controlling the virtual robot (29.72% versus 24.51% accuracy, respectively). An offline analysis showed that the interaction between optode and robot type had a significant effect on HbO levels, indicating that this increase in accuracy may be at least partially due to changes in HbO activation patterns during the tasks. Topographic plots of HbO activation also show changes in activation pattern between the virtual and DARwIn-OP BCIs, with left hand and right foot tasks moving to a more contralateral activation pattern while right hand and left foot became more ipsilateral in the second BCI.

These changes could be due to the participants adapting their mental strategy based on the BCI's classifier while controlling the virtual robot, thereby modifying their motor-imagery activation patterns. Confusion matrices of the online BCI classifiers show different patterns of correct and incorrect classification between subjects and between control of the virtual and physical robot. Such changes could reflect differences in the activation patterns generated during motor imagery, potentially showing differences in mental strategy developed by the participants while using the BCIs. This is in line with previous findings that feedback, especially from a BCI, can improve motor-imagery activation [49, 52, 64, 65]. Participants could also have improved as they became more familiar with the BCI experiment protocol, increasing their confidence in using the BCI, which has also been shown to have an effect on motor-imagery ability [45].

It is also possible that the differences between the virtual and DARwIn-OP robots themselves contributed to differences in subject performance. The more realistic visuals when using the DARwIn-OP could have had an effect, similar to the results found by Alimardani et al. [52]. There has been limited study on this topic, and further experiments would be needed in order to determine if this was a factor in subject performance.

There was a large difference in accuracy between the highest-accuracy and lowest-accuracy subjects (40% versus 16%), in line with previous findings that people differ in motor-imagery ability [45–47]. Future studies could be improved by screening participants for motor-imagery ability, as suggested by Marchesotti et al. [46], and potentially using feedback to improve the performance of participants identified as having low motor-imagery ability [48]. Because Bauer et al. [49] found that the use of a robot BCI could improve motor-imagery performance, longer or additional BCI sessions could also be incorporated for this purpose.

In this work, we adapted the preprocessing pipeline for each subject based on classifier performance on the two training days. While this allows one more element of customization for each subject-specific classifier, it also increases the likelihood of overfitting on the training data, which can result in poor performance on the online BCI. Future work could compare the different preprocessing methods and select a single method that performs best across subjects. Additionally, the ability to distinguish between four motor-imagery tasks with simple descriptive features and classifiers may be limited. Future work could employ more intelligent feature reduction methods (e.g., Sequential Floating Forward Selection) or explore more powerful feature design methods using deep neural networks or autoencoders. Support vector machines with nonlinear kernels may be able to achieve higher classification accuracy than LDA classifiers. The more powerful classification abilities of neural networks may also prove beneficial for improving BCI performance, as has been explored recently with EEG-based BCIs [66–69].
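
As a minimal sketch of the kind of comparison suggested above, the following example evaluates an LDA classifier and a support vector machine with a nonlinear (RBF) kernel using stratified cross-validation in scikit-learn [62]. The data are synthetic four-class features generated with `make_classification` and merely stand in for per-trial fNIRS features; the feature count, sample count, and hyperparameters are assumptions chosen for illustration, not values from this study.

```python
from sklearn.datasets import make_classification
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic, balanced four-class data (hypothetical stand-in for fNIRS features).
X, y = make_classification(
    n_samples=200,
    n_features=20,
    n_informative=6,
    n_redundant=2,
    n_classes=4,
    n_clusters_per_class=1,
    random_state=0,
)

# Scaling lives inside each pipeline so it is re-fit on the training folds only.
models = {
    "LDA": make_pipeline(StandardScaler(), LinearDiscriminantAnalysis()),
    "SVM (RBF)": make_pipeline(
        StandardScaler(), SVC(kernel="rbf", C=1.0, gamma="scale")
    ),
}

cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
scores = {
    name: cross_val_score(model, X, y, cv=cv, scoring="accuracy")
    for name, model in models.items()
}

for name, fold_scores in scores.items():
    # Chance level is 0.25 for four balanced classes.
    print(f"{name}: mean accuracy {fold_scores.mean():.3f} over {len(fold_scores)} folds")
```

Keeping every preprocessing step inside the cross-validated pipeline is one way to limit the overfitting risk noted above, since no step is tuned on the data used to score the classifier. Whether the nonlinear kernel helps on real fNIRS features would need to be tested on held-out sessions.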

5. Conclusions

This study reports the first online results of a motor-imagery-based fNIRS-BCI to control robot navigation using four motor-imagery tasks. Subjects used the BCI to control first a virtual avatar and then a DARwIn-OP humanoid robot to navigate to goal locations within a series of three rooms. Classification accuracy was significantly greater during the DARwIn-OP BCI, and an offline analysis found a significant interaction between optode and both task and robot type on HbO activation levels. These findings corroborate previous studies showing that feedback, including feedback from controlling a robot BCI, can improve motor-imagery performance. It is also possible that the use of a physical, as opposed to virtual, robot had an effect on the results, but further study would be needed to assess this. Furthermore, the activation patterns for the left hand and right foot tasks changed to show a more strongly contralateral pattern during the second BCI, becoming more in line with the activation patterns expected from the cortical homunculus layout of the motor cortex.

These findings indicate that future studies could benefit from additional focus on feedback during training and, in particular, from additional training periods spent controlling the actual BCI. There was also a large discrepancy between the accuracy of the highest-accuracy and lowest-accuracy subjects, indicating that future studies could be improved by screening potential subjects for BCI ability and potentially providing low-performing subjects with extra feedback training.

Supplementary Material

Results tables for post-hoc analyses.

Acknowledgments

This work was supported in part by the National Science Foundation Graduate Research Fellowship under Grant no. DGE-1002809. Work reported here was run on hardware supported by Drexel's University Research Computing Facility.

Conflicts of Interest

fNIR Devices, LLC, manufactures optical brain imaging instruments and licensed IP and know-how from Drexel University. Dr. Ayaz was involved in the technology development and thus offered a minor share in the new startup firm fNIR Devices, LLC. The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as potential conflicts of interest.

References

1. Guizzo E., Ackerman E. The hard lessons of DARPA's robotics challenge. IEEE Spectrum. 2015;52(8):11–13. doi: 10.1109/MSPEC.2015.7164385.

2. Wolpaw J. R., Birbaumer N., McFarland D. J., Pfurtscheller G., Vaughan T. M. Brain-computer interfaces for communication and control. Clinical Neurophysiology. 2002;113(6):767–791. doi: 10.1016/S1388-2457(02)00057-3.

3. Chae Y., Jeong J., Jo S. Toward brain-actuated humanoid robots: asynchronous direct control using an EEG-Based BCI. IEEE Transactions on Robotics. 2012;28(5):1131–1144. doi: 10.1109/tro.2012.2201310.

4. Li W., Li M., Zhao J. Control of humanoid robot via motion-onset visual evoked potentials. Frontiers in Systems Neuroscience. 2015;8. doi: 10.3389/fnsys.2014.00247.

5. Choi B. J., Jo S. H. A low-cost EEG system-based hybrid brain-computer interface for humanoid robot navigation and recognition. PLoS ONE. 2013;8(9, article e74583). doi: 10.1371/journal.pone.0074583.

6. Guneysu A., Akin L. H. An SSVEP based BCI to control a humanoid robot by using portable EEG device. Proceedings of the 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC '13); July 2013; Osaka, Japan. pp. 6905–6908.

7. Cohen O., Druon S., Lengagne S., et al. fMRI-Based robotic embodiment: controlling a humanoid robot by thought using real-time fMRI. Presence: Teleoperators and Virtual Environments. 2014;23(3):229–241. doi: 10.1162/pres_a_00191.

8. Bryan M., Green J., Chung M., et al. An adaptive brain-computer interface for humanoid robot control. Proceedings of the 2011 11th IEEE-RAS International Conference on Humanoid Robots, HUMANOIDS 2011; October 2011; Slovenia. pp. 199–204.

9. Bell C. J., Shenoy P., Chalodhorn R., Rao R. P. N. Control of a humanoid robot by a noninvasive brain-computer interface in humans. Journal of Neural Engineering. 2008;5(2):214–220. doi: 10.1088/1741-2560/5/2/012.

10. Escolano C., Antelis J. M., Minguez J. A telepresence mobile robot controlled with a noninvasive brain-computer interface. IEEE Transactions on Systems, Man, and Cybernetics, Part B: Cybernetics. 2012;42(3):793–804. doi: 10.1109/tsmcb.2011.2177968.

11. Barbosa A. O. G., Achanccaray D. R., Meggiolaro M. A. Activation of a mobile robot through a brain computer interface. Proceedings of the IEEE International Conference on Robotics and Automation (ICRA '10); May 2010; pp. 4815–4821.

12. Millán J. D. R., Renkens F., Mouriño J., Gerstner W. Noninvasive brain-actuated control of a mobile robot by human EEG. IEEE Transactions on Biomedical Engineering. 2004;51(6):1026–1033. doi: 10.1109/TBME.2004.827086.

13. Lafleur K., Cassady K., Doud A., Shades K., Rogin E., He B. Quadcopter control in three-dimensional space using a noninvasive motor imagery-based brain-computer interface. Journal of Neural Engineering. 2013;10(4):711–726. doi: 10.1088/1741-2560/10/4/046003.

14. Akce A., Johnson M., Dantsker O., Bretl T. A brain-machine interface to navigate a mobile robot in a planar workspace: enabling humans to fly simulated aircraft with EEG. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2013;21(2):306–318. doi: 10.1109/TNSRE.2012.2233757.

15. Leeb R., Friedman D., Müller-Putz G. R., Scherer R., Slater M., Pfurtscheller G. Self-paced (asynchronous) BCI control of a wheelchair in virtual environments: a case study with a tetraplegic. Computational Intelligence and Neuroscience. 2007;2007:8. doi: 10.1155/2007/79642.

16. López-Larraz E., Trincado-Alonso F., Rajasekaran V., et al. Control of an ambulatory exoskeleton with a brain-machine interface for spinal cord injury gait rehabilitation. Frontiers in Neuroscience. 2016;10. doi: 10.3389/fnins.2016.00359.

17. Cui X., Bray S., Bryant D. M., Glover G. H., Reiss A. L. A quantitative comparison of NIRS and fMRI across multiple cognitive tasks. NeuroImage. 2011;54(4):2808–2821. doi: 10.1016/j.neuroimage.2010.10.069.

18. Liu Y., Piazza E. A., Simony E., et al. Measuring speaker-listener neural coupling with functional near infrared spectroscopy. Scientific Reports. 2017;7, article 43293. doi: 10.1038/srep43293.

19. Ayaz H., Onaral B., Izzetoglu K., Shewokis P. A., Mckendrick R., Parasuraman R. Continuous monitoring of brain dynamics with functional near infrared spectroscopy as a tool for neuroergonomic research: empirical examples and a technological development. Frontiers in Human Neuroscience. 2013;7(871). doi: 10.3389/fnhum.2013.00871.

20. McKendrick R., Mehta R., Ayaz H., Scheldrup M., Parasuraman R. Prefrontal hemodynamics of physical activity and environmental complexity during cognitive work. Human Factors. 2017;59(1):147–162. doi: 10.1177/0018720816675053.

21. Gramann K., Fairclough S. H., Zander T. O., Ayaz H. Editorial: trends in neuroergonomics. Frontiers in Human Neuroscience. 2017;11, article 165. doi: 10.3389/fnhum.2017.00165.

22. Batula A. M., Ayaz H., Kim Y. E. Evaluating a four-class motor-imagery-based optical brain-computer interface. Proceedings of the 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC '14); August 2014; Chicago, Ill, USA. pp. 2000–2003.

23. Batula A. M., Mark J., Kim Y. E., Ayaz H. Developing an optical brain-computer interface for humanoid robot control. In: Schmorrow D. D., Fidopiastis M. C., editors. Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). Vol. 9743. Toronto, Canada: Springer International Publishing; 2016. pp. 3–13.

24. Canning C., Scheutz M. Functional near-infrared spectroscopy in human-robot interaction. Journal of Human-Robot Interaction. 2013;2(3):62–84. doi: 10.5898/JHRI.2.3.Canning.

25. Kishi S., Luo Z., Nagano A., Okumura M., Nagano Y., Yamanaka Y. On NIRS-based BRI for a human-interactive robot RI-MAN. Proceedings of the Joint 4th International Conference on Soft Computing and Intelligent Systems and 9th International Symposium on Advanced Intelligent Systems (SCIS & ISIS); 2008; pp. 124–129.

26. Takahashi K., Maekawa S., Hashimoto M. Remarks on fuzzy reasoning-based brain activity recognition with a compact near infrared spectroscopy device and its application to robot control interface. Proceedings of the 2014 International Conference on Control, Decision and Information Technologies, CoDIT 2014; November 2014; France. pp. 615–620.

27. Tumanov K., Goebel R., Möckel R., Sorger B., Weiss G. FNIRS-based BCI for robot control (demonstration). Proceedings of the 14th International Conference on Autonomous Agents and Multiagent Systems, AAMAS 2015; May 2015; Turkey. pp. 1953–1954.

28. Doud A. J., Lucas J. P., Pisansky M. T., He B. Continuous three-dimensional control of a virtual helicopter using a motor imagery based brain-computer interface. PLoS ONE. 2011;6(10, article e26322). doi: 10.1371/journal.pone.0026322.

29. Ge S., Wang R., Yu D. Classification of four-class motor imagery employing single-channel electroencephalography. PLoS ONE. 2014;9(6, article e98019). doi: 10.1371/journal.pone.0098019.

30. Coyle S. M., Ward T. E., Markham C. M. Brain-computer interface using a simplified functional near-infrared spectroscopy system. Journal of Neural Engineering. 2007;4(3):219–226. doi: 10.1088/1741-2560/4/3/007.

31. Naseer N., Hong K.-S. Classification of functional near-infrared spectroscopy signals corresponding to the right- and left-wrist motor imagery for development of a brain-computer interface. Neuroscience Letters. 2013;553:84–89. doi: 10.1016/j.neulet.2013.08.021.

32. Sitaram R., Zhang H., Guan C., et al. Temporal classification of multichannel near-infrared spectroscopy signals of motor imagery for developing a brain-computer interface. NeuroImage. 2007;34(4):1416–1427. doi: 10.1016/j.neuroimage.2006.11.005.

33. Ito T., Akiyama H., Hirano T. Brain machine interface using portable near-InfraRed spectroscopy—improvement of classification performance based on ICA analysis and self-proliferating LVQ. Proceedings of the 26th IEEE/RSJ International Conference on Intelligent Robots and Systems: New Horizon (IROS '13); 2013; pp. 851–858.

34. Yin X., Xu B., Jiang C., et al. Classification of hemodynamic responses associated with force and speed imagery for a brain-computer interface. Journal of Medical Systems. 2015;39(5):53. doi: 10.1007/s10916-015-0236-0.

35. Acqualagna L., Botrel L., Vidaurre C., Kübler A., Blankertz B. Large-scale assessment of a fully automatic co-adaptive motor imagery-based brain computer interface. PLoS ONE. 2016;11(2, article e0148886). doi: 10.1371/journal.pone.0148886.

36. Koo B., Lee H.-G., Nam Y., et al. A hybrid NIRS-EEG system for self-paced brain computer interface with online motor imagery. Journal of Neuroscience Methods. 2015;244(1):26–32. doi: 10.1016/j.jneumeth.2014.04.016.

37. Yi W., Zhang L., Wang K., et al. Evaluation and comparison of effective connectivity during simple and compound limb motor imagery. Proceedings of the 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC '14); August 2014; Chicago, Ill, USA. pp. 4892–4895.

38. Naseer N., Hong K. fNIRS-based brain-computer interfaces: a review. Frontiers in Human Neuroscience. 2015;9:1–15. doi: 10.3389/fnhum.2015.00003.

39. Hashimoto Y., Ushiba J. EEG-based classification of imaginary left and right foot movements using beta rebound. Clinical Neurophysiology. 2013;124(11):2153–2160. doi: 10.1016/j.clinph.2013.05.006.

40. Hsu W.-C., Lin L.-F., Chou C.-W., Hsiao Y.-T., Liu Y.-H. EEG classification of imaginary lower limb stepping movements based on fuzzy support vector machine with kernel-induced membership function. International Journal of Fuzzy Systems. 2017;19(2):566–579. doi: 10.1007/s40815-016-0259-9.

41. Stankevich L., Sonkin K. Human-robot interaction using brain-computer interface based on EEG signal decoding. In: Ronzhin A., Rigoll G., Meshcheryakov R., editors. Proceedings of the Interactive Collaborative Robotics (ICR); Springer International Publishing; pp. 99–106.

42. Shin J., Jeong J. Multiclass classification of hemodynamic responses for performance improvement of functional near-infrared spectroscopy-based brain-computer interface. Journal of Biomedical Optics. 2014;19(6, article 067009). doi: 10.1117/1.jbo.19.6.067009.

43. Lotze M., Halsband U. Motor imagery. Journal of Physiology Paris. 2006;99(4–6):386–395. doi: 10.1016/j.jphysparis.2006.03.012.

44. Guillot A., Collet C., Nguyen V. A., Malouin F., Richards C., Doyon J. Brain activity during visual versus kinesthetic imagery: an fMRI study. Human Brain Mapping. 2009;30(7):2157–2172. doi: 10.1002/hbm.20658.

45. Jeunet C., N'Kaoua B., Lotte F. Advances in user-training for mental-imagery-based BCI control: psychological and cognitive factors and their neural correlates. Progress in Brain Research. 2016;228:3–35. doi: 10.1016/bs.pbr.2016.04.002.

46. Marchesotti S., Bassolino M., Serino A., Bleuler H., Blanke O. Quantifying the role of motor imagery in brain-machine interfaces. Scientific Reports. 2016;6, article 24076. doi: 10.1038/srep24076.

47. Lebon F., Byblow W. D., Collet C., Guillot A., Stinear C. M. The modulation of motor cortex excitability during motor imagery depends on imagery quality. European Journal of Neuroscience. 2012;35(2):323–331. doi: 10.1111/j.1460-9568.2011.07938.x.

48. Miller K. J., Schalk G., Fetz E. E., Den Nijs M., Ojemann J. G., Rao R. P. N. Cortical activity during motor execution, motor imagery, and imagery-based online feedback. Proceedings of the National Academy of Sciences of the United States of America. 2010;107(9):4430–4435. doi: 10.1073/pnas.0913697107.

49. Bauer R., Fels M., Vukelić M., Ziemann U., Gharabaghi A. Bridging the gap between motor imagery and motor execution with a brain-robot interface. NeuroImage. 2015;108:319–327. doi: 10.1016/j.neuroimage.2014.12.026.

50. Ahn M., Jun S. C. Performance variation in motor imagery brain-computer interface: a brief review. Journal of Neuroscience Methods. 2015;243:103–110. doi: 10.1016/j.jneumeth.2015.01.033.

51. Tidoni E., Gergondet P., Kheddar A., Aglioti S. M. Audio-visual feedback improves the BCI performance in the navigational control of a humanoid robot. Frontiers in Neurorobotics. 2014;8. doi: 10.3389/fnbot.2014.00020.

52. Alimardani M., Nishio S., Ishiguro H. The importance of visual feedback design in BCIs; from embodiment to motor imagery learning. PLoS ONE. 2016;11(9). doi: 10.1371/journal.pone.0161945.

53. Batula A. M., Mark J. A., Kim Y. E., Ayaz H. Comparison of brain activation during motor imagery and motor movement using fNIRS. Computational Intelligence and Neuroscience. 2017;2017:12. doi: 10.1155/2017/5491296.

54. Villringer A., Chance B. Non-invasive optical spectroscopy and imaging of human brain function. Trends in Neurosciences. 1997;20(10):435–442. doi: 10.1016/S0166-2236(97)01132-6.

55. Rozand V., Lebon F., Stapley P. J., Papaxanthis C., Lepers R. A prolonged motor imagery session alter imagined and actual movement durations: potential implications for neurorehabilitation. Behavioural Brain Research. 2016;297:67–75. doi: 10.1016/j.bbr.2015.09.036.

56. Ayaz H., Allen S. L., Platek S. M., Onaral B. Maze Suite 1.0: a complete set of tools to prepare, present, and analyze navigational and spatial cognitive neuroscience experiments. Behavior Research Methods. 2008;40(1):353–359. doi: 10.3758/BRM.40.1.353.

57. Ayaz H., Shewokis P. A., Curtin A., Izzetoglu M., Izzetoglu K., Onaral B. Using MazeSuite and functional near infrared spectroscopy to study learning in spatial navigation. Journal of Visualized Experiments. 2011;(56, article 3443). doi: 10.3791/3443.

58. Ha I., Tamura Y., Asama H., Han J., Hong D. W. Development of open humanoid platform DARwIn-OP. Proceedings of the SICE Annual Conference 2011; 2011; pp. 2178–2181.

59. Cui X., Bray S., Reiss A. L. Functional near infrared spectroscopy (NIRS) signal improvement based on negative correlation between oxygenated and deoxygenated hemoglobin dynamics. NeuroImage. 2010;49(4):3039–3046. doi: 10.1016/j.neuroimage.2009.11.050.

60. Tanaka H., Katura T., Sato H. Task-related component analysis for functional neuroimaging and application to near-infrared spectroscopy data. NeuroImage. 2013;64(1):308–327. doi: 10.1016/j.neuroimage.2012.08.044.

61. Bishop C. M. Pattern Recognition and Machine Learning. Springer; 2006.

62. Pedregosa F., Varoquaux G., Gramfort A. Scikit-learn: machine learning in Python. Journal of Machine Learning Research. 2011;12:2825–2830.

63. Takizawa R., Kasai K., Kawakubo Y., et al. Reduced frontopolar activation during verbal fluency task in schizophrenia: a multi-channel near-infrared spectroscopy study. Schizophrenia Research. 2008;99(1–3):250–262. doi: 10.1016/j.schres.2007.10.025.

64. Friesen C. L., Bardouille T., Neyedli H. F., Boe S. G. Combined action observation and motor imagery neurofeedback for modulation of brain activity. Frontiers in Human Neuroscience. 2017;10. doi: 10.3389/fnhum.2016.00692.

65. Vourvopoulos A., Bermúdez i Badia S. Motor priming in virtual reality can augment motor-imagery training efficacy in restorative brain-computer interaction: a within-subject analysis. Journal of NeuroEngineering and Rehabilitation. 2016;13(1, article 69). doi: 10.1186/s12984-016-0173-2.

66. Yuksel A., Olmez T. A neural network-based optimal spatial filter design method for motor imagery classification. PLoS ONE. 2015;10(5, article e0125039). doi: 10.1371/journal.pone.0125039.

67. Lu N., Li T., Ren X., Miao H. A deep learning scheme for motor imagery classification based on restricted boltzmann machines. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2016;PP(99):1. doi: 10.1109/TNSRE.2016.2601240.

68. Tabar Y. R., Halici U. A novel deep learning approach for classification of EEG motor imagery signals. Journal of Neural Engineering. 2017;14(1, article 016003). doi: 10.1088/1741-2560/14/1/016003.

69. Tang Z., Li C., Sun S. Single-trial EEG classification of motor imagery using deep convolutional neural networks. Optik - International Journal for Light and Electron Optics. 2017;130:11–18. doi: 10.1016/j.ijleo.2016.10.117.
