Abstract

The number of papers about the orbitofrontal cortex (OFC) has grown from 1 per month in 1987 to a current rate of over 50 per month. This publication stream has implicated the OFC in nearly every function known to cognitive neuroscience and in most neuropsychiatric diseases. However, new ideas about OFC function are typically based on limited data sets and often ignore or minimize competing ideas or contradictory findings. Yet true progress in our understanding of an area’s function comes as much from invalidating existing ideas as proposing new ones. Here we consider the proposed roles for OFC, critically examining the level of support for these claims and highlighting the data that call them into question.

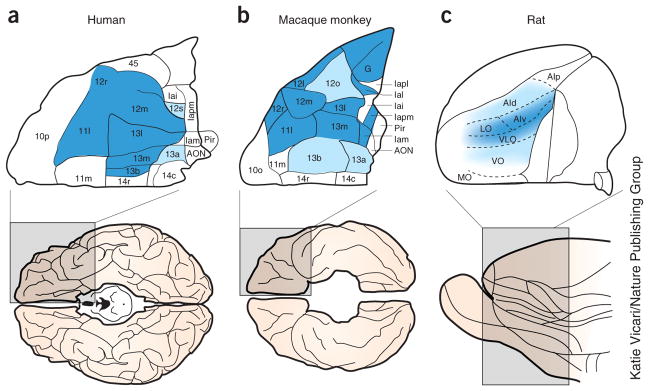

Invalidation is fundamental to the advancement of scientific ideas1. When evaluating new findings, it is important to assess not only which hypotheses are supported, but also which are called into question. Although exciting positive results that establish the viability of a new idea attract the most attention, it is the negative results, particularly across experiments, which help to constrain viable explanations for a particular brain region’s function. In this spirit, we offer this review of what we think the OFC does not—and probably does not—do. We focus primarily on what is now termed lateral OFC, encompassing lateral orbital and agranular regions in rats and the limbic or lateral parts of areas 11, 13 and parts of 12 in monkeys (Fig. 1). For reviews focusing more specifically on the functional distinction between medial and lateral OFC regions, see refs. 2–4.

Figure 1.

The (lateral) OFC across species. The figure illustrates the OFC in humans and monkeys and the approximate analogous area in rats. The OFC in humans and monkeys is defined as the lateral orbital network (dark blue) and related intermediate areas (light blue) proposed by Price and colleagues149. Note that this region is distinct from the medial network, ventromedial prefrontal cortex or what has more recently been called medial OFC. An analogous area has been identified in rats based on connectivity with mediodorsal thalamus, striatum and amygdala, as well as functional criteria150.

Response inhibition

Response inhibition is one of the first and perhaps still most influential ideas put forth as the function of prefrontal areas5. A general inhibitory function was popularized by the tale of Phineas Gage6, who became disinhibited after a traumatic injury affecting his prefrontal and particularly orbital regions. This idea was first operationalized using reversal learning, during which a subject is first taught to associate reward with one cue or response and not another, after which the opposite associations must be learned. OFC damage typically causes deficits in the rapid switching of behavior after reversal of the associations. This is not an isolated finding; deficits have been reported in rodent species, monkeys and humans, in both go/no-go and choice tasks, and using a variety of learning materials and training procedures7–18. Even today, such deficits are often described as reflecting an inability to inhibit responding7,19,20.

But is response inhibition a core function of OFC? Although subjects with OFC damage are typically slower than normal at acquiring reversals, they are usually unimpaired at acquiring the initial discriminations, despite the fact that these often require inhibiting some default response strategy. This is true even in go/no-go tasks in which the go response has been heavily pre-trained during shaping21,22.

These data suggest there is something special about response inhibition after a reversal, and yet, even here, OFC damage does not always lead to deficits in response inhibition8,18 and may not lead to deficits at all23–27. For example, a reversal task involving not two, but three, options, which makes it possible to identify whether reversal errors truly reflect an inability to withhold responding to the previously rewarded option, revealed that monkeys with lateral OFC damage exhibited suboptimal choices, but were in fact more likely to switch responding after reversal8.

Previous work has also shown that the OFC is not necessary in a variety of settings that require response inhibition. For example, if monkeys observe a large and a small reward being hidden under two objects, they will subsequently select the one under which the large reward was placed. However, they can be trained to pick the small reward object to get the large reward. OFC lesions do not affect the rate at which the prepotent strategy is reversed27. Similarly, OFC damage does not cause rats to exhibit impulsive or more rapid switching to a small, immediate reward from a larger, increasingly delayed reward26.

Furthermore, limited aspiration or even relatively large neurotoxic lesions of the OFC may leave reversal learning completely unaffected23,24, even when the lesions impair performance on reinforcer devaluation, another OFC-dependent task24. In this case, reversal deficits were reinstated by aspirating a small cortical strip at the caudal end of OFC containing passing fibers from temporal lobe to other prefrontal areas, consistent with ideas that response inhibition is primarily mediated by nearby prefrontal areas rather than OFC28,29.

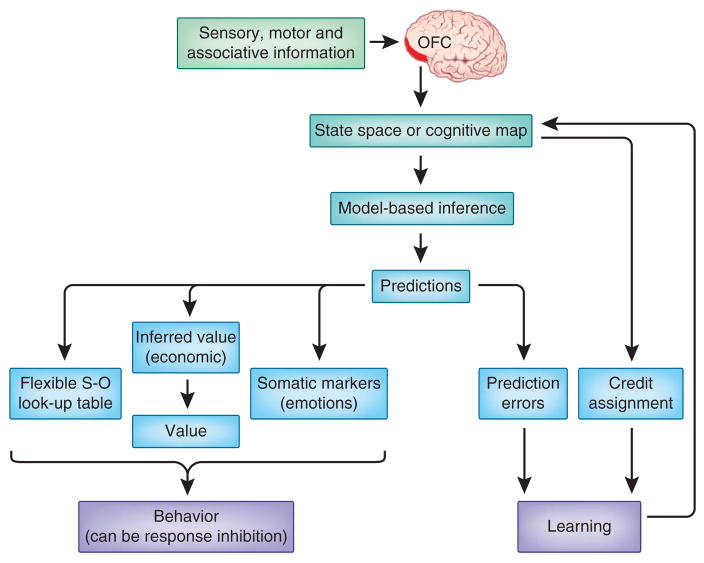

In short, the growing number of reports showing that OFC is not necessary for reversal learning violates a key prediction of the response inhibition hypothesis, which proposes that the OFC should always be necessary for inhibiting responses. As we shall see, there are also numerous studies showing effects of OFC damage on tasks that do not seem to require response inhibition at all. Together, these works provide strong evidence that response inhibition is not a core function of the OFC28,29 (Fig. 2).

Figure 2.

Taxonomy of proposed OFC functions. The diagram shows possible relationships between the various ideas for orbitofrontal function discussed in the review. Although many of these ideas explain some of the data, they generally fail to explain all of the data. Thus, they are no longer viable freestanding explanations for OFC function. However, as illustrated, they may still be viewed as subfunctions of larger or more general concepts, as they are able to explain subsets of the experimental findings. S-O, stimulus-outcome.

Flexible representation of stimulus-outcome associations

Another highly influential idea suggests that OFC operates as an especially flexible associative look-up table30. This proposal, that OFC is required for the flexible representation of stimulus-outcome associations, arose intuitively from single-unit recordings in which activity apparently tracked the initial and reversed associations during reversal learning31–34. Under this theory, the ability to track associative information would be redundant with that of other regions, with OFC distinguished by the special flexibility of its associative encoding35.

However, as reviewed above, the OFC is often not necessary for reversal learning. Just as this evidence contradicts the response inhibition hypothesis, it also contradicts predictions of the flexible encoding idea. This evidence is bolstered by numerous reports in other settings showing that OFC is not necessary for altering established associative behavior, including Pavlovian reversal and extinction, conditioned taste aversion, set shifting, and even changes in instrumental responding after reinforcer devaluation36–44. Such evidence at least places serious qualifications on when the OFC’s flexible encoding function is brought to bear (Fig. 2).

Moreover, neural representations in many other areas are far more flexible than those in the OFC. For example, amygdalar activity in rats45 and monkeys46,47 can encode the associative significance of cues more rapidly and with greater fidelity, even across reversals, than OFC neurons. Similar results have been obtained in recordings from other areas during reversal tasks48–50. Encoding in OFC often lags behind changes in other areas, and, in some settings, the flexibility seems to be inversely correlated with the speed of reversal learning51. In retrospect, encoding in the OFC seems as remarkable for its inflexibility or specificity as it is for its flexibility and ability to ‘reverse’. These observations raise the question of precisely which aspect of associative information is being encoded in OFC52, and which task features define when OFC-dependent information is required for flexible behavior.

Emotions or somatic markers

A third influential proposal, which begins to address the question regarding the content of associative representations in OFC, is that the OFC has a central role in signaling emotions. This proposal harks back to the idea that we experience emotions as a result of peripheral feedback about bodily states53. In this implementation, the OFC guides behavior via its modulation of these bodily states or somatic markers.

The central evidence supporting this proposal comes from patients with OFC damage, particularly in the ventromedial part, who are impaired on the Iowa gambling task11. In the original version of this task, patients had to choose from four decks of cards: two ‘bad’ and two ‘good’. Bad decks were associated with large gains on each trial, but also often led to large losses, whereas good decks led to relatively small gains on each trial, but had correspondingly small and less frequent losses. Although both normal and brain-damaged subjects began by choosing mostly from decks that yielded large rewards, normal subjects rapidly switched to choosing the small reward decks. This switching was associated with the development of elevated skin conductance, a proxy for arousal and anxiety, during impending choices of the bad deck. Patients with ventromedial OFC damage failed to switch their choices, continuing to choose the bad decks long after controls had stopped and also failing to manifest the skin conductance responses. These impairments, which occurred even though patients could verbally identify the bad decks, were interpreted as reflecting a dissociation between the rational and emotional control of behavior, leading to the idea that the OFC triggers emotional states about impending events to help guide advantageous choices54.

This proposal has had an enormous effect, particularly in reawakening interest in the OFC, yet, although the basic result has been replicated many times in humans and animals55–62, there is relatively little evidence that the deficit reflects a fundamental inability to trigger emotions. Patients with OFC damage do not exhibit flat affect or lack of emotion, nor are they unable to engage emotions in decision-making. They simply do so in a way that is unlike normal subjects.

In addition, the OFC-related deficit in the Iowa gambling task turns out to be dependent on the precise arrangement of the contingencies. If the penalties are delivered when subjects first choose the ‘bad’ decks, then OFC-damaged patients show little or no impairment63. This suggests that the original formulation of the task, which is highly sensitive to OFC damage, is essentially reproducing the reversal deficit seen after OFC damage in some other settings64. As such, it is open to all of the issues with reversal learning impairments discussed above.

Of course, the idea that normal decision-making reflects more than rational, explicit evaluation is certainly a good one. Computational neuroscience and learning theory have hypothesized two general mechanisms of behavioral control: one involving rational simulations of future consequences and the other relying on pre-computed values or policies65. Although this is not directly analogous to the distinction between rational decision-making and decision-making guided by somatic markers, it is somewhat similar in that one form of control involves a multi-step, explicit evaluation of options, whereas the other uses a kind of heuristic shortcut to call up a pre-computed value66. Recent accounts have even suggested how these two forms of control may interact, with some branches of a decision tree being pruned out of consideration by the assignment of a pre-computed value to the entire branch67. Interestingly, in these somewhat related frameworks, the OFC appears to be much more important for behaviors supported by the process of mental simulation than it is for behaviors relying on pre-computed values or policies68, which is the opposite of the dissociation proposed by the somatic marker hypothesis. These accounts may be unified by postulating that the OFC is critical for applying emotional information to decisions, but only when such emotions are derived from a process involving mental simulation or inference. In this framework, somatic markers might be either synthesized on-line or pre-computed, with OFC involved in the former, but not the latter. This might be consistent with more recent versions of the somatic marker idea in which the OFC-related impairment has been described as “myopia for the future”69. However, such a characterization of the deficit places less emphasis on OFC triggering emotions and more on its involvement in imagining or inferring future outcomes. In this regard, the idea is more similar to several proposals described below than it is to the original conception of somatic markers (Fig. 2).

(Economic) value

A more recent proposal suggests that the OFC is critical for signaling value. Although firmly grounded in the historical understanding of OFC’s role in associative encoding and emotion, this idea is most strongly associated with the emergence of economic theory as a framework for neuroscience research70,71. Here the OFC has been proposed to serve as a final accountant of value, converting information about available outcomes—their probability, magnitude, time to receipt, current desirability, costs, etc.—into a common neural currency on which to base choices, particularly between different goods72–74.

The best support for the strong version of this hypothesis came from work showing that, during choices between different juices, the firing rates of some single units in the OFC were correlated with the chosen juice’s subjective value75. These neural correlates were indifferent to the identity of the juice chosen, the absolute quantity, the direction of the required response, the features of the predictive cues, and even to some extent the period of the trial. They fired simply on the basis of the chosen juice’s subjective value, such that a linear function relating value to firing rate could be derived for each juice. Across sessions, the ratio between the slopes of the value functions of the paired juices was predictive of idiosyncratic shifts in preference between them. This relationship between firing ratio and relative preference across sessions rules out any simple explanation that neuronal firing is based on ingredient or another static feature not influenced by or linked to the subjective value the monkey places on the juices.
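The slope-ratio logic described above can be made concrete with a minimal sketch. It assumes a hypothetical cell whose firing rate rises linearly with the quantity of the chosen juice and, as a simplifying assumption, fits each value function through the origin. The firing rates, quantities and function name are invented for illustration and are not taken from the recordings.

```python
def slope_through_origin(quantities, rates):
    """Least-squares slope for the model rate = slope * quantity (no intercept)."""
    numerator = sum(q * r for q, r in zip(quantities, rates))
    denominator = sum(q * q for q in quantities)
    return numerator / denominator

# Hypothetical chosen-value cell: made-up mean firing rates (spikes/s)
# recorded when 1 to 4 units of each juice were chosen.
quantities = [1, 2, 3, 4]
rates_juice_a = [6.0, 12.0, 18.0, 24.0]
rates_juice_b = [2.0, 4.0, 6.0, 8.0]

slope_a = slope_through_origin(quantities, rates_juice_a)
slope_b = slope_through_origin(quantities, rates_juice_b)

# Ratio of slopes: how many units of juice B drive the cell as strongly
# as one unit of juice A. In this toy session the ratio is 3.
neural_relative_value = slope_a / slope_b
```

Under these assumptions, a session in which the animal happened to value juice A more highly would show a larger slope ratio, which is the sense in which the ratio can track session-to-session shifts in preference.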

Subsequent studies have replicated this result and shown further that these neural responses obey key principles for economic value including transitivity and menu invariance76,77, and similar results have been reported in humans using functional magnetic resonance imaging (fMRI)78–81. For example, BOLD responses in medial orbital areas are correlated with a subject’s ‘willingness to pay’ for items during a decision-making task78. In addition, BOLD correlates of value in nearby medial OFC that are specifically invariant to the identity of the expected outcomes have been reported80 (see refs. 2–4 for more extensive reviews of medial versus lateral subdivisions in primates and humans).

Of course, these are correlative measures. Although no single approach is without drawbacks, this issue is particularly problematic for fMRI, as the analysis inherently aggregates single units and may therefore average out their unique functions, such as coding of information about identity82. In unit recording work75,83,84, such specific correlates are plentiful, and in at least one instance, the strength of such value-neutral outcome representations was closely related to choice behavior82. Although one might argue that the aggregate signal is likely to be the function of the area overall, it seems equally likely that individual units or small ensembles could send their unique product downstream. BOLD signal is also sensitive to input and even subthreshold events. Thus, it may reflect as much what is happening upstream of an area as it does the unique output product of that region, as is the case for prediction error signals reported in ventral striatum commonly thought to reflect dopaminergic input85.

Similarly, although the demonstration that individual single units fire in a way that reflects pure value is more definitive, there are issues with using this as a theoretical foundation without additional causal evidence. Value is heavily confounded with arousal86 and salience87,88, and there is some evidence that OFC neurons signal salience (or risk or decision confidence, which are concepts associated with salience)89–91. Disentangling value and related constructs has been problematic in studies of other brain regions92.

Although solving all these issues here may not be realistic, they illustrate the limitations of making arguments solely on the basis of neural correlates. What one wants of course is convergent, causal evidence that the OFC is required for the function reflected in the correlates. With the economic value hypothesis, the supporting causal data come from studies of preference93–95 reporting that OFC-damaged subjects exhibit choices that violate transitivity96, which requires that choices reflecting economic value should reveal a consistent rank ordering across a group of items. Simply put, if you prefer A to B and B to C, then you should also prefer A to C.

If OFC signals economic value, then the decisions of OFC-damaged patients should violate transitivity. One study94 has tested this by asking subjects to choose between pairs of goods (food, people, color). Subjects chose between all possible pairs in a category once. Subsequently, the items in each category were arranged in the order that minimized the number of irrational or intransitive choices. Under this ordering, 6% of the choices of patients with damage to the ventromedial OFC still violated transitivity, compared with only ~3% of choices of controls or patients with prefrontal damage that excluded the OFC. This result, confirmed in both humans and monkeys93,95, provides evidence that consistency in subjective preferences can require the orbital area.
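The scoring procedure described above, in which items are ordered so as to minimize the number of intransitive choices, can be sketched as follows. This is a brute-force illustration under stated assumptions, not the analysis code used in the cited study; the item labels, the choice data and the function name are hypothetical.

```python
from itertools import permutations

def min_intransitive_choices(items, choices):
    """Find the preference ordering that conflicts with the fewest choices.

    choices is a list of (chosen, rejected) pairs, one per item pair.
    Returns (best_order, n_violations). Brute force over all orderings,
    so only practical for small item sets.
    """
    best_order, best_count = None, len(choices) + 1
    for order in permutations(items):
        rank = {item: i for i, item in enumerate(order)}  # 0 = most preferred
        # A choice conflicts with the ordering if the chosen item ranks lower.
        count = sum(1 for chosen, rejected in choices
                    if rank[chosen] > rank[rejected])
        if count < best_count:
            best_order, best_count = order, count
    return best_order, best_count

# Hypothetical subject: A over B, B over C, but C over A (a preference cycle).
items = ["A", "B", "C", "D"]
choices = [("A", "B"), ("B", "C"), ("C", "A"),
           ("A", "D"), ("B", "D"), ("C", "D")]

order, n_violations = min_intransitive_choices(items, choices)
fraction_intransitive = n_violations / len(choices)
```

Because the cycle among A, B and C cannot be reconciled with any single ranking, at least one choice remains intransitive however the items are ordered; the reported percentages correspond to this residual fraction.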

However, consistency of choices in this setting reflects both the ability to signal economic or pure value and the ability to infer structure or relationships among items from a set (for example, as in transitive inference97). When preferences are tested in the absence of required inference (that is, preferences among familiar rewards), OFC damage leaves them unaffected12,98. This may result from two ways of making preference judgments: an OFC-independent method that relies on a person’s ‘preference history’ and a second OFC-dependent method that reflects a ‘dynamic assessment of relative value’94.

Also problematic for this account, value signals, broadly defined, are ubiquitous in the brain, and there is growing evidence that even the very specific economic value correlate is not unique to OFC. For example, similar signals have been found in parietal cortex, anterior cingulate and other prefrontal regions48–50,99–102. Recordings from across several prefrontal areas in monkeys choosing between cues predictive of rewards differing in probability, payoff or effort required found that the anterior cingulate cortex had the simplest pure value correlates, as neurons there were more likely than those in OFC or lateral prefrontal cortex to code value monotonically across all three value dimensions3,50. In fact, a growing number of reports have failed to find integration of different value dimensions in OFC single units when the dimensions are not properties of the actual outcome; thus, although reward identity and magnitude may be integrated, similar studies of the integration of reward magnitude with effort, delay, risk and social value have found largely independent representations90,102–105. Of course, this does not mean the pure value correlates in the OFC are not important on a more restricted scale, nor does it preclude weaker versions of this idea on the basis of multi-unit ensemble coding schemes71, but these results do question whether the OFC’s role is all-encompassing or even unique, particularly given that OFC manipulations cannot be said to generally disrupt value-guided behavior106.

(Inferred) value

OFC is not typically necessary for value-guided choices106. For example, OFC damage produces no deficits in Pavlovian or instrumental learning when subjects are required to discriminate between cues or actions predicting different-sized rewards12,38,42,107. Similarly, both blocking and unblocking, when they can be accounted for by value, do not require the OFC98,108. On the other hand, OFC is necessary for superficially similar behaviors when they require knowledge of specific outcome features to recognize errors or to infer a value12,38,42,107–109.

These observations lead to a weaker or more nuanced form of the value hypothesis, in which the OFC is necessary only when the value driving behavior or learning is derived from mental simulation or model-based processing. Thus, the OFC is not necessary for Pavlovian conditioning, which can be driven by prior experience, but it is necessary for modifying that response if the predicted outcome is devalued by pairing it with illness38. The feature of this design that may require OFC is not response inhibition, as is sometimes assumed, given that lesioned rats extinguish responses normally during the probe test (in which food is omitted), but rather the need to link two independently acquired pieces of associative information, the cue-outcome association and the outcome-illness association. Notably, similar results have been obtained in monkeys after OFC lesions (even fiber sparing)12,24,110, and OFC BOLD signal and single-unit responses to predictive cues change selectively after outcome devaluation32,107,111,112 or preference changes75. These deficits are observed even if lesions are made after initial learning and devaluation or if OFC is transiently inactivated only during the critical probe test113,114.

This modified version of the value hypothesis explains why OFC is or is not necessary across a wide variety of value-based behaviors. When the behavior is based on previous experience, which would allow a relevant value or policy to be pre-computed without simulating or imagining the future and without integrating new information, then OFC is not necessary. However, if normal behavior requires a novel value to be computed on the fly using new information or predictions that have been acquired since the original learning, then OFC is required. Other higher order processes, such as computing confidence91,115, experiencing hypothetical or imagined outcomes116, and generating regret117,118, may also be products of this sort of representative structure inasmuch as they rely on the ability to mentally simulate information about predicted outcomes. This account would even explain why OFC is necessary for preferences to satisfy transitivity, as in this context, transitive choices require a comparison between items that typically have not been directly and repeatedly experienced together. In other words, for new choices to be transitive, they cannot easily reflect pre-computed or cached valuations (for example, preference history94) and instead must rely on inference.
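The distinction between a pre-computed value and a value computed on the fly can be illustrated with a toy devaluation experiment. The sketch below is a deliberately simplified assumption-laden example: the learning rule, the dictionaries standing in for associations, and all names and numbers are hypothetical and are not a model of any particular circuit.

```python
def train_cached_value(rewards, alpha=0.2):
    """Learn one cached value for a cue from directly experienced rewards."""
    value = 0.0
    for reward in rewards:
        value += alpha * (reward - value)  # delta-rule update toward each reward
    return value

def inferred_value(cue, cue_to_outcome, outcome_value):
    """Compute value at choice time by chaining two separate associations."""
    outcome = cue_to_outcome[cue]    # cue-outcome association
    return outcome_value[outcome]    # current worth of that outcome

# Phase 1: a light is repeatedly paired with food worth 1.0.
cached = train_cached_value([1.0] * 50)
cue_to_outcome = {"light": "food"}
outcome_value = {"food": 1.0}

# Phase 2: the food is devalued (e.g., paired with illness) with no cue present.
outcome_value["food"] = 0.0

# Probe test: the light is presented again without food.
cached_at_probe = cached  # unchanged, because the cue was never re-experienced
inferred_at_probe = inferred_value("light", cue_to_outcome, outcome_value)
```

In this sketch the cached value stays high after devaluation while the inferred value falls to zero, because only the latter links the cue-outcome association to the updated outcome value at the time of the probe.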

If economic value were conceptualized as only reflecting such computed-on-the-fly or inferred values, then the strong and weak versions of this hypothesis would converge73. Although this assertion seems reasonable, the experimental procedures employed to examine neural correlates of economic value have not explicitly controlled for the associative basis of the underlying behavior in the way that formal learning theory requires (Fig. 2).

Yet even if the OFC is critical to value-based behavior only when it requires a model-based value computation, this leaves open questions regarding the OFC’s role in that framework. Does it simply signal the inferred value to allow other areas to compare options or is it essential for some process through which those values are derived? Furthermore, why is the OFC necessary for behavior in situations that do not require value at all when behavior or learning is driven by information about the specific identity of predicted outcomes42,108? And finally, how does any explanation of the OFC’s role in guiding behavior account for its role in learning37,108,109,119? We will address these questions in the next two sections.

Prediction errors

Why is the OFC sometimes important for learning? One powerful proposal is that the OFC signals prediction errors. Prediction errors have long been hypothesized as key to associative learning120–122, and their neural signature has been reported most notably in midbrain dopamine neurons123–130. What if the OFC also signaled prediction errors to other areas? Although it would not explain its role in reinforcer devaluation or sensory preconditioning113,114,119, as in these cases the OFC is necessary at the time new information is used, direct signaling of errors would provide a powerful mechanism whereby OFC might facilitate learning.

Error signals have indeed been reported in OFC31,131–134. Although many such reports involve BOLD signal, which might reflect input from other areas, some report single-unit activity. For example, during reversal of a visual discrimination, some OFC neurons fire on receipt of saline when it is unexpected31. The examples reported (4 neurons, 1.3% of the population) did not fire to saline when it was expected, and in one case the neuron also fired on trials when reward was expected but the pump had been disconnected. More recently, the activity of a much larger proportion of OFC neurons recorded in rats performing a spatial two-armed bandit task was found to correlate with reward prediction errors134. Notably, the T-maze task used in that study allowed the rats to have full knowledge of the choices available on each upcoming trial. Thus, when the rats experienced a reward that was better than expected, a change in firing could reflect the reward prediction error; however, it could also reflect the updating of the value of that choice for the next trial, essentially a prediction about the next trial (see ref. 135 for fuller discussion).

Contradicting these findings are a number of negative reports in which OFC single units recorded under conditions that should have elicited prediction errors failed to show any change in firing, even during OFC-dependent learning37,50,83,136. In one study, dopamine neurons served as a positive control37. This study used an odor-guided choice task that was conceptually similar to the T-maze employed in ref. 134, except that reward delivery did not inform the rats about the available choices on the subsequent trial. With this confound removed, dopamine neurons signaled prediction errors at the time of reward, but OFC neurons did not.

Overall, these data fail to support direct error signaling as a viable explanation for OFC-dependent learning deficits. However, OFC may still participate in learning through its influence on error signals elsewhere. Consistent with this idea, when OFC and midbrain data were juxtaposed, anticipatory activity in the OFC was inversely related to dopaminergic error signaling downstream37. This suggests that the error signals in other brain areas might depend partly on OFC input for properly calculating the errors137 (Fig. 2). This idea has been partially confirmed for error signals in midbrain dopamine neurons, at least in rats138. Notably, an indirect role would explain why reinforcement-learning deficits after OFC damage are often (though not always) most evident in response to reward omission or ‘negative feedback’139, as these errors require a prediction of reward. Consistent with this idea, the OFC is thought to be particularly important in rats for facilitating reversal learning when contingencies have been stable, which would emphasize the contribution of these predictive signals18.
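The point that omission errors require a prediction of reward can be shown with a minimal delta-rule sketch. The `prediction_gain` parameter is a hypothetical knob introduced only for illustration: it scales how much of the learned prediction reaches the error computation and is not a claim about how OFC input actually operates.

```python
def run_trials(rewards, alpha=0.3, prediction_gain=1.0):
    """Delta-rule learner that returns the prediction error on every trial.

    prediction_gain scales the learned prediction before it is compared
    with the reward (1.0 = prediction intact, 0.0 = prediction absent).
    """
    value = 0.0
    errors = []
    for reward in rewards:
        delta = reward - prediction_gain * value  # prediction error
        errors.append(delta)
        value += alpha * delta
    return errors

# Twenty rewarded trials followed by one trial on which reward is omitted.
rewards = [1.0] * 20 + [0.0]

intact = run_trials(rewards, prediction_gain=1.0)
no_prediction = run_trials(rewards, prediction_gain=0.0)

omission_error_intact = intact[-1]                 # strongly negative
omission_error_no_prediction = no_prediction[-1]   # zero: nothing was expected
```

With the prediction intact, omission produces a large negative error; without it, omission produces no error at all, whereas errors to delivered reward are still generated. This asymmetry is one way to read why deficits might be most evident after negative feedback.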

Credit assignment

Another proposal to explain why the OFC is important for learning is that it is necessary for appropriate credit assignment2,8,140. Credit assignment refers to the proper attribution of prediction errors to specific causes141. Without proper credit assignment, one might have intact error signaling mechanisms, but lose the ability to learn appropriately or as rapidly as normal, particularly when multiple possible antecedents may be related to the error or when the recent choice history is variable. Under these conditions, it becomes critical to assign credit to the most recent choice and ignore previous alternative selections. This idea was explored using a reversal task with three cues predicting different probabilities of reward8. Monkeys with lateral OFC lesions performed normally on the initial discrimination and could track the best option as well as controls when reward probabilities were varied without reversal; however, when the low- and high-probability cues were switched, lesioned monkeys showed the classic OFC-dependent reversal deficit. As noted earlier, by including a third option, the authors showed that the response pattern after reversal did not reflect perseveration, which would have manifested as responding for the previously best option, but instead appeared to reflect somewhat random responding. Closer analysis showed that this occurred because the credit for reward (or non-reward) on the current trial was spread abnormally back to cues selected on preceding trials in lesioned monkeys.

The inability to assign credit appropriately provides an elegant explanation for reversal deficits after OFC damage, as this ability would be useful after reversal when choice patterns become unstable, but not after changes in probability without reversal, when choice patterns remain stable. Only with an unstable pattern of prior choices would the spread of effect lead to markedly impaired learning.
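A small sketch can illustrate why a spread of credit would matter only when the recent choice history is variable. The `spread` parameter and the equal division of credit among preceding choices are illustrative assumptions, not the analysis applied to the lesion data.

```python
def credit_shares(choice_history, spread):
    """Fraction of credit for the latest outcome given to each option.

    choice_history lists the options chosen, oldest first; the last entry
    is the current choice. A fraction `spread` of the credit is divided
    equally among the preceding choices; the rest goes to the current one.
    """
    current = choice_history[-1]
    previous = choice_history[:-1]
    if not previous:
        return {current: 1.0}
    shares = {current: 1.0 - spread}
    per_choice = spread / len(previous)
    for option in previous:
        shares[option] = shares.get(option, 0.0) + per_choice
    return shares

# Stable history: the same option was chosen on every recent trial.
stable = credit_shares(["A", "A", "A"], spread=0.4)

# Unstable history, as after a reversal: a different option on each trial.
unstable = credit_shares(["B", "C", "A"], spread=0.4)
```

When the same option has been chosen repeatedly, spreading credit backward still assigns essentially all of it to that option, so learning is unaffected. When recent choices differ, part of the credit for the current outcome lands on options that did not produce it.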

However, taken at face value, this does not provide a straightforward explanation of why the OFC is required in other settings, such as the probe phase of devaluation studies or sensory preconditioning, in which OFC inactivation leads to deficits when learning is not necessary113,114,119 (Fig. 2). This dichotomy raises the question of whether it is necessary to have separate mechanisms to explain why the OFC is involved in learning versus guiding behavior or whether there might be deeper underlying functions necessary for both credit assignment and the behavioral control mediated by OFC.

A cognitive map?

Perhaps the most recent proposal, which may resolve some of the above issues, suggests that the OFC may support the formation of a so-called cognitive map142 that defines the current task space143. Such an associative structure is at the heart of ideas about the implementation of behavioral control. It is necessary for what has been termed goal-directed behavior by learning theorists and model-based behavior by computational neuroscientists. Behavior guided by inference or mental simulation of consequences not directly experienced previously, such as changes in learned behaviors after reinforcer devaluation, would be iconic examples of this. A cognitive map would also be required to generate specific predictions about impending events, such as their identity or features, and for using contextual or temporal structure in the environment to allow old rules to be disregarded so that new ones can be rapidly acquired, as is sometimes the case after reversal. It might also help maintain information about what specific events had just transpired, so that in particularly complex tasks, one could appropriately assign credit when errors are detected.

Of course, constructing and using this associative structure would not depend on any single brain area, but would reflect the operation of a circuit, perhaps spanning much of the brain. This then raises the obvious question of OFC’s precise contribution in this circuit. The OFC may represent the underlying structure (for example, the cognitive map) once it has been acquired143, perhaps with an emphasis on structures related to biologically relevant outcomes. The OFC receives input from hippocampal areas144, which may be important for organizing complex associative representations, and the OFC interacts with broad areas of dorsal and ventral striatum145,146. These connections may allow the OFC to acquire and maintain associative representations and to utilize them to influence how simpler associative information is accessed to guide behavior and learning. This would be consistent with the OFC’s involvement during both the learning and utilization phases of tasks that require cognitive maps of the task’s space119. Alternatively, the OFC might only represent the individual parts that comprise the task space, such as the states used to define the space and various events. This would still make the OFC critical to the circuit’s operation, but would no longer make this area essential for explicitly signaling value or directly driving learning or response inhibition, all of which appear to characterize some, but not all, of the deficits caused by OFC damage.

The possibility that the OFC provides state information, which is then used by other areas, could resolve the contradictions between the evidence and the various theorized functions discussed above (Fig. 2). Thus, the OFC is not necessary for inhibiting responses, calculating value, signaling errors or even credit assignment per se (for example, credit is still assigned in the absence of OFC, but it is not assigned as discretely as normal); however, it is necessary for each of these functions when the function utilizes, or is constrained by, the cognitive map that the OFC provides. For example, reversal learning would be OFC-dependent only when the normal learning rate improves via the formation of a new state space after learning; if the normal learning rate does not require this function, then OFC lesions (fiber sparing or not) would have no apparent effect. This prediction might be tested with a reversal task in which normal rats showed very low levels of spontaneous recovery or renewal of the original associations. Low levels of recovery would be consistent with unlearning or over-writing of the original learning as a result of a failure to use a new state for post-reversal learning. Under these conditions, reversal learning should not depend on OFC if the OFC is facilitating state creation.
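The contrast between creating a new state and over-writing the original learning can be made concrete with a minimal sketch. It is an illustration under stated assumptions, not an implementation of any model from the studies cited. It uses a simple delta-rule update in the spirit of Rescorla and Wagner120, and the assignment of trials to states is hard-coded rather than inferred. The names `train`, `run_reversal`, `lr` and the state labels `"pre"` and `"post"` are hypothetical.

```python
def train(values, state, cue, reward, n_trials, lr=0.2):
    """Apply delta-rule updates to the value of `cue` stored under `state`."""
    for _ in range(n_trials):
        v = values.get((state, cue), 0.0)
        values[(state, cue)] = v + lr * (reward - v)


def run_reversal(new_state_after_reversal, n_trials=50):
    """Simulate acquisition followed by reversal of two cues, A and B.

    If new_state_after_reversal is True, post-reversal learning is
    stored under a separate state; otherwise it over-writes the
    values learned during acquisition.
    """
    values = {}
    # Acquisition: cue A is rewarded, cue B is not.
    train(values, "pre", "A", 1.0, n_trials)
    train(values, "pre", "B", 0.0, n_trials)
    # Reversal: cue A is no longer rewarded, cue B now is.
    post = "post" if new_state_after_reversal else "pre"
    train(values, post, "A", 0.0, n_trials)
    train(values, post, "B", 1.0, n_trials)
    return values


with_new_state = run_reversal(new_state_after_reversal=True)
overwritten = run_reversal(new_state_after_reversal=False)

# Strength of the original A-reward association after reversal.
original_kept = with_new_state[("pre", "A")]
original_lost = overwritten[("pre", "A")]
```

In this sketch the original association survives reversal only when a new state is created, which is the condition under which spontaneous recovery or renewal would be expected. When the same state is reused, the original value is driven back toward zero, corresponding to the over-writing case in which reversal should not depend on the OFC.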

The idea of state creation would explain the OFC’s involvement in value-guided behaviors38,98,119 and would also be broadly consistent with its role in well-constrained learning tasks108,119. This view also aligns with unit-recording and fMRI studies that emphasize the complex nature of representations in lateral OFC, where units and BOLD responses are driven not only by reward value, but also by reward identity, cues that precede rewards and even structure in the trial sequences147,148. Such rich representations would allow the derivation of values in new situations, would facilitate the appropriate assignment of credit, and so on.

Conclusions

Invalidation is fundamental to the advancement of scientific ideas. Here we have briefly reviewed a number of ideas concerning OFC function from this perspective. This exercise is important because it helps to narrow down the potential directions for future work. We believe, based on an overview of existing data, that response inhibition, flexible associative encoding, emotion or value alone do not provide adequate, freestanding explanations of OFC function; at best, they explain limited data sets (Fig. 2). Although the verdict is less clear on more recent proposals, such as signaling economic or derived value, credit assignment, and cognitive mapping, we think that there are already some data that at least force modification of key predictions of many of these accounts. To refine these ideas, it will be important to design experiments that challenge their key predictions and to pay attention to the accumulation of evidence that calls them into question.

Acknowledgments

The authors would like to thank C. Padoa-Schioppa, J. Wallis and P. Rudebeck for critical readings of earlier versions. This work was supported by grants to G.S. from the National Institute on Drug Abuse, the National Institute of Mental Health and the National Institute on Aging while G.S. was employed at the University of Maryland, Baltimore, and by funding from the National Institute on Drug Abuse at the Intramural Research Program. The opinions expressed in this article are the authors’ own and do not reflect the view of the US National Institutes of Health, the Department of Health and Human Services, or the United States government.

Footnotes

COMPETING FINANCIAL INTERESTS

The authors declare no competing financial interests.

References

- 1.Popper K. The Logic of Scientific Discovery. Hutchinson & Co; 1959.

- 2.Noonan MP, Kolling N, Walton ME, Rushworth MF. Re-evaluating the role of the orbitofrontal cortex in reward and reinforcement. Eur J Neurosci. 2012;35:997–1010. doi: 10.1111/j.1460-9568.2012.08023.x.

- 3.Wallis JD. Cross-species studies of orbitofrontal cortex and value-based decision-making. Nat Neurosci. 2012;15:13–19. doi: 10.1038/nn.2956.

- 4.Rudebeck PH, Murray EA. Balkanizing the primate orbitofrontal cortex: distinct subregions for comparing and contrasting values. Ann NY Acad Sci. 2011;1239:1–13. doi: 10.1111/j.1749-6632.2011.06267.x.

- 5.Ferrier D. The Functions of the Brain. GP Putnam’s Sons; New York: 1876.

- 6.Harlow JM. Recovery after passage of an iron bar through the head. Pub Mass Med Soc. 1868;2:329–346.

- 7.Jones B, Mishkin M. Limbic lesions and the problem of stimulus-reinforcement associations. Exp Neurol. 1972;36:362–377. doi: 10.1016/0014-4886(72)90030-1.

- 8.Walton ME, Behrens TEJ, Buckley MJ, Rudebeck PH, Rushworth MFS. Separable learning systems in the macaque brain and the role of the orbitofrontal cortex in contingent learning. Neuron. 2010;65:927–939. doi: 10.1016/j.neuron.2010.02.027.

- 9.Rolls ET, Hornak J, Wade D, McGrath J. Emotion-related learning in patients with social and emotional changes associated with frontal lobe damage. J Neurol Neurosurg Psychiatry. 1994;57:1518–1524. doi: 10.1136/jnnp.57.12.1518.

- 10.Meunier M, Bachevalier J, Mishkin M. Effects of orbital frontal and anterior cingulate lesions on object and spatial memory in rhesus monkeys. Neuropsychologia. 1997;35:999–1015. doi: 10.1016/s0028-3932(97)00027-4.

- 11.Bechara A, Damasio H, Tranel D, Damasio AR. Deciding advantageously before knowing the advantageous strategy. Science. 1997;275:1293–1295. doi: 10.1126/science.275.5304.1293.

- 12.Izquierdo A, Suda RK, Murray EA. Bilateral orbital prefrontal cortex lesions in rhesus monkeys disrupt choices guided by both reward value and reward contingency. J Neurosci. 2004;24:7540–7548. doi: 10.1523/JNEUROSCI.1921-04.2004.

- 13.Chudasama Y, Robbins TW. Dissociable contributions of the orbitofrontal and infralimbic cortex to pavlovian autoshaping and discrimination reversal learning: further evidence for the functional heterogeneity of the rodent frontal cortex. J Neurosci. 2003;23:8771–8780. doi: 10.1523/JNEUROSCI.23-25-08771.2003.

- 14.Hornak J, et al. Reward-related reversal learning after surgical excisions in orbito-frontal or dorsolateral prefrontal cortex in humans. J Cogn Neurosci. 2004;16:463–478. doi: 10.1162/089892904322926791.

- 15.Kim J, Ragozzino ME. The involvement of the orbitofrontal cortex in learning under changing task contingencies. Neurobiol Learn Mem. 2005;83:125–133. doi: 10.1016/j.nlm.2004.10.003.

- 16.Fellows LK, Farah MJ. Ventromedial frontal cortex mediates affective shifting in humans: evidence from a reversal learning paradigm. Brain. 2003;126:1830–1837. doi: 10.1093/brain/awg180.

- 17.Tsuchida A, Doll BB, Fellows LK. Beyond reversal: a critical role for human orbitofrontal cortex in flexible learning from probabilistic feedback. J Neurosci. 2010;30:16868–16875. doi: 10.1523/JNEUROSCI.1958-10.2010.

- 18.Riceberg JS, Shapiro ML. Reward stability determines the contribution of orbitofrontal cortex to adaptive behavior. J Neurosci. 2012;32:16402–16409. doi: 10.1523/JNEUROSCI.0776-12.2012.

- 19.Izquierdo A, Jentsch JD. Reversal learning as a measure of impulsive and compulsive behavior in addictions. Psychopharmacology (Berl) 2012;219:607–620. doi: 10.1007/s00213-011-2579-7.

- 20.Kringelbach ML, Rolls ET. The functional neuroanatomy of the human orbitofrontal cortex: evidence from neuroimaging and neuropsychology. Prog Neurobiol. 2004;72:341–372. doi: 10.1016/j.pneurobio.2004.03.006.

- 21.Schoenbaum G, Nugent S, Saddoris MP, Setlow B. Orbitofrontal lesions in rats impair reversal but not acquisition of go, no-go odor discriminations. Neuroreport. 2002;13:885–890. doi: 10.1097/00001756-200205070-00030.

- 22.Schoenbaum G, Setlow B, Nugent SL, Saddoris MP, Gallagher M. Lesions of orbitofrontal cortex and basolateral amygdala complex disrupt acquisition of odor-guided discriminations and reversals. Learn Mem. 2003;10:129–140. doi: 10.1101/lm.55203.

- 23.Kazama A, Bachevalier J. Selective aspiration or neurotoxic lesions of orbital frontal areas 11 and 13 spared monkeys’ performance on the object discrimination reversal task. J Neurosci. 2009;29:2794–2804. doi: 10.1523/JNEUROSCI.4655-08.2009.

- 24.Rudebeck PH, Saunders RC, Prescott AT, Chau LS, Murray EA. Prefrontal mechanisms of behavioral flexibility, emotion regulation and value updating. Nat Neurosci. 2013;16:1140–1145. doi: 10.1038/nn.3440.

- 25.Rudebeck PH, Murray EA. Amygdala and orbitofrontal cortex lesions differentially influence choices during object reversal learning. J Neurosci. 2008;28:8338–8343. doi: 10.1523/JNEUROSCI.2272-08.2008.

- 26.Winstanley CA, Theobald DEH, Cardinal RN, Robbins TW. Contrasting roles of basolateral amygdala and orbitofrontal cortex in impulsive choice. J Neurosci. 2004;24:4718–4722. doi: 10.1523/JNEUROSCI.5606-03.2004.

- 27.Chudasama Y, Kralik JD, Murray EA. Rhesus monkeys with orbital prefrontal cortex lesions can learn to inhibit prepotent responses in the reversed reward contingency task. Cereb Cortex. 2007;17:1154–1159. doi: 10.1093/cercor/bhl025.

- 28.Wallis JD. Orbitofrontal cortex and its contribution to decision-making. Annu Rev Neurosci. 2007;30:31–56. doi: 10.1146/annurev.neuro.30.051606.094334.

- 29.Aron AR, Robbins TW, Poldrack RA. Inhibition and the right inferior frontal cortex: one decade on. Trends Cogn Sci. 2014;18:177–185. doi: 10.1016/j.tics.2013.12.003.

- 30.Rolls ET. The orbitofrontal cortex. Phil Trans R Soc Lond B. 1996;351:1433–1443. doi: 10.1098/rstb.1996.0128.

- 31.Thorpe SJ, Rolls ET, Maddison S. The orbitofrontal cortex: neuronal activity in the behaving monkey. Exp Brain Res. 1983;49:93–115. doi: 10.1007/BF00235545.

- 32.Critchley HD, Rolls ET. Hunger and satiety modify the responses of olfactory and visual neurons in the primate orbitofrontal cortex. J Neurophysiol. 1996;75:1673–1686. doi: 10.1152/jn.1996.75.4.1673.

- 33.Critchley HD, Rolls ET. Olfactory neuronal responses in the primate orbitofrontal cortex: analysis in an olfactory discrimination task. J Neurophysiol. 1996;75:1659–1672. doi: 10.1152/jn.1996.75.4.1659.

- 34.Rolls ET, Critchley HD, Mason R, Wakeman EA. Orbitofrontal cortex neurons: role in olfactory and visual association learning. J Neurophysiol. 1996;75:1970–1981. doi: 10.1152/jn.1996.75.5.1970.

- 35.Cohen JY, Haesler S, Vong L, Lowell BB, Uchida N. Neuron-type-specific signals for reward and punishment in the ventral tegmental area. Nature. 2012 doi: 10.1038/nature10754. (in the press)

- 36.Burke KA, Takahashi YK, Correll J, Brown PL, Schoenbaum G. Orbitofrontal inactivation impairs reversal of Pavlovian learning by interfering with ‘disinhibition’ of responding for previously unrewarded cues. Eur J Neurosci. 2009;30:1941–1946. doi: 10.1111/j.1460-9568.2009.06992.x.

- 37.Takahashi YK, et al. The orbitofrontal cortex and ventral tegmental area are necessary for learning from unexpected outcomes. Neuron. 2009;62:269–280. doi: 10.1016/j.neuron.2009.03.005.

- 38.Gallagher M, McMahan RW, Schoenbaum G. Orbitofrontal cortex and representation of incentive value in associative learning. J Neurosci. 1999;19:6610–6614. doi: 10.1523/JNEUROSCI.19-15-06610.1999.

- 39.Dias R, Robbins TW, Roberts AC. Dissociation in prefrontal cortex of affective and attentional shifts. Nature. 1996;380:69–72. doi: 10.1038/380069a0.

- 40.McAlonan K, Brown VJ. Orbital prefrontal cortex mediates reversal learning and not attentional set shifting in the rat. Behav Brain Res. 2003;146:97–103. doi: 10.1016/j.bbr.2003.09.019.

- 41.Bissonette GB, et al. Double dissociation of the effects of medial and orbital prefrontal cortical lesions on attentional and affective shifts in mice. J Neurosci. 2008;28:11124–11130. doi: 10.1523/JNEUROSCI.2820-08.2008.

- 42.Ostlund SB, Balleine BW. Orbitofrontal cortex mediates outcome encoding in Pavlovian but not instrumental learning. J Neurosci. 2007;27:4819–4825. doi: 10.1523/JNEUROSCI.5443-06.2007.

- 43.Izquierdo AD, Murray EA. Bilateral orbital prefrontal cortex lesions disrupt reinforcer devaluation effects in rhesus monkeys. Soc Neurosci Abstr. 2000;26:978.

- 44.West EA, Forcelli PA, McCue DL, Malkova L. Differential effects of serotonin-specific and excitotoxic lesions of OFC on conditioned reinforcer devaluation and extinction in rats. Behav Brain Res. 2013;246:10–14. doi: 10.1016/j.bbr.2013.02.027.

- 45.Schoenbaum G, Chiba AA, Gallagher M. Neural encoding in orbitofrontal cortex and basolateral amygdala during olfactory discrimination learning. J Neurosci. 1999;19:1876–1884. doi: 10.1523/JNEUROSCI.19-05-01876.1999.

- 46.Paton JJ, Belova MA, Morrison SE, Salzman CD. The primate amygdala represents the positive and negative value of visual stimuli during learning. Nature. 2006;439:865–870. doi: 10.1038/nature04490.

- 47.Morrison SE, Saez A, Lau B, Salzman CD. Different time courses for learning-related changes in amygdala and orbitofrontal cortex. Neuron. 2011;71:1127–1140. doi: 10.1016/j.neuron.2011.07.016.

- 48.Kennerley SW, Dahmubed AF, Lara AH, Wallis JD. Neurons in the frontal lobe encode the value of multiple decision variables. J Cogn Neurosci. 2009;21:1162–1178. doi: 10.1162/jocn.2009.21100.

- 49.Kennerley SW, Wallis JD. Evaluating choices by single neurons in the frontal lobe: outcome value encoded across multiple decision variables. Eur J Neurosci. 2009;29:2061–2073. doi: 10.1111/j.1460-9568.2009.06743.x.

- 50.Kennerley SW, Behrens TE, Wallis JD. Double dissociation of value computations in orbitofrontal and anterior cingulate neurons. Nat Neurosci. 2011;14:1581–1589. doi: 10.1038/nn.2961.

- 51.Stalnaker TA, Roesch MR, Franz TM, Burke KA, Schoenbaum G. Abnormal associative encoding in orbitofrontal neurons in cocaine-experienced rats during decision-making. Eur J Neurosci. 2006;24:2643–2653. doi: 10.1111/j.1460-9568.2006.05128.x.

- 52.Rescorla RA. Pavlovian conditioning: it’s not what you think it is. Am Psychol. 1988;43:151–160. doi: 10.1037//0003-066x.43.3.151.

- 53.Lang PJ. The varieties of emotional experience: a meditation on James-Lange theory. Psychol Rev. 1994;101:211–221. doi: 10.1037/0033-295x.101.2.211.

- 54.Maia TV, McClelland JL. A reexamination of the evidence for the somatic marker hypothesis: what participants really know in the Iowa gambling task. Proc Natl Acad Sci USA. 2004;101:16075–16080. doi: 10.1073/pnas.0406666101.

- 55.Bechara A, Damasio H, Damasio AR, Lee GP. Different contributions of the human amygdala and ventromedial prefrontal cortex to decision-making. J Neurosci. 1999;19:5473–5481. doi: 10.1523/JNEUROSCI.19-13-05473.1999.

- 56.Bechara A, Tranel D, Damasio H. Characterization of the decision-making deficit of patients with ventromedial prefrontal cortex lesions. Brain. 2000;123:2189–2202. doi: 10.1093/brain/123.11.2189.

- 57.Bechara A. Neurobiology of decision-making: risk and reward. Semin Clin Neuropsychiatry. 2001;6:205–216. doi: 10.1053/scnp.2001.22927.

- 58.Bechara A, et al. Decision-making deficits, linked to a dysfunctional ventromedial prefrontal cortex, revealed in alcohol and stimulant abusers. Neuropsychologia. 2001;39:376–389. doi: 10.1016/s0028-3932(00)00136-6.

- 59.Bechara A, Damasio H. Decision-making and addiction (part I): impaired activation of somatic states in substance dependent individuals when pondering decisions with negative future consequences. Neuropsychologia. 2002;40:1675–1689. doi: 10.1016/s0028-3932(02)00015-5.

- 60.Pais-Vieira M, Lima D, Galhardo V. Orbitofrontal cortex lesions disrupt risk assessment in a novel serial decision-making task for rats. Neuroscience. 2007;145:225–231. doi: 10.1016/j.neuroscience.2006.11.058.

- 61.Zeeb FD, Winstanley CA. Lesions of the basolateral amygdala and the orbitofrontal cortex differentially affect acquisition and performance of a rodent gambling task. J Neurosci. 2011;31:2197–2204. doi: 10.1523/JNEUROSCI.5597-10.2011.

- 62.Zeeb FD, Winstanley CA. Functional disconnection of the orbitofrontal cortex and basolateral amygdala impairs acquisition of a rat gambling task and disrupts animals’ ability to alter decision-making behavior after reinforcer devaluation. J Neurosci. 2013;33:6434–6443. doi: 10.1523/JNEUROSCI.3971-12.2013.

- 63.Fellows LK, Farah MJ. Different underlying impairments in decision-making following ventromedial and dorsolateral frontal lobe damage in humans. Cereb Cortex. 2005;15:58–63. doi: 10.1093/cercor/bhh108.

- 64.Fellows LK. The role of orbitofrontal cortex in decision making: a component process account. Ann NY Acad Sci. 2007;1121:421–430. doi: 10.1196/annals.1401.023.

- 65.Daw ND, Niv Y, Dayan P. Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nat Neurosci. 2005;8:1704–1711. doi: 10.1038/nn1560.

- 66.Dayan P, Niv Y, Seymour B, Daw ND. The misbehavior of value and the discipline of the will. Neural Netw. 2006;19:1153–1160. doi: 10.1016/j.neunet.2006.03.002.

- 67.Huys QJ, et al. Bonsai trees in your head: how the Pavlovian system sculpts goal-directed choices by pruning decision trees. PLoS Comput Biol. 2012;8:e1002410. doi: 10.1371/journal.pcbi.1002410.

- 68.McDannald MA, et al. Model-based learning and the contribution of the orbitofrontal cortex to the model-free world. Eur J Neurosci. 2012;35:991–996. doi: 10.1111/j.1460-9568.2011.07982.x.

- 69.Bechara A, Dolan S, Hindes A. Decision-making and addiction (part II): myopia for the future or hypersensitivity to reward? Neuropsychologia. 2002;40:1690–1705. doi: 10.1016/s0028-3932(02)00016-7.

- 70.O’Doherty JP. The problem with value. Neurosci Biobehav Rev. 2014;43:259–268. doi: 10.1016/j.neubiorev.2014.03.027.

- 71.Pearson JM, Watson KK, Platt ML. Decision making: the neuroethological turn. Neuron. 2014;82:950–965. doi: 10.1016/j.neuron.2014.04.037.

- 72.Montague PR, Berns GS. Neural economics and the biological substrates of valuation. Neuron. 2002;36:265–284. doi: 10.1016/s0896-6273(02)00974-1.

- 73.Padoa-Schioppa C. Neurobiology of economic choice: a goods-based model. Annu Rev Neurosci. 2011;34:333–359. doi: 10.1146/annurev-neuro-061010-113648.

- 74.Levy DJ, Glimcher PW. The root of all value: a neural common currency for choice. Curr Opin Neurobiol. 2012;22:1027–1038. doi: 10.1016/j.conb.2012.06.001.

- 75.Padoa-Schioppa C, Assad JA. Neurons in orbitofrontal cortex encode economic value. Nature. 2006;441:223–226. doi: 10.1038/nature04676.

- 76.Padoa-Schioppa C, Assad JA. The representation of economic value in the orbitofrontal cortex is invariant for changes in menu. Nat Neurosci. 2008;11:95–102. doi: 10.1038/nn2020.

- 77.Padoa-Schioppa C. Range-adapting representation of economic value in the orbitofrontal cortex. J Neurosci. 2009;29:14004–14014. doi: 10.1523/JNEUROSCI.3751-09.2009.

- 78.Plassmann H, O’Doherty J, Rangel A. Orbitofrontal cortex encodes willingness to pay in everyday economic transactions. J Neurosci. 2007;27:9984–9988. doi: 10.1523/JNEUROSCI.2131-07.2007.

- 79.McNamee D, Rangel A, O’Doherty JP. Category-dependent and category-independent goal-value codes in human ventromedial prefrontal cortex. Nat Neurosci. 2013;16:479–485. doi: 10.1038/nn.3337.

- 80.Levy DJ, Glimcher PW. Comparing apples and oranges: using reward-specific and reward-general subjective value representation in the brain. J Neurosci. 2011;31:14693–14707. doi: 10.1523/JNEUROSCI.2218-11.2011.

- 81.Lebreton M, Jorge S, Michel V, Thirion B, Pessiglione M. An automatic valuation system in the human brain: evidence from functional neuroimaging. Neuron. 2009;64:431–439. doi: 10.1016/j.neuron.2009.09.040.

- 82.Padoa-Schioppa C. Neuronal origins of choice variability in economic decisions. Neuron. 2013;80:1322–1336. doi: 10.1016/j.neuron.2013.09.013.

- 83.McDannald MA, et al. Orbitofrontal neurons acquire responses to ‘valueless’ Pavlovian cues during unblocking. Elife. 2014;3:e02653. doi: 10.7554/eLife.02653.

- 84.Stalnaker TA, et al. Orbitofrontal neurons infer the value and identity of predicted outcomes. Nat Commun. 2014;5:3926. doi: 10.1038/ncomms4926.

- 85.Knutson B, Gibbs SEB. Linking nucleus accumbens dopamine and blood oxygenation. Psychopharmacology (Berl) 2007;191:813–822. doi: 10.1007/s00213-006-0686-7.

- 86.Maunsell JH. Neuronal representations of cognitive state: reward or attention? Trends Cogn Sci. 2004;8:261–265. doi: 10.1016/j.tics.2004.04.003.

- 87.Pearce JM, Kaye H, Hall G. Predictive accuracy and stimulus associability: development of a model for Pavlovian learning. In: Commons ML, Herrnstein RJ, Wagner AR, editors. Quantitative Analyses of Behavior. Ballinger; 1982. pp. 241–255.

- 88.Esber GR, Haselgrove M. Reconciling the influence of predictiveness and uncertainty on stimulus salience: a model of attention in associative learning. Proc Biol Sci. 2011;278:2553–2561. doi: 10.1098/rspb.2011.0836.

- 89.Ogawa M, et al. Risk-responsive orbitofrontal neurons track acquired salience. Neuron. 2013;77:251–258. doi: 10.1016/j.neuron.2012.11.006.

- 90.O’Neill M, Schultz W. Coding of reward risk by orbitofrontal neurons is mostly distinct from coding of reward value. Neuron. 2010;68:789–800. doi: 10.1016/j.neuron.2010.09.031.

- 91.Kepecs A, Uchida N, Zariwala HA, Mainen ZF. Neural correlates, computation and behavioural impact of decision confidence. Nature. 2008;455:227–231. doi: 10.1038/nature07200.

- 92.Leathers ML, Olson CR. In monkeys making value-based decisions, LIP neurons encode cue salience and not action value. Science. 2012;338:132–135. doi: 10.1126/science.1226405.

- 93.Camille N, Griffiths CA, Vo K, Fellows LK, Kable JW. Ventromedial frontal lobe damage disrupts value maximization in humans. J Neurosci. 2011;31:7527–7532. doi: 10.1523/JNEUROSCI.6527-10.2011.

- 94.Fellows LK, Farah MJ. The role of ventromedial prefrontal cortex in decision making: judgment under uncertainty or judgment per se? Cereb Cortex. 2007;17:2669–2674. doi: 10.1093/cercor/bhl176.

- 95.Rudebeck PH, Murray EA. Dissociable effects of subtotal lesions within the macaque orbital prefrontal cortex on reward-guided behavior. J Neurosci. 2011;31:10569–10578. doi: 10.1523/JNEUROSCI.0091-11.2011.

- 96.von Neumann J, Morgenstern O. Theory of Games and Economic Behavior. Princeton University Press; 1947.

- 97.Bunsey M, Eichenbaum H. Conservation of hippocampal memory function in rats and humans. Nature. 1996;379:255–257. doi: 10.1038/379255a0.

- 98.Burke KA, Franz TM, Miller DN, Schoenbaum G. The role of the orbitofrontal cortex in the pursuit of happiness and more specific rewards. Nature. 2008;454:340–344. doi: 10.1038/nature06993.

- 99.Platt ML, Glimcher PW. Neural correlates of decision variables in parietal cortex. Nature. 1999;400:233–238. doi: 10.1038/22268.

- 100.Louie K, Grattan LE, Glimcher PW. Reward value-based gain control: divisive normalization in parietal cortex. J Neurosci. 2011;31:10627–10639. doi: 10.1523/JNEUROSCI.1237-11.2011.

- 101.Cai X, Padoa-Schioppa C. Neuronal encoding of subjective value in dorsal and ventral anterior cingulate cortex. J Neurosci. 2012;32:3791–3808. doi: 10.1523/JNEUROSCI.3864-11.2012.

- 102.Hosokawa T, Kennerley SW, Sloan J, Wallis JD. Single-neuron mechanisms underlying cost-benefit analysis in frontal cortex. J Neurosci. 2013;33:17385–17397. doi: 10.1523/JNEUROSCI.2221-13.2013.

- 103.Watson KK, Platt ML. Social signals in primate orbitofrontal cortex. Curr Biol. 2012;22:2268–2273. doi: 10.1016/j.cub.2012.10.016.

- 104.Roesch MR, Taylor AR, Schoenbaum G. Encoding of time-discounted rewards in orbitofrontal cortex is independent of value representation. Neuron. 2006;51:509–520. doi: 10.1016/j.neuron.2006.06.027.

- 105.Blanchard TC, Hayden BY, Bromberg-Martin ES. Orbitofrontal cortex uses distinct codes for different choice attributes in decisions motivated by curiosity. Neuron. doi: 10.1016/j.neuron.2014.12.050. (in the press)

- 106.Schoenbaum G, Takahashi YK, Liu TL, McDannald M. Does the orbitofrontal cortex signal value? Ann NY Acad Sci. 2011;1239:87–99. doi: 10.1111/j.1749-6632.2011.06210.x.

- 107.Gremel CM, Costa RM. Orbitofrontal and striatal circuits dynamically encode the shift between goal-directed and habitual actions. Nat Commun. 2013;4:2264. doi: 10.1038/ncomms3264.

- 108.McDannald MA, Lucantonio F, Burke KA, Niv Y, Schoenbaum G. Ventral striatum and orbitofrontal cortex are both required for model-based, but not model-free, reinforcement learning. J Neurosci. 2011;31:2700–2705. doi: 10.1523/JNEUROSCI.5499-10.2011.

- 109.McDannald MA, Saddoris MP, Gallagher M, Holland PC. Lesions of orbitofrontal cortex impair rats’ differential outcome expectancy learning but not conditioned stimulus-potentiated feeding. J Neurosci. 2005;25:4626–4632. doi: 10.1523/JNEUROSCI.5301-04.2005.

- 110.Machado CJ, Bachevalier J. The effects of selective amygdala, orbital frontal cortex or hippocampal formation lesions on reward assessment in nonhuman primates. Eur J Neurosci. 2007;25:2885–2904. doi: 10.1111/j.1460-9568.2007.05525.x.

- 111.Gottfried JA, O’Doherty J, Dolan RJ. Encoding predictive reward value in human amygdala and orbitofrontal cortex. Science. 2003;301:1104–1107. doi: 10.1126/science.1087919.

- 112.O’Doherty J, et al. Sensory-specific satiety-related olfactory activation of the human orbitofrontal cortex. Neuroreport. 2000;11:893–897. doi: 10.1097/00001756-200003200-00046.

- 113.Pickens CL, Saddoris MP, Gallagher M, Holland PC. Orbitofrontal lesions impair use of cue-outcome associations in a devaluation task. Behav Neurosci. 2005;119:317–322. doi: 10.1037/0735-7044.119.1.317.

- 114.West EA, DesJardin JT, Gale K, Malkova L. Transient inactivation of orbitofrontal cortex blocks reinforcer devaluation in macaques. J Neurosci. 2011;31:15128–15135. doi: 10.1523/JNEUROSCI.3295-11.2011.

- 115.Lak A, et al. Orbitofrontal cortex is required for optimal waiting based on decision confidence. Neuron. 2014;84:190–201. doi: 10.1016/j.neuron.2014.08.039.

- 116.Abe H, Lee D. Distributed coding of actual and hypothetical outcomes in the orbital and dorsolateral prefrontal cortex. Neuron. 2011;70:731–741. doi: 10.1016/j.neuron.2011.03.026.

- 117.Steiner AP, Redish AD. Behavioral and neurophysiological correlates of regret in rat decision-making on a neuroeconomic task. Nat Neurosci. 2014;17:995–1002. doi: 10.1038/nn.3740.

- 118.Camille N, et al. The involvement of the orbitofrontal cortex in the experience of regret. Science. 2004;304:1167–1170. doi: 10.1126/science.1094550.

- 119.Jones JL, et al. Orbitofrontal cortex supports behavior and learning using inferred but not cached values. Science. 2012;338:953–956. doi: 10.1126/science.1227489.

- 120.Rescorla RA, Wagner AR. A theory of Pavlovian conditioning: variations in the effectiveness of reinforcement and nonreinforcement. In: Black AH, Prokasy WF, editors. Classical Conditioning II: Current Research and Theory. Appleton Century Crofts; 1972. pp. 64–99. [Google Scholar]

- 121.Pearce JM, Hall G. A model for Pavlovian learning: variations in the effectiveness of conditioned, but not of unconditioned, stimuli. Psychol Rev. 1980;87:532–552. [PubMed] [Google Scholar]

- 122.Sutton RS. Learning to predict by the methods of temporal differences. Mach Learn. 1988;3:9–44. [Google Scholar]

- 123.Mirenowicz J, Schultz W. Importance of unpredictability for reward responses in primate dopamine neurons. J Neurophysiol. 1994;72:1024–1027. doi: 10.1152/jn.1994.72.2.1024. [DOI] [PubMed] [Google Scholar]

- 124.Schultz W, Dayan P, Montague PR. A neural substrate of prediction and reward. Science. 1997;275:1593–1599. doi: 10.1126/science.275.5306.1593. [DOI] [PubMed] [Google Scholar]

- 125.Waelti P, Dickinson A, Schultz W. Dopamine responses comply with basic assumptions of formal learning theory. Nature. 2001;412:43–48. doi: 10.1038/35083500. [DOI] [PubMed] [Google Scholar]

- 126.Pan WX, Schmidt R, Wickens JR, Hyland BI. Dopamine cells respond to predicted events during classical conditioning: evidence for eligibility traces in the reward-learning network. J Neurosci. 2005;25:6235–6242. doi: 10.1523/JNEUROSCI.1478-05.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 127.Roesch MR, Calu DJ, Schoenbaum G. Dopamine neurons encode the better option in rats deciding between differently delayed or sized rewards. Nat Neurosci. 2007;10:1615–1624. doi: 10.1038/nn2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 128.Bayer HM, Glimcher PW. Midbrain dopamine neurons encode a quantitative reward prediction error signal. Neuron. 2005;47:129–141. doi: 10.1016/j.neuron.2005.05.020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 129.Morris G, Nevet A, Arkadir D, Vaadia E, Bergman H. Midbrain dopamine neurons encode decisions for future action. Nat Neurosci. 2006;9:1057–1063. doi: 10.1038/nn1743. [DOI] [PubMed] [Google Scholar]

- 130.D’Ardenne K, McClure SM, Nystrom LE, Cohen JD. BOLD responses reflecting dopaminergic signals in the human ventral tegmental area. Science. 2008;319:1264–1267. doi: 10.1126/science.1150605. [DOI] [PubMed] [Google Scholar]

- 131.Schultz W, Dickinson A. Neuronal coding of prediction errors. Annu Rev Neurosci. 2000;23:473–500. doi: 10.1146/annurev.neuro.23.1.473. [DOI] [PubMed] [Google Scholar]

- 132.Tobler PN, O’Doherty J, Dolan RJ, Schultz W. Human neural learning depends on reward prediction errors in the blocking paradigm. J Neurophysiol. 2006;95:301–310. doi: 10.1152/jn.00762.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 133.Nobre AC, Coull JT, Frith CD, Mesulam MM. Orbitofrontal cortex is activated during breaches of expectation in tasks of visual attention. Nat Neurosci. 1999;2:11–12. doi: 10.1038/4513. [DOI] [PubMed] [Google Scholar]

- 134.Sul JH, Kim H, Huh N, Lee D, Jung MW. Distinct roles of rodent orbitofrontal and medial prefrontal cortex in decision making. Neuron. 2010;66:449–460. doi: 10.1016/j.neuron.2010.03.033. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 135.Wallis JD, Rich EL. Challenges of interpreting frontal neurons during value-based decision-making. Front Neurosci. 2011;5:124. doi: 10.3389/fnins.2011.00124. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 136.Takahashi YK, et al. Neural estimates of imagined outcomes in the orbitofrontal cortex drive behavior and learning. Neuron. 2013;80:507–518. doi: 10.1016/j.neuron.2013.08.008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 137.Schoenbaum G, Roesch MR, Stalnaker TA, Takahashi YK. A new perspective on the role of the orbitofrontal cortex in adaptive behaviour. Nat Rev Neurosci. 2009;10:885–892. doi: 10.1038/nrn2753. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 138.Takahashi YK, et al. Expectancy-related changes in firing of dopamine neurons depend on orbitofrontal cortex. Nat Neurosci. 2011;14:1590–1597. doi: 10.1038/nn.2957. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 139.Wheeler EZ, Fellows LK. The human ventromedial frontal lobe is critical for learning from negative feedback. Brain. 2008;131:1323–1331. doi: 10.1093/brain/awn041. [DOI] [PubMed] [Google Scholar]

- 140.Walton ME, Behrens TE, Noonan MP, Rushworth MF. Giving credit where credit is due: orbitofrontal cortex and valuation in an uncertain world. Ann NY Acad Sci. 2011;1239:14–24. doi: 10.1111/j.1749-6632.2011.06257.x. [DOI] [PubMed] [Google Scholar]

- 141.Thorndike EL. A proof of the law of effect. Science. 1933;77:173–175. doi: 10.1126/science.77.1989.173-a. [DOI] [PubMed] [Google Scholar]

- 142.Tolman EC. There is more than one kind of learning. Psychol Rev. 1949;56:144–155. doi: 10.1037/h0055304. [DOI] [PubMed] [Google Scholar]

- 143.Wilson RC, Takahashi YK, Schoenbaum G, Niv Y. Orbitofrontal cortex as a cognitive map of task space. Neuron. 2014;81:267–279. doi: 10.1016/j.neuron.2013.11.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 144.Deacon TW, Eichenbaum H, Rosenberg P, Eckmann KW. Afferent connections of the perirhinal cortex in the rat. J Comp Neurol. 1983;220:168–190. doi: 10.1002/cne.902200205. [DOI] [PubMed] [Google Scholar]

- 145.Voorn P, Vanderschuren LJMJ, Groenewegen HJ, Robbins TW, Pennartz CMA. Putting a spin on the dorsal-ventral divide of the striatum. Trends Neurosci. 2004;27:468–474. doi: 10.1016/j.tins.2004.06.006. [DOI] [PubMed] [Google Scholar]

- 146.Groenewegen HJ, Berendse HW, Wolters JG, Lohman AHM. The anatomical relationship of the prefrontal cortex with the striatopallidal system, the thalamus and the amygdala: evidence for a parallel organization. Prog Brain Res. 1990;85:95–116. doi: 10.1016/s0079-6123(08)62677-1. [DOI] [PubMed] [Google Scholar]

- 147.Klein-Flügge MC, Barron HC, Brodersen KH, Dolan RJ, Behrens TE. Segregated encoding of reward-identity and stimulus-reward associations in human orbitofrontal cortex. J Neurosci. 2013;33:3202–3211. doi: 10.1523/JNEUROSCI.2532-12.2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 148.Schoenbaum G, Eichenbaum H. Information coding in the rodent prefrontal cortex. I. Single-neuron activity in orbitofrontal cortex compared with that in pyriform cortex. J Neurophysiol. 1995;74:733–750. doi: 10.1152/jn.1995.74.2.733. [DOI] [PubMed] [Google Scholar]

- 149.Öngür D, Price JL. The organization of networks within the orbital and medial prefrontal cortex of rats, monkeys and humans. Cereb Cortex. 2000;10:206–219. doi: 10.1093/cercor/10.3.206. [DOI] [PubMed] [Google Scholar]

- 150.Schoenbaum G, Setlow B, Gallagher M. Orbitofrontal cortex: modeling prefrontal function in rats. In: Squire L, Schacter D, editors. The Neuropsychology of Memory. Guilford Press; 2002. pp. 463–477. [Google Scholar]