Abstract

Underlying many complex behaviors are simple learned associations that allow humans and animals to anticipate the consequences of their actions. The orbitofrontal cortex and basolateral amygdala are two regions that are crucial to this process. In this review, we go back to basics and discuss the literature implicating both regions in simple paradigms that require the development of associations between stimuli and the motivationally significant outcomes they predict. Much of the functional research on this ability has suggested that the orbitofrontal cortex and basolateral amygdala play very similar roles in making these predictions. However, electrophysiological data demonstrate critical differences in the way neurons in these regions respond to predictive cues, revealing a difference in their functional roles. On the basis of these data and earlier theories, we propose that the basolateral amygdala is integral to updating information about cue-outcome contingencies, whereas the orbitofrontal cortex is critical to forming a wider network of past and present associations that are called upon by the basolateral amygdala to benefit future learning episodes. The tendency for orbitofrontal neurons to encode past and present contingencies in distinct neuronal populations may facilitate its role in the formation of complex, high-dimensional, state-specific associations.

Our brains are wired to make predictions about motivationally significant events. They evolved to use sensory input - different scents, colors, and sounds - to predict the presence of edible plants, animals, or predators. Not much has changed. We use these same learning processes to navigate around a supermarket or avoid getting hit by a car as we cross the street. Our tasks have become different in detail and more elaborate - we do not hunt for our meals, choosing instead between hundreds of dinner options in a supermarket - but the ability to form associations between environmental stimuli and the biologically significant outcomes they predict remains central to guiding our behavior.

Two brain regions thought to be critical for making predictions to guide behavior are the orbitofrontal cortex (OFC) and the basolateral amygdala (BLA). These two structures are heavily interconnected (Aggleton, Burton, and Passingham, 1980; Morecraft, Geula, and Mesulam, 1992) and are often implicated in similar functions, inasmuch as damage or inactivation of either region produces remarkably similar deficits. For example, it has been consistently reported that lesions of either the OFC or BLA leave animals unable to reduce their responding to a cue predicting a devalued food outcome (Blundell, Hall, and Killcross, 2001; Johnson, Gallagher, and Holland, 2009; Ostlund and Balleine, 2007; Pickens, Saddoris, Gallagher, and Holland, 2005; Pickens, Saddoris, Setlow, Gallagher, Holland, and Schoenbaum, 2003; West, DesJardin, Gale, and Malkova, 2011). Further, several studies have found reduced neural activity in the OFC or BLA in anticipation of a devalued reward (Critchley and Rolls, 1996; Gottfried, 2003).

Both OFC and BLA have also been found to be critical for extinction, the process whereby humans and animals learn that a cue that was previously predictive of some outcome no longer leads to that outcome (Falls, Miserendino, and Davis, 1992; Gottfried and Dolan, 2004; Laurent and Westbrook, 2008; Panayi and Killcross, 2014). Similarly, they have both been implicated in reversal of previously acquired contingencies, though only the OFC is now thought to be functionally necessary for reversal learning (Butter, 1969; Rudebeck and Murray, 2008; Schoenbaum, Nugent, Saddoris, and Setlow, 2002; Schoenbaum, Setlow, Saddoris, and Gallagher, 2003), and then only under some conditions (Jang, Costa, Rudebeck, Chudasama, Murray, and Averbeck, 2015; Machado and Bachevalier, 2007; Rudebeck and Murray, 2011; Rudebeck, Saunders, Prescott, Chau, and Murray, 2013c; Walton, Behrens, Buckley, Rudebeck, and Rushworth, 2010). Finally, both the OFC and BLA are critical for the ability of predictive cues to elicit a sensory-specific representation of the food outcomes they predict (Blundell et al., 2001; Killcross, Robbins, and Everitt, 1997; Klein-Flugge, Barron, Brodersen, Dolan, and Behrens, 2013; McDannald, Esber, Wegener, Wied, Liu, Stalnaker, Jones, Trageser, and Schoenbaum, 2014; McDannald, Lucantonio, Burke, Niv, and Schoenbaum, 2011; Ostlund and Balleine, 2007). Despite some subtle differences in the specific nature of the deficits observed in these tasks across labs, the data obtained by manipulating activity in these regions have often rendered explanations of their respective functions almost indistinguishable.

Insights from the electrophysiological data

At face value, recordings of neurons within the OFC and BLA also suggest similarity of function in these regions. For example, neurons in both regions fire to cues that predict motivationally significant outcomes and also respond when the outcomes themselves are presented (Critchley and Rolls, 1996; Kennerley and Wallis, 2009; Paton, Belova, Morrison, and Salzman, 2006; Rolls, Critchley, Mason, and Wakeman, 1996; Samuelsen, Gardner, and Fontanini, 2012; Schoenbaum, Chiba, and Gallagher, 1998; Schoenbaum and Eichenbaum, 1995; Wallis and Miller, 2003). When rats learn that one odor predicts delivery of an appetitive outcome whereas another odor predicts a less appetitive outcome or no outcome at all, neurons in both the OFC and BLA become selective to the predictive odor cues across training (Schoenbaum et al., 1998; Schoenbaum and Eichenbaum, 1995). Thus, as animals come to predict delivery of the outcomes, these neurons become tuned to the predictive cues and increase their firing rate during the cue sampling period. Similar results have been reported in primates (Critchley and Rolls, 1996; Kennerley and Wallis, 2009; Paton et al., 2006; Rolls et al., 1996; Thorpe, Rolls, and Maddison, 1983). Interestingly, these are often the same neurons that respond when the outcome is delivered after the cue itself has terminated (Schoenbaum et al., 2003). In both regions, some neurons fire to the reward-predicting odor and others to the odor predicting no reward or punishment. The development of these responses corresponds to the behavior of the animal: as neural responses to the cue are acquired across training, animals demonstrate knowledge of the association by responding appropriately to the predictive cues (Schoenbaum et al., 2003; Paton et al., 2006). These data demonstrate that neurons in both the BLA and OFC encode the associative relationship between cues and the outcomes they predict.

Similarities and differences in how these regions encode value

Activity of neurons in the BLA is sensitive to the value of the outcome predicted by a cue. That is, the degree of activity elicited by presentation of a conditioned stimulus (CS) is tightly related to the amount of the appetitive or aversive outcome it predicts. For example, Belova, Paton, and Salzman (2008) have shown that BLA neurons fire to predictive cues in a manner that reflects, in a graded fashion and with an impressive degree of accuracy, the value of the outcome each cue predicts. One population of these neurons exhibits high levels of firing to a CS that signals a large amount of reward, intermediate levels of firing to cues that signal small amounts of reward, and low levels of firing to cues that signal aversive stimuli. Other neurons show the opposite pattern. In essence, the graded firing of BLA neurons appears to signal the absolute value of the outcome predicted by a cue, while the existence of oppositely tuned populations reveals the valence of that outcome. Further, neurons in the BLA are not only sensitive to the predicted amount of reward; they also adapt to signal the relative value of outcomes when background rates of reinforcement change (Bermudez and Schultz, 2009). Bermudez and Schultz (2009) demonstrated that neurons in the BLA (and a smaller proportion in the central nucleus of the amygdala) adapted their responses to outcome-predictive cues depending on how much reinforcement the monkeys received in the absence of the cue. Specifically, these neurons' responses to the cue declined when monkeys experienced a high rate of reward outside of the CS, and recovered when the background rate of reinforcement decreased and the cue again became a unique predictor of the outcome.
This demonstrates that BLA neurons are sensitive to the contingency between the cue and outcome; BLA neurons do not just encode the correlation or contiguity between presentation of a cue and a motivationally significant outcome, they specifically encode the predictive relationship between them. Taken together, these data suggest that cue-selective responding in the BLA is sensitive to the value and valence of the reward that the animal has learned through past experience is contingent upon that cue’s occurrence.
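The difference between contingency and contiguity can be made concrete with ΔP, a standard contingency measure from associative learning theory: ΔP = P(outcome | CS) − P(outcome | no CS). The Python sketch below is our own illustration, not an analysis from Bermudez and Schultz (2009); the trial counts and the function name `delta_p_from_counts` are hypothetical. It shows how contiguity (how often the cue is followed by reward) can stay fixed while contingency falls to zero as background reward increases.

```python
def delta_p_from_counts(cs_reward: int, cs_no_reward: int,
                        no_cs_reward: int, no_cs_no_reward: int) -> float:
    """Contingency Delta-P = P(reward | CS) - P(reward | no CS), from a 2x2 table of trial counts."""
    p_reward_given_cs = cs_reward / (cs_reward + cs_no_reward)
    p_reward_given_no_cs = no_cs_reward / (no_cs_reward + no_cs_no_reward)
    return p_reward_given_cs - p_reward_given_no_cs


# Hypothetical sessions: the cue is followed by reward on 18 of 20 trials in both,
# so cue-reward pairing (contiguity) is identical; only background reward differs.
low_background = delta_p_from_counts(18, 2, 0, 20)    # reward never occurs without the cue
high_background = delta_p_from_counts(18, 2, 18, 2)  # reward is just as likely without the cue

print(f"Low background reward:  Delta-P = {low_background:.2f}")
print(f"High background reward: Delta-P = {high_background:.2f}")
```

A signal driven by contiguity alone would be the same in both hypothetical sessions, whereas the BLA responses described above appear closer to ΔP, weakening when reward is frequent outside the cue.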

Studies exploring neural activity in the OFC have found that OFC neurons are also influenced by predicted value (Bouret and Richmond, 2010; Gottfried, 2003; Padoa-Schioppa and Assad, 2006; Tremblay and Schultz, 1999; Tremblay and Schultz, 2000). For example, Padoa-Schioppa and Assad (2006) recorded neurons in the OFC while monkeys chose between actions that would produce one of two outcomes. The monkeys preferred one of the outcomes (outcome A) and were therefore willing to forgo a greater amount of the less preferred outcome (outcome B) to obtain it. To examine the nature of this trade-off, Padoa-Schioppa and Assad (2006) gave monkeys a choice between differing amounts of each outcome, with the number of visual stimuli indicating the amount of each outcome offered. The authors found that OFC neurons responded to many different aspects of the task. One population encoded what the authors termed ‘chosen value’: a pure value signal, independent of outcome identity, that was in line with the monkey’s choice behavior. A second population encoded what the authors termed ‘offer value’, tracking the value of one particular juice on offer. Based on these and similar results (Tremblay and Schultz, 1999; Tremblay and Schultz, 2000), these authors concluded that the OFC encodes value in a manner that reflects the relative value of the outcome to the animal. This resembles the value signaling subsequently reported in the BLA, where neurons’ responses encapsulate both the absolute value and the valence of the predicted reward (Belova et al., 2008), although to our knowledge there has been no test of whether OFC neurons are sensitive to the contingency between cue presentation and outcome delivery.
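The trade-off described above is commonly formalized by placing both offers on a common scale. The Python sketch below assumes, as a simplification, that a single relative-value parameter `rho` (the quantity of B judged equivalent to one unit of A) converts offers of A into units of B, and that the monkey always picks the higher-valued offer; real choices are probabilistic near the indifference point. The value 2.5 and the function names are hypothetical. `value_a` and `value_b` play the role of the ‘offer value’ variables, and the returned value of the chosen offer plays the role of ‘chosen value’.

```python
def offer_values(q_a: float, q_b: float, rho: float) -> tuple[float, float]:
    """Express both offers in units of outcome B.

    rho is the hypothetical relative value of A: one unit of A is worth rho units of B.
    """
    return rho * q_a, float(q_b)


def choose(q_a: float, q_b: float, rho: float) -> tuple[str, float]:
    """Deterministically pick the higher-valued offer; return (outcome, chosen value)."""
    value_a, value_b = offer_values(q_a, q_b, rho)
    return ("A", value_a) if value_a >= value_b else ("B", value_b)


RHO = 2.5  # hypothetical: one unit of A is worth 2.5 units of B
for q_a, q_b in [(1, 1), (1, 2), (1, 3), (2, 4)]:
    outcome, chosen_value = choose(q_a, q_b, RHO)
    print(f"{q_a}A vs {q_b}B -> chooses {outcome}, chosen value = {chosen_value}")
```

With `RHO = 2.5`, the animal forgoes two units of B for one unit of A but not three, which is the kind of trade-off that makes the preferred outcome's value relative rather than fixed.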

Interestingly, Padoa-Schioppa and Assad (2006) also reported a prominent third class of neurons in the OFC, which they termed ‘taste neurons’. These neurons responded only on trials in which one particular outcome was chosen (i.e., either A or B), and their firing did not scale with the amount of reward predicted. Since then, other studies have also found neurons in the OFC that respond to cues predicting a particular identity or value of reward, rather than firing in a graded fashion to cues predicting outcomes of different values (Lopatina, McDannald, Styer, Sadacca, Cheer, and Schoenbaum, 2015; McDannald et al., 2014). An illustrative example comes from recent recordings of OFC neurons in a blocking design (Lopatina et al., 2015). Here, rats were first given an odor that led to delivery of a liquid reward. In a second stage of training, rats received the same odor followed immediately by one of three novel odors. These novel odors predicted either the same, more, or less reward than that predicted by the initial odor alone. Lopatina et al. (2015) found very little evidence of cells that encoded the value of reward in a graded fashion. Rather, the dominant profile in these ensembles was that different populations of OFC neurons responded to each of the three odors: one population responded to the odor that predicted less reward, another to the odor that predicted more reward, and a third to the odor that predicted no change in reward. This cue selectivity does not simply reflect the sensory identity of the odors, because the odor preference emerges across training as animals learn the contingencies associated with the novel odors (Lopatina et al., 2015).
Taken together with data showing that OFC neurons increase their firing rate to cues that signal a change in reward identity in the absence of a value shift (Howard, Gottfried, Tobler, and Kahnt, 2015; Klein-Flugge et al., 2013; McDannald et al., 2011), this research suggests that the OFC encodes outcomes of different value or identity in distinct ensembles, in addition to signaling the relative value of the outcome predicted by the cue. These data suggest that the OFC encodes not only value but also specific conjunctions between different stimuli. That is, OFC neurons are sensitive to particular combinations of cues and outcomes, with different populations responding to different cues that predict the same outcome. This is distinct from the BLA, where a single population encodes different cues predicting the same outcome and the level of activity elicited by these cues is tightly related to the value of the outcome they predict. This is consistent with earlier conceptions of the OFC as representing associative information as conjunctions (Rolls, 1996; Wallis and Miller, 2003). Notably, this sort of conjunctive encoding of specific associative information may go somewhat beyond the simpler representations in the BLA, which seem to prioritize cue selectivity on the basis of the value of the predicted outcome rather than specific cue-outcome relationships (Paton et al., 2006; Schoenbaum, Chiba, and Gallagher, 1999).

Neuronal responses in the BLA and OFC diverge when contingencies change

When contingencies change and animals are forced to adjust their expectations, responses in the BLA and OFC once again appear to diverge. This is evident in the responses of OFC and BLA neurons during reversal learning (Paton et al., 2006; Rolls et al., 1996; Schoenbaum et al., 1998; 1999; Schoenbaum et al., 2003; Wallis and Miller, 2003). As discussed above, neurons in both regions acquire conditioned responses to predictive cues, with some neurons firing to cues that predict the appetitive outcome and others firing to cues that predict aversive outcomes. When these contingencies are reversed, however, neurons in the BLA and OFC adapt in different ways. Specifically, neurons in the BLA show a reversal of their cue preference (Paton et al., 2006; Schoenbaum et al., 1999). That is, the neurons that were previously firing to the positive odor now begin firing to the negative odor, and vice versa. Much as they show a graded, monotonic signal to cues that predict outcomes of different values, BLA neurons switch their responding between cues when the value of the outcome each cue predicts changes. In other words, BLA neurons again appear to prioritize encoding of outcome value over encoding of the specific cue that predicts it: the identity of the cue is irrelevant, and these neurons will switch to responding to whichever cue predicts the same outcome.

Far fewer neurons in the OFC show this profile of responding when the contingencies reverse (Schoenbaum et al., 1999; Schoenbaum, Chiba, and Gallagher, 2000). Rather, the predominant pattern in the OFC is for a new set of neurons to start responding to the new contingencies (Schoenbaum et al., 2003; Wallis and Miller, 2003). Effectively, a large proportion of the neurons that were cue-selective before the reversal stop responding, and a new set of neurons replace them to exhibit cue selectivity to the new contingencies (Schoenbaum et al., 2003; Wallis and Miller, 2003). This again demonstrates that OFC neurons tend to be sensitive to changes in the combinations of cues and outcomes, rather than showing the outcome-centric encoding seen in the BLA, where the identity of the cue predicting the outcome is irrelevant. Thus, neurons in the BLA change to reflect the animal’s current expectations, whereas neurons in the OFC seem to form new associations when contingencies change, facilitating the maintenance of both new and old associations. This distinction builds upon the idea that the OFC specifically encodes conjunctions between different stimuli (Wallis and Miller, 2003), such that a network of associations representing these conjunctions develops and allows past and present contingencies to exist in distinct populations.

How can the functional connectivity between the BLA and OFC inform our understanding of their similarities and differences?

BLA damage prevents OFC neurons from exhibiting selective activity towards predictive cues

Given that these regions are functionally similar and heavily interconnected, it is of interest to consider how they influence one another. One way to probe this question is to examine experiments that have assessed changes in neuronal responding in one of these regions following damage to the other. For example, this approach has shown that a lack of input from the BLA to the OFC produces dramatic changes in the nature of neuronal responding in the OFC (Hampton, Adolphs, Tyszka, and O’Doherty, 2007; Rudebeck, Mitz, Chacko, and Murray, 2013a; Schoenbaum et al., 2003).

Neural activity in the OFC of BLA-lesioned rats fails to acquire its characteristic cue selectivity across learning. Instead, activity becomes more bound to the identity of the cue itself, and the ‘outcome-selective’ neurons that respond to reward delivery fail to transfer their responses to the cue across learning. Furthermore, following a reversal, the OFC does not appear to recruit new neurons to respond to the reversed contingency in BLA-lesioned animals (Schoenbaum et al., 2003). These data suggest that the acquisition of cue selectivity in OFC neurons depends on input from the BLA, and that when the significance of a cue changes during a reversal, BLA input promotes the formation of a new ensemble in the OFC to encode the changed contingencies. The interaction between BLA and OFC during reversal learning is interesting given research showing that, for the most part, BLA lesions do not produce a deficit in reversal learning. This suggests that under normal circumstances the BLA and OFC interact to produce a change in stored information about cue-outcome relationships, but that animals may also have access to other systems capable of changing their performance during reversals. Of particular interest here is research implicating the central nucleus of the amygdala in governing behavior through stimulus-response associations (Lingawi and Balleine, 2012), whereas, as discussed, the BLA is integral to using stimulus-outcome associations to produce behavior that is sensitive to changes in outcome value. It is likely that both processes contribute to normal behavior, but that in the absence of one system the other is capable of producing adaptive behavior that is sensitive to changes in the predictive relationship between a cue and an outcome.

Insofar as the OFC encodes the relative value of an outcome predicted by a cue, this signaling also appears to depend on input from the amygdala (Rudebeck, Mitz, Chacko, and Murray, 2013b). Like Padoa-Schioppa and Assad (2006), Rudebeck et al. (2013b) reported that neurons in the OFC encode the value of the reward associated with a particular cue. Specifically, monkeys learnt that different visual stimuli led to differing amounts of reward. When the monkeys were presented with the predictive visual cues, the degree of activity in OFC neurons reflected the amount of reward predicted by the stimuli: OFC neurons showed high rates of firing to cues predicting a large amount of reward, moderate rates of firing to cues predicting moderate amounts of reward, and low rates of firing to cues predicting small amounts of reward. Interestingly, lesions of the amygdala (in this case damaging both the central and basolateral nuclei) reduced the magnitude of these value-based signals in the OFC (Rudebeck et al., 2013b). That is, amygdala damage generally reduced the firing rates of OFC neurons in response to cues that signal reward.

These studies show that BLA signaling is critical for allowing OFC neurons to acquire cue responses that reflect changes in contingencies and the value of the outcome predicted by the cue. However, it is important to note that the nature of the responses that emerge in the OFC suggests additional processing within the OFC itself. For example, in the case of value coding, neural populations in the OFC carry information that goes beyond the graded value of reward reflected in BLA neurons. Specifically, distinct populations of OFC neurons appear to prefer particular identities or magnitudes of reward, in addition to signaling the magnitude of reward in a scaled fashion (Lopatina et al., 2015; Padoa-Schioppa and Assad, 2006). The tendency for OFC neurons to carry information beyond that seen in the BLA is also evident in the different cue responses of the two regions following a reversal. While neurons in the BLA reverse their preference following a switch in contingencies, the majority of OFC neurons that were encoding the previous contingencies remain unchanged and a new ensemble is recruited to encode the reversed contingencies (Schoenbaum et al., 2003). Thus, although the BLA is critical for allowing the OFC to signal information about predictive cues, the OFC clearly encodes information beyond what is represented by BLA neurons themselves. As it stands, it appears that the amygdala signals primary information regarding current value and is needed to update changing contingencies; this information is then translated into a wider network within the OFC that encodes past and present conjunctions between cues and outcomes in distinct populations.

BLA neurons fail to integrate specific information about multiple distinct associations in the absence of OFC input

While the BLA signals important information about the predictive status of a cue to the OFC, the OFC also signals important information about predictive relationships to the BLA. In particular, recent evidence suggests that signaling from the OFC contributes to the ability of BLA neurons to integrate information from multiple sources (Lucantonio, Gardner, Mirenzi, Newman, Takahashi, and Schoenbaum, 2015). In this study, rats were trained on an over-expectation task while neurons in the BLA were recorded in OFC-lesioned and sham-lesioned animals. In the initial stage of training, rats were presented with two critical cue-outcome pairings, A1 and V1, each of which led to the same appetitive outcome. Neurons in the BLA acquired their characteristic conditioned responses to these outcome-predictive cues, and this pattern of activity was unaffected by OFC lesions. Rats were subsequently presented with cues A1 and V1 together as a compound, followed by the same outcome. This arrangement evokes a prediction error: if the predictions of A1 and V1 are summed, animals should ‘over-expect’ the reward they actually receive. Behaviorally, this is usually evident as higher responding to the compound than to either cue alone during initial training. Rats then show that they have adjusted their expectations: when finally probed with A1 and V1 individually after compound training, they respond at roughly half the levels seen in the initial conditioning stage. Interestingly, neurons in the BLA usually respond in accordance with behavior across the different phases of this task. At the beginning of the compound stage, when rats over-expect the outcome and exhibit high levels of responding, the firing of BLA neurons reflected this summation of expectancy.
Further, this firing rate declined across the course of compound training in accordance with the rats’ behavioral adjustment to the accurate expectation of reward. In contrast, neurons in the BLA of OFC-lesioned rats failed to show either the summation of firing or the decline in this firing rate with additional compound training. This research demonstrates that while BLA input is necessary to establish neuronal responses in the OFC that reflect the significance of conditioned cues, when multiple past contingencies are necessary to adjust current expectations, the OFC is key to allowing past expectancies about cues predicting the same outcome to update cue-selective associations in the BLA. That is, the predominant value- and outcome-centric coding in the BLA becomes problematic when humans and animals are presented with multiple cues that predict the same outcome. Under such circumstances the OFC is necessary to allow BLA processing to reflect the integration of information from multiple cue-outcome associations.
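The behavioral logic of over-expectation is commonly formalized with the Rescorla-Wagner learning rule, in which all cues present on a trial share a single prediction error, δ = λ − ΣV. The Python sketch below is our illustration of that textbook model, not the analysis used by Lucantonio et al. (2015); the learning rate, trial numbers, asymptote λ = 1, and function names are arbitrary assumptions.

```python
def rw_trial(weights: dict, present: list, outcome: float, alpha: float) -> float:
    """One Rescorla-Wagner trial: all present cues share the error (outcome - summed prediction)."""
    prediction = sum(weights[cue] for cue in present)
    error = outcome - prediction
    for cue in present:
        weights[cue] += alpha * error
    return prediction


def simulate_overexpectation(n_single: int = 50, n_compound: int = 50,
                             alpha: float = 0.2, lam: float = 1.0):
    weights = {"A1": 0.0, "V1": 0.0}
    # Stage 1: A1 and V1 are each trained separately to the same outcome (asymptote lam).
    for _ in range(n_single):
        rw_trial(weights, ["A1"], lam, alpha)
        rw_trial(weights, ["V1"], lam, alpha)
    after_single = dict(weights)
    # Stage 2: the A1+V1 compound is followed by the same single outcome.
    compound_predictions = [rw_trial(weights, ["A1", "V1"], lam, alpha)
                            for _ in range(n_compound)]
    return after_single, compound_predictions, dict(weights)


after_single, compound_predictions, after_compound = simulate_overexpectation()
print("After single-cue training:", {k: round(v, 2) for k, v in after_single.items()})
print("Summed prediction, first compound trial:", round(compound_predictions[0], 2))
print("Summed prediction, last compound trial:", round(compound_predictions[-1], 2))
print("After compound training:", {k: round(v, 2) for k, v in after_compound.items()})
```

The summed prediction starts near 2λ and declines across compound trials, and because both cues share one negative error they lose value equally, each settling near λ/2. This matches the summation, decline, and roughly halved responding described above; the model itself is silent on how or where this summation is implemented in the brain.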

BLA-OFC interactions: A dynamic system for updating and storing networks of learned associations

One way of interpreting the differences outlined above is to suppose that the BLA is involved in updating the value of the outcome predicted by a cue, whereas the OFC is involved in developing a network of associations between stimuli and the outcomes they predict, allowing previous experience to benefit future learning. We began with a discussion of similarities in the deficits produced by damage or inactivation of these regions. However, there are subtle differences in these deficits that may lend support to this view. For example, while both the OFC and BLA have been shown to be involved in Pavlovian devaluation procedures, they appear to be involved in different phases of the task. Specifically, the BLA seems to be more important in acquiring the association between the cue and the predicted outcome (when such an association contains a sensory-specific representation of that outcome). In support of this, pre-training lesions of the BLA disrupt subsequent sensitivity to devaluation (Blundell et al., 2001; Pickens et al., 2003), whereas lesions made after conditioning but prior to the devaluation test do not disrupt the reduction in responding to the cue signaling the now-devalued outcome (Pickens et al., 2003). Further, the BLA also seems to be involved in updating the value of the outcome during devaluation itself, but not in expressing these associations at a choice test (Parkes and Balleine, 2013; Wellman, Gale, and Malkova, 2005). Specifically, reducing glutamatergic signaling in the BLA with the NMDA receptor antagonist ifenprodil during devaluation of the appetitive outcome prevents the subsequent reduction of responding to a cue that predicts that outcome (Parkes and Balleine, 2013). In contrast, the OFC appears to be necessary for the expression of this updated association following devaluation.
That is, lesions or inactivation of the OFC following conditioning disrupt the expression of devaluation sensitivity (Pickens et al., 2005; Pickens et al., 2003; West et al., 2011). This is consistent with the view that the BLA updates the value of an outcome predicted by a cue and then relays this information to the OFC, which builds these associations into a network that reflects the animal’s overall experience with differing contingencies. The OFC is able to deploy this network of representations to mentally simulate the likely outcomes of different courses of action and even to derive novel predictions that go beyond past experience, as, for example, during outcome devaluation, sensory preconditioning (Jones, Esber, McDannald, Gruber, Hernandez, Mirenzi, and Schoenbaum, 2012), or over-expectation. However, the OFC depends on its interactions with the BLA to establish the underlying values at the time of learning.

This interpretation is strengthened by findings that disconnection of the amygdala and OFC prior to training also disrupts devaluation and reversal learning, suggesting that their interaction is integral to the encoding and expression of outcome-selective associations (Baxter, Parker, Lindner, Izquierdo, and Murray, 2000; Jang et al., 2015). Thus, data from both electrophysiological and functional research suggest that the BLA and OFC interact to form a dynamic system capable of rapidly updating Pavlovian contingencies and storing them within a network of associations to benefit future learning episodes.

An architecture for state-specific learning?

As a final note, it is worth considering how the research discussed above fits within a recent proposal that implicates the OFC in the formation of state-specific associations (Bradfield, Dezfouli, van Holstein, Chieng, and Balleine, 2015; Schoenbaum, Stalnaker, and Niv, 2013; Sharpe, Wikenheiser, Niv, and Schoenbaum, 2015; Wilson, Takahashi, Schoenbaum, and Niv, 2014). Specifically, according to Wilson et al. (2014), the OFC holds an abstract representation of the task, called a state space, over which learning is performed. A state space captures the underlying structure of the task, incorporating external information about the state of the environment as well as other relevant information, such as recent actions or a remembered task instruction, that may not be perceptually available. Put simply, this model argues that predictive relationships between a cue and an outcome become tagged with the ‘state’ in which they were experienced. Thus, the central notion is that multiple associations can be formed about the same stimulus in different states. The electrophysiological evidence discussed here, which suggests that neurons in the OFC encode discrete conjunctions between stimuli, shows that this region is capable of encoding multiple associations about the same stimuli when contingencies change or when a stimulus predicts multiple outcomes. Thus, the organization of neuronal activity in the OFC seems to provide a platform in which state-specific associations, such as those postulated by the state-space theory of OFC function (Wilson et al., 2014), could reside. Put another way, the manner in which OFC neurons respond when animals have experienced multiple contingencies suggests that this region tends to encode different experiences in different populations, which may facilitate the development of state-specific associations as described by Wilson et al. (2014).
Given the role of the BLA in allowing the OFC to develop these characteristic neuronal responses, it now becomes of interest to consider how the BLA may facilitate state-specific learning via projections to the OFC.
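The core idea of the state-space account, that the same cue can carry different associations in different states, can be caricatured in a few lines. In the Python sketch below, which is our illustration and not the model of Wilson et al. (2014), cue values are stored under a (state, cue) key and updated with a simple delta rule. When every trial is assigned to one shared state, a reversal overwrites the earlier value; when pre- and post-reversal trials are assigned to distinct states, the old association survives alongside the new one, loosely mirroring the contrast drawn above between in-place reversal in the BLA and recruitment of new populations in the OFC. The class name `ValueTable`, the learning rate, trial counts, and state labels are hypothetical, and the sketch assumes the current state is simply given, sidestepping the inference of hidden states that is central to the state-space account.

```python
from collections import defaultdict


class ValueTable:
    """Hypothetical cue-outcome value store keyed by (state, cue)."""

    def __init__(self, alpha: float = 0.3):
        self.alpha = alpha                 # learning rate (arbitrary assumption)
        self.values = defaultdict(float)   # unseen (state, cue) pairs start at 0

    def update(self, state, cue, outcome: float) -> None:
        key = (state, cue)
        # Delta-rule update toward the observed outcome.
        self.values[key] += self.alpha * (outcome - self.values[key])

    def predict(self, state, cue) -> float:
        return self.values[(state, cue)]


def train(table: ValueTable, phases, use_state: bool, n_trials: int = 30) -> None:
    for phase_name, contingencies in phases:
        # State-free learner: every phase shares one state, so values are overwritten.
        state = phase_name if use_state else "single-state"
        for _ in range(n_trials):
            for cue, outcome in contingencies.items():
                table.update(state, cue, outcome)


# Hypothetical reversal: odor1 is rewarded before the reversal, odor2 after it.
PHASES = [
    ("pre-reversal", {"odor1": 1.0, "odor2": 0.0}),
    ("post-reversal", {"odor1": 0.0, "odor2": 1.0}),
]

state_free = ValueTable()
train(state_free, PHASES, use_state=False)

state_tagged = ValueTable()
train(state_tagged, PHASES, use_state=True)

print("state-free, odor1:", round(state_free.predict("single-state", "odor1"), 3))
print("state-tagged, odor1 pre-reversal:", round(state_tagged.predict("pre-reversal", "odor1"), 3))
print("state-tagged, odor1 post-reversal:", round(state_tagged.predict("post-reversal", "odor1"), 3))
```

After training, the state-free table's value for `odor1` has been driven back toward 0, whereas the state-tagged table still holds a value near 1 for `odor1` in the pre-reversal state and near 0 in the post-reversal state.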

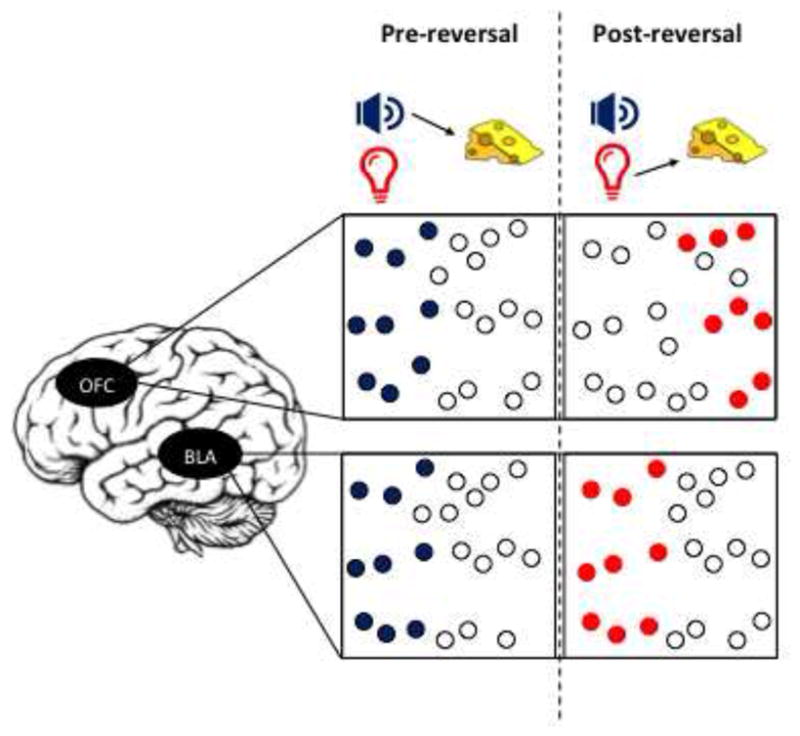

Figure 1. OFC and BLA neurons respond differentially to a reversal in contingencies.

During initial training (pre-reversal; left panel), both OFC and BLA neurons respond to cues predicting a rewarding outcome. However, when contingencies are reversed (post-reversal; right panel), these neuronal responses diverge. Specifically, BLA neurons that were responding to the previously reward-predictive cue switch to responding to the newly reward-predictive cue. In contrast, only about 20% of OFC neurons reverse their preference (Schoenbaum et al., 2003). Instead, a new population of neurons that were previously not cue-selective begins responding to the new contingency.

Highlights.

BLA and OFC are critical for making predictions about motivationally-significant events.

The electrophysiological data reveal important differences in their functional role.

We propose that BLA is critical for updating existing predictions.

In contrast, OFC is important for building a network of cue-outcome associations.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Aggleton JP, Burton MJ, Passingham RE. Cortical and subcortical afferents to the amygdala of the rhesus monkey (Macaca mulatta). Brain Research. 1980;190:347–368. doi: 10.1016/0006-8993(80)90279-6.

- Baxter MG, Parker A, Lindner CC, Izquierdo AD, Murray EA. Control of response selection by reinforcer value requires interaction of amygdala and orbital prefrontal cortex. Journal of Neuroscience. 2000;20:4311–4319. doi: 10.1523/JNEUROSCI.20-11-04311.2000.

- Belova MA, Paton JJ, Salzman CD. Moment-to-Moment Tracking of State Value in the Amygdala. Journal of Neuroscience. 2008;28:10023–10030. doi: 10.1523/JNEUROSCI.1400-08.2008.

- Bermudez MA, Schultz W. Responses of Amygdala Neurons to Positive Reward-Predicting Stimuli Depend on Background Reward (Contingency) Rather Than Stimulus-Reward Pairing (Contiguity). Journal of Neurophysiology. 2009;103:1158–1170. doi: 10.1152/jn.00933.2009.

- Blundell P, Hall G, Killcross S. Lesions of the basolateral amygdala disrupt selective aspects of reinforcer representation in rats. Journal of Neuroscience. 2001;21:9018–9026. doi: 10.1523/JNEUROSCI.21-22-09018.2001.

- Bouret S, Richmond BJ. Ventromedial and Orbital Prefrontal Neurons Differentially Encode Internally and Externally Driven Motivational Values in Monkeys. Journal of Neuroscience. 2010;30:8591–8601. doi: 10.1523/JNEUROSCI.0049-10.2010.

- Bradfield LA, Dezfouli A, van Holstein M, Chieng B, Balleine BW. Medial Orbitofrontal Cortex Mediates Outcome Retrieval in Partially Observable Task Situations. Neuron. 2015;88:1268–1280. doi: 10.1016/j.neuron.2015.10.044.

- Butter CM. Perseveration in extinction and in discrimination reversal tasks following selective frontal ablations in Macaca mulatta. Physiology & Behavior. 1969;4:163–171.

- Critchley HD, Rolls ET. Hunger and satiety modify the responses of olfactory and visual neurons in the primate orbitofrontal cortex. Journal of Neurophysiology. 1996;75:1673–1686. doi: 10.1152/jn.1996.75.4.1673.

- Falls WA, Miserendino MJD, Davis M. Extinction of Fear-Potentiated Startle: Blockade by Infusion of an NMDA Antagonist into the Amygdala. Journal of Neuroscience. 1992;12:854–863. doi: 10.1523/JNEUROSCI.12-03-00854.1992.

- Gottfried JA, O’Doherty J, Dolan RJ. Encoding Predictive Reward Value in Human Amygdala and Orbitofrontal Cortex. Science. 2003;301:1104–1107. doi: 10.1126/science.1087919.

- Gottfried JA, Dolan RJ. Human orbitofrontal cortex mediates extinction learning while accessing conditioned representations of value. Nature Neuroscience. 2004;7:1144–1152. doi: 10.1038/nn1314.

- Hampton AN, Adolphs R, Tyszka JM, O’Doherty JP. Contributions of the Amygdala to Reward Expectancy and Choice Signals in Human Prefrontal Cortex. Neuron. 2007;55:545–555. doi: 10.1016/j.neuron.2007.07.022.

- Howard JD, Gottfried JA, Tobler PN, Kahnt T. Identity-specific coding of future rewards in the human orbitofrontal cortex. Proceedings of the National Academy of Sciences. 2015;112:5195–5200. doi: 10.1073/pnas.1503550112.

- Jang AI, Costa VD, Rudebeck PH, Chudasama Y, Murray EA, Averbeck BB. The Role of Frontal Cortical and Medial-Temporal Lobe Brain Areas in Learning a Bayesian Prior Belief on Reversals. Journal of Neuroscience. 2015;35:11751–11760. doi: 10.1523/JNEUROSCI.1594-15.2015.

- Johnson AW, Gallagher M, Holland PC. The Basolateral Amygdala Is Critical to the Expression of Pavlovian and Instrumental Outcome-Specific Reinforcer Devaluation Effects. Journal of Neuroscience. 2009;29:696–704. doi: 10.1523/JNEUROSCI.3758-08.2009.

- Jones JL, Esber GR, McDannald MA, Gruber AJ, Hernandez A, Mirenzi A, Schoenbaum G. Orbitofrontal Cortex Supports Behavior and Learning Using Inferred But Not Cached Values. Science. 2012;338:953–956. doi: 10.1126/science.1227489.

- Kennerley SW, Wallis JD. Encoding of Reward and Space During a Working Memory Task in the Orbitofrontal Cortex and Anterior Cingulate Sulcus. Journal of Neurophysiology. 2009;102:3352–3364. doi: 10.1152/jn.00273.2009.

- Killcross S, Robbins TW, Everitt BJ. Different types of fear-conditioned behaviour mediated by separate nuclei within amygdala. Nature. 1997;388:377–380. doi: 10.1038/41097.

- Klein-Flugge MC, Barron HC, Brodersen KH, Dolan RJ, Behrens TEJ. Segregated Encoding of Reward-Identity and Stimulus-Reward Associations in Human Orbitofrontal Cortex. Journal of Neuroscience. 2013;33:3202–3211. doi: 10.1523/JNEUROSCI.2532-12.2013.

- Laurent V, Westbrook RF. Distinct contributions of the basolateral amygdala and the medial prefrontal cortex to learning and relearning extinction of context conditioned fear. Learning & Memory. 2008;15:657–666. doi: 10.1101/lm.1080108.

- Lingawi NW, Balleine BW. Amygdala central nucleus interacts with dorsolateral striatum to regulate the acquisition of habits. Journal of Neuroscience. 2012;32:1073–1081. doi: 10.1523/JNEUROSCI.4806-11.2012.

- Lopatina N, McDannald MA, Styer CV, Sadacca BF, Cheer JF, Schoenbaum G. Lateral orbitofrontal neurons acquire responses to upshifted, downshifted, or blocked cues during unblocking. eLife. 2015;4:e11299. doi: 10.7554/eLife.11299.

- Lucantonio F, Gardner MPH, Mirenzi A, Newman LE, Takahashi YK, Schoenbaum G. Neural Estimates of Imagined Outcomes in Basolateral Amygdala Depend on Orbitofrontal Cortex. Journal of Neuroscience. 2015;35:16521–16530. doi: 10.1523/JNEUROSCI.3126-15.2015.

- Machado CJ, Bachevalier J. The effects of selective amygdala, orbital frontal cortex or hippocampal formation lesions on reward assessment in nonhuman primates. European Journal of Neuroscience. 2007;25:2885–2904. doi: 10.1111/j.1460-9568.2007.05525.x.

- McDannald MA, Esber GR, Wegener MA, Wied HM, Liu T-L, Stalnaker TA, Jones JL, Trageser J, Schoenbaum G. Orbitofrontal neurons acquire responses to ‘valueless’ Pavlovian cues during unblocking. eLife. 2014;3:e02653. doi: 10.7554/eLife.02653.

- McDannald MA, Lucantonio F, Burke KA, Niv Y, Schoenbaum G. Ventral Striatum and Orbitofrontal Cortex Are Both Required for Model-Based, But Not Model-Free, Reinforcement Learning. Journal of Neuroscience. 2011;31:2700–2705. doi: 10.1523/JNEUROSCI.5499-10.2011.

- Morecraft RJ, Geula C, Mesulam MM. Cytoarchitecture and neural afferents of orbitofrontal cortex in the brain of the monkey. The Journal of Comparative Neurology. 1992;323:341–358. doi: 10.1002/cne.903230304.

- Ostlund SB, Balleine BW. Orbitofrontal Cortex Mediates Outcome Encoding in Pavlovian But Not Instrumental Conditioning. Journal of Neuroscience. 2007;27:4819–4825. doi: 10.1523/JNEUROSCI.5443-06.2007.

- Padoa-Schioppa C, Assad JA. Neurons in the orbitofrontal cortex encode economic value. Nature. 2006;441:223–226. doi: 10.1038/nature04676.

- Panayi MC, Killcross S. Orbitofrontal cortex inactivation impairs between- but not within-session Pavlovian extinction: An associative analysis. Neurobiology of Learning and Memory. 2014;108:78–87. doi: 10.1016/j.nlm.2013.08.002.

- Parkes SL, Balleine BW. Incentive Memory: Evidence the Basolateral Amygdala Encodes and the Insular Cortex Retrieves Outcome Values to Guide Choice between Goal-Directed Actions. Journal of Neuroscience. 2013;33:8753–8763. doi: 10.1523/JNEUROSCI.5071-12.2013.

- Paton JJ, Belova MA, Morrison SE, Salzman CD. The primate amygdala represents the positive and negative value of visual stimuli during learning. Nature. 2006;439:865–870. doi: 10.1038/nature04490.

- Pickens CL, Saddoris MP, Gallagher M, Holland PC. Orbitofrontal Lesions Impair Use of Cue-Outcome Associations in a Devaluation Task. Behavioral Neuroscience. 2005;119:317–322. doi: 10.1037/0735-7044.119.1.317.

- Pickens CL, Saddoris MP, Setlow B, Gallagher M, Holland PC, Schoenbaum G. Different roles for orbitofrontal cortex and basolateral amygdala in a reinforcer devaluation task. Journal of Neuroscience. 2003;23:11078–11084. doi: 10.1523/JNEUROSCI.23-35-11078.2003.

- Rolls ET. The orbitofrontal cortex. Philosophical Transactions of the Royal Society of London B. 1996;351:1433–1443. doi: 10.1098/rstb.1996.0128.

- Rolls ET, Critchley HD, Mason R, Wakeman EA. Orbitofrontal cortex neurons: Role in olfactory and visual association learning. Journal of Neurophysiology. 1996;75:1970–1981. doi: 10.1152/jn.1996.75.5.1970.

- Rudebeck PH, Mitz AR, Chacko RV, Murray EA. Effects of amygdala lesions on reward-value coding in orbital and medial prefrontal cortex. Neuron. 2013a;80:1519–1531. doi: 10.1016/j.neuron.2013.09.036.

- Rudebeck PH, Mitz AR, Chacko RV, Murray EA. Effects of amygdala lesions on reward-value coding in orbital and medial prefrontal cortex. Neuron. 2013b;80:1519–1531. doi: 10.1016/j.neuron.2013.09.036.

- Rudebeck PH, Murray EA. Amygdala and Orbitofrontal Cortex Lesions Differentially Influence Choices during Object Reversal Learning. Journal of Neuroscience. 2008;28:8338–8343. doi: 10.1523/JNEUROSCI.2272-08.2008.

- Rudebeck PH, Murray EA. Dissociable Effects of Subtotal Lesions within the Macaque Orbital Prefrontal Cortex on Reward-Guided Behavior. Journal of Neuroscience. 2011;31:10569–10578. doi: 10.1523/JNEUROSCI.0091-11.2011.

- Rudebeck PH, Saunders RC, Prescott AT, Chau LS, Murray EA. Prefrontal mechanisms of behavioral flexibility, emotion regulation and value updating. Nature Neuroscience. 2013c;16:1140–1145. doi: 10.1038/nn.3440.

- Samuelsen CL, Gardner MPH, Fontanini A. Effects of Cue-Triggered Expectation on Cortical Processing of Taste. Neuron. 2012;74:410–422. doi: 10.1016/j.neuron.2012.02.031.

- Schoenbaum G, Chiba AA, Gallagher M. Orbitofrontal cortex and basolateral amygdala encode expected outcomes during learning. Nature Neuroscience. 1998;1:155–159. doi: 10.1038/407.

- Schoenbaum G, Chiba AA, Gallagher M. Neural encoding in orbitofrontal cortex and basolateral amygdala during olfactory discrimination learning. Journal of Neuroscience. 1999;19:1876–1884. doi: 10.1523/JNEUROSCI.19-05-01876.1999.

- Schoenbaum G, Chiba AA, Gallagher M. Changes in functional connectivity in orbitofrontal cortex and basolateral amygdala during learning and reversal training. Journal of Neuroscience. 2000;20:5179–5189. doi: 10.1523/JNEUROSCI.20-13-05179.2000.

- Schoenbaum G, Eichenbaum H. Information Coding in the Rodent Prefrontal Cortex. I. Single-Neuron Activity in Orbitofrontal Cortex Compared with That in Pyriform Cortex. Journal of Neurophysiology. 1995;74:733–750. doi: 10.1152/jn.1995.74.2.733.

- Schoenbaum G, Nugent SL, Saddoris MP, Setlow B. Orbitofrontal lesions in rats impair reversal but not acquisition of go, no-go odor discriminations. Neuroreport. 2002;13:885–890. doi: 10.1097/00001756-200205070-00030.

- Schoenbaum G, Setlow B, Saddoris MP, Gallagher M. Encoding Predicted Outcome and Acquired Value in Orbitofrontal Cortex during Cue Sampling Depends upon Input from Basolateral Amygdala. Neuron. 2003;39:855–867. doi: 10.1016/s0896-6273(03)00474-4.

- Schoenbaum G, Stalnaker TA, Niv Y. How Did the Chicken Cross the Road? With Her Striatal Cholinergic Interneurons, Of Course. Neuron. 2013;79:3–6. doi: 10.1016/j.neuron.2013.06.033.

- Sharpe MJ, Wikenheiser AM, Niv Y, Schoenbaum G. The State of the Orbitofrontal Cortex. Neuron. 2015;88:1075–1077. doi: 10.1016/j.neuron.2015.12.004.

- Thorpe SJ, Rolls ET, Maddison S. The orbitofrontal cortex: neuronal activity in the behaving monkey. Experimental Brain Research. 1983;49:93–115. doi: 10.1007/BF00235545.

- Tremblay L, Schultz W. Relative reward preference in primate orbitofrontal cortex. Nature. 1999;398:704–708. doi: 10.1038/19525.

- Tremblay L, Schultz W. Modifications of reward expectation-related neuronal activity during learning in primate orbitofrontal cortex. Journal of Neurophysiology. 2000;83:1877–1885. doi: 10.1152/jn.2000.83.4.1877.

- Wallis JD, Miller EK. Neuronal activity in primate dorsolateral and orbital prefrontal cortex during performance of a reward preference task. European Journal of Neuroscience. 2003;18:2069–2081. doi: 10.1046/j.1460-9568.2003.02922.x.

- Walton ME, Behrens TEJ, Buckley MJ, Rudebeck PH, Rushworth MFS. Separable learning systems in the macaque brain and the role of the orbitofrontal cortex in contingent learning. Neuron. 2010;65:927–939. doi: 10.1016/j.neuron.2010.02.027.

- Wellman LL, Gale K, Malkova L. GABAA-mediated inhibition of basolateral amygdala blocks reward devaluation in macaques. Journal of Neuroscience. 2005;25:4577–4586. doi: 10.1523/JNEUROSCI.2257-04.2005.

- West EA, DesJardin JT, Gale K, Malkova L. Transient Inactivation of Orbitofrontal Cortex Blocks Reinforcer Devaluation in Macaques. Journal of Neuroscience. 2011;31:15128–15135. doi: 10.1523/JNEUROSCI.3295-11.2011.

- Wilson RC, Takahashi YK, Schoenbaum G, Niv Y. Orbitofrontal Cortex as a Cognitive Map of Task Space. Neuron. 2014;81:267–279. doi: 10.1016/j.neuron.2013.11.005.