Abstract

One of the fundamental challenges of visual cognition is how our visual systems combine information about an object's features with its spatial location. A recent phenomenon related to object-location binding, the “Spatial Congruency Bias”, revealed that two objects are more likely to be perceived as having the same identity or features if they appear in the same spatial location, versus if the second object appears in a different location. The Spatial Congruency Bias suggests that irrelevant location information is automatically encoded with and bound to other object properties, biasing their perceptual judgments. Here we further explored this new phenomenon and its role in object-location binding by asking what happens when an object moves to a new location: Is the Spatial Congruency Bias sensitive to spatiotemporal contiguity cues, or does it remain linked to the original object location? Across four experiments, we found that the Spatial Congruency Bias remained strongly linked to the original object location. However, under certain circumstances, e.g. when the first object paused and remained visible for a brief period of time after the movement, the congruency bias was found at both the original location and the updated location. These data suggest that the Spatial Congruency Bias is based more on low-level visual information than spatiotemporal contiguity cues, and reflects a type of object-location binding that is primarily tied to the original object location and may only update to the object's new location if there is time for the features to be re-encoded and re-bound following the movement.

Keywords: object-position binding, spatiotemporal contiguity, object-file, object recognition

Introduction

When we look upon a scene, our visual systems typically need to encode multiple different objects. Each of these objects contains a number of features, such as shape, color, and size, which must all be combined in order for us to recognize what we are seeing. Moreover, we must also process the locations of these objects in order to successfully act upon them. Object location is important not only for visually-guided action; locations have also been proposed to function as “pointers” or “indices” that help individuate objects and solve the “binding problem” (Treisman & Gelade, 1980). For example, when viewing a red pen and a blue mug on your desk, the different features of each object may be bound to that object's location (e.g., forming an “object file”: Kahneman, Treisman, & Gibbs, 1992), such that we don't get confused and incorrectly perceive a blue pen or a red mug.

A number of studies have demonstrated a special role for location in object recognition and a dominance of location information over other types of features across a variety of behavioral tasks (Cave & Pashler, 1995; Chen & Wyble, 2015; Chen, 2009; Golomb, Kupitz, & Thiemann, 2014; Leslie, Xu, Tremoulet, & Scholl, 1998; Pertzov & Husain, 2014; Treisman & Gelade, 1980; Tsal & Lavie, 1988, 1993). Of particular interest, a recent line of research has revealed a phenomenon termed the “Spatial Congruency Bias” (Golomb et al., 2014). The Spatial Congruency Bias reveals that people are more likely to judge two sequentially presented objects as having the same identity or features when they are presented in the same location, compared to when the objects are presented in different locations. This is a robust effect that seems to reflect an automatic influence of location information on object perception. Moreover, it is uniquely driven by location: object location biases judgments of shape, color, orientation, and even facial identity (Golomb et al., 2014; Shafer-Skelton, Kupitz, & Golomb, 2017); yet these features do not bias each other, nor do they bias judgments of location (Golomb et al., 2014). The Spatial Congruency Bias suggests that irrelevant location information is automatically encoded with and bound to other object properties, biasing their perceptual judgments. It seems to reveal an underlying assumption of our visual system that stimuli appearing in the same location are likely to be the same object, with the increased tendency to judge two objects as the same identity presumably resulting from location serving as an indirect link between them, with both objects bound to the same location pointer.

One theoretical account of the Spatial Congruency Bias is that it may be driven by spatiotemporal contiguity; in the real world, objects typically don't disappear and reappear at new locations, so location is generally a reliable cue for “sameness”. Spatiotemporal contiguity is known to be a robust cue for object recognition (Burke, 1952; Cox, Meier, Oertelt, & DiCarlo, 2005; Flombaum, Kundey, Santos, & Scholl, 2004; Flombaum, Scholl, & Santos, 2009; Flombaum & Scholl, 2006; Kahneman et al., 1992; Li & DiCarlo, 2008; Mitroff & Alvarez, 2007; Spelke, Kestenbaum, Simons, & Wein, 1995; Wallis & Bülthoff, 2001; Yi et al., 2008). For example, “object files” are thought to rely on spatiotemporal contiguity, with the strength of spatiotemporal information surpassing the influence of surface feature cues, such as color, size, and shape (Kahneman et al., 1992; Mitroff & Alvarez, 2007; but see Hollingworth & Franconeri, 2009). When stimuli follow a consistent spatiotemporal movement trajectory, we tend to perceive a single object, even if the features have obviously changed; e.g., if participants view a red stimulus pass behind an occluder, and a green stimulus emerges at the expected temporal and spatial point, they tend to perceive a single object that has changed in color (Burke, 1952; Flombaum & Scholl, 2006). Infants exhibit a similar reliance on spatiotemporal information (Flombaum et al., 2009; Spelke et al., 1995), as do nonhuman primates, e.g., monkeys behaving as if a kiwi fruit has transformed into a lemon (Flombaum et al., 2004). Spatiotemporal contiguity also modulates neural representations of object identity (Li & DiCarlo, 2008; Yi et al., 2008). Moreover, artificially altering spatiotemporal regularities can have substantial effects on subsequent object recognition; for example, when participants are trained with repeated exposure to “swapped” objects (Cox et al., 2005) or faces that change identity as the head smoothly rotates (Wallis & Bülthoff, 2001).

In the original Spatial Congruency Bias paradigm (Golomb et al., 2014), stimuli were presented sequentially at either the same or different locations, with a 1-2 second blank delay between presentations. Thus, spatial location biased feature perception even without temporal contiguity. But what if the object moved to a new location while maintaining spatiotemporal contiguity? If the Congruency Bias is sensitive to these contiguity cues, we would expect the bias to track with the moving object and update to reflect its new spatial location. But if the Congruency Bias is based simply on a low-level binding of features to location, movement poses an interesting challenge: If features are bound to one spatial location, when an object moves, is object-location binding automatically updated, or does it remain linked to the original spatial location, such that features would have to be subsequently re-bound to the object's new location?

In the current study, we tested four variations of the Spatial Congruency Bias paradigm with spatiotemporally contiguous object movement. In Experiment 1, a stimulus was briefly presented inside a placeholder object; the placeholder then smoothly moved to a new location, after which a second stimulus appeared either at the final placeholder location, at the original location, or at a control location. In Experiment 2, the stimulus itself moved, rather than a placeholder. Experiments 3 and 4 manipulated timing and occlusion during movement, respectively. Across all four studies, we found a strong Congruency Bias at the initial location, which sometimes, but not always, partially updated to the end of the movement path.

General Methods

Subjects

Subjects for these experiments were recruited from the Ohio State University; all were 18-35 years of age with normal or corrected-to-normal vision. A sample size of N=16 for each experiment was chosen based on a power analysis of the original Spatial Congruency Bias experiment reported in Golomb et al. (2014), which had a Cohen's d = 1.01 and statistical power (1 - β) of 0.96. (One experiment included 17 subjects because we over-scheduled an extra subject.) Informed consent was obtained for all subjects, and study protocols were approved by the Ohio State University Behavioral and Social Sciences Institutional Review Board. All subjects were compensated with a small monetary sum or course credit.

Experimental Setup

Stimuli were generated using the Psychtoolbox extension (Brainard, 1997) for MATLAB (MathWorks) and presented on a 21-inch flatscreen CRT monitor. Subjects were seated at a chinrest 60 cm from the monitor. The monitor was color calibrated with a Minolta CS-100 colorimeter.

Eye-tracking

Eye position was monitored using an EyeLink 1000 eye-tracking system recording pupil and corneal reflection position. Fixation was monitored for all experiments. If at any point the subject's fixation deviated by more than 2°, the trial was aborted and repeated later in the block.

Stimuli

Stimuli were the same as those in Golomb, Kupitz, and Thiemann (2014), modified from the Tarr stimulus set (stimulus images courtesy of Michael J. Tarr, Center for the Neural Basis of Cognition and Department of Psychology, Carnegie Mellon University, http://www.tarrlab.org). Stimuli were drawn from ten families of shape morphs created using FantaMorph software (Abrosoft; http://www.abrosoft.com/). Each of these 10 families contained 20 individual exemplar objects (5% morph difference between each image). Within a family, the “body” of the object always remained constant, while the “appendages” could vary in shape, length, or relative location. Stimulus orientation was never varied. Stimuli were presented on a black background, sized 5×5°, and centered at 7° eccentricity.
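The stimulus dimensions above are given in degrees of visual angle. As a rough illustration of what they mean on the screen, the following sketch converts them to centimeters using the 60 cm viewing distance reported under Experimental Setup. The function names are hypothetical, the screen is assumed to be flat and perpendicular to the line of sight, and the size formula treats the stimulus as if it were centered at fixation, which is only an approximation for a stimulus at 7° eccentricity.

```python
import math

VIEWING_DISTANCE_CM = 60.0  # chinrest-to-monitor distance stated in the text


def extent_cm(angle_deg, distance_cm=VIEWING_DISTANCE_CM):
    """On-screen size of an object subtending angle_deg.

    Assumes the object is centered on the line of sight (an approximation
    for peripheral stimuli).
    """
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


def eccentricity_cm(angle_deg, distance_cm=VIEWING_DISTANCE_CM):
    """On-screen distance from fixation of a point at angle_deg eccentricity."""
    return distance_cm * math.tan(math.radians(angle_deg))


stimulus_size_cm = extent_cm(5.0)          # 5 deg stimulus width/height
stimulus_offset_cm = eccentricity_cm(7.0)  # 7 deg eccentricity

print(f"stimulus size:   {stimulus_size_cm:.2f} cm")    # about 5.24 cm
print(f"stimulus offset: {stimulus_offset_cm:.2f} cm")  # about 7.37 cm
```

Converting these lengths to pixels would additionally require the monitor's physical width and resolution, which are not reported here.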

Analyses

The Spatial Congruency Bias was calculated as in Golomb et al. (2014), using the Signal Detection Theory¹ criterion formula below, where “hits” were defined as the subject responding ‘same’ when the two objects were in fact the same identity and “false-alarms” were ‘same’ responses when the objects had different identities:

$$c = -\frac{1}{2}\left[z(\text{hit rate}) + z(\text{false-alarm rate})\right]$$

The Spatial Congruency Bias was calculated separately for each subject and location condition and submitted to random-effects analyses (planned two-tailed t-tests). Effect sizes were calculated using Cohen's d. Trials on which subjects failed to respond, or on which the RT fell more than 2.5 SD above or below the subject's mean RT, were excluded (less than 3% of trials for each experiment). Subjects who had an overall task accuracy of less than 55% (indicating non-compliance or inability to perform the task; criterion set in advance, consistent with Finlayson & Golomb, 2016; Shafer-Skelton et al., 2017) were excluded from analyses. (Reanalyzing the data without excluding these subjects did not change the patterns or conclusions.)
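As a minimal sketch of these per-subject computations, the code below assumes the standard signal detection definitions: criterion c = -½[z(hit rate) + z(false-alarm rate)] and d′ = z(hit rate) - z(false-alarm rate), where z is the inverse of the standard normal cumulative distribution function. The function names, the use of the sample standard deviation for RT trimming, and the example RT list are illustrative assumptions, not details taken from the original analysis code.

```python
from statistics import NormalDist, mean, stdev

_z = NormalDist().inv_cdf  # inverse standard normal CDF


def criterion(hit_rate, fa_rate):
    """SDT criterion; negative values indicate a bias to respond 'same'.

    Rates of exactly 0 or 1 raise an error here; how such cases were
    handled is not described in the text.
    """
    return -0.5 * (_z(hit_rate) + _z(fa_rate))


def d_prime(hit_rate, fa_rate):
    """SDT sensitivity."""
    return _z(hit_rate) - _z(fa_rate)


def trim_rts(rts, n_sd=2.5):
    """Keep RTs within n_sd sample standard deviations of the mean RT."""
    m, sd = mean(rts), stdev(rts)
    return [rt for rt in rts if abs(rt - m) <= n_sd * sd]


# Group-mean rates for the Start location of Experiment 1 (Table 1).
c = criterion(0.86, 0.50)
dp = d_prime(0.86, 0.50)
print(f"criterion = {c:.2f}, d' = {dp:.2f}")

# Hypothetical RTs (in seconds) with one extreme value.
example_rts = [0.7] * 20 + [5.0]
kept = trim_rts(example_rts)
print(f"kept {len(kept)} of {len(example_rts)} trials")
```

Because the values in Table 1 are averages of per-subject criterion and d′ scores, applying the formulas to group-mean rates as in this example gives values close to, but not identical to, the tabled ones.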

Additional measures such as response time, proportion of ‘same’ responses, and d-prime are reported in the raw data tables included in the Appendix.

Experiment 1 – Placeholder Movement

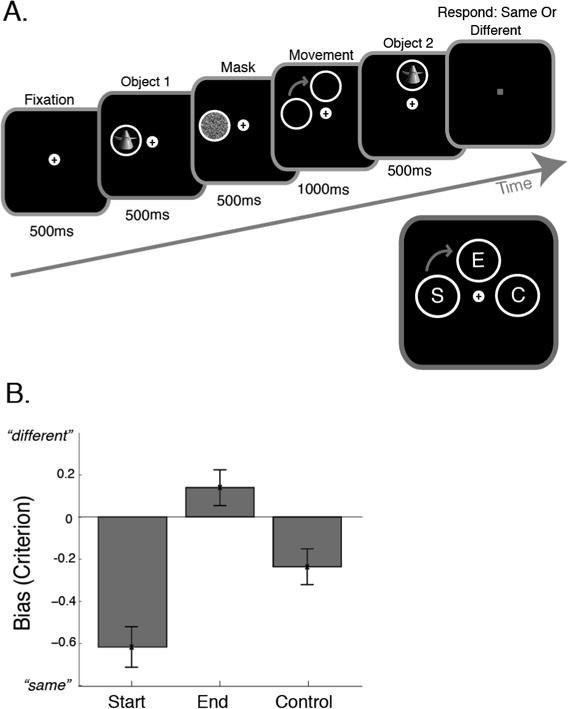

Experiment 1 (Figure 1) modified the original Golomb et al. (2014) Spatial Congruency Bias experiment to test whether the bias updated with object movement. As in the original paradigm, subjects saw two sequentially presented objects separated by a brief delay, and they judged whether the objects were the same or different identity. In the current experiment, Object 1 appeared on the screen inside a circular placeholder, the placeholder moved to a new location during the delay, and then Object 2 appeared in one of three locations: at the Start location (where the first object was presented), at the End location (where the object would be expected to appear if spatiotemporal contiguity were assumed), or at a Control location.

Figure 1. Task and results for Experiment 1 - Placeholder Movement.

A) Trial timing: Object 1 appeared inside a white placeholder for 500ms at the original (start) location. The object was masked, and then the placeholder moved to a new location (end). Object 2 appeared at either the Start (S), End (E), or Control (C) location – see inset. The task was to respond whether Object 1 and Object 2 were the same or different shape. B) Bias (signal detection theory criterion measure) plotted as a function of location condition. Negative bias is increased likelihood to judge the objects as “same shape”. Error bars are standard error of the mean (SEM), N=16.

Methods

Subjects

16 subjects (11 females, 5 males; mean age 20.5 years) participated in this experiment; 4 additional subjects completed the study but were excluded for poor task performance (accuracy < 55%).

Task and Design

Participants initiated each trial by fixating on a central fixation cross. After 500ms of fixation, Object 1 appeared in one of four peripheral locations around the fixation point. The object was presented for 500ms, surrounded by a circular placeholder (Figure 1). The object was then replaced by a mask for 500ms. The mask then disappeared, leaving only the placeholder remaining. The placeholder moved ninety degrees along a circular path, either clockwise or counterclockwise, to the “End” location; motion was continuous and lasted 1000ms. Immediately after movement ceased, Object 2 appeared for 500ms.

Object 2 was presented in one of three locations, all at equal visual eccentricities: On 50% of the trials, Object 2 appeared at the End location, inside the placeholder. On 25% of trials, Object 2 appeared at the “Start” location of the movement (i.e., the location where Object 1 was presented). On the remaining 25% of trials, Object 2 appeared at a “Control” location, located 90 degrees past the End location along the circular trajectory. When Object 2 appeared at the Start or Control locations, the placeholder reappeared with the objects at these locations. The End location was both the spatiotemporally contiguous condition and the most probable condition.
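The geometry of the three Object 2 locations can be sketched as follows. This is an illustration only: the angular convention (degrees counterclockwise from the rightward horizontal), the example start angle of 45°, the constant angular speed during the 1000 ms movement, and all function names are assumptions, since the text specifies only the 7° eccentricity, the 90° movement, and the Control location lying 90° past the End location.

```python
import math

ECCENTRICITY_DEG = 7.0   # stimulus eccentricity stated in the text
MOVE_ANGLE_DEG = 90      # placeholder travels 90 degrees along the circle
MOVE_DURATION_MS = 1000  # movement duration stated in the text


def condition_angles(start_angle_deg, direction):
    """Polar angles of the Start, End, and Control locations.

    direction: +1 for counterclockwise, -1 for clockwise (assumed convention).
    """
    step = MOVE_ANGLE_DEG * direction
    return {
        "Start": start_angle_deg % 360,
        "End": (start_angle_deg + step) % 360,
        "Control": (start_angle_deg + 2 * step) % 360,
    }


def to_xy(angle_deg, ecc=ECCENTRICITY_DEG):
    """Position relative to fixation, in degrees of visual angle."""
    a = math.radians(angle_deg)
    return (ecc * math.cos(a), ecc * math.sin(a))


def placeholder_angle(start_angle_deg, direction, t_ms):
    """Placeholder angle t_ms into the movement, assuming constant speed."""
    frac = min(max(t_ms / MOVE_DURATION_MS, 0.0), 1.0)
    return (start_angle_deg + MOVE_ANGLE_DEG * direction * frac) % 360


angles = condition_angles(45, +1)
print(angles)
print({name: to_xy(a) for name, a in angles.items()})
```

With four possible locations spaced 90° apart, the Control location ends up diametrically opposite the Start location for both movement directions, so all three locations share the same eccentricity.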

Participants indicated whether they thought the two objects were identical (same shape) or not by making a 2-alternative forced choice “same” or “different” button press. Location was irrelevant to the task; subjects were instructed to compare the objects' identities (shape) only. Participants could respond at any point after Object 2 onset, and accuracy feedback (green or red square) was given at the end of each trial. After a 2000ms inter-trial interval, the next trial began.

The identity of Object 1 was randomly chosen from the set of exemplars on each trial; on 50% of trials Object 2 was the same exact image as Object 1 (“same identity”), and on 50% of trials Object 2 was chosen as a different exemplar from the same morph family (“different identity”). The difference between objects was meant to be subtle, and morph distance was chosen individually for each subject based on a staircase conducted during a practice block before the main task. An adaptive QUEST procedure (Watson & Pelli, 1983) was used targeting 75% accuracy. The final staircase value from the adaptive training block was selected as the morph distance for the main task. If performance on a certain block dropped below 65% or above 85%, the morph distance was re-adjusted prior to the next block.
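The between-block re-adjustment rule can be illustrated with a short sketch. Only the 65% and 85% accuracy thresholds come from the text; the step size of one morph step, the clamping range (1 to 19 steps, given 20 exemplars per family), and the function name are hypothetical choices made for illustration, and the initial QUEST staircase is not reproduced here.

```python
def readjust_morph_distance(current_steps, block_accuracy,
                            low=0.65, high=0.85,
                            step=1, min_steps=1, max_steps=19):
    """Return the morph distance (in 5% morph steps) for the next block.

    low/high are the accuracy thresholds from the text; step, min_steps,
    and max_steps are illustrative assumptions.
    """
    if block_accuracy < low:
        current_steps += step   # task too hard: make the objects more different
    elif block_accuracy > high:
        current_steps -= step   # task too easy: make the objects more similar
    return max(min_steps, min(max_steps, current_steps))


print(readjust_morph_distance(4, 0.60))  # accuracy below 65%
print(readjust_morph_distance(4, 0.75))  # accuracy within range
print(readjust_morph_distance(4, 0.90))  # accuracy above 85%
```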

The six different conditions – Object 2 Identity (same or different) × Object 2 Location (Start, End, or Control) – were counterbalanced and presented in a randomized order. Each condition was repeated four times per block (eight times per block for the End Location conditions, since they were twice as likely), to create blocks of thirty-two trials each. Subjects completed between eight and ten blocks total in the allotted time for the session. Eye position was monitored in real-time on each trial; if subjects broke fixation at any point during the trial, a large red X appeared in the middle of the screen, and the trial was aborted and repeated later in the run. Before beginning the main task, subjects completed one practice block to orient them to the task and determine the appropriate staircase level for identity differences.
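The block structure described above can be sketched in a few lines. The repetition counts come from the text; the data representation, the function name, and the fixed random seed are illustrative assumptions.

```python
import random
from collections import Counter

# Repetitions per block for each Object 2 location, per identity condition.
REPEATS = {"Start": 4, "End": 8, "Control": 4}
IDENTITIES = ("same", "different")


def make_block(rng):
    """Build one randomized block of (location, identity) trials."""
    trials = [
        (location, identity)
        for location, n in REPEATS.items()
        for identity in IDENTITIES
        for _ in range(n)
    ]
    rng.shuffle(trials)
    return trials


block = make_block(random.Random(0))  # seed chosen arbitrarily
location_counts = Counter(location for location, _ in block)
identity_counts = Counter(identity for _, identity in block)

print(len(block))        # 32 trials per block
print(location_counts)   # End: 16, Start: 8, Control: 8
print(identity_counts)   # same: 16, different: 16
```

The counts reproduce the 50%/25%/25% split across the End, Start, and Control locations and the equal split between same-identity and different-identity trials.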

Results

Figure 1B shows the bias (criterion) at the three locations Object 2 could appear. A negative bias indicates a greater tendency to respond “same identity”. Hit and false alarm rates are included in Table 1, along with RT and d-prime.

Table 1. Proportion of “same” responses, reaction time, bias (criterion), and d-prime for each location condition in Experiments 1-4.

| Experiment 1 | | Start | End | Control |
|---|---|---|---|---|
| p (“same”) | Same Identity | 0.86 | 0.60 | 0.76 |
| | Different Identity | 0.50 | 0.31 | 0.39 |
| Reaction Time (s) | Same Identity | 0.746 | 0.772 | 0.806 |
| | Different Identity | 0.829 | 0.782 | 0.828 |
| Bias | | -0.61 | 0.14 | -0.23 |
| D-Prime | | 1.20 | 0.80 | 1.09 |

| Experiment 2 | | Start | End | Control 1 | Control 2 |
|---|---|---|---|---|---|
| p (“same”) | Same Identity | 0.91 | 0.67 | 0.73 | 0.76 |
| | Different Identity | 0.41 | 0.26 | 0.35 | 0.35 |
| Reaction Time (s) | Same Identity | 0.763 | 0.815 | 0.796 | 0.798 |
| | Different Identity | 0.804 | 0.826 | 0.822 | 0.818 |
| Bias | | -0.58 | 0.12 | -0.12 | -0.18 |
| D-Prime | | 1.65 | 1.22 | 1.09 | 1.19 |

| Experiment 3 | | Start | End | Control 1 | Control 2 |
|---|---|---|---|---|---|
| p (“same”) | Same Identity | 0.74 | 0.77 | 0.71 | 0.70 |
| | Different Identity | 0.41 | 0.41 | 0.36 | 0.39 |
| Reaction Time (s) | Same Identity | 0.738 | 0.741 | 0.756 | 0.757 |
| | Different Identity | 0.784 | 0.768 | 0.771 | 0.773 |
| Bias | | -0.23 | -0.27 | -0.11 | -0.13 |
| D-Prime | | 0.95 | 1.04 | 0.98 | 0.83 |

| Experiment 4 | | Start | End | Control 1 | Control 2 |
|---|---|---|---|---|---|
| p (“same”) | Same Identity | 0.79 | 0.74 | 0.71 | 0.72 |
| | Different Identity | 0.54 | 0.53 | 0.48 | 0.51 |
| Reaction Time (s) | Same Identity | 0.425 | 0.439 | 0.436 | 0.426 |
| | Different Identity | 0.466 | 0.450 | 0.436 | 0.459 |
| Bias | | -0.49 | -0.38 | -0.27 | -0.33 |
| D-Prime | | 0.75 | 0.63 | 0.64 | 0.59 |

Note that RTs in Experiment 4 were measured from the time Object 2 was fully un-occluded. However, subjects could have begun accumulating information during the 360ms partial occlusion period as the object re-emerged from behind the occluder; hence the faster RTs here than in the other experiments.

The Spatial Congruency Bias was greatest (most negative) when Object 2 appeared at the Start location, indicating that subjects were more likely to report the two objects as being the same identity when the second object appeared at the same original location as the first object, even though this location was inconsistent with spatiotemporal contiguity (and probability) expectations. As in previous reports, the shift in bias was driven by an increase in both hits (reporting same identity when the objects were the same) and false alarms (reporting same identity when the objects were actually different). The bias was significantly greater for the Start location than both the Control location (t(15) = -4.68, p < 0.001, d = 1.17) and the End location (t(15) = -8.07, p < 0.001, d = 2.02). Unexpectedly, we also found a bias in the reverse direction at the End location, which was significantly different from the Control location (t(15) = 4.36, p = 0.001, d = 1.09), meaning subjects were significantly less likely to report these two objects as the same identity in this condition compared to control.
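The effect sizes reported here are numerically consistent with a paired-samples Cohen's d (mean of the per-subject differences divided by their standard deviation), for which d = |t| / √N; for example, 4.68 / √16 = 1.17. The following sketch shows that relationship. The choice of this particular variant of Cohen's d is an inference from the reported numbers rather than something stated in the text, and the per-subject bias values are hypothetical.

```python
import math
from statistics import mean, stdev


def paired_t_and_d(cond_a, cond_b):
    """Paired t statistic, paired-samples Cohen's d, and degrees of freedom."""
    diffs = [a - b for a, b in zip(cond_a, cond_b)]
    n = len(diffs)
    d_z = mean(diffs) / stdev(diffs)  # standardized mean difference
    t = d_z * math.sqrt(n)            # equals mean / (sd / sqrt(n))
    return t, abs(d_z), n - 1


# Hypothetical per-subject bias values at two locations (not real data).
start_bias = [-0.9, -0.5, -0.7, -0.4]
control_bias = [-0.3, -0.2, -0.1, -0.3]

t, d, df = paired_t_and_d(start_bias, control_bias)
print(f"t({df}) = {t:.2f}, d = {d:.2f}")

# Check against a value reported in the text: t(15) = -4.68 with N = 16.
print(round(4.68 / math.sqrt(16), 2))  # 1.17
```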

Discussion

In Experiment 1 we found a Spatial Congruency Bias similar to what has been previously reported (Golomb et al., 2014), where two objects presented in the same spatial location are more likely to be judged as the same identity (shape), even though location is irrelevant to the task. However, here we tested whether the congruency bias would update with object movement, or remain at the original spatial location. Interestingly, despite the End location carrying both spatiotemporal contiguity and probability advantages, the Spatial Congruency bias remained at the Start location, where Object 1 was originally presented. This suggests that the location where an object first appears carries particular importance for the Spatial Congruency Bias and the type of object-location binding it may reflect.

One alternative possibility is that subjects ignored the movement and maintained attention at the starting location. However, the (unspeeded) reaction time results (Table 1) suggest otherwise. Reaction time was actually fastest at the End location, and RTs for both Start and End locations were significantly faster than at the Control location (Start vs. Control: t(15) = -2.74, p = 0.015, d = 0.86; End vs. Control: t(15) = -4.31, p < 0.001, d = 1.08), indicating that subjects' attention successfully shifted to the End location with the movement. It thus seems that while spatial attention updated according to the spatiotemporal contiguity and probability cues, the Spatial Congruency Bias did not.

Finally, while we found a standard congruency bias at the Start location, the results showed an unexpected reverse bias at the End location (subjects were more likely to deem this object as ‘different’, compared to the control). As with the standard congruency bias, the reverse bias was driven by a shift in both hits and false alarms. This effect seems counterintuitive, since participants' attention seemed to follow the object's placeholder to the End location, yet they perceived the second object to be more different when it appeared at this location. In other words, both the Start and End locations had facilitated RTs compared to the Control location, but the congruency biases went in opposite directions. Experiments 2 and 3 modify the experimental paradigm (by having the object remain visible during movement and manipulating presentation times), to see if this reverse bias persists, or if the standard congruency bias updates with movement under these conditions.

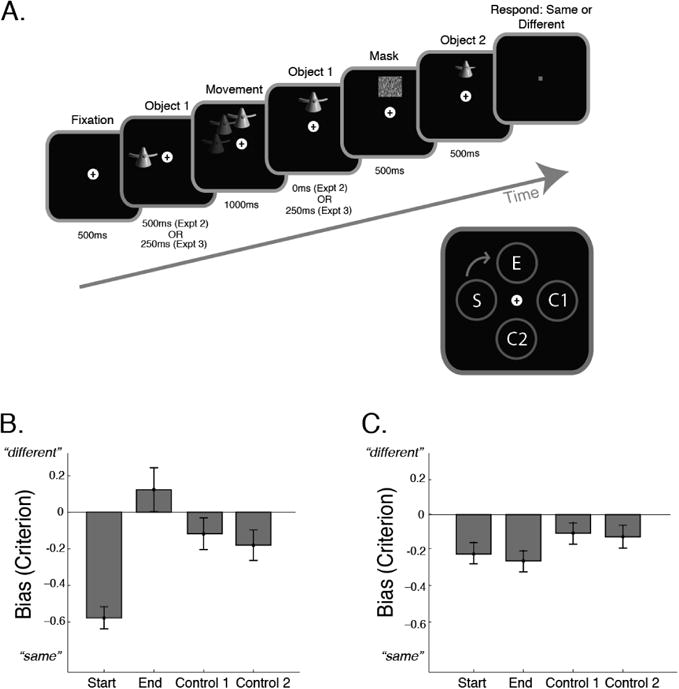

Experiment 2 – Object Movement

Experiment 1 used a placeholder to signal movement to the new location. But perhaps this movement cue was not effective enough to create spatiotemporal object continuity. In Experiment 2 we asked: would the Spatial Congruency Bias still remain at the start location if the object itself remained visible for the entire duration of movement? Furthermore, would the reverse bias still persist?

Methods

Subjects

17 subjects (9 females, 8 males, mean age 19.8 years) participated in this experiment; 2 additional subjects completed the study but were excluded for poor task performance (accuracy < 55%).

Task and Design

In the previous experiment, Object 1 was replaced by a placeholder, and the placeholder moved to the End location. In Experiment 2, Object 1 remained visible – there was no placeholder, and the object itself moved to the End location (Figure 2). Otherwise, the timing was analogous to the first experiment: Object 1 appeared for 500ms at the start location, moved for 1000ms, and was then masked upon reaching the end location. Then, the second object appeared for 500ms at either the start point, end point, or a control. A second control location was also added so that we could control both for the distance effects found in Golomb et al. (2014) and for momentum along the movement trajectory (Figure 2). The proportion of trials was divided equally among the four location conditions, and location remained irrelevant to the task (same/different identity judgment). The Start and End conditions were each compared to both control conditions (note: we did not treat it as a 2x2 design since we would have had to ignore either distance or momentum considerations; rather than prioritize one type of control, we compared all combinations using t-tests). All other details were the same as Experiment 1.

Figure 2. Task and results for Experiment 2 - Object Movement and Experiment 3 – Timing Manipulation.

A) Trial progression for Experiment 2 was the same as Experiment 1 (Figure 1), except the object itself moved, instead of a placeholder. In Experiment 2, Object 1 appeared at the original (start) location for 500ms and disappeared immediately after the movement to the end location was concluded. In Experiment 3, Object 1 was presented at the start location for 250ms before the movement, and remained visible for 250ms at the end location. Object 2 appeared at either the Start (S), End (E), Control 1 (C1), or Control 2 (C2) location – see inset. The task was to respond whether Object 1 and Object 2 were the same or different shape. B-C) Bias (signal detection theory criterion measure) plotted as a function of location condition for Experiment 2 (B) and 3 (C). Negative bias is increased likelihood to judge the objects as “same shape”. Error bars are SEM, N=17 (Expt 2) and N=16 (Expt 3).

Results

Figure 2B illustrates the bias for each of the four location conditions. There was again a strong Spatial Congruency Bias at the Start location, with subjects significantly more likely to report the two objects as the same identity when Object 2 appeared at the same Start location as Object 1 (compared to the End location: t(16) = -6.99, p < 0.001, d = 1.70; Control 1 location: t(16) = -6.06, p < 0.001, d = 1.47; Control 2 location: t(16) = -5.24, p < 0.001, d = 1.27). There was also again a significant reverse bias at the End location compared to both control locations (t(16) = 2.32, p = 0.034, d = 0.56 and t(16) = 3.46, p = 0.003, d = 0.84, for Control 1 and Control 2, respectively). There was no difference in bias between the two control locations (t(16) = -1.25, p = 0.228, d = 0.30).

Discussion

In Experiment 2, Object 1 remained visible during the movement rather than being replaced by a placeholder. We also included an additional control location. However, we saw the same pattern of results as Experiment 1. The Spatial Congruency Bias (greater likelihood to report two objects as the same identity) was restricted to the Start location where the first object was originally presented, despite the fact that the object itself remained visible throughout the movement. Meanwhile, at the spatiotemporally consistent End location, both experiments found a reverse effect, where the congruency bias to say “same identity” was less than at the control locations.

Experiment 3 – Timing Manipulation

Experiments 1 and 2 demonstrated that the Spatial Congruency Bias does not automatically update when the first object (or its placeholder) moves to a new location. However, just because it did not automatically update under those circumstances does not mean it never updates. One possibility is that the Spatial Congruency Bias may in fact update with object movement, but rather than updating immediately, it requires time for the object features to be re-bound to the new location. To test this account, in Experiment 3 we modified the timing of Experiment 2. While Experiment 2 had the first object masked immediately upon completion of the movement trajectory, in Experiment 3 the first object remained visible at the End location for a brief period of time.

Methods

16 subjects (10 females, 6 males, mean age 18.8 years) participated in this experiment; one additional subject completed the study but was excluded for poor task performance (accuracy < 55%).

Experiment 3 was identical to Experiment 2 except for the following timing difference: The total object presentation time was equated across experiments, but in Experiment 3, Object 1 was presented before, during, and after the movement. Object 1 was presented for 250ms at the Start location, the same 1000ms movement period, and then 250ms at the End location before being masked (Figure 2A).

Results

Figure 2C illustrates the bias for each of the four location conditions. The Spatial Congruency Bias now appears to be split between the Start and End locations, with both locations exhibiting a bias to report objects as the same identity. The magnitude of the Spatial Congruency Bias was reduced compared to the previous experiments, as were the effect sizes and statistics (Start vs Control 1: t(15) = -2.11, p = 0.05, d = 0.53; Start vs Control 2: t(15) = -1.51, p = 0.15, d = 0.38; End vs Control 1: t(15) = -2.10, p = 0.05, d = 0.52; End vs Control 2: t(15) = -2.02, p = 0.06, d = 0.50). There was no significant difference in bias between the Start and End locations (t(15) = 0.56, p = 0.58, d = 0.14).

Discussion

In Experiment 3, we again found a Spatial Congruency Bias at the Start location, consistent with the previous experiments. However, the congruency bias was approximately half the magnitude observed in the previous experiments, and now seemed to be split between the Start and End locations. Whether this means the bias was shared between both locations on each trial, or present at the Start location on some trials and the End location on others, cannot be differentiated here. Regardless, by reducing presentation time at the Start location and increasing it at the End location, Object 1 was now presented for equal amounts of time before and after the movement; under these conditions, the Spatial Congruency Bias seemed to at least partially update to the new location. Note that in previous studies, stimulus presentations of 200ms were sufficient to evoke a strong congruency bias (Golomb et al., 2014, Experiment 4); thus even though Object 1's presentation time was split here between the Start and End locations, the presentation time of 250ms at each location should have been sufficient in principle to evoke a full bias, though it is possible that the End location bias might have been even larger with additional time after the movement.

However, there is an important caveat in interpreting these results: Because Object 1 was presented both before and after the movement, the updated Spatial Congruency Bias could be explained by a few different accounts. The extra presentation time after the movement could have allowed enough time for the Congruency Bias to update, but an alternate explanation is that subjects could have simply re-encoded the object at the end location. In other words, it is unclear whether the object-location binding was updated by the spatiotemporal movement or if it was simply over-written. Finally, a third possibility is that subjects could technically have performed the task by just paying attention to one location or the other (i.e., sometimes they encoded the object at the start location, but other times they could have waited until after the movement and just encoded the object then). This ambiguity is why Experiments 1 and 2 were designed in such a way as to not allow the object to be (re)encoded at the End location. While the results and interpretation of Experiment 3 are more ambiguous than the first two experiments, Experiment 3 still makes several important contributions: (1) that the Congruency Bias can sometimes update, (2) that a sizable component still remains at the start location, and (3) that the reverse bias was eliminated.

Experiment 4 – Occluded Movement

In the final experiment, we tested whether the Spatial Congruency Bias updates when an object moves behind an occluder. Occluded movement offers the advantage that an object can still be perceived to have spatiotemporal contiguity even when it is no longer visible, thus allowing an opportune time to swap an object's features/identity more subtly without having to rely on an abrupt change or mask. A number of previous studies looking at spatiotemporal contiguity have tested objects moving behind an occluder, demonstrating that when the motion pattern is spatially and temporally consistent, object identity is perceived to remain intact following occlusion (Burke, 1952; Flombaum & Scholl, 2006). Compelling spatiotemporal information can even trump obvious feature differences in the objects before and after the occluder; e.g., a kiwi turning into a lemon (Flombaum et al., 2004). Here we tested whether the occluded motion scenario might provide a more realistic and compelling impression of spatiotemporal contiguity, increasing the likelihood that the object-location binding would update and the congruency bias would transfer to the End location.

Methods

Sixteen subjects (3 females, 13 males, mean age 18.8 years) participated in this experiment; 8 additional subjects completed the study but were excluded for poor task performance (accuracy < 55%), and 1 additional subject did not have enough trials remaining after RT trimming (see Footnote 2).
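The screening steps described here and in the accompanying footnote can be sketched roughly as follows. This is an illustrative reconstruction, not the analysis code used in the study: the `Trial` structure, the function name `screen_subject`, and the 50% cutoff for "not enough trials remaining" are assumptions, as is the choice to compute accuracy after RT trimming. The 20ms RT cutoff and the 55% accuracy criterion come from the text.

```python
from dataclasses import dataclass

MIN_RT_MS = 20.0             # from the text: trials with RT < 20ms are dropped
MIN_ACCURACY = 0.55          # from the text: subjects below 55% accuracy are excluded
MAX_TRIMMED_FRACTION = 0.5   # ASSUMPTION: cutoff for "not enough trials remaining"


@dataclass
class Trial:
    rt_ms: float   # hypothetical field: RT relative to the fully unoccluded Object 2
    correct: bool  # whether the same/different response was correct


def screen_subject(trials):
    """Return (status, kept_trials) for one subject's list of trials."""
    if not trials:
        raise ValueError("subject has no trials")
    # Step 1: drop trials with implausibly fast responses.
    kept = [t for t in trials if t.rt_ms >= MIN_RT_MS]
    trimmed_fraction = 1 - len(kept) / len(trials)
    # Step 2: exclude subjects who lost too many trials to RT trimming.
    if trimmed_fraction > MAX_TRIMMED_FRACTION:
        return "excluded: too few trials", kept
    # Step 3: exclude subjects with poor accuracy on the remaining trials.
    accuracy = sum(t.correct for t in kept) / len(kept)
    if accuracy < MIN_ACCURACY:
        return "excluded: low accuracy", kept
    return "included", kept
```

Checking the trimmed fraction before accuracy mirrors the case of the one subject whose accuracy exceeded 55% after trimming but who was still dropped for having too few trials.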

Experiment 4 used a different layout from the previous versions to accommodate the occlusion (Figure 3A). Object 1 appeared at one of four possible corner locations around the fixation point; stimuli were sized 4×4° and centered at 9.9° eccentricity. Two occluder bars 4° thick were filled with the random noise mask texture and positioned horizontally (above and below the fixation point) or vertically (left and right of the fixation point) spanning the entire screen. The occluder bars were repositioned for each trial based on the start location and direction of movement, which was counterbalanced and randomized across trials. The occluders were presented for 500ms before Object 1 appeared, and they remained visible for the entire trial. Object 1 appeared for 500ms at the Start location and then moved either vertically or horizontally towards the End location. One occluder bar was always positioned near the end of this movement path, such that the object could pass behind the occluder and then re-appear at the End location.

Figure 3. Task and results for Experiment 4 - Occluded Movement.

A) Trial timing: Object 1 appeared at the original (start) location for 500ms, and then moved toward and behind an occluder. Object 2 re-appeared from behind an occluder and stopped at either the Start (S), End (E), Control 1 (C1), or Control 2 (C2) location – see inset. The task was to respond whether Object 1 and Object 2 were the same or different shape. B) Bias (signal detection theory criterion measure) plotted as a function of location condition. Negative bias is increased likelihood to judge the objects as “same shape”. Error bars are SEM, N=16.
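For readers unfamiliar with the bias measure plotted in panel B, the following is a minimal sketch of the standard signal detection theory criterion for a same/different task. It assumes that a "hit" is a "same" response on a same-shape trial and a "false alarm" is a "same" response on a different-shape trial, and it applies a log-linear correction to avoid infinite z-scores; both the function name and the correction are illustrative assumptions rather than details confirmed in this section.

```python
from statistics import NormalDist


def sdt_measures(same_resp_on_same, n_same, same_resp_on_diff, n_diff):
    """Return (criterion c, sensitivity d') from response counts.

    same_resp_on_same: number of "same" responses on same-shape trials (hits)
    same_resp_on_diff: number of "same" responses on different-shape trials
                       (false alarms)
    """
    # Log-linear correction (an assumption): keeps rates away from 0 and 1.
    hit_rate = (same_resp_on_same + 0.5) / (n_same + 1)
    fa_rate = (same_resp_on_diff + 0.5) / (n_diff + 1)
    z = NormalDist().inv_cdf
    criterion = -0.5 * (z(hit_rate) + z(fa_rate))  # negative = more "same" responses
    d_prime = z(hit_rate) - z(fa_rate)
    return criterion, d_prime


# A subject who says "same" more often overall yields a negative criterion.
c, d = sdt_measures(45, 50, 20, 50)
print(f"criterion = {c:.2f}, d' = {d:.2f}")
```

Under this convention, a more negative criterion in one location condition than in another corresponds to a greater tendency to report "same shape" in that condition, independent of sensitivity.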

The object moved for 830ms before reaching the occluder, at which point it disappeared from view. On 25% of trials Object 2 then re-emerged from the occluder with the expected spatiotemporal trajectory, and paused at the End location for 500ms. (The object was hidden completely behind the occluder for one frame, and then moved for 360ms until it reached the fully unoccluded End location position.) On the remaining trials, Object 2 re-emerged from behind a different location on one of the two occluders, and with the same timing moved toward either the original Start location or one of two control locations. The four locations were equally likely. Participants responded indicating whether they thought the two objects were identical (same shape) or not.

All other details were the same as Experiments 1-3.

Results

Figure 3B illustrates the bias for each of the four location conditions. The Spatial Congruency Bias was greatest (most negative) at the Start location; subjects were significantly more likely to report the two objects as the same identity when the object re-emerged at the Start location after occlusion, compared to Control 1 (t(15) = -4.77, p < 0.001, d = 1.19) and Control 2 (t(15) = -3.64, p = 0.002, d = 0.91) locations. The bias at the Start location was marginally greater than at the spatiotemporally consistent End location (t(15) = -2.01, p = 0.063, d = 0.50). The bias at the End location was numerically greater (more negative) than the controls, but not significantly different from either location (End vs Control 1: t(15) = -1.85, p = 0.084, d = 0.46; End vs Control 2: t(15) = -1.27, p = 0.222, d = 0.32). There was no significant difference in bias between the two control locations (t(15) = -0.85, p = 0.410, d = 0.21). The same pattern of results held when all 24 subjects were analyzed, except the bias at the End location was marginally increased relative to the controls.
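As a consistency check on the statistics above, the reported effect sizes match the paired-samples relation d = |t| / sqrt(N) with N = 16. The sketch below recomputes d from each reported t value; the formula is an inference from the reported numbers (this section does not state how d was computed), and the function name is hypothetical.

```python
import math

N = 16  # number of included subjects in Experiment 4

# (t statistic, reported Cohen's d) for each comparison in the Results
reported = {
    "Start vs Control 1": (-4.77, 1.19),
    "Start vs Control 2": (-3.64, 0.91),
    "Start vs End": (-2.01, 0.50),
    "End vs Control 1": (-1.85, 0.46),
    "End vs Control 2": (-1.27, 0.32),
    "Control 1 vs Control 2": (-0.85, 0.21),
}


def cohens_d_from_paired_t(t, n):
    """Cohen's d for a paired comparison, recovered from t and sample size.

    ASSUMPTION: d is reported as an unsigned magnitude, d = |t| / sqrt(n).
    """
    return abs(t) / math.sqrt(n)


for label, (t, d_reported) in reported.items():
    d = cohens_d_from_paired_t(t, N)
    print(f"{label}: t = {t:.2f}, recomputed d = {d:.2f}, reported d = {d_reported:.2f}")
```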

Discussion

In Experiment 4 an object appeared, moved across the screen, disappeared behind an occluder, and then re-emerged at either the spatiotemporally consistent End location, the original Start location, or one of two control locations. Despite the strong spatiotemporal expectations at the End location, the Spatial Congruency Bias was again strongest at the Start location. The congruency bias at the End location was in the same direction, but was not significantly different from the control locations.

General Discussion

Here we set out to ask whether the Spatial Congruency Bias (Golomb et al., 2014) is sensitive to spatiotemporal contiguity. The Spatial Congruency Bias is a recently discovered phenomenon demonstrating a robust and dominant effect of an object's spatial location on perception of its other features and identity. Specifically, two objects appearing in the same location are more likely to be perceived as having the same features and identity. The Spatial Congruency Bias has been proposed to reflect a special role of location information in object recognition. However, the mechanisms and theoretical underpinnings of the congruency bias have yet to be fully uncovered. One critical question is whether the Spatial Congruency Bias is a purely spatial effect reflecting low-level retinotopic input, or whether it is sensitive to more ecologically relevant information about an object's location. Recent studies from our group have found that the Spatial Congruency Bias remains in retinotopic (not spatiotopic) coordinates after a saccadic eye movement (Shafer-Skelton et al., 2017), and that the congruency bias is driven by 2D (not 3D) spatial location information (Finlayson & Golomb, 2016). However, it has long been known that one of the most compelling cues for object “sameness” is spatiotemporal contiguity (Burke, 1952; Flombaum et al., 2009; Hollingworth & Franconeri, 2009; Kahneman et al., 1992; Mitroff & Alvarez, 2007). Given the links to object recognition and the binding problem, an important question is whether the spatial congruency bias is also sensitive to spatiotemporal contiguity, and whether the bias would update with object movement.

In the current study, we tested four variations of the Spatial Congruency Bias paradigm with spatiotemporally contiguous object movement. In Experiment 1, a stimulus was briefly presented inside a placeholder object; the placeholder then smoothly moved to a new location, and then a second stimulus appeared at the final placeholder location, at the original location, or at a control location. In Experiment 2, the stimulus itself moved, rather than a placeholder. In both experiments, we found a strong Spatial Congruency Bias at the object's original (Start) location. This occurred despite the End location carrying both spatiotemporal contiguity and probability advantages. Interestingly, at the End location there was actually a small effect in the opposite direction in both experiments: a reverse bias where subjects were less likely to report the objects as the same, compared to the Control location condition. The meaning of this reverse bias is unclear: we predicted that subjects would either be more likely to report the objects as the same in this End location compared to Control, or that there would be no difference. The fact that we found a reliable reverse bias was unexpected. One possibility is that the Spatial Congruency Bias reflects a more complex mechanism where there can be both facilitation and inhibition, so to speak. Another possibility is that because the objects were either replaced by a placeholder (Experiment 1) or masked at the end of the movement (Experiment 2), this might have interacted with the congruency bias or object file, causing Object 2 to be perceived as “more” different from the original. The fact that the reverse bias was not seen in Experiment 3, when the object remained visible after movement ended, or in Experiment 4, when the object more naturally passed behind an occluder and re-emerged, could support this interpretation, though further study would be needed. Regardless (or perhaps even in spite of this effect), it is notable that the standard-direction Spatial Congruency Bias remained so robust at the Start location.

Experiments 3 and 4 included manipulations of timing and occlusion during movement, respectively. In Experiment 3, Object 1 remained visible for an additional period after it reached the end of the movement path, such that it was presented for equal amounts of time before and after the movement. This led to the elimination of the reverse bias at the End location, and weak evidence for a standard congruency bias at this location, in addition to the Start location. The congruency bias here was weaker than the previous experiments, as if it were being split between the Start and End locations. However, it is unclear whether the Spatial Congruency Bias actually updated partially to the End location, or if subjects simply re-encoded the object during the delay after the movement.

Finally, Experiment 4 tested a scenario of movement behind an occluder. Object 1 passed behind the occluder near the end of the movement path, which allowed a more natural transition between Object 1 and Object 2. Despite this arguably more compelling sense of spatiotemporal contiguity, the Spatial Congruency Bias was again only reliably present at the original Start location.

These results suggest that the location where an object first appears carries particular importance for the Spatial Congruency Bias and the type of object-location binding it may reflect. These results are interesting in light of other work exploring object-location binding, particularly a study by Hollingworth & Rasmussen (2010) looking at object files and visual working memory. Object files are typically probed using the “object-reviewing” paradigm (Kahneman et al., 1992), in which participants tend to display an “object-specific preview benefit”: an RT or accuracy advantage when probes subsequently appear on the same object as they were initially previewed. This same-object advantage relies heavily on spatiotemporal contiguity (Mitroff & Alvarez, 2007). Hollingworth & Rasmussen (2010) used this framework to investigate the role of spatiotemporal contiguity and object files in visual working memory, asking whether object-position binding in visual working memory is linked to the original and/or updated locations after object movement, similar to our question for the Spatial Congruency Bias in the present set of experiments.

In Hollingworth & Rasmussen (2010), four objects were presented simultaneously, in a traditional multi-item working memory paradigm. Each of the four boxes was briefly filled with a color (initial array), the placeholder boxes rotated to new locations (motion), and then the boxes were again filled with colors (test array). The task was to judge whether all of the colors were the same as the initial array or if one was different. The objects in the test array spatially corresponded to the initial positions, the updated positions, or neither. The authors tested RT and accuracy, finding a performance benefit (bias was untested) for both the original and updated conditions.

Hollingworth & Rasmussen (2010) concluded that there were two mechanisms of object-position binding involved in visual working memory: one that updates with motion (object-files) and one that is tied to the original location, similar to the object-based and space-based components found for Inhibition of Return (Tipper, Driver, & Weaver, 1991; Tipper, Weaver, Jerreat, & Burak, 1994). Our finding that the Spatial Congruency Bias is also most strongly tied to the object's original location but may sometimes update to the new location is consistent with these findings.

Interestingly, Hollingworth & Rasmussen's latter, motion-insensitive mechanism was interpreted to reflect a scene-based representation; that is, the features seemed to be bound to the object locations relative to their original configuration in the display (array-centered locations), rather than their absolute locations. In a recent paper, we tested an additional manipulation of the Spatial Congruency Bias: whether it was tied to absolute (spatiotopic) locations or eye-centered (retinotopic) locations following an eye movement (Shafer-Skelton et al., 2017). The Spatial Congruency Bias was linked purely to retinotopic location; even at longer delays after the saccade (more time to update) and for objects of varying complexity (gabors, objects, and faces), there was no evidence for spatiotopic binding (Shafer-Skelton et al., 2017). While both paradigms found evidence against absolute-position binding, there is a difference between retinotopic (eye-centered) representations (Shafer-Skelton et al., 2017) and configural array-centered representations that survive translation and expansion to different retinotopic positions (Hollingworth & Rasmussen, 2010). Thus, while the Spatial Congruency Bias seems to be primarily associated with the type of object-position binding that is not updated with motion, it remains unknown whether this non-updated, retinotopic Congruency Bias reflects a variation of the configural coding mechanism associated with visual working memory (Hollingworth & Rasmussen, 2010), or if it reflects a different, third type of binding mechanism. Future research would be needed to test the Spatial Congruency Bias in the presence of multiple-object arrays, scenes, and/or simultaneous dissociation of retinotopic, spatiotopic, and array-centered reference frames (e.g., Tower-Richardi, Leber, & Golomb, 2016). It is also possible that the Spatial Congruency Bias operates on a different level of perceptual discrimination than the Object-Specific Preview Benefit; while the Congruency Bias is only apparent for fine perceptual discrimination tasks and is argued to influence similarity at a perceptual level (Golomb et al., 2014; see discussion below), object-specific preview benefits are typically seen for coarser discriminations (e.g., a set of 7 nameable colors in Hollingworth & Rasmussen, 2010) and primarily influence the speed or accuracy of responses.

In sum, the current paper adds to a growing body of knowledge characterizing the Spatial Congruency Bias and its relationship to object-location binding. The Spatial Congruency Bias is a robust effect demonstrating that when two objects appear in the same spatial location, they are more likely to be judged as the same object. The congruency bias is specifically driven by location information; object features such as shape or color do not induce a congruency bias (Golomb et al., 2014). The Congruency Bias also seems to be more than a simple response-level interference effect: when participants are asked to rate the perceived similarity of two objects on a continuous sliding scale, they systematically rate the objects as more similar when they appear in the same location versus different locations, and this occurs only when the task is perceptually difficult (Golomb et al., 2014). The Spatial Congruency Bias thus seems to reflect an underlying propensity to use object location as an indicator of “sameness”. When a task is perceptually difficult and two stimuli are not obviously different, our visual systems might rely on the default assumption that if an object appears in the same location, it's probably the same object. If the congruency bias were based purely on some sort of conscious assumption about “sameness”, however, then it should be tied to the object's location in ecologically-relevant coordinates. In contrast, the Spatial Congruency Bias appears firmly rooted in the low-level, retinotopic position at which the object was initially encoded. The Spatial Congruency Bias does not seem to automatically update according to spatiotemporal contiguity cues, as shown in the current experiments, nor does it automatically update to reflect the world-centered spatiotopic location following an eye movement (Shafer-Skelton et al., 2017). Additionally, it has been shown to be sensitive only to the object's 2D location on the retina, rather than the object's 3D, depth-sensitive position in the world (Finlayson & Golomb, 2016).

The Spatial Congruency Bias thus seems to reflect a low-level, residual effect of the binding of object properties to their original, retinotopic location. As suggested by the current results, this particular component of binding does not automatically update to the new location when an object moves. It is possible that there are additional components of object-location binding that do update (Hollingworth & Rasmussen, 2010), or that it is primarily location pointers that automatically update, and these are dynamically re-bound to whatever feature information is present in the new location after the movement is completed (consistent with our findings from Experiment 3). This would be consistent with the idea that spatial tracking of objects preserves limited information about features, e.g. in multiple-object tracking when participants fail to remember the features of objects they are tracking spatially (Horowitz et al., 2007; Pylyshyn, 2004; Saiki, 2003; Scholl, Pylyshyn, & Franconeri, 1999). It could also be consistent with the finding that the experience of object continuity is not necessarily tied to the observed object-location binding (Mitroff, Scholl, & Wynn, 2005).

Conclusion

Here we explored a recent phenomenon, the Spatial Congruency Bias, and its role in object-location binding by asking what happens when an object moves to a new location. Across four experiments, we found that the Spatial Congruency Bias remained strongly linked to the original object location. However, under certain circumstances, e.g. when the first object paused and remained visible for a brief period of time after the movement (allowing time to re-encode the object at the new location), the congruency bias was found at both the original location and the updated location. These data suggest that the Spatial Congruency Bias is based more on low-level visual information than spatiotemporal contiguity cues, and reflects a type of object-location binding that is primarily tied to the original object location and may only update to the object's new location if there is time for the features to re-bind following the movement.

Acknowledgments

This work was supported by research grants from the National Institutes of Health (R01-EY025648) and the Alfred P. Sloan Foundation (BR-2014-098), and by support from the Ohio State University Undergraduate Research Office. We thank Samoni Nag and other members of the Golomb Lab for assistance with data collection and helpful discussion.

Footnotes

1. Note that although the bias (criterion) measure in Signal Detection Theory is commonly interpreted as a response- or decision-level bias, biases can also be perceptual (Witt, Taylor, Sugovic, & Wixted, 2015), as is thought to be the case with the Spatial Congruency Bias (Golomb, Kupitz, & Thiemann, 2014; Shafer-Skelton, Kupitz, & Golomb, 2017; see General Discussion).

2. The majority of the subjects cut for poor accuracy in this experiment also had extremely fast RTs (average RT for excluded subjects: 190ms; for included subjects: 444ms), indicating that they were not waiting for Object 2 to fully re-emerge but randomly pressing a button as quickly as possible once it started re-appearing. We excluded trials on which subjects responded within 1 frame of the fully unoccluded object (RTs < 20ms, a conservative cutoff), and these trials accounted for an average of 16% of trials for the excluded subjects (but <4% for the included subjects). An additional subject had over 50% of trials excluded for too-fast RT; although accuracy for this subject was actually >55% after removal of these trials, there were too few trials remaining to reliably analyze, so this subject was not included in analyses. An analysis of the data with all subjects included revealed a similar pattern of results to those reported with the subset.

References

- Burke L. On the tunnel effect. Quarterly Journal of Experimental Psychology. 1952;4(3):121–138. https://doi.org/10.1080/17470215208416611

- Cave KR, Pashler H. Visual selection mediated by location: selecting successive visual objects. Perception & Psychophysics. 1995;57(4):421–432. https://doi.org/10.3758/bf03213068

- Chen H, Wyble B. The location but not the attributes of visual cues are automatically encoded into working memory. Vision Research. 2015;107:76–85. https://doi.org/10.1016/j.visres.2014.11.010

- Chen Z. Not all features are created equal: Processing asymmetries between location and object features. Vision Research. 2009;49(11):1481–1491. https://doi.org/10.1016/j.visres.2009.03.008

- Cox DD, Meier P, Oertelt N, DiCarlo JJ. “Breaking” position-invariant object recognition. Nature Neuroscience. 2005;8(9):1145–1147. https://doi.org/10.1038/nn1519

- Finlayson NJ, Golomb JD. Feature-location binding in 3D: Feature judgments are biased by 2D location but not position-in-depth. Vision Research. 2016;127:49–56. https://doi.org/10.1016/j.visres.2016.07.003

- Flombaum JI, Kundey SM, Santos LR, Scholl BJ. Dynamic Object Individuation in Rhesus Macaques: A Study of the Tunnel Effect. Psychological Science. 2004;15(12):795–800. https://doi.org/10.1111/j.0956-7976.2004.00758.x

- Flombaum JI, Scholl BJ. A temporal same-object advantage in the tunnel effect: facilitated change detection for persisting objects. Journal of Experimental Psychology: Human Perception and Performance. 2006;32(4):840–853. https://doi.org/10.1037/0096-1523.32.4.840

- Flombaum JI, Scholl BJ, Santos LR. Spatiotemporal Priority as a Fundamental Principle of Object Persistence. In: Hood BM, Santos LR, editors. The Origins of Object Knowledge. Oxford University Press; 2009.

- Golomb JD, Kupitz CN, Thiemann CT. The influence of object location on identity: A “spatial congruency bias”. Journal of Experimental Psychology: General. 2014;143(6):2262. https://doi.org/10.1037/xge0000017

- Hollingworth A, Franconeri SL. Object correspondence across brief occlusion is established on the basis of both spatiotemporal and surface feature cues. Cognition. 2009;113(2):150–166. https://doi.org/10.1016/j.cognition.2009.08.004

- Hollingworth A, Rasmussen IP. Binding objects to locations: The relationship between object files and visual working memory. Journal of Experimental Psychology: Human Perception and Performance. 2010;36(3):543–564. https://doi.org/10.1037/a0017836

- Horowitz TS, Klieger SB, Fencsik DE, Yang KK, Alvarez GA, Wolfe JM. Tracking unique objects. Perception & Psychophysics. 2007;69(2):172–184. https://doi.org/10.3758/BF03193740

- Kahneman D, Treisman A, Gibbs BJ. The reviewing of object files: Object-specific integration of information. Cognitive Psychology. 1992;24(2):175–219. https://doi.org/10.1016/0010-0285(92)90007-o

- Leslie AM, Xu F, Tremoulet PD, Scholl BJ. Indexing and the object concept: developing ‘what’ and ‘where’ systems. Trends in Cognitive Sciences. 1998;2(1):10–18. https://doi.org/10.1016/S1364-6613(97)01113-3

- Li N, DiCarlo JJ. Unsupervised natural experience rapidly alters invariant object representation in visual cortex. Science. 2008;321(5895):1502–1507. https://doi.org/10.1126/science.1160028

- Mitroff SR, Alvarez GA. Space and time, not surface features, guide object persistence. Psychonomic Bulletin & Review. 2007;14(6):1199–1204. https://doi.org/10.3758/BF03193113

- Mitroff SR, Scholl BJ, Wynn K. The relationship between object files and conscious perception. Cognition. 2005;96(1):67–92. https://doi.org/10.1016/j.cognition.2004.03.008

- Pertzov Y, Husain M. The privileged role of location in visual working memory. Attention, Perception, & Psychophysics. 2014;76(7):1914–1924. https://doi.org/10.3758/s13414-013-0541-y

- Pylyshyn Z. Some puzzling findings in multiple object tracking: I. Tracking without keeping track of object identities. Visual Cognition. 2004;11(7):801–822. https://doi.org/10.1080/13506280344000518

- Saiki J. Feature binding in object-file representations of multiple moving items. Journal of Vision. 2003;3(1). https://doi.org/10.1167/3.1.2. Retrieved from http://journalofvision.org.www.journalofvision.org/content/3/1/2.short

- Scholl BJ, Pylyshyn ZW, Franconeri SL. When are featural and spatiotemporal properties encoded as a result of attentional allocation? Investigative Ophthalmology & Visual Science. 1999;40:S797.

- Shafer-Skelton A, Kupitz CN, Golomb JD. Object-location binding across a saccade: A retinotopic spatial congruency bias. Attention, Perception, & Psychophysics. 2017:1–17. https://doi.org/10.3758/s13414-016-1263-8

- Spelke ES, Kestenbaum R, Simons DJ, Wein D. Spatiotemporal continuity, smoothness of motion and object identity in infancy. British Journal of Developmental Psychology. 1995;13(2):113–142. https://doi.org/10.1111/j.2044-835X.1995.tb00669.x

- Tipper SP, Driver J, Weaver B. Short report: Object-centred inhibition of return of visual attention. The Quarterly Journal of Experimental Psychology. 1991;43(2):289–298. https://doi.org/10.1080/14640749108400971

- Tipper SP, Weaver B, Jerreat LM, Burak AL. Object-based and environment-based inhibition of return of visual attention. Journal of Experimental Psychology: Human Perception and Performance. 1994;20(3):478.

- Tower-Richardi SM, Leber AB, Golomb JD. Spatial priming in ecologically relevant reference frames. Attention, Perception, & Psychophysics. 2016;78(1):114–132. https://doi.org/10.3758/s13414-015-1002-6

- Treisman A, Gelade G. A feature-integration theory of attention. Cognitive Psychology. 1980;12(1):97–136. https://doi.org/10.1016/0010-0285(80)90005-5

- Tsal Y, Lavie N. Attending to color and shape: The special role of location in selective visual processing. Perception & Psychophysics. 1988;44(1):15–21. https://doi.org/10.3758/BF03207469

- Tsal Y, Lavie N. Location dominance in attending to color and shape. Journal of Experimental Psychology: Human Perception and Performance. 1993;19(1):131–139. https://doi.org/10.1037//0096-1523.19.1.131

- Wallis G, Bülthoff HH. Effects of temporal association on recognition memory. Proceedings of the National Academy of Sciences. 2001;98(8):4800–4804. https://doi.org/10.1073/pnas.071028598

- Watson AB, Pelli DG. Quest: A Bayesian adaptive psychometric method. Perception & Psychophysics. 1983;33(2):113–120. https://doi.org/10.3758/BF03202828

- Witt JK, Taylor JET, Sugovic M, Wixted JT. Signal Detection Measures Cannot Distinguish Perceptual Biases from Response Biases. Perception. 2015;44(3):289–300. https://doi.org/10.1068/p7908

- Yi DJ, Turk-Browne NB, Flombaum JI, Kim MS, Scholl BJ, Chun MM. Spatiotemporal object continuity in human ventral visual cortex. Proceedings of the National Academy of Sciences. 2008;105(26):8840–8845. https://doi.org/10.1073/pnas.0802525105