Key Points

Question

Does increasing price transparency for inpatient laboratory tests in the electronic health record at the time of order entry influence clinician ordering behavior?

Finding

In this year-long randomized clinical trial including 98 529 patients at 3 hospitals, displaying Medicare allowable fees in the electronic health record at the time of order entry did not lead to a significant change in overall clinician ordering behavior.

Meaning

These findings suggest that price transparency alone may not lead to significant changes in clinician behavior, and future price transparency interventions may need to be better targeted, framed, or combined with other approaches.

Abstract

Importance

Many health systems are considering increasing price transparency at the time of order entry. However, evidence of its impact on clinician ordering behavior is inconsistent and limited to single-site evaluations of shorter duration.

Objective

To test the effect of displaying Medicare allowable fees for inpatient laboratory tests on clinician ordering behavior over 1 year.

Design, Setting, and Participants

The Pragmatic Randomized Introduction of Cost data through the electronic health record (PRICE) trial was a randomized clinical trial comparing a 1-year intervention period with a 1-year preintervention period, adjusting for time trends and patient characteristics. The trial took place at 3 hospitals in Philadelphia between April 2014 and April 2016 and included 98 529 patients comprising 142 921 hospital admissions.

Interventions

Inpatient laboratory test groups were randomly assigned to display Medicare allowable fees (30 in intervention) or not (30 in control) in the electronic health record.

Main Outcomes and Measures

Primary outcome was the number of tests ordered per patient-day. Secondary outcomes were tests performed per patient-day and associated Medicare fees.

Results

The sample included 142 921 hospital admissions representing patients who were 51.9% white (74 165), 38.9% black (55 526), and 56.9% female (81 291) with a mean (SD) age of 54.7 (19.0) years. Preintervention trends of order rates among the intervention and control groups were similar. In adjusted analyses of the intervention group compared with the control group over time, there were no significant changes in overall test ordering behavior (0.05 tests ordered per patient-day; 95% CI, −0.002 to 0.09; P = .06) or associated fees ($0.24 per patient-day; 95% CI, −$0.42 to $0.91; P = .47). Exploratory subset analyses found small but significant differences in tests ordered per patient-day based on patient intensive care unit (ICU) stay (patients with ICU stay: −0.16; 95% CI, −0.31 to −0.01; P = .04; patients without ICU stay: 0.13; 95% CI, 0.08-0.17; P < .001) and the magnitude of associated fees (top quartile of tests based on fee value: −0.01; 95% CI, −0.02 to −0.01; P < .001; bottom quartile: 0.03; 95% CI, 0.002-0.06; P = .04). Adjusted analyses of tests that were performed found a small but significant overall increase in the intervention group relative to the control group over time (0.08 tests performed per patient-day; 95% CI, 0.03-0.12; P < .001).

Conclusions and Relevance

Displaying Medicare allowable fees for inpatient laboratory tests did not lead to a significant change in overall clinician ordering behavior or associated fees.

Trial Registration

clinicaltrials.gov Identifier: NCT02355496

This randomized clinical trial investigated the effect of displaying Medicare allowable fees for inpatient laboratory tests on clinician ordering behavior over 1 year.

Introduction

It is estimated that nearly 30% of laboratory testing in the United States may be wasteful. Unnecessary blood draws can cause patient discomfort and harm from hospital-acquired anemia. Unnecessary testing may also be associated with increased rates of false-positive results, leading to increased costs and potential adverse outcomes from unwarranted interventions.

With the rising adoption of the electronic health record (EHR), many health systems are considering increasing price transparency at the time of order entry to influence clinician ordering behavior. While the evidence on these types of approaches is growing, the findings have been inconsistent, and most studies were not randomized clinical trials. Two recent studies tested price transparency for laboratory ordering and found only modest reductions in order rates. However, both interventions were only 6 months in duration, and neither analysis was risk-adjusted for patient comorbidities. Another 2 recent studies tested price transparency for imaging and procedures and both found no significant effect.

To advance existing evidence and to address limitations of prior studies, we conducted a randomized clinical trial at 3 hospitals to evaluate the effect of displaying Medicare allowable fees for inpatient laboratory tests on clinician ordering behavior. We compared changes over a 1-year preintervention period and a 1-year intervention period, adjusting for time trends and patient demographics, insurance, disposition, and comorbidity severity.

Methods

Study Design

The PRICE (Pragmatic Randomized Introduction of Cost data through the Electronic health record) trial was conducted at 3 hospitals at the University of Pennsylvania Health System and compared changes in clinician ordering of inpatient laboratory tests with and without displaying Medicare allowable fees. Changes in outcomes were evaluated 1 year before and 1 year during the intervention, which began on April 8, 2015. The study protocol is available online in Supplement 1 and was approved by the University of Pennsylvania institutional review board. Neither clinicians nor patients were compensated for their participation.

Study Setting and Population

All 3 sites (Hospital of the University of Pennsylvania, Penn Presbyterian Medical Center, and Pennsylvania Hospital) are adult hospitals located in Philadelphia and share the same EHR, Sunrise Clinical Manager (Allscripts Corp). Clinicians who could place orders in the EHR included faculty physicians, residents and fellows, nurse practitioners, and physician assistants. The sample comprised patients admitted and discharged in either the preintervention (April 8, 2014, to April 7, 2015) or the intervention (April 8, 2015, to April 7, 2016) periods. Patients with an admission in 1 period and a discharge either in the next period or after the study completed were excluded.
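The eligibility rule above (admission and discharge both within a single study period) can be sketched as a small date check. This is a hypothetical helper, not the study's code; it assumes both period endpoints are inclusive and that stays are compared at calendar-date resolution.

```python
from datetime import date

# Study periods from the Methods; both endpoints treated as inclusive (assumption).
PRE = (date(2014, 4, 8), date(2015, 4, 7))
POST = (date(2015, 4, 8), date(2016, 4, 7))


def assign_period(admitted, discharged):
    """Return "pre" or "post" if the stay lies entirely within one period, else None.

    None marks an excluded admission, eg, admitted in the preintervention period
    but discharged in the intervention period or after the study ended.
    """
    if discharged < admitted:
        raise ValueError("discharge date precedes admission date")
    for label, (start, end) in (("pre", PRE), ("post", POST)):
        if start <= admitted and discharged <= end:
            return label
    return None


print(assign_period(date(2015, 4, 1), date(2015, 4, 10)))  # prints: None
```

The stay in the usage line begins in the preintervention period and ends in the intervention period, so it falls outside the sample.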

Randomization and Interventions

We randomly assigned 60 groups of inpatient laboratory tests to either display fees (in 2015 US dollars) in the EHR (intervention) or not (control). Randomization was performed at the test level because the EHR system did not allow for randomization at the level of the clinician or site. The randomization protocol was adapted from prior studies and conducted as follows. First, a list of higher volume and more expensive tests was compiled using November 2014 data from the Hospital of the University of Pennsylvania. The list comprised 75 inpatient laboratory tests: 30 classified as higher volume, 30 classified as more expensive (based on 2014 charge data), and 15 that were in both categories. Second, we grouped tests that could be ordered individually and as a panel, as well as tests with similar alternatives. By grouping tests in this manner, we avoided scenarios in which clinicians would have increased price transparency for only part of a group of similar options. Specifically, we grouped (1) complete blood cell count with and without a differential; (2) varying sizes of basic metabolic panels as well as the individual tests they comprised (sodium, potassium, chloride, bicarbonate, urea nitrogen, creatinine, glucose, and calcium levels); and (3) liver function panel, total bilirubin, direct bilirubin, alkaline phosphatase, alanine aminotransferase, and aspartate aminotransferase levels. Third, stratified randomization was conducted using a computerized random number generator in 3 sequential steps: the top quartile of higher volume tests was randomized first; among the remaining tests, the top quartile of more expensive tests was randomized next; and all remaining tests were randomized last.
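The final stratified step can be illustrated with a seeded shuffle within each stratum. This is a sketch under assumptions, not the trial's procedure: the function name, the group labels, the stratum sizes, and the rule of sending the first half of each shuffled stratum to the intervention arm are hypothetical, and the source does not say how an odd-sized stratum was split (the coin flip below is an assumption).

```python
import random


def stratified_randomize(strata, seed):
    """Assign each test group to "intervention" or "control" within its stratum.

    strata: mapping of stratum name -> list of test-group labels, in the order
    the strata are randomized. Returns a mapping of test-group label -> arm.
    """
    rng = random.Random(seed)  # seeded so the allocation is reproducible
    allocation = {}
    for groups in strata.values():
        shuffled = list(groups)
        rng.shuffle(shuffled)
        half = len(shuffled) // 2
        for label in shuffled[:half]:
            allocation[label] = "intervention"
        for label in shuffled[half:2 * half]:
            allocation[label] = "control"
        if len(shuffled) % 2:  # assumption: an odd leftover group gets a coin flip
            allocation[shuffled[-1]] = rng.choice(["intervention", "control"])
    return allocation


# Hypothetical strata: labels and sizes are placeholders, not the trial's lists.
strata = {
    "top_quartile_volume": [f"volume_{i}" for i in range(8)],
    "top_quartile_cost": [f"cost_{i}" for i in range(8)],
    "remaining": [f"other_{i}" for i in range(44)],
}
allocation = stratified_randomize(strata, seed=2015)
```

Randomizing within strata guarantees that each arm receives the same number of high-volume and high-cost groups, rather than leaving that balance to chance.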

For the laboratory test groups randomized to the intervention arm, fees were displayed in the EHR alongside order search results and at order entry, where the following text also appeared: “The dollar amount represents Medicare reimbursement for the test. Actual costs to the consumer may vary by patient insurance status.” On implementation, clinicians were informed by email and at in-person meetings that this was part of a health system–wide initiative to improve high-value care, and they were required to acknowledge a 1-time message within the EHR.

Main Outcome Measures

The primary outcome measure was the number of tests ordered per patient-day. Secondary outcome measures were the number of tests performed per patient-day (tests could be ordered but not performed, eg, patient refused or clinician canceled) and associated fees per patient-day. We hypothesized that the intervention group would have a significant reduction in the number of tests ordered per patient-day and associated fees relative to the control group over time. Owing to the nature of the intervention, clinicians could not be blinded to group assignment. All investigators, statisticians, and data analysts were blinded to the results until the study was completed.
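Because a test can be ordered but not performed, the primary and secondary outcomes can be computed from the same order records. The sketch below is hypothetical: the `Order` record layout and function name are assumptions, and it takes the number of patient-days per admission as an input because the source does not define how patient-days were counted. It emits one row per admission and arm, matching the paired observations described under Data and Statistical Analysis.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    """One laboratory order (hypothetical record layout)."""
    admission_id: str
    arm: str         # randomized arm of the test's group: "intervention" or "control"
    performed: bool  # False if, eg, the patient refused or the clinician canceled


def rates_per_patient_day(orders, patient_days):
    """Return {(admission_id, arm): (ordered_per_day, performed_per_day)}.

    patient_days: mapping of admission_id -> patient-days (> 0), supplied by the
    caller. Every admission gets one row per arm, with zeros when no tests from
    that arm were ordered.
    """
    ordered, performed = Counter(), Counter()
    for order in orders:
        key = (order.admission_id, order.arm)
        ordered[key] += 1
        if order.performed:
            performed[key] += 1
    rates = {}
    for admission_id, days in patient_days.items():
        for arm in ("intervention", "control"):
            key = (admission_id, arm)
            rates[key] = (ordered[key] / days, performed[key] / days)
    return rates
```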

Data and Statistical Analysis

Patient information, including admissions, discharges, demographics, comorbidities, length of stay, hospital location, and the number of tests ordered and performed, was obtained from Penn Data Store, the health system’s clinical data warehouse.

Multivariable linear regression models were fitted for the continuous outcome variables of tests ordered and performed per patient-day, and associated fees for tests ordered and performed per patient-day, using the patient admission as the unit of analysis. For each patient admission, one observation was generated to represent the number of intervention group tests ordered per patient-day, and another observation was generated to represent the number of control group tests ordered per patient-day. Binary variables were used to indicate group (intervention or control) and time period (preintervention or intervention). Models were adjusted for calendar month and hospital site fixed effects, as well as patient characteristics including age, sex, race/ethnicity, insurance status, and discharge disposition. Models were risk-adjusted for patient comorbidity severity using the Charlson Comorbidity Index (CCI), which predicts 10-year mortality, and standard errors were adjusted to account for clustering by patient. The effect of the intervention in each calendar month of the intervention period was evaluated using an interaction term between time period (preintervention or intervention) and group (intervention or control) for that calendar month. To assess the mean effect during the entire intervention period, the LSMEANS command in SAS statistical software (SAS Institute Inc) was used to conduct a linear combination of the interaction terms.
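The core of this design is a difference-in-differences contrast: the group × period interaction, with cluster-robust standard errors by patient. The pure-Python sketch below keeps only the intercept, group, period, and interaction terms; it omits the calendar-month and site fixed effects, the patient covariates, the CCI adjustment, and the per-month interactions combined with LSMEANS, all of which the study fitted in SAS. The function names are hypothetical, and the variance is the basic CR0 sandwich estimator without a small-sample correction, which may differ from what SAS reports.

```python
import math


def invert(a):
    """Invert a small square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("design matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        p = m[col][col]
        m[col] = [v / p for v in m[col]]
        for r in range(n):
            if r != col:
                f = m[r][col]
                m[r] = [vr - f * vc for vr, vc in zip(m[r], m[col])]
    return [row[n:] for row in m]


def ols_cluster_robust(X, y, clusters):
    """OLS coefficients and CR0 cluster-robust covariance (no small-sample correction)."""
    k = len(X[0])
    xtx = [[sum(r[i] * r[j] for r in X) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(X, y)) for i in range(k)]
    bread = invert(xtx)
    beta = [sum(bread[i][j] * xty[j] for j in range(k)) for i in range(k)]
    # Accumulate the score X_i * e_i within each cluster (here: each patient).
    scores = {}
    for r, yi, g in zip(X, y, clusters):
        e = yi - sum(b * x for b, x in zip(beta, r))
        s = scores.setdefault(g, [0.0] * k)
        for i in range(k):
            s[i] += r[i] * e
    # Sandwich: cov = sum over clusters of (B s_g)(B s_g)^T, with B = (X'X)^-1.
    cov = [[0.0] * k for _ in range(k)]
    for s in scores.values():
        u = [sum(bread[i][j] * s[j] for j in range(k)) for i in range(k)]
        for i in range(k):
            for j in range(k):
                cov[i][j] += u[i] * u[j]
    return beta, cov


def did_estimate(rows):
    """rows: (patient_id, arm, period, rate_per_patient_day), two rows per admission.

    Returns (estimate, standard_error) for the arm-by-period interaction.
    """
    X, y, groups = [], [], []
    for patient_id, arm, period, rate in rows:
        t = 1.0 if arm == "intervention" else 0.0
        p = 1.0 if period == "post" else 0.0
        X.append([1.0, t, p, t * p])  # intercept, arm, period, interaction
        y.append(rate)
        groups.append(patient_id)
    beta, cov = ols_cluster_robust(X, y, groups)
    return beta[3], math.sqrt(cov[3][3])
```

Because each admission contributes both an intervention-arm and a control-arm row, any patient-level shift shared by both rows cancels from the interaction estimate, a useful property of pairing the observations this way.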

To evaluate the robustness of our findings, we estimated the model using patient length of stay as a covariate to adjust for differences that may have occurred in the frequency of ordering among patient admissions of different durations. We also conducted a series of exploratory subgroup analyses. First, to evaluate differences in ordering behavior among patients with higher and lower comorbidity risk scores, we estimated a model using only patients in the top quartile (CCI ≥3) and another model using only patients in the bottom quartile (CCI = 0). Second, because clinicians in the intensive care unit (ICU) may make more frequent decisions and therefore may be more exposed to the price transparency intervention, we estimated the main model separately for patients who had an ICU stay and those who did not. Third, because different magnitudes of fee values exposed through price transparency may influence its effect, we estimated the main model for all patients but separately for tests in the top and bottom quartiles of Medicare allowable fee value.
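The subset definitions can be written as simple filters. The CCI cut points (≥3 and 0) come from the text; for fees, the quartile method (`statistics.quantiles` with `method="inclusive"`), the function names, and the example fee values are assumptions, since the source does not state how quartile boundaries were computed.

```python
import statistics


def cci_subset(cci):
    """Return "top" for CCI >= 3, "bottom" for CCI == 0, else None (in neither subset)."""
    if cci >= 3:
        return "top"
    if cci == 0:
        return "bottom"
    return None


def fee_quartile_subsets(fees):
    """Split tests into top- and bottom-quartile lists by Medicare allowable fee.

    fees: mapping of test name -> fee in US dollars (values below are hypothetical).
    """
    q1, _, q3 = statistics.quantiles(fees.values(), n=4, method="inclusive")
    top = sorted(name for name, fee in fees.items() if fee >= q3)
    bottom = sorted(name for name, fee in fees.items() if fee <= q1)
    return top, bottom


example_fees = {f"test_{i}": float(i) for i in range(1, 9)}  # placeholder fees 1..8
top, bottom = fee_quartile_subsets(example_fees)
```

Patients with a CCI of 1 or 2 fall into neither comorbidity subset, which is why those two models together cover only part of the sample.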

To test if trends between the 2 study arms were similar during the preintervention period, a test of controls was conducted for tests ordered per patient-day and associated fees by comparing the second half of the preintervention period with the first half of the preintervention period.
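As a point contrast, the test of controls compares the change from the first to the second half of the preintervention year in the intervention group with the same change in the control group. The sketch below is an unadjusted analog using monthly means; the published estimate has a CI and was presumably derived within the adjusted regression framework, so this hypothetical helper (name and row layout assumed) only illustrates the contrast being tested.

```python
from statistics import mean


def placebo_contrast(rows, split_month=6):
    """Unadjusted change (second half minus first half of the preintervention year)
    in the intervention group, minus the same change in the control group.

    rows: iterable of (month_index, arm, tests_per_patient_day) with month_index
    0-11 counting from the start of the preintervention period (hypothetical layout).
    """
    cells = {}
    for month, arm, rate in rows:
        half = "second" if month >= split_month else "first"
        cells.setdefault((arm, half), []).append(rate)
    m = {key: mean(values) for key, values in cells.items()}
    intervention_change = m[("intervention", "second")] - m[("intervention", "first")]
    control_change = m[("control", "second")] - m[("control", "first")]
    return intervention_change - control_change
```

A value near zero is consistent with parallel preintervention trends; a clearly nonzero value suggests the arms were already diverging before any fees were displayed.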

All hypothesis tests were 2-sided and used a significance level of P < .05. All statistical analyses were conducted using SAS software, version 9.4.

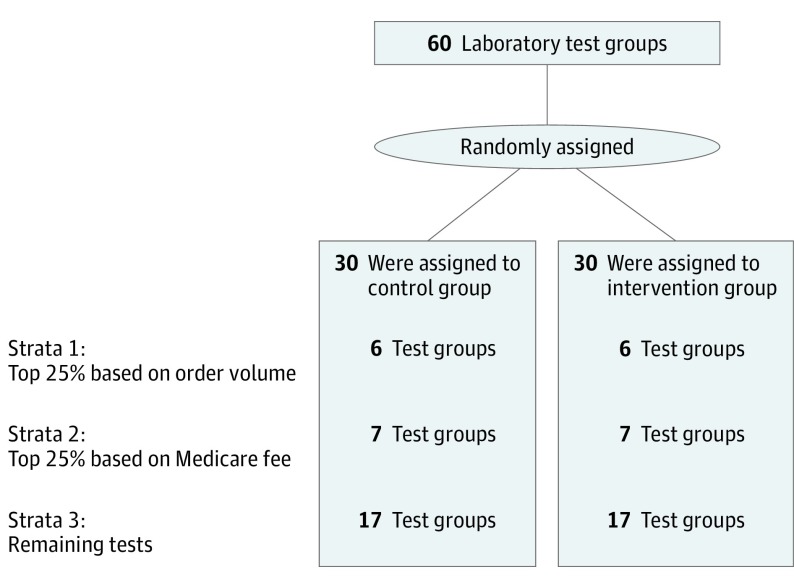

Results

The sample included 142 921 hospital admissions representing patients who were 51.9% white (74 165), 38.9% black (55 526), and 56.9% female (81 291) with a mean (SD) age of 54.7 (19.0) years (Table 1). Laboratory test group strata are presented in Figure 1 (test allocation information is available in eTable 1 in Supplement 2).

Figure 1. Randomization Schema.

The 3 strata by which laboratory test groups were randomized. Names of the laboratory tests within each arm and strata are available in Supplement 2.

Table 1. Characteristics of the Study Sample.

| Characteristicᵃ | Hospital 1 (HUP), Pre | Hospital 1 (HUP), Post | Hospital 2 (PPMC), Pre | Hospital 2 (PPMC), Post | Hospital 3 (PAH), Pre | Hospital 3 (PAH), Post | All hospitals, Pre | All hospitals, Post | Overall |
|---|---|---|---|---|---|---|---|---|---|
| Admissions, No. | 36 135 | 35 164 | 15 114 | 17 199 | 19 637 | 19 672 | 70 886 | 72 035 | 142 921 |
| Length of stay, mean (SD), d | 6.7 (8.8) | 6.7 (9.0) | 5.5 (6.1) | 5.9 (6.9) | 4.6 (5.8) | 4.4 (5.7) | 5.9 (7.6) | 5.9 (7.8) | 5.9 (7.7) |
| Age, mean (SD), y | 54.0 (18.7) | 54.3 (18.4) | 60.8 (17.2) | 60.1 (17.8) | 50.8 (19.9) | 50.8 (19.8) | 54.6 (19.1) | 54.7 (18.9) | 54.7 (19.0) |
| Female, No. (%) | 19 888 (55.0) | 19 476 (55.4) | 7570 (50.1) | 8191 (47.6) | 13 001 (66.2) | 13 165 (66.9) | 40 459 (57.1) | 40 832 (56.7) | 81 291 (56.9) |
| Race/ethnicity, No. (%) | | | | | | | | | |
| White | 19 601 (54.2) | 18 832 (53.6) | 5893 (39.0) | 6737 (39.2) | 11 568 (58.9) | 11 534 (58.6) | 37 062 (52.3) | 37 103 (51.5) | 74 165 (51.9) |
| Black | 12 813 (35.5) | 12 101 (34.4) | 8341 (55.2) | 9165 (53.3) | 6688 (34.1) | 6418 (32.6) | 27 842 (39.3) | 27 684 (38.4) | 55 526 (38.9) |
| Other/unknown | 3721 (10.3) | 4231 (12.0) | 880 (5.8) | 1297 (7.5) | 1381 (7.0) | 1720 (8.7) | 5982 (8.4) | 7248 (10.1) | 13 230 (9.3) |
| Insurance, No. (%) | | | | | | | | | |
| Private | 19 647 (54.4) | 19 782 (56.3) | 3863 (25.6) | 4541 (26.4) | 7759 (39.5) | 7555 (38.4) | 31 269 (44.1) | 31 878 (44.3) | 63 147 (44.2) |
| Medicare | 12 914 (35.7) | 12 757 (36.3) | 7341 (48.6) | 7988 (46.4) | 5409 (27.5) | 5608 (28.5) | 25 664 (36.2) | 26 353 (36.6) | 52 017 (36.4) |
| Medicaid | 1498 (4.2) | 955 (2.7) | 3551 (23.5) | 4016 (23.4) | 4702 (23.9) | 4880 (24.8) | 9751 (13.8) | 9851 (13.7) | 19 602 (13.7) |
| Other/unknown | 2076 (5.8) | 1670 (4.8) | 359 (2.4) | 654 (3.8) | 1767 (9.0) | 1629 (8.3) | 4202 (5.9) | 3953 (5.5) | 8155 (5.7) |
| Disposition, No. (%) | | | | | | | | | |
| Home | 29 273 (81.0) | 28 838 (82.0) | 11 250 (74.4) | 12 685 (73.8) | 16 744 (85.3) | 17 009 (86.5) | 57 267 (80.8) | 58 532 (81.3) | 115 799 (81.0) |
| Other facility | 4396 (12.2) | 4064 (11.6) | 2458 (16.3) | 2778 (16.2) | 1685 (8.7) | 1568 (8.0) | 8539 (12.1) | 8410 (11.7) | 16 949 (11.9) |
| Hospice | 322 (0.9) | 317 (0.9) | 111 (0.7) | 129 (0.8) | 130 (0.7) | 135 (0.7) | 563 (0.8) | 581 (0.8) | 1144 (0.8) |
| Left against medical advice | 244 (0.7) | 223 (0.6) | 270 (1.8) | 267 (1.6) | 165 (0.8) | 145 (0.7) | 679 (1.0) | 635 (0.9) | 1314 (0.9) |
| Expired | 1376 (3.8) | 1269 (3.6) | 279 (1.9) | 351 (2.0) | 159 (0.8) | 154 (0.8) | 1814 (2.6) | 1774 (2.5) | 3588 (2.5) |
| Other/unknown | 524 (1.5) | 453 (1.3) | 746 (4.9) | 989 (5.8) | 754 (3.8) | 661 (3.4) | 2024 (2.9) | 2103 (2.9) | 4127 (2.9) |
| Charlson comorbidity index, median (IQR) | 2 (0-3) | 2 (0-3) | 2 (0-3) | 1 (0-3) | 0 (0-2) | 0 (0-2) | 1 (0-3) | 1 (0-3) | 1 (0-3) |

Abbreviations: HUP, Hospital of the University of Pennsylvania; IQR, interquartile range; overall, the preintervention and intervention periods; PAH, Pennsylvania Hospital; post, intervention period, defined as April 8, 2015, to April 7, 2016; PPMC, Penn Presbyterian Medical Center; pre, preintervention period, defined as April 8, 2014, to April 7, 2015.

ᵃ Data presented are at the level of the patient admission.

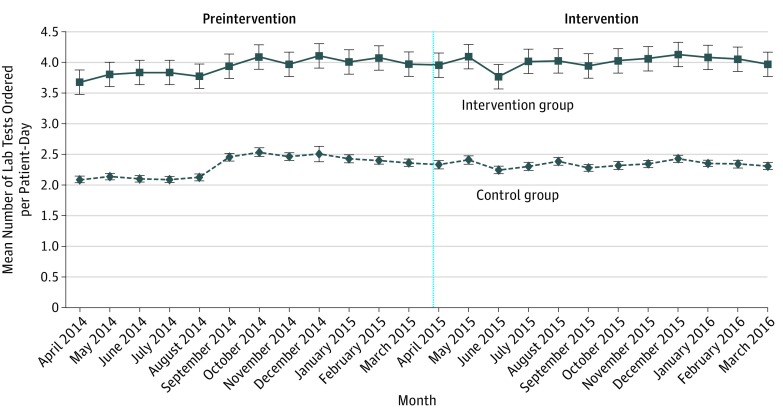

During the preintervention period, the mean number of tests ordered per patient-day was 2.31 in the control group and 3.93 in the intervention group (Figure 2). A test of controls during the preintervention period found no significant difference in trend when comparing the intervention group with the control group (0.03 tests ordered per patient-day; 95% CI, −0.04 to 0.10; P = .35). In the intervention period, the mean number of tests ordered per patient-day was 2.34 in the control group and 4.01 in the intervention group (Figure 2). The number of tests ordered varied by hospital site, but trends were similar between the intervention and control groups (eTable 2 and eFigures 1-3 in Supplement 2). Unadjusted changes for each laboratory test are available in eTables 3 and 4 in Supplement 2.

Figure 2. Unadjusted Number of Inpatient Laboratory Tests Ordered per Patient-Day by Group and Month.

Error bars indicate the 95% CIs. Vertical black line delineates the preintervention and intervention periods. Data for April 2016 are not displayed because only 8 days exist within the study period in that month.

During the preintervention period, the mean associated fees per patient-day were $27.77 in the control group and $37.84 in the intervention group. A test of controls during the preintervention period found a small but significant diverging trend when comparing the intervention group with the control group ($1.85; 95% CI, $0.92-$2.78; P < .001). During the intervention period, the mean associated fees per patient-day were $27.59 in the control group and $38.85 in the intervention group.

In the main adjusted analyses of test ordering behavior for the intervention group compared with the control group over time, there was no significant overall change (0.05 tests ordered per patient-day; 95% CI, −0.002 to 0.09; P = .06). This finding was supported by sensitivity analyses adjusting for patient length of stay (Table 2). In subset analyses, several changes of smaller magnitude were found to be significant. There was a significant relative decrease in test ordering for patients with an ICU stay (−0.16 tests ordered per patient-day; 95% CI, −0.31 to −0.01; P = .04) and a significant relative increase in test ordering for patients without an ICU stay (0.13 tests ordered per patient-day; 95% CI, 0.08-0.17; P < .001). There was a significant relative decrease in test ordering among tests in the top quartile of fees (−0.01 tests ordered per patient-day; 95% CI, −0.02 to −0.01; P < .001) and a significant relative increase in test ordering among tests in the bottom quartile of fees (0.03 tests ordered per patient-day; 95% CI, 0.002-0.06; P = .04). For the secondary outcome of tests performed per patient-day, estimates were mostly significant, but of small magnitude and in a similar direction as the estimates for the primary outcome of tests ordered per patient-day (Table 2).

Table 2. Adjusted Model Estimates for Relative Changes in Tests Ordered and Performed per Patient-Day.

| Model and Analysis | Relative Change in Tests Ordered per Patient-Day (95% CI)ᵃ | P Value | Relative Change in Tests Performed per Patient-Day (95% CI)ᵃ | P Value |
|---|---|---|---|---|
| Model | | | | |
| Main model | 0.05 (−0.002 to 0.09) | .06 | 0.08 (0.03 to 0.12) | <.001 |
| Main model adjusted by length of stay | 0.05 (−0.002 to 0.09) | .06 | 0.08 (0.03 to 0.12) | <.001 |
| Subset analyses | | | | |
| Patients with CCI in the top quartileᵇ | 0.08 (−0.01 to 0.17) | .10 | 0.10 (0.02 to 0.18) | .02 |
| Patients with CCI in the bottom quartileᵇ | 0.02 (−0.03 to 0.08) | .43 | 0.06 (0.01 to 0.11) | .02 |
| Patients with ICU stay | −0.16 (−0.31 to −0.01) | .04 | −0.16 (−0.29 to −0.03) | .02 |
| Patients without ICU stay | 0.13 (0.08 to 0.17) | <.001 | 0.16 (0.12 to 0.20) | <.001 |
| Tests with Medicare fees in the top quartile | −0.01 (−0.02 to −0.01) | <.001 | −0.01 (−0.02 to −0.01) | <.001 |
| Tests with Medicare fees in the bottom quartile | 0.03 (0.002 to 0.06) | .04 | 0.02 (−0.01 to 0.05) | .13 |

Abbreviations: CCI, Charlson Comorbidity Index, which predicts 10-year mortality; ICU, intensive care unit.

ᵃ Compares the intervention group with the control group in the intervention period relative to the preintervention period. To interpret the magnitude of the estimates, the mean number of tests ordered per patient-day among the intervention group during the preintervention period was 3.93, and a 0.05 change represents a 1.3% change.

ᵇ CCI top quartile defined as CCI of at least 3; CCI bottom quartile defined as CCI = 0.

In adjusted analyses of associated fees per patient-day, results were similar for tests ordered and performed (Table 3). Overall, there was no significant change. In subset analyses, there was a small but significant increase among patients with an ICU stay ($1.69; 95% CI, $1.06-$2.33; P < .001) and for tests in the bottom quartile of fees ($0.19; 95% CI, $0.05-$0.34; P = .01). There was a small but significant decrease for tests in the top quartile of fees (−$0.34; 95% CI, −$0.62 to −$0.06; P = .02).

Table 3. Adjusted Model Estimates for Relative Changes in Associated Medicare Fees per Patient-Day.

| Model and Analysis | Relative Change in Medicare Fees for Tests Ordered per Patient-Day, $ (95% CI)ᵃ | P Value | Relative Change in Medicare Fees for Tests Performed per Patient-Day, $ (95% CI)ᵃ | P Value |
|---|---|---|---|---|
| Model | | | | |
| Main model | 0.24 (−0.42 to 0.91) | .47 | 0.30 (−0.28 to 0.88) | .31 |
| Main model adjusted by length of stay | 0.24 (−0.42 to 0.91) | .47 | 0.30 (−0.28 to 0.88) | .31 |
| Subset analyses | | | | |
| Patients with CCI in the top quartileᵇ | 0.07 (−1.08 to 1.23) | .90 | 0.02 (−0.98 to 1.03) | .96 |
| Patients with CCI in the bottom quartileᵇ | 0.74 (−0.21 to 1.69) | .13 | 0.64 (−0.17 to 1.46) | .12 |
| Patients with ICU stay | 1.69 (1.06 to 2.33) | <.001 | 1.62 (1.06 to 2.18) | <.001 |
| Patients without ICU stay | 0.36 (−1.65 to 2.37) | .72 | −0.10 (−1.80 to 1.61) | .91 |
| Tests with Medicare fees in the top quartile | −0.34 (−0.62 to −0.06) | .02 | −0.54 (−0.76 to −0.33) | <.001 |
| Tests with Medicare fees in the bottom quartile | 0.19 (0.05 to 0.34) | .01 | 0.15 (0.002 to 0.29) | .05 |

Abbreviations: CCI, Charlson Comorbidity Index, which predicts 10-year mortality; ICU, intensive care unit.

ᵃ Compares the intervention group with the control group in the intervention period relative to the preintervention period. To interpret the magnitude of the estimates, the mean associated Medicare fees per patient-day among the intervention group during the preintervention period were $37.84, and a $0.24 change represents a 0.6% change.

ᵇ CCI top quartile defined as CCI of at least 3; CCI bottom quartile defined as CCI = 0.
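The percentages in the table footnotes are the adjusted estimate divided by the intervention group's preintervention mean. A quick check of that arithmetic, using only numbers reported above (the helper name is illustrative):

```python
def percent_of_baseline(estimate, baseline):
    """Express an absolute adjusted change as a percentage of the baseline mean."""
    return 100.0 * estimate / baseline


tests_pct = percent_of_baseline(0.05, 3.93)  # tests ordered per patient-day (Table 2)
fees_pct = percent_of_baseline(0.24, 37.84)  # Medicare fees per patient-day, US$ (Table 3)
print(f"{tests_pct:.1f}% {fees_pct:.1f}%")  # prints: 1.3% 0.6%
```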

Discussion

There is growing interest in using price transparency to influence medical decision-making toward higher value care, but prior evidence on its effectiveness has been inconsistent. In this year-long randomized clinical trial conducted at 3 hospitals, displaying Medicare allowable fees in the EHR for inpatient laboratory tests at the time of order entry did not lead to any meaningful or consistent changes in overall clinician ordering behavior or associated fees. Our study incorporated several important design elements that differ from the existing literature on the effects of price transparency, including a longer duration, multiple hospital sites, grouping of similar test options, and adjustment for patient demographics, insurance, disposition, and comorbidity severity.

The absence of an overall effect on test ordering behavior from the intervention in this study may be due to several factors, and these may have important implications for hospitals considering using price transparency to change clinician behavior. First, the price transparency intervention in this study was always displayed regardless of the clinical scenario. Displaying prices for tests that were clinically appropriate may have diluted the impact of the information when tests were inappropriate. Future efforts may consider more selective targeting of price transparency.

Second, the salience of the intervention might have been further reduced by clinician practice habits. In a qualitative analysis at one of the hospital sites, 91% of resident physicians reported that unnecessary laboratory testing was due to the practice habit of entering repeating daily laboratory test orders on the patient’s first day of admission. A test may become less necessary later in the hospitalization, but if repeating orders were entered at admission, the clinician would not need to place another order and thus would not be presented with price transparency information when it would be most salient. This may be reflected in the comparison of patients with and without an ICU stay. Because clinical decisions change more rapidly in the ICU, clinicians there may be less likely to rely on repeating orders and therefore may have been exposed to the intervention more often. Future efforts might also consider pairing price transparency information with changing the default setting in the EHR so clinicians cannot order repeating laboratory tests for an extended duration.

Third, the framing of information in price transparency interventions may have an important influence on its effectiveness owing to anchoring bias. Clinicians may have previously believed that the cost of some tests was higher than the price that was displayed. One indication of this was that we found a small but significant decrease in test ordering for the top quartile of fees (most expensive) and a small but significant increase in test ordering for the bottom quartile of Medicare fees (least expensive). Future efforts may consider other ways to frame price transparency, such as comparisons of differences in price between options, using other forms of price, such as charges, or targeting only more expensive tests.

It is important to note that there were small but significant overall changes in tests performed but not in tests ordered. It is unclear how much of the difference in findings between these 2 outcomes is due to the price transparency intervention or other factors that occur after the clinician has placed an order for a test. However, the small magnitude of the estimates contributes to the central finding that the intervention was not associated with any meaningful or consistent effects.

Limitations

This study is subject to several limitations. First, our findings are from patients at 3 hospital sites. While the patient populations varied among hospitals, all are located in Philadelphia, and this may limit generalizability to other settings. Second, owing to limitations of the EHR, randomization was performed at the level of the test, and decisions on orders for tests in the control group may have been susceptible to spillover effects from the intervention group. However, we did not observe a significant decline in ordering within either group in isolation, indicating that our findings are more likely due to ineffectiveness than spillover effects. Third, tests in the intervention group had a higher baseline order rate than those in the control group. Although randomization was stratified, this imbalance was largely driven by the intervention group including the basic metabolic panel group. However, a test of controls could not reject the null hypothesis of parallel trends between study groups for ordering behavior during the preintervention period. Fourth, information on clinicians was not available for comparison and model adjustment. Finally, our study evaluated price transparency using Medicare allowable fees, and further study is necessary to evaluate the impact of other forms of price transparency.

Conclusions

In this year-long randomized clinical trial, we found that displaying Medicare allowable fees for inpatient laboratory tests in the EHR did not lead to a significant change in overall clinician ordering behavior or associated fees. Exploratory subset analyses found small but significant changes based on patient stay in the intensive care unit and the magnitude of the fees displayed, indicating that future efforts should consider testing the impact of different ways to target and frame price transparency.

Supplementary Materials

Study protocol

eTable 1. Randomization schema of inpatient laboratory tests and their associated Medicare allowable fees

eTable 2. Unadjusted mean inpatient laboratory tests ordered per patient-day by test arm and hospital

eTable 3. Unadjusted mean inpatient laboratory tests ordered per patient-day for test groups in the control arm at all three hospitals

eTable 4. Unadjusted mean inpatient laboratory tests ordered per patient-day for test groups in the intervention arm at all three hospitals

eFigure 1. Unadjusted number of inpatient laboratory tests ordered per patient-day by group and month at hospital #1 (HUP)

eFigure 2. Unadjusted number of inpatient laboratory tests ordered per patient-day by group and month at hospital #2 (PPMC)

eFigure 3. Unadjusted number of inpatient laboratory tests ordered per patient-day by group and month at hospital #3 (PAH)

