Abstract

Eye-tracking is a valuable research tool that is used in laboratory and limited field environments. We take steps toward developing methods that enable widespread adoption of eye-tracking and its real-time application in clinical decision support. Eye-tracking could enhance awareness and enable intelligent views, more precise alerts, and other forms of decision support in the Electronic Medical Record (EMR). We evaluated a low-cost eye-tracking device and found its accuracy to be non-inferior to that of a more expensive device. We also developed and evaluated an automatic method for mapping eye-tracking data to interface elements in the EMR (e.g., a displayed laboratory test value). Mapping was 88% accurate across the six participants in our experiment. Finally, we piloted the use of the low-cost device and the automatic mapping method to label training data for a Learning EMR (LEMR), a system that highlights the EMR elements a physician is predicted to use.

Introduction

Eye-tracking is a valuable tool that biomedical researchers use to ascertain the focus of a participant’s attention1. Studies that utilize eye-tracking devices largely occur in laboratory and limited field environments2. In either case, the collected eye gaze data are usually analyzed retrospectively, rather than used at the point of collection. Widespread field use of eye-tracking is limited by device cost and by resource intensive data analysis3. If these barriers were overcome, eye-tracking devices would be used more widely in biomedical informatics research and deployment. These data would be valuable in understanding the practical use of Electronic Medical Records (EMRs).

Eye-tracking might also support advanced forms of clinical decision support. By knowing which elements (laboratory tests, vital signs, medication orders, etc.) in the EMR a physician has viewed over time, a clinical decision support system could model (albeit under uncertainty) a physician’s knowledge of a given patient case. Such a model could help the system work synergistically with the physician by drawing attention to important (but as yet unseen) EMR data and suggesting inferences that likely follow from that data, such as diagnoses not yet entered in the EMR. If eye-tracking devices were deployed on computer monitors throughout a hospital, then over time the patterns of EMR viewing by thousands of physicians could be collected and provide the basis for a system that learns which EMR elements a physician will use in current patient cases4.

This paper first reviews the use of eye-tracking devices to evaluate Health Information Technology (HIT). Next, it investigates and addresses two limitations currently prohibiting widespread field implementation of eye-tracking. Finally, it describes a pilot study that evaluates how well a low-cost eye-tracking device and mapping method are able to capture automatically what physicians are viewing in the EMR. These automatically captured viewing patterns are compared to viewing patterns that were manually labeled by physicians who were asked to label training data for a Learning EMR (LEMR)4.

Background

Eye-trackers measure a participant’s eye position in order to determine what he or she is viewing. There are two common types of eye-tracking equipment: a head mounted device that resembles eyeglasses and a fixed position remote device that is typically mounted on a computer monitor. Head mounted devices are obtrusive to the wearer; therefore, remote devices have greater potential for application in the field.

Eye-tracking has a long history of use in usability studies5 and consumer sciences6. With increasing frequency over the past ten years, HIT applications of eye-trackers focus primarily on understanding clinical reasoning1 and evaluating usability2. Table 1 provides a summary of studies that apply remote eye-tracking to understand time utilization7,8, to analyze information search patterns9,10, and to evaluate11–13 and improve14 information displays.

Table 1.

Studies that utilize remote eye-tracking technology in HIT.

| Author, Year | Title | Objective | Results |

|---|---|---|---|

| Eghdam, 201113 | Combining usability testing with eye-tracking technology: Evaluation of a visualization support for antibiotic use in intensive care | Observe the visual attention and scan patterns of system users. | Navigation paths were close to expected. Eye-tracking is a useful addition to usability studies. |

| Forsman, 201312 | Integrated information visualization to support decision making for use of antibiotics in intensive care: Design and usability evaluation | Evaluate a prototype visualization tool that aids decision making in antibiotic use in the intensive care unit (ICU). | Visual attention when completing the tasks differs between specialists and residents, who focus on the tables and on exploring the graphical user interface, respectively. |

| Nielson, 20137 | In-situ eye-tracking of emergency physician result review | Determine the time spent by physicians looking at lab results and fixating on specific values in a live clinical setting. | Average time viewing lab results was 13.9 seconds, with an average fixation length of 9.9 seconds. |

| Barkana, 201414 | Improvement of design of a surgical interface using an eye-tracking device | Evaluate a proposed surgical interface in terms of gaze fixations. | Fixation counts showed that displaying 8 CT scans for one patient was redundant, so they reduced the number to 2. This reduced time to task completion. |

| Doberne, 20159 | Using high-fidelity simulation and eye-tracking to characterize EHR workflow patterns among hospital physicians | Characterize typical EMR usage by hospital physicians as they encounter a new patient. | Found two different information gathering and documentation workflows among participants. |

| Gold, 201510 | Feasibility of utilizing a commercial eye tracker to assess electronic health record use during patient simulation | Understand factors associated with poor error recognition during an ICU based EMR simulation. | Improved performance was associated with a pattern of rapid scanning of data manifested by increased number of screens visited, mouse clicks, and saccades. |

| Moacdieh, 201511 | Clutter in electronic medical records: Examining its performance and attentional costs using eye-tracking | Assess the effects of clutter, in combination with stress and task difficulty, on visual search and noticing performance. | Clutter degraded performance in terms of response time and case awareness, especially for high stress and difficult tasks. |

| Rick, 20158 | Eyes on the clinic: Accelerating meaningful interface analysis through unobtrusive eye-tracking | Observe and report physician experiences using their EMRs. | Physician time was predominated by searching behavior indicating that the organization of the EMR system was not conducive to physician workflow. |

The studies listed in Table 1 provide valuable insight about the systems that they were used to evaluate, but they only scratch the surface of what can be learned from more widespread use of eye-tracking devices. For example, we would like to use eye-tracking to collect training data for a LEMR4. A LEMR learns a predictive model from data about how physicians used the EMR in the past. The model is then applied to a current patient case to predict and highlight the EMR elements that a physician will use given the current clinical context. Among other objectives, a LEMR is intended to help reduce the risk of physicians missing important patient data due to information overload15–17. To have coverage across many different clinical contexts, LEMR models require large training sets of patient cases that include labels on the EMR elements a physician used for each case. Assuming that physicians view the EMR elements that they use for a patient case, eye-tracking is a promising means to collect how physicians use the EMR.

However, to be feasible, eye-tracking devices would need to be installed on many hospital monitors and the eye-tracking data would need to be automatically mapped to the displayed EMR elements. Eye-tracking devices intended for research are expensive, costing thousands of dollars. A license for commercial data analysis software is just as costly. This high cost of entry limits the number of researchers who can afford the devices. After eye-tracking data are collected, the coordinates of a participant’s gaze (gaze points) are not useful until they are mapped onto the part of the image or interface element that was in that onscreen location at that time. This mapping is typically performed by multiple human annotators who review and annotate screen capture recordings that are overlaid with the participant’s gaze points. Depending on the desired granularity of the results, five minutes of eye-tracking recordings can take as long as three hours to annotate3.

Addressing the first barrier: cost

New low-cost, commodity eye-trackers have been developed for novel commercial applications, such as consumer video games. These new devices do not have consistent sampling rates or function with commercial eye-tracking analysis software, but may still help increase eye-tracking adoption if their accuracy is not inferior to more expensive devices.

Addressing the second barrier: resource intensive data analysis

Mapping or annotating data from an eye-tracking study is another barrier to their widespread use. Two studies have addressed this issue by developing methods for automatically performing gaze point-to-element mapping to evaluate web-based interfaces18,19. The WebEyeMapper and WebLogger system18 records both eye gaze data from a remote eye-tracking device and a detailed event log of a participant’s web browsing session. After the recording session, the eye gaze data are converted into fixations and mapped to the interface elements that were present at each time point throughout the session. The WebGazeAnalyzer system19 functions in a similar manner, but is also able to map eye gaze onto individual lines of text.

In addition to automatic mapping, both the WebEyeMapper and WebLogger system and the WebGazeAnalyzer system provide exact playback of each study session. Exact playback is useful when the research team is interested in retrospectively analyzing the study data (e.g., Wright et al.20); however, if playback is not required, as is the case when collecting LEMR training data, then a less detailed browsing log will suffice because only the mapping output is of interest. We developed an easy-to-use method that automatically maps the output of an eye-tracking device to the onscreen elements of a webpage. This method works with low-cost eye-tracking devices that do not provide a consistent sampling rate, and has low time and storage requirements.

Methods

This section first describes our automatic gaze point-to-element mapping method. We explain what data we are recording during study sessions and describe two approaches toward performing the mapping. Next, we provide details for three different experiments we performed. The purpose of each experiment is provided at the start of each subsection.

Automatic gaze point-to-element mapping

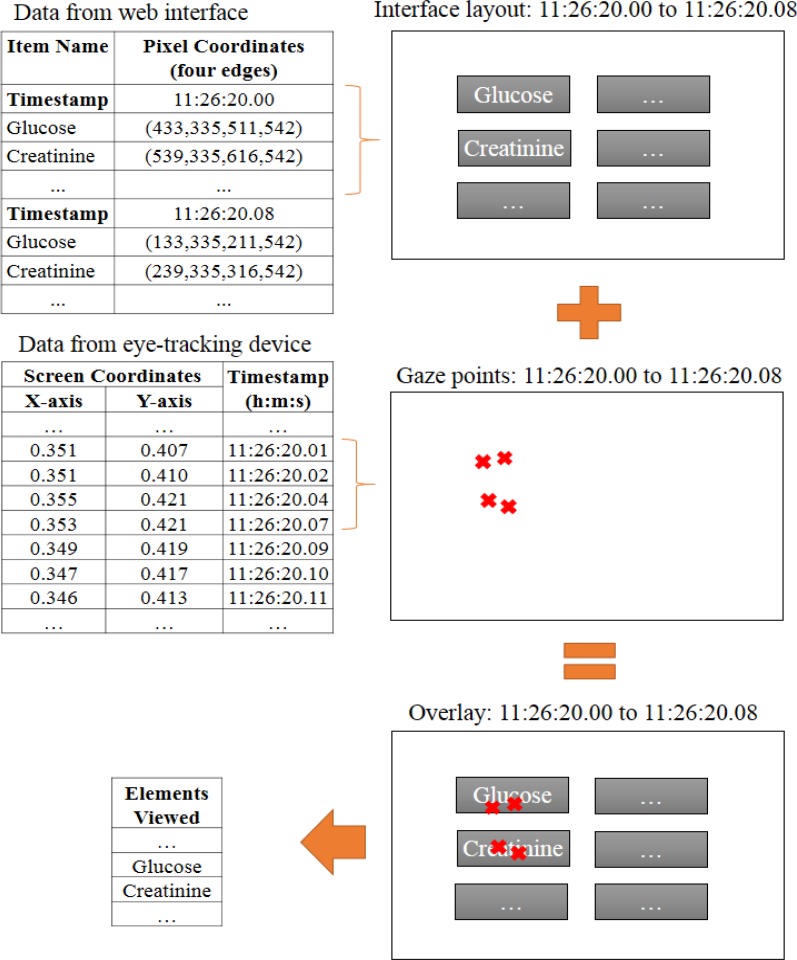

We developed an easy-to-use automatic gaze point-to-element mapping method that stores minimal information about the onscreen location of interface elements. On each page refresh, we use JavaScript to determine element locations and record them, with a timestamp, in a text file. Simultaneously, the data stream from the eye-tracking device (x-coordinate, y-coordinate, and timestamp) is recorded in a second text file. Next, these files are overlaid using the timestamp information (as shown in Figure 1). Once this overlay is made, we calculate the mapping by counting the number of gaze points that fall within or near (within 5 pixels of) each interface element across time. We call this approach the Gaze Point (GP) method.

Figure 1.

Overlay of interface elements and eye gaze data.
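The GP counting step can be illustrated with a short Python sketch. It is not the implementation used in the study: the data layout (a list of timestamped element layouts and a list of gaze samples), the function name `gp_mapping`, and the reading of "near" as an element rectangle expanded by the tolerance on every side are all assumptions made for illustration.

```python
from bisect import bisect_right
from collections import Counter


def gp_mapping(layouts, gaze_points, tolerance=5):
    """Count gaze points that fall within or near each interface element.

    layouts: list of (timestamp, {element_id: (left, top, width, height)}),
        sorted by timestamp, one entry per page refresh (hypothetical format).
    gaze_points: iterable of (x, y, timestamp) samples from the eye-tracker.
    tolerance: how far (in pixels) outside an element a point may lie.
    """
    refresh_times = [t for t, _ in layouts]
    counts = Counter()
    for x, y, t in gaze_points:
        # Overlay step: pick the most recent layout recorded at or before t.
        i = bisect_right(refresh_times, t) - 1
        if i < 0:
            continue  # sample recorded before the first layout was logged
        for element_id, (left, top, width, height) in layouts[i][1].items():
            inside_x = left - tolerance <= x <= left + width + tolerance
            inside_y = top - tolerance <= y <= top + height + tolerance
            if inside_x and inside_y:
                counts[element_id] += 1
    return counts


# Two 200x80 elements separated by white space (illustrative values only).
layouts = [(0.0, {"glucose": (0, 0, 200, 80), "sodium": (230, 0, 200, 80)})]
gaze = [(100, 40, 0.1), (203, 40, 0.2), (300, 50, 0.3), (215, 40, 0.4)]
print(dict(gp_mapping(layouts, gaze)))  # {'glucose': 2, 'sodium': 1}
```

The last sample lands in the white space between the two elements, more than 5 pixels from either, so it is not counted.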

Gaze point-to-element mapping via GP does not account for the error of the eye-tracking device. To account for this error, we developed a distribution-based approach, the Distributed Gaze Point (DGP) method, which allocates a portion of each gaze point to each of the elements that lie within the surrounding 100x100 pixel area. Allocations are made based on a bivariate normal distribution that was fit to the error of the eye-tracking device, as collected in the first experiment described below. Therefore, the portion of a gaze point that is allocated to an element is the approximated probability that the participant was actually viewing that element. We rank the viewed elements by the sum of the gaze probabilities allocated to the element across an interaction.
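A minimal Python sketch of the DGP allocation follows. It makes simplifying assumptions that the text does not specify: the device error is treated as zero-mean with uncorrelated horizontal and vertical components, and the standard deviations `sigma_x` and `sigma_y` are placeholder values, not the parameters fitted in the first experiment. Under these assumptions, the portion allocated to an element is the normal probability mass over the part of the element that lies inside the 100x100 pixel window centered on the gaze point.

```python
from math import erf, sqrt


def _normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))


def _interval_mass(lo, hi, mean, sigma):
    """Probability that a normal(mean, sigma) variable lies in [lo, hi]."""
    return _normal_cdf((hi - mean) / sigma) - _normal_cdf((lo - mean) / sigma)


def dgp_allocate(gaze_x, gaze_y, elements,
                 sigma_x=10.0, sigma_y=15.0, half_window=50):
    """Allocate one gaze point across the elements near it.

    elements: {element_id: (left, top, width, height)}.
    sigma_x, sigma_y: placeholder error standard deviations in pixels.
    """
    allocation = {}
    for element_id, (left, top, width, height) in elements.items():
        # Clip the element to the 100x100 window around the gaze point.
        x_lo = max(left, gaze_x - half_window)
        x_hi = min(left + width, gaze_x + half_window)
        y_lo = max(top, gaze_y - half_window)
        y_hi = min(top + height, gaze_y + half_window)
        if x_lo >= x_hi or y_lo >= y_hi:
            continue  # element does not overlap the window
        # With uncorrelated axes the bivariate mass factorizes.
        allocation[element_id] = (
            _interval_mass(x_lo, x_hi, gaze_x, sigma_x)
            * _interval_mass(y_lo, y_hi, gaze_y, sigma_y)
        )
    return allocation


def dgp_rank(gaze_points, elements, **kwargs):
    """Rank elements by the summed gaze probability they receive."""
    totals = {}
    for x, y in gaze_points:
        for element_id, mass in dgp_allocate(x, y, elements, **kwargs).items():
            totals[element_id] = totals.get(element_id, 0.0) + mass
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)
```

In this sketch the allocations for one gaze point need not sum to one, because some probability mass falls on white space between elements. A fitted distribution with a non-zero mean or correlated axes would need a numerical integration step in place of the product of one-dimensional terms.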

1. Evaluating a Low-cost eye-tracking device

Our first goal was to evaluate the eye-tracking accuracy of a low-cost device relative to a high-cost device. We hypothesized that the accuracy of the low-cost device would not be inferior to the accuracy of the high-cost device. We conclude that the low-cost device is non-inferior to the high-cost device if the upper bound of the 95% confidence interval of the difference in error (low-cost device minus high-cost device) is no greater than one percent of screen height, which is approximately 11 pixels. A difference of this magnitude could be accounted for with a slight increase in the size of each interface element. If the 95% confidence interval includes values greater than 11 pixels, then each interface element would need to be increased by a larger amount, resulting in a loss of information display density that could compromise the utility of the interface. In this situation, we would not conclude that the low-cost device is non-inferior.

Each study participant completed two trial runs, one with each of two eye-tracking devices: the inexpensive Tobii EyeX ($139) and the research quality Tobii X2-30 ($4,900). For each run, the participant was asked to sit in front of a computer monitor that had one of the eye-tracking devices attached. We adjusted the monitor to ensure that the participant was comfortable and the eye-tracking device had a clear view of the participant’s eyes. Once situated, the participant used the six-point Tobii EyeX Engine calibration program to calibrate the eye-tracking device to the computer monitor. Next, participants were asked to stare at a small (7x7 pixel) red box as it appeared for one-second durations, in 50 random onscreen locations. Then, we switched the eye-tracking devices for a second trial run. Half of the participants were tracked by the low-cost EyeX first, while the other half were tracked by the more expensive X2-30 first.

Data collected during this study included the gaze points measured by the eye-tracking devices and the onscreen coordinates of each randomly generated box. We used these data to calculate the error between the median location of all of the gaze points measured while a box was onscreen and the coordinates of that box. We report the average error of each trial run and compare the error of the two eye-tracking devices using a paired sample t-test.
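The error calculation for one trial run can be sketched in Python as follows. The function names and the input format are hypothetical, and the sketch assumes that the recorded box coordinates refer to a single reference point (for example, the box center) against which the median gaze location is compared.

```python
from math import hypot
from statistics import mean, median


def target_error(gaze_points, target):
    """Error for one box: median gaze location versus box coordinates.

    gaze_points: list of (x, y) samples recorded while the box was onscreen.
    target: (x, y) coordinates of the box.
    Returns (horizontal, vertical, diagonal) absolute errors in pixels.
    """
    median_x = median(x for x, _ in gaze_points)
    median_y = median(y for _, y in gaze_points)
    dx = abs(median_x - target[0])
    dy = abs(median_y - target[1])
    return dx, dy, hypot(dx, dy)


def run_error(trials):
    """Average the per-box errors over one trial run.

    trials: list of (gaze_points, target) pairs, one per displayed box.
    """
    errors = [target_error(points, target) for points, target in trials]
    return tuple(mean(e[axis] for e in errors) for axis in range(3))


# One box at (100, 200); the median gaze location is (103, 204).
print(target_error([(101, 203), (103, 204), (110, 209)], (100, 200)))
# (3, 4, 5.0)
```

Using the median rather than the mean keeps a few stray samples, such as those recorded while the eyes are still moving toward a newly displayed box, from dominating the estimate.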

2. Evaluating automatic gaze point-to-element mapping

Our second study evaluated the accuracy of the DGP gaze point-to-element mapping method. The DGP mapping algorithm ranks interface elements by the amount of gaze that they receive. We assume that the longer an element is cumulatively viewed in an EMR, the more likely that the information within the element was used by the participant.

Each study participant was asked to perform a data retrieval task for twelve different patient cases displayed on an EMR prototype. The prototype displays laboratory test results, vital signs, and medication orders on time series plots. Each plot is contained in an interface element that is 200x80 pixels and has a 15-pixel white-space margin.

For each trial run, the participant was asked to sit in front of a computer monitor that had the EyeX device attached. We adjusted the monitor to ensure that the participant was comfortable and the eye-tracking device had a clear view of the participant’s eyes. Once situated, a two-step calibration was performed. First, the participant used the six-point Tobii EyeX Engine calibration program to calibrate the eye-tracking device to the computer monitor. Next, a nine-point, web-based calibration routine was used to calibrate the eye gaze data to the viewport of the browser. After calibration, the participant was asked to perform the following case tasks: 1) find the most recent value of specified laboratory tests, 2) identify the date of the most recent value of specified laboratory tests, and 3) determine the trend in the values of specified laboratory tests. Task 1 was used for cases 1-4, Task 2 for cases 5-8, and Task 3 for cases 9-12. There were two specified laboratory tests for cases 4, 8, and 12. All other cases had only one specified laboratory test.

We applied the DGP automatic gaze point-to-element mapping method to each case. The output from the method is a ranked list of the interface elements that the participant viewed the most. We evaluate the accuracy of the mapping method by comparing the top ranked elements for each case to the specified laboratory tests that needed to be viewed in order to complete the case tasks.
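One way to score this comparison is sketched below in Python. It reflects our reading of the evaluation rather than a documented procedure: for a case with k specified laboratory tests, the k top ranked elements are compared with the specified tests, and accuracy is the fraction of specified tests recovered across all participant-case pairs. The function names and input format are hypothetical.

```python
def case_hits(ranked_elements, needed):
    """Count needed elements that appear among the top len(needed) ranks."""
    top = set(ranked_elements[:len(needed)])
    return len(top & set(needed))


def mapping_accuracy(results):
    """Fraction of needed elements recovered across participant-case pairs.

    results: list of (ranked_elements, needed_elements) pairs, where
    ranked_elements is the mapping output ordered from most to least viewed.
    """
    correct = sum(case_hits(ranked, needed) for ranked, needed in results)
    total = sum(len(needed) for _, needed in results)
    return correct / total


results = [
    (["glucose", "sodium"], ["glucose"]),                   # hit
    (["sodium", "glucose"], ["glucose"]),                   # miss
    (["creatinine", "bun", "sodium"], ["bun", "creatinine"]),  # two hits
]
print(mapping_accuracy(results))  # 0.75
```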

3. Using eye-tracking to label training data for a Learning EMR

Our final study investigated the extent to which eye-tracking technologies can accurately determine the interface elements a physician uses when preparing for morning rounds (pre-rounding).

Each participant completed one study session in which they were asked to review ten patient cases. For each case, the participant was asked to follow a two-step protocol. In the first step, the participant was presented with a patient case and asked to use the available information to prepare for presenting the case at morning rounds. During this step, an eye-tracking device and the automatic mapping method described above were used to record what elements the participant viewed (automatic labeling). Once the participant felt that they were prepared to present the case, they were asked to start the second step of the protocol. In this step, the participant was asked to select the elements they used when preparing to present the current case at morning rounds (manual labeling). Selections were indicated using features of the prototype. Eye gaze was not recorded during this step.

In addition to the automatic mapping methods described above, GP and DGP, we also tested augmenting the mapping method with two different fixation algorithms: Dispersion-Threshold Identification (I-DT) and Area-of-Interest Identification (I-AOI)21. These algorithms combine consecutive gaze points into fixations when they meet certain criteria: a time threshold (duration) and, for I-DT, a distance threshold (dispersion). When using these algorithms, we automatically mapped the fixations—rather than individual gaze points—to interface elements. We tested these two fixation algorithms across various parameter settings for their duration (2, 3, 4, and 5 consecutive gaze points) and dispersion (20, 30, 40, 50, 60, 70, and 80 pixels) thresholds.
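For readers unfamiliar with I-DT, the Python sketch below follows a common formulation of the algorithm, with the duration threshold expressed as a number of consecutive gaze points, as in our parameter settings. It is an illustration rather than the code used in the study; the function names are hypothetical, and dispersion is taken to be the horizontal range plus the vertical range of the points in the window.

```python
def _dispersion(points):
    """Horizontal range plus vertical range of a window of (x, y) points."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def idt_fixations(points, min_points=3, max_dispersion=80):
    """Group consecutive gaze points into fixations.

    points: list of (x, y) gaze points in recording order.
    min_points: duration threshold, in consecutive gaze points.
    max_dispersion: dispersion threshold, in pixels.
    Returns a list of (centroid_x, centroid_y, n_points) fixations.
    """
    fixations = []
    start = 0
    while start + min_points <= len(points):
        end = start + min_points
        if _dispersion(points[start:end]) <= max_dispersion:
            # Grow the window while the dispersion stays under the threshold.
            while (end < len(points)
                   and _dispersion(points[start:end + 1]) <= max_dispersion):
                end += 1
            window = points[start:end]
            centroid_x = sum(p[0] for p in window) / len(window)
            centroid_y = sum(p[1] for p in window) / len(window)
            fixations.append((centroid_x, centroid_y, len(window)))
            start = end
        else:
            start += 1  # drop the first point and try again
    return fixations
```

Gaze points that never join a window of at least `min_points` samples are discarded, so a fixation-based mapping sees fewer samples than a mapping based on individual gaze points.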

After the study data were collected, we compared the automatically collected labels against the manually collected labels using Area Under the Curve (AUC) of the Receiver Operating Characteristic (ROC) and of the Precision Recall (PR) curves. To perform the analysis, time spent viewing each interface element for each case was used as the classification measure.
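The comparison can be sketched in Python using only the standard library. Viewing time per element serves as the score and the manual selection as the binary label. The ROC AUC is computed from its pairwise interpretation; for the PR curve, the sketch uses average precision, which is one common estimate of the area and is an assumption here, since other interpolation schemes give slightly different values. The function names are hypothetical.

```python
def roc_auc(scores, labels):
    """Probability that a random positive outscores a random negative.

    Ties between a positive and a negative count as one half.
    """
    positives = [s for s, l in zip(scores, labels) if l]
    negatives = [s for s, l in zip(scores, labels) if not l]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative label")
    wins = sum((p > n) + 0.5 * (p == n) for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))


def average_precision(scores, labels):
    """Step-wise estimate of the area under the precision-recall curve."""
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    n_positive = sum(1 for _, label in ranked if label)
    if n_positive == 0:
        raise ValueError("need at least one positive label")
    hits = 0
    total = 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label:
            hits += 1
            total += hits / rank  # precision at each recalled positive
    return total / n_positive


# Viewing time in seconds per element, and whether it was manually selected.
viewing_time = [5.0, 3.0, 2.0, 0.5]
selected = [1, 0, 1, 0]
print(roc_auc(viewing_time, selected))            # 0.75
print(average_precision(viewing_time, selected))  # about 0.83
```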

Eye-tracking studies were approved by the University of Pittsburgh Institutional Review Board (ID PRO16030092). Patient data displayed on the prototype EMR interface was selected from a set of de-identified ICU patient cases22.

Results

1. Evaluating a low-cost eye-tracking device

We recruited seven graduate students, two post-doctoral researchers, and one undergraduate student to participate in this study, which took place between 3/24/2016 and 3/28/2016. Four of the participants wore corrective lenses (glasses), five had uncorrected eyesight, and one had corrective eye surgery. One participant who wore corrective lenses was excluded from the study due to calibration problems.

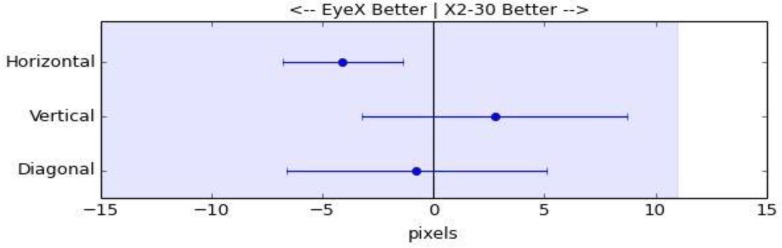

For each participant, average error was calculated in two dimensions (diagonal error) and along each axis (horizontal error and vertical error). Results are shown in Table 2 and in Figure 2. Using a two-sided paired sample t-test, we did not find a statistically significant difference between the error of the two eye-tracking devices in either the vertical or the diagonal directions (p-values: 0.313 and 0.768, respectively). The upper bounds of the 95% confidence intervals for the difference show that the average error for the lower cost device is likely no more than 9 pixels greater in the vertical direction and 5 pixels greater diagonally, magnitudes that are less than one percent of screen height. We did find a statistically significant difference in the horizontal error; however, it was the low-cost device that had less error than the more expensive device (p-value: 0.008). These results support the claim that the low-cost EyeX device is not inferior to the more expensive X2-30 device.

Table 2.

Average errors of two eye-tracking devices. Each error cell is the average of absolute median errors across fifty gaze points for each participant.

| Participant | Horizontal error, EyeX (pixels) | Horizontal error, X2-30 (pixels) | Vertical error, EyeX (pixels) | Vertical error, X2-30 (pixels) | Diagonal error, EyeX (pixels) | Diagonal error, X2-30 (pixels) |
|---|---|---|---|---|---|---|
| 1 | 8 | 9 | 21 | 10 | 23 | 15 |
| 2 | 13 | 17 | 32 | 17 | 36 | 27 |
| 3 | 9 | 10 | 16 | 17 | 19 | 22 |
| 4 | 10 | 21 | 21 | 19 | 24 | 30 |
| 5 | 16 | 15 | 22 | 12 | 30 | 21 |
| 6 | 5 | 10 | 14 | 11 | 16 | 16 |
| 7 | 9 | 14 | 12 | 14 | 16 | 22 |
| 8 | 8 | 14 | 16 | 22 | 19 | 29 |
| 9 | 11 | 16 | 14 | 21 | 20 | 28 |
| Average | 9.9 | 13.9 | 18.6 | 15.7 | 22.6 | 23.3 |
| Difference, EyeX minus X2-30 (95% CI) | -4 (-6.8, -1.4) | | 2.9 (-3.2, 8.7) | | -0.7 (-6.6, 5.1) | |

Figure 2.

Difference in error of two eye-tracking devices (EyeX minus X2-30). Error bars indicate two-sided 95% confidence intervals. The shaded area indicates error values below the non-inferiority margin (11 pixels). Since the upper limit of each error bar is below the non-inferiority margin, the data support the conclusion that the EyeX device is not inferior.
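The non-inferiority check for the vertical error can be approximately reproduced from the rounded per-participant values in Table 2, as in the Python sketch below. The critical value 2.306 is the two-sided 95% value of Student's t distribution with 8 degrees of freedom; the function name is hypothetical. Because the table values are rounded to whole pixels, the result differs slightly from the reported figures.

```python
from math import sqrt
from statistics import mean, stdev

T_CRIT_DF8 = 2.306  # two-sided 95% critical value, Student's t, 8 df
MARGIN = 11         # non-inferiority margin in pixels (1% of screen height)


def paired_ci(low_cost, high_cost, t_crit):
    """Mean paired difference (low-cost minus high-cost) with its CI."""
    diffs = [a - b for a, b in zip(low_cost, high_cost)]
    centre = mean(diffs)
    half_width = t_crit * stdev(diffs) / sqrt(len(diffs))
    return centre, centre - half_width, centre + half_width


# Vertical error per participant, copied from Table 2 (rounded pixels).
eyex_vertical = [21, 32, 16, 21, 22, 14, 12, 16, 14]
x2_30_vertical = [10, 17, 17, 19, 12, 11, 14, 22, 21]

centre, lower, upper = paired_ci(eyex_vertical, x2_30_vertical, T_CRIT_DF8)
non_inferior = upper <= MARGIN
print(round(centre, 1), round(lower, 1), round(upper, 1), non_inferior)
# 2.8 -3.2 8.7 True
```

The interval obtained from the rounded values, roughly (-3.2, 8.7), matches the interval reported in Table 2, and its upper bound lies below the 11-pixel margin.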

2. Evaluating automatic gaze point-to-element mapping

We recruited five graduate students and one post-doctoral researcher to participate in this study, which took place on 5/2/2016. Across the twelve patient cases, the automatic gaze point-to-element mapping was 88% accurate. Table 3 shows case by case results, where there are six participants and each case requires participants to look at either one or two elements (laboratory tests). Results are summed across the six participants. Correct elements refers to the number of times that the top ranked elements (based on the gaze mapping) were the elements needed to perform case tasks. To demonstrate, Case 1 had 1 element needed for the task and the top ranked element was the correct element for 3 of the 6 participants, resulting in an accuracy of 0.50.

Table 3.

Performance of the eye-tracking system across six participants.

| Case | Elements Needed | Correct Elements | Total Elements | Accuracy |

|---|---|---|---|---|

| 1 | 1 | 3 | 6 | 0.50 |

| 2 | 1 | 4 | 6 | 0.67 |

| 3 | 1 | 5 | 6 | 0.83 |

| 4 | 2 | 9 | 12 | 0.75 |

| 5 | 1 | 6 | 6 | 1.00 |

| 6 | 1 | 6 | 6 | 1.00 |

| 7 | 1 | 6 | 6 | 1.00 |

| 8 | 2 | 11 | 12 | 0.92 |

| 9 | 1 | 6 | 6 | 1.00 |

| 10 | 1 | 6 | 6 | 1.00 |

| 11 | 1 | 6 | 6 | 1.00 |

| 12 | 2 | 11 | 12 | 0.92 |

| Totals | | 79 | 90 | 0.88 |

3. Using eye-tracking to label training data for a LEMR

We recruited four University of Pittsburgh Medical Center (UPMC) ICU fellows as study participants. All four participants wore glasses. The AUC-ROC and AUC-PR (precision-recall) results for the four participants averaged across all ten patient cases are shown in Table 4. Only the best performing I-AOI and I-DT parameter settings are shown. The GP and DGP mapping approaches, which are based on individual gaze points rather than fixations, performed the best. With nearly identical performance, it does not appear that DGP offered any benefit over GP. The two fixation algorithms resulted in reduced performance; this result may be due to the exclusion of valid gaze points that did not meet the criteria to be included in a fixation.

Table 4.

Averages across all ten cases of each mapping method tested.

| Algorithm | Duration (data points) | Dispersion (pixels) | Participant 1 AUC-ROC | Participant 1 AUC-PR | Participant 2 AUC-ROC | Participant 2 AUC-PR | Participant 3 AUC-ROC | Participant 3 AUC-PR | Participant 4 AUC-ROC | Participant 4 AUC-PR | Average AUC-ROC | Average AUC-PR |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DGP | 1 | | 0.73 | 0.82 | 0.76 | 0.86 | 0.57 | 0.57 | 0.58 | 0.78 | 0.66 | 0.76 |
| GP | 1 | | 0.72 | 0.82 | 0.74 | 0.85 | 0.56 | 0.58 | 0.57 | 0.77 | 0.65 | 0.75 |
| I-AOI | 2 | | 0.66 | 0.78 | 0.72 | 0.83 | 0.55 | 0.55 | 0.54 | 0.76 | 0.62 | 0.73 |
| I-DT | 3 | 80 | 0.50 | 0.64 | 0.52 | 0.67 | 0.55 | 0.54 | 0.55 | 0.74 | 0.53 | 0.65 |

Discussion

In combination with evaluating a low-cost eye-tracking device, we developed, evaluated, and applied a method for capturing automatically what physicians are viewing in an EMR prototype. Our results support the conclusion that the low-cost eye-tracking device is not inferior to a device that is more than ten times more expensive. This result is promising because price reductions can lead to increased technology adoption23. With increased adoption, we can imagine and start developing novel EMR applications.

New eye-tracking applications that utilize low-cost devices are not without limitations. For example, the device tested does not have a consistent sampling rate. The sampling rate (number of gaze points collected per unit of time) is assumed to be constant for most algorithms that combine consecutive gaze points into fixations and is required for velocity-based algorithms, such as Velocity-Threshold Identification (I-VT)21, because velocity cannot be calculated without knowing the time between gaze points. Our solution was to consider each gaze point individually. The results from experiment 3 support this decision, as the fixation-based algorithms do not seem to provide an advantage.

If manually performed, mapping individual gaze points to interface elements would be a tedious process and an impossible process for large scale or real-time clinical applications. We developed an automated method suitable for replacing manual mapping. This method had good accuracy (88%), which seemed to improve as participants became familiar with the EMR interface. We could test this hypothesis by repeating the experiment with a randomized ordering of the cases/case tasks. Even at current accuracy, this automatic mapping method has potential to save time and resources on eye-tracking data analysis and, as mentioned, opens up the possibility of large scale and real-time application of eye-tracking in the hospital.

We piloted the use of eye-tracking to collect LEMR training data during a simulation of ICU pre-rounding. Precision and recall of this approach were sometimes strong, as shown by AUC-PR results. However, AUC-ROC results were not as conclusive. One issue may be our classification measure which is based on viewing time (we assume that physicians view the elements that they find most useful the longest). This would be fine if all elements required the same amount of time to comprehend; however, it is reasonable to assume that different laboratory tests, vital signs, medication orders, etc., require different viewing times. We will experiment with element specific viewing times in a future study with a larger dataset.

The performance of automatic mapping when used to label LEMR training data varied by participant, suggesting that perhaps the quality of the manually labeled gold standard varies by participant. For example, Participant 3 in Table 4 did not select any medications for one of the patient cases. Likely, he simply forgot to select them for this case because nearly all active medications were selected by all participants for all cases other than this instance. We have not yet evaluated agreement of the manual labels between participants, but doing so in future work may improve the evaluated performance of the automatic labels, if we find that some participants provide a better labeled gold standard than others.

Using eye-tracking to automatically label LEMR training data is just one example of potential clinical applications. As mentioned in the introduction, an eye-tracking device could be used to determine what EMR information a physician has seen for a patient case. If information seen turns out, in certain situations, to be a reasonable approximation for what information a physician knows about a patient case, then we can use this knowledge in providing clinical decision support. For example, a minor allergy alert could be silenced if a physician had viewed the patient’s allergy list before starting the order. Such a capability opens up many possible eye-tracking applications which could decrease the burden of using an EMR and potentially improve patient outcomes and physician experience with the EMR.

Conclusions

We investigated approaches for addressing two barriers to the widespread adoption of eye-tracking. First, the cost of eye-tracking devices is dropping rapidly, and our results support a low-cost eye-tracking device being non-inferior to a much more expensive device. Second, we developed an automatic mapping method that may be a suitable substitute for current manual eye-tracking data analysis, which is very time consuming. Finally, we applied eye-tracking in a pilot study to collect training data for a LEMR. The results provide initial evidence to support further development of clinical applications, including especially clinical decision support. We anticipate that these advances, as well as many others yet to be developed, will facilitate use of eye-tracking technology in decision support.

Acknowledgements

We thank Dr. Milos Hauskrecht for providing the ICU data that were extracted and processed in another project. Research reported in this publication was supported by the National Library of Medicine of the National Institutes of Health under award numbers T15LM007059 and R01LM012095, and by the National Institute of General Medical Sciences of the National Institutes of Health under award number R01GM088224. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

References

- 1.Blondon K, Wipfli R, Lovis C. Use of eye-tracking technology in clinical reasoning: a systematic review. Stud Health Technol Inform. 2015;210:90–94. [PubMed] [Google Scholar]

- 2.Asan O, Yang Y. Using eye trackers for usability evaluation of health information technology: a systematic literature review. JMIR Hum Factors. 2015;2:e5. doi: 10.2196/humanfactors.4062. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Segall N, Taekman J, Mark J, et al. Coding and visualizing eye tracking data in simulated anesthesia care. Proc Hum Factors Ergon Soc Annu Meet. 2007;51:765–770. [Google Scholar]

- 4.King AJ, Cooper GF, Hochheiser H, et al. Development and preliminary evaluation of a prototype of a learning electronic medical record system. AMIA Annu Symp Proc. 2015:1967–75. [PMC free article] [PubMed] [Google Scholar]

- 5.Jacob R, Karn K. Eye tracking in human-computer interaction and usability research: ready to deliver the promises. The mind’s eye: Cognitive and applied aspects of eye movement researchognitive and applied aspects of eye movement research. 2013. pp. 573–604.

- 6.Wedel M, Pieters R. A review of eye-tracking research in marketing. Review of marketing research. 2008. pp. 123–147.

- 7.Nielson JA, Mamidala RN, Khan J. In-situ eye-tracking of emergency physician result review. Stud Health Technol Inform. 2013;192:1156.

- 8.Rick S, Calvitti A, Agha Z, et al. Eyes on the clinic: accelerating meaningful interface analysis through unobtrusive eye tracking. Pervasive Computing Technologies for Healthcare (PervasiveHealth), 2015 9th International Conference on; 2015. pp. 213–216.

- 9.Doberne JW, He Z, Mohan V, et al. Using high-fidelity simulation and eye tracking to characterize EHR workflow patterns among hospital physicians. AMIA Annu Symp Proc. 2015. pp. 1881–9.

- 10.Gold JA, Stephenson LE, Gorsuch A, et al. Feasibility of utilizing a commercial eye tracker to assess electronic health record use during patient simulation. Health Informatics J. 2016;22:744–757. doi: 10.1177/1460458215590250.

- 11.Moacdieh N, Sarter N. Clutter in electronic medical records: examining its performance and attentional costs using eye tracking. Hum Factors. 2015;57:591–606. doi: 10.1177/0018720814564594.

- 12.Forsman J, Anani N, Eghdam A, et al. Integrated information visualization to support decision making for use of antibiotics in intensive care: design and usability evaluation. Inform Health Soc Care. 2013;38:330–53. doi: 10.3109/17538157.2013.812649.

- 13.Eghdam A, Forsman J, Falkenhav M, et al. Combining usability testing with eye-tracking technology: Evaluation of a visualization support for antibiotic use in intensive care. Studies in Health Technology and Informatics. 2011. pp. 945–949.

- 14.Erol Barkana D, Açık A, Duru DG, et al. Improvement of design of a surgical interface using an eye tracking device. Theor Biol Med Model. 2014;11:S4. doi: 10.1186/1742-4682-11-S1-S4.

- 15.Singh H, Spitzmueller C, Petersen NJ. Information overload and missed test results in electronic health record-based settings. JAMA Intern Med. 2013;173:702–704. doi: 10.1001/2013.jamainternmed.61.

- 16.Hall A, Walton G. Information overload within the health care system: a literature review. Health Info Libr J. 2004;21:102–108. doi: 10.1111/j.1471-1842.2004.00506.x.

- 17.Ash JS, Berg M, Coiera E. Some unintended consequences of information technology in health care: the nature of patient care information system-related errors. J Am Med Informatics Assoc. 2004;11:104–112. doi: 10.1197/jamia.M1471.

- 18.Reeder RW, Pirolli P, Card SK. WebEyeMapper and WebLogger: tools for analyzing eye tracking data collected in web-use studies. CHI ’01 Ext Abstr Hum Factors Comput Syst. 2001:19–20.

- 19.Beymer D, Russell DM. WebGazeAnalyzer: a system for capturing and analyzing web reading behavior using eye gaze. CHI ’05 Ext Abstr Hum Factors Comput Syst. 2005:1913–1916.

- 20.Wright MC, Dunbar S, Moretti EW, et al. Eye-tracking and retrospective verbal protocol to support information systems design. Proc Int Symp Hum Factors Ergon Healthc. 2013;2:30–37.

- 21.Salvucci DD, Goldberg JH. Identifying fixations and saccades in eye-tracking protocols. Proceedings of the Eye Tracking Research and Applications Symposium. 2000. pp. 71–78.

- 22.Visweswaran S, Mezger J, Clermont G, et al. Identifying deviations from usual medical care using a statistical approach. AMIA Annu Symp Proc. 2010:827–831.

- 23.Gruber H, Verboven F. The diffusion of mobile telecommunications services in the European Union. Eur Econ Rev. 2001;45:577–588.