Abstract

Objective:

In the course of clinical treatment, a physician requires several medical imaging media in order to obtain accurate and complete information about a patient. Medical image registration techniques can provide richer diagnostic and treatment information to doctors. This review aims to provide a comprehensive reference source for researchers by treating image registration as an optimization problem.

Methods:

The essence of image registration is to associate two or more different images spatially and to obtain the transformation that describes their spatial relationship. For medical image registration, the process is not absolute. Its core purpose is to find the transformation relationship between different images.

Result:

The major steps of image registration include geometric transformation, conversion of the images to be combined, image similarity measurement, iterative optimization, and interpolation.

Conclusion:

The contribution of this review is to sort the related research methods in image registration, which can provide a brief reference for researchers working on image registration.

Keywords: Medical image, multimodal, optimization problem, registration assessment, registration

1. INTRODUCTION

In the course of clinical treatment, a physician requires several medical imaging media in order to obtain accurate and complete information about a patient. For example, a CT scan provides information on the structure of bones, an MRI scan provides information on the structure of tissues such as muscles and blood vessels, while ultrasound images focus on organs, lesions, and luminal structures. With the development of digital imaging, medical images can be converted into digital information, which allows them to be processed by computer. Integrating the information from these imaging media requires image registration techniques. Such techniques essentially correlate images from different temporal and spatial sources in order to ensure spatial consistency between corresponding points in all images, in particular by matching anatomical points so that a physician obtains improved and more detailed information. Several reviews of registration are available [1-5]. At the same time, medical image registration techniques serve as the fundamental basis for procedures such as image-guided radiation therapy, image-guided radiation surgery, and image-guided minimally invasive treatments [6-8]. Image registration can also be used in retinal imaging to achieve a comprehensive description of retinal morphology [9]. However, differences in imaging principles and modalities, in sampling time, and in the physical state of a patient must be considered when various medical media are accessed simultaneously, and they remain serious issues for image registration techniques. Improving the accuracy, time sensitivity, and robustness of image registration is the focus of current investigations. Further, automatic diagnosis needs to integrate more medical information, which has to be registered first.

The principle of image registration is to find the spatial transformation that correlates the images, which converts the registration problem into a mathematical parameter optimization problem. From the perspective of iterative parameter optimization, this review classifies and summarizes the existing algorithms used in medical image registration. The organization of this review is as follows: Section 2 describes the issues in image registration, Section 3 introduces cross-modal conversions used in images and interpolation algorithms, Section 4 provides an overview on the geometric transformation of images, Section 5 describes the methods used in detecting similarity between images, Section 6 introduces iterative optimization algorithms, and Section 7 evaluates the results from each of the registration methods.

2. Description of issues in image registration

Image registration is one of the basic tasks of image processing. It is mainly used to match images acquired at different times, by different sensors, from different perspectives, or under different shooting conditions. Any medical image registration can be divided into three steps:

Determine the transformation between the source image and the target image;

Measure the degree of similarity between the source image and the target image;

Adopt an optimization method so that the similarity measure reaches its optimal value more reliably and more quickly.

Combining the above steps with digital image technology, the image registration problem can be transformed into an optimization problem.

The aim of image registration is to search for the transformation correlation operator g between two images I1 and I2, which are used as examples for the registration process, and obtain the optimized measurement of similarity or function E, which ultimately serves to obtain the optimized transformation gopt, represented as:

$$g_{opt}: I_1 \rightarrow I_2 = \operatorname{optimum}\big(E(I_1 - g(I_2))\big) \qquad (1)$$

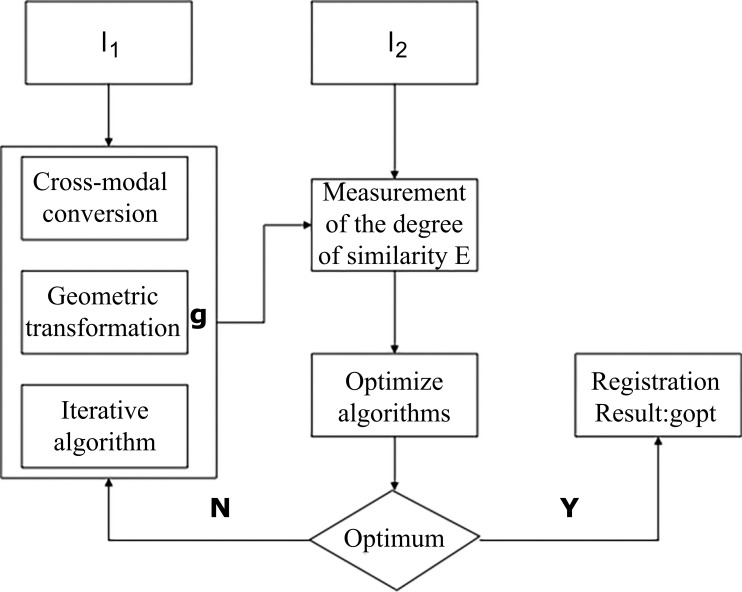

The current conventional registration process can be separated into several main steps: (1) cross-modal conversion, (2) geometric transformation, (3) measurement of the degree of similarity, and (4) iterative optimization search, as illustrated in Fig. (1). First, image I2 undergoes cross-modal conversion and geometric transformation to arrive at g’(I2). After transformation, the pixel positions of g’(I2) may not fall on integer pixel coordinates; therefore, interpolation is required in order to evaluate the pixel values. If interpolation is necessary, the interpolated g’(I2) is taken as g(I2); otherwise, g’(I2) is directly equivalent to g(I2). Then, the similarity between g(I2) and I1 is measured in order to obtain the measurement indicator, or function E. An optimization algorithm then uses the similarity indicator (function E) to guide the search for the transformation, which simplifies similarity detection and enhances the efficiency of registration. When the similarity indicator (function E) reaches its optimum, the optimal transformation gopt is obtained. Thus, image registration becomes a parameter optimization problem for the transform operator g.

Fig. (1). Process of image registration.
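The loop in Fig. (1) can be sketched in a few lines of Python. This is only a minimal illustration under strong assumptions: the images share one modality, the transform g is restricted to integer translations (so no interpolation is needed), E is the sum of squared differences, and the optimizer is an exhaustive search. The names `translate`, `ssd`, and `register` are hypothetical and do not come from any cited method.

```python
import numpy as np

def translate(image, shift):
    """Geometric transformation g: integer translation (dy, dx) with zero fill."""
    dy, dx = shift
    h, w = image.shape
    out = np.zeros_like(image)
    dst_y = slice(max(dy, 0), min(h + dy, h))
    dst_x = slice(max(dx, 0), min(w + dx, w))
    src_y = slice(max(-dy, 0), min(h - dy, h))
    src_x = slice(max(-dx, 0), min(w - dx, w))
    out[dst_y, dst_x] = image[src_y, src_x]
    return out

def ssd(a, b):
    """Similarity indicator E: sum of squared differences (lower is better)."""
    return float(np.sum((a - b) ** 2))

def register(fixed, moving, max_shift=5):
    """Search all integer shifts and keep the one with the best E."""
    best_shift, best_e = (0, 0), np.inf
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            e = ssd(fixed, translate(moving, (dy, dx)))
            if e < best_e:
                best_shift, best_e = (dy, dx), e
    return best_shift, best_e

# Synthetic example: I2 is I1 displaced by (3, -2) pixels.
fixed = np.zeros((32, 32))
fixed[10:18, 12:20] = 1.0
moving = translate(fixed, (3, -2))
g_opt, e_opt = register(fixed, moving)  # g_opt undoes the displacement
```

In this toy case the search recovers the inverse displacement and E drops to zero; real registrations replace each of the three functions with the more elaborate choices discussed in the following sections.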

In each specific case of image registration, not all the aforementioned steps are absolutely required. Appropriate adjustments can be made to the methods based on the specific application. For example, a cross-modal conversion process would not be necessary if the images to be registered have the same modality. Similarly, if registration employs manual identification, the similarity detection step can be omitted.

At present, another difficulty encountered in image registration is the assessment of algorithms. The assessment is performed for determining the performance level and the application scope for a given registration method. In addition, the assessment of results from a registration illustrates its potential in clinical applications and scope for improvement.

3. Cross-modal conversion and interpolative algorithms

As a unique case of parameter optimization, image registration can primarily be characterized by its input variable, which is image data. Medical images can have multiple modalities because of the differences in imaging principles and instruments, ultimately resulting in the complexity of the input variable. The cross-modal conversion of images with different modalities into the same modality reduces registration complexity.

Medical imaging provides information in several modalities for clinical diagnosis, including CT images, MRI images, X-ray images, and ultrasound images. For the registration of images with different modalities, it is often necessary to convert them into images having the same modality. This conversion exploits the physical principles used in producing the images, such that images with different modalities can be converted to images with a single modality. The physical basis of medical image registration is that images with different modalities describe the same anatomical structures of the body.

Minimally invasive interventions or surgeries usually rely on fluoroscopic and ultrasound images. Because fluoroscopic images consist of projections only, it is difficult for a physician to accurately locate the three-dimensional coordinates of anatomical structures. Therefore, the conversion of CT and MRI images into X-ray images, or vice versa, is of great significance in image-guided minimally invasive surgeries.

Presently, ray casting and maximum intensity projection are the main methods of generating X-ray images from CT or MRI images [10, 11]. However, these methods have two shortcomings. First, MRI images cannot be used to simulate and generate X-ray images, and second, a partial loss of information occurs when a highly precise CT image is projected into an X-ray image. To address these shortcomings, some researchers have improved the ray-casting and maximum intensity projection methods to achieve more realistic projections [12, 13]. Alternatively, X-ray images can be converted into CT or MRI images by using reverse algorithms [14, 15].
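As a rough illustration of projecting a volume into a two-dimensional image, the sketch below applies a maximum intensity projection and a plain ray sum along one axis of a NumPy array. It assumes parallel rays aligned with an array axis and ignores the attenuation physics of a real X-ray simulation; the function names are hypothetical.

```python
import numpy as np

def maximum_intensity_projection(volume, axis=0):
    """Keep the brightest voxel met along each parallel ray."""
    return volume.max(axis=axis)

def ray_sum_projection(volume, axis=0):
    """Sum the voxels along each parallel ray (a crude stand-in for ray casting)."""
    return volume.sum(axis=axis)

# Toy volume with two structures lying on the same ray.
volume = np.zeros((4, 5, 5))
volume[1, 2, 2] = 100.0
volume[3, 2, 2] = 40.0

mip = maximum_intensity_projection(volume)
ray_sum = ray_sum_projection(volume)
```

In the toy volume the two structures share a ray, so the maximum intensity projection keeps only the brighter one, which is one simple way to see the partial loss of information mentioned above.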

Another common conversion of image modality is the conversion of CT or MRI images into ultrasound images. Roche used the magnetic resonance intensity and gradient information in MRI images to predict the grayscale of ultrasound images, but omitted some noise effects such as signal attenuation and speckle [16]. Similarly, Wein used a physical model of ultrasound image production to convert CT images into ultrasound images in order to realize the registration of CT–ultrasound images [17].

Another means of solving the cross-modal image registration problem is to convert all images into the same modality, which simplifies the problem. There are two methods of performing this conversion. The first method involves converting an image into an existing modality, as described above. The second method involves converting all modalities into a modality that is different from those of all images to be registered. For example, Michel and Paragios used a mixture-of-experts model to learn the conditional probabilities of the targets in order to achieve cross-modal conversion of images [18]. Although the modalities of the images to be registered vary, all images show the same anatomical structure. Therefore, such structures can be isolated and extracted into single-modal images, after which single-modal image registration can be used to complete the cross-modal registration [19, 20].

After cross-modal conversion and spatial transformation, the position of a specified pixel may not lie on an integer pixel position in the resulting image; in other words, it lies at a non-grid position. Therefore, it is necessary to estimate the gray value of the resulting image at the new projected position. The goal of the interpolation process is to regenerate an image at the new projected positions, and a suitable interpolation process can increase the accuracy and speed of image registration.

The most frequently used interpolation techniques include nearest-neighbor interpolation, linear interpolation, polynomial interpolation, and Hanning-windowed sinc interpolation. The simplest method in this list is nearest-neighbor interpolation, which takes the gray value of the nearest pixel as the gray value of the sampling point. Linear interpolation is relatively simple as well: it takes the gray values of the neighboring pixels or voxels along each spatial direction and interpolates in every direction to enhance the precision of the overall interpolation. However, where the gray values change drastically, linear interpolation blurs edges, which affects the accuracy of interpolation. Therefore, Lagrange or Gaussian interpolation and Fourier or wavelet transformation should be used when non-smooth or non-robust gray values exist in images [21].
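The two simplest schemes can be written down directly. The following is a minimal two-dimensional sketch; the function names and the clamping at the image border are illustrative assumptions.

```python
import numpy as np

def nearest_neighbor(image, y, x):
    """Gray value of the grid pixel closest to the non-grid position (y, x)."""
    h, w = image.shape
    yi = min(max(int(round(y)), 0), h - 1)
    xi = min(max(int(round(x)), 0), w - 1)
    return float(image[yi, xi])

def bilinear(image, y, x):
    """Linear interpolation along x, then along y, from the four neighbors."""
    h, w = image.shape
    y0 = min(max(int(np.floor(y)), 0), h - 2)
    x0 = min(max(int(np.floor(x)), 0), w - 2)
    ty, tx = y - y0, x - x0
    top = (1 - tx) * image[y0, x0] + tx * image[y0, x0 + 1]
    bottom = (1 - tx) * image[y0 + 1, x0] + tx * image[y0 + 1, x0 + 1]
    return float((1 - ty) * top + ty * bottom)

img = np.array([[0.0, 10.0],
                [20.0, 30.0]])
center_value = bilinear(img, 0.5, 0.5)          # average of the four pixels
nearest_value = nearest_neighbor(img, 0.2, 0.8)  # snaps to pixel (0, 1)
```

Nearest-neighbor interpolation never creates new gray values, whereas bilinear interpolation averages across an edge, which is the blurring effect noted above.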

4. Geometric transformation

Geometric transformation in image registration corresponds to the parameter g to be optimized in parameter optimization. Based on the presence or absence of uncertainty in the parameter, the geometric transformation of an image can be categorized into two main types: linear transformation and non-linear transformation. Choosing the correct type of parameter can enhance the efficiency and accuracy of registration.

4.1. Linear Transformation

Linear transformation is performed by calculating translational and rotational vectors. It is mainly used in situations where the internal structure of an image does not show obvious distortion or deformation, for example in the registration of bone structures and tissues [22]. The main disadvantage of linear transformation is that it cannot handle local or soft tissue deformation. Linear transformations of an image primarily include rigid transformation, affine transformation, and projective transformation. They consist of rotation, translation, and scaling, under the constraint that structures remain unchanged and the integrity and consistency of the structure are maintained. The time sensitivity of linear transformation is relatively high.
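In two dimensions, these transformations are conveniently written as 3×3 matrices acting on homogeneous coordinates. The sketch below is illustrative only; the helper names and the parameterization (rotation angle, translation, per-axis scale) are assumptions rather than a convention taken from the cited works.

```python
import numpy as np

def rigid_matrix(theta, tx, ty):
    """Rotation by theta (radians) followed by translation (tx, ty)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, tx],
                     [s,  c, ty],
                     [0.0, 0.0, 1.0]])

def affine_matrix(theta, tx, ty, sx, sy):
    """Rigid transform with an additional per-axis scaling (sx, sy)."""
    m = rigid_matrix(theta, tx, ty)
    m[:2, :2] = m[:2, :2] @ np.diag([sx, sy])
    return m

def apply_transform(matrix, points):
    """Map an (N, 2) array of points; the division also covers projective matrices."""
    homogeneous = np.hstack([points, np.ones((len(points), 1))])
    mapped = homogeneous @ matrix.T
    return mapped[:, :2] / mapped[:, 2:3]

points = np.array([[1.0, 0.0], [0.0, 2.0]])
rigid = apply_transform(rigid_matrix(np.pi / 2, 2.0, 3.0), points)
scaled = apply_transform(affine_matrix(0.0, 0.0, 0.0, 2.0, 0.5), points)
```

A rigid transform has three parameters in two dimensions and preserves distances between points, while the affine example changes them; this small parameter count is what keeps linear registration fast.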

4.2. Non-linear Transformation

With regard to tissues or structures in the human body, deformation of soft tissue can lead to local deformations that will further affect the accuracy of linear transformation. In contrast to linear transformation, the greatest advantage of non-linear transformation is that it has the capacity for registering local deformations, thus achieving more accurate registration. Non-linear image registration can be further categorized into two subtypes based on the physical basis of registration: registration based on a physical model, and registration based on basis functions.

4.2.1. Non-linear Registration Based on Physical Model

Non-linear transformations can be based on physical models, such as linear elasticity, fluid flow, and optical flow, and on statistical models that are based on medical information. Linear elasticity transformation is predominantly based on stress and strain theory, in which a contact force is applied to a given position in an orthogonal plane, and stress is used to confirm deformation in the image [23]. Deformation can be determined by means of solid elasticity [24], which views an object as a deformable body whose deformations are introduced by the application of an external force, or by means of the finite element method [25], which divides the image into several regions, each of which is assigned an organizational property. A necessary assumption for elastic deformation is that the changes in the image to be registered occur only within a small range. Thus, it is clearly inapplicable to tissues or organs that are subjected to large deformations. Christensen et al. proposed a fluid flow model for image registration [26]. The basic idea of the fluid flow model is to view an image as a fluid in continuous motion toward the other image and, by detecting the similarity between the two images, to convert the process of image registration into a motion problem [27]. The optical flow registration algorithm, which is based on the principle of intensity conservation in an image, is another algorithm that is similar to fluid flow [28]. Its main application lies in tracking objects with small-scale movement in temporally different images [29, 30]. Furthermore, if an image is transformed from a grayscale image to a gradient image, this method can be used in the registration of images with different modalities. Although the tissues of each individual differ, most tissues conform to a statistical model. Therefore, some researchers have proposed non-linear methods of image registration based on statistical models; these methods are based either on the distribution model [31] or the portfolio theory [32].

Elastic models are used in cases with small deformations, but they are of limited use in medical image registration, mainly because soft tissues undergo large deformations. Fluid flow or optical flow not only solves this problem of large deformations, but also preserves the topological structures of tissue to the maximum extent. However, fluid flow registration does not define the organizational components of soft tissues; that is, in certain situations it does not register soft tissue deformation realistically. In practice, tissue deformation is a very complex physical behavior, and soft tissues can be assumed to be an elastic or viscoelastic material only under specific conditions, as soft tissues generally exhibit anisotropy. Therefore, a model that includes the biomechanics of soft tissues more precisely is required.

4.2.2. Image Registration Using Basis Functions

In contrast to physical models, image registration based on basis functions does not draw support from physical models, but rather registers the deformed regions by setting the coefficients of the basis functions. Its mathematical structure relies on the function differential theory [33] or the approximation theory [34]. Basis functions used in image registration mainly include radial basis functions, thin-plate splines (TPS), and B-splines.

(1) Radial basis function

A radial basis function is defined as a function of the distance between the interpolation point and the center, or identification point, of the basis function [35]. Registration using radial basis functions works by setting the function approximate as 0. Examples of radial basis functions are the Gaussian and the multiquadric radial basis functions. Medical image registration mainly utilizes radial basis functions centered on the identification points in medical images [36].
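A small sketch may make the landmark-based use of such functions concrete. Here a Gaussian kernel interpolates known landmark displacements to arbitrary positions; the kernel width `sigma`, the function names, and the absence of an additional affine term are simplifying assumptions made for illustration.

```python
import numpy as np

def gaussian_rbf(r, sigma):
    """Gaussian basis function of the distance r to a landmark."""
    return np.exp(-(r ** 2) / (2.0 * sigma ** 2))

def fit_rbf(landmarks, displacements, sigma=10.0):
    """Solve for weights so the field reproduces the landmark displacements."""
    dists = np.linalg.norm(landmarks[:, None, :] - landmarks[None, :, :], axis=-1)
    return np.linalg.solve(gaussian_rbf(dists, sigma), displacements)

def evaluate_rbf(points, landmarks, weights, sigma=10.0):
    """Displacement at arbitrary points as a weighted sum of basis functions."""
    dists = np.linalg.norm(points[:, None, :] - landmarks[None, :, :], axis=-1)
    return gaussian_rbf(dists, sigma) @ weights

landmarks = np.array([[0.0, 0.0], [20.0, 0.0], [0.0, 20.0], [20.0, 20.0]])
displacements = np.array([[1.0, 0.0], [0.0, 2.0], [-1.0, 0.0], [0.0, -2.0]])
weights = fit_rbf(landmarks, displacements)
at_landmarks = evaluate_rbf(landmarks, landmarks, weights)
far_away = evaluate_rbf(np.array([[200.0, 200.0]]), landmarks, weights)
```

The fitted field matches the prescribed displacement at every landmark and decays toward zero far from them, so each landmark influences only its neighborhood.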

(2) Thin-plate splines

TPS uses the movement of a series of control points toward a specified direction, and performs an adequate amount of similarity detection [37, 38]. TPS can be applied to interpolation in multiple modalities, and has an inherent smooth property. It often makes use of homologous features, anatomical identifications, and other characteristics of an image that can be manually registered. TPS is a global registration method; however, it cannot describe local deformations with high accuracy.

(3) B-splines

B-splines were proposed by Schoenberg in 1946 [39], and they have been widely used in several areas since then. Similar to TPS, B-splines also optimize the projective correlation between images by solving for a series of parameters [40]. The B-splines used in medical image registration mainly consist of linear interpolation B-splines, convex nuclear B-splines, and cubic B-splines, of which cubic B-splines are the most commonly used [41]. B-splines have also been used in registration to analyze plantar pressure image sequences and obtain geometric transformation models, thereby enhancing the methodology [42].
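For the cubic case, the displacement at a position depends on only four neighboring control points per dimension. The one-dimensional sketch below uses the standard uniform cubic B-spline weights; the control-point indexing, the grid spacing, and the function names are assumptions chosen for brevity.

```python
import numpy as np

def cubic_bspline_weights(u):
    """Weights of the four control points for a fractional offset u in [0, 1)."""
    return np.array([
        (1 - u) ** 3,
        3 * u ** 3 - 6 * u ** 2 + 4,
        -3 * u ** 3 + 3 * u ** 2 + 3 * u + 1,
        u ** 3,
    ]) / 6.0

def ffd_displacement(x, control, spacing):
    """1-D displacement at x from control points spaced `spacing` apart."""
    i = int(np.floor(x / spacing))
    u = x / spacing - i
    return float(cubic_bspline_weights(u) @ control[i:i + 4])

control = np.zeros(8)
control[6] = 5.0                      # move a single control point
spacing = 10.0
near = ffd_displacement(42.5, control, spacing)   # inside its support
far = ffd_displacement(12.5, control, spacing)    # outside its support
```

Moving one control point changes the deformation only within its support, which illustrates why such basis functions can describe local deformations at a limited computational cost.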

As image registration using basis functions does not depend on physical models, but rather on building a model from interpolation or approximation, the registration process is not affected by changes in physical or biological aspects. Most basis functions are compactly supported and can thus accurately describe local deformations and reduce the computational complexity and the time used in optimization. Identification points are used in registration conducted using radial basis functions, which makes the method applicable for registering images of different modalities, and an analytical solution is achieved. B-splines and TPS have similar algorithms, both of which do not measure vectors by using distance, but rather by using a multimodal basis function consisting of a single variable linear combination.

5. Methods in measuring similarity

The similarity measurement indicator E is required during parameter optimization in order to determine whether the optimal parameters have been reached. The methods of measuring similarity in image registration are mostly used to determine whether the registration process was optimal. Based on the differences in the feature details of an image, the common methods of similarity measurement are feature-based and grayscale-based methods.

5.1. Features-based Measurement of Similarity

The extraction of features can drastically reduce the amount of information in a given image, and this increases the speed of similarity detection. Examples include global-to-local matching [43], hierarchical model refinement [44], and dual-bootstrap [45]. Based on the nature of the selected features, the method can be further divided into two subtypes: registration based on artificial identification and registration based on anatomical identification.

5.1.1. Artificial Identification Points

Artificial identification points are markers that are artificially implanted on patients, which can be seen and used as features during the sampling process of images. These identifications can be a three-dimensional framework [46], or markers implanted in bone structures [47], in soft tissues [48], or adhered to the skin [49]. These identification points are usually spherical in order to facilitate their recognition in images. With the exception of the relatively stable positions of the artificial identification points implanted on bones, those implanted on the skin or soft tissues usually undergo movement or translation because of deformations in the structure of soft tissue, thus resulting in deviations in positioning. In spite of this disadvantage, the registration process based on artificial identification points is still being used on tissues that do not have the structural organization of bones, such as the liver, the lungs, the prostate, and the pancreas [49].

Measurement of similarity by artificial identification points can be completed using specially designed identification points. Therefore, this method can be very easily performed, requiring only a simple optimization using the least squares method, making the process rapid as well. Thus, the key to measuring similarity by using artificial identification is the rapid and precise recognition of the marker. However, at present, there are two issues with this method. First, error in registration is smaller when identification points are closer to the target region and vice versa [50]. Thus, in special cases where identification points cannot be placed at ideal positions, the accuracy of registration is reduced. Second, this method requires the implantation and stabilization of identification points before image sampling, which needs additional surgical procedures on the patient.
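One common closed-form way to carry out such a least-squares fit for a rigid transform uses the singular value decomposition of the cross-covariance of the two point sets. The sketch below is a generic illustration of that approach, not the procedure of any specific cited study; the function name and the synthetic marker coordinates are assumptions.

```python
import numpy as np

def fit_rigid(source, target):
    """Least-squares rotation R and translation t with target ≈ source @ R.T + t."""
    src_c, tgt_c = source.mean(axis=0), target.mean(axis=0)
    h = (source - src_c).T @ (target - tgt_c)       # cross-covariance matrix
    u, _, vt = np.linalg.svd(h)
    d = np.sign(np.linalg.det(vt.T @ u.T))          # guard against reflections
    correction = np.diag([1.0] * (source.shape[1] - 1) + [d])
    r = vt.T @ correction @ u.T
    t = tgt_c - r @ src_c
    return r, t

# Four synthetic, non-coplanar marker positions and a known motion.
source = np.array([[0.0, 0.0, 0.0], [10.0, 0.0, 0.0],
                   [0.0, 10.0, 0.0], [0.0, 0.0, 10.0]])
angle = np.deg2rad(30.0)
r_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle),  np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([5.0, -3.0, 2.0])
target = source @ r_true.T + t_true

r_est, t_est = fit_rigid(source, target)
residual = np.sqrt(np.mean(np.sum((source @ r_est.T + t_est - target) ** 2, axis=1)))
```

With noise-free markers the known motion is recovered and the root-mean-square residual at the markers is essentially zero; with real markers the residual reflects localization error.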

5.1.2. Anatomical Identifications

Anatomical identifications are landmarks that are clearly visible in the image itself and can be used as features during the sampling process of images. The method of measuring similarity based on anatomical identification calculates the “distance” between the same set of anatomical identifications in the two images, and uses these “distances” to determine the degree of similarity. Here, “distances” are usually Euclidean distances, but other distances, such as the Mahalanobis distance, or a link that relates the identifications to transformational means can also be used. The anatomical identifications can be further classified into feature points, feature curvatures, and feature surfaces or contours.

Based on different anatomical identifications, image registration methods based on anatomical identification to measure similarity can be classified into similarity between feature point / feature point, feature curvature / feature curvature, and feature surface / feature surface.

The method of similarity measurement of feature point / feature point first recognizes the clear anatomical structures in an image, and then positions the corresponding feature points through linear or non-linear geometric transformations to correlate the images, similar to some of the results published previously [6, 51]. The similarity measurement of feature point / feature point mainly consists of two steps: recognition of feature points and registration of feature points. In contrast to conventional images, the information obtained from medical images is not abundant [52], and the internal similarities of an image can lead to a lack of clarity in feature points. Thus, feature points should have obvious features that are not easily mistaken. The Harris detection algorithm uses the detection of corners to achieve the detection of features in different images [53]. The application of the Harris detection algorithm in medical imaging reduces uncertainty [54] and realizes linear or non-linear transformation. The focus of studies on feature points is the analysis of scale spaces.

Each point is represented by a descriptor. The “corresponding cost” is the “distance” between descriptors [55-57]. The transformation between images is determined by the minimum cost between descriptors.

The scale invariant feature transform (SIFT) algorithm proposed by Lowe [58] has been widely used in medical image registration [59, 60]. Han used an improved SIFT operator, the SURF operator [61], to conduct the registration in lung CT [62]. Although this algorithm is widely used in the extraction of feature points in medical images, the diverse and complex nature of medical imaging dictates that the algorithm is not applicable for all types of images. Some researchers have established specific ways of feature point recognition for the images of specific organs [63].

In most point-based registration methods, the feature points are treated independently. In [64], the positional relationship between them is also considered: each feature point is defined as a node, which enhances the accuracy of registration.

Measurement of similarity from feature curvatures / feature curvature [65, 66] or feature surface / feature surface [67] is achieved by searching the image for characteristic curves or surfaces, and then determining the similarity by using these features. In comparison to the measurement method using feature point/feature point, these two methods have the advantage of easy extraction. Feature-based similarity measurements require the feature to possess robustness; therefore, an incorrect selection of feature will lead to an outlier. Such outliers can lead to catastrophic consequences in image registration because of the high registration accuracy required for medical images. The establishment of a correct correlation value for registration and detection as well as the elimination of outliers is another problem in feature-based similarity measurement that needs to be resolved.

The most important step in methods used for detecting similarities between images is the automatic division and recognition of identification features, and the correlation or registration of these features in various images. Some researchers have proposed methods to reduce the error rate in recognizing features [68, 69]. The most direct method in correlating features is to take advantage of the feature descriptor, through a strategic selection of features that satisfy certain requirements. The simplest requirement is a similarity threshold; the surpassing of the threshold reflects the success in feature registration. Another strategy is to measure the Euclidean distance of candidate features, which are observed around each identifiable feature, and select features by using that Euclidean distance as a feature descriptor. However, as this method allows the inclusion of Pseudo-features, researchers proposed another registration method based on Euclidean distance and feature similarities [70], which selects the best and second-best points by using Euclidean distances and then calculates the feature similarities based on those points.
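The idea of comparing the best and second-best candidates can be sketched as follows. Note that this sketch substitutes a simple distance-ratio criterion for the feature-similarity step of [70], so it should be read as a related illustration rather than as that method; the descriptors are made-up three-element vectors and the threshold `ratio` is an assumed value.

```python
import numpy as np

def match_descriptors(desc_a, desc_b, ratio=0.8):
    """Accept a match only if the best candidate is clearly closer than the second best."""
    dists = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=-1)
    order = np.argsort(dists, axis=1)
    matches = []
    for i in range(len(desc_a)):
        best, second = order[i, 0], order[i, 1]
        if dists[i, best] < ratio * dists[i, second]:
            matches.append((i, int(best)))
    return matches

desc_a = np.array([[1.0, 0.0, 0.0],
                   [0.0, 1.0, 0.0],
                   [0.45, 0.55, 0.0]])   # ambiguous: similar to two candidates
desc_b = np.array([[0.9, 0.1, 0.0],
                   [0.0, 1.0, 0.1],
                   [0.0, 0.0, 1.0]])
matches = match_descriptors(desc_a, desc_b)
```

The third descriptor is almost equally close to two candidates and is therefore rejected, which is how such a criterion suppresses pseudo-features.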

The iterative closest point (ICP) algorithm proposed by Besl and McKay is a common and rather rapid alternative for optimizing the selection of identification feature points. This method iterates the process of “confirm corresponding points–calculate optimal transformation” until a certain convergence criterion is met. The registration of medical images based on the ICP algorithm is applicable to the image registration of organs with different modalities [71, 72].
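The two alternating steps can be sketched compactly for two-dimensional point sets. This is a bare-bones illustration that assumes a small initial misalignment, uses brute-force nearest-neighbor search, and estimates a rigid transform by a least-squares fit; the function names, iteration limit, and tolerance are assumptions.

```python
import numpy as np

def fit_rigid(source, target):
    """Least-squares rotation R and translation t with target ≈ source @ R.T + t."""
    src_c, tgt_c = source.mean(axis=0), target.mean(axis=0)
    u, _, vt = np.linalg.svd((source - src_c).T @ (target - tgt_c))
    d = np.sign(np.linalg.det(vt.T @ u.T))
    r = vt.T @ np.diag([1.0] * (source.shape[1] - 1) + [d]) @ u.T
    return r, tgt_c - r @ src_c

def icp(source, target, iterations=20, tol=1e-10):
    """Alternate closest-point correspondence and rigid fitting until the error settles."""
    current = source.copy()
    prev_err = np.inf
    for _ in range(iterations):
        # Step 1: confirm corresponding points (closest target point for each source point).
        dists = np.linalg.norm(current[:, None, :] - target[None, :, :], axis=-1)
        nearest = target[dists.argmin(axis=1)]
        # Step 2: calculate the optimal transformation for these correspondences.
        r, t = fit_rigid(current, nearest)
        current = current @ r.T + t
        err = float(np.sqrt(np.mean(np.sum((current - nearest) ** 2, axis=1))))
        if abs(prev_err - err) < tol:
            break
        prev_err = err
    return current, err

# Target: a small grid of points; source: the same grid slightly rotated and shifted.
target = np.array([[x, y] for x in range(5) for y in range(4)], dtype=float)
angle = np.deg2rad(2.0)
r0 = np.array([[np.cos(angle), -np.sin(angle)],
               [np.sin(angle),  np.cos(angle)]])
source = target @ r0.T + np.array([0.1, -0.05])
aligned, final_err = icp(source, target)
```

Because the initial misalignment is smaller than half the point spacing, the first set of correspondences is already correct and the loop stops after two passes; with larger misalignments ICP can converge to a wrong local solution.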

Some other methods of state estimation, for example, Kalman filter variants such as the extended and unscented Kalman filters, can also be used to reduce the impact of pseudo-features [73]. The constraints on feature structures can also be used in the correlation of features through the correlation of unique shapes, but this method is applied infrequently in medical image registration because of the uncertainties present in medical images.

5.2. Intensity Registration

Intensity-based registration is usually appropriate when the number of features is insufficient [74]. Intensity similarity measurement strategies are often designed based on the correlation of grayscales. In contrast to anatomical structure-based image registration, this method does not utilize the distance between features, but instead utilizes the pixel information from different images. This requires a strategy that can obtain grayscale information from images generated using different physical principles. Meanwhile, the use of a similarity function can reduce the impact of image generation from various physical principles and achieve the ideal registration outcomes.

5.2.1. Mutual Information

The method based on mutual information measures the amount of information shared by two images. Its advantage is that no prior assumptions need to be made, which explains its popularity in the registration of medical images [22, 75-79]. In 1997, Viola and Wells described the application of mutual information to registration between magnetic resonance images and real scenes, using gradient descent optimization [80]. The major difference between mutual information algorithms is the method by which entropy is estimated. Shannon entropy is used in standard mutual information algorithms. Normalized mutual information can be understood as a modification of the Shannon-entropy-based mutual information algorithm, which handles the registration of images with small regions of overlap [76]. Another method to measure similarity by using mutual information is the differential detection method based on joint distribution, which obtains the joint intensity distribution from the order of the images or the separation of corresponding anatomical structures [22]. Other entropies, such as Renyi entropy [77], Tsallis entropy [78], and Havrda-Charvat entropy [79], have also been used in medical image registration. Standard mutual information algorithms and their derivatives are global statistical methods with low local accuracy. Thus, some researchers have focused on enhancing the accuracy of locally deformed images in registration methods based on mutual information. Studholme [81] suggested a method of regional mutual information, which calculates weighted linear regions to decrease errors induced by regional changes in grayscale. Sundar [82] proposed an Octree method that adaptively covers an image to enhance robustness in cases of regional changes in grayscale. Bardera [83] suggested an NMI method based on the elements of an image to convert multi-modal mutual information into unimodal mutual information. Woo [84] also suggested a similar method. All the aforementioned algorithms require the calculation of mutual information from regional pixels. However, there is also a method that uses the extraction and analysis of local information to describe the characteristics of the region [85].
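The standard Shannon-entropy form can be estimated from a joint gray-level histogram, as in the following sketch. The number of histogram bins, the function names, and the random test images are illustrative assumptions; the normalized variant shown is the ratio (H(A) + H(B)) / H(A, B), which is one widely used normalization.

```python
import numpy as np

def shannon_entropy(p):
    """Shannon entropy (in nats) of a probability table, ignoring empty bins."""
    p = p[p > 0]
    return float(-np.sum(p * np.log(p)))

def entropies(a, b, bins=32):
    """Marginal and joint entropies estimated from the joint histogram of a and b."""
    joint, _, _ = np.histogram2d(a.ravel(), b.ravel(), bins=bins)
    pxy = joint / joint.sum()
    return (shannon_entropy(pxy.sum(axis=1)),
            shannon_entropy(pxy.sum(axis=0)),
            shannon_entropy(pxy))

def mutual_information(a, b, bins=32):
    ha, hb, hab = entropies(a, b, bins)
    return ha + hb - hab

def normalized_mutual_information(a, b, bins=32):
    ha, hb, hab = entropies(a, b, bins)
    return (ha + hb) / hab

rng = np.random.default_rng(0)
image = rng.random((64, 64))
inverted = 1.0 - image              # same structure, different gray-level mapping
unrelated = rng.random((64, 64))

mi_inverted = mutual_information(image, inverted)
mi_unrelated = mutual_information(image, unrelated)
nmi_self = normalized_mutual_information(image, image)
```

The inverted image shares all of its structure with the original and scores high even though its gray levels differ, whereas the unrelated image scores close to zero; this independence from the exact intensity mapping is what makes the measure attractive for multimodal registration.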

Mutual information is a commonly used method in clinical settings, as it does not require the pre-treatment of images. Moreover, the initialization or adjustment of parameters is not required in this method. However, its non-generic nature is a disadvantage. During the registration of images with different modalities, if the images are not converted to a common modality, both the accuracy and the speed of the registration process will be lower. In addition, the adaptiveness to local changes is relatively low when mutual information is used for registration. Likar and Pernus studied the performance of different algorithms for joint probability estimation in the registration of muscle fibre images [86].

5.2.2. Statistics Method

Similarity can also be measured directly from intensity statistics. Examples of this approach include the sum of squared differences (SSD) [87] and cross-correlation [88]. In [89], the authors determined the displacement vector by local cross-correlation to establish the translation between images. However, these measures presume a direct (identical or linear) intensity relationship between the images, and this presumption is not robust. To address this, Myronenko proposed a method of residual complexity to increase the robustness of similarity measurement for unimodal images [90].
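
The two measures named above can be sketched as follows. This is an illustrative example under simple assumptions (equally sized images, global rather than local evaluation); the function names are chosen for this sketch.

```python
import numpy as np

def ssd(a, b):
    """Sum of squared intensity differences; 0 for identical images."""
    d = a.astype(float) - b.astype(float)
    return float(np.sum(d * d))

def ncc(a, b):
    """Normalized cross-correlation in [-1, 1]; invariant to linear intensity changes."""
    a0 = a.astype(float) - a.mean()
    b0 = b.astype(float) - b.mean()
    denom = np.sqrt(np.sum(a0 * a0) * np.sum(b0 * b0))
    if denom == 0.0:
        return 0.0  # at least one image is constant, so correlation is undefined
    return float(np.sum(a0 * b0) / denom)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    fixed = rng.random((32, 32))
    brighter = 2.0 * fixed + 10.0  # same structure, different gain and offset
    print("SSD:", ssd(fixed, brighter))  # large, although the images are aligned
    print("NCC:", ncc(fixed, brighter))  # close to 1
```

The example shows why SSD is restricted to images with matching intensity scales, whereas cross-correlation tolerates a linear change in brightness and contrast.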

5.2.3. Joint Registration

Intensity-based registration has some limitations; for example, mutual information is not suitable for small images. Thus, some authors have combined two or more methods to complete the registration.

In [91], the authors used mutual information for global registration and then cross-correlation to register small image patches. In [92], a two-stage strategy was applied to retinal images: the coarse first stage used cross-correlation, and the second stage refined the estimate using either parabolic interpolation on the peak of the cross-correlation or maximum-likelihood estimation. Moreover, some methods combine feature-based and intensity-based registration [93].
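
A coarse-to-fine scheme of the kind described for [92] can be sketched as below: the cross-correlation peak gives an integer translation, and a parabola fitted through the peak and its two neighbours along each axis gives a sub-pixel correction. This is a generic sketch, not the implementation of [92]; it assumes a pure, circular translation, and the function names are hypothetical.

```python
import numpy as np

def parabolic_offset(left, centre, right):
    """Vertex position, relative to the centre sample, of the parabola through three samples."""
    denom = left - 2.0 * centre + right
    if denom == 0.0:
        return 0.0
    return 0.5 * (left - right) / denom

def estimate_shift(fixed, moving):
    """Estimate the (row, column) shift s such that moving[x] is close to fixed[x - s]."""
    f = fixed - fixed.mean()
    m = moving - moving.mean()
    # Circular cross-correlation computed with the FFT (coarse stage).
    corr = np.fft.ifft2(np.conj(np.fft.fft2(f)) * np.fft.fft2(m)).real
    peak = np.unravel_index(np.argmax(corr), corr.shape)
    shift = []
    for axis, (p, n) in enumerate(zip(peak, corr.shape)):
        idx = list(peak)
        idx[axis] = (p - 1) % n
        left = corr[tuple(idx)]
        idx[axis] = (p + 1) % n
        right = corr[tuple(idx)]
        # Parabolic interpolation on the correlation peak (fine stage).
        refined = p + parabolic_offset(left, corr[peak], right)
        if refined > n / 2:
            refined -= n  # map wrapped indices to negative shifts
        shift.append(float(refined))
    return tuple(shift)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    fixed = rng.random((32, 32))
    moving = np.roll(fixed, shift=(3, -5), axis=(0, 1))
    print(estimate_shift(fixed, moving))
```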

6. Iterative optimization algorithms

Iterative optimization algorithms use the results of the similarity measurement to select a search strategy and obtain the optimal transform operator g. The registration outcome is given by the transform at which the similarity measurement function E reaches its optimum. An iterative optimization algorithm also aims at reducing the search time in order to improve the time efficiency of the registration.

Optimization algorithms can be separated into two categories depending on the nature of the variables: continuous and discrete. The former optimizes the function E over real values, while the latter selects the values of the variables from a discrete set.

6.1. Continuous Variables

Optimization methods based on continuous variables essentially rely on the derivatives of the objective function, and they differ in how the update parameters are defined. For example, if the step length is constant, each iteration reduces to choosing the direction of search. The direction of search is usually computed from first-order or second-order information. Depending on the search strategy, the commonly used methods for the optimization of continuous variables include (1) the gradient descent method (GD), (2) the conjugate gradient method (CG), (3) the Powell optimization method, (4) the Quasi-Newton method (QN), (5) the Levenberg-Marquardt method (LM), and (6) the stochastic gradient descent method.

The GD method updates the parameters in the direction that decreases the objective function, that is, the direction of its negative gradient, and it has been used to solve a series of registration problems. The consistent image registration algorithm proposed by Johnson and Christensen [94] and the FFD registration algorithm proposed by Rueckert [95] both use GD optimization.
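
The GD update can be illustrated on a toy translation problem. In this sketch the images are analytic Gaussian blobs, so that the moving image can be evaluated at shifted coordinates without interpolation, and the gradient is approximated by central differences. The blob model, the step size, and all names are assumptions made for the example and do not reproduce the algorithms of [94] or [95].

```python
import numpy as np

# Pixel grid of a 64 x 64 image.
yy, xx = np.mgrid[0:64, 0:64].astype(float)

def blob(x, y, cx, cy, sigma=6.0):
    """Analytic Gaussian blob used as a stand-in for a real image."""
    return np.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2.0 * sigma ** 2))

FIXED = blob(xx, yy, 32.0, 32.0)
MOVING_CENTRE = (35.0, 30.0)  # the moving image is the same blob, displaced

def cost(t):
    """SSD between the fixed image and the moving image sampled at x + t."""
    warped = blob(xx + t[0], yy + t[1], *MOVING_CENTRE)
    return float(np.sum((FIXED - warped) ** 2))

def numerical_gradient(func, t, h=1e-3):
    """Central-difference approximation of the gradient of func at t."""
    grad = np.zeros_like(t)
    for i in range(t.size):
        step = np.zeros_like(t)
        step[i] = h
        grad[i] = (func(t + step) - func(t - step)) / (2.0 * h)
    return grad

def gradient_descent(func, t0, step_size=0.2, iterations=100):
    """Fixed-step gradient descent: move against the gradient at every iteration."""
    t = np.asarray(t0, dtype=float).copy()
    for _ in range(iterations):
        t = t - step_size * numerical_gradient(func, t)
    return t

if __name__ == "__main__":
    print(gradient_descent(cost, [0.0, 0.0]))  # approaches the translation (3, -2)
```

A fixed step size is used here for simplicity; practical implementations usually adapt the step length or perform a line search.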

The conjugate gradient method converges faster than GD. Whereas GD follows only the current negative gradient, CG uses knowledge of the previous gradients to advance along conjugate search directions [96].

The Powell optimization method aims at reducing the objective function along conjugate directions. It differs from CG in that it does not make use of image gradients [95]. The Powell method is therefore a non-gradient optimization search method, and it is applied to image registration problems with few degrees of freedom. For example, Powell's method has been used as the optimization algorithm, together with a three-level multi-resolution strategy, to assist in the diagnosis of Parkinson's disease from SPECT brain images [97]. A pitfall of the Powell method is that its search proceeds along a single line at each step, and thus it is not well suited to image registration searches with higher degrees of freedom.

The Quasi-Newton method converges faster than GD. In addition, compared to the CG method, the QN method uses second-order information to speed up its convergence. Its quick convergence also results from accumulating and reusing information from previous iterations [78, 98].

The Levenberg-Marquardt method finds the minimum of a nonlinear least-squares objective function by using gradient information to solve for the optimal state. It blends GD-like steps with Newton-type (Gauss-Newton) steps, and thereby combines the advantages of the GD and QN methods [99].

Most of the aforementioned techniques rely on gradient-based convergence, which is a common optimization approach in image registration. Medical image registration requires a large number of derivative evaluations because of the volume of image data and the high dimensionality of the search space. In order to reduce the burden of calculations, researchers have proposed the use of stochastic gradient descent, which estimates the gradient from a random subset of the data at each iteration [100].
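
The idea of stochastic gradient descent can be sketched on a toy translation problem: at every iteration the cost and its gradient are evaluated only on a small random set of sample coordinates instead of on all pixels. The analytic blob images, the sample count, the step size, and the function names are assumptions of this example and are not taken from [100].

```python
import numpy as np

def blob(x, y, cx, cy, sigma=6.0):
    """Analytic Gaussian blob used as a stand-in for a real image."""
    return np.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2.0 * sigma ** 2))

def sgd_translation(fixed_centre, moving_centre, size=64, n_samples=256,
                    step_size=0.1, iterations=300, seed=0):
    """Estimate a 2-D translation with gradients computed on random sample points."""
    rng = np.random.default_rng(seed)
    t = np.zeros(2)
    scale = size * size / n_samples  # rescale the subsampled sum to full-image size
    h = 1e-3                         # finite-difference step
    for _ in range(iterations):
        # Draw a fresh random subset of coordinates for this iteration.
        x = rng.uniform(0.0, size - 1.0, n_samples)
        y = rng.uniform(0.0, size - 1.0, n_samples)
        fixed = blob(x, y, *fixed_centre)

        def cost(tt):
            warped = blob(x + tt[0], y + tt[1], *moving_centre)
            return scale * np.sum((fixed - warped) ** 2)

        # Central-difference gradient on the current subset only.
        grad = np.array([
            (cost(t + np.array([h, 0.0])) - cost(t - np.array([h, 0.0]))) / (2.0 * h),
            (cost(t + np.array([0.0, h])) - cost(t - np.array([0.0, h]))) / (2.0 * h),
        ])
        t = t - step_size * grad
    return t

if __name__ == "__main__":
    # 256 samples per iteration instead of all 4096 pixels.
    print(sgd_translation((32.0, 32.0), (35.0, 30.0)))
```

Each iteration touches only a fraction of the image, which is the source of the reduced computational burden; the price is a noisy gradient, so smaller steps and more iterations are typically needed.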

6.2. Discrete Variables

In contrast to optimization methods based on continuous variables, discrete optimization restricts the variables to discrete values. In image registration applications, discrete variable optimization essentially uses a Markov random field to conduct the optimization. Discrete optimization is thus used to determine the lowest-cost solution for a transformed image, with suitable restrictions placed on the coordinates of the transformation grid and on the connections between its nodes. Discrete variable optimization has the advantage of high calculation efficiency, and is currently used in medical image registration [101]. In addition, point-based registration can optimize the transformation between images through the well-known Procrustes method, which is a least-squares method [102].
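
For the point-based case, the least-squares rigid transformation between two sets of corresponding points has a closed-form solution based on the singular value decomposition. The sketch below shows this standard construction; the function name and the synthetic points are assumptions of the example.

```python
import numpy as np

def procrustes_rigid(source, target):
    """Least-squares rotation R and translation t such that target ~ source @ R.T + t.

    source and target are (n_points, dim) arrays of corresponding points.
    """
    src_mean = source.mean(axis=0)
    tgt_mean = target.mean(axis=0)
    # Cross-covariance of the centred point sets.
    h = (source - src_mean).T @ (target - tgt_mean)
    u, _, vt = np.linalg.svd(h)
    # Force a proper rotation (determinant +1) rather than a reflection.
    d = np.sign(np.linalg.det(vt.T @ u.T))
    correction = np.diag([1.0] * (source.shape[1] - 1) + [d])
    rotation = vt.T @ correction @ u.T
    translation = tgt_mean - rotation @ src_mean
    return rotation, translation

if __name__ == "__main__":
    rng = np.random.default_rng(1)
    points = rng.random((10, 3))
    angle = np.deg2rad(30.0)
    r_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                       [np.sin(angle), np.cos(angle), 0.0],
                       [0.0, 0.0, 1.0]])
    t_true = np.array([1.0, 2.0, 3.0])
    r_est, t_est = procrustes_rigid(points, points @ r_true.T + t_true)
    print(np.round(r_est, 3), np.round(t_est, 3))
```

Because the solution is obtained in closed form, no iterative search is needed once the point correspondences are known.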

7. Assessment of the registration outcomes

The measurement of similarity during the optimization of image registration can be viewed as a rough approximation or an initial assessment of the registration outcomes. However, not all registration methods possess clear physical or geometrical identifiers.

At present, several means exist to assess the image registration methods used in medical image registration research. Examples include determining the smoothness coefficient in gradient optimization methods, and inverse consistency, which checks whether the registration of image A to image B yields a result consistent with the registration of image B to image A. For unimodal images, registration is assessed with an evaluation method based on the pixel-wise grayscale difference between pre- and post-registration images. The most commonly used method determines the mean squared error (MSE) between images and then calculates the peak signal-to-noise ratio (PSNR) to evaluate the quality of image registration. For affine and non-linear transformations, MSE and PSNR do not have global consistency, and thus a more direct measurement of registration quality is performed by estimating the registration error using redundant information in registered regions after the transformation steps [103].
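
The MSE and PSNR computation can be sketched as follows. The default peak value of 255 assumes 8-bit images, and the function names are chosen for this example.

```python
import numpy as np

def mse(a, b):
    """Mean squared error between two equally shaped images."""
    d = a.astype(float) - b.astype(float)
    return float(np.mean(d * d))

def psnr(a, b, max_value=255.0):
    """Peak signal-to-noise ratio in decibels: 10 * log10(max_value^2 / MSE)."""
    err = mse(a, b)
    if err == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / err))

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    reference = rng.integers(0, 256, size=(64, 64))
    registered = np.clip(reference + rng.integers(-3, 4, size=(64, 64)), 0, 255)
    print("MSE :", mse(reference, registered))
    print("PSNR:", psnr(reference, registered))
```

A higher PSNR indicates a smaller grayscale difference between the reference image and the registered image.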

Furthermore, identification points (landmarks) can also be used to assess the outcomes of medical image registration. The target registration error (TRE) can be calculated from corresponding identification points in the images [104]. However, because deviations in the position of identification points can lead to a reduction in registration accuracy, an assessment method based on morphology has been proposed. Some studies have further improved the assessment methods based on the identification points and morphology of image registration [21].
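
For a rigid transformation, the TRE at each target point is the distance between the mapped point and its true corresponding position. The following minimal sketch assumes that the estimated rotation and translation are already available; the function name and the sample points are hypothetical.

```python
import numpy as np

def target_registration_error(targets_moving, targets_fixed, rotation, translation):
    """Per-point distance between mapped moving targets and their fixed counterparts."""
    mapped = targets_moving @ rotation.T + translation
    return np.linalg.norm(mapped - targets_fixed, axis=1)

if __name__ == "__main__":
    moving = np.array([[0.0, 0.0, 0.0], [10.0, 0.0, 0.0]])
    fixed = moving + np.array([3.0, 4.0, 0.0])
    # An identity transform leaves the full displacement as residual error.
    print(target_registration_error(moving, fixed, np.eye(3), np.zeros(3)))
```

The target points should be different from the points used to compute the transformation, so that the error is measured at locations of clinical interest rather than at the fitted points.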

A standard assessment method should be defined in medical image registration in order to facilitate the comparison of the advantages and disadvantages of different algorithms.

CONCLUSION

Image registration has been widely studied and has developed into a relatively independent field of its own. Currently, a key target for research in medical image registration is the improvement and application of this technique for clinical use.

This article discussed the conventional methods in image registration from the aspects of cross-modal conversion and geometric transformation of an image, measurement of similarity, iteration and optimization, in addition to other areas.

In the field of medical image analysis, image registration remains one of the most studied areas. However, several problems remain to be resolved. One is that medical images are mostly grayscale images, which places higher demands on parameter optimization and adjustment. Another is how to improve the accuracy and speed of registration. Robustness is a further problem that impedes the development of registration. Further investigation in this area should focus on effectively using the limited information to conduct high-accuracy registration, while reducing the registration and calculation time.

ACKNOWLEDGEMENTS

This project is sponsored by the National Natural Science Foundation of China (Grant No. 61333019).

CONFLICT OF INTEREST

The authors declare no conflict of interest, financial or otherwise.

REFERENCES

- 1. Tavares J.M. Analysis of biomedical images based on automated methods of image registration. 10th International Symposium on Visual Computing; Las Vegas, USA. 2014. pp. 21–30.

- 2. Alves R.S., Tavares J.M. Computer image registration techniques applied to nuclear medicine images. Computational and experimental biomedical sciences: methods and applications. Berlin: Springer International Publishing; 2015. pp. 173–191.

- 3. da Silva Tavares J.M. Image processing and analysis: applications and trends. LE QUEBEC 2010. AES-ATEMA 2010 Fifth International Conference on Advances and Trends in Engineering Materials and their Applications; 2010.

- 4. Uchida S. Image processing and recognition for biological images. Dev. Growth Differ. 2013;55(4):523–549. doi: 10.1111/dgd.12054.

- 5. Hill D.L., Batchelor P.G., Holden M., Hawkes D.J. Medical image registration. Phys. Med. Biol. 2001;46(3):R1–R45. doi: 10.1088/0031-9155/46/3/201.

- 6. Wang L., Gao X., Zhou Z., Wang X. Evaluation of four similarity measures for 2D/3D registration in image-guided intervention. J Med Imag Health Inf. 2014;4(3):416–421.

- 7. Sadozye A.H., Reed N. A review of recent developments in image-guided radiation therapy in cervix cancer. Curr. Oncol. Rep. 2012;14(6):519–526. doi: 10.1007/s11912-012-0275-3.

- 8. Jaffray D., Kupelian P., Djemil T., Macklis R.M. Review of image-guided radiation therapy 2007. Expert Rev Anticanc. 2007;7(1):89–103. doi: 10.1586/14737140.7.1.89.

- 9. Abràmoff M.D., Garvin M.K., Sonka M. Retinal imaging and image analysis. IEEE Rev. Biomed. Eng. 2010;3:169–208. doi: 10.1109/RBME.2010.2084567.

- 10. Munbodh R., Tagare H.D., Chen Z., et al. 2D-3D registration for prostate radiation therapy based on a statistical model of transmission images. Med. Phys. 2009;36(10):4555–4568. doi: 10.1118/1.3213531.

- 11. Birkfellner W., Stock M., Figl M., et al. Stochastic rank correlation: A robust merit function for 2D/3D registration of image data obtained at different energies. Med. Phys. 2009;36(8):3420–3428. doi: 10.1118/1.3157111.

- 12. Fu D., Kuduvalli G. A fast, accurate, and automatic 2D-3D image registration for image-guided cranial radiosurgery. Med. Phys. 2008;35(5):2180–2194. doi: 10.1118/1.2903431.

- 13. Chen L., Nguyen T.B., Jones É., et al. Magnetic resonance–based treatment planning for prostate intensity-modulated radiotherapy: creation of digitally reconstructed radiographs. Int J Radiat Oncol. 2007;68(3):903–911. doi: 10.1016/j.ijrobp.2007.02.033.

- 14. Markelj P., Tomaževič D., Pernuš F., Likar B. Robust gradient-based 3-D/2-D registration of CT and MR to X-ray images. IEEE Trans. Med. Imaging. 2008;27(12):1704–1714. doi: 10.1109/TMI.2008.923984.

- 15. Tomaževič D., Likar B., Pernuš F. 3-D/2-D registration by integrating 2-D information in 3-D. IEEE Trans. Med. Imaging. 2006;25(1):17–27. doi: 10.1109/TMI.2005.859715.

- 16. Roche A., Pennec X., Malandain G., et al. Rigid registration of 3-D ultrasound with MR images: A new approach combining intensity and gradient information. IEEE Trans. Med. Imaging. 2001;20(10):1038–1049. doi: 10.1109/42.959301.

- 17. Wein W., Karamalis A., Baumgartner A., Navab N. Automatic bone detection and soft tissue aware ultrasound–CT registration for computer-aided orthopedic surgery. Int J Comput Ass Rad. 2015;10(6):971–979. doi: 10.1007/s11548-015-1208-z.

- 18. Michel F., Paragios N. Image transport regression using mixture of experts and discrete Markov Random Fields. 2010.

- 19. Haber E., Modersitzki J. Intensity gradient based registration and fusion of multi-modal images. Methods Inf. Med. 2007;46(3):292–299. doi: 10.1160/ME9046.

- 20. Penney G.P., Blackall J.M., Hamady M.S., Sabharwal T., Adam A., Hawkes D.J. Registration of freehand 3D ultrasound and magnetic resonance liver images. Med. Image Anal. 2004;8(1):81–91. doi: 10.1016/j.media.2003.07.003.

- 21. Avants B.B., Epstein C.L., Grossman M., Gee J.C. Symmetric diffeomorphic image registration with cross-correlation: evaluating automated labeling of elderly and neurodegenerative brain. Med. Image Anal. 2008;12(1):26–41. doi: 10.1016/j.media.2007.06.004.

- 22. Zhang Z., Zhang S., Zhang C., Chen Y. Multi-modality medical image registration using support vector machines. IEEE-EMBS 2005. 2005 IEEE Engineering in Medicine and Biology 27th Annual Conference; 2005 Sep 1-4; Shanghai: China 2006. New York: IEEE; 2005. pp. 6293–6.

- 23. Holden M. A Review of Geometric Transformations for nonrigid body registration. IEEE Trans. Med. Imaging. 2008;27(1):111–118. doi: 10.1109/TMI.2007.904691.

- 24. Gefen S., Tretiak O., Nissanov J. Elastic 3-D alignment of rat brain histological images. IEEE Trans. Med. Imaging. 2003;22(11):1480–1489. doi: 10.1109/TMI.2003.819280.

- 25. Grosland N.M., Ritesh B., Magnotta V.A. Automated hexahedral meshing of anatomic structures using deformable registration. Comput Method Biomec. 2009;12(1):35–43. doi: 10.1080/10255840903065134.

- 26. Christensen G.E., Joshi S.C., Miller M.I. Volumetric transformation of brain anatomy. IEEE Trans. Med. Imaging. 1997;16(6):864–877. doi: 10.1109/42.650882.

- 27. Auzias G., Colliot O., Glaunes J.A., et al. Diffeomorphic brain registration under exhaustive sulcal constraints. IEEE Trans. Med. Imaging. 2011;30(6):1214–1227. doi: 10.1109/TMI.2011.2108665.

- 28. Horn B.K., Schunck B.G. Determining optical flow. Artif. Intell. 1980;17(81):185–203.

- 29. Gooya A., Biros G., Davatzikos C. Deformable registration of glioma images using EM algorithm and diffusion reaction modeling. IEEE Trans. Med. Imaging. 2011;30(2):375–390. doi: 10.1109/TMI.2010.2078833.

- 30. Yeo B.T., Sabuncu M.R., Vercauteren T., Ayache N., Fischl B., Golland P. Spherical demons: fast diffeomorphic landmark-free surface registration. IEEE Trans. Med. Imaging. 2010;29(3):650–668. doi: 10.1109/TMI.2009.2030797.

- 31. Zheng G. Reconstruction of Patient-Specific 3D Bone Model from Biplanar X-Ray Images and Point Distribution Models. ICIP 2006. Image Process 2006 IEEE Int Conf; 2006 Oct 8-11; Atlanta, USA. New York: IEEE; 2006. pp. 1197–200.

- 32. Hurvitz A., Joskowicz L. Registration of a CT-like atlas to fluoroscopic X-ray images using intensity correspondences. Int. J. CARS. 2008;3(6):493–504.

- 33. Meijering E. A chronology of interpolation: from ancient astronomy to modern signal and image processing. Proc. IEEE. 2002;90(3):319–342.

- 34. Jerome J.W. Review: Larry L. Schumaker, Spline functions: Basic theory. Bull. Am. Math. Soc. 1982;6(2):238–247.

- 35. Fornefett M., Rohr K., Stiehl H.S. Radial basis functions with compact support for elastic registration of medical images. Image Vis. Comput. 2001;19(1-2):87–96.

- 36. Rohr K., Fornefett M., Stiehl H.S. Spline-based elastic image registration: integration of landmark errors and orientation attributes. Comput. Vis. Image Underst. 2003;90(2):153–168.

- 37. Zhen X., Chen H., Yan H., et al. A segmentation and point-matching enhanced efficient deformable image registration method for dose accumulation between HDR CT images. Phys. Med. Biol. 2015;60(7):2981–3002. doi: 10.1088/0031-9155/60/7/2981.

- 38. Serifović-Trbalić A., Demirović D., Prljaca N., Szekely G., Cattin P.C. Intensity based elastic registration incorporating anisotropic landmark errors and rotational information. Int. J. CARS. 2009;4(5):463–468. doi: 10.1007/s11548-009-0358-2.

- 39. Schönberg I.J. Contributions to the problem of approximation of equidistant data by analytic functions. Boston: Part A. On the Problem of Smoothing or Graduation. A First Class of Analytic Approximation Formulae. I. J. Schoenberg Selected Papers; 1988. pp. 3–57.

- 40. Thévenaz P., Blu T., Unser M. Interpolation Revisited. IEEE Trans. Med. Imaging. 2000;19(7):739–758. doi: 10.1109/42.875199.

- 41. Klein S., Staring M., Pluim J.P. Evaluation of optimization methods for nonrigid medical image registration using mutual information and B-splines. IEEE Trans. Image Process. 2007;16(12):2879–2890. doi: 10.1109/tip.2007.909412.

- 42. Oliveira F.P., Tavares J.M. Enhanced spatio-temporal alignment of plantar pressure image sequences using B-splines. Med. Biol. Eng. Comput. 2013;51(3):267–276. doi: 10.1007/s11517-012-0988-3.

- 43. You X., Fang B., He Z., Tang Y. A global-to-local matching strategy for registering retinal fundus images. Proceedings of the Second Iberian conference on Pattern Recognition and Image Analysis; 2005 Jun 7-9; Estoril, Portugal. 2005. pp. 259–67.

- 44. Can A., Stewart C.V., Roysam B., Tanenbaum H.L. A feature-based robust, hierarchical algorithm for registering pairs of images of the curved human retina. IEEE Trans. Pattern Anal. Mach. Intell. 2002;24(3):347–364.

- 45. Stewart C.V., Tsai C.L., Roysam B. The dual-bootstrap iterative closest point algorithm with application to retinal image registration. IEEE Trans. Med. Imaging. 2003;22(11):1379–1394. doi: 10.1109/TMI.2003.819276.

- 46. Jin J., Ryu S., Faber K., et al. 2D/3D image fusion for accurate target localization and evaluation of a mask based stereotactic system in fractionated stereotactic radiotherapy of cranial lesions. Med. Phys. 2006;33(12):4557–4566. doi: 10.1118/1.2392605.

- 47. Tang T.S., Ellis R.E., Fichtinger G. 3rd International Conference on Medical Image Computing & Computer-assisted Intervention; 2000 Oct 11-14; Pittsburgh, USA. 2000. pp. 502–11.

- 48. Mu Z., Fu D., Kuduvalli G. A probabilistic framework based on hidden markov model for fiducial identification in image-guided radiation treatments. IEEE Trans. Med. Imaging. 2008;27(9):1288–1300. doi: 10.1109/TMI.2008.922693.

- 49. Schweikard A., Shiomi H., Adler J. Respiration tracking in radiosurgery without fiducials. Int J Med Robot Comp. 2005;1(2):19–27. doi: 10.1002/rcs.38.

- 50. Moghari M.H., Abolmaesumi P. A high-order solution for the distribution of target registration error in rigid-body point-based registration. 9th International Conference on Medical Image Computing and Computer-Assisted Intervention; 2006 Oct 1-6; Copenhagen, Denmark. 2006. pp. 603–11.

- 51. Hu L., Wang M., Song Z. Manifold-based feature point matching for multi-modal image registration. Int J Med Robot Comp. 2013;9(1):e10–e18. doi: 10.1002/rcs.1465.

- 52. Zitová B., Flusser J. Image registration methods: a survey. Image Vis. Comput. 2003;21(11):977–1000.

- 53. Harris C., Stephens M.A. A combined corner and edge detector. Proceedings of the Alvey Vision Conference; 1988. pp. 15–50.

- 54. Mikolajczyk K., Schmid C. Scale & affine invariant interest point detectors. Int. J. Comput. Vis. 2004;60(1):63–86.

- 55. Oliveira F.P., Tavares J.M. Matching contours in images through the use of curvature, distance to centroid and global optimization with order-preserving constraint. Cmes-Comp Model Eng. 2009;43(1):91–110.

- 56. Bastos L.F., Tavares J.M. Improvement of modal matching image objects in dynamic pedobarography using optimization techniques. Third International Workshop; 2004 Sep. 22-24; Palma de Mallorca, Spain. Berlin: Springer-Verlag; 2004. pp. 39–50.

- 57. Oliveira F.P., Tavares J.M., Pataky C. Rapid pedobarographic image registration based on contour curvature and optimization. J. Biomech. 2009;42(15):2620–2623. doi: 10.1016/j.jbiomech.2009.07.005.

- 58. Lowe D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004;60(2):91–110.

- 59. Cheung W., Hamarneh G. N-sift: N-dimensional scale invariant feature transform for matching medical images. IEEE Trans. Image Process. 2009;18(9):2012–2021. doi: 10.1109/TIP.2009.2024578.

- 60. Cheung W.A., Hamarneh G. Scale invariant feature transform for n-dimensional images (n-SIFT). Insight. 2007:2–8. doi: 10.1109/TIP.2009.2024578.

- 61. Bay H., Ess A., Tuytelaars T., Gool L.V. Speeded-up robust features (SURF). Comput. Vis. Image Underst. 2008;110(3):346–359.

- 62. Han X. Feature-constrained nonlinear registration of lung CT images. In: Bram van G, Keelin M, Tobias H, editors. 2010. pp. 63–72.

- 63. Xiao C., Staring M., Shamonin D., Reiber J.H., Stolk J., Stoel B.C. A strain energy filter for 3D vessel enhancement with application to pulmonary CT images. Med. Image Anal. 2011;15(1):112–124. doi: 10.1016/j.media.2010.08.003.

- 64. Bastos L.F., Tavares J.M. Matching of objects nodal points improvement using optimization. Inverse Probl. Sci. Eng. 2006;14(5):529–541.

- 65. Sani Z.A., Shalbaf A., Behnam H., Shalbaf R. Automatic computation of left ventricular volume changes over a cardiac cycle from echocardiography images by nonlinear dimensionality reduction. J. Digit. Imaging. 2015;28(1):91–98. doi: 10.1007/s10278-014-9722-z.

- 66. Sparks R., Madabhushi A. Explicit shape descriptors: Novel morphologic features for histopathology classification. Med. Image Anal. 2013;17(8):997–1009. doi: 10.1016/j.media.2013.06.002.

- 67. Xie Y., Chao M., Xing L. Tissue Feature-based and segmented deformable image registration for improved modeling of shear movement of lungs. Int J Radiat Oncol. 2009;74(4):1256–1265. doi: 10.1016/j.ijrobp.2009.02.023.

- 68. Benameur S., Mignotte M., Labelle H., et al. A hierarchical statistical modeling approach for the unsupervised 3-D biplanar reconstruction of the scoliotic spine. IEEE T Bio-Med Eng. 2005;52(12):2041–57. doi: 10.1109/TBME.2005.857665.

- 69. Benameur S., Mignotte M., Destrempes F., Guise J.A.D. Three dimensional biplanar reconstruction of scoliotic rib cage using the estimation of a mixture of probabilistic prior models. IEEE T Bio-Med Eng. 2005;52(10):1713–28. doi: 10.1109/TBME.2005.855717.

- 70. Mikolajczyk K., Schmid C. A performance evaluation of local descriptors. IEEE T Pattern Anal. 2005;27(10):1615–1630. doi: 10.1109/TPAMI.2005.188.

- 71. Chen E.C., Mcleod A.J., Baxter J.S., Peters T.M. Registration of 3D shapes under anisotropic scaling. Int. J. CARS. 2015;10(6):867–878. doi: 10.1007/s11548-015-1199-9.

- 72. Mei X., Li Z., Xu S., Guo X. Registration of the cone beam CT and blue-ray scanned dental model based on the improved ICP algorithm. 2014. doi: 10.1155/2014/348740.

- 73. Moghari M.H., Abolmaesumi P. Point-based rigid-body registration using an unscented Kalman filter. IEEE Trans. Med. Imaging. 2007;26(12):1708–1728. doi: 10.1109/tmi.2007.901984.

- 74. Cattin P.C., Bay H., Van Gool L., et al. Retina mosaicing using local features. 9th International Conference on Medical Image Computing and Computer-Assisted Intervention; 2006 Oct 1-6; Copenhagen, Denmark. 2006. pp. 185–92.

- 75. Rubeaux M., Nunes J.C., Albera L., Garreau M. Medical image registration using Edgeworth-based approximation of Mutual Information. IRBM. 2014;35(3):139–148.

- 76. Studholme C., Hill D.L., Hawkes D.J. An overlap invariant entropy measure of 3D medical image alignment. Pattern Recognit. 1999;32(1):71–86.

- 77. He Y., Hamza A.B., Krim H.A. Generalized divergence measure for robust image registration. IEEE Trans. Signal Process. 2003;51(5):1211–1220.

- 78. Khader M., Hamza A.B. An Entropy-Based Technique for Nonrigid Medical Image Alignment. In: Jake KA, Reneta PB, Valentin EB, editors. International Workshop on Combinatorial Image Analysis; 2011 May 23-25; Madrid, Spain. 2011. pp. 444–55.

- 79. Tustison N.J., Awate S.P., Gang S., Cook T.S., Gee J.C. Point set registration using Havrda-Charvat-Tsallis entropy measures. IEEE Trans. Med. Imaging. 2011;30(2):451–460. doi: 10.1109/TMI.2010.2086065.

- 80. Viola P., Wells W.M. III. Alignment by maximization of mutual information. Int. J. Comput. Vis. 1997;24(2):137–154.

- 81. Studholme C., Drapaca C., Iordanova B., Cardenas V. Deformation-based mapping of volume change from serial brain MRI in the presence of local tissue contrast change. IEEE Trans. Med. Imaging. 2006;25(5):626–639. doi: 10.1109/TMI.2006.872745.

- 82. Sundar H., Shen D., Biros G., Xu C., Davatzikos C. Robust computation of mutual information using spatially adaptive meshes. 10th International Conference on Medical Image Computing and Computer-Assisted Intervention; 2007 Oct 29-Nov 2; Brisbane, Australia. Berlin: Springer Verlag; 2007. pp. 950–8.

- 83. Bardera A., Feixas M., Boada I., Sbert M. High-Dimensional normalized mutual information for image registration using random lines. Lect. Notes Comput. Sci. 2006;4057:264–271.

- 84. Woo J., Stone M., Prince J.L. Multimodal registration via mutual information incorporating geometric and spatial context. IEEE Trans. Image Process. 2015;24(2):757–769. doi: 10.1109/TIP.2014.2387019.

- 85. Pluim J.P., Maintz J.B., Viergever M.A. F-information measures in medical image registration. IEEE Trans. Med. Imaging. 2005;23(12):1508–1516. doi: 10.1109/TMI.2004.836872.

- 86. Likar B., Pernuš F. A hierarchical approach to elastic registration based on mutual information. Image Vis. Comput. 2001;19(1):33–44.

- 87. Ashburner J., Friston K.J. Nonlinear spatial normalization using basis functions. Hum. Brain Mapp. 1999;7(4):254–266. doi: 10.1002/(SICI)1097-0193(1999)7:4<254::AID-HBM4>3.0.CO;2-G.

- 88. Orchard J. Informatio Proceedings. 2007 IEEE Int Conf Image Process; 2007 Sep 16-Oct 19; San Antonio, USA. New York: IEEE; 2007. pp. I-485–8.

- 89. Molodij G., Ribak E.N., Glanc M., Chenegros G. Enhancing retinal images by nonlinear registration. Opt. Commun. 2015;342:157–166.

- 90. Zhang J., Lu Z.T., Pigrish V., Feng Q.J., Chen W.F. Intensity based image registration by minimizing exponential function weighted residual complexity. Comput. Biol. Med. 2013;43(10):1484–1496. doi: 10.1016/j.compbiomed.2013.07.017.

- 91. Andronache A., von Siebenthal M., Székely G., Cattin P. Non-rigid registration of multi-modal images using both mutual information and cross-correlation. Med. Image Anal. 2008;12(1):3–15. doi: 10.1016/j.media.2007.06.005.

- 92. Ramaswamy G., Devaney N. Pre-processing, registration and selection of adaptive optics corrected retinal images. Ophthal Phys Opt. 2013;33(4):527–539. doi: 10.1111/opo.12068.

- 93. Oliveira F.P., Sousa A., Santos R., Tavares J.M. Spatio-temporal alignment of pedobarographic image sequences. Med. Biol. Eng. Comput. 2011;49(7):843–850. doi: 10.1007/s11517-011-0771-x.

- 94. Johnson H.J., Christensen G.E. Consistent landmark and intensity-based image registration. IEEE Trans. Med. Imaging. 2002;21(5):450–461. doi: 10.1109/TMI.2002.1009381.

- 95. Rueckert D., Sonoda L.I., Hayes C., Hill D.L., Leach M.O., Hawkes D.J. Nonrigid registration using free-form deformations: application to breast MR images. IEEE Trans. Med. Imaging. 1999;18(8):712–721. doi: 10.1109/42.796284.

- 96. Tustison N.J., Avants B.B., Gee J.C. Directly Manipulated Free-Form Deformation Image Registration. IEEE Trans. Image Process. 2009;18(3):624–635. doi: 10.1109/TIP.2008.2010072.

- 97. Oliveira F.P., Faria D.B., Costa D.C., Tavares J.M. A robust computational solution for automated quantification of a specific binding ratio based on [123I] FP-CIT SPECT images. Q. J. Nucl. Med. Mol. Imaging. 2014;58(1):74–84.

- 98. Loeckx D., Slagmolen P., Maes F., Vandermeulen D., Suetens P. Nonrigid image registration using conditional mutual information. IEEE Trans. Med. Imaging. 2010;29(1):19–29. doi: 10.1109/TMI.2009.2021843.

- 99. Kabus S., Netsch T., Fischer B., Modersitzki J. B-spline registration of 3D images with Levenberg-Marquardt optimization. SPIE 2004. Med Imaging 2004 Int Society Optics Photonics; 2004 May 12. 2004.

- 100. Staring M., Van Der Heide U.A., Klein S., Viergever M.A., Pluim J.P. Registration of cervical MRI using multifeature mutual information. IEEE Trans. Med. Imaging. 2009;28(9):1412–1421. doi: 10.1109/TMI.2009.2016560.

- 101. So R.W., Tang T.W., Chung A.C. Non-rigid image registration of brain magnetic resonance images using graph-cuts. Pattern Recognit. 2011;44(10-11):2450–2467.

- 102. Hill D.L., Batchelor P. Registration methodology: concepts and algorithms. Med Image Regis; 2001. pp. 39–70.

- 103. Tang L., Hero A., Hamarneh G. Locally-Adaptive Similarity Metric for Deformable Medical Image Registration. 9th IEEE International Symposium on Biomedical Imaging; 2012 May 2-5; Barcelona, Spain. New York: IEEE; 2012. pp. 728–31.

- 104. Danilchenko A., Fitzpatrick J.M. General approach to first-order error prediction in rigid point registration. IEEE Trans. Med. Imaging. 2011;30(3):679–693. doi: 10.1109/TMI.2010.2091513.