Abstract

Orbitofrontal cortex (OFC) function is often characterized in terms of stimulus–reward mapping; however, more recent evidence suggests that the OFC may play a role in selecting and representing extended actions. First, previously encoded reward associations in the OFC could be used to inform responding in novel but similar situations. Second, in tasks that require the animal to perform extended actions, response-selective activity can be recorded in the OFC. Finally, the interaction between the OFC and hippocampus illustrates the OFC’s role in response selection. The OFC may facilitate reward-guided memory retrieval by selecting the memories most relevant to achieving a goal. This model for OFC function places it within the hierarchy of increasingly complex action representations that support decision making.

Keywords: orbitofrontal cortex, hippocampus, reward, memory retrieval

Introduction

The function of the orbitofrontal cortex (OFC) has been described in many ways, including the inhibition of inappropriate responses,1 the binding of emotional salience to particular outcomes,2 the mapping of stimuli to their associated reward, particularly in the context of flexible responding,3 and the integration of reward history with respect to an arbitrary stimulus to generate a reward expectation.4 Current theories argue that while the OFC may contribute to decision making, its primary role is to represent the rewards associated with stimuli. In contrast, under current theories higher-order rules and action plans are represented in other parts of the brain, especially the lateral prefrontal cortex (LPFC). Recent findings suggest, however, that the OFC may play a more prominent role in the selection and representation of extended sequences of actions or plans than previously supposed.5,6 Although neuronal activity in the OFC of rats and monkeys is reliably selective for stimulus–reward conjunctions, extended actions are also coded by OFC neurons. In light of this evidence, we propose that beyond mapping reward history of stimuli or responses, the OFC contributes proactively to action selection and planning, a natural consequence of its role in computing reward expectancy. The example of reward-guided memory retrieval illustrates how the OFC performs this function in action selection. We propose that reward expectancy signals by the OFC contribute to memory retrieval and more generally that the OFC contributes to the prospective function of the prefrontal cortex: the organization of plans and actions.

The OFC and projecting future outcomes

Most studies have focused on the OFC’s role in modifying previously learned responses or mapping new rewards to existing responses, that is, reversal learning. Reversal learning requires animals to withhold previously rewarded responses and produce previously unrewarded ones, and an OFC lesion or inactivation reliably produces deficits in reversal in rats,7–12 monkeys,13–15 and humans.16,17 These results imply that animals with OFC lesions cannot update their representations of rewards associated with particular stimuli or responses when the task contingencies change. Thus, these experiments support the model that the OFC maps stimuli or responses to their associated reward.

The evidence also suggests that the OFC contributes to a high-resolution mapping between stimuli and reward history, such that the expected values of stimuli are represented specifically. For example, Pavlovian unblocking is impaired in rats with OFC lesions when the reward is changed, even when the value of the reward is held constant.18 Similarly, monkeys with OFC lesions have difficulty attributing rewards to particular stimuli.19 Both studies suggest that the OFC contributes to associating reward with particular stimuli and responses rather than encoding a running tally of recent rewards from all stimuli. This discrete encoding extends to conjoint stimuli. In a Pavlovian overexpectation task, rats were trained to associate reward with each of two separate stimuli. Normal rats learn to reduce their reward expectations after the two stimuli are presented together for several sessions; rats in which the OFC is inactivated during this conjoint training do not.20 Thus, the association of rewards with stimuli extends to the combination of stimuli and the ability to summate their predicted rewards as well. Finally, the OFC reward map includes the reward probability associated with stimuli. If the probability of reward is low enough, humans and monkeys with OFC lesions are impaired in matching stimulus selection with the probability of reward.17,19 These studies confirm that the OFC is important for mapping highly specific stimuli or responses to reward probability and, thereby, expectancy.
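The logic of the overexpectation task can be illustrated with a standard error-driven learning rule. The sketch below uses the Rescorla-Wagner rule, which is an illustrative assumption here and not the analysis used in the cited study; the learning rate `alpha`, the reward magnitude, and the trial counts are arbitrary values chosen for the example.

```python
def rescorla_wagner(trials, strengths, alpha=0.2, reward=1.0):
    """Update associative strengths with the Rescorla-Wagner rule.

    trials: list of tuples naming the cues present on each rewarded trial.
    strengths: dict mapping cue name -> current associative strength.
    alpha and reward are hypothetical values, not taken from the text.
    """
    for cues in trials:
        prediction = sum(strengths[c] for c in cues)  # summed reward expectancy
        error = reward - prediction                   # prediction error
        for c in cues:
            strengths[c] += alpha * error             # all present cues share the error
    return strengths


# Phase 1: each cue is paired with reward on its own.
v = {"A": 0.0, "B": 0.0}
rescorla_wagner([("A",), ("B",)] * 50, v)
single_cue_value = dict(v)

# Phase 2: both cues are presented together, followed by the same single reward.
rescorla_wagner([("A", "B")] * 50, v)
compound_value = dict(v)

print("after separate training:", single_cue_value)
print("after compound training:", compound_value)
```

In this toy model, the summed expectancy of the compound exceeds the delivered reward, so the negative prediction error drives each cue's value down toward half of its original strength. An animal that cannot summate or use the compound expectancy would show no such reduction, which is the pattern described above for rats with the OFC inactivated.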

Merely recording the history of reward associated with specific stimuli, however, does not imply a role in response selection. Yet accumulating evidence suggests that the OFC uses information derived from the outcomes of prior choices to guide decision making prospectively in novel contexts, as has been suggested elsewhere.21 Consider the following scenario. If you live in New York City and are late for a date downtown, you have two options for getting there quickly: either take the subway or a cab. To minimize the time required for the trip, you must estimate the likely total duration of both means of transit. We argue that this cost/benefit estimate requires the OFC. By drawing on a “library” of remembered facts, responses, and their associated outcomes, the OFC helps you to estimate the likely outcome when placed in this new, but undoubtedly familiar, situation. The purpose of generating a library of reward expectancies is not nostalgia alone, but rather to support future choices. To the extent that the OFC helps encode such a library of specific reward associations, it can participate in a prospective function: estimating the outcome of potential actions. Hence, evidence for a specific reward map in the OFC is consistent with a contribution to response selection, as long as the map can inform future decisions in similar contexts.

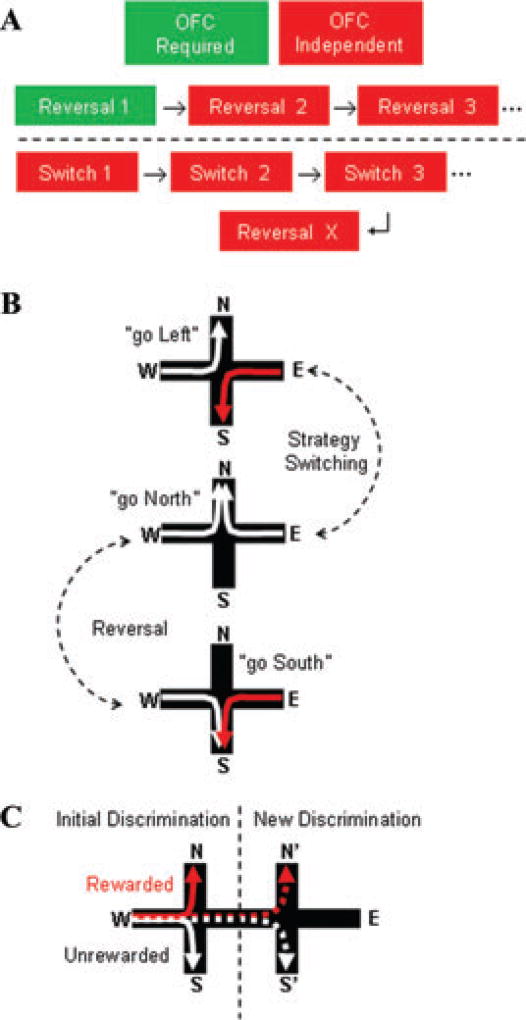

The experimental evidence linking stimulus–outcome information coding by the OFC to the prospective selection of action is just emerging. A recent study found that the OFC and the dorsolateral prefrontal cortex code the reward value of both actual and hypothetical outcomes.22 Further, this view may explain anomalous results we observed in rats given temporary inactivation of the OFC and trained to enter one arm (e.g., East) of a plus-maze to find food. Spatial reversal learning (e.g., learning to enter the West arm) was impaired by OFC inactivation,11 but only in the first reversal; subsequent reversals were learned and retained normally (Fig. 1). Moreover, rats trained in the same maze to make serial strategy switches (e.g., from “go East” to “turn left”) did not require the OFC to learn their first spatial reversal, suggesting that something learned during strategy switching was applied to reversal learning. We hypothesized that during strategy switching the rats learned about rewarded paths and that this information was stored in the OFC. Later, when the rat was first given spatial reversal training, it “remembered” the paths that were rewarded previously, even if the path was taken in the context of a different strategy. This interpretation of the experimental evidence supports a role for the OFC in response selection because it explains what the OFC must encode in order to perform this function. What the OFC encodes is a library of paths—sequences of actions toward a goal. Using this knowledge as a base, the likely reward of transit to a known goal arm via a novel path can be estimated. Without an active OFC to retain this information, the animal cannot make a good estimate, leading to poor performance. We could further test this hypothesis by requiring animals to make novel discriminations using familiar responses. For example, an animal could be trained to go north rather than south on the plus-maze. 
Then the discrimination could be changed such that the animal would still have to go to the north part of the room, but by traversing paths different from those previously trained. Normal animals should be able to apply prior training to perform this new contingency rapidly. We hypothesize that rats given OFC lesions would not show the benefit of prior training when tested on this new contingency, and the impairment would be due to a failure of the prospective function of the OFC.

Figure 1.

Knowledge of previously rewarded paths may help generate novel responses. (A) Rats trained on repeated spatial reversals no longer require the OFC. Further, animals trained on repeated strategy switches (an OFC-independent task) no longer require the OFC on subsequent reversals. (B) Because strategy switching in this task has a common path with spatial reversal, knowledge of the rewarded paths learned during strategy switching may be used to support responding during later reversals. (C) A sample experiment for testing the prospective function of the OFC. Animals would be trained to go north rather than south on the plus-maze. Later the animals would be tested on going north via a different path. If the OFC contributes to selecting novel responses, then OFC lesions should impair this new discrimination.

OFC neurons encode temporally extended actions

OFC activity correlates with stimulus–reward pairings in both rats and monkeys. OFC neurons in rats fire in response to odor-reward pairings.23–31 Selective firing to the odor–reward pair rises in correlation with learning,23,24,28,29 and selective firing changes on reversal.25,29,31 Similar results have been obtained in recordings of activity in the primate OFC.32–34 This evidence forms an additional basis for the argument that the OFC dynamically tracks the reward values associated with specific stimuli.

OFC coding of responses, on the other hand, is controversial. OFC activity correlates solely with reward in some studies,35–37 but with response direction in others.38–40 We observed that OFC neuronal activity in rats was associated with extended sequences of actions on the plus-maze.41 The OFC neurons fired selectively as rats followed specific paths, and the firing rate was correlated with the likelihood that the path would be rewarded. The neuronal activity did not vary with overt behavior, which was kept constant, but rather with the history of reward associated with a given path. For example, OFC firing patterns on the start arm of the plus-maze anticipated the pending “decision” at the choice point. Thus, while OFC activity did not code behavioral responses per se, it discriminated the reward expectancies associated with these responses in advance of a choice. This pattern of activity suggests that the OFC contributes prospectively to response selection.42

Studies that do and do not report response correlates in OFC activity differ along many dimensions, including task design and species, making direct comparisons difficult. Differences in observed OFC coding across experiments are not surprising given the wide range of task demands employed. The OFC is required for some decision-making tasks in rats11 and monkeys13,43 but not others, and unit activity can vary even during identical overt behaviors. In addition, most of the other studies recorded OFC activity in tasks that require associating explicit, temporally discrete cues such as odors or visual images with temporally limited responses such as a nose poke or a lever press. In these tasks, unit activity is compared during brief, discrete events. Changing the stimulus provides most of the information within and between trials. Responses are limited spatially and temporally to a visual saccade or motion in a small operant chamber. The limited duration and extent of these responses could obscure response-selective activity even as the tasks reveal strong correlations with stimulus presentation. In contrast, our experiment used the distal cues in a relatively large room as discriminative stimuli, which were kept constant throughout testing. The same goal was maintained across several trials. Intertrial intervals and response durations were relatively unrestricted. Because the same stimuli were always available, they could not predict reward reliably, allowing activity to become organized around response contingencies. These factors may explain why our study observed activity selective for rule-guided responses in the form of path coding.

Other studies have also suggested that response-related activity in the OFC could reflect species differences or difficulty in specifying homologous neurons in monkeys and rats.40 More recently, however, OFC activity was found to correlate with the presentation of the cue that signaled response strategy prior to the response, and the OFC signal preceded activity in the dorsolateral prefrontal cortex.5 As in our study, the selective coding was observed in a task that required the animal to combine knowledge about the prior trial with the cue that signaled different response rules. OFC neurons discriminated the strategy during the cue presentation and then the specific response before rewards were presented.5 Moreover, OFC activity discriminated responses after the decision and in anticipation of reward.40 These patterns of activity are analogous to the “prospective” and “retrospective” activity patterns we observed in path coding neurons in the rat OFC41 (discussed below). Together, the results suggest that OFC activity in both rats and monkeys encodes information about responses in the context of temporally extended sequences of rewarded actions.42,44

OFC coding, comparative neuropsychology, and delay discounting

Enormous differences between humans, monkeys, and rats are obvious in both the neuroanatomy of the frontal cortex and its associated behaviors. Differences in the ability to perform complex tasks may be related to the degree to which the species can delay gratification. Delay discounting quantifies the degree to which reward value interacts with its temporal immediacy. People and other animals typically prefer rewards now rather than later (e.g., would you rather I give you $100 now or $200 next year?). Delay discounting has been observed in nonhuman primates, rats, and pigeons.45 Moreover, the steepness of decline in value, reflecting a declining ability to delay gratification, is highly variable between species. The persistence of a reward value can be measured by its half-life: the time required for a given reward to be valued at half of its subjective value in the present. For rats, this time is roughly 5 sec;46 for monkeys, depending on species, it ranges between 30 sec and a minute.47 In humans, the half-life of a reward is usually measured in months but can be highly variable depending on the individual, their age, and the reward used.45 Further, delay discounting correlates with the persistence of reward representations in the OFC. OFC activity shows delay discounting in both rats48 and primates49 on a time scale similar to behavioral measures, in the sense that reward activity declines at the same rate as the animal’s responsiveness to a rewarded stimulus. Differences in delay discounting in both unit activity and behavior may contribute to different aspects of OFC coding relevant to responses, because it may constrain the interval over which response-related OFC activity can be detected. The behavioral complexity of problems and delay discounting may be mechanistically related, in that problem solving may be limited by the half-life of rewards and, thus, the number of cognitive steps that can support conjunctions between stimuli, responses, and rewards. 
From this view, the OFC in people, monkeys, and rats may perform identical computations (e.g., mapping reward expectancies), but the temporal domain and behavioral range of the reward expectancy mapping may lead to the obvious qualitative differences in behavior among species.
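The half-life description of delay discounting can be turned into a small calculation. The sketch below assumes the commonly used hyperbolic form V = A / (1 + D / h), in which the value falls to half at delay D = h; the text does not specify a functional form, so this choice, the function names, and the example half-lives for the human case are all assumptions made for illustration. The rat and monkey half-lives are the approximate figures quoted above.

```python
def discounted_value(amount, delay, half_life):
    """Hyperbolic discounting: V = A / (1 + D / h).

    With this assumed form, the value equals amount / 2 when delay == half_life.
    delay and half_life must be expressed in the same time unit.
    """
    return amount / (1.0 + delay / half_life)


def preferred_option(now_amount, later_amount, delay, half_life):
    """Return 'later' if the discounted delayed reward exceeds the immediate one."""
    later_value = discounted_value(later_amount, delay, half_life)
    return "later" if later_value > now_amount else "now"


# Approximate half-lives in seconds from the text (lower bound used for monkeys).
half_life_s = {"rat": 5.0, "monkey": 30.0}
for species, h in half_life_s.items():
    curve = [round(discounted_value(1.0, d, h), 3) for d in (0, 5, 30, 60)]
    print(species, curve)

# The "$100 now or $200 next year" question, with hypothetical half-lives in months.
for h_months in (6, 24):
    print(h_months, "month half-life ->", preferred_option(100, 200, 12, h_months))
```

Under this form, a delayed reward twice the size of the immediate one is preferred only when the half-life exceeds the delay, so the same computation yields different choices across half-lives. That is the sense in which one shared computation, applied over different temporal ranges, could produce qualitatively different behavior.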

Reward history and guided memory retrieval

The interaction between the OFC and other structures provides a tractable experimental opportunity for investigating mechanisms by which the OFC influences decision making. For example, spatial reversal learning requires both the hippocampus and the OFC, and the two structures may interact in this and similar tasks. The hippocampus and the OFC are reciprocally connected. CA1 directly projects to the PFC in rats and primates,50–52 and the PFC projects back to the hippocampus via the nucleus reuniens of the thalamus,53 which then projects to the entorhinal cortex.54,55 Lesion studies in humans, primates, and rats also support functional interaction between the two structures. OFC lesions in primates56 and prefrontal-temporal disconnection57 impair object-in-place learning, a hippocampus-dependent task.58 OFC lesions in rats can also impair spatial navigation.59,60 Further, other experiments have shown that the prefrontal cortex interacts with the hippocampus specifically in the context of temporally extended actions.42 These studies support the idea that the PFC and hippocampus participate in a functional network to support the memory and execution of spatial and other types of responses.

Simultaneous recordings in OFC and hippocampal circuits suggest that the two structures interact as rats learn in the plus-maze. Local field potentials (LFPs) reflect population oscillations in dendritic excitability within circuits. LFP coherence measures the correlation of these oscillations between structures and is believed to reflect mutual information.61–63 Simultaneous recordings in the hippocampus and OFC showed substantial theta band (5–12 Hz) coherence, i.e., the two structures oscillated in phase and frequency.41 Coherence was high during baseline performance, declined during learning, and returned slowly as performance improved. In addition, while OFC neurons fired throughout significantly more of the maze than hippocampal neurons, OFC neurons fired in patterns that resembled prospective and retrospective coding in the hippocampus.41,64 Prospective coding is defined operationally by observing firing rates on the start arm that differ significantly depending on the goal arm the animal will take. Retrospective coding is defined operationally by differential firing that depends on the start arm of the path taken. Path-selective neurons in the OFC met both of these operational definitions. Thus, in the OFC and the hippocampus, firing on a start or goal arm was dependent on the unfolding history of behavior: on the journey the animal would take or had taken. Both observations support a functional interaction between the OFC and hippocampus during performance of these tasks.
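To make the coherence measure concrete, the following sketch estimates magnitude-squared coherence at a single frequency by averaging cross-spectra over non-overlapping segments. It runs on synthetic signals, not recorded data: the sampling rate, duration, noise level, phase lag, and the 40 Hz comparison frequency are all assumptions made for illustration, and the segment-averaging estimator is a generic textbook approach, not necessarily the method used in the cited recordings.

```python
import cmath
import math
import random


def dft_at(segment, freq, fs):
    """Complex Fourier coefficient of one segment at a single frequency (Hz)."""
    return sum(x * cmath.exp(-2j * math.pi * freq * n / fs)
               for n, x in enumerate(segment))


def coherence(x, y, freq, fs, seg_len):
    """Magnitude-squared coherence at `freq`, averaged over non-overlapping segments."""
    sxx = syy = 0.0
    sxy = 0j
    for start in range(0, len(x) - seg_len + 1, seg_len):
        fx = dft_at(x[start:start + seg_len], freq, fs)
        fy = dft_at(y[start:start + seg_len], freq, fs)
        sxx += abs(fx) ** 2            # auto-spectrum of x
        syy += abs(fy) ** 2            # auto-spectrum of y
        sxy += fx * fy.conjugate()     # cross-spectrum
    return abs(sxy) ** 2 / (sxx * syy)


random.seed(0)
fs, seconds = 200, 30                  # hypothetical sampling rate (Hz) and duration (s)
t = [n / fs for n in range(fs * seconds)]

# Two synthetic "LFPs": a shared 8 Hz rhythm with a fixed phase lag,
# each with its own independent Gaussian noise.
lfp_a = [math.sin(2 * math.pi * 8 * ti) + random.gauss(0, 1) for ti in t]
lfp_b = [math.sin(2 * math.pi * 8 * ti - 0.5) + random.gauss(0, 1) for ti in t]

theta = coherence(lfp_a, lfp_b, freq=8, fs=fs, seg_len=fs)     # inside the theta band
control = coherence(lfp_a, lfp_b, freq=40, fs=fs, seg_len=fs)  # no shared rhythm here
print("8 Hz coherence:", round(theta, 2), "40 Hz coherence:", round(control, 2))
```

Coherence is high wherever the two signals keep a consistent phase relationship across segments, even with a nonzero lag, and low where only independent noise is present. High coherence alone does not identify the direction of influence or rule out a common input to both structures.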

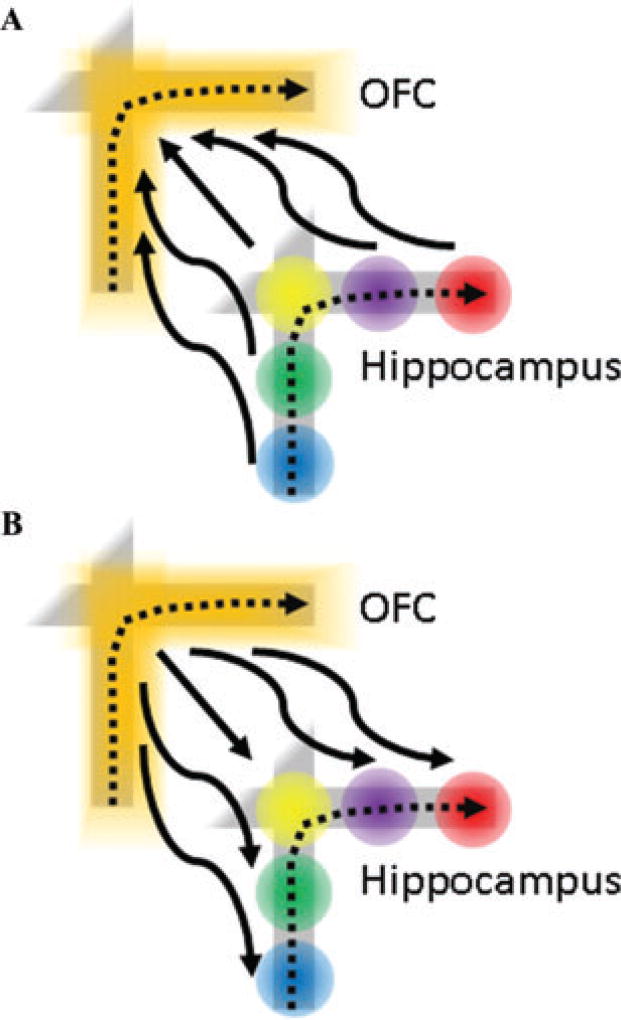

These results are consistent with several types of interactions between the OFC and hippocampus that could facilitate expectancy guided memory retrieval. Clearly, the covariation in theta frequency across learning could reflect a common influence on otherwise independent brain structures. On the other hand, the interactions between the OFC and hippocampus may be important for memory-guided behavior (Fig. 2). During hippocampus-dependent learning and memory, OFC activity could signal reward from the “top down” and modify hippocampal encoding. From this view, OFC activity would modulate the activity of hippocampal place fields along rewarded paths, thereby facilitating the formation of prospective and retrospective coding activity in the hippocampus. Conversely, the hippocampus may modulate OFC path-selective activity from the “bottom up” and help integrate contextual information with reward expectancy. In this model, OFC path-selective activity could be generated by the combined activity of ensembles of hippocampal place fields to which the OFC adds reward information derived from other brain regions. Finally, the OFC–hippocampal interaction may be bidirectional. OFC activity may modulate hippocampal place fields along rewarded paths while simultaneously drawing contextual information from the hippocampus and associating it with reward. To illustrate these possible roles, consider again the scenario of deciding whether to take a cab or the subway downtown during rush hour. Facts and episodes can help inform the selection of one course over the other. The hippocampus is critical for episodic memories perhaps because its neurons encode the spatial and temporal context of events.65 Memory for these past journeys could be used to estimate the duration of each possible route. Furthermore, only specific episodes related to subway and cab travel are particularly relevant in this scenario. 
Not all memories related to cab or subway travel are relevant; rather, subsets of memories that pertain to cab travel during similar circumstances are key. For example, finding a cab during rush hour is difficult, and traffic moves slowly. Both factual and episodic knowledge would affect the decision of whether to take a cab or the subway. In this sense, the OFC could be involved in selectively retrieving those memories that are most related to the chosen goal—an instance of reward-guided memory retrieval. The OFC may interact with the hippocampus to selectively retrieve the memories that are most relevant to securing the estimated reward. This selection among reward memories based on relevance is an instance of OFC’s role in response selection.

Figure 2.

The OFC and hippocampus may interact through different mechanisms to support reward-guided memory retrieval. (A) OFC path coding could be composed of the combined activity of hippocampal place fields to which the expected reward could be associated. (B) Alternatively, OFC coding could activate hippocampal place fields along rewarded paths, generating prospective and retrospective coding in the hippocampus.

The notions of a “top-down” and “bottom-up” interaction between the OFC and hippocampus are not mutually exclusive, and each model suggests testable predictions, for example, about the effects of OFC lesions on prospective and retrospective coding in the hippocampus. If the OFC modulates hippocampal activity from the top down, OFC lesions should eliminate prospective and retrospective coding in the hippocampus, but hippocampal lesions should not alter path coding by the OFC. In contrast, if the hippocampus influences the OFC from the bottom up, hippocampal lesions should reduce path coding by the OFC, but OFC lesions should not affect hippocampal prospective and retrospective coding. Finally, if the interactions between the OFC and hippocampus are bidirectional, lesions of either structure should impair coding by the other. Ongoing experiments in our lab will test these predictions and assess the degree to which OFC and hippocampal interaction contributes to response selection.
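The lesion predictions above amount to a small decision table, sketched here as a lookup. The model labels and field names are hypothetical identifiers introduced for this example; the True/False entries restate the predictions in the preceding paragraph and add nothing beyond them.

```python
# For each candidate model: does an OFC lesion disrupt hippocampal
# prospective/retrospective coding, and does a hippocampal lesion
# disrupt OFC path coding?
PREDICTIONS = {
    "top_down":      {"ofc_lesion_disrupts_hpc": True,  "hpc_lesion_disrupts_ofc": False},
    "bottom_up":     {"ofc_lesion_disrupts_hpc": False, "hpc_lesion_disrupts_ofc": True},
    "bidirectional": {"ofc_lesion_disrupts_hpc": True,  "hpc_lesion_disrupts_ofc": True},
}


def consistent_models(ofc_lesion_disrupts_hpc, hpc_lesion_disrupts_ofc):
    """Return the models whose predictions match a pair of observed lesion outcomes."""
    observed = {
        "ofc_lesion_disrupts_hpc": ofc_lesion_disrupts_hpc,
        "hpc_lesion_disrupts_ofc": hpc_lesion_disrupts_ofc,
    }
    return [name for name, predicted in PREDICTIONS.items() if predicted == observed]


print(consistent_models(True, False))
print(consistent_models(False, False))
```

An outcome in which neither lesion affects coding in the other structure matches none of the three interaction models. It would instead fit the possibility that the covariation reflects a common influence on otherwise independent structures.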

Conclusion

In this review, we propose a model for the role of the OFC in representing and selecting temporally extended sequences of actions. We argue that the OFC encodes a library of outcomes associated with particular stimuli, and this library can be used to estimate the reward expectancy associated with novel responses. In addition, path-selective cells in the OFC show that it can encode extended responses. Finally, a specific example of the OFC in cognitive control is reward-based memory retrieval. High LFP coherence and the presence of prospective and retrospective coding in both regions suggest that the OFC and hippocampus interact. The OFC may influence memory retrieval by selecting from the associations in the hippocampus that are most relevant to achieving the coded goal.

This perspective extends existing models of OFC function by suggesting a prospective role for value expectancy coding. Further, we emphasize that the OFC participates in a larger network that represents and supports extended action, which may help to bridge strategic encoding in PL-IL of rats66 and the LPFC of primates67,68 with memory coding by the hippocampus65 and action sequence coding by the striatum.69 In this model, actions are hierarchically encoded in increasing levels of abstraction by different brain regions, as suggested by Joaquin Fuster, who described these distributed action representations as cognits.70 This model of distributed action representation is supported by recent lesion studies that have shown impairment in different aspects of the same task through lesions of different portions of the primate PFC.71 By describing how the OFC could contribute to response selection, the larger brain networks that support decision making can be better understood.

Footnotes

Conflicts of interest

The authors declare no conflicts of interest.

References

- 1.Ferrier D. The goulstonian lectures on the localisation of cerebral disease. BMJ. 1878;1:555–559. doi: 10.1136/bmj.1.903.555. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Damasio A. Descartes’ Error: Emotion, Reason, and the Human Brain. Penguin; London: 2005. [Google Scholar]

- 3.Rolls ET. The orbitofrontal cortex and reward. Cereb. Cortex. 2000;10:284–294. doi: 10.1093/cercor/10.3.284. [DOI] [PubMed] [Google Scholar]

- 4.Schoenbaum G, Roesch MR, Stalnaker TA, Takahashi YK. A new perspective on the role of the orbitofrontal cortex in adaptive behaviour. Nature Rev. Neurosci. 2009;10:885–892. doi: 10.1038/nrn2753. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Tsujimoto S, Genovesio A, Wise SP. Comparison of strategy signals in the dorsolateral and orbital prefrontal cortex. J Neurosci. 2011;31:4583–4592. doi: 10.1523/JNEUROSCI.5816-10.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Kennerley SW, Wallis JD. Reward-dependent modulation of working memory in lateral prefrontal cortex. J Neurosci. 2009;29:3259–3270. doi: 10.1523/JNEUROSCI.5353-08.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Kim J, Ragozzino ME. The involvement of the orbitofrontal cortex in learning under changing task contingencies. Neurobiol. Learn. Mem. 2005;83:125–133. doi: 10.1016/j.nlm.2004.10.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Schoenbaum G, Nugent SL, Saddoris MP, Setlow B. Orbitofrontal lesions in rats impair reversal but not acquisition of go, no-go odor discriminations. Neuroreport. 2002;13:885–890. doi: 10.1097/00001756-200205070-00030. [DOI] [PubMed] [Google Scholar]

- 9.Schoenbaum G, Setlow B, Nugent SL, et al. Lesions of orbitofrontal cortex and basolateral amygdala complex disrupt acquisition of odor-guided discriminations and reversals. Learn. Mem. 2003;10:129–140. doi: 10.1101/lm.55203. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Stalnaker TA, Franz TM, Singh T, Schoenbaum G. Basolateral amygdala lesions abolish orbitofrontal-dependent reversal impairments. Neuron. 2007;54:51–58. doi: 10.1016/j.neuron.2007.02.014. [DOI] [PubMed] [Google Scholar]

- 11.Young JJ, Shapiro ML. Double dissociation and hierarchical organization of strategy switches and reversals in the rat PFC. Behav. Neurosci. 2009;123:1028–1035. doi: 10.1037/a0016822. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.McAlonan K, Brown VJ. Orbital prefrontal cortex mediates reversal learning and not attentional set shifting in the rat. Behav. Brain Res. 2003;146:97–103. doi: 10.1016/j.bbr.2003.09.019. [DOI] [PubMed] [Google Scholar]

- 13.Dias R, Robbins TW, Roberts AC. Dissociation in prefrontal cortex of affective and attentional shifts. Nature. 1996;380:69–72. doi: 10.1038/380069a0. [DOI] [PubMed] [Google Scholar]

- 14.Izquierdo A, Suda RK, Murray EA. Bilateral orbital prefrontal cortex lesions in rhesus monkeys disrupt choices guided by both reward value and reward contingency. J Neurosci. 2004;24:7540–7548. doi: 10.1523/JNEUROSCI.1921-04.2004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Jones B, Mishkin M. Limbic lesions and the problem of stimulus—reinforcement associations. Exp. Neurol. 1972;36:362–377. doi: 10.1016/0014-4886(72)90030-1. [DOI] [PubMed] [Google Scholar]

- 16.Hornak J, et al. Reward-related reversal learning after surgical excisions in orbitofrontal or dorsolateral prefrontal cortex in humans. J Cog. Neurosci. 2006;16:463–478. doi: 10.1162/089892904322926791. [DOI] [PubMed] [Google Scholar]

- 17.Tsuchida A, Doll BB, Fellows LK. Beyond reversal: a critical role for human orbitofrontal cortex in flexible learning from probabilistic feedback. J Neurosci. 2010;30:16868–16875. doi: 10.1523/JNEUROSCI.1958-10.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.McDannald MA, Lucantonio F, Burke KA, et al. Ventral striatum and orbitofrontal cortex are both required for model-based, but not model-free, reinforcement learning. J Neurosci. 2011;31:2700–2705. doi: 10.1523/JNEUROSCI.5499-10.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Walton ME, Behrens TEJ, Buckley MJ, et al. Separable learning systems in the macaque brain and the role of orbitofrontal cortex in contingent learning. Neuron. 2010;65:927–939. doi: 10.1016/j.neuron.2010.02.027. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Takahashi YK, et al. The orbitofrontal cortex and ventral tegmental area are necessary for learning from unexpected outcomes. Neuron. 2009;62:269–280. doi: 10.1016/j.neuron.2009.03.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Schoenbaum G, Esber GR. How do you (estimate you will) like them apples? Integration as a defining trait of orbitofrontal function. Curr. Opin. Neurobiol. 2010;20:205–211. doi: 10.1016/j.conb.2010.01.009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Abe H, Lee D. Distributed coding of actual and hypothetical outcomes in the orbital and dorsolateral prefrontal cortex. Neuron. 2011;70:731–741. doi: 10.1016/j.neuron.2011.03.026. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Alvarez P, Eichenbaum H. Representations of odors in the rat orbitofrontal cortex change during and after learning. Behav. Neurosci. 2002;116:421–433. [PubMed] [Google Scholar]

- 24.Ramus SJ, Eichenbaum H. Neural correlates of olfactory recognition memory in the rat orbitofrontal cortex. J Neurosci. 2000;20:8199–8208. doi: 10.1523/JNEUROSCI.20-21-08199.2000. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Roesch MR, Stalnaker TA, Schoenbaum G. Associative encoding in anterior piriform cortex versus orbitofrontal cortex during odor discrimination and reversal learning. Cereb. Cortex. 2007;17:643–652. doi: 10.1093/cercor/bhk009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Schoenbaum G, Eichenbaum H. Information coding in the rodent prefrontal cortex: I. Single-neuron activity in orbitofrontal cortex compared with that in pyriform cortex. J Neurophysiol. 1995;74:733–750. doi: 10.1152/jn.1995.74.2.733. [DOI] [PubMed] [Google Scholar]

- 27.Schoenbaum G, Eichenbaum H. Information coding in the rodent prefrontal cortex. II. Ensemble activity in orbitofrontal cortex. J Neurophysiol. 1995;74:751–762. doi: 10.1152/jn.1995.74.2.751. [DOI] [PubMed] [Google Scholar]

- 28.Schoenbaum G, Chiba AA, Gallagher M. Orbitofrontal cortex and basolateral amygdala encode expected outcomes during learning. Nature neuroscience. 1998;1:155–159. doi: 10.1038/407. [DOI] [PubMed] [Google Scholar]

- 29.Schoenbaum G, Chiba AA, Gallagher M. Neural encoding in orbitofrontal cortex and basolateral amygdala during olfactory discrimination learning. J Neurosci. 1999;19:1876–1884. doi: 10.1523/JNEUROSCI.19-05-01876.1999. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Schoenbaum G, Chiba AA, Gallagher M. Changes in functional connectivity in orbitofrontal cortex and basolateral amygdala during learning and reversal training. J Neurosci. 2000;20:5179–5189. doi: 10.1523/JNEUROSCI.20-13-05179.2000. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Schoenbaum G, Setlow B, Saddoris MP, Gallagher M. Encoding predicted outcome and acquired value in orbitofrontal cortex during cue sampling depends upon input from basolateral amygdala. Neuron. 2003;39:855–867. doi: 10.1016/s0896-6273(03)00474-4.

- 32.Thorpe SJ, Rolls ET, Maddison S. The orbitofrontal cortex: neuronal activity in the behaving monkey. Exp. Brain Res. 1983;49:93–115. doi: 10.1007/BF00235545.

- 33.Critchley HD, Rolls ET. Olfactory neuronal responses in the primate orbitofrontal cortex: analysis in an olfactory discrimination task. J Neurophysiol. 1996;75:1659–1672. doi: 10.1152/jn.1996.75.4.1659.

- 34.Critchley HD, Rolls ET. Hunger and satiety modify the responses of olfactory and visual neurons in the primate orbitofrontal cortex. J Neurophysiol. 1996;75:1673–1686. doi: 10.1152/jn.1996.75.4.1673.

- 35.Wallis JD, Miller EK. Neuronal activity in primate dorsolateral and orbital prefrontal cortex during performance of a reward preference task. Eur. J. Neurosci. 2003;18:2069–2081. doi: 10.1046/j.1460-9568.2003.02922.x.

- 36.Padoa-Schioppa C, Assad JA. Neurons in the orbitofrontal cortex encode economic value. Nature. 2006;441:223–226. doi: 10.1038/nature04676.

- 37.Tremblay L, Schultz W. Relative reward preference in primate orbitofrontal cortex. Nature. 1999;398:704–708. doi: 10.1038/19525.

- 38.Feierstein CE, Quirk MC, Uchida N, et al. Representation of spatial goals in rat orbitofrontal cortex. Neuron. 2006;51:495–507. doi: 10.1016/j.neuron.2006.06.032.

- 39.Furuyashiki T, Holland PC, Gallagher M. Rat orbitofrontal cortex separately encodes response and outcome information during performance of goal-directed behavior. J Neurosci. 2008;28:5127–5138. doi: 10.1523/JNEUROSCI.0319-08.2008.

- 40.Tsujimoto S, Genovesio A, Wise SP. Monkey orbitofrontal cortex encodes response choices near feedback time. J Neurosci. 2009;29:2569–2574. doi: 10.1523/JNEUROSCI.5777-08.2009.

- 41.Young JJ, Shapiro ML. Dynamic coding of goal-directed paths by orbital prefrontal cortex. J Neurosci. 2011;31:5989–6000. doi: 10.1523/JNEUROSCI.5436-10.2011.

- 42.Browning PGF, Gaffan D. Prefrontal cortex function in the representation of temporally complex events. J Neurosci. 2008;28:3934–3940. doi: 10.1523/JNEUROSCI.0633-08.2008.

- 43.Baxter MG, Gaffan D, Kyriazis DA, Mitchell AS. Ventrolateral prefrontal cortex is required for performance of a strategy implementation task but not reinforcer devaluation effects in rhesus monkeys. Eur. J. Neurosci. 2009;29:2049–2059. doi: 10.1111/j.1460-9568.2009.06740.x.

- 44.Wilson CRE, Gaffan D, Browning PGF, Baxter MG. Functional localization within the prefrontal cortex: missing the forest for the trees? Trends Neurosci. 2010;33:533–540. doi: 10.1016/j.tins.2010.08.001.

- 45.Reynolds B. A review of delay-discounting research with humans: relations to drug use and gambling. Behav. Pharm. 2006;17:651–667. doi: 10.1097/FBP.0b013e3280115f99.

- 46.Reynolds B, de Wit H, Richards J. Delay of gratification and delay discounting in rats. Behav. Pro. 2002;59:157. doi: 10.1016/s0376-6357(02)00088-8.

- 47.Freeman KB, Green L, Myerson J, Woolverton WL. Delay discounting of saccharin in rhesus monkeys. Behav. Pro. 2009;82:214–218. doi: 10.1016/j.beproc.2009.06.002.

- 48.Roesch MR, Taylor AR, Schoenbaum G. Encoding of time-discounted rewards in orbitofrontal cortex is independent of value representation. Neuron. 2006;51:509–520. doi: 10.1016/j.neuron.2006.06.027.

- 49.Roesch MR, Olson CR. Neuronal activity in primate orbitofrontal cortex reflects the value of time. J Neurophysiol. 2005;94:2457–2471. doi: 10.1152/jn.00373.2005.

- 50.Jay TM, Witter MP. Distribution of hippocampal CA1 and subicular efferents in the prefrontal cortex of the rat studied by means of anterograde transport of Phaseolus vulgaris-leucoagglutinin. J Comp. Neurol. 1991;313:574–586. doi: 10.1002/cne.903130404.

- 51.Cenquizca LA, Swanson LW. Spatial organization of direct hippocampal field CA1 axonal projections to the rest of the cerebral cortex. Brain Res. Rev. 2007;56:1–26. doi: 10.1016/j.brainresrev.2007.05.002.

- 52.Barbas H, Blatt GJ. Topographically specific hippocampal projections target functionally distinct prefrontal areas in the rhesus monkey. Hippocampus. 1995;5:511–533. doi: 10.1002/hipo.450050604.

- 53.McKenna JT, Vertes RP. Afferent projections to nucleus reuniens of the thalamus. J Comp. Neurol. 2004;480:115–142. doi: 10.1002/cne.20342.

- 54.Wouterlood FG, Saldana E, Witter MP. Projection from the nucleus reuniens thalami to the hippocampal region: light and electron microscopic tracing study in the rat with the anterograde tracer Phaseolus vulgaris-leucoagglutinin. J Comp. Neurol. 1990;296:179–203. doi: 10.1002/cne.902960202.

- 55.Wouterlood FG. Innervation of entorhinal principal cells by neurons of the nucleus reuniens thalami. Anterograde PHA-L tracing combined with retrograde fluorescent tracing and intracellular injection with Lucifer yellow in the rat. Eur. J. Neurosci. 1991;3:641–647. doi: 10.1111/j.1460-9568.1991.tb00850.x.

- 56.Baxter MG, Gaffan D, Kyriazis DA, Mitchell AS. Orbital prefrontal cortex is required for object-in-place scene memory but not performance of a strategy implementation task. J Neurosci. 2007;27:11327–11333. doi: 10.1523/JNEUROSCI.3369-07.2007.

- 57.Browning PGF, Easton A, Buckley MJ, Gaffan D. The role of prefrontal cortex in object-in-place learning in monkeys. Eur. J. Neurosci. 2005;22:3281–3291. doi: 10.1111/j.1460-9568.2005.04477.x.

- 58.Gaffan D, Parker A. Interaction of perirhinal cortex with the fornix-fimbria: memory for objects and “object-in-place” memory. J Neurosci. 1996;16:5864–5869. doi: 10.1523/JNEUROSCI.16-18-05864.1996.

- 59.Vafaei AA, Rashidy-Pour A. Reversible lesion of the rat’s orbitofrontal cortex interferes with hippocampus-dependent spatial memory. Behav. Brain Res. 2004;149:61–68. doi: 10.1016/s0166-4328(03)00209-2.

- 60.Kolb B, Sutherland RJ, Whishaw IQ. A comparison of the contributions of the frontal and parietal association cortex to spatial localization in rats. Behav. Neurosci. 1983;97:13–27. doi: 10.1037//0735-7044.97.1.13.

- 61.Womelsdorf T, et al. Modulation of neuronal interactions through neuronal synchronization. Science. 2007;316:1609–1612. doi: 10.1126/science.1139597.

- 62.Buzsáki G, Chrobak JJ. Temporal structure in spatially organized neuronal ensembles: a role for interneuronal networks. Curr. Opin. Neurobiol. 1995;5:504–510. doi: 10.1016/0959-4388(95)80012-3.

- 63.Buzsáki G, Draguhn A. Neuronal oscillations in cortical networks. Science. 2004;304:1926–1929. doi: 10.1126/science.1099745.

- 64.Ferbinteanu J, Shapiro ML. Prospective and retrospective memory coding in the hippocampus. Neuron. 2003;40:1227–1239. doi: 10.1016/s0896-6273(03)00752-9.

- 65.Ferbinteanu J, Kennedy PJ, Shapiro ML. Episodic memory—from brain to mind. Hippocampus. 2006;16:691–703. doi: 10.1002/hipo.20204.

- 66.Rich EL, Shapiro M. Rat prefrontal cortical neurons selectively code strategy switches. J Neurosci. 2009;29:7208–7219. doi: 10.1523/JNEUROSCI.6068-08.2009.

- 67.Asaad WF, Rainer G, Miller EK. Task-specific neural activity in the primate prefrontal cortex. J Neurophysiol. 2000;84:451–459. doi: 10.1152/jn.2000.84.1.451.

- 68.Mansouri FA, Matsumoto K, Tanaka K. Prefrontal cell activities related to monkeys’ success and failure in adapting to rule changes in a Wisconsin card sorting test analog. J Neurosci. 2006;26:2745–2756. doi: 10.1523/JNEUROSCI.5238-05.2006.

- 69.Berke JD, Breck JT, Eichenbaum H. Striatal versus hippocampal representations during win-stay maze performance. J Neurophysiol. 2009;101:1575–1587. doi: 10.1152/jn.91106.2008.

- 70.Fuster JM. The cognit: a network model of cortical representation. Int. J. Psychophysiol. 2006;60:125–132. doi: 10.1016/j.ijpsycho.2005.12.015.

- 71.Buckley MJ, et al. Dissociable components of rule-guided behavior depend on distinct medial and prefrontal regions. Science. 2009;325:52–58. doi: 10.1126/science.1172377.