Abstract

Photonic systems for high-performance information processing have attracted renewed interest. Neuromorphic silicon photonics has the potential to integrate processing functions that vastly exceed the capabilities of electronics. We report first observations of a recurrent silicon photonic neural network, in which connections are configured by microring weight banks. A mathematical isomorphism between the silicon photonic circuit and a continuous neural network model is demonstrated through dynamical bifurcation analysis. Exploiting this isomorphism, a simulated 24-node silicon photonic neural network is programmed using a “neural compiler” to solve a differential system emulation task. A 294-fold acceleration against a conventional benchmark is predicted. We also propose and derive a power consumption analysis for modulator-class neurons that, as opposed to laser-class neurons, are compatible with silicon photonic platforms. At increased scale, neuromorphic silicon photonics could access new regimes of ultrafast information processing for radio, control, and scientific computing.

Introduction

Light forms the global backbone of information transmission yet is rarely used for information transformation. Digital optical logic faces fundamental physical challenges1. Many analog approaches have been researched2–4, but analog optical co-processors have faced major economic challenges. Optical systems have never achieved competitive manufacturability, nor have they satisfied a sufficiently general processing demand better than digital electronic contemporaries. Incipient changes in the supply and demand for photonics have the potential to spark a resurgence in optical information processing.

A germinating silicon photonic integration industry promises to supply the manufacturing economies normally reserved for microelectronics. While firmly rooted in demand for datacenter transceivers5, the industrialization of photonics would impact other application areas6. Industrial microfabrication ecosystems propel technology roadmapping7, library standardization8, 9, and broadened accessibility10, all of which could open fundamentally new research directions into large-scale photonic systems. Large-scale beam steerers have been realized11, and on-chip communication networks have been envisioned12–14; however, opportunities for scalable silicon photonic information processing systems remain largely unexplored.

Concurrently, photonic devices have found analog signal processing niches where electronics can no longer satisfy demands for bandwidth and reconfigurability. This situation is exemplified by radio frequency (RF) processing, in which front-ends have come to be limited by RF electronics, analog-to-digital converters (ADCs), and digital signal processors (DSP)15, 16. In response, RF photonics has offered respective solutions for tunable RF filters17, 18, ADC itself19, and simple processing tasks that can be moved from DSP into the analog subsystem20–22. RF photonic circuits that can be transcribed from fiber to silicon are likely to reap the economic benefits of silicon photonic integration. In a distinct vein, an unprecedented possibility for large-scale photonic system integration could enable systems beyond what can be considered in fiber. If scalable information processing with analog photonics is to be considered, new standards relating physics to processing must be developed and verified.

Standardized concepts that link physics to computational models are required to define essential quantitative engineering tools, namely metrics, algorithms, and benchmarks. For example, a conventional gate has simultaneous meaning as an abstract logical operation and as an arrangement of electronic semiconductors and thereby acts as a conduit between device engineering and computational performance. In another case, neuromorphic electronics adopt unconventional standards defining spiking neural networks as event-based packet networks23–25. These architectures’ adherence to neural network models unlocks a wealth of metrics26, algorithms27, 28, tools29, 30, and benchmarks31 developed specifically for neural networks. Likewise, scalable information processing with analog photonics would rely upon standards defining the relationship between photonic physics and a suitable processing model. Neural networks are among the most well-studied models for information processing with distributed analog elements. The fact that distributed interconnection and analog dynamics are performance strong suits of photonic physics motivates the study of neuromorphic photonics.

“Broadcast-and-weight”32 was proposed as a standard protocol for implementing neuromorphic processors using integrated photonic devices. Broadcast-and-weight is potentially compatible with mainstream silicon photonic device platforms, unlike free-space optical neural networks4, 33. It is capable of implementing generalized reconfigurable and recurrent neural network models. In the broadcast-and-weight protocol, shown in Fig. 1(a), each neuron’s output is assigned a unique wavelength carrier that is wavelength division multiplexed (WDM) and broadcast. Incoming WDM signals are weighted by reconfigurable, continuous-valued filters called photonic weight banks and then summed by total power detection. This electrical weighted sum then modulates the corresponding WDM carrier through a nonlinear or dynamical electro-optic process. Previous work on microring (MRR) weight banks has established a correspondence between weighted addition operations and integrated photonic filters. In reference to the operation, MRR weight bank scalability34 and accuracy35 metrics can be defined, but MRR weight banks have not been demonstrated within a network.
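
The weight-and-sum step of the protocol can be sketched in a few lines. This is a minimal illustration, not the device model used in the experiment: it assumes a lossless ring whose through-port and drop-port transmissions sum to one, and a balanced photodiode wired so that an on-resonance ring gives a weight of −1. The function names are hypothetical.

```python
def effective_weight(drop_transmission):
    """Balanced-detection weight of one ring: through-port minus drop-port power.

    Assumes a lossless ring, so through = 1 - drop (an idealization).
    """
    if not 0.0 <= drop_transmission <= 1.0:
        raise ValueError("transmission must lie in [0, 1]")
    through = 1.0 - drop_transmission
    return through - drop_transmission


def weighted_sum(drop_transmissions, channel_powers):
    """Photocurrent-like weighted sum over all WDM channels of one weight bank."""
    return sum(
        effective_weight(t) * p for t, p in zip(drop_transmissions, channel_powers)
    )


# Three WDM channels: on resonance (-1), half coupled (0), and far detuned (+1).
print(weighted_sum([1.0, 0.5, 0.0], [2.0, 3.0, 1.0]))
```

Because each ring filters only its own carrier, the sum over channels is performed by the photodiode itself, which is why the fan-in requires no optical phase control.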

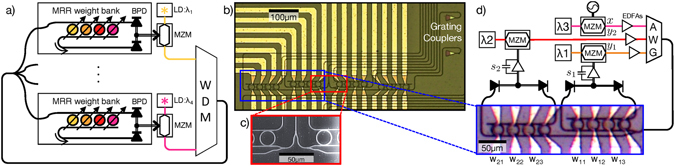

Figure 1.

Broadcast-and-weight protocol and experiment. (a) Concept of a broadcast-and-weight network with modulators used as neurons. MRR: microring resonator, BPD: balanced photodiode, LD: laser diode, MZM: Mach-Zehnder modulator, WDM: wavelength-division multiplexer. (b) Micrograph of 4-node recurrent broadcast-and-weight network with 16 tunable microring (MRR) weights and fiber-to-chip grating couplers. (c) Scanning electron micrograph of 1:4 splitter. (d) Experimental setup with two off-chip MZM neurons and one external input. Signals are wavelength-multiplexed in an arrayed waveguide grating (AWG) and coupled into a 2 × 3 subnetwork with MRR weights, w 11, w 12, etc. Neuron state is represented by voltages s 1 and s 2 across low-pass filtered transimpedance amplifiers, which receive inputs from the balanced photodetectors of each MRR weight bank.

In this manuscript, we demonstrate a broadcast-and-weight system configured by microring weight banks that is isomorphic to a continuous-time recurrent neural network (CTRNN) model. As opposed to “brain-inspired” and “neuro-mimetic”, “neuromorphic” is an unambiguous mathematical concept meaning that a physical system’s governing equations are isomorphic to those describing an abstract neural network model. Isomorphic dynamical systems share qualitative changes in dynamics as a result of parameter variation. We adopt a strategy for proving neuromorphism experimentally by comparing these dynamical transitions (a.k.a. bifurcations) induced in an experimental device against those predicted of a CTRNN model. In particular, we observe single-node bistability across a cusp bifurcation and two-node oscillation across a Hopf bifurcation. While oscillatory dynamics in optoelectronic devices have long been studied36, 37, this work relies on configuring an analog photonic network that can be scaled to more nodes in principle. This implies that CTRNN metrics, simulators, algorithms, and benchmarks can be applied to larger neuromorphic silicon photonic systems. To illustrate the significance of this implication, we simulate a 24-modulator silicon photonic CTRNN solving a differential equation problem. The system is programmed by appropriating an existing “neural compiler”29 and benchmarked against a conventional CPU solving the same problem, predicting an acceleration factor of 294×.

Results

The CTRNN model is described by a set of ordinary differential equations coupled through a weight matrix.

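$$\frac{d\mathbf{s}(t)}{dt} = \mathbf{W}\,\mathbf{y}(t) - \frac{\mathbf{s}(t)}{\tau} + \mathbf{w}_{\mathrm{in}}\,u(t) \tag{1}$$

$$\mathbf{y}(t) = \sigma[\mathbf{s}(t)] \tag{2}$$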

where s(t) are state variables with time constants τ, W is the recurrent weight matrix, y(t) are neuron outputs, w in are input weights, and u(t) is an external input. σ is a saturating transfer function associated with each neuron. Figure 1(b,d) shows the integrated, reconfigurable analog network and experimental setup. Signals u and y are physically represented as the power envelope of different optical carrier wavelengths. The weight elements of W and w in are implemented as transmission values through a network of reconfigurable MRR filters. The neuron transfer function, σ, is implemented by the sinusoidal electro-optic transfer function of a fiber Mach-Zehnder modulator (MZM). The neuron state, s, is the electrical voltage applied to the MZM, whose time constant, τ, is determined by an electronic low-pass filter. We aim to establish a correspondence between experimental bifurcations induced by varying MRR weights and the modeled bifurcations38 derived in Supplementary Section 1.
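
To make the model concrete, the following is a minimal forward-Euler sketch of a CTRNN of the form ds/dt = W y − s/τ + w_in u with y = σ(s). The choice of tanh as the saturating transfer function, the Euler scheme, and all function names are assumptions made for illustration; the experiment uses the sinusoidal MZM response instead.

```python
import math


def ctrnn_step(s, W, w_in, u, tau, dt, sigma=math.tanh):
    """Advance the CTRNN state by one forward-Euler step of size dt."""
    y = [sigma(si) for si in s]  # neuron outputs, y = sigma(s)
    n = len(s)
    return [
        s[i] + dt * (sum(W[i][j] * y[j] for j in range(n)) - s[i] / tau + w_in[i] * u)
        for i in range(n)
    ]


def simulate(s0, W, w_in, u_of_t, tau, dt, steps, sigma=math.tanh):
    """Integrate from the initial state s0 and return the final state."""
    s = list(s0)
    for k in range(steps):
        s = ctrnn_step(s, W, w_in, u_of_t(k * dt), tau, dt, sigma)
    return s


# An uncoupled node under constant input relaxes to s* = tau * w_in * u.
final = simulate([0.0], [[0.0]], [1.0], lambda t: 0.5, tau=2.0, dt=0.01, steps=5000)
print(final)
```

With W set to zero the node behaves as a simple low-pass filter, which mirrors the role of the electronic filter that sets τ in the experiment.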

Cusp Bifurcation

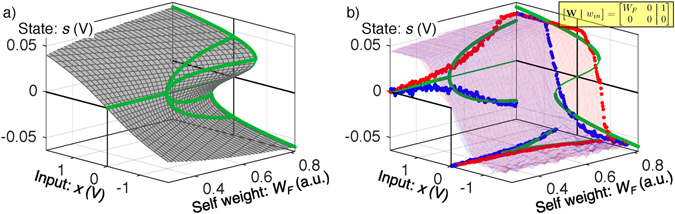

A cusp bifurcation characterizes the onset of bistability in a single node with self-feedback. To induce and observe a cusp, the feedback weight of node 1 is parameterized as w 11 = W F. The neurosynaptic signal, s 1, is recorded as the feedback weight is swept through 500 points from 0.05–0.85, and the input is swept in the rising (blue surface) and falling (red surface) directions. The parameters τ, α, and κ in equation (S1.1) are fit to minimize root mean squared error between model and data surfaces. The best fit model has a cusp point at W B = 0.54. Figure 2(a) shows a modeled cusp surface described by equation (S1.1) and fit to data from Fig. 2(b). In Fig. 2(b), the data surfaces are interpolated at particular planes and projected onto 2D axes as red/blue points. The corresponding slices of the model surface are similarly projected as green lines. The u = 0 slice projected on the s − W F plane yields a pitchfork curve described by equation (S1.2). The W F = 0.08 slice projected on the u − s plane yields the bistable curve described by equation (S1.3). Finally, the s = 0 slice projected on the u − W F plane yields the cusp curve described by equation (S1.4).

Figure 2.

A cusp bifurcation in a single node with feedback weight, W F, external input, u, and neurosynaptic state, s. (a) Theoretical model surface (gray) and bifurcation curves (green) plotted in 3D. Parameters of the model are fit to data. (b) Experimental data for increasing (blue surface) and decreasing (red surface) input. Theoretical bifurcation curves – with parameters identical to those in (a) – are projected onto 2D axes. The data surfaces are sliced at the planes: u = 0, s = 0, and W F = 0.08, and similarly projected onto the axes (red and blue points) to illustrate the reproduction of pitchfork, cusp, and saddle-node bifurcations, respectively.

The experimental reproduction of pitchfork, bistable, and cusp bifurcations is demonstrative of an isomorphism between the single-node model and the device under test. An opening of an area between rising and falling data surfaces is characteristic of bistability. The transition boundary closely follows a cusp form. While the pitchfork and bistable slices reproduce the number and growth trends of fixed points, their fits have non-idealities. These non-idealities can be attributed to a hard saturation of the electrical transimpedance amplifier when the input voltage and feedback weight are high. Furthermore, the stability of cusp measurements serves as a control indicating the absence of time-delayed dynamics resulting from long fiber delays and causing spurious oscillations39. Electrical low-pass filtering is used to eliminate these unmodeled dynamics in order to observe modeled bifurcations.
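
The opening between rising and falling sweeps can be reproduced with a toy single-node model. The sketch below is illustrative only: it assumes a normalized node ds/dt = W_F tanh(s) − s/τ + u with τ = 1, for which bistability appears above W_F = 1, rather than the fitted model of equation (S1.1), whose cusp point is W B = 0.54 in experimental units. All names are hypothetical.

```python
import math


def settle(s, w_f, u, tau=1.0, dt=0.05, steps=2000):
    """Relax one self-coupled node toward a steady state by forward Euler."""
    for _ in range(steps):
        s += dt * (w_f * math.tanh(s) - s / tau + u)
    return s


def sweep(w_f, inputs):
    """Step the input through `inputs`, carrying the state from point to point."""
    s, trace = 0.0, []
    for u in inputs:
        s = settle(s, w_f, u)
        trace.append(s)
    return trace


def hysteresis_gap(w_f, n=41, u_max=1.0):
    """Largest difference between rising and falling sweeps at equal input."""
    rising_inputs = [-u_max + 2.0 * u_max * k / (n - 1) for k in range(n)]
    rising = sweep(w_f, rising_inputs)
    falling = sweep(w_f, rising_inputs[::-1])[::-1]
    return max(abs(r - f) for r, f in zip(rising, falling))


print(hysteresis_gap(0.5))  # below the cusp: the two sweeps coincide
print(hysteresis_gap(2.0))  # above the cusp: the sweeps separate (bistability)
```

Carrying the state from one input value to the next is what makes the sweep direction matter, in the same way that the measured surfaces depend on whether the input is rising or falling.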

Hopf Bifurcation

Dynamical systems are capable of oscillating if there exists a closed orbit (a.k.a. limit cycle) in the state space, which must therefore exceed one dimension. The Hopf bifurcation occurs when a stable fixed-point becomes unstable while giving rise to a stable limit cycle. Hopf bifurcations are further characterized by oscillations that approach zero amplitude and nonzero frequency near the bifurcation point38. We induce a Hopf bifurcation by configuring the MRR weight matrix to have asymmetric, fixed off-diagonals and equal, parameterized diagonals.

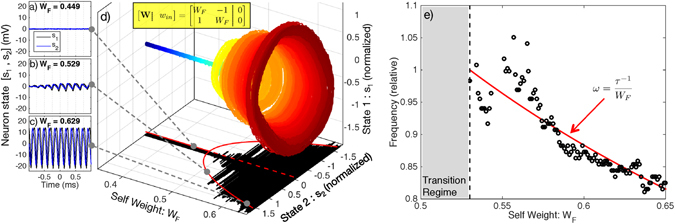

Figure 3 compares the observed and predicted oscillation onset, amplitude, and frequency. Figure 3(a–c) shows the time traces for below, near, and above the oscillation threshold. Above threshold, oscillation occurs in the range of 1–5 kHz, as limited by electronic low-pass filters and feedback delay. Figure 3(d) shows the result of a fine sweep of self-feedback weights in the 2-node network, exhibiting the paraboloid shape of a Hopf bifurcation. The voltage of neuron 1 is plotted against that of neuron 2 with color corresponding to the W F parameter. The peak oscillation amplitude for each weight is then projected onto the W F − y 2 plane in black, and these amplitudes are fit using the model from equation (S1.8) (red). Bifurcation occurs at W B = 0.48 in the fit model. Figure 3(e) plots the oscillation frequency above the Hopf point. Data are discarded for W B < W F < 0.53 because the oscillations are erratic in the sensitive transition region. Frequency data are then fit with the model of equation (S1.10). The frequency axis is scaled so that 1.0 corresponds to the model frequency at the region boundary, which is 4.81 kHz. The Hopf bifurcation only occurs in systems of more than one dimension, thus confirming the observation of a small integrated photonic neural network.

Figure 3.

A Hopf bifurcation between stable and oscillating states. (a–c) Time traces below, near, and above the bifurcation. (d) Oscillation growth versus feedback weight strength. Color corresponds to feedback weight parameter, W F, to improve visibility. Black shadow: average experimental amplitudes; solid red curve: corresponding fit model; dotted red line: unstable branch. (e) Frequency of oscillation above the Hopf bifurcation. The observed data (black points) are compared to the expected trend of equation (S1.10) (red curve). Frequencies are normalized to the threshold frequency of 4.81 kHz.

Significantly above the bifurcation point, experimental oscillation amplitude and frequency closely match model predictions, but discrepancies are apparent in the transition regime. Limit cycles with amplitudes comparable to noise amplitude can be destabilized by their proximity to the unstable fixed point at zero. This effect could explain the middle inset of Fig. 3, in which a small oscillation grows and then shrinks. Part of this discrepancy can be explained by weight inaccuracy due to inter-bank thermal cross-talk. The two MRR weight banks were calibrated independently accounting only for intra-bank thermal cross-talk. As seen in Fig. 1(c), the physical distance between w 12 (nominally −1) and w 22 (nominally W F) is approximately 100 μm. While inter-bank cross-talk is not a major effect, w 12 is very sensitive because weight −1 corresponds to on-resonance, and the dynamics are especially sensitive to the weight values near the bifurcation point.
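
The onset of oscillation can be checked by linearizing a two-node CTRNN about its fixed point at zero. The sketch below is a hedged illustration in normalized units: it assumes off-diagonal weights of −1 and +1, equal diagonals W_F, a unit slope of the transfer function at the origin, and τ = 1, so the threshold appears at W_F = 1 rather than at the fitted experimental value W B = 0.48. The function names are hypothetical.

```python
import cmath


def eig2(a, b, c, d):
    """Eigenvalues of the 2x2 matrix [[a, b], [c, d]] from trace and determinant."""
    half_trace = (a + d) / 2.0
    det = a * d - b * c
    root = cmath.sqrt(half_trace * half_trace - det)
    return half_trace + root, half_trace - root


def jacobian_eigs(w_f, w_off=1.0, tau=1.0, slope=1.0):
    """Eigenvalues of J = slope * W - I / tau for W = [[w_f, -w_off], [w_off, w_f]]."""
    diag = slope * w_f - 1.0 / tau
    return eig2(diag, -slope * w_off, slope * w_off, diag)


for w_f in (0.5, 1.0, 1.5):
    lam, _ = jacobian_eigs(w_f)
    # Real part: growth rate. Imaginary part: angular frequency at onset.
    print(w_f, lam.real, lam.imag)
```

The complex-conjugate pair crosses the imaginary axis with a nonzero imaginary part, which is the signature of a Hopf bifurcation: oscillations start at zero amplitude but finite frequency.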

Emulation Benchmark

A dynamical isomorphism between a silicon photonic system and the CTRNN model of equations (1) and (2) implies that larger, faster neuromorphic silicon photonic systems could utilize algorithms and tools developed for generic CTRNNs. Here, we apply a “neural compiler” called the Neural Engineering Framework (NEF)40 to program a simulated photonic CTRNN to solve an ordinary differential equation (ODE). This simulation is benchmarked against a conventional central processing unit (CPU) solving the same task. The procedures for each approach are detailed in Methods. As opposed to implementation-specific metrics, benchmarks are task-oriented indicators suitable for comparing technologies that use disparate computing standards. Benchmarking approaches are therefore needed to evaluate the potentials of any unconventional processor in application domains currently served by conventional processors. The chosen benchmark problem consists of solving a well-known ODE called the Lorenz attractor, described by a system of three coupled ODEs with no external inputs:

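$$\begin{aligned}\gamma\,\dot{x}_1 &= \upsilon\,(x_2 - x_1)\\ \gamma\,\dot{x}_2 &= x_1(\rho - x_3) - x_2\\ \gamma\,\dot{x}_3 &= x_1 x_2 - \beta\,x_3\end{aligned} \tag{3}$$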

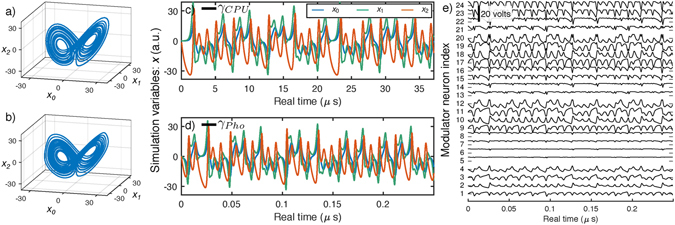

where x are the simulation state variables, and γ is a time scaling factor. When the parameters υ, β, and ρ are set to (υ, β, ρ) = (6.5, 8/3, 28), the solutions of the attractor are chaotic. The photonic CTRNN and CPU solutions are compared in x phase space in Fig. 4(a,b) and the time-domain in Fig. 4(c,d). Figure 4(e) plots the physical modulator voltages, s, linear combinations of which represent simulation variables, x, as discussed in Methods. Because the two simulators are implemented differently, they cannot be compared based on equivalent metrics; however, the time scaling factor, γ, links physical real-time to a virtual simulation time basis, in which a direct comparison can be made.
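
The role of γ as a pure time-scaling factor can be illustrated with a conventional numerical integrator. The sketch below assumes the textbook Lorenz form with γ multiplying each time derivative and uses a fixed-step fourth-order Runge-Kutta scheme; it is not the CPU benchmark procedure described in Methods, and the function names are hypothetical.

```python
def lorenz_rhs(x, gamma=1.0, upsilon=6.5, beta=8.0 / 3.0, rho=28.0):
    """Time derivatives of the (assumed textbook) Lorenz system, scaled by 1/gamma."""
    x1, x2, x3 = x
    return (
        upsilon * (x2 - x1) / gamma,
        (x1 * (rho - x3) - x2) / gamma,
        (x1 * x2 - beta * x3) / gamma,
    )


def rk4_step(x, dt, gamma):
    """One classical Runge-Kutta step of real-time size dt."""
    def shifted(base, k, scale):
        return tuple(b + scale * ki for b, ki in zip(base, k))

    k1 = lorenz_rhs(x, gamma)
    k2 = lorenz_rhs(shifted(x, k1, 0.5 * dt), gamma)
    k3 = lorenz_rhs(shifted(x, k2, 0.5 * dt), gamma)
    k4 = lorenz_rhs(shifted(x, k3, dt), gamma)
    return tuple(
        xi + dt / 6.0 * (a + 2.0 * b + 2.0 * c + d)
        for xi, a, b, c, d in zip(x, k1, k2, k3, k4)
    )


def integrate(x0, dt, steps, gamma=1.0):
    """Integrate for steps * dt units of real time and return the final state."""
    x = tuple(x0)
    for _ in range(steps):
        x = rk4_step(x, dt, gamma)
    return x


# Doubling gamma and doubling the real-time step covers the same virtual time.
slow = integrate((1.0, 1.0, 1.0), dt=0.002, steps=500, gamma=2.0)
fast = integrate((1.0, 1.0, 1.0), dt=0.001, steps=500, gamma=1.0)
print(slow, fast)
```

Two emulators with different γ therefore trace the same trajectory in virtual time, which is what allows a ratio of γ values to serve as a speed comparison.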

Figure 4.

Photonic CTRNN benchmarking against a CPU. (a,b) Phase diagrams of the Lorenz attractor simulated by a conventional CPU (a) and a photonic CTRNN (b). (c,d) Time traces of simulation variables for a conventional CPU (c) and a photonic CTRNN (d). The horizontal axes are labeled in physical real time, and cover equal intervals of virtual simulation time, as benchmarked by γ CPU and γ Pho. The ratio of real-time values of γ’s indicates a 294-fold acceleration. (e) Time traces of modulator voltages s i (minor y-axis) for each modulator neuron i (major y-axis) in the photonic CTRNN. The simulation variables, x, in (d) are linear decodings of physical variables, s, in (e).

Figure 4(c,d) plots photonic CTRNN and CPU solutions in the real-time bases, scaled to cover equal simulation intervals. The discrete-time simulation is linked to physical real-time by the step calculation time, Δt = 24.5 ns, and its stability is limited by numerical divergence. We find that γ CPU ≥ Δt × 150 is sufficient for <1% divergence probability, resulting in γ CPU = 3.68 μs. The CTRNN simulation is linked to physical real-time by its feedback delay, t fb = 47.8 ps, and its stability is limited by time-delayed dynamics. We find that γ Pho ≥ t fb × 260 is sufficient to avoid spurious dynamics, resulting in γ Pho = 12.5 ns. The acceleration factor, γ CPU/γ Pho, is thus predicted to be 294×.
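
The arithmetic behind the predicted acceleration can be retraced directly from the figures quoted above. The variable names below are illustrative, and the γ values used for the final ratio are the rounded ones stated in the text.

```python
# CPU emulator: stability requires gamma_CPU >= 150 step times.
step_time_s = 24.5e-9
gamma_cpu_min_s = 150 * step_time_s  # 3.675e-6 s, quoted as 3.68 us

# Photonic CTRNN: stability requires gamma_Pho >= 260 feedback delays.
feedback_delay_s = 47.8e-12
gamma_pho_min_s = 260 * feedback_delay_s  # about 12.4e-9 s

# Values as quoted in the text.
gamma_cpu_s = 3.68e-6
gamma_pho_s = 12.5e-9

acceleration = gamma_cpu_s / gamma_pho_s
print(gamma_cpu_min_s, gamma_pho_min_s, round(acceleration))
```

The quoted γ Pho of 12.5 ns sits slightly above the 260 × t fb bound, so the 294× figure is marginally conservative relative to the bound itself.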

Implementing this network on a silicon photonic chip would require 24 laser wavelengths, 24 modulators, and 24 MRR weight banks, for a total of 576 MRR weights. The power used by the CTRNN would be dominated by static MRR tuning and pump lasers, as discussed in the Methods section. Minimum pump power is determined by the requirement that recurrently connected neurons are able to exhibit positive eigenvalues. Considering 24 lasers with a realistic wall-plug efficiency of 5%, minimum total system power is expected to be 106 mW. The area used by the photonic CTRNN is split roughly evenly between MRR weight banks (576 × 25 μm × 25 μm = 0.36 mm²) and modulators41 (24 × 500 μm × 25 μm = 0.30 mm²). The fundamental limits of these performance metrics are compared with other neuromorphic approaches below.
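
These resource estimates follow from simple scaling with the number of neurons, N: one wavelength and one modulator per neuron, and N weights per weight bank. A short sketch of the arithmetic, with illustrative variable names:

```python
N = 24  # neurons, each with one laser wavelength, one modulator, one weight bank

mrr_weights = N * N  # fully connected recurrent network: N weights per bank

# Footprints quoted in the text, in micrometers.
weight_area_mm2 = mrr_weights * 25 * 25 / 1e6
modulator_area_mm2 = N * 500 * 25 / 1e6

total_power_mw = 106.0
power_per_neuron_mw = total_power_mw / N

print(mrr_weights, weight_area_mm2, modulator_area_mm2, power_per_neuron_mw)
```

The weight area grows quadratically with N while the modulator area grows linearly, so weight banks would dominate the footprint of larger networks.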

While the qualitative Lorenz behavior is reproduced by CTRNN and CPU implementations, the chaotic nature of the attractor presents a challenge for benchmarking emulation accuracy. Non-chaotic partial differential equations (PDEs) exist to serve as accuracy benchmarks42, 43; however, most non-trivial ODEs are chaotic. One exception is work on central pattern generators (CPGs) that are used to shape oscillations for driving mechanical locomotion44. CPGs have been implemented with analog ASICs45 and digital FPGAs46. While work on CPG hardware has fallen in sub-kHz timescales, similar tasks could be developed for GHz timescales with possible application to adaptive RF waveform generation. Further work could develop CPG-like tasks to benchmark the accuracy of photonic CTRNN ODE emulators.

Accuracy can be assessed through metrics of weight accuracy. Previous work discussed the precision and accuracy to which MRR weight banks could be configured to a desired weight vector35. Even in the presence of fabrication variation and thermal cross-talk, the dynamic weight accuracy was demonstrated to be 4.1 + 1(sign) bits and anticipated to increase with improved design. Since the weight is analog, its dynamic accuracy (range/command error) is not an integer; however, this metric corresponds to bit resolution in digital architectures. In the majority of modern-day neuromorphic hardware, this resolution is selected to be 4 + 1(sign) bits24, 47, 48 as a tradeoff between hardware resources and functionality. Significant study has been devoted to the effect of limited weight resolution on neural network function49 and techniques for mitigating detrimental effects50.

Neuromorphic photonic metrics

In addition to task-driven benchmark analyses, we can perform component-level metric comparisons with other neuromorphic systems in terms of area, signal bandwidth, and synaptic operation (SOP) energy. The 24-modulator photonic CTRNN used as an emulation benchmark was predicted to use 4.4 mW/neuron using realistic pump lasers, and was limited to 1 GHz bandwidth to spoil spurious oscillations. This results in a computational efficiency of 180 fJ/SOP. The area of an MRR weight is approximately (l × w)/N² = 25 μm × 25 μm = 625 μm²/synapse.

The coherent approach described by Shen et al.51 based on a matrix of Mach-Zehnder interferometers (MZIs) would exhibit similar fundamental energy and speed limitations, given similar assumptions about detection bandwidth and laser efficiency. The power requirements of this approach were limited by nonlinear threshold activation, rather than signal cascadability. Supposing a laser efficiency of 5%, this was estimated to be around 20 mW/neuron. A 24-neuron system limited to 1 GHz bandwidth would therefore achieve 830 fJ/SOP. While there is no one-to-one correspondence between MZIs and synapses, there is still a quadratic area scaling relationship: (l × w)/N² = 200 μm × 100 μm = 20,000 μm²/synapse, as limited by thermal phase shifter dimension.
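
The energy figures for both photonic approaches follow from dividing the power per neuron by the rate of synaptic operations that neuron receives, taken here as fan-in times signal bandwidth. A minimal sketch with a hypothetical helper function:

```python
def energy_per_sop_fj(power_per_neuron_w, fan_in, bandwidth_hz):
    """Energy per synaptic operation in femtojoules.

    Each neuron is assumed to receive fan_in * bandwidth_hz operations per second.
    """
    return power_per_neuron_w / (fan_in * bandwidth_hz) * 1e15


broadcast_and_weight_fj = energy_per_sop_fj(4.4e-3, fan_in=24, bandwidth_hz=1e9)
mzi_mesh_fj = energy_per_sop_fj(20e-3, fan_in=24, bandwidth_hz=1e9)

# About 183 and 833 fJ/SOP, quoted in the text as 180 and 830 fJ/SOP.
print(broadcast_and_weight_fj, mzi_mesh_fj)
```

Under this definition, energy per operation falls as fan-in grows at fixed power per neuron, so larger networks would compare more favorably.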

The superconducting optoelectronic approach described by Shainline et al.52 would be optimized for scalability and efficiency, instead of speed. This can be attributed to the extreme sensitivity of cryogenic photodetectors, but this difference also limits signal bandwidth to 20 MHz. For a 700-neuron interconnect, the wall-plug efficiency is estimated to be around 20 fJ/SOP. Area was calculated to be (l × w)/N² = 1.4 μm × 15 μm = 21 μm²/synapse.

A metric analysis can extend to include neuromorphic electronics, although metrics do not necessarily indicate signal processing merit. Electronic and photonic neuromorphics are designed to address complementary types of problems. Akopyan et al.24 demonstrated a chip, TrueNorth, containing 256 million synapses dissipating 65 mW. The signal bandwidth, determined by the effective tick, or timestep, is 1.0 kHz, and the chip area is 4.3 cm². This results in an effective 240 fJ/SOP and effective area of 6.0 μm²/synapse. We note that TrueNorth is event-based, meaning explicit computation does not occur for every effective SOP, but only when the input to a synapse is nonzero.

Discussion

We have demonstrated an isomorphism between a silicon photonic broadcast-and-weight system and a reconfigurable CTRNN model through observations of predicted bifurcations. In addition to proof-of-concept, this repeatable method could be used to characterize the standard performance of single neurons and pairs of neurons within larger systems. Employing neuromorphic properties, we then illustrated a task-oriented programming approach and benchmark analysis. Similar analyses could assess the potentials of analog photonic processors against state-of-the-art conventional processors in many application domains. Here, we discuss the implications of these results in the broader context of information processing with photonic devices.

This work constitutes the first investigation of photonic neurons implemented by modulators, an important step towards silicon-compatible neuromorphic photonics. Interest in integrated lasers with neuron-like spiking behavior has flourished over the past several years53, 54. Experimental work has so far focused on isolated neurons55–57 and fixed, cascadable chains58, 59. The shortage of research on networks of these lasers might be explained by the challenges of implementing low-loss, compact, and tunable filters in the active III/V platforms required for laser gain. In some cases where fan-in is sensitive to input optical phase, it is also unclear how networking would occur without global laser synchronization. In contrast to lasers, Mach-Zehnder, microring, and electroabsorption modulators are all silicon-compatible. Modulator-class neurons are therefore a final step towards making complete broadcast-and-weight systems entirely compatible with silicon foundry platforms. While laser-class neurons with spiking dynamics present richer processing opportunities, modulator-class neurons would still possess the formidable repertoire of CTRNN functions.

In parallel with work on individual laser neurons, recent research has also investigated systems with isomorphisms to neural network models. A fully integrated superconducting optoelectronic network was recently proposed52 to offer unmatched energy efficiency. While based on an exotic superconducting platform, this approach accomplishes fan-in using incoherent optical power detection in a way compatible with the broadcast-and-weight protocol. A programmable nanophotonic processor was recently studied in the context of deep learning51. Coherent optical interconnects exhibit a sensitivity to optical phase that must be re-synchronized after each layer. In the demonstration, optical nonlinearity and phase regeneration were performed digitally. Analog solutions for counteracting signal-dependent phase shifts induced by nonlinear materials60 have not yet been proposed. Recurrent neural networks have been investigated in fiber61. While the current work employs fiber neurons, it is the first demonstration of a recurrent weight network that is integrated. Optical neural networks in free-space have also been investigated in the past4 and recently33. Free-space systems occupy an extra dimension but cannot necessarily use it for increased scalability. The volume between focal planes is used for diffractive evolution of the optical field and unused for network configuration. Spatial light modulators that configure the network are generally planar. Shainline et al. noted that integrated neuromorphic photonic systems could potentially be stacked to take advantage of a third dimension52.

Reservoir computing techniques that take inspiration from certain brain properties (e.g. analog, distributed) have received substantial recent attention from the photonics community62–65. Reservoir techniques rely on supervised learning to discern a desired behavior from a large number of complex dynamics, instead of relying on establishing an isomorphism to a model. Neuromorphic and reservoir approaches differ fundamentally and possess complementary advantages. Both derive a broad repertoire of behaviors (often referred to as complexity) from a large number of physical degrees-of-freedom (e.g. optical intensities) coupled through interaction parameters (e.g. transmissions). Both offer means of selecting a specific, desired behavior from this repertoire using controllable parameters. In neuromorphic systems, network weights are both the interaction and controllable parameters, whereas, in reservoir computers, these two groups of parameters are separate. This distinction has two major implications.

Firstly, the interaction parameters of a reservoir do not need to be observable or even repeatable from system to system. Reservoirs can thus derive complexity from physical processes that are difficult to model or reproduce, such as coupled amplifiers66, coupled nonlinear MRRs67, time-delayed dynamics in fibers64, and fixed interferometric circuits63. Furthermore, they do not require significant hardware to control the state of the reservoir. Neuromorphic hardware, by contrast, bears the burden of mapping physical parameters (e.g. drive voltages) to model parameters (e.g. weights), as was shown in this paper.

Secondly, reservoir computers can only be made to elicit a desired behavior through instance-specific supervised training, whereas neuromorphic computers can be programmed a priori using a known set of weights. Because neuromorphic behavior is determined only by controllable parameters, these parameters can be mapped directly between different system instances, different types of neuromorphic systems, and simulations. Neuromorphic hardware can leverage existing algorithms (e.g. NEF) and virtual training results. Particular behaviors, fully determined by the virtual/hardware weights, are guaranteed to occur. Photonic reservoir computers can of course be simulated; however, they have no corresponding guarantee that a particular hardware instance will reproduce a simulated behavior or that training will be able to converge to this behavior.

At increased scale, neuromorphic silicon photonic systems could be applied to unaddressed computational areas in scientific computing and RF signal processing. A key benefit of neuromorphic engineering is that existing algorithms can be leveraged. A subset of CTRNNs, Hopfield networks68, have been used extensively in mathematical programming and optimization problems27. The ubiquity of PDE problems in scientific computing has motivated the development of analog electronic neural emulators42. Further work could explore the use of NEF to emulate discrete space points of PDEs. Neural algorithms for CTRNNs have been developed for real-time RF signal processing, including spectral mining69, spread spectrum channel estimation70, and arrayed antenna control71. There is insistent demand to implement these tasks at wider bandwidths using less power than possible with RF electronics. Additionally, methodologies developed for audio applications, such as noise mitigation28, could conceivably be mapped to RF problems if implemented on ultrafast hardware. Unsupervised neural-inspired learning has been used with a single MRR weight bank for statistical analysis of multiple RF signals72.

We have demonstrated a reconfigurable analog neural network in a silicon photonic integrated circuit using modulators as neuron elements. Network-mediated cusp and Hopf bifurcations were observed as a proof-of-concept of an integrated broadcast-and-weight system32. Simulations of a 24-modulator neuron network performing an emulation task estimated a 294× speedup over a verified CPU benchmark. Neural network abstractions are powerful tools for bridging the gap between physical dynamics and useful application, and silicon photonic manufacturing introduces opportunities for large-scale photonic systems.

Methods

Experimental Setup

Samples shown in Fig. 1(b) were fabricated on silicon-on-insulator (SOI) wafers at the Washington Nanofabrication Facility through the SiEPIC Ebeam rapid prototyping group10. Silicon thickness is 220 nm, and buried oxide (BOX) thickness is 3 μm. Waveguides 500 nm wide were patterned by Ebeam lithography and fully etched through to the BOX73. After a cladding oxide (3 μm) is deposited, Ti/W and Al layers are deposited. Ohmic heating in Ti/W filaments causes thermo-optic resonant wavelength shifts in the MRR weights. The sample is mounted on a temperature stabilized alignment stage and coupled to a 9-fiber array using focusing subwavelength grating couplers74. The reconfigurable analog network consists of two MRR weight banks, each with four MRR weights with 10 μm radii.

Each MRR weight bank is calibrated using a multi-channel protocol described in past work35, 75: an offline measurement procedure is performed to identify models of thermo-optic cross-talk and MRR filter edge transmission. During this calibration phase, electrical feedback connections are disabled and the set of wavelength channels carries a set of linearly separable training signals. After calibration, the user can specify a desired weight matrix, and the control model calculates and applies the corresponding electrical currents.

Weighted network outputs are detected off-chip, and the electrical weighted sums drive fiber Mach-Zehnder modulators (MZMs). Detected signals are low-pass filtered at 10 kHz, represented by capacitor symbols in Fig. 1(c). Low-pass filtering is used to spoil time-delayed dynamics that arise when feedback delay is much greater than the state time-constant37. In this setup with on-chip network and off-chip modulator neurons, fiber delayed dynamics would interfere with CTRNN dynamical analysis39. MZMs modulate distinct wavelengths λ 1 = 1549.97 nm and λ 2 = 1551.68 nm with neuron output signals y 1(t) and y 2(t), respectively. The MZM electro-optic transfer function serves as the nonlinear transfer function, y = σ(s), associated with the continuous-time neuron. A third wavelength, λ 3 = 1553.46 nm, carries an external input signal, x(t), derived from a signal generator. Each laser diode source (ILX 7900B) outputs +13 dBm of power. All optical signals (u, y 1, and y 2) are wavelength multiplexed in an arrayed waveguide grating (AWG) and then coupled back into the on-chip broadcast STAR consisting of splitting Y-junctions76 (Fig. 1(c)).

Photonic CTRNN Solver

Recently developed compilers, such as Neural ENGineering Objects (Nengo)77, employ the Neural Engineering Framework (NEF)40 to arrange networks of neurons to represent values and functions without relying on training. While originally developed to evaluate theories of cognition, the NEF has been appropriated to solve engineering problems78 and has been used to program electronic neuromorphic hardware79. Background on the NEF compilation procedure is provided in Supplementary Section 2. Simulation state variables, x, are encoded as linear combinations of real population states, s. All neurons in a population share the same tuning curve shape, σ, but differ in gain g, input encoder vector e, and offset b. The input-output relation of neuron i, equivalent to equation (2), is thus s i = σ(g i e i · x + b i). In this formulation, arbitrary nonlinear functions of the simulation variables, f(x), are represented by linear combinations of these tuning curves across the domain of values of x considered. Introducing recurrent connections in the population introduces the notion of state time-derivatives, as in equation (1). By applying the decoder transform to both sides of equation (1) and using the arbitrary function mapping technique to find W, the neural population emulates an effective dynamical system of the form dx/dt = f(x). Given equations (3) stated in this form, Nengo performs the steps necessary to represent the variables, functions, and complete ODE.

Modifications were made to the standard Nengo procedure. Firstly, we specify the tuning curve shape as the sinusoidal electro-optic transfer characteristic of an MZM. Secondly, to reduce the number of MZMs required, we choose encoders to be the vertices of a unit square, {e} = [1, ±1, ±1], whereas they are typically chosen randomly. Thirdly, the MZM sinusoidal transfer function provides a natural relation to the Fourier basis. Gains are chosen to correspond to the first three Fourier frequencies of the domain: g ∈ s π/2 · {1, 2, 3}, where s π is the MZM half-period. Offsets were chosen to be b ∈ {0, s π/2}, corresponding to sine and cosine components of each gain frequency. The total number of modulator neurons is therefore #e · #g · #b = 4 · 3 · 2 = 24. Figure 4(e) shows the MZM states, s(t), of which simulation variables, x(t), are linear combinations. From this plot, it appears that some neurons are barely used. Thus, the number of neurons could be reduced further by pruning those neurons after compilation of the weight matrix.
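The neuron count can be checked by enumerating the parameter grid directly. The following is a minimal sketch, not the compilation code used in this work: `S_PI` is normalized to 1, the names `neurons`, `sigma`, and `population_state` are hypothetical, and the sin² form of `sigma` is an assumed idealized MZM intensity transfer curve rather than the measured characteristic.

```python
import itertools
import math

S_PI = 1.0  # MZM half-period, normalized to 1 (assumption for illustration)

# Encoders: the four vertices [1, +/-1, +/-1]
encoders = [(1, e2, e3) for e2 in (1, -1) for e3 in (1, -1)]
# Gains: first three Fourier frequencies, g in (s_pi / 2) * {1, 2, 3}
gains = [S_PI / 2 * k for k in (1, 2, 3)]
# Offsets: 0 and s_pi / 2 (sine and cosine components)
offsets = [0.0, S_PI / 2]

# One neuron per (encoder, gain, offset) combination
neurons = list(itertools.product(encoders, gains, offsets))


def sigma(s, s_pi=S_PI):
    """Assumed idealized MZM transfer: sin^2 curve with half-period s_pi."""
    return math.sin(math.pi * s / (2 * s_pi)) ** 2


def population_state(x):
    """Return s_i = sigma(g_i * (e_i . x) + b_i) for every neuron."""
    return [
        sigma(g * sum(ei * xi for ei, xi in zip(e, x)) + b)
        for e, g, b in neurons
    ]


print(len(neurons))  # 24
```

Calling `population_state((0.0, 0.0, 0.0))` returns the 24 neuron states at the origin of the simulation variables; under the assumed transfer curve each state lies between 0 and 1.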

The operational speed of this network would be limited by time-of-flight feedback delay. In Fig. 1(a), the longest feedback path is via the drop port of the last (pink) MRR weight of the first (yellow) neuron’s bank. The path includes the perimeter of the square MRR weight matrix, plus a drop waveguide folded back along the bank. Supposing a minimum MRR pitch of 25 μm and MZM length of 500 μm, the feedback delay would then be (6 × 25 × 25 + 500) · n/c = 48 ps. We model this delayed feedback in the Nengo simulation and then adjust feedback strength to find the minimum stable simulation timescale. For γ Pho/t fb < 65, spurious time-delayed dynamics dominate. For γ Pho/t fb < 104, the butterfly phase diagram in Fig. 4(b) is not reproduced accurately. γ Pho/t fb ≥ 260 is chosen for robust reproduction of the expected dynamics.
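The delay estimate and the resulting minimum photonic timescale can be reproduced with a few lines of arithmetic. This sketch makes one assumption that the text does not state: the index `N_INDEX = 3.4` is chosen here only because it reproduces the quoted 48 ps. The function name is hypothetical.

```python
C_LIGHT = 299_792_458.0   # speed of light in vacuum, m/s
MRR_PITCH_UM = 25.0       # minimum MRR pitch from the text
MZM_LENGTH_UM = 500.0     # MZM length from the text
N_INDEX = 3.4             # ASSUMED index; not stated in the text


def feedback_delay_s(pitch_um=MRR_PITCH_UM, mzm_um=MZM_LENGTH_UM, n=N_INDEX):
    """Time of flight over the longest feedback path, (6 x 25 x pitch + MZM) * n / c."""
    path_um = 6 * 25 * pitch_um + mzm_um   # path length as written in the text
    return path_um * 1e-6 * n / C_LIGHT


t_fb = feedback_delay_s()
gamma_pho = 260 * t_fb  # minimum robust timescale, from gamma_Pho / t_fb >= 260

print(f"t_fb = {t_fb * 1e12:.1f} ps, gamma_Pho = {gamma_pho * 1e9:.1f} ns")
```

With these inputs the path length is 4250 μm, the delay is close to 48 ps, and the minimum robust simulation timescale is roughly 12.5 ns.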

Conventional CPU Solver

Conventional digital processors must use a discrete-time approximation to simulate continuous ODEs, the simplest of which is Euler continuation:

$$\mathbf{x}[(n+1)\Delta t] = \mathbf{x}[n\Delta t] + \Delta t \, f(\mathbf{x}[n\Delta t]) \tag{4}$$

where Δt is the time step interval. To estimate the real-time value of Δt, we develop and validate a simple CPU model. For each time step, the CPU must compute f(x[nΔt]) as defined in equation (3), resulting in 9 floating-point operations (FLOPs) and 12 cache reads of the operands. The Euler update in equation (4) constitutes one multiply, one addition, and one read/write for each state variable, resulting in 6 FLOPs and 6 cache accesses. Supposing a FLOP latency of 1 clock cycle, Level 1 (L1) cache latency of 4 cycles, and 2.6 GHz clock, this model predicts a time step of Δt = 33 ns. This model is empirically validated using an Intel Core i5-4288U. The machine-optimized program randomly initializes and loops through 10^6 Euler steps of the Lorenz system, over 100 trials. CPU time was measured to be Δt = 24.5 ± 1.5 ns. The minimum stable simulation timescale is limited by divergent errors stemming from time discretization. We performed a series of 100 trials over 100 values of Δt/γ CPU, finding that <1% probability of divergence occurred for γ CPU/Δt ≥ 150.
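The Euler update, the cycle-count model, and the resulting acceleration estimate can be sketched together. This is an illustration under stated assumptions, not the benchmarked program: the Lorenz parameters are the classic values (the form of equation (3) used in this work may be scaled differently), the function names are hypothetical, and combining the two minimum stable timescales as a ratio is one reading of how the 294× figure arises.

```python
def lorenz(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Lorenz vector field f(x); the classic parameters are an assumption."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def euler_step(f, state, dt):
    """One forward-Euler update: x[(n+1)dt] = x[n dt] + dt * f(x[n dt])."""
    return tuple(s + dt * ds for s, ds in zip(state, f(state)))


def model_time_step_s(flops=9 + 6, cache_accesses=12 + 6,
                      flop_cycles=1, cache_cycles=4, clock_hz=2.6e9):
    """Latency model from the text: total cycles per Euler step / clock rate."""
    cycles = flops * flop_cycles + cache_accesses * cache_cycles
    return cycles / clock_hz


dt_model = model_time_step_s()   # 15 FLOPs + 18 cache accesses = 87 cycles
gamma_cpu = 150 * 24.5e-9        # gamma_CPU / dt >= 150, measured dt = 24.5 ns
gamma_pho = 260 * 48e-12         # gamma_Pho / t_fb >= 260, t_fb = 48 ps
speedup = gamma_cpu / gamma_pho

print(f"model dt = {dt_model * 1e9:.1f} ns, speedup = {speedup:.0f}x")
```

The model gives 87 cycles per step, or about 33 ns at 2.6 GHz, and the ratio of minimum stable timescales comes out at roughly 294.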

Minimum Power Calculations

Static thermal power must be applied to each weight in order to track MRRs to the on-resonance condition. Supposing a bank length set by an MRR pitch of 25 μm and count of 24, the MRR network would occupy a square with 600 μm sides. Within this length, resonances can be fabricated with repeatability within ±1.3 nm80. Supposing a tuning efficiency of 0.25 nm/mW81, it would take an average of 5.2 mW/weight to track resonance, for a static power dissipation of 3.0 W. On the other hand, if depletion-based tuning can be used, there would be negligible static power dissipation in the weights.
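The static thermal budget follows from simple arithmetic, sketched below. The sketch assumes a fully connected network with one MRR weight per connection (24 × 24 weights) and treats the ±1.3 nm repeatability as the average tuning range required per weight. The function name is hypothetical.

```python
def static_tuning_power(n_neurons=24, mean_detuning_nm=1.3,
                        efficiency_nm_per_mw=0.25):
    """Return (power per weight in mW, total static power in W)."""
    per_weight_mw = mean_detuning_nm / efficiency_nm_per_mw
    n_weights = n_neurons ** 2  # ASSUMED: fully connected, one MRR per connection
    total_w = n_weights * per_weight_mw * 1e-3
    return per_weight_mw, total_w


per_weight_mw, total_w = static_tuning_power()
print(f"{per_weight_mw:.1f} mW/weight, {total_w:.2f} W total")
```

For 576 weights at 5.2 mW each, the total is just under 3.0 W, in line with the figure quoted above.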

The laser bias power must be set such that a modulator neuron can drive downstream neurons with sufficient strength. A neuron fed back to itself should be able to elicit an equivalent or greater small-signal response after one round-trip. This condition is referred to as signal cascadability and can be stated as g ≥ 1, where g is round-trip, small-signal gain. If the cascadability condition is not met, all signals will eventually attenuate out with time. In other words, in a recurrent network, the real part of system eigenvalues would not be able to exceed zero. Round-trip gain is expressed as

| 5 |

For a modulator-based broadcast-and-weight system, this breaks down into receiver and modulator components. Assuming a voltage-mode modulator, such as a reverse-biased MRR depletion modulator,

| 6 |

| 7 |

where V π is modulator π-voltage, P pump is modulator pump power, and R PD is detector responsivity. Because input power generates a photocurrent, yet a depletion modulator is voltage-driven, the receiver’s impedance, R r, determines the conversion and can be set externally. As R r increases, round-trip gain also increases, but bandwidth decreases according to f = (2πR r C mod)−1, where C mod is PN junction capacitance of the modulator. Setting the cascadability condition, g = 1, and combining the above equations, we find that

| 8 |

| 9 |

The values of V π, C mod, and R PD on a typical silicon photonic foundry platform have been published41. For an MRR depletion modulator, V π = 1.5 V, C mod = 35 fF. For a PD on the same platform, R PD = 0.97 A/W. This means that the minimum pump power for a given signal bandwidth is 2.2 × 10−13 W/Hz.
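The quoted figure can be reproduced with a short worked derivation. What follows is a sketch under one assumption not stated in the text: the modulator small-signal slope is taken as that of a sinusoidal transfer curve biased at quadrature, $\pi P_{pump} / (2 V_\pi)$. The symbols $P_{in}$, $P_{out}$, and $V$ are introduced here for illustration only.

```latex
% Round-trip gain split into receiver and modulator parts (assumed forms)
g = \frac{dP_{out}}{dP_{in}}
  = \underbrace{\frac{dV}{dP_{in}}}_{\text{receiver}}
    \cdot
    \underbrace{\frac{dP_{out}}{dV}}_{\text{modulator}}
  = R_{PD} R_r \cdot \frac{\pi P_{pump}}{2 V_\pi}

% Cascadability g = 1 with R_r = (2 \pi f C_{mod})^{-1}
P_{pump} = \frac{2 V_\pi}{\pi R_{PD} R_r}
         = \frac{4 V_\pi C_{mod}}{R_{PD}} \, f

% Numerical check with V_\pi = 1.5 V, C_{mod} = 35 fF, R_{PD} = 0.97 A/W
\frac{P_{pump}}{f}
  = \frac{4 \,(1.5\,\mathrm{V})(35\,\mathrm{fF})}{0.97\,\mathrm{A/W}}
  \approx 2.2 \times 10^{-13}\ \mathrm{W/Hz}
```

The prefactor of 4 depends on the assumed slope; a different modulator transfer characteristic would change it, but the scaling of pump power with $V_\pi C_{mod} f / R_{PD}$ would remain.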

In this paper, we study a 24-node CTRNN whose signal bandwidth is restricted to 1 GHz to avoid time-delay dynamics. This means that, for the cascadability condition to be met, modulator pumping must be at least 0.22 mW/neuron of optical power. Adding up 24 lasers and accounting for laser inefficiency, wall-plug system power would be 106 mW.
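The power figures in this subsection can be checked numerically. The sketch below assumes that the minimum pump power per unit bandwidth takes the form 4 V π C mod / R PD, a prefactor chosen because it reproduces the quoted 2.2 × 10−13 W/Hz. The laser efficiency is not stated in the text, so the value printed is only what the quoted 106 mW would imply. Function and variable names are hypothetical.

```python
V_PI = 1.5        # modulator pi-voltage, V
C_MOD = 35e-15    # modulator junction capacitance, F
R_PD = 0.97       # photodetector responsivity, A/W


def min_pump_power_per_hz(v_pi=V_PI, c_mod=C_MOD, r_pd=R_PD):
    """ASSUMED form: P_pump / f = 4 * V_pi * C_mod / R_PD (W/Hz)."""
    return 4.0 * v_pi * c_mod / r_pd


bandwidth_hz = 1e9   # signal bandwidth from the text
n_neurons = 24
wall_plug_w = 106e-3  # quoted wall-plug system power

per_hz = min_pump_power_per_hz()
per_neuron_w = per_hz * bandwidth_hz
optical_total_w = n_neurons * per_neuron_w
implied_efficiency = optical_total_w / wall_plug_w  # derived, not a stated value

print(f"{per_hz:.2e} W/Hz, {per_neuron_w * 1e3:.2f} mW/neuron, "
      f"implied laser efficiency {implied_efficiency:.1%}")
```

Under these assumptions the 24 lasers supply about 5.2 mW of optical power in total, so the quoted 106 mW wall-plug figure corresponds to a laser efficiency of roughly 5%.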

Acknowledgements

This work is supported by National Science Foundation (NSF) Enhancing Access to the Radio Spectrum (EARS) program (Award 1642991). Fabrication support was provided via the Natural Sciences and Engineering Research Council of Canada (NSERC) Silicon Electronic-Photonic Integrated Circuits (SiEPIC) Program. Devices were fabricated by Richard Bojko at the University of Washington Washington Nanofabrication Facility, part of the NSF National Nanotechnology Infrastructure Network (NNIN).

Author Contributions

A.N.T. conceived of the methods and experiments. A.N.T., E.Z., and A.X.W. conducted the experiments and analyzed the results. T.FdL developed methods and simulations of the photonic CTRNN emulator. All authors reviewed the manuscript.

Competing Interests

The authors declare that they have no competing interests.

Footnotes

Electronic supplementary material

Supplementary information accompanies this paper at doi:10.1038/s41598-017-07754-z

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Keyes RW. Optical logic-in the light of computer technology. Optica Acta: International Journal of Optics. 1985;32:525–535. doi: 10.1080/713821757.

- 2. Reimann OA, Kosonocky WF. Progress in optical computer research. IEEE Spectrum. 1965;2:181–195. doi: 10.1109/MSPEC.1965.5531775.

- 3. McCormick FB, et al. Six-stage digital free-space optical switching network using symmetric self-electro-optic-effect devices. Appl. Opt. 1993;32:5153–5171. doi: 10.1364/AO.32.005153.

- 4. Jutamulia S, Yu F. Overview of hybrid optical neural networks. Optics & Laser Technology. 1996;28:59–72. doi: 10.1016/0030-3992(95)00070-4.

- 5. Vlasov Y. Silicon CMOS-integrated nano-photonics for computer and data communications beyond 100 G. IEEE Commun. Mag. 2012;50:s67–s72. doi: 10.1109/MCOM.2012.6146487.

- 6. Hochberg M, et al. Silicon photonics: The next fabless semiconductor industry. IEEE Solid-State Circuits Magazine. 2013;5:48–58. doi: 10.1109/MSSC.2012.2232791.

- 7. Thomson D, et al. Roadmap on silicon photonics. Journal of Optics. 2016;18:073003. doi: 10.1088/2040-8978/18/7/073003.

- 8. Lim A-J, et al. Review of silicon photonics foundry efforts. IEEE J. Sel. Top. Quantum Electron. 2014;20:405–416. doi: 10.1109/JSTQE.2013.2293274.

- 9. Orcutt JS, et al. Open foundry platform for high-performance electronic-photonic integration. Opt. Express. 2012;20:12222–12232. doi: 10.1364/OE.20.012222.

- 10. Chrostowski, L. & Hochberg, M. Silicon Photonics Design: From Devices to Systems (Cambridge University Press, 2015).

- 11. Sun J, et al. Large-scale silicon photonic circuits for optical phased arrays. Selected Topics in Quantum Electronics, IEEE Journal of. 2014;20:264–278. doi: 10.1109/JSTQE.2013.2293316.

- 12. Beausoleil RG. Large-scale integrated photonics for high-performance interconnects. J. Emerg. Technol. Comput. Syst. 2011;7:6:1–6:54. doi: 10.1145/1970406.1970408.

- 13. Le Beux, S. et al. Optical ring network-on-chip (ORNoC): Architecture and design methodology. In Design, Automation Test in Europe Conference Exhibition (DATE), 2011, 1–6 (2011).

- 14. Narayana, V. K., Sun, S., Badawy, A.-H. A., Sorger, V. J. & El-Ghazawi, T. MorphoNoC: Exploring the Design Space of a Configurable Hybrid NoC using Nanophotonics. arXiv:1506.03264 (2017).

- 15. Capmany J, et al. Microwave photonic signal processing. Journal of Lightwave Technology. 2013;31:571–586. doi: 10.1109/JLT.2012.2222348.

- 16. Farsaei, A. et al. A review of wireless-photonic systems: Design methodologies and topologies, constraints, challenges, and innovations in electronics and photonics. Optics Communications (2016).

- 17. Feng N-N, et al. Thermally-efficient reconfigurable narrowband RF-photonic filter. Opt. Express. 2010;18:24648–24653. doi: 10.1364/OE.18.024648.

- 18. Zhuang L, Roeloffzen CGH, Hoekman M, Boller K-J, Lowery AJ. Programmable photonic signal processor chip for radiofrequency applications. Optica. 2015;2:854–859. doi: 10.1364/OPTICA.2.000854.

- 19. Valley GC. Photonic analog-to-digital converters. Opt. Express. 2007;15:1955–1982. doi: 10.1364/OE.15.001955.

- 20. Khan MH, et al. Ultrabroad-bandwidth arbitrary radiofrequency waveform generation with a silicon photonic chip-based spectral shaper. Nature Photonics. 2010;4:117–122.

- 21. Chang J, Meister J, Prucnal PR. Implementing a novel highly scalable adaptive photonic beamformer using “blind” guided accelerated random search. Journal of Lightwave Technology. 2014;32:3623–3629. doi: 10.1109/JLT.2014.2309691.

- 22. Ferreira de Lima T, Tait AN, Nahmias MA, Shastri BJ, Prucnal PR. Scalable wideband principal component analysis via microwave photonics. IEEE Photonics Journal. 2016;8:1–9. doi: 10.1109/JPHOT.2016.2538759.

- 23. Merolla PA, et al. A million spiking-neuron integrated circuit with a scalable communication network and interface. Science. 2014;345:668–673. doi: 10.1126/science.1254642.

- 24. Akopyan F, et al. Truenorth: Design and tool flow of a 65 mW 1 million neuron programmable neurosynaptic chip. IEEE Trans. Comput. Aided Des. Integr. Circuits Syst. 2015;34:1537–1557. doi: 10.1109/TCAD.2015.2474396.

- 25. Indiveri G, Liu SC. Memory and information processing in neuromorphic systems. Proceedings of the IEEE. 2015;103:1379–1397. doi: 10.1109/JPROC.2015.2444094.

- 26. Hasler, J. & Marr, H. B. Finding a roadmap to achieve large neuromorphic hardware systems. Front. Neurosci. 7 (2013).

- 27. Wen U-P, Lan K-M, Shih H-S. A review of Hopfield neural networks for solving mathematical programming problems. European Journal of Operational Research. 2009;198:675–687. doi: 10.1016/j.ejor.2008.11.002.

- 28. Lee, T. & Theunissen, F. A single microphone noise reduction algorithm based on the detection and reconstruction of spectro-temporal features. Proceedings of the Royal Society of London A: Mathematical, Physical and Engineering Sciences 471 (2015).

- 29. Eliasmith, C. & Anderson, C. H. Neural engineering: Computation, representation, and dynamics in neurobiological systems (MIT Press, 2004).

- 30. Donnarumma F, Prevete R, de Giorgio A, Montone G, Pezzulo G. Learning programs is better than learning dynamics: A programmable neural network hierarchical architecture in a multi-task scenario. Adaptive Behavior. 2016;24:27–51. doi: 10.1177/1059712315609412.

- 31. Diamond, A., Nowotny, T. & Schmuker, M. Comparing neuromorphic solutions in action: implementing a bio-inspired solution to a benchmark classification task on three parallel-computing platforms. Frontiers in Neuroscience 9 (2016).

- 32. Tait AN, Nahmias MA, Shastri BJ, Prucnal PR. Broadcast and weight: An integrated network for scalable photonic spike processing. Journal of Lightwave Technology. 2014;32:4029–4041. doi: 10.1109/JLT.2014.2345652.

- 33. Brunner D, Fischer I. Reconfigurable semiconductor laser networks based on diffractive coupling. Optics letters. 2015;40:3854–3857. doi: 10.1364/OL.40.003854.

- 34. Tait, A. N. et al. Microring weight banks. IEEE Journal of Selected Topics in Quantum Electronics 22 (2016).

- 35. Tait AN, Ferreira de Lima T, Nahmias MA, Shastri BJ, Prucnal PR. Multi-channel control for microring weight banks. Opt. Express. 2016;24:8895–8906. doi: 10.1364/OE.24.008895.

- 36. Yamada M. A theoretical analysis of self-sustained pulsation phenomena in narrow-stripe semiconductor lasers. IEEE Journal of Quantum Electronics. 1993;29:1330–1336. doi: 10.1109/3.236146.

- 37. Romeira B, et al. Broadband chaotic signals and breather oscillations in an optoelectronic oscillator incorporating a microwave photonic filter. Lightwave Technology, Journal of. 2014;32:3933–3942. doi: 10.1109/JLT.2014.2308261.

- 38. Beer RD. On the dynamics of small continuous-time recurrent neural networks. Adaptive Behavior. 1995;3:469–509. doi: 10.1177/105971239500300405.

- 39. Zhou, E. et al. Silicon photonic weight bank control of integrated analog network dynamics. In Optical Interconnects Conference, 2016 IEEE, TuP9 (IEEE, 2016).

- 40. Stewart TC, Eliasmith C. Large-scale synthesis of functional spiking neural circuits. Proceedings of the IEEE. 2014;102:881–898. doi: 10.1109/JPROC.2014.2306061.

- 41. Khanna, A. IMEC silicon photonics platform. In European Conference on Optical Communication (2015).

- 42. Roska T, et al. Simulating nonlinear waves and partial differential equations via cnn. i. basic techniques. Circuits and Systems I: Fundamental Theory and Applications, IEEE Transactions on. 1995;42:807–815. doi: 10.1109/81.473590.

- 43. Ratier, N. Analog computing of partial differential equations. In Sciences of Electronics, Technologies of Information and Telecommunications (SETIT), 2012 6th International Conference on, 275–282 (2012).

- 44. Vogelstein RJ, Tenore FVG, Guevremont L, Etienne-Cummings R, Mushahwar VK. A silicon central pattern generator controls locomotion in vivo. IEEE Transactions on Biomedical Circuits and Systems. 2008;2:212–222. doi: 10.1109/TBCAS.2008.2001867.

- 45. Arena P, Fortuna L, Frasca M, Patane L. A cnn-based chip for robot locomotion control. IEEE Transactions on Circuits and Systems I: Regular Papers. 2005;52:1862–1871. doi: 10.1109/TCSI.2005.852211.

- 46. Barron-Zambrano, J. H. & Torres-Huitzil, C. FPGA implementation of a configurable neuromorphic cpg-based locomotion controller. Neural Networks 45, 50–61, Neuromorphic Engineering: From Neural Systems to Brain-Like Engineered Systems (2013).

- 47. Friedmann, S., Frémaux, N., Schemmel, J., Gerstner, W. & Meier, K. Reward-based learning under hardware constraints - using a RISC processor embedded in a neuromorphic substrate. Front. Neurosci. 7 (2013).

- 48. Benjamin B, et al. Neurogrid: A mixed-analog-digital multichip system for large-scale neural simulations. Proceedings of the IEEE. 2014;102:699–716. doi: 10.1109/JPROC.2014.2313565.

- 49. Pfeil T, et al. Is a 4-bit synaptic weight resolution enough? – constraints on enabling spike-timing dependent plasticity in neuromorphic hardware. Frontiers in Neuroscience. 2012;6:90. doi: 10.3389/fnins.2012.00090.

- 50. Binas, J., Neil, D., Indiveri, G., Liu, S.-C. & Pfeiffer, M. Precise deep neural network computation on imprecise low-power analog hardware. arXiv preprint arXiv:1606.07786 (2016).

- 51. Shen, Y. et al. Deep learning with coherent nanophotonic circuits. arXiv:1610.02365 (2016).

- 52. Shainline, J. M., Buckley, S. M., Mirin, R. P. & Sae Woo, N. Superconducting optoelectronic circuits for neuromorphic computing. arXiv preprint arXiv:1610.00053 (2016).

- 53. Nahmias MA, Shastri BJ, Tait AN, Prucnal PR. A leaky integrate-and-fire laser neuron for ultrafast cognitive computing. IEEE J. Sel. Top. Quantum Electron. 2013;19:1–12. doi: 10.1109/JSTQE.2013.2257700.

- 54. Prucnal PR, Shastri BJ, Ferreira de Lima T, Nahmias MA, Tait AN. Recent progress in semiconductor excitable lasers for photonic spike processing. Adv. Opt. Photon. 2016;8:228–299. doi: 10.1364/AOP.8.000228.

- 55. Selmi F, et al. Relative refractory period in an excitable semiconductor laser. Phys. Rev. Lett. 2014;112:183902. doi: 10.1103/PhysRevLett.112.183902.

- 56. Romeira, B., Avó, R., Figueiredo, J. M. L., Barland, S. & Javaloyes, J. Regenerative memory in time-delayed neuromorphic photonic resonators. Scientific Reports 6, 19510 (2016).

- 57. Nahmias, M. A. et al. An integrated analog O/E/O link for multi-channel laser neurons. Applied Physics Letters 108 (2016).

- 58. Vaerenbergh TV, et al. Cascadable excitability in microrings. Opt. Express. 2012;20:20292–20308. doi: 10.1364/OE.20.020292.

- 59. Shastri BJ, et al. Spike processing with a graphene excitable laser. Sci. Rep. 2015;5:19126. doi: 10.1038/srep19126.

- 60. Zhang H, et al. Z-scan measurement of the nonlinear refractive index of graphene. Opt. Lett. 2012;37:1856–1858. doi: 10.1364/OL.37.001856.

- 61. Hill M, Frietman EEE, de Waardt H, Khoe G-D, Dorren H. All fiber-optic neural network using coupled soa based ring lasers. IEEE Trans. Neural Networks. 2002;13:1504–1513. doi: 10.1109/TNN.2002.804222.

- 62. Brunner D, Soriano MC, Mirasso CR, Fischer I. Parallel photonic information processing at gigabyte per second data rates using transient states. Nat Commun. 2013;4:1364. doi: 10.1038/ncomms2368.

- 63. Vandoorne, K. et al. Experimental demonstration of reservoir computing on a silicon photonics chip. Nat Commun 5 (2014).

- 64. Soriano MC, Brunner D, Escalona-Morán M, Mirasso CR, Fischer I. Minimal approach to neuro-inspired information processing. Frontiers in Computational Neuroscience. 2015;9:68. doi: 10.3389/fncom.2015.00068.

- 65. Duport, F., Smerieri, A., Akrout, A., Haelterman, M. & Massar, S. Fully analogue photonic reservoir computer. Scientific Reports 6, 22381 (2016).

- 66. Vandoorne K, et al. Toward optical signal processing using photonic reservoir computing. Opt. Express. 2008;16:11182–11192. doi: 10.1364/OE.16.011182.

- 67. Mesaritakis C, Papataxiarhis V, Syvridis D. Micro ring resonators as building blocks for an all-optical high-speed reservoir-computing bit-pattern-recognition system. J. Opt. Soc. Am. B. 2013;30:3048–3055. doi: 10.1364/JOSAB.30.003048.

- 68. Hopfield JJ, Tank DW. “Neural” computation of decisions in optimization problems. Biological Cybernetics. 1985;52:141–152. doi: 10.1007/BF00339943.

- 69. Tumuluru, V. K., Wang, P. & Niyato, D. A neural network based spectrum prediction scheme for cognitive radio. In Communications (ICC), 2010 IEEE International Conference on, 1–5 (2010).

- 70. Mitra U, Poor HV. Neural network techniques for adaptive multiuser demodulation. IEEE Journal on Selected Areas in Communications. 1994;12:1460–1470. doi: 10.1109/49.339913.

- 71. Du K-L, Lai A, Cheng K, Swamy M. Neural methods for antenna array signal processing: a review. Signal Processing. 2002;82:547–561. doi: 10.1016/S0165-1684(01)00185-2.

- 72. Tait, A. et al. Silicon microring weight banks for multivariate RF photonics. In CLEO: 2017 (IEEE, 2017 (accepted)).

- 73. Bojko, R. J. et al. Electron beam lithography writing strategies for low loss, high confinement silicon optical waveguides. J. Vac. Sci. Technol. B 29 (2011).

- 74. Wang Y, et al. Focusing sub-wavelength grating couplers with low back reflections for rapid prototyping of silicon photonic circuits. Opt. Express. 2014;22:20652–20662. doi: 10.1364/OE.22.020652.

- 75. Tait A, F de Lima T, Nahmias M, Shastri B, Prucnal P. Continuous calibration of microring weights for analog optical networks. Photonics Technol. Lett. 2016;28:887–890. doi: 10.1109/LPT.2016.2516440.

- 76. Zhang Y, et al. A compact and low loss Y-junction for submicron silicon waveguide. Opt. Express. 2013;21:1310–1316. doi: 10.1364/OE.21.001310.

- 77. Bekolay T, et al. Nengo: a Python tool for building large-scale functional brain models. Frontiers in Neuroinformatics. 2013;7:48. doi: 10.3389/fninf.2013.00048.

- 78. Friedl KE, Voelker AR, Peer A, Eliasmith C. Human-inspired neurorobotic system for classifying surface textures by touch. IEEE Robotics and Automation Letters. 2016;1:516–523. doi: 10.1109/LRA.2016.2517213.

- 79. Mundy, A., Knight, J., Stewart, T. & Furber, S. An efficient SpiNNaker implementation of the neural engineering framework. In Neural Networks (IJCNN), 2015 International Joint Conference on, 1–8 (2015).

- 80. Chrostowski, L. et al. Impact of fabrication non-uniformity on chip-scale silicon photonic integrated circuits. In Optical Fiber Communication Conference, Th2A.37 (Optical Society of America, 2014).

- 81. Jayatilleka H, et al. Wavelength tuning and stabilization of microring-based filters using silicon in-resonator photoconductive heaters. Opt. Express. 2015;23:25084–25097. doi: 10.1364/OE.23.025084.
