“Now that he’d remembered what he meant to tell her, he seemed to lose interest. She didn’t have to see his face to know this. It was in the air. It was in the pause that trailed from his remark of eight, ten, twelve seconds ago” (1). Lauren Hartke, Don DeLillo’s protagonist in The Body Artist (1), does not have to look at her husband to feel him drifting away. Words and the pauses between them carry a wealth of information about the speakers’ intentions and emotions. Words, however, can be not only heard but also seen, when they are signed in sign language. How does the brain extract identity and emotional cues from sentences when these cues arrive via different senses? Are they processed in their corresponding sensory cortices: a speaker’s identity and emotional tone in the auditory cortex, and a signer’s identity and emotional tone in the visual cortex? Alternatively, do they rapidly converge on one common brain area, irrespective of whether the conversation is heard or seen? If the latter is the case, where is that area? Deaf signers can communicate through the visual channel with a dexterity that matches spoken communication between two hearing speakers. This capacity gives researchers a rare opportunity to explore fundamental questions about how different parts of the brain divide their labor. Now, in PNAS, Benetti et al. (2) bring important insights into this matter.

For many decades, a fundamental principle of brain organization was thought to be the division of that labor between separate sensory systems (e.g., visual, auditory, tactile). Consequently, experience-dependent changes were thought to occur almost exclusively within the bounds of this division: Visual training would cause changes in the visual cortex, and so on (e.g., ref. 3). Against this view, the past 30 years of research have shown that atypical sensory experience can trigger changes that overcome this division. Such “cross-modal plasticity” is particularly well documented in the visual cortex of the blind, where it follows the rule of task-specific reorganization (4): The sensory input of a given region is switched, but its typical functional specialization is preserved. For example, separate ventral visual regions in the blind respond to tactile and auditory object recognition (5, 6), tactile and auditory reading (7, 8), and auditory perception of body shapes (9), and this division of labor corresponds to the typical organization of the visual cortex in the sighted (10). Until recently, however, it remained an open question whether such task-specific reorganization is unique to the visual cortex or, alternatively, is a general principle that applies to other cortical areas. True, task-specific reorganization of the auditory cortex has been demonstrated in deaf cats. Impressive experiments, some of them involving reversible inactivation of the auditory cortex with cooling loops, have shown that a distinct auditory area supports peripheral visual localization and visual motion detection in deaf cats, and that the same region supports these functions in the auditory modality in hearing cats (11, 12). Human evidence, in contrast, has been relatively scarce (13).

In their article, Benetti et al. (2) study cross-modal plasticity in the system that supports recognition of a person’s identity: a skill that is critical in everyday social interactions. Typically, this goal is achieved by combining face and voice recognition, because both of these channels convey important information about the person’s individual characteristics. Through specialized brain areas, these social cues are extracted from faces and voices with ease and accuracy. Face processing is primarily supported by the fusiform face area, a ventral visual region described by Kanwisher et al. (14) 20 y ago, whereas voice processing is supported by the temporal voice area identified in the auditory cortex by Belin et al. (15) 3 y later.

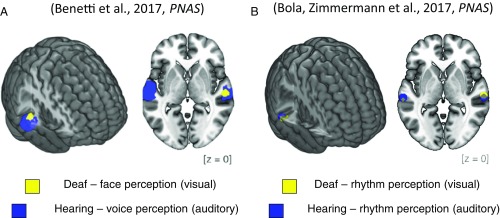

Benetti et al. (2) ask how the brain refashions itself when spoken words cannot be heard, as is the case in the deaf. Using functional magnetic resonance imaging (fMRI) and magnetoencephalography (MEG), they show that in these circumstances, the temporal voice area reorganizes and becomes selective for faces. Their results show a near-perfect overlap between the area activated by voice recognition in the hearing and the novel, additional face activation emerging in the auditory cortex of the deaf (Fig. 1A). Furthermore, they used a sophisticated fMRI-adaptation paradigm to show that the temporal voice area of the deaf is activated more strongly when deaf individuals perceive different faces than when they see one face repeated several times. This finding constitutes powerful evidence that part of the auditory cortex in the deaf does indeed support face identity processing.

Fig. 1.

Task-specific auditory cortex reorganization in deaf humans. Cross-modal plasticity in the auditory cortex of congenitally deaf people overcomes the division between visual and auditory processing streams, as regions of high-level auditory cortex become recruited for visual face processing and visual rhythm perception. (A) fMRI results show peaks of activation for auditory voice recognition in the hearing (blue) and for visual face recognition in the deaf (yellow). Reproduced from ref. 2. (B) fMRI results also show peaks of activation for auditory rhythm perception in the hearing (blue) and visual rhythm perception in the deaf (yellow). Adapted from ref. 13.

To make sure that these activations to faces in the temporal voice area were not a result of acquiring sign language itself, the researchers studied a special control group: hearing subjects who are fluent in sign language. Just like the deaf subjects, this additional control group was proficient in Italian sign language. However, this group did not show the additional auditory cortex activation to faces that was seen in the deaf, most likely because their temporal voice area was also exposed to regular spoken language. Benetti et al. (2) also took advantage of the superior temporal resolution offered by MEG and demonstrated that face selectivity in the temporal voice area of deaf individuals emerges very fast, within the first 200 ms following stimulus onset, which is only milliseconds later than the activation in the main face recognition area, the fusiform face area (2). This finding is another indirect, yet potent, sign of the importance of the processing that occurs in the temporal voice area of the deaf.

Benetti et al. (2) propose that the temporal voice area of deaf individuals becomes incorporated into the face recognition system because face and voice processing share a common functional goal: recognition of a person’s identity. This proposition implies that following hearing loss, auditory areas switch their sensory modality but maintain a relation to their typical function. Following the same thread, another study recently published in PNAS, by Bola et al. (13), showed an analogous outcome. Using similar groups of deaf and hearing subjects, we explored how the auditory cortex of the deaf processes rhythmic stimuli. In an fMRI experiment, we asked deaf and hearing adults to discriminate between temporally complex sequences of flashes and beeps. Our results demonstrated that the posterior part of the high-level auditory cortex in the deaf was activated by rhythmic visual sequences but not by regular visual stimulation. Moreover, this was the same auditory region that was activated when hearing subjects perceived rhythmic sequences in the auditory modality (Fig. 1B). Our results thus demonstrated that in deaf humans, the auditory cortex preserves its typical specialization for rhythm processing despite switching to a different sensory modality.

Overall, these two studies demonstrate that the high-level auditory cortex in the deaf switches its input modality from sound to vision but preserves its task-specific activation pattern (2, 13). These findings suggest that task-specific reorganization is not limited to the visual cortex, but might be a general principle that guides cortical plasticity throughout the brain. This possibility naturally opens new vistas for future research. For example, one may ask whether the mechanism of task-specific brain reorganization is limited to the very particular circumstances of prolonged sensory deprivation. Previous studies on the visual cortex have already shown that some forms of task-specific recruitment of the visual cortex are possible in nondeprived adults, either after several days of blindfolding (16) or after extensive tactile or auditory training (6, 17, 18). In their experiment, Benetti et al. (2) report a marginally significant trend in the fMRI adaptation effect for faces in hearing participants, suggesting that the temporal voice area may have a similar cross-modal potential for face discrimination in both hearing and deaf subjects. It remains to be explained to what extent the potential for cross-modal changes can be exploited in nondeprived adults engaging in complex human activities, and whether task-specific reorganization could reach beyond high-order cortices to primary cortices, which are classically considered more strictly bound to their specific sensory modality. Nevertheless, one can already suppose that some chapters in neuroscience textbooks will soon need to be amended.

Acknowledgments

The authors thank an anonymous advisor for help in finding the right opening sentences. M.S. is supported by National Science Centre Poland Grants 2015/19/B/HS6/01256 and 2016/21/B/HS6/03703; Marie Curie Career Integration Grant 618347; and funds from the Polish Ministry of Science and Higher Education for cofinancing of international projects, years 2013–2017. Ł.B. is supported by National Science Centre Poland Grant 2014/15/N/HS6/04184.

Footnotes

The authors declare no conflict of interest.

See companion article on page E6437.

References

- 1. DeLillo D. The Body Artist. Picador; London: 2011. pp. 9–10.

- 2. Benetti S, et al. Functional selectivity for face processing in the temporal voice area of early deaf individuals. Proc Natl Acad Sci USA. 2017;114:E6437–E6446. doi: 10.1073/pnas.1618287114.

- 3. Draganski B, et al. Neuroplasticity: Changes in grey matter induced by training. Nature. 2004;427:311–312. doi: 10.1038/427311a.

- 4. Amedi A, Hofstetter S, Maidenbaum S, Heimler B. Task selectivity as a comprehensive principle for brain organization. Trends Cogn Sci. 2017;21:307–310. doi: 10.1016/j.tics.2017.03.007.

- 5. Amedi A, Malach R, Hendler T, Peled S, Zohary E. Visuo-haptic object-related activation in the ventral visual pathway. Nat Neurosci. 2001;4:324–330. doi: 10.1038/85201.

- 6. Amedi A, et al. Shape conveyed by visual-to-auditory sensory substitution activates the lateral occipital complex. Nat Neurosci. 2007;10:687–689. doi: 10.1038/nn1912.

- 7. Reich L, Szwed M, Cohen L, Amedi A. A ventral visual stream reading center independent of visual experience. Curr Biol. 2011;21:363–368. doi: 10.1016/j.cub.2011.01.040.

- 8. Striem-Amit E, Cohen L, Dehaene S, Amedi A. Reading with sounds: Sensory substitution selectively activates the visual word form area in the blind. Neuron. 2012;76:640–652. doi: 10.1016/j.neuron.2012.08.026.

- 9. Striem-Amit E, Amedi A. Visual cortex extrastriate body-selective area activation in congenitally blind people “seeing” by using sounds. Curr Biol. 2014;24:687–692. doi: 10.1016/j.cub.2014.02.010.

- 10. Hirsch GV, Corinna MB, Merabet LB. Using structural and functional brain imaging to uncover how the brain adapts to blindness. Ann Neurosci Psychol. 2015;5:2.

- 11. Meredith MA, et al. Crossmodal reorganization in the early deaf switches sensory, but not behavioral roles of auditory cortex. Proc Natl Acad Sci USA. 2011;108:8856–8861. doi: 10.1073/pnas.1018519108.

- 12. Lomber SG, Meredith MA, Kral A. Cross-modal plasticity in specific auditory cortices underlies visual compensations in the deaf. Nat Neurosci. 2010;13:1421–1427. doi: 10.1038/nn.2653.

- 13. Bola Ł, et al. Task-specific reorganization of the auditory cortex in deaf humans. Proc Natl Acad Sci USA. 2017;114:E600–E609. doi: 10.1073/pnas.1609000114.

- 14. Kanwisher N, McDermott J, Chun MM. The fusiform face area: A module in human extrastriate cortex specialized for face perception. J Neurosci. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- 15. Belin P, Zatorre RJ, Lafaille P, Ahad P, Pike B. Voice-selective areas in human auditory cortex. Nature. 2000;403:309–312. doi: 10.1038/35002078.

- 16. Merabet LB, et al. Rapid and reversible recruitment of early visual cortex for touch. PLoS One. 2008;3:e3046. doi: 10.1371/journal.pone.0003046.

- 17. Siuda-Krzywicka K, et al. Massive cortical reorganization in sighted Braille readers. eLife. 2016;5:e10762. doi: 10.7554/eLife.10762.

- 18. Kim JK, Zatorre RJ. Tactile-auditory shape learning engages the lateral occipital complex. J Neurosci. 2011;31:7848–7856. doi: 10.1523/JNEUROSCI.3399-10.2011.